PROMPTCAP: Prompt-Guided Task-Aware Image Captioning

Abstract

Knowledge-based visual question answering (VQA) involves questions that require world knowledge beyond the image to yield the correct answer. Large language models (LMs) like GPT-3 are particularly helpful for this task because of their strong knowledge retrieval and reasoning capabilities. To enable LMs to understand images, prior work uses a captioning model to convert images into text. However, when an image is summarized in a single caption sentence, which visual entities to describe is often underspecified. Generic image captions often miss visual details essential for the LM to answer visual questions correctly. To address this challenge, we propose PROMPTCAP (Prompt-guided image Captioning), a captioning model designed to serve as a better connector between images and black-box LMs. Unlike generic captions, PROMPTCAP takes a natural-language prompt to control which visual entities are described in the generated caption. The prompt contains a question that the caption should aid in answering. To avoid extra annotation, PROMPTCAP is trained on examples synthesized with GPT-3 from existing datasets. We demonstrate PROMPTCAP’s effectiveness on an existing pipeline in which GPT-3 is prompted with image captions to carry out VQA. PROMPTCAP outperforms generic captions by a large margin and achieves state-of-the-art accuracy on knowledge-based VQA tasks (60.4% on OK-VQA and 59.6% on A-OKVQA). Zero-shot results on WebQA show that PROMPTCAP generalizes well to unseen domains.

1. Introduction

Knowledge-based visual question answering (VQA) [37] extends traditional VQA tasks [3] with questions that require broad knowledge and commonsense reasoning to yield the correct answer. Existing systems on knowledge-based VQA retrieve external knowledge from various sources, including knowledge graphs [13, 36, 63], Wikipedia [36, 63, 12, 15, 29], and web search [35, 63]. Recent work [67] finds that modern language models (LMs) like GPT-3 [5] are particularly useful for this task because of their striking knowledge retrieval and reasoning abilities. The current state-of-the-art methods [67, 15, 29, 1] all make use of recent large language models (GPT-3 or Chinchilla).

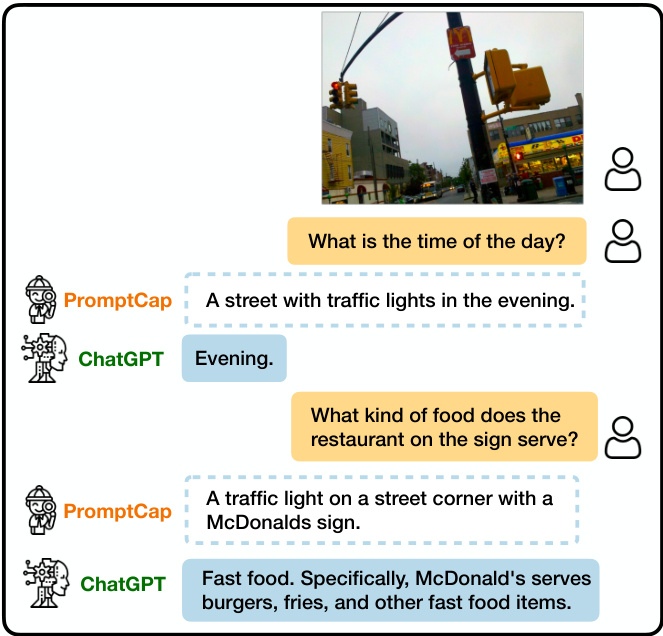

Figure 1. Illustration of VQA with PROMPTCAP and ChatGPT. PROMPTCAP is designed to work with black-box language models (e.g., GPT-3, ChatGPT) by describing question-related visual information in text. Unlike generic captions, PROMPTCAP customizes the caption according to the input question prompt, which helps ChatGPT understand the image and give the user correct answers. In contrast, ChatGPT cannot infer the answers from the vanilla human-written MSCOCO caption (“A traffic light on a pole on a city street.”).

One key challenge is enabling LMs to understand images. Many top-performing LMs (e.g., GPT-3, ChatGPT) are only accessible via APIs, making it impossible to access their internal representations or to fine-tune them [49]. A popular solution is to project images into text that black-box LMs can process, via a generic image captioning model [7] or an image tagger [67]. This framework has been successful on multiple tasks, including VQA [67, 15, 29], image paragraph captioning [65], and video-language tasks [70, 60]. Despite promising results, converting visual inputs into a generic, finite text description risks excluding information necessary for the task. As discussed in PICa [67], when used for VQA, the generic caption might miss the detailed visual information needed to answer the question, such as the “McDonald’s” sign in Figure 1.

To address the above challenges, we introduce PROMPTCAP, a question-aware captioning model designed to serve as a better connector between images and a black-box LM. PROMPTCAP is illustrated in Figure 2. PROMPTCAP takes an extra natural-language prompt as input to control the visual content to describe. The prompt contains the question that the generated caption should help to answer. LMs can better answer visual questions by using PROMPTCAP as their “visual front-end”. For example, in Figure 1, when asked “what is the time of the day?”, PROMPTCAP includes “in the evening” in its image description; when asked “what kind of food does the restaurant on the sign serve?”, PROMPTCAP includes “McDonald’s” in its description. Such visual information is critical for ChatGPT to reply to the user with the correct answers. In contrast, the generic COCO [28] caption often contains no information about the time or the sign, making ChatGPT unable to answer the questions.

One major technical challenge is PROMPTCAP training. The “PROMPTCAP + black-box LM” pipeline cannot be end-to-end fine-tuned on VQA tasks because the LM parameters are not exposed through the API. Also, there are no training data for question-aware captions. To avoid extra annotation, we propose a pipeline to synthesize and filter training samples with GPT-3. Specifically, we view existing VQA datasets as pairs of questions and question-related visual details. Given a question-answer pair, we rewrite the corresponding image’s generic caption into a customized caption that helps answer the question. Following 20 human-annotated examples, GPT-3 synthesizes a large number of question-aware captions via few-shot in-context learning [5]. To ensure sample quality, we filter the generated captions by performing QA with GPT-3, checking whether the answer can be inferred from the question and the synthesized caption. Note that GPT-3 stays frozen throughout the pipeline; its strong few-shot learning ability is what makes this pipeline possible.

We demonstrate the effectiveness of PROMPTCAP on knowledge-based VQA tasks with the pipeline in PICa [67]. Details of the pipeline are illustrated in $\S4$ . The images are converted into texts via PROMPTCAP, allowing GPT-3 to perform VQA via in-context learning. This pipeline, despite

我们通过PICa[67]中的流程展示了PROMPTCAP在基于知识的视觉问答(VQA)任务上的有效性。该流程的具体细节如$\S4$所示。图像通过PROMPTCAP被转换为文本,使GPT-3能够通过上下文学习进行VQA。尽管这一流程...

Original VQA sample

原始VQA样本

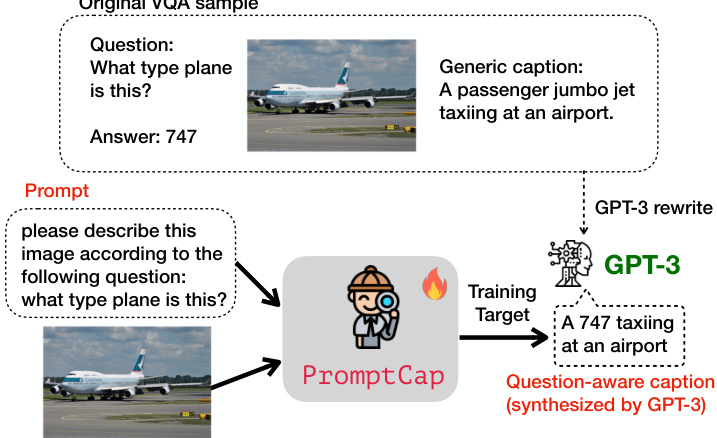

Figure 2. Overview of PROMPTCAP training. PROMPTCAP takes two inputs, including an image and a natural language prompt. The model is trained to generate a caption that helps downstream LMs to answer the question. During training, we use GPT-3 to synthesize VQA samples into captioning examples. The original caption is rewritten into a caption that helps answer the question. PROMPTCAP is trained to generate this synthesized caption given the image and the prompt.

图 2: PROMPTCAP训练流程概览。PROMPTCAP接收两个输入:图像和自然语言提示(prompt)。该模型被训练用于生成有助于下游大语言模型回答问题的描述文本。训练过程中,我们使用GPT-3将视觉问答(VQA)样本合成为描述文本样本。原始描述文本被重写为有助于回答问题的版本。PROMPTCAP的训练目标是根据给定图像和提示生成这种合成后的描述文本。

its simplicity, achieves state-of-the-art results on knowledgebased VQA tasks $ (60.4%$ on OK-VQA [38] and $59.6%$ on A-OKVQA [46]). We also conduct extensive ablation studies on the contribution of each component, showing that PROMPTCAP gives a consistent performance gain $3.8%$ on OK-VQA, $5.3%$ on A-OKVQA, and $9.2%$ on VQAv2) over a generic captioning model that shares the same architecture and training data. Finally, we investigate PROMPTCAP’s generalization ability on WebQA [6], showing that PROMPTCAP, without any training on the compositional questions in WebQA, outperforms the generic caption approach and all supervised baselines.

其简洁性在基于知识的视觉问答(VQA)任务中取得了最先进的结果(在OK-VQA[38]上达到60.4%,在A-OKVQA[46]上达到59.6%)。我们还对各组件的贡献进行了广泛的消融研究,结果表明PROMPTCAP相比采用相同架构和训练数据的通用描述模型,在OK-VQA上带来3.8%的性能提升,在A-OKVQA上提升5.3%,在VQAv2上提升9.2%。最后,我们研究了PROMPTCAP在WebQA[6]上的泛化能力,结果显示未经任何WebQA组合问题训练的PROMPTCAP,其表现优于通用描述方法和所有监督基线。

In summary, our contributions are as follows:

• We propose PROMPTCAP, a novel question-aware captioning model that uses a natural-language prompt to control the visual content to be described (§3).
• To the best of our knowledge, we are the first to propose a pipeline to synthesize and filter training samples for vision-language tasks via GPT-3 (§3.1).
• PROMPTCAP helps GPT-3 in-context learning (§4) achieve state-of-the-art results on OK-VQA and A-OKVQA, substantially outperforming generic captions on various VQA tasks (§5).

2. Related Work

Knowledge-Based VQA Knowledge-based VQA [38, 46] requires systems to leverage external knowledge beyond image content to answer the question. Prior works [13, 36, 63, 72, 40, 41, 17, 18, 12] investigate leveraging knowledge from various external knowledge resources, e.g., Wikipedia [56], ConceptNet [50], and ASER [71], to improve the performance of the VQA models. Inspired by PICa [67], recent works [15, 29] use GPT-3 as an implicit knowledge base and achieve state-of-the-art results. We identify the critical problem: generic captions used to prompt GPT-3 often miss critical visual details for VQA. We address this challenge with PROMPTCAP.

Vision-Language Models Vision-language models have recently shown striking success on various multimodal tasks [51, 33, 9, 27, 61, 22, 43, 66, 57, 58, 34, 68, 8, 26]. These works first pretrain multimodal models on large-scale image-text datasets and then finetune the models for particular tasks. The works most related to ours are Frozen [54], Flamingo [1], and BLIP-2 [26], which keep the LM frozen and tune a visual encoder for it. However, such techniques require access to internal LM parameters and are thus difficult to apply to black-box LMs like GPT-3.

Prompting for Language Models Prompting allows a pre-trained model to adapt to different tasks via different prompts without modifying any parameters. LLMs like GPT-3 [5] have shown strong zero-shot and few-shot ability via prompting. Prompting has been successful for a variety of natural language tasks [32], including but not limited to classification [39, 48], semantic parsing [64], knowledge generation [49, 30], and dialogue systems [24, 16]. The works most closely related to ours are instruction-finetuned language models [45, 62, 59].

3. PROMPTCAP

We introduce PROMPTCAP, an image captioning model that utilizes a natural language prompt as an input condition. The overview of PROMPTCAP training is in Figure 2. Given an image $I$ and a natural language prompt $P$, PROMPTCAP generates a prompt-guided caption $C$. $P$ contains instructions about the image contents of interest to the user. For VQA, an example prompt could be “Please describe this image according to the following question: what type plane is this?”. The prompt-guided caption $C$ should (1) cover the visual details required by the instruction in the prompt, (2) describe the main objects as general captions do, and (3) use auxiliary information in the prompt if necessary. For instance, assuming the prompt contains a VQA question, $C$ may directly describe the asked visual contents (e.g., for questions about visual details), or provide information that helps downstream models to infer the answer (e.g., for questions that need external knowledge to solve).

Given the above design, the major technical challenge is PROMPTCAP training. PROMPTCAP is designed to work with black-box LMs, which cannot be end-to-end fine-tuned on VQA tasks because the LM parameters are not accessible. Besides, there are no training data for question-aware captions. To address these challenges, we propose training PROMPTCAP with data synthesized with GPT-3.

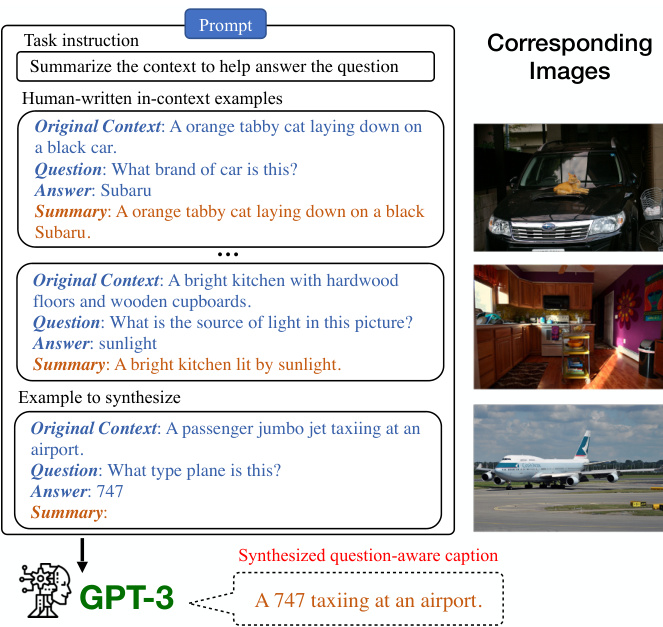

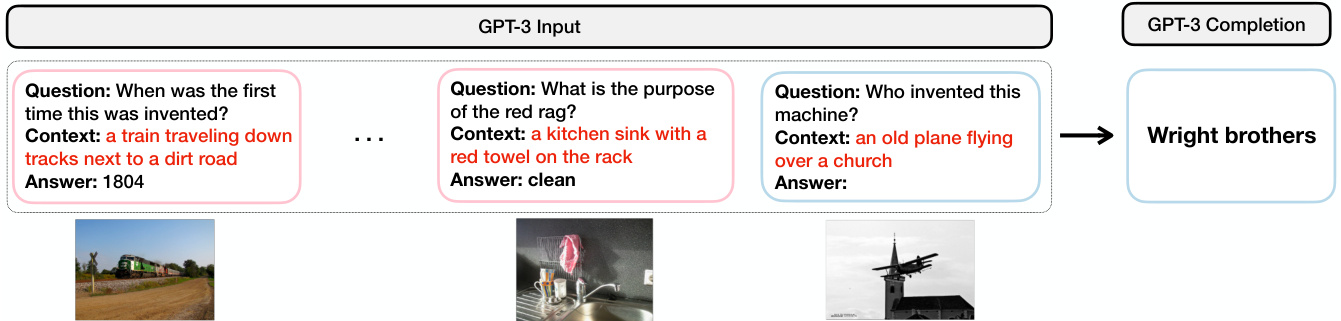

Figure 3. Training example synthesis with GPT-3 in-context learning. The “Original Contexts" are ground-truth image captions. The question-answer pairs come from existing VQA datasets. GPT-3 generalizes (without parameter updates) from the human-written examples to produce the question-aware caption given the caption, question, and answer. The images are shown for clarity but are not used in our data synthesis procedure.

3.1. Training Data Synthesis

To avoid annotating question-aware caption examples, we use GPT-3 to generate training examples for PROMPTCAP via in-context learning [5, 44, 16, 10].

3.1.1 Training Example Generation with GPT-3

For PROMPTCAP training, we view existing VQA datasets as natural sources of pairs of task and task-related visual details. We synthesize question-aware captions by combining the general image captions and the question-answering pairs using GPT-3 in-context learning. Figure 3 illustrates the GPT-3 prompt we use for training example generation. The prompt contains the task instruction, 20 human-written examples, and the VQA question-image pair that we synthesize the task-aware caption from. Since GPT-3 only accepts text inputs, we represent each image by concatenating the 5 human-written COCO captions [7], as shown in the “Original Context". The human-written examples follow the three principles of prompt-guided captions described in Section 3. The commonsense reasoning ability of GPT-3 allows the model to understand the image to some extent via the COCO captions and synthesize new examples by following the human-written examples.

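The synthesis prompt described above can be sketched as follows. This is a minimal illustration under stated assumptions: the instruction wording, the field labels (`Original Context`, `Question`, `Answer`, `Question-aware Caption`), the blank-line separators, and all helper names are hypothetical, since the exact template appears only in Figure 3.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SynthesisExample:
    coco_captions: List[str]          # the 5 human-written COCO captions of one image
    question: str                     # VQA question
    answer: str                       # VQA ground-truth answer
    question_aware_caption: str = ""  # filled in for demonstrations, empty for the query


def format_example(ex: SynthesisExample) -> str:
    # GPT-3 never sees pixels: the image is represented by its concatenated COCO captions.
    context = " ".join(ex.coco_captions)
    return (
        f"Original Context: {context}\n"
        f"Question: {ex.question}\n"
        f"Answer: {ex.answer}\n"
        f"Question-aware Caption: {ex.question_aware_caption}"
    ).rstrip()  # for the query this leaves an open "Caption:" slot for GPT-3 to complete


def build_synthesis_prompt(instruction: str,
                           demos: List[SynthesisExample],
                           query: SynthesisExample) -> str:
    # Task instruction, then the human-written demonstrations (20 in the paper),
    # then the VQA sample whose question-aware caption GPT-3 should generate.
    blocks = [instruction] + [format_example(d) for d in demos] + [format_example(query)]
    return "\n\n".join(blocks)
```

The returned string would be sent to GPT-3 with sampling enabled, so that several candidate captions (five, per the filtering step) can be drawn for each question-answer pair.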

3.1.2 Training Example Filtering

To ensure the quality of the generated captions, we sample 5 candidate captions from GPT-3 for each question-answer pair. We devise a pipeline to select the best candidate caption as the training example for PROMPTCAP. The idea is that a text-only QA system should correctly answer the question when given a high-quality prompt-guided caption as the context. For each candidate caption, we use the GPT-3 in-context learning VQA system in $\S4$ to predict an answer, and score the candidate by comparing this answer with the ground-truth answers.

Soft VQA Accuracy We find that in the open-ended generation setting, the VQA accuracy [14] incorrectly punishes answers with a slight difference in surface form. For example, the answer “coins" gets 0 when the ground truth is “coin". To address this problem, we devise a new soft VQA accuracy for example filtering. Suppose the predicted answer is $a$ and the human-written ground truth answers are $[g_{1},g_{2},...,g_{n}]$ . The soft accuracy is given by the three lowest-CER ground truth answers:

$$
\mathrm{Acc}_{\mathrm{soft}}(a)=\max_{1\le x<y<z\le n}\ \sum_{i\in\{x,y,z\}}\frac{\max\left[0,\,1-\mathrm{CER}(a,g_i)\right]}{3},
$$

where CER is the character error rate, calculated by the character edit distance over the total number of characters of the ground truth. In contrast, the traditional VQA accuracy [14] uses exact match. We sort the candidate captions based on this soft score.

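A minimal Python sketch of the soft VQA accuracy and CER as defined above. Because each term of the sum is independent, maximizing over triples of distinct ground-truth answers is the same as averaging the three largest per-answer credits, which is what the sketch does. Assumptions: plain Levenshtein distance with unit costs, no answer normalization (lower-casing, punctuation stripping), and a guard against empty ground-truth strings. The helper names are hypothetical.

```python
from typing import Sequence


def edit_distance(a: str, b: str) -> int:
    # Levenshtein distance with unit costs, using a single rolling DP row.
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        curr = [i]
        for j, cb in enumerate(b, 1):
            curr.append(min(prev[j] + 1,               # delete ca
                            curr[j - 1] + 1,           # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def cer(pred: str, gt: str) -> float:
    # Character edit distance divided by the number of ground-truth characters.
    return edit_distance(pred, gt) / max(len(gt), 1)


def soft_vqa_accuracy(pred: str, gt_answers: Sequence[str]) -> float:
    # Per-answer credit max(0, 1 - CER); the best triple is the 3 largest credits.
    credits = sorted((max(0.0, 1.0 - cer(pred, g)) for g in gt_answers), reverse=True)
    return sum(credits[:3]) / 3
```

With this definition, the prediction `"coins"` scored against ten `"coin"` answers receives 0.75 instead of the 0 that exact matching would give.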

Comparing with COCO ground-truth Multiple candidates may answer the question correctly and get the same soft score. To break ties, we also compute the CIDEr score [55] between the candidate captions and the COCO ground-truth captions. Among the candidates with the highest soft VQA accuracy, the one with the highest CIDEr score is selected as the training example for PROMPTCAP.

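The two-stage ranking (soft VQA accuracy first, CIDEr as the tie-breaker) amounts to a lexicographic maximum. The sketch below assumes both scores have already been computed for each sampled candidate. How CIDEr is computed, for example with a standard COCO caption-evaluation toolkit, is outside the sketch, and the function name is hypothetical.

```python
from typing import Sequence


def select_training_caption(candidates: Sequence[str],
                            soft_scores: Sequence[float],
                            cider_scores: Sequence[float]) -> str:
    """Pick the candidate with the highest soft VQA accuracy; break ties by CIDEr.

    soft_scores[i]  : soft VQA accuracy of the answer predicted from candidates[i]
    cider_scores[i] : CIDEr of candidates[i] against the image's COCO captions
    """
    if not (len(candidates) == len(soft_scores) == len(cider_scores)):
        raise ValueError("candidates and score lists must have equal length")
    # Tuples compare lexicographically: soft accuracy first, CIDEr only on ties.
    best = max(range(len(candidates)), key=lambda i: (soft_scores[i], cider_scores[i]))
    return candidates[best]
```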

3.2. PROMPTCAP Training

For PROMPTCAP training, we start with the state-of-the-art pre-trained vision-language model OFA [58] and make some modifications to the OFA captioning model. OFA has an encoder-decoder structure. As discussed earlier, our training data are synthesized from VQA data in the form of question-caption pairs. Given a question-caption pair, we first rewrite the question into an instruction prompt via a template. For example, the instruction prompt might be “describe to answer: What is the clock saying the time is?”. We apply byte-pair encoding (BPE) [47] to the given text sequence, encoding it as subwords. Images are trans… token set. Let the training samples be $\mathcal{D}=\{(P_i,I_i,C_i)\}_{i=1}^{|\mathcal{D}|}$, in which $P_i$ is the text prompt, $I_i$ is the image patch, and $C_i$ is the synthesized task-aware caption. The captioning model takes $[P_i : I_i]$ as input and is trained to generate $C_i=[c_1,c_2,\dots,c_{|C_i|}]$, where $[\,:\,]$ denotes concatenation. We use the negative log-likelihood loss and train the model end to end. The training loss is:

$$
\mathcal{L}=-\sum_{i=1}^{|\mathcal{D}|}\sum_{t=1}^{|C_i|}\log p\left(c_t \mid [P_i:I_i],\, c_{\le t-1}\right).
$$
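To make the two sums in the loss concrete, here is a framework-free Python sketch. It assumes the decoder's per-step output distributions are already available as plain lists. In practice they would come from OFA's decoder under teacher forcing, and a deep-learning framework would compute the loss from logits. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def caption_nll(step_probs: Sequence[Sequence[float]], caption_ids: Sequence[int]) -> float:
    """Negative log-likelihood of one target caption under teacher forcing.

    step_probs[t] is the model's distribution over the vocabulary for token t of the
    caption, already conditioned on the encoded [P_i : I_i] input and the gold
    prefix caption_ids[:t] (the c_{<=t-1} term in the loss).
    """
    if len(step_probs) != len(caption_ids):
        raise ValueError("one distribution per caption token is required")
    return -sum(math.log(probs[tok]) for probs, tok in zip(step_probs, caption_ids))


def dataset_loss(batch: List[Tuple[Sequence[Sequence[float]], Sequence[int]]]) -> float:
    # Outer sum over training samples (P_i, I_i, C_i), matching the loss above.
    return sum(caption_nll(probs, ids) for probs, ids in batch)
```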

4. VQA with PROMPTCAP and GPT-3

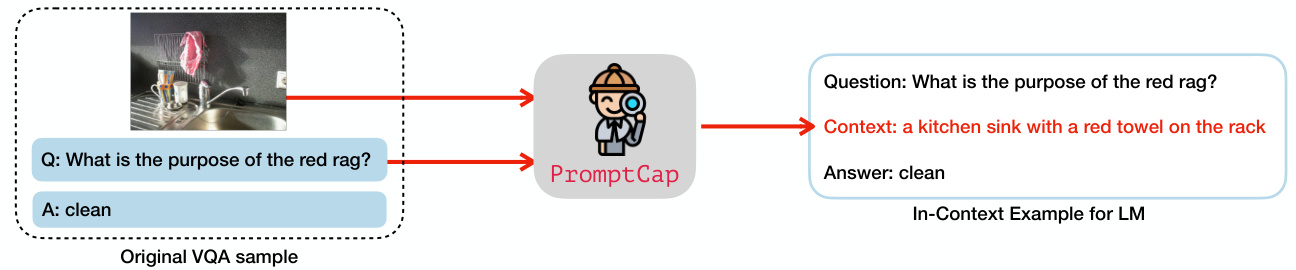

Our VQA pipeline is illustrated in Figure 4, which is adopted from PICa [67]. The pipeline consists of two components, PROMPTCAP and GPT-3.

Step 1: Converting images into texts via PROMPTCAP GPT-3 can perform a new task by simply conditioning on several task training examples as demonstrations. As we have discussed, the major challenge is that GPT-3 does not understand images. To bridge this modality gap, we convert the images in VQA samples to texts using PROMPTCAP (Figure 4a). Notice that different from generic captioning models, PROMPTCAP customizes the image caption according to the question, which enables LMs to understand question-related visual information in the image. As such, we are able to convert VQA samples into question-answering examples that GPT-3 can understand.

Step 2: GPT-3 in-context learning for VQA Having used PROMPTCAP to convert VQA examples into question-answer examples that GPT-3 can understand (Step 1), we use a subset of these examples as the task demonstration for GPT-3. We concatenate the in-context learning examples to form a prompt, as shown in Figure 4b. Each in-context learning example consists of a question (Question: When was the first time this was invented?), a context generated by PROMPTCAP (Context: a train traveling down tracks next to a dirt road), and an answer (Answer: 1804). We then append the test example to the in-context learning examples and provide them as input to GPT-3. GPT-3 generates its prediction by open-ended text generation, conditioned on the in-context examples and the test example.

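The prompt assembly in Step 2 can be sketched as below. The field labels and their order follow the example in the text (question, PROMPTCAP context, answer). The instruction wording, blank-line separators, the hypothetical test caption, and the function name are assumptions, since the task instruction is not shown in Figure 4.

```python
from typing import List, Tuple

# (question, PROMPTCAP caption used as context, answer)
Demo = Tuple[str, str, str]


def build_vqa_prompt(instruction: str, demos: List[Demo],
                     test_question: str, test_context: str) -> str:
    blocks = [instruction]
    for question, context, answer in demos:
        blocks.append(f"Question: {question}\nContext: {context}\nAnswer: {answer}")
    # The test instance ends with an empty answer slot that GPT-3 completes.
    blocks.append(f"Question: {test_question}\nContext: {test_context}\nAnswer:")
    return "\n\n".join(blocks)


prompt = build_vqa_prompt(
    "Answer the question according to the context.",  # hypothetical instruction
    [("When was the first time this was invented?",
      "a train traveling down tracks next to a dirt road", "1804")],
    "What type plane is this?",
    "a passenger jet parked on a runway",  # hypothetical PROMPTCAP output
)
```

GPT-3's completion after the final `Answer:` is taken as the prediction.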

Example retrieval Previous research has shown that the choice of in-context examples can significantly impact GPT-3's performance [31]. In the few-shot setting where only a few training examples are available, we simply use these examples as in-context examples (referred to as “Random” in later sections because they are selected at random from our collection). In practice, however, we often have access to many more than $n$ examples (i.e., the full training data setting). To improve the selection of in-context examples, we follow the approach proposed by [67]: we compute the similarity between examples using CLIP [43] by summing the cosine similarity of the question embeddings and that of the image embeddings. The $n$ most similar examples in the training set are then selected as in-context examples (referred to as “CLIP” in this paper). Using the in-context examples most similar to the test instance gives GPT-3 more relevant demonstrations and boosts its performance on VQA tasks.

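A minimal sketch of the CLIP-based retrieval step. It assumes question and image embeddings have already been produced by a CLIP model (the experiments use ViT-L/14) and are passed in as plain lists. No CLIP API is called here, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def retrieve_in_context_examples(test_q: Sequence[float], test_img: Sequence[float],
                                 train_q: List[Sequence[float]],
                                 train_img: List[Sequence[float]],
                                 n: int = 32) -> List[int]:
    """Return indices of the n training examples most similar to the test instance.

    Similarity = cos(question embeddings) + cos(image embeddings), as described above.
    """
    scores = [cosine(test_q, q) + cosine(test_img, im) for q, im in zip(train_q, train_img)]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:n]
```

For a large training set, one would instead precompute L2-normalized embedding matrices and use a single matrix product. The loop here only keeps the sketch dependency-free.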

(a) Step 1: Using PromptCap to convert images into texts

Figure 4. Our inference pipeline for VQA. (a) Illustration of how we convert a VQA sample into pure text. Given the image and the question, PROMPTCAP describes the question-related visual information in natural language. The VQA sample is turned into a QA sample that GPT-3 can understand. (b) GPT-3 in-context learning for VQA. After converting the VQA examples into text with PROMPTCAP, we carry out VQA by in-context learning on GPT-3. The input consists of the task instruction (not shown in the figure), the in-context examples, and the test instance. GPT-3 takes the input and generates the answer. Note that GPT-3 is treated as a black box and is only used for inference. The question-aware captions generated by PROMPTCAP are marked in red.

5. Experiments

In this section, we demonstrate PROMPTCAP’s effectiveness on knowledge-based VQA tasks. First, we show that PROMPTCAP captions enable GPT-3 to achieve state-of-the-art performance on OK-VQA [38] and A-OKVQA [46] with in-context learning. Then we conduct ablation experiments on the contribution of each component, showing that PROMPTCAP gives consistent gains over generic captions. In addition, experiments on WebQA [6] demonstrate that PROMPTCAP generalizes well to unseen domains.

5.1. Experimental Setup

Datasets We use three knowledge-based VQA datasets, namely OK-VQA [38], A-OKVQA [46], and WebQA [6]. OK-VQA [38] is a large knowledge-based VQA dataset that contains 14K image-question pairs. Questions are manually filtered to ensure that outside knowledge is required to answer them. A-OKVQA [46] is an augmented successor of OK-VQA, containing 25K image-question pairs that require broader commonsense and world knowledge to answer. For both OK-VQA and A-OKVQA, direct answers are evaluated with the soft accuracy from VQAv2 [14]. Besides direct-answer evaluation, A-OKVQA also provides multiple-choice evaluation, where the model must choose the correct answer among 4 candidates. WebQA [6] is a multimodal multi-hop reasoning benchmark that requires the model to combine multiple text and image sources to answer a question.

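For reference, here is a sketch of the VQAv2 accuracy used to score direct answers. An answer counts as fully correct if at least three annotators gave it, and the official evaluation averages this score over the leave-one-annotator-out subsets. The sketch assumes the answers are already normalized; the official script also normalizes answers (e.g., punctuation and articles), which is omitted here. The function name is hypothetical. Note the exact string match: this is the behavior that the soft accuracy in §3.1.2 relaxes.

```python
from typing import Sequence


def vqa_accuracy(pred: str, gt_answers: Sequence[str]) -> float:
    """VQA accuracy with exact string matching.

    For each annotator, count how many of the *other* annotators gave `pred`,
    score min(count / 3, 1), and average over all annotators.
    """
    scores = []
    for i in range(len(gt_answers)):
        others = list(gt_answers[:i]) + list(gt_answers[i + 1:])
        matches = sum(ans == pred for ans in others)
        scores.append(min(1.0, matches / 3))
    return sum(scores) / len(scores)
```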

PROMPTCAP implementation details We adopt the officially released OFA [58] captioning checkpoint “captionlarge-best-clean” (470M) for model initialization and use the GPT-3-synthesized examples from $\S3.1$ to fine-tune the model. The examples are synthesized from VQAv2 [3, 14]. Note that this dataset is included in OFA pre-training, so we add no annotated training data beyond what OFA already uses. We train with the AdamW [23] optimizer using learning rate $\{2\times10^{-5}, 3\times10^{-5}, 5\times10^{-5}\}$, batch size $\{32, 64, 128\}$, $\beta_1=0.9$, and $\beta_2=0.999$.

In-context learning details We use the code-davinci-002 engine (175B) for GPT-3 in all experiments. Due to the input length limit, we use the $n=32$ most similar examples in the prompt for GPT-3. The examples are retrieved by CLIP (ViT-L/14) using the method discussed in $\S4$.

Table 1. Results comparison with existing systems on OK-VQA, with the image representation and the knowledge source each method uses. GPT-3 is frozen for all methods. The methods on top require end-to-end finetuning on OK-VQA. The methods below are fully based on in-context learning or zero-shot learning and do not require task-specific finetuning.

| Method | Image Representation | Knowledge Source | Accuracy (%) |
|---|---|---|---|
| End-to-end finetuning | | | |
| Q only [37] | Feature | | 14.9 |
| MUTAN [38] | Feature | | 26.4 |
| BAN + KG + AUG [25] | Feature | Wikipedia + ConceptNet | 26.7 |
| ConceptBERT [13] | Feature | ConceptNet | 33.7 |
| KRISP [36] | Feature | Wikipedia + ConceptNet | 38.4 |
| Vis-DPR [35] | Feature | Google Search | 39.2 |
| MAVEx [63] | Feature | Wikipedia + ConceptNet + Google Images | 39.4 |
| TRiG [12] | Caption + Tags + OCR | Wikipedia | 50.5 |
| KAT (single) [15] | Caption + Tags + Feature | GPT-3 (175B) + Wikidata | 54.4 |
| In-context learning & zero-shot | | | |
| BLIP-2 ViT-G FlanT5-XXL [26] (zero-shot) | Feature | FlanT5-XXL (11B) | 45.9 |
| PICa-Base [67] | Caption + Tags | GPT-3 (175B) | 43.3 |
| PICa-Full [67] | Caption + Tags | GPT-3 (175B) | 48.0 |
| Flamingo (80B) [1] (zero-shot) | Feature | Chinchilla (70B) | 50.6 |
| Flamingo (80B) [1] (32-shot) | Feature | Chinchilla (70B) | 57.8 |
| PromptCap + GPT-3 | Caption | GPT-3 (175B) | 60.4 |

Table 2. Results comparison with existing systems on A-OKVQA. There are two evaluations, namely multiple-choice and direct-answer. Both are measured by accuracy (%).

| Method | Multiple-choice (val) | Multiple-choice (test) | Direct-answer (val) | Direct-answer (test) |
|---|---|---|---|---|
| ClipCap [46] | 44.0 | 43.8 | 18.1 | 15.8 |
| Pythia [19] | 49.0 | 40.1 | 25.2 | 21.9 |
| ViLBERT [33] | 49.1/51.4 | 41.5/41.6 | 30.6 | 25.9 |
| LXMERT [53] | 51.9 | 42.2 | 30.7 | 25.9 |
| KRISP [36] GPV-2 [20] | 60.3 | 53.7 | 33.7/48.6 | 27.1/40.7 |
| PromptCap + GPT-3 | 73.2 | 73.1 | 56.3 | 59.6 |

5.2. Results on OK-VQA and A-OKVQA

Table 1 compares PROMPTCAP + GPT-3 with other methods on the OK-VQA validation set. For each method, we also list how it represents images and the knowledge source it uses. The table is split into two sections. The upper section lists fully supervised methods, which require end-to-end finetuning. The methods in the bottom section are based on in-context learning or zero-shot learning, and no task-specific finetuning is done on the models.

The strongest systems in Table 1 all use GPT-3 (or Chinchilla) as a component. They obtain significant gains over earlier methods, showing the importance of the LM in knowledge-based VQA. PICa [67] is the first system that uses GPT-3 as the knowledge source. KAT [15] further improves over PICa by introducing Wikidata [56] as an additional knowledge source and by ensembling and end-to-end finetuning multiple components. REVIVE [29] is the strongest prior method on OK-VQA; compared with KAT, it adds object-centric visual features to the ensemble, which brings further gains. However, all of these methods use generic image captions to elicit knowledge from GPT-3. We identify this as a critical bottleneck in using LMs for VQA, and PROMPTCAP is designed to address it.
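To make the bottleneck concrete, the sketch below assembles a PICa-style few-shot prompt in which a caption is the only stand-in for the image. The instruction line, the `Context/Question/Answer` layout, and the names `Example`, `format_example`, and `build_prompt` are all hypothetical. They illustrate the general caption-to-LM pattern and are not the exact template used by PICa or in this paper.

```python
from dataclasses import dataclass


@dataclass
class Example:
    caption: str      # text that stands in for the image
    question: str
    answer: str = ""  # left empty for the query the LM must answer


# Hypothetical instruction line; not the wording used by PICa or this paper.
HEADER = "Please answer the question according to the context.\n\n"


def format_example(ex: Example) -> str:
    # rstrip() turns "Answer: " into "Answer:" when no answer is given.
    return f"Context: {ex.caption}\nQuestion: {ex.question}\nAnswer: {ex.answer}".rstrip()


def build_prompt(shots: list[Example], query: Example) -> str:
    """In-context examples first, then the query left open for the LM to complete."""
    blocks = [format_example(s) for s in shots] + [format_example(query)]
    return HEADER + "\n\n".join(blocks)


if __name__ == "__main__":
    shots = [Example("a man swinging a racket on a clay court", "What sport is this?", "tennis")]
    query = Example("a plate of food on a table", "Which country does this dish come from?")
    print(build_prompt(shots, query))
```

Because the caption is the only visual evidence the LM receives, a generic caption such as "a plate of food on a table" gives it nothing to identify the dish with. A question-aware caption would change only the `caption` string of the query, leaving the rest of the pipeline untouched.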

Comparison with state of the art. Despite using no additional knowledge source, no ensemble with visual features, and no end-to-end finetuning, PROMPTCAP + GPT-3 achieves 60.4% accuracy on OK-VQA, outperforming all existing methods. Table 2 shows similar results on A-OKVQA, where PROMPTCAP + GPT-3 outperforms all prior methods by a large margin on both the multiple-choice (73.1%) and direct-answer (59.6%) test evaluations. These results demonstrate PROMPTCAP's effectiveness in connecting LMs with images. PROMPTCAP could also replace the captioning module in KAT and REVIVE, which might further boost their performance. We expect PROMPTCAP, combined with complementary advances in future systems, to achieve even better results on these tasks.
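The "large margin" on A-OKVQA can be checked directly against the test columns of Table 2. The sketch below lists the prior methods' test accuracies for each evaluation and subtracts the strongest one from PROMPTCAP + GPT-3's score. The variable and function names are illustrative only.

```python
# Test-set accuracies (%) of the prior methods in Table 2, one list per evaluation.
prior_test = {
    "multiple_choice": [43.8, 40.1, 41.5, 41.6, 42.2, 53.7],
    "direct_answer": [15.8, 21.9, 25.9, 25.9, 27.1, 40.7],
}
promptcap_test = {"multiple_choice": 73.1, "direct_answer": 59.6}


def margin_over_best(ours: float, prior: list[float]) -> float:
    """Absolute gain in percentage points over the strongest prior entry."""
    return round(ours - max(prior), 1)


for setting, ours in promptcap_test.items():
    print(setting, margin_over_best(ours, prior_test[setting]))
# multiple_choice 19.4
# direct_answer 18.9
```

Both margins are close to 19 points over the best prior system.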

5.3. Ablation Study

We conduct ablation studies to quantify the performance benefit of each component of our system: the captioning model (PROMPTCAP), the language model, and the prompting method.

Additional dataset for analysis. Beyond knowledge-based VQA, we also measure the gain from PROMPTCAP on traditional VQA, so we include VQAv2 [3] in the ablation studies.

Table 3. Ablation on the contribution of PROMPTCAP compared with the generic captioning model OFA-Cap, with GPT-3 as the LM in both settings. Accuracy (%) on the validation sets.

| Captioning model | OK-VQA | A-OKVQA | VQAv2 |
|---|---|---|---|
| OFA-Cap | 56.6 | 51.0 | 64.9 |
| PROMPTCAP | 60.4 | 56.3 | 74.1 |
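The absolute gains behind Table 3 take one subtraction per dataset. The short sketch below computes them from the table values, with illustrative variable names. It shows that the gap is largest on VQAv2, at 9.2 points.

```python
# Validation accuracy (%) from Table 3; GPT-3 is the LM in both rows.
ofa_cap = {"OK-VQA": 56.6, "A-OKVQA": 51.0, "VQAv2": 64.9}
promptcap = {"OK-VQA": 60.4, "A-OKVQA": 56.3, "VQAv2": 74.1}

# Absolute gain in percentage points; round() hides floating-point noise in the subtraction.
gains = {dataset: round(promptcap[dataset] - ofa_cap[dataset], 1) for dataset in ofa_cap}
print(gains)  # {'OK-VQA': 3.8, 'A-OKVQA': 5.3, 'VQAv2': 9.2}
```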

5.3.1 Performance Benefit from PROMPTCAP

Baseline generic captioning model. We use the officially released OFA [58] captioning checkpoint “caption-large-best-clean” (470M) as the baseline generic captioning model, which we refer to as “OFA-Cap”. We choose this model because PROMPTCAP is initialized from it and shares its architecture. Note that OFA is a large vision-language model pre-trained on 20M image-text pairs and 20 vision-language tasks, including many VQA tasks. We use no additional annotated data when finetuning PROMPTCAP.

PROMPTCAP captions give consistent gains over generic captions. Table 3 measures the performance benefit of PROMPTCAP on the OK-VQA, A-OKVQA, and VQAv2 validation sets, focusing on the gap between using PROMPTCAP captions and generic OFA-Cap captions. PROMPTCAP improves over generic captions on all three datasets. Specifically, with GPT-3