HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face

HuggingGPT: 用ChatGPT和Hugging Face上的模型解决AI任务

Yongliang Shen $^{1,2,* }$ , Kaitao $\mathbf{Song^{2,* ,\dagger}}$ , Xu Tan2, Dongsheng $\mathbf{Li^{2}}$ , Weiming $\mathbf{L}\mathbf{u}^{1,\dagger}$ , Yueting Zhuang1,† Zhejiang University 1, Microsoft Research Asia2 {syl, luwm, yzhuang}@zju.edu.cn, {kaitaosong, xuta, dongsli}@microsoft.com

沈永亮$^{1,2,* }$、宋凯涛$\mathbf{Song^{2,* ,\dagger}}$、谭旭2、李东升$\mathbf{Li^{2}}$、卢卫明$\mathbf{L}\mathbf{u}^{1,\dagger}$、庄越挺1,†

浙江大学1,微软亚洲研究院2

{syl, luwm, yzhuang}@zju.edu.cn, {kaitaosong, xuta, dongsli}@microsoft.com

https://github.com/microsoft/JARVIS

https://github.com/microsoft/JARVIS

Abstract

摘要

Solving complicated AI tasks with different domains and modalities is a key step toward artificial general intelligence. While there are numerous AI models available for various domains and modalities, they cannot handle complicated AI tasks autonomously. Considering large language models (LLMs) have exhibited exceptional abilities in language understanding, generation, interaction, and reasoning, we advocate that LLMs could act as a controller to manage existing AI models to solve complicated AI tasks, with language serving as a generic interface to empower this. Based on this philosophy, we present HuggingGPT, an LLM-powered agent that leverages LLMs (e.g., ChatGPT) to connect various AI models in machine learning communities (e.g., Hugging Face) to solve AI tasks. Specifically, we use ChatGPT to conduct task planning when receiving a user request, select models according to their function descriptions available in Hugging Face, execute each subtask with the selected AI model, and summarize the response according to the execution results. By leveraging the strong language capability of ChatGPT and abundant AI models in Hugging Face, HuggingGPT can tackle a wide range of sophisticated AI tasks spanning different modalities and domains and achieve impressive results in language, vision, speech, and other challenging tasks, which paves a new way towards the realization of artificial general intelligence.

解决跨领域和多模态的复杂AI任务是实现通用人工智能的关键一步。虽然已有众多针对不同领域和模态的AI模型,但它们无法自主处理复杂AI任务。鉴于大语言模型(LLM)在语言理解、生成、交互和推理方面展现出卓越能力,我们提出可以让大语言模型作为控制器来管理现有AI模型,并以语言作为通用接口来实现这一目标。基于这一理念,我们推出了HuggingGPT——一个由大语言模型(如ChatGPT)驱动的AI智能体,通过连接机器学习社区(如Hugging Face)中的各类AI模型来解决AI任务。具体而言,当收到用户请求时,我们使用ChatGPT进行任务规划,根据Hugging Face上提供的功能描述选择相应模型,用选定模型执行每个子任务,并根据执行结果汇总响应。通过结合ChatGPT强大的语言能力和Hugging Face丰富的AI模型库,HuggingGPT能够处理涵盖不同模态和领域的复杂AI任务,在语言、视觉、语音等挑战性任务中取得显著成果,这为实现通用人工智能开辟了新途径。

1 Introduction

1 引言

Large language models (LLMs) [1, 2, 3, 4, 5, 6], such as ChatGPT, have attracted substantial attention from both academia and industry, due to their remarkable performance on various natural language processing (NLP) tasks. Based on large-scale pre-training on massive text corpora and reinforcement learning from human feedback [2], LLMs can exhibit superior capabilities in language understanding, generation, and reasoning. The powerful capability of LLMs also drives many emergent research topics (e.g., in-context learning [1, 7, 8], instruction learning [9, 10, 11, 12, 13, 14], and chain-ofthought prompting [15, 16, 17, 18]) to further investigate the huge potential of LLMs, and brings unlimited possibilities for us for advancing artificial general intelligence.

大语言模型 (LLMs) [1, 2, 3, 4, 5, 6](如 ChatGPT)因其在各种自然语言处理 (NLP) 任务中的卓越表现,吸引了学术界和工业界的广泛关注。基于海量文本语料的大规模预训练和人类反馈的强化学习 [2],大语言模型展现出卓越的语言理解、生成和推理能力。其强大能力也催生了许多新兴研究课题(例如上下文学习 [1, 7, 8]、指令学习 [9, 10, 11, 12, 13, 14] 和思维链提示 [15, 16, 17, 18]),以进一步探索大语言模型的巨大潜力,并为推动通用人工智能 (AGI) 带来无限可能。

Despite these great successes, current LLM technologies are still imperfect and confront some urgent challenges on the way to building an advanced AI system. We discuss them from these aspects: 1) Limited to the input and output forms of text generation, current LLMs lack the ability to process complex information such as vision and speech, regardless of their significant achievements in NLP tasks; 2) In real-world scenarios, some complex tasks are usually composed of multiple sub-tasks, and thus require the scheduling and cooperation of multiple models, which are also beyond the capability of language models; 3) For some challenging tasks, LLMs demonstrate excellent results in zero-shot or few-shot settings, but they are still weaker than some experts (e.g., fine-tuned models). How to address these issues could be the critical step for LLMs toward artificial general intelligence.

尽管取得了这些巨大成功,当前的大语言模型技术仍不完善,在构建先进AI系统的道路上仍面临一些紧迫挑战。我们从以下方面进行探讨:1) 受限于文本生成的输入输出形式,当前大语言模型缺乏处理视觉、语音等复杂信息的能力,尽管它们在NLP任务中表现卓越;2) 现实场景中,复杂任务通常由多个子任务构成,需要调度和协调多个模型协作完成,这超出了语言模型的能力范围;3) 对于某些挑战性任务,大语言模型在零样本或少样本设置下表现优异,但仍弱于专业模型(如微调模型)。解决这些问题可能是大语言模型迈向通用人工智能的关键一步。

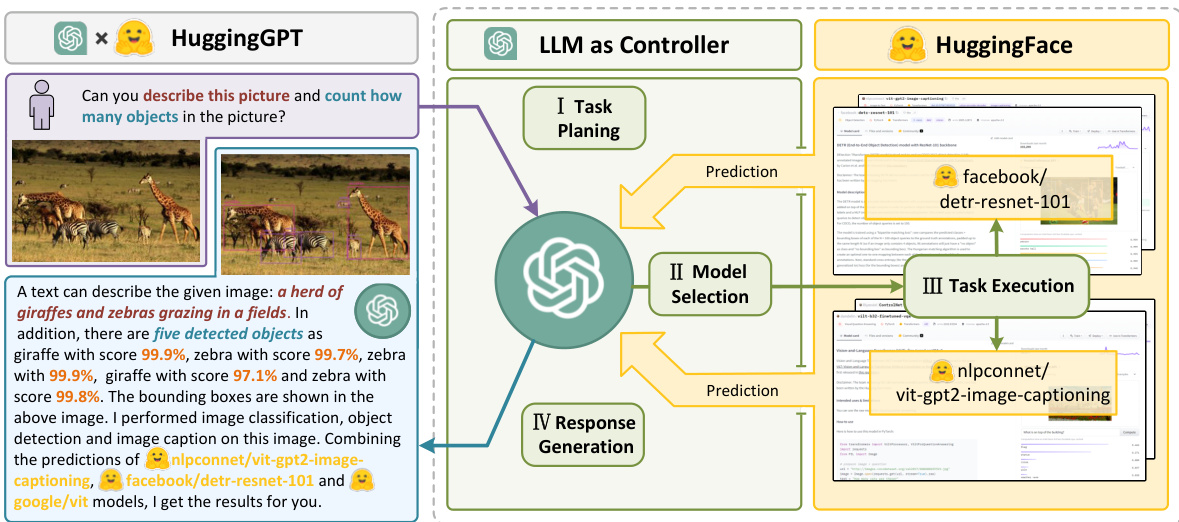

Figure 1: Language serves as an interface for LLMs (e.g., ChatGPT) to connect numerous AI models (e.g., those in Hugging Face) for solving complicated AI tasks. In this concept, an LLM acts as a controller, managing and organizing the cooperation of expert models. The LLM first plans a list of tasks based on the user request and then assigns expert models to each task. After the experts execute the tasks, the LLM collects the results and responds to the user.

图 1: 语言作为大语言模型 (LLM) (例如 ChatGPT) 连接众多 AI 模型 (例如 Hugging Face 中的模型) 以解决复杂 AI 任务的接口。在这一概念中,大语言模型充当控制器角色,负责管理和协调专家模型的协作。大语言模型首先根据用户请求规划任务列表,随后为每个任务分配合适的专家模型。在专家模型执行任务后,大语言模型汇总结果并反馈给用户。

In this paper, we point out that in order to handle complicated AI tasks, LLMs should be able to coordinate with external models to harness their powers. Hence, the pivotal question is how to choose suitable middleware to bridge the connections between LLMs and AI models. To tackle this issue, we notice that each AI model can be described in the form of language by summarizing its function. Therefore, we introduce a concept: “Language as a generic interface for LLMs to collaborate with AI models”. In other words, by incorporating these model descriptions into prompts, LLMs can be considered as the brain to manage AI models such as planning, scheduling, and cooperation. As a result, this strategy empowers LLMs to invoke external models for solving AI tasks. However, when it comes to integrating multiple AI models into LLMs, another challenge emerges: solving numerous AI tasks needs collecting a large number of high-quality model descriptions, which in turn requires heavy prompt engineering. Coincidentally, we notice that some public ML communities usually offer a wide range of applicable models with well-defined model descriptions for solving specific AI tasks such as language, vision, and speech. These observations bring us some inspiration: Can we link LLMs (e.g., ChatGPT) with public ML communities (e.g., GitHub, Hugging Face 1, etc) for solving complex AI tasks via a language-based interface?

本文指出,为处理复杂AI任务,大语言模型(LLM)需具备协调外部模型的能力。因此核心问题在于如何选择合适中间件来桥接LLM与AI模型。我们发现每个AI模型均可通过功能总结以语言形式描述,由此提出概念:"语言作为LLM与AI模型协作的通用接口"。具体而言,将这些模型描述整合至提示词(prompt)后,LLM可充当管理AI模型的大脑,执行规划、调度与合作等职能。该策略使LLM能够调用外部模型解决AI任务。

然而当涉及多个AI模型集成时,新挑战随之产生:解决大量AI任务需要收集海量高质量模型描述,这意味着繁重的提示词工程。值得注意的是,公共机器学习社区(如GitHub、Hugging Face等)通常提供各类功能明确的现成模型,专门解决语言、视觉、语音等特定AI任务。这些观察启发我们思考:能否通过基于语言的接口,将LLM(如ChatGPT)与公共机器学习社区连接起来以解决复杂AI任务?

In this paper, we propose an LLM-powered agent named HuggingGPT to autonomously tackle a wide range of complex AI tasks, which connects LLMs (i.e., ChatGPT) and the ML community (i.e., Hugging Face) and can process inputs from different modalities. More specifically, the LLM acts as a brain: on one hand, it disassembles tasks based on user requests, and on the other hand, assigns suitable models to the tasks according to the model description. By executing models and integrating results in the planned tasks, HuggingGPT can autonomously fulfill complex user requests. The whole process of HuggingGPT, illustrated in Figure 1, can be divided into four stages:

本文提出了一种名为HuggingGPT的大语言模型驱动智能体,能够自主处理各类复杂AI任务。该系统将大语言模型(如ChatGPT)与机器学习社区(如Hugging Face)相连接,可处理多模态输入。具体而言,大语言模型充当大脑角色:一方面根据用户请求分解任务,另一方面依据模型描述为任务分配合适的模型。通过执行模型并整合规划任务中的结果,HuggingGPT能够自主完成复杂的用户请求。如图1所示,HuggingGPT的完整工作流程可分为四个阶段:

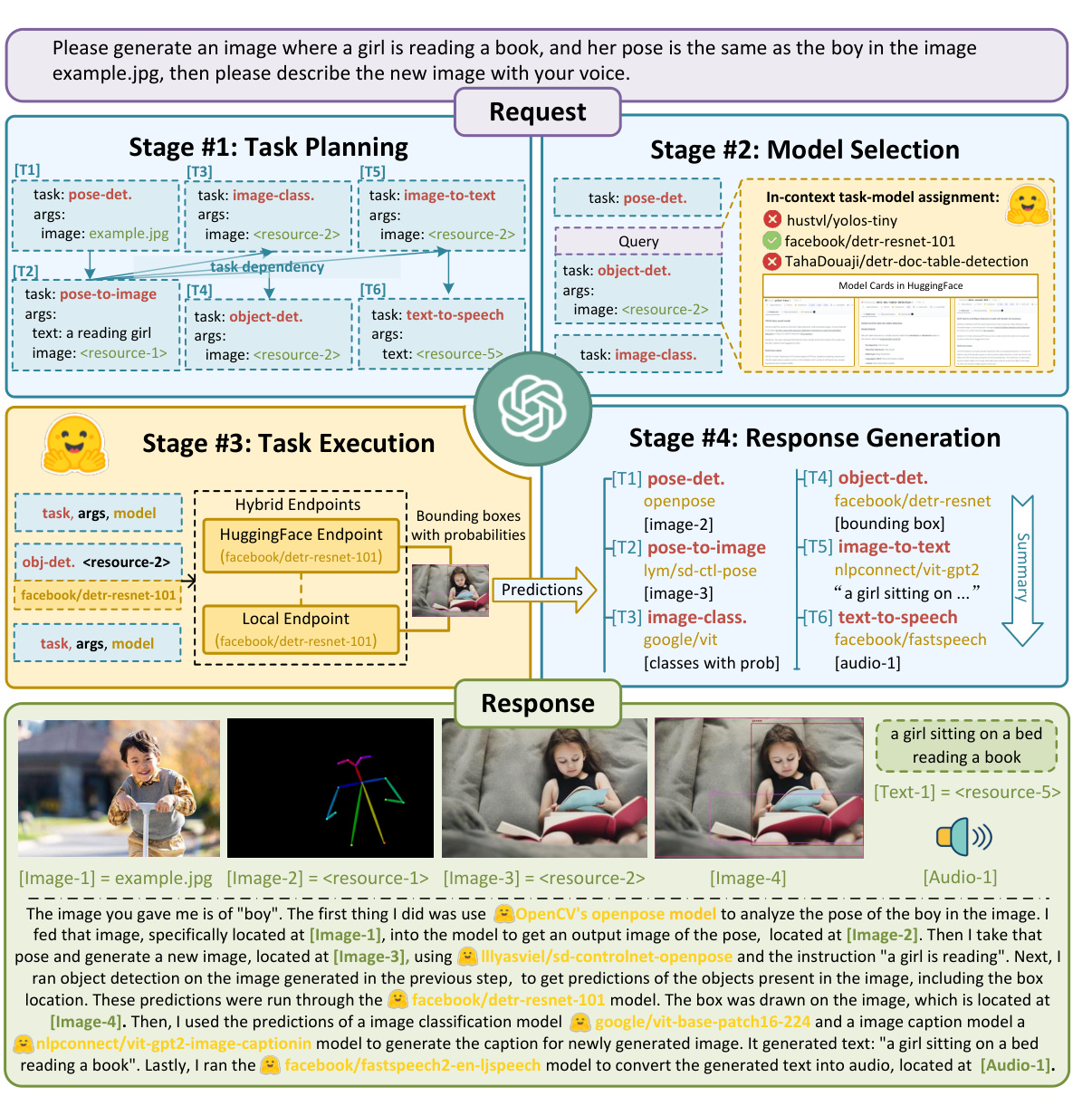

Figure 2: Overview of HuggingGPT. With an LLM (e.g., ChatGPT) as the core controller and the expert models as the executors, the workflow of HuggingGPT consists of four stages: 1) Task planning: LLM parses the user request into a task list and determines the execution order and resource dependencies among tasks; 2) Model selection: LLM assigns appropriate models to tasks based on the description of expert models on Hugging Face; 3) Task execution: Expert models on hybrid endpoints execute the assigned tasks; 4) Response generation: LLM integrates the inference results of experts and generates a summary of workflow logs to respond to the user.

图 2: HuggingGPT概述。以大语言模型(如ChatGPT)作为核心控制器,专家模型作为执行器,HuggingGPT的工作流程包含四个阶段: 1) 任务规划: 大语言模型将用户请求解析为任务列表,并确定任务间的执行顺序和资源依赖关系; 2) 模型选择: 大语言模型根据Hugging Face上专家模型的描述为任务分配合适的模型; 3) 任务执行: 混合终端上的专家模型执行分配的任务; 4) 响应生成: 大语言模型整合专家推理结果并生成工作流日志摘要以响应用户。

• Response Generation: Finally, ChatGPT is utilized to integrate the predictions from all models and generate responses for users.

• 响应生成:最终,利用ChatGPT整合所有模型的预测结果,为用户生成响应。

Benefiting from such a design, HuggingGPT can automatically generate plans from user requests and use external models, enabling it to integrate multimodal perceptual capabilities and tackle various complex AI tasks. More notably, this pipeline allows HuggingGPT to continually absorb the powers from task-specific experts, facilitating the growth and s cal ability of AI capabilities.

得益于这样的设计,HuggingGPT能够根据用户请求自动生成计划并调用外部模型,从而整合多模态感知能力以应对各类复杂AI任务。更值得注意的是,该流程使HuggingGPT能持续吸收领域专家的能力,促进AI能力的增长与扩展。

Overall, our contributions can be summarized as follows:

总体而言,我们的贡献可归纳如下:

- To complement the advantages of large language models and expert models, we propose HuggingGPT with an inter-model cooperation protocol. HuggingGPT applies LLMs as the brain for planning and decision, and automatically invokes and executes expert models for each specific task, providing a new way for designing general AI solutions.

- 为结合大语言模型 (Large Language Model) 和专家模型的优势,我们提出了HuggingGPT及其跨模型协作协议。HuggingGPT将大语言模型作为规划与决策的核心,并自动调用和执行专家模型处理各项具体任务,为设计通用人工智能 (AGI) 解决方案提供了新思路。

2 Related Works

2 相关工作

In recent years, the field of natural language processing (NLP) has been revolutionized by the emergence of large language models (LLMs) [1, 2, 3, 4, 5, 19, 6], exemplified by models such as GPT-3 [1], GPT-4 [20], PaLM [3], and LLaMa [6]. LLMs have demonstrated impressive capabilities in zero-shot and few-shot tasks, as well as more complex tasks such as mathematical problems and commonsense reasoning, due to their massive corpus and intensive training computation. To extend the scope of large language models (LLMs) beyond text generation, contemporary research can be divided into two branches: 1) Some works have devised unified multimodal language models for solving various AI tasks [21, 22, 23]. For example, Flamingo [21] combines frozen pre-trained vision and language models for perception and reasoning. BLIP-2 [22] utilizes a Q-former to harmonize linguistic and visual semantics, and Kosmos-1 [23] incorporates visual input into text sequences to amalgamate linguistic and visual inputs. 2) Recently, some researchers started to investigate the integration of using tools or models in LLMs [24, 25, 26, 27, 28]. Toolformer [24] is the pioneering work to introduce external API tags within text sequences, facilitating the ability of LLMs to access external tools. Consequently, numerous works have expanded LLMs to encompass the visual modality. Visual ChatGPT [26] fuses visual foundation models, such as BLIP [29] and ControlNet [30], with LLMs. Visual Programming [31] and ViperGPT [25] apply LLMs to visual objects by employing programming languages, parsing visual queries into interpret able steps expressed as Python code. More discussions about related works are included in Appendix B.

近年来,自然语言处理(NLP)领域因大语言模型(LLM)[1,2,3,4,5,19,6]的出现而发生革命性变革,代表性模型包括GPT-3[1]、GPT-4[20]、PaLM[3]和LLaMa[6]。得益于海量语料库和密集训练计算,大语言模型在零样本和少样本任务,以及数学问题和常识推理等更复杂任务中展现出惊人能力。

为扩展大语言模型的应用范围,当前研究可分为两个方向:1)部分研究设计了统一的多模态语言模型来解决各类AI任务[21,22,23]。例如Flamingo[21]结合冻结预训练的视觉与语言模型进行感知推理;BLIP-2[22]通过Q-former协调语言与视觉语义;Kosmos-1[23]则将视觉输入融入文本序列以实现多模态融合。2)近期有研究者开始探索在LLM中集成工具或模型的方法[24,25,26,27,28]。Toolformer[24]开创性地在文本序列中引入外部API标记,使LLM具备调用外部工具的能力。

基于此,大量研究将LLM扩展至视觉模态:Visual ChatGPT[26]融合了BLIP[29]、ControlNet[30]等视觉基础模型;Visual Programming[31]和ViperGPT[25]则通过Python语言将视觉查询解析为可执行代码步骤。更多相关工作讨论详见附录B。

Distinct from these approaches, HuggingGPT advances towards more general AI capabilities in the following aspects: 1) HuggingGPT uses the LLM as the controller to route user requests to expert models, effectively combining the language comprehension capabilities of the LLM with the expertise of other expert models; 2) The mechanism of HuggingGPT allows it to address tasks in any modality or any domain by organizing cooperation among models through the LLM. Benefiting from the design of task planning in HuggingGPT, our system can automatically and effectively generate task procedures and solve more complex problems; 3) HuggingGPT offers a more flexible approach to model selection, which assigns and orchestrates tasks based on model descriptions. By providing only the model descriptions, HuggingGPT can continuously and conveniently integrate diverse expert models from AI communities, without altering any structure or prompt settings. This open and continuous manner brings us one step closer to realizing artificial general intelligence.

与这些方法不同,HuggingGPT在以下方面推进了更通用的AI能力:1) HuggingGPT使用大语言模型作为控制器,将用户请求路由到专家模型,有效结合了大语言模型的语言理解能力与其他专家模型的专业知识;2) HuggingGPT的机制使其能够通过大语言模型组织模型间的协作,处理任何模态或领域的任务。得益于HuggingGPT的任务规划设计,我们的系统可以自动高效地生成任务流程并解决更复杂的问题;3) HuggingGPT提供了更灵活的模型选择方法,根据模型描述分配和编排任务。仅需提供模型描述,HuggingGPT就能持续便捷地集成来自AI社区的各种专家模型,而无需改变任何结构或提示设置。这种开放持续的运作方式让我们离实现通用人工智能更近一步。

3 HuggingGPT

3 HuggingGPT

HuggingGPT is a collaborative system for solving AI tasks, composed of a large language model (LLM) and numerous expert models from ML communities. Its workflow includes four stages: task planning, model selection, task execution, and response generation, as shown in Figure 2. Given a user request, our HuggingGPT, which adopts an LLM as the controller, will automatically deploy the whole workflow, thereby coordinating and executing the expert models to fulfill the target. Table 1 presents the detailed prompt design in our HuggingGPT. In the following subsections, we will introduce the design of each stage.

HuggingGPT是一个用于解决AI任务的协作系统,由大语言模型(LLM)和来自ML社区的众多专家模型组成。其工作流程包括四个阶段:任务规划、模型选择、任务执行和响应生成,如图2所示。给定用户请求后,我们采用LLM作为控制器的HuggingGPT将自动部署整个工作流程,从而协调和执行专家模型以实现目标。表1展示了HuggingGPT中的详细提示设计。在接下来的小节中,我们将介绍每个阶段的设计。

3.1 Task Planning

3.1 任务规划

Generally, in real-world scenarios, user requests usually encompass some intricate intentions and thus need to orchestrate multiple sub-tasks to fulfill the target. Therefore, we formulate task planning as the first stage of HuggingGPT, which aims to use LLM to analyze the user request and then decompose it into a collection of structured tasks. Moreover, we require the LLM to determine dependencies and execution orders for these decomposed tasks, to build their connections. To enhance the efficacy of task planning in LLMs, HuggingGPT employs a prompt design, which consists of specification-based instruction and demonstration-based parsing. We introduce these details in the following paragraphs.

通常在实际场景中,用户请求往往包含一些复杂意图,因此需要协调多个子任务来实现目标。为此,我们将任务规划设定为HuggingGPT的第一阶段,旨在利用大语言模型分析用户请求并将其分解为结构化任务集合。此外,我们要求大语言模型确定这些分解任务的依赖关系和执行顺序,以建立它们之间的关联。为提升大语言模型在任务规划中的效能,HuggingGPT采用了包含规范指令和示例解析的提示设计。下文将详细介绍这些内容。

Table 1: The details of the prompt design in HuggingGPT. In the prompts, we set some injectable slots such as {{ Demonstrations }} and {{ Candidate Models }}. These slots are uniformly replaced with the corresponding text before being fed into the LLM.

| Prompt #1 Task Planning Stage - The AI assistant performs task parsing on user input, generating a list | ||

| Planning Task | of tasks with the following format: [{"task": task, "id", task_ id, "dep": dependency_ task_ ids, "args": {"text": text, "image": URL, "audio": URL, "video": URL}↓]. The "dep" field denotes the id of the previous task which generates a new resource upon which the current task relies. The tag " | |

| the dependency task with the corresponding task_ id. The task must be selected from the following options:{ Available Task List }}.Please note that there exists a logical connections and order between the tasks. In case the user input cannot be parsed, an empty JSON response should be provided. Here are several cases for your reference: {{ Demonstrations ↓}. To assist with task planning, the chat history is available as {{ Chat Logs ↓}, where you can trace the user-mentioned resources and incorporate them into the task planning stage. Demonstrations Can you tell me how many [{"task": "object-detection", "id": O, "dep": [-1], "args": {"im | ||

| objects in e1.jpg? age": "e1.jpg" )1] [{"task": "image-to-text", "id": O, "dep":[-1], "args": {"im In e2.jpg, what's the animal and what's it doing? | ||

| First generate a HED image of e3.jpg, then based on the | age": "e2.jpg" }}, {"task":"image-cls", "id": 1, "dep": [-1], "args": {"image": "e2.jpg" }}, {"task":"object-detection", "id": | |

| "what's the animal doing?", "image": "e2.jpg" ↓1] | ||

| [{"task": "pose-detection", "id": O, "dep": [-1], "args": {"im | ||

| HED image and a text “a girl reading a book", create a new image as a response. | ||

| source>-0" }}] | ||

| Model Selection | Prompt #2 Model Selection Stage -Given the user request and the call command, the AI assistant helps the | |

| user to select a suitable model from a list of models to process the user request. The AI assistant merely outputs the model id of the most appropriate model. The output must be in a strict JSON format: {"id": "id", "reason": "your detail reason for the choice"}. We have a list of models for | ||

| you to choose from {{ Candidate Models }}. Please select one model from the list. CandidateModels | ||

| {"model_ id": model id #1, "metadata": meta-info #1, "description": description of model #1} {"model_ id": model id #2, "metadata": meta-info #2, "description": description of model #2} | ||

| Generation Response ( the task process and show your analysis and model inference results to the user in the first person. If inference results contain a file path, must tell the user the complete file path. If there is nothing in the results, please tell me you can't make it. | · {"model_ id": model id #K, "metadata": meta-info #K,"description": description of model #K} Prompt | |

| #4 Response Generation Stage - With the input and the inference results, the AI assistant needs to describe the process and results. The previous stages can be formed as - User Input: {{ User Input 1, Task Planning: {{ Tasks }}, Model Selection: {{ Model Assignment ↓}, Task Execution: {{ Predictions ↓1. You must first answer the user's request in a straightforward manner. Then describe | ||

表 1: HuggingGPT中提示设计的细节。在提示中,我们设置了一些可注入的插槽,如{{ Demonstrations }}和{{ Candidate Models }}。这些插槽在输入到大语言模型之前会被统一替换为相应的文本。

| 提示 #1 任务规划阶段 - AI助手对用户输入进行任务解析,生成一个任务列表 |

|---|

| * * 规划任务* * |

| 任务格式如下:[{"task": task, "id", task_ id, "dep": dependency_ task_ ids, "args": {"text": text, "image": URL, "audio": URL, "video": URL}}]。 "dep"字段表示当前任务所依赖的、生成新资源的先前任务的id。"-task_ id"表示来自对应task_ id的依赖任务所生成的文本、图像、音频或视频。任务必须从以下选项中选择:{ Available Task List }。请注意,任务之间存在逻辑关联和顺序。如果无法解析用户输入,则应返回空JSON响应。以下是几个参考案例:{{ Demonstrations }}。为协助任务规划,聊天记录{{ Chat Logs }}可供查阅,您可以追踪用户提到的资源并将其纳入任务规划阶段。 |

| * * 示例* * |

| 你能告诉我e1.jpg中有多少个物体吗? |

| 在e2.jpg中,动物是什么?它在做什么? |

| 首先生成e3.jpg的HED图像,然后基于HED图像和文本"一个女孩在读书",创建一张新图像作为响应。 |

| * * 模型选择* * |

| 提示 #2 模型选择阶段 - 给定用户请求和调用命令,AI助手帮助用户从模型列表中选择合适的模型来处理用户请求。AI助手仅输出最合适模型的id。输出必须为严格的JSON格式:{"id": "id", "reason": "选择该模型的详细原因"}。我们有一个模型列表供您选择{{ Candidate Models }}。请从列表中选择一个模型。 |

| * * 候选模型* * |

| {"model_ id": model id #1, "metadata": meta-info #1, "description": model #1的描述} |

| {"model_ id": model id #2, "metadata": meta-info #2, "description": model #2的描述} |

| ... |

| {"model_ id": model id #K, "metadata": meta-info #K,"description": model #K的描述} |

| * * 生成响应* * |

| 提示 #4 响应生成阶段 - 根据输入和推理结果,AI助手需要描述过程和结果。先前阶段可表示为:用户输入:{{ User Input }}, 任务规划:{{ Tasks }}, 模型选择:{{ Model Assignment }}, 任务执行:{{ Predictions }}。您必须首先以直接的方式回答用户的请求。然后描述 |

Specification-based Instruction To better represent the expected tasks of user requests and use them in the subsequent stages, we expect the LLM to parse tasks by adhering to specific specifications (e.g., JSON format). Therefore, we design a standardized template for tasks and instruct the LLM to conduct task parsing through slot filing. As shown in Table 1, the task parsing template comprises four slots ("task", "id", "dep", and "args") to represent the task name, unique identifier, dependencies and arguments. Additional details for each slot can be found in the template description (see the Appendix A.1.1). By adhering to these task specifications, HuggingGPT can automatically employ the LLM to analyze user requests and parse tasks accordingly.

基于规范的指令

为了更好地表示用户请求的预期任务并在后续阶段使用它们,我们希望大语言模型(LLM)能通过遵循特定规范(如JSON格式)来解析任务。为此,我们设计了一个标准化的任务模板,并指导LLM通过槽位填充进行任务解析。如表1所示,任务解析模板包含四个槽位("task"、"id"、"dep"和"args"),分别表示任务名称、唯一标识符、依赖关系和参数。每个槽位的更多细节可在模板描述中找到(见附录A.1.1)。通过遵循这些任务规范,HuggingGPT可以自动利用LLM来分析用户请求并相应地解析任务。

Demonstration-based Parsing To better understand the intention and criteria for task planning, HuggingGPT incorporates multiple demonstrations in the prompt. Each demonstration consists of a user request and its corresponding output, which represents the expected sequence of parsed tasks. By incorporating dependencies among tasks, these demonstrations aid HuggingGPT in understanding the logical connections between tasks, facilitating accurate determination of execution order and identification of resource dependencies. The details of our demonstrations is presented in Table 1.

基于示例的解析

为了更好地理解任务规划的意图和标准,HuggingGPT在提示中融入了多个示例。每个示例包含用户请求及其对应输出(即预期的解析任务序列)。通过展示任务间的依赖关系,这些示例帮助HuggingGPT理解任务间的逻辑关联,从而准确确定执行顺序并识别资源依赖。具体示例如表1所示。

表1:

Furthermore, to support more complex scenarios (e.g., multi-turn dialogues), we include chat logs in the prompt by appending the following instruction: “To assist with task planning, the chat history is available as {{ Chat Logs }}, where you can trace the user-mentioned resources and incorporate them into the task planning.”. Here {{ Chat Logs }} represents the previous chat logs. This design allows HuggingGPT to better manage context and respond to user requests in multi-turn dialogues.

此外,为支持更复杂场景(如多轮对话),我们通过在提示中追加以下指令来包含聊天记录:"为协助任务规划,聊天历史记录以{{ Chat Logs }}形式提供,您可追溯用户提及的资源并将其纳入任务规划。" 此处{{ Chat Logs }}代表先前的聊天记录。该设计使HuggingGPT能更好地管理上下文并响应多轮对话中的用户请求。

3.2 Model Selection

3.2 模型选择

Following task planning, HuggingGPT proceeds to the task of matching tasks with models, i.e., selecting the most appropriate model for each task in the parsed task list. To this end, we use model descriptions as the language interface to connect each model. More specifically, we first gather the descriptions of expert models from the ML community (e.g., Hugging Face) and then employ a dynamic in-context task-model assignment mechanism to choose models for the tasks. This strategy enables incremental model access (simply providing the description of the expert models) and can be more open and flexible to use ML communities. More details are introduced in the next paragraph.

在任务规划之后,HuggingGPT会进行任务与模型的匹配工作,即为解析出的任务列表中的每个任务选择最合适的模型。为此,我们使用模型描述作为连接每个模型的语言接口。具体来说,我们首先从机器学习社区(如Hugging Face)收集专家模型的描述,然后采用动态上下文任务-模型分配机制来为任务选择模型。这一策略实现了增量式模型访问(只需提供专家模型的描述),并能更开放灵活地利用机器学习社区资源。更多细节将在下一段介绍。

In-context Task-model Assignment We formulate the task-model assignment as a single-choice problem, where available models are presented as options within a given context. Generally, based on the provided user instruction and task information in the prompt, HuggingGPT is able to select the most appropriate model for each parsed task. However, due to the limits of maximum context length, it is not feasible to encompass the information of all relevant models within one prompt. To mitigate this issue, we first filter out models based on their task type to select the ones that match the current task. Among these selected models, we rank them based on the number of downloads 2 on Hugging Face and then select the top $K$ models as the candidates. This strategy can substantially reduce the token usage in the prompt and effectively select the appropriate models for each task.

上下文任务-模型分配

我们将任务-模型分配问题表述为单项选择问题,其中可用模型作为给定上下文中的选项呈现。通常情况下,基于提示中提供的用户指令和任务信息,HuggingGPT能够为每个解析的任务选择最合适的模型。然而,由于最大上下文长度的限制,无法在一个提示中包含所有相关模型的信息。为缓解这一问题,我们首先根据模型的任务类型进行筛选,选择与当前任务匹配的模型。在这些选定的模型中,我们根据Hugging Face上的下载量[2]对其进行排序,然后选择前$K$个模型作为候选。这一策略可以显著减少提示中的token使用量,并有效为每个任务选择合适的模型。

3.3 Task Execution

3.3 任务执行

Once a specific model is assigned to a parsed task, the next step is to execute the task (i.e., perform model inference). In this stage, HuggingGPT will automatically feed these task arguments into the models, execute these models to obtain the inference results, and then send them back to the LLM. It is necessary to emphasize the issue of resource dependencies at this stage. Since the outputs of the prerequisite tasks are dynamically produced, HuggingGPT also needs to dynamically specify the dependent resources for the task before launching it. Therefore, it is challenging to build the connections between tasks with resource dependencies at this stage.

为解析任务分配特定模型后,下一步是执行任务(即进行模型推理)。在此阶段,HuggingGPT会自动将这些任务参数输入模型,执行模型获取推理结果,并将结果返回给大语言模型。需要强调的是该阶段的资源依赖问题:由于前置任务的输出是动态生成的,HuggingGPT在启动任务前还需动态指定任务的依赖资源。因此,在此阶段建立具有资源依赖关系的任务连接具有挑战性。

Resource Dependency To address this issue, we use a unique symbol, “

资源依赖

为解决这一问题,我们使用特殊符号"

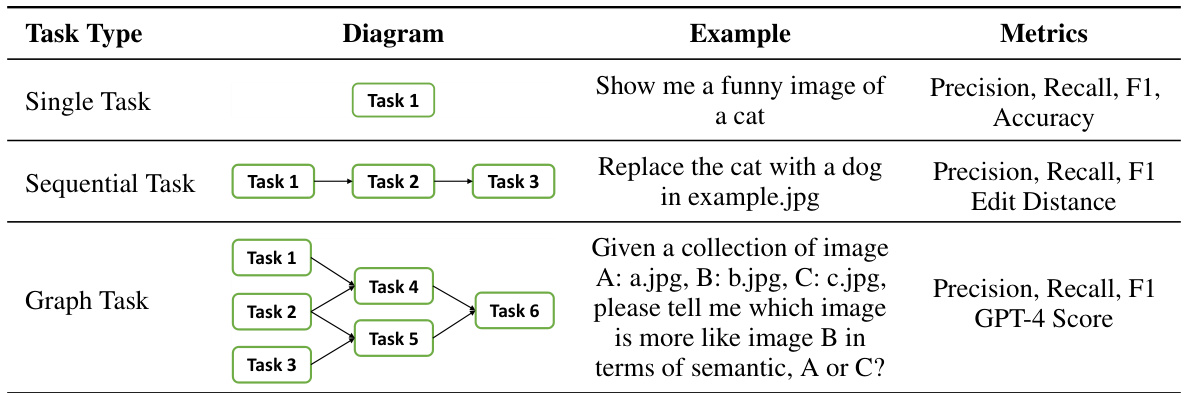

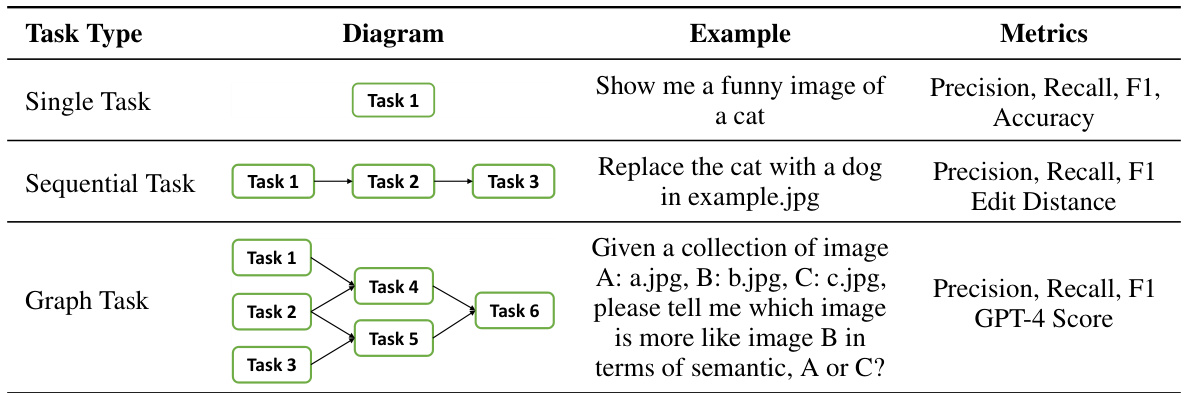

Table 2: Evaluation for task planning in different task types.

表 2: 不同任务类型中任务规划的效果评估。

Furthermore, for the remaining tasks without any resource dependencies, we will execute these tasks directly in parallel to further improve inference efficiency. This means that multiple tasks can be executed simultaneously if they meet the prerequisite dependencies. Additionally, we offer a hybrid inference endpoint to deploy these models for speedup and computational stability. For more details, please refer to Appendix A.1.3.

此外,对于没有任何资源依赖的剩余任务,我们将直接并行执行这些任务以进一步提高推理效率。这意味着多个任务在满足前提依赖关系时可以同时执行。此外,我们还提供了一个混合推理端点来部署这些模型,以实现加速和计算稳定性。更多细节请参阅附录A.1.3。

3.4 Response Generation

3.4 响应生成

After all task executions are completed, HuggingGPT needs to generate the final responses. As shown in Table 1, HuggingGPT integrates all the information from the previous three stages (task planning, model selection, and task execution) into a concise summary in this stage, including the list of planned tasks, the selected models for the tasks, and the inference results of the models.

在所有任务执行完成后,HuggingGPT需要生成最终响应。如表1所示,HuggingGPT在此阶段将前三个阶段(任务规划、模型选择和任务执行)的所有信息整合成简洁摘要,包括规划任务列表、任务所选模型以及模型的推理结果。

Most important among them are the inference results, which are the key points for HuggingGPT to make the final decisions. These inference results are presented in a structured format, such as bounding boxes with detection probabilities in the object detection model, answer distributions in the question-answering model, etc. HuggingGPT allows LLM to receive these structured inference results as input and generate responses in the form of friendly human language. Moreover, instead of simply aggregating the results, LLM generates responses that actively respond to user requests, providing a reliable decision with a confidence level.

其中最重要的是推理结果,这是HuggingGPT做出最终决策的关键依据。这些推理结果以结构化格式呈现,例如目标检测模型中的检测概率边界框、问答模型中的答案分布等。HuggingGPT让大语言模型能够接收这些结构化推理结果作为输入,并以友好的人类语言形式生成响应。此外,大语言模型并非简单汇总结果,而是生成能主动回应用户需求的响应,同时提供带有置信度的可靠决策。

4 Experiments

4 实验

4.1 Settings

4.1 设置

In our experiments, we employed the gpt-3.5-turbo, text-davinci-003 and $\mathtt{g p t-4}$ variants of the GPT models as the main LLMs, which are publicly accessible through the OpenAI API 3. To enable more stable outputs of LLM, we set the decoding temperature to 0. In addition, to regulate the LLM output to satisfy the expected format (e.g., JSON format), we set the logit_ bias to 0.2 on the format constraints (e.g., “{” and “}”). We provide detailed prompts designed for the task planning, model selection, and response generation stages in Table 1, where ${{\nu a r i a b l e}}$ indicates the slot which needs to be populated with the corresponding text before being fed into the LLM.

在我们的实验中,我们采用了 GPT 模型的 gpt-3.5-turbo、text-davinci-003 和 $\mathtt{g p t-4}$ 变体作为主要的大语言模型,这些模型可通过 OpenAI API 3 公开访问。为了使大语言模型的输出更加稳定,我们将解码温度 (temperature) 设置为 0。此外,为了规范大语言模型的输出以满足预期格式(例如 JSON 格式),我们在格式约束(如“{”和“}”)上将 logit_ bias 设置为 0.2。我们在表 1 中提供了为任务规划、模型选择和响应生成阶段设计的详细提示,其中 ${{\nu a r i a b l e}}$ 表示在输入大语言模型之前需要用相应文本填充的占位符。

4.2 Qualitative Results

4.2 定性结果

Figure 1 and Figure 2 have shown two demonstrations of HuggingGPT. In Figure 1, the user request consists of two sub-tasks: describing the image and object counting. In response to the request, HuggingGPT planned three tasks: image classification, image captioning, and object detection, and launched the google/vit [32], nlpconnet/vit-gpt2-image-captioning [33], and facebook/detr-resnet-101 [34] models, respectively. Finally, HuggingGPT integrated the results of the model inference and generated responses (describing the image and providing the count of contained objects) to the user.

图 1 和图 2 展示了 HuggingGPT 的两个示例。在图 1 中,用户请求包含两个子任务:描述图像和对象计数。针对该请求,HuggingGPT 规划了三个任务:图像分类 (image classification)、图像描述 (image captioning) 和对象检测 (object detection),并分别启动了 google/vit [32]、nlpconnet/vit-gpt2-image-captioning [33] 和 facebook/detr-resnet-101 [34] 模型。最终,HuggingGPT 整合了模型推理结果,并向用户生成了响应(描述图像并提供包含对象的数量)。

A more detailed example is shown in Figure 2. In this case, the user’s request included three tasks: detecting the pose of a person in an example image, generating a new image based on that pose and specified text, and creating a speech describing the image. HuggingGPT parsed these into six tasks, including pose detection, text-to-image conditional on pose, object detection, image classification, image captioning, and text-to-speech. We observed that HuggingGPT can correctly orchestrate the execution order and resource dependencies among tasks. For instance, the pose conditional text-to-image task had to follow pose detection and use its output as input. After this, HuggingGPT selected the appropriate model for each task and synthesized the results of the model execution into a final response. For more demonstrations, please refer to the Appendix A.3.

图 2 展示了一个更详细的示例。用户请求包含三项任务:检测示例图像中人物的姿势、基于该姿势和指定文本生成新图像、以及创建描述图像的语音。HuggingGPT将其解析为六项子任务,包括姿势检测、姿势条件文本生成图像、物体检测、图像分类、图像描述生成和文本转语音。我们观察到HuggingGPT能正确编排任务间的执行顺序和资源依赖关系。例如,姿势条件文本生成图像任务必须在姿势检测之后执行,并将其输出作为输入。随后,HuggingGPT为每项任务选择合适模型,并将模型执行结果合成为最终响应。更多演示请参阅附录A.3。

4.3 Quantitative Evaluation

4.3 定量评估

In HuggingGPT, task planning plays a pivotal role in the whole workflow, since it determines which tasks will be executed in the subsequent pipeline. Therefore, we deem that the quality of task planning can be utilized to measure the capability of LLMs as a controller in HuggingGPT. For this purpose, we conduct quantitative evaluations to measure the capability of LLMs. Here we simplified the evaluation by only considering the task

在HuggingGPT中,任务规划在整个工作流程中起着关键作用,因为它决定了后续管道中将执行哪些任务。因此,我们认为可以利用任务规划的质量来衡量大语言模型作为HuggingGPT控制器的能力。为此,我们进行了定量评估来衡量大语言模型的能力。这里我们简化了评估,仅考虑任务

| LLM | Acc↑ | Pre ↑ | Recall ↑ | F1↑ |

| Alpaca-7b | 6.48 | 35.60 | 6.64 | 4.88 |

| Vicuna-7b | 23.86 | 45.51 | 26.51 | 29.44 |

| GPT-3.5 | 52.62 | 62.12 | 52.62 | 54.45 |

Table 3: Evaluation for the single task. “Acc” and “Pre” represents Accuracy and Precision.

| 大语言模型 (LLM) | 准确率 (Acc)↑ | 精确率 (Pre)↑ | 召回率 (Recall)↑ | F1值 (F1)↑ |

|---|---|---|---|---|

| Alpaca-7b | 6.48 | 35.60 | 6.64 | 4.88 |

| Vicuna-7b | 23.86 | 45.51 | 26.51 | 29.44 |

| GPT-3.5 | 52.62 | 62.12 | 52.62 | 54.45 |

表 3: 单任务评估结果。"Acc"和"Pre"分别代表准确率 (Accuracy) 和精确率 (Precision)。

type, without its associated arguments. To better conduct evaluations on task planning, we group tasks into three distinct categories (see Table 2) and formulate different metrics for them:

类型,不包括其相关参数。为了更好地对任务规划进行评估,我们将任务分为三个不同的类别(见表 2),并为它们制定不同的指标:

Dataset To conduct our evaluation, we invite some annotators to submit some requests. We collect these data as the evaluation dataset. We use GPT-4 to generate task planning as the pseudo labels, which cover single, sequential, and graph tasks. Furthermore, we invite some expert annotators to label task planning for some complex requests (46 examples) as a high-quality humanannotated dataset. We also plan to improve the

数据集

为进行评估,我们邀请了一些标注员提交请求。收集这些数据作为评估数据集。使用 GPT-4 生成任务规划作为伪标签,涵盖单任务、序列任务和图任务。此外,邀请专家标注员为部分复杂请求(46例)标注任务规划,形成高质量人工标注数据集。我们还计划改进——

| LLM | ↑ | Pre ↑ | Recall ↑ | F1↑ |

| Alpaca-7b | 0.83 | 22.27 | 23.35 | 22.80 |

| Vicuna-7b | 0.80 | 19.15 | 28.45 | 22.89 |

| GPT-3.5 | 0.54 | 61.09 | 45.15 | 51.92 |

Table 4: Evaluation for the sequential task. “ED” means Edit Distance.

| 大语言模型 (LLM) | ↑ | 准确率 (Pre) ↑ | 召回率 (Recall) ↑ | F1值 ↑ |

|---|---|---|---|---|

| Alpaca-7b | 0.83 | 22.27 | 23.35 | 22.80 |

| Vicuna-7b | 0.80 | 19.15 | 28.45 | 22.89 |

| GPT-3.5 | 0.54 | 61.09 | 45.15 | 51.92 |

表 4: 序列任务评估。"ED"表示编辑距离 (Edit Distance)。

quality and quantity of this dataset to further assist in evaluating the LLM’s planning capabilities, which remains a future work. More details about this dataset are in Appendix A.2. Using this dataset, we conduct experimental evaluations on various LLMs, including Alpaca-7b [37], Vicuna-7b [36], and GPT models, for task planning.

该数据集的质量和数量仍需进一步提升,以更好地评估大语言模型的规划能力,这将是未来的工作方向。关于此数据集的更多细节见附录A.2。基于该数据集,我们对Alpaca-7b [37]、Vicuna-7b [36]及GPT系列模型等多种大语言模型进行了任务规划能力的实验评估。

| LLM | GPT-4 Score ↑ | Pre ↑ | Recall ↑ | F1↑ |

| Alpaca-7b | 13.14 | 16.18 | 28.33 | 20.59 |

| Vicuna-7b | 19.17 | 13.97 | 28.08 | 18.66 |

| GPT-3.5 | 50.48 | 54.90 | 49.23 | 51.91 |

Table 5: Evaluation for the graph task.

| LLM | GPT-4 Score ↑ | Pre ↑ | Recall ↑ | F1↑ |

|---|---|---|---|---|

| Alpaca-7b | 13.14 | 16.18 | 28.33 | 20.59 |

| Vicuna-7b | 19.17 | 13.97 | 28.08 | 18.66 |

| GPT-3.5 | 50.48 | 54.90 | 49.23 | 51.91 |

表 5: 图任务评估。

Performance Tables 3, 4 and 5 show the planning capabilities of HuggingGPT on the three categories of GPT-4 annotated datasets, respectively. We observed that GPT-3.5 exhibits more prominent planning capabilities, outperforming the open-source LLMs Alpaca-7b and Vicuna-7b in terms of all types of

表 3、4 和 5 分别展示了 HuggingGPT 在 GPT-4 标注的三类数据集上的规划能力。我们观察到 GPT-3.5 展现出更突出的规划能力,在所有类型任务上均优于开源大语言模型 Alpaca-7b 和 Vicuna-7b。

user requests. Specifically, in more complex tasks (e.g., sequential and graph tasks), GPT-3.5 has shown absolute predominance over other LLMs. These results also demonstrate the evaluation of task planning can reflect the capability of LLMs as a controller. Therefore, we believe that developing technologies to improve the ability of LLMs in task planning is very important, and we leave it as a future research direction.

用户请求。具体而言,在更复杂的任务(如序列和图任务)中,GPT-3.5已展现出对其他大语言模型的绝对优势。这些结果也表明,任务规划能力的评估可以反映大语言模型作为控制器的性能。因此,我们认为开发提升大语言模型任务规划能力的技术至关重要,并将其作为未来的研究方向。

| LLM | Sequential Task | Graph Task | ||

| Acc↑ | ED ↓ | Acc↑ | F1↑ | |

| Alpaca-7b | 0 | 0.96 | 4.17 | 4.17 |

| Vicuna-7b | 7.45 | 0.89 | 10.12 | 7.84 |

| GPT-3.5 | 18.18 | 0.76 | 20.83 | 16.45 |

| GPT-4 | 41.36 | 0.61 | 58.33 | 49.28 |

Table 6: Evaluation on the human-annotated dataset.

| LLM | 序列任务 | 图任务 | ||

|---|---|---|---|---|

| Acc↑ | ED↓ | Acc↑ | F1↑ | |

| Alpaca-7b | 0 | 0.96 | 4.17 | 4.17 |

| Vicuna-7b | 7.45 | 0.89 | 10.12 | 7.84 |

| GPT-3.5 | 18.18 | 0.76 | 20.83 | 16.45 |

| GPT-4 | 41.36 | 0.61 | 58.33 | 49.28 |

表 6: 人工标注数据集上的评估结果。

Furthermore, we conduct experiments on the high-quality human-annotated dataset to obtain a more precise evaluation. Table 6 reports the comparisons on the human-annotated dataset. These results align with the aforementioned conclusion, highlighting that more powerful LLMs demonstrate better performance in task planning. Moreover, we compare the results between human annotations and GPT-4 annotations. We find that even though GPT-4 outperforms other LLMs, there

此外,我们在高质量人工标注数据集上进行了实验以获得更精确的评估结果。表6展示了人工标注数据集上的对比情况。这些结果与前述结论一致,表明更强大的大语言模型在任务规划方面表现更优。此外,我们还比较了人工标注与GPT-4标注的结果差异。研究发现尽管GPT-4优于其他大语言模型,但...

still remains a substantial gap when compared with human annotations. These observations further underscore the importance of enhancing the planning capabilities of LLMs.

与人类标注相比仍存在显著差距。这些观察结果进一步凸显了提升大语言模型规划能力的重要性。

4.4 Ablation Study

4.4 消融实验

| Demo Variety (#task types) | LLM | Single Task | Sequencial Task | Graph Task | ||

| Acc↑ | F1↑ | ED (%)← | F1↑ | |||

| 2 | GPT-3.5 | 43.31 | 48.29 | 71.27 | 32.15 | F1 ↑ 43.42 |

| GPT-4 | 65.59 | 67.08 | 47.17 | 55.13 | 53.96 | |

| 6 | GPT-3.5 | 51.31 | 51.81 | 60.81 | 43.19 | 58.51 |

| GPT-4 | 66.83 | 68.14 | 42.20 | 58.18 | 64.34 | |

| 10 | GPT-3.5 | 52.83 | 53.70 | 56.52 | 47.03 | 64.24 |

| GPT-4 | 67.52 | 71.05 | 39.32 | 60.80 | 66.90 | |

Table 7: Evaluation of task planning in terms of the variety of demonstrations. We refer to the variety of demonstrations as the number of different task types involved in the demonstrations.

| 演示多样性 (#任务类型) | 大语言模型 | 单任务 | 序列任务 | 图任务 | ||

|---|---|---|---|---|---|---|

| Acc↑ | F1↑ | ED (%)← | F1↑ | |||

| 2 | GPT-3.5 | 43.31 | 48.29 | 71.27 | 32.15 | F1 ↑ 43.42 |

| GPT-4 | 65.59 | 67.08 | 47.17 | 55.13 | 53.96 | |

| 6 | GPT-3.5 | 51.31 | 51.81 | 60.81 | 43.19 | 58.51 |

| GPT-4 | 66.83 | 68.14 | 42.20 | 58.18 | 64.34 | |

| 10 | GPT-3.5 | 52.83 | 53.70 | 56.52 | 47.03 | 64.24 |

| GPT-4 | 67.52 | 71.05 | 39.32 | 60.80 | 66.90 |

表 7: 不同演示多样性下的任务规划评估。我们将演示多样性定义为演示中涉及的不同任务类型的数量。

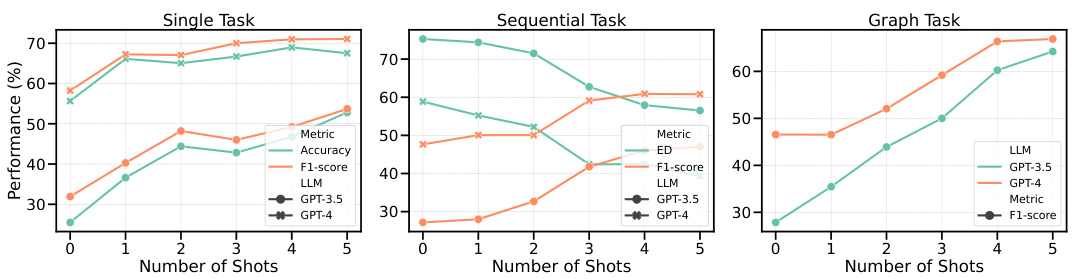

Figure 3: Evaluation of task planning with different numbers of demonstrations.

图 3: 不同演示数量下的任务规划评估。

As previously mentioned in our default setting, we apply few-shot demonstrations to enhance the capability of LLMs in understanding user intent and parsing task sequences. To better investigate the effect of demonstrations on our framework, we conducted a series of ablation studies from two perspectives: the number of demonstrations and the variety of demonstrations. Table 7 reports the planning results under the different variety of demonstrations. We observe that increasing the variety among demonstrations can moderately improve the performance of LLMs in conduct planning. Moreover, Figure 3 illustrates the results of task planning with different number of demonstrations. We can find that adding some demonstrations can slightly improve model performance but this improvement will be limited when the number is over 4 demonstrations. In the future, we will continue to explore more elements that can improve the capability of LLMs at different stages.

如前所述,在我们的默认设置中,我们采用少样本示例来增强大语言模型理解用户意图和解析任务序列的能力。为了更好地研究示例对框架的影响,我们从两个角度进行了一系列消融实验:示例数量和示例多样性。表7展示了不同示例多样性下的规划结果。我们发现增加示例间的多样性可以适度提升大语言模型执行规划的性能。此外,图3展示了不同示例数量下的任务规划结果。可以看到增加少量示例能略微提升模型性能,但当示例数量超过4个时,这种提升将趋于平缓。未来我们将继续探索更多能提升大语言模型各阶段能力的要素。

| LLM | Task Planning | ModelSelection | Response | ||

| Passing Rate ↑ | Rationality ↑ | PassingRate↑ | Rationality ↑ | SuccessRate↑ | |

| Alpaca-13b | 51.04 | 32.17 | 6.92 | ||

| Vicuna-13b | 79.41 | 58.41 | - | 一 | 15.64 |

| GPT-3.5 | 91.22 | 78.47 | 93.89 | 84.29 | 63.08 |

Table 8: Human Evaluation on different LLMs. We report two metrics, passing rate $(%)$ and rationality $(%)$ , in the task planning and model selection stages and report a straightforward success rate $(%)$ to evaluate whether the request raised by the user is finally resolved.

| LLM | 任务规划 | 模型选择 | 响应成功率 ↑ | ||

|---|---|---|---|---|---|

| 通过率 ↑ | 合理性 ↑ | 通过率 ↑ | 合理性 ↑ | ||

| Alpaca-13b | 51.04 | 32.17 | 6.92 | ||

| Vicuna-13b | 79.41 | 58.41 | - | - | 15.64 |

| GPT-3.5 | 91.22 | 78.47 | 93.89 | 84.29 | 63.08 |

表 8: 不同大语言模型的人类评估结果。我们在任务规划和模型选择阶段报告了两个指标:通过率 $(%)$ 和合理性 $(%)$,并直接报告成功率 $(%)$ 来评估用户提出的请求是否最终得到解决。

4.5 Human Evaluation

4.5 人工评估

In addition to objective evaluations, we also invite human experts to conduct a subjective evaluation in our experiments. We collected 130 diverse requests to evaluate the performance of HuggingGPT at various stages, including task planning, model selection, and final response generation. We designed three evaluation metrics, namely passing rate, rationality, and success rate. The definitions of each metric can be found in Appendix A.1.6. The results are reported in Table 8. From Table 8, we can observe similar conclusions that GPT-3.5 can significantly outperform open-source LLMs like Alpaca-13b and Vicuna-13b by a large margin across different stages, from task planning to response generation stages. These results indicate that our objective evaluations are aligned with human evaluation and further demonstrate the necessity of a powerful LLM as a controller in the framework of autonomous agents.

除了客观评估外,我们还邀请人类专家在实验中进行了主观评估。我们收集了130个多样化请求,用于评估HuggingGPT在各个阶段的表现,包括任务规划、模型选择和最终响应生成。我们设计了三个评估指标:通过率、合理性和成功率。各指标的定义详见附录A.1.6。结果如表8所示。从表8中我们可以观察到类似的结论:从任务规划到响应生成阶段,GPT-3.5在不同阶段均显著优于Alpaca-13b和Vicuna-13b等开源大语言模型。这些结果表明,我们的客观评估与人类评估结果一致,进一步证明了在自主智能体框架中使用强大语言模型作为控制器的必要性。

5 Limitations

5 局限性

HuggingGPT has presented a new paradigm for designing AI solutions, but we want to highlight that there still remain some limitations or improvement spaces: 1) Planning in HuggingGPT heavily relies on the capability of LLM. Consequently, we cannot ensure that the generated plan will always be feasible and optimal. Therefore, it is crucial to explore ways to optimize the LLM in order to enhance its planning abilities; 2) Efficiency poses a common challenge in our framework. To build such a collaborative system (i.e., HuggingGPT) with task automation, it heavily relies on a powerful controller (e.g., ChatGPT). However, HuggingGPT requires multiple interactions with LLMs throughout the whole workflow and thus brings increasing time costs for generating the response; 3) Token Lengths is another common problem when using LLM, since the maximum token length is always limited. Although some works have extended the maximum length to 32K, it is still insatiable for us if we want to connect numerous models. Therefore, how to briefly and effectively summarize model descriptions is also worthy of exploration; 4) Instability is mainly caused because LLMs are usually uncontrollable. Although LLM is skilled in generation, it still possibly fails to conform to instructions or give incorrect answers during the prediction, leading to exceptions in the program workflow. How to reduce these uncertainties during inference should be considered in designing systems.

HuggingGPT为设计AI解决方案提出了新范式,但我们仍需指出其存在的局限性及改进空间:1) 规划能力高度依赖大语言模型(LLM)的性能,因此无法保证生成的计划始终可行且最优,需探索优化LLM以增强其规划能力的方法;2) 效率是本框架面临的普遍挑战,构建此类任务自动化协作系统(如HuggingGPT)需依赖强大控制器(如ChatGPT),但全流程需多次与LLM交互,导致响应时间成本增加;3) Token长度限制是使用LLM的常见问题,尽管已有研究将最大长度扩展至32K,但连接海量模型时仍显不足,需探索如何简洁有效地总结模型描述;4) 不稳定性主要源于LLM的不可控性,虽然LLM擅长生成内容,但在预测时仍可能偏离指令或给出错误答案,导致程序工作流异常,系统设计时需考虑如何降低推理过程中的不确定性。

6 Conclusion

6 结论

In this paper, we propose a system named HuggingGPT to solve AI tasks, with language as the interface to connect LLMs with AI models. The principle of our system is that an LLM can be viewed as a controller to manage AI models, and can utilize models from ML communities like Hugging Face to automatically solve different requests of users. By exploiting the advantages of LLMs in understanding and reasoning, HuggingGPT can dissect the intent of users and decompose it into multiple sub-tasks. And then, based on expert model descriptions, HuggingGPT is able to assign the most suitable models for each task and integrate results from different models to generate the final response. By utilizing the ability of numerous AI models from machine learning communities, HuggingGPT demonstrates immense potential in solving challenging AI tasks, thereby paving a new pathway towards achieving artificial general intelligence.

本文提出了一种名为HuggingGPT的系统,旨在以语言为接口连接大语言模型(LLM)与各类AI模型来解决人工智能任务。我们的系统基于一个核心原则:将大语言模型视为管理AI模型的控制器,能够利用Hugging Face等机器学习社区提供的模型来自动解决用户的不同需求。通过发挥大语言模型在理解和推理方面的优势,HuggingGPT能够解析用户意图并将其分解为多个子任务。随后,基于专家模型描述,HuggingGPT可为每个任务分配合适的模型,并整合不同模型的结果生成最终响应。通过利用机器学习社区海量AI模型的能力,HuggingGPT在解决复杂AI任务方面展现出巨大潜力,为实现通用人工智能(AGI)开辟了新路径。

Acknowledgement

致谢

We appreciate the support of the Hugging Face team to help us in improving our GitHub project and web demo. Besides, we also appreciate the contributions of Bei Li, Kai Shen, Meiqi Chen, Qingyao Guo, Yichong Leng, Yuancheng Wang, Dingyao Yu for the data labeling and Wenqi Zhang, Wen Wang, Zeqi Tan for paper revision.

我们感谢Hugging Face团队在改进GitHub项目和网页演示方面给予的支持。此外,我们也感谢李贝、沈凯、陈美琪、郭清瑶、冷一冲、王远成、于丁尧在数据标注方面的贡献,以及张文琪、王文、谭泽奇在论文修订中的付出。

This work is partly supported by the Fundamental Research Funds for the Central Universities (No. 226-2023-00060), Key Research and Development Program of Zhejiang Province (No. 2023C01152), National Key Research and Development Project of China (No. 2018 AAA 0101900), and MOE Engineering Research Center of Digital Library.

本研究部分得到中央高校基本科研业务费专项资金(No. 226-2023-00060)、浙江省重点研发计划项目(No. 2023C01152)、国家重点研发计划项目(No. 2018AAA0101900)及教育部数字图书馆工程研究中心的资助。

References

参考文献

Pathak, Giannis Karam a no lak is, Haizhi Gary Lai, Ishan Vire ndra bhai Purohit, Ishani Mondal, Jacob William Anderson, Kirby C. Kuznia, Krima Doshi, Kuntal Kumar Pal, Maitreya Patel, Mehrad Moradshahi, Mihir Parmar, Mirali Purohit, Neeraj Varshney, Phani Rohitha Kaza, Pulkit Verma, Ravsehaj Singh Puri, rushang karia, Savan Doshi, Shailaja Keyur Sampat, Siddhartha Mishra, Sujan Reddy A, Sumanta Patro, Tanay Dixit, Xudong Shen, Chitta Baral, Yejin Choi, Noah A. Smith, Hannaneh Hajishirzi, and Daniel Khashabi. Super-Natural Instructions: Generalization via Declarative Instructions on $1600+$ NLP Tasks. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, 2022.

Pathak、Giannis Karamalakis、Haizhi Gary Lai、Ishan Virendrabhai Purohit、Ishani Mondal、Jacob William Anderson、Kirby C. Kuznia、Krima Doshi、Kuntal Kumar Pal、Maitreya Patel、Mehrad Moradshahi、Mihir Parmar、Mirali Purohit、Neeraj Varshney、Phani Rohitha Kaza、Pulkit Verma、Ravsehaj Singh Puri、Rushang Karia、Savan Doshi、Shailaja Keyur Sampat、Siddhartha Mishra、Sujan Reddy A、Sumanta Patro、Tanay Dixit、Xudong Shen、Chitta Baral、Yejin Choi、Noah A. Smith、Hannaneh Hajishirzi 和 Daniel Khashabi。《超级自然指令:通过1600+项NLP任务的声明式指令实现泛化》。收录于《2022年自然语言处理实证方法会议论文集》(EMNLP)。计算语言学协会,2022年。

A Appendix

A 附录

A.1 More details

A.1 更多细节

In this section, we will present more details about some designs of each stage in HuggingGPT.

在本节中,我们将详细介绍HuggingGPT各阶段的部分设计细节。

A.1.1 Template for Task Planning

A.1.1 任务规划模板

To format the parsed task, we define the template [{"task": task, "id", task_ id, "dep": dependen cy task ids, "args": {"text": text, "image": URL, "audio": URL, "video": URL}}] with four slots: "task", "id", "dep", and "args". Table 9 presents the definitions of each slot.

为了格式化解析后的任务,我们定义了模板 [{"task": task, "id", task_ id, "dep": dependency task ids, "args": {"text": text, "image": URL, "audio": URL, "video": URL}}],包含四个槽位:"task"、"id"、"dep"和"args"。表9展示了各槽位的定义。

| Name | Definitions |

| "task" | It represents the type of the parsed task. It covers different tasks in language, visual, video, audio,etc. The currently supported task list of HuggingGPT is shown in Table 13. |

| "id" | The unique identifier for task planning, which is used for references to dependent tasks and theirgeneratedresources. |

| "dep" | It defines the pre-requisite tasks required for execution.The taskwill belaunched only when all thepre-requisitedependenttasks arefinished. |

| "args" | It containsthelist ofrequired argumentsfortaskexecution.Itcontains threesubfieldspopulated with text, image, and audio resources according to the task type. They are resolved from either the user's request or the generated resources of the dependent tasks.The corresponding argumenttypesfordifferenttasktypesareshowninTable13. |

Table 9: Definitions for each slot for parsed tasks in the task planning.

| 名称 | 定义 |

|---|---|

| "task" | 表示解析任务类型,涵盖语言、视觉、视频、音频等不同任务。HuggingGPT当前支持的任务列表如 表 13 所示。 |

| "id" | 任务规划的唯一标识符,用于引用依赖任务及其生成资源。 |

| "dep" | 定义执行所需的前置任务。仅当所有前置依赖任务完成后才会启动该任务。 |

| "args" | 包含任务执行所需的参数列表,根据任务类型填充文本、图像和音频资源三个子字段。这些参数可从用户请求或依赖任务的生成资源中解析。不同任务类型对应的参数类型如 表 13 所示。 |

表 9: 任务规划中解析任务各字段的定义

A.1.2 Model Descriptions

A.1.2 模型描述

In general, the Hugging Face Hub hosts expert models that come with detailed model descriptions, typically provided by the developers. These descriptions encompass various aspects of the model, such as its function, architecture, supported languages and domains, licensing, and other relevant details. These comprehensive model descriptions play a crucial role in aiding the decision of HuggingGPT. By assessing the user’s requests and comparing them with the model descriptions, HuggingGPT can effectively determine the most suitable model for the given task.

通常,Hugging Face Hub托管着带有详细模型描述的专家模型,这些描述通常由开发者提供。这些描述涵盖了模型的多个方面,例如其功能、架构、支持的语言和领域、许可协议以及其他相关细节。这些全面的模型描述在帮助HuggingGPT做出决策方面起着关键作用。通过评估用户的请求并将其与模型描述进行比较,HuggingGPT能够有效地确定最适合给定任务的模型。

A.1.3 Hybrid Endpoint in System Deployment

A.1.3 系统部署中的混合端点

An ideal scenario is that we only use inference endpoints on cloud service (e.g., Hugging Face). However, in some cases, we have to deploy local inference endpoints, such as when inference endpoints for certain models do not exist, the inference is time-consuming, or network access is limited. To keep the stability and efficiency of the system, HuggingGPT allows us to pull and run some common or time-consuming models locally. The local inference endpoints are fast but cover fewer models, while the inference endpoints in the cloud service (e.g., Hugging Face) are the opposite. Therefore, local endpoints have higher priority than cloud inference endpoints. Only if the matched model is not deployed locally, HuggingGPT will run the model on the cloud endpoint like Hugging Face. Overall, we think that how to design and deploy systems with better stability for HuggingGPT or other autonomous agents will be very important in the future.

理想情况下,我们仅使用云服务(如Hugging Face)的推理端点。但在某些情况下,我们必须部署本地推理端点,例如当某些模型的推理端点不存在、推理耗时较长或网络访问受限时。为保持系统稳定性和效率,HuggingGPT允许我们在本地拉取并运行一些常见或耗时的模型。本地推理端点速度快但覆盖模型较少,而云服务(如Hugging Face)中的推理端点则相反。因此,本地端点优先级高于云端推理端点。仅当匹配模型未在本地部署时,HuggingGPT才会在Hugging Face等云端端点运行该模型。总体而言,我们认为如何为HuggingGPT或其他自主AI智能体设计和部署具有更好稳定性的系统将在未来变得非常重要。

A.1.4 Task List

A.1.4 任务列表

A.1.5 GPT-4 Score

A.1.5 GPT-4 得分

Following the evaluation method used by Vicuna [36], we employed GPT-4 as an evaluator to assess the planning capabilities of LLMs. In more detail, we include the user request and the task list planned by LLM in the prompt, and then let GPT-4 judge whether the list of tasks is accurate and also provide a rationale. To guide GPT-4 to make the correct judgments, we designed some task guidelines: 1) the tasks are in the supported task list (see Table 13); 2) the planned task list can reach the solution to the user request; 3) the logical relationship and order among the tasks are reasonable. In the prompt, we also supplement several positive and negative demonstrations of task planning to provide reference for GPT-4. The prompt for GPT-4 score is shown in Table 10. We further want to emphasize that GPT-4 score is not always correct although it has shown a high correlation. Therefore, we also expect to explore more confident metrics to evaluate the ability of LLMs in planning.

遵循Vicuna [36]采用的评估方法,我们使用GPT-4作为评估器来测试大语言模型的规划能力。具体而言,我们在提示词中包含了用户请求和大语言模型规划的任务列表,然后让GPT-4判断该任务列表是否准确,并提供判断依据。为了引导GPT-4做出正确判断,我们设计了一些任务指导原则:1) 任务属于支持的任务列表(见表13);2) 规划的任务列表能够达成用户请求的解决方案;3) 任务间的逻辑关系和顺序合理。在提示词中,我们还补充了若干任务规划的正例和反例,为GPT-4提供参考。GPT-4评分的提示词如表10所示。我们想进一步强调,尽管GPT-4评分显示出较高相关性,但其判断并非总是正确。因此,我们也希望探索更具可信度的指标来评估大语言模型的规划能力。

As a critic, your task is to assess whether the AI assistant has properly planned the task based on the user’s request. To do so, carefully examine both the user’s request and the assistant’s output, and then provide a decision using either "Yes" or "No" ("Yes" indicates accurate planning and $"\mathrm{{No}"}$ indicates inaccurate planning). Additionally, provide a rationale for your choice using the following structure: {"choice": "yes"/"no", "reason": "Your reason for your choice"}. Please adhere to the following guidelines: 1. The task must be selected from the following options: ${{A\nu a i l a b l e T a s k L i s t}}$ . 2. Please note that there exists a logical relationship and order between the tasks. 3. Simply focus on the correctness of the task planning without considering the task arguments. Positive examples: ${{P o s i t i\nu e D e m o s}}$ Negative examples: {{Negative Demos}} Current user request: ${{I n p u t}}$ AI assistant’s output: ${{O u t p u t}}$ Your judgement:

作为评审员,您的任务是评估AI助手是否根据用户请求正确规划了任务。为此,请仔细检查用户请求和助手输出,然后使用"Yes"或"No"做出判断("Yes"表示准确规划,$\mathrm{{No}"}$表示不准确规划)。同时按照以下结构提供选择理由:{"choice": "yes"/"no", "reason": "您的选择理由"}。请遵循以下准则:1. 任务必须从以下选项中选择:${{A\nu a i l a b l e T a s k L i s t}}$。2. 请注意任务之间存在逻辑关系和顺序。3. 只需关注任务规划的正确性,无需考虑任务参数。正面示例:${{P o s i t i\nu e D e m o s}}$ 反面示例:{{Negative Demos}} 当前用户请求:${{I n p u t}}$ AI助手输出:${{O u t p u t}}$ 您的判断:

A.1.6 Human Evaluation

A.1.6 人工评估

To better align human preferences, we invited three human experts to evaluate the different stages of HuggingGPT. First, we selected 3-5 tasks from the task list of Hugging Face and then manually created user requests based on the selected tasks. We will discard samples that cannot generate new requests from the selected tasks. Totally, we conduct random sampling by using different seeds, resulting in a collection of 130 diverse user requests. Based on the produced samples, we evaluate the performance of LLMs at different stages (e.g., task planning, model selection, and response generation). Here, we designed three evaluation metrics:

为了更好地对齐人类偏好,我们邀请了三位人类专家评估HuggingGPT的不同阶段。首先,我们从Hugging Face的任务列表中选取3-5个任务,然后基于所选任务手动创建用户请求。对于无法从选定任务生成新请求的样本,我们将予以剔除。通过使用不同种子进行随机采样,最终收集了130个多样化的用户请求。基于生成的样本,我们评估了大语言模型在不同阶段(如任务规划、模型选择和响应生成)的表现。为此,我们设计了三个评估指标:

Three human experts were asked to annotate the provided data according to our well-designed metrics and then calculated the average values to obtain the final scores.

三位人类专家被要求根据我们精心设计的指标对提供的数据进行标注,然后计算平均值以获得最终分数。

A.2 Datasets for Task Planning Evaluation

A.2 任务规划评估数据集

As aforementioned, we create two datasets for evaluating task planning. Here we provide more details about these datasets. In total, we gathered a diverse set of 3,497 user requests. Since labeling this dataset to obtain the task planning for each request is heavy, we employed the capabilities of GPT-4 to annotate them. Finally, these auto-labeled requests can be categorized into three types: single task (1,450 requests), sequence task (1,917 requests), and graph task (130 requests). For a more reliable evaluation, we also construct a human-annotated dataset. We invite some expert annotators to label some complex requests, which include 46 examples. Currently, the human-annotated dataset includes 24 sequential tasks and 22 graph tasks. Detailed statistics about the GPT-4-annotated and human-annotated datasets are shown in Table 11.

如前所述,我们创建了两个用于评估任务规划的数据集。此处提供这些数据集的更多细节。我们总共收集了3,497个多样化的用户请求。由于标注该数据集以获取每个请求的任务规划工作量较大,我们利用GPT-4的能力进行自动标注。最终,这些自动标注的请求可分为三类:单任务(1,450个请求)、序列任务(1,917个请求)和图任务(130个请求)。为进行更可靠的评估,我们还构建了一个人工标注数据集。邀请专家标注员对一些复杂请求进行标注,共包含46个示例。目前,人工标注数据集包含24个序列任务和22个图任务。关于GPT-4标注和人工标注数据集的详细统计信息如表11所示。

A.3 Case Study

A.3 案例研究

A.3.1 Case Study on Various Tasks

A.3.1 多任务案例研究

Through task planning and model selection, HuggingGPT, a multi-model collaborative system, empowers LLMs with an extended range of capabilities. Here, we extensively evaluate HuggingGPT across diverse multimodal tasks, and some selected cases are shown in Figures 4 and 5. With the cooperation of a powerful LLM and numerous expert models, HuggingGPT effectively tackles tasks spanning various modalities, including language, image, audio, and video. Its proficiency encompasses diverse task forms, such as detection, generation, classification, and question answering.

通过任务规划和模型选择,多模型协作系统HuggingGPT为大语言模型扩展了能力范围。我们针对HuggingGPT在多种多模态任务上进行了广泛评估,部分精选案例如图4和图5所示。借助强大LLM与众多专家模型的协作,HuggingGPT能有效处理跨越语言、图像、音频和视频等多种模态的任务,其能力涵盖检测、生成、分类和问答等多样化任务形式。

| Datasets | Number of Requests by Type | Request Length | Number of Tasks | ||||

| Single | Sequential | Graph | Max | Average | Max | Average | |

| GPT-4-annotated | 1,450 | 1,917 | 130 | 52 | 13.26 | 13 | 1.82 |

| Human-annotated | - | 24 | 22 | 95 | 10.20 | 12 | 2.00 |

Table 11: Statistics on datasets for task planning evaluation.

| 数据集 | 按类型划分的请求数量 | 请求长度 | 任务数量 | ||||

|---|---|---|---|---|---|---|---|

| 单次 (Single) | 连续 (Sequential) | 图状 (Graph) | 最大 (Max) | 平均 (Average) | 最大 (Max) | 平均 (Average) | |

| GPT-4标注 | 1,450 | 1,917 | 130 | 52 | 13.26 | 13 | 1.82 |

| 人工标注 | - | 24 | 22 | 95 | 10.20 | 12 | 2.00 |

表 11: 任务规划评估数据集统计。

A.3.2 Case Study on Complex Tasks

A.3.2 复杂任务案例分析

Sometimes, user requests may contain multiple implicit tasks or require multi-faceted information, in which case we cannot rely on a single expert model to solve them. To overcome this challenge, HuggingGPT organizes the collaboration of multiple models through task planning. As shown in Figures 6, 7 and 8, we conducted experiments to evaluate the effectiveness of HuggingGPT in the case of complex tasks:

有时,用户请求可能包含多个隐含任务或需要多方面的信息,这种情况下我们无法依赖单个专家模型来解决。为了克服这一挑战,HuggingGPT通过任务规划组织多个模型的协作。如图6、图7和图8所示,我们进行了实验来评估HuggingGPT在复杂任务情况下的有效性:

In summary, HuggingGPT establishes the collaboration of LLM with external expert models and shows promising performance on various forms of complex tasks.

总之,HuggingGPT实现了大语言模型与外部专家模型的协作,并在各类复杂任务上展现出优异性能。

A.3.3 Case Study on More Scenarios

A.3.3 更多场景的案例研究

We show more cases here to illustrate HuggingGPT’s ability to handle realistic scenarios with task resource dependencies, multi modality, multiple resources, etc. To make clear the workflow of HuggingGPT, we also provide the results of the task planning and task execution stages.

我们在此展示更多案例来说明HuggingGPT处理具有任务资源依赖、多模态、多资源等现实场景的能力。为清晰呈现HuggingGPT的工作流程,我们还提供了任务规划和任务执行阶段的结果。

• Figure 11 shows HuggingGPT integrating multiple user-input resources to perform simple reasoning. We can find that HuggingGPT can break up the main task into multiple basic tasks even with multiple resources, and finally integrate the results of multiple inferences from multiple models to get the correct answer.

• 图11展示了HuggingGPT整合多个用户输入资源进行简单推理的过程。可以看出,即使面对多重资源输入,HuggingGPT仍能将主任务拆解为多个基础任务,并最终整合多个模型的推理结果得出正确答案。

B More Discussion about Related Works

B 关于相关工作的更多讨论

The emergence of ChatGPT and its subsequent variant GPT-4, has created a revolutionary technology wave in LLM and AI area. Especially in the past several weeks, we also have witnessed some experimental but also very interesting LLM applications, such as AutoGPT 4, AgentGPT 5, BabyAGI 6, and etc. Therefore, we also give some discussions about these works and provide some comparisons from multiple dimensions, including scenarios, planning, tools, as shown in Table 12.

ChatGPT及其后续变体GPT-4的出现,在大语言模型(LLM)和人工智能(AI)领域掀起了一场革命性技术浪潮。特别是在过去几周,我们还见证了一些实验性但非常有趣的大语言模型应用,例如AutoGPT [4]、AgentGPT [5]、BabyAGI [6]等。因此,我们也对这些工作进行了讨论,并从场景、规划、工具等多个维度提供了比较分析,如表12所示。

Scenarios Currently, these experimental agents (e.g., AutoGPT, AgentGPT and BabyAGI) are mainly used to solve daily requests. While for HuggingGPT, it focuses on solving tasks in the AI area (e.g., vision, language, speech, etc), by utilizing the powers of Hugging Face. Therefore, HuggingGPT can be considered as a more professional agent. Generally speaking, users can choose the most suitable agent based on their requirements (e.g., daily requests or professional areas) or customize their own agent by defining knowledge, planning strategy and toolkits.

目前,这些实验性AI智能体(如AutoGPT、AgentGPT和BabyAGI)主要用于解决日常需求。而HuggingGPT则专注于通过调用Hugging Face平台能力来解决AI领域任务(如视觉、语言、语音等),因此可视为更专业的智能体。通常用户可根据需求(日常事务或专业领域)选择最适合的智能体,或通过定义知识库、规划策略和工具集来自定义专属智能体。

| Name | Scenarios | Planning | Tools |

| BabyAGI AgentGPT AutoGPT | Daily | Iterative Planning | |

| HuggingGPT | AI area | GlobalPlanning | Web Search, Code Executor, .. Models in Hugging Face |

Table 12: Com parisi on between HuggingGPT and other autonomous agents.

| 名称 | 场景 | 规划 | 工具 |

|---|---|---|---|

| BabyAGI AgentGPT AutoGPT | 日常 | 迭代规划 | |

| HuggingGPT | AI领域 | 全局规划 | 网络搜索、代码执行器、Hugging Face中的模型等 |

表 12: HuggingGPT与其他自主智能体的对比。

Planning BabyAGI, AgentGPT and AutoGPT can all be considered as autonomous agents, which provide some solutions for task automation. For these agents, all of them adopt step-by-step thinking, which iterative ly generates the next task by using LLMs. Besides, AutoGPT employs an addition reflexion module for each task generation, which is used to check whether the current predicted task is appropriate or not. Compared with these applications, HuggingGPT adopts a global planning strategy to obtain the entire task queue within one query. It is difficult to judge which one is better, since each one has its deficiencies and both of them heavily rely on the ability of LLMs, even though existing LLMs are not specifically designed for task planning. For example, iterative