Large AI Models in Health Informatics: Applications, Challenges, and the Future

大语言模型在健康信息学中的应用、挑战与未来

Jianing Qiu, Lin Li, Jiankai Sun, Jiachuan Peng, Peilun Shi, Ruiyang Zhang, Yinzhao Dong, Kyle Lam, Frank P.-W. Lo, Bo Xiao, Wu Yuan, Senior Member, IEEE, Ningli Wang, Dong Xu, and Benny Lo, Senior Member, IEEE

Jianing Qiu, Lin Li, Jiankai Sun, Jiachuan Peng, Peilun Shi, Ruiyang Zhang, Yinzhao Dong, Kyle Lam, Frank P.-W. Lo, Bo Xiao, Wu Yuan (IEEE高级会员), Ningli Wang, Dong Xu, Benny Lo (IEEE高级会员)

Abstract— Large AI models, or foundation models, are models recently emerging with massive scales both parameter-wise and data-wise, the magnitudes of which can reach beyond billions. Once pretrained, large AI models demonstrate impressive performance in various down- stream tasks. A prime example is ChatGPT, whose capability has compelled people’s imagination about the farreaching influence that large AI models can have and their potential to transform different domains of our lives. In health informatics, the advent of large AI models has brought new paradigms for the design of methodologies. The scale of multi-modal data in the biomedical and health domain has been ever-expanding especially since the community embraced the era of deep learning, which provides the ground to develop, validate, and advance large AI models for breakthroughs in health-related areas. This article presents a comprehensive review of large AI models, from background to their applications. We identify seven key sectors in which large AI models are applicable and might have substantial influence, including 1) bio in formatics; 2) medical diagnosis; 3) medical imaging; 4) medical

摘要—大 AI模型(或称基础模型)是近年来涌现的参数量和数据量均达到数十亿甚至更大规模的模型。这类模型经过预训练后,在各种下游任务中展现出惊人性能,ChatGPT的卓越能力更让人们充分认识到大AI模型可能产生的深远影响及其改变生活各领域的潜力。在健康信息学领域,大AI模型为方法论设计带来了新范式。随着深度学习时代的到来,生物医学与健康领域的多模态数据规模持续扩张,这为开发、验证和推进大AI模型以实现健康领域突破提供了基础。本文系统梳理了大AI模型从背景到应用的完整脉络,重点阐述了其可能产生重大影响的七大应用领域:1) 生物信息学;2) 医学诊断;3) 医学影像;4) 医学...

Jianing Qiu is with the Department of Computing, Imperial College London, U.K., and he is also with the Department of Biomedical Engineering, The Chinese University of Hong Kong, Hong Kong SAR. He was with Precision Robotics (Hong Kong) Ltd., Hong Kong SAR (e-mail: jianing.qiu17@imperial.ac.uk).

Jianing Qiu 就职于英国伦敦帝国理工学院计算系,同时兼任中国香港特别行政区香港中文大学生物医学工程系。他曾任职于香港特别行政区精准机器人(香港)有限公司 (邮箱: jianing.qiu17@imperial.ac.uk)。

Lin Li is with the Department of Informatics, King’s College London, U.K. (e-mail: lin.3.li@kcl.ac.uk).

Lin Li 隶属于英国伦敦国王学院信息学系 (e-mail: lin.3.li@kcl.ac.uk)。

Jiankai Sun is with the School of Engineering, Stanford University, USA (e-mail: jksun@stanford.edu).

Jiankai Sun 就职于美国斯坦福大学工程学院 (e-mail: jksun@stanford.edu)。

Jiachuan Peng is with the Department of Engineering Science, University of Oxford, U.K. (e-mail: jiachuan.peng@seh.ox.ac.uk).

Jiachuan Peng 就职于英国牛津大学工程科学系 (e-mail: jiachuan.peng@seh.ox.ac.uk)。

Peilun Shi and Wu Yuan are with the Department of Biomedical Engineering, The Chinese University of Hong Kong, Hong Kong SAR (e-mails: peilunshi@cuhk.edu.hk and wyuan@cuhk.edu.hk).

Peilun Shi和Wu Yuan就职于香港中文大学生物医学工程系(电子邮件:peilunshi@cuhk.edu.hk及wyuan@cuhk.edu.hk)。

Ruiyang Zhang is with Precision Robotics (Hong Kong) Ltd., Hong Kong SAR (e-mail: r.zhang@prhk.ltd).

张瑞阳就职于精密机器人(香港)有限公司(香港特别行政区)(邮箱: r.zhang@prhk.ltd)。

Yinzhao Dong is with the Faculty of Engineering, The University of Hong Kong, Hong Kong SAR (e-mail: dongyz@connect.hku.hk).

董寅钊就职于香港特别行政区香港大学工程学院(电子邮箱:dongyz@connect.hku.hk)。

Kyle Lam is with the Department of Surgery and Cancer, Imperial College London, U.K. (e-mail: k.lam@imperial.ac.uk).

Kyle Lam 隶属于英国伦敦帝国理工学院外科与癌症系 (email: k.lam@imperial.ac.uk)。

Frank P.-W. Lo and Bo Xiao are with the Hamlyn Centre for Robotic Surgery, Imperial College London, U.K. (e-mails: po.lo15@imperial.ac.uk and b.xiao@imperial.ac.uk).

Frank P.-W. Lo和Bo Xiao就职于英国帝国理工学院哈姆林机器人手术中心 (邮箱: po.lo15@imperial.ac.uk 和 b.xiao@imperial.ac.uk)。

Ningli Wang is with Beijing Tongren Eye Center, Beijing Tongren Hospital, Capital Medical University, and also with Beijing Ophthalmology & Visual Sciences Key Laboratory, Beijing, China (e-mail: wningli@vip.163.com)

王宁利就职于首都医科大学附属北京同仁医院北京同仁眼科中心,同时任职于北京市眼科与视觉科学重点实验室(电子邮箱:wningli@vip.163.com)

Dong Xu is with the Department of Electrical Engineering and Computer Science, and the Christopher S. Bond Life Sciences Center, University of Missouri, USA (e-mail: xudong@missouri.edu).

董旭就职于美国密苏里大学电气工程与计算机科学系及Christopher S. Bond生命科学中心 (邮箱: xudong@missouri.edu)。

Benny Lo is with the Facualty of Medicine, Imperial College London, U.K., and he is also with Precision Robotics (Hong Kong) Ltd., Hong Kong SAR (e-mail: benny.lo@imperial.ac.uk).

Benny Lo 就职于英国伦敦帝国理工学院医学院,同时兼任香港特别行政区精准机器人有限公司(Precision Robotics (Hong Kong) Ltd.)(电子邮箱: benny.lo@imperial.ac.uk)。

Corresponding authors: Wu Yuan and Benny Lo informatics; 5) medical education; 6) public health; and 7) medical robotics. We examine their challenges, followed by a critical discussion about potential future directions and pitfalls of large AI models in transforming the field of health informatics.

通讯作者:Wu Yuan 和 Benny Lo 信息学;5) 医学教育;6) 公共卫生;7) 医疗机器人。我们探讨了这些领域的挑战,随后对大型 AI 模型在变革健康信息学领域的潜在未来方向和陷阱进行了批判性讨论。

Fig. 1: Number of publications related to ChatGPT and SAM in medical and health areas. Statistics were queried from Google Scholar with the keywords “Medical ChatGPT” or “Medical Segment Anything”, and the last entry was 31-th Aug. 2023. From April to August, each month, there were over 200 publications about ChatGPT in medicine and healthcare.

图 1: 医疗健康领域ChatGPT与SAM相关出版物数量统计。数据通过Google Scholar以"Medical ChatGPT"或"Medical Segment Anything"为关键词检索获得,最后更新日期为2023年8月31日。从4月到8月,每月关于ChatGPT在医学和医疗保健领域的出版物均超过200篇。

Index Terms— artificial intelligence; bioinformatics; bio medicine; deep learning; foundation model; health informatics; healthcare; medical imaging

索引术语— 人工智能;生物信息学;生物医学;深度学习;基础模型;健康信息学;医疗保健;医学影像

I. INTRODUCTION

I. 引言

HE introduction of ChatGPT [1] has triggered a new T wave of development and deployment of Large AI Models (LAMs) recently. As shown in Fig. 1, ChatGPT and the phenomenal Segment Anything Model (SAM) [2] have sparked active research in medical and health sectors since their initial launch. Although groundbreaking, the AI community has in fact started creating LAMs much earlier, and it was the seminal work introducing the Transformer model [3] back in 2017 that accelerated the creation of LAMs.

ChatGPT [1] 的推出近期引发了新一轮大语言模型 (Large AI Models, LAMs) 开发和部署的热潮。如图 1 所示,ChatGPT 和现象级模型 Segment Anything Model (SAM) [2] 自发布之初就激发了医疗健康领域的活跃研究。尽管具有突破性意义,但事实上 AI 社区更早就开始构建大语言模型,而 2017 年 Transformer 模型 [3] 这一开创性工作的提出加速了大语言模型的创建进程。

Large Al Models: Key Features and New Paradigm Shift

大语言模型 (Large Language Model):关键特性与新范式转变

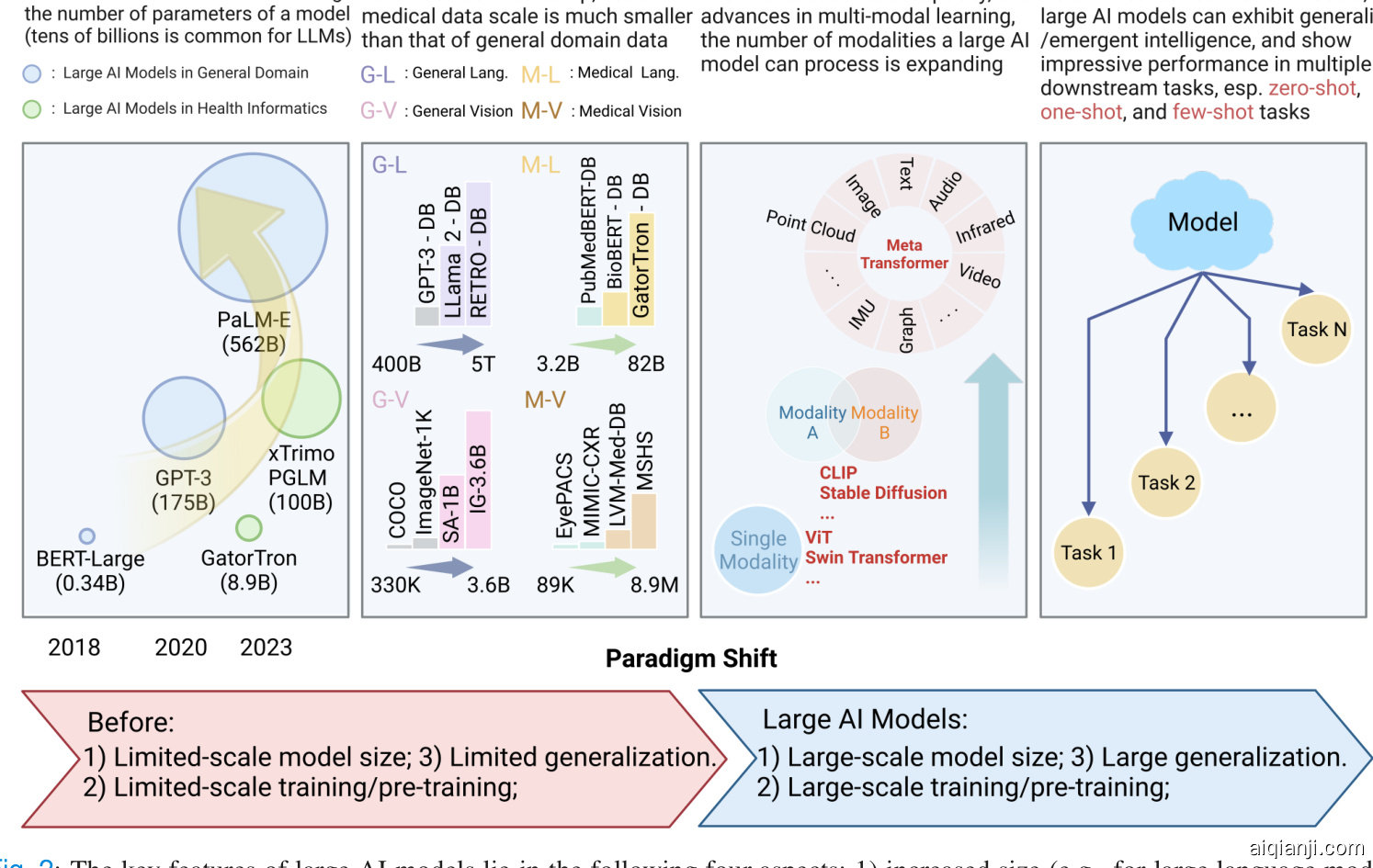

Fig. 2: The key features of large AI models lie in the following four aspects: 1) increased size (e.g., for large language models (LLMs), the number of parameters is often billions); 2) trained with large-scale data (e.g., for LLMs, the data can contain trillions of tokens; and for large vision models (LVMs), the data can contain billions of images); 3) able to process data of multiple modalities; and 4) can perform well across multiple downstream tasks, especially on zero-, one-, and few-shot tasks.

图 2: 大型AI模型的关键特征体现在以下四个方面: 1) 规模增大 (例如大语言模型的参数量通常达到数十亿级别); 2) 使用大规模数据进行训练 (例如大语言模型的训练数据可包含数万亿token, 大型视觉模型的训练数据可包含数十亿张图像); 3) 能够处理多模态数据; 4) 在多种下游任务中表现优异, 尤其在零样本、单样本和少样本任务上。

The recent advances in data science and AI algorithms have endowed LAMs with strengthened generative and reasoning capabilities, as well as generalist intelligence across multiple tasks with impressive zero- and few-shot performance, significantly distinguishing them from early deep models. For example, when asked for medical advice, ChatGPT, based on GPT-4 [4], demonstrates the capability of recalling prior conversation and being able to contextual ize the user’s past medical history before answering, showing a new level of intelligence way beyond that of a simple symptom checker [5].

数据科学与AI算法的最新进展,赋予了大语言模型 (LLM) 更强大的生成与推理能力,以及跨任务的通用智能表现,其零样本 (Zero-shot) 和少样本 (Few-shot) 性能显著区别于早期深度学习模型。例如基于GPT-4 [4] 的ChatGPT在医疗咨询场景中,能够回忆先前的对话内容,并在回答前结合用户既往病史进行情境化分析 [5],展现出远超简单症状检查工具的新型智能水平。

One notable bottleneck of developing supervised medical and clinical AI models is that they require annotated data at scale for training a well-functioning model. However, such annotations have to be conducted by domain experts, which is often expensive and time-consuming. This causes the curation of large-scale medical and clinical data with highquality annotations to be challenging. However, this may no longer be a bottleneck for LAMs, as they can leverage selfsupervision and reinforcement learning in training, relieving the annotation burden and workload of curating large-scale annotated datasets [6]. With the ever-increasing proliferation of medical Internet of things such as pervasive wearable sensors, medical and clinical history such as electronic health records (EHRs), prevalent medical imaging for diagnosis such as computed-tomography (CT) scans, the growing genomic sequence discovery, and more, the abundance of biomedical, clinical, and health data fosters the development of the next generation of AI models in the field, which are expected to have a large capacity for modeling the complexity and magnitude of health-related data, and generalize to multiple unseen scenarios to actively assist and engage in clinical and medical decision-making.

开发监督式医疗和临床AI模型的一个显著瓶颈在于,它们需要大规模标注数据来训练性能良好的模型。然而,这类标注必须由领域专家完成,通常成本高昂且耗时。这使得获取具有高质量标注的大规模医疗临床数据极具挑战性。但对于大语言模型而言,这一瓶颈可能不复存在,因为它们能利用自监督学习和强化学习进行训练,从而减轻标注负担及构建大规模标注数据集的工作量 [6]。随着可穿戴传感器等医疗物联网设备的普及、电子健康档案(EHR)等临床病史记录的积累、计算机断层扫描(CT)等诊断影像的广泛应用,以及基因组序列发现的持续增长,海量生物医学与健康数据正推动该领域新一代AI模型的发展。这些模型需具备强大能力以建模健康数据的复杂性与规模,并能泛化至多种未见场景,从而主动参与临床决策支持。

Despite the homogeneity of the model architecture (current LAMs are primarily based on Transformer [3]), LAMs inherently are strong learners of heterogeneous data due to their large capacity, unified input modeling of different modalities, and improved multi-modal learning techniques. Multimodality is common in biomedical and health settings, and the multi-modal nature of health data provides the natural and promising ground for developing and evaluating LAMs.

尽管模型架构具有同质性(当前的 LAM 主要基于 Transformer [3]),但由于其大容量、对不同模态的统一输入建模以及改进的多模态学习技术,LAM 天生就是异构数据的强大学习者。多模态在生物医学和健康领域很常见,健康数据的多模态特性为开发和评估 LAM 提供了自然而充满前景的基础。

The LAMs that this article discusses are mainly foundation models [7]. However, this article also provides a retrospective of the recent LAMs that are not necessarily considered foundational at their current stage, but are seminal in advancing the future development of LAMs in the fields of bio medicine and health informatics. Fig. 2 summarizes the key features of LAMs, and highlights the paradigm shift it is introducing, i.e., 1) large-scale model size; 2) large-scale training/pre-training; and 3) large generalization.

本文讨论的 LAMs 主要是基础模型 [7]。不过,本文也回顾了近期一些在当前阶段未必被视为基础、但对推动 LAMs 在生物医学和健康信息学领域未来发展具有开创性意义的 LAMs。图 2 总结了 LAMs 的关键特征,并强调了它带来的范式转变,即:1) 大规模模型尺寸;2) 大规模训练/预训练;3) 强泛化能力。

Albeit inspirational, LAMs still face challenges and limitations, and the rapid rise of LAMs brings new opportunities as well as potential pitfalls. This article aims to provide a comprehensive review of the recent developments of LAMs, with a particular focus on their impacts on the biomedical and health informatics communities. The remainder of this article is organized as follows: Section II describes the background of LAMs in general domains, such as natural language processing (NLP) and computer vision (CV); Section III discusses current progress and possible applications of LAMs in key sectors of health informatics; Section IV discusses challenges, limitations and risks of LAMs; Section V points out some potential future directions of advancing LAMs in health informatics, and Section VI concludes.

尽管鼓舞人心,LAMs仍面临挑战与局限,其快速发展既带来新机遇也暗藏潜在风险。本文旨在全面综述LAMs的最新进展,特别关注其对生物医学和健康信息学领域的影响。文章结构如下:第二节概述LAMs在自然语言处理(NLP)和计算机视觉(CV)等通用领域的研究背景;第三节探讨LAMs在健康信息学关键领域的当前进展与应用潜力;第四节分析LAMs面临的挑战、局限及风险;第五节指出健康信息学中推进LAMs研究的潜在发展方向;第六节总结全文。

As this field progresses very rapidly, and also due to the page limit, there are a lot of works that this paper cannot cover. It is our hope that the community can be updated with the latest advances, so we refer readers to our website 1 for the latest progress about LAMs.

由于该领域发展非常迅速,加之篇幅限制,本文无法涵盖大量相关工作。我们希望学界能持续关注最新进展,因此建议读者访问我们的网站1获取关于LAMs (Large Action Models) 的最新动态。

II. BACKGROUND OF LARGE AI MODELS

II. 大模型 (Large AI Models) 背景

The burgeoning AI community has devoted much effort to developing large AI models (LAMs) in recent years by leveraging the massive influx of data and computational resources. Based on the pre-training data modality, this article categorizes the current LAMs into three types and defines them as follows:

近年来,蓬勃发展的AI社区利用海量数据和计算资源,投入大量精力开发大AI模型(LAMs)。根据预训练数据模态,本文将现有LAMs分为三类并定义如下:

scaled up to billions; 2) data-wise, a large volume of unlabelled data are used to pre-train an LLM, and the amount can often reach millions or billions if not more; 3) paradigm-wise, LLMs are first pre-trained often with weakly- or self-supervised learning (e.g., masked language modeling [9] and next token prediction [4]), and then fine-tuned or adapted to various downstream tasks such as question answering and dialogue in which they are able to demonstrate impressive performance.

规模扩大到数十亿;2) 数据方面,大量未标注数据用于预训练大语言模型 (LLM),其数量通常可达数百万甚至数十亿;3) 范式方面,大语言模型通常首先通过弱监督或自监督学习(如掩码语言建模 [9] 和下一token预测 [4])进行预训练,然后针对问答、对话等各种下游任务进行微调或适配,在这些任务中它们能够展现出令人印象深刻的性能。

Recent advances reveal that LLMs are impressive zeroshot, one-shot, and few-shot learners. They are able to extract, summarize, translate, and generate textual information with only a few or even no prompt/fine-tuning samples [4]. Furthermore, LLMs manifest impressive reasoning capability, and this capability can be further strengthened with prompt engineering techniques such as Chain-of-Thought prompting [22].

近期研究表明,大语言模型 (LLM) 在零样本、单样本和少样本学习场景中表现卓越。仅需少量甚至无需提示/微调样本,它们就能完成文本信息的提取、摘要、翻译和生成 [4]。此外,大语言模型展现出惊人的推理能力,通过思维链提示 (Chain-of-Thought prompting) 等提示工程技术,这种能力还能进一步增强 [22]。

There was an upsurge in the number of new LLMs from 2022 onwards. Despite the general consensus that scaling up the number of parameters and the amount of data will lead to improved performance, which leads to a dominant trend of developing LLMs often with billions of parameters (e.g., LLMs such as PaLM [13] have already contained 540 billion parameters) and even trillions of data tokens (e.g., LLaMa 2 was pre-trained with 2 trillion tokens [11], and the training data of RETRO [23] had over 5 trillion tokens), there is currently no concerted agreement within the community that if this continuous growth of model and data size is optimal [10], [14], and there is also lacking a verified universal scaling law. To balance the data annotation cost and efficacy, as well as to train an LLM that can better align with human intent, researchers have commonly used reinforcement learning from human feedback (RLHF) [24] to develop LLMs that can exhibit desired behaviors. The core idea of RLHF is to use human preference datasets to train a Reward Model (RM), which can predict the reward function and be optimized by RL algorithms (e.g., Proximal Policy Optimization (PPO) [25]). The framework of RLHF has attracted much attention and become a key component of many LLMs, such as InstructGPT [19], Sparrow [26], and ChatGPT [1]. Recently, Susano Pinto et al. [27] have also investigated this reward optimization in vision tasks, which can possibly advance the development of future LVMs using RLHF.

自2022年起,新增大语言模型数量激增。尽管普遍认为扩大参数规模和数据量能提升性能(例如PaLM [13]等模型已包含5400亿参数),甚至使用数万亿数据token进行预训练(如LLaMa 2采用2万亿token [11],RETRO [23]训练数据超过5万亿token),但学界对持续增大模型和数据规模是否最优仍无共识[10][14],也缺乏经过验证的通用扩展定律。为平衡数据标注成本与效能,并训练出更符合人类意图的大语言模型,研究者普遍采用基于人类反馈的强化学习(RLHF) [24]来开发具备预期行为的模型。RLHF的核心思想是利用人类偏好数据集训练奖励模型(RM),通过强化学习算法(如近端策略优化(PPO) [25])优化奖励函数。该框架已成为InstructGPT [19]、Sparrow [26]和ChatGPT [1]等模型的关键组件。近期Susano Pinto等人[27]在视觉任务中探索了这种奖励优化机制,有望推动未来基于RLHF的视觉大模型发展。

This section provides an overview of the background of these three types of LAMs in general domains.

本节概述了这三类LAM在通用领域的背景。

A. Large Language Models

A. 大语言模型

The proposal of the Transformer architecture [3] heralds the start of developing large language models (LLMs) in the field of NLP. Since 2018, following the birth of GPT (Generative Pre-trained Transformer) [8] and BERT (Bidirectional Encoder Representations from Transformers) [9], the development of LLMs has progressed rapidly.

Transformer架构的提出[3]标志着NLP领域大语言模型(LLM)研发的开端。自2018年GPT(Generative Pre-trained Transformer)[8]和BERT(Bidirectional Encoder Representations from Transformers)[9]诞生以来,大语言模型的发展突飞猛进。

Broadly speaking, the recent LLMs [10]–[21], have the following three distinct characteristics: 1) parameter-wise, the number of learnable parameters of an LLM can be easily

广义而言,近期的大语言模型 [10]–[21] 具有以下三个显著特征:1) 参数量方面,大语言模型的可学习参数数量可轻松

B. Large Vision Models

B. 大视觉模型

In computer vision, it has been a common practice for years to first pre-train a model on a large-scale dataset and then fine-tune it on the dataset of interest (usually smaller than the one for pre-training) for improved generalization [28]. The fundamental changes driving this evolution of large models lie in the scale of pre-training datasets and models, and the pre-training methods. ImageNet-1K (1.28M images) [29] and -21K (14M images) [30] used to be canonical datasets for visual pre-training. ImageNet is manually curated for highquality labeling, but the prohibitive cost of curation severely hindered further scaling. To push the scale beyond ImageNet’s, datasets like JFT (300M [31] and 3B [32] images) and IG (3.5B [33] and 3.6B [34] images) were collected from the web with less or no curation. The quality of annotation is therefore compromised, and the accessibility of datasets become limited because of copyright issues.

在计算机视觉领域,多年来普遍采用的做法是先在大型数据集上进行模型预训练,然后在目标数据集(通常比预训练数据集小)上进行微调以提高泛化能力 [28]。推动大模型演进的根本变化在于预训练数据集和模型的规模,以及预训练方法。ImageNet-1K(128万张图像)[29] 和 -21K(1400万张图像)[30] 曾是视觉预训练的经典数据集。ImageNet 经过人工精心标注以保证高质量标签,但高昂的标注成本严重阻碍了进一步扩展。为了突破 ImageNet 的规模限制,JFT(3亿 [31] 和 30亿 [32] 张图像)和 IG(35亿 [33] 和 36亿 [34] 张图像)等数据集从网络收集而来,标注程度较低甚至未经标注。因此这些数据集标注质量有所下降,且由于版权问题导致可访问性受限。

The compromised annotation requires the pre-training paradigm to shift from supervised learning to weakly-/selfsupervised learning or unsupervised learning. The latter methods include auto regressive modeling, generative modeling and contrastive learning. Auto regressive modeling trains the model to auto regressive ly predict the next pixel conditioned on the preceding pixels [35]. Generative modeling trains the model to reconstruct the entire original image, or some target regions within it [36], from its corrupted [37] or masked [38] variants. Contrastive learning trains the model to discriminate similar and/or dissimilar data instances [39].

受损的标注要求预训练范式从监督学习转向弱监督/自监督学习或无监督学习。后者的方法包括自回归建模、生成式建模和对比学习。自回归建模训练模型基于前面的像素自回归地预测下一个像素[35]。生成式建模训练模型从其损坏的[37]或掩码的[38]变体中重建整个原始图像或其中的某些目标区域[36]。对比学习训练模型区分相似和/或不相似的数据实例[39]。

Vision Transformers (ViTs) and Convolutional Neural Networks (CNNs) are two major architectural families of LVMs. For vision transformers, pioneering works ViT [40] and iGPT [35] transferred the transformer architectures from NLP to CV with minimal modification, but the resulting architectures incur high computational complexity, which is quadratic to the image size. Later, works like TNT [41] and Swin Transformer [42] were proposed to better adapt transformers to visual data. Recently, ViT-G/14 [32], SwinV2-G [43] and ViT-22B [44] substantially scaled the vision transformers up using a bag of training tricks to achieve state-of-the-art (SOTA) accuracy on various benchmarks. While ViTs may seem to gain more momentum than CNNs in developing LVMs, to improve CNNs, the latest works such as ConvNeXt [45] and Intern Image [46] redesigned CNN architecture with inspirations from ViTs and achieved SOTA accuracy on ImageNet. This refutes the previous statement that CNNs are inferior to ViTs. Apart from the above, recent works like CoAtNet [47] and ConViT [48] merge CNNs and ViTs to form new hybrid architectures. Note that ViT-22B is the largest vision model to date, whose scale is significantly larger than that (1.08B) of the current art of CNNs (Intern Image) but is still much behind that of the contemporary LLMs.

视觉Transformer (ViT) 和卷积神经网络 (CNN) 是LVM的两大主流架构体系。在视觉Transformer领域,开创性工作ViT [40] 和iGPT [35] 以最小修改将Transformer架构从NLP迁移至CV领域,但由此产生的架构存在计算复杂度高的问题(与图像尺寸呈平方关系)。随后,TNT [41] 和Swin Transformer [42] 等研究对Transformer进行视觉数据适配优化。近期,ViT-G/14 [32]、SwinV2-G [43] 和ViT-22B [44] 通过系列训练技巧大幅扩展视觉Transformer规模,在多项基准测试中达到最先进 (SOTA) 精度。虽然ViT在LVM发展中看似比CNN更具势头,但ConvNeXt [45] 和Intern Image [46] 等最新研究通过借鉴ViT思想重构CNN架构,在ImageNet上同样实现了SOTA精度,这反驳了先前"CNN逊于ViT"的论断。此外,CoAtNet [47] 和ConViT [48] 等近期工作通过融合CNN与ViT形成新型混合架构。值得注意的是,ViT-22B是目前规模最大的视觉模型,其参数量(220亿)远超当前CNN最高水平(Intern Image的10.8亿),但仍远落后于同期大语言模型的规模。

Architecturally speaking, LVMs are largely-scaled-up variants of their base architectures. How they are scaled up can significantly impact the final performance. Simply increasing the depth by repeating layers vertically may be suboptimal [49], so a line of studies [46], [50], [51] investigate the rules for effective scaling. Furthermore, scaling the model size up is usually combined with larger-scale pre-training [49], [52] and efficient parallelism [53] for improved performance.

从架构角度来看,LVM(大规模视觉模型)本质上是其基础架构的放大版本。模型扩展方式会显著影响最终性能。简单地通过垂直堆叠层来增加深度可能并非最优方案 [49],因此一系列研究 [46][50][51] 探索了高效扩展的规律。此外,扩大模型规模通常需要结合更大规模的预训练 [49][52] 和高效并行技术 [53] 来提升性能。

LVMs also transform other fundamental computer vision tasks beyond classification. The latest breakthrough in segmentation task is SAM [2]. SAM is built with a ViT-H image encoder (632M), a prompt encoder and a transformer-based mask decoder that predicts object masks from the output of the above two encoders. Prompts can be points or bounding boxes in images or text. SAM demonstrates a remarkable zero-shot generalization ability to segment unseen objects and images. Furthermore, to train SAM, a largest segmentation dataset to date, SA-1B, with over 1B masks is constructed.

LVMs 也在分类之外的其他基础计算机视觉任务中带来变革。分割任务的最新突破是 SAM [2]。SAM 由 ViT-H 图像编码器 (632M)、提示编码器和基于 Transformer 的掩码解码器构成,该解码器根据前两个编码器的输出预测物体掩码。提示可以是图像中的点、边界框或文本。SAM 展现出卓越的零样本泛化能力,能够分割未见过的物体和图像。此外,为训练 SAM,研究团队构建了迄今为止最大的分割数据集 SA-1B,包含超过 10 亿个掩码。

C. Large Multi-modal Models

C. 大语言多模态模型

This section describes large multi-modal models (LMMs). While the primary focus is on one type of LMMs: large visionlanguage models (LVLMs), multi-modality beyond vision and language is also summarized in the end.

本节介绍大型多模态模型 (LMM)。虽然主要关注一种特定类型的大型视觉语言模型 (LVLM),但文末也总结了超越视觉与语言的多模态研究。

Training LVLMs like CLIP [54] requires more than hundreds of millions of image-text pairs. Such large amount of data was often closed source [54]–[56]. Until recently, LAION-5B [57] was created with 5.85B data samples, matching the size of the largest private dataset while being available to the public.

训练如CLIP [54]这样的大视觉语言模型(LVLM)需要超过数亿的图文对。如此庞大的数据通常都是闭源的[54]–[56]。直到最近,LAION-5B [57]才公开了5.85亿数据样本,其规模与最大的私有数据集相当。

LVLMs usually adopt a dual-stream architecture: input text and image are processed separately by their respective encoders to extract features. For representation learning, the features from different modalities are then aligned through contrastive learning [54]–[56] or fused into a unified representation through another encoder on the top of all extracted features [58]–[62]. Typically, the entire model, including unimodal encoder and multi-modal encoder if have, is pre-trained on the aforementioned large-scale image-text datasets, and fine-tuned on the downstream tasks or to carry out zeroshot tasks without fine-tuning. The pre-training objectives can be multi-modal tasks only or with unimodal tasks (see Section II-B). Common multi-modal pre-training tasks contain image-text contrastive learning [54]–[56], image-text matching [58], [61], [63], autoregressive modeling [59], [62], masked modeling [58], image-grounded text generation [61], [63], etc. Recent studies suggest that scaling the unimodal encoders up [60], [64] and pre-training with multiple objectives across uniand multi-modalities [61], [63] can substantially benefit multimodal representation learning.

LVLM通常采用双流架构:输入文本和图像分别由各自的编码器处理以提取特征。在表征学习阶段,不同模态的特征通过对比学习[54]–[56]进行对齐,或通过在所有提取特征顶部叠加的另一编码器融合为统一表征[58]–[62]。典型流程包括:整个模型(含单模态编码器及可能存在的多模态编码器)先在所述大规模图文数据集上预训练,再在下游任务微调或直接执行零样本任务。预训练目标可仅包含多模态任务,也可联合单模态任务(参见第II-B节)。常见多模态预训练任务包括图文对比学习[54]–[56]、图文匹配[58][61][63]、自回归建模[59][62]、掩码建模[58]、基于图像的文本生成[61][63]等。最新研究表明,扩大单模态编码器规模[60][64]及跨单/多模态的多目标预训练[61][63]能显著提升多模态表征学习效果。

Recently, LVLMs made a major breakthrough in text-toimage generation. There are generally two classes of methods for such a task: auto regressive model [65]–[67] and diffusion model [67]–[70]. Auto regressive model, like introduced in Section II-B, first concatenates the tokens (returned by some encoders) of text and images together and then learns a model to predict the next item in the sequence. In contrast, diffusion model first perturbs an image with random noise progressively until the image becomes complete noise (forward diffusion process) and then learns a model to gradually denoise the completely noisy image to restore the original image (reverse diffusion process) [71]. Text description is first encoded by a separate encoder and then integrated into the reverse diffusion process as the input to the model so that the image generation can be conditioned on the text prompt. It is common to reuse those pre-defined LLM and LVM architectures and/or their pre-trained parameters as the aforementioned encoders. The scale of these encoders and the generator can significantly impact the quality of generation and the ability of language understanding [66], [70].

最近,大语言视觉模型 (LVLM) 在文生图领域取得重大突破。这类任务通常采用两类方法:自回归模型 [65][66][67] 和扩散模型 [67][68][69][70]。如第 II-B 节所述,自回归模型首先将文本和图像的 token (由编码器生成) 拼接在一起,然后训练模型预测序列中的下一项。相比之下,扩散模型先通过逐步添加随机噪声破坏图像 (前向扩散过程),再训练模型对完全噪声化的图像逐步去噪以重建原图 (逆向扩散过程) [71]。文本描述会先经过独立编码器处理,再作为条件输入融入逆向扩散过程,从而实现基于文本提示的图像生成。通常,这些预定义的大语言模型和视觉模型架构及其预训练参数会被复用为前述编码器。编码器和生成器的规模会显著影响生成质量与语言理解能力 [66][70]。

The paradigm of bridging language and vision modalities can be beyond learning, e.g., using LLM to instruct other LVMs to perform vision-language tasks [72]. Beyond vision and language, recent development in LMMs seeks to unify more modalities under one single framework, e.g., ImageBind [73] combines six whereas Meta-Transformer [74] unifies twelve modalities.

连接语言与视觉模态的范式可以超越单纯的学习,例如利用大语言模型指导其他视觉语言模型 (LVMs) 执行视觉语言任务 [72]。除视觉和语言外,近期多模态大模型 (LMMs) 的发展致力于在单一框架下统一更多模态,例如 ImageBind [73] 整合了六种模态,而 Meta-Transformer [74] 则统一了十二种模态。

III. APPLICATIONS OF LARGE AI MODELS IN HEALTH INFORMATICS

III. 大语言模型在健康信息学中的应用

In this section, we identify seven key sectors in which LAMs will have substantial influence and bring a new paradigm for tackling the problems and challenges in health informatics. The seven key sectors include 1) bioinformatics; 2) medical diagnosis; 3) medical imaging; 4) medical informatics; 5) medical education; 6) public health; and 7) medical robotics. Table I compares current LAMs with previous SOTA methods in these seven sectors.

在本节中,我们确定了七个关键领域,LAMs将在这些领域产生重大影响,并为解决健康信息学中的问题和挑战带来新范式。这七个关键领域包括:1) 生物信息学;2) 医疗诊断;3) 医学影像;4) 医学信息学;5) 医学教育;6) 公共卫生;7) 医疗机器人。表 I 比较了当前LAMs与这些领域中先前SOTA方法的差异。

A. Bioinformatics

A. 生物信息学

Molecular biology studies the roles of biological macromolecules (e.g., DNA, RNA, protein) in life processes and describes various life activities and phenomena including the structure, function, and synthesis of molecules. Although many experimental attempts have been made on this topic over decades [75]–[77], they are still of high cost, long experiment cycle, and high production difficulty. For example, the number of experimentally determined protein structures stored in the protein data bank (PDB) hardly rivals the number of protein sequences that have been generated. Efficient and accurate computational methods are therefore needed and can be used to accelerate the protein structure determination process. Due to the huge number of parameters and learning capacity, LAMs endow us with prospects to approach such a Herculean task. Especially, LLMs’ outstanding representation learning ability has been employed to implicitly model the biological properties hidden in large-scale unlabeled data including RNA and protein sequences.

分子生物学研究生物大分子(如DNA、RNA、蛋白质)在生命过程中的作用,描述包括分子结构、功能及合成在内的各种生命活动与现象。尽管数十年来已有大量实验研究[75]-[77],但这些方法仍存在成本高、实验周期长、制备难度大等问题。例如,蛋白质数据库(PDB)中实验确定的蛋白质结构数量远不及已测序的蛋白质序列数量。因此需要高效准确的计算方法来加速蛋白质结构测定流程。由于参数量巨大且具备强大学习能力,大语言模型为我们应对这一艰巨任务提供了可能。特别是大语言模型卓越的表征学习能力,已被用于隐式建模RNA和蛋白质序列等大规模未标注数据中隐藏的生物学特性。

When it comes to the field of protein, starting from amino acid sequences, we can analyze the spatial structure of proteins and furthermore understand their functions, and mutual interactions. AlphaFold2 [78] pioneered leveraging the attention-based Transformer model [3] to predict protein structures. Specifically, they treated structure prediction as a 3D graph inference problem, where the network’s inputs are pairwise features between residues, available templates, and multi-sequence alignment (MSA) embeddings. Especially, embeddings extracted from MSA can infer the evolutionary information between aligned sequences. Evoformer and structure modules were proposed to update the input representation and predict the final 3D structure, the whole process of which was recycled several times. Meanwhile, despite being trained on single-protein chains, AlphaFold2 exhibits the ability to predict multimers. To further enable multimeric inputs for training, DeepMind proposed AlphaFold-Multimer [79], achieving impressive performance, especially in he te rome ric protein complexes structure prediction. Specifically, positional encoding was improved to encode chains, and multi-chain MSAs were paired based on species annotations and target sequence similarity.

在蛋白质研究领域,从氨基酸序列出发,我们可以分析蛋白质的空间结构,进而理解其功能与相互作用。AlphaFold2 [78] 率先采用基于注意力机制的Transformer模型 [3] 来预测蛋白质结构。具体而言,该研究将结构预测视为三维图推理问题,网络输入包括残基间的成对特征、可用模板以及多序列比对(MSA)嵌入。特别地,从MSA提取的嵌入能够推断比对序列间的进化信息。研究提出了Evoformer和结构模块来更新输入表示并预测最终三维结构,整个过程会循环多次。尽管训练数据仅包含单蛋白链,AlphaFold2仍展现出预测多聚体的能力。为了支持多聚体输入的训练,DeepMind提出了AlphaFold-Multimer [79],在异源多聚体蛋白复合物结构预测中表现尤为突出。具体改进包括:采用链编码的位置编码方案,并根据物种注释与目标序列相似性对多链MSA进行配对。

In spite of the groundbreaking endeavors aforementioned works have contributed, to achieving optimal prediction, they still heavily rely on MSAs and templates searched from genetic and structure databases, which is time-consuming. Analogous to mining semantic information in natural language, researchers managed to explore co-evolution information in protein sequences in a self-supervised manner by employing large-scale protein language models (PLMs), which learn the global relation and long-range dependencies of unaligned and unlabelled protein sequences. ProGen (1.2B) [80] utilized a conditional language model to provide controllable generation of protein sequences. By inputting desired tags (e.g., function, organism), ProGen can generate corresponding proteins such as enzymes with good functional activity. Elnaggar et al. [81] devised ProtT5-XXL (11B) which was first trained on BFD [82] and then fine-tuned on UniRef50 [83] to predict the secondary structure. ESMfold [84] scaled the number of model parameters up to 15B and observed a significant prediction improvement over AlphaFold2 (0.68 vs 0.38 for TM-score on CASP14) with considerably faster inference speed when MSAs and templates are unavailable. Similarly, from only the primary sequence input, OmegaFold [85] can outperform MSA-based methods [78], [86], especially when predicting orphan proteins that are characterized by the paucity of homologous structure. xTrimoPGLM [87] proposed a unified pre-training strategy that integrates the protein understanding and generation by optimizing masked language modelling and general language modelling concurrently and achieved remarkable performance over 13 diverse protein tasks with its 100B parameters. For instance, for GB1 fitness prediction in protein function task, xTrimoPGLM outperforms the previous SOTA method: Ankh [88], with an $11%$ performance increase. Moreover, for antibody structure prediction, xTrimoPGLM outperformed AlphaFold2 (TM-score: 0.951) and achieved SOTA performance (TM-score: 0.961) with significantly faster inference speed. We underscore that in the presence of MSA, although the performance of PLMs is hardly on par with Alphfold2, PLMs can make predictions several orders of magnitude faster, which speeds up the process of related applications such as drug discovery. In addition, because PLMs implicitly understand the deep information implied in protein sequences, they are promising to predict mutations in protein structures and their potential impact to help guide the design of next-generation vaccines.

尽管上述研究取得了突破性进展,但为了实现最优预测,它们仍严重依赖从遗传和结构数据库中搜索的多序列比对(MSA)和模板,这一过程耗时较长。类似于挖掘自然语言中的语义信息,研究者尝试通过大规模蛋白质语言模型(PLM)以自监督方式探索蛋白质序列中的共进化信息,这类模型能够从未对齐且无标注的蛋白质序列中学习全局关系和长程依赖性。ProGen(1.2B)[80]采用条件语言模型实现可控的蛋白质序列生成,通过输入目标标签(如功能、生物体),可生成具有良好功能活性的酶等对应蛋白质。Elnaggar等人[81]开发的ProtT5-XXL(11B)先在BFD[82]上预训练,再于UniRef50[83]上微调以预测二级结构。ESMfold[84]将模型参数量扩展至150亿,在无MSA和模板时,其预测性能显著超越AlphaFold2(CASP14测试集TM-score:0.68 vs 0.38),且推理速度大幅提升。同样仅凭一级序列输入,OmegaFold[85]能超越基于MSA的方法[78][86],尤其在预测缺乏同源结构的孤儿蛋白时表现突出。xTrimoPGLM[87]提出统一预训练策略,通过同步优化掩码语言建模和通用语言建模实现蛋白质理解与生成的融合,其1000亿参数模型在13项蛋白质任务中表现卓越。例如在蛋白质功能任务的GB1适应性预测中,xTrimoPGLM以11%的性能提升超越此前SOTA方法Ankh[88]。此外在抗体结构预测中,其TM-score达0.961,优于AlphaFold2(0.951)且推理速度更快。我们强调,虽然存在MSA时PLM性能仍难与AlphaFold2比肩,但其预测速度可快数个数量级,能加速药物发现等应用进程。由于PLM能隐式理解蛋白质序列的深层信息,它们有望预测蛋白质结构突变及其潜在影响,从而指导下一代疫苗设计。

In the context of RNA structure prediction, the number of non redundant 3D RNA structures stored in PDB is significantly less than that of protein structures, which hinders the accurate and general iz able prediction of RNA structure from sequence information using deep learning. To mitigate the severe un availability of labeled RNA data, Chen et al. [89] proposed the RNA foundation model (RNA-FM), which learns evolutionary information implicitly from 23 million unlabeled ncRNA sequences [90] by recovering masked nucleotide tokens, to facilitate multiple downstream tasks including RNA secondary structure prediction and 3D closeness prediction. Especially, for secondary structure prediction, RNAFM achieves $3-5%$ performance increase among three metrics (i.e., Precision, Recall, and F1-score), compared to UFold [91] which utilizes U-Net as the backbone. Furthermore, based on RNA-FM, Shen et al. [92] pioneered predicting 3D RNA structure directly.

在RNA结构预测领域,PDB数据库中存储的非冗余RNA三维结构数量远少于蛋白质结构,这阻碍了利用深度学习从序列信息中实现准确且可泛化的RNA结构预测。为缓解标记RNA数据的严重不足,Chen等人[89]提出了RNA基础模型(RNA-FM),该模型通过恢复被遮蔽的核苷酸token,从2300万条未标记的非编码RNA序列[90]中隐式学习进化信息,以促进包括RNA二级结构预测和三维紧密度预测在内的多项下游任务。特别是在二级结构预测方面,与采用U-Net架构的UFold[91]相比,RNA-FM在三个指标(即精确率、召回率和F1值)上实现了3-5%的性能提升。此外,Shen等人[92]基于RNA-FM开创了直接预测RNA三维结构的方法。

Undoubtedly, these models are seminal and have reduced the time and cost of molecule structure prediction by a

毫无疑问,这些模型具有开创性意义,大幅降低了分子结构预测的时间和成本。

TABLE I: Comparison between state-of-the-art LAMs (second row) and prior arts (first row) in typical tasks of seven biomedical and health sectors. “−” denotes not applicable. “N/R” denotes not released in the original literature. For zero-shot medical segmentation task, we tested the off-the-shelf Mask RCNN model to compare with SAM.

表 1: 当前最优LAM(第二行)与现有技术(第一行)在七个生物医学和健康领域典型任务中的对比。"-"表示不适用。"N/R"表示原始文献未发布。对于零样本医学分割任务,我们测试了现成的Mask RCNN模型以与SAM进行对比。

| Sector | Task | Model Name | Model Type | Model Size | (Pre-)training Dataset | Evaluation Dataset | Evaluation Metric | Performance | OA |

| Bioinformatics | MSA-free Protein Sequence Prediction | Alphafold2 | 93M | ProteinData Bank | CASP14 | TM Score | 0.38 | ||

| ESMfold | LLM | 15B | UniRef | 0.68 | |||||

| RNA Structure Prediction | UFold | 8.64 M | RNAStralign, bpRNA-TRO | Archivell600 | 90.5% F1 Score | ||||

| RNA-FM | LLM | N/R | RNAcentral | 94.1% | |||||

| GB1Fitness Prediction | Ankh | 450M | FLIPDatabase | FLIPDatabase | 0.85 Spearman | ||||

| xTrimoPGLM | LLM | 100B | FLIPDatabase | Score 0.96 | × | ||||

| Medical Diagnosis | Electrocardiogram- based Diagnosis | EfficientNet-B4 | 19 M | ImageNet | Morningside Hospital Data | AUROC | 0.92&0.77 | ||

| HeartBEiT | LVM 86M | 8.5MECGs | 0.93&0.80 | × | |||||

| Medical lmaging | Zero-Shot Medical Segmentation | Mask RCNN | 44 M | COCO | ISIC 2018 Dice Challenge | 39.7% | |||

| SAM | LVM >636M | SA-1B | 73.0% | ||||||

| Medical Segmentation | nnU-Net | 60M | Total- Segmentator TotalSegmentator | Dice | 86.8% | ||||

| STU-Net | LVM | 1.4 B | Total- Segmentator | 90.1% | |||||

| Medical Informatics | Medical Question Answering | PubMedBERT | LLM | 110 M | PubMed | MedQA | 38.1% Accuracy | ||

| Med PaLM 2 | LLM | N/R | N/R | 86.5% × | |||||

| Clinical Concept Extraction | BioBERT | LLM | 345 M | 18 B words | F1Score | 87.8% | |||

| GatorTron-Large | LLM | 8.9B | 2018 n2c2 90 B words | 90.0% | |||||

| Medical Education | United StatesMedical Licensing Examination | GPT-3.5 | LLM | 175B | N/R Sample Exam | Accuracy | 58.8% | × | |

| GPT-4 | LMM N/R | N/R | 86.7% | × | |||||

| PublicHealth | Global Weather Forecast | Operational IFS | ERA5 (5-day Z500) | RMSE | 333.7 | × | |||

| Pangu-Weather | LVM 256M | ERA5 | 296.7 | ||||||

| Medical Robotics | Endoscopic Video Analysis | VCL | 14.4 M | 5 MEndo. Frames PolypDiag & | F1 score& Dice & F1 score | 87.6%& 69.1% & 78.1% | |||

| Endo-FM | LVM | 5 M Endo. 121 M | CVC-12k& KUMC Frames | 90.7%&73.9% &84.1% |

| 领域 | 任务 | 模型名称 | 模型类型 | 模型规模 | (预)训练数据集 | 评估数据集 | 评估指标 | 性能 | OA |

|---|---|---|---|---|---|---|---|---|---|

| 生物信息学 | 无需多序列比对的蛋白质序列预测 | Alphafold2 | 93M | ProteinData Bank | CASP14 | TM Score | 0.38 | ||

| ESMfold | 大语言模型 | 15B | UniRef | 0.68 | |||||

| RNA结构预测 | UFold | 8.64 M | RNAStralign, bpRNA-TRO | Archivell600 | 90.5% F1 Score | ||||

| RNA-FM | 大语言模型 | N/R | RNAcentral | 94.1% | |||||

| GB1适应性预测 | Ankh | 450M | FLIPDatabase | FLIPDatabase | 0.85 Spearman | ||||

| xTrimoPGLM | 大语言模型 | 100B | FLIPDatabase | Score 0.96 | × | ||||

| 医学诊断 | 基于心电图诊断 | EfficientNet-B4 | 19 M | ImageNet | Morningside Hospital Data | AUROC | 0.92&0.77 | ||

| HeartBEiT | LVM 86M | 8.5MECGs | 0.93&0.80 | × | |||||

| 医学影像 | 零样本医学分割 | Mask RCNN | 44 M | COCO | ISIC 2018 Dice Challenge | 39.7% | |||

| SAM | LVM >636M | SA-1B | 73.0% | ||||||

| 医学分割 | nnU-Net | 60M | Total-Segmentator TotalSegmentator | Dice | 86.8% | ||||

| STU-Net | LVM | 1.4 B | Total-Segmentator | 90.1% | |||||

| 医学信息学 | 医学问答 | PubMedBERT | 大语言模型 | 110 M | PubMed | MedQA | 38.1% Accuracy | ||

| Med PaLM 2 | 大语言模型 | N/R | N/R | 86.5% × | |||||

| 临床概念提取 | BioBERT | 大语言模型 | 345 M | 18 B words | F1Score | 87.8% | |||

| GatorTron-Large | 大语言模型 | 8.9B | 2018 n2c2 90 B words | 90.0% | |||||

| 医学教育 | 美国医师执照考试 | GPT-3.5 | 大语言模型 | 175B | N/R Sample Exam | Accuracy | 58.8% | × | |

| GPT-4 | LMM N/R | N/R | 86.7% | × | |||||

| 公共卫生 | 全球天气预报 | Operational IFS | ERA5 (5-day Z500) | RMSE | 333.7 | × | |||

| Pangu-Weather | LVM 256M | ERA5 | 296.7 | ||||||

| 医疗机器人 | 内窥镜视频分析 | VCL | 14.4 M | 5 MEndo. Frames PolypDiag & | F1 score& Dice & F1 score | 87.6%& 69.1% & 78.1% | |||

| Endo-FM | LVM | 5 M Endo. 121 M | CVC-12k& KUMC Frames | 90.7%&73.9% &84.1% |

large margin. Thereby, this raises the question of whether LAMs can completely replace experimental methods such as Cryo-EM [75]. We deem that it still falls short from that point. Specifically, the advance of LAMs builds upon big data and large model capacity, which means they are still data-driven, and hence their ability to predict unseen types of data could sometimes be problematic. For instance, [93] stated that AlphaFold can barely handle missense mutation on protein structure due to the lack of a corresponding dataset. Furthermore, how we can assess the quality of model prediction for unknown protein structures remains unclear. In turn, these unverified protein structures cannot be applied to, for example, drug discovery. Therefore, protocols and metrics need to be established to assess their quality and potential impacts. There are mutual and complementary benefits between LAMs and conventional experimental techniques. LAMs can be re-designed to predict the process of protein folding and reveal their mutual interactions so as to facilitate experimental methods. On the other hand, experimental information, such as some physical properties of molecules, can be leveraged by LAMs to further improve prediction performance, especially when dealing with rare data (e.g., orphan protein).

大差距。因此,这引发了一个问题:LAMs是否能完全取代诸如冷冻电镜 (Cryo-EM) [75] 等实验方法。我们认为目前仍无法达到这一点。具体而言,LAMs的进步建立在海量数据和大模型容量的基础上,这意味着它们仍是数据驱动的,因此其预测未见数据类型的能力有时可能存在问题。例如,[93] 指出,由于缺乏相应数据集,AlphaFold几乎无法处理蛋白质结构的错义突变。此外,如何评估模型对未知蛋白质结构的预测质量仍不明确。反过来,这些未经验证的蛋白质结构也无法应用于药物发现等领域。因此,需要建立协议和指标来评估其质量及潜在影响。LAMs与传统实验技术存在相互补充的优势。LAMs可被重新设计以预测蛋白质折叠过程并揭示其相互作用,从而辅助实验方法。另一方面,LAMs可利用实验信息(如分子的某些物理特性)进一步提升预测性能,尤其是在处理罕见数据(如孤儿蛋白)时。

B. Medical Diagnosis

B. 医疗诊断

As research has been carried out to improve the safety and strengthen the factual grounding of LAMs, it is foreseeable that LAMs will play a significant role in medical diagnosis and decision-making.

随着研究不断推进以提高大语言模型 (LAM) 的安全性和事实依据性,可以预见 LAM 将在医疗诊断和决策领域发挥重要作用。

CheXzero [94], a zero-shot chest X-ray classifier, has demonstrated radiologist-level performance in classifying multiple path o logie s which it never saw in its self-supervised learning process. Recently, ChatCAD [95], a framework that integrates multiple diagnostic networks with ChatGPT, demonstrated a potential use case for applying LLMs in computeraided diagnosis (CAD) for medical images. By stratifying the decision-making process with specialized medical networks, and followed by an iteration of prompts based on the outcomes of those networks as the queries to an LLM for medical recommendations, the workflow of ChatCAD offers an insight into the integration of the LLMs that were pre-trained using a massive corpus, with the upstream specialized diagnostic networks for supporting medical diagnosis and decision-making. Its follow-up work ChatCAD $^+$ [96], shows improved quality of generating diagnostic reports with the incorporation of a retrieval system. Using external knowledge and information retrieval can potentially enable the resulting diagnostics more factually-grounded, and such a design has also been favoured and implemented in the ChatDoctor model [97]. By leveraging a linear transformation layer to align two medical LAMs, XrayGPT [98], a conversational chest X-ray diagnostic tool, shows decent accuracy in responding to diagnostic summary. While most LLMs are based on English, researchers have also managed to fine-tune LLaMa [10], an LLM, with Chinese medical knowledge, and the resulting model shows improved medical expertise in Chinese [99].

CheXzero [94] 是一种零样本胸部X光分类器,在其自监督学习过程中从未见过的多种病理分类任务中展现了与放射科医生相当的性能。最近,ChatCAD [95] 这一整合了多个诊断网络与ChatGPT的框架,展示了将大语言模型应用于医学影像计算机辅助诊断(CAD)的潜在用例。通过采用专业医疗网络分层决策流程,并基于这些网络的输出结果迭代生成提示词作为大语言模型的医学建议查询,ChatCAD的工作流程为整合海量文本预训练的大语言模型与上游专业诊断网络提供了创新思路,以支持医疗诊断和决策制定。其后续研究ChatCAD$^+$[96]通过引入检索系统,提升了诊断报告生成质量。利用外部知识和信息检索可使诊断结果更具事实依据,这一设计理念也在ChatDoctor模型[97]中得到应用。XrayGPT[98]作为胸部X光对话式诊断工具,通过线性变换层对齐两个医疗大语言模型,在诊断摘要响应中表现出良好准确性。尽管多数大语言模型基于英语开发,研究人员仍成功将LLaMa[10]模型适配中文医疗知识,最终模型展现出更强的中文医疗专业能力[99]。

Apart from chest X-ray diagnostics and medical question answering, LAMs have also been applied to other diagnostic scenarios. HeartBEiT [100], a foundation model pre-trained using 8.5 million electrocardiograms (ECGs), shows that largescale ECG pre-training could produce accurate cardiac diagnosis and improved explain ability of the diagnostic outcome, and the amount of annotated data for downstream fine-tuning could be reduced. Medical LAMs may also potentially produce a more reliable forecast of treatment outcomes and the future development of diseases using their strong reasoning capability. For example, Li et al. [101] proposed BEHRT, which is able to predict the most likely disease of a patient in his/her next visit by learning from a large archive of EHRs. Rasmy et al. [102] proposed Med-BERT, which is able to predict the heart failure of diabetic patients.

除了胸部X光诊断和医疗问答,LAMs还被应用于其他诊断场景。HeartBEiT [100] 是一个基于850万份心电图(ECG)预训练的基础模型,研究表明大规模ECG预训练能够实现准确的心脏诊断,并提升诊断结果的可解释性,同时减少下游微调所需的标注数据量。医疗LAMs凭借其强大的推理能力,还可能更可靠地预测治疗结果和疾病发展进程。例如Li等人[101]提出的BEHRT能够通过学习大量电子健康记录(EHRs)预测患者下次就诊时最可能患的疾病。Rasmy等人[102]提出的Med-BERT则可以预测糖尿病患者的心力衰竭风险。

With the ubiquity of internet, medical LAMs can also offer remote diagnosis and medical consultation for people at home, providing people in need with more flexibility. We also envision that future diagnosis of complex diseases may also be conducted or assisted by a panel of clinical LAMs.

随着互联网的普及,医疗大语言模型还能为居家患者提供远程诊断和医疗咨询,为有需求的人群提供更灵活的服务。我们预见未来复杂疾病的诊断也可能由一组临床大语言模型主导或辅助完成。

C. Medical Imaging

C. 医学影像

The adoption of medical imaging and vision techniques has vastly influenced the process of diagnosis and treatment of a patient. The wide use of medical imaging, such as CT and MRI, has produced a vast amount of multi-modal, multisource, and multi-organ medical vision data to accelerate the development of medical vision LAMs.

医学影像与视觉技术的应用极大地影响了患者的诊疗过程。CT和MRI等医学影像的广泛使用产生了大量多模态、多源、多器官的医学视觉数据,推动着医学视觉大语言模型的发展。

The recent success of SAM [2] has drawn much attention within the medical imaging community. SAM has been extensively examined in medical imaging, especially on its zero-shot segmentation ability. While research revealed that for certain medical imaging modalities and targets, the zeroshot performance of SAM is impressive (e.g., on endoscopic and der mos co pic images, as these are essentially RGB images, which are the same type as that of SAM’s pre-training images), for imaging modalities that are medicine-specific such as MRI and OCT (optical coherence tomograpy), SAM often fails to segment targets in a zero-shot way [103], mainly because the topology and presentation of a target in those imaging modalities are much different from what SAM has seen during pre-training. Nevertheless, after adaptation and fine-tuning, the medical segmentation accuracy of SAM can surpass current SOTA with a clear margin [104], showing the potential of extending versatility of general LVMs to medical imaging with parameter-efficient adaptation. Apart from zero-shot segmentation, MedCLIP [105] was proposed, a contrastive learning framework for decoupled medical images and text, which demonstrated impressive zero-shot medical image classification accuracy. In particular, it yielded over $80%$ accuracy in detecting Covid-19 infection in a zero-shot setting. The recent PLIP model [106], built using image-text pairs curated from medical Twitter, enables both image-based and text-based pathology image retrieval, as well as enhanced zeroshot pathology image classification compared to CLIP [54].

SAM [2] 近期的成功在医学影像领域引起了广泛关注。SAM 在医学影像中得到了广泛测试,尤其是其零样本分割能力。研究表明,对于某些医学影像模态和目标,SAM 的零样本表现令人印象深刻(例如在内窥镜和皮肤镜图像上,因为这些本质上都是 RGB 图像,与 SAM 预训练图像类型相同),但对于医学特有的成像模态(如 MRI 和 OCT(光学相干断层扫描)),SAM 往往无法以零样本方式分割目标 [103],主要是因为这些成像模态中目标的拓扑结构和表现形式与 SAM 预训练所见差异较大。然而,经过适配和微调后,SAM 的医学分割精度能显著超越当前 SOTA [104],展现了通过参数高效适配将通用 LVMs 的泛化能力扩展到医学影像的潜力。除零样本分割外,MedCLIP [105] 提出了一种针对解耦医学图像和文本的对比学习框架,在零样本医学图像分类中表现出色。特别是在零样本设置下检测 Covid-19 感染时,其准确率超过 $80%$。最新提出的 PLIP 模型 [106] 利用医学 Twitter 整理的图文对构建,支持基于图像和文本的病理图像检索,并在零样本病理图像分类上较 CLIP [54] 有所提升。

Many medical imaging modalities are 3-dimensional (3D), and thus developing 3D medical LVMs are crucial. Med3D [107], a heterogeneous 3D framework that enables pre-training on multi-domain medical vision datasets, shows strong generalization capabilities in downstream tasks, such as lung segmentation and pulmonary nodule classification.

许多医学成像模式都是三维(3D)的,因此开发3D医学大语言模型至关重要。Med3D [107]是一个异构3D框架,能够在多领域医学视觉数据集上进行预训练,在下游任务(如肺部分割和肺结节分类)中展现出强大的泛化能力。

With the success of generative LAMs such as Stable Diffusion [68] in the general domain, which can generate realistic high-fidelity images with text descriptions, Chambon et al. [108] recently fine-tuned Stable Diffusion on medical data to generate synthetic chest X-ray images based on clinical descriptions. The encouraging generative capability of Stable Diffusion in the medical domain may inspire more future research on using generative LAMs to augment medical data that are conventionally hard to obtain, and expensive to annotate.

随着Stable Diffusion [68]等生成式大语言模型在通用领域的成功(能够根据文本描述生成逼真的高保真图像),Chambon等人[108]最近在医疗数据上对Stable Diffusion进行了微调,以基于临床描述生成合成胸部X光图像。Stable Diffusion在医疗领域展现出的鼓舞人心的生成能力,可能会激发更多未来研究,探索如何利用生成式大语言模型来增强传统上难以获取且标注成本高昂的医疗数据。

Nevertheless, some compromises are also evident in medical vision LAMs. For example, the currently common practice of training LVMs and LMMs often limits the size of the medical images to shorten the training time and reduce the computational costs. The reduced size inevitably causes information loss, e.g., some small lesions that are critical for accurate recognition might be removed in a compressed down sampled medical image, whereas doctors could examine the original high-resolution image and spot these early-stage tumors. This may cause performance discrepancies between current medical vision LAMs and well-trained doctors. In addition, although research has shown that increasing medical LAM size and data size could improve medical domain performance of the model, e.g., STU-Net [109], a medical segmentation model with 1.4 billion parameters, the best practice of model-data scaling is yet to be conclusive in medical imaging and vision.

然而,医疗视觉LAMs也存在明显妥协。例如,当前训练LVMs和LMMs的常见做法往往缩小医学图像尺寸以缩短训练时间并降低计算成本。这种尺寸缩减不可避免地导致信息丢失——某些对精准识别至关重要的微小病灶可能在压缩降采样后的医学图像中被剔除,而医生却能通过原始高分辨率图像发现这些早期肿瘤。这可能导致当前医疗视觉LAMs与训练有素的医生之间存在性能差异。此外,尽管研究表明扩大医疗LAM规模和数据量可提升模型在医疗领域的表现(如拥有14亿参数的医学分割模型STU-Net [109]),但在医学影像与视觉领域,模型-数据规模化的最佳实践尚未形成定论。

D. Medical Informatics

D. 医学信息学

In medical informatics, it has been a topic of long-standing interest to leverage large-scale medical information and signals to create AI models that can recognize, summarize, and generate medical and clinical content.

在医学信息学领域,如何利用大规模医疗信息和信号来创建能够识别、总结及生成医学临床内容的AI模型,一直是一个备受关注的长期课题。

Over the past few years, with advances in the development of LLMs [9], [13], [110], and the abundance of EHRs as well as public medical text outlets such as PubMed [111], [112], research has been carried out to design and propose Biomedical LLMs. Since the introduction of BioBERT [113], a seminal Biomedical LLM which outperformed previous SOTA methods on various biomedical text mining tasks such as biomedical named entity recognition, many different Biomedical LLMs that stem from their general LLM counterparts have been proposed, including Clinic alBERT [114], BioMegatron [115], BioMe d RoBERTa [116], Med-BERT [117], Bio- ELECTRA [118], PubMedBERT [119], Bio Link BERT [120], BioGPT [121], and Med-PaLM [122].

过去几年,随着大语言模型(LLM) [9][13][110]的发展进步,以及电子健康记录(EHR)和PubMed [111][112]等公共医学文本资源的丰富,研究人员开始致力于设计和提出生物医学大语言模型。自开创性的BioBERT [113]问世以来——这个生物医学大语言模型在生物医学命名实体识别等多项生物医学文本挖掘任务上超越了之前的SOTA方法——许多源自通用大语言模型的生物医学变体相继被提出,包括ClinicalBERT [114]、BioMegatron [115]、BioMed-RoBERTa [116]、Med-BERT [117]、BioELECTRA [118]、PubMedBERT [119]、BioLinkBERT [120]、BioGPT [121]和Med-PaLM [122]。

The recent GatorTron [123] model (8.9 billion parameters) pre-trained with de-identified clinical text (82 billion words) revealed that scaling up the size of clinical LLMs leads to improvements on different medical language tasks, and the improvements are more substantial for complex ones, such as medical question answering and inference. Previously, the PubMedBERT work [119] also suggested that pre-training an LLM with biomedical corpora from scratch can lead to better results than continually training an LLM that has been pre-trained on the general-domain corpora. While training large number of parameters may seem daunting, parameterefficient adaptation techniques such as low-rank adaptation (LoRA) [124] have enabled researchers to efficiently adapt a 13 billion LLaMa model to produce decent US Medical Licensing Exam (USMLE) answers, and the performance