Foundation models for generalist medical artificial intelligence

https://doi.org/10.1038/s41586-023-05881-4

Received: 3 November 2022

Accepted: 22 February 2023

Published online: 12 April 2023

Michael Moor1,6, Oishi Banerjee2,6, Zahra Shakeri Hossein Abad3, Harlan M. Krumholz4, Jure Leskovec1, Eric J. Topol5,7 ✉ & Pranav Rajpurkar2,7 ✉

The exceptionally rapid development of highly flexible, reusable artificial intelligence (AI) models is likely to usher in newfound capabilities in medicine. We propose a new paradigm for medical AI, which we refer to as generalist medical AI (GMAI). GMAI models will be capable of carrying out a diverse set of tasks using very little or no task-specific labelled data. Built through self-supervision on large, diverse datasets, GMAI will flexibly interpret different combinations of medical modalities, including data from imaging, electronic health records, laboratory results, genomics, graphs or medical text. Models will in turn produce expressive outputs such as free-text explanations, spoken recommendations or image annotations that demonstrate advanced medical reasoning abilities. Here we identify a set of high-impact potential applications for GMAI and lay out specific technical capabilities and training datasets necessary to enable them. We expect that GMAI-enabled applications will challenge current strategies for regulating and validating AI devices for medicine and will shift practices associated with the collection of large medical datasets.

Foundation models, the latest generation of AI models, are trained on massive, diverse datasets and can be applied to numerous downstream tasks [1]. Individual models can now achieve state-of-the-art performance on a wide variety of problems, ranging from answering questions about texts to describing images and playing video games. This versatility represents a stark change from the previous generation of AI models, which were designed to solve specific tasks, one at a time.

Driven by growing datasets, increases in model size and advances in model architectures, foundation models offer previously unseen abilities. For example, in 2020 the language model GPT-3 unlocked a new capability: in-context learning, through which the model carried out entirely new tasks that it had never explicitly been trained for, simply by learning from text explanations (or ‘prompts’) containing a few examples [5]. Additionally, many recent foundation models are able to take in and output combinations of different data modalities [4,6]. For example, the recent Gato model can chat, caption images, play video games and control a robot arm and has thus been described as a generalist agent [2]. As certain capabilities emerge only in the largest models, it remains challenging to predict what even larger models will be able to accomplish [7].

Although there have been early efforts to develop medical foundation models [8–11], this shift has not yet widely permeated medical AI, owing to the difficulty of accessing large, diverse medical datasets, the complexity of the medical domain and the recency of this development. Instead, medical AI models are still largely developed with a task-specific approach. For instance, a chest X-ray interpretation model may be trained on a dataset in which every image has been explicitly labelled as positive or negative for pneumonia, probably requiring substantial annotation effort. This model would only detect pneumonia and would not be able to carry out the complete diagnostic exercise of writing a comprehensive radiology report. This narrow, task-specific approach produces inflexible models, limited to carrying out tasks predefined by the training dataset and its labels. In current practice, such models typically cannot adapt to other tasks (or even to different data distributions for the same task) without being retrained on another dataset. Of the more than 500 AI models for clinical medicine that have received approval by the Food and Drug Administration, most have been approved for only 1 or 2 narrow tasks [12].

Here we outline how recent advances in foundation model research can disrupt this task-specific paradigm. These include the rise of multimodal architectures [13] and self-supervised learning techniques [14] that dispense with explicit labels (for example, language modelling [15] and contrastive learning [16]), as well as the advent of in-context learning capabilities [5].

These advances will instead enable the development of GMAI, a class of advanced medical foundation models. ‘Generalist’ implies that they will be widely used across medical applications, largely replacing task-specific models.

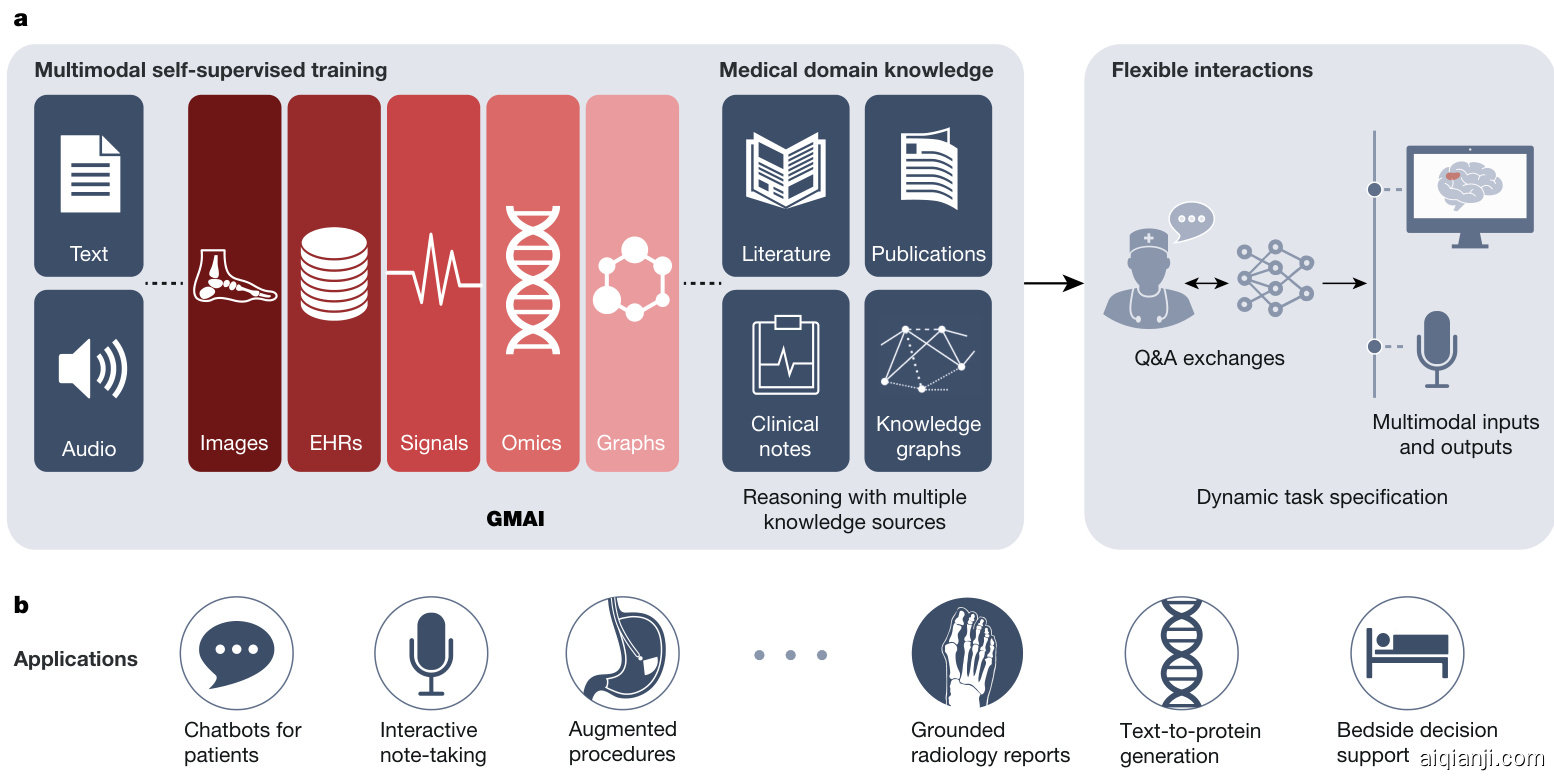

Inspired directly by foundation models outside medicine, we identify three key capabilities that distinguish GMAI models from conventional medical AI models (Fig. 1). First, adapting a GMAI model to a new task will be as easy as describing the task in plain English (or another language). Models will be able to solve previously unseen problems simply by having new tasks explained to them (dynamic task specification), without needing to be retrained [3,5]. Second, GMAI models can accept inputs and produce outputs using varying combinations of data modalities (for example, can take in images, text, laboratory results or any combination thereof). This flexible interactivity contrasts with the constraints of more rigid multimodal models, which always use predefined sets of modalities as input and output (for example, must always take in images, text and laboratory results together). Third, GMAI models will formally represent medical knowledge, allowing them to reason through previously unseen tasks and use medically accurate language to explain their outputs.

Fig. 1 | Overview of a GMAI model pipeline. a, A GMAI model is trained on multiple medical data modalities, through techniques such as self-supervised learning. To enable flexible interactions, data modalities such as images or data from EHRs can be paired with language, either in the form of text or speech data. Next, the GMAI model needs to access various sources of medical knowledge to carry out medical reasoning tasks, unlocking a wealth of capabilities that can be used in downstream applications. The resulting GMAI model then carries out tasks that the user can specify in real time. For this, the GMAI model can retrieve contextual information from sources such as knowledge graphs or databases, leveraging formal medical knowledge to reason about previously unseen tasks. b, The GMAI model builds the foundation for numerous applications across clinical disciplines, each requiring careful validation and regulatory assessment.

We list concrete strategies for achieving this paradigm shift in medical AI. Furthermore, we describe a set of potentially high-impact applications that this new generation of models will enable. Finally, we point out core challenges that must be overcome for GMAI to deliver the clinical value it promises.

The potential of generalist models in medical AI

GMAI models promise to solve more diverse and challenging tasks than current medical AI models, even while requiring little to no labels for specific tasks. Of the three defining capabilities of GMAI, two enable flexible interactions between the GMAI model and the user: first, the ability to carry out tasks that are dynamically specified; and second, the ability to support flexible combinations of data modalities. The third capability requires that GMAI models formally represent medical domain knowledge and leverage it to carry out advanced medical reasoning. Recent foundation models already exhibit individual aspects of GMAI, by flexibly combining several modalities [2] or making it possible to dynamically specify a new task at test time [5], but substantial advances are still required to build a GMAI model with all three capabilities. For example, existing models that show medical reasoning abilities (such as GPT-3 or PaLM) are not multimodal and do not yet generate reliably factual statements.

Flexible interactions

GMAI offers users the ability to interact with models through custom queries, making AI insights easier for different audiences to understand and offering unprecedented flexibility across tasks and settings. In current practice, AI models typically handle a narrow set of tasks and produce a rigid, predetermined set of outputs. For example, a current model might detect a specific disease, taking in one kind of image and always outputting the likelihood of that disease. By contrast, a custom query allows users to come up with questions on the fly: “Explain the mass appearing on this head MRI scan. Is it more likely a tumour or an abscess?”. Furthermore, queries can allow users to customize the format of their outputs: “This is a follow-up MRI scan of a patient with glioblastoma. Outline any tumours in red”.

Custom queries will enable two key capabilities—dynamic task specification and multimodal inputs and outputs—as follows.

Dynamic task specification. Custom queries can teach AI models to solve new problems on the fly, dynamically specifying new tasks without requiring models to be retrained. For example, GMAI can answer highly specific, previously unseen questions: “Given this ultrasound, how thick is the gallbladder wall in millimetres?”. Unsurprisingly, a GMAI model may struggle to complete new tasks that involve unknown concepts or pathologies. In-context learning then allows users to teach the GMAI about a new concept with few examples: “Here are the medical histories of ten previous patients with an emerging disease, an infection with the Langya henipavirus. How likely is it that our current patient is also infected with Langya henipavirus?” [17].
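
As a rough illustration of how such a few-shot query could be assembled, the sketch below builds a plain-text prompt from a task description, a handful of labelled examples and the current case. The `Example` type, the `build_few_shot_prompt` function and the prompt layout are all hypothetical choices made for this illustration; the source does not prescribe a prompt format, and a real GMAI interface might look quite different.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One labelled case shown to the model in context (hypothetical type)."""
    history: str
    label: str


def build_few_shot_prompt(task: str, examples: list[Example], query: str) -> str:
    """Concatenate a task description, labelled examples and the open query.

    The model is expected to continue the text after the final "Answer:",
    so no weights are updated: the new task is specified only via the prompt.
    """
    parts = [task.strip(), ""]
    for i, ex in enumerate(examples, start=1):
        parts.append(f"Example {i}:")
        parts.append(f"History: {ex.history}")
        parts.append(f"Answer: {ex.label}")
        parts.append("")
    parts.append("Current patient:")
    parts.append(f"History: {query}")
    parts.append("Answer:")
    return "\n".join(parts)


if __name__ == "__main__":
    # Placeholder strings only; these are not real patient records.
    demo = build_few_shot_prompt(
        "Decide whether each patient is likely infected with the emerging virus.",
        [Example("<history of previous patient 1>", "infected"),
         Example("<history of previous patient 2>", "not infected")],
        "<history of current patient>",
    )
    print(demo)
```

The point of the design is that the examples live entirely in the input text, which is what distinguishes in-context learning from retraining on a new labelled dataset.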

Multimodal inputs and outputs. Custom queries can allow users to include complex medical information in their questions, freely mixing modalities. For example, a clinician might include multiple images and laboratory results in their query when asking for a diagnosis. GMAI models can also flexibly incorporate different modalities into responses, such as when a user asks for both a text answer and an accompanying visualization. Following previous models such as Gato, GMAI models can combine modalities by turning each modality’s data into ‘tokens’, each representing a small unit (for example, a word in a sentence or a patch in an image) that can be combined across modalities. This blended stream of tokens can then be fed into a transformer architecture [18], allowing GMAI models to integrate a given patient’s entire history, including reports, waveform signals, laboratory results, genomic profiles and imaging studies.
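
The idea of a blended token stream can be sketched in a few lines. The code below is a toy illustration under strong simplifying assumptions: text is split on whitespace, an image is a small 2D grid cut into non-overlapping square patches, and laboratory results are name/value pairs. The `Token` type and the helper names are hypothetical; real systems such as Gato map each unit to integer IDs or learned embeddings rather than keeping raw values.

```python
from typing import NamedTuple


class Token(NamedTuple):
    """A small unit of data tagged with the modality it came from."""
    modality: str
    value: tuple


def tokenize_text(text):
    """One token per whitespace-separated word."""
    return [Token("text", (word,)) for word in text.split()]


def tokenize_image(pixels, patch):
    """Cut a 2D grid into non-overlapping patch x patch tiles, row-major."""
    height, width = len(pixels), len(pixels[0])
    tokens = []
    for r in range(0, height - patch + 1, patch):
        for c in range(0, width - patch + 1, patch):
            tile = tuple(
                pixels[r + dr][c + dc]
                for dr in range(patch)
                for dc in range(patch)
            )
            tokens.append(Token("image", tile))
    return tokens


def tokenize_labs(labs):
    """One token per (name, value) laboratory result."""
    return [Token("lab", (name, value)) for name, value in labs.items()]


def build_stream(*segments):
    """Concatenate per-modality token lists into one sequence."""
    stream = []
    for segment in segments:
        stream.extend(segment)
    return stream


if __name__ == "__main__":
    stream = build_stream(
        tokenize_text("chest pain"),
        tokenize_image([[0, 1, 2, 3], [4, 5, 6, 7]], patch=2),
        tokenize_labs({"troponin": 0.4}),  # placeholder value
    )
    for token in stream:
        print(token)
```

Because every modality ends up in the same sequence, a query may include any subset of modalities, which is the flexibility the paragraph above contrasts with fixed-input multimodal models.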

Medical domain knowledge

In stark contrast to a clinician, conventional medical AI models typically lack prior knowledge of the medical domain before they are trained for their particular tasks. Instead, they have to rely solely on statistical associations between features of the input data and the prediction target, without having contextual information (for example, about pathophysiological processes). This lack of background makes it harder to train models for specific medical tasks, particularly when data for the tasks are scarce.

GMAI models can address these shortcomings by formally representing medical knowledge. For example, structures such as knowledge graphs can allow models to reason about medical concepts and relationships between them. Furthermore, building on recent retrieval-based approaches, GMAI can retrieve relevant context from existing databases, in the form of articles, images or entire previous cases [19,20].
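
To make the notion of reasoning over a knowledge graph more concrete, here is a minimal sketch that stores facts as (head, relation, tail) triples and finds a chain of relations linking two concepts by breadth-first search. The `KnowledgeGraph` class and the three triples are illustrative assumptions, not a description of any existing medical knowledge base; the triples simply restate the definition of an aortoduodenal fistula used elsewhere in this article.

```python
from collections import defaultdict, deque


class KnowledgeGraph:
    """Toy triple store with shortest-path lookup (hypothetical helper)."""

    def __init__(self, triples):
        self.edges = defaultdict(list)
        for head, relation, tail in triples:
            self.edges[head].append((relation, tail))
            # Store the reverse direction so paths can be walked both ways.
            self.edges[tail].append((f"inverse_{relation}", head))

    def find_path(self, start, goal):
        """Return the shortest list of (node, relation, node) hops, or None."""
        queue = deque([(start, [])])
        seen = {start}
        while queue:
            node, path = queue.popleft()
            if node == goal:
                return path
            for relation, neighbour in self.edges[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    queue.append((neighbour, path + [(node, relation, neighbour)]))
        return None


if __name__ == "__main__":
    kg = KnowledgeGraph([
        ("aortoduodenal fistula", "connects", "aorta"),
        ("aortoduodenal fistula", "connects", "duodenum"),
        ("duodenum", "part_of", "small intestine"),
    ])
    for hop in kg.find_path("aorta", "small intestine"):
        print(hop)
```

Each hop in the returned path is an explicit, inspectable fact, which is one way a model's explanation could be tied back to formally represented knowledge.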

The resulting models can raise self-explanatory warnings: “This patient is likely to develop acute respiratory distress syndrome, because the patient was recently admitted with a severe thoracic trauma and because the patient’s partial pressure of oxygen in the arterial blood has steadily decreased, despite an increased inspired fraction of oxygen”.

As a GMAI model may even be asked to provide treatment recommendations, despite mostly being trained on observational data, the model’s ability to infer and leverage causal relationships between medical concepts and clinical findings will play a key role for clinical applicability [21].

Finally, by accessing rich molecular and clinical knowledge, a GMAI model can solve tasks with limited data by drawing on knowledge of related problems, as exemplified by initial works on AI-based drug repurposing [22].

Use cases of GMAI

We present six potential use cases for GMAI that target different user bases and disciplines; the list is far from exhaustive. Although there have already been AI efforts in these areas, we expect GMAI will enable comprehensive solutions for each problem.

Grounded radiology reports. GMAI enables a new generation of versatile digital radiology assistants, supporting radiologists throughout their workflow and markedly reducing workloads. GMAI models can automatically draft radiology reports that describe both abnormalities and relevant normal findings, while also taking into account the patient’s history. These models can provide further assistance to clinicians by pairing text reports with interactive visualizations, such as by highlighting the region described by each phrase. Radiologists can also improve their understanding of cases by chatting with GMAI models: “Can you highlight any new multiple sclerosis lesions that were not present in the previous image?”.

A solution needs to accurately interpret various radiology modalities, noticing even subtle abnormalities. Furthermore, it must integrate information from a patient’s history, including sources such as indications, laboratory results and previous images, when describing an image. It also needs to communicate with clinicians using multiple modalities, providing both text answers and dynamically annotated images. To do so, it must be capable of visual grounding, accurately pointing out exactly which part of an image supports any statement. Although this may be achieved through supervised learning on expert-labelled images, explainability methods such as Grad-CAM could enable self-supervised approaches, requiring no labelled data [23].

Augmented procedures. We anticipate a surgical GMAI model that can assist surgical teams with procedures: “We cannot find the intestinal rupture. Check whether we missed a view of any intestinal section in the visual feed of the last 15 minutes”. GMAI models may carry out visualization tasks, potentially annotating video streams of a procedure in real time. They may also provide information in spoken form, such as by raising alerts when steps of a procedure are skipped or by reading out relevant literature when surgeons encounter rare anatomical phenomena.

This model can also assist with procedures outside the operating room, such as with endoscopic procedures. A model that captures topographic context and reasons with anatomical knowledge can draw conclusions about previously unseen phenomena. For instance, it could deduce that a large vascular structure appearing in a duodenoscopy may indicate an aortoduodenal fistula (that is, an abnormal connection between aorta and the small intestine), despite never having encountered one before (Fig. 2, right panel). GMAI can solve this task by first detecting the vessel, second identifying the anatomical location, and finally considering the neighbouring structures.

A solution needs to integrate vision, language and audio modalities, using a vision–audio–language model to accept spoken queries and carry out tasks using the visual feed. Vision–language models have already gained traction, and the development of models that incorporate further modalities is merely a question of time [24]. Approaches may build on previous work that combines language models and knowledge graphs [25,26] to reason step-by-step about surgical tasks. Additionally, GMAI deployed in surgical settings will probably face unusual clinical phenomena that cannot be included during model development, owing to their rarity, a challenge known as the long tail of unseen conditions [27]. Medical reasoning abilities will be crucial for both detecting previously unseen outliers and explaining them, as exemplified in Fig. 2.

Bedside decision support. GMAI enables a new class of bedside clinical decision support tools that expand on existing AI-based early warning systems, providing more detailed explanations as well as recommendations for future care. For example, GMAI models for bedside decision support can leverage clinical knowledge and provide free-text explanations and data summaries: “Warning: This patient is about to go into shock. Her circulation has destabilized in the last 15 minutes <link to data summary>. Recommended next steps: <link to checklist>”.

Fig. 2 | Illustration of three potential applications of GMAI. a, GMAI could enable versatile and self-explanatory bedside decision support. b, Grounded radiology reports are equipped with clickable links for visualizing each finding. c, GMAI has the potential to classify phenomena that were never encountered before during model development. In augmented procedures, a rare outlier finding is explained with step-by-step reasoning by leveraging medical domain knowledge and topographic context. The presented example is inspired by a case report [58]. Image of the fistula in panel c adapted from ref. 58, CC BY 3.0.

A solution needs to parse electronic health record (EHR) sources (for example, vital and laboratory parameters, and clinical notes) that involve multiple modalities, including text and numeric time series data. It needs to be able to summarize a patient’s current state from raw data, project potential future states of the patient and recommend treatment decisions. A solution may project how a patient’s condition will change over time, by using language modelling techniques to predict their future textual and numeric records from their previous data. Training datasets may specifically pair EHR time series data with eventual patient outcomes, which can be collected from discharge reports and ICD (International Classification of Diseases) codes. In addition, the model must be able to compare potential treatments and estimate their effects, all while adhering to therapeutic guidelines and other relevant policies. The model can acquire the necessary knowledge through clinical knowledge graphs and text sources such as academic publications, educational textbooks, international guidelines and local policies. Approaches may be inspired by REALM, a language model that answers queries by first retrieving a single relevant document and then extracting the answer from it, making it possible for users to identify the exact source of each answer [20].
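
The retrieve-then-read pattern attributed to REALM can be illustrated with a deliberately simple stand-in. The sketch below retrieves the single best-matching document by word overlap and returns it together with its identifier, so that the source of an answer stays visible. This is not REALM's method: REALM uses a learned neural retriever and reader, whereas the word-overlap scoring, the function names and the two placeholder documents here are assumptions made purely for illustration.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Lower-cased word counts, ignoring punctuation."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus):
    """Return the (doc_id, text) pair sharing the most words with the query."""
    query_words = bag_of_words(query)

    def overlap(item):
        _, text = item
        doc_words = bag_of_words(text)
        return sum(min(query_words[w], doc_words[w]) for w in query_words)

    return max(corpus.items(), key=overlap)


def answer_with_source(query, corpus):
    """Retrieve one document and report which source it came from.

    A real system would extract or generate an answer from the passage;
    here the passage itself stands in for the answer.
    """
    doc_id, text = retrieve(query, corpus)
    return {"source": doc_id, "passage": text}


if __name__ == "__main__":
    # Placeholder documents, not real guideline text.
    corpus = {
        "local-policy-001": "Placeholder passage about sepsis and fluid resuscitation.",
        "textbook-ch12": "Placeholder passage about asthma and bronchodilators.",
    }
    print(answer_with_source("Which fluid resuscitation applies in sepsis?", corpus))
```

Returning the document identifier alongside the passage is the part that matters for bedside use: a clinician can check the recommendation against the cited guideline or policy.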

Interactive note-taking. Documentation represents an integral but labour-intensive part of clinical workflows. By monitoring electronic patient information as well as clinician–patient conversations, GMAI models will preemptively draft documents such as electronic notes and discharge reports for clinicians to merely review, edit and approve. Thus, GMAI can substantially reduce administrative overhead, allowing clinicians to spend more time with patients.

A GMAI solution can draw from recent advances in speech-to-text models [28], specializing techniques for medical applications. It must accurately interpret speech signals, understanding medical jargon and abbreviations. Additionally, it must contextualize speech data with information from the EHRs (for example, diagnosis list, vital parameters and previous discharge reports) and then generate free-text notes or reports. It will be essential to obtain consent before recording any interaction with a patient. Even before such recordings are collected in large numbers, early note-taking models may already be developed by leveraging clinician–patient interaction data collected from chat applications.

Chatbots for patients. GMAI has the potential to power new apps for patient support, providing high-quality care even outside clinical settings. For example, GMAI can build a holistic view of a patient’s condition using multiple modalities, ranging from unstructured descriptions of symptoms to continuous glucose monitor readings to patient-provided medication logs. After interpreting these heterogeneous types of data, GMAI models can interact with the patient, providing detailed advice and explanations. Importantly, GMAI enables accessible communication, providing clear, readable or audible information on the patient’s schedule. Whereas similar apps rely on clinicians to offer personalized support at present, GMAI promises to reduce or even remove the need for human expert intervention, making apps available on a larger scale. As with existing live chat applications, users could still engage with a human counsellor on request.

Building patient-facing chatbots with GMAI raises two special challenges. First, patient-facing models must be able to communicate clearly with non-technical audiences, using simple, clear language without sacrificing the accuracy of the content. Including patient-focused medical texts in training datasets may enable this capability. Second, these models need to work with diverse data collected by patients. Patient-provided data may represent unusual modalities; for example, patients with strict dietary requirements may submit before-and-after photos of their meals so that GMAI models can automatically monitor their food intake. Patient-collected data are also likely to be noisier compared to data from a clinical setting, as patients may be more prone to error or use less reliable devices when collecting data. Again, incorporating relevant data into training can help overcome this challenge. However, GMAI models also need to monitor their own uncertainty and take appropriate action when they do not have enough reliable data.

Text-to-protein generation. GMAI could generate protein amino acid sequences and their three-dimensional structures from textual prompts. Inspired by existing generative models of protein sequences [30], such a model could condition its generation on desired functional properties. By contrast, a biomedically knowledgeable GMAI model promises protein design interfaces that are as flexible and easy to use as concurrent text-to-image generative models such as Stable Diffusion or DALL-E [31,32]. Moreover, by unlocking in-context learning capabilities, a GMAI-based text-to-protein model may be prompted with a handful of example instructions paired with sequences to dynamically define a new generation task, such as the generation of a protein that binds with high affinity to a specified target while fulfilling additional constraints.

There have already been early efforts to develop foundation models for biological sequences [33,34], including RFdiffusion, which generates proteins on the basis of simple specifications (for example, a binding target) [35]. Building on this work, a GMAI-based solution can incorporate both language and protein sequence data during training to offer a versatile text interface. A solution could also draw on recent advances in multimodal AI such as CLIP, in which models are jointly trained on paired data of different modalities [16]. When creating such a training dataset, individual protein sequences must be paired with relevant text passages (for example, from the body of biological literature) that describe the properties of the proteins. Large-scale initiatives, such as UniProt, that map out protein functions for millions of proteins, will be indispensable for this effort [36].
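
The joint training on paired data mentioned for CLIP can be illustrated with its symmetric contrastive objective: embeddings of matching pairs are pulled together and mismatched pairs pushed apart. The sketch below computes that loss in plain Python for a small batch, assuming the protein and text encoders already exist and have produced the embeddings. The function names, the fixed temperature of 0.07 and the toy vectors are assumptions for illustration; how a GMAI text-to-protein model would actually be trained is not specified in the source.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def log_softmax(row):
    """Numerically stable log-softmax over a list of logits."""
    peak = max(row)
    log_sum = peak + math.log(sum(math.exp(x - peak) for x in row))
    return [x - log_sum for x in row]


def contrastive_loss(protein_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over pairwise similarities.

    Row i of protein_emb is assumed to be paired with row i of text_emb.
    """
    proteins = [normalize(v) for v in protein_emb]
    texts = [normalize(v) for v in text_emb]
    n = len(proteins)
    logits = [
        [sum(a * b for a, b in zip(proteins[i], texts[j])) / temperature
         for j in range(n)]
        for i in range(n)
    ]
    # Protein-to-text: each protein should pick out its own description.
    loss_p2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-protein: each description should pick out its own protein.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2p = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_p2t + loss_t2p) / 2


if __name__ == "__main__":
    aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
    shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
    print(aligned, shuffled)  # the aligned batch should give the lower loss
```

This objective needs only paired examples, not task labels, which is why the pairing of protein sequences with descriptive text passages matters for building the training dataset.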

Opportunities and challenges of GMAI

GMAI has the potential to affect medical practice by improving care and reducing clinician burnout. Here we detail the overarching advantages of GMAI models. We also describe critical challenges that must be addressed to ensure safe deployment, as GMAI models will operate in particularly high-stakes settings, compared to foundation models in other fields.

Paradigm shifts with GMAI

Controllability. GMAI allows users to finely control the format of its outputs, making complex medical information easier to access and understand. For example, there will be GMAI models that can rephrase natural language responses on request. Similarly, GMAI-provided visualizations may be carefully tailored, such as by changing the viewpoint or labelling important features with text. Models can also potentially adjust the level of domain-specific detail in their outputs or translate them into multiple languages, communicating effectively with diverse users. Finally, GMAI’s flexibility allows it to adapt to particular regions or hospitals, following local customs and policies. Users may need formal instruction on how to query a GMAI model and to use its outputs most effectively.

可控性。GMAI允许用户精细控制其输出格式,使复杂医疗信息