Large language models in medicine: the potentials and pitfalls

Jesutofunmi A. Omiye1\*, Haiwen Gui1\*, Shawheen J. Rezaei1, James Zou2, Roxana Daneshjou1,2

\* These authors contributed equally as co-first authors of this manuscript.

1 Department of Dermatology, Stanford University, Stanford, USA

2 Department of Biomedical Data Science, Stanford University, Stanford, USA

Abstract:

Large language models (LLMs) have been applied to tasks in healthcare, ranging from answering medical exam questions to responding to patient questions. With increasing institutional partnerships between companies producing LLMs and healthcare systems, real-world clinical application is coming closer to reality. As these models gain traction, it is essential for healthcare practitioners to understand what LLMs are, how they are developed, their current and potential applications, and the associated pitfalls when they are used in medicine. This review and accompanying tutorial aim to give an overview of these topics to aid healthcare practitioners in understanding the rapidly changing landscape of LLMs as applied to medicine.

1. Introduction:

Large language models (LLMs) have become increasingly mainstream since OpenAI (San Francisco, USA) launched the publicly available Chat Generative Pre-trained Transformer (ChatGPT) in November 2022 [1]. This milestone was quickly followed by the unveiling of similar models such as Google's Bard [2] and Anthropic's Claude [3], alongside open-source variants such as Meta's LLaMA [4]. LLMs are a subset of foundation models [5] (see Glossary) that are trained on massive text data, can have billions of parameters [6], and are primarily interacted with via text. Fundamentally, a language model serves as a channel that receives text queries and generates text in return. LLMs can be adapted to a wide range of language-related tasks beyond their primary training objective. Their popularity has led to increasing interest in the medical field, where they have been applied to tasks such as note-taking [8], answering medical exam questions [9,10], answering patient questions [11], and generating clinical summaries [8]. Despite their versatility, LLMs' behaviors are poorly understood, and they have the potential to produce medically inaccurate outputs [12] and amplify existing biases [13,14].

Evidence suggests that interest in LLMs is growing among physicians [8,15,16], and institutional partnerships are on the rise. Examples include the use of LLMs in a training module for medical residents at Beth Israel Deaconess Medical Center (Boston, USA) and a partnership with Epic, a major electronic health records provider, to integrate GPT-4 into its services [18]. As these models gain traction, it is essential for physicians to understand what LLMs are, how they are developed, which models exist, their current and potential applications, and the associated pitfalls when they are used in medicine. In this review, we give an overview of how LLMs are trained, as this background is instrumental in exploring their applications and drawbacks; describe previous ways that LLMs have been applied in medicine; and discuss both the limitations and the potential of LLMs in medicine. Additionally, we provide several tutorial-like use cases to allow healthcare practitioners to trial some of the capabilities of one such model, ChatGPT using GPT-3.5.

Glossary:

| Term | Definition |
|---|---|
| Neural networks (NN) | Systems inspired by the neuronal connections in the brain that are capable of learning, recognizing patterns, and making predictions on tasks without explicit programming. They are the building blocks of much of modern machine learning. |
| Foundation Model (FM) | A large-scale NN model trained on vast data to develop broad learning capabilities, which can be fine-tuned for specific tasks. For example, an FM can be fine-tuned to generate reports or answer medical questions. |
| Generative AI | Artificial intelligence that can generate content. This can be audio, visual, or text. |
| Large Language Models (LLMs) | Artificial intelligence models trained on an enormous amount of text data. LLMs are capable of generating human-like text and learning relationships between words. |
| Transformer Architecture | A deep learning model architecture that relies on self-attention mechanisms, differentially weighting the importance of each part of the model's input. This makes it particularly useful for language tasks. |
| Attention | A mechanism within the Transformer architecture that enables the differential weighting mentioned above. |
| Parameters | Values that are learned during the training process of a model. |
| Self-supervised learning | A form of training in which a model learns from unlabeled data by using the input data as its own supervision. A popular example is predicting the next word in a sentence. |
| Tokenization | A preprocessing step in which text is converted into smaller units, such as characters or words, before being fed into the model. For example, "hypertension" can be tokenized into 'hy', 'per', 'tension'. |
| Pre-training | The initial phase of training a model on a large dataset before fine-tuning it on a task-specific dataset. The parameters are updated during this process. |
| Fine-tuning | Further training of a pre-trained model on a specific task, adjusting the pre-existing parameters to achieve better performance on that task. |
| Zero-shot prompting | Using language prompts to get a model to perform specific tasks without having seen explicit examples of those tasks. |
| Few-shot prompting | The model is provided with a few examples of the task in the prompt, hence the name 'few'. |
| … | … how to perform a task, alongside labeled examples that demonstrate the training objective/desired behavior. |
| Multi-modal LLMs | |
| In-Context Learning | |
| Bias (in AI) | |
| MedMCQA | |
| PubMedQA | |

2. Architecture of LLMs

LLMs rely on the 'Transformer' architecture [19,20]. This architecture leverages an 'attention' mechanism, which uses multi-layered neural networks to help LLMs comprehend context and learn meaning within sentences and long paragraphs [6]. Akin to how a physician identifies important details of a patient's case while ignoring extraneous information, this mechanism enables LLMs to 'learn' important relationships between words while ignoring irrelevant information.
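The weighting idea behind attention can be sketched in a few lines. This is a minimal illustration of scaled dot-product self-attention, not the implementation used by any particular model: the two-dimensional token vectors are made up for the example, and the same vectors serve as queries, keys, and values (real Transformers learn separate projections for each).

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(dim).
        scores = [dot(q, k) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical vectors for three tokens; real models learn these.
tokens = ["chest", "pain", "umbrella"]
vectors = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(vectors, vectors, vectors)
```

In this toy setup, the row of `weights` for "chest" assigns more weight to "pain" than to "umbrella" because their vectors point in similar directions, which is the sense in which attention weights relevant context more heavily.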

The training of these complex models involves billions of parameters and has been made possible by recent advancements in computational power and model architecture [5] (Figure 1). For example, GPT-3 was trained on vast data sources and reportedly has about 175 billion parameters [21], while the open-source LLaMA family of models has 7 to 70 billion parameters [4,22]. The first step of LLM training, known as pre-training, is a self-supervised approach that involves training on a large corpus of unlabeled data, such as internet text, Wikipedia, GitHub code, social media posts, and BooksCorpus [4–6]. Some models are also trained on proprietary datasets containing specialized texts such as scientific articles [4]. The training objective is usually to predict the next word in a sentence, and this process is resource-intensive [23]. It requires the conversion of the text into tokens before they are fed into the model [24]. The result of this step is a base model that is simply a general language-generating model and lacks the capacity for nuanced tasks.
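To make tokenization and the next-word objective concrete, here is a small sketch. It assumes a hand-written three-entry vocabulary and a greedy longest-match splitter; production tokenizers learn their vocabularies from data, so this only illustrates the shape of the inputs and labels used in pre-training.

```python
def tokenize(word, vocabulary):
    """Greedy longest-match split of a word into known sub-word tokens."""
    tokens, start = [], 0
    while start < len(word):
        # Try the longest remaining piece first, then shrink it.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocabulary:
                tokens.append(piece)
                start = end
                break
        else:
            # No known piece starts here: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens

def next_token_pairs(tokens):
    """Each prefix is an input; the token that follows it is the label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

vocabulary = {"hy", "per", "tension"}  # hypothetical toy vocabulary
pieces = tokenize("hypertension", vocabulary)
pairs = next_token_pairs(pieces)
```

Here `pieces` is the three-token split used in the Glossary, and `pairs` lists the (context, next token) examples a model would be trained on. No human labeling is needed because the labels come from the text itself, which is what makes the approach self-supervised.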

This base model then undergoes a second phase, known as fine-tuning [24]. Here, the model can be further trained on narrower datasets, such as medical transcripts for a healthcare application or legal briefs for a legal assistant bot. This fine-tuning process can be augmented with a Constitutional AI approach [25], which involves building predefined rules or principles into the model's training. This phase can also be enhanced with reward training [6,26], where humans score the quality of multiple model outputs, and with reinforcement learning from human feedback (RLHF) [26], which employs a comparison-based system to optimize the model's responses. This step, which is less computationally expensive albeit human-intensive, adjusts the model to perform a specific task with controlled outputs. The fine-tuned model from this phase is what is deployed in flexible applications like a chatbot.
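The comparison-based idea can be illustrated with a pairwise preference loss. The text does not specify a formula, so the logistic (Bradley-Terry style) form below is an assumption chosen because it is commonly used for reward models; the reward scores are invented numbers.

```python
import math

def preference_loss(reward_preferred, reward_rejected):
    """Negative log-probability that the human-preferred output wins."""
    margin = reward_preferred - reward_rejected
    probability = 1.0 / (1.0 + math.exp(-margin))  # logistic function
    return -math.log(probability)

# Hypothetical reward scores for two responses to the same prompt.
loss_agree = preference_loss(2.0, 0.5)     # model ranks them as the rater did
loss_disagree = preference_loss(0.5, 2.0)  # model ranks them the other way
```

The loss is small when the reward model scores the human-preferred response higher and grows when the ranking is reversed, so minimizing it pushes the reward model toward the raters' comparisons.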

LLMs' adaptability to unfamiliar tasks [27] and apparent reasoning abilities [28] are captivating. However, unlocking their full potential in specialized fields like medicine requires even more specific strategies. These include direct prompting techniques such as few-shot learning [21,29], where a few examples of a task supplied at test time guide the model's responses, and zero-shot learning [30–32], where no task-specific examples are given. More nuanced approaches also play important roles, such as chain-of-thought prompting [33], which encourages the model to detail its reasoning process step by step, and self-consistency prompting [34], where several responses are sampled and the most consistent answer among them is selected.
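The prompting styles named above differ mainly in how the input text is assembled. The sketch below builds each kind of prompt as a plain string and shows a majority vote as one simple way to pick a self-consistent answer. The function names, wording of the prompts, and example questions are illustrative assumptions, and no model is called.

```python
from collections import Counter

def zero_shot(question):
    """Ask the question directly, with no worked examples."""
    return f"Question: {question}\nAnswer:"

def few_shot(question, examples):
    """Prepend a few solved examples before the new question."""
    shots = "\n\n".join(f"Question: {q}\nAnswer: {a}" for q, a in examples)
    return f"{shots}\n\nQuestion: {question}\nAnswer:"

def chain_of_thought(question):
    """Ask the model to reason step by step before answering."""
    return (f"Question: {question}\n"
            "Let's think step by step, then state the final answer.\nAnswer:")

def self_consistent_answer(sampled_answers):
    """Majority vote over final answers from several sampled responses."""
    return Counter(sampled_answers).most_common(1)[0][0]

examples = [("Which vitamin deficiency causes scurvy?", "Vitamin C")]
question = "Which electrolyte disturbance is associated with peaked T waves?"
prompt = few_shot(question, examples)

# Hypothetical final answers extracted from five sampled responses.
chosen = self_consistent_answer(["B", "A", "B", "B", "C"])
```

In practice the strings would be sent to a model and the sampled answers would come from repeated generations; the sketch only shows how the inputs and the vote are structured.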

Another promising technique is instruction prompt tuning, which builds on the prompt tuning approach introduced by Lester et al. [35]. It provides a cost-effective way to adapt a model by learning a small number of additional parameters, thereby improving performance in many downstream medical tasks. This approach offers significant benefits over few-shot prompting, particularly for clinical applications, as demonstrated by Singhal et al. [12]. Overall, these methods augment the core training processes of fine-tuned models and can enhance their alignment with medical tasks, as recently shown with the Flan-PaLM model [12]. As these models continue to evolve, understanding their training methodologies will serve as a good foundation for discussing their current capabilities and future applications.
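A rough calculation shows why prompt tuning is considered inexpensive. In the prompt tuning setup described by Lester et al., the model weights are typically kept frozen and only a short sequence of prompt vectors is learned. The prompt length and embedding size below are illustrative assumptions, paired with the 7-billion-parameter lower bound quoted earlier for the LLaMA family.

```python
def prompt_tuning_cost(prompt_length, embedding_dim, total_model_parameters):
    """Count learned prompt parameters and their share of the full model."""
    prompt_parameters = prompt_length * embedding_dim
    fraction = prompt_parameters / total_model_parameters
    return prompt_parameters, fraction

# Assumed values for illustration only.
prompt_parameters, fraction = prompt_tuning_cost(
    prompt_length=100,                     # number of learned prompt vectors
    embedding_dim=4096,                    # size of each vector
    total_model_parameters=7_000_000_000,  # a 7-billion-parameter model
)
```

Under these assumptions, only a few hundred thousand values are trained, a tiny fraction of what full fine-tuning would update.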

Figure 1. Overview of the LLM training process. LLMs 'learn' from more focused inputs at each stage of the training process. The first phase of this learning is pre-training, where the LLM can be trained on a mix of unlabeled data and proprietary data without any human supervision. The second phase is fine-tuning, where narrower datasets and human feedback are introduced as inputs to the base model. The fine-tuned model can then enter an additional phase, where humans with specialized knowledge apply prompting techniques that adapt the LLM to perform specialized tasks.

3. Overview of current medical-LLMs

Prior to the emergence of LLMs, natural language processing challenges were tackled by more rudimentary language models such as statistical language models (SLM) and neural language models (NLM) [6], which had significantly fewer parameters and were trained on relatively small datasets. These predecessor models lacked the emergent capabilities of modern LLMs, such as reasoning and in-context learning. The advent of the Transformer architecture was a pivotal point, heralding the age of multifaceted LLMs we see today. Specialized datasets are often used to evaluate an LLM's performance on medical tasks, typically an array of question-answering (QA) benchmarks such as MedMCQA and PubMedQA [12,36] (see Glossary), and more novel ones such as MultiMedBench [37].
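Scoring on a multiple-choice QA benchmark usually comes down to comparing the model's chosen options against an answer key. The sketch below assumes answers are single option letters; the predictions and key are invented and are not drawn from MedMCQA or PubMedQA.

```python
def accuracy(predictions, answer_key):
    """Fraction of questions where the predicted option matches the key."""
    if len(predictions) != len(answer_key):
        raise ValueError("predictions and answer_key must have the same length")
    if not answer_key:
        raise ValueError("answer_key must not be empty")
    correct = sum(p == a for p, a in zip(predictions, answer_key))
    return correct / len(answer_key)

# Hypothetical model outputs and reference answers for five questions.
predictions = ["A", "C", "B", "D", "A"]
answer_key = ["A", "C", "D", "D", "B"]
score = accuracy(predictions, answer_key)
```

Benchmark results reported for medical LLMs are typically aggregates of this kind, so they describe performance on fixed question sets rather than behavior in open-ended clinical use.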

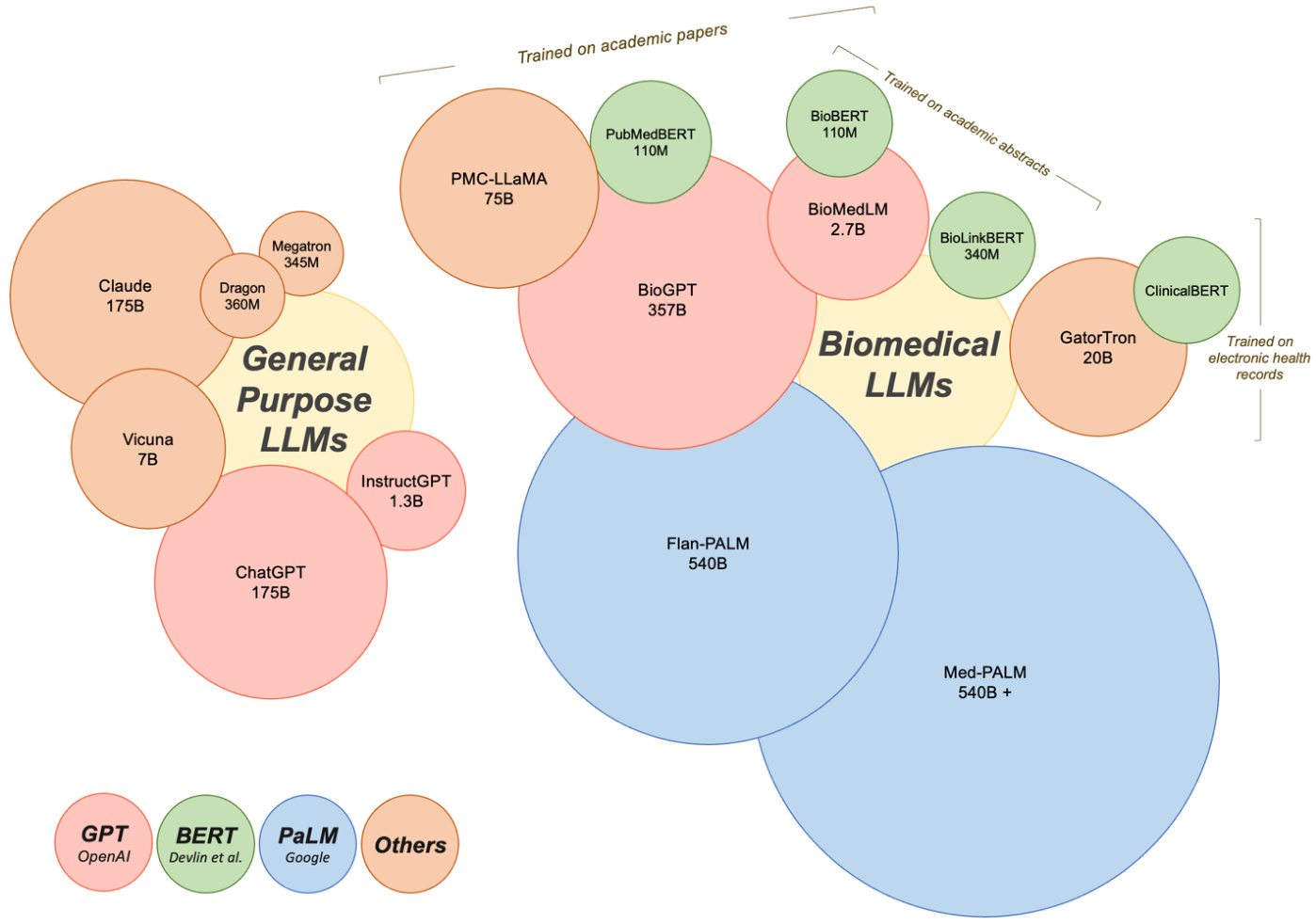

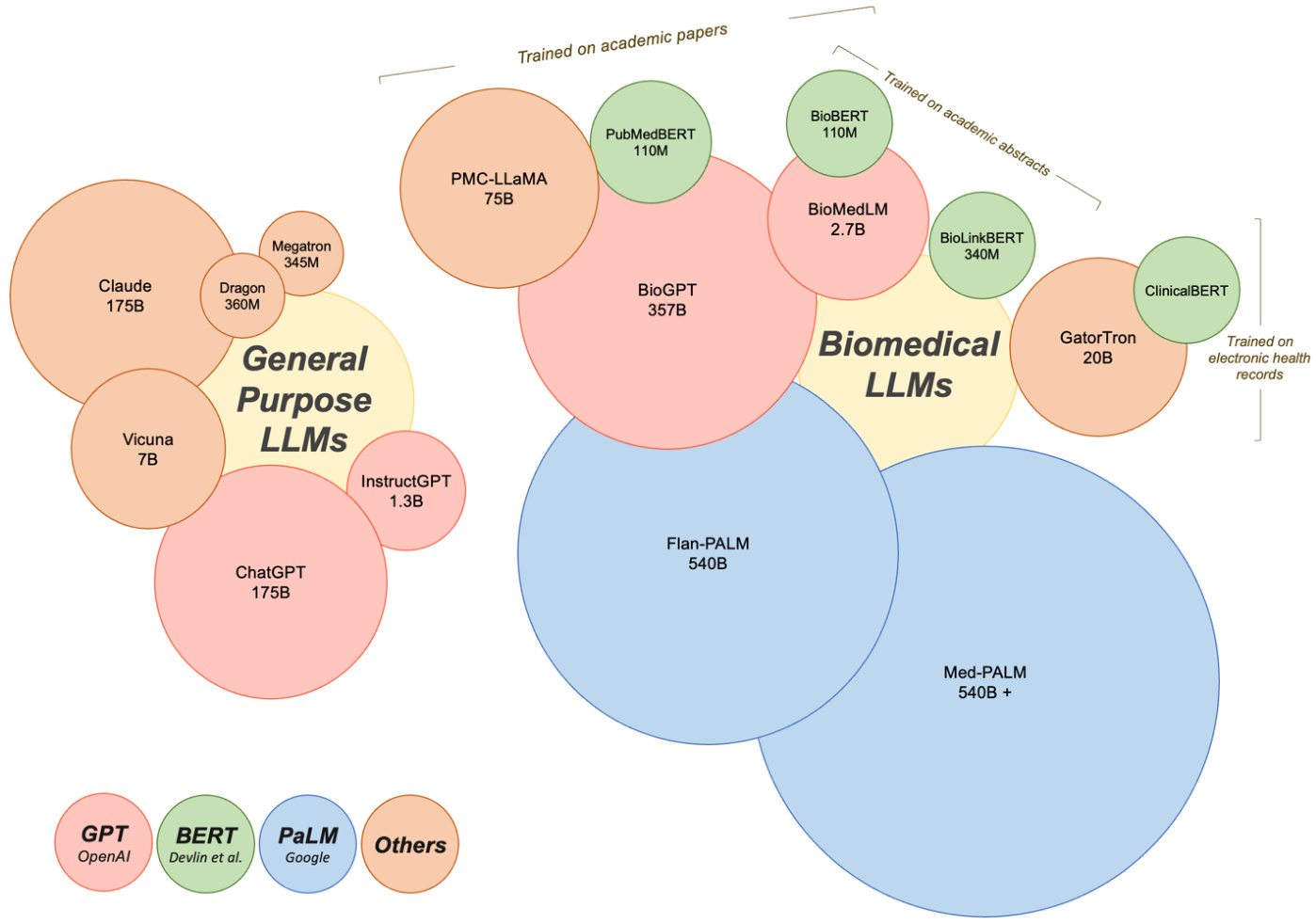

In this section, we will provide an overview of general-purpose LLMs, with a specific emphasis on those that have been applied to tasks within the medical field. Additionally, we'll delve into domain-specific LLMs, referring to models that have been either pre-trained or uniquely fine-tuned using medical literature (Figure 2).

● Generative Pre-trained Transformers (GPT): Arguably the most popular general-purpose LLMs are those of the GPT lineage, largely due to their chat-facing product. First developed by OpenAI in 2018 [38], the GPT series has scaled significantly in recent years, with the latest version, GPT-4, speculated to possess significantly more parameters than its predecessors. The growth from a mere 0.12B parameters in GPT-1 speaks to the enormous strides made in this area. GPT-4 represents a leap forward in its ability to handle multimodal input such as images, text, and audio, an attribute that aligns well with the multifaceted nature of medical practice. Novel prompting techniques were introduced with the GPT models, paving the way for the popular ChatGPT product, which is based on GPT-3.5 and GPT-4. ChatGPT has demonstrated its utility in various medical scenarios discussed later in this paper [8,39–41]. Some studies have concentrated on evaluating the healthcare utility of ChatGPT and InstructGPT, while others have focused on fine-tuning GPT models for specific medical tasks. For instance, Luo et al. introduced BioGPT, a model built on the GPT-2 framework and pre-trained on 15 million PubMed abstracts for tasks including question answering (QA), relation extraction, and document classification. Their model outperformed the state-of-the-art models across all evaluated tasks. In a similar vein, BioMedLM 2.7B (formerly known as PubMedGPT), pre-trained on both PubMed abstracts and full texts [42], demonstrates the continued advancements in this field. Some researchers have even leveraged GPT-4 to create multimodal medical LLMs, reporting promising results [43,44].

● Bidirectional Encoder Representations from Transformers (BERT): Another prominent category of language models that warrants discussion is the BERT family. First introduced by Devlin and colleagues, BERT was unique due to its focus on understanding sentences through bidirectional training of the model, compared to previous models that used context from one side only [45]. For medical tasks, researchers have developed domain-specific versions of BERT tailored to scientific and clinical text. BioBERT incorporates biomedical corpus data from PubMed abstracts and PubMed Central articles during pre-training [46]. PubMedBERT follows a similar methodology using just PubMed abstracts [47]. ClinicalBERT adapts BERT for clinical notes and was trained on the large MIMIC-III dataset of electronic health records [48]. More recent work has focused on enhancing BERT for specific applications. BioLinkBERT adds entity linking to connect biomedical concepts in text to ontologies. These extensions showcase how baseline BERT architectures can be customized for medicine. With proper tuning, BERT-based LLMs have demonstrated potential to augment various medical tasks.

● Pathways Language Model (PaLM): Developed by Google, this model represents one of the largest LLMs to date. Researchers first fine-tuned PaLM for medical QA, creating Flan-PaLM [50], which achieved state-of-the-art results on QA benchmarks. Building on this, the Med-PaLM model was produced via instruction tuning, demonstrating strong capabilities in clinical knowledge, scientific consensus, and medical reasoning [12]. This has recently been extended to create a multimodal medical LLM [37]. PaLM-based models underscore the utility of large foundation models fine-tuned for medicine.

Beyond big tech companies, other proprietary and open-source medical LLMs have emerged. Models trained from scratch on clinical corpora, such as GatorTron, have shown improved performance on certain tasks compared to general-domain LLMs. Claude, developed by Anthropic, has been evaluated on medical biases and other safety issues for clinical applications [13]. Active open-source projects are also contributing to the medical LLM field. For example, PMC-LLaMA leverages the LLaMA model and incorporates biomedical papers in its pre-training [52]. Other popular base models such as DRAGON [53], Megatron [54], and Vicuna [55] have enabled the development of multimodal LLMs incorporating visual data [43].

Overall, domain-specific pre-training on medical corpora produces models that excel on biomedical tasks compared to generalist LLMs (with some exceptions like GPT-4). However, fine-tuning approaches for adapting general models like BERT and GPT-3 have achieved strong results on medical tasks in a more computationally efficient manner. This is promising given the challenges of limited medical data for training.

As LLMs continue to scale up with larger parameter counts, they appear likely to implicitly learn useful biological and clinical knowledge, evidenced by models like Med-PaLM demonstrating improved accuracy, calibration, and physician-like responses. While not equal to real clinical expertise, these characteristics highlight the growing potential of LLMs in medicine and healthcare.
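Calibration, mentioned above alongside accuracy, asks whether a model's stated confidence matches how often it is right. One common summary is the expected calibration error (ECE); the sketch below is a generic version of that metric with invented data, and it is not taken from the Med-PaLM evaluation.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Average gap between confidence and accuracy, weighted by bin size."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Place each prediction in an equal-width confidence bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        acc = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(avg_conf - acc)
    return ece

# Hypothetical confidences and whether each answer was correct.
confidences = [0.95, 0.90, 0.85, 0.60, 0.55]
correct = [True, True, False, True, False]
ece = expected_calibration_error(confidences, correct)
```

A value near zero means the confidence scores can be read roughly as probabilities of being correct; larger values indicate over- or under-confidence.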

Figure 2. Current LLMs in medicine. Both general-purpose and biomedical LLMs are currently used for medical tasks. While GPT by OpenAI, BERT by Devlin and colleagues, and PaLM by Google have led the development of LLMs with applications in medicine, other proprietary and open-source LLMs also exist in this space. Circle sizes reflect the model size and the number of parameters used to build the models. LLMs with applications in medicine vary widely in how they were trained. For example, the GPT-based BioMedLM 2.7B was trained on the corpus of PubMed articles and abstracts, whereas ClinicalBERT was trained specifically on electronic health records. These differences in training and development can have important implications for how LLMs perform in certain medical scenarios.

4. LLM in medical tasks:

4.1 Overview of LLM in medicine

Given the rapid advances in LLMs, a substantial body of research has already explored their use in medicine, ranging from answering patient questions in cardiology [10] to serving as a support tool in tumor boards [15] to aiding researchers in academia [56,57]. A brief PubMed search for "ChatGPT" returned more than 800 results, showing the rapid exploration and adoption of this technology.

Prior to ChatGPT, many patients had been using the internet to learn more about their health conditions [58,59]. When ChatGPT surfaced, one of the first uses of the language model was answering patient questions. In cardiology, researchers found that ChatGPT was able to respond adequately to prevention questions, suggesting that LLMs could help augment patient education and patient-clinician communication [10]. Similarly, researchers have explored ChatGPT's responses to common patient questions about hip replacements [11], radiology report findings [60], and management of venomous snake bites [61]. Additionally, there has been interest in using ChatGPT to help translate medical texts and clinical encounters, with the objective of improving patient communication and satisfaction [62]. These findings suggest a potential for bridging gaps in patient education; however, additional testing is needed to ensure fairness and accuracy.

In addition to augmenting patient education, researchers are exploring the use of LLMs as clinical workflow support tools. One study evaluated ChatGPT's recommendations for next-step management in breast cancer tumor boards, which frequently review the most complex clinical cases [15]. Other studies explored the use of ChatGPT in responding to patient portal messages [40], creating discharge summaries [41], writing operative notes [63], and generating structured templates for radiology [60]. While these studies suggest opportunities for mitigating the documentation burden facing physicians, rigorous real-world evaluation should be completed prior to any clinical use.

Aside from uses in clinical medicine, LLMs are being utilized in medical education and academia. Multiple researchers have explored the ability of LLMs to conduct radiation oncology physics calculations [64], answer USMLE-style medical board questions [9], and respond to clinical vignettes [65]. The ability of this technology to achieve passing scores on these medical exams raises questions about the need to revise medical curricula and practices [66]. Other programs have started exploring the generative ability of LLMs to create multiple-choice questions for student exams [67]. In academia, there is growing interest in the ability of LLMs to aid researchers, ranging from topic brainstorming to writing journal articles [56,68], resulting in a rising debate on the ethics and usage of LLMs in academic writing.

4.2 Proposed tasks of LLM in medicine:

As is evident from the abundance of work already done with LLMs, this technology can aid clinicians with a wide range of tasks, from administrative work to gathering and enhancing medical knowledge (Table 1).

Table 1. Analysis of possible large language models tasks in medicine

| Task | Potential Pitfalls | Mitigation Strategies |
|---|---|---|
| **Administrative:** write insurance authorization letters; summarize medical notes; aid medical record documentation; create patient communication | **Lack of HIPAA compliance:** No publicly available model is currently HIPAA compliant, and thus PHI cannot be shared with the models. | Integrate LLMs within electronic health record systems. |
| **Augmenting knowledge:** answer diagnostic questions; answer questions about medical management; create and translate patient materials | **Inherent bias:** Pre-trained data models used for diagnostic analyses will introduce inherent bias. | Create domain-specific models that are trained on carefully curated datasets. Always include a human in the loop. |
| **Medical education:** write recommendation letters; create new exam questions and case-based scenarios; generate summaries of medical text at a student level | **Lack of personalization:** LLMs generate content from previously published work, resulting in repetitive and unoriginal output. | Educate clinicians and users to use LLM tools to augment their work rather than replace it. Encourage an understanding of how the technology works to mitigate unrealistic expectations of its output. |
| **Research:** generate research ideas and novel directions; write academic papers; write grants | **Ethics:** There has been extensive discussion in the scientific community on the ethics of using ChatGPT to generate scientific publications. This also raises the question of accessibility and the potential difficulties of future access to this technology. | Engage in conversation to increase the accessibility of this technology and prevent widening research disparities. |

5. Limitations and mitigation strategies:

While researchers have demonstrated the feasibility of using LLMs in medicine, these preliminary studies also have many limitations, emphasizing the need for future research and analysis. As summarized in Table 1, there are many potential pitfalls that clinicians using this technology need to be aware of. Key challenges posed by LLMs include issues related to accuracy, bias, model inputs and outputs, and privacy and ethical concerns. By understanding and addressing these limitations, researchers can foster responsible development and usage of these models to create a more equitable and trustworthy ecosystem.

5.1 Accuracy issues and dataset bias

Models are only as accurate as the datasets that are used to train them, resulting in a reliance on the accuracy and completeness of the data. LLMs are trained on large datasets that have long surpassed the ability of human teams to manually quality check. This results in a model that is trained on a nebulous dataset that may further decrease user trust in these algorithms. Due to the inability to quality che