EDITABLE NEURAL NETWORKS

Anton Sinitsin1∗ ant.sinitsin@gmail.com

Vsevolod Plokhotnyuk2∗ vsevolod-pl@yandex.ru

Dmitriy Pyrkin2∗ alagaster@yandex.ru

Sergei Popov1,2 popovsergey95@gmail.com

Artem Babenko1,2 artem.babenko@phystech.edu

ABSTRACT

Deep neural networks are now used in a wide range of tasks, from image classification and machine translation to face identification and self-driving cars. In many applications, a single model error can lead to devastating financial, reputational and even life-threatening consequences. Therefore, it is crucially important to correct model mistakes quickly as they appear. In this work, we investigate the problem of neural network editing: how one can efficiently patch a mistake of the model on a particular sample without influencing the model behavior on other samples. Namely, we propose Editable Training, a model-agnostic training technique that encourages fast editing of the trained model. We empirically demonstrate the effectiveness of this method on large-scale image classification and machine translation tasks.

1 INTRODUCTION

Deep neural networks match and often surpass human performance on a wide range of tasks including visual recognition (Krizhevsky et al. (2012); D. C. Ciresan (2011)), machine translation (Hassan et al. (2018)) and others (Silver et al. (2016)). However, just like humans, artificial neural networks sometimes make mistakes. As we trust them with more and more important decisions, the cost of such mistakes grows ever higher. A single misclassified image can be negligible in academic research but can be fatal for a pedestrian in front of a self-driving vehicle. A poor automatic translation of a single sentence can get a person arrested (Hern (2018)) or ruin a company's reputation.

Since mistakes are inevitable, deep learning practitioners should be able to adjust model behavior by correcting errors as they appear. However, this is often difficult due to the nature of deep neural networks. In most network architectures, a prediction for a single input depends on all model parameters. Therefore, updating a neural network to change its predictions on a single input can decrease performance across other inputs.

Currently, there are two workarounds commonly used by practitioners. First, one can re-train the model on the original dataset augmented with samples that account for the mistake. However, this is computationally expensive, as it requires training from scratch. Another solution is to use a manual cache (e.g. a lookup table) that overrules model predictions on problematic samples. While simple, this approach is not robust to minor changes in the input. For instance, it will not generalize to a different viewpoint of the same object or to paraphrasing in natural language processing tasks.

In this work, we present an alternative approach that we call Editable Training. This approach involves training neural networks in such a way that the trained parameters can be easily edited afterwards. Editable Training employs modern meta-learning techniques (Finn et al. (2017)) to ensure that the model's mistakes can be corrected without harming its overall performance. With thorough experimental evaluation, we demonstrate that our method works on both small academic datasets and industry-scale machine learning tasks. We summarize the contributions of this study as follows:

2 RELATED WORK

In this section, we aim to position our approach with respect to existing literature. Namely, we explain the connections of Editable Neural Networks with ideas from prior works.

Meta-learning is a family of methods that aim to produce learning algorithms appropriate for a particular machine learning setup. These methods were shown to be extremely successful in a large number of problems, such as few-shot learning (Finn et al. (2017); Nichol et al. (2018)), learnable optimization (Andrychowicz et al. (2016)) and reinforcement learning (Houthooft et al. (2018)). Indeed, Editable Neural Networks also belong to the meta-learning paradigm, as they basically "learn to allow effective patching". While neural network correction has significant practical importance, we are not aware of published meta-learning works addressing this problem.

Catastrophic forgetting is a well-known phenomenon arising in the problem of lifelong/continual learning (Ratcliff (1990)). For a sequence of learning tasks, it turns out that after deep neural networks learn on newer tasks, their performance on older tasks deteriorates. Several lines of research address overcoming catastrophic forgetting. The methods based on Elastic Weight Consolidation (Kirkpatrick et al. (2016)) update model parameters based on their importance to the previous learning tasks. The rehearsal-based methods (Robins (1995)) occasionally repeat learning on samples from earlier tasks to "remind" the model about old data. Finally, a line of work (Garnelo et al. (2018); Lin et al. (2019)) develops specific neural network architectures that reduce the effect of catastrophic forgetting. The problem of efficient neural network patching differs from continual learning, as our setup is not sequential in nature. However, correcting the model on mislabeled samples must not affect its behavior on other samples, which is close to the task of overcoming catastrophic forgetting.

Adversarial training. The proposed Editable Training also bears some resemblance to adversarial training (Goodfellow et al. (2015)), which is the dominant approach to defending against adversarial attacks. The important difference here is that Editable Training aims to learn models whose behavior on some samples can be efficiently corrected. Meanwhile, adversarial training produces models that are robust to certain input perturbations. However, in practice one can use Editable Training to efficiently cover model vulnerabilities against both synthetic (Szegedy et al. (2013); Yuan et al. (2017); Ebrahimi et al. (2017); Wallace et al. (2019)) and natural (Hendrycks et al. (2019)) adversarial examples.

3 EDITING NEURAL NETWORKS

In order to measure and optimize the model's ability for editing, we first formally define the operation of editing a neural network. Let $f(x,\theta)$ be a neural network, with $x$ denoting its input and $\theta$ being a set of network parameters. The parameters $\theta$ are learned by minimizing a task-specific objective function $\mathcal{L}_{base}(\theta)$, e.g. cross-entropy for multi-class classification problems.

Then, if we discover mistakes in the model's behavior, we can patch the model by changing its parameters $\theta$. Here we aim to change the model's predictions on a subset of inputs, corresponding to misclassified objects, without affecting other inputs. We formalize this goal using the editor function: $\hat{\theta}=\mathrm{Edit}(\theta,l_{e})$. Informally, this is a function that adjusts $\theta$ to satisfy a given constraint $l_{e}(\hat{\theta})\leq0$, whose role is to enforce desired changes in the model's behavior.

For instance, in the case of multi-class classification, $l_{e}$ can guarantee that the model assigns input $x$ to the desired label $y_{ref}$: $l_{e}(\hat{\theta})=\max_{y_{i}}\log p(y_{i}|x,\hat{\theta})-\log p(y_{ref}|x,\hat{\theta})$. Under this definition of $l_{e}$, the constraint $l_{e}(\hat{\theta})\leq0$ is satisfied iff $\arg\max_{y_{i}}\log p(y_{i}|x,\hat{\theta})=y_{ref}$.
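
The classification constraint above can be sketched in a few lines. This is a minimal illustration only: the function names `log_softmax` and `edit_constraint`, and the use of plain Python lists for logits, are assumptions made for this example and do not come from the paper.

```python
import math

def log_softmax(logits):
    """Numerically stable log-softmax over a list of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]

def edit_constraint(logits, y_ref):
    """l_e = max_i log p(y_i | x) - log p(y_ref | x).

    The value is never negative, and it equals 0 exactly when y_ref
    attains the maximum log-probability.
    """
    log_p = log_softmax(logits)
    return max(log_p) - log_p[y_ref]

logits = [2.0, 0.5, -1.0]                     # hypothetical model outputs for one input x
violated = edit_constraint(logits, y_ref=1)   # positive: class 1 is not the prediction
satisfied = edit_constraint(logits, y_ref=0)  # zero: class 0 is already the prediction
```

Since the log-partition term cancels in the difference, `violated` here is simply the gap between the top logit and the logit of the desired class.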

To be practically feasible, the editor function must meet three natural requirements:

Intuitively, editor locality aims to minimize changes in the model's predictions for inputs unrelated to $l_{e}$. For a classification problem, this requirement can be formalized as minimizing the difference between the model's predictions over the "control" set $X_{c}$: $\mathbb{E}_{x\in X_{c}}\#[f(x,\hat{\theta})\neq f(x,\theta)]\rightarrow\min$.
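
As a small illustration of this locality measure, the sketch below computes the fraction of control inputs whose predicted label changes after an edit. The function name `prediction_change_rate` and the example label lists are hypothetical.

```python
def prediction_change_rate(preds_before, preds_after):
    """Fraction of control inputs whose predicted label changed after the edit."""
    if len(preds_before) != len(preds_after):
        raise ValueError("prediction lists must have equal length")
    if not preds_before:
        raise ValueError("control set must not be empty")
    changed = sum(1 for a, b in zip(preds_before, preds_after) if a != b)
    return changed / len(preds_before)

before = [0, 1, 2, 2, 1]   # labels predicted with the original parameters
after = [0, 1, 2, 0, 1]    # labels predicted with the edited parameters
rate = prediction_change_rate(before, after)
```

A perfectly local edit would leave every control prediction unchanged, giving a rate of zero.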

3.1 GRADIENT DESCENT EDITOR

A natural way to implement $\mathrm{Edit}(\theta,l_{e})$ for deep neural networks is using gradient descent. Parameters $\theta$ are shifted against the gradient direction by $-\alpha\nabla_{\theta}l_{e}(\theta)$ for several iterations until the constraint $l_{e}(\theta)\leq0$ is satisfied. We formulate the SGD editor with up to $k$ steps and learning rate $\alpha$ as:

$$
\mathrm{Edit}_{\alpha}^{k}(\theta, l_{e}, k) = \begin{cases}\theta, & \text{if } l_{e}(\theta) \leq 0 \text{ or } k = 0 \\ \mathrm{Edit}_{\alpha}^{k-1}(\theta - \alpha \cdot \nabla_{\theta} l_{e}(\theta), l_{e}, k-1), & \text{otherwise}\end{cases} \tag{1}
$$
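
The recursion in (1) can be unrolled into a loop. The sketch below is an illustrative assumption rather than the authors' implementation: `gd_edit` takes the constraint and its gradient as callables, and the two-class linear model used to exercise it is a toy chosen so that the result can be checked by hand.

```python
def gd_edit(theta, constraint, grad, alpha, k):
    """Apply up to k gradient steps, stopping once constraint(theta) <= 0.

    Returns the edited parameters and the number of steps actually taken.
    """
    theta = list(theta)
    steps = 0
    while steps < k and constraint(theta) > 0:
        g = grad(theta)
        theta = [t - alpha * gi for t, gi in zip(theta, g)]
        steps += 1
    return theta, steps

# Toy two-class linear model (hypothetical): the logit of class i is theta[i] * x.
x, y_ref = 1.0, 1

def l_e(theta):
    logits = [t * x for t in theta]
    # The log-partition term cancels, so the constraint is a logit difference.
    return max(logits) - logits[y_ref]

def grad_l_e(theta):
    logits = [t * x for t in theta]
    top = logits.index(max(logits))
    g = [0.0, 0.0]
    g[top] += x      # gradient of the max term
    g[y_ref] -= x    # gradient of the -log p(y_ref) term
    return g

edited, steps = gd_edit([1.0, 0.0], l_e, grad_l_e, alpha=0.3, k=10)
```

In this toy case the constraint starts at 1.0, drops to 0.4 after one step, and is satisfied after the second step, so the loop exits well before the budget of `k` steps is spent.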

The standard gradient descent editor can be further augmented with momentum, adaptive learning rates (Duchi et al. (2010); Zeiler (2012)) and other popular deep learning tricks (Kingma & Ba (2014); Smith & Topin (2017)). One technique that we found practically useful is Resilient Backpropagation: RProp, SignSGD by Bernstein et al. (2018) or RMSProp by Tieleman & Hinton (2012). We observed that these methods produce more robust weight updates that improve locality.

3.2 EDITABLE TRAINING

The core idea behind Editable Training is to make the model parameters $\theta$ "prepared" for the editor function. More formally, we want to learn parameters $\theta$ such that the editor $\mathrm{Edit}(\theta,l_{e})$ is reliable, local and efficient, as defined above.

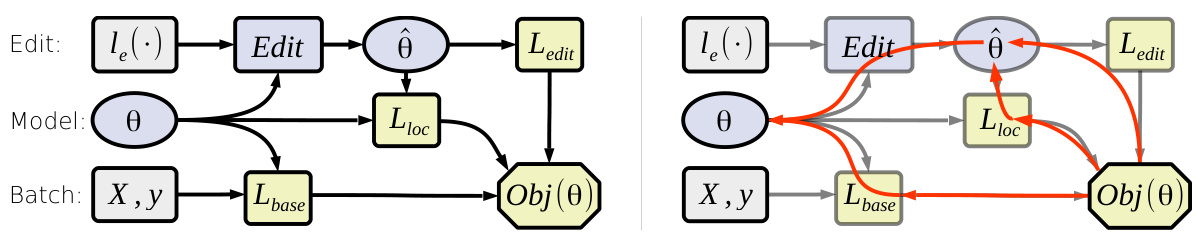

Our training procedure exploits the fact that the Gradient Descent Editor (1) is differentiable w.r.t. $\theta$. This well-known observation (Finn et al. (2017)) allows us to optimize through the editor function directly via backpropagation (see Figure 1).

Figure 1: A high-level scheme of editable training: (left) forward pass, (right) backward pass.
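
To make the idea of differentiating through the editor concrete, the following sketch works through a one-parameter toy case and checks the chain rule against a finite difference. Everything here is an assumption for illustration: the quadratic surrogate $(\theta - t)^2$ stands in for $l_{e}$, the outer loss is simply the square of the edited parameter, and a single unconditional gradient step replaces the up-to-$k$-step editor.

```python
def edit_one_step(theta, alpha, target):
    """One gradient step on the toy surrogate l_e(theta) = (theta - target)**2."""
    grad = 2.0 * (theta - target)
    return theta - alpha * grad

def outer_loss(theta, alpha, target):
    """Loss evaluated on the edited parameter (here simply edited**2)."""
    edited = edit_one_step(theta, alpha, target)
    return edited ** 2

def outer_grad(theta, alpha, target):
    """Chain rule through the edit: d(edited)/d(theta) = 1 - 2 * alpha.

    The -2 * alpha term comes from the second derivative of the surrogate,
    which is why training through the editor needs second-order information.
    """
    edited = edit_one_step(theta, alpha, target)
    return 2.0 * edited * (1.0 - 2.0 * alpha)

theta, alpha, target, h = 0.8, 0.1, 2.0, 1e-6
analytic = outer_grad(theta, alpha, target)
numeric = (outer_loss(theta + h, alpha, target)
           - outer_loss(theta - h, alpha, target)) / (2 * h)
```

In a real network the same derivative would be obtained by automatic differentiation rather than by hand.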

Editable Training is performed on minibatches of constraints $l_{e}\sim p(l_{e})$ (e.g. images and target labels). First, we compute the edited parameters $\hat{\theta}=\mathrm{Edit}(\theta,l_{e})$ by applying up to $k$ steps of gradient descent (1). Second, we compute the objective that measures locality and efficiency of the editor function:

$$
\begin{aligned}
\mathrm{Obj}(\theta,l_{e}) &= \mathcal{L}_{base}(\theta)+c_{edit}\cdot\mathcal{L}_{edit}(\theta)+c_{loc}\cdot\mathcal{L}_{loc}(\theta) \\
\mathcal{L}_{edit}(\theta) &= \max(0,\, l_{e}(\mathrm{Edit}_{\alpha}^{k}(\theta,l_{e}))) \\
\mathcal{L}_{loc}(\theta) &= \mathbb{E}_{x\sim p(x)}\, D_{KL}\big(p(y|x,\theta)\,\|\,p(y|x,\mathrm{Edit}_{\alpha}^{k}(\theta,l_{e}))\big)
\end{aligned} \tag{2}
$$

Intuitively, $\mathcal{L}_{edit}(\theta)$ encourages reliability and efficiency of the editing procedure by making sure the constraint is satisfied within $k$ gradient steps. The final term $\mathcal{L}_{loc}(\theta)$ is responsible for locality by minimizing the KL divergence between the predictions of the original and edited models.
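
The three terms of the objective can be combined as in the sketch below. It is an illustration under stated assumptions: the function names are hypothetical, predictions are given as lists of strictly positive class probabilities, the expectation over $x$ is approximated by a mean over a small sample, and the value used for $c_{edit}$ is made up for the example.

```python
import math

def kl_divergence(p, q):
    """D_KL(p || q) for discrete distributions; assumes q has no zero entries."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def editable_objective(base_loss, l_e_after_edit, probs_before, probs_after,
                       c_edit, c_loc):
    """Obj = L_base + c_edit * max(0, l_e(edited)) + c_loc * mean KL(before || after)."""
    loss_edit = max(0.0, l_e_after_edit)
    loss_loc = sum(kl_divergence(p, q) for p, q in zip(probs_before, probs_after))
    loss_loc /= len(probs_before)
    return base_loss + c_edit * loss_edit + c_loc * loss_loc

# Successful edit that leaves control predictions untouched: only L_base remains.
same = [[0.5, 0.5], [0.9, 0.1]]
obj_ideal = editable_objective(0.7, -0.3, same, same, c_edit=1.0, c_loc=0.01)

# Failed edit (l_e still positive) that also shifts one control prediction.
shifted = [[0.25, 0.75], [0.9, 0.1]]
obj_bad = editable_objective(0.7, 0.2, same, shifted, c_edit=1.0, c_loc=0.01)
```

The hinge on $l_{e}$ means that an edit which already satisfies the constraint contributes nothing to the objective, so the model is not pushed to over-satisfy it.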

We use hyperparameters $c_{edit}$, $c_{loc}$ to balance between the original task-specific objective, editor efficiency and locality. Setting both of them to large positive values would cause the model to sacrifice some of its performance for a better edit. On the other hand, sufficiently small $c_{edit},c_{loc}$ do not cause any deterioration of the main training objective while still improving the editor function in all our experiments (see Section 4). We attribute this to the fact that neural networks are typically overparameterized. Most neural networks can accommodate the edit-related properties and still have enough capacity to optimize $\mathrm{Obj}(\theta,l_{e})$. The learning rate $\alpha$ and other optimizer parameters (e.g. $\beta$ for RMSProp) are trainable parameters of Editable Training and we optimize them explicitly via gradient descent.

4 EXPERIMENTS

In this section, we extensively evaluate Editable Training on several deep learning problems and compare it to existing alternatives for efficient model patching.

4.1 TOY EXPERIMENT: CIFAR-10

First, we experiment on image classification with the small CIFAR-10 dataset with standard train/test splits (Krizhevsky et al.). The training dataset is further augmented with random crops and random horizontal flips. All models trained on this dataset follow the ResNet-18 (He et al. (2015)) architecture and use the Adam optimizer (Kingma & Ba (2014)) with default hyperparameters.

Our baseline is a ResNet-18 (He et al. (2015)) neural network trained to minimize the standard cross-entropy loss without Editable Training. This model achieves a $6.3\%$ test error rate at convergence.

Comparing editor functions. As a preliminary experiment, we compare several variations of editor functions for the baseline model without Editable Training. We evaluate each editor by applying $N{=}1000$ edits $l_{e}$. Each edit consists of an image from the test set assigned a random (likely incorrect) label chosen uniformly from 0 to 9. After $N$ independent edits, we compute the following three metrics over the entire test set:

• Drawdown: mean absolute difference of classification error before and after performing an edit. Smaller drawdown indicates better editor locality.

• Success Rate: the fraction of edits for which the editor succeeds in under $k{=}10$ gradient steps.

• Num Steps: the average number of gradient steps needed to perform a single edit.
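
The three metrics can be aggregated from per-edit records as sketched below. The record layout and the function name `summarize_edits` are hypothetical, and averaging the step count over all edits (rather than only successful ones) is an assumption, since the text does not specify it.

```python
def summarize_edits(records, base_error, k=10):
    """Aggregate per-edit results into (drawdown, success rate, mean steps).

    records: list of (error_after_edit, steps_taken, constraint_satisfied).
    base_error: test error of the unedited model.
    """
    n = len(records)
    drawdown = sum(abs(err - base_error) for err, _, _ in records) / n
    success_rate = sum(1 for _, steps, ok in records if ok and steps <= k) / n
    num_steps = sum(steps for _, steps, _ in records) / n
    return drawdown, success_rate, num_steps

# Made-up records for four independent edits of a model with 6.3% test error.
records = [
    (0.070, 3, True),
    (0.065, 2, True),
    (0.090, 10, False),   # budget exhausted without satisfying the constraint
    (0.063, 4, True),
]
drawdown, success_rate, num_steps = summarize_edits(records, base_error=0.063)
```

Each record corresponds to one independent edit applied to a fresh copy of the model, matching the evaluation protocol described above.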

| Editor Function | GD | Scaled GD | RProp | RMSProp | Momentum | Adam |
|---|---|---|---|---|---|---|
| Drawdown | 3.8% | 2.81% | 1.99% | 1.77% | 2.42% | 19.4% |
| Success Rate | 98.8% | 99.1% | 100% | 100% | 96.0% | 100% |
| Num Steps | 3.54 | 3.91 | 2.99 | 3.11 | 5.60 | 3.86 |

Table 1: Comparison of different editor functions on the CIFAR10 dataset with the baseline ResNet18 model trained without Editable Training.

• RProp: like GD, but the algorithm only uses the sign of gradients: $\theta-\alpha\cdot\mathrm{sign}(\nabla_{\theta}l_{e}(\theta))$.

• RMSProp: like GD, but the learning rate for each individual parameter is divided by $\sqrt{rms_{t}+\epsilon}$, where $rms_{0}=[\nabla_{\theta}l_{e}(\theta_{0})]^{2}$ and $rms_{t+1}=\beta\cdot rms_{t}+(1-\beta)\cdot[\nabla_{\theta}l_{e}(\theta_{t+1})]^{2}$.

• Momentum GD: like GD, but the update follows the accumulated gradient direction $\nu$: $\nu_{0}=0;\ \nu_{t+1}=\alpha\cdot\nabla_{\theta}l_{e}(\theta_{t})+\mu\cdot\nu_{t}$.

• Adam: adaptive momentum algorithm as described in Kingma & Ba (2014) with tunable $\alpha,\beta_{1},\beta_{2}$. To prevent Adam from replicating RMSProp, we restrict $\beta_{1}$ to the $[0.1, 1.0]$ range.
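
The update rules listed above can be written as single-step functions, as in the following sketch. The function names are hypothetical, parameters are plain Python lists, and two details are assumptions of this example rather than statements from the text: the RMSProp running average is initialised from the first gradient and refreshed before each later step, and the momentum update subtracts the accumulated direction $\nu$ from $\theta$.

```python
import math

def sign(v):
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def rprop_step(theta, grad, alpha):
    """theta - alpha * sign(grad), applied per parameter."""
    return [t - alpha * sign(g) for t, g in zip(theta, grad)]

def rmsprop_step(theta, grad, rms, alpha, beta, eps=1e-8):
    """Scale each parameter's step by 1 / sqrt(rms + eps).

    rms is None on the first step, in which case it is set to grad**2.
    """
    if rms is None:
        rms = [g * g for g in grad]
    else:
        rms = [beta * r + (1 - beta) * g * g for r, g in zip(rms, grad)]
    new_theta = [t - alpha * g / math.sqrt(r + eps)
                 for t, g, r in zip(theta, grad, rms)]
    return new_theta, rms

def momentum_step(theta, grad, nu, alpha, mu):
    """nu <- alpha * grad + mu * nu, then theta <- theta - nu."""
    new_nu = [alpha * g + mu * v for g, v in zip(grad, nu)]
    return [t - v for t, v in zip(theta, new_nu)], new_nu

theta, grad = [1.0, -2.0], [0.3, -4.0]
after_rprop = rprop_step(theta, grad, alpha=0.1)
after_rms, rms_state = rmsprop_step(theta, grad, None, alpha=0.1, beta=0.9)
after_mom, nu_state = momentum_step(theta, grad, [0.0, 0.0], alpha=0.1, mu=0.9)
```

On the first step the RMSProp update is nearly identical to the RProp update, because dividing a gradient by the square root of its own square leaves only its sign; the two methods differ once the running average mixes in later gradients.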

For each optimizer, we tune all hyperparameters (e.g. learning rate) to optimize locality while ensuring that the editor succeeds in under $k=10$ steps for at least $95\%$ of edits. We also tune the editor function by limiting the subset of parameters it is allowed to edit. The ResNet-18 model consists of six parts: an initial convolutional layer, followed by four "chains" of residual blocks and a final linear layer that predicts class logits. We experimented with editing the whole model as well as editing each individual "chain", leaving parameters from other layers fixed. For each editor, Table 1 reports the numbers obtained for the subset of editable parameters that yields the smallest drawdown. For completeness, we also report the drawdown of Gradient Descent and RMSProp for different subsets of editable parameters in Table 2.

| Editable Layers | Whole Model | Chain 1 | Chain 2 | Chain 3 | Chain 4 |
|---|---|---|---|---|---|
| Gradient Descent | 3.8% | 18.3% | 7.7% | 5.3% | 4.76% |
| RMSProp | 2.29% | 22.8% | 1.85% | 1.77% | 1.99% |

Table 2: Mean Test Error Drawdown when editing different ResNet18 layers on CIFAR10.

Table 1 and Table 2 demonstrate that editor locality is heavily affected by the choice of editor function even for models trained without Editable Training. Both RProp and RMSProp significantly outperform the standard Gradient Descent while Momentum and Adam show smaller gains. In fact, without the constraint $\beta_{1}>0.1$ the tuning procedure returns $\beta_{1}=0$, which makes Adam equivalent to RMSProp. We attribute the poor performance of Adam and Momentum to the fact that most methods only make a few gradient steps until convergence, so the momentum term cannot accumulate the necessary statistics.

Editable Training. Finally, we report results obtained with Editable Training. On each training batch, we use a single constraint $l_{e}(\hat{\theta})=\max_{y_{i}}\log p(y_{i}|x,\hat{\theta})-\log p(y_{ref}|x,\hat{\theta})$, where $x$ is sampled from the train set and $y_{ref}$ is a random class label (from 0 to 9). The model is then trained by directly minimizing objective (2) with $k{=}10$ editor steps and all other parameters optimized by backpropagation.

We compare our Editable Training against three baselines, which also allow efficient model correction. The first natural baseline is Elastic Weight Consolidation (Kirkpatrick et al. (2016)): a technique that penalizes the edited model with the squared difference in parameter space, weighted by the importance of each parameter. Our second baseline is a semi-parametric Deep k-Nearest Neighbors (DkNN) model (Papernot & McDaniel (2018)) that makes predictions by using $k$ nearest neighbors in the space of embeddings produced by different CNN layers. For this approach, we edit the model by flipping labels of nearest neighbors until the model predicts the correct class.

Finally, we compare to an alternative editor function inspired by Conditional Neural Processes (CNP) (Garnelo et al. (2018)) that we refer to as Editable+CNP. For this baseline, we train a specialized CNP model architecture that performs edits by adding a special condition vector to intermediate activations. This vector is generated by an additional "encoder" layer. We train the CNP model to solve the original classification problem when the condition vector is zero (hence, the model behaves as a standard ResNet18) and to minimize $\mathcal{L}_{edit}$ and $\mathcal{L}_{loc}$ when the condition vector is applied.

After tuning the CNP architecture, we obtained the best performance when the condition vector is computed with a single ResNet block that receives the image representation via activations from the third residual chain of the main ResNet-18 model. This "encoder" also conditions on the target class $y_{ref}$ with an embedding layer (lookup table) that is added to the third chain activations. The resulting procedure is the following: first, apply the encoder to the edited sample and compute the condition vector, then add this vector to the third chain activations for all subsequent inputs.

| Training Procedure | Editor Function | Editable Layers | Test Error Rate | Test Error Drawdown | Success Rate | Num Steps |

| Baseline Training | GD RMSProp | All Chain 3 | 6.3% 6.3% | 3.8% 1.77% | 98.8% 100% | 3.54 3.11 |

| Editable Cloc = 0.01 | GD GD RMSProp | All Chain 3 | 6.34% 6.28% | 1.42% 1.44% | 100% 100% | 3.39 2.82 |

| Editable Cloc = 0.1 Editable+CNP (best) Baseline Training Baseline Training | RMSProp Cond.vector GD+EWC RMSProp+EwC | Chain 3 Chain 3 Chain 3 Chain 3 | 6.31% 7.19% 6.33% 6.3% 6.3% | 0.86% 0.65% 1.06% 1.92% | 100% 100% 98.9% 100% | 4.13 4.76 n/a 3.88 |

Table 3: Editable Training of ResNet18 on CIFAR10 dataset with different editor functions.

Table 3 demonstrates two advantages of Editable Training. First, with $c_{loc}{=}0.01$ it is able to reduce drawdown (compared to models trained without Editable Training) while having no significant effect on test error rate. Second, editing Chain 3 alone is almost as effective as editing the whole model. This is important because it allows us to reduce training time, making Editable Training $\approx2.5$ times slower than baseline training. Note that Editable+CNP turned out to be almost as effective as models trained with gradient-based editors while being simpler to implement.

4.2 ANALYZING EDITED MODELS

In this section, we aim to interpret the differences between the models learned with and without Editable Training. First, we investigate which inputs are most affected when the model is edited on a sample that belongs to each particular class. Based on Figure 2 (left), we conclude that edits of the baseline model cause the most drawdown on samples that belong to the same class as the edited input (prior to the edit). However, this visualization loses information by reducing edits to their class labels.

In Figure 2 (middle) we apply t-SNE (van der Maaten & Hinton (2008)) to analyze the structure of the "edit space". Intuitively, two edited versions of the same model are considered close if they make similar predictions. We quantify this by computing the KL divergence between the model's predictions before and after the edit for each of 10,000 test samples. These KL divergences effectively form a 10,000-dimensional model descriptor. We compute these descriptors for 4,500 edits applied to models trained with and without Editable Training. These vectors are then em