Augmented Language Models: a Survey

增强型语言模型:综述

∗Meta AI †Universitat Pompeu Fabra

*Meta AI †Universitat Pompeu Fabra

Abstract

摘要

This survey reviews works in which language models (LMs) are augmented with reasoning skills and the ability to use tools. The former is defined as decomposing a potentially complex task into simpler subtasks while the latter consists in calling external modules such as a code interpreter. LMs can leverage these augmentations separately or in combination via heuristics, or learn to do so from demonstrations. While adhering to a standard missing tokens prediction objective, such augmented LMs can use various, possibly non-parametric external modules to expand their context processing ability, thus departing from the pure language modeling paradigm. We therefore refer to them as Augmented Language Models (ALMs). The missing token objective allows ALMs to learn to reason, use tools, and even act, while still performing standard natural language tasks and even outperforming most regular LMs on several benchmarks. In this work, after reviewing current advance in ALMs, we conclude that this new research direction has the potential to address common limitations of traditional LMs such as interpret ability, consistency, and s cal ability issues.

本综述回顾了语言模型 (LM) 在推理能力和工具使用能力方面的增强研究。前者被定义为将潜在复杂任务分解为更简单的子任务,后者则涉及调用外部模块(如代码解释器)。语言模型可通过启发式方法单独或组合利用这些增强能力,或通过示范学习掌握相关技能。尽管仍遵循缺失token预测的基本目标,此类增强型语言模型能够利用各种可能非参数化的外部模块来扩展其上下文处理能力,从而脱离纯粹的语言建模范式。因此我们将其称为增强型语言模型 (ALM)。缺失token预测目标使ALMs能够学习推理、使用工具甚至执行动作,同时仍能完成标准自然语言任务,并在多个基准测试中超越大多数常规语言模型。本文在综述ALMs最新进展后指出,这一新研究方向有望解决传统语言模型在可解释性、一致性和可扩展性等方面的常见局限。

Contents

目录

Learning to reason, use tools, and act 15

学会推理、使用工具和行动 15

5 Discussion 19

5 讨论 19

6 Conclusion 21

6 结论 21

1 Introduction: motivation for the survey and definitions

1 引言:调查动机与定义

1.1 Motivation

1.1 动机

Large Language Models (LLMs) (Devlin et al., 2019; Brown et al., 2020; Chowdhery et al., 2022) have fueled dramatic progress in Natural Language Processing (NLP) and are already core in several products with millions of users, such as the coding assistant Copilot (Chen et al., 2021), Google search engine $^{\perp}$ or more recently ChatGPT2. Memorization (Tirumala et al., 2022) combined with compositional it y (Zhou et al., 2022) capabilities made LLMs able to execute various tasks such as language understanding or conditional and unconditional text generation at an unprecedented level of performance, thus opening a realistic path towards higher-bandwidth human-computer interactions.

大语言模型 (LLMs) (Devlin等人, 2019; Brown等人, 2020; Chowdhery等人, 2022) 推动了自然语言处理 (NLP) 领域的显著进步,并已成为多个拥有数百万用户产品的核心组件,例如代码助手Copilot (Chen等人, 2021)、谷歌搜索引擎$^{\perp}$或最近的ChatGPT2。记忆能力 (Tirumala等人, 2022) 与组合性 (Zhou等人, 2022) 的结合使大语言模型能够以前所未有的性能水平执行各种任务,如语言理解、条件与非条件文本生成,从而为更高带宽的人机交互开辟了现实路径。

However, LLMs suffer from important limitations hindering a broader deployment. LLMs often provide nonfactual but seemingly plausible predictions, often referred to as hallucinations (Welleck et al., 2020). This leads to many avoidable mistakes, for example in the context of arithmetic s (Qian et al., 2022) or within a reasoning chain (Wei et al., 2022c). Moreover, many LLMs groundbreaking capabilities seem to emerge with size, measured by the number of trainable parameters: for example, Wei et al. (2022b) demonstrate that LLMs become able to perform some BIG-bench tasks $^{3}$ via few-shot prompting once a certain scale is attained. Although a recent line of work yielded smaller LMs that retain some capabilities from their largest counterpart (Hoffmann et al., 2022), the size and need for data of LLMs can be impractical for training but also maintenance: continual learning for large models remains an open research question (Scialom et al., 2022). Other limitations of LLMs are discussed by Goldberg (2023) in the context of ChatGPT, a chatbot built upon GPT3.

然而,大语言模型存在一些重要限制,阻碍了其更广泛的部署。大语言模型经常提供不真实但看似合理的预测,这种现象通常被称为幻觉 (Welleck et al., 2020)。这会导致许多本可避免的错误,例如在算术运算 (Qian et al., 2022) 或推理链 (Wei et al., 2022c) 中。此外,许多大语言模型的突破性能力似乎随着规模(以可训练参数数量衡量)而涌现:例如,Wei et al. (2022b) 证明,一旦达到特定规模,大语言模型就能通过少样本提示执行某些 BIG-bench 任务$^{3}$。尽管最近一系列研究产生了能保留最大模型部分能力的小型语言模型 (Hoffmann et al., 2022),但大语言模型的规模和数据需求对于训练和维护都可能不切实际:大型模型的持续学习仍然是一个开放的研究问题 (Scialom et al., 2022)。Goldberg (2023) 在基于 GPT3 构建的聊天机器人 ChatGPT 背景下讨论了大语言模型的其他局限性。

We argue these issues stem from a fundamental defect of LLMs: they are generally trained to perform statistical language modeling given (i) a single parametric model and (ii) a limited context, typically the $n$ previous or surrounding tokens. While $n$ has been growing in recent years thanks to software and hardware innovations, most models still use a relatively small context size compared to the potentially large context needed to always correctly perform language modeling. Hence, massive scale is required to store knowledge that is not present in the context but necessary to perform the task at hand.

我们认为这些问题源于大语言模型的一个根本缺陷:它们通常被训练为在给定 (i) 单个参数化模型和 (ii) 有限上下文(通常是前后 $n$ 个token)的情况下执行统计语言建模。尽管近年来得益于软硬件创新,$n$ 值不断增长,但与始终正确执行语言建模所需的潜在大上下文相比,大多数模型仍使用相对较小的上下文窗口。因此,需要海量规模来存储那些未出现在上下文中但对执行当前任务必需的知识。

As a consequence, a growing research trend emerged with the goal to solve these issues, slightly moving away from the pure statistical language modeling paradigm described above. For example, a line of work circumvents the limited context size of LLMs by increasing its relevance: this is done by adding information extracted from relevant external documents. Through equipping LMs with a module that retrieves such documents from a database given a context, it is possible to match certain capabilities of some of the largest LMs while having less parameters (Borgeaud et al., 2022; Izacard et al., 2022). Note that the resulting model is now non-parametric since it can query external data sources. More generally, LMs can also improve their context via reasoning strategies (Wei et al. (2022c); Taylor et al. (2022); Yang et al. (2022c) inter alia) so that a more relevant context is produced in exchange for more computation before generating an answer. Another strategy is to allow LMs to leverage external tools (Press et al. (2022); Gao et al. (2022); Liu et al. (2022b) inter alia) to augment the current context with important missing information that was not contained in the LM’s weights. Although most of these works aim to alleviate the downfalls of LMs mentioned above separately, it is straightforward to think that more systematically augmenting LMs with both reasoning and tools may lead to significantly more powerful agents. We will refer to these models as Augmented Language Models (ALMs). As this trend is accelerating, keeping track and understanding the scope of the numerous results becomes arduous. This calls for a taxonomy of ALMs works and definitions of technical terms that are used with sometimes different intents.

因此,为解决这些问题,研究趋势逐渐偏离上述纯统计语言建模范式。例如,有研究通过提升上下文相关性来规避大语言模型的有限上下文容量限制:具体做法是从相关外部文档中提取信息进行补充。通过为语言模型配备检索模块(根据上下文从数据库中获取文档),某些最大规模语言模型的能力得以用更少参数量实现 (Borgeaud et al., 2022; Izacard et al., 2022)。值得注意的是,这种模型因能查询外部数据源而成为非参数化模型。更广泛地说,语言模型还能通过推理策略优化上下文 (Wei et al. (2022c); Taylor et al. (2022); Yang et al. (2022c) 等),即在生成答案前通过额外计算产生更相关的上下文。另一种策略是让语言模型调用外部工具 (Press et al. (2022); Gao et al. (2022); Liu et al. (202b) 等),用模型权重中缺失的关键信息增强当前上下文。虽然这些研究大多单独解决上述语言模型的缺陷,但可以预见,系统性地结合推理与工具来增强语言模型,或将催生更强大的AI智能体。我们将此类模型称为增强语言模型 (ALM)。随着该趋势加速发展,追踪和理解大量成果的范围变得困难,亟需建立ALM研究的分类体系,并对存在多重含义的技术术语进行明确定义。

Definitions. We now provide definitions for terms that will be used throughout the survey.

定义。我们接下来为本综述中使用的术语提供定义。

Why jointly discussing reasoning and tools? The combination of reasoning and tools within LMs should allow to solve a broad range of complex tasks without heuristics, hence with better generalization capabilities. Typically, reasoning would foster the LM to decompose a given problem into potentially simpler subtasks while tools would help getting each step right, for example obtaining the result from a mathematical operation. Put it differently, reasoning is a way for LMs to combine different tools in order to solve complex tasks, and tools are a way to not fail a reasoning with valid decomposition. Both should benefit from the other. Moreover, reasoning and tools can be put under the same hood, as both augment the context of the LM so that it better predicts the missing tokens, albeit in a different way.

为什么需要同时讨论推理与工具?在大语言模型(LM)中结合推理与工具,应能无需启发式方法即可解决广泛复杂任务,从而获得更好的泛化能力。通常,推理会促使LM将给定问题分解为可能更简单的子任务,而工具则有助于正确执行每个步骤(例如获取数学运算结果)。换言之,推理是LM组合不同工具以解决复杂任务的方式,而工具则是确保有效分解的推理不会失败的手段。二者应能相互促进。此外,推理与工具可归为同一框架下,因为它们都以不同方式扩展了LM的上下文,从而更好地预测缺失的token。

Why jointly discussing tools and actions? Tools that gather additional information and tools that have an effect on the virtual or physical world can be called in the same fashion by the LM. For example, there is seemingly no difference between a LM outputting python code for solving a mathematical operation, and a LM outputting python code to manipulate a robotic arm. A few works discussed in the survey are already using LMs that have effects on the virtual or physical world: under this view, we can say that the LM have the potential to act, and expect important advances in the direction of LMs as autonomous agents.

为何要同时讨论工具与行动?

无论是用于收集额外信息的工具,还是能对虚拟或物理世界产生影响的工具,大语言模型(LM)都能以相同方式调用。例如,大语言模型输出用于数学运算的Python语言代码,与输出操控机械臂的Python语言代码,表面上看并无差异。本综述讨论的部分研究已使用能影响虚拟或物理世界的大语言模型:由此视角可见,大语言模型具备行动潜力,并有望在自主AI智能体方向取得重要进展。

1.2 Our classification

1.2 我们的分类

We decompose the works included in the survey under three axes. Section 2 studies works which augment LM’s reasoning capabilities as defined above. Section 3 focuses on works allowing LMs to interact with external tools and act. Finally, Section 4 explores whether reasoning and tools usage are implemented via heuristics or learned, e.g. via supervision or reinforcement. Other axes could naturally have been chosen for this survey and are discussed in Section 5. For conciseness, the survey focuses on works that combine reasoning or tools with LMs. However, the reader should keep in mind that many of these techniques were originally introduced in another context than LMs, and consult the introduction and related work section of the papers we mention if needed. Finally, although we focus on LLMs, not all works we consider employ large models, hence we stick to LMs for correctness in the remainder of the survey.

我们将调查中包含的工作分解为三个维度。第2节研究增强大语言模型推理能力的工作。第3节重点关注使大语言模型能够与外部工具交互并执行操作的工作。最后,第4节探讨推理和工具使用是通过启发式方法实现还是通过学习(例如通过监督或强化)实现。本调查本可以选择其他维度,这些将在第5节讨论。为简洁起见,本调查重点关注将推理或工具与大语言模型结合的工作。然而,读者应记住,这些技术中有许多最初是在大语言模型之外的背景下提出的,如果需要,可以参考我们提到的论文的引言和相关工作部分。最后,尽管我们关注的是大语言模型,但我们考虑的工作并非都使用大型模型,因此在调查的其余部分,我们仍使用语言模型以确保准确性。

2 Reasoning

2 推理

In general, reasoning is the ability to make inferences using evidence and logic. Reasoning can be divided into multiple types of skills such as commonsense reasoning (McCarthy et al., 1960; Levesque et al., 2012), mathematical reasoning (Cobbe et al., 2021), symbolic reasoning (Wei et al., 2022c), etc. Often, reasoning involves deductions from inference chains, called as multi-step reasoning. In the context of LMs, we will use the definition of reasoning provided in Section 1. Previous work has shown that LLMs can solve simple reasoning problems but fail at complex reasoning (Creswell et al., 2022): hence, this section focuses on various strategies to augment LM’s reasoning skills. One of the challenges with complex reasoning problems for LMs is to correctly obtain the solution by composing the correct answers predicted by it to the subproblems. For example, a LM may correctly predict the dates of birth and death of a celebrity, but may not correctly predict the age. Press et al. (2022) call this discrepancy the compositional it y gap for LMs. For the rest of this section, we discuss the works related to three popular paradigms for eliciting reasoning in LMs. Note that Huang and Chang (2022) propose a survey on reasoning in language models. Qiao et al. (2022) also propose a survey on reasoning albeit with a focus on prompting. Since our present work focuses on reasoning combined with tools, we refer the reader to Huang and Chang (2022); Qiao et al. (2022) for a more in-depth review of works on reasoning for LLMs.

一般而言,推理是指运用证据和逻辑进行推断的能力。推理可划分为常识推理 (commonsense reasoning) [McCarthy et al., 1960; Levesque et al., 2012]、数学推理 (mathematical reasoning) [Cobbe et al., 2021]、符号推理 (symbolic reasoning) [Wei et al., 2022c] 等多种技能类型。推理通常涉及从推断链中演绎,称为多步推理 (multi-step reasoning)。在大语言模型语境下,我们将采用第1节中给出的推理定义。已有研究表明,大语言模型能解决简单推理问题,但在复杂推理中表现不佳 [Creswell et al., 2022],因此本节重点探讨增强大语言模型推理能力的多种策略。

大语言模型处理复杂推理问题的挑战之一,是通过组合其对子问题的正确预测来准确获得最终解。例如,大语言模型可能正确预测某位名人的出生与逝世日期,却无法准确推算其年龄。Press et al. (2022) 将这种差异称为大语言模型的组合性鸿沟 (compositionality gap)。本节后续内容将讨论与大语言模型推理激发相关的三大主流范式研究。值得注意的是,Huang and Chang (2022) 发表了关于语言模型推理的综述研究,Qiao et al. (2022) 也发表了以提示技术 (prompting) 为核心的推理综述。由于本文聚焦工具增强型推理,建议读者参阅 Huang and Chang (2022) 和 Qiao et al. (2022) 以获取更全面的大语言模型推理研究评述。

2.1 Eliciting reasoning with prompting

2.1 通过提示激发推理

In recent years, prompting LMs to solve various downstream tasks has become a dominant paradigm (Brown et al., 2020). In prompting, examples from a downstream task are transformed such that they are formulated as a language modeling problem. Prompting typically takes one of the two forms: zero-shot, where the model is directly prompted with a test example’s input; and few-shot, where few examples of a task are prepended along with a test example’s input. This few-shot prompting is also known as in-context learning or few-shot learning. As opposed to “naive” prompting that requires an input to be directly followed by the output/answer, elicitive prompts encourage LMs to solve tasks by following intermediate steps before predicting the output/answer. Wei et al. (2022c) showed that elicitive prompting enables LMs to be better reasoners in a few-shot setting. Later, Kojima et al. (2022) showed similar ability in a zero-shot setting. We discuss them in detail in the following paragraphs.

近年来,通过提示大语言模型解决各类下游任务已成为主流范式 (Brown et al., 2020)。提示技术将下游任务样本转化为语言建模问题的形式,通常表现为两种模式:零样本(直接输入测试案例)和少样本(在测试输入前附加少量任务示例)。这种少样本提示也被称为上下文学习或少样本学习。与要求输入后直接输出答案的"朴素"提示不同,引导式提示会鼓励大语言模型通过中间推理步骤来解决问题。Wei et al. (2022c) 证实引导式提示能显著提升少样本场景下的模型推理能力,随后 Kojima et al. (2022) 在零样本场景中也观察到类似效果。我们将在下文详细讨论这些发现。

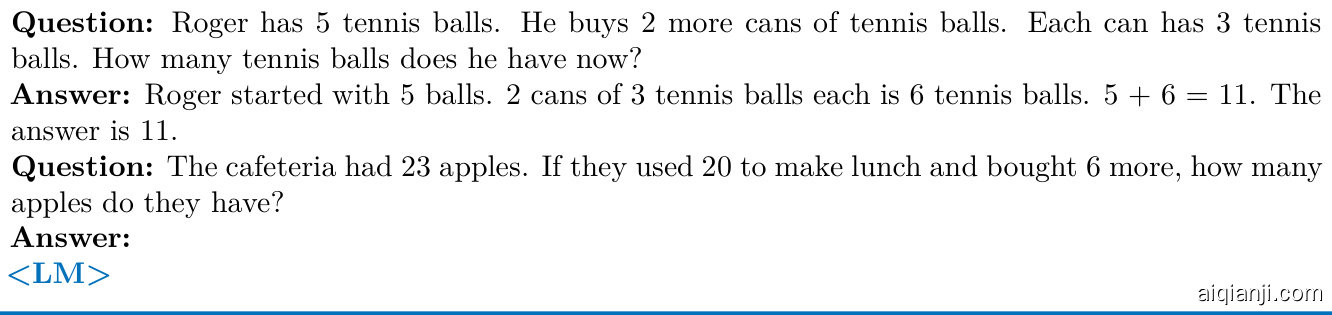

Figure 1: An example of few-shot Chain-of-Thought prompt. $<$ <LM $>$ denotes call to the LM with the above prompt.

图 1: 少样本思维链提示示例。$<$ <LM $>$ 表示使用上述提示调用大语言模型。

Few-shot setting. Wei et al. (2022c) introduced chain-of-thought (CoT), a few-shot prompting technique for LMs. The prompt consists of examples of a task, with inputs followed by intermediate reasoning steps leading to the final output, as depicted in Figure 1. Table 1 shows that CoT outperforms standard prompting methods. Wei et al. (2022b) observe that the success of the few-shot strategy emerges with scale, while Tay et al. (2022) add that without fine-tuning, successful use of CoT generally requires 100B $^+$ parameters LMs such as LaMDA (Thoppilan et al., 2022), PaLM (Chowdhery et al., 2022) or GPT3 (Brown et al., 2020; Ouyang et al., 2022), before proposing UL2, a 20B open source model that can perform CoT. Using few-shot CoT prompting, Minerva (Lewkowycz et al., 2022) achieves excellent performance on math bench- marks such as GSM8K (Cobbe et al., 2021). Wang et al. (2022c) further improve CoT with Self-consistency: diverse reasoning paths are sampled from a given language model using CoT, and the most consistent answer is selected as the final answer. Press et al. (2022) introduce Self-ask, a prompt in the spirit of CoT. Instead of providing the model with a continuous chain of thought as in Figure 1, Self-ask explicitly states the follow-up question before answering it and relies on a scaffold (e.g, “Follow-up question:” or “So the final answer is:”), so that the answers are more easily parseable. The authors demonstrate an improvement over CoT on their introduced datasets aiming at measuring the compositional it y gap. They observe that this gap does not narrow when increasing the size of the model. Note that Press et al. (2022) focus on 2-hop questions, i.e., questions for which the model only needs to compose two facts to obtain the answer. Interestingly, Self-ask can easily be augmented with a search engine (see Section 3). ReAct (Yao et al., 2022b) is another few-shot prompting approach eliciting reasoning that can query three tools throughout the reasoning steps: search and lookup in Wikipedia, and finish to return the answer. ReAct will be discussed in more detail in the next sections.

少样本设置。Wei等人(2022c)提出了思维链(CoT)技术,这是一种针对大语言模型的少样本提示方法。如图1所示,提示包含任务示例,其中输入后跟着导致最终输出的中间推理步骤。表1显示CoT优于标准提示方法。Wei等人(2022b)观察到少样本策略的成功随模型规模而显现,而Tay等人(2022)补充说,在没有微调的情况下,成功使用CoT通常需要1000亿参数以上的大语言模型,如LaMDA(Thoppilan等人,2022)、PaLM(Chowdhery等人,2022)或GPT3(Brown等人,2020;Ouyang等人,2022),之后他们提出了能够执行CoT的200亿参数开源模型UL2。使用少样本CoT提示,Minerva(Lewkowycz等人,2022)在GSM8K(Cobbe等人,2021)等数学基准测试中取得了优异表现。Wang等人(2022c)通过自一致性进一步改进了CoT:使用CoT从给定语言模型中采样多样化的推理路径,并选择最一致的答案作为最终答案。Press等人(2022)提出了Self-ask提示方法,其精神与CoT相似。不同于图1中为模型提供连续的思维链,Self-ask在回答问题前明确陈述后续问题,并依赖脚手架(如"后续问题:"或"所以最终答案是:"),使答案更易解析。作者在他们引入的旨在测量组合性差距的数据集上展示了优于CoT的改进。他们观察到,当增加模型规模时,这种差距并未缩小。值得注意的是,Press等人(2022)专注于2跳问题,即模型只需组合两个事实即可获得答案的问题。有趣的是,Self-ask可以轻松与搜索引擎结合使用(见第3节)。ReAct(Yao等人,2022b)是另一种引发推理的少样本提示方法,可以在推理步骤中查询三种工具:在维基百科中搜索和查找,以及完成以返回答案。ReAct将在后续章节中更详细讨论。

Zero-shot setting. Kojima et al. (2022) extend the idea of eliciting reasoning in LMs to zero-shot prompting. Whereas few-shot provides examples of the task at hand, zero-shot conditions the LM on a single prompt that is not an example. Here, Kojima et al. (2022) simply append Let’s think step by step to the input question before querying the model (see Figure 2), and demonstrate that zero-shot-CoT for large LMs does well on reasoning tasks such as GSM8K although not as much as few-shot-CoT.

零样本 (Zero-shot) 设置。Kojima等人 (2022) 将大语言模型中激发推理的思路扩展到了零样本提示。少样本 (few-shot) 提供了当前任务的示例,而零样本则基于单个非示例提示对大语言模型进行条件设定。Kojima等人 (2022) 在查询模型前,简单地在输入问题后附加"让我们一步步思考" (见 图 2),并证明虽然不如少样本思维链 (Few-shot-CoT),但大语言模型的零样本思维链 (Zero-shot-CoT) 在GSM8K等推理任务上表现良好。

Figure 2: An example of zero-shot Chain-of-Thought prompt. $<$ <LM $>$ denotes call to the LM with the above prompt.

图 2: 零样本思维链 (Zero-shot Chain-of-Thought) 提示示例。$<$ <LM $>$ 表示用上述提示调用大语言模型。

Table 1: Evaluation of different reasoning methods on GSM8K, a popular reasoning benchmark. FT denotes fine-tuning and CoT denotes chain-of-thought. The reported accuracies are based on [1]: (Wei et al., 2022c); [2]: (Cobbe et al., 2021); [3]: (Chowdhery et al., 2022); and [4]: (Gao et al., 2022).

| Model | Accuracy (%) |

| OpenAI (text-davinci-002)[1] | 15.6 |

| OpenAI (text-davinci-002) + CoT[l] OpenAI (text-davinci-002) + CoT + Calculator[1] OpenAI (code-davinci-002)[1] | 46.9 |

| 46.9 | |

| 19.7 | |

| OpenAI (code-davinci-002) + CoT[1] OpenAI (code-davinci-002) + CoT + Calculator[1] | 63.1 |

| 65.4 | |

| 34.0 |

表 1: 不同推理方法在热门推理基准GSM8K上的评估结果。FT表示微调,CoT表示思维链。报告的准确率基于以下文献: [1]: (Wei et al., 2022c); [2]: (Cobbe et al., 2021); [3]: (Chowdhery et al., 2022); 以及[4]: (Gao et al., 2022)。

| 模型 | 准确率 (%) |

|---|---|

| OpenAI (text-davinci-002)[1] | 15.6 |

| OpenAI (text-davinci-002) + CoT[1] | 46.9 |

| OpenAI (text-davinci-002) + CoT + Calculator[1] | 46.9 |

| OpenAI (code-davinci-002)[1] | 19.7 |

| OpenAI (code-davinci-002) + CoT[1] | 63.1 |

| OpenAI (code-davinci-002) + CoT + Calculator[1] | 65.4 |

| 34.0 |

2.2 Recursive prompting

2.2 递归式提示 (Recursive prompting)

Several works attempt to elicit intermediate reasoning steps by explicitly decomposing problems into subproblems in order to solve the problem in a divide and conquer manner. This recursive approach can be especially useful for complex tasks, given that compositional generalization can be challenging for LMs (Lake and Baroni, 2018; Keysers et al., 2019; Li et al., 2022a). Methods that employ problem decomposition can either then solve the sub-problems independently, where these answers are aggregated to generate the final answer (Perez et al., 2020; Min et al., 2019), or solve the sub-problems sequentially, where the solution to the next sub-problem depends on the answer to the previous ones (Yang et al., 2022a; Zhou et al., 2022; Drozdov et al., 2022; Dua et al., 2022; Khot et al., 2022; Wang et al., 2022a; Wu et al., 2022b). For instance, in the context of math problems, Least-to-most prompting (Zhou et al., 2022) allows a language model to solve harder problems than the demonstration examples by decomposing a complex problem into a list of sub-problems. It first employs few-shot prompting to decompose the complex problem into sub-problems, before sequentially solving the extracted sub-problems, using the solution to the previous sub-problems to answer the next one.

多项研究尝试通过将问题显式分解为子问题来引出中间推理步骤,从而以分治方式解决问题。鉴于组合泛化对大语言模型具有挑战性 (Lake and Baroni, 2018; Keysers et al., 2019; Li et al., 2022a) ,这种递归方法对复杂任务尤为有效。采用问题分解的方法可分为两类:独立求解子问题后聚合答案生成最终结果 (Perez et al., 2020; Min et al., 2019) ,或顺序求解子问题——即后一子问题的求解依赖于前一子问题的答案 (Yang et al., 2022a; Zhou et al., 2022; Drozdov et al., 2022; Dua et al., 2022; Khot et al., 2022; Wang et al., 2022a; Wu et al., 2022b) 。例如在数学问题场景中,最少到最多提示法 (Zhou et al., 2022) 通过将复杂问题分解为子问题列表,使大语言模型能解决比示例更难的题目。该方法先采用少样本提示分解复杂问题,再顺序求解子问题——利用前序子问题的解来回答后续问题。

While many earlier works include learning to decompose through distant supervision (Perez et al., 2020; Talmor and Berant, 2018; Min et al., 2019), like Zhou et al. (2022), many recent works employ in-context learning to do so (Yang et al., 2022a; Khot et al., 2022; Dua et al., 2022). Among these, there are further differences. For instance, Drozdov et al. (2022) is a follow-up work to Zhou et al. (2022), but differs by using a series of prompts to perform recursive syntactic parses of the input rather than a linear decomposition, and also differs by choosing the exemplars automatically through various heuristics. Dua et al. (2022) is concurrent work with Zhou et al. (2022) but differs by interweaving the question decomposition and answering stages, i.e., the next sub-question prediction has access to the previous questions and answers as opposed to generating all sub-questions independently of any previous answers. Yang et al. (2022a), on the other hand, decomposes using rule-based principles and slot-filling prompting to translate questions into a series of SQL operations. Khot et al. (2022) also employs prompts to decompose into specific operations, but then allows each sub-problem to be solved using a library of specialized handlers, where each is devoted to a particular sub-task (e.g., retrieval).

虽然许多早期研究通过远程监督学习分解 (Perez et al., 2020; Talmor and Berant, 2018; Min et al., 2019) ,但如 Zhou et al. (2022) 所示,近期研究多采用上下文学习实现这一目标 (Yang et al., 2022a; Khot et al., 2022; Dua et al., 2022) 。这些方法存在进一步差异:例如 Drozdov et al. (2022) 作为 Zhou et al. (2022) 的后续工作,其创新点在于使用提示序列对输入进行递归句法解析而非线性分解,并采用启发式方法自动选择示例。Dua et al. (2022) 与 Zhou et al. (2022) 同期发表,但通过交织问题分解与回答阶段实现差异化——即预测下一个子问题时能参考先前的问题与答案,而非独立生成所有子问题。Yang et al. (2022a) 则采用基于规则的原理和槽填充提示技术将问题转化为系列SQL操作。Khot et al. (2022) 同样使用提示分解为特定操作,但允许通过专用处理器库解决各子问题 (如检索等专项子任务) 。

2.3 Explicitly teaching language models to reason

2.3 显式教授语言模型进行推理

Despite their spectacular results, prompting approaches have some drawbacks in addition to requiring model scale. Namely, they require to discover prompts that elicit e.g. step-by-step reasoning, manually providing examples when it comes to few-shot for a new task. Moreover, prompting is computationally expensive

尽管提示方法取得了显著成果,但除了需要模型规模外,它们还存在一些缺点。具体而言,这些方法需要发现能引发逐步推理等行为的提示,并在面对新任务的少样本学习时手动提供示例。此外,提示方法的计算成本较高。

Prompt 0

提示 0

Question: It takes Amy 4 minutes to climb to the top of a slide. It takes her 1 minute to slide down. The water slide closes in 15 minutes. How many times can she slide before it closes?

问题:Amy爬上滑梯顶部需要4分钟,滑下来需要1分钟。水上滑梯将在15分钟后关闭。在关闭前她能滑几次?

Prompt 1

提示 1

Sub question 1: How long does each trip take?

子问题1:每次行程需要多长时间?

Prompt 2

提示词 2

Sub question 1: How long does each trip take?

子问题1:每次行程需要多长时间?

Figure 3: Recursive prompting example. <LM $>$ denotes the start of the LM’s output to the prompt, while $</\mathbf{LM}>$ denotes the end. The problem is first decomposed into sub problems in Prompt 0. Then, Answer 2 to Sub question 2 and Answer 1 to Sub question 1 are sequentially fed to Prompt 2 and Prompt 1. The few-shot examples for each stage’s prompt are omitted. Inspired from Figure 1 in Zhou et al. (2022).

图 3: 递归提示示例。 <LM $>$ 表示大语言模型对提示的输出开始,而 $</\mathbf{LM}>$ 表示结束。问题首先在提示0中被分解为子问题。随后,子问题2的答案2和子问题1的答案1分别被输入到提示2和提示1中。每个阶段提示的少样本示例已省略。灵感来自Zhou等人(2022)的图1。

in the case of long prompts, and it is harder to benefit from a relatively large number of examples due to limited context size of the model. Recent works suggest to circumvent these issues by training LMs to use, as humans, a working memory when more than one step are required to solve a task correctly. Nye et al. (2021) introduce the notion of scratchpad, allowing a LM to better perform on multi-step computation tasks such as addition or code execution. More precisely, at training time, the LM sees input tasks such as addition along with associated intermediate steps: the ensemble is called a scratchpad. At test time, the model is required to predict the steps and the answer from the input task. Scratch pads differ from the above prompting strategies in that they are fine-tuned on example tasks with associated computation steps. Note however that Nye et al. (2021) also perform experiments in the few-shot regime. Taylor et al. (2022) use a similar approach in the context of large LM pre-training: Galactica was trained on a corpus of scientific data including some documents where step-by-step reasoning is wrapped with a special token

在处理长提示时,由于模型上下文长度的限制,较难从大量示例中获益。近期研究提出通过训练大语言模型像人类一样使用工作记忆来解决多步骤任务,以规避这些问题。Nye等人(2021) 提出了草稿纸(scratchpad)的概念,使大语言模型在加法或代码执行等多步骤计算任务中表现更优。具体而言,训练时模型会接收输入任务(如加法)及关联的中间步骤,该组合被称为草稿纸。测试时,模型需要根据输入任务预测步骤和答案。草稿纸与前述提示策略的区别在于,它是在带有计算步骤的示例任务上进行微调的。不过Nye等人(2021) 也进行了少样本场景的实验。Taylor等人(2022) 在大语言模型预训练中采用了类似方法:Galactica在包含科学数据的语料库上进行训练,其中部分文档使用特殊标记

Other recent works improve the reasoning abilities of pre-trained LMs via fine-tuning. Zelikman et al. (2022) propose a bootstrap approach to generate reasoning steps (also called rationales) for a large set of unlabeled data and use that data to fine-tune the model. Yu et al. (2022) show that standard LM finetuning on reasoning tasks lead to better reasoning skills such as textual entailment, abductive reasoning, and analogical reasoning, compared to pre-trained models. Further, several instruction fine-tuning approaches (Ouyang et al., 2022; Chung et al., 2022; Iyer et al., 2022; Ho et al., 2022) use chain-of-thought style prompts to achieve remarkable improvements on popular benchmarks such as BBH (Srivastava et al., 2022) and MMLU (Hendrycks et al., 2021). Interestingly, all these works also show that small scale instructionfinetuned models can perform better than un-finetuned large scale models, especially in the tasks where instruction following is important.

其他近期研究通过微调提升预训练语言模型的推理能力。Zelikman等人(2022)提出自举方法为大量未标注数据生成推理步骤(即理论依据),并用这些数据微调模型。Yu等人(2022)证明,相比预训练模型,在推理任务上对语言模型进行标准微调能提升文本蕴含、溯因推理和类比推理等能力。此外,多项指令微调研究(Ouyang等人,2022; Chung等人,2022; Iyer等人,2022; Ho等人,2022)采用思维链式提示,在BBH(Srivastava等人,2022)和MMLU(Hendrycks等人,2021)等主流基准测试中取得显著提升。值得注意的是,这些研究均表明小规模指令微调模型的表现可优于未微调的大规模模型,尤其在指令遵循至关重要的任务中。

Question: A needle 35 mm long rests on a water surface at $20\circ\mathrm{C}$ . What force over and above the needle’s weight is required to lift the needle from contact with the water surface? $\sigma=0.0728m$ .

问题:一根长35毫米的针静止在20°C的水面上。要将针从水面提起,除了针自身的重量外,还需要施加多大的力?已知σ=0.0728m。

Figure 4: Working memory example from Taylor et al. (2022). This prompt and its output are seen during LM pre-training.

图 4: Taylor等人 (2022) 的工作记忆示例。该提示及其输出出现在大语言模型预训练过程中。

2.4 Comparison and limitations of abstract reasoning

2.4 抽象推理的比较与局限性

Overall, reasoning can be seen as decomposing a problem into a sequence of sub-problems either iterative ly or recursively.4 Exploring as many reasoning paths as possible is hard and there is no guarantee that the intermediate steps are valid. A way to produce faithful reasoning traces is to generate pairs of questions and their corresponding answers for each reasoning step (Creswell and Shanahan, 2022), but there is still no guarantee of the correctness of these intermediate steps. Overall, a reasoning LM seeks to improve its context by itself so that it has more chance to output the correct answer. To what extent LMs actually use the stated reasoning steps to support the final prediction remains poorly understood (Yu et al., 2022).

总体而言,推理可以被视为将问题迭代或递归地分解为一系列子问题。尽可能探索所有推理路径十分困难,且无法保证中间步骤的有效性。生成可信推理轨迹的一种方法是为每个推理步骤生成问题及其对应答案的组合 (Creswell and Shanahan, 2022) ,但仍无法确保这些中间步骤的正确性。本质上,推理型大语言模型旨在通过自我完善上下文来增加输出正确答案的概率。关于大语言模型实际如何利用所述推理步骤来支持最终预测,目前仍缺乏深入理解 (Yu et al., 2022) 。

In many cases, some reasoning steps may suffer from avoidable mistakes that compromise the correctness of the output. For example, mistakes on nontrivial mathematical operations in a reasoning step may lead to the wrong final output. The same goes with known facts such as the identity of a president at a given year. Some of the works studied above (Yao et al., 2022b; Press et al., 2022) already leverage simple external tools such as a search engine or a calculator to validate intermediate steps. More generally, the next section of the survey focuses on the various tools that can be queried by LMs to increase the chance of outputting a correct answer.

在许多情况下,某些推理步骤可能会出现本可避免的错误,从而影响输出的正确性。例如,在推理步骤中对复杂数学运算的失误可能导致最终输出错误。同样的问题也存在于已知事实的核查中,比如某年总统的身份认定。前文提及的部分研究 (Yao et al., 2022b; Press et al., 2022) 已开始利用搜索引擎或计算器等简单外部工具来验证中间步骤。更广泛地说,本综述的下一章节将重点探讨大语言模型 (LLM) 可调用的各类工具,这些工具能有效提高输出正确答案的概率。

3 Using Tools and Act

3 使用工具与行动

A recent line of LM research allows the model to access knowledge that is not necessarily stored in its weights, such as a given piece of factual knowledge. More precisely, tasks such as exact computation or information retrieval for example can be offloaded to external modules such as a python interpreter or a search engine that are queried by the model which, in that respect, use tools. Additionally, we can say the LM performs an action when the tool has an effect on the external world. The possibility to easily include tools and actions in the form of special tokens is a convenient feature of language modeling coupled with transformers.

最近一项大语言模型研究允许模型访问不一定存储在其权重中的知识,例如给定的事实性知识。更准确地说,像精确计算或信息检索这样的任务可以卸载到外部模块,例如由模型查询的 Python语言 解释器或搜索引擎,在这方面,模型使用了工具。此外,当工具对外部世界产生影响时,我们可以说大语言模型执行了一个动作。以特殊 token 的形式轻松包含工具和动作的可能性,是语言建模与 Transformer 结合的一个便利特性。

3.1 Calling another model

3.1 调用另一个模型

In many cases, the tool can simply be another neural network or the LM itself.

在许多情况下,工具可以简单地是另一个神经网络或大语言模型本身。

Iterative LM calling. As an alternative to optimizing for a single, optimized prompt, an intuitive way to get better results from LMs consists of repeatedly calling the model to iterative ly refine its output. Re3 (Yang et al., 2022c) exploits this idea to automatically generate stories of over two thousand words. More precisely, Re3 first generates a plan, setting, and characters by prompting GPT3 (Brown et al., 2020) with a premise. Then, Re3 iterative ly injects information from both the plan and current story state into a new $G P T3$ prompt to generate new story passages. This work is improved upon in Yang et al. (2022b) with the use of a learned detailed outliner that iterative ly expands the brief initial outline to any desired level of granularity. Other approaches that teach models to iterative ly improve texts in an unsupervised fashion range from applications such as blank filling (Shen et al., 2020; Donahue et al., 2020) to denoising a sequence of Gaussian vectors into word vectors (Li et al., 2022c). PEER (Schick et al., 2022), for example, is a model initialized from LM-Adapted T5 (Raffel et al., 2020) and trained on Wikipedia edits, learning both how to carry out edits and how to plan for the next steps. Consequently, $P E E R$ is able to develop articles by repeatedly planning and editing as in Figure 5. The iterative approach has the additional benefit of allowing a complex task like story and article generation to be decomposed into smaller subtasks. Importantly and apart from $P E E R$ , the works mentioned above employ heuristics to call the LM. A future research direction may consist in allowing the LM to call itself repeatedly until the output satisfies a certain criterion. Rather than just calling a single model repeatedly, Wu et al. (2022a) propose an interactive interface for a pipeline allowing chaining of multiple LMs together, where the output of one step is passed as input to the next. Such contributions allow non-AI-experts to refine solutions to complex tasks that cannot be appropriately handled by a single LM.

迭代式大语言模型调用。相较于优化单一提示词,通过反复调用模型迭代优化输出是提升大语言模型效果的直观方法。Re3 (Yang et al., 2022c) 运用该思路自动生成了超过两千字的故事:首先生成故事前提,基于GPT3 (Brown et al., 2020) 生成计划、场景和角色;随后迭代地将当前故事状态与计划信息注入新的GPT3提示词来生成后续情节。Yang等人(2022b) 对此进行改进,通过训练细粒度大纲生成器将初始简略大纲迭代扩展至任意精度。其他无监督文本迭代优化方法包括空白填充 (Shen et al., 2020; Donahue et al., 2020) 和高斯向量序列去噪转词向量 (Li et al., 2022c) 等应用。例如PEER (Schick et al., 2022) 基于LM-Adapted T5 (Raffel et al., 2020) 初始化,通过维基百科编辑记录训练获得编辑与步骤规划能力,最终如图5所示通过规划-编辑循环完成文章创作。这种迭代方法还能将复杂任务(如故事/文章生成)拆解为子任务。值得注意的是,除PEER外上述研究均采用启发式方法调用大语言模型,未来可探索让模型自主判断输出是否达标而决定是否继续迭代。Wu等人(2022a) 则提出多模型串联的交互式管道,将前序模型输出作为后续输入,使非AI专家也能通过组合多个大语言模型解决单一模型难以处理的复杂任务。

Leveraging other modalities. Prompts under the form of text may not contain enough context to correctly perform a given task. For example, a question does not call for the same answer if it is asked with a serious or ironic tone. Including various modalities into the context would probably be useful for LMs such as chatbots. As recently demonstrated by Hao et al. (2022) and Alayrac et al. (2022), LMs can also be used as a general-purpose interface with models pre-trained on different modalities. For example, Hao et al. (2022) take a number of pre-trained encoders that can process diverse modalities such as vision and language, and

利用多模态信息。纯文本形式的提示可能无法包含足够上下文来正确执行特定任务。例如,同一个问题用严肃或讽刺语气提出时,所需的答案并不相同。对于聊天机器人等大语言模型,在上下文中融入多种模态信息可能非常有用。正如Hao等人(2022)和Alayrac等人(2022)最新研究表明,大语言模型还能作为通用接口,与针对不同模态预训练的模型协同工作。例如,Hao等人(2022)采用多个预训练编码器,可处理视觉、语言等多种模态。

Iteration 0

迭代 0

Text: Brittney Reese (born September 9, 1986 in Gulfport, Mississippi) is an American long jumper.

布兰妮·里斯(生于1986年9月9日,密西西比州格尔夫波特)是美国跳远运动员。

Iteration 1

迭代 1

Text: Brittney Reese (born September 9, 1986) is an American long jumper.

布兰妮·里斯(生于1986年9月9日)是美国跳远运动员。

Plan: Add information about her career

计划:添加关于她职业生涯的信息

Edit: Brittney Reese (born September 9, 1986) is an American long jumper , who competed at the 2008 Summer Olympics, and is a 4-time World Champion

编辑: Brittney Reese (生于1986年9月9日) 是美国跳远运动员,曾参加2008年夏季奥运会,并四次获得世界冠军

Iteration 2

迭代 2

Plan: Add her birthplace

计划:添加她的出生地

Edit: Brittney Reese (born September 9, 1986 in Inglewood, California is an American long jumper, who competed at the 2008 Summer Olympics, and is a 4-time World Champion. $</\mathbf{LM}>$

编辑:Brittney Reese(1986年9月9日出生于加利福尼亚州英格尔伍德)是美国跳远运动员,曾参加2008年夏季奥运会,并四次获得世界冠军。$</\mathbf{LM}>$

Figure 5: Iterative prompting example using PEER (Schick et al., 2022), a LM trained to produce a plan of action and edit to the input text at each step. This process can be repeated until the generated text requires no further updates. ${<}\mathbf{LM}>$ denotes the start of the LM’s output to the prompt, while $</\mathbf{LM}>$ denotes the end.

图 5: 使用PEER (Schick et al., 2022) 进行迭代提示的示例,该大语言模型经过训练可生成行动计划并在每一步对输入文本进行编辑。此过程可重复执行,直到生成的文本无需进一步更新。${<}\mathbf{LM}>$ 表示大语言模型对提示开始输出,而 $</\mathbf{LM}>$ 表示输出结束。

connect them to a LM that serves as a universal task layer. The interface and modular encoders are jointly pre-trained via a semi-causal language modeling objective. This approach combines the benefits of causal and non-causal language modeling, enabling both in-context learning and open-ended generation, as well as easy fine-tuning of the encoders. Similarly, Alayrac et al. (2022) introduce Flamingo, a family of Visual Language Models (VLMs) that can handle any interleaved sequences of visual and textual data. Flamingo models are trained on large-scale multimodal web corpora containing interleaved text and images, which enables them to display in-context few-shot learning capabilities of multimodal tasks. With only a handful of annotated examples, Flamingo can easily adapt to both generation tasks such as visual question-answering and captioning, as well as classification tasks such as multiple-choice visual question-answering. Zeng et al. (2022) introduce Socratic Models, a modular framework in which various models pre-trained on different modalities can be composed zero-shot. This allows models to exchange information with each other and acquire new multimodal capabilities without additional finetuning. Socratic Models enable new applications such as robot perception and planning, free-form question-answering about egocentric videos, or multimodal assistive dialogue by interfacing with external APIs and databases such as search engines. Interestingly, other modalities such as images can be incorporated to improve reasoning capabilities of moderate size LMs (1B) (Zhang et al., 2023).

将它们连接到作为通用任务层的大语言模型(LM)。接口和模块化编码器通过半因果语言建模目标进行联合预训练。这种方法结合了因果和非因果语言建模的优势,既能实现上下文学习和开放式生成,也能轻松微调编码器。类似地,Alayrac等人(2022)提出了Flamingo系列视觉语言模型(VLM),能够处理任意交错的视觉和文本数据序列。Flamingo模型在包含交错文本和图像的大规模多模态网络语料库上进行训练,使其能够展示多模态任务的上下文少样本学习能力。仅需少量标注样本,Flamingo就能轻松适应视觉问答和字幕生成等生成任务,以及多选题视觉问答等分类任务。Zeng等人(2022)提出了苏格拉底模型(Socratic Models),这种模块化框架可以将预训练于不同模态的各种模型进行零样本组合。这使得模型之间能够相互交换信息,无需额外微调即可获得新的多模态能力。通过与搜索引擎等外部API和数据库对接,苏格拉底模型实现了机器人感知与规划、第一人称视频的自由形式问答、多模态辅助对话等新应用。值得注意的是,图像等其他模态也可以被整合进来提升中等规模(10亿参数)大语言模型的推理能力(Zhang等人,2023)。

3.2 Information retrieval

3.2 信息检索

LMs can be augmented with memory units, for example via a neural cache of recent inputs (Grave et al., 2017; Merity et al., 2017), to improve their reasoning abilities. Alternatively, knowledge in the form of natural language can be offloaded completely from the LM by retrieving from an external knowledge source. Memory augmentation strategies help the language model to avoid producing non-factual and out-of-date information as well as reducing the number of parameters required to achieve comparable performance to large LMs.

大语言模型可以通过增加记忆单元来增强推理能力,例如采用最近输入的神经缓存 (Grave et al., 2017; Merity et al., 2017)。另一种方式是完全将自然语言形式的知识卸载到外部知识源中检索。记忆增强策略能帮助语言模型避免生成非事实或过时信息,同时减少达到与大语言模型相当性能所需的参数量。

3.2.1 Retrieval-augmented language models

3.2.1 检索增强型语言模型

Dense and sparse retrievers. There exist two types of retrievers that can be used to augment a LM: dense and sparse. Sparse retrievers work with sparse bag-of-words representations of the documents and the queries (Robertson and Zaragoza, 2009). In contrast, dense neural retrievers use a dense query and dense document vectors obtained from a neural network (Asai et al., 2021). Both types of retrievers assess the relevance of a document to an information-seeking query. This can be done by (i) checking for precise term overlap or (ii) computing the semantic similarity across related concepts. Sparse retrievers excel at the first sub-problem, while dense retrievers can be better at the second (Luan et al., 2021).

密集与稀疏检索器。可用于增强大语言模型的检索器分为两种类型:密集检索器和稀疏检索器。稀疏检索器基于文档和查询的稀疏词袋表示进行工作 (Robertson and Zaragoza, 2009)。与之相反,密集神经检索器使用通过神经网络获得的密集查询向量和密集文档向量 (Asai et al., 2021)。两种检索器都能评估文档与信息检索查询的相关性,具体通过以下方式实现:(i) 检查精确的术语重叠度,或 (ii) 计算相关概念间的语义相似度。稀疏检索器擅长解决第一个子问题,而密集检索器在第二个子问题上表现更优 (Luan et al., 2021)。

Conditioning LMs on retrieved documents. Various works augment LMs with a dense retriever by appending the retrieved documents to the current context (Chen et al., 2017; Clark and Gardner, 2017; Lee et al., 2019; Guu et al., 2020; Khandelwal et al., 2020; Lewis et al., 2020; Izacard and Grave, 2020; Zhong et al., 2022; Borgeaud et al., 2022; Izacard et al., 2022). Even though the idea of retrieving documents to perform question answering is not new, retrieval-augmented LMs have recently demonstrated strong performance in other knowledge intensive tasks besides Q&A. These proposals close the performance gap compared to larger LMs that use significantly more parameters. REALM (Guu et al., 2020) was the first method to jointly train end-to-end a retrieval system with an encoder LM. RAG (Lewis et al., 2020) jointly fine-tunes the retriever with a sequence-to-sequence model. Izacard and Grave (2020) introduced a modification of the seq2seq architecture to efficiently process many retrieved documents. Borgeaud et al. (2022) focuses on an auto-regressive LM, called $R E T R O$ , and shows that combining a large-scale corpus with pre-trained frozen $B E R T$ embeddings for the retriever removes the need to further train the retriever while obtaining comparable performance to $G P T3$ on different downstream tasks. The approach used in $R E T R O$ allows the integration of retrieval into existing pre-trained LMs. Atlas (Izacard et al., 2022) jointly trains a retriever with a sequence-to-sequence model to obtain a LM with strong few-shot learning capabilities in spite of being orders of magnitude smaller than many other large LMs. Table 2 compares the main characteristics of the models discussed, notably how the retrieval results are integrated into the LM’s context. In all these cases, the query corresponds to the prompt.

基于检索文档对大语言模型进行条件化处理。多项研究通过将检索到的文档附加到当前上下文中,用密集检索器增强大语言模型 (Chen et al., 2017; Clark and Gardner, 2017; Lee et al., 2019; Guu et al., 2020; Khandelwal et al., 2020; Lewis et al., 2020; Izacard and Grave, 2020; Zhong et al., 2022; Borgeaud et al., 2022; Izacard et al., 2022)。尽管检索文档进行问答并非新思路,但检索增强的大语言模型最近在问答以外的知识密集型任务中展现出强大性能。这些方案缩小了与使用更多参数的大型语言模型之间的性能差距。

REALM (Guu et al., 2020) 是首个端到端联合训练检索系统与编码器大语言模型的方法。RAG (Lewis et al., 2020) 联合微调了检索器与序列到序列模型。Izacard和Grave (2020) 改进了seq2seq架构以高效处理多篇检索文档。Borgeaud等人 (2022) 专注于名为$RETRO$的自回归大语言模型,研究表明将大规模语料库与预训练冻结的$BERT$检索器嵌入结合,无需进一步训练检索器即可在不同下游任务中获得与$GPT3$相当的性能。$RETRO$采用的方法允许将检索集成到现有预训练大语言模型中。Atlas (Izacard et al., 2022) 联合训练检索器与序列到序列模型,获得具备强大少样本学习能力的大语言模型,其规模比许多其他大型语言模型小数个数量级。

表2比较了讨论模型的主要特征,特别是检索结果如何集成到大语言模型上下文中。所有这些案例中,查询都对应提示词。

| Model | # Retrieval tokens | Granularity | Retriever 1 training | Retrieval integration |

| REALM Guu et al., 2 2020) | 0(109) | Prompt | End-to-End | Append to prompt |

| RAG (Lewis et al., 2 2020) | 0(109) | Prompt | Fine-tuning | Cross-attention |

| RETRO Borgeaud 1 etal.,2 2022 | O(1012 | Chunk | Frozen | Chunked cross-attn. |

| Atlas Izacard et al.,2 2022 | 0(10%) | Prompt | Fine-tuning | Cross-attention |

Table 2: Comparison between database retrieval augmented languages models. Inspired by Table 3 from Borgeaud et al. (2022).

| 模型 | 检索Token数 | 粒度 | 检索器1训练方式 | 检索集成方式 |

|---|---|---|---|---|

| REALM (Guu et al., 2020) | 0(109) | 提示词 | 端到端 | 追加至提示词 |

| RAG (Lewis et al., 2020) | 0(109) | 提示词 | 微调 | 交叉注意力 |

| RETRO (Borgeaud et al., 2022) | O(1012) | 文本块 | 冻结 | 分块交叉注意力 |

| Atlas (Izacard et al., 2022) | 0(10%) | 提示词 | 微调 | 交叉注意力 |

表 2: 数据库检索增强语言模型对比。灵感来源于Borgeaud等人(2022)的表3。

Chain-of-thought prompting and retrievers. Recent works (He et al., 2022; Trivedi et al., 2022) propose to combine a retriever with reasoning via chain-of-thoughts (CoT) prompting to augment a LM. He et al. (2022) use the CoT prompt to generate reasoning paths consisting of an explanation and prediction pair. Then, knowledge is retrieved to support the explanations and the prediction that is mostly supported by the evidence is selected. This approach does not require any additional training or fine-tuning. Trivedi et al. (2022) propose an information retrieval chain-of-thought approach (IRCoT) which consists of interleaving retrieval with CoT for multi-step QA. The idea is to use retrieval to guide the CoT reasoning steps and conversely, using CoT reasoning to guide the retrieval step.

思维链提示与检索器。近期研究 (He et al., 2022; Trivedi et al., 2022) 提出通过思维链 (CoT) 提示将检索器与推理相结合来增强大语言模型。He et al. (2022) 使用 CoT 提示生成由解释和预测对组成的推理路径,随后检索知识来支持解释,并选择证据支持最多的预测。该方法无需额外训练或微调。Trivedi et al. (2022) 提出信息检索思维链方法 (IRCoT),通过在多步问答中交替进行检索与 CoT 推理,利用检索引导 CoT 推理步骤,同时通过 CoT 推理指导检索步骤。

In all these works, a retriever is systematically called for every query in order to get the corresponding documents to augment the LM. These approaches also assume that the intent is contained in the query. The query could be augmented with the user’s intent by providing a natural language description of the search task (instruction) in order to disambiguate the intent, as proposed by Asai et al. (2022). Also, the LM could query the retriever only occasionally—when a prompt suggests it to do so—which is discussed in the next subsection.

在所有这些工作中,系统性地为每个查询调用检索器,以获取相应文档来增强大语言模型。这些方法还假设查询中已包含意图。如Asai等人 (2022) 所提出的,可以通过提供搜索任务的自然语言描述(指令)来消除意图歧义,从而用用户意图增强查询。此外,大语言模型可以仅在提示建议时偶尔查询检索器——这将在下一小节讨论。

3.2.2 Querying search engines

3.2.2 搜索引擎查询

A LM that only ingests a query can be seen as a passive agent. However, once it is given the ability to generate a query based on the prompt, the LM can enlarge its action space and become more active.

仅接收查询的大语言模型可视为被动AI智能体。但一旦获得基于提示生成查询的能力,大语言模型就能扩展其行动空间并变得更主动。

LaMDA is one example of an agent-like LM designed for dialogue applications. The authors pre-train the model on dialog data as well as other public web documents. In addition to this, to ensure that the model is factually grounded as well as enhancing its conversational abilities, it is augmented with retrieval, a calculator, and a translator (Thoppilan et al., 2022). Furthermore, to improve the model’s safety, LaMDA is fine-tuned with annotated data. Another example is BlenderBot (Shuster et al., 2022b), where the LM decides to generate a query based on a prompt. In this case, the prompt corresponds to the instruction of calling the search engine tool. BlenderBot is capable of open-domain conversation, it has been deployed on a public website to further improve the model via continual learning with humans in the loop. Similarly, ReAct uses few-shot prompting to teach a LM how to use different tools such as search and lookup in Wikipedia, and finish to return the answer (Yao et al., 2022b). Similarly, Komeili et al. (2021); Shuster et al. (2022a) propose a model that learns to generate an internet search query based on the context, and then conditions on the search results to generate a response. ReAct interleaves reasoning and acting, allowing for greater synergy between the two and improved performance on both language and decision making tasks. ReAct performs well on a diverse set of language and decision making tasks such as question answering, fact verification, or web and home navigation.

LaMDA 是为对话应用设计的类智能体大语言模型的一个范例。作者在对话数据及其他公开网页文档上对该模型进行了预训练。此外,为确保模型基于事实并增强其对话能力,该模型还集成了检索、计算器和翻译功能 (Thoppilan et al., 2022)。为进一步提升模型安全性,LaMDA 还通过标注数据进行了微调。另一个例子是 BlenderBot (Shuster et al., 2022b),其中大语言模型根据提示生成查询。此场景下,提示对应调用搜索引擎工具的指令。BlenderBot 具备开放域对话能力,已部署在公共网站上,通过人类参与的持续学习进一步优化模型。类似地,ReAct 采用少样本提示教会大语言模型使用维基百科搜索查询等工具,并最终返回答案 (Yao et al., 2022b)。Komeili 等人 (2021) 和 Shuster 等人 (2022a) 也提出了能根据上下文生成网络搜索查询,并基于搜索结果生成回复的模型。ReAct 将推理与行动交错结合,实现了二者的深度协同,在语言和决策任务上均表现出性能提升。该框架在问答、事实核查、网页导航及家庭导航等多样化语言与决策任务中均有优异表现。

In general, reasoning can improve decision making by making better inferences and predictions, while the ability to use external tools can improve reasoning by gathering additional information from knowledge bases or environments.

通常,推理能够通过更好的推断和预测来改进决策,而使用外部工具的能力则可以通过从知识库或环境中收集额外信息来增强推理。

3.2.3 Searching and navigating the web

3.2.3 网络搜索与导航

It is also possible to train agents that can navigate the open-ended internet in pursuit of specified goals such as searching information or buying items. For example, WebGPT (Nakano et al., 2021) is a LM-based agent which can interact with a custom text-based web-browsing environment in order to answer long-form questions. In contrast with other models that only learn how to query retrievers or search engines like LaMDA (Thoppilan et al., 2022) or BlenderBot (Shuster et al., 2022b), WebGPT learns to interact with a web-browser, which allows it to further refine the initial query or perform additional actions based on its interactions with the tool. More specifically, $W e b G P T$ can search the internet, navigate webpages, follow links, and cite sources (see Table 3 for the full list of available actions). By accessing the internet, the agent is able to enhance its question-answering abilities, even surpassing those of humans as determined by human evaluators. The best model is obtained by fine-tuning GPT3 on human demonstrations, and then performing rejection sampling against a reward model trained to predict human preferences. Similarly, WebShop (Yao et al., 2022a) is a simulated e-commerce website where an agent has to find, customize, and purchase a product according to a given instruction. To accomplish this, the agent must understand and reason about noisy text, follow complex instructions, reformulate queries, navigate different types of webpages, take actions to collect additional information when needed, and make strategic decisions to achieve its goals. Both the observations and the actions are expressed in natural language, making the environment well-suited for LM-based agents. The agent consists of a LM fine-tuned with behavior cloning of human demonstrations (i.e., question-human demonstration pairs) and reinforcement learning using a hard-coded reward function that verifies whether the purchased item matches the given description. While there are other works on web navigation and computer-control, most of them assume the typical human interface, that takes as input images of a computer screen and output keyboard commands in order to solve digital tasks (Shi et al., 2017; Gur et al., 2019; 2021; Toyama et al., 2021; Humphreys et al., 2022; Gur et al., 2022). Since our survey focuses on LM-based agents, we will not discuss these works in detail.

也可以训练能够在开放式互联网中导航以实现特定目标(如搜索信息或购买商品)的AI智能体。例如,WebGPT (Nakano et al., 2021) 是一种基于大语言模型的智能体,可以与定制的基于文本的网络浏览环境交互,以回答长篇问题。与仅学习如何查询检索器或搜索引擎的模型(如LaMDA (Thoppilan et al., 2022) 或BlenderBot (Shuster et al., 2022b))不同,WebGPT学习与网络浏览器交互,从而能够根据与工具的交互进一步优化初始查询或执行其他操作。具体来说,$WebGPT$可以搜索互联网、浏览网页、跟踪链接并引用来源(完整操作列表见表3)。通过访问互联网,该智能体能够增强其问答能力,甚至在某些情况下超越人类评估者判断的人类水平。最佳模型是通过在人类演示数据上微调GPT3,然后对预测人类偏好的奖励模型执行拒绝采样获得的。类似地,WebShop (Yao et al., 2022a) 是一个模拟电子商务网站,智能体需要根据给定指令查找、定制和购买产品。为此,智能体必须理解并推理嘈杂的文本,遵循复杂指令,重新表述查询,导航不同类型的网页,在需要时采取行动收集额外信息,并做出战略决策以实现其目标。观察和动作均以自然语言表达,使得该环境非常适合基于大语言模型的智能体。该智能体由一个通过人类演示行为克隆(即问题-人类演示对)微调的大语言模型组成,并使用硬编码的奖励函数进行强化学习,该函数验证购买的商品是否符合给定描述。虽然还有其他关于网络导航和计算机控制的研究,但大多数研究假设典型的人机界面,以计算机屏幕图像作为输入,输出键盘命令以解决数字任务 (Shi et al., 2017; Gur et al., 2019; 2021; Toyama et al., 2021; Humphreys et al., 2022; Gur et al., 2022)。由于本综述专注于基于大语言模型的智能体,我们将不详细讨论这些工作。

表3:

3.3 Computing via Symbolic Modules and Code Interpreters

3.3 基于符号模块与代码解释器的计算

Although recent LMs are able to correctly decompose many problems, they are still prone to errors when dealing with large numbers or performing complex arithmetic s. For example, vanilla GPT3 cannot perform out-of-distribution addition, i.e. addition on larger numbers than those seen during the training even when provided with examples with annotated steps (Qian et al., 2022). In the context of reinforcement learning, the action space of a transformer agent is equipped with symbolic modules to perform e.g. arithmetic or navigation in Wang et al. (2022b). Mind’s Eye (Liu et al., 2022b) invokes a physics engine to ground LMs physical reasoning. More precisely, a text-to-code LM is used to produce rendering code for the physics engine. The outcome of the simulation that is relevant to answer the question is then appended in natural language form to the LM prompt. As a result, Mind’s Eye is able to outperform the largest LMs on some specific physical reasoning tasks while having two order of magnitude less parameters. PAL (Gao et al., 2022) relies on CoT prompting of large LMs to decompose symbolic reasoning, mathematical reasoning, or algorithmic tasks into intermediate steps along with python code for each step (see Figure 6). The python steps are then offloaded to a python interpreter outputting the final result. They outperform CoT prompting on several benchmarks, especially on GSM-HARD, a version of GSM8K with larger numbers. See Table 1 for a comparison between $P A L$ and other models on GSM8K. Similarly, Drori et al. (2022); Chen et al. (2022) prompts Codex (Chen et al., 2021) to generate executable code-based solutions to university-level problems, math word problems, or financial QA. In the context of theorem proving, Wu et al. (2022c) uses large LMs to automatically formalize informal mathematical competition problem statements in Isabelle or HOL. Jiang et al. (2022) generate formal proof sketches, which are then fed to a prover.

尽管当前的大语言模型能够正确分解许多问题,但在处理大数字或执行复杂算术运算时仍容易出错。例如,原始版GPT3无法完成分布外加法运算 (即在训练数据范围之外的大数字加法) ,即使提供带注释步骤的示例也无济于事 (Qian et al., 2022) 。在强化学习领域,Wang等人 (2022b) 为Transformer智能体的动作空间配备了符号模块,用于执行算术或导航等任务。Mind's Eye (Liu et al., 2022b) 通过调用物理引擎来增强大语言模型的物理推理能力——具体而言,使用文本转代码的大语言模型生成物理引擎的渲染代码,再将与问题相关的模拟结果以自然语言形式附加到提示词中。该方法使得Mind's Eye在某些特定物理推理任务上能够超越参数量大两个数量级的顶级大语言模型。PAL (Gao et al., 2022) 依托大语言模型的思维链提示技术,将符号推理、数学推理或算法任务分解为中间步骤,并为每个步骤生成Python代码 (见图6) ,这些Python步骤会被移交至解释器执行并输出最终结果。该方法在多个基准测试中超越了思维链提示,尤其在包含更大数字的GSM8K变体GSM-HARD上表现突出。表1展示了PAL与其他模型在GSM8K上的对比结果。类似地,Drori等人 (2022) 和Chen等人 (2022) 通过提示Codex (Chen et al., 2021) 生成可执行的代码解决方案,用于解决大学课程难题、数学应用题或金融问答。在定理证明领域,Wu等人 (2022c) 利用大语言模型将非形式化的数学竞赛题目自动形式化为Isabelle或HOL语言,Jiang等人 (2022) 则通过生成形式化证明概要并输入证明器来完成验证。

Question: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. Each can has 3 tennis balls. How many tennis balls does he have now?

问题:Roger有5个网球。他又买了2罐网球,每罐有3个网球。他现在一共有多少个网球?

Question: The cafeteria had 23 apples. If they used 20 to make lunch and bought 6 more, how many apples do they have?

问题:食堂原有23个苹果。如果午餐用掉了20个,又买了6个,现在有多少个苹果?

Answer: ${<}\mathbf{LM}>$

答:${<}\mathbf{LM}>$

Figure 6: An example of few-shot PAL (Gao et al., 2022) prompt. ${<}\mathbf{LM}>$ denotes call to the LM with the above prompt. The prompts are based on the chain-of-thoughts prompting shown on Figure 1, and the parts taken from it are highlighted in green . In PAL, the prompts also contain executable python code , which performs operations and stores the results in the answer variable. When prompted with a new question, PAL generates a mix of executable code and explanation. The answer is obtained by executing the code and print(answer) .

图 6: 少样本 PAL (Gao et al., 2022) 提示示例。${<}\mathbf{LM}>$ 表示使用上述提示调用大语言模型。这些提示基于图 1 所示的思维链提示技术,其中引用的部分以绿色高亮显示。在 PAL 中,提示还包含可执行的 Python语言 代码,这些代码执行操作并将结果存储在 answer 变量中。当遇到新问题时,PAL 会生成可执行代码与解释的混合体,最终通过执行代码并打印 print(answer) 来获得答案。

3.4 Acting on the virtual and physical world

3.4 作用于虚拟和物理世界

While the previous tools gather external information in order to improve the LM’s predictions or performance on a given task, other tools allow the LM to act on the virtual or physical world. In order to do this, the

虽然之前的工具通过收集外部信息来提升大语言模型在特定任务上的预测或表现,但另一些工具则让大语言模型能够在虚拟或物理世界中执行操作。为此,该

LM needs to ground itself in the real-world by learning about afford ances i.e. what actions are possible in a given state, and their effect on the world.

大语言模型需要通过了解可供性 (affordances) 来与现实世界建立联系,即在给定状态下可能采取的行动及其对世界的影响。

Controlling Virtual Agents. Recent works demonstrated the ability of LMs to control virtual agents in simulated 2D and 3D environments by outputting functions which can then be executed by computer in the corresponding environment, be it a simulation or the real-world. For example, Li et al. (2022b) finetune a pre-trained GPT2 (Radford et al., 2019) on sequential decision-making problems by representing the goals and observations as a sequence of embeddings and predicting the next action. This framework enables strong combinatorial generalization across different domains including a simulated household environment. This suggests that LMs can produce representations that are useful for modeling not only language but also sequential goals and plans, so that they can improve learning and generalization on tasks that go beyond language processing. Similarly, Huang et al. (2022a) investigate whether it is possible to use the world knowledge captured by LMs to take specific actions in response to high-level tasks written in natural language such as “make breakfast”. This work was the first to demonstrate that if the LM is large enough and correctly prompted, it can break down high-level tasks into a series of simple commands without additional training. However, the agent has access to a predetermined set of actions, so not all natural language commands can be executed in the environment. To address this issue, the authors propose to map the commands suggested by the LM into feasible actions for the agent using the cosine similarity function. The approach is evaluated in a virtual household environment and displays an improvement in the ability to execute tasks compared to using the plans generated by the LM without the additional mapping. While these works have demonstrated the usefulness of LMs for controlling virtual robots, the following paragraph cover works on physical robots. Zeng et al. (2022) combine a LM with a visual-language model (VLM) and a pre-trained language-conditioned policy for controlling a simulated robotic arm. The LM is used as a multi-step planner to break down a high-level task into subgoals, while the VLM is used to describe the objects in the scene. Both are passed to the policy which then executes actions according to the specified goal and observed state of the world. Dasgupta et al. (2023) use 7B and 70B Chinchilla as planners for an agent that acts and observes the result in a PycoLab environment. Additionally, a reporter module converts actions and observations from pixel to text space. Finally, the agent in Carta et al. (2023) uses a LM to generate action policies for text-based tasks. Interactively learning via online RL allows to ground the LM internal representations to the environment, thus partly departing from the knowledge about statistical surface structure of text that was acquired during pre-training.

控制虚拟代理。近期研究证明了大语言模型通过输出可在相应环境(无论是模拟环境还是现实世界)中由计算机执行的函数,来控制模拟2D和3D环境中虚拟代理的能力。例如,Li等人(2022b)通过将目标和观察表示为嵌入序列并预测下一个动作,在序列决策问题上对预训练的GPT2(Radford等人,2019)进行了微调。该框架在包括模拟家庭环境在内的不同领域实现了强大的组合泛化能力。这表明大语言模型不仅能生成对语言建模有用的表示,还能对序列目标和计划进行建模,从而提升超越语言处理任务的学习和泛化能力。类似地,Huang等人(2022a)研究了是否可以利用大语言模型捕获的世界知识,对用自然语言编写的高级任务(如"做早餐")采取具体行动。该研究首次证明,如果大语言模型足够大且提示得当,无需额外训练即可将高级任务分解为一系列简单命令。然而,该代理只能使用预定义的动作集,因此并非所有自然语言命令都能在环境中执行。为解决这个问题,作者提出使用余弦相似度函数将大语言模型建议的命令映射为代理可执行的动作。该方法在虚拟家庭环境中进行评估,与直接使用大语言模型生成的计划相比,显示了任务执行能力的提升。虽然这些研究证明了大语言模型在控制虚拟机器人方面的实用性,但以下段落将涵盖物理机器人的研究。Zeng等人(2022)将大语言模型与视觉语言模型(VLM)和预训练的语言条件策略相结合,用于控制模拟机械臂。大语言模型作为多步规划器将高级任务分解为子目标,而视觉语言模型用于描述场景中的物体。两者都被传递给策略模块,后者根据指定目标和观察到的世界状态执行动作。Dasgupta等人(2023)使用7B和70B参数的Chinchilla作为在PycoLab环境中行动并观察结果的代理的规划器。此外,报告模块将动作和观察从像素空间转换到文本空间。最后,Carta等人(2023)中的代理使用大语言模型为基于文本的任务生成动作策略。通过在线强化学习进行交互式学习,可以将大语言模型的内部表示与环境相关联,从而部分脱离预训练期间获得的文本统计表面结构知识。

| Command | Effect |

| search | Send |

| clickedonlink | Follow the link with the given ID to a new page |

| find in page: | Find the next occurrence of |

| quote: | If |

| scrolled down <1,2,3> | Scroll down a number of times |

| scrolled up <1,2,3> | Scroll up a number of times |

| Top | Scroll to the top of the page |

| back | Gotothepreviouspage |

| end: answer | End browsing and move to answering phase |

| end: | End browsing and skip answering phase |

Table 3: The actions WebGPT can perform, taken from Nakano et al. (2021).

| 命令 | 作用 |

|---|---|

| search | 将发送至Bing API并显示搜索结果页面 |

| clickedonlink | 根据给定ID跳转至新页面 |

| find in page: | 查找的下一个匹配项并滚动至该位置 |

| quote: | 若当前页面存在,则将其添加为引用 |

| scrolled down <1,2,3> | 向下滚动指定次数 |

| scrolled up <1,2,3> | 向上滚动指定次数 |

| Top | 滚动至页面顶部 |

| back | 返回上一页面 |

| end: answer | 结束浏览并进入回答阶段 |

| end: | 结束浏览并跳过回答阶段 |

表 3: WebGPT可执行的操作,引自Nakano等(2021)。

Controlling Physical Robots. Liang et al. (2022) use a LM to write robot policy code given natural language commands by prompting the model with a few demonstrations. By combining classic logic structures and referencing external libraries, e.g., for arithmetic operations, LMs can create policies that exhibit spatialgeometric reasoning, generalize to new instructions, and provide precise values for ambiguous descriptions. The effectiveness of the approach is demonstrated on multiple real robot platforms. LMs encode common sense knowledge about the world which can be useful in getting robots to follow complex high-level instructions expressed in natural language. However, they lack contextual grounding which makes it difficult to use them for decision making in the real-world since they do not know what actions are feasible in a particular situation. To mitigate this problem, Ahn et al. (2022) propose to teach the robot a number of low-level skills (such as “find a sponge”, “pick up the apple”, “go to the kitchen”) and learn to predict how feasible they are at any given state. Then, the LM can be used to split complex high-level instructions into simpler subgoals from the robot’s repertoire. The LM can then select the most valuable yet feasible skills for the robot to perform. This way, the robot can use its physical abilities to carry out the LM’s instructions, while the LM provides semantic knowledge about the task. The authors test their approach, called SayCan, on various real-world tasks and find that it can successfully complete long, abstract instructions in a variety of environments. To address the grounding problem, Chen et al. (2021) propose NLMap-SayCan, a framework to gather an integrate contextual information into LM planners. NLMap uses a Visual Language Model (VLM) to create an open-vocabulary queryable scene representation before generating a context-conditioned plan. An alternative way of incorporating contextual information into the agent’s decisions is to utilize linguistic feedback from the environment such as success detection, object recognition, scene description, or human interaction (Huang et al., 2022b). This results in improved performance on robotic control tasks such as table top rearrangement and mobile manipulation in a real kitchen. Finally, RT-1 (Brohan et al., 2022) leverages large-scale, diverse, task-agnostic robotic datasets to learn a model that can follow over 700 natural language instructions, as well as generalize to new tasks, environments, and objects. RT-1 makes use of $D I A L$ (Xiao et al., 2022), an approach for automatically labeling robot demonstrations with linguistic labels via the vision-language alignment model CLIP (Radford et al., 2019).

控制物理机器人。Liang等(2022)通过少量示例提示,使用大语言模型根据自然语言指令编写机器人策略代码。通过结合经典逻辑结构和引用外部库(如算术运算),大语言模型可以创建展现空间几何推理、泛化新指令并为模糊描述提供精确值的策略。该方法在多个真实机器人平台上验证了有效性。大语言模型编码了关于世界的常识知识,有助于让机器人遵循自然语言表达的复杂高级指令。然而,它们缺乏情境基础,难以用于现实世界决策,因为无法判断特定情境下哪些动作可行。为解决这个问题,Ahn等(2022)提出先教会机器人若干低级技能(如"找海绵"、"拿起苹果"、"去厨房"),并学习预测它们在任意状态下的可行性。然后,大语言模型可将复杂高级指令分解为机器人技能库中的简单子目标,选择最有价值且可行的技能执行。这样机器人既能利用物理能力执行指令,又能获得大语言模型提供的任务语义知识。作者将这种方法命名为SayCan,在多种现实任务中测试发现其能成功完成不同环境下的长期抽象指令。针对基础问题,Chen等(2021)提出NLMap-SayCan框架,通过视觉语言模型(VLM)创建开放词汇可查询的场景表示,再生成情境条件规划。另一种融入情境信息的方法是利用环境语言反馈,如成功检测、物体识别、场景描述或人机交互(Huang等,2022b),从而提升桌面重排和真实厨房移动操作等机器人控制任务的性能。最后,RT-1(Brohan等,2022)利用大规模、多样化、任务无关的机器人数据集,学习可遵循700多条自然语言指令的模型,并能泛化到新任务、环境和物体。RT-1采用$DIAL$(Xiao等,2022)方法,通过视觉语言对齐模型CLIP(Radford等,2019)自动为机器人演示添加语言标签。

4 Learning to reason, use tools, and act

4 学习推理、使用工具和行动

The previous sections reviewed what LMs can be augmented with in order to endow them with reasoning and tools. We will now present approaches on how to teach them such abilities.

前文回顾了大语言模型可以通过哪些方式进行增强,使其具备推理和使用工具的能力。接下来我们将介绍如何教会它们这些能力的方法。

4.1 Supervision

4.1 监督

A straightforward way of teaching LMs both to reason and to act is by providing them with human-written demonstrations of the desired behaviours. Common ways of doing so are (i) via few-shot prompting as first suggested by Brown et al. (2020), where the LM is provided a few examples as additional context during inference, but no parameter updates are performed, or (ii) via regular gradient-based learning. Typically, supervised learning is done after an initial pre-training with a language modeling objective (Ouyang et al., 2022; Chung et al., 2022); an exception to this is recent work by Taylor et al. (2022), who propose to mix pre-training texts with human-annotated examples containing some form of explicit reasoning, marked with a special token. Some authors use supervised fine-tuning as an intermediate step, followed by reinforcement learning from human feedback (Nakano et al., 2021; Ouyang et al., 2022); see Section 4.2 for an in-depth discussion of such methods.

教导大语言模型 (LLM) 同时进行推理和行动的一种直接方法是提供人类编写的期望行为示范。常见的实现方式包括:(i) 采用 Brown 等人 (2020) 首次提出的少样本提示 (few-shot prompting),即在推理时为大语言模型提供少量示例作为额外上下文,但不进行参数更新;或 (ii) 通过常规的基于梯度的学习。通常,监督学习会在以语言建模为目标进行初始预训练之后进行 (Ouyang 等人, 2022; Chung 等人, 2022);Taylor 等人 (2022) 的最新研究是个例外,他们提出将预训练文本与包含某种形式显式推理的人类标注示例混合,并用特殊 token 标记。部分研究者将监督微调作为中间步骤,随后进行基于人类反馈的强化学习 (Nakano 等人, 2021; Ouyang 等人, 2022);关于此类方法的深入讨论请参见第 4.2 节。

Few-shot prompting. Providing LMs with a few human-written in-context demonstrations of a desired behaviour is a common approach both for teaching them to reason (Wei et al., 2022c;b; Suzgun et al., 2022; Press et al., 2022) and for teaching them to use tools and act (Gao et al., 2022; Lazaridou et al., 2022; Yao et al., 2022b). This is mainly due to its ease of use: few-shot prompting only requires a handful of manually labeled examples and enables very fast experimentation as no model fine-tuning is required; moreover, it enables reusing the very same model for different reasoning tasks and tools, just by changing the provided prompt (Brown et al., 2020; Wei et al., 2022c). On the other hand, the ability to perform reasoning with chain-of-thoughts from a few in-context examples only emerges as models reach a certain size (Wei et al., 2022b; Chung et al., 2022), and performance depends heavily on the format in which examples are presented (Jiang et al., 2020; Min et al., 2022), the choice of few-shot examples, and the order in which they are presented (Kumar and Talukdar, 2021; Lu et al., 2022; Zhou et al., 2022). Another issue is that the amount of supervision that can be provided is limited by the number of examples that fit into the LM’s context window; this is especially relevant if (i) a new behaviour is so difficult to learn that it requires more than a handful of examples, or (ii) we have a large space of possible actions that we want a model to learn. Beyond that, as no weight updates are performed, the LM’s reasoning and acting abilities are tied entirely to the provided prompt; removing it also removes these abilities.

少样本提示 (Few-shot prompting)。为大语言模型提供少量人工编写的上下文行为示范,是指导其进行推理 (Wei et al., 2022c;b; Suzgun et al., 2022; Press et al., 2022) 和工具使用与行动 (Gao et al., 2022; Lazaridou et al., 2022; Yao et al., 2022b) 的常用方法。这主要得益于其易用性:少样本提示仅需少量人工标注样本且无需模型微调即可快速实验;此外,通过更换提示词 (prompt) 就能让同一模型处理不同推理任务和工具 (Brown et al., 2020; Wei et al., 2022c)。但另一方面,模型必须达到特定规模才能通过少量上下文样本展现思维链推理能力 (Wei et al., 2022b; Chung et al., 2022),且性能高度依赖于示例呈现格式 (Jiang et al., 2020; Min et al., 2022)、少样本选择及其排列顺序 (Kumar and Talukdar, 2021; Lu et al., 2022; Zhou et al., 2022)。另一个限制是监督信号量受限于模型上下文窗口的容量,当出现以下情况时尤为明显:(i) 新行为过于复杂需要大量示例,或 (ii) 需要模型学习的可能动作空间过大。此外,由于不更新权重参数,模型的推理与行动能力完全依赖于提示词,移除提示词将导致能力消失。

Fine-tuning. As an alternative to few-shot prompting, the reasoning and acting abilities of a pre-trained LM can also be elicited by updating its parameters with standard supervised learning. This approach has been used both for teaching models to use tools, including search engines (Komeili et al., 2021; Shuster et al., 2022b), web browsers (Nakano et al., 2021), calculators and translation systems (Thoppilan et al., 2022), and for improving reasoning abilities (Chung et al., 2022). For the latter, examples of reasoning are typically used in the larger context of instruction tuning (Mishra et al., 2021; Sanh et al., 2022; Wang et al., 2022d; Ouyang et al., 2022), where, more generally, an LM’s ability to follow instructions is improved based on human-labeled examples. Examples are typically collected from crowd workers. In some cases, they can instead be obtained automatically: Nye et al. (2021) use execution traces as a form of supervision for reasoning, while Andor et al. (2019) use heuristics to collect supervised data for teaching a language model to use a calculator.

微调 (Fine-tuning)。作为少样本提示的替代方案,预训练大语言模型的推理和行为能力也可以通过标准监督学习更新其参数来激发。这种方法既被用于教导模型使用工具(包括搜索引擎 (Komeili et al., 2021; Shuster et al., 2022b)、网页浏览器 (Nakano et al., 2021)、计算器和翻译系统 (Thoppilan et al., 2022)),也被用于提升推理能力 (Chung et al., 2022)。对于后者,推理示例通常被用于更广泛的指令微调 (instruction tuning) 场景中 (Mishra et al., 2021; Sanh et al., 2022; Wang et al., 2022d; Ouyang et al., 2022),即通过人工标注的示例来提升大语言模型遵循指令的能力。这些示例通常来自众包工作者。在某些情况下,它们也可以通过自动方式获取:Nye et al. (2021) 使用执行轨迹作为推理的监督形式,而 Andor et al. (2019) 则使用启发式方法收集监督数据来教导语言模型使用计算器。

Prompt pre-training. A potential risk of finetuning after the pre-training phase is that the LM might deviate far from the original distribution and overfit the distribution of the examples provided during fine-tuning. To alleviate this issue, Taylor et al. (2022) propose to mix pre-training data with labeled demonstrations of reasoning, similar to how earlier work mixes pre-training data with examples from various downstream tasks (Raffel et al., 2020); however, the exact gains from this mixing, compared to having a separate fine-tuning stage, have not yet been empirically studied. With a similar goal in mind, Ouyang et al. (2022) and Iyer et al. (2022) include examples from pre-training during the fine-tuning stage.

提示预训练。在预训练阶段后进行微调的一个潜在风险是,语言模型可能严重偏离原始分布,并过度拟合微调期间提供的示例分布。为缓解这一问题,Taylor等人 (2022) 提出将预训练数据与带标注的推理示范混合,类似于早期工作将预训练数据与各种下游任务示例混合的方法 (Raffel等人, 2020) 。然而,与单独设置微调阶段相比,这种混合方式的确切收益尚未经过实证研究。出于类似目标,Ouyang等人 (2022) 和 Iyer等人 (2022) 在微调阶段加入了预训练阶段的示例。

Boots trapping. As an alternative to standard fine-tuning, several authors propose to use boots trapping techniques (e.g. Yarowsky, 1995; Brin, 1999) to leverage some form of indirect supervision. This typically works by prompting a LM to reason or act in a few-shot setup followed by a final predictio