The Hardware Lottery

硬件彩票

Sara Hooker

Sara Hooker

Google Research, Brain Team shooker@google.com

Google Research, Brain Team

shocker@google.com

Abstract

摘要

Hardware, systems and algorithms research communities have historically had different incentive structures and fluctuating motivation to engage with each other explicitly. This historical treatment is odd given that hardware and software have frequently determined which research ideas succeed (and fail). This essay introduces the term hardware lottery to describe when a research idea wins because it is suited to the available software and hardware and not because the idea is superior to alternative research directions. Examples from early computer science history illustrate how hardware lotteries can delay research progress by casting successful ideas as failures. These lessons are particularly salient given the advent of domain specialized hardware which make it increasingly costly to stray off of the beaten path of research ideas. This essay posits that the gains from progress in computing are likely to become even more uneven, with certain research directions moving into the fast-lane while progress on others is further obstructed.

硬件、系统和算法研究社区历来具有不同的激励机制,彼此间显性合作的动机也起伏不定。考虑到硬件和软件往往决定着研究理念的成败,这种历史性割裂显得尤为怪异。本文提出"硬件彩票 (hardware lottery) "这一术语,用于描述某些研究理念仅因适配现有软硬件环境(而非其本身优于其他研究方向)而胜出的现象。计算机早期发展史的案例表明,硬件彩票效应可能将本应成功的理念标记为失败,从而延缓研究进程。在领域专用硬件兴起的当下,偏离主流研究路径的成本日益高昂,这些历史教训显得尤为深刻。本文认为,计算技术的进步收益可能将愈发失衡——某些研究方向会进入快车道,而其他方向的进展则会遭遇更多阻碍。

1 Introduction

1 引言

History tells us that scientific progress is imperfect. Intellectual traditions and available tooling can prejudice scientists against certain ideas and towards others (Kuhn, 1962). This adds noise to the marketplace of ideas, and often means there is inertia in recognizing promising directions of research. In the field of artificial intelligence research, this essay posits that it is our tooling which has played a disproportionate role in deciding what ideas succeed (and which fail).

历史告诉我们,科学进步并非完美。思想传统和现有工具可能导致科学家对某些观点产生偏见 (Kuhn, 1962) 。这为思想市场增添了噪音,往往意味着人们在识别有前景的研究方向时存在惯性。在人工智能研究领域,本文认为正是我们的工具在决定哪些理念成功 (哪些失败) 的过程中发挥了过大的作用。

What follows is part position paper and part historical review. This essay introduces the term hardware lottery to describe when a research idea wins because it is compatible with available software and hardware and not because the idea is superior to alternative research directions. We argue that choices about software and hardware have often played a decisive role in deciding the winners and losers in early computer science history.

本文既是一篇立场声明,也是历史回顾。文章提出"硬件彩票 (hardware lottery)"这一术语,用于描述某项研究构想因其与现有软硬件兼容而胜出,而非因其优于其他研究方向的情况。我们认为在计算机科学早期历史中,软硬件选择往往对成败起着决定性作用。

These lessons are particularly salient as we move into a new era of closer collaboration between hardware, software and ma- chine learning research communities. After decades of treating hardware, software and algorithms as separate choices, the catalysts for closer collaboration include changing hardware economics (Hennessy, 2019), a “bigger is better” race in the size of deep learning architectures (Amodei et al., 2018; Thompson et al., 2020b) and the dizzying requirements of deploying machine learning to edge devices (Warden & Situnayake, 2019).

随着我们进入硬件、软件和机器学习研究社区更紧密协作的新时代,这些经验教训尤为重要。在将硬件、软件和算法视为独立选项数十年后,推动更紧密协作的因素包括硬件经济学的变革 (Hennessy, 2019)、深度学习架构规模"越大越好"的竞赛 (Amodei et al., 2018; Thompson et al., 2020b),以及将机器学习部署到边缘设备所需的惊人要求 (Warden & Situnayake, 2019)。

Closer collaboration has centered on a wave of new generation hardware that is "domain specific" to optimize for commercial use cases of deep neural networks (Jouppi et al., 2017; Gupta & Tan, 2019; ARM, 2020; Lee & Wang, 2018). While domain specialization creates important efficiency gains for mainstream research focused on deep neural networks, it arguably makes it more even more costly to stray off of the beaten path of research ideas. An increasingly fragmented hardware landscape means that the gains from progress in computing will be increasingly uneven. While deep neural networks have clear commercial use cases, there are early warning signs that the path to the next breakthrough in AI may require an entirely different combination of algorithm, hardware and software.

更紧密的合作聚焦于新一代"领域专用(domain specific)"硬件浪潮,旨在优化深度神经网络(deep neural networks)的商业应用场景 (Jouppi et al., 2017; Gupta & Tan, 2019; ARM, 2020; Lee & Wang, 2018)。虽然领域专用化为以深度神经网络为主流的研究带来了显著的效率提升,但这也使得偏离常规研究思路的代价变得更为高昂。日益碎片化的硬件格局意味着计算技术进步带来的收益将愈发不均衡。尽管深度神经网络具有明确的商业应用场景,但早期迹象表明,AI领域下一个重大突破可能需要算法、硬件和软件的完全重新组合。

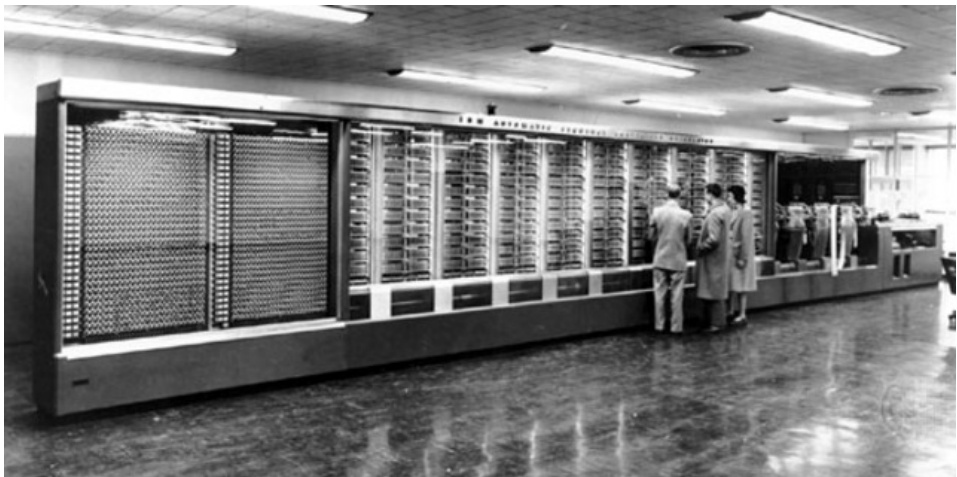

Figure 1: Early computers such as the Mark I were single use and were not expected to be repurposed. While Mark I could be programmed to compute different calculations, it was essentially a very powerful calculator and could not run the variety of programs that we expect of our modern day machines.

图 1: 早期计算机如Mark I是单一用途设备且不可重新配置。虽然Mark I可通过编程执行不同计算任务,但它本质上只是一台高性能计算器,无法运行现代计算机所支持的各种程序。

This essay begins by acknowledging a crucial paradox: machine learning researchers mostly ignore hardware despite the role it plays in determining what ideas succeed. In Section 2 we ask what has in centi viz ed the development of software, hardware and machine learning research in isolation? Section 3 considers the ramifications of this siloed evolution with examples of early hardware and software lotteries. Today the hardware landscape is increasingly heterogeneous. This essay posits that the hardware lottery has not gone away, and the gap between the winners and losers will grow increasingly larger. Sections 4-5 unpack these arguments and Section 6 concludes with some thoughts on what it will take to avoid future hardware lotteries.

本文开篇即指出了一个关键悖论:机器学习研究者大多忽视硬件,尽管硬件在决定哪些创意能成功方面起着关键作用。第2节探讨了为何软件、硬件和机器学习研究长期以来各自独立发展?第3节通过早期硬件与软件"彩票"案例,分析了这种割裂式发展带来的影响。当今硬件生态正日趋多元化,但本文认为硬件彩票现象并未消失,赢家与输家之间的差距反而会越拉越大。第4-5节详细阐述了这些观点,第6节总结时提出了如何避免未来硬件彩票的一些思考。

2 Separate Tribes

2 分离的部落

It is not a bad description of man to describe him as a tool making animal.

将人描述为制造工具的动物并不失为一个恰当的定义。

Charles Babbage, 1851

Charles Babbage, 1851

difference machine was intended solely to compute polynomial functions (1817)(Collier, 1991). Mark I was a programmable calculator (1944)(Isaacson, 2014). Rosenblatt’s perceptron machine computed a step-wise single layer network (1958)(Van Der Malsburg, 1986). Even the Jacquard loom, which is often thought of as one of the first programmable machines, in practice was so expensive to re-thread that it was typically threaded once to support a pre-fixed set of input fields (1804)(Posselt, 1888).

差分机仅用于计算多项式函数 (1817) (Collier, 1991)。Mark I 是可编程计算器 (1944) (Isaacson, 2014)。Rosenblatt 的感知机实现了阶跃式单层网络计算 (1958) (Van Der Malsburg, 1986)。即便是常被视为最早可编程机械之一的提花织机,在实际操作中因重新穿线成本过高,通常仅穿线一次以支持预设的输入字段组 (1804) (Posselt, 1888)。

For the creators of the first computers the program was the machine. Early machines were single use and were not expected to be re-purposed for a new task because of both the cost of the electronics and a lack of cross-purpose software. Charles Babbage’s

对于首批计算机的创造者而言,程序即机器。早期计算机功能单一,由于电子元件成本高昂且缺乏跨用途软件,人们并未期待它们能被重新用于新任务。Charles Babbage的

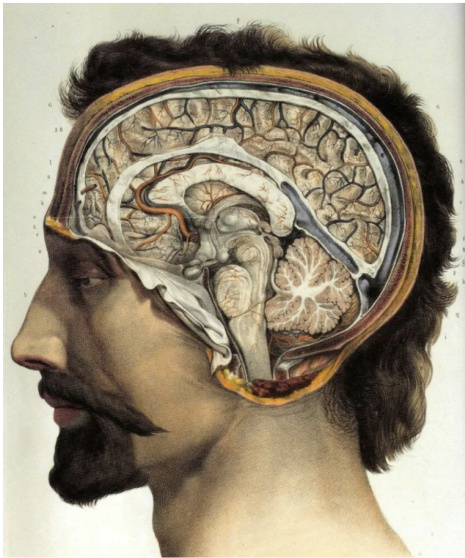

The specialization of these early computers was out of necessity and not because computer architects thought one-off customized hardware was intrinsically better. However, it is worth pointing out that our own intelligence is both algorithm and machine. We do not inhabit multiple brains over the course of our lifetime. Instead, the notion of human intelligence is intrinsically associated with the physical 1400g of brain tissue and the patterns of connectivity between an estimated 85 billion neurons in your head (Sainani, 2017). When we talk about human intelligence, the prototypical image that probably surfaces as you read this is of a pink ridged cartoon blob. It is impossible to think of our cognitive intelligence without summoning up an image of the hardware it runs on.

这些早期计算机的专门化是出于必要性,而非因为计算机架构师认为一次性定制硬件本质上更好。不过值得注意的是,我们自身的智能既是算法也是机器。我们一生中并不会使用多个大脑。相反,人类智能的概念本质上与1400克脑组织物质以及你头颅中约850亿神经元之间的连接模式相关联 (Sainani, 2017)。当我们谈论人类智能时,阅读至此浮现在你脑海中的典型形象很可能是一个粉色褶皱的卡通团块。若不想象其运行的硬件,我们便无法思考自身的认知智能。

Today, in contrast to the necessary specialization in the very early days of computing, machine learning researchers tend to think of hardware, software and algorithm as three separate choices. This is largely due to a period in computer science history that radically changed the type of hardware that was made and in centi viz ed hardware, software and machine learning research communities to evolve in isolation.

与计算机发展初期所需的专业化不同,如今的机器学习研究者通常将硬件、软件和算法视为三个独立的选择。这主要源于计算机科学史上的一段时期——当时硬件制造类型发生根本性变革,导致硬件、软件和机器学习研究社区在各自领域独立发展。

Figure 2: Our own cognitive intelligence is inextricably both hardware and algorithm. We do not inhabit multiple brains over our lifetime.

图 2: 我们自身的认知智能 (cognitive intelligence) 本质上是硬件与算法的不可分割体。终其一生,我们都不会更换多个大脑。

2.1 The General Purpose Era

2.1 通用计算时代

The general purpose computer era crystallized in 1969, when an opinion piece by a young engineer called Gordan Moore appeared in Electronics magazine with the apt title “Cramming more components onto circuit boards”(Moore, 1965). Moore predicted you could double the amount of transistors on an integrated circuit every two years. Originally, the article and subsequent followup was motivated by a simple desire – Moore thought it would sell more chips. However, the prediction held and motivated a remarkable decline in the cost of transforming energy into information over the next 50 years.

通用计算机时代在1969年成型,当时一位名叫Gordan Moore的年轻工程师在《电子学》杂志上发表了一篇观点文章,标题恰如其分地称为《在电路板上塞入更多元件》(Moore, 1965)。Moore预测集成电路上的晶体管数量每两年可以翻一番。最初,这篇文章及其后续跟进只是出于一个简单的愿望——Moore认为这会卖出更多芯片。然而,这一预测不仅成立,还推动了接下来50年间将能量转化为信息的成本显著下降。

Moore’s law combined with Dennard scaling (Dennard et al., 1974) enabled a factor of three magnitude increase in microp roc essor performance between 1980-2010 (CHM, 2020). The predictable increases in compute and memory every two years meant hardware design became risk-averse. Even for tasks which demanded higher performance, the benefits of moving to specialized hardware could be quickly eclipsed by the next generation of general purpose hardware with ever growing compute.

摩尔定律与登纳德缩放 (Dennard et al., 1974) 相结合,使得微处理器性能在1980-2010年间提升了三个数量级 (CHM, 2020)。每隔两年可预测的计算能力和内存增长,使得硬件设计趋于保守。即便对于需要更高性能的任务,转向专用硬件带来的优势也可能迅速被下一代计算能力持续增长的通用硬件所超越。

The emphasis shifted to universal processors which could solve a myriad of different tasks. Why experiment on more specialized hardware designs for an uncertain reward when Moore’s law allowed chip makers to lock in predictable profit margins? The few attempts to deviate and produce specialized supercomputers for research were financially unsustainable and short lived (Asanovic, 2018; Taubes, 1995). A few very narrow tasks like mastering chess were an exception to this rule because the prestige and visibility of beating a human adversary attracted corporate sponsorship (Moravec, 1998).

重点转向了能够解决无数不同任务的通用处理器。既然摩尔定律能让芯片制造商锁定可预测的利润空间,为何还要为不确定的回报尝试更专用的硬件设计?少数试图偏离该路线、为研究开发专用超级计算机的尝试在财务上难以为继且昙花一现 (Asanovic, 2018; Taubes, 1995)。像国际象棋这类极狭窄的任务是例外,因为击败人类对手带来的声望和关注度吸引了企业赞助 (Moravec, 1998)。

Treating the choice of hardware, software and algorithm as independent has persisted until recently. It is expensive to explore new types of hardware, both in terms of time and capital required. Producing a next generation chip typically costs $\$30–80$ million dollars and 2-3 years to develop (Feldman, 2019). These formidable barriers to entry have produced a hardware research culture that might feel odd or perhaps even slow to the average machine learning researcher. While the number of machine learning publications has grown exponentially in the last 30 years (Dean, 2020), the number of hardware publications have maintained a fairly even cadence (Singh et al., 2015). For a hardware company, leakage of intellectual property can make or break the survival of the firm. This has led to a much more closely guarded research culture.

直到最近,人们仍将硬件、软件和算法的选择视为相互独立。探索新型硬件在时间和资金成本上都十分昂贵。生产新一代芯片通常需要花费3000万至8000万美元,并耗时2-3年开发 (Feldman, 2019)。这些极高的准入门槛形成了与普通机器学习研究者认知迥异的硬件研究文化——甚至可能显得进展缓慢。虽然过去30年机器学习论文数量呈指数级增长 (Dean, 2020),但硬件领域的论文数量始终保持着平稳节奏 (Singh et al., 2015)。对硬件企业而言,知识产权泄露直接关乎企业存亡,这导致了更为严密的研究保密文化。

In the absence of any lever with which to influence hardware development, machine learning researchers rationally began to treat hardware as a sunk cost to work around rather than something fluid that could be shaped. However, just because we have abstracted away hardware does not mean it has ceased to exist. Early computer science history tells us there are many hardware lotteries where the choice of hardware and software has determined which ideas succeed (and which fail).

由于缺乏影响硬件开发的手段,机器学习研究者们理性地开始将硬件视为需要规避的沉没成本,而非可以灵活塑造的变量。然而,硬件被抽象化的事实并不意味着它已不复存在。早期计算机科学史告诉我们,硬件选择与软件决策共同决定了哪些创意能够胜出(哪些会失败),这种硬件彩票现象屡见不鲜。

3 The Hardware Lottery

3 硬件彩票

I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.

如果手头唯一的工具是锤子,看什么都像钉子。

The first sentence of Anna Karenina by Tolstoy reads “Happy families are all alike, every unhappy family is unhappy in it’s own way.”(Tolstoy & Bartlett, 2016). Tolstoy is saying that it takes many different things for a marriage to be happy – financial stability, chemistry, shared values, healthy offspring. However, it only takes one of these aspects to not be present for a family to be unhappy. This has been popularized as the Anna Karenina principle – “a deficiency in any one of a number of factors dooms an endeavor to failure.” (Moore, 2001).

托尔斯泰在《安娜·卡列尼娜》开篇写道:"幸福的家庭都是相似的,不幸的家庭各有各的不幸" (Tolstoy & Bartlett, 2016) 。他认为婚姻幸福需要诸多要素共同作用——经济稳定、情感共鸣、价值观契合、子女健康。但缺失其中任何一项,就足以导致家庭不幸。这一观点后来被归纳为安娜·卡列尼娜原则:"在多因素系统中,任一要素的缺失都将导致整体失败" (Moore, 2001) 。

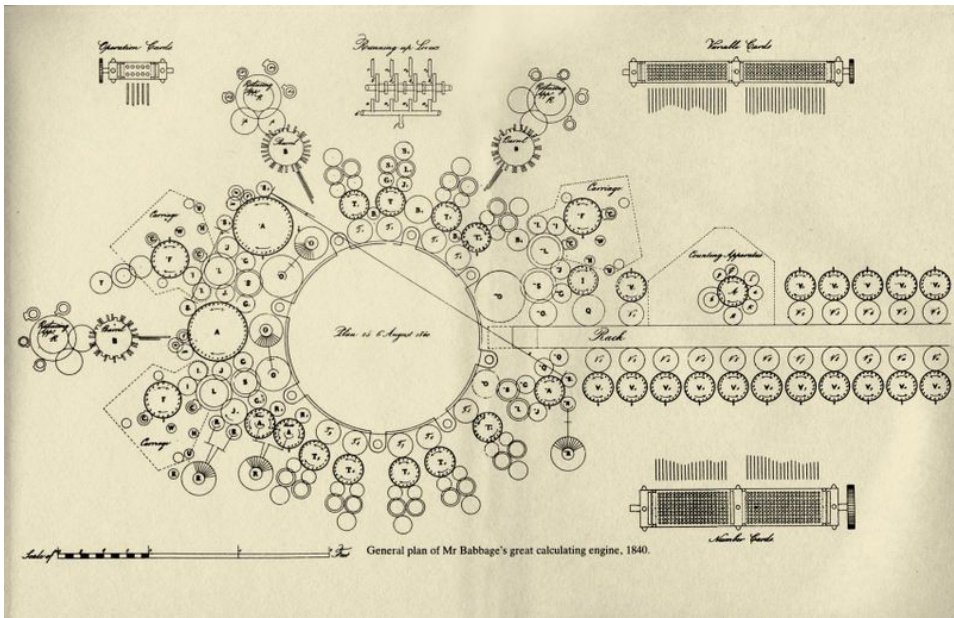

Figure 3: The analytical engine designed by Charles Babbage was never built in part because he had difficulty fabricating parts with the correct precision. This image depicts the general plan of the analytical machine in 1840.

图 3: Charles Babbage 设计的分析机最终未能建成,部分原因在于难以制造出精度达标的零件。该图展示了1840年分析机的整体设计方案。

Despite our preference to believe algorithms succeed or fail in isolation, history tells us that most computer science breakthroughs follow the Anna Karenina principle. Successful breakthroughs are often distinguished from failures by benefiting from multiple criteria aligning serendipitous ly. For AI research, this often depends upon winning what this essay terms the hardware lottery — avoiding possible points of failure in downstream hardware and software choices.

尽管我们更愿意相信算法是独立成功或失败的,但历史告诉我们,大多数计算机科学突破都遵循安娜·卡列尼娜原则。成功的突破往往与失败的区别在于,它们受益于多个标准偶然达成一致。对于AI研究来说,这通常取决于赢得本文所称的硬件彩票——避免在下游硬件和软件选择中可能出现的问题点。

An early example of a hardware lottery is the analytical machine (1837). Charles Babbage was a computer pioneer who designed a machine that (at least in theory) could be programmed to solve any type of computation. His analytical engine was never built in part because he had difficulty fabricating parts with the correct precision (Kurzweil, 1990). The electromagnetic technology to actually build the theoretical foundations laid down by Babbage only surfaced during WWII. In the first part of the 20th century, electronic vacuum tubes were heavily used for radio communication and radar. During WWII, these vacuum tubes were re-purposed to provide the compute power necessary to break the German enigma code (Project, 2018).

硬件抽奖的一个早期例子是分析机(1837)。Charles Babbage是一位计算机先驱,他设计的机器(至少在理论上)可以通过编程解决任何类型的计算问题。他的分析机最终未能建成,部分原因是难以制造出精度符合要求的零件(Kurzweil, 1990)。直到二战期间,才出现能够实现Babbage理论基础的电磁技术。20世纪上半叶,电子真空管被广泛用于无线电通信和雷达。二战期间,这些真空管被重新用于提供破解德国Enigma密码所需的计算能力(Project, 2018)。

As noted in the TV show Silicon Valley, often “being too early is the same as being wrong.” When Babbage passed away in 1871, there was no continuous path between his ideas and modern day computing. The concept of a stored program, modifiable code, memory and conditional branching were rediscovered a century later because the right tools existed to empirically show that the idea worked.

正如电视剧《硅谷》中所言,"太早往往等同于错误"。当Babbage于1871年去世时,他的构想与现代计算技术之间并未形成连贯的发展路径。存储程序、可修改代码、内存和条件分支等概念在一个世纪后才被重新发现,因为那时已具备通过实证验证这些构想可行性的技术条件。

3.1 The Lost Decades

3.1 失去的十年

Perhaps the most salient example of the damage caused by not winning the hardware lottery is the delayed recognition of deep neural networks as a promising direction of research. Most of the algorithmic components to make deep neural networks work had already been in place for a few decades: back prop agation (invented in 1963 (K & Piske, 1963), reinvented in 1976 (Linnainmaa, 1976), and again in 1988 (Rumelhart et al., 1988)), deep convolutional neural networks ((Fukushima & Miyake, 1982), paired with back prop agation in 1989 (LeCun et al., 1989)). However, it was only three decades later that deep neural networks were widely accepted as a promising research direction.

未能赢得硬件彩票造成损害的最显著例子,就是深度神经网络作为有前景的研究方向迟迟未获认可。使深度神经网络发挥作用的大多数算法组件其实早已存在数十年:反向传播 (1963年由K & Piske提出 [20],1976年由Linnainmaa重新发现 [21],1988年再由Rumelhart等人再次提出 [22]) ,深度卷积神经网络 (1982年由Fukushima & Miyake提出 [23],1989年与反向传播结合由LeCun等人实现 [24]) 。然而直到三十年后,深度神经网络才被广泛认可为具有前景的研究方向。

This gap between algorithmic advances and empirical success is in large part due to incompatible hardware. During the general purpose computing era, hardware like CPUs were heavily favored and widely available. CPUs are very good at executing any set of complex instructions but incur high memory costs because of the need to cache intermediate results and process one instruction at a time (Sato, 2018). This is known as the von Neumann Bottleneck — the available compute is restricted by “the lone channel between the CPU and memory along which data has to travel sequentially” (Time, 1985).

算法进步与实证成功之间的差距很大程度上源于硬件的不兼容性。在通用计算时代,CPU等硬件备受青睐且广泛普及。CPU擅长执行任意复杂指令集,但由于需要缓存中间结果且每次仅处理一条指令 (Sato, 2018) ,导致内存成本高昂。这种现象被称为冯·诺依曼瓶颈 (von Neumann Bottleneck) ——计算能力受限于"CPU与内存之间单一的数据顺序传输通道" (Time, 1985) 。

The von Neumann bottleneck was terribly illsuited to matrix multiplies, a core component of deep neural network architectures. Thus, training on CPUs quickly exhausted memory bandwidth and it simply wasn’t possible to train deep neural networks with multiple layers. The need for hardware that supported tasks with lots of parallelism was pointed out as far back as the early 1980s in a series of essays titled “Parallel Models of Associative Memory” (Hinton & Anderson, 1989). The essays argued persuasively that biological evidence suggested massive parallelism was needed to make deep neural network approaches work (Rumelhart et al., 1986).

冯·诺依曼瓶颈极不适合矩阵乘法这一深度神经网络架构的核心组件。因此,在CPU上进行训练会迅速耗尽内存带宽,根本无法训练具有多层的深度神经网络。早在20世纪80年代初,一系列题为《关联记忆的并行模型》(Hinton & Anderson, 1989) 的论文就指出,需要硬件来支持高度并行化的任务。这些论文有力地论证了生物学证据表明,要使深度神经网络方法奏效,需要大规模并行处理 (Rumelhart et al., 1986)。

In the late 1980/90s, the idea of specialized hardware for neural networks had passed the novelty stage (Misra & Saha, 2010; Lindsey & Lindblad, 1994; Dean, 1990). How- ever, efforts remained fractured by lack of shared software and the cost of hardware development. Most of the attempts that were actually operational i zed like the Connection Machine in 1985 (Taubes, 1995), Space in 1992 (Howe & Asanović, 1994), Ring Array Processor in 1989 (Morgan et al., 1992) and the Japanese 5th generation computer project (Morgan, 1983) were designed to favor logic programming such as PROLOG and LISP that were poorly suited to connectionist deep neural networks. Later iterations such as HipNet-1 (Kingsbury et al., 1998), and the Analog Neural Network Chip in 1991 (Sackinger et al., 1992) were promising but short lived because of the intolerable cost of iteration and the need for custom silicon. Without a consumer market, there was simply not the critical mass of end users to be financially viable.

20世纪80年代末至90年代,专用神经网络硬件的概念已度过萌芽阶段 (Misra & Saha, 2010; Lindsey & Lindblad, 1994; Dean, 1990) 。然而,由于缺乏共享软件和硬件开发成本高昂,相关研究始终处于碎片化状态。1985年的Connection Machine (Taubes, 1995) 、1992年的Space (Howe & Asanović, 1994) 、1989年的Ring Array Processor (Morgan et al., 1992) 以及日本第五代计算机计划 (Morgan, 1983) 等实际落地的尝试,都偏向于适合逻辑编程(如PROLOG和LISP)的设计,与连接主义的深度神经网络适配性不佳。后续迭代产品如HipNet-1 (Kingsbury et al., 1998) 和1991年的模拟神经网络芯片 (Sackinger et al., 1992) 虽具潜力,却因难以承受的迭代成本和定制硅片需求而昙花一现。缺乏消费级市场支撑,终端用户规模始终无法达到经济可持续的临界点。

Figure 4: The connection machine was one of the few examples of hardware that deviated from general purpose cpus in the $1980\mathrm{s}/90\mathrm{s}$ . Thinking Machines ultimately went bankrupt after the inital funding from DARPA dried up.

图 4: Connection Machine 是 20 世纪 80/90 年代少数偏离通用 CPU 的硬件范例之一。在 DARPA 初始资金耗尽后,Thinking Machines 公司最终破产。

It would take a hardware fluke in the early 2000s, a full four decades after the first paper about back propagation was published, for the insight about massive parallelism to be operational i zed in a useful way for connectionist deep neural networks. Many inventions are re-purposed for means unintended by their designers. Edison’s phonograph was never intended to play music. He envisioned it as preserving the last words of dying people or teaching spelling. In fact, he was disappointed by its use playing popular music as he thought this was too “base” an application of his invention (Diamond et al., 1999). In a similar vein, deep neural networks only began to work when an existing technology was unexpectedly re-purposed.

直到21世纪初,距离首篇关于反向传播的论文发表已过去整整四十年,一次硬件上的偶然突破才使得大规模并行化的洞见得以有效应用于连接主义深度神经网络。许多发明最终用途往往偏离设计者初衷——爱迪生发明留声机本非为播放音乐,而是设想用于保存临终遗言或辅助拼写教学。事实上,他对该设备被用于播放流行音乐深感失望,认为这是对其发明过于"低俗"的运用 (Diamond et al., 1999) 。同样地,深度神经网络正是在现有技术意外获得新用途时才真正开始发挥作用。

A graphical processing unit (GPU) was originally introduced in the 1970s as a specialized accelerator for video games and for developing graphics for movies and animation. In the 2000s, like Edison’s phonograph, GPUs were re-purposed for an entirely unimagined use case – to train deep neural networks (Chell a pill a et al., 2006; Oh & Jung, 2004; Claudiu Ciresan et al., 2010; Fata- halian et al., 2004; Payne et al., 2005). GPUs had one critical advantage over CPUs - they were far better at parallel i zing a set of simple de com pos able instructions such as matrix multiples (Brodtkorb et al., 2013; Dettmers, 2020). This higher number of floating operation points per second (FLOPS) combined with clever distribution of training between GPUs unblocked the training of deeper networks. The number of layers in a network turned out to be the key. Performance on ImageNet jumped with ever deeper networks in 2011 (Ciresan et al., 2011), 2012 (Krizhevsky et al., 2012) and 2015 (Szegedy et al., 2015b). A striking example of this jump in efficiency is a comparison of the now famous 2012 Google paper which used 16,000 CPU cores to classify cats (Le et al., 2012) to a paper published a mere year later that solved the same task with only two CPU cores and four GPUs (Coates et al., 2013).

图形处理器 (GPU) 最初于20世纪70年代作为电子游戏和影视动画图形开发的专用加速器问世。进入21世纪后,如同爱迪生的留声机被重新发明用途一样,GPU被赋予了完全意想不到的新使命——训练深度神经网络 (Chellapilla et al., 2006; Oh & Jung, 2004; Claudiu Ciresan et al., 2010; Fatahalian et al., 2004; Payne et al., 2005)。相较于CPU,GPU具有一个关键优势:更擅长并行处理可分解的简单指令集,例如矩阵乘法运算 (Brodtkorb et al., 2013; Dettmers, 2020)。这种更高的每秒浮点运算次数 (FLOPS) 结合GPU间智能化的训练任务分配,突破了深层网络的训练瓶颈。网络层数成为关键因素——2011年 (Ciresan et al., 2011)、2012年 (Krizhevsky et al., 2012) 和2015年 (Szegedy et al., 2015b) 的ImageNet竞赛中,随着网络深度增加,模型性能显著提升。最具说服力的对比案例是:2012年谷歌那篇著名论文使用16,000个CPU核心实现猫图像分类 (Le et al., 2012),而仅仅一年后发表的论文仅用2个CPU核心和4块GPU就完成了相同任务 (Coates et al., 2013)。

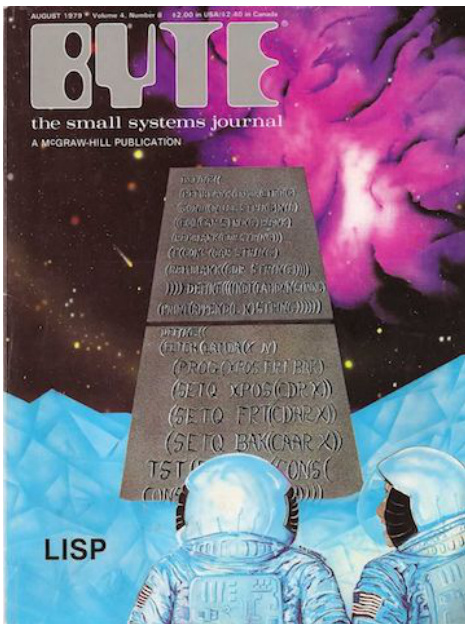

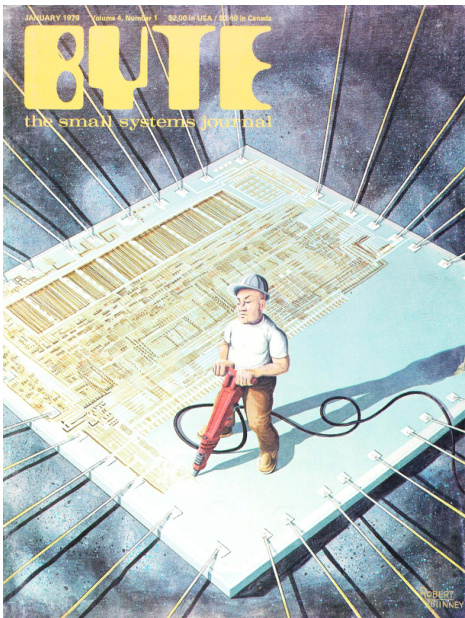

Figure 5: Byte magazine cover, August 1979, volume 4. LISP was the dominant language for artificial intelligence research through the 1990’s. LISP was particularly well suited to handling logic expressions, which were a core component of reasoning and expert systems.

图 5: Byte杂志封面,1979年8月第4卷。LISP是20世纪90年代人工智能研究的主导语言,特别适合处理逻辑表达式这一推理和专家系统的核心组件。

3.2 Software Lotteries

3.2 软件抽奖

Software also plays a role in deciding which research ideas win and lose. Prolog and LISP were two languages heavily favored until the mid-90’s in the AI community. For most of this period, students of AI were expected to actively master one or both of these languages (Lucas & van der Gaag, 1991). LISP and Prolog were particularly well suited to handling logic expressions, which were a core component of reasoning and expert systems.

软件也在决定哪些研究理念成败中扮演着重要角色。Prolog和LISP这两种语言直到90年代中期仍在AI领域备受青睐。在此期间,AI领域的学生通常被要求熟练掌握其中一种或两种语言 (Lucas & van der Gaag, 1991)。LISP和Prolog特别适合处理逻辑表达式,而逻辑表达式正是推理系统和专家系统的核心组成部分。

For researchers who wanted to work on connectionist ideas like deep neural networks there was not a clearly suited language of choice until the emergence of Matlab in 1992 (Demuth & Beale, 1993). Implementing connectionist networks in LISP or Prolog was cumbersome and most researchers worked in low level languages like $\mathrm{c}{++}$ (Touretzky & Waibel, 1995). It was only in the 2000’s that there started to be a more healthy ecosystem around software developed for deep neural network approaches with the emergence of LUSH (Lecun & Bottou, 2002) and subsequently TORCH (Collobert et al., 2002).

对于想要研究深度神经网络等连接主义思想的研究者而言,在1992年Matlab出现之前 (Demuth & Beale, 1993),并没有明确合适的编程语言选择。使用LISP或Prolog实现连接主义网络十分繁琐,大多数研究者都采用$\mathrm{c}{++}$等底层语言进行开发 (Touretzky & Waibel, 1995)。直到2000年代,随着LUSH (Lecun & Bottou, 2002) 和后续TORCH (Collobert et al., 2002) 的出现,才逐渐形成了围绕深度神经网络方法的软件开发生态系统。

Where there is a loser, there is also a winner. From the 1960s through the mid 80s, most mainstream research was focused on symbolic approaches to AI (Haugeland, 1985). Unlike deep neural networks where learning an adequate representation is delegated to the model itself, symbolic approaches aimed to build up a knowledge base and use decision rules to replicate how humans would approach a problem. This was often codified as a sequence of logic what-if statements that were well suited to LISP and PROLOG. The widespread and sustained popularity of symbolic approaches to AI cannot easily be seen as independent of how readily it fit into existing programming and hardware frameworks.

有输家就有赢家。从20世纪60年代到80年代中期,主流AI研究主要聚焦于符号主义方法 (Haugeland, 1985) 。与将表征学习任务交给模型本身的深度神经网络不同,符号主义方法旨在构建知识库并运用决策规则来复现人类解决问题的方式。这种方法通常被编码为一系列逻辑假设语句,非常适配LISP和PROLOG语言。符号主义方法在AI领域长期保持的广泛流行性,与其对现有编程及硬件框架的良好适配性密不可分。

4 The Persistence of the Hardware Lottery

4 硬件彩票的持久性

Today, there is renewed interest in joint collaboration between hardware, software and machine learning communities. We are experiencing a second pendulum swing back to specialized hardware. The catalysts include changing hardware economics prompted by the end of Moore’s law and the breakdown of Dennard scaling (Hennessy, 2019), a “bigger is better” race in the number of model parameters that has gripped the field of machine learning (Amodei et al., 2018), spiralling energy costs (Horowitz, 2014; Strubell et al., 2019) and the dizzying requirements of deploying machine learning to edge devices (Warden & Situnayake, 2019).

如今,硬件、软件和机器学习领域对协同合作的兴趣再度兴起。我们正经历着第二次向专用硬件回摆的浪潮。这一趋势的催化剂包括:摩尔定律终结和Dennard缩放失效引发的硬件经济学变革 (Hennessy, 2019) 、机器学习领域愈演愈烈的"参数规模竞赛" (Amodei et al., 2018) 、不断攀升的能源成本 (Horowitz, 2014; Strubell et al., 2019) ,以及边缘设备部署机器学习时令人眩晕的严苛要求 (Warden & Situnayake, 2019) 。

The end of Moore’s law means we are not guaranteed more compute, hardware will have to earn it. To improve efficiency, there is a shift from task agnostic hardware like CPUs to domain specialized hardware that tailor the design to make certain tasks more efficient. The first examples of domain specialized hardware released over the last few years – TPUs (Jouppi et al., 2017), edgeTPUs (Gupta & Tan, 2019), Arm Cortex- M55 (ARM, 2020), Facebook’s big sur (Lee & Wang, 2018) – optimize explicitly for costly operations common to deep neural networks like matrix multiplies.

摩尔定律的终结意味着我们无法再获得免费的计算力提升,硬件必须通过创新来赢得性能增长。为提高效率,行业正从通用型CPU硬件转向针对特定领域优化的专用硬件设计。过去几年发布的首批领域专用硬件实例——TPU (Jouppi等人, 2017)、edgeTPU (Gupta & Tan, 2019)、Arm Cortex-M55 (ARM, 2020)、Facebook的big sur (Lee & Wang, 2018)——都明确针对深度神经网络中耗时的矩阵乘法等常见运算进行了优化。

Closer collaboration between hardware and research communities will undoubtedly continue to make the training and deployment of deep neural networks more efficient. For example, unstructured pruning (Hooker et al., 2019; Gale et al., 2019; Evci et al., 2019) and weight specific quantization (Zhen et al., 2019) are very successful compression techniques in deep neural networks but are incompatible with current hardware and compilation kernels.

硬件与研究社区之间更紧密的合作无疑将继续提升深度神经网络的训练和部署效率。例如,非结构化剪枝 (Hooker et al., 2019; Gale et al., 2019; Evci et al., 2019) 和权重特定量化 (Zhen et al., 2019) 是深度神经网络中非常成功的压缩技术,但与当前硬件和编译内核不兼容。

While these compression techniques are currently not supported, many clever hardware architects are currently thinking about how to solve for this. It is a reasonable prediction that the next few generations of chips or specialized kernels will correct for hardware biases against these techniques (Wang et al., 2018; Sun et al., 2020). Some of the first de- signs to facilitate sparsity have already hit the market (Krashinsky et al., 2020). In parallel, there is interesting research developing specialized software kernels to support unstructured sparsity (Elsen et al., 2020; Gale et al., 2020; Gray et al., 2017).

虽然目前不支持这些压缩技术,但许多聪明的硬件架构师正在思考如何解决这个问题。可以合理预测,未来几代芯片或专用内核将修正对这些技术的硬件偏见 (Wang et al., 2018; Sun et al., 2020)。首批支持稀疏性的设计已经上市 (Krashinsky et al., 2020)。与此同时,有研究正在开发专用软件内核以支持非结构化稀疏性 (Elsen et al., 2020; Gale et al., 2020; Gray et al., 2017)。

In many ways, hardware is catching up to the present state of machine learning research. Hardware is only economically viable if the lifetime of the use case lasts more than three years (Dean, 2020). Betting on ideas which have longevity is a key consideration for hardware developers. Thus, co-design effort has focused almost entirely on optimizing an older generation of models with known commercial use cases. For example, matrix multiplies are a safe target to optimize for because they are here to stay — anchored by the widespread use and adoption of deep neural networks in production systems. Allowing for unstructured sparsity and weight specific quantization are also safe targets because there is wide consensus that these will enable higher levels of compression.

从许多方面来看,硬件正在追赶机器学习研究的现状。硬件只有在用例生命周期超过三年时才具有经济可行性 (Dean, 2020)。押注具有持久性的理念是硬件开发者的关键考量。因此,协同设计工作几乎完全集中在优化具有已知商业用例的旧一代模型上。例如,矩阵乘法是安全的优化目标,因为它们会长期存在——这得益于深度神经网络在生产系统中的广泛使用和采用。允许非结构化稀疏性和权重特定量化也是安全的目标,因为业界普遍认为这些技术能实现更高水平的压缩。

There is still a separate question of whether hardware innovation is versatile enough to unlock or keep pace with entirely new machine learning research directions. It is difficult to answer this question because data points here are limited – it is hard to model the counter factual of would this idea succeed given different hardware. However, despite the inherent challenge of this task, there is already compelling evidence that domain specialized hardware makes it more costly for research ideas that stray outside of the mainstream to succeed.

硬件创新是否足够通用以解锁或跟上全新的机器学习研究方向,这仍是一个独立的问题。由于相关数据点有限,很难回答这个问题——在假设硬件不同的情况下某项研究构想能否成功,这种反事实情境难以建模。然而,尽管这项任务存在固有挑战,已有有力证据表明,领域专用硬件会显著提高偏离主流研究方向的研究构想获得成功的成本。

In 2019, a paper was published called “Machine learning is stuck in a rut.” (Barham & Isard, 2019). The authors consider the difficulty of training a new type of computer vision architecture called capsule networks (Sabour et al., 2017) on domain specialized hardware. Capsule networks include novel components like squashing operations and routing by agreement. These architecture choices aimed to solve for key deficiencies in convolutional neural networks (lack of rotational invariance and spatial hierarchy understanding) but strayed from the typical architecture of neural networks. As a result, while capsule networks operations can be implemented reasonably well on CPUs, performance falls off a cliff on accelerators like GPUs and TPUs which have been overly optimized for matrix multiplies.

2019年发表了一篇题为《机器学习陷入窠臼》的论文 (Barham & Isard, 2019)。作者探讨了在领域专用硬件上训练一种新型计算机视觉架构——胶囊网络 (Sabour et al., 2017) 的困难。胶囊网络包含压缩操作 (squashing operations) 和协议路由 (routing by agreement) 等新颖组件,这些架构选择旨在解决卷积神经网络的关键缺陷 (缺乏旋转不变性和空间层级理解能力),但偏离了神经网络的典型架构。因此,虽然胶囊网络在CPU上可以较好地运行,但在为矩阵乘法过度优化的GPU和TPU等加速器上性能会急剧下降。

Whether or not you agree that capsule networks are the future of computer vision, the authors say something interesting about the difficulty of trying to train a new type of image classification architecture on domain specialized hardware. Hardware design has prioritized delivering on commercial use cases, while built-in flexibility to accommodate the next generation of research ideas remains a distant secondary consideration.

无论你是否认同胶囊网络是计算机视觉的未来,作者们提出了一个有趣的观点:在领域专用硬件上训练新型图像分类架构存在困难。硬件设计优先考虑满足商业用例需求,而对下一代研究想法的内置灵活性支持仍是次要考量。

While specialization makes deep neural networks more efficient, it also makes it far more costly to stray from accepted building blocks. It prompts the question of how much researchers will implicitly overfit to ideas that operational ize well on available hardware rather than take a risk on ideas that are not currently feasible? What are the failures we still don’t have the hardware and software to see as a success?

虽然专业化使深度神经网络更高效,但也大幅提高了偏离现有构建模块的成本。这引发了一个问题:研究者会在多大程度上隐性过拟合那些在当前硬件上运行良好的想法,而非冒险尝试目前不可行的方案?我们仍缺乏哪些硬件和软件能力,导致某些失败无法被视为潜在的成功?

5 The Likelyhood of Future Hardware Lotteries

5 未来硬件抽奖的可能性

What we have before us are some breathtaking opportunities disguised as insoluble problems.

摆在我们面前的,是一些看似无解的难题中蕴藏的绝佳机遇。

John Gardner, 1965.

John Gardner, 1965.

It is an ongoing, open debate within the machine learning community about how much future algorithms will differ from models like deep neural networks (Sutton, 2019; Welling, 2019). The risk you attach to depending on domain specialized hardware is tied to your position on this debate. Betting heavily on specialized hardware makes sense if you think that future breakthroughs depend upon pairing deep neural networks with ever increasing amounts of data and computation.

机器学习领域持续存在一个开放性的争论:未来的算法与深度神经网络 (deep neural networks) 等模型会有多大差异 (Sutton, 2019; Welling, 2019)。你对领域专用硬件依赖风险的评估,取决于你在这场争论中的立场。如果你认为未来的突破取决于将深度神经网络与持续增长的数据和计算量相结合,那么大力押注专用硬件是合理的。

Several major research labs are making this bet, engaging in a “bigger is better” race in the number of model parameters and collecting ever more expansive datasets. However, it is unclear whether this is sustainable. An algorithms s cal ability is often thought of as the performance gradient relative to the available resources. Given more resources, how does performance increase?

多家顶尖研究实验室正押注于此,在模型参数量上展开"越大越好"的竞赛,并收集日益庞大的数据集。然而,这种模式是否可持续尚不明确。算法扩展性通常被视为性能与可用资源之间的梯度关系——当资源增加时,性能会如何提升?

For many subfields, we are now in a regime where the rate of return for additional parameters is decreasing (Thompson et al., 2020a; Brown et al., 2020). For example, while the parameters almost double between Inception V3 (Szegedy et al., 2016)and Inception V4 architectures (Szegedy et al., 2015a) (from 21.8 to 41.1 million parameters), accuracy on ImageNet differs by less than $2%$ between the two networks (78.8 vs $80%$ ) (Kornblith et al., 2018). The cost of throwing additional parameters at a problem is becoming painfully obvious. The training of GPT-3 alone is estimated to exceed $\$12$ million dollars (Wiggers, 2020).

在许多子领域,我们正面临参数边际收益递减的局面 (Thompson et al., 2020a; Brown et al., 2020) 。例如,Inception V3 (Szegedy et al., 2016) 与 Inception V4 (Szegedy et al., 2015a) 架构的参数规模几乎翻倍(从2180万增至4110万),但两者在ImageNet上的准确率差异不足 $2%$ (78.8%对比 $80%$ )(Kornblith et al., 2018) 。盲目增加参数带来的成本问题日益凸显,仅GPT-3的训练费用就预估超过 $\$1200$ 万美元 (Wiggers, 2020) 。

Perhaps more troubling is how far away we are from the type of intelligence humans demonstrate. Human brains despite their complexity remain extremely energy efficient. Our brain has over 85 billion neurons but runs on the energy equivalent of an electric shaver (Sainani, 2017). While deep neural networks may be scalable, it may be prohibitively expensive to do so in a regime of comparable intelligence to humans. An apt metaphor is that we appear to be trying to build a ladder to the moon.

更令人担忧的是,我们距离人类所展现的智能水平还有多远。尽管人脑结构复杂,但其能效却极高。我们的大脑拥有超过850亿个神经元,但运行所需的能量仅相当于一把电动剃须刀 (Sainani, 2017)。虽然深度神经网络可能具备可扩展性,但要达到与人类相当的智能水平,其成本可能高得令人望而却步。一个贴切的比喻是:我们似乎正在试图建造一架通往月球的梯子。

Biological examples of intelligence differ from deep neural networks in enough ways to suggest it is a risky bet to say that deep neural networks are the only way forward. While algorithms like deep neural networks rely on global updates in order to learn a useful represent ation, our brains do not. Our own intelligence relies on decentralized local updates which surface a global signal in ways that are still not well understood (Lillicrap & Santoro, 2019; Marble stone et al., 2016; Bi & Poo, 1998).

生物智能的实例与深度神经网络存在诸多差异,这表明断言深度神经网络是唯一发展路径存在风险。深度神经网络等算法依赖全局更新来学习有效表征,而人类大脑并非如此。我们的智能基于分散式局部更新,这些更新以尚未完全理解的方式涌现出全局信号 (Lillicrap & Santoro, 2019; Marblestone et al., 2016; Bi & Poo, 1998)。

In addition, our brains are able to learn efficient representations from far fewer labelled examples than deep neural networks (Zador, 2019). For typical deep learning models the entire model is activated for every example which leads to a quadratic blow-up in training cost. In contrast, evidence suggests that the brain does not perform a full forward and backward pass for all inputs. Instead, the brain simulates what inputs are expected against incoming sensory data. Based upon the certainty of the match, the brain simply infills. What we see is largely virtual reality computed from memory (Eagleman & Sejnowski, 2000; Bubic et al., 2010; Heeger, 2017).

此外,我们的大脑能够从比深度神经网络少得多的标注样本中学习高效表征 (Zador, 2019)。对于典型的深度学习模型,每个样本都会激活整个模型,导致训练成本呈二次方增长。相比之下,有证据表明大脑不会对所有输入执行完整的前向和反向传播。相反,大脑会模拟预期输入与传入感官数据的匹配程度。根据匹配的确定性,大脑直接进行信息补全。我们所看到的主要是由记忆计算出的虚拟现实 (Eagleman & Sejnowski, 2000; Bubic et al., 2010; Heeger, 2017)。

Figure 6: Human latency for certain tasks suggests we have specialized pathways for different stimuli. For example, it is easy for a human to walk and talk at the same time. However, it is far more cognitive ly taxing to attempt to read and talk.

图 6: 人类在特定任务中的延迟表明我们针对不同刺激存在专门的处理通路。例如,人类可以轻松地边走路边说话,但试图同时阅读和交谈则需要消耗更多的认知资源。

Humans have highly optimized and specific pathways developed in our biological hardware for different tasks (Von Neumann et al., 2000; Marcus et al., 2014; Kennedy, 2000). For example, it is easy for a human to walk and talk at the same time. However, it is far more cognitive ly taxing to attempt to read and talk (Stroop, 1935). This suggests the way a network is organized and our inductive biases is as important as the overall size of the network (Herculano-Houzel et al., 2014; Battaglia et al., 2018; Spelke & Kin- zler, 2007). Our brains are able to fine-tune and retain skills across our lifetime (Benna & Fusi, 2016; Bremner et al., 2013; Stein et al., 2004; Tani & Press, 2016; Gallistel & King, 2009; Tulving, 2002; Barnett & Ceci, 2002). In contrast, deep neural networks that are trained upon new data often evidence catastrophic forgetting, where performance deteriorates on the original task because the new information interferes with previously learned behavior (Mcclelland et al., 1995; McCloskey & Cohen, 1989; Parisi et al., 2018).

人类针对不同任务在生物硬件中进化出了高度优化且特定的处理通路 (Von Neumann et al., 2000; Marcus et al., 2014; Kennedy, 2000)。例如,人类可以轻松地边走路边说话,但边阅读边说话则需要耗费更多认知资源 (Stroop, 1935)。这表明网络的组织方式与归纳偏置 (inductive biases) 和网络规模同等重要 (Herculano-Houzel et al., 2014; Battaglia et al., 2018; Spelke & Kinzler, 2007)。人脑能够在一生中持续微调并保持各项技能 (Benna & Fusi, 2016; Bremner et al., 2013; Stein et al., 2004; Tani & Press, 2016; Gallistel & King, 2009; Tulving, 2002; Barnett & Ceci, 2002)。相比之下,基于新数据训练的深度神经网络常出现灾难性遗忘现象——由于新信息干扰已习得行为,导致模型在原任务上的性能急剧下降 (Mcclelland et al., 1995; McCloskey & Cohen, 1989; Parisi et al., 2018)。

The point of these examples is not to convince you that deep neural networks are not the way forward. But, rather that there are clearly other models of intelligence which suggest it may not be the only way. It is possible that the next breakthrough will require a fundamentally different way of modelling the world with a different combination of hardware, software and algorithm. We may very well be in the midst of a present day hardware lottery.

这些例子的目的并非要说服你深度神经网络不是未来的方向。而是想说明,显然还存在其他智能模型,表明它可能并非唯一途径。下一次突破很可能需要一种结合不同硬件、软件和算法的、根本性差异的世界建模方式。我们很可能正身处一场当代的硬件彩票中。

6 The Way Forward

6 前进之路

Any machine coding system should be judged quite largely from the point of view of how easy it is for the operator to obtain results.

任何机器编码系统都应主要从操作员获取结果的便捷性角度进行评估。

John Mauchly, 1973.

John Mauchly, 1973.

Scientific progress occurs when there is a confluence of factors which allows the scientist to overcome the "stickyness" of the existing paradigm. The speed at which paradigm shifts have happened in AI research have been disproportionately determined by the degree of alignment between hardware, software and algorithm. Thus, any attempt to avoid hardware lotteries must be concerned with making it cheaper and less timeconsuming to explore different hardwaresoftware-algorithm combinations.

科学进步的发生,源于多种因素的汇聚使科学家得以突破现有范式的"粘滞性"。AI研究中的范式转变速度,很大程度上取决于硬件、软件与算法之间的协同程度。因此,任何避免硬件彩票( hardware lottery )的尝试,都必须致力于降低探索不同硬件-软件-算法组合的成本与耗时。

This is easier said than done. Expanding the search space of possible hardware-softwarealgorithm combinations is a formidable goal. It is expensive to explore new types of hardware, both in terms of time and capital required. Producing a next generation chip typically costs $\$$30-80 million dollars and takes 2-3 years to develop (Feldman, 2019). The fixed costs alone of building a manufacturing plant are enormous; estimated at $\$$7billion dollars in 2017 (Thompson & Spanuth, 2018).

说起来容易做起来难。扩大可能的硬件-软件算法组合的搜索空间是一个艰巨的目标。探索新型硬件成本高昂,无论是时间还是资金需求。生产下一代芯片通常需要花费3000万至8000万美元,并耗时2-3年开发 (Feldman, 2019)。仅建设制造厂的固定成本就十分巨大;2017年估计为70亿美元 (Thompson & Spanuth, 2018)。

Experiments using reinforcement learning to optimize chip placement may help decrease cost (Mirhoseini et al., 2020). There is also renewed interest in re-configurable hardware such as field program gate array (FPGAs) (Hauck & DeHon, 2007) and coarse-grained re configurable arrays (CGRAs) (Prabhakar et al., 2017). These devices allow the chip logic to be re-configured to avoid being locked into a single use case. However, the trade-off for flexibility is far higher FLOPS and the need for tailored software development. Coding even simple algorithms on FPGAs remains very painful and time consuming (Shalf, 2020).

使用强化学习优化芯片布局的实验可能有助于降低成本 (Mirhoseini et al., 2020)。现场可编程门阵列 (FPGA) (Hauck & DeHon, 2007) 和粗粒度可重构阵列 (CGRA) (Prabhakar et al., 2017) 等可重构硬件也重新受到关注。这些设备允许重新配置芯片逻辑,避免局限于单一用例。然而,灵活性的代价是更高的浮点运算量 (FLOPS) 和定制化软件开发需求。在FPGA上编写简单算法仍然非常痛苦且耗时 (Shalf, 2020)。

In the short to medium term hardware development is likely to remain expensive. The cost of producing hardware is important because it determines the amount of risk and experimentation hardware developers are willing to tolerate. Investment in hardware tailored to deep neural networks is assured because neural networks are a cornerstone of enough commercial use cases. The widespread profitability of deep learning has spurred a healthy ecosystem of hardware startups that aim to further accelerate deep neural networks (Metz, 2018) and has encouraged large companies to develop custom hardware in-house (Falsafi et al., 2017; Jouppi et al., 2017; Lee & Wang, 2018).

中短期内硬件开发可能仍将保持高昂成本。硬件生产成本至关重要,因为它决定了硬件开发者愿意承担的风险和实验规模。针对深度神经网络(deep neural networks)定制硬件的投资是有保障的,因为神经网络已成为众多商业应用场景的基石。深度学习的广泛盈利能力催生了一个活跃的硬件初创企业生态圈,这些企业致力于进一步加速深度神经网络的发展(Metz, 2018),同时也推动了大公司内部定制硬件的研发(Falsafi et al., 2017; Jouppi et al., 2017; Lee & Wang, 2018)。

The bottleneck will continue to be funding hardware for use cases that are not immediately commercially viable. These more risky directions include biological hardware (Tan et al., 2007; Macía & Sole, 2014; Kriegman et al., 2020), analog hard- ware with in-memory computation (Ambrogio et al., 2018), neuro m orphic computing (Davies, 2019), optical computing (Lin et al., 2018), and quantum computing based approaches (Cross et al., 2019). There are also high risk efforts to explore the development of transistors using new materials (Colwell, 2013; Nikonov & Young, 2013).

硬件资金瓶颈将持续存在于那些无法立即商业化的应用场景中。这些高风险方向包括:生物硬件 (Tan et al., 2007; Macía & Sole, 2014; Kriegman et al., 2020) 、支持内存计算的模拟硬件 (Ambrogio et al., 2018) 、神经形态计算 (Davies, 2019) 、光计算 (Lin et al., 2018) 以及基于量子计算的方法 (Cross et al., 2019) 。此外还有使用新材料开发晶体管的高风险尝试 (Colwell, 2013; Nikonov & Young, 2013) 。

Lessons from previous hardware lotteries suggest that investment must be sustained and come from both private and public funding programs. There is a slow awakening of public interest in providing such dedicated resources, such as the 2018 DARPA Electronics Resurgence Initiative which has committed to 1.5 billion dollars in funding for micro electronic technology research (DARPA, 2018). China has also announced a 47 billion dollar fund to support semiconductor research (Kubota, 2018). However, even investment of this magnitude may still be woefully inadequate, as hardware based on new materials requires long lead times of 10-20 years and public investment is currently far below industry levels of R&D (Shalf, 2020).

以往硬件抽奖的教训表明,投资必须持续且来自私营和公共资金项目。公众对提供此类专用资源的兴趣正在缓慢觉醒,例如2018年DARPA电子复兴计划承诺投入15亿美元用于微电子技术研究 (DARPA, 2018)。中国也宣布设立470亿美元基金支持半导体研究 (Kubota, 2018)。然而,即使这种规模的投资可能仍远远不足,因为基于新材料的硬件需要10-20年的长周期,而目前公共投资远低于行业研发水平 (Shalf, 2020)。

Figure 7: Byte magazine cover, March 1979, volume 4. Hardware design remains risk adverse due to the large amount of capital and time required to fabricate each new generation of hardware.

图 7: Byte杂志1979年3月第4期封面。由于每代新硬件制造需要投入大量资金和时间,硬件设计仍趋于规避风险。

6.1 A Software Revolution

6.1 一场软件革命

An interim goal should be to provide better feedback loops to researchers about how our algorithms interact with the hardware we do have. Machine learning researchers do not spend much time talking about how hardware chooses which ideas succeed and which fail. This is primarily because it is hard to quantify the cost of being concerned. At present, there are no easy and cheap to use interfaces to benchmark algorithm performance against multiple types of hardware at once. There are frustrating differences in the subset of software operations supported on different types of hardware which prevent the portability of algorithms across hardware types (Hotel et al., 2014). Software kernels are often overly optimized for a specific type of hardware which causes large disc re pen cie s in efficiency when used with different hardware (Hennessy, 2019).

一个阶段性目标应该是为研究人员提供更好的反馈循环,让他们了解我们的算法如何与现有硬件交互。机器学习研究者很少讨论硬件如何决定哪些想法成功或失败,这主要是因为量化关注成本很困难。目前还没有简便廉价的方法能同时针对多种硬件类型对算法性能进行基准测试。不同硬件支持的软件操作子集存在令人沮丧的差异,阻碍了算法跨硬件类型的可移植性 (Hotel et al., 2014)。软件内核通常针对特定硬件类型过度优化,导致在不同硬件上使用时效率差异巨大 (Hennessy, 2019)。

These challenges are compounded by an ever more formidable and heterogeneous hardware landscape (Reddi et al., 2020; Fursin et al., 2016). As the hardware landscape be- comes increasingly fragmented and specialized, fast and efficient code will require ever more niche and specialized skills to write (Lee et al., 2011). This means that there will be increasingly uneven gains from progress in computer science research. While some types of hardware will benefit from a healthy software ecosystem, progress on other languages will be sporadic and often stymied by a lack of critical end users (Thompson & Spanuth, 2018; Leiserson et al., 2020).

这些挑战因日益复杂且异构的硬件环境而加剧 (Reddi et al., 2020; Fursin et al., 2016) 。随着硬件环境日趋碎片化和专业化,编写快速高效的代码将需要更加小众和专业的技能 (Lee et al., 2011) 。这意味着计算机科学研究的进步将带来日益不均衡的收益。虽然某些类型的硬件将受益于健康的软件生态系统,但其他语言的进展将时断时续,并常常因缺乏关键终端用户而受阻 (Thompson & Spanuth, 2018; Leiserson et al., 2020) 。

One way to mitigate this need for specialized software expertise is to focus on the development of domain-specific languages which cater to a narrow domain. While you give up expressive power, domain-specific languages permit greater portability across different types of hardware. It allows developers to focus on the intent of the code without worrying about implementation details. (Olukotun, 2014; Mernik et al., 2005; Cong et al., 2011). Another promising direction is automatically auto-tuning the algorithmic parameters of a program based upon the downstream choice of hardware. This facilitates easier deployment by tailoring the program to achieve good performance and load balancing on a variety of hardware (Dongarra et al., 2018; Clint Whaley et al., 2001; Asanović et al., 2006; Ansel et al., 2014).

缓解这种对专业软件知识需求的一种方法是专注于开发针对特定领域的领域特定语言 (domain-specific languages)。虽然牺牲了表达能力,但领域特定语言能在不同类型的硬件上实现更好的可移植性。这让开发者可以专注于代码的意图,而无需担心实现细节 (Olukotun, 2014; Mernik et al., 2005; Cong et al., 2011)。另一个有前景的方向是根据下游硬件选择自动调整程序的算法参数。通过定制程序以在各种硬件上实现良好性能和负载均衡,这有助于更轻松的部署 (Dongarra et al., 2018; Clint Whaley et al., 2001; Asanović et al., 2006; Ansel et al., 2014)。

The difficulty of both these approaches is that if successful, this further abstracts humans from the details of the implementation. In parallel, we need better profiling tools to allow researchers to have a more informed opinion about how hardware and software should evolve. Ideally, software could even surface recommendations about what type of hardware to use given the configuration of an algorithm. Registering what differs from our expectations remains a key catalyst in driving new scientific discoveries.

这两种方法的难点在于,若取得成功,将进一步使人类远离实现细节。与此同时,我们需要更优的性能分析工具,让研究人员能更明智地判断硬件与软件应如何演进。理想情况下,软件甚至能根据算法配置推荐适用的硬件类型。记录与预期不符的现象,始终是推动新科学发现的关键催化剂。

Software needs to do more work, but it is also well positioned to do so. We have neglected efficient software throughout the era of Moore’s law, trusting that predictable gains in compute would compensate for inefficiencies in the software stack. This means there are many low hanging fruit as we begin to optimize for more efficient code (Larus, 2009; Xu et al., 2010).

软件需要承担更多工作,但它也完全具备这样的能力。在摩尔定律时代,我们始终忽视高效软件开发,盲目相信可预测的算力增长能弥补软件栈的低效问题。这意味着当我们开始优化代码效率时,存在大量唾手可得的改进机会 (Larus, 2009; Xu et al., 2010)。

7 Conclusion

7 结论

George Gilder, an American investor, described the computer chip as “inscribing worlds on grains of sand” (Gilder, 2000). The performance of an algorithm is fundamentally intertwined with the hardware and software it runs on. This essay proposes the term hardware lottery to describe how these downstream choices determine whether a research idea succeeds or fails. Today the hardware landscape is increasingly heterogeneous. This essay posits that the hardware lottery has not gone away, and the gap between the winners and losers will grow increasingly larger. In order to avoid future hardware lotteries, we need to make it easier to quantify the opportunity cost of settling for the hardware and software we have.

美国投资者George Gilder曾将计算机芯片描述为"在沙粒上铭刻世界" (Gilder, 2000)。算法的性能从根本上与其运行的硬件和软件紧密相连。本文提出"硬件彩票(hardware lottery)"这一术语,用以描述这些下游选择如何决定研究理念的成败。如今硬件格局日益多样化。本文认为硬件彩票现象并未消失,赢家与输家之间的差距将变得越来越大。为避免未来的硬件彩票,我们需要更轻松地量化安于现有硬件和软件的机会成本。

8 Acknowledgments

8 致谢

Thank you to many of my wonderful colleagues and peers who took time to provide valuable feedback on earlier versions of this essay. In particular, I would like to acknowledge the valuable input of Utku Evci, Erich Elsen, Melissa Fabros, Amanda Su, Simon Kornblith, Cliff Young, Eric Jang, Sean McPherson, Jonathan Frankle, Carles Gelada, David Ha, Brian Spiering, Samy Bengio, Stephanie Sher, Jonathan Binas, Pete Warden, Sean Mcpherson, Laura Florescu, Jacques Pienaar, Chip Huyen, Ra- ziel Alvarez, Dan Hurt and Kevin Swersky. Thanks for the institutional support and encouragement of Natacha Mainville and Alexander Popper.

感谢我的许多优秀同事和同行,他们抽出时间为本文的早期版本提供了宝贵反馈。特别感谢 Utku Evci、Erich Elsen、Melissa Fabros、Amanda Su、Simon Kornblith、Cliff Young、Eric Jang、Sean McPherson、Jonathan Frankle、Carles Gelada、David Ha、Brian Spiering、Samy Bengio、Stephanie Sher、Jonathan Binas、Pete Warden、Sean Mcpherson、Laura Florescu、Jacques Pienaar、Chip Huyen、Raziel Alvarez、Dan Hurt 和 Kevin Swersky 的宝贵意见。同时感谢 Natacha Mainville 和 Alexander Popper 的机构支持与鼓励。

References

参考文献

Ambrogio, S., Narayanan, P., Tsai, H., Shelby, R., Boybat, I., Nolfo, C., Sidler, S., Giordano, M., Bodini, M., Farinha, N., Killeen, B., Cheng, C., Jaoudi, Y., and Burr, G. Equivalent-accuracy accelerated neural-network training using analogue memory. Nature, 558, 06 2018. doi: 10.1038/ s41586-018-0180-5.

Ambrogio, S., Narayanan, P., Tsai, H., Shelby, R., Boybat, I., Nolfo, C., Sidler, S., Giordano, M., Bodini, M., Farinha, N., Killeen, B., Cheng, C., Jaoudi, Y., and Burr, G. 基于模拟存储器的等效精度加速神经网络训练. Nature, 558, 06 2018. doi: 10.1038/s41586-018-0180-5.

Amodei, D., Hernandez, D., Sastry, G., Clark, J., Brockman, G., and Sutskever, I. Ai and compute, 2018. URL https://openai.com/ blog/ai-and-compute/.

Amodei, D., Hernandez, D., Sastry, G., Clark, J., Brockman, G., and Sutskever, I. AI与算力, 2018. URL https://openai.com/blog/ai-and-compute/.

Ansel, J., Kamil, S., Veera mach an eni, K., Ragan-Kelley, J., Bosboom, J., O’Reilly, U.- M., and Amara sing he, S. Opentuner: An extensible framework for program autotuning. In Proceedings of the 23rd International Conference on Parallel Architectures and Compilation, PACT ’14, pp. 303–316, New York, NY, USA, 2014. Association for Computing Machinery. ISBN 9781450328098. doi: 10.1145/2628071.2628092. URL doi.org/10.1145/2628071.2628092.

Ansel, J., Kamil, S., Veera mach an eni, K., Ragan-Kelley, J., Bosboom, J., O’Reilly, U.- M., 和 Amara sing he, S. OpenTuner: 一个可扩展的程序自动调优框架。载于《第23届国际并行架构与编译技术会议论文集》(PACT '14),第303–316页,美国纽约州,2014年。计算机协会。ISBN 9781450328098。doi: 10.1145/2628071.2628092。URL https://doi.org/10.1145/2628071.2628092。

ARM. Enhancing ai performance for iot endpoint devices, 2020. URL https: //www.arm.com/company/news/2020/ 02/new-ai-technology-from-arm.

ARM. 提升物联网终端设备的AI性能, 2020. URL https: //www.arm.com/company/news/2020/ 02/new-ai-technology-from-arm.

Asanovic, K. Accelerating ai: Past, present, and future, 2018. URL https://www.youtube. com/watch?v $=$ 8 n 2 H Lp 2 gt Ys&t $=$ 2116s.

Asanovic, K. 加速AI:过去、现在与未来, 2018. URL https://www.youtube.com/watch?v=8n2HLp2gtYs&t=2116s.

Asanovic, K., Bodik, R., Catanzaro, B. C. Gebis, J. J., Husbands, P., Keutzer, K., Patterson, D. A., Plishker, W. L., Shalf, J., Williams, S. W., and Yelick, K. A. The landscape of parallel computing research: A view from berkeley. Technical Report UCB/EECS2006-183, EECS Department, University of California, Berkeley, Dec 2006. URL http: //www2.eecs.berkeley.edu/Pubs/ TechRpts/2006/EECS-2006-183.html.

Asanovic, K., Bodik, R., Catanzaro, B. C. Gebis, J. J., Husbands, P., Keutzer, K., Patterson, D. A., Plishker, W. L., Shalf, J., Williams, S. W., and Yelick, K. A. 并行计算研究的全景:来自伯克利的视角。技术报告 UCB/EECS2006-183,电子工程与计算机科学系,加州大学伯克利分校,2006年12月。URL http://www2.eecs.berkeley.edu/Pubs/TechRpts/2006/EECS-2006-183.html。

Barham, P. and Isard, M. Machine learning systems are stuck in a rut. In Proceedings of the Workshop on Hot Topics in Operating Systems, HotOS ’19, pp. 177–183, New York, NY, USA, 2019. Association for Computing Machinery. ISBN 9781450367271. doi: 10.1145/3317550.3321441. URL doi.org/10.1145/3317550.3321441.

Barham, P. 和 Isard, M. 机器学习系统陷入困境。载于《操作系统热点议题研讨会论文集》,HotOS '19,第177-183页,美国纽约州纽约市,2019年。计算机协会。ISBN 9781450367271。doi: 10.1145/3317550.3321441。URL https://doi.org/10.1145/3317550.3321441。

Barnett, S. M. and Ceci, S. When and where do we apply what we learn? a taxonomy for far transfer. Psychological bulletin, 128 4:612– 37, 2002.

Barnett, S. M. 和 Ceci, S. 我们何时何地应用所学知识?远迁移的分类法。Psychological bulletin, 128 4:612–37, 2002.

Battaglia, P. W., Hamrick, J. B., Bapst, V., Sanchez-Gonzalez, A., Zambaldi, V. F., Ma- linowski, M., Tacchetti, A., Raposo, D., Santoro, A., Faulkner, R., Gülçehre, Ç., Song, H. F., Ballard, A. J., Gilmer, J., Dahl, G. E., Vaswani, A., Allen, K. R., Nash, C., Langston, V., Dyer, C., Heess, N., Wierstra, D., Kohli, P., Botvinick, M., Vinyals, O., Li, Y., and Pascanu, R. Relational inductive biases, deep learning, and graph networks. CoRR, abs/1806.01261, 2018. URL http://arxiv.org/abs/1806.01261.

Battaglia, P. W., Hamrick, J. B., Bapst, V., Sanchez-Gonzalez, A., Zambaldi, V. F., Malinowski, M., Tacchetti, A., Raposo, D., Santoro, A., Faulkner, R., Gülçehre, Ç., Song, H. F., Ballard, A. J., Gilmer, J., Dahl, G. E., Vaswani, A., Allen, K. R., Nash, C., Langston, V., Dyer, C., Heess, N., Wierstra, D., Kohli, P., Botvinick, M., Vinyals, O., Li, Y., and Pascanu, R. 关系归纳偏差、深度学习与图网络。CoRR, abs/1806.01261, 2018. URL http://arxiv.org/abs/1806.01261.

Benna, M. and Fusi, S. Computational principles of synaptic memory consolidation. Nature Neuroscience, 19, 10 2016. doi: 10.1038/ nn.4401.

Benna, M. 和 Fusi, S. 突触记忆巩固的计算原理。Nature Neuroscience, 19, 10 2016. doi: 10.1038/nn.4401.

Bi, G.-q. and Poo, M.-m. Synaptic modifications in cultured hippo camp al neurons: Dependence on spike timing, synaptic strength, and post synaptic cell type. Journal of Neuroscience, 18(24):10464–10472, 1998. ISSN 0270-6474. doi: 10.1523/JNEUROSCI. 18-24-10464.1998. URL https://www. jneurosci.org/content/18/24/10464.

Bi, G.-q. 和 Poo, M.-m. 培养海马神经元中的突触可塑性:依赖于放电时序、突触强度和突触后细胞类型。Journal of Neuroscience, 18(24):10464–10472, 1998. ISSN 0270-6474. doi: 10.1523/JNEUROSCI. 18-24-10464.1998. URL https://www. jneurosci.org/content/18/24/10464.

Bremner, A., Lewkowicz, D., and Spence, C. Multi sensory development, 11 2013.

Bremner, A., Lewkowicz, D., 和 Spence, C. 多感官发展, 11 2013.

Brodtkorb, A. R., Hagen, T. R., and Sætra, M. L. Graphics processing unit (gpu) programming strategies and trends in gpu computing. Journal of Parallel and Distributed Computing, 73(1):4 – 13, 2013. ISSN 0743-7315.doi:https://doi.org/10.1016/j.jpdc.2012.04.003. URL http: //www.science direct.com/science/ article/pii/S0743731512000998. Meta heuristics on GPUs.

Brodtkorb, A. R., Hagen, T. R., and Sætra, M. L. 图形处理器 (GPU) 编程策略与GPU计算趋势. 并行与分布式计算杂志, 73(1):4–13, 2013. ISSN 0743-7315. doi:https://doi.org/10.1016/j.jpdc.2012.04.003. URL http://www.sciencedirect.com/science/article/pii/S0743731512000998. GPU上的元启发式算法.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neel a kant an, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., Hesse, C., Chen, M., Sigler, E., Litwin, M., Gray, S., Chess, B., Clark, J., Berner, C., McCand lish, S., Radford, A., Sutskever, I., and Amodei, D. Language Models are Few-Shot Learners. arXiv e-prints, May 2020.

Brown, T. B., Mann, B., Ryder, N., Subbiah, M., Kaplan, J., Dhariwal, P., Neelakantan, A., Shyam, P., Sastry, G., Askell, A., Agarwal, S., Herbert-Voss, A., Krueger, G., Henighan, T., Child, R., Ramesh, A., Ziegler, D. M., Wu, J., Winter, C., Hesse, C., Chen, M.,