Language Models are Few-Shot Learners

Tom B. Brown* | Benjamin Mann* | Nick Ryder* | Melanie Subbiah*

Jared Kaplan | Prafulla Dhariwal | Arvind Neelakantan | Pranav Shyam | Girish Sastry

Amanda Askell | Sandhini Agarwal | Ariel Herbert-Voss | Gretchen Krueger | Tom Henighan

Rewon Child | Aditya Ramesh | Daniel M. Ziegler | Jeffrey Wu | Clemens Winter

Christopher Hesse | Mark Chen | Eric Sigler | Mateusz Litwin | Scott Gray

Benjamin Chess | Jack Clark | Christopher Berner

Sam McCandlish | Alec Radford | Ilya Sutskever | Dario Amodei

OpenAI

Abstract

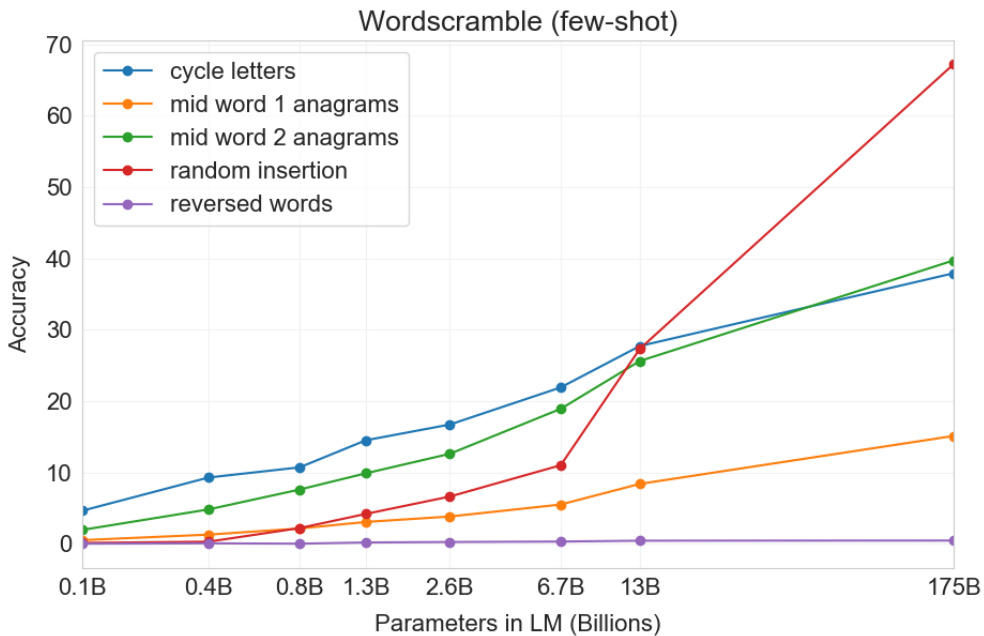

Recent work has demonstrated substantial gains on many NLP tasks and benchmarks by pre-training on a large corpus of text followed by fine-tuning on a specific task. While typically task-agnostic in architecture, this method still requires task-specific fine-tuning datasets of thousands or tens of thousands of examples. By contrast, humans can generally perform a new language task from only a few examples or from simple instructions – something which current NLP systems still largely struggle to do. Here we show that scaling up language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches. Specifically, we train GPT-3, an autoregressive language model with 175 billion parameters, 10x more than any previous non-sparse language model, and test its performance in the few-shot setting. For all tasks, GPT-3 is applied without any gradient updates or fine-tuning, with tasks and few-shot demonstrations specified purely via text interaction with the model. GPT-3 achieves strong performance on many NLP datasets, including translation, question-answering, and cloze tasks, as well as several tasks that require on-the-fly reasoning or domain adaptation, such as unscrambling words, using a novel word in a sentence, or performing 3-digit arithmetic. At the same time, we also identify some datasets where GPT-3’s few-shot learning still struggles, as well as some datasets where GPT-3 faces methodological issues related to training on large web corpora. Finally, we find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans. We discuss broader societal impacts of this finding and of GPT-3 in general.

Contents

1 Introduction

2 Approach

3 Results

3.1 Language Modeling, Cloze, and Completion Tasks

4 Measuring and Preventing Memorization Of Benchmarks

5 Limitations

6 Broader Impacts

7 Related Work

8 Conclusion

1 Introduction

Recent years have featured a trend towards pre-trained language representations in NLP systems, applied in increasingly flexible and task-agnostic ways for downstream transfer. First, single-layer representations were learned using word vectors [MCCD13, PSM14] and fed to task-specific architectures, then RNNs with multiple layers of representations and contextual state were used to form stronger representations [DL15, MBXS17, PNZtY18] (though still applied to task-specific architectures), and more recently pre-trained recurrent or transformer language models [VSP+17] have been directly fine-tuned, entirely removing the need for task-specific architectures [RNSS18, DCLT18, HR18].

This last paradigm has led to substantial progress on many challenging NLP tasks such as reading comprehension, question answering, textual entailment, and many others, and has continued to advance based on new architectures and algorithms [RSR+19, LOG+19, YDY+19, LCG+19]. However, a major limitation to this approach is that while the architecture is task-agnostic, there is still a need for task-specific datasets and task-specific fine-tuning: achieving strong performance on a desired task typically requires fine-tuning on a dataset of thousands to hundreds of thousands of examples specific to that task. Removing this limitation would be desirable, for several reasons.

First, from a practical perspective, the need for a large dataset of labeled examples for every new task limits the applicability of language models. There exists a very wide range of possible useful language tasks, encompassing anything from correcting grammar, to generating examples of an abstract concept, to critiquing a short story. For many of these tasks it is difficult to collect a large supervised training dataset, especially when the process must be repeated for every new task.

Second, the potential to exploit spurious correlations in training data fundamentally grows with the expressiveness of the model and the narrowness of the training distribution. This can create problems for the pre-training plus fine-tuning paradigm, where models are designed to be large to absorb information during pre-training, but are then fine-tuned on very narrow task distributions. For instance, [HLW+20] observe that larger models do not necessarily generalize better out-of-distribution. There is evidence that suggests that the generalization achieved under this paradigm can be poor because the model is overly specific to the training distribution and does not generalize well outside it [YdC+19, MPL19]. Thus, the performance of fine-tuned models on specific benchmarks, even when it is nominally at human-level, may exaggerate actual performance on the underlying task [GSL+18, NK19].

Third, humans do not require large supervised datasets to learn most language tasks – a brief directive in natural language (e.g. “please tell me if this sentence describes something happy or something sad”) or at most a tiny number of demonstrations (e.g. “here are two examples of people acting brave; please give a third example of bravery”) is often sufficient to enable a human to perform a new task to at least a reasonable degree of competence. Aside from pointing to a conceptual limitation in our current NLP techniques, this adaptability has practical advantages – it allows humans to seamlessly mix together or switch between many tasks and skills, for example performing addition during a lengthy dialogue. To be broadly useful, we would someday like our NLP systems to have this same fluidity and generality.

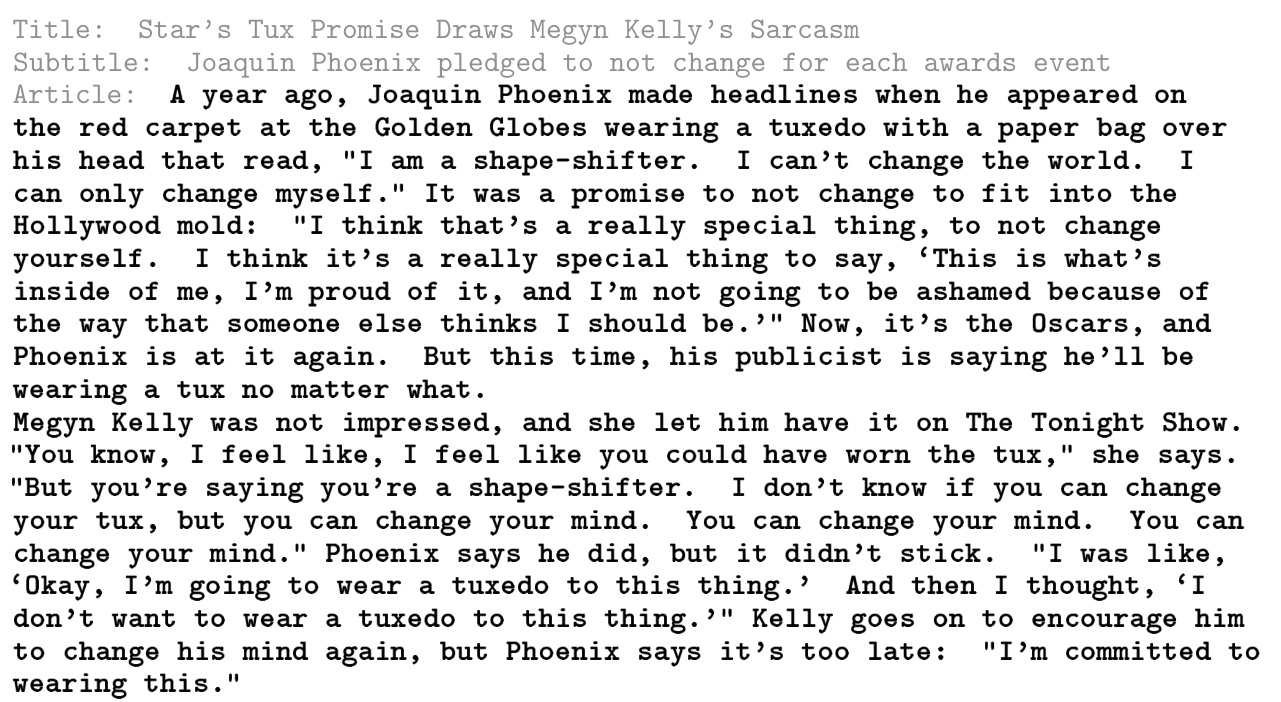

Figure 1.1: Language model meta-learning. During unsupervised pre-training, a language model develops a broad set of skills and pattern recognition abilities. It then uses these abilities at inference time to rapidly adapt to or recognize the desired task. We use the term “in-context learning” to describe the inner loop of this process, which occurs within the forward-pass upon each sequence. The sequences in this diagram are not intended to be representative of the data a model would see during pre-training, but are intended to show that there are sometimes repeated sub-tasks embedded within a single sequence.

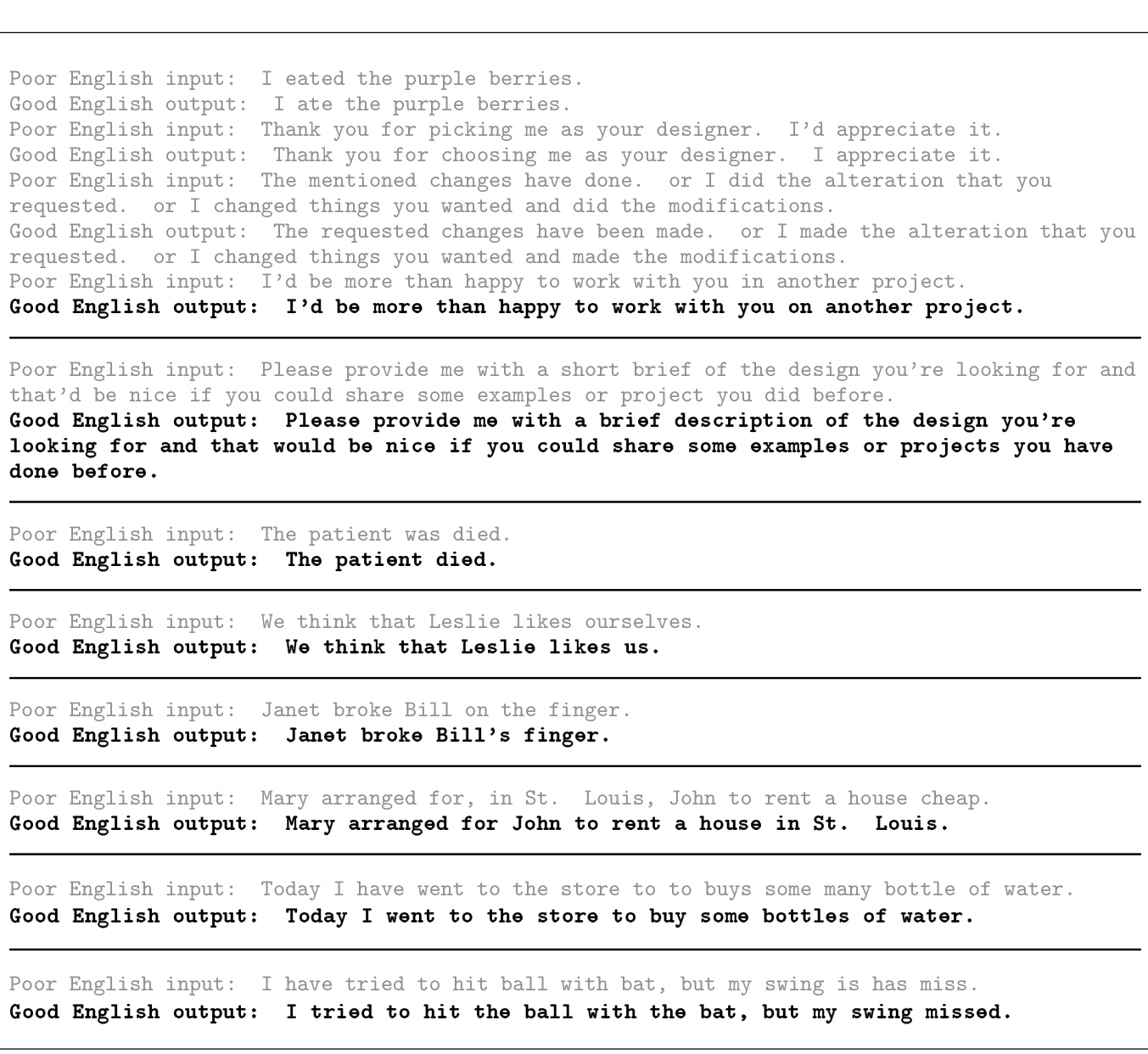

Figure 1.2: Larger models make increasingly efficient use of in-context information. We show in-context learning performance on a simple task requiring the model to remove random symbols from a word, both with and without a natural language task description (see Sec. 3.9.2). The steeper “in-context learning curves” for large models demonstrate improved ability to learn a task from contextual information. We see qualitatively similar behavior across a wide range of tasks.

One potential route towards addressing these issues is meta-learning, which in the context of language models means the model develops a broad set of skills and pattern recognition abilities at training time, and then uses those abilities at inference time to rapidly adapt to or recognize the desired task (illustrated in Figure 1.1). Recent work [RWC+19] attempts to do this via what we call “in-context learning”, using the text input of a pretrained language model as a form of task specification: the model is conditioned on a natural language instruction and/or a few demonstrations of the task and is then expected to complete further instances of the task simply by predicting what comes next.

While it has shown some initial promise, this approach still achieves results far inferior to fine-tuning – for example, [RWC+19] achieves only 4% on Natural Questions, and even its 55 F1 CoQA result is now more than 35 points behind the state of the art. Meta-learning clearly requires substantial improvement in order to be viable as a practical method of solving language tasks.

Another recent trend in language modeling may offer a way forward. In recent years the capacity of transformer language models has increased substantially, from 100 million parameters [RNSS18], to 300 million parameters [DCLT18], to 1.5 billion parameters [RWC+19], to 8 billion parameters [SPP+19], 11 billion parameters [RSR+19], and finally 17 billion parameters [Tur20]. Each increase has brought improvements in text synthesis and/or downstream NLP tasks, and there is evidence suggesting that log loss, which correlates well with many downstream tasks, follows a smooth trend of improvement with scale [KMH+20]. Since in-context learning involves absorbing many skills and tasks within the parameters of the model, it is plausible that in-context learning abilities might show similarly strong gains with scale.
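
The cited scaling work [KMH+20] describes this trend as a power law in parameter count, $L(N) = (N_c/N)^{\alpha}$, which becomes a straight line on log-log axes because $\log L = \alpha \log N_c - \alpha \log N$. The Python sketch below fits that form by least squares on log-transformed values. The function name `fit_power_law` is hypothetical, and the loss values are synthetic, generated from assumed constants only to show that the fit recovers them; they are not measurements from any model in this paper.

```python
import math


def fit_power_law(sizes, losses):
    """Fit L(N) = (N_c / N) ** alpha by least squares in log-log space.

    Since log L = alpha * log N_c - alpha * log N, a straight-line fit of
    log L against log N has slope -alpha and intercept alpha * log N_c.
    """
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    alpha = -slope
    n_c = math.exp(intercept / alpha)
    return alpha, n_c


# Synthetic losses from assumed constants (illustrative only, not measured).
ASSUMED_ALPHA = 0.076
ASSUMED_N_C = 8.8e13
sizes = [125e6, 350e6, 760e6, 1.3e9, 2.7e9, 6.7e9, 13e9, 175e9]  # Table 2.1 sizes
losses = [(ASSUMED_N_C / n) ** ASSUMED_ALPHA for n in sizes]

alpha, n_c = fit_power_law(sizes, losses)
print(f"fitted alpha = {alpha:.3f}, fitted N_c = {n_c:.2e}")
```

With real measurements the points scatter around the line, so the fitted $\alpha$ is an estimate rather than an exact recovery.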

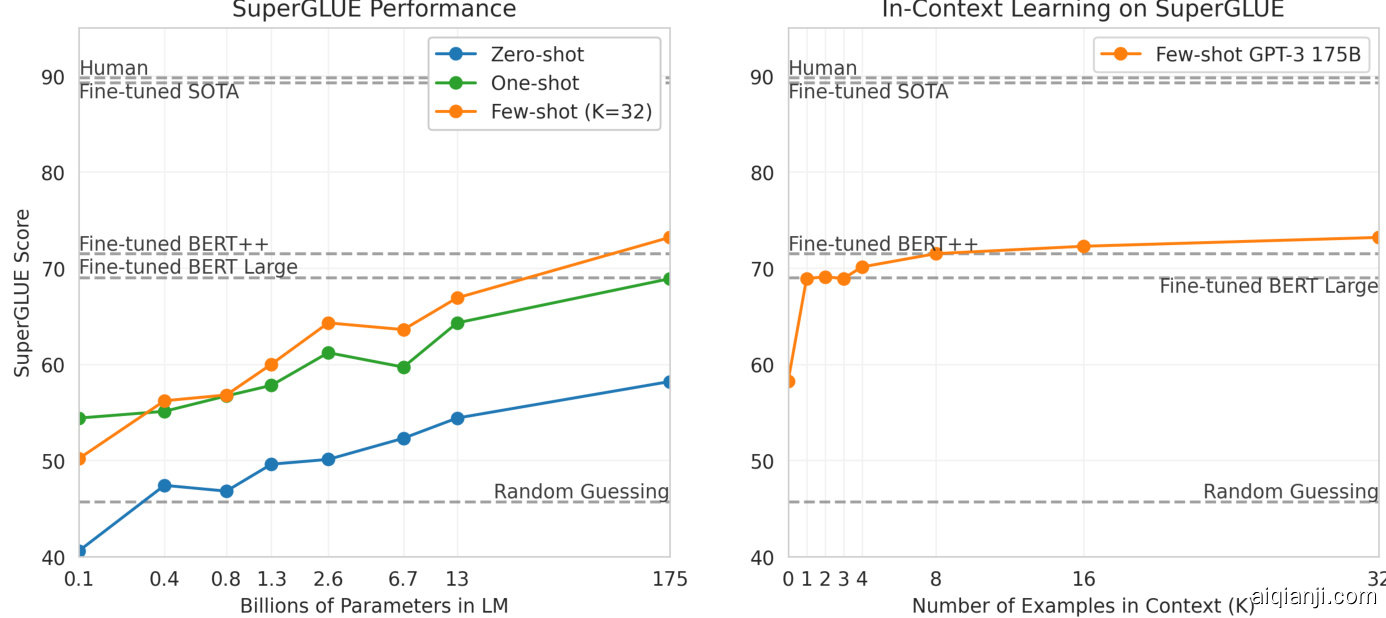

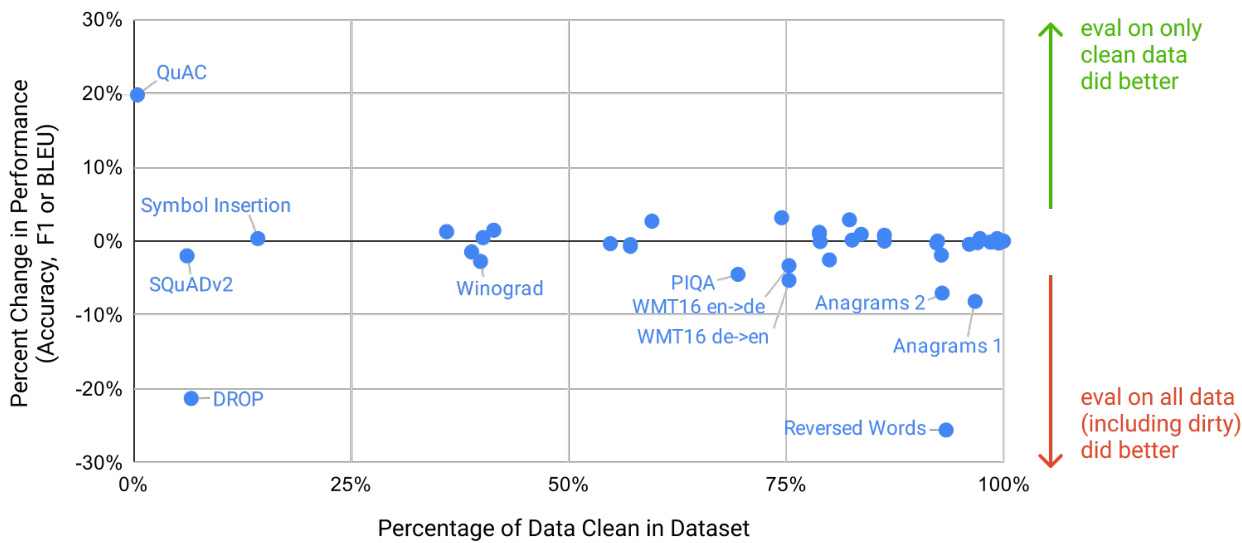

Figure 1.3: Aggregate performance for all 42 accuracy-denominated benchmarks. While zero-shot performance improves steadily with model size, few-shot performance increases more rapidly, demonstrating that larger models are more proficient at in-context learning. See Figure 3.8 for a more detailed analysis on SuperGLUE, a standard NLP benchmark suite.

In this paper, we test this hypothesis by training a 175 billion parameter autoregressive language model, which we call GPT-3, and measuring its in-context learning abilities. Specifically, we evaluate GPT-3 on over two dozen NLP datasets, as well as several novel tasks designed to test rapid adaptation to tasks unlikely to be directly contained in the training set. For each task, we evaluate GPT-3 under 3 conditions: (a) “few-shot learning”, or in-context learning where we allow as many demonstrations as will fit into the model’s context window (typically 10 to 100), (b) “one-shot learning”, where we allow only one demonstration, and (c) “zero-shot” learning, where no demonstrations are allowed and only an instruction in natural language is given to the model. GPT-3 could also in principle be evaluated in the traditional fine-tuning setting, but we leave this to future work.

Figure 1.2 illustrates the conditions we study, and shows few-shot learning of a simple task requiring the model to remove extraneous symbols from a word. Model performance improves with the addition of a natural language task description, and with the number of examples in the model’s context, $K$ . Few-shot learning also improves dramatically with model size. Though the results in this case are particularly striking, the general trends with both model size and number of examples in-context hold for most tasks we study. We emphasize that these “learning” curves involve no gradient updates or fine-tuning, just increasing numbers of demonstrations given as conditioning.

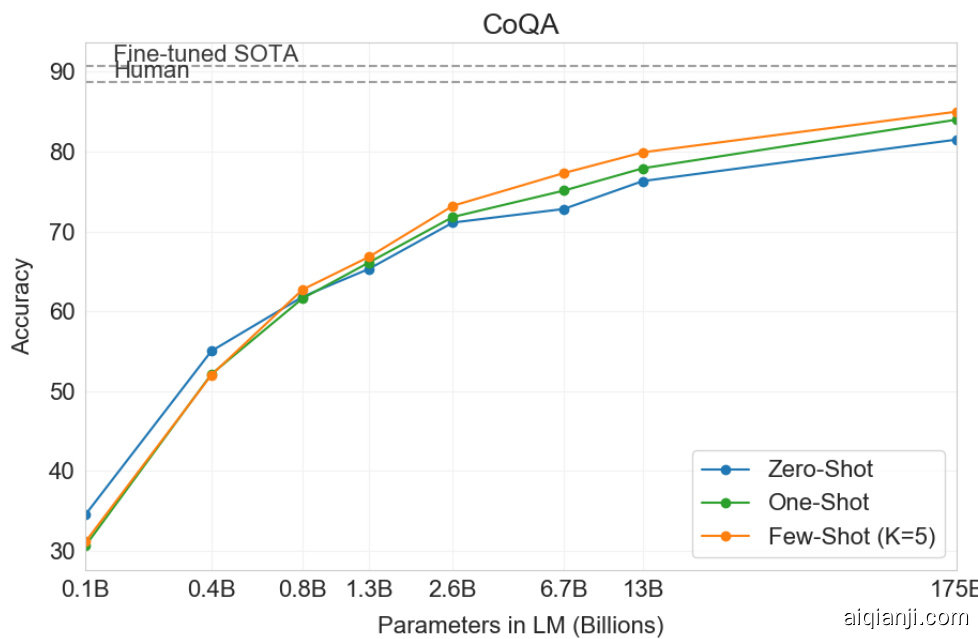

Broadly, on NLP tasks GPT-3 achieves promising results in the zero-shot and one-shot settings, and in the few-shot setting is sometimes competitive with or even occasionally surpasses state-of-the-art (despite state-of-the-art being held by fine-tuned models). For example, GPT-3 achieves 81.5 F1 on CoQA in the zero-shot setting, 84.0 F1 in the one-shot setting, and 85.0 F1 in the few-shot setting. Similarly, GPT-3 achieves 64.3% accuracy on TriviaQA in the zero-shot setting, 68.0% in the one-shot setting, and 71.2% in the few-shot setting, the last of which is state-of-the-art relative to fine-tuned models operating in the same closed-book setting.
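
The CoQA numbers above are F1 scores, which measure token overlap between a predicted answer and a reference answer. A minimal Python sketch of per-question token-level F1 follows, assuming simple lowercasing and whitespace tokenization; official evaluation scripts typically apply further normalization (for example stripping punctuation and articles) and may score against several references, which this hypothetical `token_f1` helper omits.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-level F1 between a predicted answer and one reference answer."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; exactly one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection: each shared token counts at most min(count) times.
    common = Counter(pred_tokens) & Counter(ref_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


print(token_f1("the red barn", "a red barn"))
```

Under this reading, a dataset-level figure such as 81.5 F1 is the mean per-question score expressed on a 0 to 100 scale.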

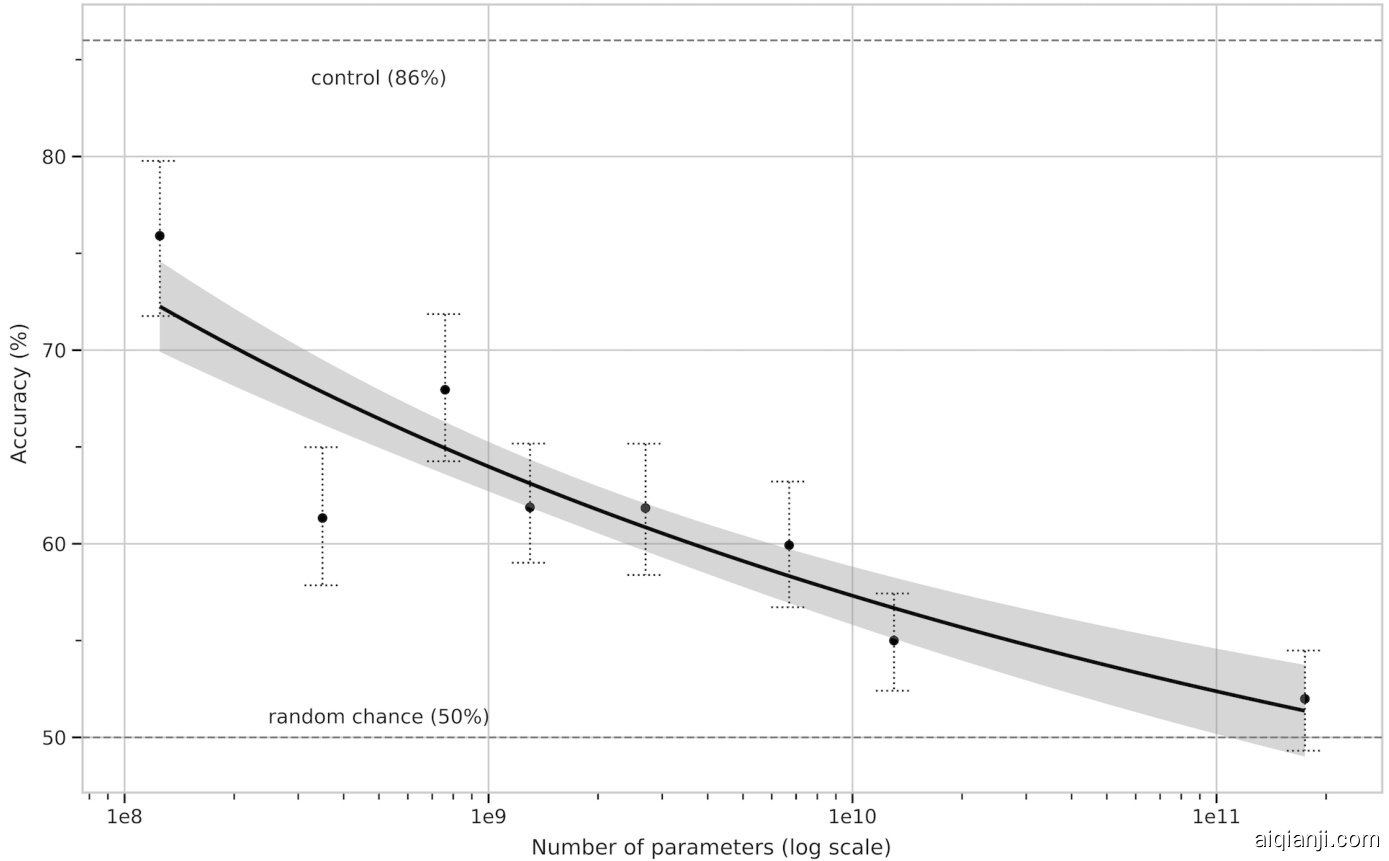

GPT-3 also displays one-shot and few-shot proficiency at tasks designed to test rapid adaptation or on-the-fly reasoning, which include unscrambling words, performing arithmetic, and using novel words in a sentence after seeing them defined only once. We also show that in the few-shot setting, GPT-3 can generate synthetic news articles which human evaluators have difficulty distinguishing from human-generated articles.

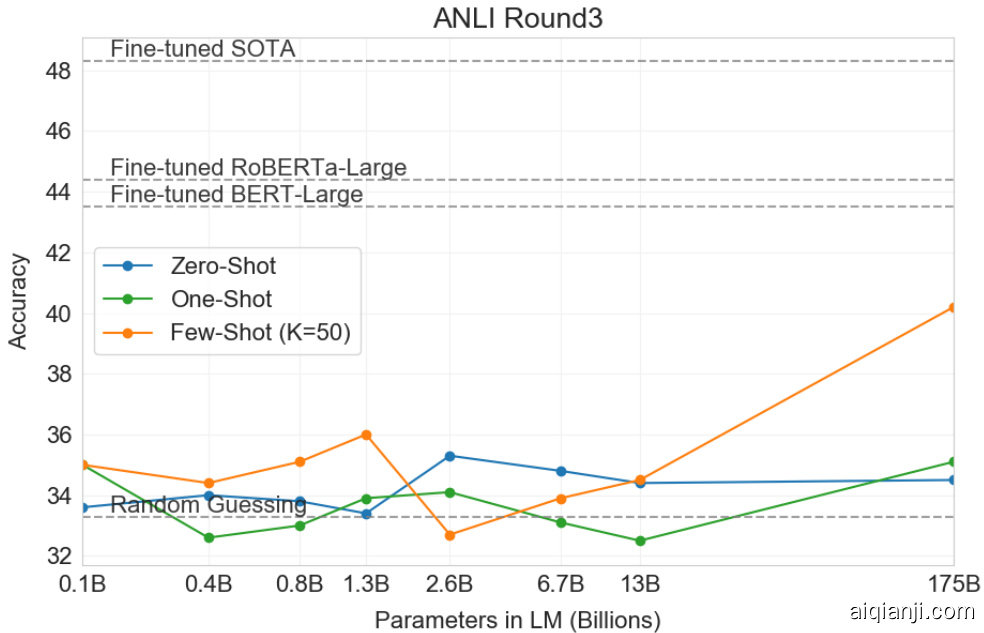

At the same time, we also find some tasks on which few-shot performance struggles, even at the scale of GPT-3. This includes natural language inference tasks like the ANLI dataset, and some reading comprehension datasets like RACE or QuAC. By presenting a broad characterization of GPT-3’s strengths and weaknesses, including these limitations, we hope to stimulate study of few-shot learning in language models and draw attention to where progress is most needed.

A heuristic sense of the overall results can be seen in Figure 1.3, which aggregates the various tasks (though it should not be seen as a rigorous or meaningful benchmark in itself).

We also undertake a systematic study of “data contamination” – a growing problem when training high capacity models on datasets such as Common Crawl, which can potentially include content from test datasets simply because such content often exists on the web. In this paper we develop systematic tools to measure data contamination and quantify its distorting effects. Although we find that data contamination has a minimal effect on GPT-3’s performance on most datasets, we do identify a few datasets where it could be inflating results, and we either do not report results on these datasets or we note them with an asterisk, depending on the severity.
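
One simple way to flag possible contamination, sketched here under our own assumptions rather than as the exact procedure used for GPT-3, is to mark a test example as suspect when it shares any word n-gram with the training corpus. The n-gram length, the lowercase whitespace tokenization, and the helper names (`ngrams`, `build_train_index`, `flag_contaminated`) are all hypothetical choices for illustration.

```python
def ngrams(text: str, n: int) -> set:
    """Set of word n-grams (as tuples) after lowercase whitespace tokenization."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_train_index(train_docs, n: int) -> set:
    """Union of all n-grams that appear anywhere in the training documents."""
    index = set()
    for doc in train_docs:
        index |= ngrams(doc, n)
    return index


def flag_contaminated(test_examples, train_index: set, n: int) -> list:
    """Indices of test examples sharing at least one n-gram with the training data."""
    return [i for i, ex in enumerate(test_examples) if ngrams(ex, n) & train_index]


# Toy corpus for illustration only.
train_docs = ["the quick brown fox jumps over the lazy dog near the river bank"]
test_examples = ["a quick brown fox jumps high", "an unrelated sentence about cats and tea"]
index = build_train_index(train_docs, n=4)
print(flag_contaminated(test_examples, index, n=4))
```

An in-memory set is only workable for small corpora; at Common Crawl scale the index would need to be sharded or approximated (for instance with a Bloom filter). Test examples shorter than n words produce no n-grams and can never be flagged by this check.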

In addition to all the above, we also train a series of smaller models (ranging from 125 million parameters to 13 billion parameters) in order to compare their performance to GPT-3 in the zero, one and few-shot settings. Broadly, for most tasks we find relatively smooth scaling with model capacity in all three settings; one notable pattern is that the gap between zero-, one-, and few-shot performance often grows with model capacity, perhaps suggesting that larger models are more proficient meta-learners.

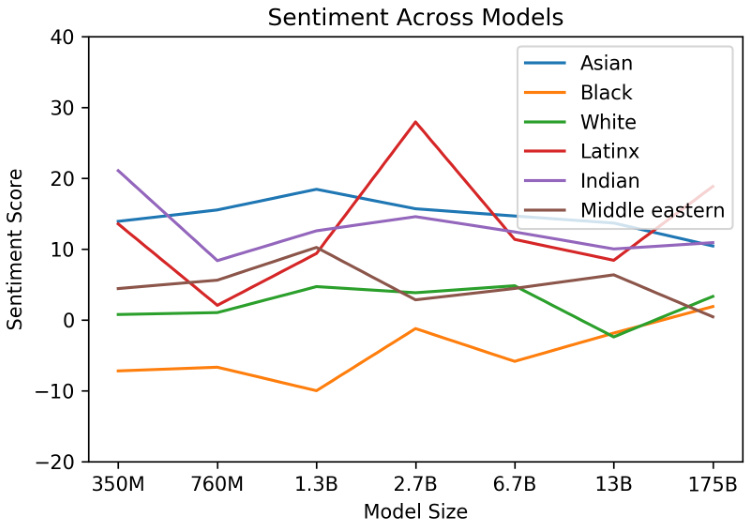

Finally, given the broad spectrum of capabilities displayed by GPT-3, we discuss concerns about bias, fairness, and broader societal impacts, and attempt a preliminary analysis of GPT-3’s characteristics in this regard.

The remainder of this paper is organized as follows. In Section 2, we describe our approach and methods for training GPT-3 and evaluating it. Section 3 presents results on the full range of tasks in the zero-, one- and few-shot settings. Section 4 addresses questions of data contamination (train-test overlap). Section 5 discusses limitations of GPT-3. Section 6 discusses broader impacts. Section 7 reviews related work and Section 8 concludes.

2 Approach

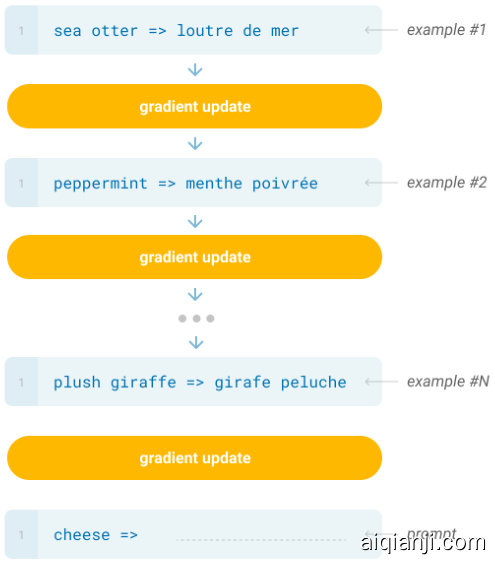

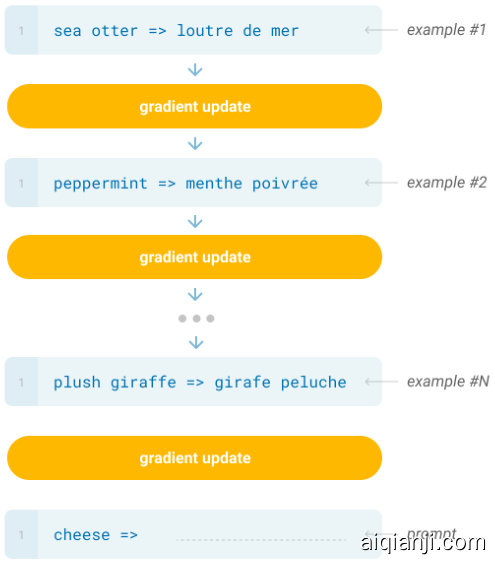

Our basic pre-training approach, including model, data, and training, is similar to the process described in [RWC+19], with relatively straightforward scaling up of the model size, dataset size and diversity, and length of training. Our use of in-context learning is also similar to [RWC+19], but in this work we systematically explore different settings for learning within the context. Therefore, we start this section by explicitly defining and contrasting the different settings that we will be evaluating GPT-3 on or could in principle evaluate GPT-3 on. These settings can be seen as lying on a spectrum of how much task-specific data they tend to rely on. Specifically, we can identify at least four points on this spectrum (see Figure 2.1 for an illustration):

• Fine-Tuning (FT) has been the most common approach in recent years, and involves updating the weights of a pre-trained model by training on a supervised dataset specific to the desired task. Typically thousands to hundreds of thousands of labeled examples are used. The main advantage of fine-tuning is strong performance on many benchmarks. The main disadvantages are the need for a new large dataset for every task, the potential for poor generalization out-of-distribution [MPL19], and the potential to exploit spurious features of the training data [GSL+18, NK19], potentially resulting in an unfair comparison with human performance. In this work we do not fine-tune GPT-3 because our focus is on task-agnostic performance, but GPT-3 can be fine-tuned in principle and this is a promising direction for future work.

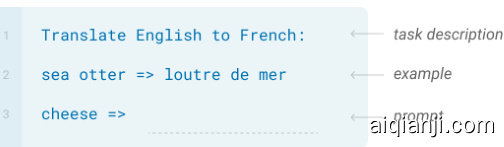

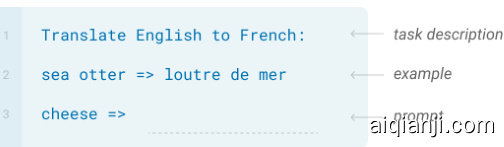

• Few-Shot (FS) is the term we will use in this work to refer to the setting where the model is given a few demonstrations of the task at inference time as conditioning [RWC+19], but no weight updates are allowed. As shown in Figure 2.1, for a typical dataset an example has a context and a desired completion (for example an English sentence and the French translation), and few-shot works by giving $K$ examples of context and completion, and then one final example of context, with the model expected to provide the completion. We typically set $K$ in the range of 10 to 100 as this is how many examples can fit in the model’s context window ($n_{\mathrm{ctx}}=2048$). The main advantages of few-shot are a major reduction in the need for task-specific data and reduced potential to learn an overly narrow distribution from a large but narrow fine-tuning dataset. The main disadvantage is that results from this method have so far been much worse than state-of-the-art fine-tuned models. Also, a small amount of task-specific data is still required. As indicated by the name, few-shot learning as described here for language models is related to few-shot learning as used in other contexts in ML [HYC01, VBL+16] – both involve learning based on a broad distribution of tasks (in this case implicit in the pre-training data) and then rapidly adapting to a new task.

• One-Shot (1S) is the same as few-shot except that only one demonstration is allowed, in addition to a natural language description of the task, as shown in Figure 2.1. The reason to distinguish one-shot from few-shot and zero-shot (below) is that it most closely matches the way in which some tasks are communicated to humans. For example, when asking humans to generate a dataset on a human worker service (for example Mechanical Turk), it is common to give one demonstration of the task. By contrast it is sometimes difficult to communicate the content or format of a task if no examples are given.

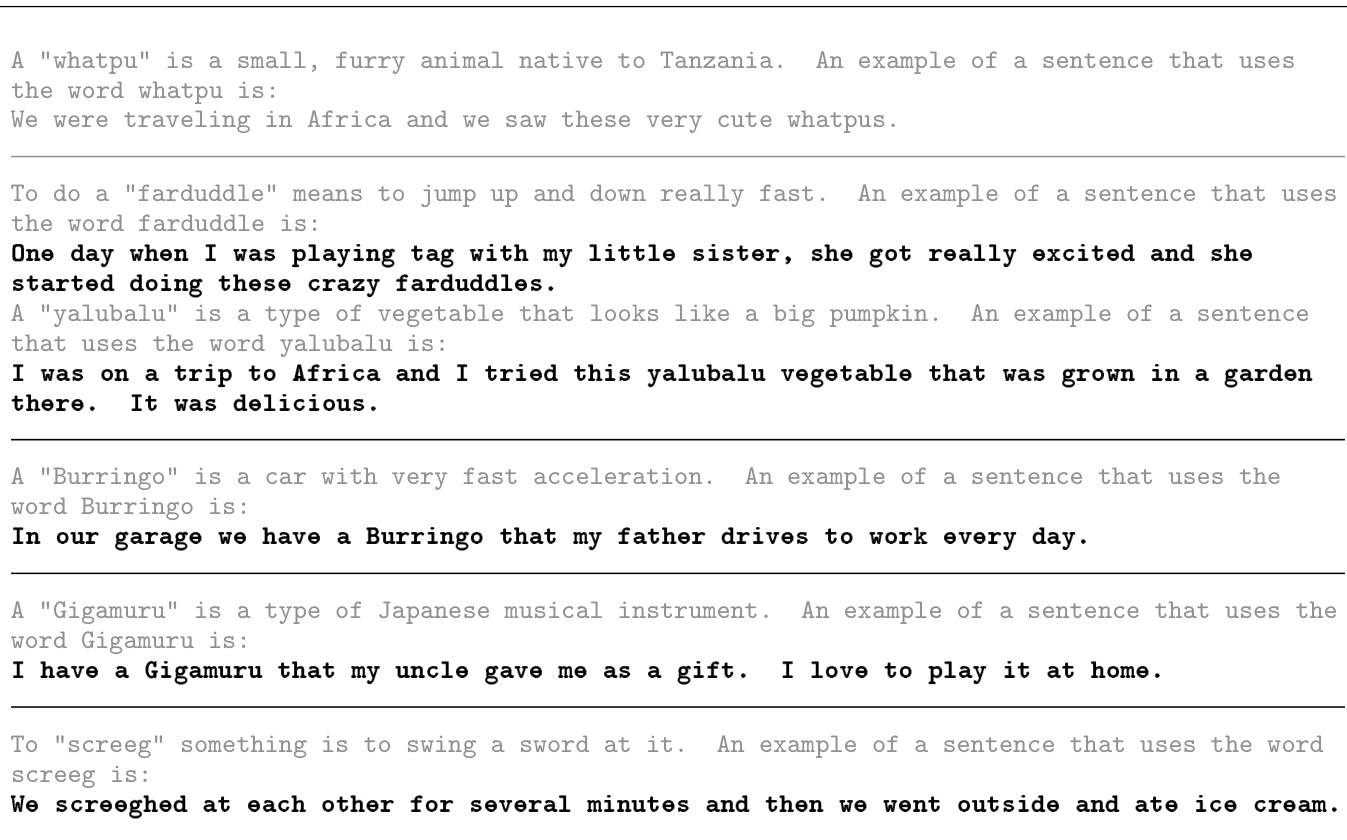

[Figure 2.1 panels] The three settings we explore for in-context learning: Zero-shot (the model predicts the answer given only a natural language description of the task), One-shot (in addition to the task description, the model sees a single example of the task), and Few-shot (in addition to the task description, the model sees a few examples of the task); no gradient updates are performed in any of them. Traditional fine-tuning (not used for GPT-3): the model is trained via repeated gradient updates using a large corpus of example tasks.

Figure 2.1: Zero-shot, one-shot and few-shot, contrasted with traditional fine-tuning. The panels above show four methods for performing a task with a language model – fine-tuning is the traditional method, whereas zero-, one-, and few-shot, which we study in this work, require the model to perform the task with only forward passes at test time. We typically present the model with a few dozen examples in the few-shot setting. Exact phrasings for all task descriptions, examples and prompts can be found in Appendix G.

• Zero-Shot (0S) is the same as one-shot except that no demonstrations are allowed, and the model is only given a natural language instruction describing the task. This method provides maximum convenience, potential for robustness, and avoidance of spurious correlations (unless they occur very broadly across the large corpus of pre-training data), but is also the most challenging setting. In some cases it may even be difficult for humans to understand the format of the task without prior examples, so this setting is in some cases “unfairly hard”. For example, if someone is asked to “make a table of world records for the 200m dash”, this request can be ambiguous, as it may not be clear exactly what format the table should have or what should be included (and even with careful clarification, understanding precisely what is desired can be difficult). Nevertheless, for at least some settings zero-shot is closest to how humans perform tasks – for example, in the translation example in Figure 2.1, a human would likely know what to do from just the text instruction.
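
The three in-context settings differ only in how many demonstrations $K$ precede the final query, and $K$ is bounded by the $n_{\mathrm{ctx}}=2048$ token window. A minimal Python sketch of assembling such a prompt follows. Its assumptions are labeled: the ` => ` separator between context and completion, the `reserve` of tokens kept free for the answer, and the hypothetical `count_tokens` helper, which is a whitespace word count standing in for the model's actual tokenizer.

```python
def count_tokens(text: str) -> int:
    # Hypothetical stand-in: whitespace word count, NOT the model's BPE tokenizer.
    return len(text.split())


def build_prompt(description, demos, query, k, n_ctx=2048, reserve=32):
    """Assemble a zero-shot (k=0), one-shot (k=1), or few-shot prompt.

    description: natural language task instruction.
    demos: (context, completion) pairs to draw demonstrations from.
    query: final context that the model is expected to complete.
    reserve: tokens left free for the completion (assumed value).
    """
    header = description + "\n"
    tail = f"{query} =>"
    budget = n_ctx - reserve - count_tokens(header) - count_tokens(tail)
    lines = []
    for context, completion in demos[:k]:
        line = f"{context} => {completion}\n"
        cost = count_tokens(line)
        if cost > budget:
            break  # the next demonstration would overflow the context window
        lines.append(line)
        budget -= cost
    return header + "".join(lines) + tail


# Example pairs chosen for illustration.
demos = [("sea otter", "loutre de mer"), ("red wine", "vin rouge")]
for k in (0, 1, 2):
    print(build_prompt("Translate English to French:", demos, "cheese", k=k))
    print("---")
```

Demonstrations are added in order and packing stops at the first one that does not fit; skipping it and trying shorter ones later would be an equally reasonable design.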

Figure 2.1 shows the four methods using the example of translating English to French. In this paper we focus on zero-shot, one-shot and few-shot, with the aim of comparing them not as competing alternatives, but as different problem settings which offer a varying trade-off between performance on specific benchmarks and sample efficiency. We especially highlight the few-shot results as many of them are only slightly behind state-of-the-art fine-tuned models. Ultimately, however, one-shot, or even sometimes zero-shot, seem like the fairest comparisons to human performance, and are important targets for future work.

Sections 2.1-2.3 below give details on our models, training data, and training process respectively. Section 2.4 discusses the details of how we do few-shot, one-shot, and zero-shot evaluations.

| Model Name | $n_{\mathrm{params}}$ | $n_{\mathrm{layers}}$ | $d_{\mathrm{model}}$ | $n_{\mathrm{heads}}$ | $d_{\mathrm{head}}$ | Batch Size (tokens) | Learning Rate |
|---|---|---|---|---|---|---|---|
| GPT-3 Small | 125M | 12 | 768 | 12 | 64 | 0.5M | 6.0 × 10⁻⁴ |
| GPT-3 Medium | 350M | 24 | 1024 | 16 | 64 | 0.5M | 3.0 × 10⁻⁴ |
| GPT-3 Large | 760M | 24 | 1536 | 16 | 96 | 0.5M | 2.5 × 10⁻⁴ |
| GPT-3 XL | 1.3B | 24 | 2048 | 24 | 128 | 1M | 2.0 × 10⁻⁴ |
| GPT-3 2.7B | 2.7B | 32 | 2560 | 32 | 80 | 1M | 1.6 × 10⁻⁴ |
| GPT-3 6.7B | 6.7B | 32 | 4096 | 32 | 128 | 2M | 1.2 × 10⁻⁴ |
| GPT-3 13B | 13.0B | 40 | 5140 | 40 | 128 | 2M | 1.0 × 10⁻⁴ |
| GPT-3 175B or "GPT-3" | 175.0B | 96 | 12288 | 96 | 128 | 3.2M | 0.6 × 10⁻⁴ |

Table 2.1: Sizes, architectures, and learning hyper-parameters (batch size in tokens and learning rate) of the models which we trained. All models were trained for a total of 300 billion tokens.

2.1 Model and Architectures

We use the same model and architecture as GPT-2 [RWC+19], including the modified initialization, pre-normalization, and reversible tokenization described therein, with the exception that we use alternating dense and locally banded sparse attention patterns in the layers of the transformer, similar to the Sparse Transformer [CGRS19]. To study the dependence of ML performance on model size, we train 8 different sizes of model, ranging over three orders of magnitude from 125 million parameters to 175 billion parameters, with the last being the model we call GPT-3. Previous work [KMH+20] suggests that with enough training data, scaling of validation loss should be approximately a smooth power law as a function of size; training models of many different sizes allows us to test this hypothesis both for validation loss and for downstream language tasks.
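
The alternation between dense and locally banded sparse attention can be pictured as two causal masks used in alternating layers. The NumPy sketch below builds both and applies one inside a masked softmax. The band width, the choice of dense masks on even layers, and all function names are assumptions for illustration, since this section does not specify them; practical sparse-attention implementations use specialized kernels rather than materializing dense boolean masks.

```python
import numpy as np


def dense_causal_mask(n_ctx: int) -> np.ndarray:
    """True where query position i may attend to key position j (all j <= i)."""
    return np.tril(np.ones((n_ctx, n_ctx), dtype=bool))


def banded_causal_mask(n_ctx: int, band: int) -> np.ndarray:
    """Causal mask limited to the `band` most recent positions (i - band < j <= i)."""
    i = np.arange(n_ctx)[:, None]
    j = np.arange(n_ctx)[None, :]
    return (j <= i) & (j > i - band)


def layer_masks(n_layers: int, n_ctx: int, band: int) -> list:
    """Alternate dense and banded masks across layers (even layers dense, by assumption)."""
    return [
        dense_causal_mask(n_ctx) if layer % 2 == 0 else banded_causal_mask(n_ctx, band)
        for layer in range(n_layers)
    ]


def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Softmax over keys with disallowed positions set to -inf before normalizing.

    Every row keeps at least its diagonal entry, so no row is entirely -inf.
    """
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)


masks = layer_masks(n_layers=4, n_ctx=8, band=3)
print(masks[1].astype(int))  # banded layer: each row sees at most 3 positions
```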

Table 2.1 shows the sizes and architectures of our 8 models. Here $n_{\mathrm{params}}$ is the total number of trainable parameters, $n_{\mathrm{layers}}$ is the total number of layers, $d_{\mathrm{model}}$ is the number of units in each bottleneck layer (we always have the feed-forward layer four times the size of the bottleneck layer, $d_{\mathrm{ff}} = 4 \cdot d_{\mathrm{model}}$), and $d_{\mathrm{head}}$ is the dimension of each attention head. All models use a context window of $n_{\mathrm{ctx}} = 2048$ tokens. We partition the model across GPUs along both the depth and width dimensions in order to minimize data transfer between nodes. The precise architectural parameters for each model are chosen based on computational efficiency and load balancing in the layout of models across GPUs. Previous work $[\mathrm{KMH^{+}}20]$ suggests that validation loss is not strongly sensitive to these parameters within a reasonably broad range.
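As a rough consistency check on Table 2.1, the non-embedding parameter count of a GPT-style transformer is commonly approximated as $12\, n_{\mathrm{layers}} d_{\mathrm{model}}^2$: four $d_{\mathrm{model}} \times d_{\mathrm{model}}$ attention projections plus two feed-forward matrices of size $d_{\mathrm{model}} \times 4 d_{\mathrm{model}}$, ignoring biases and layer norms. The sketch below adds token and position embeddings, assuming GPT-2's 50,257-token BPE vocabulary and $n_{\mathrm{ctx}} = 2048$; the function name and the vocabulary size are assumptions, not figures taken from this section.

```python
# Rough parameter count for a GPT-style decoder (a sketch, not the authors' accounting).
# Assumption: GPT-2's 50,257-token BPE vocabulary and learned position embeddings.
VOCAB_SIZE = 50_257
N_CTX = 2048


def approx_param_count(n_layers: int, d_model: int,
                       vocab_size: int = VOCAB_SIZE, n_ctx: int = N_CTX) -> int:
    # Per layer: Q, K, V, O projections (4 * d^2) + feed-forward with d_ff = 4d (2 * 4d^2).
    non_embedding = 12 * n_layers * d_model ** 2
    # Token embedding matrix plus position embedding matrix.
    embedding = (vocab_size + n_ctx) * d_model
    return non_embedding + embedding


for name, n_layers, d_model in [("GPT-3 Small", 12, 768),
                                ("GPT-3 Medium", 24, 1024),
                                ("GPT-3 175B", 96, 12288)]:
    print(f"{name}: ~{approx_param_count(n_layers, d_model) / 1e6:,.0f}M parameters")
```

This gives roughly 125M, 356M, and 174.6B parameters, close to the 125M, 350M, and 175B listed in Table 2.1.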

2.2 Training Dataset

Datasets for language models have rapidly expanded, culminating in the Common Crawl dataset$^2$ $[\mathrm{RSR^{+}}19]$, which constitutes nearly a trillion words. A dataset of this size is sufficient to train our largest models without ever updating on the same sequence twice. However, we have found that unfiltered or lightly filtered versions of Common Crawl tend to have lower quality than more curated datasets. Therefore, we took 3 steps to improve the average quality of our datasets: (1) we downloaded and filtered a version of Common Crawl based on similarity to a range of high-quality reference corpora, (2) we performed fuzzy deduplication at the document level, within and across datasets, to prevent redundancy and preserve the integrity of our held-out validation set as an accurate measure of overfitting, and (3) we added known high-quality reference corpora to the training mix to augment Common Crawl and increase its diversity.
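Step (2) can be illustrated with MinHash, a standard way to estimate the Jaccard similarity of two documents' word n-gram sets without comparing them in full. The sketch below is not the authors' pipeline (Appendix A describes that). The 5-word shingles, 64 hash functions, 0.8 threshold, and the helper names are illustrative assumptions, and the pairwise comparison is quadratic, so a corpus at Common Crawl scale would additionally need locality-sensitive hashing to avoid comparing every pair.

```python
import hashlib
import re


def shingles(text: str, k: int = 5) -> set[str]:
    """Lower-cased word k-grams; very short documents become a single shingle."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {" ".join(words)}
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def minhash_signature(items: set[str], num_hashes: int = 64) -> list[int]:
    """For each salted hash function, keep the minimum 64-bit hash over all shingles."""
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(8, "little")
        signature.append(min(
            int.from_bytes(hashlib.blake2b(item.encode(), digest_size=8, salt=salt).digest(), "little")
            for item in items))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """The fraction of matching minimums estimates the Jaccard similarity of the shingle sets."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def fuzzy_dedup(docs: list[str], threshold: float = 0.8) -> list[str]:
    """Keep a document only if it is not a near-duplicate of one already kept (O(n^2))."""
    kept, kept_sigs = [], []
    for doc in docs:
        sig = minhash_signature(shingles(doc))
        if all(estimated_jaccard(sig, other) < threshold for other in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept


base = " ".join(f"word{i}" for i in range(100))
near_copy = " ".join(f"word{i}" for i in range(99)) + " changed"  # one word differs
unrelated = " ".join(f"other{i}" for i in range(100))
print(len(fuzzy_dedup([base, near_copy, unrelated])))  # the near-copy is dropped
```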

Details of the first two points (processing of Common Crawl) are described in Appendix A. For the third, we added several curated high-quality datasets, including an expanded version of the WebText dataset [RWC+19], collected by scraping links over a longer period of time, and first described in $[\mathrm{KMH^{+}}20]$ , two internet-based books corpora (Books1 and Books2) and English-language Wikipedia.

Table 2.2 shows the final mixture of datasets that we used in training. The Common Crawl data was downloaded from 41 shards of monthly Common Crawl covering 2016 to 2019, constituting 45TB of compressed plaintext before filtering and 570GB after filtering, roughly equivalent to 400 billion byte-pair-encoded tokens. Note that during training, datasets are not sampled in proportion to their size; rather, datasets we view as higher-quality are sampled more frequently, such that the Common Crawl and Books2 datasets are sampled less than once during training, while the other datasets are sampled 2-3 times. This essentially accepts a small amount of overfitting in exchange for higher-quality training data.
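Sampling by mixture weight rather than by size can be sketched with Python's `random.choices`, which here picks the source dataset of each training sequence with probability proportional to the weights in Table 2.2. This is a minimal illustration under that assumption; how the authors actually packed documents into training sequences is not described in this section.

```python
import random
from collections import Counter

# Mixture weights from Table 2.2. They sum to 1.01 because of rounding;
# random.choices treats them as relative weights, so no renormalization is needed.
MIX_WEIGHTS = {
    "Common Crawl (filtered)": 0.60,
    "WebText2": 0.22,
    "Books1": 0.08,
    "Books2": 0.08,
    "Wikipedia": 0.03,
}


def sample_sources(n: int, seed: int = 0) -> list[str]:
    """Choose the source dataset for each of n training sequences by weight, not by size."""
    rng = random.Random(seed)
    names = list(MIX_WEIGHTS)
    return rng.choices(names, weights=[MIX_WEIGHTS[k] for k in names], k=n)


counts = Counter(sample_sources(100_000))
total_weight = sum(MIX_WEIGHTS.values())
for name, weight in MIX_WEIGHTS.items():
    print(f"{name}: observed {counts[name] / 100_000:.3f}, expected {weight / total_weight:.3f}")
```

Because Wikipedia is small but still receives 3% of the draws, it is revisited several times over a long run, which is exactly the trade-off the paragraph above describes.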

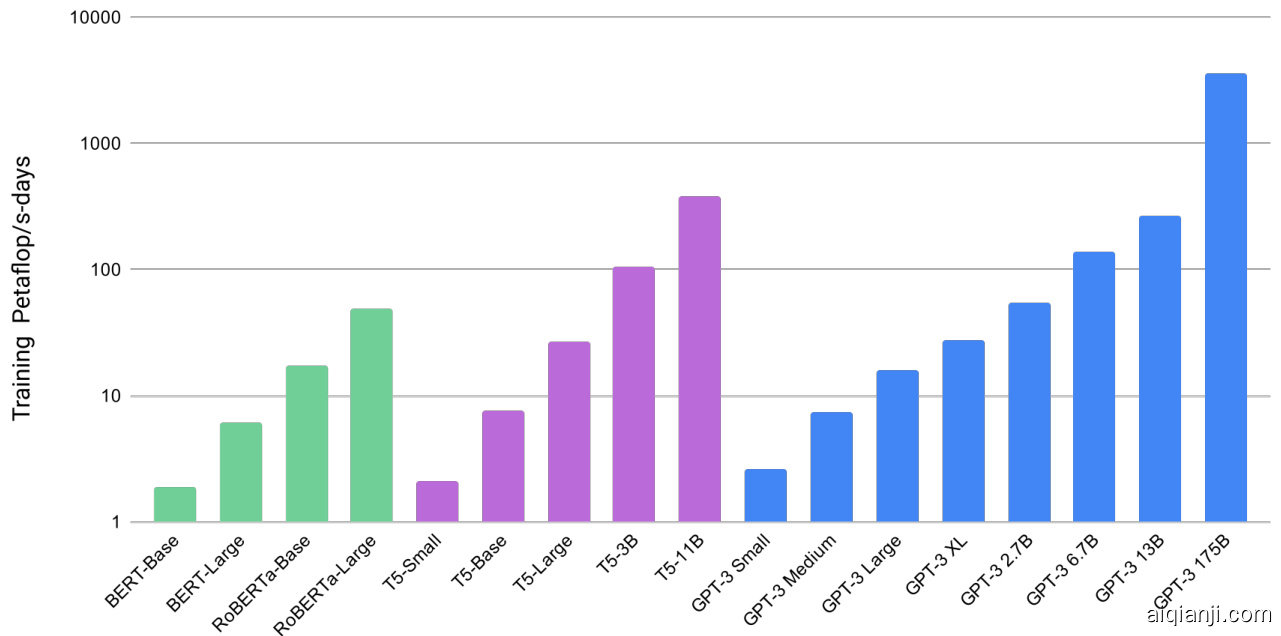

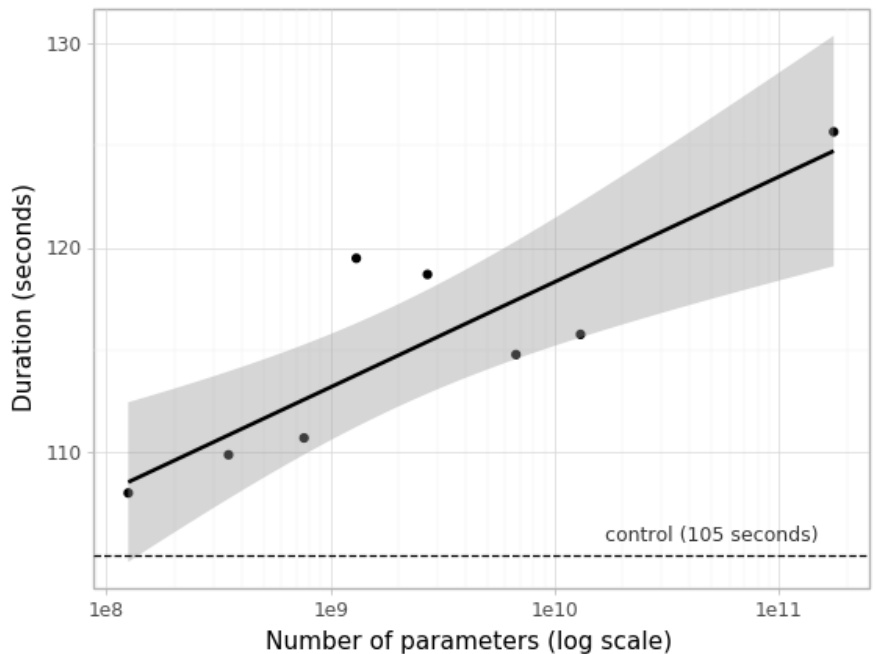

Figure 2.2: Total compute used during training. Based on the analysis in Scaling Laws For Neural Language Models $[\mathrm{K}\mathrm{MH}^{+}20]$ we train much larger models on many fewer tokens than is typical. As a consequence, although GPT-3 3B is almost $10\mathrm{x}$ larger than RoBERTa-Large (355M params), both models took roughly 50 petaflop/s-days of compute during pre-training. Methodology for these calculations can be found in Appendix D.
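The petaflop/s-day unit in the caption is $10^{15}$ operations per second sustained for one day, i.e. $8.64 \times 10^{19}$ operations. A common approximation, assumed here rather than reproduced from Appendix D, is that training costs about $6ND$ operations for $N$ parameters and $D$ tokens (roughly 2 per parameter per token for the forward pass and 4 for the backward pass, ignoring attention over the context). Under that assumption, training on 300 billion tokens works out as follows.

```python
PFLOP_S_DAY = 1e15 * 86_400  # operations in one petaflop/s-day


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training cost: ~6 operations per parameter per token (forward + backward)."""
    return 6.0 * n_params * n_tokens


def petaflop_s_days(n_params: float, n_tokens: float) -> float:
    return training_flops(n_params, n_tokens) / PFLOP_S_DAY


TOKENS = 300e9  # every model in Table 2.1 was trained on 300B tokens
for name, n_params in [("GPT-3 2.7B", 2.7e9), ("GPT-3 175B", 175e9)]:
    print(f"{name}: {training_flops(n_params, TOKENS):.2e} FLOPs, "
          f"{petaflop_s_days(n_params, TOKENS):,.0f} petaflop/s-days")
```

The 2.7B estimate of about 56 petaflop/s-days is consistent with the caption's "roughly 50".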

| Dataset | Quantity (tokens) | Weight in training mix | Epochs elapsed when training for 300B tokens |
|---|---|---|---|
| Common Crawl (filtered) | 410 billion | 60% | 0.44 |
| WebText2 | 19 billion | 22% | 2.9 |
| Books1 | 12 billion | 8% | 1.9 |
| Books2 | 55 billion | 8% | 0.43 |
| Wikipedia | 3 billion | 3% | 3.4 |

Table 2.2: Datasets used to train GPT-3. “Weight in training mix” refers to the fraction of examples during training that are drawn from a given dataset, which we intentionally do not make proportional to the size of the dataset. As a result, when we train for 300 billion tokens, some datasets are seen up to 3.4 times during training while other datasets are seen less than once.
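A naive reading of the caption is that epochs ≈ weight × 300B ÷ dataset size. The sketch below applies that formula to the rounded values in Table 2.2. It lands near the table for Common Crawl (0.44), Books1 (2.0 vs. 1.9), and Books2 (0.44 vs. 0.43), but gives about 3.5 for WebText2 (table: 2.9) and 3.0 for Wikipedia (table: 3.4). The rounded weights and token counts shown are therefore not enough to reproduce every entry of the last column exactly.

```python
# (size in billions of tokens, weight in training mix), copied from Table 2.2.
DATASETS = {
    "Common Crawl (filtered)": (410, 0.60),
    "WebText2": (19, 0.22),
    "Books1": (12, 0.08),
    "Books2": (55, 0.08),
    "Wikipedia": (3, 0.03),
}


def naive_epochs(total_tokens_b: float = 300.0) -> dict[str, float]:
    """Tokens drawn from each dataset (weight * total) divided by that dataset's size."""
    return {name: weight * total_tokens_b / size for name, (size, weight) in DATASETS.items()}


for name, epochs in naive_epochs().items():
    print(f"{name}: {epochs:.2f} epochs")
```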

A major methodological concern with language models pretrained on a broad swath of internet data, particularly large models with the capacity to memorize vast amounts of content, is potential contamination of downstream tasks by having their test or development sets inadvertently seen during pre-training. To reduce such contamination, we searched for and attempted to remove any overlaps with the development and test sets of all benchmarks studied in this paper. Unfortunately, a bug in the filtering caused us to ignore some overlaps, and due to the cost of training it was not feasible to retrain the model. In Section 4 we characterize the impact of the remaining overlaps, and in future work we will more aggressively remove data contamination.
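Overlap between training data and benchmark sets is often detected by looking for shared word n-grams. The sketch below flags any evaluation example that shares at least one n-gram with the training corpus. The choice of n = 13, the lower-cased word tokenization, and the function names are assumptions for illustration; this is not the filtering procedure (or its bug) described above, and Section 4 characterizes the authors' actual analysis.

```python
import re


def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased word n-grams; texts shorter than n words yield no n-grams."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def flag_overlaps(eval_examples: list[str], training_docs: list[str], n: int = 13) -> list[int]:
    """Return indices of evaluation examples sharing at least one n-gram with the training docs."""
    train_ngrams: set[tuple[str, ...]] = set()
    for doc in training_docs:
        train_ngrams |= word_ngrams(doc, n)
    return [i for i, example in enumerate(eval_examples)
            if word_ngrams(example, n) & train_ngrams]


training = ["the quick brown fox jumps over the lazy dog while the cat sleeps on the warm mat today"]
evaluation = [
    "Q: the quick brown fox jumps over the lazy dog while the cat sleeps on the warm mat?",
    "an entirely different question with no overlap at all here",
]
print(flag_overlaps(evaluation, training))  # only the first example is flagged
```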

2.3 Training Process

As found in [KMH+20, MKAT18], larger models can typically use a larger batch size, but require a smaller learning rate. We measure the gradient noise scale during training and use it to guide our choice of batch size [MKAT18]. Table 2.1 shows the parameter settings we used. To train the larger models without running out of memory, we use a mixture of model parallelism within each matrix multiply and model parallelism across the layers of the network. All models were trained on V100 GPUs on part of a high-bandwidth cluster provided by Microsoft. Details of the training process and hyperparameter settings are described in Appendix B.
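The simple gradient noise scale of [MKAT18] is $B_{\mathrm{simple}} = \operatorname{tr}(\Sigma)/|G|^2$, the ratio of per-example gradient variance to the squared true gradient; batches much smaller than it are dominated by gradient noise. Since the expected squared norm of a batch-$B$ gradient is $|G|^2 + \operatorname{tr}(\Sigma)/B$, measuring squared gradient norms at two batch sizes yields estimates of both terms. The sketch below implements that two-batch estimator; how GPT-3's training code measured the noise scale is not described here, and in practice such estimates are typically smoothed over many steps because single measurements are noisy.

```python
def noise_scale_from_two_batches(b_small: int, sq_norm_small: float,
                                 b_big: int, sq_norm_big: float) -> float:
    """Estimate B_simple = tr(Sigma) / |G|^2 from squared gradient norms at two batch sizes.

    Uses E[|G_B|^2] = |G|^2 + tr(Sigma) / B and solves for the two unknowns.
    """
    if b_small >= b_big:
        raise ValueError("b_small must be smaller than b_big")
    true_grad_sq = (b_big * sq_norm_big - b_small * sq_norm_small) / (b_big - b_small)
    trace_sigma = (sq_norm_small - sq_norm_big) / (1.0 / b_small - 1.0 / b_big)
    return trace_sigma / true_grad_sq


# Synthetic check: |G|^2 = 4 and tr(Sigma) = 400, so the noise scale should be 100.
print(noise_scale_from_two_batches(10, 4 + 400 / 10, 1000, 4 + 400 / 1000))
```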

2.4 Evaluation

For few-shot learning, we evaluate each example in the evaluation set by randomly drawing $K$ examples from that task’s training set as conditioning, delimited by 1 or 2 newlines depending on the task. For LAMBADA and StoryCloze there is no supervised training set available, so we draw conditioning examples from the development set and evaluate on the test set. For Winograd (the original, not the SuperGLUE version) there is only one dataset, so we draw conditioning examples directly from it.
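The conditioning procedure amounts to sampling $K$ labeled examples and concatenating them ahead of the unlabeled query. In the minimal sketch below, the `(context, completion)` pair representation, the helper name, and the blank-line delimiter are assumptions for illustration; the task-specific prompt formats are given in Appendix G.

```python
import random
from typing import Optional


def build_few_shot_prompt(train_set: list[tuple[str, str]], query_context: str,
                          k: int, delimiter: str = "\n\n",
                          seed: Optional[int] = None) -> str:
    """Join K randomly drawn (context, completion) demonstrations and the query context.

    The model is then asked to continue the prompt after query_context.
    """
    rng = random.Random(seed)
    demos = rng.sample(train_set, k)  # K distinct conditioning examples
    parts = [context + completion for context, completion in demos]
    parts.append(query_context)
    return delimiter.join(parts)


train = [("Q: 2 + 2 =", " 4"), ("Q: 3 + 5 =", " 8"), ("Q: 7 + 1 =", " 8")]
print(build_few_shot_prompt(train, "Q: 6 + 3 =", k=2, seed=0))
```

With `k=0` the prompt reduces to the query alone, which is the zero-shot case.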

$K$ can be any value from 0 to the maximum amount allowed by the model’s context window, which is $n_{\mathrm{ctx}}=2048$ for all models and typically fits 10 to 100 examples. Larger values of $K$ are usually but not always better, so when separate development and test sets are available, we experiment with a few values of $K$ on the development set and then run the best value on the test set. For some tasks (see Appendix G) we also use a natural language prompt in addition to (or, for $K=0$, instead of) demonstrations.
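How many demonstrations fit is simple token arithmetic. The sketch below assumes each demonstration's token count already includes its delimiter and that demonstrations are added in order until the next one would overflow; it returns the largest usable $K$. With a 2048-token window and demonstrations of roughly 20 to 200 tokens, this lands in the 10 to 100 range mentioned above. The function name is hypothetical.

```python
def max_demonstrations(demo_token_counts: list[int], query_tokens: int,
                       n_ctx: int = 2048) -> int:
    """Largest K such that the first K demonstrations plus the query fit in n_ctx tokens."""
    budget = n_ctx - query_tokens
    k = 0
    for count in demo_token_counts:
        if count > budget:
            break
        budget -= count
        k += 1
    return k


print(max_demonstrations([50] * 100, query_tokens=48))   # 2000 tokens of budget / 50 each
print(max_demonstrations([200] * 20, query_tokens=48))   # 2000 tokens of budget / 200 each
```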

On tasks that involve choosing one correct completion from several options (multiple choice), we provide $K$ examples of context plus correct completion, followed by one example of context only, and compare the LM likelihood of each completion. For most tasks we compare the per-token likelihood (to normalize for length); however, on a small number of datasets (ARC, OpenBookQA, and RACE) we gain additional benefit, as measured on the development set, by normalizing by the unconditional probability of each completion, computing $\frac{P(\mathrm{completion} \mid \mathrm{context})}{P(\mathrm{completion} \mid \mathrm{answer\_context})}$, where answer_context is the string "Answer: " or "A: " and is used to prompt that the completion should be an answer but is otherwise generic.
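Both scoring rules can be written over per-token log-probabilities of each candidate completion. In the sketch below those log-probabilities are hypothetical inputs assumed to come from some language model. Per-token normalization averages the conditional log-probabilities, while unconditional normalization takes the log of the ratio above: the summed log-probability given the context minus the summed log-probability given only answer_context.

```python
from typing import Sequence


def pick_per_token(cond_logprobs: Sequence[Sequence[float]]) -> int:
    """Index of the option with the highest mean log-probability per token."""
    scores = [sum(lp) / len(lp) for lp in cond_logprobs]
    return max(range(len(scores)), key=scores.__getitem__)


def pick_unconditional(cond_logprobs: Sequence[Sequence[float]],
                       uncond_logprobs: Sequence[Sequence[float]]) -> int:
    """Index maximizing log P(completion | context) - log P(completion | answer_context)."""
    scores = [sum(c) - sum(u) for c, u in zip(cond_logprobs, uncond_logprobs)]
    return max(range(len(scores)), key=scores.__getitem__)


# Option 1 scores well per token but is almost as likely without the context;
# option 0 is much more likely given the context than without it.
cond = [[-1.0, -1.0], [-0.5]]
uncond = [[-3.0, -3.0], [-0.6]]
print(pick_per_token(cond), pick_unconditional(cond, uncond))  # prints: 1 0
```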

On tasks that involve binary classification, we give the options more semantically meaningful names (e.g. “True” or “False” rather than 0 or 1) and then treat the task like multiple choice; we also sometimes frame the task similar to what is done by $[\mathrm{RSR^{+}}19]$ (see Appendix G for details).

On tasks with free-form completion, we use beam search with the same parameters as $[\mathrm{RSR^{+}}19]$: a beam width of 4 and a length penalty of $\alpha=0.6$. We score the model using F1 similarity score, BLEU, or exact match, depending on what is standard for the dataset at hand.
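Beam search with a length penalty ranks finished hypotheses by a length-normalized log-probability, so longer outputs are not penalized merely for containing more tokens. Assuming the GNMT-style penalty of Wu et al. (2016), $\mathrm{lp}(Y) = ((5 + |Y|)/6)^{\alpha}$, which is the form the $\alpha$ parameter conventionally refers to, the rescoring step looks like the sketch below. It shows only the scoring function, not a full beam search.

```python
def length_penalty(length: int, alpha: float = 0.6) -> float:
    """GNMT-style penalty (assumed form): ((5 + length) / 6) ** alpha."""
    return ((5.0 + length) / 6.0) ** alpha


def normalized_score(total_logprob: float, length: int, alpha: float = 0.6) -> float:
    """Score used to rank finished beam hypotheses: log P(Y) / lp(Y)."""
    return total_logprob / length_penalty(length, alpha)


short_score = normalized_score(-3.0, length=2)
long_score = normalized_score(-3.3, length=10)
print(f"short: {short_score:.3f}, long: {long_score:.3f}")  # the longer hypothesis ranks higher
```

Without the penalty the 2-token hypothesis would win (-3.0 > -3.3); with $\alpha = 0.6$ the 10-token one is preferred.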

Final results are reported on the test set when publicly available, for each model size and learning setting (zero-, one-, and few-shot). When the test set is private, our model is often too large to fit on the test server, so we report results on the development set. We do submit to the test server on a small number of datasets (SuperGLUE, TriviaQA, PIQA) where we were able to make submission work; there we submit only the 175B few-shot results, and we report development set results for everything else.

3 Results

In Figure 3.1 we display training curves for the 8 models described in Section 2. For this graph we also include 6 additional extra-small models with as few as 100,000 parameters. As observed in $[\mathrm{KMH^{+}}20]$ , language modeling performance follows a power-law when making efficient use of training compute. After extending this trend by two more orders of magnitude, we observe only a slight (if any) departure from the power-law. One might worry that these improvements in cross-entropy loss come only from modeling spurious details of our training corpus. However, we will see in the following sections that improvements in cross-entropy loss lead to consistent performance gains across a broad spectrum of natural language tasks.
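A power law $L = a\,C^{-b}$ is a straight line in log-log space, $\log L = \log a - b \log C$, so its exponent can be read off with ordinary least squares on the logarithms. The sketch below fits that simplified form (it omits any irreducible-loss constant) to synthetic points generated from a known law, purely to illustrate the procedure; it uses no data from Figure 3.1.

```python
import math


def fit_power_law(compute: list[float], loss: list[float]) -> tuple[float, float]:
    """Fit L = a * C**(-b) by least squares on log L = log a - b log C; returns (a, b)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(value) for value in loss]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic points from L = 2.5 * C**-0.05 over six orders of magnitude of compute.
compute = [10.0 ** k for k in range(-3, 4)]
loss = [2.5 * c ** -0.05 for c in compute]
a, b = fit_power_law(compute, loss)
print(f"a = {a:.3f}, b = {b:.3f}")
```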

Below, we evaluate the 8 models described in Section 2 (the 175 billion parameter GPT-3 and 7 smaller models) on a wide range of datasets. We group the datasets into 9 categories representing roughly similar tasks.

In Section 3.1 we evaluate on traditional language modeling tasks and tasks that are similar to language modeling, such as Cloze tasks and sentence/paragraph completion tasks. In Section 3.2 we evaluate on “closed book” question answering tasks: tasks which require using the information stored in the model’s parameters to answer general knowledge questions. In Section 3.3 we evaluate the model’s ability to translate between languages (especially one-shot and few-shot). In Section 3.4 we evaluate the model’s performance on Winograd Schema-like tasks. In Section 3.5 we evaluate on datasets that involve commonsense reasoning or question answering. In Section 3.6 we evaluate on reading comprehension tasks, in Section 3.7 we evaluate on the SuperGLUE benchmark suite, and in Section 3.8 we briefly explore NLI. Finally, in Section 3.9, we invent some additional tasks designed especially to probe in-context learning abilities; these tasks focus on on-the-fly reasoning, adaptation skills, or open-ended text synthesis. We evaluate all tasks in the few-shot, one-shot, and zero-shot settings.

Figure 3.1: Smooth scaling of performance with compute. Performance (measured in terms of cross-entropy validation loss) follows a power-law trend with the amount of compute used for training. The power-law behavior observed in $[\mathrm{KMH^{+}}20]$ continues for an additional two orders of magnitude with only small deviations from the predicted curve. For this figure, we exclude embedding parameters from compute and parameter counts.

Table 3.1: Zero-shot results on the PTB language modeling dataset. Many other common language modeling datasets are omitted because they are derived from Wikipedia or other sources which are included in GPT-3’s training data. $^a[\mathrm{RWC^{+}}19]$

| Setting | PTB |
|---|---|
| SOTA (Zero-Shot) | 35.8$^a$ |
| GPT-3 Zero-Shot | 20.5 |

3.1 Language Modeling, Cloze, and Completion Tasks

In this section we test GPT-3’s performance on the traditional task of language modeling, as well as related tasks that involve predicting a single word of interest, completing a sentence or paragraph, or choosing between possible completions of a piece of text.

3.1.1 Language Modeling

We calculate zero-shot perplexity on the Penn Tree Bank (PTB) $[\mathrm{MKM^{+}}94]$ dataset measured in $[\mathrm{RWC^{+}}19]$. We omit the 4 Wikipedia-related tasks in that work because they are entirely contained in our training data, and we also omit the one-billion word benchmark due to a high fraction of the dataset being contained in our training set. PTB escapes these issues due to predating the modern internet. Our largest model sets a new SOTA on PTB by a substantial margin of 15 points, achieving a perplexity of 20.50. Note that since PTB is a traditional language modeling dataset, it does not have a clear separation into examples around which to define one-shot or few-shot evaluation, so we measure only zero-shot.
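Perplexity is the exponential of the average negative log-likelihood per token, so lower is better; a perplexity of about 20 means the model is, on average, roughly as uncertain as a uniform choice among about 20 tokens. A minimal sketch, assuming natural-log token probabilities from some model (the input list is hypothetical):

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """exp of the mean negative log-likelihood (natural-log probabilities)."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# A model that always assigns probability 1/20 to the correct token has perplexity 20.
print(perplexity([math.log(1 / 20)] * 50))
```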

3.1.2 LAMBADA

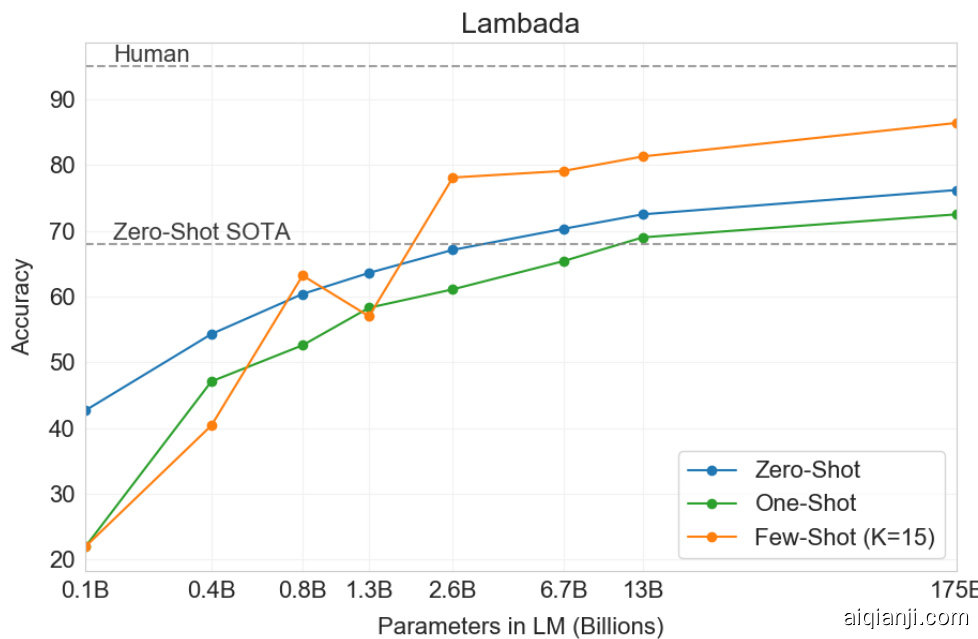

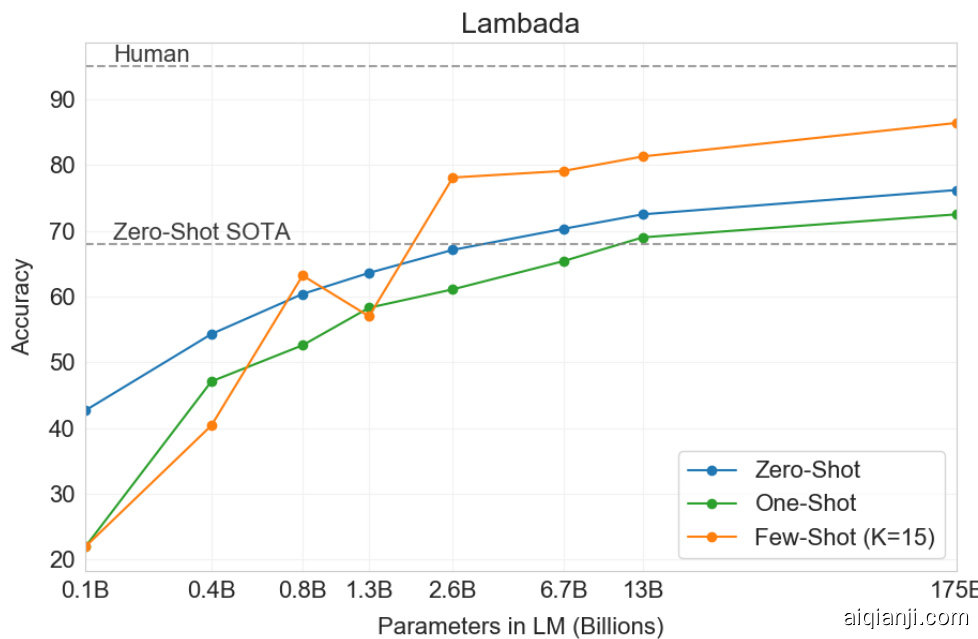

The LAMBADA dataset $[\mathrm{PKL^{+}}16]$ tests the modeling of long-range dependencies in text: the model is asked to predict the last word of sentences which require reading a paragraph of context. It has recently been suggested that the continued scaling of language models is yielding diminishing returns on this difficult benchmark. $[\mathrm{BHT^{+}}20]$ reflect on the small 1.5% improvement achieved by a doubling of model size between two recent state-of-the-art results ($[\mathrm{SPP^{+}}19]$ and $[\mathrm{Tur}20]$) and argue that “continuing to expand hardware and data sizes by orders of magnitude is not the path forward”. We find that path is still promising: in a zero-shot setting GPT-3 achieves 76% on LAMBADA, a gain of 8% over the previous state of the art.

| Setting | LAMBADA (acc) | LAMBADA (ppl) | StoryCloze (acc) | HellaSwag (acc) |
|---|---|---|---|---|
| SOTA | 68.0$^a$ | 8.63$^b$ | 91.8$^c$ | 85.6$^d$ |
| GPT-3 Zero-Shot | 76.2 | 3.00 | 83.2 | 78.9 |
| GPT-3 One-Shot | 72.5 | 3.35 | 84.7 | 78.1 |
| GPT-3 Few-Shot | 86.4 | 1.92 | 87.7 | 79.3 |

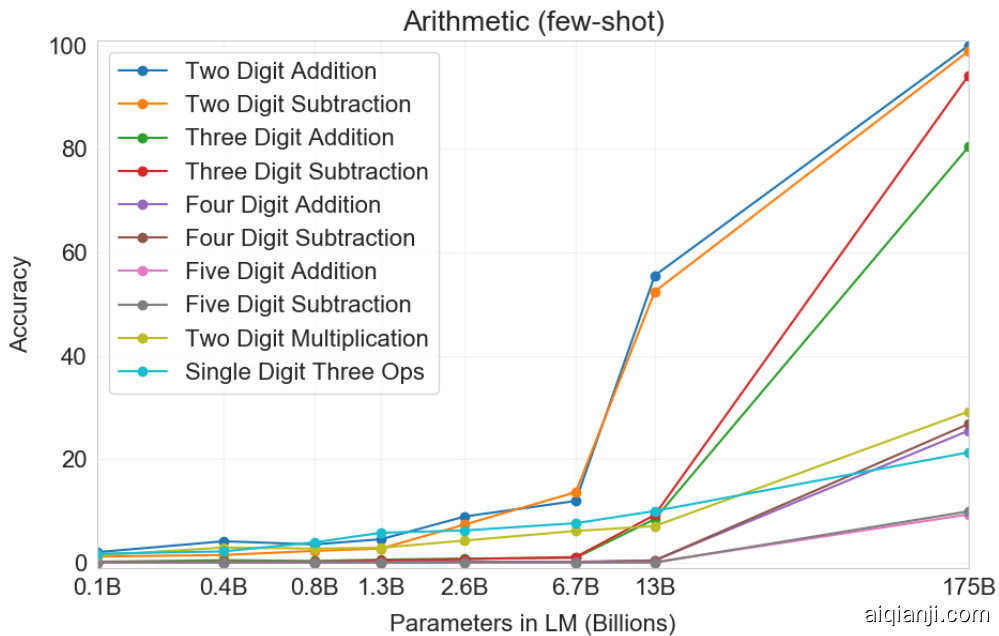

Table 3.2: Performance on cloze and completion tasks. GPT-3 significantly improves SOTA on LAMBADA while achieving respectable performance on two difficult completion prediction datasets. $^a[\mathrm{Tur}20]$ $^b[\mathrm{RWC^{+}}19]$ $^c[\mathrm{LDL}19]$ $^d[\mathrm{LCH^{+}}20]$

Figure 3.2: On LAMBADA, the few-shot capability of language models results in a strong boost to accuracy. GPT-3 2.7B outperforms the SOTA 17B parameter Turing-NLG [Tur20] in this setting, and GPT-3 175B advances the state of the art by 18%. Note zero-shot uses a different format from one-shot and few-shot as described in the text.

LAMBADA is also a demonstration of the flexibility of few-shot learning as it provides a way to address a problem that classically occurs with this dataset. Although the completion in LAMBADA is always the last word in a sentence, a standard language model has no way of knowing this detail. It thus assigns probability not only to the correct ending but also to other valid continuations of the paragraph. This problem has been partially addressed in the past with stop-word filters $[\mathrm{RWC^{+}}19]$ (which ban “continuation” words). The few-shot setting instead allows us to “frame” the task as a cloze-test and allows the language model to infer from examples that a completion of exactly one word is desired. We use the following fill-in-the-blank format:

Alice was friends with Bob. Alice went to visit her friend ___. → **Bob**

George bought some baseball equipment, a ball, a glove, and a ___. →
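Turning a LAMBADA passage into this format means splitting off its final word and replacing it with a blank. The helpers below are a hypothetical sketch of that transformation, assuming whitespace tokenization and one demonstration per line; the second passage's final word ("bat") is made up for the illustration, and the exact spacing and delimiters the authors used may differ.

```python
def to_cloze(passage: str, include_answer: bool) -> str:
    """Blank out the final word of a passage, optionally appending it after the arrow."""
    context, last_word = passage.rsplit(maxsplit=1)
    line = f"{context} ___. →"
    return f"{line} {last_word}" if include_answer else line


def lambada_few_shot_prompt(demos: list[str], target: str) -> str:
    """Solved demonstrations first, then the target passage with its last word hidden."""
    lines = [to_cloze(passage, include_answer=True) for passage in demos]
    lines.append(to_cloze(target, include_answer=False))
    return "\n".join(lines)


print(lambada_few_shot_prompt(
    ["Alice was friends with Bob. Alice went to visit her friend Bob"],
    "George bought some baseball equipment, a ball, a glove, and a bat"))
```

The model's prediction is then read as the single word that follows the final arrow.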

When presented with examples formatted this way, GPT-3 achieves 86.4% accuracy in the few-shot setting, an increase of over 18% from the previous state of the art. We observe that few-shot performance improves strongly with model size. While this setting decreases the performance of the smallest model by almost 20%, for GPT-3 it improves accuracy by 10%. Finally, the fill-in-the-blank method is not effective in the one-shot setting, where it always performs worse than the zero-shot setting. Perhaps this is because all models still require several examples to recognize the pattern.

| Setting | NaturalQs | WebQS | TriviaQA |
|---|---|---|---|
| RAG (Fine-tuned, Open-Domain) [LPP+20] | 44.5 | 45.5 | 68.0 |
| T5-11B+SSM (Fine-tuned, Closed-Book) [RRS20] | 36.6 | 44.7 | 60.5 |
| T5-11B$^3$ (Fine-tuned, Closed-Book) | 34.5 | 37.4 | 50.1 |
| GPT-3 Zero-Shot | 14.6 | 14.4 | 64.3 |
| GPT-3 One-Shot | 23.0 | 25.3 | 68.0 |
| GPT-3 Few-Shot | 29.9 | 41.5 | 71.2 |

One note of caution is that an analysis of test set contamination identified that a significant minority of the LAMBADA dataset appears to be present in our training data – however analysis performed in Section 4 suggests negligible impact on performance.

3.1.3 HellaSwag

The HellaSwag dataset $[\mathrm{ZHB^{+}}19]$ involves picking the best ending to a story or set of instructions. The examples were adversarially mined to be difficult for language models while remaining easy for humans (who achieve 95.6% accuracy). GPT-3 achieves 78.1% accuracy in the one-shot setting and 79.3% accuracy in the few-shot setting, outperforming the 75.4% accuracy of a fine-tuned 1.5B parameter language model $[\mathrm{ZHR^{+}}19]$ but still a fair amount lower than the overall SOTA of 85.6% achieved by the fine-tuned multi-task model ALUM.

3.1.4 StoryCloze

We next evaluate GPT-3 on the StoryCloze 2016 dataset $[\mathrm{MCH^{+}}16]$, which involves selecting the correct ending sentence for five-sentence long stories. Here GPT-3 achieves 83.2% in the zero-shot setting and 87.7% in the few-shot setting (with $K=70$). This is still 4.1% lower than the fine-tuned SOTA using a BERT-based model [LDL19] but improves over previous zero-shot results by roughly 10%.

3.2 Closed Book Question Answering

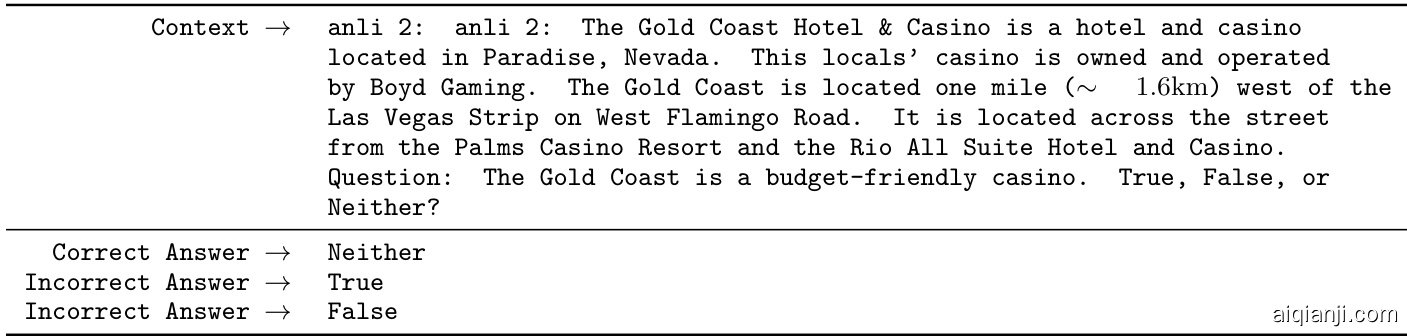

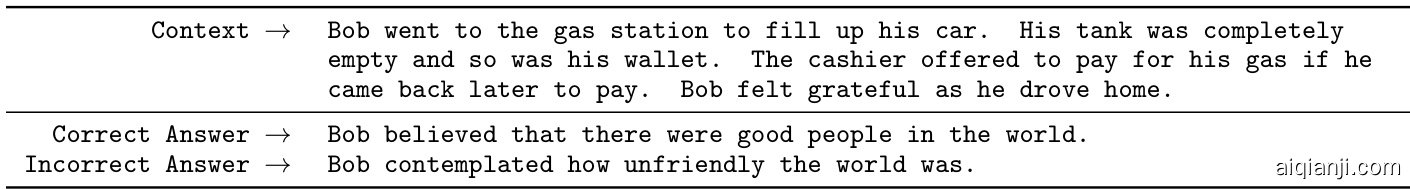

In this section we measure GPT-3’s ability to answer questions about broad factual knowledge. Due to the immense amount of possible queries, this task has normally been approached by using an information retrieval system to find relevant text in combination with a model which learns to generate an answer given the question and the retrieved text. Since this setting allows a system to search for and condition on text which potentially contains the answer, it is denoted “open-book”. [RRS20] recently demonstrated that a large language model can perform surprisingly well directly answering the questions without conditioning on auxiliary information. They denote this more restrictive evaluation setting as “closed-book”. Their work suggests that even higher-capacity models could perform even better, and we test this hypothesis with GPT-3. We evaluate GPT-3 on the 3 datasets in [RRS20]: Natural Questions $[\mathrm{KPR^{+}}19]$, Web Questions [BCFL13], and TriviaQA [JCWZ17], using the same splits. Note that in addition to all results being in the closed-book setting, our use of few-shot, one-shot, and zero-shot evaluations represents an even stricter setting than previous closed-book QA work: in addition to external content not being allowed, fine-tuning on the Q&A dataset itself is also not permitted.

The results for GPT-3 are shown in Table 3.3. On TriviaQA, we achieve 64.3% in the zero-shot setting, 68.0% in the one-shot setting, and 71.2% in the few-shot setting. The zero-shot result already outperforms the fine-tuned T5-11B by 14.2%, and also outperforms a version with Q&A tailored span prediction during pre-training by 3.8%. The one-shot result improves by 3.7% and matches the SOTA for an open-domain QA system which not only fine-tunes but also makes use of a learned retrieval mechanism over a 15.3B parameter dense vector index of 21M documents $[\mathrm{LPP^{+}}20]$. GPT-3’s few-shot result further improves performance by another 3.2% beyond this.

On Web Questions (WebQS), GPT-3 achieves 14.4% in the zero-shot setting, 25.3% in the one-shot setting, and 41.5% in the few-shot setting. This compares to 37.4% for fine-tuned T5-11B, and 44.7% for fine-tuned T5-11B+SSM, which uses a Q&A-specific pre-training procedure. GPT-3 in the few-shot setting approaches the performance of state-of-the-art fine-tuned models. Notably, compared to TriviaQA, WebQS shows a much larger gain from zero-shot to few-shot (and indeed its zero-shot and one-shot performance are poor), perhaps suggesting that the WebQS questions and/or the style of their answers are out-of-distribution for GPT-3. Nevertheless, GPT-3 appears able to adapt to this distribution, recovering strong performance in the few-shot setting.

Figure 3.3: On TriviaQA, GPT-3’s performance grows smoothly with model size, suggesting that language models continue to absorb knowledge as their capacity increases. One-shot and few-shot performance make significant gains over zero-shot behavior, matching and exceeding the performance of the SOTA fine-tuned open-domain model, RAG $[\mathrm{LPP^{+}}20]$.

On Natural Questions (NQs), GPT-3 achieves 14.6% in the zero-shot setting, 23.0% in the one-shot setting, and 29.9% in the few-shot setting, compared to 36.6% for fine-tuned T5-11B+SSM. Similar to WebQS, the large gain from zero-shot to few-shot may suggest a distribution shift, and may also explain the less competitive performance compared to TriviaQA and WebQS. In particular, the questions in NQs tend towards very fine-grained knowledge on Wikipedia specifically, which could be testing the limits of GPT-3’s capacity and broad pre-training distribution.

Overall, on one of the three datasets GPT-3’s one-shot performance matches the open-domain fine-tuning SOTA. On the other two datasets it approaches the performance of the closed-book SOTA despite not using fine-tuning. On all 3 datasets, we find that performance scales very smoothly with model size (Figure 3.3 and Appendix H Figure H.7), possibly reflecting the idea that model capacity translates directly to more ‘knowledge’ absorbed in the parameters of the model.

3.3 Translation

For GPT-2, a filter was used on a multilingual collection of documents to produce an English-only dataset due to capacity concerns. Even with this filtering, GPT-2 showed some evidence of multilingual capability and performed non-trivially when translating between French and English, despite only training on 10 megabytes of remaining French text. Since we increase the capacity by over two orders of magnitude from GPT-2 to GPT-3, we also expand the scope of the training dataset to include more representation of other languages, though this remains an area for further improvement. As discussed in Section 2.2, the majority of our data is derived from raw Common Crawl with only quality-based filtering. Although GPT-3’s training data is still primarily English (93% by word count), it also includes 7% of text in other languages. These languages are documented in the supplemental material. In order to better understand translation capability, we also expand our analysis to include two additional commonly studied languages, German and Romanian.

Existing unsupervised machine translation approaches often combine pre-training on a pair of monolingual datasets with back-translation [SHB15] to bridge the two languages in a controlled way. By contrast, GPT-3 learns from a blend of training data that mixes many languages together in a natural way, combining them on a word, sentence, and document level. GPT-3 also uses a single training objective which is not customized or designed for any task in particular. However, our one- and few-shot settings aren’t strictly comparable to prior unsupervised work since they make use of a small number of paired examples (1 or 64). This corresponds to up to a page or two of in-context training data.

现有的无监督机器翻译方法通常将在一对单语数据集上的预训练与回译 [SHB15] 相结合,以可控的方式桥接两种语言。相比之下,GPT-3 从自然混合多种语言的训练数据中学习,这些语言在单词、句子和文档层面交织在一起。GPT-3 还采用单一训练目标,并未针对任何特定任务进行定制或设计。不过,我们的单样本/少样本设置与之前的无监督工作并不严格可比,因为它们使用了少量配对样本 (1 或 64 个)。这相当于最多一两页的上下文训练数据。
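The one-shot and few-shot settings above amount to prepending K translated pairs to the query as plain text, with no gradient updates. A minimal sketch of that prompt assembly follows; the task-description line, the `French:`/`English:` line format, and the function name `build_translation_prompt` are illustrative assumptions, not the exact format used for these experiments.

```python
from typing import Sequence, Tuple


def build_translation_prompt(
    demonstrations: Sequence[Tuple[str, str]],
    query: str,
    src_lang: str = "French",
    tgt_lang: str = "English",
    k: int = 64,
) -> str:
    """Concatenate up to k (source, target) pairs, then the unanswered query.

    k = 0 yields a zero-shot prompt (task description only) and k = 1 a
    one-shot prompt. The demonstrations are only text in the context window.
    """
    lines = [f"Translate {src_lang} to {tgt_lang}."]  # hypothetical task description
    for src, tgt in demonstrations[:k]:
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
    lines.append(f"{src_lang}: {query}")
    lines.append(f"{tgt_lang}:")  # the model continues generating from here
    return "\n".join(lines)


pairs = [("Bonjour.", "Hello."), ("Merci beaucoup.", "Thank you very much.")]
print(build_translation_prompt(pairs, "Où est la gare ?", k=1))
```

Changing `k` is the only difference between the three settings in this sketch; the model and its weights stay fixed.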

Results are shown in Table 3.4. Zero-shot GPT-3, which only receives a natural language description of the task, still underperforms recent unsupervised NMT results. However, providing only a single example demonstration for

结果如表 3.4 所示。零样本 (Zero-shot) GPT-3 仅接收任务的自然语言描述,其表现仍逊于近期无监督神经机器翻译 (NMT) 的结果。然而仅需提供单个示例演示...

| Setting | En→Fr | Fr→En | En→De | De→En | En→Ro | Ro→En |
|---|---|---|---|---|---|---|
| SOTA (Supervised) | 45.6a | 35.0b | 41.2c | 40.2d | 38.5e | 39.9e |
| XLM [LC19] | 33.4 | 33.3 | 26.4 | 34.3 | 33.3 | 31.8 |
| MASS [STQ+19] | 37.5 | 34.9 | 28.3 | 35.2 | 35.2 | 33.1 |
| mBART [LGG+20] | - | - | 29.8 | 34.0 | 35.0 | 30.5 |
| GPT-3 Zero-Shot | 25.2 | 21.2 | 24.6 | 27.2 | 14.1 | 19.9 |
| GPT-3 One-Shot | 28.3 | 33.7 | 26.2 | 30.4 | 20.6 | 38.6 |
| GPT-3 Few-Shot | 32.6 | 39.2 | 29.7 | 40.6 | 21.0 | 39.5 |

| 设置 | En→Fr | Fr→En | En→De | De→En | En→Ro | Ro→En |
|---|---|---|---|---|---|---|
| SOTA (监督式) | 45.6a | 35.0b | 41.2c | 40.2d | 38.5e | 39.9e |
| XLM [LC19] | 33.4 | 33.3 | 26.4 | 34.3 | 33.3 | 31.8 |
| MASS [STQ+19] | 37.5 | 34.9 | 28.3 | 35.2 | 35.2 | 33.1 |
| mBART [LGG+20] | - | - | 29.8 | 34.0 | 35.0 | 30.5 |
| GPT-3 零样本 | 25.2 | 21.2 | 24.6 | 27.2 | 14.1 | 19.9 |
| GPT-3 单样本 | 28.3 | 33.7 | 26.2 | 30.4 | 20.6 | 38.6 |
| GPT-3 少样本 | 32.6 | 39.2 | 29.7 | 40.6 | 21.0 | 39.5 |

Table 3.4: Few-shot GPT-3 outperforms previous unsupervised NMT work by 5 BLEU when translating into English, reflecting its strength as an English LM. We report BLEU scores on the WMT’14 $\mathrm{Fr}{\leftrightarrow}\mathrm{En}$, WMT’16 $\mathrm{De}{\leftrightarrow}\mathrm{En}$, and WMT’16 $\mathrm{Ro}{\leftrightarrow}\mathrm{En}$ datasets as measured by multi-bleu.perl with XLM’s tokenization in order to compare most closely with prior unsupervised NMT work. SacreBLEU$^f$ [Pos18] results are reported in Appendix H. Underline indicates an unsupervised or few-shot SOTA; bold indicates supervised SOTA with relative confidence. $^a$[EOAG18] $^b$[DHKH14] $^c$[WXH+18] $^d$[oR16] $^e$[LGG+20] $^f$[SacreBLEU signature: BLEU+case.mixed+numrefs.1+smooth.exp+tok.intl+version.1.2.20]

Figure 3.4: Few-shot translation performance on 6 language pairs as model capacity increases. There is a consistent trend of improvement across all datasets as the model scales, as well as a tendency for translation into English to be stronger than translation from English.

表 3.4: 少样本 GPT-3 在翻译成英语时比之前的无监督神经机器翻译 (NMT) 工作高出 5 BLEU 分,反映了其作为英语语言模型的优势。我们在 WMT’14 $\mathrm{Fr}{\leftrightarrow}\mathrm{En}$、WMT’16 $\mathrm{De}{\leftrightarrow}\mathrm{En}$ 和 WMT’16 $\mathrm{Ro}{\leftrightarrow}\mathrm{En}$ 数据集上报告了 BLEU 分数,使用 multi-bleu.perl 脚本并采用 XLM 的分词方式,以便与之前的无监督 NMT 工作进行最直接的比较。SacreBLEU$^f$ [Pos18] 的结果见附录 H。下划线表示无监督或少样本的当前最佳水平 (SOTA),加粗表示相对可信的有监督 SOTA。$^a$[EOAG18] $^b$[DHKH14] $^c$[WXH+18] $^d$[oR16] $^e$[LGG+20] $^f$[SacreBLEU 签名: BLEU+case.mixed+numrefs.1+smooth.exp+tok.intl+version.1.2.20]

图 3.4: 随着模型容量的增加,6 种语言对的少样本翻译性能表现。所有数据集上都呈现出随着模型规模扩大而持续提升的趋势,并且翻译成英语的表现普遍优于从英语翻译的表现。
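For readers unfamiliar with the metric, here is a minimal sketch of corpus-level BLEU: clipped n-gram precisions for n = 1 to 4, combined by a geometric mean and scaled by a brevity penalty. It assumes one reference per sentence, input that is already tokenized (split on whitespace), and no smoothing; `corpus_bleu` is a hypothetical name, and the sketch is not a drop-in replacement for multi-bleu.perl or SacreBLEU.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Multiset of the n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[str], references: List[str], max_n: int = 4) -> float:
    """Corpus-level BLEU on a 0-100 scale, single reference, no smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        h, r = hyp.split(), ref.split()  # assumes pre-tokenized text
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clip each hypothesis n-gram count by its count in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += max(len(h) - n + 1, 0)
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Penalize hypotheses that are shorter overall than the references.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity_penalty * math.exp(log_precision)


print(corpus_bleu(["the cat sat on"], ["the cat sat on the mat"]))
```

In the usage line every n-gram of the short hypothesis matches, so the score drops below 100 only because of the brevity penalty.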

| Setting | Winograd | Winogrande (XL) |
|---|---|---|
| Fine-tuned SOTA | 90.1a | 84.6b |
| GPT-3 Zero-Shot | 88.3* | 70.2 |
| GPT-3 One-Shot | 89.7* | 73.2 |
| GPT-3 Few-Shot | 88.6* | 77.7 |

| 设置 | Winograd | Winogrande (XL) |
|---|---|---|
| 微调SOTA | 90.1a | 84.6b |
| GPT-3 零样本 | 88.3* | 70.2 |
| GPT-3 单样本 | 89.7* | 73.2 |
| GPT-3 少样本 | 88.6* | 77.7 |

Table 3.5: Results on the WSC273 version of Winograd schemas and the adversarial Winogrande dataset. See Section 4 for details on potential contamination of the Winograd test set. $^a$[SBBC19] $^b$[LYN+20]

表 3.5: WSC273版Winograd模式和对抗性Winogrande数据集上的结果。关于Winograd测试集潜在污染问题的详情参见第4节。$^a$[SBBC19] $^b$[LYN+20]

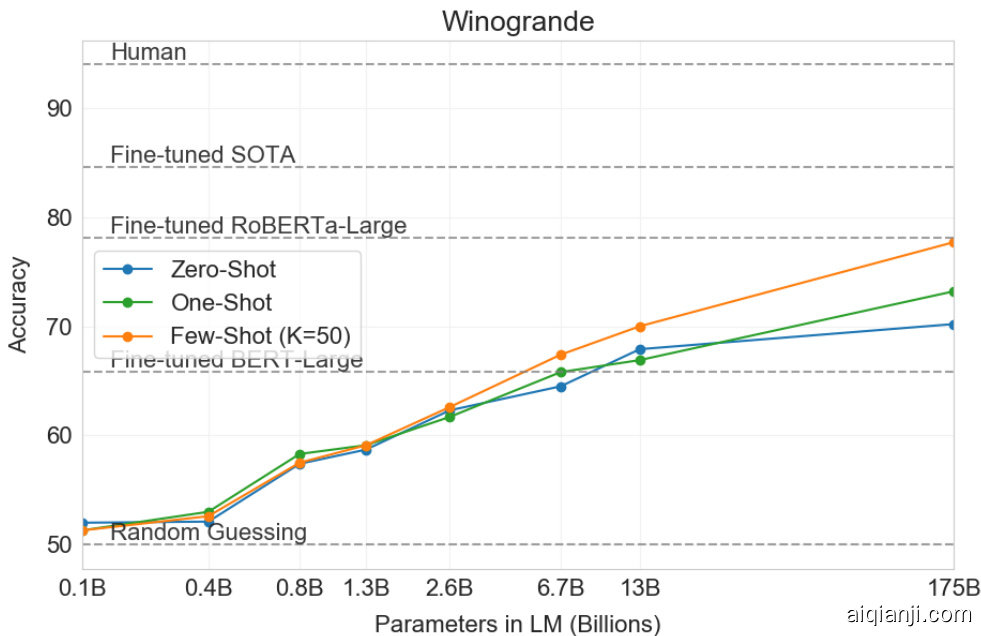

Figure 3.5: Zero-, one-, and few-shot performance on the adversarial Winogrande dataset as model capacity scales. Scaling is relatively smooth, with the gains from few-shot learning increasing with model size, and few-shot GPT-3 175B is competitive with a fine-tuned RoBERTa-large.

图 3.5: 对抗性 Winogrande 数据集上零样本、单样本和少样本性能随模型容量扩展的变化。扩展过程相对平稳,少样本学习的收益随模型规模增加而提升,少样本 GPT-3 175B 的表现与微调后的 RoBERTa-large 相当。

each translation task improves performance by over 7 BLEU and nears competitive performance with prior work. GPT-3 in the full few-shot setting improves by another 4 BLEU, resulting in similar average performance to prior unsupervised NMT work. GPT-3 has a noticeable skew in its performance depending on language direction. For the three input languages studied, GPT-3 significantly outperforms prior unsupervised NMT work when translating into English but underperforms when translating in the other direction. Performance on En-Ro is a noticeable outlier at over 10 BLEU worse than prior unsupervised NMT work. This could be a weakness due to reusing the byte-level BPE tokenizer of GPT-2, which was developed for an almost entirely English training dataset. For both Fr-En and De-En, few-shot GPT-3 outperforms the best supervised result we could find, but due to our unfamiliarity with the literature and the appearance that these are uncompetitive benchmarks, we do not suspect those results represent the true state of the art. For Ro-En, few-shot GPT-3 performs within 0.5 BLEU of the overall SOTA, which is achieved by a combination of unsupervised pre-training, supervised fine-tuning on 608K labeled examples, and back-translation [LHCG19b].

每项翻译任务都能将性能提升超过7个BLEU分,并接近先前工作的竞争水平。在完整少样本设置下,GPT-3的性能又提升了4个BLEU分,使得平均性能与之前的无监督神经机器翻译(NMT)工作相当。GPT-3的性能表现存在明显的语言方向偏差:对于所研究的三种输入语言,翻译成英语时其表现显著优于先前的无监督NMT工作,但在相反方向(从英语译出)时表现欠佳。其中En-Ro方向的表现尤为异常,比先前无监督NMT工作低了超过10个BLEU分。这可能是由于沿用了GPT-2的字节级BPE分词器(该分词器是为几乎全英文的训练数据集开发的)而导致的缺陷。在Fr-En和De-En任务中,少样本GPT-3的表现优于我们找到的最佳监督学习结果,但由于我们对相关文献不够熟悉,且这些基准测试似乎竞争不充分,我们认为这些结果并不代表真正的最先进水平。对于Ro-En任务,少样本GPT-3与整体SOTA的差距在0.5个BLEU分以内,该SOTA由无监督预训练、基于60.8万个标注样本的监督微调以及回译[LHCG19b]联合实现。
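The direction skew can be checked directly against Table 3.4. The sketch below, built around a hypothetical helper `margin_over_best_unsupervised`, subtracts the better of the XLM and MASS scores from few-shot GPT-3 for the three into-English directions and for En→Ro. mBART is left out because it does not beat MASS in any of these four directions.

```python
# BLEU scores copied from Table 3.4 for the directions discussed in the text.
SCORES = {
    #          XLM    MASS   GPT-3 few-shot
    "fr->en": (33.3, 34.9, 39.2),
    "de->en": (34.3, 35.2, 40.6),
    "ro->en": (31.8, 33.1, 39.5),
    "en->ro": (33.3, 35.2, 21.0),
}


def margin_over_best_unsupervised(direction: str) -> float:
    """Few-shot GPT-3 BLEU minus the best prior unsupervised BLEU (positive means GPT-3 is ahead)."""
    xlm, mass, gpt3 = SCORES[direction]
    return round(gpt3 - max(xlm, mass), 1)  # round away float noise such as 4.300000000000004


into_english = [margin_over_best_unsupervised(d) for d in ("fr->en", "de->en", "ro->en")]
print(into_english)
print(round(sum(into_english) / len(into_english), 1))  # average margin into English
print(margin_over_best_unsupervised("en->ro"))  # the En-Ro outlier
```

With these numbers the into-English margins are 4.3, 5.4, and 6.4 BLEU (about 5.4 on average, in line with the "5 BLEU" in the Table 3.4 caption), while En→Ro trails MASS by 14.2 BLEU.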

Finally, across all language pairs and across all three settings (zero-, one-, and few-shot), there is a smooth trend of improvement with model capacity. This is shown in Figure 3.4 in the case of few-shot results, and scaling for all three settings is shown in Appendix H.

最后,在所有语言对和三种设置(零样本、单样本和少样本)下,模型性能都随着容量提升呈现平滑的增长趋势。图3.4展示了少样本结果的情况,三种设置下的规模缩放曲线详见附录H。

3.4 Winograd-Style Tasks

3.4 Winograd风格任务

The Winograd Schema Challenge [LDM12] is a classical task in NLP that involves determining which word a pronoun refers to, when the pronoun is grammatically ambiguous but semantically unambiguous to a human. Recently, fine-tuned language models have achieved near-human performance on the original Winograd dataset, but more difficult versions such as the adversarially-mined Winogrande dataset [SBBC19] still significantly lag human performance. We test GPT-3’s performance on both Winograd and Winogrande, as usual in the zero-, one-, and few-shot settings.

Winograd模式挑战赛[LDM12]是自然语言处理(NLP)领域的经典任务,旨在当代词存在语法歧义但人类能明确理解语义时,判定代词所指代的词语。近期经过微调的大语言模型在原始Winograd数据集上已达到接近人类的表现,但在对抗性挖掘的Winogrande数据集[SBBC19]等更复杂版本上仍显著落后于人类水平。我们按照惯例采用零样本、单样本和少样本设置,测试GPT-3在Winograd和Winogrande数据集上的表现。

Table 3.6: GPT-3 results on three commonsense reasoning tasks, PIQA, ARC, and OpenBookQA. The GPT-3 few-shot PIQA result is evaluated on the test server. See Section 4 for details on potential contamination issues on the PIQA test set.

| Setting | PIQA | ARC (Easy) | ARC (Challenge) | OpenBookQA |
|---|---|---|---|---|
| Fine-tuned SOTA | 79.4 | 92.0 [KKS+20] | 78.5 [KKS+20] | 87.2 [KKS+20] |
| GPT-3 Zero-Shot | 80.5* | 68.8 | 51.4 | 57.6 |
| GPT-3 One-Shot | 80.5* | 71.2 | 53.2 | 58.8 |
| GPT-3 Few-Shot | 82.8* | 70.1 | 51.5 | 65.4 |

表 3.6: GPT-3 在三个常识推理任务 (PIQA、ARC 和 OpenBookQA) 上的结果。GPT-3 少样本 PIQA 结果在测试服务器上评估。有关 PIQA 测试集潜在污染问题的详细信息,请参阅第 4 节。

| 设置 | PIQA | ARC (Easy) | ARC (Challenge) | OpenBookQA |
|---|---|---|---|---|
| 微调SOTA | 79.4 | 92.0 [KKS+20] | 78.5 [KKS+20] | 87.2 [KKS+20] |
| GPT-3 零样本 | 80.5* | 68.8 | 51.4 | 57.6 |
| GPT-3 单样本 | 80.5* | 71.2 | 53.2 | 58.8 |
| GPT-3 少样本 | 82.8* | 70.1 | 51.5 | 65.4 |

Figure 3.6: GPT-3 results on PIQA in the zero-shot, one-shot, and few-shot settings. The largest model achieves a score on the development set in all three conditions that exceeds the best recorded score on the task.

图 3.6: GPT-3 在零样本、单样本和少样本设置下 PIQA 任务的结果。最大模型在开发集上的三种条件下均取得了超过该任务历史最佳记录的分数。

On Winograd we test GPT-3 on the original set of 273 Winograd schemas, using the same “partial evaluation” method described in [RWC+19]. Note that this setting differs slightly from the WSC task in the SuperGLUE benchmark, which is presented as binary classification and requires entity extraction to convert to the form described in this section. On Winograd, GPT-3 achieves 88.3%, 89.7%, and 88.6% in the zero-shot, one-shot, and few-shot settings, showing no clear in-context learning but in all cases achieving strong results just a few points below state-of-the-art and estimated human performance. We note that contamination analysis found some Winograd schemas in the training data, but this appears to have only a small effect on results (see Section 4).

在Winograd任务中,我们使用与[RWC+19]相同的"部分评估"方法,在原始的273个Winograd模式集上测试GPT-3。需要注意的是,该设置与SuperGLUE基准中的WSC任务略有不同,后者以二元分类形式呈现,并需要通过实体提取转换为本节所述形式。GPT-3在Winograd任务上的零样本、单样本和少样本设置中分别达到88.3%、89.7%和88.6%的准确率,虽未显示出明显的上下文学习能力,但在所有情况下都取得了仅略低于最先进水平和预估人类表现的优异成绩。我们注意到污染分析发现训练数据中包含部分Winograd模式,但这似乎对结果影响甚微(参见第4节)。
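The “partial evaluation” idea can be sketched as follows, assuming it means: substitute each candidate for the ambiguous pronoun and score only the tokens after the substitution, so the candidate's own likelihood does not bias the choice. `TokenLogProb`, `toy_logprob`, and the bigram table are hypothetical stand-ins for a real language model.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical LM interface: log P(next_token | context_tokens).
TokenLogProb = Callable[[Sequence[str], str], float]


def partial_score(prefix: List[str], suffix: List[str], logprob: TokenLogProb) -> float:
    """Log-probability of the suffix tokens only, each conditioned on everything before it."""
    context = list(prefix)
    total = 0.0
    for token in suffix:
        total += logprob(context, token)
        context.append(token)
    return total


def resolve_pronoun(sentence: str, candidates: Sequence[str], logprob: TokenLogProb, slot: str = "_") -> str:
    """Substitute each candidate into the slot; keep the one whose continuation is most likely."""
    before, after = sentence.split(slot, 1)
    suffix = after.split()
    scores = {c: partial_score((before + c).split(), suffix, logprob) for c in candidates}
    return max(scores, key=scores.get)


# Toy bigram "language model" with made-up probabilities, for illustration only.
BIGRAMS: Dict[Tuple[str, str], float] = {
    ("trophy", "is"): 0.5,
    ("suitcase", "is"): 0.1,
    ("is", "too"): 0.3,
    ("too", "large"): 0.4,
}


def toy_logprob(context: Sequence[str], token: str) -> float:
    previous = context[-1] if context else "<s>"
    return math.log(BIGRAMS.get((previous, token), 1e-3))


sentence = "The trophy doesn't fit into the suitcase because _ is too large"
print(resolve_pronoun(sentence, ["the trophy", "the suitcase"], toy_logprob))
```

Because only "is too large" is scored, the two candidates are compared on how well they predict the disambiguating continuation, not on how frequent the noun phrases themselves are.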

On the more difficult Winogrande dataset, we do find gains from in-context learning: GPT-3 achieves 70.2% in the zero-shot setting, 73.2% in the one-shot setting, and 77.7% in the few-shot setting. For comparison, a fine-tuned RoBERTa model achieves 79%, the state of the art is 84.6%, achieved with a fine-tuned high-capacity model (T5), and human performance on the task as reported by [SBBC19] is 94.0%.

在更具挑战性的Winogrande数据集上,我们确实观察到上下文学习带来的提升:GPT-3在零样本(Zero-shot)设置下达到70.2%,单样本(One-shot)设置下达到73.2%,少样本(Few-shot)设置下达到77.7%。作为对比,微调后的RoBERTa模型成绩为79%,当前最佳成绩84.6%由微调的高容量模型(T5)取得,而[SBBC19]报告的人类在该任务上的表现为94.0%。

3.5 Common Sense Reasoning

3.5 常识推理

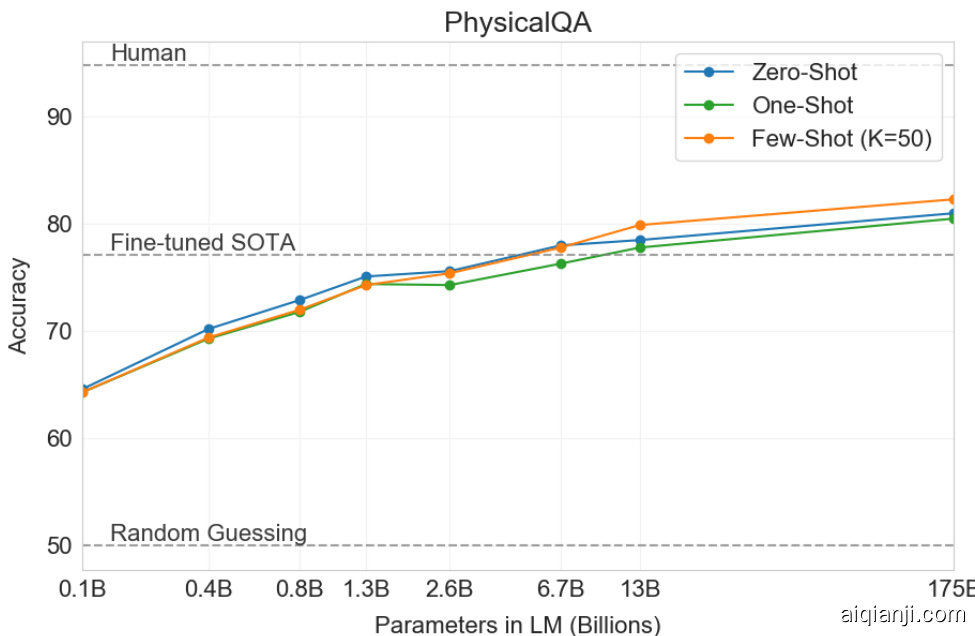

Next we consider three datasets which attempt to capture physical or scientific reasoning, as distinct from sentence completion, reading comprehension, or broad knowledge question answering. The first, PhysicalQA (PIQA) [BZB+19], asks common sense questions about how the physical world works and is intended as a probe of grounded understanding of the world. GPT-3 achieves 81.0% accuracy zero-shot, 80.5% accuracy one-shot, and 82.8% accuracy few-shot (the last measured on PIQA’s test server). This compares favorably to the 79.4% accuracy of the prior state of the art, a fine-tuned RoBERTa. PIQA shows relatively shallow scaling with model size and is still over 10% worse than human performance, but GPT-3’s few-shot and even zero-shot results outperform the current state of the art. Our analysis flagged PIQA for a potential data contamination issue (despite hidden test labels), and we therefore conservatively mark the result with an asterisk. See Section 4 for details.

接下来我们考虑三个试图捕捉物理或科学推理能力的数据集,这些与句子补全、阅读理解或广泛知识问答不同。第一个数据集 PhysicalQA (PIQA) [BZB+19] 提出了关于物理世界运作的常识性问题,旨在探究对世界的具身理解。GPT-3 在零样本条件下达到 81.0% 准确率,单样本 80.5%,少样本 82.8%(最后一项在 PIQA 测试服务器上测得)。这优于此前由微调 RoBERTa 取得的 79.4% 的最先进水平。PIQA 显示出随模型规模增长的较浅提升曲线,且仍比人类表现低 10% 以上,但 GPT-3 的少样本甚至零样本结果已超越当前最优技术。我们的分析发现 PIQA 可能存在数据污染问题(尽管测试标签被隐藏),因此保守地用星号标记该结果。详见第 4 节。
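PIQA, like ARC and OpenBookQA, asks the model to choose among fixed options rather than generate free text. A minimal sketch of likelihood-based option ranking follows, assuming each option is scored by its average per-token log-likelihood given the context. `CompletionLogProbs`, `pick_option`, and the stub scores are hypothetical stand-ins for a real model.

```python
from typing import Callable, List, Sequence

# Hypothetical LM interface: per-token log-probs of `completion` given `context`.
CompletionLogProbs = Callable[[str, str], List[float]]


def pick_option(context: str, options: Sequence[str], logprobs: CompletionLogProbs) -> int:
    """Index of the option with the highest mean per-token log-likelihood."""

    def mean_logprob(option: str) -> float:
        token_lps = logprobs(context, option)
        # Averaging rather than summing keeps longer options from being penalized for length.
        return sum(token_lps) / len(token_lps)

    return max(range(len(options)), key=lambda i: mean_logprob(options[i]))


# Made-up per-token scores standing in for a real model.
STUB = {
    "Pour the water into the glass.": [-0.5, -0.4, -0.6, -0.5, -0.5, -0.4],
    "Pour the glass into the water.": [-0.5, -0.4, -2.0, -1.5, -0.5, -3.0],
}


def stub_logprobs(context: str, completion: str) -> List[float]:
    return STUB[completion]


goal = "Goal: fill a glass with water. Solution:"
print(pick_option(goal, list(STUB), stub_logprobs))
```

Length normalization matters when options differ in length: a one-token option with log-probability -1.0 would beat a six-token option averaging -0.5 under a raw sum, but loses under the mean.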

Table 3.7: Results on reading comprehension tasks. All scores are F1 except RACE results, which report accuracy. $^a$[JZC+19] $^b$[JN20] $^c$[AI19] $^d$[QIA20] $^e$[SPP+19]

| Setting | CoQA | DROP | QuAC | SQuADv2 | RACE-h | RACE-m |
|---|---|---|---|---|---|---|
| Fine-tuned SOTA | 90.7a | 89.1b | 74.4c | 93.0d | 90.0e | 93.1e |
| GPT-3 Zero-Shot | 81.5 | 23.6 | 41.5 | 59.5 | 45.5 | 58.4 |
| GPT-3 One-Shot | 84.0 | 34.3 | 43.3 | 65.4 | 45.9 | 57.4 |
| GPT-3 Few-Shot | 85.0 | 36.5 | 44.3 | 69.8 | 46.8 | 58.1 |

表 3.7: 阅读理解任务结果。除RACE报告准确率外,其余分数均为F1值。$^a$[JZC+19] $^b$[JN20] $^c$[AI19] $^d$[QIA20] $^e$[SPP+19]

| 设置 | CoQA | DROP | QuAC | SQuADv2 | RACE-h | RACE-m |
|---|---|---|---|---|---|---|
| 微调SOTA | 90.7a | 89.1b | 74.4c | 93.0d | 90.0e | 93.1e |
| GPT-3 零样本 | 81.5 | 23.6 | 41.5 | 59.5 | 45.5 | 58.4 |
| GPT-3 单样本 | 84.0 | 34.3 | 43.3 | 65.4 | 45.9 | 57.4 |
| GPT-3 少样本 | 85.0 | 36.5 | 44.3 | 69.8 | 46.8 | 58.1 |
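Most scores in Table 3.7 are F1 over answer tokens. A minimal sketch of bag-of-tokens F1 between one predicted and one gold answer follows. It is a simplification: official SQuAD-style scripts also strip punctuation and articles and take the maximum over several gold answers, which this hypothetical `token_f1` omits.

```python
from collections import Counter


def token_f1(prediction: str, gold: str) -> float:
    """Bag-of-tokens F1 between a predicted and a gold answer string (lowercased, whitespace-split)."""
    pred_tokens = prediction.lower().split()
    gold_tokens = gold.lower().split()
    if not pred_tokens or not gold_tokens:
        # If either side is empty (e.g. a SQuADv2 "no answer"), score 1 only when both are empty.
        return float(pred_tokens == gold_tokens)
    # Multiset intersection counts each shared token at most min(count_pred, count_gold) times.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)


print(token_f1("the Eiffel Tower", "Eiffel Tower"))
```

Here precision is 2/3 and recall is 1, so the partially matching answer still earns most of the credit, which is why F1 is more forgiving than exact match for extractive QA.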

ARC [CCE+18] is a dataset of multiple-choice questions collected from 3rd to 9th grade science exams. On the “Challenge” version of the dataset, which has been filtered to questions that simple statistical or information retrieval methods are unable to answer correctly, GPT-3 achieves 51.4% accuracy in the zero-shot setting, 53.2% in the one-shot setting, and 51.5% in the few-shot setting. This is approaching the performance of a fine-tuned RoBERTa baseline (55.9%) from UnifiedQA [KKS+20]. On the “Easy” version of the dataset (questions which either of the mentioned baseline approaches answered correctly), GPT-3 achieves 68.8%, 71.2%, and 70.1%, which slightly exceeds a fine-tuned RoBERTa baseline from [KKS+20]. However, both of these results are still much worse than the overall SOTAs achieved by UnifiedQA, which exceeds GPT-3’s few-shot results by 27 percentage points on the challenge set and 22 percentage points on the easy set.

ARC [CCE+18] 是一个收集自3至9年级科学考试选择题的数据集。在该数据集的"挑战"版本(经过筛选,仅包含简单统计或信息检索方法无法正确回答的问题)上,GPT-3在零样本设置下达到51.4%准确率,单样本设置下53.2%,少样本设置下51.5%。这一表现接近来自UnifiedQA [KKS+20]的微调RoBERTa基线(55.9%)。在"简单"版本数据集(上述任一基线方法能正确回答的问题)上,GPT-3分别取得68.8%、71.2%和70.1%的准确率,略优于[KKS+20]中的微调RoBERTa基线。然而,这些结果仍远低于UnifiedQA实现的整体SOTA表现,其在挑战集上超过GPT-3少样本结果27个百分点,在简单集上超过22个百分点。
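The UnifiedQA gaps quoted above are absolute accuracy differences between the fine-tuned SOTA and GPT-3's few-shot scores in Table 3.6:

$$
\begin{aligned}
\text{ARC (Challenge):}\quad & 78.5 - 51.5 = 27.0 \\
\text{ARC (Easy):}\quad & 92.0 - 70.1 = 21.9 \approx 22
\end{aligned}
$$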

On OpenBookQA [MCKS18], GPT-3 improves significantly from the zero-shot to the few-shot setting but is still over 20 points short of the overall SOTA. GPT-3’s few-shot performance is similar to a fine-tuned BERT Large baseline on the leaderboard.

在OpenBookQA [MCKS18]上,GPT-3从零样本到少样本设置有了显著提升,但仍比整体SOTA低了20多分。GPT-3的少样本表现与排行榜上经过微调的BERT Large基线相当。