Radioactive data: tracing through training

Alexandre Sablayrolles 1 2 Matthijs Douze 1 Cordelia Schmid ? Hervé Jégou

Abstract

We want to detect whether a particular image dataset has been used to train a model. We propose a new technique, radioactive data, that makes imperceptible changes to this dataset such that any model trained on it will bear an identifiable mark. The mark is robust to strong variations such as different architectures or optimization methods. Given a trained model, our technique detects the use of radioactive data and provides a level of confidence ($p$-value).

Our experiments on large-scale benchmarks (Imagenet), using standard architectures (Resnet18, VGG-16, Densenet-121) and training procedures, show that we can detect usage of radioactive data with high confidence ($p<10^{-4}$) even when only $1\%$ of the data used to train our model is radioactive. Our method is robust to data augmentation and to the stochasticity of deep network optimization. As a result, it offers a much higher signal-to-noise ratio than data poisoning and backdoor methods.

1. Introduction

The availability of large-scale public datasets has accelerated the development of machine learning. The Imagenet collection (Deng et al., 2009) and challenge (Russakovsky et al., 2015) contributed to the success of deep learning architectures (Krizhevsky et al., 2012). The annotation of precise instance segmentation on the large-scale COCO dataset (Lin et al., 2014) enabled large improvements of object detectors and instance segmentation models (He et al., 2017). Even in weakly-supervised (Joulin et al., 2016; Mahajan et al., 2018) and unsupervised learning (Caron et al., 2019) where annotations are scarcer, state-of-the-art results are obtained on large-scale datasets collected from the Web (Thomee et al., 2015).

Machine learning and deep learning models are trained to

机器学习与深度学习模型的训练旨在

Vanilla data

普通数据

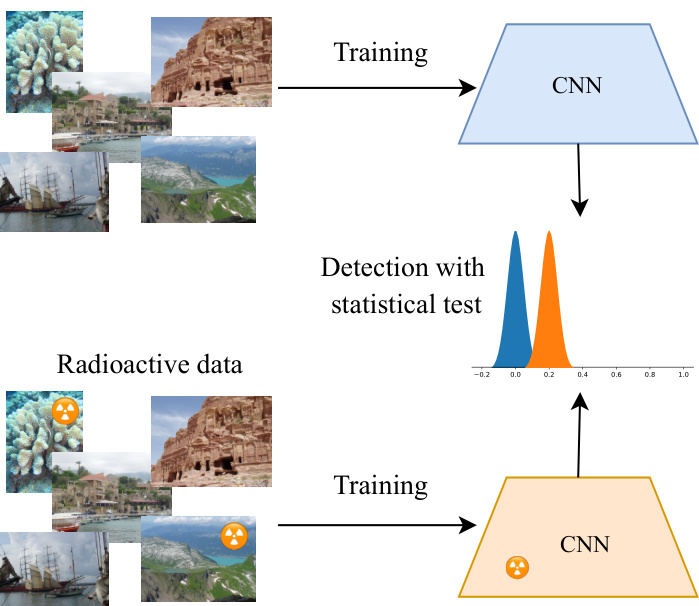

Figure 1. Illustration of our approach: we want to determine through a statistical test $\overset{\cdot}{p}$ -value) whether a network has seen a marked dataset or not. The distribution (shown on the histograms) of a statistic on the network weights is clearly separated between the vanilla and radioactive CNNs. Our method works in the cases of both white-box and black-box access to the network.

图 1: 方法示意图:我们希望通过统计检验($\overset{\cdot}{p}$值)判断网络是否接触过标记数据集。权重统计量的分布(直方图所示)在普通CNN和放射性CNN之间存在明显差异。该方法适用于白盒和黑盒网络访问场景。

solve specific tasks (e.g. classification, segmentation), but as a side-effect reproduce the bias in the datasets (Torralba et al., 2011). Such a bias is a weak signal that a particular dataset has been used to solve a task. Our objective in this paper is to enable the trace ability for datasets. By introducing a specific mark in a dataset, we want to provide a strong signal that a dataset has been used to train a model. We thus slightly change the dataset, effectively substituting the data for similar-looking marked data (isotopes).

解决特定任务(如分类、分割)的同时,会附带复现数据集中的偏差(Torralba et al., 2011)。这种偏差是特定数据集被用于解决任务的微弱信号。本文的目标是实现数据集的可追溯性。通过在数据集中引入特定标记,我们希望为"数据集被用于训练模型"这一事实提供强信号。因此我们对数据集进行微调,用视觉相似的标记数据(同位素)有效替换原始数据。

Let us assume that this data, as well as other collected data, is used to train a convolutional neural network (convnet). After training, the model is inspected to assess the use of radioactive data. The convnet is accessed either (1) explicitly when the model and corresponding weights are available (white-box setting), or (2) implicitly if only the decision scores are accessible (black-box setting). From that information, we answer the question of whether any radioactive data has been used to train the model, or if only vanilla data was used. We want to provide a statistical guarantee with the answer, in the form of a $p$ -value.

Passive techniques such as those employed to measure dataset bias (Torralba et al., 2011) or to do membership inference (Sablayrolles et al., 2019; Shokri et al., 2017) cannot provide sufficient empirical or statistical guarantees. More importantly, their measurement is relatively weak and therefore cannot be considered as evidence: they are likely to confuse datasets having the same underlying statistics. In contrast, we target a $p$-value much below $0.1\%$, meaning there is a very low probability that the results we observe are obtained by chance.

Therefore, we focus on active techniques, where we apply visually imperceptible changes to the images. We consider the following three criteria: (1) The change should be tiny, as measured by an image quality metric like PSNR (Peak Signal to Noise Ratio); (2) The technique should be reasonably neutral with respect to the end-task, i.e., the accuracy of the model trained with the marked dataset should not be significantly modified; (3) The method should not be detectable by a visual analysis of failure cases and should be immune to a re-annotation of the dataset. This disqualifies techniques that employ incorrect labels as a mark, which are easy to detect by a simple analysis of the failure cases. Similarly, “backdoor” techniques are easy to identify and circumvent with outlier detection (Tran et al., 2018).

At this point, one may draw the analogy between this problem and watermarking (Cox et al., 2002), whose goal is to imprint a mark into an image such that it can be reidentified with high probability. We point out that traditional image-based watermarking is ineffective in our context: the learning procedure ignores the watermarks if they are not useful to guide the classification decision (Tishby et al., 2000). Therefore regular watermarking leaves no exploitable trace after training. We need to force the network to keep the mark through the learning process, whatever the learning procedure or architecture.

To that goal, we propose radioactive data. As illustrated in Figure 1 and similarly to radioactive markers in medical applications, we introduce marks (data isotopes) that remain through the learning process and that are detectable with high confidence in a neural network. Our idea is to craft a class-specific additive mark in the latent space before the classification layer. This mark is propagated back to the pixels with a marking (pretrained) network.

This behaviour is confirmed by an analysis of the latent space before classification. It shows that the network devotes a small part of its capacity to keep track of our “radioactive tracers”.

Our experiments on Imagenet confirm that our radioactive marking technique is effective: with almost invisible changes to the images ($\mathrm{PSNR}=42~\mathrm{dB}$), and when marking only a fraction of the images ($q=1\%$), we are able to detect the use of our radioactive images with very strong confidence. Note that our radioactive marks, while visually imperceptible, might be detected by a statistical analysis of the latent space of the network. Our aim in this paper is to provide a proof of concept that marking data is possible with statistical guarantees; the analysis of defense mechanisms lies outside the scope of this paper. The deep learning community has developed a variety of defense mechanisms against “adversarial attacks”: these techniques prevent test-time tampering, but are not designed to prevent training-time attacks on neural networks.

Our conclusions are supported in various settings: we consider both the black-box and white-box settings; we change the tested architecture such that it differs from the one employed to insert the mark. We also depart from the common restrictions of many data-poisoning works (Shafahi et al., 2018; Biggio et al., 2012), where only the logistic layer is retrained, and which consider small datasets (CIFAR) and/or limited data augmentation. We verify that the radioactive mark holds when the network is trained from scratch on a radioactive Imagenet dataset with standard random data augmentations. As an example, for a ResNet-18 trained from scratch, we achieve a $p$-value of $10^{-4}$ when only $1\%$ of the training data is radioactive. The accuracy of the network is not noticeably changed ($\pm 0.1\%$).

The paper is organized as follows. Section 2 reviews the related literature: we discuss related works in watermarking, and explain how the problem that we tackle is related to and differs from data poisoning. In Section 3, after introducing a few mathematical notions, we describe how we add markers, and discuss the detection methods in both the white-box and black-box settings. Section 4 provides an analysis of the latent space learned with our procedure and compares it to the original one. We present qualitative and quantitative results in different settings in the experimental Section 5. We conclude the paper in Section 6.

2. Related work

Watermarking is a way of tracking media content by adding a mark to it. In its simplest form, a watermark is an addition in the pixel space of an image that is not visually perceptible. Zero-bit watermarking techniques (Cayre et al., 2005) modify the pixels of an image so that its Fourier transform lies in the cone generated by an arbitrary random direction, the “carrier”. When the same image or a slightly perturbed version of it is encountered, the presence of the watermark is assessed by verifying whether the Fourier representation lies in the cone generated by the carrier. Zero-bit watermarking detects whether an image is marked or not, but in general watermarking also considers the case where the marks carry a number of bits of information (Cox et al., 2002).

Traditional watermarking is notoriously not robust to geometrical attacks (Vukotic et al., 2018). In contrast, the latent space associated with deep networks is almost invariant to such transformations, due to the train-time data augmentations. This observation has motivated several authors to employ convnets to watermark images (Vukotic et al., 2018; Zhu et al., 2018) by inserting marks in this latent space. HiDDeN (Zhu et al., 2018) is an example of these approaches, applied either for steganographic or watermarking purposes.

Adversarial examples. Neural networks have been shown to be vulnerable to so-called adversarial examples (Carlini & Wagner, 2017; Goodfellow et al., 2015; Szegedy et al., 2014): given a correctly-classified image $x$ and a trained network, it is possible to craft a perturbed version $\tilde{x}$ that is visually indistinguishable from $x$, such that the network misclassifies $\tilde{x}$.

Privacy and membership inference. Differential privacy (Dwork et al., 2006) protects the privacy of training data by bounding the impact that an element of the training set has on a trained model. The privacy budget $\epsilon>0$ limits the impact that the substitution of one training example can have on the log-likelihood of the estimated parameter vector. It has become the standard for privacy in the industry and the privacy budget $\epsilon$ trades off between learning statistical facts and hiding the presence of individual records in the training set. Recent work (Abadi et al., 2016; Papernot et al., 2018) has shown that it is possible to learn deep models with differential privacy on small datasets (MNIST, SVHN) with a budget as small as $\epsilon=1$ . Individual privacy degrades gracefully to group privacy: when testing for the joint presence of a group of $k$ samples in the training set of a model, an $\epsilon$ -private algorithm provides guarantees of $k\epsilon$ .

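For reference, the statements above rest on the standard (pure) definition of differential privacy, stated here as a worked reminder rather than as part of this paper's method: a randomized training algorithm $\mathcal{A}$ is $\epsilon$-differentially private if, for any two datasets $D$ and $D'$ that differ by the substitution of one example and any set $S$ of possible outputs, the left inequality holds. Chaining it along $k$ substitutions gives the group guarantee on the right, for datasets $D$ and $D''$ that differ in $k$ examples:

$$
\mathbb{P}[\mathcal{A}(D)\in S]\leq e^{\epsilon}\,\mathbb{P}[\mathcal{A}(D')\in S]
\quad\Longrightarrow\quad
\mathbb{P}[\mathcal{A}(D)\in S]\leq e^{k\epsilon}\,\mathbb{P}[\mathcal{A}(D'')\in S].
$$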

Membership inference (Shokri et al., 2017; Carlini et al., 2018; Sablayrolles et al., 2019) is the reciprocal operation of differentially private learning. Given a trained model and a sample, it predicts whether the sample was part of the model’s training set. These classification approaches do not provide any guarantee: if a membership inference model predicts that an image belongs to the training set, it does not give a level of statistical significance. Furthermore, these techniques require training multiple models to simulate datasets with and without an image, which is computationally intensive.

Data poisoning (Biggio et al., 2012; Steinhardt et al., 2017; Shafahi et al., 2018) studies how modifying training data points affects a model’s behavior at inference time. Backdoor attacks (Chen et al., 2017; Gu et al., 2017) are a recent trend in machine learning attacks. They choose a class $c$, and add unrelated samples from other classes to this class $c$, along with an overlaid “trigger” pattern; at test time, any sample having the same trigger will be classified in this class $c$. Backdoor techniques bear similarity with our radioactive tracers; in particular, their trigger is close to our carrier. However, our method differs in two main aspects. First, we do “clean-label” attacks, i.e., we perturb training points without changing their labels. Second, we provide statistical guarantees in the form of a $p$-value.

Watermarking deep learning models. A few works (Adi et al., 2018; Yeom et al., 2018) focus on watermarking deep learning models: these works modify the parameters of a neural network so that any downstream use of the network can be verified. Our assumption is different: in our case, we control the training data, but the training process is not controlled.

3. Our method

In this section, we describe our method for marking data. It consists of three stages. In the marking stage, the radioactive mark is added to the vanilla training images without changing their labels. In the training stage, vanilla and/or marked images are used to train a multi-class classifier with regular learning algorithms. Finally, in the detection stage, we examine the model to determine whether marked data was used or not.

We denote by $x$ an image, i.e. a 3-dimensional tensor with dimensions height, width and color channel. We consider a classifier with $C$ classes composed of a feature extraction function $\phi:x\mapsto\phi(x)\in\mathbb{R}^{d}$ (a convolutional neural network) followed by a linear classifier with weights $(w_{i})_{i=1\dots C}\in\mathbb{R}^{d}$. It classifies a given image $x$ as

$$

\operatorname*{argmax}_ {i=1\ldots C}w_{i}^{\top}\phi(x).

$$

3.1. Statistical preliminaries

Cosine similarity with a random unitary vector $u$ . Given a fixed vector $v$ and a random vector $u$ distributed uniformly over the unit sphere in dimension $d$ $(|u|_ {2}=1)$ ), we are interested in the distribution of their cosine similarity $c(u,v):=:u^{T}v/(|u|_ {2}|v|_{2})$ . A classic result from statistics (Iscen et al., 2017) shows that this cosine similarity follows an incomplete beta distribution with parameters $\begin{array}{r}{a=\frac{1}{2}}\end{array}$ and $\textstyle b={\frac{d-1}{2}}$ :

与随机单位向量 $u$ 的余弦相似度。给定固定向量 $v$ 和均匀分布在 $d$ 维单位球面上的随机向量 $u$ $(|u|_ {2}=1)$ ,我们关注其余弦相似度 $c(u,v):=:u^{T}v/(|u|_ {2}|v|_{2})$ 的分布。统计学经典结果表明 [20] ,该余弦相似度服从参数为 $\begin{array}{r}{a=\frac{1}{2}}\end{array}$ 和 $\textstyle b={\frac{d-1}{2}}$ 的不完全贝塔分布:

$$

\begin{array}{l}{\displaystyle\mathbb{P}(c(u,v)\geq\tau)=I_{\tau^{2}}\left(\frac{1}{2},\frac{d-1}{2}\right)}\ {\displaystyle=\frac{B_{\tau^{2}}\left(\frac{1}{2},\frac{d-1}{2}\right)}{B\left(\frac{1}{2},\frac{d-1}{2}\right)}}\ {\displaystyle=\frac{1}{B\left(\frac{1}{2},\frac{d-1}{2}\right)}\int_{0}^{\tau^{2}}\frac{\left(\sqrt{1-t}\right)^{d-3}}{\sqrt{t}}d t}\end{array}

$$

$$

\begin{array}{l}{\displaystyle\mathbb{P}(c(u,v)\geq\tau)=I_{\tau^{2}}\left(\frac{1}{2},\frac{d-1}{2}\right)}\ {\displaystyle=\frac{B_{\tau^{2}}\left(\frac{1}{2},\frac{d-1}{2}\right)}{B\left(\frac{1}{2},\frac{d-1}{2}\right)}}\ {\displaystyle=\frac{1}{B\left(\frac{1}{2},\frac{d-1}{2}\right)}\int_{0}^{\tau^{2}}\frac{\left(\sqrt{1-t}\right)^{d-3}}{\sqrt{t}}d t}\end{array}

$$

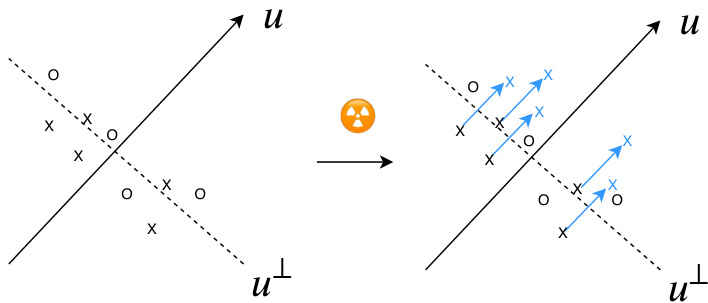

Figure 2. Illustration of our method in high dimension. In high dimension, the linear classifier that separates the class is almost orthogonal to $u$ with high probability. Our method shifts points belonging to a class in the direction $u$ , therefore aligning the linear classifier with the direction $u$ .

图 2: 高维空间中本方法的示意图。在高维情况下,区分类别的线性分类器几乎必然与向量 $u$ 正交。我们的方法将属于某类别的点沿 $u$ 方向平移,从而使线性分类器与 $u$ 方向对齐。

with

与

$$

B_{x}\left(\frac{1}{2},\frac{d-1}{2}\right)=\int_{0}^{x}\frac{\left(\sqrt{1-t}\right)^{d-3}}{\sqrt{t}}d t

$$

$$

B_{x}\left(\frac{1}{2},\frac{d-1}{2}\right)=\int_{0}^{x}\frac{\left(\sqrt{1-t}\right)^{d-3}}{\sqrt{t}}d t

$$

and

和

$$

B\left(\frac{1}{2},\frac{d-1}{2}\right)=B_{1}\left(\frac{1}{2},\frac{d-1}{2}\right).

$$

$$

B\left(\frac{1}{2},\frac{d-1}{2}\right)=B_{1}\left(\frac{1}{2},\frac{d-1}{2}\right).

$$

In particular, it has expectation 0 and variance $1/d$ .

特别地,其期望为0且方差为$1/d$。
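The null distribution of $c(u,v)$ can be evaluated numerically. Below is a minimal sketch in Python (standard library only) that integrates the density of the cosine similarity on $[-1,1]$, $f(c)=(1-c^{2})^{(d-3)/2}/B\left(\frac{1}{2},\frac{d-1}{2}\right)$, which follows from $c(u,v)^{2}$ being beta-distributed with parameters $\frac{1}{2}$ and $\frac{d-1}{2}$. The function name `cosine_pvalue` and the use of Simpson's rule are our own illustrative choices, not part of the method.

```python
import math


def cosine_pvalue(tau: float, d: int, n_steps: int = 20_000) -> float:
    """P(c(u, v) >= tau) when u is uniform on the unit sphere of R^d (d >= 3).

    The density of c = cos(u, v) on [-1, 1] is
        f(c) = (1 - c^2)^((d - 3) / 2) / B(1/2, (d - 1) / 2),
    and we integrate it from tau to 1 with composite Simpson's rule.
    """
    if d < 3:
        raise ValueError("d must be at least 3")
    tau = max(-1.0, min(1.0, tau))
    if tau >= 1.0:
        return 0.0
    # 1 / B(1/2, (d-1)/2) = Gamma(d/2) / (Gamma(1/2) * Gamma((d-1)/2)), computed in log space
    norm = math.exp(math.lgamma(d / 2) - math.lgamma(0.5) - math.lgamma((d - 1) / 2))
    expo = (d - 3) / 2

    def density(c: float) -> float:
        return norm * max(0.0, 1.0 - c * c) ** expo

    n = n_steps + (n_steps % 2)  # Simpson's rule needs an even number of intervals
    h = (1.0 - tau) / n
    total = density(tau) + density(1.0)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * density(tau + k * h)
    return min(1.0, max(0.0, total * h / 3.0))


if __name__ == "__main__":
    d = 2048
    for tau in (0.0, 0.05, 0.1):
        print(f"tau={tau:.2f}  p={cosine_pvalue(tau, d):.3e}")
```

When SciPy is available, the same tail for $\tau\geq 0$ can be written as `0.5 * (1 - scipy.special.betainc(0.5, (d - 1) / 2, tau ** 2))`, since `betainc` is the regularized incomplete beta function; the stdlib version above only avoids that dependency.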

Combination of $p$-values. Fisher’s method (Fisher, 1925) makes it possible to combine the $p$-values of multiple tests. We consider statistical tests $T_{1},\ldots,T_{k}$ that are independent under the null hypothesis $\mathcal{H}_{0}$. Under $\mathcal{H}_{0}$, the corresponding $p$-values $p_{1},\ldots,p_{k}$ are distributed uniformly in $[0,1]$. Hence $-\log(p_{i})$ follows an exponential distribution with rate 1, so $-2\log(p_{i})$ follows a $\chi^{2}$ distribution with two degrees of freedom. The quantity $Z=-2\sum_{i=1}^{k}\log(p_{i})$ thus follows a $\chi^{2}$ distribution with $2k$ degrees of freedom. The combined $p$-value of tests $T_{1},\ldots,T_{k}$ is the probability that a $\chi^{2}_{2k}$ random variable exceeds the value of $Z$ we observe.

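Because the number of degrees of freedom $2k$ is even, the tail of the $\chi^{2}_{2k}$ distribution has a closed form (a standard identity, given here as a worked example), which makes the combined $p$-value easy to evaluate:

$$
\mathbb{P}\left(\chi^{2}_{2k}\geq z\right)=e^{-z/2}\sum_{i=0}^{k-1}\frac{(z/2)^{i}}{i!}.
$$

For $k=1$ this gives back $p_{1}$. For $k=2$ and $p_{1}=p_{2}=0.5$, we get $z=4\log 2$ and a combined $p$-value of $\frac{1}{4}(1+2\log 2)\approx 0.60$.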

3.2. Additive marks in feature space

We first tackle a simple variant of our tracing problem. In the marking stage, we add a vector $\alpha u\in\mathbb{R}^{d}$ to the features of all training images of one class, where $u$ is a random isotropic unit vector ($\|u\|_{2}=1$) and $\alpha$ is the strength of the mark. This direction $u$ is our carrier.

If radioactive data is used at training time, the linear classifier $w$ of the corresponding class is updated with weighted sums of $\phi(x)+\alpha u$, where $\alpha$ is the strength of the mark. The linear classifier $w$ is thus likely to have a positive dot product with the direction $u$, as shown in Figure 2.

At detection time, we examine the linear classifier $w$ to determine if $w$ was trained on radioactive or vanilla data. We test the statistical hypothesis $\mathcal{H}_{1}$: “$w$ was trained using radioactive data” against the null hypothesis $\mathcal{H}_{0}$: “$w$ was trained using vanilla data”. Under the null hypothesis $\mathcal{H}_{0}$, $u$ is a random vector independent of $w$, so their cosine similarity $c(u,w)$ follows the null distribution of Section 3.1 ($c(u,w)^{2}$ follows a beta distribution with parameters $a=\frac{1}{2}$ and $b=\frac{d-1}{2}$). Under hypothesis $\mathcal{H}_{1}$, the classifier vector $w$ is more aligned with the direction $u$, so $c(u,w)$ is likely to be higher.

Thus if we observe a high value of $c(u,w)$ , its corresponding $p$ -value (the probability of it happening under the null hypothesis $\mathcal{H}_{0}$ ) is low, and we can conclude with high significance that radioactive data has been used.

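To make the argument concrete, here is a small simulation in Python (standard library only) under simplifying assumptions of our own: features are synthetic Gaussian vectors around a hypothetical class center, and "training" the class vector $w$ is replaced by taking the class centroid, i.e. a uniformly weighted sum of the (possibly marked) features. It compares $c(u,w)$ with and without the additive mark $\alpha u$. The printed z-scores use the approximation that, under $\mathcal{H}_{0}$, $c(u,w)\sqrt{d}$ is close to standard normal for large $d$ (variance $1/d$).

```python
import math
import random


def unit_vector(d, rng):
    """Random direction, uniform on the unit sphere (normalized Gaussian vector)."""
    g = [rng.gauss(0.0, 1.0) for _ in range(d)]
    norm = math.sqrt(sum(x * x for x in g))
    return [x / norm for x in g]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def centroid(features):
    """Stand-in for training w: a uniformly weighted sum of the class features."""
    n = len(features)
    return [sum(col) / n for col in zip(*features)]


def simulate(d=256, n=500, alpha=16.0, seed=0):
    """Return c(u, w) for a vanilla class and for the same class with the mark alpha * u."""
    rng = random.Random(seed)
    u = unit_vector(d, rng)  # carrier
    class_mean = [rng.gauss(0.0, 1.0) for _ in range(d)]  # hypothetical class center
    vanilla = [[m + rng.gauss(0.0, 1.0) for m in class_mean] for _ in range(n)]
    marked = [[f + alpha * ui for f, ui in zip(x, u)] for x in vanilla]  # phi(x) + alpha * u
    return cosine(u, centroid(vanilla)), cosine(u, centroid(marked))


if __name__ == "__main__":
    d = 256
    c_van, c_rad = simulate(d=d)
    print(f"vanilla:     c(u, w) = {c_van:+.3f}  (z = {c_van * math.sqrt(d):+.1f})")
    print(f"radioactive: c(u, w) = {c_rad:+.3f}  (z = {c_rad * math.sqrt(d):+.1f})")
```

The mark strength `alpha=16.0` is deliberately large so the effect is visible with a single class and a few hundred samples; it is not a value from the paper.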

Multi-class. The extension to $C$ classes follows. In the marking stage, we sample i.i.d. random directions $(u_{i})_{i=1\ldots C}$ and add them to the features of images of class $i$. At detection time, under the null hypothesis, the cosine similarities $c(u_{i},w_{i})$ are independent (since the $u_{i}$ are independent), and we can thus combine the per-class $p$-values using Fisher’s combined probability test (Section 3.1) to obtain the $p$-value for the whole dataset.

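Putting the pieces together, a minimal Python sketch of the multi-class test could look as follows. Two simplifications are ours, not the paper's: the per-class null tail is approximated by a Gaussian ($c(u_{i},w_{i})\sqrt{d}\approx\mathcal{N}(0,1)$ for large $d$) instead of the exact beta-based tail of Section 3.1, and Fisher's combination uses the closed-form tail of a $\chi^{2}$ distribution with an even number $2k$ of degrees of freedom. The function names are hypothetical.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def gaussian_tail(c, d):
    """Approximate P(c(u, w) >= c) under H0, using c * sqrt(d) ~ N(0, 1)."""
    return 0.5 * math.erfc(c * math.sqrt(d / 2.0))


def fisher_combined_pvalue(pvalues):
    """Fisher's method: tail of a chi^2 with 2k degrees of freedom at Z = -2 sum log p_i."""
    k = len(pvalues)
    half_z = -sum(math.log(p) for p in pvalues)  # Z / 2
    if half_z <= 0.0:
        return 1.0
    # P(chi2_{2k} >= Z) = exp(-Z/2) * sum_{i<k} (Z/2)^i / i!, summed in log space for stability
    log_terms = [i * math.log(half_z) - math.lgamma(i + 1) for i in range(k)]
    m = max(log_terms)
    log_p = -half_z + m + math.log(sum(math.exp(t - m) for t in log_terms))
    return math.exp(min(0.0, log_p))


def radioactive_pvalue(W, U):
    """Combined p-value that each class vector W[i] is aligned with its carrier U[i]."""
    d = len(U[0])
    # clamp to avoid log(0) when erfc underflows for very strong alignments
    per_class = [max(gaussian_tail(cosine(w, u), d), 1e-300) for w, u in zip(W, U)]
    return fisher_combined_pvalue(per_class)
```

With access to SciPy, the Gaussian approximation could be swapped for the exact tail of Section 3.1; the Fisher step is unchanged.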

3.3. Image-space perturbations

We now assume that we have a fixed, known feature extractor $\phi$. At marking time, we wish to modify the pixels of image $x$ such that the features $\phi(x)$ move in the direction $u$. We can achieve this by backpropagating gradients to the image space. This setup is very similar to adversarial examples (Goodfellow et al., 2015; Szegedy et al., 2014). More precisely, we optimize over the pixel space by solving the following optimization problem:

$$
\operatorname*{min}_{\tilde{x}:\,\|\tilde{x}-x\|_{\infty}\leq R}\mathcal{L}(\tilde{x})
$$

where the radius $R$ is a hard upper bound on the change of color levels of the image that we can accept. The loss is a combination of three terms:

$$
\mathcal{L}(\tilde{x})=-\left(\phi(\tilde{x})-\phi(x)\right)^{\top}u+\lambda_{1}\|\tilde{x}-x\|_{2}+\lambda_{2}\|\phi(\tilde{x})-\phi(x)\|_{2}.
$$

The first term encourages the features to align with $u$; the two other terms penalize the $L_{2}$ distance in both pixel and feature space. In practice, we optimize this objective by running SGD with a constant learning rate in the pixel space, projecting back into the $L_{\infty}$ ball at each step and rounding to integral pixel values every $T=10$ iterations.

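The optimization above can be sketched in plain Python. To keep the example self-contained and differentiable by hand, we assume a hypothetical linear feature extractor $\phi(x)=Ax$ acting on a flattened image with pixels in $[0,255]$; with a real convnet, the gradient would instead come from backpropagation in an autograd framework. The rounding period $T=10$ follows the text. The radius $R$, the step size, $\lambda_{1}$, $\lambda_{2}$ and the number of steps are illustrative values, not the paper's settings. Because the extractor is deterministic here, the "SGD" reduces to projected (sub)gradient descent.

```python
import math
import random


def matvec(A, x):
    """Compute A x."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rmatvec(A, y):
    """Compute A^T y."""
    out = [0.0] * len(A[0])
    for row, yi in zip(A, y):
        for j, a in enumerate(row):
            out[j] += a * yi
    return out


def norm(v):
    return math.sqrt(sum(t * t for t in v))


def mark_image(x, A, u, R=4.0, lr=1.0, lam1=0.01, lam2=0.01, steps=100, T=10):
    """Projected (sub)gradient descent on
        L(x~) = -(phi(x~) - phi(x))^T u + lam1 ||x~ - x||_2 + lam2 ||phi(x~) - phi(x)||_2
    with the hypothetical linear extractor phi(x) = A x.
    """
    xt = list(x)
    g_align = [-g for g in rmatvec(A, u)]  # gradient of the first term (constant when phi is linear)
    for it in range(1, steps + 1):
        delta = [a - b for a, b in zip(xt, x)]
        f_delta = matvec(A, delta)  # phi(x~) - phi(x)
        grad = list(g_align)
        nd, nf = norm(delta), norm(f_delta)
        if nd > 0:  # gradient of lam1 * ||delta||_2 (zero subgradient at delta = 0)
            grad = [g + lam1 * t / nd for g, t in zip(grad, delta)]
        if nf > 0:  # gradient of lam2 * ||A delta||_2
            grad = [g + lam2 * t for g, t in zip(grad, rmatvec(A, [f / nf for f in f_delta]))]
        # gradient step, then projection onto the L_inf ball of radius R and the valid pixel range
        xt = [min(max(v - lr * g, x0 - R, 0.0), x0 + R, 255.0) for v, g, x0 in zip(xt, grad, x)]
        if it % T == 0:
            xt = [float(round(v)) for v in xt]  # round to integral pixel values every T iterations
    return xt
```

Since the original pixels and $R$ are integers, rounding never leaves the $L_{\infty}$ ball, so the returned image satisfies the hard constraint exactly.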

This procedure is a generalization of classical watermarking in the Fourier space. In that case the “feature extractor” is invertible via the inverse Fourier transform, so the marking does not need to be iterative.

Data augmentation. The training stage most likely involves data augmentation, so we take it into account at marking time. Given an augmentation parameter $\theta$, the input to the neural network is not the image $\tilde{x}$ but its transformed version $F(\tilde{x},\theta)$. In practice, the data augmentations used are crop and/or resize transformations, so $\theta$ consists of the coordinates of the center and/or the size of the cropped image. The augmentations are differentiable with respect to the pixels, so we can backpropagate through them. Thus, we emulate augmentations by minimizing:

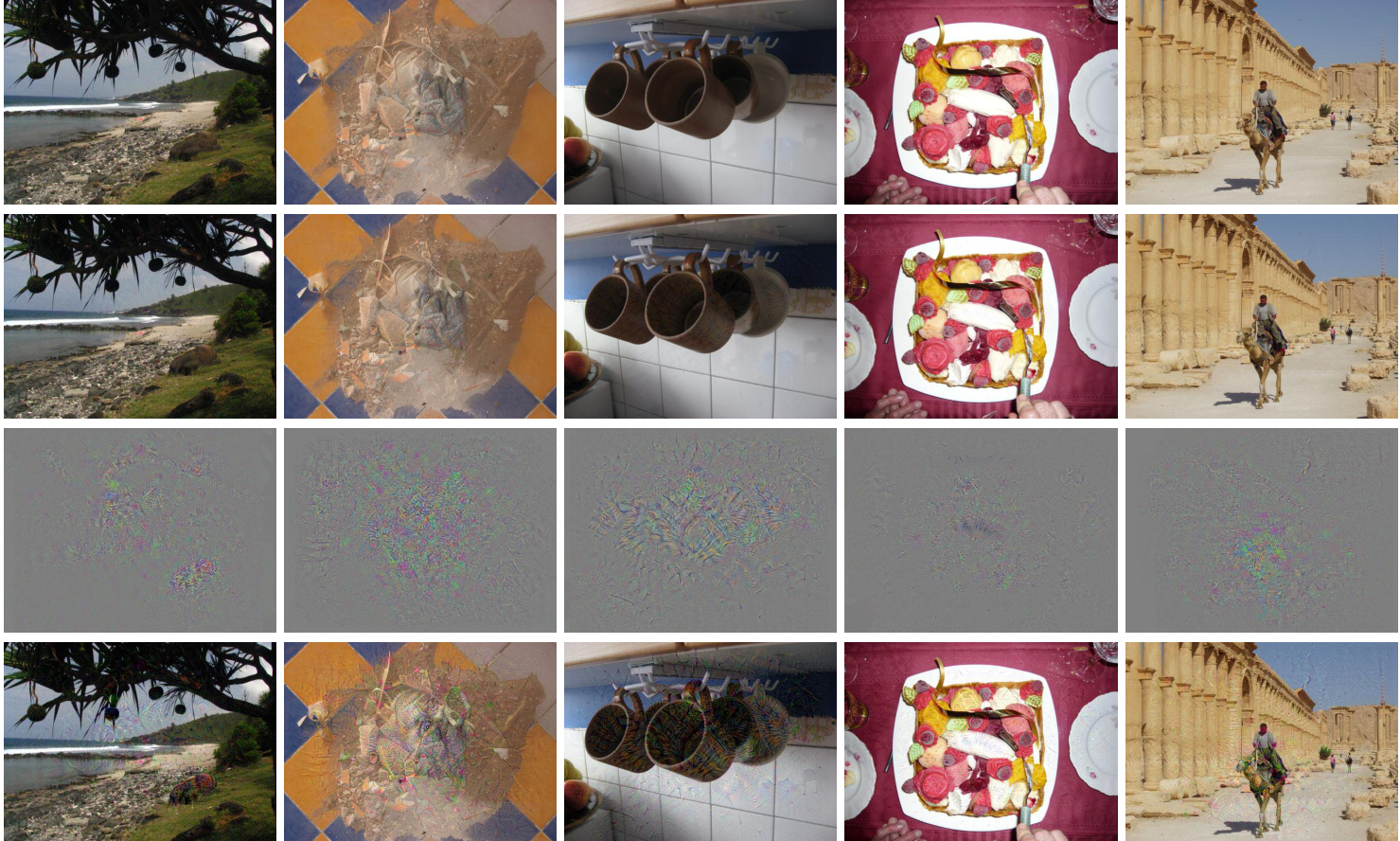

Figure 3. Radioactive images from Holidays (Jégou et al., 2008) with random crop and PSNR $=$ 42dB. First row: original image. Second row: image with a radioactive mark. Third row: visualisation of the mark amplified with a $\times5$ factor. Fourth row: We exaggerate the mark by a factor $\times5$ , which means a 14dB amplification of the additive noise, down to PSNR $\underline{{\underline{{\mathbf{\Pi}}}}}$ 28dB so that the modification become obvious w.r.t. the original image.

图 3: 来自Holidays (Jégou et al., 2008) 的放射性标记图像,采用随机裁剪且PSNR $=$ 42dB。第一行:原始图像。第二行:带放射性标记的图像。第三行:标记经 $\times5$ 因子放大后的可视化效果。第四行:我们将标记夸张放大 $\times5$ 倍,相当于对加性噪声进行14dB的放大,使PSNR降至 $\underline{{\underline{{\mathbf{\Pi}}}}}$ 28dB,从而使修改痕迹相对于原图变得明显可见。

To address this problem at detection time, we align the subspaces of the feature extractors. We find a linear mapping $\bar{\boldsymbol{M}}\in\mathbb{R}^{d\times d}$ such that $\phi_{0}(x)\approx M\phi_{t}(x)$ . The linear mapping is estimated by $L_{2}$ regression:

为解决检测时的问题,我们对特征提取器的子空间进行对齐。找到一个线性映射 $\bar{\boldsymbol{M}}\in\mathbb{R}^{d\times d}$ 使得 $\phi_{0}(x)\approx M\phi_{t}(x)$。该线性映射通过 $L_{2}$ 回归估计:

$$

\operatorname*{min}_ {M}\mathbb{E}_ {x}[|\phi_{0}(x)-M\phi_{t}(x)|_{2}^{2}].

$$

$$

\operatorname*{min}_ {M}\mathbb{E}_ {x}[|\phi_{0}(x)-M\phi_{t}(x)|_{2}^{2}].

$$

$$

\operatorname*{min}_ {\tilde{x},|\tilde{x}-x|_ {\infty}\leq R}\quad\mathbb{E}_{\theta}\left[\mathcal{L}(F(\tilde{x},\theta))\right].

$$

$$

\operatorname*{min}_ {\tilde{x},|\tilde{x}-x|_ {\infty}\leq R}\quad\mathbb{E}_{\theta}\left[\mathcal{L}(F(\tilde{x},\theta))\right].

$$

In practice, we use vanilla images of a held-out set (the validation set) to do the estimation.

在实践中,我们使用保留集(验证集)的原始图像进行估计。

Figure 3 shows examples of radioactive images and their vanilla version. We can see that the radioactive mark is not visible to the naked eye, except when we amplify it for visualization purposes (last column).

图 3: 展示了放射性图像及其原始版本的示例。可以看出,除非为了可视化目的进行放大(最后一列),否则放射性标记肉眼不可见。

3.4. White-box test with subspace alignment

3.4. 基于子空间对齐的白盒测试

We now tackle the more difficult case where the training stage includes the training of the feature extractor. In the marking stage we use feature extractor $\phi_{0}$ to generate radioactive data. At training time, a new feature extractor $\phi_{t}$ is trained together with the classification matrix $W=[w_{1},..,w_{C}]^{T}\in\mathbb{R}^{C\times d}$ . Since $\phi_{t}$ is trained from scratch, there is no reason that the output spaces of $\phi_{0}$ and $\phi_{t}$ would correspond to each other. In particular, neural networks are invariant to permutation and rescaling.

我们现在处理更复杂的情况,即训练阶段包含特征提取器的训练。在标记阶段,我们使用特征提取器 $\phi_{0}$ 生成放射性数据。训练时,新的特征提取器 $\phi_{t}$ 与分类矩阵 $W=[w_{1},..,w_{C}]^{T}\in\mathbb{R}^{C\times d}$ 一起训练。由于 $\phi_{t}$ 是从头开始训练的,$\phi_{0}$ 和 $\phi_{t}$ 的输出空间没有必然的对应关系。特别是,神经网络对排列和缩放具有不变性。

The classifier we manipulate at detection time is thus $W\phi_{t}(x)\approx WM\phi_{0}(x)$. The rows of $WM$ form classification vectors aligned with the output space of $\phi_{0}$, and we can compare these vectors to the $u_{i}$ in cosine similarity. Under the null hypothesis, the $u_{i}$ are random vectors independent of $\phi_{0}$, $\phi_{t}$, $W$ and $M$, so the cosine similarity still follows the null distribution of Section 3.1, and we can apply the techniques of Section 3.2.

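The alignment and the subsequent comparison can be sketched as follows, in plain Python for self-containment (in practice one would rely on a linear-algebra library, e.g. `numpy.linalg.lstsq`). The sketch assumes $M$ maps $\phi_{0}$ features to $\phi_{t}$ features, $\phi_{t}(x)\approx M\phi_{0}(x)$, so that the rows of $WM$ live in the output space of $\phi_{0}$. Setting the gradient of the regression objective to zero gives the normal equations $M\sum_{x}\phi_{0}(x)\phi_{0}(x)^{\top}=\sum_{x}\phi_{t}(x)\phi_{0}(x)^{\top}$, with sums over held-out vanilla images. The helper names are hypothetical, and feature matrices are lists of rows, one per image.

```python
import math


def solve(G, H):
    """Solve G X = H by Gauss-Jordan elimination with partial pivoting."""
    n = len(G)
    aug = [list(G[i]) + list(H[i]) for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0.0:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_alignment(F0, Ft):
    """Least-squares M with phi_t(x) ~ M phi_0(x); F0, Ft hold one feature row per image."""
    d0, dt = len(F0[0]), len(Ft[0])
    G = [[sum(f[i] * f[j] for f in F0) for j in range(d0)] for i in range(d0)]  # sum phi_0 phi_0^T
    H = [[sum(a[i] * b[j] for a, b in zip(F0, Ft)) for j in range(dt)] for i in range(d0)]  # sum phi_0 phi_t^T
    Mt = solve(G, H)  # M^T = G^{-1} H, shape d0 x dt
    return [list(col) for col in zip(*Mt)]  # M, shape dt x d0


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def aligned_cosines(W, M, U):
    """Cosine similarity between each row of W M (expressed in phi_0 space) and the carrier u_i."""
    WM = [[sum(w[k] * M[k][j] for k in range(len(w))) for j in range(len(M[0]))] for w in W]
    return [cosine(row, u) for row, u in zip(WM, U)]
```

The resulting cosines can then be turned into per-class $p$-values and combined with Fisher's method, exactly as in the feature-space case.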

3.5. Black-box test

In the case where we do not have access to the weights of the neural network, we can still assess whether the model has seen contaminated images by analyzing its loss $\ell(W\phi_{t}(x),y)$. If the loss of the model is lower on marked images than on vanilla images, it indicates that the model was trained on radioactive images. If we have unlimited access to a black-box model, it is possible to train a student model that mimics the outputs of the black-box model. In that case, we can reduce the problem to the analysis of the white-box student model.

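The text does not fix which statistical test compares the two losses. As one possible instantiation (our assumption, not the paper's procedure), a one-sided sign test on paired losses gives an exact $p$-value. For $n$ images that each have a vanilla and a marked version, count how often the marked version has the lower loss and compare that count with a Binomial$(n, 1/2)$ null. The cross-entropy loss below stands in for $\ell$, and all names are hypothetical.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy loss of one sample from its scores (log-sum-exp for stability)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[label]


def sign_test_pvalue(loss_vanilla, loss_marked):
    """One-sided sign test of H0 'marked and vanilla losses are exchangeable'.

    Returns P(K >= k) for K ~ Binomial(n, 1/2), where k counts the pairs in which
    the marked image has the lower loss; ties are discarded.
    """
    diffs = [v - m for v, m in zip(loss_vanilla, loss_marked) if v != m]
    n = len(diffs)
    k = sum(1 for t in diffs if t > 0)
    return sum(math.comb(n, i) for i in range(k, n + 1)) / 2 ** n
```

In use, both loss lists would be computed from the black-box scores on the same images, with `cross_entropy(scores, label)` applied to the vanilla and marked versions of each image. A paired design like this one controls for per-image difficulty. A test on the mean loss difference would be a natural alternative when many images are available.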