mixup: BEYOND EMPIRICAL RISK MINIMIZATION

Hongyi Zhang MIT

Moustapha Cisse, Yann N. Dauphin, David Lopez-Paz∗ FAIR

ABSTRACT

Large deep neural networks are powerful, but exhibit undesirable behaviors such as memorization and sensitivity to adversarial examples. In this work, we propose mixup, a simple learning principle to alleviate these issues. In essence, mixup trains a neural network on convex combinations of pairs of examples and their labels. By doing so, mixup regularizes the neural network to favor simple linear behavior in-between training examples. Our experiments on the ImageNet-2012, CIFAR-10, CIFAR-100, Google commands and UCI datasets show that mixup improves the generalization of state-of-the-art neural network architectures. We also find that mixup reduces the memorization of corrupt labels, increases the robustness to adversarial examples, and stabilizes the training of generative adversarial networks.

1 INTRODUCTION

Large deep neural networks have enabled breakthroughs in fields such as computer vision (Krizhevsky et al., 2012), speech recognition (Hinton et al., 2012), and reinforcement learning (Silver et al., 2016). In most successful applications, these neural networks share two commonalities. First, they are trained so as to minimize their average error over the training data, a learning rule also known as the Empirical Risk Minimization (ERM) principle (Vapnik, 1998). Second, the size of these state-of-the-art neural networks scales linearly with the number of training examples. For instance, the network of Springenberg et al. (2015) used $10^{6}$ parameters to model the $5\cdot10^{4}$ images in the CIFAR-10 dataset, the network of Simonyan & Zisserman (2015) used $10^{8}$ parameters to model the $10^{6}$ images in the ImageNet-2012 dataset, and the network of Chelba et al. (2013) used $2\cdot10^{10}$ parameters to model the $10^{9}$ words in the One Billion Word dataset.

Strikingly, a classical result in learning theory (Vapnik & Chervonenkis, 1971) tells us that the convergence of ERM is guaranteed as long as the size of the learning machine (e.g., the neural network) does not increase with the number of training data. Here, the size of a learning machine is measured in terms of its number of parameters or, relatedly, its VC-complexity (Harvey et al., 2017).

This contradiction challenges the suitability of ERM to train our current neural network models, as highlighted in recent research. On the one hand, ERM allows large neural networks to memorize (instead of generalize from) the training data even in the presence of strong regularization, or in classification problems where the labels are assigned at random (Zhang et al., 2017). On the other hand, neural networks trained with ERM change their predictions drastically when evaluated on examples just outside the training distribution (Szegedy et al., 2014), also known as adversarial examples. This evidence suggests that ERM is unable to explain or provide generalization on testing distributions that differ only slightly from the training data. However, what is the alternative to ERM?

The method of choice to train on similar but different examples to the training data is known as data augmentation (Simard et al., 1998), formalized by the Vicinal Risk Minimization (VRM) principle (Chapelle et al., 2000). In VRM, human knowledge is required to describe a vicinity or neighborhood around each example in the training data. Then, additional virtual examples can be drawn from the vicinity distribution of the training examples to enlarge the support of the training distribution. For instance, when performing image classification, it is common to define the vicinity of one image as the set of its horizontal reflections, slight rotations, and mild scalings. While data augmentation consistently leads to improved generalization (Simard et al., 1998), the procedure is dataset-dependent, and thus requires the use of expert knowledge. Furthermore, data augmentation assumes that the examples in the vicinity share the same class, and does not model the vicinity relation across examples of different classes.

Contribution. Motivated by these issues, we introduce a simple and data-agnostic data augmentation routine, termed mixup (Section 2). In a nutshell, mixup constructs virtual training examples

$$

\begin{array}{ll}\tilde{x}=\lambda x_{i}+(1-\lambda)x_{j}, & \text{where } x_{i},x_{j} \text{ are raw input vectors}\\ \tilde{y}=\lambda y_{i}+(1-\lambda)y_{j}, & \text{where } y_{i},y_{j} \text{ are one-hot label encodings}\end{array}

$$

$(x_{i},y_{i})$ and $(x_{j},y_{j})$ are two examples drawn at random from our training data, and $\lambda\in[0,1]$ . Therefore, mixup extends the training distribution by incorporating the prior knowledge that linear interpolations of feature vectors should lead to linear interpolations of the associated targets. mixup can be implemented in a few lines of code, and introduces minimal computation overhead.

Despite its simplicity, mixup allows a new state-of-the-art performance in the CIFAR-10, CIFAR-100, and ImageNet-2012 image classification datasets (Sections 3.1 and 3.2). Furthermore, mixup increases the robustness of neural networks when learning from corrupt labels (Section 3.4), or facing adversarial examples (Section 3.5). Finally, mixup improves generalization on speech (Section 3.3) and tabular (Section 3.6) data, and can be used to stabilize the training of GANs (Section 3.7). The source-code necessary to replicate our CIFAR-10 experiments is available at:

https://github.com/facebookresearch/mixup-cifar10.

To understand the effects of various design choices in mixup, we conduct a thorough set of ablation study experiments (Section 3.8). The results suggest that mixup performs significantly better than related methods in previous work, and each of the design choices contributes to the final performance. We conclude by exploring the connections to prior work (Section 4), as well as offering some points for discussion (Section 5).

2 FROM EMPIRICAL RISK MINIMIZATION TO mixup

In supervised learning, we are interested in finding a function $f\in{\mathcal{F}}$ that describes the relationship between a random feature vector $X$ and a random target vector $Y$ , which follow the joint distribution $P(X,Y)$ . To this end, we first define a loss function $\ell$ that penalizes the differences between predictions $f(x)$ and actual targets $y$ , for examples $(x,y)\sim P$ . Then, we minimize the average of the loss function $\ell$ over the data distribution $P$ , also known as the expected risk:

$$

R(f)=\int\ell(f(x),y)\mathrm{d}P(x,y).

$$

Unfortunately, the distribution $P$ is unknown in most practical situations. Instead, we usually have access to a set of training data $\mathcal{D}=\{(x_{i},y_{i})\}_{i=1}^{n}$, where $(x_{i},y_{i})\sim P$ for all $i=1,\ldots,n$. Using the training data $\mathcal{D}$, we may approximate $P$ by the empirical distribution

$$

P_{\delta}(x,y)=\frac{1}{n}\sum_{i=1}^{n}\delta(x=x_{i},y=y_{i}),

$$

where $\delta(x=x_{i},y=y_{i})$ is a Dirac mass centered at $(x_{i},y_{i})$ . Using the empirical distribution $P_{\delta}$ , we can now approximate the expected risk by the empirical risk:

$$

R_{\delta}(f)=\int\ell(f(x),y)\mathrm{d}P_{\delta}(x,y)=\frac{1}{n}\sum_{i=1}^{n}\ell(f(x_{i}),y_{i}). \tag{1}

$$

Learning the function $f$ by minimizing (1) is known as the Empirical Risk Minimization (ERM) principle (Vapnik, 1998). While efficient to compute, the empirical risk (1) monitors the behaviour of $f$ only at a finite set of $n$ examples. When considering functions with a number of parameters comparable to $n$ (such as large neural networks), one trivial way to minimize (1) is to memorize the training data (Zhang et al., 2017). Memorization, in turn, leads to the undesirable behaviour of $f$ outside the training data (Szegedy et al., 2014).
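
To make the memorization argument concrete, the following minimal sketch (not taken from the paper) evaluates the empirical risk (1) under a 0-1 loss for a lookup-table predictor. The toy data and the names `zero_one_loss`, `empirical_risk`, and `memorizer` are illustrative assumptions.

```python
def zero_one_loss(prediction, target):
    """0-1 loss: 1 if the prediction is wrong, 0 otherwise."""
    return 0.0 if prediction == target else 1.0


def empirical_risk(f, data, loss=zero_one_loss):
    """Average loss of f over the finite training set, as in the empirical risk."""
    return sum(loss(f(x), y) for x, y in data) / len(data)


# Hypothetical training set; the labels could just as well be random.
train = [((0.0, 1.0), 1), ((1.0, 0.0), 0), ((0.5, 0.5), 1), ((0.2, 0.9), 0)]

# A "memorizer": a lookup table that stores every training pair verbatim.
table = {x: y for x, y in train}


def memorizer(x, default=0):
    """Return the stored label, or an arbitrary default for unseen inputs."""
    return table.get(x, default)


train_risk = empirical_risk(memorizer, train)  # zero by construction
```

The lookup table reaches zero empirical risk whatever the labels are, while its output on any unseen input is just the arbitrary default. This is the sense in which the empirical risk says nothing about $f$ away from the $n$ training points.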

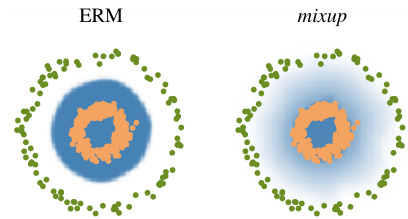

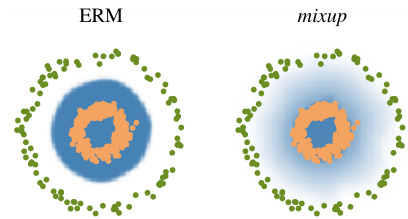

Figure 1: Illustration of mixup, which converges to ERM as $\alpha\rightarrow0$ .

(b) Effect of mixup ($\alpha=1$) on a toy problem. Green: Class 0. Orange: Class 1. Blue shading indicates $p(y=1|x)$.

However, the naive estimate $P_{\delta}$ is one out of many possible choices to approximate the true distribution $P$ . For instance, in the Vicinal Risk Minimization (VRM) principle (Chapelle et al., 2000), the distribution $P$ is approximated by

$$

P_{\nu}(\tilde{x},\tilde{y})=\frac{1}{n}\sum_{i=1}^{n}\nu(\tilde{x},\tilde{y}|x_{i},y_{i}),

$$

where $\nu$ is a vicinity distribution that measures the probability of finding the virtual feature-target pair $(\tilde{x},\tilde{y})$ in the vicinity of the training feature-target pair $(x_{i},y_{i})$. In particular, Chapelle et al. (2000) considered Gaussian vicinities $\nu(\tilde{x},\tilde{y}|x_{i},y_{i})=\mathcal{N}(\tilde{x}-x_{i},\sigma^{2})\delta(\tilde{y}=y_{i})$, which is equivalent to augmenting the training data with additive Gaussian noise. To learn using VRM, we sample the vicinal distribution to construct a dataset $\mathcal{D}_{\nu}:=\{(\tilde{x}_{i},\tilde{y}_{i})\}_{i=1}^{m}$, and minimize the empirical vicinal risk:

$$

R_{\nu}(f)=\frac{1}{m}\sum_{i=1}^{m}\ell(f(\tilde{x}_ {i}),\tilde{y}_{i}).

$$
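
As an illustration of the Gaussian-vicinity case, the sketch below draws $m$ virtual pairs by picking a training pair uniformly at random, adding isotropic Gaussian noise to its features, and keeping its label, then averages a loss over them. It is a minimal sketch, not the authors' code: the toy data, the threshold classifier, and the helper names are assumptions made for the example.

```python
import random


def sample_gaussian_vicinity(data, m, sigma, rng):
    """Draw m virtual pairs: pick (x_i, y_i) uniformly, add N(0, sigma^2) noise to x_i, keep y_i."""
    virtual = []
    for _ in range(m):
        x, y = rng.choice(data)
        x_tilde = [xi + rng.gauss(0.0, sigma) for xi in x]
        virtual.append((x_tilde, y))
    return virtual


def empirical_vicinal_risk(f, virtual, loss):
    """Average loss of f over the m virtual examples."""
    return sum(loss(f(x), y) for x, y in virtual) / len(virtual)


rng = random.Random(0)
train = [([0.0, 0.0], 0), ([1.0, 1.0], 1)]  # hypothetical two-point training set
virtual = sample_gaussian_vicinity(train, m=200, sigma=0.1, rng=rng)


def threshold_classifier(x):
    """Hypothetical classifier: predict 1 when the mean feature exceeds 0.5."""
    return int(sum(x) / len(x) > 0.5)


def zero_one(prediction, target):
    return float(prediction != target)


risk = empirical_vicinal_risk(threshold_classifier, virtual, zero_one)
```

Because the label is copied unchanged, every virtual example stays in the class of the training point it was generated from, which is the limitation of classical data augmentation that mixup is designed to lift.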

The contribution of this paper is to propose a generic vicinal distribution, called mixup:

$$

\mu(\tilde{x},\tilde{y}|x_{i},y_{i})=\frac{1}{n}\sum_{j}^{n}\mathbb{E}\left[\delta(\tilde{x}=\lambda\cdot x_{i}+(1-\lambda)\cdot x_{j},\tilde{y}=\lambda\cdot y_{i}+(1-\lambda)\cdot y_{j})\right],

$$

where $\lambda\sim\operatorname{Beta}(\alpha,\alpha)$ , for $\alpha\in(0,\infty)$ . In a nutshell, sampling from the mixup vicinal distribution produces virtual feature-target vectors

$$

\begin{array}{l}\tilde{x}=\lambda x_{i}+(1-\lambda)x_{j},\\ \tilde{y}=\lambda y_{i}+(1-\lambda)y_{j},\end{array}

$$

where $(x_{i},y_{i})$ and $(x_{j},y_{j})$ are two feature-target vectors drawn at random from the training data, and $\lambda\in[0,1]$ . The mixup hyper-parameter $\alpha$ controls the strength of interpolation between feature-target pairs, recovering the ERM principle as $\alpha\rightarrow0$ .
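
The role of $\alpha$ can be checked numerically. The sketch below samples $\lambda\sim\operatorname{Beta}(\alpha,\alpha)$ with Python's standard-library sampler and reports the average of $\min(\lambda,1-\lambda)$, which is zero when no mixing takes place. The summary statistic, the sample size, and the function names are assumptions chosen for illustration.

```python
import random


def sample_lambda(alpha, rng):
    """Draw lambda ~ Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


def mean_distance_to_endpoint(alpha, n=5000, seed=0):
    """Average of min(lambda, 1 - lambda); 0 means each virtual example equals a real one."""
    rng = random.Random(seed)
    draws = (sample_lambda(alpha, rng) for _ in range(n))
    return sum(min(lam, 1.0 - lam) for lam in draws) / n


near_erm = mean_distance_to_endpoint(0.05)  # mass concentrates near 0 and 1
uniform = mean_distance_to_endpoint(1.0)    # Beta(1, 1) is Uniform(0, 1)
```

For small $\alpha$ almost every draw is close to 0 or 1, so the virtual example nearly coincides with one of the two training examples, which is how the ERM limit arises. With $\alpha=1$ the statistic is close to 0.25, the value expected for a uniform $\lambda$.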

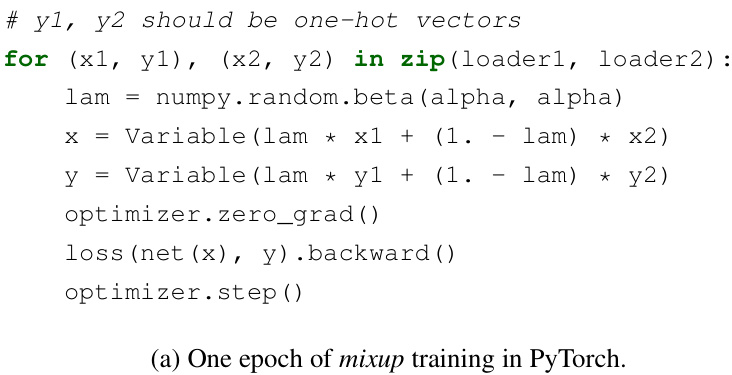

The implementation of mixup training is straightforward, and introduces a minimal computation overhead. Figure 1a shows the few lines of code necessary to implement mixup training in PyTorch. Finally, we mention alternative design choices. First, in preliminary experiments we find that convex combinations of three or more examples with weights sampled from a Dirichlet distribution do not provide further gain, but increase the computation cost of mixup. Second, our current implementation uses a single data loader to obtain one minibatch, and then mixup is applied to the same minibatch after random shuffling. We found this strategy works equally well, while reducing I/O requirements. Third, interpolating only between inputs with equal label did not lead to the performance gains of mixup discussed in the sequel. More empirical comparison can be found in Section 3.8.
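
The single-minibatch strategy can be sketched without any deep learning framework. The code below is not the PyTorch snippet of Figure 1a; it is a plain-Python illustration in which each example is mixed with a partner taken from a random permutation of the same minibatch. Drawing one $\lambda$ per minibatch, and the names `one_hot` and `mixup_minibatch`, are assumptions made here.

```python
import random


def one_hot(label, num_classes):
    """One-hot encoding of an integer class label."""
    return [1.0 if k == label else 0.0 for k in range(num_classes)]


def mixup_minibatch(inputs, labels, num_classes, alpha, rng):
    """Mix a minibatch with a shuffled copy of itself, using one lambda for the whole batch."""
    lam = rng.betavariate(alpha, alpha)
    perm = list(range(len(inputs)))
    rng.shuffle(perm)  # partner index j for every example i
    mixed_x, mixed_y = [], []
    for i, j in enumerate(perm):
        yi = one_hot(labels[i], num_classes)
        yj = one_hot(labels[j], num_classes)
        mixed_x.append([lam * a + (1.0 - lam) * b for a, b in zip(inputs[i], inputs[j])])
        mixed_y.append([lam * a + (1.0 - lam) * b for a, b in zip(yi, yj)])
    return mixed_x, mixed_y, lam


rng = random.Random(0)
batch_x = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.0], [0.0, 2.0]]  # hypothetical inputs
batch_y = [0, 1, 2, 1]                                      # hypothetical labels
mixed_x, mixed_y, lam = mixup_minibatch(batch_x, batch_y, num_classes=3, alpha=1.0, rng=rng)
```

Each mixed target remains a valid probability vector, so it can be fed to a cross-entropy loss that accepts soft labels. Only one minibatch is read per step, which is where the I/O saving mentioned above comes from.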

What is mixup doing? The mixup vicinal distribution can be understood as a form of data augmentation that encourages the model $f$ to behave linearly in-between training examples. We argue that this linear behaviour reduces the amount of undesirable oscillations when predicting outside the training examples. Also, linearity is a good inductive bias from the perspective of Occam’s razor,

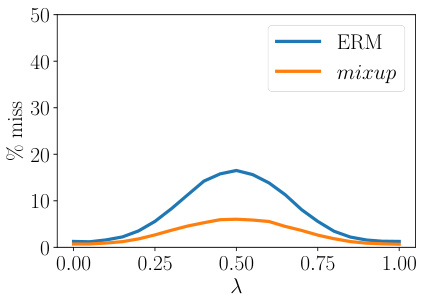

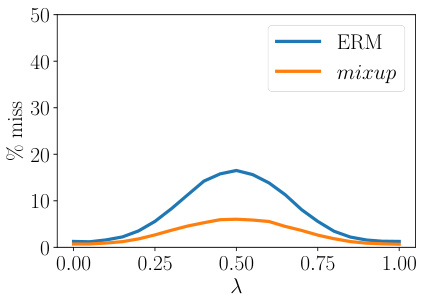

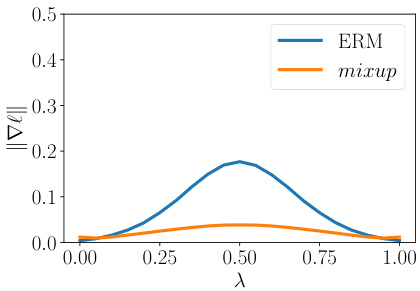

(a) Prediction errors in-between training data. Evaluated at $x=\lambda x_{i}+(1-\lambda)x_{j}$, a prediction is counted as a “miss” if it does not belong to $\{y_{i},y_{j}\}$. The model trained with mixup has fewer misses.

(b) Norm of the gradients of the model w.r.t. input in-between training data, evaluated at $x=\lambda x_{i}+(1-\lambda)x_{j}$. The model trained with mixup has smaller gradient norms.

Figure 2: mixup leads to more robust model behaviors in-between the training data.

Table 1: Validation errors for ERM and mixup on the development set of ImageNet-2012.

| Model | Method | Epochs | Top-1 Error | Top-5 Error |
|---|---|---|---|---|
| ResNet-50 | ERM (Goyal et al., 2017) | 90 | 23.5 | - |
| ResNet-50 | mixup α = 0.2 | 90 | 23.3 | 6.6 |
| ResNet-101 | ERM (Goyal et al., 2017) | 90 | 22.1 | - |
| ResNet-101 | mixup α = 0.2 | 90 | 21.5 | 5.6 |
| ResNeXt-101 32*4d | ERM (Xie et al., 2016) | 100 | 21.2 | - |
| ResNeXt-101 32*4d | ERM | 90 | 21.2 | 5.6 |
| ResNeXt-101 32*4d | mixup α = 0.4 | 90 | 20.7 | 5.3 |
| ResNeXt-101 64*4d | ERM (Xie et al., 2016) | 100 | 20.4 | 5.3 |
| ResNeXt-101 64*4d | mixup α = 0.4 | 90 | 19.8 | 4.9 |
| ResNet-50 | ERM | 200 | 23.6 | 7.0 |
| ResNet-50 | mixup α = 0.2 | 200 | 22.1 | 6.1 |
| ResNet-101 | ERM | 200 | 22.0 | 6.1 |
| ResNet-101 | mixup α = 0.2 | 200 | 20.8 | 5.4 |
| ResNeXt-101 32*4d | ERM | 200 | 21.3 | 5.9 |
| ResNeXt-101 32*4d | mixup α = 0.4 | 200 | 20.1 | 5.0 |

since it is one of the simplest possible behaviors. Figure 1b shows that mixup leads to decision boundaries that transition linearly from class to class, providing a smoother estimate of uncertainty. Figure 2 illustrates the average behaviors of two neural network models trained on the CIFAR-10 dataset using ERM and mixup. Both models have the same architecture, are trained with the same procedure, and are evaluated at the same points in-between randomly sampled training data. The model trained with mixup is more stable in terms of model predictions and gradient norms in-between training samples.

3 EXPERIMENTS

3.1 IMAGENET CLASSIFICATION

We evaluate mixup on the ImageNet-2012 classification dataset (Russakovsky et al., 2015). This dataset contains 1.3 million training images and 50,000 validation images, from a total of 1,000 classes. For training, we follow standard data augmentation practices: scale and aspect ratio distortions, random crops, and horizontal flips (Goyal et al., 2017). During evaluation, only the $224\times224$ central crop of each image is tested. We use mixup and ERM to train several state-of-the-art ImageNet-2012 classification models, and report both top-1 and top-5 error rates in Table 1.

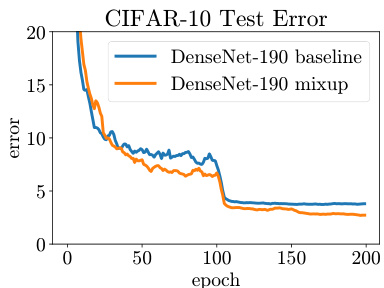

Figure 3: Test errors for ERM and mixup on the CIFAR experiments.

| Dataset | Model | ERM | mixup |
|---|---|---|---|
| CIFAR-10 | PreAct ResNet-18 | 5.6 | 4.2 |
| CIFAR-10 | WideResNet-28-10 | 3.8 | 2.7 |
| CIFAR-10 | DenseNet-BC-190 | 3.7 | 2.7 |
| CIFAR-100 | PreAct ResNet-18 | 25.6 | 21.1 |
| CIFAR-100 | WideResNet-28-10 | 19.4 | 17.5 |
| CIFAR-100 | DenseNet-BC-190 | 19.0 | 16.8 |

(a) Test errors for the CIFAR experiments.

(b) Test error evolution for the best ERM and mixup models.

For all the experiments in this section, we use data-parallel distributed training in Caffe2 with a minibatch size of 1,024. We use the learning rate schedule described in (Goyal et al., 2017). Specifically, the learning rate is increased linearly from 0.1 to 0.4 during the first 5 epochs, and it is then divided by 10 after 30, 60 and 80 epochs when training for 90 epochs; or after 60, 120 and 180 epochs when training for 200 epochs.
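
The schedule just described can be written as a small function of the epoch. This is a sketch under stated assumptions: the function name `learning_rate` is hypothetical, the warm-up is interpolated on a possibly fractional epoch count, and a decay is applied as soon as the stated number of epochs has been completed.

```python
def learning_rate(epoch, total_epochs=90):
    """Learning rate after `epoch` completed epochs for a 90- or 200-epoch run."""
    warmup_epochs, start_lr, peak_lr = 5, 0.1, 0.4
    if epoch < warmup_epochs:
        # Linear warm-up from 0.1 to 0.4 during the first 5 epochs.
        return start_lr + (peak_lr - start_lr) * epoch / warmup_epochs
    # Epochs after which the rate is divided by 10.
    milestones = {90: (30, 60, 80), 200: (60, 120, 180)}[total_epochs]
    decays = sum(epoch >= m for m in milestones)
    return peak_lr / (10 ** decays)


rates_90 = [learning_rate(e) for e in (0, 5, 30, 60, 80)]
```

Under these assumptions the rate plateaus at 0.4 after the warm-up and ends the run at 0.0004 in both the 90-epoch and the 200-epoch settings.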

For mixup, we find that $\alpha\in[0.1,0.4]$ leads to improved performance over ERM, whereas for large $\alpha$, mixup leads to underfitting. We also find that models with higher capacities and/or longer training runs are the ones to benefit the most from mixup. For example, when trained for 90 epochs, the mixup variants of ResNet-101 and ResNeXt-101 obtain a greater improvement ($0.5\%$ to $0.6\%$) over their ERM analogues than the gain of smaller models such as ResNet-50 ($0.2\%$). When trained for 200 epochs, the top-1 error of the mixup variant of ResNet-50 is further reduced by $1.2\%$ compared to the 90 epoch run, whereas its ERM analogue stays the same.

3.2 CIFAR-10 AND CIFAR-100

We conduct additional image classification experiments on the CIFAR-10 and CIFAR-100 datasets to further evaluate the generalization performance of mixup. In particular, we compare ERM and mixup training for: PreAct ResNet-18 (He et al., 2016) as implemented in (Liu, 2017), WideResNet-28-10 (Zagoruyko & Komodakis, 2016a) as implemented in (Zagoruyko & Komodakis, 2016b), and DenseNet (Huang et al., 2017) as implemented in (Veit, 2017). For DenseNet, we change the growth rate to 40 to follow the DenseNet-BC-190 specification from (Huang et al., 2017). For mixup, we fix $\alpha=1$, which results in interpolations $\lambda$ uniformly distributed between zero and one. All models are trained on a single Nvidia Tesla P100 GPU using PyTorch for 200 epochs on the training set with 128 examples per minibatch, and evaluated on the test set. Learning rates start at 0.1 and are divided by 10 after 100 and 150 epochs for all models except WideResNet. For WideResNet, we follow (Zagoruyko & Komodakis, 2016a) and divide the learning rate by 10 after 60, 120 and 180 epochs. Weight decay is set to $10^{-4}$. We do not use dropout in these experiments.

We summarize our results in Figure 3a. In both CIFAR-10 and CIFAR-100 classification problems, the models trained using mixup significantly outperform their analogues trained with ERM. As seen in Figure 3b, mixup and ERM converge at a similar speed to their best test errors. Note that the DenseNet models in (Huang et al., 2017) were trained for 300 epochs with further learning rate decays scheduled at the 150 and 225 epochs, which may explain the discrepancy between the performance of DenseNet reported in Figure 3a and the original result of Huang et al. (2017).

3.3 SPEECH DATA

Next, we perform speech recognition experiments using the Google commands dataset (Warden, 2017). The dataset contains 65,000 utterances, where each utterance is about one-second long and belongs to one out of 30 classes. The classes correspond to voice commands such as yes, no, down, left, as pronounced by a few thousand different speakers. To preprocess the utterances, we first extract normalized spectrograms from the original waveforms at a sampling rate of $16\mathrm{kHz}$. Next, we zero-pad the spectrograms to equalize their sizes at $160\times101$. For speech data, it is reasonable to apply mixup both at the waveform and spectrogram levels. Here, we apply mixup at the spectrogram level just before feeding the data to the network.
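
The padding step matters for mixup because two spectrograms can only be interpolated element-wise once they share a shape. The sketch below pads a list-of-lists spectrogram with zeros up to $160\times101$ and then mixes two padded spectrograms. Padding at the bottom and right, the helper names, and the constant-valued toy inputs are assumptions; the paper does not specify these details.

```python
def zero_pad(spectrogram, target_rows=160, target_cols=101):
    """Pad a 2-D list of lists with zeros (bottom and right) up to the target size."""
    rows = len(spectrogram)
    cols = len(spectrogram[0]) if rows else 0
    if rows > target_rows or cols > target_cols:
        raise ValueError("spectrogram is larger than the target size")
    padded = [list(row) + [0.0] * (target_cols - cols) for row in spectrogram]
    padded += [[0.0] * target_cols for _ in range(target_rows - rows)]
    return padded


def mix_spectrograms(a, b, lam):
    """Element-wise convex combination of two equally sized spectrograms."""
    return [[lam * u + (1.0 - lam) * v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


short = [[1.0] * 80 for _ in range(160)]   # hypothetical utterance shorter than the target
full = [[2.0] * 101 for _ in range(160)]   # hypothetical utterance already at full size
mixed = mix_spectrograms(zero_pad(short), zero_pad(full), lam=0.25)
```

In the padded region of the shorter utterance the mixed value is simply the other spectrogram scaled by $1-\lambda$.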

Figure 4: Classification errors of ERM and mixup on the Google commands dataset.

| Model | Method | Validation set | Test set |
|---|---|---|---|
| LeNet | ERM | 9.8 | 10.3 |
| LeNet | mixup (α = 0.1) | 10.1 | 10.8 |
| LeNet | mixup (α = 0.2) | 10.2 | 11.3 |
| VGG-11 | ERM | 5.0 | 4.6 |
| VGG-11 | mixup (α = 0.1) | 4.0 | 3.8 |
| VGG-11 | mixup (α = 0.2) | 3.9 | 3.4 |

For this experiment, we compare a LeNet (Lecun et al., 2001) and a VGG-11 (Simonyan & Zisserman, 2015) architecture, each of them composed of two convolutional and two fully-connected layers. We train each model for 30 epochs with minibatches of 100 examples, using Adam as the optimizer (Kingma & Ba, 2015). Training starts with a learning rate equal to $3\times10^{-3}$ and is divided by 10 every 10 epochs. For mixup, we use a warm-up period of five epochs where we train the network on original training examples, since we find it speeds up initial convergence. Figure 4 shows that mixup outperforms ERM on this task, especially when using VGG-11, the model with larger capacity.

3.4 MEMORIZATION OF CORRUPTED LABELS

Following Zhang et al. (2017), we evaluate the robustness of ERM and mixup models against randomly corrupted labels. We hypothesize that increasing the strength of mixup interpolation $\alpha$ should generate virtual examples further from the training examples, making memorization more difficult to achieve. In particular, it should be easier to learn interpolations between real examples compared to memorizing interpolations involving random labels. We adapt an open-source implementation (Zhang, 2017) to generate three CIFAR-10 training sets, where $20\%$, $50\%$, or $80\%$ of the labels are replaced by random noise, respectively. All the test labels are kept intact for evaluation. Dropout (Srivastava et al., 2014) is considered the state-of-the-art method for learning with corrupted labels (Arpit et al., 2017). Thus, we compare in these experiments mixup, dropout, mixup + dropout, and ERM. For mixup, we choose $\alpha\in\{1,2,8,32\}$; for dropout, we add one dropout layer in each PreAct block after the ReLU activation layer between two convolution layers, as suggested in (Zagoruyko & Komodakis, 2016a). We choose the dropout probability $p\in\{0.5,0.7,0.8,0.9\}$. For the combination of mixup and dropout, we choose $\alpha\in\{1,2,4,8\}$ and $p\in\{0.3,0.5,0.7\}$. These experiments use the PreAct ResNet-18 (He et al., 2016) model implemented in (Liu, 2017). All the other settings are the same as in Section 3.2.
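
One plausible way to build such corrupted training sets is sketched below. It is not the open-source implementation cited above: the function `corrupt_labels`, the choice of resampling an exact fraction of indices, and the uniform draw over classes are all assumptions made for illustration.

```python
import random


def corrupt_labels(labels, fraction, num_classes, rng):
    """Replace a fixed fraction of labels with uniformly random classes.

    Returns the noisy labels and the sorted indices that were resampled.
    A resampled label can coincide with the original one by chance.
    """
    n_corrupt = int(round(fraction * len(labels)))
    chosen = sorted(rng.sample(range(len(labels)), n_corrupt))
    noisy = list(labels)
    for i in chosen:
        noisy[i] = rng.randrange(num_classes)
    return noisy, chosen


rng = random.Random(0)
clean = [i % 10 for i in range(1000)]  # hypothetical 10-class label vector
noisy, chosen = corrupt_labels(clean, fraction=0.2, num_classes=10, rng=rng)
```

Keeping the list of resampled indices makes it possible to report training error separately on examples whose labels were left intact and on those whose labels were replaced, in the spirit of the real versus corrupted split used in Table 2.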

We summarize our results in Table 2, where we note the best test error achieved during the training session, as well as the final test error after 200 epochs. To quantify the amount of memorization, we also evaluate the training errors at the last epoch on real labels and corrupted labels. As the training progresses with a smaller learning rate (e.g. less than 0.01), the ERM model starts to overfit the corrupted labels. When using a large probability (e.g. 0.7 or 0.8), dropout can effectively reduce overfitting. mixup with a large $\alpha$ (e.g. 8 or 32) outperforms dropout on both the best and last epoch test errors, and achieves lower training error on real labels while remaining resistant to noisy labels. Interestingly, mixup + dropout performs the best of all, showing that the two methods are compatible.

3.5 ROBUSTNESS TO ADVERSARIAL EXAMPLES

One undesirable consequence of models trained using ERM is their fragility to adversarial examples (Szegedy et al., 2014). Adversarial examples are obtained by adding tiny (visually imperceptible) perturbations to legitimate examples in order to deteriorate the performance of the model. The adversarial noise is generated by ascending the gradient of the loss surface with respect to the legitimate example. Improving the robustness to adversarial examples is a topic of active research.
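
The idea of ascending the loss gradient with respect to the input can be illustrated on a tiny logistic model, where that gradient has a closed form. The sketch below takes a single signed-gradient step of size `epsilon`, which is one common way to realise the idea and is chosen here as an assumption. The weights, the example, and the step size are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_input_gradient(w, b, x, y):
    """Binary cross-entropy of a logistic model and its gradient w.r.t. the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    grad_x = [(p - y) * wi for wi in w]  # d(loss)/dx for the logistic model
    return loss, grad_x


def signed_gradient_step(w, b, x, y, epsilon):
    """Move every input coordinate by epsilon in the direction that increases the loss."""
    _, grad_x = loss_and_input_gradient(w, b, x, y)
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad_x)]


w, b = [2.0, -1.0], 0.1   # hypothetical, already-trained weights
x, y = [0.3, 0.2], 1      # a legitimate example of class 1
x_adv = signed_gradient_step(w, b, x, y, epsilon=0.2)
clean_loss, _ = loss_and_input_gradient(w, b, x, y)
adv_loss, _ = loss_and_input_gradient(w, b, x_adv, y)
```

The perturbation is bounded by `epsilon` in every coordinate, yet the loss on the perturbed input is higher than on the original one. For deep networks the same gradient is obtained by backpropagation to the input rather than in closed form.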

Table 2: Results on the corrupted label experiments for the best models.

| Label corruption | Method | Test error (best) | Test error (last) | Training error (real) | Training error (corrupted) |
|---|---|---|---|---|---|
| 20% | ERM | 12.7 | 16.6 | 0.05 | 0.28 |
| 20% | ERM + dropout (p = 0.7) | 8.8 | 10.4 | 5.26 | 83.55 |
| 20% | mixup (α = 8) | 5.9 | 6.4 | 2.27 | 86.32 |
| 20% | mixup + dropout (α = 4, p = 0.1) | 6.2 | 6.2 | 1.92 | 85.02 |
| 50% | ERM | 18.8 | 44.6 | 0.26 | 0.64 |
| 50% | ERM + dropout (p = 0.8) | 14.1 | 15.5 | 12.71 | 86.98 |
| 50% | mixup (α = 32) | 11.3 | 12.7 | 5.84 | 85.71 |
| 50% | mixup + dropout (α = 8, p = 0.3) | 10.9 | 10.9 | 7.56 | 87.90 |
| 80% | ERM | 36.5 | 73.9 | 0.62 | 0.83 |
| 80% | ERM + dropout (p = 0.8) | 30.9 | 35.1 | 29.84 | 86.37 |
| 80% | mixup (α = 32) | 25.3 | 30.9 | 18.92 | 85.44 |
| 80% | mixup + dropout (α = 8, p = 0.3) | 24.0 | 24.8 | 19.70 | 87.67 |
