A Survey of Large Language Models in Medicine: Progress, Application, and Challenge

周鸿渐,刘丰林,谷博阳,邹新宇,黄锦发,

ABSTRACT

摘要

Large language models (LLMs), such as ChatGPT, have received substantial attention due to their capabilities for understanding and generating human language. While there has been a burgeoning trend in research focusing on the employment of LLMs in supporting different medical tasks (e.g., enhancing clinical diagnostics and providing medical education), a comprehensive review of these efforts, particularly their development, practical applications, and outcomes in medicine, remains scarce. Therefore, this review aims to provide a detailed overview of the development and deployment of LLMs in medicine, including the challenges and opportunities they face. In terms of development, we provide a detailed introduction to the principles of existing medical LLMs, including their basic model structures, number of parameters, and sources and scales of data used for model development. It serves as a guide for practitioners in developing medical LLMs tailored to their specific needs. In terms of deployment, we offer a comparison of the performance of different LLMs across various medical tasks, and further compare them with state-of-the-art lightweight models, aiming to provide a clear understanding of the distinct advantages and limitations of LLMs in medicine. Overall, in this review, we address the following study questions: 1) What are the practices for developing medical LLMs? 2) How to measure the medical task performance of LLMs in a medical setting? 3) How have medical LLMs been employed in real-world practice? 4) What challenges arise from the use of medical LLMs? and 5) How to more effectively develop and deploy medical LLMs? By answering these questions, this review aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs. We also maintain a regularly updated list of practical guides on medical LLMs at:

大语言模型 (LLM/Large Language Model) ,如 ChatGPT,因其理解和生成人类语言的能力而受到广泛关注。尽管当前研究趋势聚焦于利用大语言模型支持各类医疗任务 (例如提升临床诊断和提供医学教育) ,但针对这些工作的系统性综述,尤其是其在医学领域的发展、实际应用及成果的全面分析仍较为匮乏。因此,本文旨在详细概述大语言模型在医学中的开发与部署,包括其面临的挑战与机遇。在开发层面,我们详细介绍了现有医疗大语言模型的原理,包括其基础模型结构、参数量级以及模型开发所用的数据来源与规模,为开发者构建符合特定需求的医疗大语言模型提供指南。在部署层面,我们对比了不同大语言模型在各类医疗任务中的表现,并进一步将其与最先进的轻量级模型进行对比,以明晰大语言模型在医学领域的独特优势与局限。总体而言,本文围绕以下研究问题展开:1) 医疗大语言模型的开发实践有哪些?2) 如何衡量大语言模型在医疗场景中的任务表现?3) 医疗大语言模型如何应用于真实世界实践?4) 使用医疗大语言模型会引发哪些挑战?5) 如何更有效地开发与部署医疗大语言模型?通过回答这些问题,本文旨在揭示大语言模型在医学领域的机遇与挑战,并为构建高效医疗大语言模型提供实用参考。我们还在以下地址持续更新医疗大语言模型的实践指南列表:

https://github.com/AI-in-Health/MedLLMsPracticalGuide

BOX: Key points

BOX: 关键点

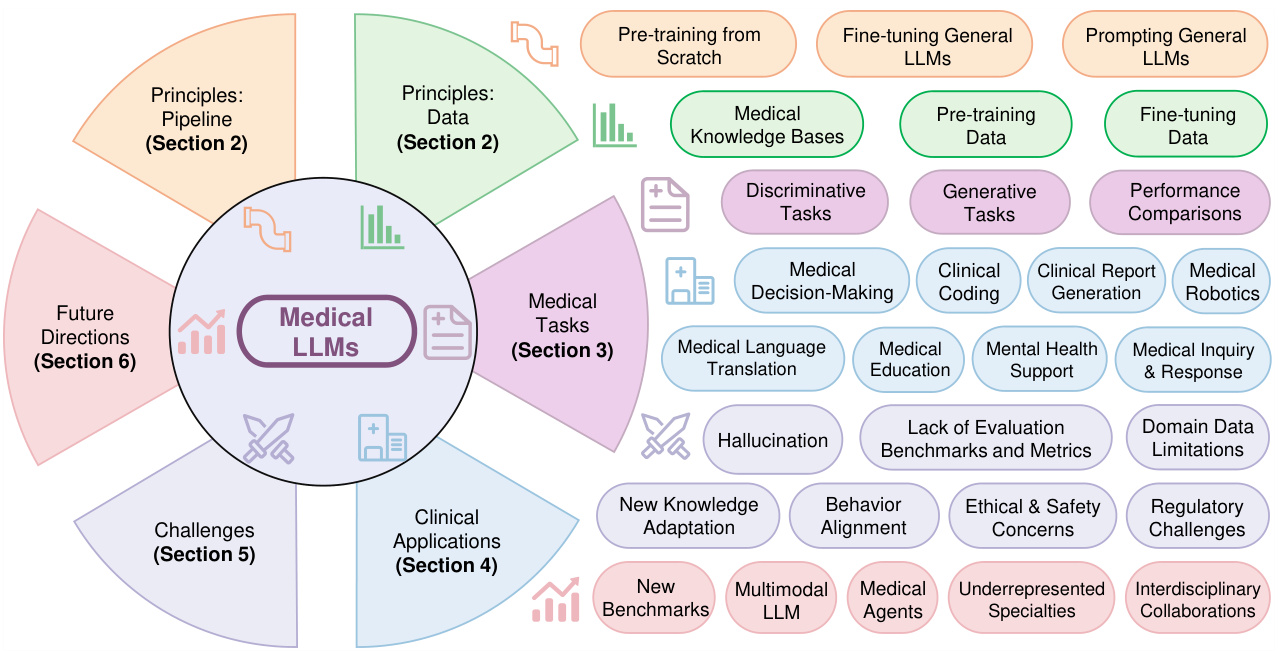

Figure 1. An overview of the practical guides for medical large language models.

图 1: 医疗大语言模型实用指南概览

1 Introduction

1 引言

The recently emerged general large language models (LLMs)1,2, such as $\mathrm{PaLM}^{3}$ , LLaMA4,5, GPT-series6,7, and ChatGLM8,9, have advanced the state-of-the-art in various natural language processing (NLP) tasks, including text generation, text summarization, and question answering. Inspired by these successes, several endeavors have been made to adapt general LLMs to the medicine domain, leading to the emergence of medical LLMs10,11. For example, based on $\mathrm{PaLM}^{3}$ and GPT $.4^{7}$ , MedPaLM $2^{11}$ and Med Prompt 12 have respectively achieved a competitive accuracy of 86.5 and 90.2 compared to human experts $(87.0^{13})$ ) in the United States Medical Licensing Examination (USMLE)14. In particular, based on publicly available general LLMs (e.g. LLaMA4,5), a wide range of medical LLMs, including Chat Doctor 15, MedAl paca 16, PMC-LLaMA13, BenTsao17, and Clinical Camel18, have been introduced. As a result, medical LLMs have gained growing research interests in assisting medical professionals to improve patient care 19,20.

最近出现的通用大语言模型 (LLM)[1,2],如 $\mathrm{PaLM}^{3}$、LLaMA[4,5]、GPT系列[6,7] 和 ChatGLM[8,9],在文本生成、文本摘要和问答等多种自然语言处理 (NLP) 任务中取得了最先进的成果。受这些成功的启发,人们开始尝试将通用大语言模型应用于医学领域,从而催生了医学大语言模型[10,11]。例如,基于 $\mathrm{PaLM}^{3}$ 和 GPT $.4^{7}$ 的 MedPaLM $2^{11}$ 和 Med Prompt[12] 在美国医师执照考试 (USMLE)[14] 中分别达到了 86.5 和 90.2 的准确率,与人类专家 $(87.0^{13})$ 的表现相当。特别是基于公开可用的通用大语言模型 (如 LLaMA[4,5]),一系列医学大语言模型相继问世,包括 Chat Doctor[15]、MedAlpaca[16]、PMC-LLaMA[13]、BenTsao[17] 和 Clinical Camel[18]。因此,医学大语言模型在辅助医疗专业人员改善患者护理方面引起了越来越多的研究兴趣[19,20]。

Although existing medical LLMs have achieved promising results, there are some key issues in their development and application that need to be addressed. First, many of these models primarily focus on medical dialogue and medical questionanswering tasks, but their practical utility in clinical practice is often overlooked 19. Recent research and reviews 19,21,22 have begun to explore the potential of medical LLMs in different clinical scenarios, including Electronic Health Records (EHRs)23, discharge summary generation 20, health education 24, and care planning 11. However, they primarily focus on presenting clinical applications of LLMs, especially online commercial LLMs like ChatGPT (including GPT-3.5 and GPT $4^{7}$ ), without providing practical guidelines for the development of medical LLMs. Besides, they mainly perform case studies to conduct the human evaluation on a small number of samples, thus lacking evaluation datasets for assessing model performance in clinical scenarios. Second, most existing medical LLMs report their performances mainly on answering medical questions, neglecting other biomedical domains, such as medical language understanding and generation. These research gaps motivate this review which offers a comprehensive review of the development of LLMs and their applications in medicine. We aim to cover topics on existing medical LLMs, various medical tasks, clinical applications, and arising challenges.

尽管现有的医疗大语言模型已取得显著成果,但其开发与应用仍存在若干关键问题亟待解决。首先,许多模型主要聚焦于医疗对话和问答任务,却常忽视其在临床实践中的实际效用[19]。近期研究与综述[19,21,22]开始探索医疗大语言模型在电子健康档案(EHRs)[23]、出院小结生成[20]、健康教育[24]和护理计划[11]等临床场景的潜力,但主要侧重于展示大语言模型(尤其是ChatGPT等在线商业模型,包括GPT-3.5和GPT-4[7])的临床应用,未提供医疗大语言模型开发的实用指南。此外,这些研究多通过小样本案例进行人工评估,缺乏评估临床场景模型性能的数据集。其次,现有医疗大语言模型主要报告医学问答性能,忽视了医学语言理解与生成等其他生物医学领域。这些研究空白促使本文全面综述大语言模型发展及其医学应用,涵盖现有医疗大语言模型、多样化医疗任务、临床应用及新兴挑战等主题。

As shown in Figure 1, this review seeks to answer the following questions. Section 2: What are LLMs? How can medical LLMs be effectively built? Section 3: How are the current medical LLMs evaluated? What capabilities do medical LLMs offer beyond traditional models? Section 4: How should medical LLMs be applied in clinical settings? Section 5: What challenges should be addressed when implementing medical LLMs in clinical practice? Section 6: How can we optimize the construction of medical LLMs to enhance their applicability in clinical settings, ultimately contributing to medicine and creating a positive societal impact?

如图 1 所示,本综述旨在回答以下问题。

第 2 节:什么是大语言模型 (LLM)?如何有效构建医学大语言模型?

第 3 节:当前医学大语言模型如何评估?相比传统模型,医学大语言模型具备哪些额外能力?

第 4 节:医学大语言模型应如何应用于临床场景?

第 5 节:在临床实践中部署医学大语言模型需解决哪些挑战?

第 6 节:如何优化医学大语言模型的构建以提升其临床适用性,最终推动医学发展并创造积极社会影响?

For the first question, we analyze the foundational principles underpinning current medical LLMs, providing detailed descriptions of their architecture, parameter scales, and the datasets used during their development. This exposition aims to serve as a valuable resource for researchers and clinicians designing medical LLMs tailored to specific requirements, such as computational constraints, data privacy concerns, and the integration of local knowledge bases. For the second question, we evaluate the performance of medical LLMs across ten biomedical NLP tasks, encompassing both disc rim i native and generative tasks. This comparative analysis elucidates how these models outperform traditional AI approaches, offering insights into the specific capabilities that render LLMs effective in clinical environments. The third question, the practical deployment of medical LLMs in clinical settings, is explored through the development of guidelines tailored for seven distinct clinical application scenarios. This section outlines practical implementations, emphasizing specific functionalities of medical LLMs that are leveraged in each scenario. The fourth question emphasizes addressing the challenges associated with the clinical deployment of medical LLMs, such as the risk of generating factually inaccurate yet plausible outputs (hallucination), and the ethical, legal, and safety implications. Citing recent studies, we argue for a comprehensive evaluation framework that assesses the trustworthiness of medical LLMs to ensure their responsible and effective utilization in healthcare. For the last question, we propose future research directions to advance the medical LLMs field. This includes fostering interdisciplinary collaboration between AI specialists and medical professionals, advocating for a ’doctor-in-the-loop’ approach, and emphasizing human-centered design principles.

针对第一个问题,我们分析了当前医疗大语言模型的基础原理,详细阐述了其架构、参数规模及开发过程中使用的数据集。这一阐述旨在为研究人员和临床医生设计符合特定需求(如计算资源限制、数据隐私问题及本地知识库整合)的医疗大语言模型提供宝贵参考。

第二个问题中,我们评估了医疗大语言模型在十项生物医学自然语言处理任务中的表现,涵盖判别式与生成式任务。通过对比分析,阐明了这些模型如何超越传统AI方法,并揭示了使其在临床环境中表现优异的具体能力。

第三个问题聚焦医疗大语言模型在临床场景的实际部署,通过制定七种不同临床应用场景的专属指南展开探讨。该部分概述了具体实施方案,重点突出各场景中医疗大语言模型所调用的特定功能。

第四个问题着重探讨医疗大语言模型临床部署面临的挑战,例如生成事实错误但看似合理输出(幻觉)的风险,以及伦理、法律与安全隐患。援引最新研究,我们主张建立综合评估框架以检验医疗大语言模型的可信度,确保其在医疗领域负责任且高效的应用。

最后关于未来研究方向,我们提出推动医疗大语言模型领域发展的多项建议:促进AI专家与医疗从业者的跨学科合作,倡导"医生参与闭环"方法,并强调以人为本的设计原则。

By establishing robust training data, benchmarks, metrics, and deployment strategies through co-development efforts, we aim to accelerate the responsible and efficacious integration of medical LLMs into clinical practice. This study therefore seeks to stimulate continued research and development in this interdisciplinary field, with the objective of realizing the profound potential of medical LLMs in enhancing clinical practice and advancing medical science for the betterment of society.

通过共同开发建立强大的训练数据、基准、指标和部署策略,我们旨在加速医疗大语言模型(LLM)在临床实践中的负责任且有效的整合。因此,本研究旨在促进这一跨学科领域的持续研发,以实现医疗大语言模型在改善临床实践和推动医学进步方面的深远潜力,从而造福社会。

BOX 1: Background of Large Language Models (LLMs)

BOX 1: 大语言模型 (LLM) 背景

The impressive performance of LLMs can be attributed to Transformer-based language models, large-scale pre-training, and scaling laws.

大语言模型的卓越性能可归功于基于Transformer的语言模型、大规模预训练和缩放定律。

Language Models A language model 25,26,27 is a probabilistic model that models the joint probability distribution of tokens (meaningful units of text, such as words or subwords or morphemes) in a sequence, i.e., the probabilities of how words and phrases are used in sequences. Therefore, it can predict the likelihood of a sequence of tokens given the previous tokens, which can be used to predict the next token in a sequence or to generate new sequences.

语言模型

语言模型 [25,26,27] 是一种概率模型,用于建模序列中 token (文本的有意义单元,如单词、子词或语素) 的联合概率分布,即单词和短语在序列中的使用概率。因此,它能够根据先前的 token 预测序列中 token 的可能性,从而用于预测序列中的下一个 token 或生成新序列。

The Transformer architecture The recurrent neural network (RNN) 28,26 has been widely used for language modeling by processing tokens sequentially and maintaining a vector named hidden state that encodes the context of previous tokens. Nonetheless, sequential processing makes it unsuitable for parallel training and limits its ability to capture long-range dependencies, making it computationally expensive and hindering its learning ability for long sequences. The strength of the Transformer 29 lies in its fully attentive mechanism, which relies exclusively on the attention mechanism and eliminates the need for recurrence. When processing each token, the attention mechanism computes a weighted sum of the other input tokens, where the weights are determined by the relevance between each input token and the current token. It allows the model to adaptively focus on different parts of the sequence to effectively learn the joint probability distribution of tokens. Therefore, Transformer not only enables efficient modeling of long-text but also allows highly paralleled training 30, thus reducing training costs. They make the Transformer highly scalable, and therefore it is efficient to obtain LLMs through the large-scale pre-training strategy.

Transformer架构

循环神经网络(RNN) [28,26] 通过按顺序处理token并维护一个名为隐藏状态的向量(用于编码先前token的上下文)而被广泛用于语言建模。然而,顺序处理使其不适合并行训练,并限制了其捕获长距离依赖关系的能力,导致计算成本高昂且阻碍其对长序列的学习能力。Transformer [29] 的优势在于其完全基于注意力机制的全注意力机制,无需循环结构。在处理每个token时,注意力机制会计算其他输入token的加权和,其中权重由每个输入token与当前token的相关性决定。这使得模型能够自适应地关注序列的不同部分,从而有效学习token的联合概率分布。因此,Transformer不仅能高效建模长文本,还支持高度并行化训练 [30],从而降低训练成本。这些特性使Transformer具备高度可扩展性,因此能通过大规模预训练策略高效获得大语言模型。

Large-scale Pre-training The LLMs are trained on massive corpora of unlabeled texts (e.g., Common Crawl, Wiki, and Books) to learn rich linguistic knowledge and language patterns. The common training objectives are masked language modeling (MLM) and next token prediction (NTP). In MLM, a portion of the input text is masked, and the model is tasked with predicting the masked text based on the remaining unmasked context, encouraging the model to capture the semantic and syntactic relationships between tokens 30; NTP is another common training objective, where the model is required to predict the next token in a sequence given the previous tokens. It helps the model to predict the next token 6.

大规模预训练

大语言模型 (LLM) 通过海量无标注文本 (如 Common Crawl、Wiki 和 Books 等) 训练,以学习丰富的语言知识和语言模式。常见的训练目标包括掩码语言建模 (MLM) 和下一词元预测 (NTP)。在 MLM 中,输入文本的部分内容被掩码,模型需要根据未掩码的上下文预测被掩码的文本,从而促使模型学习词元 (Token) 之间的语义和句法关系 [30];NTP 是另一种常见训练目标,要求模型根据已生成的词元序列预测下一个词元,这种机制能有效提升模型的连续文本生成能力 [6]。

Scaling Laws LLMs are the scaled-up versions of Transformer architecture 29 with increased numbers of Transformer layers, model parameters, and volume of pre-training data. The “scaling laws” 31,32 predict how much improvement can be expected in a model’s performance as its size increases (in terms of parameters, layers, data, or the amount of training computed). The scaling laws proposed by OpenAI 31 show that to achieve optimal model performance, the budget allocation for model size should be larger than the data.

大语言模型的扩展规律

大语言模型是Transformer架构[29]的扩展版本,通过增加Transformer层数、模型参数和预训练数据量来实现。扩展规律[31,32]预测了随着模型规模(参数、层数、数据量或计算量)增大,其性能将如何提升。OpenAI[31]提出的扩展规律表明,为获得最佳模型性能,模型规模的预算分配应大于数据预算。

The scaling laws proposed by Google DeepMind 32 show that both model and data sizes should be increased in equal scales. The scaling laws guide researchers to allocate resources and anticipate the benefits of scaling models.

谷歌DeepMind提出的扩展定律[32]表明,模型规模和数据规模应当同步扩大。该定律为研究者提供了资源分配依据,并能预测模型扩展带来的收益。

General Large Language Models Existing general LLMs can be divided into three categories based on their architecture (Table 1).

通用大语言模型

现有通用大语言模型 (LLM) 根据架构可分为三类 (表 1)。

Encoder-only LLMs consisting of a stack of Transformer encoder layers, employ a bidirectional training strategy that allows them to integrate context from both the left and the right of a given token in the input sequence. This bi-directional it y enables the models to achieve a deep understanding of the input sentences 30. Therefore, encoder-only LLMs are particularly suitable for language understanding tasks (e.g., sentiment analysis document classification) where the full context of the input is essential for accurate predictions. BERT 30 and DeBERTa 33 are the representative encoder-only LLMs.

仅编码器的大语言模型由一系列Transformer编码器层组成,采用双向训练策略,使其能够整合输入序列中给定token左右两侧的上下文。这种双向性使模型能够深入理解输入句子[30]。因此,仅编码器的大语言模型特别适合语言理解任务(例如情感分析、文档分类),这些任务需要完整输入上下文以实现准确预测。BERT[30]和DeBERTa[33]是代表性的仅编码器大语言模型。

Decoder-only LLMs utilize a stack of Transformer decoder layers and are characterized by their uni-directional (left-to-right) processing of text, enabling them to generate language sequentially. This architecture is trained unidirectional ly using the next token prediction training objective to predict the next token in a sequence, given all the previous tokens. After training, the decoder-only LLMs generate sequences auto regressive ly (i.e. token-by-token). The examples are the GPT-series developed by OpenAI 6,7, the LLaMA-series developed by Meta 4,5, and the $\mathrm{Pa}\mathrm{LM}^{3}$ and Bard (Gemini) 34 developed by Google. Based on the LLaMA model, Alpaca 35 is fine-tuned with 52k self-instructed data supervision. In addition, Baichuan 36 is trained on approximately 1.2 trillion tokens that support bilingual communication in Chinese and English. These LLMs have been used successfully in language generation.

仅解码器大语言模型采用堆叠的Transformer解码器层结构,其特点是单向(从左到右)处理文本,从而实现序列化语言生成。该架构通过下一token预测训练目标进行单向训练,即根据已生成的所有token预测序列中的下一个token。训练完成后,仅解码器大语言模型以自回归方式(逐个token)生成序列。典型实例包括OpenAI开发的GPT系列[6,7]、Meta研发的LLaMA系列[4,5],以及谷歌推出的$\mathrm{Pa}\mathrm{LM}^{3}$和Bard (Gemini)[34]。基于LLaMA模型,Alpaca[35]通过5.2万条自指令数据进行监督微调。此外,百川[36]在约1.2万亿token的双语语料上训练,支持中英双语交流。这些大语言模型已在语言生成领域取得显著成功。

Encoder-decoder LLMs are designed to simultaneously process input sequences and generate output sequences. They consist of a stack of bidirectional Transformer encoder layers followed by a stack of unidirectional Transformer decoder layers. The encoder processes and understands the input sequences, while the decoder generates the output sequences 8,9,37. Representative examples of encoder-decoder LLMs include Flan-T5 38, and ChatGLM 8,9. Specifically, ChatGLM 8,9 has 6.2B parameters and is a conversational open-source LLM specially optimized for Chinese to support Chinese-English bilingual question-answering.

编码器-解码器大语言模型旨在同时处理输入序列并生成输出序列。其结构由双向Transformer编码器层堆叠与单向Transformer解码器层堆叠组成。编码器负责处理和理解输入序列,解码器则生成输出序列 [8,9,37]。典型的编码器-解码器大语言模型包括Flan-T5 [38] 和ChatGLM [8,9]。其中ChatGLM [8,9] 拥有62亿参数,是专为中文优化的开源对话大语言模型,支持中英双语问答。

Table 1. Summary of existing general (large) language models, their underlying structures, numbers of parameters, and datasets used for model training. Column “# params” shows the number of parameters, M: million, B: billion.

| Domains | Model Structures | Models | #Params | Pre-train Data Scale |

| General-domain (Large) Language Models | Encoder-only | BERT30 RoBERTa 39 DeBERTa 33 GPT-240 Vicuna41 Alpaca 35 Mistral 42 | 110M/340M 355M 1.5B 1.5B 7B/13B 7B/13B | 3.3B tokens 161GB 160GB 40GB LLaMA+70K dialogues LLaMA+52KIFT |

| Decoder-only | LLaMA 4 LLaMA-25 LLaMA-343 GPT-36 Qwen 44 PaLM3 FLAN-PaLM37 Gemini (Bard) 34 GPT-3.545 GPT-47 Claude-3 46 | 7B 7B/13B/33B/65B 7B/13B/34B/70B 8B/70B 6.7B/13B/175B 1.8B/7B/14B/72B 8B/62B/540B 540B | 1.4T tokens 2T tokens 15T tokens 300B tokens 3T tokens 780B tokens | |

| Encoder-Decoder | BART47 ChatGLM8.9 T538 FLAN-T5 37 UL248 GLM9 | 140M/400M 6.2B 11B 3B/11B 19.5B 130B | 160GB 1T tokens 1T tokens 780B tokens 1T tokens 400B tokens |

表 1: 现有通用(大)语言模型概览,包括其底层结构、参数量及训练数据集。"# params"列显示参数量,M:百万,B:十亿。

| 领域 | 模型结构 | 模型 | #Params | 预训练数据规模 |

|---|---|---|---|---|

| 通用领域(大)语言模型 | Encoder-only | BERT[30] RoBERTa[39] DeBERTa[33] GPT-2[40] Vicuna[41] Alpaca[35] Mistral[42] | 110M/340M 355M 1.5B 1.5B 7B/13B 7B/13B | 3.3B tokens 161GB 160GB 40GB LLaMA+70K dialogues LLaMA+52KIFT |

| Decoder-only | LLaMA[4] LLaMA-2[5] LLaMA-3[43] GPT-3[6] Qwen[44] PaLM[3] FLAN-PaLM[37] Gemini(Bard)[34] GPT-3.5[45] GPT-4[47] Claude-3[46] | 7B 7B/13B/33B/65B 7B/13B/34B/70B 8B/70B 6.7B/13B/175B 1.8B/7B/14B/72B 8B/62B/540B 540B | 1.4T tokens 2T tokens 15T tokens 300B tokens 3T tokens 780B tokens | |

| Encoder-Decoder | BART[47] ChatGLM[8][9] T5[38] FLAN-T5[37] UL2[48] GLM[9] | 140M/400M 6.2B 11B 3B/11B 19.5B 130B | 160GB 1T tokens 1T tokens 780B tokens 1T tokens 400B tokens |

2 The Principles of Medical Large Language Models

2 医疗大语言模型的原理

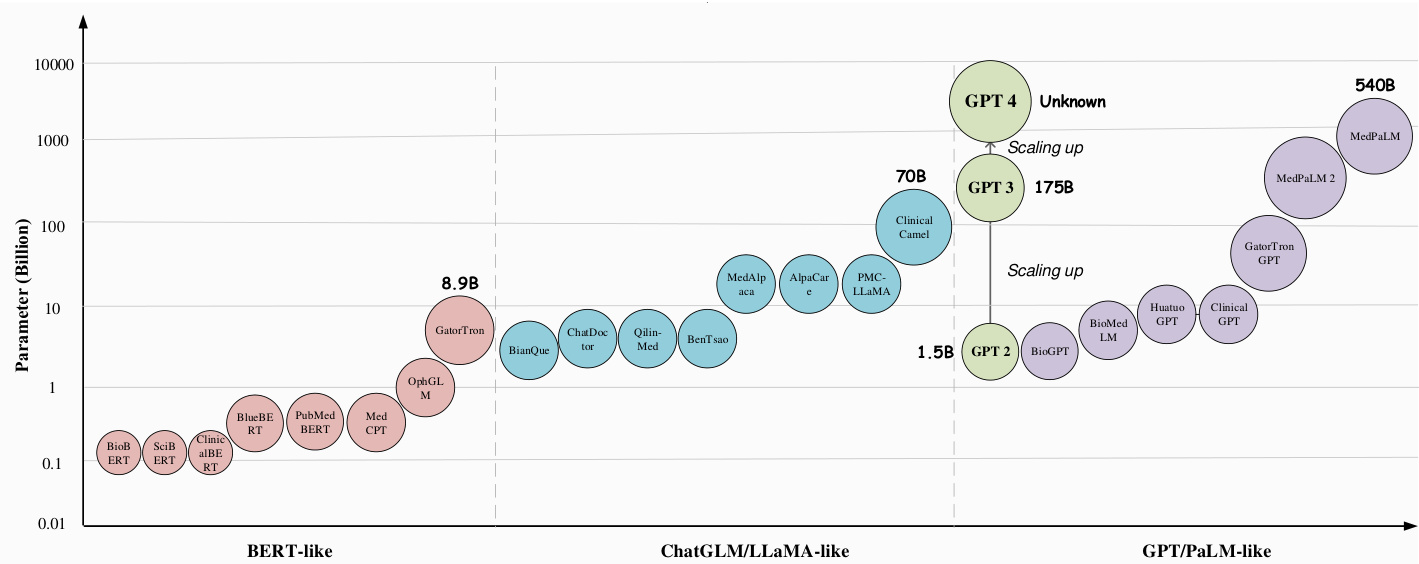

Box 1 and Table 1 briefly introduce the background of general LLMs1, e.g., GPT $4^{7}$ . Table 2 summarizes the currently available medical LLMs according to their model development. Existing medical LLMs are mainly pre-trained from scratch, fine-tuned from existing general LLMs, or directly obtained through prompting to align the general LLMs to the medical domain. Therefore, we introduce the principles of medical LLMs in terms of these three methods: pre-training, fine-tuning, and prompting. Meanwhile, we further summarize the medical LLMs according to their model architectures in Figure 2.

框 1 和表 1 简要介绍了通用大语言模型 (LLM) 的背景,例如 GPT-4 [7]。表 2 根据模型开发方式总结了当前可用的医疗大语言模型。现有医疗大语言模型主要通过从头预训练、基于现有通用大语言模型微调,或直接通过提示 (prompting) 使通用大语言模型适配医疗领域获得。因此,我们从预训练、微调和提示这三个方法层面介绍医疗大语言模型的原理。同时,我们在图 2 中进一步根据模型架构对医疗大语言模型进行了分类。

Table 2. Summary of existing medical-domain LLMs, in terms of their model development, the number of parameters (# params), the scale of pre-training/fine-tuning data, and the data source. M: million, B: billion.

| Domains | Model Development Models | # Params | Data Scale | Data Source | |

| BioBERT49 PubMedBERT52 | 110M 110M/340M | 18B tokens | PubMed 50+PMC 51 | ||

| 3.2B tokens | PubMed 50+PMC 51 | ||||

| SciBERT53 | 110M | 3.17B tokens | Literature 54 | ||

| NYUTron55 | 110M | 7.25M notes, 4.1B tokens | NYU Notes 5s | ||

| ClinicalBERT56 | 110M | 112k clinical notes | MIMIC-II157 | ||

| BioM-ELECTRA58 | 110M/335M | PubMed 50 | |||

| BioMed-RoBERTa59 | 125M | 7.55B tokens | S20RC6 | ||

| BioLinkBERT61 | 110M/340M | 21GB | PubMed 50 | ||

| BlueBERT 6263.64 | 110M/340M | >4.5B tokens | PubMed 50+MIMIC-III57 | ||

| SciFive 65 | 220M/770M | PubMed 50+PMC 51 | |||

| ClinicalT566 | 220M/770M | 2M clinical notes | MIMIC-III57 | ||

| 330M | 255M articles | PubMed 50 | |||

| Medical-domain LLMs (Sec. 2) | MedCPT67 DRAGON6S | 360M | 6GB | BookCorpus 69 | |

| BioGPT70 | 1.5B | 15M articles | PubMed 50 | ||

| BioMedLM71 | 2.7B | 110GB | Pile 2 | ||

| OphGLM73 | 6.2B | 20k dialogues | MedDialog 74 | ||

| GatorTron 23 | 8.9B | >82Btokens+6B tokens | EHRs23+PubMed 50 | ||

| 2.5B tokens+0.5B tokens | Wiki+MIMIC-II7 | ||||

| GatorTronGPT 75 DoctorGLM 76 | 5B/20B 6.2B | 277B tokens | EHRs 75 CMD.77 | ||

| BianQue 78 | 6.2B | 323MB dialogues 2.4M dialogues | BianQueCorpus 7s | ||

| ClinicaIGPT79 | 96k EHRs + 100k dialogues | MD-EHR 79+MedDialog 74 | |||

| Qilin-Med 80 | 7B | 192 medical QA | VariousMedQA14 | ||

| ChatDoctor 's | 7B 7B | 3GB | ChiMed 80 | ||

| BenTsao 17 | 7B | 110k dialogues 8k instructions | HealthCareMagic \$1+iCliniq2 CMeKG-8K83 | ||

| HuatuoGPT&4 | 7B | 226k instructions&dialogues | Hybrid SFT&4 | ||

| Baize-healthcare&5 | 7B | 101K dialogues | Quora+MedQuAD&6 | ||

| BioMedGPT87 MedAlpaca16 | 10B | S2ORC60 | |||

| 7B/13B | >26B tokens 160k medical QA | Medical Meadow 16 | |||

| 7B/13B | MedInstruct-52k88 | ||||

| AlpaCare 8 | 13B | 52k instructions | |||

| Zhongjing 89 PMC-LLaMA 13 | 70k dialogues | CMtMedQA89 | |||

| Fine-tuning (Sec.2.2) CPLLM9 | 13B | 79.2B tokens | Books+Literature 60+MedC-I13 | ||

| 13B | 109k EHRs | eICU-CRD9+MIMIC-IV92 | |||

| Med42 93 | 7B/70B | 250M tokens | PubMed + MedQA14 + OpenOrca | ||

| MEDITRON 94.95 | 7B/70B | 48.1B tokens | PubMed 50+Guidelines 94 | ||

| OpenBioLLM% | 8B/70B | ||||

| MedLlama3-v20 97 | 8B/70B | ||||

| Clinical Camel 18 13B/70B | 70k dialogues+100k articles 4k medical QA | ShareGPT98+PubMed 50 MedQA14 | |||

| MedPaLM-2 11 340B | 193k medical QA | MultiMedQA 11 | |||

| Med-Flamingo 99 | 600k pairs | Multimodal Textbook+PMC-OA99 VQA-RAD100+PathVQA101 | |||

| LLaVA-Med 102 | 660k pairs | PMC-15M 102+VQA-RAD 100 SLAKE103+PathVQA101 | |||

| MAIRA-1 104 | 337k pairs | MIMIC-CXR105 | |||

| RadFM 106 | 32M pairs | MedMD 106 MedQA-R&RS 108++MultiMedQA11 | |||

| Med-Gemin 107.10s | +MIMIC-I157+MultiMedBench109 | ||||

| CodeX110 | GPT-3.5 / LLaMA-2 Chain-of-Thought (CoT 111 | ||||

| Prompting (Sec.2.3) | DeID-GPTI12 | ChatGPT / GPT-4 | Chain-of-Thought (CoT) I1 | ||

| ChatCADI13 Dr. Knows I14 | ChatGPT | In-Context Learning (ICL) ICL | UMLS 115 | ||

| MedPaLM10 | ChatGPT PaLM (540B) | CoT & ICL | MultiMedQA11 | ||

| MedPrompt 12 | GPT-4 | CoT & ICL 1 | |||

| Retrieval-Augmented Generation (RAG)PubMed+Guidelines 17UpToDate 1+Dyname1 | |||||

| QA-RAG 120 | Chat-Orthopedist I16 | ChatGPT | |||

| Almanac 21 | ChatGPT | RAG | FDA QA 120 | ||

| ChatGPT | RAG & CoT | Clinical QA 121 | |||

| Oncology-GPT-4 93 | GPT-4 | RAG & ICL | Oncology Guidelines from ASCO and ESMO |

表 2: 现有医疗领域大语言模型总结,涵盖模型开发、参数量 (# params)、预训练/微调数据规模及数据来源。M: 百万,B: 十亿。

| 领域 | 模型开发模型 | # 参数量 | 数据规模 | 数据来源 | |

|---|---|---|---|---|---|

| BioBERT49 PubMedBERT52 | 110M 110M/340M | 18B tokens | PubMed 50+PMC 51 | ||

| 3.2B tokens | PubMed 50+PMC 51 | ||||

| SciBERT53 | 110M | 3.17B tokens | Literature 54 | ||

| NYUTron55 | 110M | 7.25M notes, 4.1B tokens | NYU Notes 55 | ||

| ClinicalBERT56 | 110M | 112k clinical notes | MIMIC-III57 | ||

| BioM-ELECTRA58 | 110M/335M | PubMed 50 | |||

| BioMed-RoBERTa59 | 125M | 7.55B tokens | S2ORC60 | ||

| BioLinkBERT61 | 110M/340M | 21GB | PubMed 50 | ||

| BlueBERT62-64 | 110M/340M | >4.5B tokens | PubMed 50+MIMIC-III57 | ||

| SciFive65 | 220M/770M | PubMed 50+PMC 51 | |||

| ClinicalT566 | 220M/770M | 2M clinical notes | MIMIC-III57 | ||

| 330M | 255M articles | PubMed 50 | |||

| 医疗领域大语言模型 (第2节) | MedCPT67 DRAGON68 | 360M | 6GB | BookCorpus69 | |

| BioGPT70 | 1.5B | 15M articles | PubMed 50 | ||

| BioMedLM71 | 2.7B | 110GB | Pile72 | ||

| OphGLM73 | 6.2B | 20k dialogues | MedDialog74 | ||

| GatorTron23 | 8.9B | >82B tokens+6B tokens | EHRs23+PubMed 50 | ||

| 2.5B tokens+0.5B tokens | Wiki+MIMIC-III57 | ||||

| GatorTronGPT75 DoctorGLM76 | 5B/20B 6.2B | 277B tokens | EHRs75 CMD77 | ||

| BianQue78 | 6.2B | 323MB dialogues 2.4M dialogues | BianQueCorpus78 | ||

| ClinicalGPT79 | 96k EHRs + 100k dialogues | MD-EHR79+MedDialog74 | |||

| Qilin-Med80 | 7B | 192 medical QA | VariousMedQA14 | ||

| ChatDoctor81 | 7B 7B | 3GB | ChiMed80 | ||

| BenTsao17 | 7B | 110k dialogues 8k instructions | HealthCareMagic81+iCliniq82 CMeKG-8K83 | ||

| HuatuoGPT84 | 7B | 226k instructions&dialogues | Hybrid SFT84 | ||

| Baize-healthcare85 | 7B | 101K dialogues | Quora+MedQuAD86 | ||

| BioMedGPT87 MedAlpaca16 | 10B | S2ORC60 | |||

| 7B/13B | >26B tokens 160k medical QA | Medical Meadow16 | |||

| 7B/13B | MedInstruct-52k88 | ||||

| AlpaCare88 | 13B | 52k instructions | |||

| Zhongjing89 PMC-LLaMA13 | 70k dialogues | CMTMedQA89 | |||

| 微调 (第2.2节) CPLLM90 | 13B | 79.2B tokens | Books+Literature60+MedC-I13 | ||

| 13B | 109k EHRs | eICU-CRD90+MIMIC-IV92 | |||

| Med4293 | 7B/70B | 250M tokens | PubMed + MedQA14 + OpenOrca | ||

| MEDITRON94-95 | 7B/70B | 48.1B tokens | PubMed50+Guidelines94 | ||

| OpenBioLLM96 | 8B/70B | ||||

| MedLlama3-v2097 | 8B/70B | ||||

| Clinical Camel18 | 13B/70B | 70k dialogues+100k articles 4k medical QA | ShareGPT98+PubMed50 MedQA14 | ||

| MedPaLM-211 | 340B | 193k medical QA | MultiMedQA11 | ||

| Med-Flamingo99 | 600k pairs | Multimodal Textbook+PMC-OA99 VQA-RAD100+PathVQA101 | |||

| LLaVA-Med102 | 660k pairs | PMC-15M102+VQA-RAD100 SLAKE103+PathVQA101 | |||

| MAIRA-1104 | 337k pairs | MIMIC-CXR105 | |||

| RadFM106 | 32M pairs | MedMD106 MedQA-R&RS108++MultiMedQA11 | |||

| Med-Gemini107-109 | +MIMIC-III57+MultiMedBench109 | ||||

| CodeX110 | GPT-3.5/LLaMA-2 Chain-of-Thought (CoT)111 | ||||

| 提示 (第2.3节) | DeID-GPT112 | ChatGPT/GPT-4 | Chain-of-Thought (CoT)112 | ||

| ChatCAD113 Dr. Knows114 | ChatGPT | In-Context Learning (ICL) | UMLS115 | ||

| MedPaLM10 | ChatGPT PaLM (540B) | CoT & ICL | MultiMedQA11 | ||

| MedPrompt12 | GPT-4 | CoT & ICL12 | |||

| Retrieval-Augmented Generation (RAG) PubMed+Guidelines17 UpToDate119+Dynamed119 | |||||

| QA-RAG120 | Chat-Orthopedist116 | ChatGPT | |||

| Almanac121 | ChatGPT | RAG | FDA QA120 | ||

| ChatGPT | RAG & CoT | Clinical QA121 | |||

| Oncology-GPT-493 | GPT-4 | RAG & ICL | Oncology Guidelines from ASCO and ESMO |

2.1 Pre-training

2.1 预训练

Pre-training typically involves training an LLM on a large corpus of medical texts, including both structured and unstructured text, to learn the rich medical knowledge. The corpus may include $\mathrm{EHRs}^{75}$ , clinical notes 23, and medical literature 56. In particular, PubMed50, MIMIC-III clinical notes57, and PubMed Central (PMC) literature 51, are three widely used medical corpora for medical LLM pre-training. A single corpus or a combination of corpora may be used for pre-training. For example, PubMed BERT 52 and Clinic alBERT are pre-trained on PubMed and MIMIC-III, respectively. In contrast, BlueBERT62 combines both corpora for pre-training; BioBERT49 is pre-trained on both PubMed and PMC. The University of Florida (UF) Health EHRs are further introduced in pre-training Gator Tron 23 and Gator Tron GP T 75. MEDITRON94 is pre-trained on Clinical Practice Guidelines (CPGs). The CPGs are used to guide healthcare practitioners and patients in making evidence-based decisions about diagnosis, treatment, and management.

预训练通常包括在大规模医学文本语料库上训练大语言模型(LLM),涵盖结构化与非结构化文本,以学习丰富的医学知识。语料库可能包含电子健康记录(EHRs)[75]、临床记录[23]和医学文献[56]。其中PubMed[50]、MIMIC-III临床记录[57]和PubMed Central(PMC)文献[51]是三种广泛用于医学大语言模型预训练的语料库。预训练可采用单一语料库或组合语料库,例如PubMed BERT[52]和ClinicalBERT分别在PubMed和MIMIC-III上预训练,而BlueBERT[62]则结合了这两个语料库;BioBERT[49]同时在PubMed和PMC上预训练。佛罗里达大学(UF)健康电子健康记录进一步用于GatorTron[23]和GatorTronGPT[75]的预训练。MEDITRON[94]基于临床实践指南(CPGs)进行预训练,这些指南用于辅助医疗从业者和患者做出基于证据的诊断、治疗和管理决策。

To meet the needs of the medical domain, pre-training medical LLMs typically involve refining the following commonly used training objectives in general LLMs: masked language modeling, next sentence prediction, and next token prediction (Please see Box 1 for an introduction of these three pre-training objectives). For example. BERT-series models (e.g., BioBERT 49, PubMed BERT 52, Clinic alBERT 56, and Gator Tron 23) mainly adopt the masked language modeling and the next sentence prediction for pre-training; GPT-series models (e.g., BioGPT70, and Gator Tron GP T 75) mainly adopt the next token prediction for pre-training. It is worth mentioning that BERT-like Medical LLMs (e.g., BioBERT49, PubMed BERT 52, Clinical BERT56) are originally derived from the general domain BERT or RoBERTa models. To clarify the differences between different models, in our Table 2, we only show the data source used to further construct medical LLMs. In particular, a more recent work122 provides a systematic review of existing clinical text datasets for LLMs. After pre-training, medical LLMs can learn rich medical knowledge that can be leveraged to achieve strong performance on different medical tasks.

为满足医疗领域需求,预训练医疗大语言模型通常会对通用大语言模型中以下三种常用训练目标进行改进:掩码语言建模 (masked language modeling)、下一句预测 (next sentence prediction) 和下一词元预测 (next token prediction) (详见框1对这三种预训练目标的介绍)。例如,BERT系列模型(如BioBERT [49]、PubMed BERT [52]、ClinicalBERT [56]和GatorTron [23])主要采用掩码语言建模和下一句预测进行预训练;GPT系列模型(如BioGPT [70]和GatorTronGPT [75])则主要采用下一词元预测进行预训练。值得注意的是,类BERT医疗大语言模型(如BioBERT [49]、PubMed BERT [52]、ClinicalBERT [56])最初源自通用领域的BERT或RoBERTa模型。为明确不同模型间的差异,我们在表2中仅展示用于构建医疗大语言模型的数据来源。近期一项研究[122]系统综述了现有面向大语言模型的临床文本数据集。通过预训练,医疗大语言模型可学习丰富的医学知识,从而在不同医疗任务中实现优异性能。

Figure 2. We adopt the data from Table 2 to demonstrate the development of model sizes for medical large language models in different model architectures, i.e., BERT-like, ChatGLM/LLaMA-like, and GPT/PaLM-like.

图 2: 我们采用表 2 中的数据来展示不同模型架构(即类 BERT、类 ChatGLM/LLaMA 和类 GPT/PaLM)下医疗大语言模型的规模发展情况。

2.2 Fine-tuning

2.2 微调

It is high-cost and time-consuming to train a medical LLM from scratch, due to its requirement of substantial (e.g., several days or even weeks) computational power and manual labor. One solution is to fine-tune the general LLMs with medical data, and researchers have proposed different fine-tuning methods11,16,18 for learning domain-specific medical knowledge and obtaining medical LLMs. Current fine-tuning methods include Supervised Fine-Tuning (SFT), Instruction Fine-Tuning (IFT), and Parameter-Efficient Fine-Tuning (PEFT). The resulting fine-tuned medical LLMs are summarized in Table 2. SFT can serve as continued pre-training to fine-tune general LLMs on existing (usually unlabeled) medical corpora. IFT focuses on fine-tuning general LLMs on instruction-based medical data containing additional (usually human-constructed) instructions.

从头训练一个医疗大语言模型成本高昂且耗时,因为这需要大量(例如数天甚至数周)的计算资源和人力投入。一种解决方案是利用医疗数据对通用大语言模型进行微调,研究人员已提出了多种微调方法[11,16,18]来学习特定领域的医学知识并获得医疗大语言模型。当前的微调方法包括监督微调(Supervised Fine-Tuning, SFT)、指令微调(Instruction Fine-Tuning, IFT)和参数高效微调(Parameter-Efficient Fine-Tuning, PEFT)。表2总结了通过这些方法得到的医疗大语言模型。SFT可作为持续预训练方法,在现有(通常未标注的)医疗语料库上微调通用大语言模型。IFT则侧重于在包含额外(通常由人工构建的)指令的医疗数据上对通用大语言模型进行微调。

Supervised Fine-Tuning (SFT) aims to leverage high-quality medical corpus, which can be physician-patient conversations 15, medical question-answering 16, and knowledge graphs 80,17. The constructed SFT data serves as a continuation of the pre-training data to further pre-train the general LLMs with the same training objectives, e.g., next token prediction. SFT provides an additional pre-training phase that allows the general LLMs to learn rich medical knowledge and align with the medical domain, thus transforming them into specialized medical LLMs.

监督微调 (Supervised Fine-Tuning, SFT) 旨在利用高质量医学语料,包括医患对话 [15]、医学问答 [16] 和知识图谱 [80,17]。构建的 SFT 数据作为预训练数据的延续,以相同训练目标(如下一个token预测)对通用大语言模型进行进一步预训练。SFT 提供了一个额外的预训练阶段,使通用大语言模型能够学习丰富的医学知识并与医学领域对齐,从而将其转化为专业医学大语言模型。

The diversity of SFT enables the development of diverse medical LLMs by training on different types of medical corpus. For example, Doctor GL M 76 and Chat Doctor 15 are obtained by fine-tuning the general LLMs ChatGLM8,9 and LLaMA4 on the physician-patient dialogue data, respectively. MedAl paca 16 based on the general LLM Alpaca35 is fine-tuned using over 160,000 medical QA pairs sourced from diverse medical corpora. Clinical camel 18 combines physician-patient conversations, clinical literature, and medical QA pairs to refine the LLaMA-2 model5. In particular, Qilin-Med80 and Zhong jing 89 are obtained by incorporating the knowledge graph to perform fine-tuning on the Baichuan36 and LLaMA4, respectively.

SFT (Supervised Fine-Tuning) 的多样性使得通过训练不同类型的医学语料库来开发多样化的医疗大语言模型成为可能。例如,Doctor GLM [76] 和 Chat Doctor [15] 分别通过对通用大语言模型 ChatGLM [8,9] 和 LLaMA [4] 在医患对话数据上进行微调获得。基于通用大语言模型 Alpaca [35] 的 MedAlpaca [16] 使用了来自不同医学语料库的超过 16 万条医学问答对进行微调。Clinical Camel [18] 结合了医患对话、临床文献和医学问答对来优化 LLaMA-2 模型 [5]。特别地,Qilin-Med [80] 和 Zhongjing [89] 通过整合知识图谱分别对 Baichuan [36] 和 LLaMA [4] 进行微调获得。

In summary, existing studies have demonstrated the efficacy of SFT in adapting general LLMs to the medical domain. They show that SFT improves not only the model’s capability for understanding and generating medical text, but also its ability to provide accurate clinical decision support 123.

总之,现有研究已证明监督微调(SFT)能有效将通用大语言模型适配到医疗领域。研究表明,SFT不仅能提升模型对医学文本的理解与生成能力,还可增强其提供准确临床决策支持的能力[123]。

Instruction Fine-Tuning (IFT) constructs instruction-based training datasets 124,123,1, which typically comprise instructioninput-output triples, e.g., instruction-question-answer. The primary goal of IFT is to enhance the model’s ability to follow various human/task instructions, align their outputs with the medical domain, and thereby produce a specialized medical LLM.

指令微调 (Instruction Fine-Tuning, IFT) 通过构建基于指令的训练数据集 [124,123,1] 来实现,这些数据集通常包含指令-输入-输出的三元组,例如指令-问题-答案。IFT 的主要目标是提升模型遵循各类人类/任务指令的能力,使其输出与医疗领域对齐,从而生成一个专业化的医疗大语言模型。

Thus, the main difference between SFT and IFT is that the former focuses primarily on injecting medical knowledge into a general LLM through continued pre-training, thus improving its ability to understand the medical text and accurately predict the next token. In contrast, IFT aims to improve the model’s instruction following ability and adjust its outputs to match the given instructions, rather than accurately predicting the next token as in $\mathrm{SFT}^{124}$ . As a result, SFT emphasizes the quantity of training data, while IFT emphasizes their quality and diversity. Since IFT and SFT are both capable of improving model performance, there have been some recent works89,80,88 attempting to combine them for obtaining robust medical LLMs.

因此,SFT (Supervised Fine-Tuning) 与 IFT (Instruction Fine-Tuning) 的主要区别在于:前者主要通过持续预训练将医学知识注入通用大语言模型,从而提升其理解医学文本和准确预测下一个Token的能力;而后者旨在提升模型的指令跟随能力,调整输出以匹配给定指令,而非像 $\mathrm{SFT}^{124}$ 那样精确预测下一个Token。因此,SFT更注重训练数据量,而IFT更注重数据质量与多样性。由于IFT和SFT均能提升模型性能,近期已有研究 [89,80,88] 尝试将二者结合以获得更稳健的医学大语言模型。

In other words, to enhance the performance of LLMs through IFT, it is essential to ensure that the training data for IFT are of high quality and encompass a wide range of medical instructions and medical scenarios. To this end, MedPaLM $2^{11}$ invited qualified medical professionals to develop the instruction data for fine-tuning the general PaLM. BenTsao17 and ChatGLMMed125 constructed the knowledge-based instruction data from the knowledge graph. Zhong jing 89 further incorporated the multi-turn dialogue as the instruction data to perform IFT. MedAl paca 16 simultaneously incorporated the medical dialogues and medical QA pairs for instruction fine-tuning.

换句话说,要通过指令微调(IFT)提升大语言模型(LLM)的性能,关键在于确保训练数据质量高且覆盖广泛的医疗指令和场景。为此,MedPaLM $2^{11}$ 邀请了专业医疗人员开发用于微调通用PaLM的指令数据。BenTsao17和ChatGLMMed125从知识图谱构建了基于知识的指令数据。Zhong jing 89进一步将多轮对话纳入指令数据进行微调。MedAl paca 16则同时整合了医疗对话和医疗问答对进行指令微调。

Recent advancements in multimodal LLMs have expanded the capabilities of LLMs to process complex and multimodal medical data. Notable examples include Med-Flamingo99, LLaVA-Med102, and Med-Gemini108. Med-Flamingo99 undergoes IFT on medical image-text data, learning to identify abnormalities and generate diagnostic reports. LLaVA-Med’s102 two-stage IFT process involves aligning medical concepts across visual and textual modalities, followed by fine-tuning the model on diverse medical instructions. Med-Gemini’s108 IFT utilizes a curated dataset of medical instructions and multimodal data, enabling it to comprehend complex medical concepts, procedures, and diagnostic reasoning. Meanwhile, MAIRA-1104 and RadFM106 are two multimodal LLMs specifically designed for radiology applications. MAIRA-1104 employs IFT on a dataset of radiology instructions and corresponding medical images, enabling it to analyze radiological images and generate accurate diagnostic reports. RadFM106, on the other hand, leverages a pre-training approach on a large corpus of radiology-specific image-text data, followed by instruction fine-tuning on a diverse set of radiology instructions. These models’ multimodal IFT approaches enable them to bridge the gap between visual and textual medical information, perform a wide range of medical tasks accurately, and generate context-aware responses to complex medical queries.

多模态大语言模型的最新进展拓展了大语言模型处理复杂多模态医疗数据的能力。代表性成果包括Med-Flamingo99、LLaVA-Med102和Med-Gemini108。Med-Flamingo99通过医疗图文数据的指令微调(IFT),学习识别异常并生成诊断报告。LLaVA-Med102采用两阶段指令微调流程:先对齐视觉与文本模态的医疗概念,再针对多样化医疗指令进行模型微调。Med-Gemini108的指令微调使用精选医疗指令和多模态数据集,使其能理解复杂医疗概念、操作流程和诊断推理。与此同时,MAIRA-1104和RadFM106是专为放射学应用设计的两种多模态大语言模型。MAIRA-1104在放射学指令及对应医学影像数据集上进行指令微调,可分析放射影像并生成精准诊断报告。RadFM106则采用预训练方法处理大规模放射学专用图文数据,再通过多样化放射学指令进行微调。这些模型的多模态指令微调方法能弥合视觉与文本医疗信息的鸿沟,精准执行各类医疗任务,并对复杂医疗查询生成情境感知的响应。

Parameter-Efficient Fine-Tuning (PEFT) aims to substantially reduce computational and memory requirements for fine-tuning general LLMs. The main idea is to keep most of the parameters in pre-trained LLMs unchanged, by fine-tuning only the smallest subset of parameters (or additional parameters) in these LLMs. Commonly used PEFT techniques include Low-Rank Adaptation (LoRA)126, Prefix Tuning127, and Adapter Tuning128,129.

参数高效微调 (PEFT) 旨在大幅降低通用大语言模型微调所需的计算和内存资源。其核心思想是保持预训练大语言模型的大部分参数不变,仅对这些模型中最小的参数子集 (或额外参数) 进行微调。常用的 PEFT 技术包括低秩自适应 (LoRA) [126]、前缀调优 [127] 以及适配器调优 [128,129]。

In contrast to fine-tuning full-rank weight matrices, 1) LoRA preserves the parameters of the original LLMs and only adds trainable low-rank matrices into the self-attention module of each Transformer layer126. Therefore, LoRA can substantially reduce the number of trainable parameters and improve the efficiency of fine-tuning, while still enabling the fine-tuned LLM to capture effectively the characteristics of the tasks. 2) Prefix Tuning takes a different approach from LoRA by adding a small set of continuous task-specific vectors (i.e. “prefixes”) to the input of each Transformer layer 127,1. These prefixes serve as the additional context to guide the generation of the model without changing the original pre-trained parameter weights. 3) Adapter Tuning involves introducing small neural network modules, known as adapters, into each Transformer layer of the pre-trained LLMs130. These adapters are fine-tuned while keeping the original model parameters frozen130, thus allowing for flexible and efficient fine-tuning. The number of trainable parameters introduced by adapters is relatively small, yet they enable the LLMs to adapt to clinical scenarios or tasks effectively.

与微调全秩权重矩阵不同,1) LoRA保留了原始大语言模型的参数,仅在每个Transformer层的自注意力模块中添加可训练的低秩矩阵[126]。因此,LoRA能显著减少可训练参数量并提升微调效率,同时仍使微调后的大语言模型有效捕捉任务特征。2) Prefix Tuning采用与LoRA不同的方法,通过在每个Transformer层的输入中添加一组小型连续任务特定向量(即"前缀")[127,1]。这些前缀作为额外上下文引导模型生成,且不改变原始预训练参数权重。3) Adapter Tuning通过在预训练大语言模型的每个Transformer层中插入称为适配器的小型神经网络模块实现[130]。这些适配器在保持原始模型参数冻结的情况下进行微调[130],从而实现灵活高效的微调。适配器引入的可训练参数量相对较少,却能使大语言模型有效适应临床场景或任务。

In general, PEFT is valuable for developing LLMs that meet unique needs in specific (e.g., medical) domains, due to its ability to reduce computational demands while maintaining the model performance. For example, medical LLMs Doctor GL M 76, MedAl paca 16, Baize-Healthcare 85, Zhong jing 89, CPLLM90, and Clinical Camel18 adopted the LoRA126 to perform parameter-efficient fine-tuning to efficiently align the general LLMs to the medical domain.

通常来说,参数高效微调 (PEFT) 对于开发满足特定领域 (如医疗) 独特需求的大语言模型具有重要价值,因为它能在保持模型性能的同时降低计算需求。例如,医疗领域的大语言模型 Doctor GL M [76]、MedAl paca [16]、Baize-Healthcare [85]、Zhong jing [89]、CPLLM [90] 和 Clinical Camel [18] 采用 LoRA [126] 进行参数高效微调,从而高效地将通用大语言模型适配到医疗领域。

2.3 Prompting

2.3 提示工程

Fine-tuning considerably reduces computational costs compared to pre-training, but it requires further model training and collections of high-quality datasets for fine-tuning, thus still consuming some computational resources and manual labor. In contrast, the “prompting” methods efficiently align general LLMs (e.g., $\mathrm{PaLM}^{3}.$ ) to the medical domain (e.g., MedPaLM10), without training any model parameters. Popular prompting methods include In-Context Learning (ICL), Chain-of-Thought (CoT) prompting, Prompt Tuning, and Retrieval-Augmented Generation (RAG).

微调相比预训练大幅降低了计算成本,但仍需进行额外的模型训练和收集高质量微调数据集,因此仍需消耗部分计算资源和人力。相比之下,"提示"(prompting)方法能高效地将通用大语言模型(如$\mathrm{PaLM}^{3}$)适配到医疗领域(如MedPaLM10),而无需训练任何模型参数。常见的提示方法包括上下文学习(ICL)、思维链(CoT)提示、提示调优(Prompt Tuning)和检索增强生成(RAG)。

In-Context Learning (ICL) aims to directly give instructions to prompt the LLM to perform a task efficiently. In general, the ICL consists of four process: task understanding, context learning, knowledge reasoning, and answer generation. First, the model must understand the specific requirements and goals of the task. Second, the model learns to understand the contextual information related to the task with argument context. Then, use the model’s internal knowledge and reasoning capabilities to understand the patterns and logic in the example. Finally, the model generates the task-related answers. The advantage of ICL is that it does not require a large amount of labeled data for fine-tuning. Based on the type and number of input examples, ICL can be divided into three categories 131. 1) One-shot Prompting: Only one example and task description are allowed to be entered. 2) Few-shot Prompting: Allows the input of multiple instances and task descriptions. 3) Zero-shot Prompting: Only task descriptions are allowed to be entered. ICL presents the LLMs making task predictions based on contexts augmented with a few examples and task demonstrations. It allows the LLMs to learn from these examples or demonstrations to accurately perform the task and follow the given examples to give corresponding answers6. Therefore, ICL allows LLMs to accurately understand and respond to medical queries. For example, MedPaLM10 substantially improves the task performance by providing the general LLM, PaLM3, with a small number of task examples such as medical QA pairs.

上下文学习 (ICL) 旨在直接通过指令提示大语言模型高效执行任务。通常,ICL包含四个流程:任务理解、上下文学习、知识推理和答案生成。首先,模型必须理解任务的具体要求和目标。其次,模型通过论证上下文学习理解与任务相关的上下文信息。接着,利用模型内部知识和推理能力理解示例中的模式和逻辑。最后,模型生成与任务相关的答案。ICL的优势在于不需要大量标注数据进行微调。根据输入示例的类型和数量,ICL可分为三类 [131]:

- 单样本提示 (One-shot Prompting):仅允许输入一个示例和任务描述。

- 少样本提示 (Few-shot Prompting):允许输入多个实例和任务描述。

- 零样本提示 (Zero-shot Prompting):仅允许输入任务描述。

ICL通过少量示例和任务演示增强上下文,使大语言模型能够基于上下文进行任务预测。它让大语言模型从这些示例或演示中学习,从而准确执行任务并按照给定示例生成相应答案 [6]。因此,ICL使大语言模型能够准确理解和响应医学查询。例如,MedPaLM [10] 通过为通用大语言模型 PaLM [3] 提供少量任务示例(如医学问答对),显著提升了任务性能。

Chain-of-Thought (CoT) Prompting further improves the accuracy and logic of model output, compared with In-Context Learning. Specifically, through prompting words, CoT aims to prompt the model to generate intermediate steps or paths of reasoning when dealing with downstream (complex) problems 111. Moreover, CoT can be combined with few-shot prompting by giving reasoning examples, thus enabling medical LLMs to give reasoning processes when generating responses. For tasks involving complex reasoning, such as medical QA, CoT has been shown to effectively improve model performance 10,11. Medical LLMs, such as DeID-GPT112, MedPaLM10, and Med Prompt 12, use CoT prompting to assist them in simulating a diagnostic thought process, thus providing more transparent and interpret able predictions or diagnoses. In particular, Med Prompt 12 directly prompts a general LLM, GPT $.4^{7}$ , to outperform the fine-tuned medical LLMs on medical QA without training any model parameters.

思维链 (Chain-of-Thought, CoT) 提示相较于上下文学习能进一步提升模型输出的准确性和逻辑性。具体而言,CoT通过提示词引导模型在处理下游(复杂)问题时生成中间推理步骤或路径[111]。此外,CoT可与少样本提示相结合,通过提供推理示例使医疗大语言模型在生成回答时展现推理过程。对于涉及复杂推理的任务(如医疗问答),研究证明CoT能有效提升模型性能[10,11]。DeID-GPT[112]、MedPaLM[10]和Med Prompt[12]等医疗大语言模型采用CoT提示来模拟诊断思维过程,从而提供更透明、可解释的预测或诊断。值得注意的是,Med Prompt[12]直接提示通用大语言模型GPT-4[7],无需训练任何模型参数即可在医疗问答任务上超越微调后的医疗大语言模型。

Prompt Tuning aims to improve the model performance by employing both prompting and fine-tuning techniques 132,129. The prompt tuning method introduces learnable prompts, i.e. trainable continuous vectors, which can be optimized or adjusted during the fine-tuning process to better adapt to different medical scenarios and tasks. Therefore, they provide a more flexible way of prompting LLMs than the “prompting alone” methods that use discrete and fixed prompts, as described above. In contrast to traditional fine-tuning methods that train all model parameters, prompt tuning only tunes a very small set of parameters associated with the prompts themselves, instead of extensively training the model parameters. Thus, prompt tuning effectively and accurately responds to medical problems12, with minimal incurring computational cost.

提示调优 (Prompt Tuning) 通过结合提示和微调技术来提升模型性能 [132,129]。该方法引入可学习的提示 (即可训练的连续向量),这些提示在微调过程中可被优化调整,从而更好地适应不同医疗场景和任务。相较于前文所述仅使用离散固定提示的"纯提示"方法,提示调优为大语言模型提供了更灵活的提示方式。与传统微调需要训练所有模型参数不同,提示调优仅调整与提示本身相关的极少量参数,而非大规模训练模型参数。因此,该方法能以最低计算成本精准高效地应对医疗问题 [12]。

Existing medical LLMs that employ the prompting techniques are listed in Table 2. Recently, MedPaLM10 and MedPaLM $2^{11}$ propose to combine all the above prompting methods, resulting in Instruction Prompt Tuning, to achieve strong performances on various medical question-answering datasets. In particular, using the MedQA dataset for the US Medical Licensing Examination (USMLE), MedPaLM $2^{11}$ achieves a competitive overall accuracy of $86.5%$ compared to human experts $(87.0%)$ , surpassing previous state-of-the-art method MedPaLM10 by a large margin $(19%)$ .

现有采用提示技术的医疗大语言模型列于表2。近期,MedPaLM10和MedPaLM $2^{11}$ 提出整合上述所有提示方法,形成指令提示调优(Instruction Prompt Tuning),在多项医疗问答数据集上取得优异表现。特别在针对美国医师执照考试(USMLE)的MedQA数据集上,MedPaLM $2^{11}$ 以86.5%的综合准确率逼近人类专家水平(87.0%),较此前最优方法MedPaLM10大幅提升19%。

Retrieval-Augmented Generation (RAG) enhances the performance of LLMs by integrating external knowledge into the generation process. In detail, RAG can be used to minimize LLM’s hallucinations, obscure reasoning processes, and reliance on outdated information by incorporating external database knowledge 133. RAG consists of three main components: retrieval, augmentation, and generation. The retrieval component employs various indexing strategies and input query processing techniques to search and top-ranked relevant information from an external knowledge base. The retrieved external data is then augmented into the LLM’s prompt, providing additional context and grounding for the generated response. By directly updating the external knowledge base, RAG mitigates the risk of catastrophic forgetting associated with model weight modifications, making it particularly suitable for domains with low error tolerance and rapidly evolving information, such as the medical field. In contrast to traditional fine-tuning methods, RAG enables the timely incorporation of new medical information without compromising the model’s previously acquired knowledge, ensuring the generated outputs remain accurate and up-to-date in the face of evolving medical challenges. Most recently, researchers proposed the first benchmark MIRAGE134 based on medical information RAG, including 7,663 questions from five medical QA datasets, which has been established to both steer research and facilitate the practical deployment of medical RAG systems

检索增强生成 (Retrieval-Augmented Generation, RAG) 通过将外部知识整合到生成过程中来提升大语言模型的性能。具体而言,RAG 可通过融合外部数据库知识来最小化大语言模型的幻觉、模糊推理过程以及对过时信息的依赖 [133]。RAG 包含三个主要组件:检索、增强和生成。检索组件采用多种索引策略和输入查询处理技术,从外部知识库中搜索并排名相关度最高的信息。随后,检索到的外部数据会被增强到大语言模型的提示中,为生成响应提供额外上下文和依据。通过直接更新外部知识库,RAG 降低了因模型权重修改导致的灾难性遗忘风险,使其特别适用于低错误容忍度且信息快速更新的领域(如医疗领域)。与传统微调方法相比,RAG 能够在不损害模型已获取知识的前提下及时整合新医疗信息,确保生成内容在面对不断演变的医疗挑战时保持准确性和时效性。最新研究中,学者们提出了首个基于医疗信息 RAG 的基准测试 MIRAGE[134],该基准包含来自五个医疗问答数据集的 7,663 个问题,旨在引导研究方向并推动医疗 RAG 系统的实际部署。

In RAG, retrieval can be achieved by calculating the similarity between the embeddings of the question and document chunks, where the semantic representation capability of embedding models plays a key role. Recent research has introduced prominent embedding models such as AngIE135, Voyage136, and BGE137. In addition to embedding, the retrieval process can be optimized via various strategies such as adaptive retrieval, recursive retrieval, and iterative retrieval 138,139,140. Several recent works have demonstrated the effectiveness of RAG in medical and pharmaceutical domains. Almanac121 is a large language framework augmented with retrieval capabilities for medical guidelines and treatment recommendations, surpassing the performance of ChatGPT on clinical scenario evaluations, particularly in terms of completeness and safety. Another work QA-RAG120 employs RAG with LLM for pharmaceutical regulatory tasks, where the model searches for relevant guideline documents and provides answers based on the retrieved guidelines. Chat-Orthopedist 116, a retrieval-augmented LLM, assists adolescent idiopathic scoliosis (AIS) patients and their families in preparing for meaningful discussions with clinicians by providing accurate and comprehensible responses to patient inquiries, leveraging AIS domain knowledge.

在RAG (Retrieval-Augmented Generation) 中,检索可以通过计算问题与文档块的嵌入 (embedding) 相似度实现,其中嵌入模型的语义表示能力起关键作用。近期研究推出了AngIE135、Voyage136和BGE137等代表性嵌入模型。除嵌入技术外,检索过程还可通过自适应检索、递归检索和迭代检索等策略进行优化[138,139,140]。多项最新研究验证了RAG在医疗医药领域的有效性:Almanac121是具备医学指南与治疗方案检索能力的大语言框架,在临床场景评估中(尤其是完整性与安全性维度)超越ChatGPT表现;QA-RAG120将RAG与大语言模型结合用于药品监管任务,通过检索相关指导文件并基于内容生成回答;Chat-Orthopedist116作为检索增强型大语言模型,利用青少年特发性脊柱侧凸 (AIS) 领域知识,为患者及家属提供准确易懂的答复,帮助其与临床医生开展有效沟通。

2.4 Discussion

2.4 讨论

This section discusses the principles of medical LLMs, including three types of methods for building models: pre-training, fine-tuning, and prompting. To meet the needs of practical medical applications, users can choose proper medical LLMs according to the magnitude of their own computing resources. Companies or institutes with massive computing resources can either pre-train an application-level medical LLM from scratch or fine-tune existing open-source general LLM models (e.g., LLaMA43) using large-scale medical data. The results in existing literature (e.g., MedPaLM-211, MedAl paca 16 and Clinical Camel18) have shown that fine-tuning general LLMs on medical data122 can boost their performance of medical tasks. For example, Clinical Camel18, which is fine-tuned on the LLaMA-2-70B5 model, even outperforms GPT-418. However, for small enterprises or individuals with certain computing resources, combining with the understanding of medical tasks and a reasonable combination of ICL, prompting engineering, and RAG, to prompt black-box LLMs may also achieve strong results. For example, Med Prompt 12 stimulates the commercial LLM GPT $4^{7}$ through an appropriate combination of prompt strategies to achieve comparable or even better results than fine-tuned medical LLMs (e.g., MedPaLM $2^{11}$ ) and human experts, suggesting that a mix of prompting strategies is an efficient and green solution in the medical domain rather than fine-tuning.

本节探讨医疗大语言模型的构建原理,包括预训练 (pre-training)、微调 (fine-tuning) 和提示工程 (prompting) 三类方法。为满足实际医疗应用需求,用户可根据自身计算资源规模选择合适的医疗大语言模型。拥有海量计算资源的公司或机构既可从零开始预训练应用级医疗大语言模型,也可基于现有开源通用大语言模型(如LLaMA43)通过大规模医疗数据进行微调。现有研究成果(如MedPaLM-211、MedAlpaca16和Clinical Camel18)表明,对通用大语言模型进行医疗数据122微调能显著提升其医疗任务表现。例如基于LLaMA-2-70B5微调的Clinical Camel18甚至超越了GPT-418。而对于具备一定计算资源的中小企业或个人,结合对医疗任务的理解,合理运用上下文学习 (ICL)、提示工程和检索增强生成 (RAG) 来驱动黑盒大语言模型,同样能取得优异效果。例如Med Prompt12通过提示策略的巧妙组合激发商用大语言模型GPT-47,其表现可媲美甚至超越微调医疗大语言模型(如MedPaLM211)和人类专家,这提示在医疗领域混合提示策略是比微调更高效环保的解决方案。

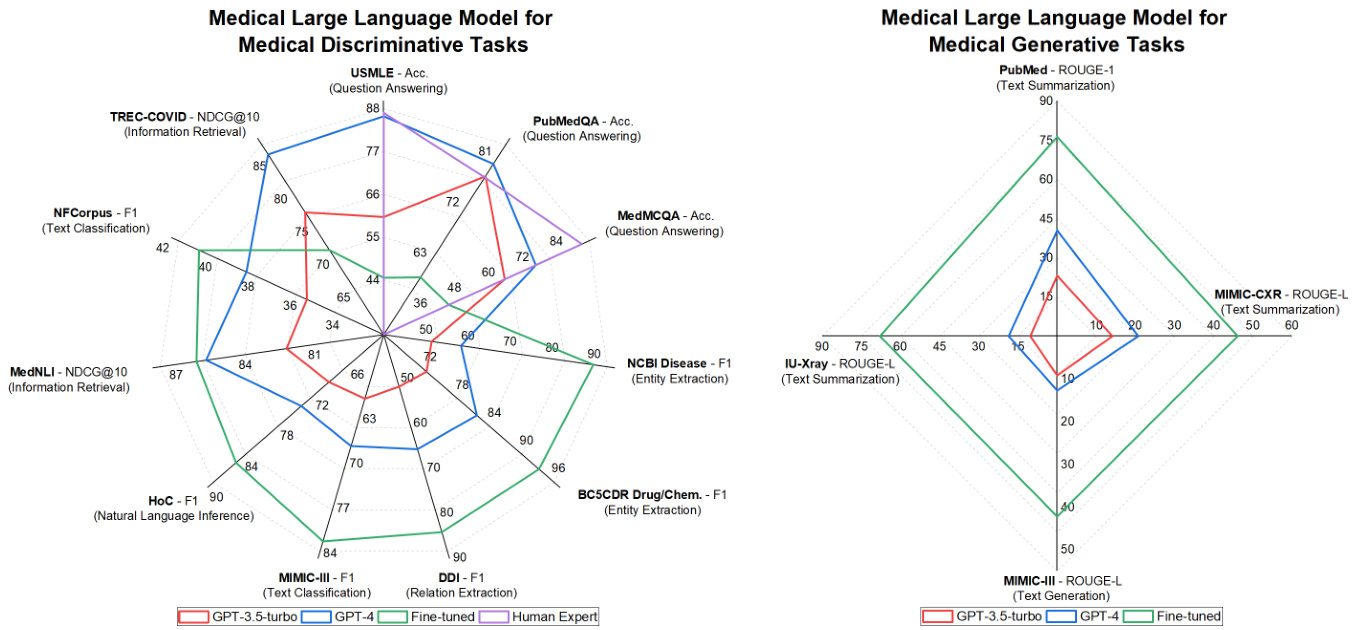

Figure 3. Performance (Dataset-Metric (Task)) comparison between the GPT-3.5 turbo, GPT-4, state-of-the-art task-specific lightweight models (Fine-tuned), and human experts, on seven medical tasks across eleven datasets. All data presented in our Figures originates from published and peer-reviewed literature. Please refer to the supplementary material for the detailed data.

图 3: GPT-3.5 turbo、GPT-4、当前最优任务专用轻量级模型(微调版)与人类专家在7项医疗任务11个数据集上的性能(数据集-指标(任务))对比。图中所有数据均来自已发表的同行评审文献,详细数据请参阅补充材料。

3 Medical Tasks

3 医疗任务

In this section, we will introduce two popular types of medical machine learning tasks: generative and disc rim i native tasks, including ten representative tasks that further build up clinical applications. Figure 3 illustrates the performance comparisons between different LLMs. For clarity, we will only cover a general discussion of those tasks. The detailed definition of the task and the performance comparisons can be found in our supplementary material.

在本节中,我们将介绍两类流行的医疗机器学习任务:生成式 (generative) 与判别式 (discriminative) 任务,包括支撑临床应用的十项代表性任务。图 3 展示了不同大语言模型间的性能对比。为简明起见,我们仅对这些任务进行概述性讨论,具体任务定义与性能对比可参阅补充材料。

3.1 Disc rim i native Tasks

3.1 判别式任务

Disc rim i native tasks are for categorizing or differentiating data into specific classes or categories based on given input data. They involve making distinctions between different types of data, often to categorize, classify, or extract relevant information from structured text or unstructured text. The representative tasks include Question Answering, Entity Extraction, Relation Extraction, Text Classification, Natural Language Inference, Semantic Textual Similarity, and Information Retrieval.

判别式任务旨在根据给定的输入数据,将数据分类或区分为特定的类别。这类任务涉及对不同类型的数据进行区分,通常用于对结构化文本或非结构化文本进行分类、归类或提取相关信息。代表性任务包括问答、实体抽取、关系抽取、文本分类、自然语言推理、语义文本相似度以及信息检索。

The typical input for disc rim i native tasks can be medical questions, clinical notes, medical documents, research papers, and patient EHRs. The output can be labels, categories, extracted entities, relationships, or answers to specific questions, which are often structured and categorized information derived from the input text. In existing LLMs, the disc rim i native tasks are widely studied and used to make predictions and extract information from input text.

判别式任务的典型输入可以是医学问题、临床记录、医疗文档、研究论文和患者电子健康档案(EHR)。输出可以是标签、类别、提取的实体、关系或特定问题的答案,这些通常是从输入文本中提取的结构化和分类信息。在现有的大语言模型中,判别式任务被广泛研究并用于从输入文本中做出预测和提取信息。

For example, based on medical knowledge, medical literature, or patient EHRs, the medical question answering (QA) task can provide precise answers to clinical questions, such as symptoms, treatment options, and drug interactions. This can help clinicians make more efficient and accurate diagnoses 10,11,19. Entity extraction can automatically identify and categorize critical information (i.e. entities) such as symptoms, medications, diseases, diagnoses, and lab results from patient EHRs, thus assisting in organizing and managing patient data. The following entity linking task aims to link the identified entities in a structured knowledge base or a standardized terminology system, e.g., SNOMED CT141, UMLS115, or ICD codes142. This task is critical in clinical decision support or management systems, for better diagnosis, treatment planning, and patient care.

例如,基于医学知识、医学文献或患者电子健康记录(EHR),医疗问答(QA)任务可以为临床问题(如症状、治疗方案和药物相互作用)提供精确答案,帮助临床医生做出更高效、准确的诊断[10,11,19]。实体提取能自动从患者EHR中识别并分类关键信息(即实体),如症状、药物、疾病、诊断和实验室结果,从而协助组织和管理患者数据。随后的实体链接任务旨在将识别出的实体关联到结构化知识库或标准化术语系统(如SNOMED CT[141]、UMLS[115]或ICD代码[142]),该任务对临床决策支持或管理系统至关重要,可优化诊断、治疗计划和患者护理。

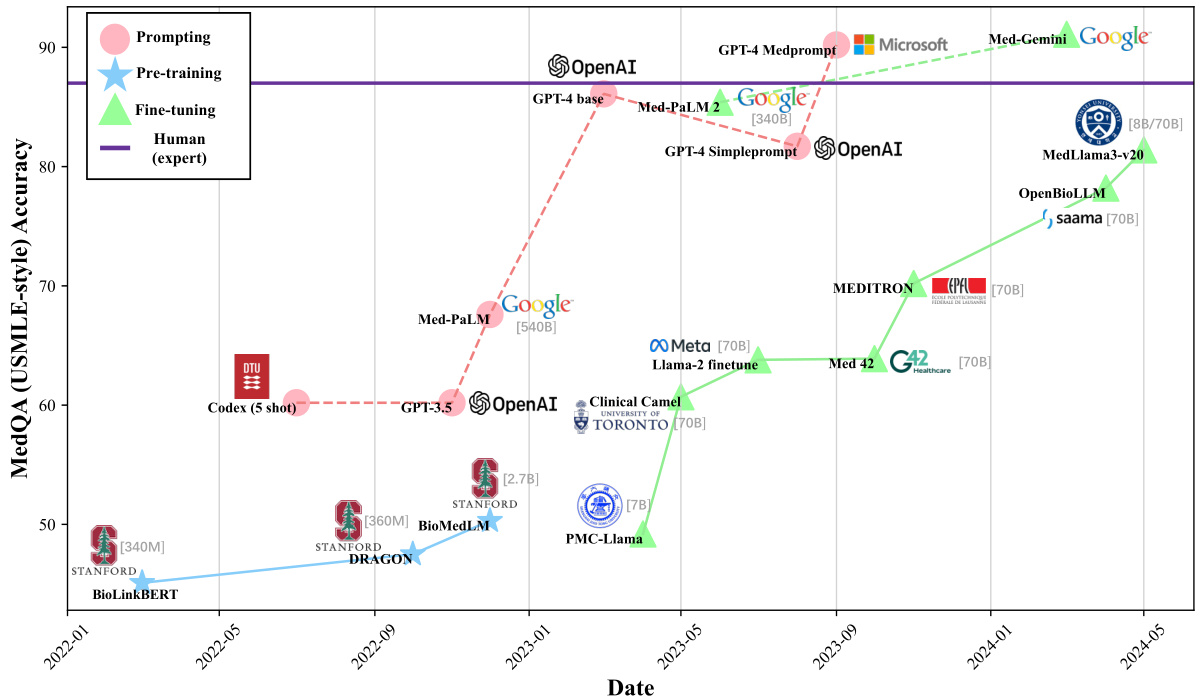

Figure 4. We demonstrate the development of medical large language models over time in different model development types through the scores of the United States Medical Licensing Examination (USMLE) from the MedQA dataset. Solid and dashed lines represent open-source and closed-source models, respectively.

图 4: 我们通过MedQA数据集中美国医师执照考试(USMLE)的分数,展示了不同模型开发类型下医疗大语言模型随时间的发展情况。实线和虚线分别代表开源和闭源模型。

3.2 Generative Tasks

3.2 生成式任务

Different from disc rim i native tasks that focus on understanding and categorizing the input text, generative tasks require a model to accurately generate fluent and appropriate new text based on given inputs. These tasks include medical text sum mari z ation 143,144, medical text generation 70, and medical text simplification 145.

与专注于理解和分类输入文本的判别式任务不同,生成式任务要求模型根据给定输入准确生成流畅且恰当的新文本。这些任务包括医学文本摘要[143,144]、医学文本生成[70]以及医学文本简化[145]。

For medical text sum mari z ation, the input and output are typically long and detailed medical text (e.g., “Findings” in radiology reports), and a concise summarized text (e.g., the “Impression” in radiology reports). Such text contains important medical information that enables clinicians and patients to efficiently capture the key points without going through the entire text. It can also help medical professionals to draft clinical notes by summarizing patient information or medical histories.

对于医疗文本摘要任务,输入和输出通常是长篇详尽的医疗文本(例如放射报告中的"检查所见"部分)与简洁的总结文本(如放射报告中的"印象"部分)。这类文本包含关键医疗信息,能让临床医生和患者无需阅读全文即可高效掌握要点。它还能通过汇总患者信息或病史,帮助医疗专业人员撰写临床记录。

In medical text generation, e.g., discharge instruction generation 146, the input can be medical conditions, symptoms, patient demographics, or even a set of medical notes or test results. The output can be a diagnosis recommendation of a medical condition, personalized instructional information, or health advice for the patient to manage their condition outside the hospital.

在医疗文本生成领域,例如出院指导生成[146],输入可以是医疗状况、症状、患者人口统计数据,甚至是一组医疗记录或检测结果。输出可以是对某种医疗状况的诊断建议、个性化指导信息,或是患者在医院外管理自身健康状况的健康建议。

Medical text simplification 145 aims to generate a simplified version of the complex medical text by, for example, clarifying and explaining medical terms. Different from text sum mari z ation, which concentrates on giving shortened text while maintaining most of the original text meanings, text simplification focuses more on the readability part. In particular, complicated or opaque words will be replaced; complex syntactic structures will be improved; and rare concepts will be explained 38. Thus, one example application is to generate easy-to-understand educational materials for patients from complex medical texts. It is useful for making medical information accessible to a general audience, without altering the essential meaning of the texts.

医学文本简化旨在通过澄清和解释医学术语等方式,生成复杂医学文本的简化版本。与文本摘要(专注于缩短文本同时保留原意)不同,文本简化更注重可读性提升:替换复杂晦涩的词汇、优化冗长句式结构、解释生僻概念[38]。典型应用场景包括将专业医学文献转化为患者易懂的教育材料,在不改变核心含义的前提下,帮助普通大众理解医疗信息。

3.3 Performance Comparisons

3.3 性能对比

Figure 3 shows that some existing general LLMs (e.g., GPT-3.5-turbo and GPT $4^{7}$ ) have achieved strong performance on existing medical machine learning tasks. This is most noticeable for the QA task where GPT-4 (shown in the blue line in Figure 3) consistently outperforms existing task-specific fine-tuned models and is even comparable to human experts (shown in the purple line). The QA datasets of evaluation include MedQA (USMLE)14, PubMed QA 147, and MedMCQA148. To better understand the QA performance of existing medical LLMs, in Figure 4, we further demonstrate the QA performance of medical LLMs on the MedQA dataset over time in different model development types. It also clearly shows that current works, e.g., Med Prompt 12 and Med-Gemini107,108, have successfully proposed several prompting and fine-tuning methods to enable LLMs to outperform human experts.

图 3 显示,现有的一些通用大语言模型(例如 GPT-3.5-turbo 和 GPT $4^{7}$)在现有医疗机器学习任务中已展现出强劲性能。这一现象在问答任务中最为显著,GPT-4(图 3 蓝线所示)持续超越现有针对特定任务微调的模型,甚至可与人类专家水平(紫线所示)比肩。评估使用的问答数据集包括 MedQA (USMLE) [14]、PubMed QA [147] 和 MedMCQA [148]。为深入理解现有医疗大语言模型的问答性能,图 4 进一步展示了不同模型开发类型下医疗大语言模型在 MedQA 数据集上随时间变化的问答表现。该图也清晰表明,当前研究工作(如 Med Prompt [12] 和 Med-Gemini [107,108])已成功提出多种提示与微调方法,使大语言模型性能超越人类专家。

However, on the non-QA disc rim i native tasks and generative tasks, as shown in Figure 3, the existing general LLMs perform worse than the task-specific fine-tuned models. For example, for the non-QA disc rim i native tasks, the state-of-the-art task-specific fine-tuned model BioBERT49 achieves an F1 score of 89.36, substantially exceeding the F1 score of 56.73 by

然而,在非问答判别任务和生成任务上,如图 3 所示,现有的通用大语言模型表现不如针对特定任务微调的模型。例如,在非问答判别任务中,最先进的针对特定任务微调模型 BioBERT49 的 F1 分数达到 89.36,大幅超过通用大语言模型的 56.73 分。

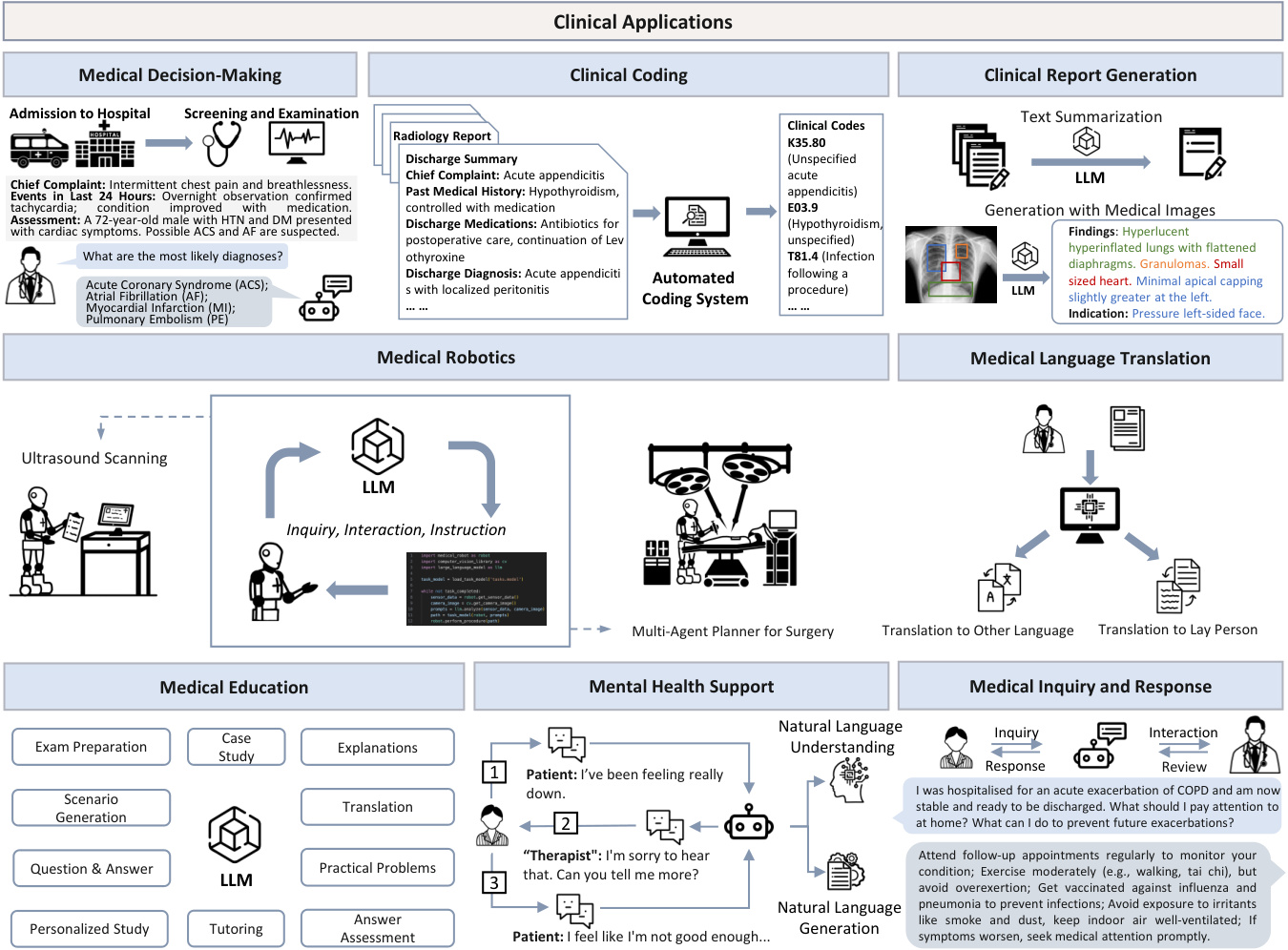

Figure 5. Integrated overview of potential applications 114,150,151,152,153 of large language models in medicine.

图 5: 大语言模型在医学领域的潜在应用 [114,150,151,152,153] 综合概览。

GPT-4, on the entity extraction task using the NCBI disease dataset149. For the generative tasks, we can see that the strong LLM GPT-4 clearly under performs task-specific lightweight models on all datasets. We hypothesize that the reason for the strong QA capability of the current general LLMs is that the QA task is close-ended; i.e. the correct answer is already provided by multiple candidates. In contrast, most non-QA tasks are open-ended where the model has to predict the correct answer from a large pool of possible candidates, or even without any candidates provided.

GPT-4在NCBI疾病数据集149上的实体抽取任务表现。对于生成式任务,我们可以发现强大的大语言模型GPT-4在所有数据集上都明显逊于特定任务的轻量级模型。我们推测当前通用大语言模型在问答任务上表现优异的原因在于:问答任务属于封闭式任务(即正确答案已包含在给定的候选选项中)。相比之下,大多数非问答任务都是开放式的,模型需要从大量潜在候选答案中预测正确答案,有时甚至没有任何候选选项可供参考。

Overall, the comparison proves that the current general LLMs have a strong question-answering capability, however, the capability on other tasks still needs to be improved. In detail, current LLMs are comparable to state-of-the-art models and human experts on the exam-style close-ended QA task with provided answer options. However, real-world open clinical practice usually involves answering open-ended questions without pre-set options and diverges far from the structured nature of exam-taking. The poor performance of LLMs in other non-QA tasks without options indicates a considerable need for advancement before LLMs can be integrated into the actual clinical decision-making process without answer options. Therefore, we advocate that the evaluation of medical LLMs should be extended to a broad range of tasks including non-QA tasks, instead of being limited mainly to medical QA tasks. Hereafter, we will discuss specific clinical applications of LLMs, followed by their challenges and future directions.

总体而言,对比结果表明当前通用大语言模型具备强大的问答能力,但在其他任务上的表现仍有待提升。具体而言,在提供备选答案的封闭式考试类问答任务中,现有大语言模型的表现可与最先进模型及人类专家比肩。然而,真实临床实践通常需要回答无预设选项的开放式问题,这与结构化考试场景存在显著差异。大语言模型在无备选答案的非问答类任务中表现欠佳,表明其距离实际临床决策应用仍有较大提升空间。因此,我们主张医学大语言模型的评估应拓展至包含非问答类任务的广泛范畴,而非主要局限于医疗问答任务。下文将探讨大语言模型的具体临床应用场景,继而分析其面临的挑战与未来发展方向。

4 Clinical Applications

4 临床应用

Currently, most of existing medical LLMs are still in the research and development stage, with limited application and validation in real-world clinical scenarios. However, some initial attempts and explorations have begun to emerge. Researchers are also exploring the integration of large language models into clinical decision support systems to provide evidence-based recommendations and references 155,55,154. Additionally, some research teams are developing tools based on large language models to assist in clinical trial recruitment by analyzing electronic health records to identify eligible participants 156. Some healthcare institutions are experimenting with using LLMs for clinical coding and formatting to improve efficiency and accuracy in medical billing and reimbursement 161,162,163. Researchers are investigating the use of LLMs for clinical report generation, aiming to automate the process of creating coherent and accurate medical reports based on patient data113,104,106. LLMs are being integrated into medical robotics to enhance decision-making, collaboration, and diagnostic capabilities, improving surgical precision and efficiency 167,169,170. In the realm of medical language translation, efforts are being made to utilize LLMs to translate medical information into multiple languages for foreign patients 171,174,175 and to simplify complex medical terminology for lay people176,177, enhancing patient understanding and communication. In the field of medical education, LLMs are being considered as tools to enhance learning experiences by providing personalized content, answering questions, and offering interactive educational materials 178,108. Certain organizations are testing the use of chatbots or virtual assistants to provide mental health support, aiming to increase the accessibility of mental health services 179,180,181. Furthermore, researchers are developing LLM-based systems for medical inquiry and response, aiming to provide accurate and timely answers to patients’ questions, triage inquiries, and assist healthcare professionals in addressing common concerns 189,190,191.

目前,大多数现有医疗大语言模型仍处于研发阶段,在实际临床场景中的应用和验证有限。但已开始出现一些初步尝试和探索。研究人员正在探索将大语言模型整合到临床决策支持系统中,以提供循证建议和参考[155,55,154]。此外,部分研究团队正在开发基于大语言模型的工具,通过分析电子健康记录来协助临床试验招募符合条件的受试者[156]。一些医疗机构正在尝试使用大语言模型进行临床编码和格式化,以提高医疗账单和报销的效率和准确性[161,162,163]。研究人员正在研究利用大语言模型生成临床报告,旨在基于患者数据自动创建连贯准确的医疗报告[113,104,106]。大语言模型正被整合到医疗机器人中,以增强决策、协作和诊断能力,提高手术精度和效率[167,169,170]。在医疗语言翻译领域,研究人员正尝试利用大语言模型为外国患者将医疗信息翻译成多种语言[171,174,175],并为普通民众简化复杂医学术语[176,177],从而提升患者理解和沟通效果。在医学教育领域,大语言模型被视为可通过提供个性化内容、答疑和交互式教学材料来增强学习体验的工具[178,108]。某些组织正在测试使用聊天机器人或虚拟助手提供心理健康支持,旨在提高心理健康服务的可及性[179,180,181]。此外,研究人员正在开发基于大语言模型的医疗问询应答系统,旨在为患者问题提供准确及时的答案、分诊查询,并协助医疗专业人员处理常见问题[189,190,191]。

Table 3. Summary of existing medical LLMs tailored to various clinical applications, in terms of their architecture, model development, the number of parameters, the scale of PT/FT data, and the data source. M: million, B: billion. PT: pre-training. FT: fine-tuning. ICL: in-context learning. CoT: chain-of-thought prompting. RAG: retrieval-augmented generation. This information provides guidelines on how to select and build models. We further provide the evaluation information (i.e., task and performance) to show how current works evaluate their models.

| Application | Model | Architecture | Model Development # Params | Data Scale | Data Source | Evaluation (Task: Score) | |

| Medical Decision-Making (Sec. 4) | Dr. Knows 114 | GPT-3.5 | ICL | 154B | 5820 notes | MIMIC-II 7+IN-HOUSE 114 | Diagnosis Summarization: 30.72 ROUGE-L |

| DDx PaLM-2 154 | PaLM-2 | FT &ICL | 340B | MultiMedQA I+MIMIC-III57 | Differential Diagnosis: 0.591 top-10 Accuracy Readmission Prediction: 0.799 AUC | ||

| NYUTron 55 | BERT | PT &FT | 110M | 7.25M notes, 4.1B tokens NYU Notes 55 | In-hospital Mortality Prediction: 0.949 AUC Comorbidity Index Prediction: 0.894 AUC Length of Stay Prediction: 0.787 AUC Insurance Denial Prediction: 0.872 AUC | ||

| Foresight 155 | GPT-2 | PT & FT | 1.5B | 35M notes | King's College Hospital, MIMIC-III South London and Maudsley Hospital | Next Biomedical Concept Forecast: 0.913 F1 | |

| GPT-4 | 184 patients | 2016 SIGIR 157,2021& 2022 TREC 158 | Ranking Clinical Trials: 0.733 P@ 10, 0.817 NDCG@ 10 Excluding clinical trials: 0.775 AUROC | ||||

| Clinical Coding (Sec. 4) | PLM-ICD 159 | RoBERTa | FT | 355M | 70,539 notes | MIMIC-II160+MIMIC-III57 | ICD Code Prediction: 0.926 AUC, 0.104 F1 |

| DRG-LLaMA 161 | LLaMA-7B | FT | 7B | 25k pairs | MIMIC-IV 105 | Diagnosis-related Group Prediction: 0.327 F1 | |

| ChatICD 162 | ChatGPT | ICL | 10k pairs | MIMIC-III57 | ICD Code Prediction: 0.920 AUC,0.681 F1 | ||

| LLM-codex 163 | ChatGPT+LSTM ICL | MIMIC-III57 | ICD Code Prediction: 0.834 AUC, 0.468 F1 | ||||

| Clinical Report Generation (Sec. 4.3) | ImpressionGPT 164 | ChatGPT | ICL & RAG | 110M | 184k reports | MIMIC-CXR 105+IU X-ray 165 | Report Summarization: 47.93 ROUGE-L |

| RadAdapt 166 | T5 | FT | 223M, 738M 80k reports | MIMIC-II1\$7 | Report Summarization: 36.8 ROUGE-L | ||

| ChatCAD I13 | GPT-3 | ICL | 175B | 300 reports | MIMIC-CXR105 | Report Generation: 0.605 F1 | |

| MAIRA-1 104 | ViT+Vicuna-7B | FT | 8B | 337k pairs | MIMIC-CXR105 | Report Generation: 28.9 ROUGE-L | |

| RadFM 106 | ViT+LLaMA-13B PT& FT | 14B | 32M pairs | MedMD 106 | Report Generation: 18.22 ROUGE-L | ||

| Medical Robotics (Sec. 4.4) | SuFIA 167 | GPT-4 | ICL | 4 tasks | ORBIT-Surgical 168 | Surgical Tasks: 100 Success Rate | |

| UltrasoundGPT 169 | GPT-4 | ICL | 522 tasks | Task Completion: 80 Success Rate | |||

| Robotic X-ray 170 | GPT-4 | ICL | X-ray Surgery: 7.6/10 Human Rating | ||||

| Medical Language Translation (Sec. 4.5) | Medical mT5 171 | T5 | PT | 738M, 3B | 4.5B pairs | PubMed 0 +EMEA 172 ClinicalTrials 173, etc. | (Multi-Task) Sequence Labeling: 0.767 F1 Augment Mining 0.733 F1 |

| Apollo 174 | Qwen | PT & FT | 1.8B-7B | 2.5B pairs | ApolloCorpora 174 | QA: 0.588 Accuracy | |

| BiMedix 175 | Mistral | FT | 13B | 1.3M pairs | BiMed1.3M 175 | Question Answering: 0.654 Accuracy | |

| Biomed-sum 176 | BART | FT | 406M | 27k papers | BioCiteDB 176 | Abstractive Summarization: 32.33 ROUGE-L | |

| RALL 177 | BART | FT & RAG | 406M | 63k pairs | CELLS 176 | Lay Language Generation: N/A | |

| Medical Education (Sec. 4.6) | ChatGPT178 | GPT-3.5/GPT-4 | ICL | Curriculum Generation, Learning Planning | |||

| Med-Gemini 108 | Gemini | FT & CoT | MedQA-R/RS I08+MultiMedQA 11 MIMIC-II57+MultiMedBench 109 | Text-based QA: 0.911 Accuracy Multimodal QA: 0.935 Accuracy | |||

| Mental Health Support (Sec. 4.7) | PsyChat 179 | ChatGLM | FT | 6B | 350k pairs | Xingling 179+Smilechat 179 | Text Generation: 27.6 ROUGE-L |

| ChatCounselor 180 | Vicuna | FT | 7B | 8k instructions | Psych8K 180 Dreaddit 182+DepSeverity 18+SDCNL184 | Question Answering: Evaluated by ChatGPT | |

| Mental-LLM 181 | Alpaca, FLAN-T5 FT & ICL | 7B, 11B | 31k pairs | CSSRS-Suicide 185+Red-Sam 186 Twt-60Users 187+SAD188 | Mental Health Prediction: 0.741 Accuracy | ||

| Medical Inquiry and Response (Sec. 4.8) | AMIE 189 | PaLM2 | FT | 340B | >2M pairs | MedQA 14+MultiMedBench 109 MIMIC-II157+real-world diaglogue 189 | Diagnostic Accuracy: 0.920 Top-10 Accuracy Inquiry Capability: 4.62/5 (ChatGPT) |

| Healthcare Copilot 190 | ChatGPT | ICL | MedDialog 1900 | Conversational Fluency: 4.06/5 (ChatGPT) Response Accuracy: 4.56/5 (ChatGPT) Response Safety: 3.88/5 (ChatGPT) | |||

| Conversational Diagnosis 191 GPT-4/LLaMA | ICL | 40k pairs | MIMIC-IV 92 | Disease Screening: 0.770 Top-10 Hit Rate Differential Diagnosis: 0.910 Accuracy | |||

表 3. 针对不同临床应用定制的现有医疗大语言模型汇总,包括架构、模型开发、参数量、预训练/微调数据规模及数据来源。M:百万,B:十亿。PT:预训练。FT:微调。ICL:上下文学习。CoT:思维链提示。RAG:检索增强生成。该信息为模型选择和构建提供指导。我们进一步提供评估信息(即任务和性能)以展示当前工作如何评估其模型。

| 应用领域 | 模型 | 架构 | 模型开发 | 参数量 | 数据规模 | 数据来源 | 评估(任务:得分) |

|---|---|---|---|---|---|---|---|

| 医疗决策(第4节) | Dr. Knows 114 | GPT-3.5 | ICL | 154B | 5820份病历 | MIMIC-II 7+内部数据114 | 诊断摘要:30.72 ROUGE-L |

| DDx PaLM-2 154 | PaLM-2 | FT & ICL | 340B | - | MultiMedQA I+MIMIC-III57 | 鉴别诊断:0.591 top-10准确率 再入院预测:0.799 AUC | |

| NYUTron 55 | BERT | PT & FT | 110M | 725万份病历,41亿token | NYU病历55 | 院内死亡率预测:0.949 AUC 合并症指数预测:0.894 AUC 住院时长预测:0.787 AUC 保险拒赔预测:0.872 AUC | |

| Foresight 155 | GPT-2 | PT & FT | 1.5B | 3500万份病历 | 国王学院医院,MIMIC-III 南伦敦和莫兹利医院 | 下一生物医学概念预测:0.913 F1 | |

| GPT-4 | - | - | 184名患者 | 2016 SIGIR 157, 2021&2022 TREC 158 | 临床试验排序:0.733 P@10, 0.817 NDCG@10 排除临床试验:0.775 AUROC | ||

| 临床编码(第4节) | PLM-ICD 159 | RoBERTa | FT | 355M | 70,539份病历 | MIMIC-II160+MIMIC-III57 | ICD编码预测:0.926 AUC, 0.104 F1 |

| DRG-LLaMA 161 | LLaMA-7B | FT | 7B | 25k对 | MIMIC-IV 105 | 诊断相关组预测:0.327 F1 | |

| ChatICD 162 | ChatGPT | ICL | - | 10k对 | MIMIC-III57 | ICD编码预测:0.920 AUC, 0.681 F1 | |

| LLM-codex 163 | ChatGPT+LSTM | ICL | - | - | MIMIC-III57 | ICD编码预测:0.834 AUC, 0.468 F1 | |

| 临床报告生成(第4.3节) | ImpressionGPT 164 | ChatGPT | ICL & RAG | 110M | 184k份报告 | MIMIC-CXR 105+IU X-ray 165 | 报告摘要:47.93 ROUGE-L |

| RadAdapt 166 | T5 | FT | 223M, 738M | 80k份报告 | MIMIC-II17 | 报告摘要:36.8 ROUGE-L | |

| ChatCAD I13 | GPT-3 | ICL | 175B | 300份报告 | MIMIC-CXR105 | 报告生成:0.605 F1 | |

| MAIRA-1 104 | ViT+Vicuna-7B | FT | 8B | 337k对 | MIMIC-CXR105 | 报告生成:28.9 ROUGE-L | |

| RadFM 106 | ViT+LLaMA-13B | PT & FT | 14B | 3200万对 | MedMD 106 | 报告生成:18.22 ROUGE-L | |

| 医疗机器人(第4.4节) | SuFIA 167 | GPT-4 | ICL | - | 4项任务 | ORBIT-Surgical 168 | 手术任务:100%成功率 |

| UltrasoundGPT 169 | GPT-4 | ICL | - | 522项任务 | - | 任务完成:80%成功率 | |

| Robotic X-ray 170 | GPT-4 | ICL | - | - | - | X光手术:7.6/10人工评分 | |

| 医学语言翻译(第4.5节) | Medical mT5 171 | T5 | PT | 738M, 3B | 45亿对 | PubMed 0+EMEA 172 ClinicalTrials 173等 | (多任务)序列标注:0.767 F1 增强挖掘:0.733 F1 |

| Apollo 174 | Qwen | PT & FT | 1.8B-7B | 25亿对 | ApolloCorpora 174 | 问答:0.588准确率 | |

| BiMedix 175 | Mistral | FT | 13B | 130万对 | BiMed1.3M 175 | 问答:0.654准确率 | |

| Biomed-sum 176 | BART | FT | 406M | 27k篇论文 | BioCiteDB 176 | 摘要生成:32.33 ROUGE-L | |

| RALL 177 | BART | FT & RAG | 406M | 63k对 | CELLS 176 | 通俗语言生成:N/A | |

| 医学教育(第4.6节) | ChatGPT178 | GPT-3.5/GPT-4 | ICL | - | - | - | 课程生成,学习规划 |

| Med-Gemini 108 | Gemini | FT & CoT | - | - | MedQA-R/RS I08+MultiMedQA 11 MIMIC-II57+MultiMedBench 109 | 文本问答:0.911准确率 多模态问答:0.935准确率 | |

| 心理健康支持(第4.7节) | PsyChat 179 | ChatGLM | FT | 6B | 350k对 | 星聆179+微笑聊天179 | 文本生成:27.6 ROUGE-L |

| ChatCounselor 180 | Vicuna | FT | 7B | 8k指令 | Psych8K 180 Dreaddit 182+DepSeverity 18+SDCNL184 | 问答:由ChatGPT评估 | |

| Mental-LLM 181 | Alpaca, FLAN-T5 | FT & ICL | 7B, 11B | 31k对 | CSSRS-Suicide 185+Red-Sam 186 Twt-60Users 187+SAD188 | 心理健康预测:0.741准确率 | |

| 医疗问诊与应答(第4.8节) | AMIE 189 | PaLM2 | FT | 340B | >200万对 | MedQA 14+MultiMedBench 109 MIMIC-II157+真实世界对话189 | 诊断准确率:0.920 top-10准确率 问诊能力:4.62/5(ChatGPT评分) |

| Healthcare Copilot 190 | ChatGPT | ICL | - | - | MedDialog 1900 | 对话流畅度:4.06/5(ChatGPT评分) 应答准确率:4.56/5(ChatGPT评分) 应答安全性:3.88/5(ChatGPT评分) | |

| Conversational Diagnosis 191 | GPT-4/LLaMA | ICL | - | 40k对 | MIMIC-IV 92 | 疾病筛查:0.770 top-10命中率 鉴别诊断:0.910准确率 |

To this end, as shown in Figure 5, we will introduce the clinical applications of LLMs in this section. Each subsection contains a specific application and discusses how LLMs perform this task. Table 3 summarizes the guidelines on how to select, build, and evaluate medical LLMs for various clinical applications. Although there are currently no large-scale clinical trials specifically targeting these models, researchers are actively evaluating their effectiveness and safety in various healthcare settings. As research progresses and evidence accumulates, it is expected that the application of these large language models in clinical practice will gradually expand, subject to rigorous validation processes and ethical reviews.

为此,如图 5 所示,我们将在本节介绍大语言模型 (LLM) 的临床应用。每个小节包含一个具体应用,并讨论大语言模型如何执行该任务。表 3 总结了针对不同临床应用如何选择、构建和评估医疗大语言模型的指导原则。尽管目前尚未有针对这些模型的大规模临床试验,但研究人员正在积极评估其在各类医疗场景中的有效性与安全性。随着研究进展和证据积累,预计这些大语言模型在临床实践中的应用将逐步扩展,但需经过严格的验证流程和伦理审查。

4.1 Medical Decision-Making

4.1 医疗决策

Medical decision-making, including diagnosis, prognosis, treatment suggestion, risk prediction, clinical trial matching, etc., heavily relies on the synthesis and interpretation of vast amounts of information from various sources, such as patient medical histories, clinical data, and the latest medical literature. The advent of LLMs has opened up new opportunities for enhancing these critical processes in healthcare. These advanced models can rapidly process and comprehend massive volumes of medical data, literature, and legal guidelines, potentially aiding healthcare professionals in making more informed and legally sound decisions across a wide range of clinical scenarios 192,19. For instance, in medical diagnosis, LLMs can assist practitioners in analyzing objective medical data from tests and self-described subjective symptoms to conclude the most likely health problem occurring in the patient192. Similarly, LLMs can support treatment planning by providing personalized recommendations based on the latest clinical evidence and patient-specific factors 19. Furthermore, LLMs can contribute to prognosis and risk prediction by identifying patterns and risk factors from large-scale patient data, enabling more accurate and timely interventions 55. By leveraging the power of LLMs, healthcare professionals can enhance their decision-making capabilities across the spectrum of clinical tasks, leading to improved patient outcomes and more efficient healthcare delivery.

医疗决策(包括诊断、预后、治疗建议、风险预测、临床试验匹配等)高度依赖于对患者病史、临床数据和最新医学文献等多源海量信息的综合解析。大语言模型(LLM)的出现为提升这些关键医疗流程创造了新机遇。这些先进模型能快速处理并理解大量医疗数据、文献和法律指南,帮助医疗从业者在广泛临床场景中做出更明智且合规的决策 [192,19]。例如在医学诊断中,LLM可协助从业者分析检测报告的客观医疗数据与患者自述的主观症状,从而推断最可能的健康问题 [192];在治疗规划中,LLM能根据最新临床证据和患者个体特征提供个性化建议 [19];此外,LLM还能通过从大规模患者数据中识别模式和风险因素,提升预后与风险预测的准确性,实现更精准及时的干预 [55]。借助LLM的能力,医疗从业者能全面提升各类临床任务的决策水平,最终改善患者疗效并优化医疗服务效率。