LinVT: Empower Your Image-level Large Language Model to Understand Videos

LinVT: 赋能图像级大语言模型理解视频

Abstract

摘要

Large Language Models (LLMs) have been widely used in various tasks, motivating us to develop an LLM-based assistant for videos. Instead of training from scratch, we propose a module to transform arbitrary well-trained image-based LLMs into video-LLMs (after being trained on video data). To better adapt image-LLMs for processing videos, we introduce two design principles: linear transformation to preserve the original visual-language alignment and representative information condensation from redundant video content. Guided by these principles, we propose a plug-and-play Linear Video Tokenizer (LinVT), which enables existing image-LLMs to understand videos. We benchmark LinVT with six recent visual LLMs: Aquila, Blip-3, InternVL2, Mipha, Molmo and Qwen2-VL, showcasing the high compatibility of LinVT. LinVT-based LLMs achieve state-of-the-art performance across various video benchmarks, illustrating the effectiveness of LinVT in multi-modal video understanding. Code is available at https://github.com/gls0425/LinVT.

大语言模型 (LLM) 已被广泛应用于各种任务,这促使我们开发一款基于LLM的视频助手。我们并未选择从头训练,而是提出一个模块,可将任意训练成熟的图像大语言模型转化为视频大语言模型(需经过视频数据训练)。为更好地适配图像大语言模型处理视频,我们引入两项设计原则:通过线性变换保持原始视觉-语言对齐能力,以及从冗余视频内容中提炼代表性信息。基于这些原则,我们提出即插即用的线性视频分词器 (LinVT),使现有图像大语言模型具备视频理解能力。我们在Aquila、Blip-3、InternVL2、Mipha、Molmo和Qwen2-VL六个前沿视觉大语言模型上测试LinVT,证明了其高度兼容性。基于LinVT的大语言模型在多个视频基准测试中达到最先进性能,展现了LinVT在多模态视频理解中的有效性。代码已开源:https://github.com/gls0425/LinVT。

1. Introduction

1. 引言

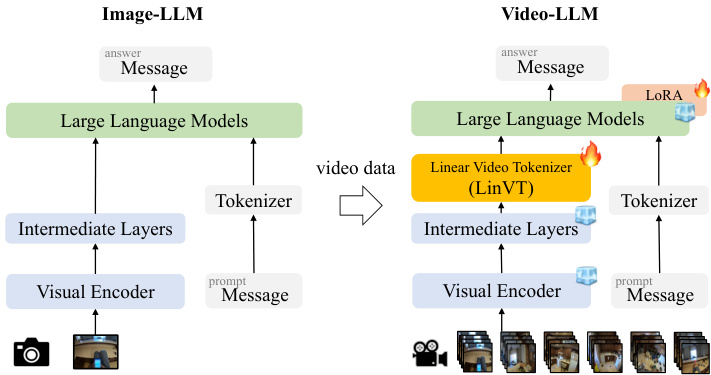

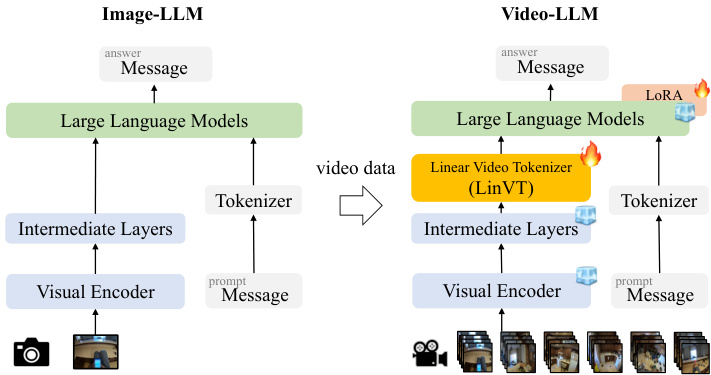

The rapid proliferation of video data has spurred extensive research in the fields of computer vision and natural language processing, aiming at the effective comprehension and processing of lengthy video content. Long videos inherently encompass a wealth of temporal and spatial information, making the comprehension process remarkably demanding. Consequently, the scientific community has been devoted to developing intelligent data assistants capable of time-sensitive analysis of prolonged videos, including tasks such as dense video captioning, temporal grounding, key moment sum mari z ation, etc. Large language models (LLMs) have consistently demonstrated exceptional comprehension capabilities, thus motivating our endeavor to develop an LLM-based assistant that facilitates a comprehen(a) LinVT can convert an image-LLM to a video-LLM, with high compatibility. The intermediate layers vary across different image-LLMs. For instance, it denotes a MLP followed by a pooling layer in Molmo [12], or a token sampler in BLIP-3 [75].

视频数据的快速激增推动了计算机视觉和自然语言处理领域的广泛研究,旨在实现对长视频内容的高效理解与处理。长视频本身包含丰富的时空信息,使得理解过程极具挑战性。因此,科学界一直致力于开发能对长视频进行时序分析的智能数据助手,包括密集视频描述、时序定位、关键片段摘要等任务。大语言模型(LLMs)始终展现出卓越的理解能力,这促使我们开发基于LLM的助手,以实现全面(a) LinVT可将图像-LLM转换为视频-LLM,具有高兼容性。中间层结构因图像-LLM而异,例如在Molmo[12]中代表MLP加池化层,在BLIP-3[75]中则为token采样器。

Figure 1. By being trained on video data, LinVT can endow an image-based LLM with the capability to handle video understanding tasks and achieve outstanding performance.

图 1: 通过视频数据训练,LinVT 能够赋予基于图像的大语言模型处理视频理解任务的能力,并取得出色性能。

(b) Comparison between LinVT-Molmo-7B (ours) and other video-LLMs.

(b) LinVT-Molmo-7B (ours) 与其他视频大语言模型的对比。

sive understanding of long videos.

对长视频的全面理解。

Due to the large-scale training of image-text pairs, largescale image-LLMs [8, 10, 29, 40, 85] have become dominant in computer vision. The development of imageLLMs is progressing rapidly, showcasing numerous improvements. Meanwhile, many efforts are struggling to train video-LLMs from scratch using vast amounts of image and video training data. For instance, Qwen-VL [3] requires over one billion images for training, and Blip-3 [75] even requires over one trillion. When training a video-LLM, a natural strategy is to build on existing image-LLMs due to their powerful image-level comprehension abilities, which also form the basis of understanding videos. This approach allows us to avoid training a video-LLM from scratch, thereby saving extensive computational resources and time. Consequently, developing a method to leverage the capabilities of sophisticated image-LLMs for training video-LLMs would be highly valuable in practice. To achieve this, we develop a video tokenizer that can be injected into image-LLMs, and its aim is to transform the visual tokens (of the video frames) into meaningful video tokens that can be understood by the LLMs, as illustrated by Fig. 1a.

由于大规模图文对训练的成功,大规模图像大语言模型 [8, 10, 29, 40, 85] 已成为计算机视觉领域的主导力量。图像大语言模型的发展日新月异,展现出诸多改进。与此同时,许多研究正致力于利用海量图像和视频训练数据从头训练视频大语言模型。例如,Qwen-VL [3] 需要超过10亿张图像进行训练,而Blip-3 [75] 甚至需要超过1万亿张。在训练视频大语言模型时,基于现有图像大语言模型的策略是自然选择,因为它们具备强大的图像级理解能力,这也是理解视频的基础。这种方法使我们能够避免从头训练视频大语言模型,从而节省大量计算资源和时间。因此,开发一种利用成熟图像大语言模型能力来训练视频大语言模型的方法将具有极高的实践价值。为此,我们开发了一种可注入图像大语言模型的视频分词器 (video tokenizer) ,其目标是将(视频帧的)视觉token转化为大语言模型可理解的有意义视频token,如图 1a 所示。

In designing the video tokenizer, an important principle is to ensure that the video-LLM retains the image-level knowledge both during and after training on video data. Notably, preserving image-level comprehension ability within the video-LLM is crucial, as it allows for a single model to be used, leading to a simpler architecture and facilitating easier deployment for practical applications. In this work, we posit that a key to satisfy this requirement is to maintain the visual-language alignment of the image-LLM in the video-LLM. Therefore, our first design principle of the video tokenizer is that its output should be the linear combination of its input.

在设计视频分词器时,一个重要原则是确保视频大语言模型在训练视频数据期间及之后都能保留图像级知识。值得注意的是,保持视频大语言模型中的图像级理解能力至关重要,因为这允许使用单一模型,从而简化架构并便于实际应用部署。在这项工作中,我们提出满足这一要求的关键在于保持图像大语言模型在视频大语言模型中的视觉-语言对齐。因此,我们视频分词器的第一个设计原则是其输出应为输入的线性组合。

Apart from the linearity design principle, another crucial goal of the video tokenizer is the information refinement. An obvious distinction between images and videos is that videos may contain a great many of frames (especially for long videos), thereby producing significantly more visual tokens which are fed to the LLM. In this case, excessive visual tokens can lead to a computationally overwhelming burden, as well as being at rish of exceeding the context window of LLMs. Nevertheless, a sophisticated video tokenizer should have the capability to extract reasonable number of information-rich visual tokens from the redundant video content. We coin this design principle as representative information condensation.

除了线性设计原则外,视频分词器的另一个关键目标是信息精炼。图像与视频的显著区别在于,视频可能包含大量帧(尤其是长视频),从而产生更多输入大语言模型的视觉token。这种情况下,过量的视觉token会带来难以承受的计算负担,并可能超出大语言模型的上下文窗口限制。因此,一个优秀的视频分词器应具备从冗余视频内容中提取合理数量、信息丰富的视觉token的能力。我们将这一设计原则称为代表性信息浓缩。

Previous video-LLMs employ token compression techniques in tackling the information-redundancy issue, such as Video-LLaMA [80], Video LLaMA 2 [9], PLLaVA [71]. However, they fail to take into account two important perspectives: tackling events of different temporal lengths (i.e. duration) and dynamically extracting question-related information (provided by the users) from the video. Therefore, in this work, we wish to strengthen the ability of the video tokenizer in extracting representative information in these two essential aspects.

以往的视频大语言模型采用token压缩技术来处理信息冗余问题,例如Video-LLaMA [80]、Video LLaMA 2 [9]、PLLaVA [71]。但这些方法忽略了两个关键视角:处理不同时间长度(即持续时间)的事件,以及从视频中动态提取用户提供的与问题相关的信息。因此,本研究旨在增强视频tokenizer在这两个核心维度上提取代表性信息的能力。

Following the two above mentioned design principles, we propose a module termed Linear Video Tokenizer (LinVT) as depicted in Fig. 1a. LinVT is positioned right before the LLM. It takes a sequence of image-level tokens as input and generates video-level visual tokens. The output video tokens of LinVT are strictly the weighted average of (part of)

遵循上述两项设计原则,我们提出了一个名为线性视频分词器 (Linear Video Tokenizer, LinVT) 的模块,如图 1a 所示。LinVT 被放置在大语言模型之前,它以一系列图像级 token 作为输入,并生成视频级视觉 token。LinVT 输出的视频 token 严格是 (部分) 输入 token 的加权平均值。

the input visual tokens of LinVT, ensuring that the knowledge from the image-LLM is effectively preserved. Furthermore, LinVT integrates multi-scale processing of the visual tokens, generating token sequences of varying lengths to accommodate videos (and events) of different durations. Lastly, by involving text interaction, LinVT is able to accurately extract question-relevant information from the video.

LinVT的输入视觉token,确保来自图像大语言模型的知识得到有效保留。此外,LinVT集成了视觉token的多尺度处理,生成不同长度的token序列以适应不同持续时间的视频(和事件)。最后,通过引入文本交互,LinVT能够从视频中准确提取与问题相关的信息。

Extensive experiments demonstrate the effectiveness of LinVT. LinVT is a plug-and-play module and we combine it with six recent multi-modal LLMs, namely, Aquila [19], Blip-3 [75], InternVL2 [8], Mipha [86], Molmo [12] and Qwen2-VL [65], for video understanding tasks in the experiments. In particular, Blip-3, Mipha and Molmo are three image-LLMs that are not trained on video data, whereas Aquila, InternVL2 and Qwen2-VL are trained on image data as well as video data. Not surprisingly, LinVT brings significant video understanding ability to all of them. Fig. 1b shows the performance of LinVT-Molmo-7B. More impressively, LinVT-based video-LLMs achieve superior performance with high training efficiency, namely utilizing only video data (without any image data) for training.

大量实验证明了LinVT的有效性。LinVT是一个即插即用模块,我们在实验中将其与六种最新的多模态大语言模型结合,分别是Aquila [19]、Blip-3 [75]、InternVL2 [8]、Mipha [86]、Molmo [12]和Qwen2-VL [65],用于视频理解任务。其中,Blip-3、Mipha和Molmo是三种未在视频数据上训练的图像大语言模型,而Aquila、InternVL2和Qwen2-VL则在图像和视频数据上都进行了训练。不出所料,LinVT为所有这些模型都带来了显著的视频理解能力。图1b展示了LinVT-Molmo-7B的性能表现。更令人印象深刻的是,基于LinVT的视频大语言模型仅使用视频数据(不含任何图像数据)进行训练,就能以高训练效率实现卓越性能。

To summarize, our contributions are listed as follows:

总结而言,我们的贡献如下:

• We propose Linear Video Tokenizer (LinVT), a module that enables image-LLMs to understand videos. In particular, the output of this tokenizer is strictly the weighted average of (part of) the input frame-level visual tokens, ensuring that the knowledge from the image-LLM is effectively preserved. • Based on the principles of representative information condensation, LinVT integrates multi-scale processing to tackle events of various durations. Furthermore, it involves text conditions to extract question-relevant visual cues from videos. • Extensive experiments demonstrate the effectiveness of LinVT. We inject LinVT into six different types of image-LLMs, and all of them achieve outstanding performance in video understanding tasks.

• 我们提出了线性视频分词器 (Linear Video Tokenizer, LinVT),该模块使图像大语言模型能够理解视频。具体而言,该分词器的输出严格是输入帧级视觉 token 的加权平均值(部分),确保图像大语言模型中的知识得到有效保留。

• 基于代表性信息浓缩原则,LinVT 采用多尺度处理来应对不同时长的事件。此外,它还引入文本条件从视频中提取与问题相关的视觉线索。

• 大量实验验证了 LinVT 的有效性。我们将 LinVT 注入六种不同类型的图像大语言模型,所有模型均在视频理解任务中表现出色。

2. Related Work

2. 相关工作

Video Large Language Models. Inspired by the powerful understanding capabilities and world knowledge of Largescale Language Models (LLMs), many efforts [1, 8, 29, 40, 65] have been devoted to integrating textual and visual information multi-modal tasks. Recent works have achieved success in combining LLMs with video encoders, which allows for the powerful understanding and generation capabilities of LLMs to address video-related tasks. The primary distinction among these multi-modal LLMs lies in the way they encode videos into visual tokens. In notable works like VideoChat [30], video backbones are employed to encode video embeddings, followed by the use of a query Transformer, i.e. Q-Former [11], to compress video tokens. Conversely, Video-LLaMA [80] first utilizes a visual Transformer (ViT) and an image Q-Former to encode individual frames and then incorporates a video Q-Former for temporal modeling. However, the methods suffer from a limitation as they can only handle a limited number of video frames due to a large number of video tokens produced by the encoder. This constraint ultimately leads to a loss of visual semantics when processing long videos. In our work, we propose a module specifically designed for representative token condensation in videos.

视频大语言模型。受大语言模型(LLM)强大理解能力和世界知识的启发,许多研究[1,8,29,40,65]致力于整合文本与视觉信息的多模态任务。近期工作成功将LLM与视频编码器结合,利用LLM强大的理解与生成能力处理视频相关任务。这些多模态LLM的主要区别在于视频编码为视觉token的方式。例如VideoChat[30]采用视频主干网络编码视频嵌入,再通过查询Transformer(即Q-Former[11])压缩视频token;而Video-LLaMA[80]先使用视觉Transformer(ViT)和图像Q-Former编码单帧,再引入视频Q-Former进行时序建模。但这些方法因编码器产生大量视频token,只能处理有限帧数,导致长视频处理时出现视觉语义丢失。我们提出了专为视频代表性token浓缩设计的模块。

Vision-language instruction tuning. Inspired by the recent success of fine-tuning in LLMs, visual language instruction tuning is often incorporated after the visual-text alignment stage. The goal of instruction tuning is to enhance the instruction-following capabilities. This tuning requires generating high-quality data through human-guided instructions, which can be categorized into two technical branches. The first branch integrates existing multi-modal task datasets and converts them into instruction formats, as exemplified by frameworks such as Multi Instruct [73], Instruct BLIP [11], and $\mathbf{M}^{3}\mathbf{IT}$ [32]. The second approach leverages GPT-4 [51] to generate more diverse conversational data. Methods such as miniGPT4 [85], LLaVA [40], MIMIC-IT [26], VideoChat [30], and Valley [45] focus on obtaining detailed visual descriptions and constructing image or video-based dialogue data from LLMs. However, these methods tend to overlook the temporal dimension of user requests in relation to video understanding. To address this, several datasets [6, 43, 46, 53, 69] focusing on video temporal understanding have been developed.

视觉-语言指令微调。受大语言模型(LLM)微调近期成功的启发,视觉语言指令微调通常在视觉-文本对齐阶段后进行。指令微调的目标是增强模型遵循指令的能力。这种调优需要通过人工引导的指令生成高质量数据,可分为两个技术分支。第一分支整合现有多模态任务数据集并将其转换为指令格式,如Multi Instruct [73]、Instruct BLIP [11]和$\mathbf{M}^{3}\mathbf{IT}$[32]等框架所示。第二分支利用GPT-4 [51]生成更多样化的对话数据。miniGPT4 [85]、LLaVA [40]、MIMIC-IT [26]、VideoChat [30]和Valley [45]等方法专注于从大语言模型获取详细视觉描述,并构建基于图像或视频的对话数据。然而这些方法往往忽视用户请求在视频理解中的时间维度。为此,已开发出多个专注于视频时序理解的数据集[6, 43, 46, 53, 69]。

Visual token pruning. Visual token pruning is widely adopted to reduce redundancy and overlapping information in vision encoders and LLMs while retaining task-relevant information. [54] introduced a temporal aggregation module to combine duplicate video frames and a spatial aggregation module to merge similar image blocks within each frame. [58] addressed temporal redundancy by progressively merging tokens in adjacent segments, thereby reducing the number of tokens while preserving crucial video-level features. [57] introduced adaptive token reduction techniques involving adaptive token selection and token supplement ation, which can be employed without finetuning. In LLMs, pruning methods based on KV cache pruning serve as efficient model serving strategies [17, 63].

视觉Token剪枝。视觉Token剪枝被广泛用于减少视觉编码器和大语言模型中的冗余与重叠信息,同时保留任务相关信息。[54]提出了时间聚合模块来合并重复视频帧,以及空间聚合模块来融合每帧内相似的图像块。[58]通过逐步合并相邻片段的Token来解决时序冗余问题,从而在保留关键视频级特征的同时减少Token数量。[57]引入了自适应Token缩减技术,包括自适应Token选择和Token补充,这些技术无需微调即可使用。在大语言模型中,基于KV缓存剪枝的方法可作为高效的模型服务策略[17, 63]。

3. Linear Video Tokenizer for Video-LLMs

3. 面向视频大语言模型的线性视频分词器 (Linear Video Tokenizer for Video-LLMs)

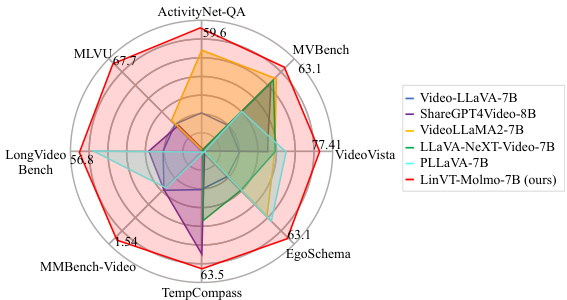

In Sec. 3.1, we present the overall architecture of a videoLLM based on Linear Video Tokenizer (LinVT). Following this, Sec. 3.2 introduces the two primary modules of LinVT: Spatio-Temporal Visual Token Refiner (SVR) described in Sec. 3.2.1, and Text-conditioned Token Aggregation (TTA) detailed in Sec. 3.2.2. Finally, we describe the training recipe for the video-LLM in Sec. 3.3.

在3.1节中,我们介绍了基于线性视频分词器(LinVT)的videoLLM整体架构。随后,3.2节介绍了LinVT的两个主要模块:3.2.1节描述的空间-时间视觉分词优化器(SVR),以及3.2.2节详述的文本条件分词聚合(TTA)。最后,我们在3.3节描述了video-LLM的训练方案。

3.1. Video-LLM Architecture

3.1. Video-LLM 架构

The overall architecture of a video-LLM, derived from an image-LLM, is depicted in Fig. 1a (left part). A video-LLM typically comprises a text stream and a visual stream, which are subsequently processed by a large language model. In the visual stream, different image-LLMs may exhibit slightly varied structures. However, a common component is the visual encoder and the intermediate layers that project visual features into visual tokens. We do not elaborate on these intermediate layers, as they are not the primary focus of this work. Notably, the proposed LinVT is compatible with all the LLMs considered in this study.

视频大语言模型的整体架构源自图像大语言模型,如图1a(左半部分)所示。视频大语言模型通常包含文本流和视觉流,随后由大语言模型进行处理。在视觉流中,不同的图像大语言模型可能呈现略微不同的结构。然而,一个共同的组件是视觉编码器以及将视觉特征投影为视觉token的中间层。我们不对这些中间层进行详细阐述,因为它们不是本工作的重点。值得注意的是,本文提出的LinVT与本研究中考虑的所有大语言模型都兼容。

Information flow. Initially, the video frames are passed through the pre-trained visual encoder and some intermediate layers, generating visual tokens for each frame. LinVT then processes the visual tokens to represent the entire video in an informative and efficient way. The text tokenizer in the text stream transforms the input question into textual tokens. Finally, by concatenating the visual tokens and textual tokens and feeding them into the LLM, the answer is obtained in text format. In particular, LinVT-based videoLLMs can still process image input, and no modification to the model is required.

信息流。首先,视频帧通过预训练的视觉编码器和若干中间层处理,为每帧生成视觉token。随后LinVT对这些视觉token进行处理,以信息高效的方式表征整个视频内容。文本流中的文本分词器将输入问题转换为文本token。最终通过拼接视觉token与文本token并输入大语言模型,以文本形式获得答案。值得注意的是,基于LinVT的视频大语言模型仍可处理图像输入,且无需修改模型结构。

3.2. Linear Video Tokenizer (LinVT)

3.2. 线性视频分词器 (Linear Video Tokenizer, LinVT)

Design principles of LinVT. As discussed in Sec. 1, we have two fundamental design principles for the video tokenizer: (1) linearity – the outputs of the video tokenizer should be the linear combination of its input tokens (2) representative information condensation – the output only contains informative visual tokens that are represent at ive for the video. This first principle allows the video-LLM to attain image-level visual understanding without the need for training on a large corpus of image data. The second principle is to enhance the efficiency of the video-LLM to process the video tokens while preserving the rich contents of the video and thus improving the performance of video understanding. To achieve this, we propose a plug-and-play module called Linear Video Tokenizer (LinVT), which can be easily integrated into existing image-LLMs [8, 12, 19, 65, 75, 86] to empower them with the ability to complete video understanding tasks, as depicted in Fig. 1a.

LinVT的设计原则。如第1节所述,我们对视频分词器有两个基本设计原则:(1) 线性——视频分词器的输出应是其输入token的线性组合;(2) 代表性信息压缩——输出仅包含具有视频代表性的信息视觉token。第一个原则使得视频大语言模型无需在大量图像数据上训练即可获得图像级视觉理解能力。第二个原则旨在提升视频大语言模型处理视频token的效率,同时保留视频的丰富内容,从而提高视频理解性能。为此,我们提出一个即插即用模块Linear Video Tokenizer (LinVT),如图1a所示,该模块可轻松集成到现有图像大语言模型[8,12,19,65,75,86]中,使其具备完成视频理解任务的能力。

LinVT consists of two sub-modules, namely, Spatiotemporal Visual token Refiner (SVR) and Text-conditioned Token Aggregation (TTA). The two modules strictly follow the linearity principle, i.e. the output of each module is the linear combination of (part of) its input, thereby preserving the visual-language alignment learned in the image-LLM. On the other hand, the top $k$ token selection in SVR and the TTA itself are two crucial steps to reduce the number of visual tokens passed to the subsequent computationallyexpensive LLM, achieving the information condensation principle. Notably, since the representative information are condensed into the output, LinVT is also particularly effective in handling longer videos. We will elaborate on SVR and TTA next.

LinVT包含两个子模块:时空视觉Token优化器(SVR)和文本条件Token聚合器(TTA)。这两个模块严格遵循线性原则,即每个模块的输出都是其输入(部分)的线性组合,从而保留图像-大语言模型中学习到的视觉-语言对齐关系。另一方面,SVR中的top $k$ Token选择机制与TTA模块本身是实现信息压缩原则的两个关键步骤,可减少传递给后续计算密集型大语言模型的视觉Token数量。值得注意的是,由于代表性信息被压缩到输出中,LinVT在处理长视频时也特别有效。接下来我们将详细阐述SVR和TTA模块。

Figure 2. The framework of the LinVT-based video-LLM. The LinVT module takes visual tokens corresponding to individual frames of a video along with the user instruction as input and then generates compact and fixed-size visual tokens. By using LinVT, an image-LLM can be easily converted to a video-LLM. Firstly, the visual tokens undergo the spatio-temporal visual token refiner SVR (Sec. 3.2.1) which produces multi-scale visual tokens. The multi-scale visual tokens are then fed to the text-conditioned token aggregator TTA (Sec. 3.2.2). Finally, the LLM incorporates both the user instruction and the output visual tokens to provide a response for video understanding. The proposed LinVT operates linearly, enabling the preservation of knowledge from the image-LLM.

图 2: 基于LinVT的视频大语言模型框架。LinVT模块接收与视频单帧对应的视觉token及用户指令作为输入,随后生成紧凑且固定尺寸的视觉token。通过LinVT,图像大语言模型可轻松转换为视频大语言模型。首先,视觉token经过时空视觉token精炼器SVR(第3.2.1节)生成多尺度视觉token。这些多尺度视觉token随后被输入至文本条件token聚合器TTA(第3.2.2节)。最终,大语言模型结合用户指令与输出的视觉token,生成视频理解响应。所提出的LinVT以线性方式运行,能够保留图像大语言模型中的知识。

3.2.1. Spatio-Temporal Visual Token Refiner (SVR)

3.2.1. 时空视觉Token优化器 (SVR)

The SVR module is a critical component in enhancing video understanding capabilities in a pre-trained image-LLM. The primary purpose of the SVR module is to efficiently distill the vast amount of information present in video data into a concise set of visual tokens that maintain the essential spatial and temporal characteristics.

SVR模块是增强预训练图像大语言模型视频理解能力的关键组件。其主要目的是高效地将视频数据中的海量信息提炼成一组简洁的视觉token,同时保留关键的空间和时间特征。

SVR consists of three modules: the spatio-temporal significance scoring, the top $k$ selection, and the multi-scale token pooling. Given the visual tokens ${V_{1},V_{2},...,V_{T}}$ output from the intermediate layers (where $V_{i}$ denotes the representation of the $i$ -th frame and $T$ is the length of videos), SVR produces a refined set of visual tokens that encompass multi-scale visual tokens. The three modules in SVR are introduced in detail as follows.

SVR由三个模块组成:时空显著性评分、top $k$ 选择和多尺度token池化。给定中间层输出的视觉token ${V_{1},V_{2},...,V_{T}}$ (其中 $V_{i}$ 表示第 $i$ 帧的表征,$T$ 为视频长度),SVR会生成一组包含多尺度视觉token的精细化视觉token。以下详细介绍了SVR的三个模块。

Spatio-temporal significance scoring. The first visual token refinement step is achieved by spatio-temporal attention, which enables the input visual features to assess their relevance in spatial (intra-frame) and temporal (inter-frame) dimensions. This involves computing significance scores for each token, which reflects their importance in the entire video context.

时空显著性评分。首个视觉token精炼步骤通过时空注意力机制实现,该机制使输入视觉特征能够评估其在空间(帧内)和时间(帧间)维度上的相关性。这一过程涉及为每个token计算显著性分数,以反映其在完整视频上下文中的重要性。

Concretely, for the visual token $V_{i}\in\mathbb{R}^{H\times W\times C}$ of the $i\cdot$ frame, where $H$ , $W$ and $C$ represent the height, width and the number of channels, respectively. The spatial significance score for each frame is obtained by applying selfattention within the corresponding visual token. Subsequently, the final significance scores are computed by applying self-attention across the sequence of spatial significance scores for the frames, thereby incorporating temporal information.

具体来说,对于第 $i$ 帧的视觉token $V_{i}\in\mathbb{R}^{H\times W\times C}$ ,其中 $H$ 、 $W$ 和 $C$ 分别表示高度、宽度和通道数。通过在每个帧对应的视觉token内部应用自注意力机制,获得其空间显著性分数。随后,通过对帧序列的空间显著性分数应用跨帧自注意力来计算最终显著性分数,从而融合时序信息。

Score-based token selection. To condense the information in the video, we select the top $k$ visual tokens with the highest significance scores and disregard the remaining tokens. The selected tokens are expected to represent the most informative aspects of the video in terms of spatial configuration and temporal dynamics.

基于评分的Token选择。为了浓缩视频中的信息,我们筛选出重要性评分最高的前$k$个视觉Token,并忽略其余Token。所选Token应能在空间构型和时间动态方面代表视频最具信息量的特征。

Multi-scale token pooling. Multi-scale representations play a crucial role in various video understanding tasks, including temporal action localization [36, 59, 60, 79], moment retrieval [49, 52] and highlight detection [18, 25, 39]. In order to incorporate a multi-scale mechanism into the video-LLM, we introduce Multi-scale Token Pooling (MTP) after the top $k$ token selection. MTP analyzes these remaining $k$ visual tokens at various resolutions to capture both short-term and long-term dependencies within the video. Specifically, we use the average pooling in a shiftedwindow manner across the token or temporal axis.

多尺度token池化。多尺度表征在视频理解任务中起着至关重要的作用,包括时序动作定位[36, 59, 60, 79]、片段检索[49, 52]和高光检测[18, 25, 39]。为了将多尺度机制融入视频大语言模型,我们在top $k$ token选择后引入了多尺度token池化(MTP)。MTP通过分析这些剩余的$k$个视觉token在不同分辨率下的表现,以捕捉视频中的短期和长期依赖关系。具体而言,我们采用滑动窗口形式的平均池化操作,沿token轴或时序轴进行聚合。

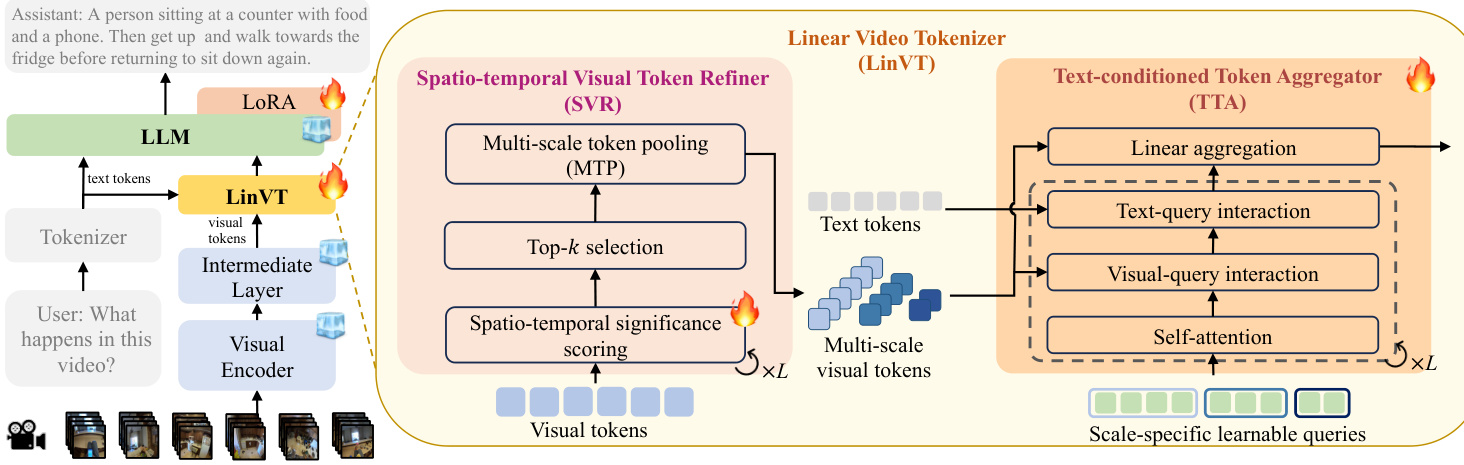

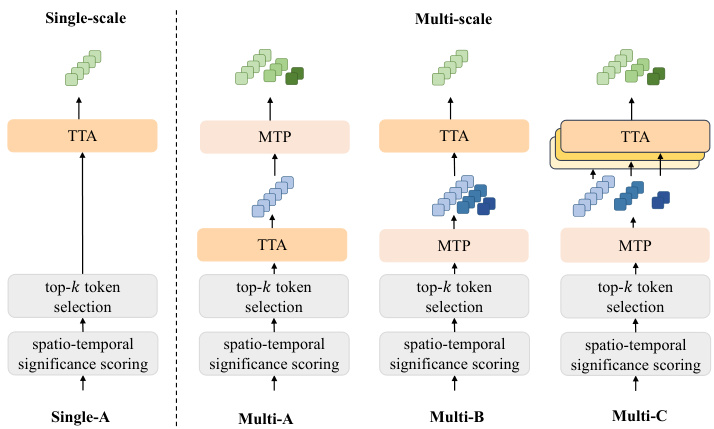

In this work, we conduct a rigorous investigation of the designs of multi-scale token processing. Specifically, three variants are compared, as illustrated in Fig. 3 (right part). Multi-A: MTP is placed after TTA. In this case, pooling is applied on the video tokens generated by TTA (see details of TTA in Sec. 3.2.2). Multi-B and Multi-C: MTP is placed before TTA, and MTP operates on the top $k$ selected visual tokens. The only difference between these two designs is whether TTA is applied on all scales at once (i.e. Multi-B) or each scale separately (i.e. Multi-C). By comparing these three designs experimentally (see Sec. 4.1), Multi-C is the chosen design in our LinVT. The output of MTP is a set of multi-scale video tokens $M T_{v}={T_{v}^{1},T_{v}^{2},...,T_{v}^{l}}$ , where $l$ is the number of scales.

在本工作中,我们对多尺度token处理的设计进行了严格研究。具体而言,我们比较了三种变体方案(如图3右侧所示):

- Multi-A:MTP置于TTA之后。此时池化操作作用于TTA生成的视频token(TTA细节详见3.2.2节)。

- Multi-B与Multi-C:MTP置于TTA之前,且MTP作用于筛选出的top $k$视觉token。两者唯一区别在于TTA是同时作用于所有尺度(Multi-B)还是逐尺度独立处理(Multi-C)。

通过实验对比这三种设计(见4.1节),我们最终选择Multi-C作为LinVT的实施方案。MTP输出为一组多尺度视频token $MT_{v}={T_{v}^{1},T_{v}^{2},...,T_{v}^{l}}$,其中 $l$ 表示尺度数量。

Figure 3. The left part represents single-scale token processing, while the right part contains three variants of multi-scale token processing in LinVT. For a fair comparison, all variants maintain the same output visual token size.

图 3: 左侧表示单尺度token处理流程,右侧展示了LinVT中三种多尺度token处理的变体。为确保公平比较,所有变体均保持相同的输出视觉token尺寸。

Discussion. The SVR module distinguishes itself from previous methods (such as TimeChat [55], ATP [5] and Nonlocal [66]) in two key aspects. First, the approach of selecting top $k$ visual tokens are based on significance scores, which is a more efficient selection process compared to traditional methods that rely on fixed heuristics or exhaustive feature extraction. (2) While some existing methods consider temporal information, MTP offers a more comprehensive analysis by capturing dependencies at various temporal resolutions. This allows for better adaptation to various video challenges, such as rapid movements, motion blur, long video, etc.

讨论。SVR模块在两大关键方面区别于先前方法(如TimeChat[55]、ATP[5]和Nonlocal[66]):(1)基于显著性分数选取top $k$视觉token的方法,相比依赖固定启发式规则或穷举特征提取的传统方法更具效率;(2)虽然现有方法会考虑时序信息,但MTP通过捕获多时间分辨率依赖关系提供了更全面的分析,从而能更好适应快速运动、运动模糊、长视频等多种视频挑战。

3.2.2. Text-conditioned Token Aggregation (TTA)

3.2.2. 文本条件化 Token 聚合 (TTA)

The TTA module is designed to refine and integrate multiscale visual tokens with textual information, thereby enhancing the model’s capacity to understand and interpret video content within a multimodal context. To achieve this, scale-specific queries are utilized to aggregate multi-scale visual tokens, which not only reduce the number of visual tokens but also integrate and enhance the semantic information at a higher level. Furthermore, we have developed a cross-modal decoder to combine image and text modal features. Each group of cross-modal queries is processed through a self-attention layer, an image cross-attention layer (to integrate image features), and a text cross-attention layer (to integrate text features). Lastly, the processed crossmodal queries are fed into the linear aggregation layer to perform linear aggregation on the multi-scale visual tokens. This approach injects textual information into the queries to achieve improved modal alignment.

TTA模块旨在细化和整合多尺度视觉token与文本信息,从而增强模型在多模态背景下理解和解析视频内容的能力。为实现这一目标,该模块利用尺度特异性查询来聚合多尺度视觉token,不仅减少了视觉token数量,还在更高层级上整合并增强了语义信息。此外,我们开发了跨模态解码器来融合图像与文本模态特征:每组跨模态查询会依次通过自注意力层、图像交叉注意力层(整合图像特征)和文本交叉注意力层(整合文本特征)进行处理。最终,处理后的跨模态查询会被输入线性聚合层,对多尺度视觉token执行线性聚合。这种方法通过向查询注入文本信息,实现了更优的模态对齐效果。

Scale-specific learnable queries. In TTA, we utilize scalespecific learnable queries for dynamic information extraction from the visual tokens and text tokens. Notably, the learnable queries are designed to generate attention maps across the visual tokens, which takes place in the final lin sein a gl re quaergy s get reg ation layer. Compared to previous methods [1, 29] that process all tokens equally, utilizing scalespec if tio cp -kq to u keen ries is a novel approach that allows the model to focusesle ctoionn the information across different scales. The queries are learnable, which are represented as $M Q=$ ${\mathbf{Q}^{1},\mathbf{Q}^{2},\ldots,\mathbf{Q}^{l}}$ , where $l$ is the number of pre-defined scales. The self-attention mechanism operates exclusively within the same scales, without any attention across different scales.

特定尺度的可学习查询。在TTA中,我们利用特定尺度的可学习查询从视觉token和文本token中动态提取信息。值得注意的是,这些可学习查询被设计用于生成跨视觉token的注意力图,该过程发生在最终线性自注意力生成层。与以往平等处理所有token的方法[1, 29]相比,采用特定尺度查询是一种创新方法,使模型能够专注于不同尺度的信息。这些可学习查询表示为$MQ=${\mathbf{Q}^{1},\mathbf{Q}^{2},\ldots,\mathbf{Q}^{l}}$,其中$l$是预定义尺度的数量。自注意力机制仅在相同尺度内运作,不同尺度间不存在任何注意力交互。

Visual-query interaction. After the self-attention process, the temporal attention queries and visual tokens from the SVR module engage in cross-attention. Note that the queries in each scale only interact with the visual tokens of the corresponding scale. This interaction helps to refines these visual tokens by focusing on specific aspects of the visual content that are relevant to the task.

视觉查询交互。在自注意力过程之后,来自SVR模块的时间注意力查询与视觉token进行交叉注意力交互。需注意,每个尺度的查询仅与对应尺度的视觉token交互。这种交互通过聚焦于任务相关的视觉内容特定方面,有助于优化这些视觉token。

Text-query interaction. Cross-attention with textual instruction serves as context attention to aggregate textrelated visual features and enables more attention to visual tokens with high-response scores to input instruction. As a result, the most crucial visual cues related to user requirements are effectively stored in the output tokens of TTA.

文本查询交互。通过文本指令的交叉注意力作为上下文注意力机制,聚合与文本相关的视觉特征,使模型更关注对输入指令响应分数高的视觉token。最终,与用户需求最相关的关键视觉线索被高效存储在TTA的输出token中。

Linear aggregation layer. The linear aggregation layer begins by calculating attention maps using the scale-specific queries $Q_{v,t}$ (after $L$ iterations of self-attention, visualquery and text-query interactions), in conjunction with the multi-scale visual tokens $(T_{v})$ . These attention maps are subsequently used to aggregate the multi-scale visual tokens into fixed-length tokens. In other words, the output tokens of TTA represent various configurations of the weighted sum of multi-scale visual tokens, thereby enforcing linearity:

线性聚合层。线性聚合层首先使用特定尺度的查询 $Q_{v,t}$(经过 $L$ 次自注意力、视觉查询与文本查询交互迭代后)与多尺度视觉 token $(T_{v})$ 计算注意力图。这些注意力图随后用于将多尺度视觉 token 聚合成固定长度的 token。换言之,TTA 的输出 token 代表了多尺度视觉 token 加权和的不同配置,从而强制实现线性:

$$

\begin{array}{r l}&{\Phi_{L i n}(T_{v},Q_{v,t})=W_{a t t n}\cdot T_{v}}\ &{\qquad=s o f t m a x(W_{q}\cdot Q_{v,t},W_{k}\cdot T_{v})\cdot T_{v},}\end{array}

$$

$$

\begin{array}{r l}&{\Phi_{L i n}(T_{v},Q_{v,t})=W_{a t t n}\cdot T_{v}}\ &{\qquad=s o f t m a x(W_{q}\cdot Q_{v,t},W_{k}\cdot T_{v})\cdot T_{v},}\end{array}

$$

where $\Phi_{L i n}$ is a linear function that computes different weighted sum of the visual tokens $T_{v}$ , and $W_{a t t n}$ is the attention map derived from $T_{v}$ and $Q_{v,t}$ . In contrast to vanilla cross-attention, $\Phi_{L i n}$ does not include residual connections and directly utilizes input values $T_{v}$ without any transformations.

其中 $\Phi_{L i n}$ 是计算视觉 token $T_{v}$ 不同加权和的线性函数,$W_{a t t n}$ 是从 $T_{v}$ 和 $Q_{v,t}$ 导出的注意力图。与标准交叉注意力不同,$\Phi_{L i n}$ 不包含残差连接,直接使用输入值 $T_{v}$ 而不进行任何变换。

Discussion. The design of our TTA module differs from existing methods like Q-Former [11] and Perceiver [1] in several ways. First, the output of TTA is a linear combination of its input. Second, TTA takes a more targeted approach in terms of multi-scale aggregation and text-driven visual token extraction, particularly in the context of video understanding. Our design places greater emphasis on handling temporal information and fusing multi-modal information. Moreover, while Perceiver also utilizes crossattention mechanisms, the TTA module specifically adapts the query structure to accommodate temporal multi-scale characteristics. This further enhances the role of text in visual token compression, enabling TTA to effectively integrate temporal and semantic information in video understanding tasks.

讨论。我们的TTA模块设计与现有方法(如Q-Former [11]和Perceiver [1])存在几点关键差异:首先,TTA的输出是其输入的线性组合;其次,TTA在多尺度聚合和文本驱动的视觉token提取方面采用了更具针对性的方法,特别是在视频理解场景中。我们的设计更注重时序信息处理和多模态信息融合。此外,虽然Perceiver同样采用交叉注意力机制,但TTA模块专门调整了查询结构以适应时序多尺度特征,这进一步强化了文本在视觉token压缩中的作用,使TTA能有效整合视频理解任务中的时序与语义信息。

3.3. Training Recipe

3.3. 训练方案

In this work, we directly adapt the weights of the existing image-LLM, including the vision encoder, intermediate layer, and LLM. Then we utilize the LinVT module with randomly initialized parameters. Subsequently, it undergoes a two-stage training phase comprising video-language alignment training and video instruction tuning.

在本工作中,我们直接调整了现有图像大语言模型的权重,包括视觉编码器、中间层和大语言模型。随后采用随机初始化参数的LinVT模块,并执行包含视频-语言对齐训练和视频指令微调的两阶段训练流程。

Video-language alignment training. In this stage, we focus on enabling the model to comprehend video-text inputs through a video-language alignment dataset, which consist of 2.9 million video-text pairs. During this stage, the LLM model, vision encoder, and intermediate layer remain frozen, only the LinVT are learnable. Training is conduct under contrastive loss and cross-entropy loss.

视频-语言对齐训练。在此阶段,我们专注于通过包含290万个视频-文本对的视频-语言对齐数据集,使模型能够理解视频-文本输入。在此阶段,大语言模型(LLM)、视觉编码器和中间层保持冻结状态,仅LinVT可学习。训练在对比损失和交叉熵损失下进行。

Video instruction tuning. In the second stage, we perform video instruction tuning to enhance the model’s capabilities in generating responses that align more closely with human preferences. During the training process, we incorporate a learnable LoRA [21] into the LLM, while keeping the LinVT module learnable and freezing the other components. The training is conducted using the next-token prediction loss.

视频指令微调。在第二阶段,我们进行视频指令微调以增强模型生成更符合人类偏好响应的能力。训练过程中,我们在LLM中引入可学习的LoRA [21],同时保持LinVT模块可学习并冻结其他组件。训练采用下一token预测损失进行。

4. Experiments

4. 实验

In this section, we present the experimental setup, conduct an ablation study of each component of LinVT (in Sec. 4.1), and provide comparisons with state-of-the-art video LLMs on general video understanding benchmarks (in Sec. 4.2) and long-form video benchmarks (in Sec. 4.2). Implementation details and additional results can be found in the supplementary material.

在本节中,我们将介绍实验设置,对LinVT的各个组件进行消融研究(见第4.1节),并在通用视频理解基准(见第4.2节)和长视频基准(见第4.2节)上与最先进的视频大语言模型进行比较。具体实现细节和其他结果可在补充材料中找到。

Baselines. The proposed LinVT module has been incorporated into six recent multi-modal LLMs, namely Aquila [19], Blip-3 [75], InternVL2 [8], Mipha [86], Molmo [12] and Qwen2-VL [65]. It is notable that Blip3, Mipha and Molmo are three image-LLMs that have not been trained on video data, whereas Aquila and InternVL2 have been primarily trained on image data and a limited amount of video data. Consequently, our experiments involve two types of baseline models: pure image-LLMs (i.e. Blip-3, Mipha and Molmo) and mixed image-LLMs which have been trained using image and video data (i.e. Aquila, InternVL2 and Qwen2-VL).

基线方法。提出的LinVT模块已集成到六个最新的多模态大语言模型中,分别是Aquila [19]、Blip-3 [75]、InternVL2 [8]、Mipha [86]、Molmo [12]和Qwen2-VL [65]。值得注意的是,Blip3、Mipha和Molmo是三个未在视频数据上训练过的图像大语言模型,而Aquila和InternVL2主要在图像数据和少量视频数据上进行过训练。因此,我们的实验涉及两类基线模型:纯图像大语言模型(即Blip-3、Mipha和Molmo)以及使用图像和视频数据训练过的混合图像大语言模型(即Aquila、InternVL2和Qwen2-VL)。

Training data. The datasets used for training are detailed in Tab. 1. Note that we exclude the image data in VideoChat2 [31]. Thus, only video data is used in both training stages.

训练数据。训练所用的数据集详见表 1。需要注意的是,我们排除了 VideoChat2 [31] 中的图像数据。因此,两个训练阶段仅使用了视频数据。

Table 1. Details about video-language alignment training datasets and video instruction tuning datasets.

表 1: 视频-语言对齐训练数据集与视频指令调优数据集详情

| 数据集 | 样本数量 |

|---|---|

| 第一阶段: 视频-语言对齐训练 | |

| WebVid-2M [4] ShareGPTVideo [82] | 200万 90万 |

| 第二阶段: 视频指令调优 | 9万 |

| VideoChatGPT [46] VideoChat2 [31] ActivityNet-QA [77] MSVD-QA [70] | 23万 2.9万 3万 10.9万 |

Evaluation benchmarks. We report results on several video-based and image-based benchmarks. In particular, we evaluate the zero-shot performance on the open-ended video QA benchmark on MSVD-QA (MVDQA) [70], MSRVTT-QA (MTT-QA) [70], Activity NetQA (Act-QA) [77] and TGIF-QA [22]. In addition, we include widely-adopted video understanding benchmarks, MVBench (MVB) [31], MMBench-Video (MMBV) [13], Video-MME (V-MME) [16], NExT-QA (NQA) [68], EgoSchema (EgoS) [47], VideoVista [34] and Temp Compass (TempC) [42] for validation. The proposed model is also assessed on long video benchmarks like MLVU [84], Long Video Bench (LongVB) [67] and the long-video subset of VideoMME [16]. Apart from the video domain, we perform regression evaluation on the following image benchmarks: $\mathbf{MMMU_{\mathrm{val}}}$ [78], $\mathrm{DocVQA_{\mathrm{test}}}$ [48], H allusion Bench $\mathbf{avg}$ [20], $\mathrm{AI2D_{\mathrm{test}}}$ [24], MMVet [76], $\mathrm{TextVQA_{val}}$ [61], POPE [33], SEEDImage [27] and MME series (MME Perception and MME Cognition) [15]. More experimental results on video and image benchmarks are provided in the supplementary material.

评估基准。我们在多个基于视频和图像的基准测试上报告结果。具体而言,我们评估了在MSVD-QA (MVDQA) [70]、MSRVTT-QA (MTT-QA) [70]、Activity NetQA (Act-QA) [77] 和 TGIF-QA [22] 开放域视频问答基准上的零样本性能。此外,我们还纳入了广泛采用的视频理解基准:MVBench (MVB) [31]、MMBench-Video (MMBV) [13]、Video-MME (V-MME) [16]、NExT-QA (NQA) [68]、EgoSchema (EgoS) [47]、VideoVista [34] 和 Temp Compass (TempC) [42] 进行验证。所提出的模型还在长视频基准(如MLVU [84]、Long Video Bench (LongVB) [67] 和 VideoMME [16] 的长视频子集)上进行了评估。除视频领域外,我们还对以下图像基准进行了回归评估:$\mathbf{MMMU_{\mathrm{val}}}$ [78]、$\mathrm{DocVQA_{\mathrm{test}}}$ [48]、H allusion Bench $\mathbf{avg}$ [20]、$\mathrm{AI2D_{\mathrm{test}}}$ [24]、MMVet [76]、$\mathrm{TextVQA_{val}}$ [61]、POPE [33]、SEEDImage [27] 和 MME 系列(MME Perception 和 MME Cognition)[15]。更多关于视频和图像基准的实验结果见补充材料。

4.1. Ablation Study

4.1. 消融实验

Roles of SVR and TTA. As illustrated in Tab. 2, the naive baseline, where the visual tokens of each frame are averaged into a single token, exhibits low performance across all video benchmarks. In contrast, the model equipped with SVR demonstrates significant metric improvements compared to the baseline. While the TTA module follows a similar trend, its improvements are less pronounced in comparison to SVR. These results underscore the respective importance of SVR and TTA. Notably, a substantial performance enhancement is achieved when both proposed components are utilized together.

SVR与TTA的作用。如表 2 所示,朴素基线方法(将每帧视觉token平均为单个token)在所有视频基准测试中表现不佳。相比之下,配备SVR的模型相比基线实现了显著的指标提升。虽然TTA模块呈现相似趋势,但其改进幅度较SVR更为有限。这些结果凸显了SVR与TTA各自的重要性。值得注意的是,当同时使用这两个组件时,模型性能获得了显著提升。

Important ce of maintaining the original vision-language alignment. We train the model solely on video data, with or without the original intermediate layer, and validate its performance on image and video understanding benchmarks. As a way to break the vision-language alignment of the original image-LLM (i.e. LinVT-Mipha in this case), we randomly initialize the vision-language projector in Mipha. This model is denoted by LinVT w/o alignment. As shown in Tab. 3, maintaining the original alignment between vision and language marginally enhances performance on all video benchmarks. More importantly, on image benchmarks, LinVT without the original alignment fails to develop a nuanced understanding of images compared to the original image-LLM and experiences significant performance degradation across all datasets. In contrast, keeping the original intermediate layer and adding LinVT achieves almost equal performance with the baseline in all image benchmarks, and even slightly outperforms it in $\mathrm{DocVQA_{\mathrm{test}}}$ and $\mathrm{TextVQA_{val}}$ , indicating that the introduction of our module does not compromise the original image-level knowledge.

保持原始视觉-语言对齐的重要性。我们仅在视频数据上训练模型(无论是否保留原始中间层),并在图像和视频理解基准测试中验证其性能。为破坏原始图像大语言模型(本文案例中的LinVT-Mipha)的视觉-语言对齐,我们随机初始化了Mipha中的视觉-语言投影器,该模型标记为LinVT w/o alignment。如 表 3 所示,保持原始视觉-语言对齐能小幅提升所有视频基准测试性能。更重要的是,在图像基准测试中,失去原始对齐的LinVT相比原图像大语言模型无法形成精细的图像理解能力,所有数据集均出现显著性能下降。而保留原始中间层并添加LinVT的模型,在所有图像基准测试中与基线表现几乎持平,甚至在 $\mathrm{DocVQA_{\mathrm{test}}}$ 和 $\mathrm{TextVQA_{val}}$ 上略优于基线,这表明我们的模块引入不会损害原始图像级知识。

Table 2. Effectiveness of SVR and TTA evaluated under video benchmarks. In particular, Avg denotes a naive baseline that extracts one token from each frame by simply average-pooling the visual tokens of each frame and is trained on the same video data as others. The image-LLM in this experiment is Mipha-1.6B.

表 2: 视频基准测试中 SVR 和 TTA 的有效性评估。其中 Avg 表示一个简单基线方法,通过对每帧视觉 token 进行平均池化来提取单个 token,并与其他方法在同一视频数据上训练。本实验中的图像大语言模型为 Mipha-1.6B。

| Avg | SVR | TTA | MVB | MMB-V | V-MME | LongVB | N-QA | EgoS | MVD-QA | MTT-QA | Act-QA | TGIF-QA |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 41.6 | 0.55 | 30.5/30.1 | 31.0 | 40.7 | 29.8 | 35.4/1.8 | 24.1/1.2 | 20.3/1.6 | 33.2/1.5 | |||

| 60.3 | 1.12 | 45.7/46.1 | 46.2 | 67.1 | 51.4 | 67.5/3.4 | 52.7/2.7 | 46.2/2.9 | 68.9/3.6 | |||

| 58.7 | 1.03 | 45.4/45.7 | 44.8 | 65.1 | 50.2 | 64.1/3.2 | 50.3/2.4 | 41.6/2.6 | 66.9/3.2 | |||

| 62.5 | 1.22 | 48.4/49.2 | 49.7 | 71.1 | 55.9 | 71.2/3.8 | 55.3/3.0 | 47.5/3.1 | 71.1/3.9 |

Table 3. Ablation studies on the important ce of maintaining the original vision-language alignment. We conduct experiments on image and video benchmarks. Here, we utilize Mipha-1.6B to compare different settings of vision-language alignment. Baseline denotes the original image-LLM. “LinVT w/o alignment” refers to the image-LLM randomly initialized intermediate layer while “LinVT w/ alignment” preserved the original weights.

表 3: 视觉语言对齐重要性的消融研究。我们在图像和视频基准测试上进行实验。此处使用 Mipha-1.6B 比较不同视觉语言对齐设置。Baseline 表示原始图像大语言模型。"LinVT w/o alignment"指随机初始化中间层的图像大语言模型,而"LinVT w/ alignment"保留原始权重。

| Methods | MVB | MMB-V | V-MME | LongVB | MVD-QA | MMMUval | AI2Dtest | TextVQAval | MMVet |

|---|---|---|---|---|---|---|---|---|---|

| Baseline | 30.5 | 59.6 | 70.5 | 23.5 | |||||

| LinVT w/o alignment | 62.1 | 1.20 | 48.3/48.9 | 48.2 | 70.8/3.7 | 24.1 | 46.6 | 61.3 | 17.4 |

| LinVT w/ alignment | 62.5 | 1.22 | 48.4/49.2 | 49.7 | 71.2/3.8 | 30.5 | 59.8 | 70.6 | 23.5 |

Table 4. Ablation studies on three variants of multi-scale processing in LinVT, evaluated under video benchmarks. Mipha-1.6B is used here. Among these variants, Multi $C$ is chosen as our final design in LinVT.

表 4: LinVT中多尺度处理三种变体的消融研究,基于视频基准进行评估。此处使用Mipha-1.6B模型。在这些变体中,Multi $C$ 被选为LinVT的最终设计方案。

| 方法 | MVB | MMB-V | V-MME | LongVB | MVD-QA |

|---|---|---|---|---|---|

| Single-A | 60.9 | 1.12 | 46.1/45.3 | 45.9 | 67.6/3.4 |

| Multi-A | 59.9 | 1.06 | 45.5/45.3 | 43.6 | 67.8/3.5 |

| Multi-B |