ByT5: Towards a Token-Free Future with Pre-trained Byte-to-Byte Models

Abstract

Most widely-used pre-trained language models operate on sequences of tokens corresponding to word or subword units. By comparison, token-free models that operate directly on raw text (bytes or characters) have many benefits: they can process text in any language out of the box, they are more robust to noise, and they minimize technical debt by removing complex and error-prone text preprocessing pipelines. Since byte or character sequences are longer than token sequences, past work on token-free models has often introduced new model architectures designed to amortize the cost of operating directly on raw text. In this paper, we show that a standard Transformer architecture can be used with minimal modifications to process byte sequences. We characterize the trade-offs in terms of parameter count, training FLOPs, and inference speed, and show that byte-level models are competitive with their token-level counterparts. We also demonstrate that byte-level models are significantly more robust to noise and perform better on tasks that are sensitive to spelling and pronunciation. As part of our contribution, we release a new set of pre-trained byte-level Transformer models based on the T5 architecture, as well as all code and data used in our experiments.[1]

1 Introduction

An important consideration when designing NLP models is the way that text is represented. One common choice is to assign a unique token ID to each word in a fixed finite vocabulary. A given piece of text is thus converted into a sequence of tokens by a tokenizer before being fed into a model for processing. An issue with using a fixed vocabulary of words is that there is no obvious way to process a piece of text that contains an out-of-vocabulary word. A standard approach is to map all unknown words to the same $<\mathrm{UNK}>$ token, but this prevents the model from distinguishing between different out-of-vocabulary words.
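
A minimal sketch of the fixed-vocabulary scheme just described. Whitespace splitting and the five-word vocabulary are hypothetical choices made only for illustration; the point is that every out-of-vocabulary word collapses onto the same `<UNK>` ID.

```python
from typing import Dict, List

UNK = "<UNK>"

def build_vocab(words: List[str]) -> Dict[str, int]:
    """Assign a unique ID to each word; ID 0 is reserved for <UNK>."""
    vocab = {UNK: 0}
    for word in words:
        vocab.setdefault(word, len(vocab))
    return vocab

def encode_words(text: str, vocab: Dict[str, int]) -> List[int]:
    """Whitespace-split the text and map every unknown word to the <UNK> ID."""
    return [vocab.get(word, vocab[UNK]) for word in text.split()]

vocab = build_vocab(["the", "dog", "sat", "on", "mat"])
print(encode_words("the dog sat on the mat", vocab))        # [1, 2, 3, 4, 1, 5]
print(encode_words("the doghouse sat on the futon", vocab))  # [1, 0, 3, 4, 1, 0]
```

Here "doghouse" and "futon" both become ID 0, so nothing downstream of the tokenizer can tell them apart.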

Subword tokenizers (Sennrich et al., 2016; Wu et al., 2016; Kudo and Richardson, 2018) present an elegant solution to the out-of-vocabulary problem. Instead of mapping each word to a single token, subword tokenizers decompose words into smaller subword units with a goal of minimizing the total length of the token sequences for a fixed vocabulary size. As an example, a subword tokenizer might tokenize the word doghouse as the pair of tokens dog and house even if doghouse is not in the subword vocabulary. This flexibility has caused subword tokenizers to become the de facto way to tokenize text over the past few years.
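
The doghouse example can be reproduced with a greedy longest-match segmenter. This is a simplification chosen for brevity, not the algorithm used by any of the cited tokenizers (BPE, WordPiece, and SentencePiece each learn and apply their vocabularies differently), and both `greedy_subword_tokenize` and the toy vocabulary are hypothetical.

```python
from typing import List, Set

def greedy_subword_tokenize(word: str, vocab: Set[str]) -> List[str]:
    """Segment a word by repeatedly taking the longest matching vocabulary prefix.

    A character that begins no vocabulary entry is emitted as <UNK>.
    """
    tokens: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate piece until it matches a vocabulary entry.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            tokens.append("<UNK>")
            start += 1
        else:
            tokens.append(word[start:end])
            start = end
    return tokens

vocab = {"dog", "house", "do", "g", "h", "o", "u", "s", "e"}
print(greedy_subword_tokenize("doghouse", vocab))  # ['dog', 'house']
```

Note that "doghouse" itself is not in the vocabulary, yet it is still covered by two known pieces; only the unseen character in a word like "dogx" falls back to `<UNK>`.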

However, subword tokenizers still exhibit various undesirable behaviors. Typos, variants in spelling and capitalization, and morphological changes can all cause the token representation of a word or phrase to change completely, which can result in mispredictions. Furthermore, unknown characters (e.g. from a language that was not used when the subword vocabulary was built) are typically out-of-vocabulary for a subword model.

A more natural solution that avoids the aforementioned pitfalls would be to create token-free NLP models that do not rely on a learned vocabulary to map words or subword units to tokens. Such models operate on raw text directly. We are not the first to make the case for token-free models, and a more comprehensive treatment of their various benefits can be found in recent work by Clark et al. (2021). In this work, we make use of the fact that text data is generally stored as a sequence of bytes. Thus, feeding byte sequences directly into the model enables the processing of arbitrary text sequences. This approach is well-aligned with the philosophy of end-to-end learning, which endeavors to train models to directly map from raw data to predictions. It also has a concrete benefit in terms of model size: the large vocabularies of word- or subword-level models often result in many parameters being devoted to the vocabulary matrix. In contrast, a byte-level model by definition only requires 256 embeddings. Migrating word representations out of a sparse vocabulary matrix and into dense network layers should allow models to generalize more effectively across related terms (e.g. book / books) and orthographic variations. Finally, from a practical standpoint, models with a fixed vocabulary can complicate adaptation to new languages and new terminology, whereas, by definition, token-free models can process any text sequence.
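
To make the byte-level view concrete, the sketch below uses Python's built-in UTF-8 encoder (the helper name `utf8_bytes` is ours, for illustration). Every string, in any script, becomes a sequence of integers in 0..255, which is why 256 embeddings suffice. It also shows that related forms such as book/books share a byte prefix, and that non-Latin characters occupy several bytes each.

```python
from typing import List

def utf8_bytes(text: str) -> List[int]:
    """Return the UTF-8 byte values of a string; each value lies in 0..255."""
    return list(text.encode("utf-8"))

for sample in ["book", "books", "naïve", "कुत्ता", "犬"]:
    ids = utf8_bytes(sample)
    print(f"{sample!r}: {len(sample)} code points -> {len(ids)} bytes {ids}")
```

ASCII letters take one byte each, "ï" takes two, and each Devanagari or CJK character takes three, so the same amount of text can yield very different sequence lengths across languages.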

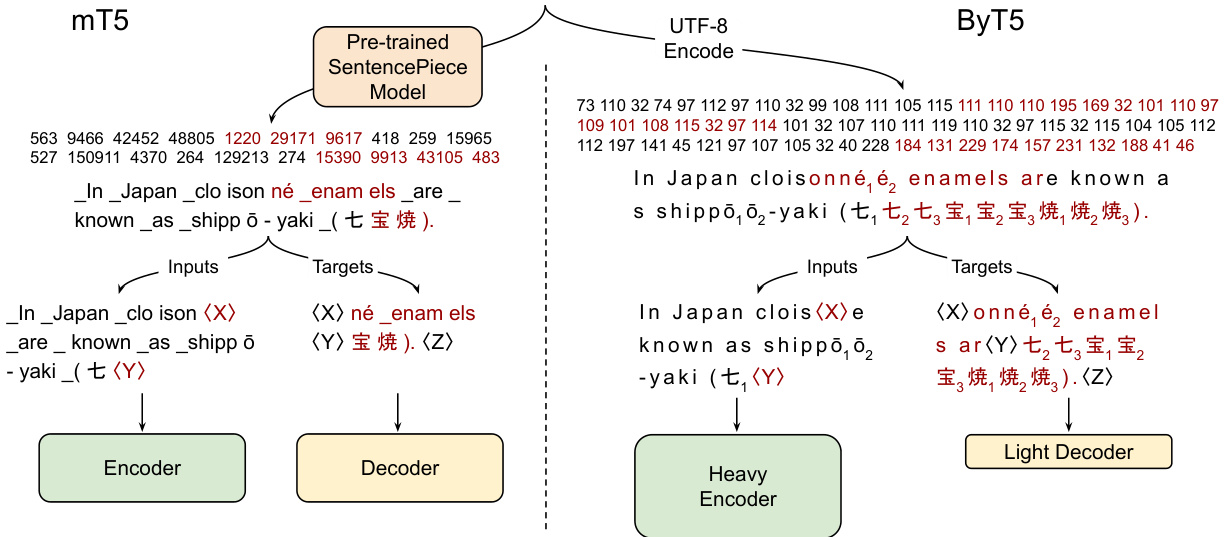

Figure 1: Pre-training example creation and network architecture of mT5 (Xue et al., 2021) vs. ByT5 (this work). mT5: Text is split into SentencePiece tokens, spans of ${\sim}3$ tokens are masked (red), and the encoder/decoder Transformer stacks have equal depth. ByT5: Text is processed as UTF-8 bytes, spans of ${\sim}20$ bytes are masked, and the encoder is $3\times$ deeper than the decoder. $\langle\mathbf{X}\rangle$, $\langle\mathbf{Y}\rangle$, and $\langle\mathbf{Z}\rangle$ represent sentinel tokens.

The main drawback of byte-level models is that byte sequences tend to be significantly longer than token sequences. Since computational costs of machine learning models tend to scale with sequence length, much previous work on character- and byte-level models has explored ways to process long sequences efficiently using convolutions with pooling (Zhang et al., 2015; Lee et al., 2017) or adaptive computation time (Graves, 2016).

In this work, we take a simpler approach and show that the Transformer architecture can be straightforwardly adapted to process byte sequences without a dramatically unfavorable increase in computational cost. We focus on the T5 framework (Raffel et al., 2020), where all text-based NLP problems are cast to a text-to-text format. This approach makes it simple to tackle an NLP task by generating a sequence of bytes conditioned on some input bytes. Our proposed ByT5 architecture is described in section 3. The design stays fairly close to mT5 (the multilingual variant of T5 introduced by Xue et al. (2021)), with the differences illustrated in fig. 1. Through extensive experiments on a diverse set of English and multilingual tasks (presented in section 4), we show that ByT5 is competitive with a subword-level baseline, despite being pre-trained on $4\times$ less text. We also confirm in section 5 that byte-level models are more robust to corruptions of the input text. Throughout, we characterize the trade-offs of our design decisions in terms of computational cost and parameter count, discussed in more detail in sections 6 and 7. The end result is a set of pre-trained ByT5 models that we release alongside this paper.

2 Related Work

The early neural language models of Sutskever et al. (2011) and Graves (2013) operated directly on character sequences. This precedent led many to use character-level language modeling as a benchmark to evaluate neural architectures (Kalchbrenner et al., 2016; Chung et al., 2017; Ha et al., 2017; Zilly et al., 2017; Melis et al., 2018; Al-Rfou et al., 2019). Choe et al. (2019) showed byte language models can match the perplexity of word-level models when given the same parameter budget. However, standard practice in real-world scenarios has remained to use word- or subword-level models.

A number of character-aware architectures have been proposed that make use of character-level features but still rely on a tokenizer to identify word boundaries. These approaches include ELMo (Peters et al., 2018), CharacterBERT (El Boukkouri et al., 2020) and many others (Ling et al., 2015; Chung et al., 2016; Kim et al., 2016; Józefowicz et al., 2016; Wang et al., 2020; Wei et al., 2021). Separately, some work has endeavored to ameliorate issues with tokenization, for example by adapting vocabularies to new languages (Garcia et al., 2021) or randomly choosing different subword segmentations to improve robustness in low-resource and out-of-domain settings (Kudo, 2018). These methods do not meet our goal of simplifying the NLP pipeline by removing text preprocessing.

There have been a few recent efforts to develop general-purpose token-free pre-trained language models for transfer learning.[2] Akbik et al. (2018) show strong results on sequence labeling with character-level pre-training and release models covering four languages. More recently, Clark et al. (2021) develop CANINE, which shows gains over multilingual BERT by working with characters instead of word-piece tokens, though the "CANINE-S" model still uses a tokenizer during pre-training to define targets for the masked language modeling task. Our work differs from these in that (i) we train encoder-decoder models that extend to generative tasks, (ii) our models work directly with UTF-8 bytes, and (iii) we explore the effect of model scale, training models beyond 10 billion parameters.

3 ByT5 Design

Our goal in designing ByT5 is to take an existing token-based model and perform the minimal set of modifications to make it token-free, thereby limiting experimental confounds. We base ByT5 on the recent mT5 model (Xue et al., 2021), which was trained on mC4 (a large corpus of unlabeled multilingual text data) and achieved state-of-the-art results on many community benchmarks. We release ByT5 in five sizes analogous to T5 and mT5 (Small, Base, Large, XL, XXL). We aim for ByT5 to cover the same use cases as mT5: it is a general-purpose pre-trained text-to-text model covering $100+$ languages. We expect ByT5 will be particularly useful for tasks operating on short-to-medium length text sequences (a few sentences or less), as these will incur less slowdown in fine-tuning and inference.

3.1 Changes from mT5

Compared to mT5, we make the following key changes in designing ByT5. First and foremost, we dispense with the SentencePiece (Kudo and Richardson, 2018) vocabulary and feed UTF-8 bytes directly into the model without any text preprocessing. The bytes are embedded to the model hidden size using a vocabulary of 256 possible byte values. An additional 3 IDs are reserved for special tokens: padding, end-of-sentence, and an unused $<\mathrm{UNK}>$ token that we include only for convention.
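
A minimal sketch of the input side of this scheme. The text only says that three IDs are reserved; the ordering used here (0 = padding, 1 = end-of-sentence, 2 = `<UNK>`, byte value b stored at ID b + 3) is an assumption, and `encode` and `pad_batch` are hypothetical helper names.

```python
from typing import List

PAD_ID, EOS_ID, UNK_ID = 0, 1, 2   # assumed ordering of the 3 special IDs
NUM_SPECIAL = 3
VOCAB_SIZE = 256 + NUM_SPECIAL     # 259 embedding rows in total

def encode(text: str, add_eos: bool = True) -> List[int]:
    """Map text to model IDs: each UTF-8 byte b becomes b + NUM_SPECIAL."""
    ids = [b + NUM_SPECIAL for b in text.encode("utf-8")]
    if add_eos:
        ids.append(EOS_ID)
    return ids

def pad_batch(batch: List[List[int]], length: int) -> List[List[int]]:
    """Right-pad (or truncate) every sequence to a fixed length with PAD_ID."""
    return [seq[:length] + [PAD_ID] * max(0, length - len(seq)) for seq in batch]

ids = encode("hé")
print(ids)                 # [107, 198, 172, 1]
print(pad_batch([ids], 6)) # [[107, 198, 172, 1, 0, 0]]
```

No vocabulary file or normalization step is involved: "é" simply becomes its two UTF-8 bytes (195, 169), shifted past the special IDs.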

Second, we modify the pre-training task. mT5 uses the “span corruption” pre-training objective first proposed by Raffel et al. (2020) where spans of tokens in unlabeled text data are replaced with a single “sentinel” ID and the model must fill in the missing spans. Rather than adding 100 new tokens for the sentinels, we find it sufficient to reuse the final 100 byte IDs. While mT5 uses an average span length of 3 subword tokens, we find that masking longer byte-spans is valuable. Specifically, we set our mean mask span length to 20 bytes, and show ablations of this value in section 6.
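
A minimal sketch of span corruption over byte IDs. It assumes a 259-ID vocabulary (256 byte values plus the 3 special IDs described above, with byte b at ID b + 3) and assumes the k-th sentinel takes the k-th ID counting down from the top, so the sentinels reuse the final 100 IDs. Span positions are passed in explicitly: sampling spans with a mean length of 20 bytes is omitted to keep the example deterministic, and `span_corrupt` is a hypothetical helper, not the T5 codebase's function.

```python
from typing import List, Tuple

VOCAB_SIZE = 259   # 256 byte values + 3 special IDs
NUM_SPECIAL = 3    # assumed: byte b is stored at ID b + 3
NUM_SENTINELS = 100

def sentinel_id(k: int) -> int:
    """Hypothetical mapping: the k-th sentinel reuses the k-th ID from the top."""
    if not 0 <= k < NUM_SENTINELS:
        raise ValueError("only 100 sentinel IDs are available")
    return VOCAB_SIZE - 1 - k

def span_corrupt(ids: List[int], spans: List[Tuple[int, int]]) -> Tuple[List[int], List[int]]:
    """Replace each [start, end) span with a sentinel; the targets restore the spans.

    Spans must be sorted and non-overlapping.
    """
    inputs: List[int] = []
    targets: List[int] = []
    cursor = 0
    for k, (start, end) in enumerate(spans):
        inputs.extend(ids[cursor:start])   # unmasked bytes before the span
        inputs.append(sentinel_id(k))      # one sentinel stands in for the whole span
        targets.append(sentinel_id(k))     # targets: the sentinel, then the masked bytes
        targets.extend(ids[start:end])
        cursor = end
    inputs.extend(ids[cursor:])            # trailing unmasked bytes
    return inputs, targets

ids = [b + NUM_SPECIAL for b in "Thank you for inviting me".encode("utf-8")]
inputs, targets = span_corrupt(ids, [(6, 9), (14, 22)])  # mask "you" and "inviting"
```

The input keeps "Thank ", " for " and " me" with one sentinel per masked span, and the target lists each sentinel followed by the bytes it hid, mirroring the layout in Figure 1.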

Third, we find that ByT5 performs best when we decouple the depth of the encoder and decoder stacks. While T5 and mT5 used "balanced" architectures, we find byte-level models benefit significantly from a "heavier" encoder. Specifically, we set our encoder depth to 3 times that of the decoder. Intuitively, this heavier encoder makes the model more similar to encoder-only models like BERT. By decreasing decoder capacity, one might expect quality to deteriorate on tasks like summarization that require generation of fluent text. However, we find this is not the case, with heavy-encoder byte models performing better on both classification and generation tasks. We ablate the effect of encoder/decoder balance in section 6.
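
Because Table 1 holds the total layer count fixed for each named size, the ByT5 depths follow from splitting mT5's combined encoder and decoder depth 3:1. The helper below is a hypothetical illustration of that arithmetic, not code from the release.

```python
from typing import Tuple

def split_layers(total_layers: int, encoder_share: int = 3, decoder_share: int = 1) -> Tuple[int, int]:
    """Split a fixed layer budget between encoder and decoder in a given ratio."""
    parts = encoder_share + decoder_share
    if total_layers % parts:
        raise ValueError("layer budget must be divisible by the ratio's parts")
    unit = total_layers // parts
    return encoder_share * unit, decoder_share * unit

# Balanced mT5 encoder/decoder depths (Table 1) -> ByT5's 3:1 split.
for name, (enc, dec) in {"Small": (8, 8), "Base": (12, 12), "Large": (24, 24)}.items():
    print(name, split_layers(enc + dec))
```

This prints (12, 4), (18, 6) and (36, 12), matching the ByT5 Enc/Dec column; XL and XXL share Large's 48-layer budget and therefore its split.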

As not all byte sequences are legal according to the UTF-8 standard, we drop any illegal bytes in the model's output[3] (though we never observed our models predicting illegal byte sequences in practice). Apart from the above changes, we follow mT5 in all settings. Like mT5, we set our sequence length to 1024 (bytes rather than tokens), and train for 1 million steps over batches of $2^{20}$ tokens.
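
One simple way to realize the "drop illegal bytes" step is Python's `errors="ignore"` decoding mode, sketched below under an assumed ID layout (IDs 0-2 special, byte b at ID b + 3). The text does not say how illegal bytes are detected, so this is an illustration rather than the authors' implementation, and `decode` is a hypothetical helper name.

```python
from typing import List

NUM_SPECIAL = 3   # assumed: IDs 0-2 are pad, EOS, <UNK>; byte b is at ID b + 3
EOS_ID = 1

def decode(ids: List[int]) -> str:
    """Convert predicted IDs to text, stopping at EOS.

    Special IDs are skipped, and any byte sequence that is not legal UTF-8
    is silently dropped by errors="ignore".
    """
    raw = bytearray()
    for i in ids:
        if i == EOS_ID:
            break
        if NUM_SPECIAL <= i < NUM_SPECIAL + 256:
            raw.append(i - NUM_SPECIAL)
    return raw.decode("utf-8", errors="ignore")

print(decode([107, 198, 172, 1]))  # hé
print(decode([107, 258, 108, 1]))  # hi  (byte 0xFF is never legal UTF-8)
print(decode([107, 198, 1]))       # h   (truncated two-byte sequence dropped)
```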

| Size | Params | mT5 Vocab | mT5 $d_{\mathrm{model}}/d_{\mathrm{ff}}$ | mT5 Enc/Dec | ByT5 Vocab | ByT5 $d_{\mathrm{model}}/d_{\mathrm{ff}}$ | ByT5 Enc/Dec |
|---|---|---|---|---|---|---|---|
| Small | 300M | 85% | 512/1024 | 8/8 | 0.3% | 1472/3584 | 12/4 |
| Base | 582M | 66% | 768/2048 | 12/12 | 0.1% | 1536/3968 | 18/6 |
| Large | 1.23B | 42% | 1024/2816 | 24/24 | 0.06% | 1536/3840 | 36/12 |
| XL | 3.74B | 27% | 2048/5120 | 24/24 | 0.04% | 2560/6720 | 36/12 |
| XXL | 12.9B | 16% | 4096/10240 | 24/24 | 0.02% | 4672/12352 | 36/12 |

Table 1: Comparison of mT5 and ByT5 architectures. For a given named size (e.g. “Large”), the total numbers of parameters and layers are fixed. “Vocab” shows the percentage of vocabulary-related parameters, counting both the input embedding matrix and the decoder softmax layer. ByT5 moves these parameters out of the vocabulary and into the transformer layers, as well as shifting to a 3:1 ratio of encoder to decoder layers.

3.2 Comparing the Models

Our goal in this paper is to show that straightforward modifications to the Transformer architecture can allow for byte-level processing while incurring reasonable trade-offs in terms of cost. Characterizing these trade-offs requires a clear definition of what is meant by "cost", since there are many axes along which it is possible to measure a model's size and computational requirements.

Models that use a word or subword vocabulary typically include a vocabulary matrix that stores a vector representation of each token in the vocabulary. They also include an analogous matrix in the output softmax layer. For large vocabularies (e.g. those in multilingual models), these matrices can make up a substantial proportion of the model's parameters. For example, the vocabulary and softmax output matrices in the mT5-Base model amount to roughly 384 million parameters, or about 66% of its 582M total (Table 1). Switching to a byte-level model allows allocating these parameters elsewhere in the model, e.g. by adding layers or making existing layers "wider". To compensate for the reduction in total parameter count caused by switching from a token-based to a token-free model, we adjust the ByT5 hidden size ($d_{\mathrm{model}}$) and feed-forward dimensionality ($d_{\mathrm{ff}}$) to be parameter-matched with mT5, while maintaining a ratio of roughly 2.5 between $d_{\mathrm{ff}}$ and $d_{\mathrm{model}}$, as recommended by Kaplan et al. (2020). Table 1 shows the resulting model architectures across all five model sizes.
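
The "Vocab" column of Table 1 can be approximately reproduced from the other columns. This sketch assumes untied input-embedding and softmax matrices (2 × rows × $d_{\mathrm{model}}$ parameters), an mT5 embedding of 250,112 rows, and ByT5's 259 IDs (256 bytes plus 3 special IDs); those row counts are assumptions, and `vocab_share` is a hypothetical helper.

```python
def vocab_share(vocab_rows: int, d_model: int, total_params: float) -> float:
    """Fraction of parameters in the input embedding plus the output softmax matrix.

    Assumes the two matrices are untied: 2 * vocab_rows * d_model parameters.
    """
    return 2 * vocab_rows * d_model / total_params

MT5_VOCAB = 250_112   # assumed mT5 embedding rows (SentencePiece vocab, padded)
BYT5_VOCAB = 259      # 256 byte values + 3 special IDs

# (total params, mT5 d_model, ByT5 d_model), taken from Table 1
sizes = {
    "Small": (300e6, 512, 1472),
    "Base": (582e6, 768, 1536),
    "Large": (1.23e9, 1024, 1536),
    "XL": (3.74e9, 2048, 2560),
    "XXL": (12.9e9, 4096, 4672),
}
for name, (total, d_mt5, d_byt5) in sizes.items():
    print(f"{name:5s} mT5 {vocab_share(MT5_VOCAB, d_mt5, total):6.1%}  "
          f"ByT5 {vocab_share(BYT5_VOCAB, d_byt5, total):6.2%}")
```

Under these assumptions the mT5 shares round to 85%, 66%, 42%, 27% and 16%, and the ByT5 shares stay well under 1%, consistent with the table.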

Separately, as previously mentioned, changing from word or subword sequences to byte sequences will increase the (tokenized) sequence length of a given piece of text. The self-attention mechanism at the core of the ubiquitous Transformer architecture (Vaswani et al., 2017) has a quadratic time and space complexity in the sequence length, so byte sequences can result in a significantly higher computational cost. While recurrent neural networks and modified attention mechanisms (Tay et al., 2020) can claim a better computational complexity in the sequence length, the cost nevertheless always scales up as sequences get longer.
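
A rough illustration of the quadratic term. It counts the query-key scores a single self-attention layer computes (heads × n²) and compares a 1024-token sequence with the same text expressed in bytes, using the mC4 average of about 4.1 UTF-8 bytes per SentencePiece token reported later in this section. The helper is hypothetical and ignores the linear-cost parts of the layer.

```python
def attention_scores(seq_len: int, num_heads: int = 1) -> int:
    """Query-key scores computed by one self-attention layer: num_heads * n * n."""
    return num_heads * seq_len * seq_len

token_len = 1024
byte_len = round(token_len * 4.1)  # the same text at ~4.1 UTF-8 bytes per token
ratio = attention_scores(byte_len) / attention_scores(token_len)
print(byte_len, round(ratio, 1))   # 4198 16.8
```

A ~4.1× longer sequence thus makes the attention scores ~16.8× more numerous, while per-position work such as the feed-forward layers grows only ~4.1×.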

Thus far, we have been discussing easy-to-measure quantities like the parameter count and FLOPs. However, not all FLOPs are equal, and the real-world cost of a particular model will also depend on the hardware it is run on. One important distinction is to identify operations that can be easily parallelized (e.g. the encoder's fully parallelizable processing) and those that cannot (e.g. autoregressive sampling in the decoder during inference). For byte-level encoder-decoder models, if the decoder is particularly large, autoregressive sampling can become comparatively expensive due to the increased length of byte sequences. Relatedly, mapping an input token to its corresponding vector representation in the vocabulary matrix is essentially "free" in terms of FLOPs since it can be implemented by addressing a particular row in memory. Therefore, reallocating parameters from the vocabulary matrix to the rest of the model will typically result in a model that requires more FLOPs to process a given input sequence (see section 7 for detailed comparison).
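
The claim that an embedding lookup is "free" can be checked with a toy example: reading row i of a V × d embedding matrix gives exactly the same vector as multiplying a one-hot vector by that matrix, but only the dense product spends 2 × V × d FLOPs. The sizes and function names are arbitrary choices for illustration. The same V × d parameters, once moved into a dense layer that every position passes through, would cost roughly that many FLOPs per position.

```python
import random
from typing import List, Tuple

random.seed(0)
VOCAB_SIZE, D_MODEL = 1000, 64   # toy sizes, for illustration only
embedding = [[random.gauss(0.0, 1.0) for _ in range(D_MODEL)] for _ in range(VOCAB_SIZE)]

def lookup(token_id: int) -> List[float]:
    """Embedding lookup: read one row of the matrix, with no multiply-adds."""
    return embedding[token_id]

def one_hot_matmul(token_id: int) -> Tuple[List[float], int]:
    """Compute one_hot(token_id) @ embedding densely, counting FLOPs."""
    out = [0.0] * D_MODEL
    flops = 0
    for row in range(VOCAB_SIZE):
        weight = 1.0 if row == token_id else 0.0
        for col in range(D_MODEL):
            out[col] += weight * embedding[row][col]
            flops += 2  # one multiply and one add
    return out, flops

vector, flops = one_hot_matmul(42)
print(vector == lookup(42), flops)  # True 128000
```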

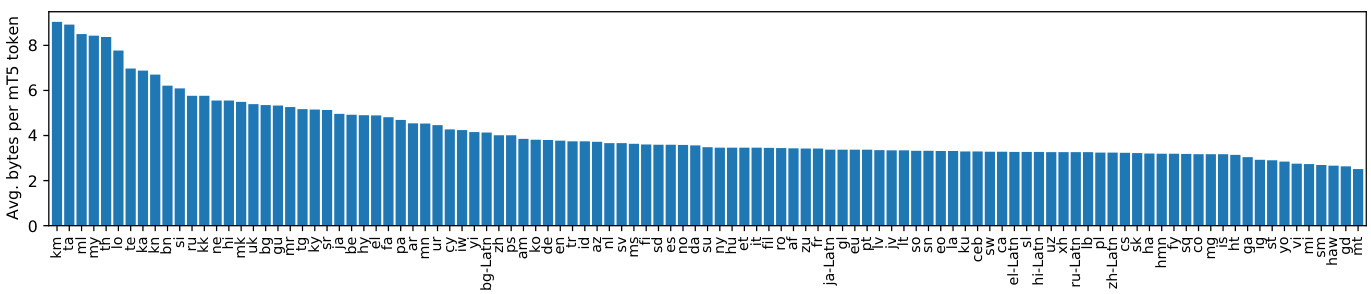

Finally, we note that another important metric is data efficiency, i.e. how much data is required for the model to reach a good solution. For NLP problems, this can be measured either in terms of the number of tokens or the amount of raw text seen during training. Specifically, a byte-level model trained on the same number of tokens as a word- or subword-level model will have been trained on less text data. In Figure 2, we show the compression rates of mT5 SentencePiece tokenization, measured as the ratio of UTF-8 bytes to tokens in each language split of the mC4 corpus used in pre-training. This ratio ranges from 2.5 (Maltese) to 9.0 (Khmer). When considering the mC4 corpus as a whole, sampled according to the mT5 pre-training mixing ratios, we have an overall compression rate of 4.1 bytes per SentencePiece token. On the one hand, this $4\times$ lengthening could be seen as an advantage for ByT5: with longer sequences, the model gets more compute to spend encoding a given piece of text. On the other hand, given a fixed input sequence length and number of training steps, the model will be exposed to roughly $4\times$ less actual text during pre-training.
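
The compression rate described above is a pooled ratio: total UTF-8 bytes divided by total tokens over a corpus. A minimal sketch follows; whitespace splitting stands in for the mT5 SentencePiece tokenizer purely as a hypothetical placeholder, so the resulting number says nothing about mC4.

```python
from typing import Callable, Iterable, List

def compression_rate(texts: Iterable[str], tokenize: Callable[[str], List[str]]) -> float:
    """UTF-8 bytes per token, pooled over a corpus (total bytes / total tokens)."""
    total_bytes = 0
    total_tokens = 0
    for text in texts:
        total_bytes += len(text.encode("utf-8"))
        total_tokens += len(tokenize(text))
    return total_bytes / total_tokens

# Hypothetical stand-in for the SentencePiece tokenizer: whitespace splitting.
rate = compression_rate(["the dog sat", "on the mat"], str.split)
print(rate)  # 3.5
```

Plugging in the reported mC4 rate shows where the data-efficiency gap comes from: a 1024-position input covers about 1024 × 4.1 ≈ 4,200 bytes of text for a subword model but only 1,024 bytes for ByT5.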

Figure 2: Per-language compression rates of the mT5 SentencePiece vocabulary, measured over the mC4 pre-training corpus. For each language, we measure the ratio of UTF-8 bytes to tokens over all mC4 data in that language.

With these factors in mind, we choose to focus on the following measures of efficiency in our experiments: parameter count, inference time, and pre-training efficiency. Parameter count is a simple and easy-to-measure quantity that directly relates to the amount of memory required to use a model. Inference time is a real-world measurement of the model's computational cost that represents a "worst-case" measurement for byte-level models given the potential additional cost of autoregressive sampling. Finally, pre-training efficiency allows us to measure whether byte-level models can learn a good solution after seeing less pre-training data.

4 Core Results

In this section, we compare ByT5 against mT5 on a wide range of tasks. We show that ByT5 is competitive with mT5 on standard English and multilingual NLP benchmarks and outperforms mT5 at small model sizes. Additionally, ByT5 excels on free-form generation tasks and word-level tasks.

For each downstream task, we fine-tune mT5 and ByT5 models for 262,144 steps, using a constant learning rate of 0.001 and a dropout rate of 0.1.[4] We use a batch size of $2^{17}$ tokens by default, but increase this to $2^{20}$ for several tasks with larger training sets (GLUE, SuperGLUE, XNLI, TweetQA), and decrease it to $2^{16}$ for the Dakshina task. In all cases, we select the best model checkpoint based on validation set performance.
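
The fine-tuning settings above fit in a small configuration object. The sketch below encodes them as stated; `FinetuneConfig`, `config_for`, and the task-name strings are hypothetical names, not part of any released codebase.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FinetuneConfig:
    steps: int = 262_144          # = 2**18 fine-tuning steps
    learning_rate: float = 1e-3   # constant schedule
    dropout: float = 0.1
    batch_tokens: int = 2**17     # default batch size, in tokens

LARGE_TASKS = {"GLUE", "SuperGLUE", "XNLI", "TweetQA"}

def config_for(task: str) -> FinetuneConfig:
    """Return the per-task settings: larger batches for big training sets."""
    if task in LARGE_TASKS:
        return FinetuneConfig(batch_tokens=2**20)
    if task == "Dakshina":
        return FinetuneConfig(batch_tokens=2**16)
    return FinetuneConfig()

print(config_for("XNLI"))
```

If inputs were packed to a fixed length of 1024 positions (an assumption; the text does not state the fine-tuning sequence lengths), the default $2^{17}$-token batch would correspond to 128 sequences.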

4.1 English Classification Tasks

On the widely-adopted GLUE (Wang et al., 2019b) and SuperGLUE (Wang et al., 2019a) text classification benchmarks, we find ByT5 beats mT5 at the Small and Base sizes, but mT5 has the advantage at larger sizes, as shown in table 2. The strong performance of ByT5 at smaller sizes likely stems from the large increase in dense parameters over mT5. While the overall models are parameter-matched, most of the mT5 Small and Base parameters are “locked” in vocab-related matrices and are only accessed when a particular token is present. We suspect that replacing these with “dense” parameters activated across all examples encourages more efficient parameter usage and sharing.

Table 2: mT5 and ByT5 performance on GLUE and SuperGLUE. For each benchmark, we fine-tune a single model on a mixture of all tasks, select the best checkpoint per task based on validation set performance, and report average validation set scores over all tasks.

| Size | GLUE mT5 | GLUE ByT5 | SuperGLUE mT5 | SuperGLUE ByT5 |
|---|---|---|---|---|
| Small | 75.6 | 80.5 | 60.2 | 67.8 |
| Base | 83.0 | 85.3 | 72.5 | 74.0 |
| Large | 87.6 | 87.0 | 81.9 | 80.4 |
| XL | 88.7 | 87.9 | 84.7 | 83.2 |
| XXL | 90.7 | 90.1 | 89.2 | 88.6 |

4.2 English Generation Tasks

We also compare ByT5 with mT5 on three English generative tasks. XSum (Narayan et al., 2018) is an abstractive summarization task requiring models to summarize a news article in a single sentence. For better comparison to recent work, we adopt the version of the task defined in the GEM benchmark (Gehrmann et al., 2021). TweetQA (Xiong et al., 2019) is an abstractive question-answering task built from tweets mentioned in news articles. This tests understanding of the "messy" and informal language of social media. Finally, DROP (Dua et al., 2019) is a challenging reading comprehension task that requires numerical reasoning.

Table 3 shows that ByT5 outperforms mT5 on each generative task across all model sizes. On GEM-XSum, ByT5 comes close (15.3 vs. 17.0) to the best score reported by Gehrmann et al. (2021), a PEGASUS model (Zhang et al., 2020) pre-trained specifically for summarization. On TweetQA, ByT5 outperforms (72.0 vs. 67.3) the BERT baseline of Xiong et al. (2019). On DROP, ByT5 comes close (EM 78.5 vs. 84.1) to the best result from Chen et al. (2020), a QDGAT (RoBERTa) model with a specialized numeric reasoning module.

| Size | GEM-XSum (BLEU) mT5 | GEM-XSum (BLEU) ByT5 | TweetQA (BLEU-1) mT5 | TweetQA (BLEU-1) ByT5 | DROP (F1/EM) mT5 | DROP (F1/EM) ByT5 |
|---|---|---|---|---|---|---|
| Small | 6.9 | 9.1 | 54.4 | 65.7 | 40.0/38.4 | 66.6/65.1 |
| Base | 8.4 | 11.1 | 61.3 | 68.7 | 47.2/45.6 | 72.6/71.2 |
| Large | 10.1 | 11.5 | 67.9 | 70.0 | 58.7/57.3 | 74.4/73.0 |
| XL | 11.9 | 12.4 | 68.8 | 70.6 | 62.7/61.1 | 68.7/67.2 |
| XXL | 14.3 | 15.3 | 70.8 | 72.0 | 71.2/69.6 | 80.0/78.5 |

Table 3: mT5 vs. ByT5 on three English generation tasks, reporting the best score on the validation set.

4.3 Cross-lingual Benchmarks

Changes to vocabulary and tokenization are likely to affect different languages in different ways. To test the effects of moving to byte-level modeling on cross-lingual understanding, we compare parameter-matched ByT5 and mT5 models on tasks from the popular XTREME benchmark suite (Hu et al., 2020). Specifically, we evaluate on the same six tasks as Xue et al. (2021). These consist of two classification tasks: XNLI (Conneau et al., 2018) and PAWS-X (Yang et al., 2019), three extractive QA tasks: XQuAD (Artetxe et al., 2020), MLQA (Lewis et al., 2020) and TyDiQA (Clark et al., 2020), and one structured prediction task: WikiAnn NER (Pan et al., 2017).

Table 4 shows that ByT5 is quite competitive overall. In the most realistic in-language setting, where some gold training data is available in all languages, ByT5 surpasses the previous state-of-the-art mT5 on all tasks and model sizes. In the translate-train setting, ByT5 beats mT5 at smaller sizes, but the results are mixed at larger sizes. We report zero-shot results for completeness, but emphasize that this setting is less aligned with practical applications, as machine translation is widely available.[5]

We explore per-language breakdowns on two tasks to see how different languages are affected by the switch to byte-level processing. One might expect languages with rich inflectional morphology (e.g. Turkish) to benefit most from the move away from a fixed vocabulary. We were also curious to see if any patterns emerged regarding language family (e.g. Romance vs. Slavic), written script (e.g. Latin vs. non-Latin), character set size, or data availability (high vs. low resource).

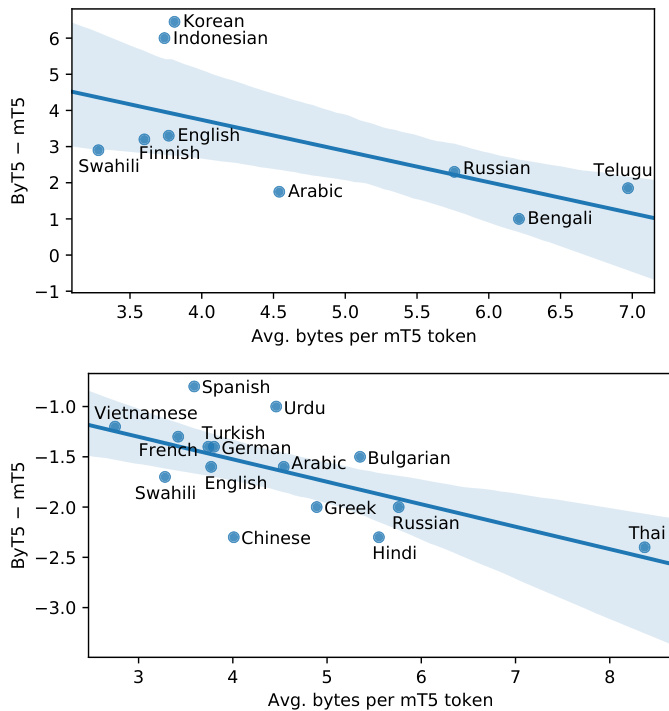

Figure 3: Per-language performance gaps between ByT5-Large and mT5-Large, as a function of each language’s “compression rate”. Top: TyDiQA-GoldP gap. Bottom: XNLI zero-shot gap.

Figure 3 shows the per-language gaps between ByT5-Large and mT5-Large on TyDiQA-GoldP and XNLI zero-shot. One notable trend is that the gap is fairly stable across languages. For example, ByT5 is better in each language on TyDiQA-GoldP, while mT5 is consistently better on XNLI. Comparing across languages, we observe that languages with a higher SentencePiece token compression rate (e.g. Thai and Telugu) tend to favor mT5, whereas those with a lower compression rate (e.g. Indonesian and Vietnamese) tend to favor ByT5. We did not observe any robust trends regarding morphological complexity, language family, script, character set size, or data availability.

4.4 Word-Level Tasks

Given its direct access to the "raw" text signal, we expect ByT5 to be well-suited to tasks that are sensitive to the spelling or pronunciation of text. In this section we test this hypothesis on three word-level benchmarks: (i) transliteration, (ii) grapheme-to-phoneme, and (iii) morphological inflection.

Table 4: ByT5 and mT5 performance on a subset of XTREME tasks. Our evaluation setup follows Xue et al. (2021). For QA tasks we report F1 / EM scores.

| Task | mT5 Small | ByT5 Small | mT5 Base | ByT5 Base | mT5 Large | ByT5 Large | mT5 XL | ByT5 XL | mT5 XXL | ByT5 XXL |
|---|---|---|---|---|---|---|---|---|---|---|
| *In-language multitask (models fine-tuned on gold data in all target languages)* | | | | | | | | | | |
| WikiAnn NER | 86.4 | 90.6 | 88.2 | 91.6 | 89.7 | 91.8 | 91.3 | 92.6 | 92.2 | 93.7 |
| TyDiQA-GoldP | 75.9/64.8 | 82.6/73.6 | 81.7/71.2 | 86.4/78.0 | 85.3/75.3 | 87.7/79.2 | 87.6/78.4 | 88.0/79.3 | 88.7/79.5 | 89.4/81.4 |
| *Translate-train (models fine-tuned on English data plus translations in all target languages)* | | | | | | | | | | |
| XNLI | 75.3 | 76.6 | 80.5 | 79.9 | 84.4 | 82.8 | 85.3 | 85.0 | 87.1 | 85.7 |
| PAWS-X | 87.7 | 88.6 | 90.5 | 89.8 | 91.3 | 90.6 | 91.0 | 90.5 | 91.5 | 91.7 |
| XQuAD | 71.3/55.7 | 74.0/59.9 | 77.6/62.2 | 78.5/64.6 | 81.3/66.5 | 81.4/67.4 | 82.7/68.1 | 83.7/69.5 | 85.2/71.3 | 84.1/70.2 |
| MLQA | 56.6/38.8 | 67.5/49.9 | 69.7/51.0 | 71.9/54.1 | 74.0/55.0 | 74.4/56.1 | 75.1/56.6 | 75.9/57.7 | 76.9/58.3 | 76.9/58.8 |
| TyDiQA-GoldP | 49.8/35.6 | 64.2/50.6 | 66.4/51.0 | 75.6/61.7 | 75.8/60.2 | 80.1/66.4 | 80.1/65.0 | 81.5/67.6 | 83.3/69.4 | 83.2/69.6 |
| *Cross-lingual zero-shot transfer (models fine-tuned on English data only)* | | | | | | | | | | |
| XNLI | 67.5 | 69.1 | 75.4 | 75.4 | 81.1 | 79.7 | 82.9 | 82.2 | 85.0 | 83.7 |
| PAWS-X | 82.4 | 84.0 | 86.4 | 86.3 | 88.9 | 87.4 | 89.6 | 88.6 | 90.0 | 90.1 |
| WikiAnn NER | 50.5 | 57.6 | 55.7 | 62.0 | 58.5 | 62.9 | 65.5 | 61.6 | 69.2 | 67.7 |

Table 5: mT5 vs. ByT5 on three word-level tasks. Dakshina metrics are reported on the development set to be comparable with Roark et al. (2020). SIGMORPHON metrics are reported on the test sets.

| Size | Dakshina transliteration CER (↓), mT5 | Dakshina transliteration CER (↓), ByT5 |
|---|---|---|
| Small | 20.7 | 9.8 |
| Base | 19.2 | 9.9 |
| Large | 18.1 | 10.5 |
| XL | 17.3 | 10.6 |
| XXL | 16.6 | 9.6 |

For transliteration, we use the Dakshina benchmark (Roark et al., 2020), which covers 12 South Asian languages that