Content Enhanced BERT-based Text-to-SQL Generation

1 Introduction

Semantic parsing is the task of translating natural language into logical forms. Mapping natural language to SQL (NL2SQL) is an important semantic parsing task for question answering systems. In recent years, deep learning and BERT-based models have shown significant improvement on this task. However, past methods did not encode the table content as input to the deep model. In industry applications, the tables at training time and at testing time are the same, so the table content can be encoded as external knowledge for the deep model.

To address the fact that the table content is not used by the model, we propose effective encoding methods that incorporate database design information into the model. Our key contributions are threefold:

1. We use the match information between all the table cells and the question string to mark the question, producing a feature vector of the same length as the question.
2. We use the match information between all the table column names and the question string to mark the columns, producing a feature vector of the same length as the table header.
3. We design the whole BERT-based model and take the two feature vectors above as external inputs.

Our experimental results outperform the baseline [3]. The code is available.

2 Related Work

WikiSQL [1] is a large semantic parsing dataset. It contains 80654 pairs of natural language questions and corresponding SQL queries. Examples from WikiSQL are shown in Fig. 1.

BERT [4] is a very deep transformer-based [5] model. It is first pre-trained on a very large corpus using the masked language model loss and the next-sentence loss. It can then be fine-tuned on a variety of specific tasks, such as text classification, text matching, and natural language inference, and it set new state-of-the-art performance on them.

3 External Feature Vector Encoding

In this section we describe our encoding methods, which are based on word matching between the table content and the question string and between the table header and the question string. The full algorithms are shown in Algorithm 1 and Algorithm 2. In Algorithm 1, the value 1 stands for the 'START' tag, the value 2 for the 'MIDDLE' tag, and the value 3 for the 'END' tag. In Algorithm 2, we consider that a column containing a matched cell should be marked. The final question mark vector is named $QV$ and the final table header mark vector is named $HV$. In industry applications, Algorithm 1 and Algorithm 2 can be adapted to encode external knowledge flexibly.
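The two mark vectors can be illustrated with a minimal sketch. It is not a reproduction of Algorithm 1 or Algorithm 2: the function names, the whitespace tokenization, the exact-match rule, the choice to tag a single-token match as START, and the longest-match-first policy for overlapping cells are all assumptions made for illustration.

```python
from typing import List

START, MIDDLE, END = 1, 2, 3  # tag values named in the text; 0 means "no match"


def find_span(tokens: List[str], phrase: List[str]) -> int:
    """Return the first index where `phrase` occurs in `tokens`, or -1."""
    m = len(phrase)
    if m == 0:
        return -1
    for i in range(len(tokens) - m + 1):
        if tokens[i:i + m] == phrase:
            return i
    return -1


def question_mark_vector(question: List[str], cells: List[str]) -> List[int]:
    """Mark question tokens that match a table cell (hypothetical QV sketch)."""
    qv = [0] * len(question)
    # Assumption: longer cells are matched first and never overwritten.
    phrases = sorted((c.lower().split() for c in cells), key=len, reverse=True)
    for phrase in phrases:
        i = find_span(question, phrase)
        if i < 0 or any(qv[i:i + len(phrase)]):
            continue
        qv[i] = START  # assumption: a one-token match is tagged START only
        for k in range(i + 1, i + len(phrase) - 1):
            qv[k] = MIDDLE
        if len(phrase) > 1:
            qv[i + len(phrase) - 1] = END
    return qv


def header_mark_vector(question: List[str], headers: List[str],
                       columns: List[List[str]]) -> List[int]:
    """Mark a column if its name or one of its cells matches the question."""
    hv = []
    for name, cells in zip(headers, columns):
        name_hit = find_span(question, name.lower().split()) >= 0
        cell_hit = any(find_span(question, c.lower().split()) >= 0 for c in cells)
        hv.append(1 if (name_hit or cell_hit) else 0)
    return hv


# Toy table, invented for this example only.
question = "what is the population of new york city".split()
headers = ["Name", "Population", "State"]
columns = [["New York City", "Boston"], ["8000000", "700000"], ["NY", "MA"]]
all_cells = [cell for column in columns for cell in column]

print(question_mark_vector(question, all_cells))       # [0, 0, 0, 0, 0, 1, 2, 3]
print(header_mark_vector(question, headers, columns))  # [1, 1, 0]
```

In this toy case, "Name" is marked because one of its cells appears in the question, and "Population" is marked because the column name itself appears. $QV$ has one entry per question token and $HV$ has one entry per column, matching the lengths stated in the contributions.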

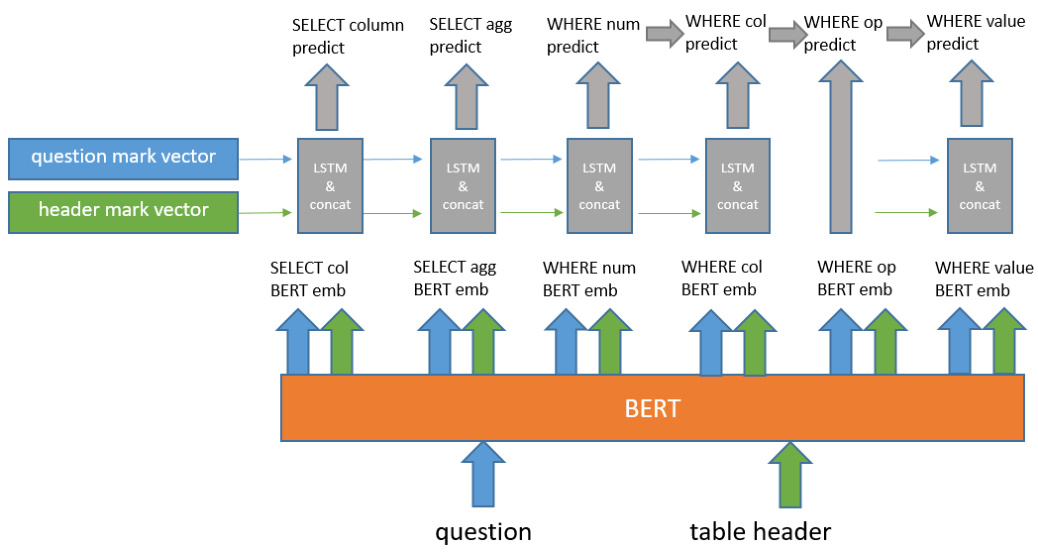

Fig. 2. The deep model

4 The Deep Neural Model

Based on the WikiSQL dataset, we use three sub-models to predict the SELECT part, the AGG part, and the WHERE part. The whole model is shown in Fig. 2.

We use BERT as the representation layer. The question and table header are concatenated and then fed to BERT, so that the question and the table header can attend to each other. We denote the BERT outputs for the question and the table header as $Q$ and $H$.

4.1 BERT embedding layer

Given the question tokens $w_{1},w_{2},...,w_{n}$ and the table header $h_{1},h_{2},...,h_{n}$, we follow the BERT convention and concatenate the question tokens and the table header to form the BERT input. The detailed encoding is:

$[CLS],w_{1},w_{2},...,w_{n},[SEP],h_{1},[SEP],h_{2},[SEP],...,h_{n},[SEP]$

The output embeddings of BERT are shared across all the downstream tasks. We believe the concatenated input to BERT can produce a kind of 'global' attention for the downstream tasks.
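The input layout of Section 4.1 can be sketched as a plain token-list construction. The function name `build_bert_input` is hypothetical, and the sketch assumes the question and each column name are already split into word pieces. Segment ids and the mapping to vocabulary ids are omitted because the text does not specify them.

```python
from typing import List


def build_bert_input(question_tokens: List[str],
                     headers: List[List[str]]) -> List[str]:
    """Concatenate question and column-name tokens in the [CLS]/[SEP] layout."""
    tokens = ["[CLS]"] + question_tokens + ["[SEP]"]
    for header_tokens in headers:
        # Each column name is followed by its own [SEP] separator.
        tokens += header_tokens + ["[SEP]"]
    return tokens


example = build_bert_input(["how", "many", "players"],
                           [["player"], ["team", "name"]])
print(example)
# ['[CLS]', 'how', 'many', 'players', '[SEP]', 'player', '[SEP]', 'team', 'name', '[SEP]']
```

Because every column name ends with its own `[SEP]`, the downstream sub-models can recover per-column representations from the shared BERT output.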

4.2 SELECT column

Our goal is to predict the column name in the table header. The inputs are the question $Q$ and the table header $H$. The output is the probability of the SELECT column:

$$
P(sc \mid Q, H, QV, HV)
$$

where $QV$ and $HV$ are the external feature vectors described above.

4.3 SELECT agg

Our goal is to predict the agg slot. The inputs are $Q$ with $QV$, and the output is the probability of the SELECT agg:

$$
P(sa \mid Q, QV)
$$

4.4 WHERE number

Our goal is to predict the where number slot. The inputs are $Q$ and $H$ with $QV$ and $HV$. The output is the probability of the WHERE number:

$$
P(wn \mid Q, H, QV, HV)
$$

4.5 WHERE column

Our goal is to predict the where column slot for each condition of the WHERE clause. The inputs are $Q$, $H$ and $P_{wn}$ with $QV$ and $HV$. The output is the probability of each WHERE column, from which the top-scoring columns are kept, as many as the predicted where number:

$$
P(wc \mid Q, H, P_{wn}, QV, HV)
$$
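The way the where number constrains the where columns can be sketched as a top-k selection. This is an illustrative reading of the text, not the authors' decoding code: the function name `select_where_columns` and the choice to return indices in table order are assumptions.

```python
from typing import List


def select_where_columns(column_probs: List[float], where_number: int) -> List[int]:
    """Return the indices of the `where_number` most probable columns."""
    # Clamp k so an out-of-range prediction cannot break the selection.
    k = max(0, min(where_number, len(column_probs)))
    ranked = sorted(range(len(column_probs)),
                    key=lambda j: column_probs[j], reverse=True)
    return sorted(ranked[:k])  # assumption: report columns in table order


# Invented probabilities for a four-column table and two predicted conditions.
print(select_where_columns([0.10, 0.70, 0.05, 0.60], 2))  # [1, 3]
```

Predicting the number of conditions first and then taking that many columns means no probability threshold is needed to decide how many conditions to emit.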

4.6 WHERE op

Our goal is to predict the where op slot for each condition of the WHERE clause. The inputs are $Q$, $H$, $P_{wc}$ and $P_{wn}$. The output is the probability of the WHERE op slot:

$$
P(wo \mid Q, H, P_{wn}, P_{wc})
$$

4.7 WHERE value

Our goal is to predict the where value slot for each condition of the WHERE clause. The inputs are $Q$, $H$, $P_{wn}$, $P_{wc}$ and $P_{wo}$ with $QV$ and $HV$. The output is the probability of the WHERE value slot:

$$
P(wv \mid Q, H, P_{wn}, P_{wc}, P_{wo}, QV, HV)
$$

5 Experiments

In this section we describe the details of the experiment parameters and show the experimental results.

5.1 Experiment result

In this section, we evaluate our methods against other approaches on the WikiSQL dataset. See Table 1 and Table 2 for details. The SQLova [3] results use the BERT-Base-Uncased pretrained model and were run on our machine without execution-guided decoding (EG) [6].

Table 1. Overall result on the WikiSQL task

Table 2. Break down result on the WikiSQL dataset.

6 Ablation study

The detailed results are shown in Table 3. The header vector mainly improves the result of WHERE OP, and the question vector mainly improves the result of WHERE VALUE.

Table 3. The results of ablation study

7 Conclusion

Based on the observation that the table data is almost the same at training time and testing time, and to address the lack of table content in the input of the deep model, we propose a simple encoding method that leverages the table content as an external feature for the BERT-based deep model. We demonstrate its good performance on the WikiSQL task and achieve state-of-the-art results on the dataset.