RegNet: Self-Regulated Network for Image Classification

Jing Xu, Yu Pan, Xinglin Pan, Steven Hoi, Fellow, IEEE, Zhang Yi, Fellow, IEEE, and Zenglin $\mathrm{Xu^{*}}$

Abstract—ResNet and its variants have achieved remarkable success in various computer vision tasks. Despite its success in letting gradients flow through building blocks, the simple shortcut connection mechanism limits the ability to re-explore new, potentially complementary features because of its additive function. To address this issue, in this paper we propose to introduce a regulator module as a memory mechanism to extract complementary features, which are further fed to the ResNet. In particular, the regulator module is composed of convolutional RNNs (e.g., Convolutional LSTMs or Convolutional GRUs), which have been shown to be good at extracting spatio-temporal information. We name the new regulated networks RegNet. The regulator module can be easily implemented and appended to any ResNet architecture. We also apply the regulator module to improve the Squeeze-and-Excitation ResNet, demonstrating the generalization ability of our method. Experimental results on three image classification datasets demonstrate the promising performance of the proposed architecture compared with the standard ResNet, SE-ResNet, and other state-of-the-art architectures.

Index Terms—Residual Networks, Convolutional Recurrent Neural Networks, Convolutional Neural Networks

I. INTRODUCTION

Convolutional neural networks (CNNs) have achieved numerous breakthroughs in a wide range of computer vision tasks [1]. Since AlexNet [2] won the ImageNet competition in 2012, various new architectures have been proposed, including VGGNet [3], GoogLeNet [4], ResNet [5], DenseNet [6], and, more recently, NASNet [7].

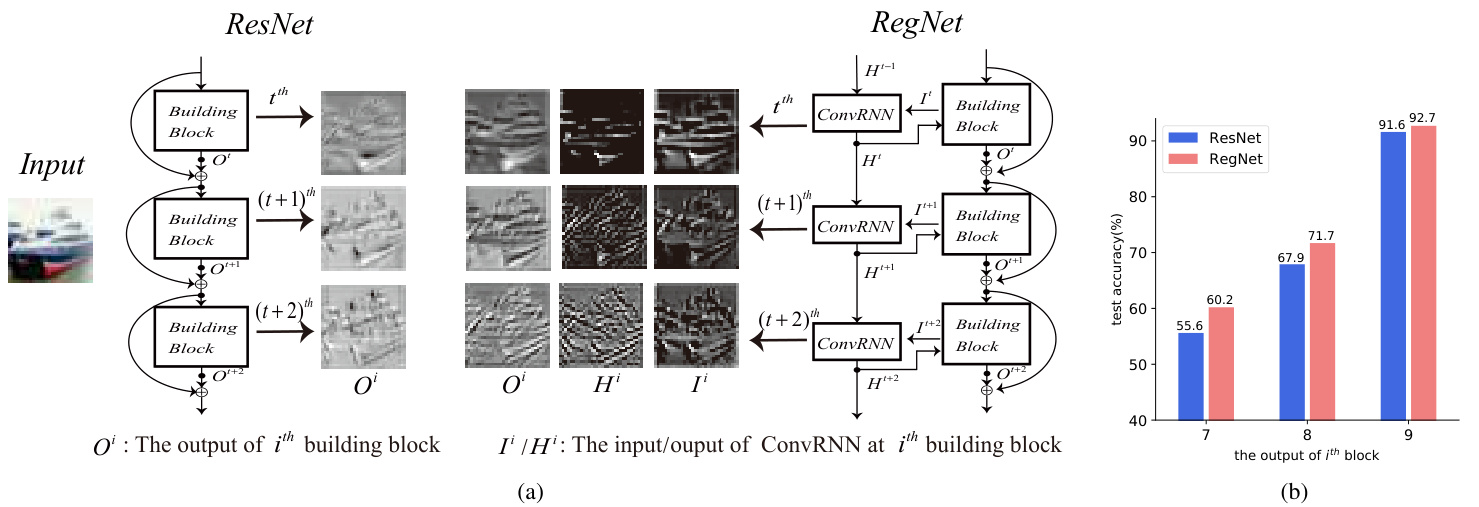

Among these deep architectures, ResNet and its variants [8]–[11] have attracted significant attention for their outstanding performance in both low-level and high-level vision tasks. The remarkable success of ResNets is mainly due to the shortcut connection mechanism, which makes training deeper networks possible: gradients can flow directly through building blocks, so the vanishing-gradient problem is avoided to some extent. However, the shortcut connection mechanism makes each block focus on learning its own residual output, so information exchange between blocks is largely ignored and reusable information learned by earlier blocks tends to be forgotten by later ones. To illustrate this point, we visualize the output (residual) feature maps learned by consecutive blocks of ResNet in Fig. 1(a). Because of the summation operation between blocks, the adjacent outputs $O^{t}$, $O^{t+1}$, and $O^{t+2}$ look very similar to each other, which indicates that little new information is learned across consecutive blocks.

A potential solution to the above problems is to capture the spatio-temporal dependency between building blocks while limiting the growth in the number of parameters. To this end, we introduce a new regulator mechanism, parallel to the shortcuts in ResNets, that controls the memory information passed to the next building block. Specifically, we adopt convolutional RNNs (“ConvRNNs”) [12] as the regulator to encode the spatio-temporal memory. We name the new architecture RNN-Regulated Residual Networks, or “RegNet” for short. As shown in Fig. 1(a), at the $i$-th building block, a recurrent unit of the ConvRNN takes the feature from the current building block as its input (denoted by $I^{i}$) and encodes both this input and the sequential information from previous blocks into a hidden state (denoted by $H^{i}$). The hidden state is concatenated with the input for reuse in the next convolution operation (yielding the output feature $O^{i}$), and is also passed on to the next recurrent unit. To better understand the role of the regulator, we visualize the feature maps in Fig. 1(a). The $H^{i}$ generated by the ConvRNN complements the input features $I^{i}$. After convolution over the concatenated features of $H^{i}$ and $I^{i}$, the proposed model obtains more meaningful output features $O^{i}$, with richer edge information, than ResNet does. To quantitatively evaluate the information contained in the feature maps, we test their classification ability on test data (by attaching an average pooling layer and the last fully connected layer to the outputs $O^{i}$ of the last three blocks). As shown in Fig. 1(b), the new architecture achieves higher prediction accuracy, which indicates the effectiveness of the ConvRNN-based regulator.
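The quantitative probe behind Fig. 1(b) can be sketched as follows. This is a minimal NumPy illustration, not the authors' code. It assumes a single feature map $O^{i}$ of shape (C, H, W). The names `probe_logits`, `probe_accuracy`, `fc_w`, and `fc_b` are hypothetical; they stand in for the average pooling layer and the fully connected layer.

```python
import numpy as np

def probe_logits(o, fc_w, fc_b):
    """Classify one feature map O^i of shape (C, H, W).

    Global average pooling reduces each channel to a scalar; a fully
    connected layer (hypothetical fc_w: (num_classes, C), fc_b: (num_classes,))
    then maps the pooled vector to class logits.
    """
    pooled = o.mean(axis=(1, 2))   # (C,)
    return fc_w @ pooled + fc_b    # (num_classes,)

def probe_accuracy(feature_maps, labels, fc_w, fc_b):
    """Fraction of samples whose highest logit matches the label."""
    preds = [int(np.argmax(probe_logits(o, fc_w, fc_b))) for o in feature_maps]
    return float(np.mean(np.array(preds) == np.array(labels)))
```

Applying the same probe to the outputs of several consecutive blocks gives one accuracy per block. Fig. 1(b) compares such accuracies for ResNet-20 and RegNet-20.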

Thanks to the parallel structure of the regulator module, the RNN-based regulator is easy to implement and can be applied to other ResNet-based structures, such as SE-ResNet [11], Wide ResNet [8], Inception-ResNet [9], ResNeXt [10], Dual Path Network (DPN) [13], and so on. Without loss of generality, as another instance demonstrating the effectiveness of the proposed regulator, we also apply the ConvRNN module to improve the Squeeze-and-Excitation ResNet (abbreviated as “SE-RegNet”).

For evaluation, we apply our model to image classification on three highly competitive benchmark datasets: CIFAR-10, CIFAR-100, and ImageNet. Compared with ResNet and SE-ResNet, the proposed architecture significantly improves classification accuracy on all of these datasets. We further show that the regulator can reduce the depth a ResNet needs to reach the same level of accuracy.

Fig. 1. (a): Visualization of feature maps in ResNet [5] and RegNet. We visualize the output feature maps $O^{i}$ of the $i$-th building blocks, $i\in\{t,t+1,t+2\}$. In RegNets, $I^{i}$ denotes the input feature maps and $H^{i}$ denotes the hidden states generated by the ConvRNN at step $i$. By applying convolution operations over the concatenation of $I^{i}$ and $H^{i}$, we obtain the regulated outputs (denoted by $O^{i}$) of the $i$-th building block. (b): Predictions on test data based on the output feature maps of consecutive building blocks. At test time, we add an average pooling layer and the last fully connected layer to the outputs of the last three building blocks $(i\in\{7,8,9\})$ in ResNet-20 and RegNet-20 to obtain the classification results. The output of each block, aided by the memory information, yields higher classification accuracy.

II. RELATED WORK

Deep neural networks have achieved empirical breakthroughs in machine learning. However, training sufficiently deep networks is a notoriously tricky problem. Shortcut connections have been proposed to ease this optimization difficulty to some extent [5], [14]: through a shortcut, information can flow across layers without attenuation. A pioneering work is the Highway Network [14], which implements shortcut connections with a gating mechanism. ResNet [5], in turn, explicitly lets building blocks fit a residual mapping, which is assumed to be easier to optimize.

Owing to the strong capability of ResNets on vision tasks, a number of variants have been proposed, including WRN [8], Inception-ResNet [9], ResNeXt [10], WResNet [15], and so on. ResNet and ResNet-based models have achieved impressive, record-breaking performance on many challenging tasks. In object detection, 50- and 101-layer ResNets are commonly used as backbone feature extractors in models such as Faster R-CNN [16], RetinaNet [17], and Mask R-CNN [18]. Recent models for image super-resolution, such as SRResNet [19], EDSR, and MDSR [20], are all based on ResNets with minor modifications. Meanwhile, in [21], a ResNet is used to remove rain streaks and achieves state-of-the-art performance.

Despite their success in many applications, ResNets still suffer from the depth issue [22]. DenseNet [6] concatenates the input features with the output features through densely connected paths in order to encourage the network to reuse the feature maps of all previous layers. However, not all feature maps need to be reused in later layers, so densely connected networks also introduce some redundancy and extra computational cost. More recently, the Dual Path Network [13] and the Mixed Link Network [23] offer trade-offs between ResNets and DenseNets. In addition, several module-based architectures have been proposed to improve the performance of the original ResNet. SENet [11] proposes a lightweight module that computes channel-wise attention over intermediate feature maps. CBAM [24] and BAM [25] design modules that infer attention maps along both the channel and spatial dimensions. Despite their success, these modules regulate the intermediate feature maps using attention information learned from those same intermediate features, so fully exploiting the historical spatio-temporal information of previous features remains an open problem.

On the other hand, convolutional RNNs (abbreviated as ConvRNNs), such as ConvLSTM [12] and ConvGRU [26], have been used to capture spatio-temporal information in a number of applications, such as rain removal [27], video super-resolution [28], video compression [29], and video object detection and segmentation [30], [31]. Most of these works embed ConvRNNs into models to capture dependencies across a sequence of images. To regulate the information flow of ResNet, we propose to leverage ConvRNNs as a separate module that extracts spatio-temporal information complementary to the original feature maps of ResNets.

III. OUR MODEL

In this section, we first revisit the background of ResNets and two advanced ConvRNNs, ConvLSTM and ConvGRU. Then we present the proposed RegNet architectures.

A. ResNet

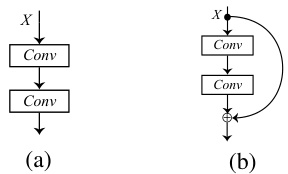

The degradation problem, which makes traditional networks hard to converge, emerges as the architecture goes deeper. ResNet [5] mitigates this problem to some extent. Its basic unit is the building block shown in Fig. 2(b), which fits a residual mapping instead of directly fitting the original underlying mapping shown in Fig. 2(a). Deep residual networks built by stacking such blocks have achieved excellent performance in image classification, which demonstrates the effectiveness of the residual mapping.

Fig. 2. (a) The original underlying mapping; (b) the residual mapping in ResNet [5].
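The residual mapping $y = \mathrm{ReLU}(x + \mathcal{F}(x))$ can be made concrete with a minimal NumPy sketch. It simplifies the block under stated assumptions. $\mathcal{F}$ uses two 1×1 convolutions (channel-mixing matrices) instead of the 3×3 convolutions of [5], batch normalization is omitted, and `residual_block`, `w1`, and `w2` are hypothetical names.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """y = ReLU(x + F(x)) with F(x) = W2 ReLU(W1 x).

    x: (C, H, W) feature map; w1, w2: (C, C) weights of 1x1 convolutions,
    applied at every spatial position via einsum.
    """
    f = np.einsum('oc,chw->ohw', w1, x)
    f = np.einsum('oc,chw->ohw', w2, relu(f))
    return relu(x + f)  # shortcut: the input is added back to the residual
```

With zero weights, $\mathcal{F}(x)=0$, and the block returns its non-negative input (for example, the output of a previous ReLU) unchanged. This shows that an identity-like mapping is trivial to express as a residual.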

B. ConvRNN and its Variants

RNNs and their classical variants, LSTM and GRU, have achieved great success in sequence processing. To tackle spatio-temporal problems, we adopt the basic ConvRNN and its variants ConvLSTM and ConvGRU, which are obtained from vanilla RNNs by replacing their fully connected operators with convolutional operators. Furthermore, to reduce the computational overhead, we carefully design the convolution operation in the ConvRNNs. In our implementation, the ConvRNN can be formulated as

where $X^{t}$ is the input 3D feature map, $H^{t-1}$ is the hidden state obtained from the previous output of the ConvRNN, and $H^{t}$ is the output 3D feature map at the current step. The input $X^{t}$ and the output $H^{t}$ of the ConvRNN both have $N$ channels.
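The ConvRNN equation is not reproduced in this section, so the sketch below assumes a common form: $H^{t} = \tanh({}^{2N}\mathbf{W}^{N} * \mathrm{Concat}[X^{t}, H^{t-1}] + \mathbf{b})$. It uses a plain 3×3 convolution rather than the lightweight two-step version. The tanh activation, the function names `conv2d_same` and `conv_rnn_step`, and the shapes are illustrative assumptions.

```python
import numpy as np

def conv2d_same(x, w, b):
    """3x3 'same' convolution (cross-correlation, as in deep-learning libraries).

    x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,) -> (C_out, H, W)
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))  # zero padding keeps H x W
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # accumulate the contribution of kernel tap (i, j)
            out += np.einsum('oc,chw->ohw', w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]

def conv_rnn_step(x_t, h_prev, w, b):
    """One assumed ConvRNN step: X^t and H^{t-1} each have N channels, and
    the 2N-channel concatenation is mapped back to N channels."""
    z = np.concatenate([x_t, h_prev], axis=0)  # (2N, H, W)
    return np.tanh(conv2d_same(z, w, b))        # H^t, shape (N, H, W)

# Unroll over T steps, carrying the hidden state from block to block.
rng = np.random.default_rng(0)
n, t_steps = 4, 3
w = 0.1 * rng.standard_normal((n, 2 * n, 3, 3))
b = np.zeros(n)
h = np.zeros((n, 8, 8))                      # H^0
for t in range(t_steps):
    x_t = rng.standard_normal((n, 8, 8))     # stands in for a block's feature map
    h = conv_rnn_step(x_t, h, w, b)          # H^t carries memory forward
```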

Additionally, ${}^{2N}\mathbf{W}^{N} * \mathbf{X}$ denotes a convolution between weights $\mathbf{W}$ and input $\mathbf{X}$ with $2N$ input channels and $N$ output channels. To make the ConvRNN more efficient, inspired by [30], [32], given an input $\mathbf{X}$ with $2N$ channels, we conduct the convolution in two steps:

Directly applying the original convolutions with $3{\times}3$ kernels incurs high computational complexity. As detailed in Table I, the new modification reduces the required computation by a factor of $18N/11$ with comparable accuracy. Similarly, all convolutions in ConvGRU and ConvLSTM are replaced with this lightweight modification.
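The $18N/11$ factor can be checked with simple arithmetic. The two-step equations are not reproduced here, so the sketch assumes one decomposition that matches the stated factor. Step one is a 1×1 group convolution with $N$ groups, each mixing 2 input channels into 1 output channel ($2N$ weights). Step two is a 3×3 depthwise convolution ($9N$ weights). Together they cost $11N$ multiply-accumulates per output pixel, against $18N^2$ for a dense 3×3 convolution from $2N$ to $N$ channels. This decomposition and the function names are assumptions, not the paper's equations.

```python
def dense_3x3_cost(n):
    """Weights (= multiply-accumulates per output pixel) of a dense 3x3
    convolution from 2N input channels to N output channels, bias ignored."""
    return 3 * 3 * (2 * n) * n  # 18 N^2

def two_step_cost(n):
    """Hypothetical two-step replacement (an assumption, not the paper's
    equations): 1x1 group conv with N groups (2 inputs -> 1 output each,
    2N weights) followed by a 3x3 depthwise conv (9N weights)."""
    group_1x1 = 2 * n
    depthwise_3x3 = 9 * n
    return group_1x1 + depthwise_3x3  # 11 N

def reduction_factor(n):
    return dense_3x3_cost(n) / two_step_cost(n)  # 18N / 11

# Stage widths of the CIFAR RegNet (Table II); a ConvGRU is assumed to use
# three such convolutions (update gate, reset gate, candidate state).
widths = (16, 32, 64)
dense_total = sum(3 * dense_3x3_cost(n) for n in widths)
light_total = sum(3 * two_step_cost(n) for n in widths)
```

Under these assumptions, the dense kernels total 290,304 weights against 3,696 for the two-step version. The difference of about 287K is close to the gap between the +330K and +44K rows of Table I.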

C. RNN-Regulated ResNet

To handle the CIFAR-10/100 datasets and the ImageNet dataset, [5] proposed two kinds of ResNet building blocks: the non-bottleneck building block and the bottleneck building block. By applying ConvRNNs as regulators to these, we obtain the RNN-Regulated ResNet building module and the bottleneck RNN-Regulated ResNet building module, respectively.

TABLE I PERFORMANCE OF REGNET-20 WITH CONVGRU AS REGULATORS ON CIFAR-10. WE COMPARE THE TEST ERROR RATES BETWEEN TRADITIONAL $3{\times}3$ KERNELS AND OUR NEW MODIFICATION.

| Kernel type | Error rate (%) | Params | FLOPs |
|---|---|---|---|
| 3×3 | 7.35 | +330K | +346M |
| Ours | 7.42 | +44K | +15M |

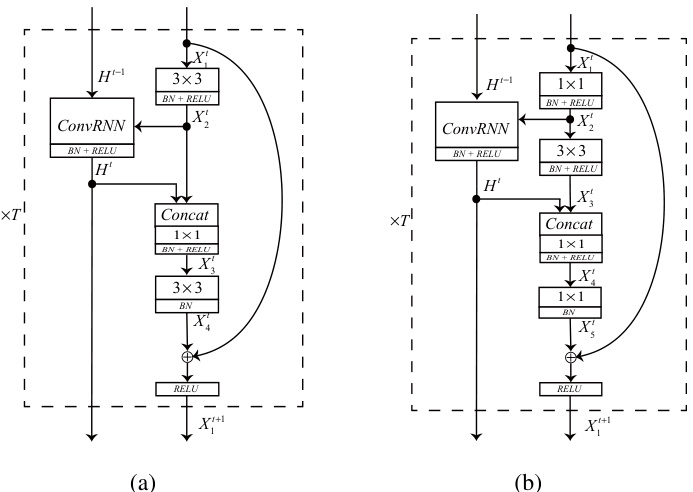

Fig. 3. (a) The RegNet module. (b) The bottleneck RegNet module. $T$ denotes the number of building blocks, which is also the total number of time steps of the ConvRNN.

- RNN-Regulated ResNet Module (RegNet module): The RegNet module is illustrated in Fig. 3(a). Here, we use ConvLSTM as the example. $H^{t-1}$ denotes the previous output of the ConvLSTM, and $H^{t}$ is the output of the ConvLSTM in the $t$-th module. $X_{i}^{t}$ denotes the $i$-th feature map in the $t$-th module.

The $t$-th RegNet (ConvLSTM) module can be expressed as

where $\mathbf{W}_{ij}^{t}$ denotes the convolutional kernel that maps feature map $\mathbf{X}_{i}^{t}$ to $\mathbf{X}_{j}^{t}$, and $\mathbf{b}_{ij}^{t}$ denotes the corresponding bias. Both $\mathbf{W}_{12}^{t}$ and $\mathbf{W}_{34}^{t}$ are $3\times3$ convolutional kernels, and $\mathbf{W}_{23}^{t}$ is a $1\times1$ kernel. $\mathrm{BN}(\cdot)$ denotes batch normalization, and $\mathrm{Concat}[\cdot]$ denotes the concatenation operation.

Notice that in Eq. (2) the input feature $\mathbf{X}_{2}^{t}$ and the previous output of the ConvLSTM, $\mathbf{H}^{t-1}$, are the inputs of the ConvLSTM in the $t$-th module. Based on these inputs, the ConvLSTM automatically decides whether the information in its memory cell is propagated to the output hidden feature map $\mathbf{H}^{t}$.
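Eq. (2) is not reproduced here, so the following NumPy sketch reconstructs one plausible data flow for the $t$-th RegNet (ConvLSTM) module from the kernel descriptions above:

- $\mathbf{W}_{12}^{t}$ (3×3) produces $\mathbf{X}_{2}^{t}$.
- The ConvLSTM consumes $\mathbf{X}_{2}^{t}$ and $\mathbf{H}^{t-1}$.
- $\mathbf{W}_{23}^{t}$ (1×1) fuses $\mathrm{Concat}[\mathbf{X}_{2}^{t}, \mathbf{H}^{t}]$.
- $\mathbf{W}_{34}^{t}$ (3×3) precedes the shortcut addition.

Everything else is an assumption. Batch normalization is omitted, the ConvLSTM is a peephole-free variant with a dense 3×3 gate convolution, and all function and parameter names are hypothetical.

```python
import numpy as np

def conv2d(x, w, b):
    """'Same' convolution for odd kernels. x: (C_in,H,W), w: (C_out,C_in,k,k)."""
    k = w.shape[-1]
    p = k // 2
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(k):
        for j in range(k):
            out += np.einsum('oc,chw->ohw', w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def relu(x):
    return np.maximum(x, 0.0)

def conv_lstm_step(x, h_prev, c_prev, w, b):
    """Peephole-free ConvLSTM step; one conv yields all four gates (4N channels)."""
    gates = conv2d(np.concatenate([x, h_prev], axis=0), w, b)
    i, f, o, g = np.split(gates, 4, axis=0)
    c = sigmoid(f) * c_prev + sigmoid(i) * np.tanh(g)  # memory cell update
    h = sigmoid(o) * np.tanh(c)                         # hidden state H^t
    return h, c

def regnet_module(x1, h_prev, c_prev, p):
    """Hypothetical t-th RegNet(ConvLSTM) module (batch norm omitted)."""
    x2 = relu(conv2d(x1, p['w12'], p['b12']))                        # 3x3
    h, c = conv_lstm_step(x2, h_prev, c_prev, p['w_lstm'], p['b_lstm'])
    x3 = relu(conv2d(np.concatenate([x2, h], axis=0), p['w23'], p['b23']))  # 1x1 fusion
    x4 = conv2d(x3, p['w34'], p['b34'])                               # 3x3
    return relu(x1 + x4), h, c  # shortcut output, plus state for module t+1

rng = np.random.default_rng(0)
n, hw = 4, 8

def rand(*shape):
    return 0.1 * rng.standard_normal(shape)

p = {'w12': rand(n, n, 3, 3), 'b12': np.zeros(n),
     'w_lstm': rand(4 * n, 2 * n, 3, 3), 'b_lstm': np.zeros(4 * n),
     'w23': rand(n, 2 * n, 1, 1), 'b23': np.zeros(n),
     'w34': rand(n, n, 3, 3), 'b34': np.zeros(n)}
x, h, c = rand(n, hw, hw), np.zeros((n, hw, hw)), np.zeros((n, hw, hw))
for _ in range(3):  # T = 3 modules; p is reused only to keep the demo short
    x, h, c = regnet_module(x, h, c, p)
```

In the architecture, each building block has its own $\mathbf{W}_{ij}^{t}$. The pair (hidden state, memory cell) is what carries information from one module to the next.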

- Bottleneck RNN-Regulated ResNet Module (bottleneck RegNet module): The bottleneck RegNet module, based on the bottleneck ResNet building block, is shown in Fig. 3(b). The bottleneck building block was introduced in [5] for handling large images. Building on it, the $t$-th bottleneck RegNet module can be expressed as

TABLE II ARCHITECTURES FOR CIFAR-10/100 DATASETS. BY SETTING $n\in\{3,5,9\}$, WE OBTAIN THE 20-, 32-, AND 56-LAYER REGNETS.

| name | output size | (6n+2)-layer RegNet |
|---|---|---|
| conv_0 | 32×32 | 3×3, 16 |
| conv_1 | 32×32 | ConvRNN1 + [3×3, 16; 3×3, 16] × n |
| conv_2 | 16×16 | ConvRNN2 + [3×3, 32; 3×3, 32] × n |
| conv_3 | 8×8 | ConvRNN3 + [3×3, 64; 3×3, 64] × n |
| | 1×1 | AP, FC, softmax |
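The depth formula of Table II can be checked with a small helper. This sketch is illustrative; the function name and dictionary keys are hypothetical. It assumes the usual ResNet counting convention: the first convolution, two 3×3 convolutions per block, and the final FC layer. ConvRNNs and 1×1 fusion kernels are not counted toward the nominal depth. It also assumes one ConvRNN per stage, unrolled over that stage's $n$ blocks, following Fig. 3's definition of $T$.

```python
def regnet_cifar_config(n):
    """Nominal depth and stage layout of the (6n+2)-layer CIFAR RegNet."""
    stages = [(16, 32), (32, 16), (64, 8)]  # (channels, output size) from Table II
    depth = 1 + 3 * 2 * n + 1               # conv_0 + two 3x3 convs per block + FC
    layout = [{'channels': ch, 'size': s, 'blocks': n, 'convrnn_steps': n}
              for ch, s in stages]
    return depth, layout
```

For example, $n = 3, 5, 9$ give 20, 32, and 56 layers, while $n = 7$ would give 44.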

TABLE III CLASSIFICATION ERROR RATES ON THE CIFAR-10/100. BEST RESULTS ARE MARKED IN BOLD.

| Model | C10 (%) | C100 (%) |
|---|---|---|
| ResNet-20 [5] | 8.38 | 31.72 |
| RegNet-20 (ConvRNN) | 7.60 | 30.03 |
| RegNet-20 (ConvGRU) | 7.42 | 29.69 |
| RegNet-20 (ConvLSTM) | 7.28 | 29.81 |
| SE-ResNet-20 | 8.02 | 31.14 |
| SE-RegNet-20 (ConvRNN) | 7.55 | 29.63 |
| SE-RegNet-20 (ConvGRU) | 7.25 | 29.08 |
| SE-RegNet-20 (ConvLSTM) | **6.98** | **29.02** |

where $\mathbf{W}_{12}^{t}$ and $\mathbf{W}_{45}^{t}$ are the two $1\times1$ kernels, and $\mathbf{W}_{23}^{t}$ is the $3\times3$ bottleneck kernel. $\mathbf{W}_{34}^{t}$ is a $1\times1$ kernel used for fusing features in our model.
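The kernel descriptions above fix the shape of each kernel but not the full equation, so the channel flow of the bottleneck RegNet module can be traced with a small helper. This sketch rests on assumptions:

- It follows the ResNet bottleneck layout of [5], where the block's input and output have $4n$ channels.
- The ConvRNN hidden state has $n$ channels.
- The hidden state is concatenated with $\mathbf{X}_{3}^{t}$ (the output of the 3×3 kernel) before the 1×1 fusion kernel $\mathbf{W}_{34}^{t}$.

The function name is hypothetical.

```python
def bottleneck_regnet_kernels(n, expansion=4):
    """Channel flow and weight counts of a hypothetical bottleneck RegNet module.

    Returns (name, kernel_size, in_channels, out_channels, weights) tuples,
    biases and the ConvRNN's own weights excluded.
    """
    wide = expansion * n
    layers = [
        ('W12', 1, wide, n),   # 1x1 reduce
        ('W23', 3, n, n),      # 3x3 bottleneck kernel; its output feeds the ConvRNN
        ('W34', 1, 2 * n, n),  # 1x1 fusion of Concat[X_3, H^t]
        ('W45', 1, n, wide),   # 1x1 expand back to the shortcut width
    ]
    return [(name, k, cin, cout, k * k * cin * cout) for name, k, cin, cout in layers]
```

For $n = 64$ the four kernels hold 77,824 weights ($19n^2$). A plain bottleneck block without the fusion kernel has $17n^2$.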

IV. EXPERIMENTS

In this section, we evaluate the effectiveness of the proposed ConvRNN regulator on three benchmark datasets: CIFAR-10, CIFAR-100, and ImageNet. We run the