SIMTEG: A FRUSTRATINGLY SIMPLE APPROACH IMPROVES TEXTUAL GRAPH LEARNING

ABSTRACT

Textual graphs (TGs) are graphs whose nodes correspond to text (sentences or documents), and they are widely prevalent. The representation learning of TGs involves two stages: (i) unsupervised feature extraction and (ii) supervised graph representation learning. In recent years, extensive efforts have been devoted to the latter stage, where Graph Neural Networks (GNNs) have dominated. However, the former stage for most existing graph benchmarks still relies on traditional feature engineering techniques. More recently, with the rapid development of language models (LMs), researchers have focused on leveraging LMs to facilitate the learning of TGs, either by jointly training them in a computationally intensive framework (merging the two stages), or by designing complex self-supervised training tasks for feature extraction (enhancing the first stage). In this work, we present SimTeG, a frustratingly Simple approach for Textual Graph learning that does not innovate in frameworks, models, or tasks. Instead, we first perform supervised parameter-efficient fine-tuning (PEFT) on a pre-trained LM on the downstream task, such as node classification. We then generate node embeddings using the last hidden states of the finetuned LM. These derived features can be further utilized by any GNN for training on the same task. We evaluate our approach on two fundamental graph representation learning tasks: node classification and link prediction. Through extensive experiments, we show that our approach significantly improves the performance of various GNNs on multiple graph benchmarks. Remarkably, when additional supporting text provided by large language models (LLMs) is included, a simple two-layer GraphSAGE trained on an ensemble of SimTeG achieves an accuracy of 77.48% on OGBN-Arxiv, comparable to state-of-the-art (SOTA) performance obtained from far more complicated GNN architectures. Furthermore, when combined with a SOTA GNN, we achieve a new SOTA of 78.04% on OGBN-Arxiv. Our code is publicly available at https://github.com/vermouthdky/SimTeG and the generated node features for all graph benchmarks can be accessed at https://huggingface.co/datasets/vermouthdky/SimTeG.

1 INTRODUCTION

Textual Graphs (TGs) offer a graph-based representation of text data where relationships between phrases, sentences, or documents are depicted through edges. TGs are ubiquitous in real-world applications, including citation graphs (Hu et al., 2020; Yang et al., 2016), knowledge graphs (Wang et al., 2021), and social networks (Zeng et al., 2019; Hamilton et al., 2017), provided that each entity can be represented as text. Different from traditional NLP tasks, instances in TGs are correlated with each other, which provides non-trivial and specific information for downstream tasks. In general, graph benchmarks are usually task-specific (Hu et al., 2020), and most TGs are designed for two fundamental tasks: node classification and link prediction. For the first one, we aim to predict the category of unlabeled nodes while for the second one, our goal is to predict missing links among nodes. For both tasks, text attributes offer critical information.

In recent years, TG representation learning has followed a two-stage paradigm: (i) upstream: unsupervised feature extraction that encodes text into numeric embeddings, and (ii) downstream: supervised graph representation learning that further transforms the embeddings utilizing the graph structure. While Graph Neural Networks (GNNs) have dominated the latter stage, with an extensive body of academic research published, the former stage surprisingly still relies on traditional feature engineering techniques. For example, in most existing graph benchmarks (Hu et al., 2020; Yang et al., 2016; Zeng et al., 2019), node features are constructed using bag-of-words (BoW) or skip-gram (Mikolov et al., 2013). This intuitively limits the performance of downstream GNNs, as such features fail to fully capture textual semantics, and it has fostered an increasing number of GNN models with ever more complex structures. More recently, researchers have begun to leverage the power of language models (LMs) for TG representation learning. Their efforts involve (i) designing complex tasks for LMs to generate powerful node representations (Chien et al., 2021); (ii) jointly training LMs and GNNs in a specific framework (Zhao et al., 2022; Mavromatis et al., 2023); or (iii) fusing the architectures of LMs and GNNs for end-to-end training (Yang et al., 2021). These works focus on novel training tasks, model architectures, or training frameworks, which generally require substantially modifying the training procedure. However, we argue that such complexity is not actually needed. In response, we present a frustratingly simple yet highly effective method that does not innovate in any of the above aspects but significantly improves the performance of GNNs on TGs.

We are curious about several research questions: (i) How much could language models' features generally improve the learning of GNNs: is the improvement specific to certain GNNs? (ii) What kind of language model best fits the needs of textual graph representation learning? (iii) How important are text attributes on various graph tasks: though previous efforts have shown improvement in node classification, are they also beneficial for link prediction, an equally fundamental task that intuitively places more emphasis on graph structure? To answer these questions, we take an initial step forward by introducing a simple, effective, yet surprisingly neglected method on TGs and empirically evaluating it on two fundamental graph tasks: node classification and link prediction. Intuitively, when the graph structure is omitted, the two tasks are equivalent to text classification and text similarity (retrieval) tasks in NLP, respectively. This intuition motivates our method, SimTeG. We first parameter-efficiently finetune (PEFT) an LM on the textual corpus of a TG with task-specific labels, and then use the finetuned LM, with its head layer removed, to generate a representation of each node from its text. Afterward, a GNN is trained with the derived node embeddings on the same downstream task for final evaluation. Though embarrassingly simple, SimTeG shows remarkable performance on multiple graph benchmarks for both node classification and link prediction. In particular, we make several key observations:

❶ Good language modeling could generally improve the learning of GNNs on both node classification and link prediction. We evaluate SimTeG on three prestigious graph benchmarks for either node classification or link prediction, and find that SimTeG consistently outperforms the official features and the features generated by pretrained LMs (without finetuning) by a large margin. Notably, backed by a SOTA GNN, we achieve new SOTA performance of 78.02% on OGBN-Arxiv. See Sec. 5.1 and Appendix A1 for details.

❷ SimTeG significantly narrows the margin between GNN backbones on multiple graph benchmarks by improving the performance of simple GNNs. Notably, a simple two-layer GraphSAGE (Hamilton et al., 2017) trained on SimTeG with proper LM backbones achieves 77.48% on OGBN-Arxiv (Hu et al., 2020), on par with SOTA performance.

❸ PEFT is crucial when finetuning LMs to generate representative embeddings, because full finetuning usually leads to extreme overfitting due to its large parameter space and the resulting fitting capacity. Overfitting in the LM finetuning stage hinders the training of downstream GNNs by producing a collapsed feature space. See Sec. 5.3 for details.

❹ SimTeG is moderately sensitive to the selection of LMs. Generally, the performance of SimTeG is positively correlated with the corresponding LM's performance on text embedding tasks, e.g. classification and retrieval. We refer to Sec. 5.4 for details. Based on this, we expect further improvement of SimTeG once more powerful LMs for text embedding are available.

2 RELATED WORKS

In this section, we first present several works that are closely related to ours and further clarify several concepts and research lines that are plausibly related to ours in terms of similar terminology.

Leveraging LMs on TGs. Several existing works leverage the power of LMs on TGs and are directly comparable with ours. They either focus on (i) designing specific strategies to generate node embeddings using LMs (He et al., 2023; Chien et al., 2021) or (ii) jointly training LMs and GNNs within a framework (Zhao et al., 2022; Mavromatis et al., 2023). As a representative of the former, Chien et al. (2021) proposed a self-supervised graph learning task integrating XR-Transformers (Zhang et al., 2021b) to extract node representations, which shows superior performance on multiple graph benchmarks, validating the necessity of acquiring high-quality node features for attributed graphs. Besides, He et al. (2023) utilize ChatGPT (OpenAI, 2023) to generate additional supporting text. For the latter, Zhao et al. (2022) proposed a variational expectation-maximization joint-training framework for LMs and GNNs to learn powerful graph representations. Mavromatis et al. (2023) design a graph structure-aware framework to distill knowledge from GNNs to LMs. Generally, joint-training frameworks require specific communication between LMs and GNNs, e.g. pseudo labels (Zhao et al., 2022) or hidden states (Mavromatis et al., 2023). It is worth noting that the concurrent work of He et al. (2023) proposed a method close to ours. However, He et al. (2023) focus on generating additional informative text for nodes with LLMs, specifically for node classification on citation networks. In contrast, we focus on generally investigating the effectiveness of our proposed method, which could be applied to a wide range of datasets and tasks. Utilizing the additional text provided by He et al. (2023), we further show that our method achieves a new SOTA on OGBN-Arxiv. In addition to these main streams, related works try to fuse the architectures of LMs and GNNs for end-to-end training. Yang et al. (2021) proposed a nested architecture that injects GNN layers into LM layers. However, due to the inherent incompatibility regarding training batch size, this architecture only allows 1-hop message passing, which significantly reduces the learning capability of GNNs.

More “Related” Works. ❶ Graph Transformers (Wu et al., 2021; Ying et al., 2021; Hussain et al., 2022; Park et al., 2022; Chen et al., 2022): Nowadays, the term Graph Transformers mostly denotes Transformer-based architectures that embed both topological structure and node features. Different from our work, these models focus on graph-level problems (e.g. graph classification and graph generation) and specific domains (e.g. molecular datasets and protein association networks), and cannot be directly applied to TGs. ❷ Leveraging GNNs on Texts (Zhu et al., 2021; Huang et al., 2019; Zhang et al., 2020): Another seemingly related line of work on integrating GNNs and LMs conversely applies GNNs to textual documents. Different from TGs, GNNs here do not rely on ground-truth graph structures but on self-constructed or synthetic ones.

3 PRELIMINARIES

Notations. To keep notations consistent, we use bold uppercase letters to denote matrices and vectors, and calligraphic font (e.g. $\mathcal{T}$) to denote sets. We denote a textual graph as $\mathcal{G}=(\mathcal{V},\mathcal{E},\mathcal{T})$, where $\mathcal{V}$ and $\mathcal{E}$ are the sets of nodes and edges, respectively. $\mathcal{T}$ is a set of texts, and each textual item is aligned with a node $v\in\mathcal{V}$. In practice, we usually rewrite $\mathcal{E}$ as a sparse matrix $\mathbf{A}\in\{0,1\}^{|\mathcal{V}|\times|\mathcal{V}|}$, where entry $\mathbf{A}_{i,j}$ denotes the link between nodes $v_{i},v_{j}\in\mathcal{V}$.

Problem Formulations. We focus on two fundamental tasks in TGs: (i) node classification and (ii) link prediction. For node classification, given a TG $\mathcal{G}$, we aim to learn a model $\Phi:\mathcal{V}\to\mathcal{Y}$, where $\mathcal{Y}$ is the set of ground-truth labels. For link prediction, given a TG $\mathcal{G}$, we aim to learn a model $\Phi:\mathcal{V}\times\mathcal{V}\to\{0,1\}$, where $\Phi(v_{i},v_{j})=1$ if there is a link between $v_{i}$ and $v_{j}$, and $\Phi(v_{i},v_{j})=0$ otherwise. Different from traditional tasks that are widely explored by the graph learning community, involving the original text in learning is non-trivial. In particular, when the graph structure is ablated, node classification and link prediction collapse to text classification and text similarity problems, respectively. This sheds light on how to leverage LMs for TG representation learning.

Node-level Graph Neural Networks. Nowadays, GNNs have dominated graph-related tasks. Here we focus on GNN models working on node-level tasks (i.e. node classification and link prediction). These models generate node representations by recursively aggregating features from multi-hop neighbors, which is usually referred to as message passing. Generally, one can formulate a graph convolution layer as $X_{l+1}=\Psi_{l}(C X_{l})$, where $C$ is the graph convolution matrix (e.g. $C=D^{-1/2}A D^{-1/2}$ in vanilla GCN (Kipf & Welling, 2016)) and $\Psi_{l}$ is the feature transformation. For the node classification problem, a classifier (e.g., an MLP) is usually appended to the output of a $k$-layer GNN model, while for link prediction, a similarity function is applied to the final output to compute the similarity between two node embeddings. As shown above, since GNNs inherently involve the whole graph structure for convolution, scaling them up is notoriously challenging. It is worth noting that involving sufficient neighbors during training is crucial for GNNs. Many studies (Duan et al., 2022; Zou et al., 2019) have shown that full-batch training generally outperforms mini-batch training for GNNs on multiple graph benchmarks. In practice, the lower bound of the batch size for training GNNs is usually in the thousands. However, such a batch size makes GNN-LM end-to-end training intractable, as a text occupies far more GPU memory than an embedding.
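As a minimal sketch of the layer formula above, the following plain-Python snippet builds $C=D^{-1/2}AD^{-1/2}$ for a toy graph and applies one layer. The choice $\Psi_{l}(H)=\mathrm{ReLU}(HW)$, the toy graph, and all function names are illustrative assumptions, not details taken from the paper.

```python
import math

def normalized_adjacency(adj):
    """Return C = D^{-1/2} A D^{-1/2} for a dense 0/1 adjacency matrix."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    # Isolated nodes (degree 0) get a zero scaling factor to avoid division by zero.
    inv_sqrt = [1.0 / math.sqrt(d) if d > 0 else 0.0 for d in deg]
    return [[inv_sqrt[i] * adj[i][j] * inv_sqrt[j] for j in range(n)]
            for i in range(n)]

def matmul(a, b):
    """Dense matrix product of two list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def relu(m):
    return [[max(0.0, v) for v in row] for row in m]

def graph_conv_layer(conv, x, weight):
    """One layer X_{l+1} = Psi_l(C X_l), with Psi_l(H) = ReLU(H W) (assumed)."""
    return relu(matmul(matmul(conv, x), weight))

# Toy path graph 0 - 1 - 2 with 2-dimensional node features (hypothetical data).
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
X = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
W = [[1.0, 0.0],
     [0.0, 1.0]]  # identity weight, so the output shows pure neighbor aggregation

C = normalized_adjacency(A)
X_next = graph_conv_layer(C, X, W)
```

Because every node's output depends on its neighbors' inputs, stacking $k$ such layers pulls in the $k$-hop neighborhood, which is why large batches are needed and why feeding raw text through an LM inside this loop is expensive.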

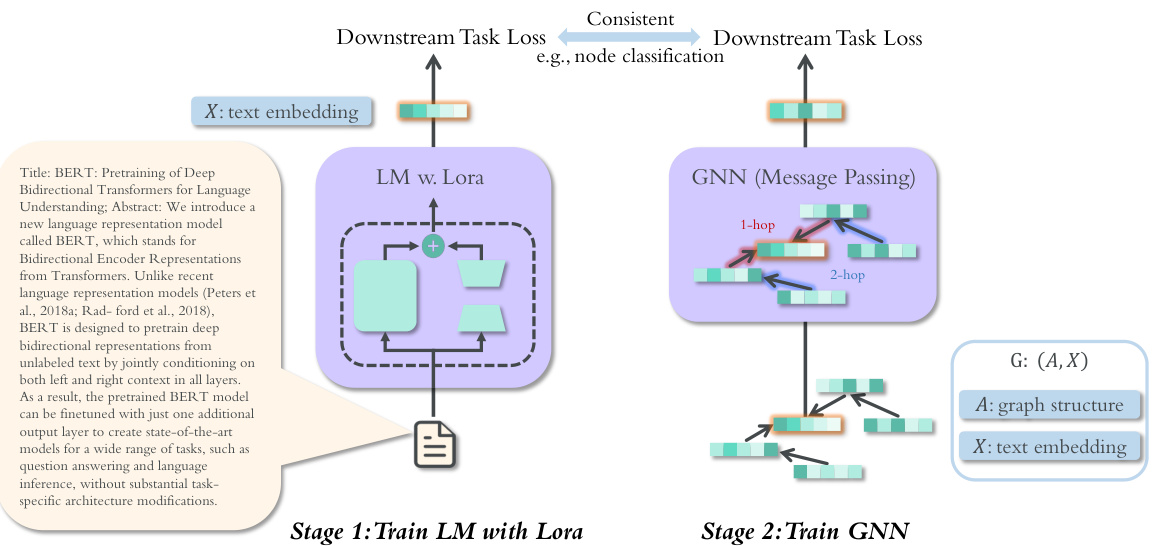

Figure 1: Overview of SimTeG. In stage 1, we train an LM with LoRA (Hu et al., 2022) and then generate the embeddings $\boldsymbol{X}$ as the representation of the text. In stage 2, we train a GNN on top of the embeddings $\boldsymbol{X}$, along with the graph structure. The two stages are guided by a consistent loss function, e.g., link prediction or node classification.

Text Embeddings and Language Models. Transforming text into low-dimensional dense embeddings serves as the upstream of textual graph representation learning and has been widely explored in the literature. To generate sentence embeddings with LMs, two commonly used methods are (i) average pooling (Reimers & Gurevych, 2019), which takes the average of all word embeddings while respecting the attention mask, and (ii) taking the embedding of the [CLS] token (Devlin et al., 2018). With the development of pre-trained language models (Devlin et al., 2018; Liu et al., 2019), dedicated language models for sentence embeddings (Li et al., 2020; Reimers & Gurevych, 2019) have been proposed and have shown promising results on various benchmarks (Muennighoff et al., 2022).
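The two pooling strategies above can be illustrated with a small plain-Python sketch. The token embeddings and mask below are hypothetical stand-ins for the last-layer hidden states of an LM; the function names are not from any library.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the embeddings of real tokens; padded positions (mask 0) are ignored."""
    dim = len(token_embeddings[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for d in range(dim):
                total[d] += vec[d]
    if count == 0:
        return total  # all positions masked: return the zero vector
    return [t / count for t in total]

def cls_pool(token_embeddings):
    """Use the embedding at position 0, where BERT-style models place [CLS]."""
    return list(token_embeddings[0])

# Hypothetical hidden states for one sequence of length 4 (last position is padding).
hidden = [[1.0, 2.0],   # [CLS]
          [3.0, 4.0],   # token
          [5.0, 6.0],   # token
          [9.0, 9.0]]   # padding, must not influence the mean
mask = [1, 1, 1, 0]

sentence_mean = mean_pool(hidden, mask)  # [3.0, 4.0]
sentence_cls = cls_pool(hidden)          # [1.0, 2.0]
```

Without the mask, the padding row would shift the mean, so sequences of different lengths in the same batch would not be comparable.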

4 SIMTEG: METHODOLOGY

We propose an extremely simple two-stage training scheme that decouples the training of $gnn(\cdot)$ and $lm(\cdot)$. We first finetune $lm$ on $\mathcal{T}$ with the downstream task loss:

$$
\mathit{Loss}_{cls}=\mathcal{L}_{\boldsymbol{\theta}}\big(\phi(lm(\mathcal{T})),\mathbf{Y}\big),\quad \mathit{Loss}_{link}=\mathcal{L}_{\boldsymbol{\theta}}\big(\phi\big(lm(\mathcal{T}_{src}),lm(\mathcal{T}_{dst})\big),\mathbf{Y}\big),
$$

where $\phi(\cdot)$ is the classifier (left for node classification) or similarity function (right for link prediction) and $\mathbf{Y}$ is the label. After finetuning, we generate node representations $\boldsymbol{X}$ with the finetuned LM $\hat{l m}$ . In practice, we follow Reimers & Gurevych (2019) to perform mean pooling over the output of the last layer of the LM and empirically find that such a strategy is more stable and converges faster than solely taking the $
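The two fine-tuning losses can be sketched in plain Python as follows. The paper does not spell out the concrete forms here, so the linear classifier head, the dot-product similarity, and the cross-entropy and binary cross-entropy losses are assumptions chosen for illustration; the embeddings stand in for pooled LM outputs $lm(\mathcal{T})$.

```python
import math

def softmax_cross_entropy(logits, label):
    """Cross-entropy of one example: -log softmax(logits)[label]."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]

def linear_head(embedding, weight):
    """Classifier phi: one logit per class; weight has shape [num_classes][dim]."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in weight]

def dot_similarity(src, dst):
    """Similarity function phi for link prediction (dot product is an assumed choice)."""
    return sum(a * b for a, b in zip(src, dst))

def binary_cross_entropy(score, label):
    """BCE on a raw similarity score, with label 1 for a link and 0 otherwise."""
    p = 1.0 / (1.0 + math.exp(-score))
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

# Hypothetical pooled LM outputs for three nodes.
emb = {0: [1.0, 0.0], 1: [0.9, 0.1], 2: [-1.0, 0.5]}
head = [[2.0, 0.0], [-2.0, 0.0]]  # hypothetical head weights for two classes

# Node classification: Loss_cls for node 0 with ground-truth class 0.
loss_cls = softmax_cross_entropy(linear_head(emb[0], head), label=0)

# Link prediction: Loss_link for a positive pair (0, 1) and a negative pair (0, 2).
loss_link_pos = binary_cross_entropy(dot_similarity(emb[0], emb[1]), label=1)
loss_link_neg = binary_cross_entropy(dot_similarity(emb[0], emb[2]), label=0)
```

After fine-tuning, only the encoder is kept: the head (`linear_head` or `dot_similarity` in this sketch) is dropped and the pooled outputs become the node features for the GNN.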

Regularization with PEFT. When fully finetuning an LM, the inferred features are prone to overfitting the training labels, which results in a collapsed feature space and thus hinders generalization in GNN training. Though PEFT was proposed to accelerate the finetuning process without loss of performance, in our two-stage training we empirically find that PEFT (Hu et al., 2022; Houlsby et al., 2019; He et al., 2022) alleviates the overfitting issue to a large extent and thus provides well-regularized node features. See Sec. 5.3 for empirical analysis. In this work, we take the popular PEFT method LoRA (Hu et al., 2022) as the instantiation.
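To make the regularizing effect of LoRA concrete, here is a minimal plain-Python sketch of a LoRA-augmented linear layer: the pretrained weight $W_0$ stays frozen and only a rank-$r$ update $\frac{\alpha}{r}BA$ is trainable. The class name, rank, scaling value, and initialization scale are illustrative assumptions and do not reflect the paper's hyperparameters.

```python
import random

def matvec(m, v):
    """Matrix-vector product for a list-of-lists matrix."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

class LoRALinear:
    """Frozen weight W0 plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, w0, rank, alpha, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(w0), len(w0[0])
        self.w0 = w0  # frozen pretrained weight, shape [d_out][d_in]
        # A is initialized with small Gaussian noise, B with zeros,
        # so the layer initially behaves exactly like the pretrained one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.w0, x)
        update = matvec(self.b, matvec(self.a, x))
        return [h + self.scale * u for h, u in zip(base, update)]

    def num_trainable(self):
        """Only A and B are trained; W0 is excluded."""
        return sum(len(r) for r in self.a) + sum(len(r) for r in self.b)

d = 8
w0 = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # toy identity weight
layer = LoRALinear(w0, rank=2, alpha=4.0)
x = [float(i) for i in range(d)]
y = layer.forward(x)
```

In this toy layer the trainable parameter count is $2 \cdot 8 + 8 \cdot 2 = 32$ versus $64$ for the full matrix; at LM scale the ratio is far smaller, which limits how much the model can reshape its feature space to memorize training labels.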

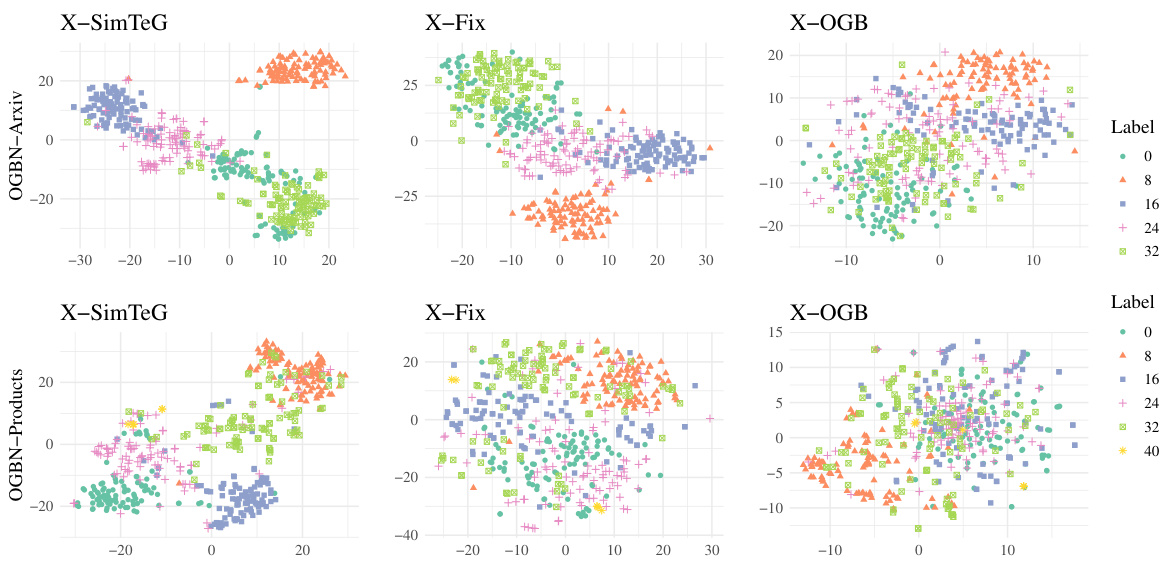

Figure 2: The two-dimensional feature space of $\boldsymbol{X}$-SimTeG, $\boldsymbol{X}$-Fix, and $\boldsymbol{X}$-OGB for OGBN-Arxiv and OGBN-Products. Different values and shapes refer to different labels on the specific dataset. The feature values are computed by T-SNE. The LM backbone is e5-large.

Selection of LM. With the revolution brought about by LMs, a substantial number of valuable pre-trained LMs have been proposed. As mentioned before, when the graph structure of a TG is ablated, the two fundamental tasks, node classification and link prediction, simplify into two well-established NLP tasks: text classification and text similarity (retrieval). Based on this motivation, we select LMs pretrained for information retrieval as the backbone of SimTeG. Concrete models are selected based on the MTEB$^{1}$ benchmark, considering the model size and the performance on both retrieval and classification tasks. An ablation study regarding this motivation is presented in Sec. 5.4.

A Finetuned LM Provides A More Distinguishable Feature Space. In Fig. 2, we plot the two-dimensional feature space, computed by T-SNE (Van der Maaten & Hinton, 2008), of $\boldsymbol{X}$-SimTeG, $\boldsymbol{X}$-Fix (features generated by the pretrained LM without finetuning), and $\boldsymbol{X}$-OGB with respect to labels on OGBN-Arxiv and OGBN-Products. In detail, we randomly select 100 nodes each with various labels and use T-SNE to compute their two-dimensional features. As shown in Fig. 2, $\boldsymbol{X}$-SimTeG has a significantly more distinguishable feature space, as it captures more semantic information and is finetuned on the downstream dataset. Besides, we find that $\boldsymbol{X}$-Fix is more distinguishable than $\boldsymbol{X}$-OGB, which illustrates the inherent ability of LMs to capture semantics. Furthermore, in comparison with OGBN-Arxiv, the features in OGBN-Products are visually harder to distinguish, indicating a weaker correlation between semantic information and task-specific labels. This accounts for the smaller improvement of SimTeG on OGBN-Products in Sec. 5.1.

5 EXPERIMENTS

In the experiments, we aim to answer the three research questions proposed in the introduction (Sec. 1). For clarity, we split and reformulate them into the following research questions. Q1: How much could SimTeG generally improve the learning of GNNs on node classification and link prediction? Q2: Does $\boldsymbol{X}$-SimTeG facilitate better convergence for GNNs? Q3: Is PEFT a necessity for the LM finetuning stage? Q4: How sensitive is GNN training to the selection of LMs?

Datasets. Focusing on the two fundamental tasks of node classification and link prediction, we conduct experiments on three prestigious benchmarks: OGBN-Arxiv (Arxiv), OGBN-Products (Products), and OGBL-Citation2 (Hu et al., 2020). The former two are for node classification, while the last one is for link prediction. For the former two, we follow the public split, and all text resources are officially provided. For OGBL-Citation2, as no official text resources are provided, we take its intersection with another dataset, ogbn-papers100M, with respect to unified paper ids, which results in a subset of OGBL-Citation2 with about 2.7M nodes. The public split is further updated according to this subset. In comparison, the original OGBL-Citation2 has about 2.9M nodes, which is on par with the TG version, since the public validation and test splits account for only 2% overall. As a result, we expect roughly consistent performance for methods on the TG version of OGBL-Citation2 and the original one. We introduce the statistics of the three datasets in Table A8 and the details in Appendix A2.2.
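The intersection step can be pictured with the following sketch, which keeps only nodes whose paper id has associated text and re-indexes the surviving edges. This is an illustrative reconstruction under assumed data structures, not the authors' preprocessing code (their procedure is described in Appendix A2.2); the function name and the toy ids are hypothetical.

```python
def build_text_subset(edges, paper_id_of, texts_by_paper_id):
    """Keep only nodes whose paper id has text, and re-index the surviving edges."""
    kept = sorted(n for n, pid in paper_id_of.items() if pid in texts_by_paper_id)
    new_index = {old: new for new, old in enumerate(kept)}
    # An edge survives only if both endpoints survive.
    new_edges = [(new_index[s], new_index[t]) for s, t in edges
                 if s in new_index and t in new_index]
    new_texts = [texts_by_paper_id[paper_id_of[old]] for old in kept]
    return new_edges, new_texts, new_index

# Hypothetical toy data: node 1 ("p11") has no text in the second dataset.
paper_id_of = {0: "p10", 1: "p11", 2: "p12", 3: "p13"}
texts_by_paper_id = {"p10": "title a", "p12": "title c",
                     "p13": "title d", "p99": "unused"}
edges = [(0, 1), (0, 2), (2, 3), (1, 3)]

sub_edges, sub_texts, mapping = build_text_subset(edges, paper_id_of, texts_by_paper_id)
# sub_edges == [(0, 1), (1, 2)]
```

The same `mapping` would then be applied to the validation and test edges, dropping any evaluation pair whose endpoints are no longer present, which is one way to read "the public split is further updated according to this subset".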

Baselines. We compare SimTeG with the official features $\boldsymbol{X}$-OGB (Hu et al., 2020), which are the mean of word embeddings generated by skip-gram (Mikolov et al., 2013). In addition, for node classification, we include two other SOTA methods: $\boldsymbol{X}$-GIANT (Chien et al., 2021) and GLEM (Zhao et al., 2022). In particular, the $\boldsymbol{X}$-* methods differ only in how node embeddings are learned, so any GNN model can be applied in the downstream task for a fair comparison. For consistency, we denote our method as $\boldsymbol{X}$-SimTeG unless otherwise specified.

GNN Backbones. To investigate the general improvement brought by SimTeG, for each dataset we select two commonly used baselines, GraphSAGE and MLP, in addition to one corresponding SOTA GNN model based on the official leaderboard$^{2}$. For OGBN-Arxiv, we select RevGAT (Li et al., 2021); for OGBN-Products, we select SAGN+SCR (Sun et al., 2021; Zhang et al., 2021a); and for OGBL-Citation2, we select SEAL (Zhang & Chen, 2018).

LM Backbones. For retrieval LM backbones, we select three popular LMs on the MTEB (Muennighoff et al., 2022) leaderboard$^{3}$ with respect to model size and performance on classification and retrieval: all-MiniLM-L6-v2 (Reimers & Gurevych, 2019), all-roberta-large-v1 (Reimers & Gurevych, 2019), and e5-large-v1 (Wang et al., 2022). We present the properties of the three LMs in Table A10.

Hyperparameter search. We utilize optuna (Akiba et al., 2019) to perform hyperparameter search on all tasks. The search space for LMs and GNNs on all datasets is presented in Appendix A2.4.

5.1 Q1: HOW MUCH COULD SIMTEG GENERALLY IMPROVE THE LEARNING OF GNNS ON NODE CLASSIFICATION AND LINK PREDICTION?

5.1 Q1: SIMTEG 在多大程度上能普遍提升 GNN 在节点分类和链接预测任务中的学习效果?

In this section, we conduct experiments to show that SimTeG improves the learning of GNNs on node classification and link prediction. The reported results are selected based on the validation set. We present the results for the e5-large backbone in Table 1, and the comprehensive results of node classification and link prediction with all three selected backbones in Table A5 and Table A6. Specifically, in Table 1, we report two comparison metrics, $\Delta_{MLP}$ and $\Delta_{GNN}$, which describe the performance margins of the pairs (SOTA GNN, MLP) and (SOTA GNN, GraphSAGE), respectively. The smaller the value (it may even be negative), the better the simple model performs relative to the SOTA GNN. In addition, on OGBN-Arxiv we ensemble GNNs trained on multiple node embeddings generated by various LMs and text resources, and show the results in Table 2. We make several observations.

在本节中,我们通过实验展示SimTeG在提升图神经网络(GNN)节点分类和链接预测学习性能上的优势。报告结果基于验证集筛选得出。表1展示了基于e5-large骨干网络的结果,节点分类和链接预测在三种选定骨干网络下的完整结果分别见表A5和表A6。具体而言,表1采用$\Delta_{MLP}$和$\Delta_{GNN}$两个对比指标,分别描述(SOTA GNN, MLP)和(SOTA GNN, GraphSAGE)的性能差异。该值越小(甚至为负值)表明简单模型性能越优。此外,我们在OGBN-Arxiv数据集上集成多种语言模型生成的节点嵌入与文本资源构建GNN,结果如表2所示。主要发现如下。
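Written out, the two margins can be read as simple differences. The sign convention below is an inference from the text (smaller or negative values favor the simple model, and $\Delta_{MLP} = 2.98$ on OGBN-Arxiv matches $77.04 - 74.06$ in Table 1); $\mathrm{Perf}(\cdot)$ is notation introduced here for the reported metric (accuracy, MRR, or Hits@3).

$$
\Delta_{MLP} = \mathrm{Perf}(\text{SOTA GNN}) - \mathrm{Perf}(\text{MLP}), \qquad
\Delta_{GNN} = \mathrm{Perf}(\text{SOTA GNN}) - \mathrm{Perf}(\text{GraphSAGE})
$$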

Observation 1: SimTeG generally improves the performance of GNNs on node classification and link prediction by a large margin. As shown in Table 1, SimTeG consistently outperforms the original features on all datasets and backbones. Moreover, compared with $\boldsymbol{X}$-GIANT, an LM pretraining method that utilizes the graph structure, SimTeG still achieves better performance on OGBN-Arxiv with all backbones and on OGBN-Products with GraphSAGE, which further indicates the importance of the text attributes themselves.

观察1:SimTeG通常能大幅提升GNN在节点分类和链接预测任务中的性能。如表1所示,SimTeG在所有数据集和骨干模型上均优于原始特征。此外,与利用图结构的LM预训练方法$\boldsymbol{X}$-GIANT相比,SimTeG在使用所有骨干模型的OGBN-Arxiv数据集和使用GraphSAGE的OGBN-Products数据集上仍表现更优,这进一步证明了文本属性本身的重要性。

Observation 2: ($\boldsymbol{X}$-SimTeG + GraphSAGE) consistently outperforms ($\boldsymbol{X}$-OGB + SOTA GNN) on all three datasets. This finding implies that incorporating advanced text features can remove the need for complex GNNs, which is why we regard our method as frustratingly simple. Furthermore, when GraphSAGE is replaced with the corresponding SOTA GNN on $\boldsymbol{X}$-SimTeG, performance improves moderately, but the margin of improvement is notably smaller than the corresponding gap on $\boldsymbol{X}$-OGB. In particular, the simple 2-layer GraphSAGE achieves performance comparable to the dataset-specific SOTA GNNs. For example, on OGBN-Arxiv, GraphSAGE achieves 76.84%, taking 4th place on the corresponding leaderboard (as of 2023-08-01). In addition, on OGBL-Citation2, GraphSAGE even outperforms the SOTA GNN method SEAL on Hits@3.

观察2:($\boldsymbol{X}$-SimTeG + GraphSAGE) 在所有三个数据集上始终优于 ($\boldsymbol{X}$-OGB + SOTA GNN)。这一发现表明,引入高级文本特征可以绕过复杂GNN的必要性,这正是我们认为该方法简单得令人沮丧的原因。此外,当在$\boldsymbol{X}$-SimTeG中用相应的SOTA GNN替换GraphSAGE时,虽然性能有所提升,但提升幅度明显小于$\boldsymbol{X}$-OGB上的性能差距。特别值得注意的是,简单的2层GraphSAGE实现了与数据集专用SOTA GNN相当的性能。具体而言,在OGBN-Arxiv上,GraphSAGE达到76.84%,在相应排行榜中位列第4(截至2023-08-01)。此外,在OGBL-Citation2上,GraphSAGE甚至在Hits@3指标上超越了SOTA GNN方法SEAL。
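Observation 2 and Table 1 report MRR and Hits@3 for link prediction on OGBL-Citation2. The sketch below follows the standard textbook definitions of these metrics, computed from the 1-based rank of each true link among its candidates. It is not the OGB evaluator, and the function names and the sample ranks are made up for illustration.

```python
from typing import Sequence


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/rank over all queries (ranks are 1-based)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Fraction of queries whose true link is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Hypothetical ranks of the true target for five query nodes.
example_ranks = [1, 2, 4, 1, 10]

print(f"MRR    = {mean_reciprocal_rank(example_ranks):.2f}")  # MRR    = 0.57
print(f"Hits@3 = {hits_at_k(example_ranks, 3):.2f}")          # Hits@3 = 0.60
```

Both metrics lie in [0, 1]; Table 1 reports them as percentages.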

Table 1: The performance of the SOTA GNN, GraphSAGE, and MLP on OGBN-Arxiv, OGBN-Products, and OGBL-Citation2, averaged over 10 runs (note that we train the LM only once to generate the node embeddings). The results of GLEM are taken from the original paper. We bold the best results for each GNN backbone and mark the smallest $\Delta_{MLP}$ and $\Delta_{GNN}$ in red.

表 1: SOTA GNN、GraphSAGE和MLP在OGBN-Arxiv、OGBN-Products、OGBL-Citation2上的性能(10次运行平均值,请注意我们仅训练LM一次以生成节点嵌入)。GLEM的结果来自原始论文。我们将同一GNN骨干下的最佳结果加粗,并用红色标注最小的$\Delta_{MLP}$和$\Delta_{GNN}$。

| Dataset (SOTA GNN) | Metric | Method | SOTA GNN | MLP (2-layer simple MLP/GNN) |
|---|---|---|---|---|
| Arxiv (RevGAT) | Acc. (%) | X-OGB | 74.01 ± 0.29 | 47.73 ± 0.29 |
| Arxiv (RevGAT) | Acc. (%) | X-GIANT | 75.93 ± 0.22 | 71.08 ± 0.22 |
| Arxiv (RevGAT) | Acc. (%) | GLEM | 76.97 ± 0.19 | - |
| Arxiv (RevGAT) | Acc. (%) | X-SimTeG | 77.04 ± 0.13 | 74.06 ± 0.13 |
| Products (SAGN+SCR) | Acc. (%) | X-OGB | 81.82 ± 0.44 | 50.86 ± 0.26 |
| Products (SAGN+SCR) | Acc. (%) | X-GIANT | 86.12 ± 0.34 | 77.58 ± 0.24 |
| Products (SAGN+SCR) | Acc. (%) | GLEM | 87.36 ± 0.07 | - |
| Products (SAGN+SCR) | Acc. (%) | X-SimTeG | 85.40 ± 0.28 | 76.73 ± 0.44 |
| Citation2 (SEAL) | MRR (%) | X-OGB | 86.14 ± 0.40 | 25.44 ± 0.01 |
| Citation2 (SEAL) | MRR (%) | X-SimTeG | 86.66 ± 1.21 | 72.90 ± 0.14 |
| Citation2 (SEAL) | Hits@3 (%) | X-OGB | 90.92 ± 0.32 | 28.22 ± 0.02 |
| Citation2 (SEAL) | Hits@3 (%) | X-SimTeG | 91.42 ± 0.19 | 80.55 ± 0.13 |

Table 2: The performance of GraphSAGE and RevGAT trained on OGBN-Arxiv with additional text attributes provided by He et al. (2023). The LMs used for ensembling are e5-large and all-roberta-large-v1. We select the top-3 SOTA methods from the OGBN-Arxiv leaderboard (accessed on 2023-07-18) for comparison and shade our results in gray (reported over 10 runs).

表 2: GraphSAGE和RevGAT在OGBN-Arxiv数据集上的性能表现,其中使用了He等人(2023)提供的额外文本属性。用于集成的语言模型为e5-large和all-roberta-large-v1。我们从OGBN-Arxiv排行榜(访问日期2023-07-18)中选取了排名前三的SOTA方法进行比较,并将我们的结果(基于10次运行报告)用灰色标注。

| Rank | Method | GNN backbone | Validation acc. (%) | Test acc. (%) |
|---|---|---|---|---|
| 1 | TAPE + SimTeG (ours) | RevGAT | 78.46 ± 0.04 | 78.03 ± 0.07 |
| 2 | TAPE (He et al., 2023) | RevGAT | 77.85 ± 0.16 | 77.50 ± 0.12 |
| 3 | TAPE + SimTeG (ours) | GraphSAGE | 77.89 ± 0.08 | 77.48 ± 0.11 |
| 4 | GraDBERT (Mavromatis et al., 2023) | RevGAT | 77.57 ± 0.09 | 77.21 ± 0.31 |
| 5 | GLEM (Zhao et al., 2022) | RevGAT | 77.46 ± 0.18 | 76.94 ± 0.25 |

Observation 3: With additional text attributes, SimTeG with ensembling achieves new SOTA performance on OGBN-Arxiv. We further demonstrate the effectiveness of SimTeG by ensembling the node embeddings generated from different LMs and texts. For the text, we use both the original text provided by Hu et al. (2020) and the additional text attributes$^{4}$ provided by He et al. (2023), which are generated by ChatGPT. For the LMs, we use both e5-large and all-roberta-large-v1. We train GraphSAGE or RevGAT on each set of node embeddings generated from these LMs and texts, and make the final predictions with weighted ensembling (taking the weighted average of all predictions). As shown in Table 2, with RevGAT we achieve new SOTA performance on OGBN-Arxiv with 78.03% test accuracy, more than 0.5 percentage points higher than the previous SOTA (77.50%) achieved by He et al. (2023). This further validates the importance of text features and the effectiveness of SimTeG.

观察3:通过引入额外文本属性,集成式SimTeG在OGBN-Arxiv上实现了新SOTA性能。我们通过集成不同大语言模型和文本生成的节点嵌入进一步验证了SimTeG的有效性。文本方面同时采用Hu等人 (2020) 提供的原始文本和He等人 (2023) 通过ChatGPT生成的附加文本属性4;模型层面则同时使用e5-large和all-roberta-large-v1。我们在这些由不同大语言模型和文本生成的节点嵌入上训练GraphSAGE或RevGAT,并通过加权集成(对所有预测结果取加权平均)进行最终预测。如表2所示,采用RevGAT时我们在OGBN-Arxiv上取得了78.03%的测试准确率,较He等人 (2023) 创造的先前SOTA记录 (77.50%) 提升超过0.5%,这进一步验证了文本特征的重要性和SimTeG的有效性。
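The weighted ensembling step in Observation 3 (a weighted average of all predictions) can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name, the two toy "models", and the weights 0.7 and 0.3 are assumptions, and how the weights are chosen is not specified in this section.

```python
from typing import List, Sequence, Tuple

Probs = List[List[float]]  # one row of class probabilities per node


def weighted_ensemble(
    predictions: Sequence[Probs], weights: Sequence[float]
) -> Tuple[Probs, List[int]]:
    """Return the weighted-average probabilities and the argmax label per node."""
    total = sum(weights)
    n_nodes = len(predictions[0])
    n_classes = len(predictions[0][0])
    combined = [[0.0] * n_classes for _ in range(n_nodes)]
    for probs, w in zip(predictions, weights):
        for i, row in enumerate(probs):
            for c, p in enumerate(row):
                combined[i][c] += (w / total) * p  # weights normalized to sum to 1
    labels = [max(range(n_classes), key=row.__getitem__) for row in combined]
    return combined, labels


# Toy predictions for 2 nodes and 3 classes from two hypothetical GNNs,
# e.g. one trained on each set of LM-generated node embeddings.
gnn_a = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]]
gnn_b = [[0.2, 0.7, 0.1], [0.1, 0.1, 0.8]]

_, labels = weighted_ensemble([gnn_a, gnn_b], weights=[0.7, 0.3])
print(labels)  # [0, 2]
```

In the second node, neither model alone is decisive in the same direction (`gnn_a` prefers class 1, `gnn_b` class 2), and the weighted average resolves the disagreement.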

Observation 4: Text attributes are not equally important across datasets. As shown in Table 1, we compute $\Delta_{MLP}$, the performance gap between the MLP and the SOTA GNN. Empirically, this value indicates the importance of text attributes on the corresponding dataset, since the MLP is trained solely on the text (encoded by SOTA LMs), while the SOTA GNN additionally exploits the graph structure. Therefore, approximately, the smaller $\Delta_{MLP}$ is, the more important the text attributes are. In Table 1, $\Delta_{MLP}$ on OGBN-Arxiv is only 2.98, indicating that text attributes are more important there than in OGBN-Products and OGBL-Citation2. This offers an empirical explanation for why SimTeG does not perform as well on OGBN-Products as on OGBN-Arxiv. We show a text sample from OGBN-Arxiv and from OGBN-Products in Appendix A2.2. We find that the text in OGBN-Products more closely resembles a bag of words, which accounts for the smaller improvement from LM features.

观察4:文本属性在不同数据集中的重要性不均。如表1所示,我们计算了 $\Delta_{M L P}$(即MLP与SOTA GNNs之间的性能差距)。经验表明,该值反映了文本属性在对应数据集中的重要性,因为MLP仅基于文本(结合SOTA大语言模型)训练,而SOTA GNN额外利用了图结构。因此,$\Delta_{M L P}$ 越小,文本属性越重要。如表1所示,OGBN-Arxiv的 $\Delta_{M L P}$ 仅为2.98,表明其文本属性比OGBN-Products和OGBL-Citation2更重要。这从实证角度解释了为何SimTeG在OGBN-Products中的表现不如OGBN-Arxiv。附录A2.2分别展示了OGBN-Arxiv和OGBN-Products的文本样本,我们发现OGBN-products的文本更接近词袋形式,这导致使用大语言模型特征时改进较小。
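The comparison in Observation 4 can be reproduced from the X-SimTeG rows of Table 1. The snippet below assumes $\Delta_{MLP}$ is the SOTA GNN score minus the MLP score, which matches the 2.98 quoted for OGBN-Arxiv. The gaps for the other two datasets are derived here from the table rather than quoted from the text, and the dictionary and function names are illustrative.

```python
# (SOTA GNN score, MLP score) for X-SimTeG, copied from Table 1.
table1_x_simteg = {
    "OGBN-Arxiv (Acc. %)": (77.04, 74.06),
    "OGBN-Products (Acc. %)": (85.40, 76.73),
    "OGBL-Citation2 (MRR %)": (86.66, 72.90),
}


def delta_mlp(sota_gnn: float, mlp: float) -> float:
    """Performance gap between the SOTA GNN and the MLP, rounded to 2 decimals."""
    return round(sota_gnn - mlp, 2)


gaps = {name: delta_mlp(*scores) for name, scores in table1_x_simteg.items()}
for name, gap in gaps.items():
    print(f"{name}: delta_MLP = {gap:.2f}")
# OGBN-Arxiv (Acc. %): delta_MLP = 2.98
# OGBN-Products (Acc. %): delta_MLP = 8.67
# OGBL-Citation2 (MRR %): delta_MLP = 13.76

# The smallest gap marks the dataset where text alone carries the most signal.
print(min(gaps, key=gaps.get))  # OGBN-Arxiv (Acc. %)
```

Note that the Citation2 gap is measured in MRR while the other two are in accuracy, so the cross-dataset comparison is approximate, as the text itself indicates.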

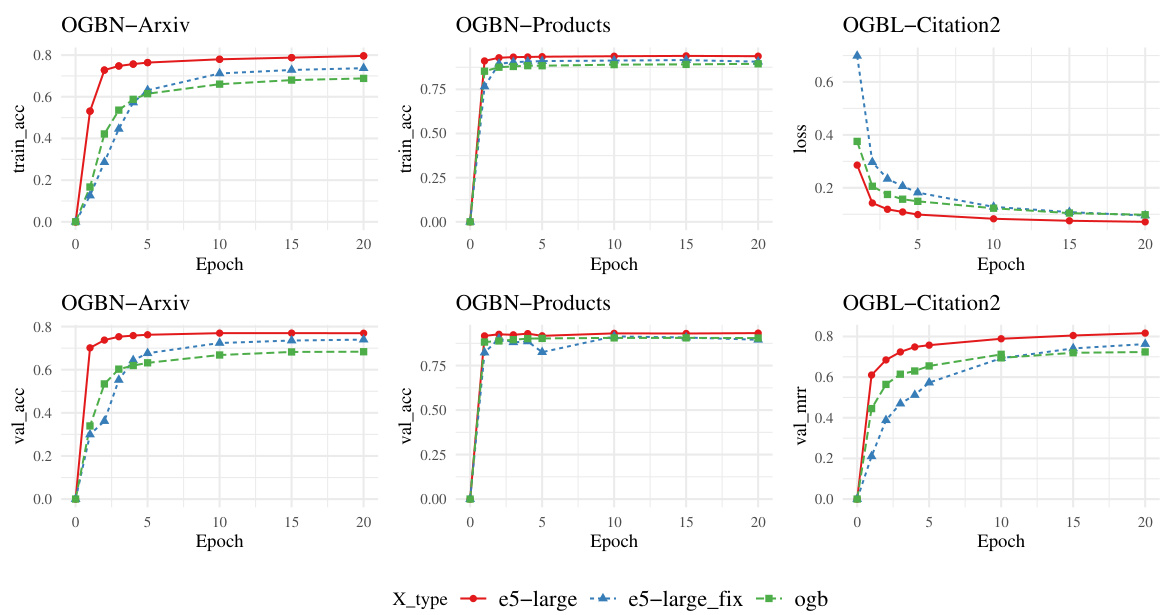

Figure 3: Training convergence and validation results of GNNs with $\boldsymbol{X}$ -SimTeG, $\boldsymbol{X}$ -OGB, and $\boldsymbol{X}$ -FIX. The LM backbone is e5-large. The learning rate and batch size are consistent.

图 3: 采用 $\boldsymbol{X}$ -SimTeG、$\boldsymbol{X}$ -OGB 和 $\boldsymbol{X}$ -FIX 的图神经网络 (GNN) 训练收敛与验证结果。语言模型主干为 e5-large,学习率和批大小保持统一。

5.2 Q2: DOES X-SIMTEG FACILITATE BETTER CONVERGENCE FOR GNNS?

5.2 Q2: X-SIMTEG 是否有助于提升 GNN 的收敛性?

Towards a comprehensive understanding of the effectiveness of SimTeG, we further investigate the convergence of GNNs with SimTeG. We compare the training convergence and the corresponding vali