GAN-based Anomaly Detection in Imbalance Problems

Abstract. Imbalance problems in object detection are among the key issues that greatly affect performance. This work addresses the imbalance problems that arise in defect detection for industrial inspection: the difference in the number of defect and non-defect samples, the distribution gap among defect classes, and the varying sizes of defects. To this end, we adopt anomaly detection, which identifies unusual patterns, to tackle these challenges. Generative adversarial network (GAN) and autoencoder-based approaches in particular have been shown to be effective in this field. In this work, 1) we propose a novel GAN-based anomaly detection model that consists of an autoencoder as the generator and two separate discriminators, one for normal and one for anomaly inputs; and 2) we explore a way to optimize the model effectively by proposing two new loss functions, Patch loss and Anomaly adversarial loss, and combining them to train the model jointly. We evaluate the model on conventional benchmark datasets (MNIST, Fashion-MNIST, CIFAR-10/100) as well as on a real-world industrial dataset of smartphone case defects. Experimental results demonstrate the effectiveness of our approach, which outperforms the current state-of-the-art approaches in terms of the average area under the ROC curve (AUROC).

Keywords: Imbalance problems, Anomaly Detection, GAN, Defects Inspection, Patch Loss, Anomaly Adversarial Loss

1 Introduction

The importance of imbalance problems in machine learning has been widely investigated, and many studies have tried to solve them [12],[20],[23],[28],[34]. For example, class imbalance in a dataset can dramatically skew the performance of classifiers, introducing a prediction bias toward the majority class [23]. Class imbalance is not the only case; various imbalance problems exist in data science. General overviews of imbalance problems are given in [12],[20],[23],[28], and a survey of the imbalance problems specific to object detection is given in the review paper [34].

This paper handles several imbalance problems closely related to industrial defect detection. Surface defects of metal cases such as scratch, stamped, and stain are very unlikely to occur in the production process, which results in a severe class imbalance. In addition, imbalances in defect size, loss scale, and discriminator distribution are covered. To address these imbalance problems, we use an anomaly detection [8] approach. This approach discards a small portion of the sample data and recasts the problem in an anomaly detection framework. Given the shortage and diversity of anomalous data, anomaly detection is usually modeled as a one-class classification problem in which the training dataset contains only normal data [40].

Reconstruction-based approaches [1],[41],[43] have attracted attention for anomaly detection. The underlying idea is that autoencoders can reconstruct normal data with small errors, while the reconstruction errors of anomalous data are usually much larger. The autoencoder [33] is adopted by most reconstruction-based methods, which assume that normal and anomalous samples lead to significantly different embeddings, so that the difference in the corresponding reconstruction errors can be leveraged to tell the two types of samples apart [42]. Adversarial training is introduced by adding a discriminator after the autoencoder to judge whether its input is an original or a reconstructed image [10],[41]. Schlegl et al. [43] hypothesize that the latent vector of a GAN represents the true distribution of the data, and remap an image to the latent space by optimizing over the latent vector of a pre-trained GAN. The limitation is the enormous computational cost of this remapping. In a follow-up study, Zenati et al. [52] train a BiGAN model [4], which jointly learns the mapping from image space to latent space, and report statistically and computationally superior results on the MNIST benchmark dataset. Building on [43],[52], GANomaly [1] proposes a generic anomaly detection architecture: an adversarial training framework that employs an adversarial autoencoder within an encoder-decoder-encoder pipeline, capturing the training data distribution in both image and latent vector space. However, the studies mentioned above leave much room for improvement on benchmark datasets such as Fashion-MNIST, CIFAR-10, and CIFAR-100.

We propose a novel GAN-based anomaly detection model that uses a structurally separated framework for normal and anomaly data to reduce the learning bias toward normal data. We also introduce new definitions of the patch loss and the anomaly adversarial loss to make defect detection more effective. We first validate the proposed method on benchmark data and then extend it to real-world data, namely surface defects of smartphone cases. Two types of data are used in the experiments: classification benchmark datasets (MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100) and a real-world dataset of smartphone case surface defects. The experiments show state-of-the-art performance on the four benchmark datasets and an average accuracy of 99.03% on the real-world smartphone case defect dataset. To improve robustness and performance, we select the final model through an ablation study; its results and visualized images are also presented.

In summary, our method provides methodological improvements over the recent competitive studies GANomaly [1] and ABC [51], and surpasses the state-of-the-art results of GeoTrans [13] and ARNet [18] by a significant margin.

2 Related Works

Imbalance problems

A general review of imbalance problems in deep learning is provided in [5]. Class imbalance appears in many areas, such as computer vision [3],[19],[25],[48], medical diagnosis [16],[30], and others [6],[17],[36],[38], where the issue is highly significant and one class can be far more frequent than another. It is well known that class imbalance can have a significant deleterious effect on deep learning [5]. The most straightforward and common remedy is sampling, which operates on the data itself to increase its balance; oversampling [29] is widely used and has proven robust. Class imbalance can also be tackled at the level of the classifier. In that case, the learning algorithm is modified by assigning different weights to the misclassification of examples from different classes [54] or by explicitly adjusting prior class probabilities [26]. A systematic review of imbalance problems in object detection is presented in [34]. It identifies eight imbalance problems and groups them into four main types: class imbalance, scale imbalance, spatial imbalance, and objective imbalance. It also provides a problem-based categorization of the methods used to address them.

Anomaly detection

A large variety of methods for anomaly detection on images and videos have been developed in recent years [7],[9],[22],[32],[37],[49],[50],[55]. In this paper, we focus on anomaly detection in still images. Reconstruction-based anomaly detection [2],[10],[43],[44],[46] is the most popular approach. These methods compress normal samples into a lower-dimensional latent space and then reconstruct them to approximate the original input data. They assume that anomalous samples can be distinguished by their relatively high reconstruction errors compared with normal samples.

Autoencoder and GAN-based anomaly detection

An autoencoder is an unsupervised neural network technique that learns an efficient data encoding by training the network to ignore signal noise [46]. The generative adversarial network (GAN) proposed by Goodfellow et al. [15] co-trains a pair of networks, a generator and a discriminator, that compete with each other to become more accurate in their predictions. As reviewed in [34], adversarial training has also been adopted by recent work on anomaly detection. Sabokrou et al. [41] employ adversarial training to optimize the autoencoder and leverage its discriminator to further enlarge the reconstruction error gap between normal and anomalous data. Akcay et al. [1] add an extra encoder after the autoencoder and use an additional MSE loss between the two embeddings. Similarly, Wang et al. [45] employ adversarial training under a variational autoencoder framework, assuming that normal and anomalous data follow different Gaussian distributions. Gong et al. [14] augment the autoencoder with a memory module, yielding a memory-augmented autoencoder that strengthens the reconstruction errors on anomalies. Perera et al. [35] apply two adversarial discriminators and a classifier to a denoising autoencoder. By adding constraints that force each randomly drawn latent code to reconstruct examples resembling the normal data, they obtain high reconstruction errors for anomalous data.

3 Method

3.1 Model Structures

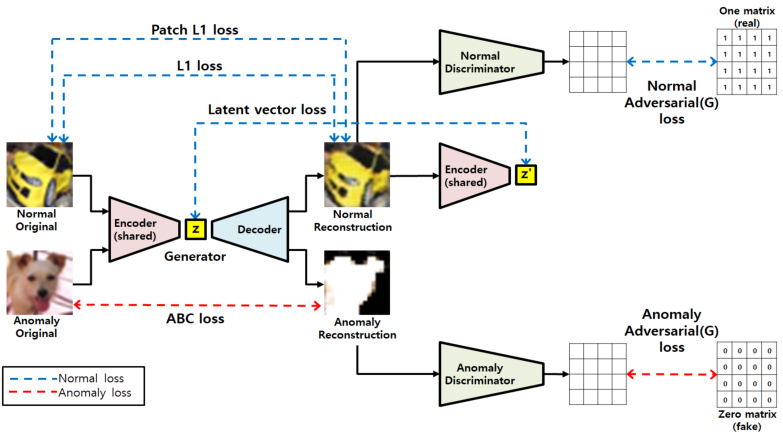

To implement anomaly detection, we propose a GAN-based generative model. The training pipeline of the proposed architecture is shown in Figure 1. The generator follows the structure of an autoencoder, and the discriminator consists of two identical networks that separately process the input depending on whether it is normal or anomalous. In the training phase, the model learns to minimize the reconstruction error when normal data is fed to the generator and to maximize the reconstruction error when anomaly data is fed. The four losses used to minimize the reconstruction error for normal image input are marked in blue, and the two losses used to maximize the error for anomaly image input are marked in red. In the inference phase, the reconstruction error is used as the criterion for detecting anomalies. The matrix maps in the right part of Figure 1 show that each value of the output matrix represents the probability that the corresponding image patch is real or fake. This scheme is the one used in PatchGAN [11] and is entirely different from the Patch loss proposed in this paper.

3.2 Imbalance Problems in Reconstruction-based Anomaly Detection

This section describes the imbalance characteristics that must be handled when applying anomaly detection to defect inspection. We define the imbalance problems for defects as class imbalance, loss function scale imbalance, distributional bias of the learning model, and imbalance between image and object (anomaly area) sizes. Table 1 summarizes the types of imbalance problems and their solutions.

Fig. 1: Pipeline of the proposed approach for anomaly detection.

Table 1: Imbalance problems and solutions of the proposed method

| Imbalance problems | Solutions |
|---|---|
| Class imbalance | Data sampling with k-means clustering (Section 3.5) |
| Loss scale imbalance | Loss function weight search (Section 3.4) |
| Discriminator distributional bias | Two discriminators (Section 3.3) |
| Size imbalance between object (defect) and image | Reconstruction-based methods |

Class imbalance

Class imbalance is well known. Surface defects of metal cases such as scratch, stamped, and stain are very unlikely to occur in the production process, which results in a severe class imbalance between normal and anomaly data. Not only is the number of normal and defective samples imbalanced, but the frequency of occurrence also differs among defect types such as scratch, stamped, and stain, so an imbalance also exists among the classes within the anomaly data. To resolve such class imbalance, the data is partially sampled for training. If the data is sampled randomly without considering its distribution, the distribution of the sampled data might not match that of the entire data. Therefore, in this paper, we divide the entire data into several groups by k-means clustering and then sample the same number of data points from each group.

Loss function scale imbalance

The proposed method trains the generator with a weighted sum of six loss functions. These loss functions differ in scale, and even when their scales are the same, their effect on learning differs. In addition, a GAN poses a min-max problem in which the generator and the discriminator learn by competing against each other, which makes learning difficult and unstable. The loss scales of the generator and the discriminator should be kept at a similar level so that the GAN is trained effectively. To handle this loss function scale imbalance, the weights used in the loss combination are explored by grid search.

Discriminator distributional bias

The loss updates the generator differently for normal and anomaly data. When a reconstructed image is given to the discriminator, the generator is trained so that the discriminator outputs 1 for normal data and 0 for anomaly data. Consequently, when training on both normal and anomaly data, a single discriminator leads to a model that classifies only normal images well. Separating the discriminator into one for normal data and one for anomaly data is necessary to solve this problem. This separation increases the parameters and computation of the model only in the training phase, not in the inference phase, so memory usage and latency at inference do not increase.

Size imbalance between object (defect) and image

In industrial defect data, defects are small compared to the size of the entire image. Because objects occupy a very small portion of the image, the task is closer to object detection than to classification, and classification methods cannot be expected to perform well. To solve this, we propose a method that generates images so that the total reconstruction error grows regardless of the size of the defect and the size of the image that contains it.

3.3 Network Architecture

The proposed model is a GAN-based network consisting of a generator and a discriminator. The generator takes the form of an autoencoder to perform image-to-image translation. It adopts a modified U-Net [39] structure, which delivers features effectively through a pyramid architecture. The discriminator is a general CNN, and the two discriminators are used only in the training phase.

The generator is a symmetric network that consists of four 4 x 4 convolutions with stride 2 followed by four transposed convolutions. The total number of generator parameters is the sum of 0.38K, 2.08K, 8.26K, 32.9K, 32.83K, 16.42K, 4.11K, and 0.77K, that is, 97.75K. The discriminator is a general network that consists of three 4 x 4 convolutions with stride 2 followed by two 4 x 4 convolutions with stride 1. The total number of discriminator parameters is the sum of 0.38K, 2.08K, 8.26K, 32.9K, and 32.77K, that is, 76.39K.
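As a quick check of the arithmetic, the quoted terms do add up to the stated totals. Reading each term as the parameter count of one layer is an assumption; the text only states that the totals are sums of these values.

$$
\begin{aligned}
\text{Generator: } & 0.38 + 2.08 + 8.26 + 32.9 + 32.83 + 16.42 + 4.11 + 0.77 = 97.75\ \text{(K)} \\
\text{Discriminator: } & 0.38 + 2.08 + 8.26 + 32.9 + 32.77 = 76.39\ \text{(K)}
\end{aligned}
$$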

3.4 Loss Function

The proposed model uses eight loss functions in total: six for training the generator, one for the normal discriminator, and one for the anomaly discriminator. The loss function for training each discriminator is adopted from LSGAN [31], as shown in Eq. (1). It uses the a-b coding scheme for the discriminator, where $a$ and $b$ are the labels for fake data and real data, respectively.

$$
\min_{D} V_{\mathrm{LSGAN}}(D)=\left[\left(D(x)-b\right)^{2}\right]+\left[\left(D(G(x))-a\right)^{2}\right] \tag{1}
$$
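The discriminator objective in Eq. (1) can be sketched in a few lines of plain Python. This is only an illustration: the function name, the default labels `a = 0` and `b = 1`, and the averaging over a batch (or over the entries of a patch-wise output map) are assumptions, not details given in the text.

```python
def lsgan_discriminator_loss(d_real, d_fake, a=0.0, b=1.0):
    """Least-squares discriminator loss in the spirit of Eq. (1).

    d_real: discriminator outputs D(x) for original images.
    d_fake: discriminator outputs D(G(x)) for reconstructed images.
    a, b:   labels for fake and real data (assumed to be 0 and 1 here).
    """
    # Push D(x) toward the real label b.
    real_term = sum((d - b) ** 2 for d in d_real) / len(d_real)
    # Push D(G(x)) toward the fake label a.
    fake_term = sum((d - a) ** 2 for d in d_fake) / len(d_fake)
    return real_term + fake_term
```

In the two-discriminator setup described in the paper, the same loss would be evaluated once for the normal discriminator and once for the anomaly discriminator, each on its own kind of input.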

Six loss functions, shown in Eq. (2) to Eq. (8), are employed to train the generator. Four of them are for normal images. First, the L1 reconstruction error of the generator for a normal image is given in Eq. (2). It penalizes the L1 distance between the original image $x$ and the generated image $\hat{x}=G(x)$, as defined in [1]:

$$
\mathcal{L}_{\mathrm{recon}}=\left\|x-G(x)\right\|_{1} \tag{2}
$$

Second, the patch loss newly proposed in this paper is shown in Eq. (3). A normal image and its generated image are each divided into $m$ patches, and the loss is the average of the $n$ largest reconstruction errors among all the patches.

$$
\mathcal{L}_{\mathrm{patch}}=f_{avg}(n)\left(\left\|x_{patch(i)}-G(x_{patch(i)})\right\|_{1}\right),\quad i=1,2,\ldots,m \tag{3}
$$
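The patch loss of Eq. (3) can be illustrated with the following minimal sketch in plain Python. It assumes a single-channel image stored as a list of rows, square patches taken with a sliding window, and a per-patch error defined as the mean absolute difference; the function names and the default `n` are hypothetical. The defaults `patch_size=16` and `stride=8` follow the values listed in Table 3.

```python
def patch_l1_errors(x, x_hat, patch_size, stride):
    """Mean absolute error of every patch_size x patch_size window."""
    height, width = len(x), len(x[0])
    errors = []
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            total = 0.0
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    total += abs(x[r][c] - x_hat[r][c])
            errors.append(total / (patch_size * patch_size))
    return errors


def patch_loss(x, x_hat, patch_size=16, stride=8, n=3):
    """Average of the n largest per-patch reconstruction errors."""
    errors = sorted(patch_l1_errors(x, x_hat, patch_size, stride), reverse=True)
    top_n = errors[:n]
    return sum(top_n) / len(top_n)
```

Because only the worst-reconstructed patches contribute, a small localized error is not averaged away over the whole image, which is the behavior the text describes for small defects.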

Third, the latent vector loss [1] is calculated as the difference between the latent vector of the generator for a normal image and the latent vector of the cascaded encoder for the reconstructed image, as shown in Eq. (4).

$$
\mathcal{L}_{\mathrm{enc}}=\left\|G_{E}(x)-G_{E}(G(x))\right\|_{1} \tag{4}
$$

Eq. (5) defines the adversarial loss used for the generator update in LSGAN [31], where $y$ denotes the value that $G$ wants $D$ to believe for fake data.

$$
\min_{G} V_{\mathrm{LSGAN}}(G)=\left[\left(D(G(x))-y\right)^{2}\right] \tag{5}
$$

Fourth, the adversarial loss used to update the generator is shown in Eq. (6). This loss drives the discriminator to output the real label 1 when a reconstructed (fake) image is fed into it.

$$
\min_{G} V_{\mathrm{LSGAN}}(G)=\left[\left(D(G(x))-1\right)^{2}\right] \tag{6}
$$

The two remaining losses are for anomaly images. One is the anomaly adversarial loss for updating the generator, and the other is the ABC [51] loss. Unlike the general adversarial loss of Eq. (6), the reconstruction of an anomaly image should be generated differently from the real image so that the anomaly is easy to classify. The anomaly adversarial loss newly adopted in our work is shown in Eq. (7).

$$
\min_{G} V_{\mathrm{LSGAN}}(G)=\left[\left(D(G(x))-0\right)^{2}\right] \tag{7}
$$
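Eqs. (5) to (7) differ only in the target value $y$, which the following sketch makes explicit. The function names are hypothetical, and averaging over the discriminator outputs is an assumption.

```python
def generator_adversarial_loss(d_outputs, target):
    """Eq. (5): squared distance between D(G(x)) and the target y."""
    return sum((d - target) ** 2 for d in d_outputs) / len(d_outputs)


def normal_adversarial_loss(d_normal_outputs):
    """Eq. (6): for normal input the generator aims for the label 1."""
    return generator_adversarial_loss(d_normal_outputs, 1.0)


def anomaly_adversarial_loss(d_anomaly_outputs):
    """Eq. (7): for anomaly input the generator aims for the label 0."""
    return generator_adversarial_loss(d_anomaly_outputs, 0.0)
```

Here `d_normal_outputs` would come from the normal discriminator and `d_anomaly_outputs` from the anomaly discriminator, so each loss pulls the generator in its own direction without sharing a single discriminator.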

The ABC loss shown in Eq. (8) is used to maximize the L1 reconstruction error $\mathcal{L}_{\theta}(\cdot)$ for anomaly data. Because the difference between the reconstruction errors for normal and anomaly data is large, the equation is modified by adding exponential and log functions to resolve the scale imbalance.

$$
\mathcal{L}_{\mathrm{ABC}}=-\log\left(1-e^{-\mathcal{L}_{\theta}(x_{i})}\right) \tag{8}
$$
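To see how Eq. (8) behaves, the sketch below evaluates it for a scalar reconstruction error. The function name and the `eps` guard against a zero error are additions for illustration and are not part of the equation.

```python
import math


def abc_loss(recon_error, eps=1e-12):
    """Eq. (8): -log(1 - exp(-L)) for a non-negative L1 error L.

    -expm1(-L) equals 1 - exp(-L) but is more accurate for small L.
    eps only guards against log(0) when the error is exactly zero.
    """
    return -math.log(max(-math.expm1(-recon_error), eps))
```

The loss is large when the anomaly reconstruction error is close to zero and decays toward zero as the error grows, so minimizing it pushes the reconstruction error of anomaly data up while keeping the loss value bounded in scale.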

The total loss function is a weighted sum of the individual losses. All losses for normal images are grouped together, and likewise for anomaly images. These two groups of losses are applied randomly to update the weights during training. Scale imbalances exist among the loss functions. Even if the scales were adjusted to the same range, their effects might differ, so we explore the weight of each loss by grid search. Because the ABC loss can have the largest scale, the weighted sum for normal data is set to more than twice the weighted sum for anomaly data. To avoid a huge and unnecessary search space, each loss weight is limited to the range 0.5∼1.5, and the grid search adjusts each weight in steps of 0.5. The total number of possible cases for the grid search is 314. The final weights are shown in Table 2.

Table 2: Weight combination of loss functions obtained by grid search

| Normal reconstruction L1 loss | ABC loss | Normal adversarial loss | Normal reconstruction patch L1 loss | Normal latent vector loss | Anomaly adversarial loss |
|---|---|---|---|---|---|
| 1.5 | 0.5 | 0.5 | 1.5 | 0.5 | 1.0 |
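The grid described above can be enumerated as in the following sketch. The dictionary keys are hypothetical names for the six losses, and the constraint is read here as "the sum of the normal-group weights exceeds twice the sum of the anomaly-group weights". The paper does not spell out the exact rule, so the number of candidates this sketch yields need not match the reported count.

```python
from itertools import product

NORMAL_KEYS = ("recon_l1", "adv", "patch_l1", "latent")
ANOMALY_KEYS = ("abc", "anomaly_adv")
GRID = (0.5, 1.0, 1.5)  # range 0.5 to 1.5 in steps of 0.5

# Weight combination reported in Table 2.
TABLE_2 = {"recon_l1": 1.5, "abc": 0.5, "adv": 0.5,
           "patch_l1": 1.5, "latent": 0.5, "anomaly_adv": 1.0}


def candidate_weights():
    """Yield weight dicts that satisfy the assumed group constraint."""
    keys = NORMAL_KEYS + ANOMALY_KEYS
    for values in product(GRID, repeat=len(keys)):
        weights = dict(zip(keys, values))
        normal_sum = sum(weights[k] for k in NORMAL_KEYS)
        anomaly_sum = sum(weights[k] for k in ANOMALY_KEYS)
        if normal_sum > 2.0 * anomaly_sum:
            yield weights


def weighted_loss(losses, weights, keys):
    """Weighted sum of the losses in one group (normal or anomaly)."""
    return sum(weights[k] * losses[k] for k in keys)
```

Under this reading, the Table 2 combination is one of the candidates: its normal-group weights sum to 4.0 and its anomaly-group weights sum to 1.5.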

3.5 Data Sampling

As mentioned in Section 3.2, the experimental datasets exhibit imbalance problems. The benchmark datasets MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100 have a class imbalance that shows up when sampling the data. In the real-world dataset of smartphone case surface defects, not only are the numbers of normal and defective samples imbalanced, but the frequency of occurrence also differs among the defect types, and the sizes of the image and the object (defect) are imbalanced as well. To resolve these imbalance problems, k-means clustering-based data sampling is performed to obtain a balanced data distribution. In the training stage for the benchmark datasets, all data of the normal class is used. For the anomaly case, the same number of samples is drawn from each class so that the total number of anomaly samples is similar to the number of normal samples. Here, k-means clustering is performed on each class, and data is sampled from each cluster so that the distribution is similar to that of the entire dataset. For the anomaly case of the defect dataset, data is sampled with the same method as for the benchmarks, and normal data is sampled in a number equal to the combined number of the three kinds of defects: scratch, stamped, and stain. The detailed numbers are given in Section 4.1.
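A minimal sketch of cluster-based sampling is given below. It uses a small plain-Python k-means in place of a library implementation and draws the same number of items from every cluster, as described in Section 3.2. The feature representation of each image (for example, flattened pixels), the number of clusters, and all function names are assumptions.

```python
import random


def sq_dist(p, q):
    """Squared Euclidean distance between two feature vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, iterations=20, seed=0):
    """Return a cluster label for every point (Lloyd's algorithm)."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest center for each point.
        for i, p in enumerate(points):
            labels[i] = min(range(k), key=lambda c: sq_dist(p, centers[c]))
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centers[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels


def sample_per_cluster(points, k, per_cluster, seed=0):
    """Indices of up to per_cluster randomly chosen points per cluster."""
    rng = random.Random(seed)
    labels = kmeans(points, k, seed=seed)
    chosen = []
    for c in range(k):
        idx = [i for i, lab in enumerate(labels) if lab == c]
        chosen.extend(rng.sample(idx, min(per_cluster, len(idx))))
    return sorted(chosen)
```

Sampling per cluster rather than uniformly at random keeps rare modes of a class represented in the training subset, which is the motivation the paper gives for this step.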

4 Experiments

In this section, we perform extensive experiments to validate the proposed anomaly detection method. We first evaluate our method on commonly used benchmark datasets: MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100. Next, we conduct experiments on a real-world anomaly detection dataset, the smartphone case defect dataset. Then we present the respective effects of the different loss function designs through an ablation study.

4.1 Datasets

The datasets used in the experiments include four standard image datasets: MNIST [27], Fashion-MNIST [47], CIFAR-10 [24], and CIFAR-100 [24]. Additionally, smartphone case defect data is used to evaluate performance in a real-world environment.

For the MNIST, Fashion-MNIST, and CIFAR-10 datasets, one class is defined as normal and the remaining nine classes are defined as anomaly. Ten experiments are performed in total, with each of the 10 classes defined as normal once. For the CIFAR-100 dataset, one of the 20 superclasses is defined as normal and the remaining 19 are defined as anomaly. Each superclass is defined as normal in turn, so 20 experiments are conducted in total. To resolve the imbalance between the number of normal and anomaly data and in the distribution of the sampled data, the method proposed in Section 3.5 is applied when sampling the training data. 6000 normal and anomaly images were used for training on MNIST and Fashion-MNIST, and 5000 were used for CIFAR-10. Additionally, MNIST and Fashion-MNIST images were resized to 32x32 so that the feature sizes match when they are concatenated in the network structure.
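The one-class-as-normal protocol can be written down as a short sketch. The function names are hypothetical, and the convention of labeling normal samples 0 and anomaly samples 1 is an assumption made for illustration.

```python
def one_vs_rest_splits(num_classes):
    """Yield (normal_class, anomaly_classes) for every experiment."""
    for normal in range(num_classes):
        yield normal, [c for c in range(num_classes) if c != normal]


def anomaly_labels(class_ids, normal_class):
    """Map class ids to 0 (normal) or 1 (anomaly) for one experiment."""
    return [0 if c == normal_class else 1 for c in class_ids]
```

Calling `one_vs_rest_splits(10)` covers the ten experiments on MNIST, Fashion-MNIST, and CIFAR-10, and `one_vs_rest_splits(20)` covers the twenty CIFAR-100 superclass experiments.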

The smartphone case defect dataset consists of normal, scratch, stamped, and stain classes, and comes in two main versions. The first contains patch images cropped to 100x100 from the original 2192x1000 images. Using the method from Section 3.5, each defect class is sampled to the size of the defect class with the fewest samples, and the normal data is sampled in a number similar to that of the defective data. 900 normal images and 906 anomaly images were used for training, and 600 normal images and 150 anomaly images were used for testing. In the experiments, images were resized from 100x100 to 128x128. The second dataset consists of patch images cropped to 548x500 and is sampled in the same way as the 100x100 patch images. 1800 normal images and 1814 anomaly images are used for training, and 2045 normal images and 453 anomaly images are used for testing.

4.2 Experimental Setups

Experiments are performed on an Intel Core i7-9700K @ 3.60GHz and an NVIDIA GeForce GTX 1080 Ti with the TensorFlow 1.14 deep learning framework. For augmentation on MNIST, images were randomly cropped by 0∼2 pixels from the boundary and resized back. For Fashion-MNIST, images were vertically and horizontally flipped, randomly cropped by 0∼2 pixels from the boundary, and resized back; the images were then rotated by 90, 180, or 270 degrees. For CIFAR-10 and CIFAR-100, hue, saturation, brightness, and contrast are varied in addition to the augmentation used for Fashion-MNIST. For the defect dataset, vertical and horizontal flipping are used along with rotation by 90, 180, or 270 degrees.
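The flip and rotation augmentation used for the defect dataset could look like the sketch below, written for a single-channel image stored as a list of rows. Applying each flip with probability 0.5 and always rotating by one of the three angles are assumptions; the text lists the operations but not how they are randomized.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom."""
    return img[::-1]


def rot90(img):
    """Rotate the image by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*img)][::-1]


def augment(img, rng):
    """Random flips followed by a rotation of 90, 180, or 270 degrees."""
    if rng.random() < 0.5:
        img = hflip(img)
    if rng.random() < 0.5:
        img = vflip(img)
    for _ in range(rng.choice([1, 2, 3])):
        img = rot90(img)
    return img
```

A call such as `augment(image, random.Random(0))` returns a transformed copy with the same pixel values rearranged, so the label of the image is unchanged.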

The hyperparameters used for training are listed in Table 3.

Table 3: Hyper parameters used for model training

| Group | Parameter | Value |
|---|---|---|
| Hyperparameters | Epoch | 300 |
| Hyperparameters | Batch size | 1 |
| Hyperparameters | Learning rate init | 0.0001 |
| Hyperparameters | Learning rate decay epoch | 50 |
| Hyperparameters | Learning rate decay factor | 0.5 |
| Parameters of patch reconstruction error loss (Eq. 3) | Patch size | 16 |
| Parameters of patch reconstruction error loss (Eq. 3) | Stride | 8 |
| Parameters of patch reconstruction error loss (Eq. 3) | Number of selected patch | |
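The learning rate settings in Table 3 suggest a step decay schedule, sketched below. Applying the decay factor again every 50 epochs, rather than once at epoch 50, is an assumption, and the function name is hypothetical.

```python
def learning_rate(epoch, init=1e-4, decay_epoch=50, decay_factor=0.5):
    """Step decay: multiply the rate by decay_factor every decay_epoch epochs."""
    return init * decay_factor ** (epoch // decay_epoch)
```

Under this reading, the rate stays at 0.0001 for epochs 0 to 49, halves to 0.00005 at epoch 50, and has been halved five times by the last of the 300 epochs.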