XLNet: Generalized Autoregressive Pretraining for Language Understanding

Zhilin Yang∗1, Zihang Dai∗12, Yiming Yang1, Jaime Carbonell1, Ruslan Salakhutdinov1, Quoc V. Le2

1Carnegie Mellon University, 2Google AI Brain Team

{zhiliny,dzihang,yiming,jgc,rsalakhu}@cs.cmu.edu, qvl@google.com

Abstract

With the capability of modeling bidirectional contexts, denoising autoencoding based pretraining like BERT achieves better performance than pretraining approaches based on autoregressive language modeling. However, relying on corrupting the input with masks, BERT neglects dependency between the masked positions and suffers from a pretrain-finetune discrepancy. In light of these pros and cons, we propose XLNet, a generalized autoregressive pretraining method that (1) enables learning bidirectional contexts by maximizing the expected likelihood over all permutations of the factorization order and (2) overcomes the limitations of BERT thanks to its autoregressive formulation. Furthermore, XLNet integrates ideas from Transformer-XL, the state-of-the-art autoregressive model, into pretraining. Empirically, under comparable experiment settings, XLNet outperforms BERT on 20 tasks, often by a large margin, including question answering, natural language inference, sentiment analysis, and document ranking.

1 Introduction

Unsupervised representation learning has been highly successful in the domain of natural language processing [7, 22, 27, 28, 10]. Typically, these methods first pretrain neural networks on large-scale unlabeled text corpora, and then finetune the models or representations on downstream tasks. Under this shared high-level idea, different unsupervised pretraining objectives have been explored in the literature. Among them, autoregressive (AR) language modeling and autoencoding (AE) have been the two most successful pretraining objectives.

AR language modeling seeks to estimate the probability distribution of a text corpus with an autoregressive model [7, 27, 28]. Specifically, given a text sequence $\mathbf{x}=(x_1,\cdots,x_T)$, AR language modeling factorizes the likelihood into a forward product $p(\mathbf{x})=\prod_{t=1}^{T}p(x_t\mid\mathbf{x}_{<t})$ or a backward one $p(\mathbf{x})=\prod_{t=T}^{1}p(x_t\mid\mathbf{x}_{>t})$. A parametric model (e.g., a neural network) is trained to model each conditional distribution. Since an AR language model is only trained to encode a unidirectional context (either forward or backward), it is not effective at modeling deep bidirectional contexts. In contrast, downstream language understanding tasks often require bidirectional context information. This results in a gap between AR language modeling and effective pretraining.
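
As a minimal illustration (a sketch, not part of the original method), the pure-Python snippet below uses a hypothetical toy joint distribution `joint` over length-3 binary sequences and computes every conditional exactly by marginalization. With exact conditionals, the forward and backward factorizations recover the same probability: the factorizations are identities, and the limitation of an AR model is only which side of the context each learned conditional gets to see. The helper names `marginal`, `conditional`, and `factorized_prob` are illustrative.

```python
from fractions import Fraction
from itertools import product

# Hypothetical toy joint distribution over length-3 sequences of a binary vocabulary.
VOCAB = (0, 1)
T = 3
weights = {seq: Fraction(i + 1) for i, seq in enumerate(product(VOCAB, repeat=T))}
total = sum(weights.values())
joint = {seq: w / total for seq, w in weights.items()}


def marginal(fixed):
    """Probability that the positions in `fixed` (index -> token) take those values."""
    return sum(p for seq, p in joint.items() if all(seq[i] == v for i, v in fixed.items()))


def conditional(x, t, context):
    """Exact p(x_t | x_context), obtained by marginalizing the joint."""
    ctx = {i: x[i] for i in context}
    return marginal({**ctx, t: x[t]}) / marginal(ctx)


def factorized_prob(x, order):
    """Product of conditionals p(x_{z_t} | x_{z_<t}) along a factorization order."""
    prob = Fraction(1)
    for step, t in enumerate(order):
        prob *= conditional(x, t, order[:step])
    return prob


x = (1, 0, 1)
forward = factorized_prob(x, [0, 1, 2])   # p(x1) p(x2|x1) p(x3|x1,x2)
backward = factorized_prob(x, [2, 1, 0])  # p(x3) p(x2|x3) p(x1|x2,x3)
print(forward, backward, joint[x])
```

Running it prints `1/6 1/6 1/6`; exact `Fraction` arithmetic avoids any floating-point mismatch between the two orders.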

In comparison, AE-based pretraining does not perform explicit density estimation but instead aims to reconstruct the original data from corrupted input. A notable example is BERT [10], which has been the state-of-the-art pretraining approach. Given the input token sequence, a certain portion of tokens are replaced by a special symbol [MASK], and the model is trained to recover the original tokens from the corrupted version. Since density estimation is not part of the objective, BERT is allowed to utilize bidirectional contexts for reconstruction. As an immediate benefit, this closes the aforementioned bidirectional information gap in AR language modeling, leading to improved performance. However, the artificial symbols like [MASK] used by BERT during pretraining are absent from real data at finetuning time, resulting in a pretrain-finetune discrepancy. Moreover, since the predicted tokens are masked in the input, BERT is not able to model the joint probability using the product rule as in AR language modeling. In other words, BERT assumes the predicted tokens are independent of each other given the unmasked tokens, which is an oversimplification, as high-order, long-range dependency is prevalent in natural language [9].
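
The corruption step described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `corrupt` and `reconstruction_terms` are hypothetical helpers, every selected token becomes [MASK] (BERT's practice of sometimes keeping or randomly replacing a selected token is omitted), and the 15% rate is the example rate used in Section 2.1. The point to notice is that every masked target is reconstructed from the same corrupted input.

```python
import random

MASK = "[MASK]"


def corrupt(tokens, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the corrupted sequence x_hat and the 0/1 indicators m_t
    (m_t = 1 means position t was masked). Simplified: selected tokens
    always become [MASK]."""
    rng = random.Random(seed)
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    x_hat = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    m = [1 if i in positions else 0 for i in range(len(tokens))]
    return x_hat, m


def reconstruction_terms(tokens, x_hat, m):
    """One (position, original token, conditioning input) term per masked position.

    All terms condition on the same x_hat and never on each other's targets."""
    return [(t, tokens[t], tuple(x_hat)) for t in range(len(tokens)) if m[t] == 1]


tokens = "the quick brown fox jumps over the lazy dog near the river bank on a warm sunny day".split()
x_hat, m = corrupt(tokens)
terms = reconstruction_terms(tokens, x_hat, m)
```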

Faced with the pros and cons of existing language pretraining objectives, in this work we propose XLNet, a generalized autoregressive method that leverages the best of both AR language modeling and AE while avoiding their limitations.

• Firstly, instead of using a fixed forward or backward factorization order as in conventional AR models, XLNet maximizes the expected log-likelihood of a sequence with respect to all possible permutations of the factorization order. Thanks to the permutation operation, the context for each position can consist of tokens from both the left and the right. In expectation, each position learns to utilize contextual information from all positions, i.e., capturing bidirectional context.

• Secondly, as a generalized AR language model, XLNet does not rely on data corruption. Hence, XLNet does not suffer from the pretrain-finetune discrepancy that BERT is subject to. Meanwhile, the autoregressive objective also provides a natural way to use the product rule for factorizing the joint probability of the predicted tokens, eliminating the independence assumption made in BERT.

In addition to a novel pretraining objective, XLNet improves architectural designs for pretraining.

• Inspired by the latest advancements in AR language modeling, XLNet integrates the segment recurrence mechanism and relative encoding scheme of Transformer-XL [9] into pretraining, which empirically improves performance, especially for tasks involving longer text sequences.

• Naively applying a Transformer(-XL) architecture to permutation-based language modeling does not work because the factorization order is arbitrary and the target is ambiguous. As a solution, we propose to reparameterize the Transformer(-XL) network to remove the ambiguity.

Empirically, under comparable experiment settings, XLNet consistently outperforms BERT [10] on a wide spectrum of problems, including GLUE language understanding tasks, reading comprehension tasks like SQuAD and RACE, text classification tasks such as Yelp and IMDB, and the ClueWeb09-B document ranking task.

Related Work

The idea of permutation-based AR modeling has been explored in [32, 12], but there are several key differences. Firstly, previous models aim to improve density estimation by baking an “orderless” inductive bias into the model, while XLNet is motivated by enabling AR language models to learn bidirectional contexts. Secondly, on the technical side, to construct a valid target-aware prediction distribution, XLNet incorporates the target position into the hidden state via two-stream attention, while previous permutation-based AR models relied on implicit position awareness inherent to their MLP architectures. Finally, for both orderless NADE and XLNet, we would like to emphasize that “orderless” does not mean that the input sequence can be randomly permuted, but that the model allows for different factorization orders of the distribution.

Another related idea is to perform autoregressive denoising in the context of text generation [11], though it only considers a fixed order.

2 Proposed Method

2.1 Background

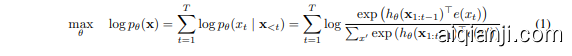

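In this section, we first review and compare conventional AR language modeling and BERT for language pretraining. Given a text sequence $\mathbf{x}=[x_1,\cdots,x_T]$, AR language modeling performs pretraining by maximizing the likelihood under the forward autoregressive factorization:

$$\max_{\theta}\;\log p_{\theta}(\mathbf{x})=\sum_{t=1}^{T}\log p_{\theta}(x_t\mid\mathbf{x}_{<t})=\sum_{t=1}^{T}\log\frac{\exp\left(h_{\theta}(\mathbf{x}_{1:t-1})^{\top}e(x_t)\right)}{\sum_{x'}\exp\left(h_{\theta}(\mathbf{x}_{1:t-1})^{\top}e(x')\right)} \tag{1}$$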

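where $h_{\theta}(\mathbf{x}_{1:t-1})$ is a context representation produced by neural models, such as RNNs or Transformers, and $e(x)$ denotes the embedding of $x$. In comparison, BERT is based on denoising autoencoding. Specifically, for a text sequence $\mathbf{x}$, BERT first constructs a corrupted version $\hat{\mathbf{x}}$ by randomly setting a portion (e.g., 15%) of tokens in $\mathbf{x}$ to a special symbol [MASK]. Let the masked tokens be $\bar{\mathbf{x}}$. The training objective is to reconstruct $\bar{\mathbf{x}}$ from $\hat{\mathbf{x}}$:

$$\max_{\theta}\;\log p_{\theta}(\bar{\mathbf{x}}\mid\hat{\mathbf{x}})\approx\sum_{t=1}^{T}m_t\log p_{\theta}(x_t\mid\hat{\mathbf{x}})=\sum_{t=1}^{T}m_t\log\frac{\exp\left(H_{\theta}(\hat{\mathbf{x}})_t^{\top}e(x_t)\right)}{\sum_{x'}\exp\left(H_{\theta}(\hat{\mathbf{x}})_t^{\top}e(x')\right)} \tag{2}$$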

where $m_t=1$ indicates $x_t$ is masked, and $H_{\theta}$ is a Transformer that maps a length-$T$ text sequence $\mathbf{x}$ into a sequence of hidden vectors $H_{\theta}(\mathbf{x})=[H_{\theta}(\mathbf{x})_1,H_{\theta}(\mathbf{x})_2,\cdots,H_{\theta}(\mathbf{x})_T]$. The pros and cons of the two pretraining objectives are compared in the following aspects:

• Independence Assumption: As emphasized by the $\approx$ sign in Eq. (2), BERT factorizes the joint conditional probability $p(\bar{\mathbf{x}}\mid\hat{\mathbf{x}})$ based on an independence assumption that all masked tokens $\bar{\mathbf{x}}$ are separately reconstructed. In comparison, the AR language modeling objective (1) factorizes $p_{\theta}(\mathbf{x})$ using the product rule, which holds universally without such an independence assumption.

• Input noise: The input to BERT contains artificial symbols like [MASK] that never occur in downstream tasks, which creates a pretrain-finetune discrepancy. Replacing [MASK] with original tokens as in [10] does not solve the problem because original tokens can only be used with a small probability; otherwise Eq. (2) would be trivial to optimize. In comparison, AR language modeling does not rely on any input corruption and does not suffer from this issue.

• Context dependency: The AR representation $h_{\theta}(\mathbf{x}_{1:t-1})$ is only conditioned on the tokens before position $t$ (i.e., tokens to the left), while the BERT representation $H_{\theta}(\mathbf{x})_t$ has access to the contextual information on both sides. As a result, the BERT objective allows the model to be pretrained to better capture bidirectional context.
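
To make the independence assumption concrete, the sketch below uses a hypothetical conditional distribution over two masked slots given “is a city”, split evenly between “New York” and “Los Angeles” (the numbers are invented for illustration). Even with perfectly learned per-slot marginals, a model that reconstructs each slot separately can at best represent their product, which underrates the correct pair and puts mass on the invalid pair “New Angeles”; the product rule recovers the joint exactly. The function names are illustrative.

```python
from fractions import Fraction

# Hypothetical distribution over the two masked slots, given "is a city".
joint = {("New", "York"): Fraction(1, 2), ("Los", "Angeles"): Fraction(1, 2)}


def slot_marginal(slot, word):
    """p(slot = word), summing the joint over the other slot."""
    return sum(p for pair, p in joint.items() if pair[slot] == word)


def independent_prob(pair):
    """Best case for separate reconstruction: product of per-slot marginals."""
    return slot_marginal(0, pair[0]) * slot_marginal(1, pair[1])


def product_rule_prob(pair):
    """p(a, b) = p(a) * p(b | a): exact, no independence assumption."""
    p_first = slot_marginal(0, pair[0])
    if p_first == 0:
        return Fraction(0)
    p_second_given_first = joint.get(pair, Fraction(0)) / p_first
    return p_first * p_second_given_first


for pair in [("New", "York"), ("New", "Angeles")]:
    print(pair, independent_prob(pair), product_rule_prob(pair))
```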

2.2 Objective: Permutation Language Modeling

According to the comparison above, AR language modeling and BERT each have unique advantages over the other. A natural question to ask is whether there exists a pretraining objective that brings the advantages of both while avoiding their weaknesses.

Borrowing ideas from orderless NADE [32], we propose the permutation language modeling objective that not only retains the benefits of AR models but also allows models to capture bidirectional contexts. Specifically, for a sequence $\mathbf{x}$ of length $T$, there are $T!$ different orders to perform a valid autoregressive factorization. Intuitively, if model parameters are shared across all factorization orders, in expectation, the model will learn to gather information from all positions on both sides.

To formalize the idea, let $\mathcal{Z}_T$ be the set of all possible permutations of the length-$T$ index sequence $[1,2,\ldots,T]$. We use $z_t$ and $\mathbf{z}_{<t}$ to denote the $t$-th element and the first $t-1$ elements of a permutation $\mathbf{z}\in\mathcal{Z}_T$. Then, our proposed permutation language modeling objective can be expressed as follows:

$$\max_{\theta}\;\mathbb{E}_{\mathbf{z}\sim\mathcal{Z}_T}\left[\sum_{t=1}^{T}\log p_{\theta}(x_{z_t}\mid\mathbf{x}_{\mathbf{z}_{<t}})\right] \tag{3}$$

Essentially, for a text sequence $\mathbf{x}$, we sample a factorization order $\mathbf{z}$ at a time and decompose the likelihood $p_{\theta}(\mathbf{x})$ according to that factorization order. Since the same model parameter $\theta$ is shared across all factorization orders during training, in expectation, $x_t$ has seen every possible element $x_i\neq x_t$ in the sequence, and is hence able to capture the bidirectional context. Moreover, as this objective fits into the AR framework, it naturally avoids the independence assumption and the pretrain-finetune discrepancy discussed in Section 2.1.
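
The “in expectation” argument can be checked by brute force for a small $T$. The sketch below (hypothetical helpers `contexts` and `context_frequency`, 0-based positions) enumerates all $T!$ orders and counts how often each other position falls in a target's context. Every ordered pair appears in exactly half of the orders, so context comes from both the left and the right. Enumerating $T!$ orders is only feasible for tiny $T$, which is why the objective samples one order at a time.

```python
from itertools import permutations


def contexts(order):
    """Map each position to the set of positions preceding it in the factorization order."""
    return {pos: set(order[:step]) for step, pos in enumerate(order)}


def context_frequency(T):
    """Fraction of the T! orders in which `other` is in the context of `target`."""
    orders = list(permutations(range(T)))
    counts = {(i, j): 0 for i in range(T) for j in range(T) if i != j}
    for order in orders:
        for target, ctx in contexts(order).items():
            for other in ctx:
                counts[(target, other)] += 1
    return {pair: c / len(orders) for pair, c in counts.items()}


freq = context_frequency(4)
print(freq[(0, 3)], freq[(3, 0)])  # a right-hand and a left-hand neighbour: 0.5 0.5
```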

Remark on Permutation

The proposed objective only permutes the factorization order, not the sequence order. In other words, we keep the original sequence order, use the positional encodings corresponding to the original sequence, and rely on a proper attention mask in Transformers to achieve permutation of the factorization order. Note that this choice is necessary, since the model will only encounter text sequences with the natural order during finetuning.
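
A minimal sketch of such masks, assuming 0-based positions and a hypothetical helper `permutation_masks`: tokens stay in their original slots, and only the mask encodes the order. Row `i` says which positions `i` may attend to. The content-stream mask includes `i` itself, while the query-stream mask (used by the two-stream attention introduced in Section 2.3) excludes it. The example order `[2, 1, 3, 0]` is the 1-based order 3, 2, 4, 1.

```python
def permutation_masks(order):
    """Attention masks realizing a factorization order without reordering tokens.

    order: original positions listed in factorization order.
    content[i][j] is True if j comes at or before i in the order;
    query[i][j] is True only if j comes strictly before i."""
    T = len(order)
    rank = [0] * T
    for step, pos in enumerate(order):
        rank[pos] = step
    content = [[rank[j] <= rank[i] for j in range(T)] for i in range(T)]
    query = [[rank[j] < rank[i] for j in range(T)] for i in range(T)]
    return content, query


content, query = permutation_masks([2, 1, 3, 0])
for row in query:
    print([int(v) for v in row])
```

Because positional encodings still follow the original indices, the same masks can be applied to an unshuffled batch.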

To provide an overall picture, Figure 4 in Appendix A.7 shows an example of predicting the token $x_3$ given the same input sequence $\mathbf{x}$ but under different factorization orders.

2.3 Architecture: Two-Stream Self-Attention for Target-Aware Representations

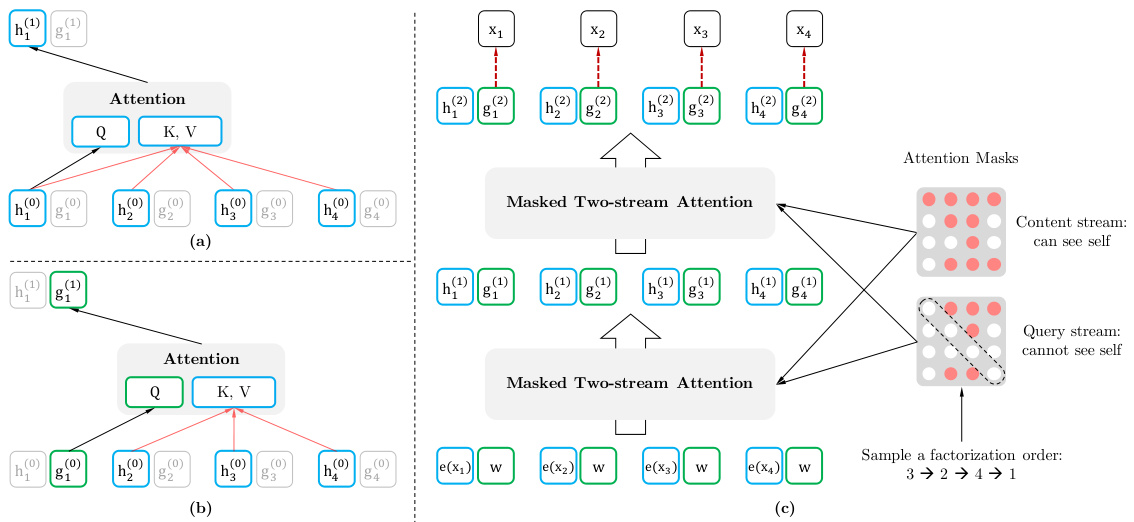

Figure 1: (a): Content stream attention, which is the same as the standard self-attention. (b): Query stream attention, which does not have access to information about the content $x_{z_t}$. (c): Overview of the permutation language modeling training with two-stream attention.

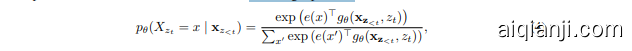

While the permutation language modeling objective has desired properties, a naive implementation with the standard Transformer parameterization may not work. To see the problem, assume we parameterize the next-token distribution $p_{\theta}(X_{z_t}\mid\mathbf{x}_{\mathbf{z}_{<t}})$ using the standard Softmax formulation, i.e., $p_{\theta}(X_{z_t}=x\mid\mathbf{x}_{\mathbf{z}_{<t}})=\frac{\exp\left(e(x)^{\top}h_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}})\right)}{\sum_{x'}\exp\left(e(x')^{\top}h_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}})\right)}$, where $h_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}})$ denotes the hidden representation of $\mathbf{x}_{\mathbf{z}_{<t}}$ produced by the shared Transformer network after proper masking. Now notice that the representation $h_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}})$ does not depend on which position it will predict, i.e., the value of $z_t$. Consequently, the same distribution is predicted regardless of the target position, so the model cannot learn useful representations (see Appendix A.1 for a concrete example). To avoid this problem, we propose to reparameterize the next-token distribution to be target-position aware:

$$p_{\theta}(X_{z_t}=x\mid\mathbf{x}_{\mathbf{z}_{<t}})=\frac{\exp\left(e(x)^{\top}g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)\right)}{\sum_{x'}\exp\left(e(x')^{\top}g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)\right)} \tag{4}$$

where $g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)$ denotes a new type of representation that additionally takes the target position $z_t$ as input.
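
The ambiguity and its fix can be seen in a toy setting. In the sketch below, the 2-d word and position embeddings are invented, and `h` (mean of context embeddings) and `g` (that mean plus the target's position embedding) are hypothetical stand-ins for $h_{\theta}$ and $g_{\theta}$, not the Transformer parameterization itself. With the single context word “city” at position 2, a target-unaware representation yields one distribution whether the target is position 1 or 3. The target-aware one can predict “New” for position 1 and “York” for position 3.

```python
import math

# Hypothetical toy embeddings: 2-d vectors for a 3-word vocabulary and 4 positions.
WORD_EMB = {"New": [1.0, 0.0], "York": [0.0, 1.0], "city": [0.5, 0.5]}
POS_EMB = [[0.0, 0.0], [2.0, -1.0], [0.0, 0.0], [-1.0, 2.0]]
VOCAB = list(WORD_EMB)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(logits):
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def h(context_words):
    """Target-unaware context summary: mean of the context word embeddings."""
    dim = len(WORD_EMB[VOCAB[0]])
    return [sum(WORD_EMB[w][k] for w in context_words) / len(context_words) for k in range(dim)]


def g(context_words, target_pos):
    """Target-aware summary: the same mean plus the target position's embedding."""
    return [c + p for c, p in zip(h(context_words), POS_EMB[target_pos])]


def next_token_dist(rep):
    """Softmax over e(x)^T rep for every word x in the vocabulary."""
    return dict(zip(VOCAB, softmax([dot(WORD_EMB[w], rep) for w in VOCAB])))


context = ["city"]                     # the word at position 2
unaware = next_token_dist(h(context))  # same for target position 1 or 3
aware_1 = next_token_dist(g(context, 1))
aware_3 = next_token_dist(g(context, 3))
print(max(aware_1, key=aware_1.get), max(aware_3, key=aware_3.get))  # New York
```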

Two-Stream Self-Attention

While the idea of target-aware representations removes the ambiguity in target prediction, how to formulate $g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)$ remains a non-trivial problem. Among other possibilities, we propose to “stand” at the target position $z_t$ and rely on the position $z_t$ to gather information from the context $\mathbf{x}_{\mathbf{z}_{<t}}$ through attention. For this parameterization to work, there are two requirements that are contradictory in a standard Transformer architecture: (1) to predict the token $x_{z_t}$, $g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)$ should only use the position $z_t$ and not the content $x_{z_t}$, otherwise the objective becomes trivial; (2) to predict the other tokens $x_{z_j}$ with $j>t$, $g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)$ should also encode the content $x_{z_t}$ to provide full contextual information. To resolve this contradiction, we propose to use two sets of hidden representations instead of one:

• The content representation $h_{\theta}(\mathbf{x}_{\mathbf{z}_{\le t}})$, abbreviated as $h_{z_t}$, which serves a similar role to the standard hidden states in Transformer. This representation encodes both the context and $x_{z_t}$ itself.

• The query representation $g_{\theta}(\mathbf{x}_{\mathbf{z}_{<t}},z_t)$, abbreviated as $g_{z_t}$, which only has access to the contextual information $\mathbf{x}_{\mathbf{z}_{<t}}$ and the position $z_t$, but not the content $x_{z_t}$, as discussed above.

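Computationally, the first-layer query stream is initialized with a trainable vector, i.e., $g_i^{(0)}=w$, while the content stream is set to the corresponding word embedding, i.e., $h_i^{(0)}=e(x_i)$. For each self-attention layer $m=1,\ldots,M$, the two streams of representations are schematically updated with a shared set of parameters as follows (illustrated in Figures 1 (a) and (b)):

$$g_{z_t}^{(m)}\leftarrow\text{Attention}\big(\mathrm{Q}=g_{z_t}^{(m-1)},\ \mathrm{KV}=\mathbf{h}_{\mathbf{z}_{<t}}^{(m-1)};\ \theta\big)\qquad\text{(query stream: uses } z_t \text{ but cannot see } x_{z_t}\text{)}$$

$$h_{z_t}^{(m)}\leftarrow\text{Attention}\big(\mathrm{Q}=h_{z_t}^{(m-1)},\ \mathrm{KV}=\mathbf{h}_{\mathbf{z}_{\le t}}^{(m-1)};\ \theta\big)\qquad\text{(content stream: uses both } z_t \text{ and } x_{z_t}\text{)}$$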

where Q, K, V denote the query, key, and value in an attention operation [33]. The update rule of the content representations is exactly the same as the standard self-attention, so during finetuning, we can simply drop the query stream and use the content stream as a normal Transformer(-XL). Finally, we can use the last-layer query representation $g_{z_t}^{(M)}$ to compute Eq. (4).
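
The visibility pattern of the two streams can be sketched in pure Python. This is a toy under loud assumptions: a single head, keys reused as values with no Q/K/V projections, and no positional encodings, residual connections, layer normalization, or feed-forward layers. `attend` and `two_stream` are hypothetical names. Because positional encodings are omitted, it does not show how $z_t$ enters the query stream. It only shows that the query state at a position never depends on that position's own token embedding, while the content state does.

```python
import math


def attend(query_vec, keys):
    """Toy single-head dot-product attention; the keys double as values."""
    if not keys:
        # Nothing visible (first position in the order): keep the input unchanged.
        return list(query_vec)
    d = len(query_vec)
    scores = [sum(q * k for q, k in zip(query_vec, key)) / math.sqrt(d) for key in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(wt * key[i] for wt, key in zip(weights, keys)) / total for i in range(d)]


def two_stream(embeddings, order, w, num_layers=2):
    """Toy two-stream self-attention under the factorization order `order`.

    embeddings: e(x_i) for positions 0..T-1 in the original sequence order.
    w: the shared vector that initializes every query-stream state.
    """
    T = len(embeddings)
    rank = {pos: step for step, pos in enumerate(order)}
    h = [list(e) for e in embeddings]  # content stream, h^(0) = e(x_i)
    g = [list(w) for _ in range(T)]    # query stream, g^(0) = w
    for _ in range(num_layers):
        new_h, new_g = [], []
        for i in range(T):
            # Content stream: content states at or before i in the order.
            new_h.append(attend(h[i], [h[j] for j in range(T) if rank[j] <= rank[i]]))
            # Query stream: content states strictly before i, never i's own content.
            new_g.append(attend(g[i], [h[j] for j in range(T) if rank[j] < rank[i]]))
        h, g = new_h, new_g
    return h, g


emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
order = [2, 1, 3, 0]
w = [0.1, 0.2]
h1, g1 = two_stream(emb, order, w)
emb2 = [list(e) for e in emb]
emb2[0] = [-1.0, 2.0]  # change only the token at position 0 (last in the order)
h2, g2 = two_stream(emb2, order, w)
```

Comparing `g1[0]` with `g2[0]` shows the query state of position 0 is unchanged, while `h1[0]` and `h2[0]` differ.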

Partial Prediction

While the permutation language modeling objective (3) has several benefits, it is a much more challenging optimization problem due to the permutation, and it led to slow convergence in preliminary experiments. To reduce the optimization difficulty, we choose to only predict the last tokens in a factorization order. Formally, we split $\mathbf{z}$ into a non-target subsequence $\mathbf{z}_{\le c}$ and a target subsequence $\mathbf{z}_{>c}$, where $c$ is the cutting point. The objective is to maximize the log-likelihood of the target subsequence conditioned on the non-target subsequence, i.e.,

$$\max_{\theta}\;\mathbb{E}_{\mathbf{z}\sim\mathcal{Z}_T}\left[\log p_{\theta}(\mathbf{x}_{\mathbf{z}_{>c}}\mid\mathbf{x}_{\mathbf{z}_{\le c}})\right]=\mathbb{E}_{\mathbf{z}\sim\mathcal{Z}_T}\left[\sum_{t=c+1}^{|\mathbf{z}|}\log p_{\theta}(x_{z_t}\mid\mathbf{x}_{\mathbf{z}_{<t}})\right] \tag{5}$$

Note that $\mathbf{z}_{>c}$ is chosen as the target because it possesses the longest context in the sequence given the current factorization order $\mathbf{z}$. A hyperparameter $K$ is used such that about $1/K$ of the tokens are selected for prediction; i.e., $|\mathbf{z}|/(|\mathbf{z}|-c)\approx K$. For unselected tokens, their query representations need not be computed, which saves both computation and memory.
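
Choosing the cutting point from $K$ can be sketched as follows. The rounding rule and the helper name `split_targets` are assumptions for illustration; the text only requires $|\mathbf{z}|/(|\mathbf{z}|-c)\approx K$. Python's 0-based slicing makes `order[:c]` the first $c$ elements, i.e., $\mathbf{z}_{\le c}$. The values $T=512$ (the pretraining sequence length) and $K=6$ are example inputs, not a claim about the configuration actually used.

```python
import random


def split_targets(order, K):
    """Cut a factorization order so that roughly 1/K of the tokens are targets.

    Returns (c, non_targets, targets): targets = order[c:], the last tokens in
    the order (longest contexts), and len(order) / (len(order) - c) is about K."""
    num_predict = max(1, round(len(order) / K))
    c = len(order) - num_predict
    return c, order[:c], order[c:]


rng = random.Random(0)
order = list(range(512))
rng.shuffle(order)  # a sampled factorization order z
c, non_targets, targets = split_targets(order, K=6)
print(c, len(targets))  # 427 85
```

Only the 85 target positions would need query-stream states; the other 427 only need content-stream states.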

2.4 Incorporating Ideas from Transformer-XL

Since our objective function fits in the AR framework, we incorporate the state-of-the-art AR language model, Transformer-XL [9], into our pretraining framework, and name our method after it. We integrate two important techniques in Transformer-XL, namely the relative positional encoding scheme and the segment recurrence mechanism. We apply relative positional encodings based on the original sequence as discussed earlier, which is straightforward. Now we discuss how to integrate the recurrence mechanism into the proposed permutation setting and enable the model to reuse hidden states from previous segments. Without loss of generality, suppose we have two segments taken from a long sequence $\mathbf{s}$; i.e., $\tilde{\mathbf{x}}=\mathbf{s}_{1:T}$ and $\mathbf{x}=\mathbf{s}_{T+1:2T}$. Let $\tilde{\mathbf{z}}$ and $\mathbf{z}$ be permutations of $[1\cdots T]$ and $[T+1\cdots 2T]$ respectively. Then, based on the permutation $\tilde{\mathbf{z}}$, we process the first segment, and then cache the obtained content representations $\tilde{\mathbf{h}}^{(m)}$ for each layer $m$. Then, for the next segment $\mathbf{x}$, the attention update with memory can be written as

$$h_{z_t}^{(m)}\leftarrow\text{Attention}\big(\mathrm{Q}=h_{z_t}^{(m-1)},\ \mathrm{KV}=\big[\tilde{\mathbf{h}}^{(m-1)},\mathbf{h}_{\mathbf{z}_{\le t}}^{(m-1)}\big];\ \theta\big)$$

where $[\cdot,\cdot]$ denotes concatenation along the sequence dimension. Notice that positional encodings only depend on the actual positions in the original sequence. Thus, the above attention update is independent of $\tilde{\mathbf{z}}$ once the representations $\tilde{\mathbf{h}}^{(m)}$ are obtained. This allows caching and reusing the memory without knowing the factorization order of the previous segment. In expectation, the model learns to utilize the memory over all factorization orders of the last segment. The query stream can be computed in the same way. Finally, Figure 1 (c) presents an overview of the proposed permutation language modeling with two-stream attention (see Appendix A.7 for a more detailed illustration).
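
A small sketch of the resulting mask, assuming 0-based positions and a hypothetical helper `content_mask_with_memory`: cached memory columns are prepended and are visible to every position of the current segment, and the remaining columns follow the current permutation. The function never takes the previous segment's order $\tilde{\mathbf{z}}$ as an argument, which mirrors the observation that the update does not depend on it once $\tilde{\mathbf{h}}^{(m)}$ is cached.

```python
def content_mask_with_memory(order, mem_len):
    """Content-stream mask for the current segment with cached memory prepended.

    order: factorization order z of the current segment (positions 0..T-1).
    mem_len: number of cached positions from the previous segment.
    Row i is a current position: the first mem_len columns (memory) are always
    visible, and the remaining T columns follow the permutation mask."""
    T = len(order)
    rank = {pos: step for step, pos in enumerate(order)}
    return [[True] * mem_len + [rank[j] <= rank[i] for j in range(T)] for i in range(T)]


for order in ([0, 1, 2], [2, 0, 1], [1, 2, 0]):
    mask = content_mask_with_memory(order, mem_len=4)
    # Memory columns are fully visible no matter which order z was sampled.
    assert all(all(row[:4]) for row in mask)
```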

2.5 Modeling Multiple Segments

Many downstream tasks have multiple input segments, e.g., a question and a context paragraph in question answering. We now discuss how we pretrain XLNet to model multiple segments in the autoregressive framework. During the pretraining phase, following BERT, we randomly sample two segments (either from the same context or not) and treat the concatenation of the two segments as one sequence to perform permutation language modeling. We only reuse the memory that belongs to the same context. Specifically, the input to our model is the same as BERT: [CLS, A, SEP, B, SEP], where “SEP” and “CLS” are two special symbols and “A” and “B” are the two segments. Although we follow the two-segment data format, XLNet-Large does not use the objective of next sentence prediction [10] as it does not show consistent improvement in our ablation study (see Section 3.4).
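
The [CLS, A, SEP, B, SEP] packing can be sketched as below. The helper `build_pair_input`, the literal token strings, and the choice to assign [CLS] and the first [SEP] to segment A are all assumptions for illustration. The segment ids are only used to decide whether two positions share a segment, as in the relative segment encodings described next.

```python
def build_pair_input(seg_a, seg_b, cls="[CLS]", sep="[SEP]"):
    """Pack two segments as [CLS, A, SEP, B, SEP] and return tokens plus segment ids.

    Assumption: [CLS] and the first [SEP] belong to segment 0 (A), and the
    trailing [SEP] belongs to segment 1 (B)."""
    tokens = [cls] + seg_a + [sep] + seg_b + [sep]
    seg_ids = [0] * (len(seg_a) + 2) + [1] * (len(seg_b) + 1)
    return tokens, seg_ids


tokens, seg_ids = build_pair_input(["what", "is", "xlnet"], ["a", "model"])
print(list(zip(tokens, seg_ids)))
```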

Relative Segment Encodings

Architecturally, unlike BERT, which adds an absolute segment embedding to the word embedding at each position, we extend the idea of relative encodings from Transformer-XL to also encode the segments. Given a pair of positions $i$ and $j$ in the sequence, if $i$ and $j$ are from the same segment, we use a segment encoding $\mathbf{s}_{ij}=\mathbf{s}_{+}$, or otherwise $\mathbf{s}_{ij}=\mathbf{s}_{-}$, where $\mathbf{s}_{+}$ and $\mathbf{s}_{-}$ are learnable model parameters for each attention head. In other words, we only consider whether the two positions are within the same segment, as opposed to considering which specific segments they are from. This is consistent with the core idea of relative encodings, i.e., only modeling the relationships between positions. When $i$ attends to $j$, the segment encoding $\mathbf{s}_{ij}$ is used to compute an attention weight $a_{ij}=(\mathbf{q}_i+\mathbf{b})^{\top}\mathbf{s}_{ij}$, where $\mathbf{q}_i$ is the query vector as in a standard attention operation and $\mathbf{b}$ is a learnable head-specific bias vector. Finally, the value $a_{ij}$ is added to the normal attention weight. There are two benefits of using relative segment encodings. First, the inductive bias of relative encodings improves generalization [9]. Second, it opens the possibility of finetuning on tasks that have more than two input segments, which is not possible using absolute segment encodings.
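
The score $a_{ij}=(\mathbf{q}_i+\mathbf{b})^{\top}\mathbf{s}_{ij}$ for one head can be sketched as follows. `relative_segment_scores` is a hypothetical helper, and the small integer vectors stand in for learned parameters. Because only “same segment or not” is encoded, the same two vectors handle any number of segments, as the second call with three segments shows.

```python
def relative_segment_scores(q, seg_ids, s_plus, s_minus, b):
    """a_ij = (q_i + b)^T s_ij, with s_ij = s_plus if i and j share a segment, else s_minus.

    q: per-position query vectors for one head; s_plus, s_minus, b: that head's
    vectors. Returns the T x T matrix of a_ij, to be added to the normal
    attention scores."""
    T = len(seg_ids)
    scores = []
    for i in range(T):
        shifted = [qi + bi for qi, bi in zip(q[i], b)]
        row = []
        for j in range(T):
            s_ij = s_plus if seg_ids[i] == seg_ids[j] else s_minus
            row.append(sum(x * y for x, y in zip(shifted, s_ij)))
        scores.append(row)
    return scores


q = [[1, 0], [0, 1], [1, 1]]
two_segments = relative_segment_scores(q, [0, 0, 1], s_plus=[1, 0], s_minus=[0, 1], b=[0, 0])
three_segments = relative_segment_scores(q, [0, 1, 2], s_plus=[1, 0], s_minus=[0, 1], b=[0, 0])
```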

2.6 Discussion

Comparing Eq. (2) and (5), we observe that both BERT and XLNet perform partial prediction, i.e., only predicting a subset of tokens in the sequence. This is a necessary choice for BERT because if all tokens are masked, it is impossible to make any meaningful predictions. In addition, for both BERT and XLNet, partial prediction plays a role in reducing optimization difficulty by only predicting tokens with sufficient context. However, the independence assumption discussed in Section 2.1 prevents BERT from modeling dependency between targets.

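To better understand the difference, let's consider a concrete example [New, York, is, a, city]. Suppose both BERT and XLNet select the two tokens [New, York] as the prediction targets and maximize $\log p(\text{New York}\mid\text{is a city})$. Also suppose that XLNet samples the factorization order [is, a, city, New, York]. In this case, BERT and XLNet respectively reduce to the following objectives:

$$\mathcal{J}_{\text{BERT}}=\log p(\text{New}\mid\text{is a city})+\log p(\text{York}\mid\text{is a city}),$$

$$\mathcal{J}_{\text{XLNet}}=\log p(\text{New}\mid\text{is a city})+\log p(\text{York}\mid\text{New},\text{is a city}).$$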

Notice that XLNet is able to capture the dependency between the pair (New, York), which is omitted by BERT. Although in this example, BERT learns some dependency pairs such as (New, city) and (York, city), it is obvious that XLNet always learns more dependency pairs given the same target and contains “denser” effective training signals.
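
The pair counting in this example can be spelled out mechanically. The sketch below uses a hypothetical helper `dependency_pairs` and encodes each objective term as (target, conditioning tokens). With the same targets, XLNet's pairs are a strict superset of BERT's, and the extra pair is (York, New).

```python
def dependency_pairs(objective):
    """Collect (target, conditioning token) pairs from (target, context) terms."""
    return {(target, tok) for target, context in objective for tok in context}


context = ["is", "a", "city"]
# BERT: both masked targets are predicted from the same unmasked context.
bert_terms = [("New", context), ("York", context)]
# XLNet with order [is, a, city, New, York]: York also conditions on New.
xlnet_terms = [("New", context), ("York", ["New"] + context)]

bert_pairs = dependency_pairs(bert_terms)
xlnet_pairs = dependency_pairs(xlnet_terms)
print(sorted(xlnet_pairs - bert_pairs))  # [('York', 'New')]
```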

注意到 XLNet 能够捕捉到 (New, York) 这对依赖关系,而 BERT 忽略了这一点。尽管在这个例子中,BERT 学习了一些依赖对,例如 (New, city) 和 (York, city),但很明显,在给定相同目标的情况下,XLNet 总是学习到更多的依赖对,并且包含“更密集”的有效训练信号。
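The dependency pairs in this example can be enumerated mechanically. The sketch below uses hypothetical helpers, not code from the paper: a pair (t, x) means that target t is predicted conditioned on token x. BERT conditions each target only on the unmasked context, while XLNet also conditions on the targets that precede it in the sampled factorization order.

```python
def bert_pairs(targets, context):
    """BERT: each masked target is predicted independently from the unmasked context."""
    return {(t, x) for t in targets for x in context}


def xlnet_pairs(targets, context, order):
    """XLNet: each target also sees the targets earlier in the factorization order."""
    pairs = set()
    for t in targets:
        earlier_targets = [x for x in order[: order.index(t)] if x in targets]
        for x in context + earlier_targets:
            pairs.add((t, x))
    return pairs


targets = ["New", "York"]
context = ["is", "a", "city"]
order = ["is", "a", "city", "New", "York"]

bert = bert_pairs(targets, context)
xlnet = xlnet_pairs(targets, context, order)
```

Here BERT yields 6 pairs and XLNet 7; the only extra pair is (York, New), the dependency inside the target span. With a single target the two sets would coincide.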

For more formal analysis and further discussion, please refer to Appendix A.5.

如需更正式的分析和进一步讨论,请参阅附录 A.5。

3 Experiments

3 实验

3.1 Pretraining and Implementation

3.1 预训练与实现

Following BERT [10], we use BooksCorpus [40] and English Wikipedia as part of our pretraining data, which together total 13GB of plain text. In addition, we include Giga5 (16GB of text) [26], ClueWeb 2012-B (extended from [5]), and Common Crawl [6] for pretraining. We use heuristics to aggressively filter out short or low-quality articles from ClueWeb 2012-B and Common Crawl, which results in 19GB and 110GB of text, respectively. After tokenization with SentencePiece [17], we obtain 2.78B, 1.09B, 4.75B, 4.30B, and 19.97B subword pieces for Wikipedia, BooksCorpus, Giga5, ClueWeb, and Common Crawl, respectively, for a total of 32.89B.

继 BERT [10] 之后,我们使用 BooksCorpus [40] 和英文维基百科作为预训练数据的一部分,这些数据共有 13GB 的纯文本。此外,我们还加入了 Giga5 (16GB 文本) [26]、ClueWeb 2012-B (从 [5] 扩展而来) 和 Common Crawl [6] 进行预训练。我们使用启发式方法对 ClueWeb 2012-B 和 Common Crawl 中的短文本或低质量文章进行了严格过滤,分别得到了 19GB 和 110GB 的文本。在使用 SentencePiece [17] 进行 Token 化后,我们分别从维基百科、BooksCorpus、Giga5、ClueWeb 和 Common Crawl 中获得了 2.78B、1.09B、4.75B、4.30B 和 19.97B 的子词片段,总计 32.89B。
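As a quick arithmetic check of the per-corpus counts reported above (in billions of SentencePiece subword pieces), the total and each corpus's share can be recomputed. The dictionary simply restates the numbers from the text; nothing else is assumed.

```python
subword_pieces_b = {
    "Wikipedia": 2.78,
    "BooksCorpus": 1.09,
    "Giga5": 4.75,
    "ClueWeb 2012-B": 4.30,
    "Common Crawl": 19.97,
}

total_b = round(sum(subword_pieces_b.values()), 2)  # total in billions of pieces
shares = {name: n / total_b for name, n in subword_pieces_b.items()}

# Print corpora from largest to smallest share.
for name, share in sorted(shares.items(), key=lambda kv: -kv[1]):
    print(f"{name:15s} {subword_pieces_b[name]:6.2f}B  {share:6.1%}")
print(f"{'Total':15s} {total_b:6.2f}B")
```

The counts do sum to 32.89B. Common Crawl alone contributes about 61% of the subword pieces, while the BooksCorpus + Wikipedia subset used for the fair comparison in Section 3.2 accounts for about 12%.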

Our largest model, XLNet-Large, has the same architecture hyperparameters as BERT-Large, which results in a similar model size. During pretraining, we always use a full sequence length of 512. First, to provide a fair comparison with BERT (Section 3.2), we also trained XLNet-Large-wikibooks on BooksCorpus and Wikipedia only, reusing all pretraining hyperparameters of the original BERT. Then, we scale up the training of XLNet-Large by using all the datasets described above. Specifically, we train on 512 TPU v3 chips for 500K steps with an Adam weight decay optimizer, linear learning rate decay, and a batch size of 8192, which takes about 5.5 days. We observed that the model still underfits the data at the end of training. Finally, we perform an ablation study (Section 3.4) based on XLNet-Base-wikibooks.

我们最大的模型 XLNet-Large 具有与 BERT-Large 相同的架构超参数,因此模型大小相似。在预训练期间,我们始终使用 512 的完整序列长度。首先,为了与 BERT 进行公平比较(第 3.2 节),我们仅在 BooksCorpus 和 Wikipedia 上训练了 XLNet-Large-wikibooks,并重用了原始 BERT 中的所有预训练超参数。然后,我们通过使用上述所有数据集扩展了 XLNet-Large 的训练。具体来说,我们在 512 个 TPU v3 芯片上训练了 500K 步,使用 Adam 权重衰减优化器、线性学习率衰减和 8192 的批量大小,耗时约 5.5 天。观察到模型在训练结束时仍然欠拟合数据。最后,我们基于 XLNet-Base-wikibooks 进行了消融研究(第 3.4 节)。
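The scale of the full XLNet-Large pretraining run can be estimated from the stated hyperparameters. This back-of-the-envelope sketch assumes that every sequence fills all 512 positions and counts token positions processed, not distinct tokens. The ratio to the corpus size is a purely arithmetic comparison, not an epoch count reported by the authors.

```python
batch_size = 8192         # sequences per step
seq_len = 512             # full sequence length used during pretraining
steps = 500_000           # training steps for XLNet-Large
corpus_pieces = 32.89e9   # total subword pieces in the full pretraining corpus

positions_per_step = batch_size * seq_len
total_positions = positions_per_step * steps
ratio_to_corpus = total_positions / corpus_pieces

print(f"{positions_per_step:,} token positions per step")
print(f"{total_positions:.3e} token positions in total")
print(f"~{ratio_to_corpus:.0f}x the corpus size in subword pieces")
```

Under these assumptions, each step covers about 4.2M token positions and the whole run about 2.1 trillion, roughly 64 times the number of subword pieces in the corpus.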

Because of the recurrence mechanism, we use a bidirectional data input pipeline in which the forward and backward directions each take half of the batch. For training XLNet-Large, we set the partial prediction constant $K$ to 6 (see Section 2.3). Our finetuning procedure follows BERT [10] except where otherwise specified$^{3}$. We employ span-based prediction: we first sample a length $L\in\{1,\cdots,5\}$, and then randomly select a consecutive span of $L$ tokens as prediction targets within a context of $KL$ tokens.

由于引入了递归机制,我们使用了一个双向数据输入管道,其中前向和后向各占一半的批量大小。在训练 XLNet-Large 时,我们将部分预测常数 $K$ 设为 6(见第 2.3 节)。除非另有说明,我们的微调过程遵循 BERT [10]。我们采用了一种基于跨度的预测方法,首先采样一个长度 $L\in\{1,\cdots,5\}$,然后在 $KL$ 个 Token 的上下文中随机选择一个连续的 $L$ 个 Token 作为预测目标。
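The span-based target selection described above can be sketched as follows. The function name, the use of Python's `random` module, and the uniform placement of the $KL$-token context window within the sequence are assumptions made for illustration. The text specifies only $L\in\{1,\dots,5\}$, a consecutive span of $L$ targets, and a context of $KL$ tokens, so about $1/K$ of each window is predicted.

```python
import random


def sample_span_targets(seq_len, K=6, max_span=5, rng=random):
    """Sample a consecutive span of L target positions inside a window of K*L tokens.

    Returns (target_positions, (window_start, window_end)), window_end exclusive.
    """
    L = rng.randint(1, min(max_span, seq_len))       # span length, uniform in 1..max_span
    window = min(K * L, seq_len)                     # context of K*L tokens (clipped)
    window_start = rng.randint(0, seq_len - window)  # assumed: uniform window placement
    span_start = window_start + rng.randint(0, window - L)
    targets = list(range(span_start, span_start + L))
    return targets, (window_start, window_start + window)


rng = random.Random(0)
targets, (ws, we) = sample_span_targets(512, K=6, rng=rng)
```

Passing a seeded `random.Random` instance makes the sampling reproducible; with $K=6$, a span of $L$ targets always sits inside a $6L$-token window.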

We use a variety of natural language understanding datasets to evaluate the performance of our method. Detailed descriptions of the settings for all the datasets can be found in Appendix A.3.

我们使用多种自然语言理解数据集来评估我们方法的性能。所有数据集的详细设置描述可以在附录 A.3 中找到。

3.2 Fair Comparison with BERT

3.2 与 BERT 的公平比较

Table 1: Fair comparison with BERT. All models are trained using the same data and hyperparameters as in BERT. For comparison, we use the best of three BERT variants: the original BERT, BERT with whole word masking, and BERT without next sentence prediction.

| Model | SQuAD1.1 (EM/F1) | SQuAD2.0 (EM/F1) | RACE | MNLI | QNLI | QQP | RTE | SST-2 | MRPC | CoLA | STS-B |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BERT-Large (best of 3) | 86.7/92.8 | 82.8/85.5 | 75.1 | 87.3 | 93.0 | 91.4 | 74.0 | 94.0 | 88.7 | 63.7 | 90.2 |
| XLNet-Large-wikibooks | 88.2/94.0 | 85.1/87.8 | 77.4 | 88.4 | 93.9 | 91.8 | 81.2 | 94.4 | 90.0 | 65.2 | 91.1 |

表 1: 与 BERT 的公平比较。所有模型均使用与 BERT 相同的数据和超参数进行训练。我们使用 3 种 BERT 变体中的最佳结果进行比较,即原始 BERT、使用全词掩码的 BERT 以及不使用下一句预测的 BERT。

Here, we first compare BERT and XLNet in a fair setting, to separate the effect of using more data from the improvement of XLNet over BERT. In Table 1, we compare (1) the best performance of three different BERT variants and (2) XLNet trained with the same data and hyperparameters. As the table shows, when trained on the same data with an almost identical training recipe, XLNet outperforms BERT on every dataset considered, by margins ranging from 0.4 points (QQP, SST-2) to 7.2 points (RTE).

在此,我们首先在公平的设置下比较 BERT 和 XLNet 的性能,以分离使用更多数据的影响以及从 BERT 到 XLNet 的改进。在表 1 中,我们比较了 (1) 三种不同 BERT 变体的最佳性能,以及 (2) 使用相同数据和超参数训练的 XLNet。正如我们所看到的,在几乎相同的训练方案下,使用相同数据训练的 XLNet 在所有考虑的数据集上都显著优于 BERT。
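The per-task gaps in Table 1 can be recomputed directly. The sketch restates the table's numbers, splitting each SQuAD cell into its EM and F1 values, and reports XLNet-Large-wikibooks minus the best BERT variant in points.

```python
tasks = ["SQuAD1.1 EM", "SQuAD1.1 F1", "SQuAD2.0 EM", "SQuAD2.0 F1", "RACE",
         "MNLI", "QNLI", "QQP", "RTE", "SST-2", "MRPC", "CoLA", "STS-B"]
bert = [86.7, 92.8, 82.8, 85.5, 75.1, 87.3, 93.0, 91.4, 74.0, 94.0, 88.7, 63.7, 90.2]
xlnet = [88.2, 94.0, 85.1, 87.8, 77.4, 88.4, 93.9, 91.8, 81.2, 94.4, 90.0, 65.2, 91.1]

# Round to one decimal to remove floating-point noise from the subtraction.
deltas = {t: round(x - b, 1) for t, b, x in zip(tasks, bert, xlnet)}

# Print tasks from largest to smallest gain.
for task, d in sorted(deltas.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{task:12s} +{d:.1f}")
```

Every delta is positive; RTE shows by far the largest gap, while QQP and SST-2 show the smallest.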

3.3 Comparison with RoBERTa: Scaling Up

3.3 与 RoBERTa 的对比:扩展规模

Table 2: Comparison with state-of-the-art results on the test set of RACE, a reading comprehension task, and on ClueWeb09-B, a document ranking task. $^*$ indicates ensembles. $\dagger$ indicates our implementations. “Middle” and “High” in RACE are two subsets representing middle and high school difficulty levels. All BERT, RoBERTa, and XLNet results are obtained with a 24-layer architecture with similar model sizes (aka BERT-Large).

| RACE | Accuracy | Middle | High |
|---|---|---|---|
| GPT [28] | 59.0 | 62.9 | 57.4 |
| BERT [25] | 72.0 | 76.6 | 70.1 |
| BERT+DCMN* [38] | 74.1 | 79.5 | 71.8 |
| RoBERTa [21] | 83.2 | 86.5 | 81.8 |
| XLNet | 85.4 | 88.6 | 84.0 |

| ClueWeb09-B | NDCG@20 | ERR@20 |
|---|---|---|
| DRMM [13] | 24.3 | 13.8 |
| KNRM [8] | 26.9 | 14.9 |
| Conv [8] | 28.7 | 18.1 |
| BERT† | 30.53 | 18.67 |
| XLNet | 31.10 | 20.28 |

表 2: 在 RACE 阅读理解任务和 ClueWeb09-B 文档排序任务测试集上与最先进结果的对比。$^*$ 表示使用集成方法。$\dagger$ 表示我们的实现。RACE 中的“初中”和“高中”是两个子集,分别代表初中和高中难度级别。所有 BERT、RoBERTa 和 XLNet 的结果均使用 24 层架构获得,模型大小相似(即 BERT-Large)。

After the initial publication of our manuscript, a few other pretrained models were released, such as RoBERTa [21] and ALBERT [19]. Since ALBERT increases the model hidden size from 1024 to 2048/4096 and thus substantially increases the amount of computation in terms of FLOPs, we exclude ALBERT from the following results, as such a comparison makes it hard to draw scientific conclusions. To obtain a relatively fair comparison with RoBERTa, the experiment in this section is based on the full data and reuses the hyperparameters of RoBERTa, as described in Section 3.1.

在我们的论文初稿发布后,其他一些预训练模型相继发布,例如 RoBERTa [21] 和 ALBERT [19]。由于 ALBERT 涉及将模型的隐藏层大小从 1024 增加到 2048/4096,从而显著增加了计算量(以 FLOPs 为单位),因此我们在以下结果中排除了 ALBERT,因为它难以得出科学的结论。为了与 RoBERTa 进行相对公平的比较,本节实验基于完整数据并重用了 RoBERTa 的超参数,如第 3.1 节所述。
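The FLOPs argument can be made concrete with a rough count. Assuming a BERT-style layer whose dense matrix multiplications dominate (four $d\times d$ attention projections plus a feed-forward block with inner size $4d$) and holding depth fixed, per-token compute grows with $d^2$. This is a simplified estimate: it ignores attention-score and embedding costs, and the function name and the fixed depth of 24 layers are hypothetical choices that need not match the actual ALBERT configurations.

```python
def dense_flops_per_token(d_model, n_layers=24, ffn_mult=4):
    """Approximate forward FLOPs per token for the dense matmuls of a Transformer stack.

    Per layer: 4 * d^2 weights (Q, K, V and output projections) plus
    2 * ffn_mult * d^2 weights (two feed-forward matrices); each weight costs
    about 2 FLOPs (one multiply, one add) per token.
    """
    weights_per_layer = 4 * d_model**2 + 2 * ffn_mult * d_model**2
    return 2 * weights_per_layer * n_layers


base = dense_flops_per_token(1024)
for d in (2048, 4096):
    print(f"hidden size {d}: ~{dense_flops_per_token(d) / base:.0f}x the FLOPs of d=1024")
```

Under these assumptions, moving from 1024 to 2048 or 4096 hidden units multiplies per-token dense compute by about 4x or 16x, respectively.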

The results are presented in Tables 2 (reading comprehension & document ranking), 3 (question answering), 4 (text classification) and 5 (natural language understanding), where XLNet generally outperforms BERT and RoBERTa. In addition, we make two more interesting observations:

结果展示在表 2 (阅读理解与文档排序)、表 3 (问答)、表 4 (文本分类) 和表 5 (自然语言理解) 中,其中 XLNet 通常优于 BERT 和 RoBERTa。此外,我们还做出了两个有趣的观察:

Table 3: Results on SQuAD, a reading comprehension dataset. † marks our runs with the official code. $^*$ indicates ensembles. $^\ddag$: More than one month after submitting our result, we had still not received the test results of our latest model on SQuAD1.1 from the organizers, so we report the results of an older version on the SQuAD1.1 test set.

| SQuAD2.0 | EM | F1 | SQuAD1.1 | EM | F1 |
|---|---|---|---|---|---|
| Dev set results (single model) | | | | | |
| BERT [10] | 78.98 | 81.77 | BERT [10] | 84.1 | 90.9 |
| RoBERTa [21] | 86.5 | 89.4 | RoBERTa [21] | 88.9 | 94.6 |
| XLNet | 87.9 | 90.6 | XLNet | 89.7 | 95.1 |
| Test set results (single model, as of December 14, 2019) | | | | | |
| BERT [10] | 80.005 | 83.061 | BERT [10] | 85.083 | 91.835 |
| RoBERTa [21] | 86.820 | 89.795 | BERT* [10] | 87.433 | 93.294 |
| XLNet | 87.926 | 90.689 | XLNet‡ | 89.898 | 95.080 |

表 3: SQuAD 阅读理解数据集上的结果。† 表示我们使用官方代码运行的结果。$^*$ 表示集成模型。$^\ddag$: 我们在提交结果一个多月后仍无法从组织者处获得最新模型在 SQuAD1.1 上的测试结果,因此报告了 SQuAD1.1 测试集上旧版本的结果。

Table 4: Comparison with state-of-the-art error rates on the test sets of several text classification datasets. All BERT and XLNet results are obtained with a 24-layer architecture with similar model sizes (aka BERT-Large).

表 4: 在多个文本分类数据集测试集上与最先进错误率的比较。所有 BERT 和 XLNet 的结果均使用 24 层架构获得,模型大小相似(即