Blended RAG: Improving RAG (Retriever-Augmented Generation) Accuracy with Semantic Search and Hybrid Query-Based Retrievers

Abstract—Retrieval-Augmented Generation (RAG) is a prevalent approach for combining a private knowledge base of documents with Large Language Models (LLMs) to build Generative Q&A (Question-Answering) systems. However, maintaining RAG accuracy becomes increasingly challenging as the corpus of documents scales up, with Retrievers playing an outsized role in overall RAG accuracy by extracting the most relevant documents from the corpus to provide context to the LLM. In this paper, we propose the 'Blended RAG' method, which leverages semantic search techniques, such as Dense Vector indexes and Sparse Encoder indexes, blended with hybrid query strategies. Our study achieves better retrieval results and sets new benchmarks on IR (Information Retrieval) datasets such as NQ and TREC-COVID. We further extend this 'Blended Retriever' to the RAG system and demonstrate far superior results on Generative Q&A datasets such as SQuAD, even surpassing fine-tuning performance.

Index Terms—RAG, Retrievers, Semantic Search, Dense Index, Vector Search

I. INTRODUCTION

RAG represents an approach to text generation that is based not only on patterns learned during training but also on dynamically retrieved external knowledge [1]. This method combines the creative flair of generative models with the encyclopedic recall of a search engine. The efficacy of the RAG system relies fundamentally on two components: the Retriever (R) and the Generator (G), the latter representing the size and type of LLM.

The language model can easily craft sentences, but it might not always have all the facts. This is where the Retriever (R) steps in, quickly sifting through vast amounts of documents to find relevant information that can inform and enrich the language model's output. Think of the retriever as the researcher within the AI, which feeds contextually grounded text to the Generator (G) so that it can generate knowledgeable answers. Without the retriever, RAG would be like a well-spoken individual who delivers irrelevant information.

II. RELATED WORK

Search has been a focal point of research in information retrieval, with numerous studies exploring various methodologies. Historically, the BM25 (Best Match) algorithm, which uses similarity search, has been a cornerstone in this field, as explored by Robertson and Zaragoza (2009) [2]. BM25 ranks documents according to their pertinence to a query, using Term Frequency (TF), Inverse Document Frequency (IDF), and Document Length to compute a relevance score.
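To make the roles of TF, IDF, and document length concrete, the following is a minimal sketch of BM25 scoring. It assumes whitespace tokenization, a non-empty corpus, and the commonly used default parameters `k1=1.2` and `b=0.75`; the function name `bm25_scores` is illustrative and none of these choices are taken from the paper.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Score every document in a non-empty list against the query with BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs

    # Document frequency: in how many documents each term appears.
    df = Counter()
    for d in tokenized:
        df.update(set(d))

    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Rare terms get a higher IDF weight.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term frequency saturates with k1; b controls length normalization.
            norm = tf[term] + k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

docs = ["the cat sat on the mat", "dogs chase cats", "the stock market fell"]
scores = bm25_scores("cat mat", docs)
```

Only the first document contains the exact query tokens, so it is the only one with a non-zero score. This also illustrates the limitation of pure keyword matching: "cats" in the second document does not match "cat".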

Dense vector models, particularly those employing KNN (k-Nearest Neighbours) algorithms, have gained attention for their ability to capture deep semantic relationships in data. Studies by Johnson et al. (2019) demonstrated the efficacy of dense vector representations in large-scale search applications. The kinship between data entities (including the search query) is assessed by computing their vector proximity (for example, via cosine similarity). During search execution, the model identifies the k vectors closest to the query vector and returns the corresponding data entities as results. The ability of these models to transform text into a vector space, where semantic similarity can be quantitatively assessed, marks a significant advancement over traditional keyword-based approaches [3].
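The k-nearest-neighbour lookup described above can be sketched as a brute-force search over cosine similarity. The helper names and the toy two-dimensional vectors are illustrative assumptions; production systems typically use approximate nearest-neighbour indexes rather than scanning every vector.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def knn_search(query_vec, doc_vecs, k=3):
    """Return the ids of the k documents whose vectors are closest to the query."""
    scored = [(cosine(query_vec, vec), doc_id) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]

# Toy embeddings; real embeddings have hundreds of dimensions.
doc_vecs = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
nearest = knn_search([1.0, 0.1], doc_vecs, k=2)
```

Here the query vector points almost exactly along document `a`, so `a` ranks first and the diagonal vector `c` ranks second.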

On the other hand, sparse encoder-based vector models have also been explored for their precision in representing document semantics. The work of Zaharia et al. (2010) illustrates the potential of these models in efficiently handling high-dimensional data while maintaining interpretability, a challenge often faced in dense vector representations. In Sparse Encoder indexes, the indexed documents and the user's search query are mapped into an extensive array of associated terms derived from a vast corpus of training data to encapsulate relationships and contextual use of concepts. The resultant expanded terms for documents and queries are encoded into sparse vectors, an efficient data representation format when handling an extensive vocabulary.
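As a rough illustration of why sparse vectors are efficient over a large vocabulary, the sketch below stores only the non-zero term weights in a dictionary and scores a query against a document with a dot product over shared terms. The expanded terms and weights are invented for illustration; a learned sparse encoder would produce them from its training data.

```python
def sparse_dot(query_terms, doc_terms):
    """Dot product of two sparse vectors stored as {term: weight} dicts."""
    # Iterate over the smaller dict so the cost depends on the shorter vector.
    if len(query_terms) > len(doc_terms):
        query_terms, doc_terms = doc_terms, query_terms
    return sum(w * doc_terms[t] for t, w in query_terms.items() if t in doc_terms)

# Hypothetical expansions: the query "covid" is expanded to related terms.
query = {"covid": 1.5, "virus": 0.8, "pandemic": 0.6}
doc = {"virus": 1.0, "pandemic": 0.5, "vaccine": 1.2}
score = sparse_dot(query, doc)
```

The document never mentions "covid", yet it still scores above zero because the expansion added "virus" and "pandemic". The matching terms also remain human-readable, which is the interpretability advantage noted above.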

A. Limitations in the current RAG system

Most current retrieval methodologies employed in Retrieval-Augmented Generation (RAG) pipelines rely on keyword and similarity-based searches, which can restrict the RAG system's overall accuracy. Table I provides a summary of the current benchmarks for retriever accuracy.

TABLE I: Current Retriever Benchmarks

| Dataset | Benchmark Metric | NDCG@10 | P@20 | F1 |
|---|---|---|---|---|
| NQ dataset | P@20 | 0.633 | 86 | 79.6 |
| TREC-COVID | NDCG@10 | 80.4 | | |
| HotpotQA | F1, EM | 0.85 | | |

While most prior efforts to improve RAG accuracy have focused on the G part, by tweaking LLM prompts, tuning, etc. [9], they have limited impact on the overall accuracy of the RAG system: if the R part feeds irrelevant context, the answer will be inaccurate. Furthermore, most retrieval methodologies employed in RAG pipelines rely on keyword and similarity-based searches, which can restrict the system's overall accuracy.

Finding the best search method for RAG is still an emerging area of research. The goal of this study is to enhance retriever and RAG accuracy by incorporating Semantic Search-Based Retrievers and Hybrid Search Queries.

III. BLENDED RETRIEVERS

For RAG systems, we explored three distinct search strategies: keyword-based similarity search, dense vector-based search, and semantic-based sparse encoders, integrating these to formulate hybrid queries. Unlike conventional keyword matching, semantic search delves into the nuances of a user's query, deciphering context and intent. This study systematically evaluates an array of search techniques across three primary indices: BM25 [4] for keyword-based search, KNN [5] for vector-based search, and Elastic Learned Sparse Encoder (ELSER) for sparse encoder-based semantic search.

A. Methodology

Our methodology unfolds in a sequence of progressive steps, commencing with the elementary match query within the BM25 index. We then escalate to hybrid queries that amalgamate diverse search techniques across multiple fields, leveraging the multi-match query within the Sparse Encoder-based index. This method proves invaluable when the exact location of the query text within the document corpus is indeterminate, hence ensuring a comprehensive match retrieval.
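The paper does not list its query bodies, so the following is only a sketch of what a hybrid request of this kind might look like in Elasticsearch's query DSL, built as a plain Python dictionary. It assumes the `text_expansion` clause used with ELSER in Elasticsearch 8.x; the field names, the model id, and the function `build_hybrid_query` are placeholders, not values from the study.

```python
def build_hybrid_query(text, text_fields, sparse_field, model_id, size=10):
    """Build a search body that blends a multi-field lexical clause
    with a sparse-encoder clause. All names passed in are placeholders."""
    return {
        "size": size,
        "query": {
            "bool": {
                # "should" lets either clause contribute to the final score.
                "should": [
                    {
                        "multi_match": {
                            "query": text,
                            "fields": list(text_fields),
                            "type": "best_fields",
                        }
                    },
                    {
                        "text_expansion": {
                            sparse_field: {
                                "model_id": model_id,
                                "model_text": text,
                            }
                        }
                    },
                ]
            }
        },
    }

# Hypothetical field names and model id, for illustration only.
body = build_hybrid_query(
    "who wrote the declaration of independence",
    text_fields=["title", "text"],
    sparse_field="ml.tokens",
    model_id=".elser_model_2",
)
```

The resulting dictionary could be passed as the body of a search request. How the two clauses should be weighted against each other is a tuning decision that this sketch leaves at the defaults.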

The multi-match queries are categorized as follows:

• Cross Fields: Targets concurrence across multiple fields

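For readers unfamiliar with multi-match queries, the sketch below builds an Elasticsearch `multi_match` clause and checks the `type` parameter against the values that query accepts. The helper name and the example fields are assumptions made for illustration.

```python
# Values accepted by the "type" parameter of Elasticsearch's multi_match query.
MULTI_MATCH_TYPES = {
    "best_fields",
    "most_fields",
    "cross_fields",
    "phrase",
    "phrase_prefix",
    "bool_prefix",
}

def multi_match(text, fields, match_type="cross_fields"):
    """Return a multi_match clause searching `text` across several fields."""
    if match_type not in MULTI_MATCH_TYPES:
        raise ValueError(f"unsupported multi_match type: {match_type}")
    return {
        "multi_match": {
            "query": text,
            "fields": list(fields),
            "type": match_type,
        }
    }

# Hypothetical field names.
clause = multi_match("covid transmission", ["title", "abstract"])
```

With `cross_fields`, the query terms may be spread over several fields, which suits cases where it is unknown in advance which field holds the matching text.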
After initial match queries, we incorporate dense vector (KNN) and sparse encoder indices, each with their bespoke hybrid queries. This strategic approach synthesizes the strengths of each index, channeling them towards the unified goal of refining retrieval accuracy within our RAG system. We calculate the top-k retrieval accuracy metric to distill the essence of each query type.

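The paper does not spell out how top-k retrieval accuracy is computed. One common reading, assumed here, is the fraction of queries for which at least one relevant document appears among the first k results. The function and the toy data below are illustrative.

```python
def top_k_accuracy(results, relevant, k=10):
    """Fraction of queries with at least one relevant document in the top k.

    results:  {query_id: [doc_id, ...]} ranked best-first
    relevant: {query_id: {doc_id, ...}} ground-truth relevant documents
    """
    if not results:
        return 0.0
    hits = 0
    for query_id, ranked in results.items():
        gold = relevant.get(query_id, set())
        if any(doc_id in gold for doc_id in ranked[:k]):
            hits += 1
    return hits / len(results)

results = {"q1": ["d1", "d2"], "q2": ["d3", "d4"]}
relevant = {"q1": {"d2"}, "q2": {"d9"}}
acc_at_2 = top_k_accuracy(results, relevant, k=2)
acc_at_1 = top_k_accuracy(results, relevant, k=1)
```

In this toy case only `q1` retrieves a relevant document, and only at rank 2, so the accuracy is 0.5 at k=2 and 0.0 at k=1.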
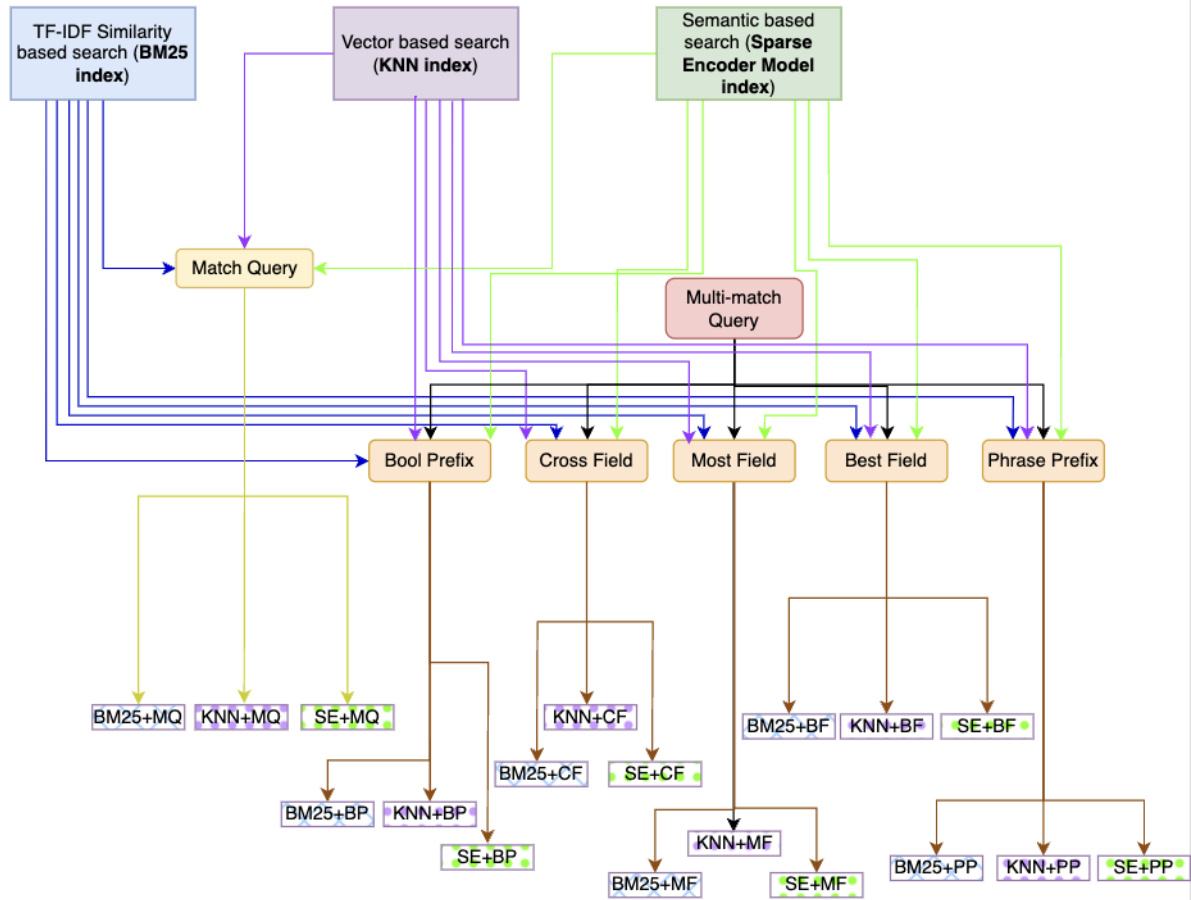

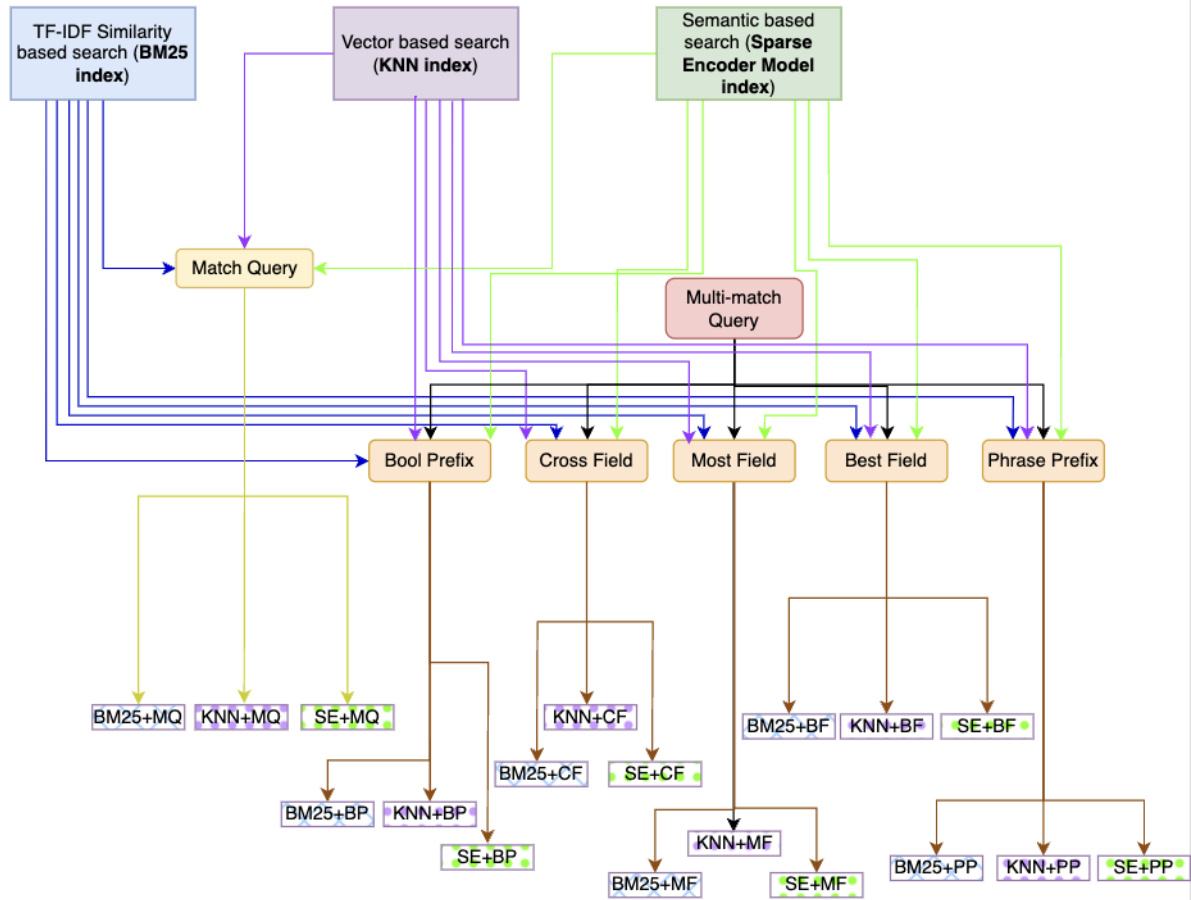

In Figure 1, we introduce a scheme designed to create Blended Retrievers by blending semantic search with hybrid queries.

B. Constructing RAG System

From the plethora of possible permutations, a select sextet (top 6) of hybrid queries, those exhibiting paramount retrieval efficacy, was chosen for further scrutiny. These queries were then subjected to rigorous evaluation across the benchmark datasets to ascertain the precision of the retrieval component within RAG. The sextet queries represent the culmination of retriever experimentation, embodying the synthesis of our finest query strategies aligned with various index types. The six blended queries are then fed to generative question-answering systems. Because the number of potential query combinations grows exponentially with the integration of distinct index types, this process narrows the search to the best retrievers to feed to the Generator of RAG.

The intricacies of constructing an effective RAG system are multi-fold, particularly when source datasets have diverse and complex landscapes. We undertook a comprehensive evaluation of a myriad of hybrid query formulations, scrutinizing their performance across benchmark datasets, including Natural Questions (NQ), TREC-COVID, the Stanford Question Answering Dataset (SQuAD), and HotPotQA.

IV. EXPERIMENTATION FOR RETRIEVER EVALUATION

We used top-10 retrieval accuracy to narrow down the six best types of blended retrievers (index + hybrid query) for comparison on each benchmark dataset.

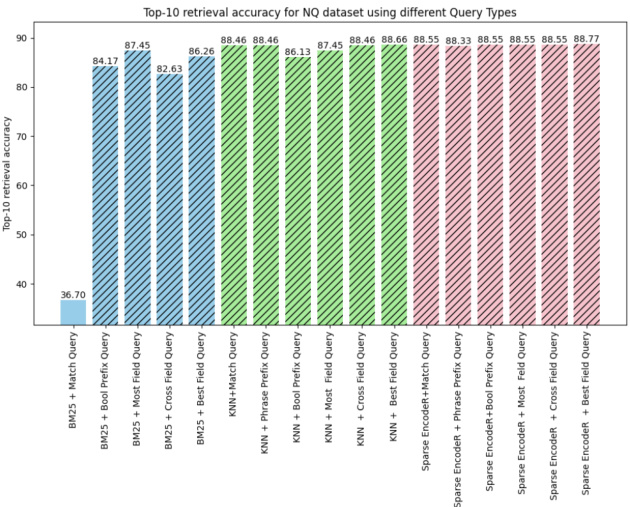

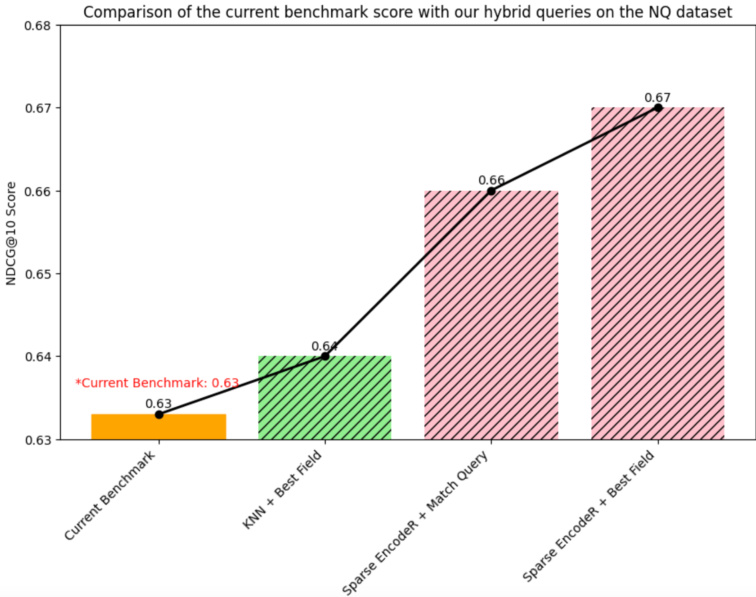

- Top-10 retrieval accuracy on the NQ dataset: For the NQ dataset [6], our empirical analysis demonstrates the superior performance of hybrid query strategies, attributable to their ability to utilize multiple data fields effectively. In Figure 2, our findings reveal that the hybrid query approach employing the Sparse Encoder with Best Fields attains the highest retrieval accuracy, reaching 88.77%. This result surpasses all other formulations, establishing a new benchmark for retrieval tasks within this dataset.

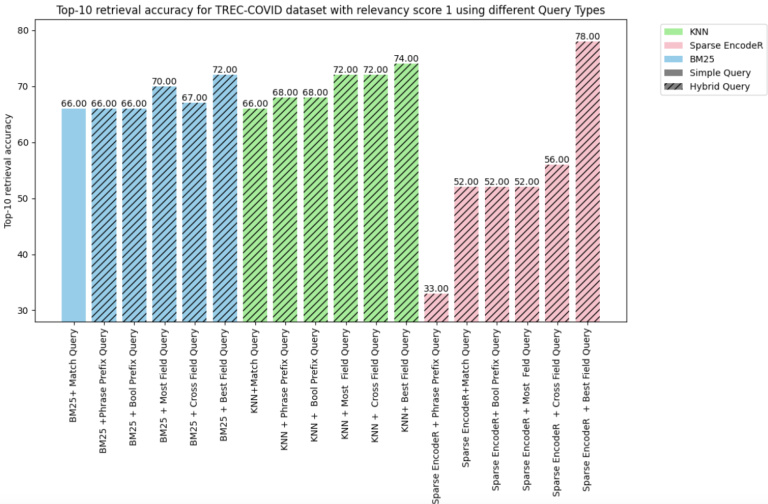

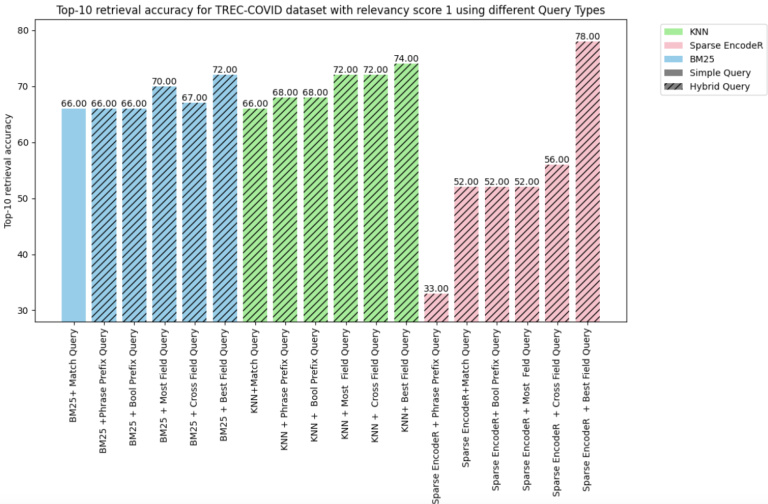

- Top-10 retrieval accuracy on the TREC-COVID dataset: For the TREC-COVID dataset [7], which encompasses relevancy scores spanning from -1 to 2, with -1 indicative of irrelevance and 2 denoting high relevance, our initial assessments targeted documents with a relevancy of 1, deemed partially relevant.

Fig. 1: Scheme of Creating Blended Retrievers using Semantic Search with Hybrid Queries (figure title: Blended Retriever Queries using Similarity and Semantic Search Indexes).

Figure 3 reveals superior performance of vector search hybrid queries over those based on keywords. In particular, hybrid queries that leverage the Sparse Encoder with Best Fields demonstrate the highest efficacy across all index types, at 78% accuracy.

Fig. 2: Top-10 Retriever Accuracy for NQ Dataset

Fig. 3: Top-10 Retriever Accuracy for TREC-COVID Score-1

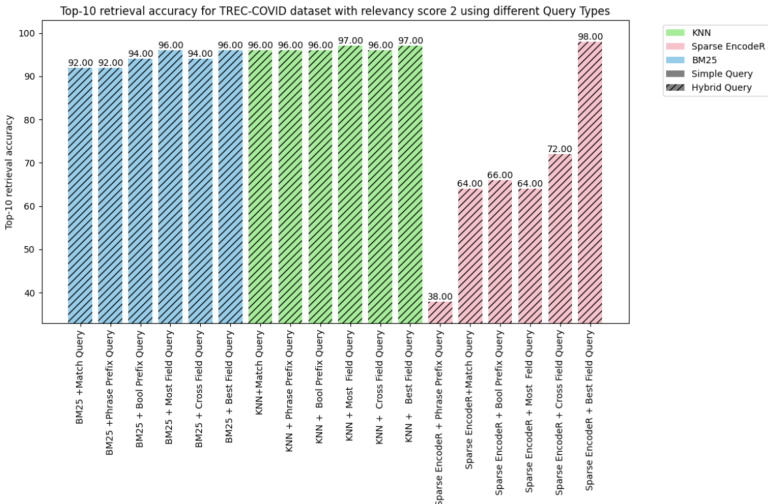

Subsequent to the initial evaluation, the same spectrum of queries was assessed against the TREC-COVID dataset with a relevancy score of 2, denoting that the documents were entirely pertinent to the associated queries. The results in Figure 4, where documents fully meet the relevance criteria for associated queries, reinforce the efficacy of vector search hybrid queries over conventional keyword-based methods. Notably, the hybrid query incorporating Sparse Encoder with Best Fields demonstrates a 98% top-10 retrieval accuracy, eclipsing all other formulations. This suggests that a methodological pivot towards more nuanced blended search, particularly queries that effectively utilize Best Fields, can significantly enhance retrieval outcomes in information retrieval (IR) systems.

Fig. 4: Top 10 retriever accuracy for Trec-Covid Score-2

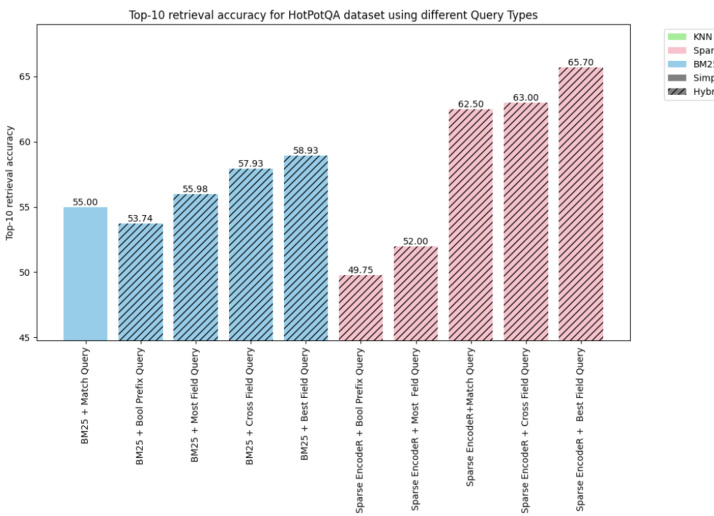

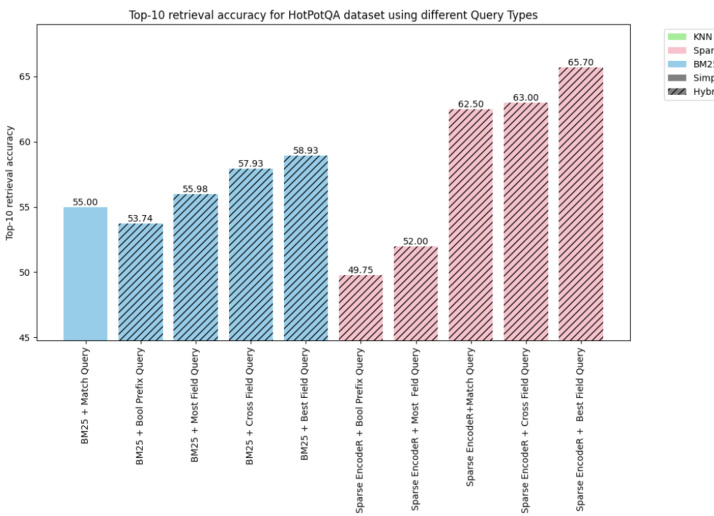

Fig. 5: Top 10 retriever accuracy for HotPotQA dataset

- Top-10 retrieval accuracy on the HotPotQA dataset: The HotPotQA [8] dataset, with its extensive corpus of over 5M documents and a query set comprising 7,500 items, presents a formidable challenge for comprehensive evaluation due to compute requirements. Consequently, the assessment was confined to a select subset of hybrid queries. Despite these constraints, the analysis provided insightful data, as reflected in Figure 5.

Figure 5 shows that hybrid queries, specifically those utilizing Cross Fields and Best Fields search strategies, demonstrate superior performance. Notably, the hybrid query that blends Sparse Encoder with Best Fields queries achieved the highest accuracy on the HotPotQA dataset, at 65.70%.

Fig. 6: NQ dataset Benchmarking using NDCG@10 Metric

TABLE II: Retriever Benchmarking using NDCG@10 Metric

| Dataset | Model/Pipeline | NDCG@10 |
|---|---|---|
| TREC-COVID | COCO-DR Large | 0.804 |
| TREC-COVID | BlendedRAG | 0.87 |
| NQ dataset | monoT5-3B | 0.633 |
| NQ dataset | BlendedRAG | 0.67 |

A. Retriever Benchmarking

Now that we have identified the best combinations of index + query types, we will use these sextet queries on IR datasets for benchmarking using NDCG@10 [9] scores (Normalised Discounted Cumulative Gain metric).
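As a reference point for the metric, here is a minimal sketch of NDCG@k using linear gain and a log2 rank discount. Some evaluations use an exponential gain of 2^rel - 1 instead, and the paper does not state which variant it uses, so this is an assumption. For simplicity the ideal ordering is derived from the same list of graded relevances unless a separate list of all judged relevances is supplied.

```python
import math

def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the first k graded relevances."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances, k=10, ideal_relevances=None):
    """NDCG@k: DCG of the ranking divided by the DCG of the ideal ordering."""
    pool = relevances if ideal_relevances is None else ideal_relevances
    ideal = dcg_at_k(sorted(pool, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

perfect = ndcg_at_k([2, 1, 0])   # already in ideal order
reversed_ = ndcg_at_k([0, 1, 2])  # most relevant document ranked last
```

A ranking already in ideal order scores 1.0, while the reversed ranking scores roughly 0.62 because the most relevant document is discounted by its lower position.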

- NQ dataset benchmarking: The results for $\mathrm{NDCG}@10$ using sextet queries and the current benc