ANALYSIS OF THE EVOLUTION OF ADVANCED TRANSFORMER-BASED LANGUAGE MODELS: EXPERIMENTS ON OPINION MINING

Nour Eddine Zekaoui ∗

Siham Yousfi

Maryem Rhanoui

ABSTRACT

Opinion mining, also known as sentiment analysis, is a subfield of natural language processing (NLP) that focuses on identifying and extracting subjective information in textual material. This can include determining the overall sentiment of a piece of text (e.g., positive or negative), as well as identifying specific emotions or opinions expressed in the text, and it involves the use of advanced machine and deep learning techniques. Recently, transformer-based language models have made this task of human emotion analysis intuitive, thanks to the attention mechanism and parallel computation. These advantages make such models very powerful on linguistic tasks, unlike recurrent neural networks, which spend a lot of time on sequential processing and are therefore prone to fail on long text. Our paper studies the behaviour of cutting-edge Transformer-based language models on opinion mining and provides a high-level comparison between them to highlight their key particularities. Additionally, our comparative study offers leads for production engineers regarding the approach to focus on, and is useful for researchers as it provides guidelines for future research subjects.

1 Introduction

Over the past few years, interest in natural language processing (NLP) [1] has increased significantly. Today, several applications are investing massively in this new technology, such as extending recommender systems [2], [3], uncovering new insights in the health industry [4], [5], and unraveling e-reputation and opinion mining [6], [7]. Opinion mining is an approach to computational linguistics and NLP that automatically identifies the emotional tone, sentiment, or thoughts behind a body of text. As a result, it plays a vital role in driving business decisions in many industries. However, seeking customer satisfaction is costly. Indeed, mining user feedback regarding the products offered is the most accurate way to adapt strategies and future business plans. In recent years, opinion mining has seen considerable progress, with applications in social media and review websites. Recommendation may be staff-oriented [2] or user-oriented [8] and should be tailored to meet customer needs and behaviors.

Nowadays, analyzing people's emotions has become more intuitive thanks to the availability of many large pre-trained language models such as bidirectional encoder representations from transformers (BERT) [9] and its variants. These models use the seminal transformer architecture [10], which is based solely on attention mechanisms, to build robust language models for a variety of semantic tasks, including text classification. Moreover, there has been a surge in opinion mining text datasets specifically designed to challenge NLP models and enhance their performance. These datasets aim to enable models to imitate or even exceed human-level performance, while introducing more complex features.

Even though many papers have addressed NLP topics for opinion mining using high-performance deep learning models, it is still challenging to determine their performance concretely and accurately due to variations in technical environments and datasets. Therefore, to address these issues, our paper aims to study the behaviour of cutting-edge transformer-based models on textual material and reveal their differences. It focuses on applying both transformer encoders and decoders, such as BERT [9] and generative pre-trained transformer (GPT) [11], respectively, and their improvements, to a benchmark dataset. This enables a credible assessment of their performance and an understanding of their advantages, allowing subject matter experts to clearly rank the models. Furthermore, through ablations, we show the impact of configuration choices on the final results.

2 Background

2.1 Transformer

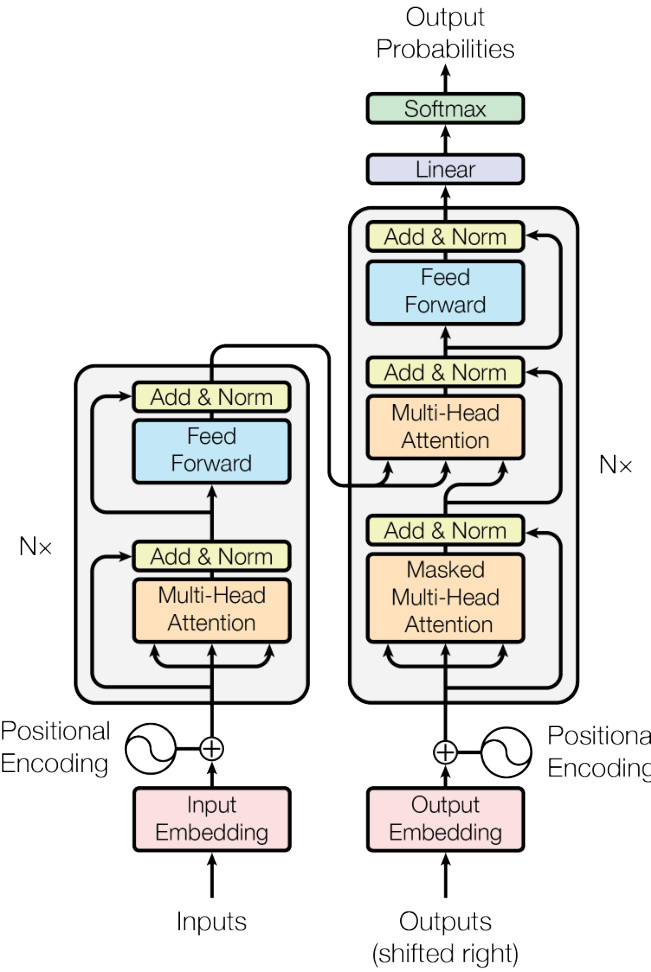

The transformer [10], as illustrated in Figure 1, is an encoder-decoder model that dispenses entirely with recurrence and convolutions. Instead, it leverages the attention mechanism to compute high-level contextualized embeddings. Being the first model to rely solely on attention mechanisms, it is able to address the issues commonly associated with recurrent neural networks, which factor computation along the symbol positions of the input and output sequences and thereby preclude parallelization within samples. In contrast, the transformer is highly parallelizable and requires significantly less time to train. In the upcoming sections, we highlight the recent breakthroughs in NLP built on the transformer, such as BERT [9] and its improvements, which changed the field overnight.

Figure 1: The transformer model architecture [10]
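To make the attention mechanism mentioned above more concrete, the following is a minimal sketch of scaled dot-product attention as described in [10], written in plain Python for readability. The function names and the toy input are illustrative assumptions, not part of the original study, and the sketch omits multi-head projections, masking, and batching.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    All arguments are lists of vectors (lists of floats).
    Returns the attended outputs and the attention weights.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append(
            [sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))]
        )
    return outputs, weights


# Toy self-attention over three 2-dimensional token vectors (hypothetical values).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = scaled_dot_product_attention(x, x, x)
```

Every query position is processed independently of the others, which is why the computation can be parallelized across the sequence, unlike a recurrent update.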

2.2 BERT

BERT [9] is pre-trained using a combination of Masked Language Modeling (MLM) and Next Sentence Prediction (NSP) objectives. It provides high-level contextualized embeddings that grasp the meaning of words in different contexts through global attention. As a result, the pre-trained BERT model can be fine-tuned for a wide range of downstream tasks, such as question answering and text classification, without substantial task-specific architecture modifications.
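As a rough illustration of the MLM objective, the sketch below replaces a random subset of tokens with a mask symbol and records the original tokens as prediction targets. It is a simplification under stated assumptions: the 15% masking rate follows the BERT paper [9], but the additional rule of sometimes keeping or randomly replacing the selected token is omitted, and the function name and example sentence are hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels) for a simplified MLM example.

    labels[i] holds the original token where a mask was applied and
    None elsewhere, so the loss would only be computed on masked positions.
    """
    rng = random.Random(seed)
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(tok)
        else:
            masked.append(tok)
            labels.append(None)
    return masked, labels


tokens = "the movie was surprisingly good and the cast was great".split()
masked, labels = mask_tokens(tokens, mask_prob=0.15, seed=0)
```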

BERT and its variants allow the training of modern data-intensive models. Moreover, they are able to capture the contextual meaning of each piece of text in a way that traditional language models cannot, while being quicker to develop and yielding better results with less data. On the other hand, BERT and other large neural language models are very expensive and computationally intensive to train, fine-tune, and run at inference time.

2.3 GPT-I, II, III

GPT [11] is the first causal or autoregressive transformer-based model pre-trained using language modeling on a large corpus with long-range dependencies. Its bigger and optimized version, GPT-2 [12], was pre-trained on WebText. Likewise, GPT-3 [13] is architecturally similar to its predecessors; its higher level of accuracy is attributed to its increased capacity and greater number of parameters, and it was pre-trained on Common Crawl. The OpenAI GPT family of models has taken pre-trained language models by storm: they are very powerful at realistic human text generation and many other miscellaneous NLP tasks. As a result, a small amount of input text can be used to generate a large amount of high-quality text, while maintaining semantic and syntactic understanding of each word.
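The causal (autoregressive) property can be pictured as a lower-triangular attention mask: position i may only attend to positions up to and including i. The helper below is a minimal sketch with a hypothetical name, not code from the GPT papers.

```python
def causal_mask(seq_len):
    """Lower-triangular mask: entry [i][j] is 1 if token i may attend to token j."""
    return [[1 if j <= i else 0 for j in range(seq_len)] for i in range(seq_len)]


mask = causal_mask(4)
for row in mask:
    print(row)
```

In an encoder such as BERT the corresponding mask would be all ones (apart from padding), which is what makes its attention bidirectional.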

2.4 ALBERT

A lite BERT (ALBERT) [14] was proposed to address the problems associated with large models. It was specifically designed to provide contextualized natural language representations that improve results on downstream tasks. Increasing the model size to pre-train better embeddings becomes harder because of memory limitations and longer training time, and ALBERT arose to address this.

ALBERT is a lighter version of BERT, in which next sentence prediction (NSP) is replaced by sentence order prediction (SOP). In addition, it employs two parameter-reduction techniques to reduce the memory consumption and training time of BERT without hurting performance:

• Splitting the embedding matrix into two smaller matrices, so that the hidden size can easily grow with fewer parameters. ALBERT separates the size of the hidden layers from the size of the vocabulary embedding by decomposing the vocabulary embedding matrix.

• Sharing repeated layers among groups, to prevent the number of parameters from growing with the depth of the network.
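The effect of the first technique can be checked with simple arithmetic. The sketch below compares the number of embedding parameters with and without the factorization; the sizes used (vocabulary of 30,000, hidden size 768, embedding size 128) are illustrative assumptions chosen to resemble a base-sized configuration, and the helper name is hypothetical.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Count embedding parameters.

    Without factorization: one V x H matrix.
    With factorization:    a V x E matrix followed by an E x H projection.
    """
    if embedding_size is None:
        return vocab_size * hidden_size
    return vocab_size * embedding_size + embedding_size * hidden_size


V, H, E = 30_000, 768, 128  # assumed sizes, for illustration only

full = embedding_params(V, H)           # 30,000 * 768
factorized = embedding_params(V, H, E)  # 30,000 * 128 + 128 * 768

print(f"unfactorized: {full:,}")
print(f"factorized:   {factorized:,}")
```

Under these assumptions the factorized embedding needs roughly one sixth of the parameters, and growing H only affects the small E x H projection.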

2.5 RoBERTa

The choice of language model hyper-parameters has a substantial impact on the final results. Hence, the robustly optimized BERT pre-training approach (RoBERTa) [15] was introduced to investigate the impact of many key hyper-parameters, along with data size, on model performance. RoBERTa is based on Google's BERT [9] model and modifies key hyper-parameters: the masking for the masked language modeling objective is generated dynamically, and the NSP objective is removed. It is an improved version of BERT, pre-trained with much larger mini-batches and learning rates on a large corpus using self-supervised learning.

2.6 XLNet

The bidirectional property of transformer encoders, such as BERT [9], helps them achieve better performance than approaches based on autoregressive language modeling. Nevertheless, BERT ignores the dependency between masked positions and suffers from a pretrain-finetune discrepancy, since it relies on corrupting the input with masks. In view of these pros and cons, XLNet [16] has been proposed. XLNet is a generalized autoregressive pre-training approach that learns bidirectional dependencies by maximizing the expected likelihood over all permutations of the factorization order. Furthermore, it overcomes the drawbacks of BERT [9] thanks to its causal or autoregressive formulation, inspired by Transformer-XL [17].
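For reference, the permutation language modeling objective can be written as follows, using the notation of the XLNet paper [16] as we recall it: $\mathcal{Z}_T$ is the set of all permutations of the index sequence $[1, \dots, T]$, $z_t$ is the $t$-th element of a permutation $\mathbf{z}$, and $\mathbf{x}_{\mathbf{z}_{<t}}$ denotes the tokens that precede position $z_t$ in that permutation.

$$
\max_{\theta}\; \mathbb{E}_{\mathbf{z} \sim \mathcal{Z}_T} \left[ \sum_{t=1}^{T} \log p_{\theta}\left(x_{z_t} \mid \mathbf{x}_{\mathbf{z}_{<t}}\right) \right]
$$

Because every position can appear both before and after any other position across different factorization orders, the model sees context from both sides in expectation while remaining autoregressive.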

2.7 DistilBERT

Unfortunately, the outstanding performance that comes with large-scale pre-trained models is not cheap. In fact, operating them on edge devices under constrained computational training or inference budgets remains challenging. Against this backdrop, DistilBERT [18] (or Distilled BERT) was introduced to address these issues by leveraging knowledge distillation [19].

DistilBERT is similar to BERT, but it is smaller, faster, and cheaper. It has 40% fewer parameters than BERT base and runs 60% faster, while preserving over 95% of BERT's performance. It is trained by distilling the pre-trained BERT base model.
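The core idea of knowledge distillation [19] is to train the student to match the teacher's softened output distribution. The following is a minimal sketch of such a soft-target loss in plain Python; the temperature value, function names, and logits are illustrative assumptions, and any additional loss terms used in the actual DistilBERT training are left out.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled, numerically stable softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy between the softened teacher and student distributions."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))


teacher = [2.0, 0.5, -1.0]      # hypothetical teacher logits
good_student = [1.9, 0.6, -0.9]  # close to the teacher
bad_student = [-1.0, 0.5, 2.0]   # far from the teacher

loss_good = distillation_loss(good_student, teacher)
loss_bad = distillation_loss(bad_student, teacher)
```

A higher temperature flattens both distributions, so the student also learns from the relative probabilities the teacher assigns to incorrect classes.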

2.8 XLM-RoBERTa

Pre-trained multilingual models at scale, such as multilingual BERT (mBERT) [9] and cross-lingual language models (XLMs) [20], have led to considerable performance improvements for a wide variety of cross-lingual transfer tasks, including question answering, sequence labeling, and classification. XLM-RoBERTa [21], the multilingual version of RoBERTa [15], has pushed performance further. It was pre-trained on the newly created 2.5TB multilingual Common Crawl corpus containing 100 different languages, and it has shown strong improvements on low-resource languages compared to previous multilingual models.

2.9 BART

Bidirectional and auto-regressive transformer (BART) [22] is a generalization of BERT [9] and GPT [11] that takes advantage of the standard transformer [10]. Concretely, it uses a bidirectional encoder and a left-to-right decoder. It is trained by corrupting text with an arbitrary noising function and learning a model to reconstruct the original text. BART has shown phenomenal success when fine-tuned on text generation tasks such as translation, and it also performs well on comprehension tasks like question answering and classification.

2.10 ConvBERT

While BERT [9] and its variants have recently achieved incredible performance gains in many NLP tasks compared to previous models, BERT suffers from a large computation cost and memory footprint due to its reliance on the global self-attention block. Although all of its attention heads operate over the whole input sequence, BERT was found to be computationally redundant, since some heads only need to learn local dependencies. ConvBERT [23] is therefore an improved version of BERT [9], in which self-attention blocks are replaced with new mixed blocks that leverage convolutions to better model global and local context.

2.11 Reformer

Large transformer [10] models consistently achieve state-of-the-art results in a large variety of linguistic tasks, but training them on long sequences is costly and challenging. To address this issue, the Reformer [24] was introduced to improve the efficiency of transformers while retaining high performance and smooth training. Reformer is more efficient than the transformer [10] thanks to locality-sensitive hashing attention, reversible residual layers in place of the standard residuals, axial position encoding, and other optimizations.

2.12 T5

Transfer learning has emerged as one of the most influential techniques in NLP. Its efficiency in transferring knowledge to downstream tasks through fine-tuning has given birth to a range of innovative approaches. One of these approaches is transfer learning with a unified text-to-text transformer (T5) [25], which consists of a bidirectional encoder and a left-to-right decoder. This approach is reshaping the transfer learning landscape by being pre-trained on a combination of unsupervised and supervised tasks and by reframing every NLP task into a text-to-text format.

2.13 ELECTRA

Masked language modeling (MLM) approaches like BERT [9] have proven to be effective when transferred to downstream NLP tasks, although they are expensive and require large amounts of compute. Efficiently learning an encoder that classifies token replacements accurately (ELECTRA) [26] is a new pre-training approach that aims to overcome these computation problems by training two Transformer models: the generator and the discriminator. ELECTRA trains on a replaced token detection objective, using the discriminator to identify which tokens in the sequence were replaced by the generator. Unlike MLM-based models, ELECTRA's objective is defined over all input tokens rather than just the small subset that was masked, making it a more efficient pre-training approach.
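The replaced token detection objective amounts to a per-token binary classification. The sketch below builds the discriminator's targets from an original and a corrupted sequence; the generator is not modeled here, the corrupted sentence is hand-written for illustration, and the function name is hypothetical.

```python
def replaced_token_labels(original_tokens, corrupted_tokens):
    """Discriminator targets: 1 where the token was replaced, 0 where it is original."""
    return [int(o != c) for o, c in zip(original_tokens, corrupted_tokens)]


original = ["the", "chef", "cooked", "the", "meal"]
# In ELECTRA the replacement would be sampled from the generator; here it is fixed by hand.
corrupted = ["the", "chef", "ate", "the", "meal"]

labels = replaced_token_labels(original, corrupted)
print(labels)
```

Every position contributes a training signal, in contrast to MLM, where only the masked positions do.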

2.14 Longformer

While previous transformers focused on making changes to the pre-training methods, the long-document transformer (Longformer) [27] changes the transformer's self-attention mechanism. It has become the de facto standard for tackling a wide range of complex NLP tasks, with a new attention mechanism that scales linearly with sequence length and can therefore easily process longer sequences. Longformer's new attention mechanism is a drop-in replacement for the standard self-attention and combines a local windowed attention with a task-motivated global attention. Simply put, it replaces the transformer [10] attention matrices with sparse matrices for higher training efficiency.
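The combination of local windowed attention and global attention can be visualized as a sparse mask. The following sketch builds such a pattern for a short sequence; the window size, the choice of global position, and the function name are illustrative assumptions rather than the configuration used in [27].

```python
def longformer_style_mask(seq_len, window, global_positions=()):
    """Sparse attention pattern: entry [i][j] is 1 if token i may attend to token j.

    - Local: each token attends to neighbours within window // 2 positions.
    - Global: tokens in global_positions attend everywhere and are attended by everyone.
    """
    half = window // 2
    global_set = set(global_positions)
    mask = [[0] * seq_len for _ in range(seq_len)]
    for i in range(seq_len):
        for j in range(seq_len):
            if abs(i - j) <= half or i in global_set or j in global_set:
                mask[i][j] = 1
    return mask


# Position 0 plays the role of a classification token with global attention.
mask = longformer_style_mask(seq_len=6, window=2, global_positions=(0,))
for row in mask:
    print(row)
```

For a fixed window and a fixed number of global tokens, the number of non-zero entries grows linearly with the sequence length instead of quadratically.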

2.15 DeBERTa

DeBERTa [28] stands for decoding-enhanced BERT with disentangled attention. It is a pre-training approach that extends Google's BERT [9] and builds on RoBERTa [15]. Despite being trained on only half of the data used for RoBERTa, DeBERTa has been able to improve the efficiency of pre-trained models through the use of two novel techniques:

• Disentangled attention (DA): an attention mechanism that computes the attention weights among words using disentangled matrices based on two vectors that encode the content and the relative position of each word, respectively.

• Enhanced mask decoder (EMD): a pre-training technique used to replace the output softmax layer. It incorporates absolute positions in the decoding layer to predict masked tokens during model pre-training.
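As a sketch of the disentangled attention described in the first item, the unnormalized attention score between positions $i$ and $j$ can be written as a sum of three terms, following the formulation in the DeBERTa paper [28] as we recall it. Here $Q^c$ and $K^c$ are the query and key projections of the content vectors, $Q^r$ and $K^r$ are the projections of the relative position embeddings, and $\delta(i, j)$ is the (clipped) relative distance from position $i$ to position $j$.

$$
\tilde{A}_{i,j} = \underbrace{Q^c_i \, {K^c_j}^{\top}}_{\text{content-to-content}} + \underbrace{Q^c_i \, {K^r_{\delta(i,j)}}^{\top}}_{\text{content-to-position}} + \underbrace{K^c_j \, {Q^r_{\delta(j,i)}}^{\top}}_{\text{position-to-content}}
$$

The scores are then scaled and passed through a softmax as in standard self-attention.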

3 Approach

Transformer-based pre-trained language models have led to substantial performance gains, but careful comparison between different approaches is challenging. Therefore, we extend our study to uncover insights regarding their fine-tuning process and main characteristics. Our paper first aims to study the behavior of these models, following two approaches: a data-centric view focusing on the state and quality of the data, and a model-centric view giving more attention to model tweaks. Indeed, we will see how data processing affects their performance and how the adjustments and improvements made to the models over time change their performance. Thus, we seek to end with some takeaways regarding the optimal setup that aids in cross-validating a Transformer-based model, specifically the model tuning hyper-parameters and data quality.

3.1 Models Summary

In this section, we present the details of the base versions of the models introduced previously, as shown in Table A1. We aim to provide a fair comparison based on the following criteria: L (number of transformer layers), H (hidden state size or model dimension), A (number of attention heads), total number of parameters, tokenization algorithm, data used for pre-training, training devices and computational cost, training objectives, tasks on which the model performs well, and a short description of the model's key points [29]. All this information helps to understand the performance and behavior of different transformer-based models and to make the appropriate choice depending on the task and resources.

3.2 Configuration

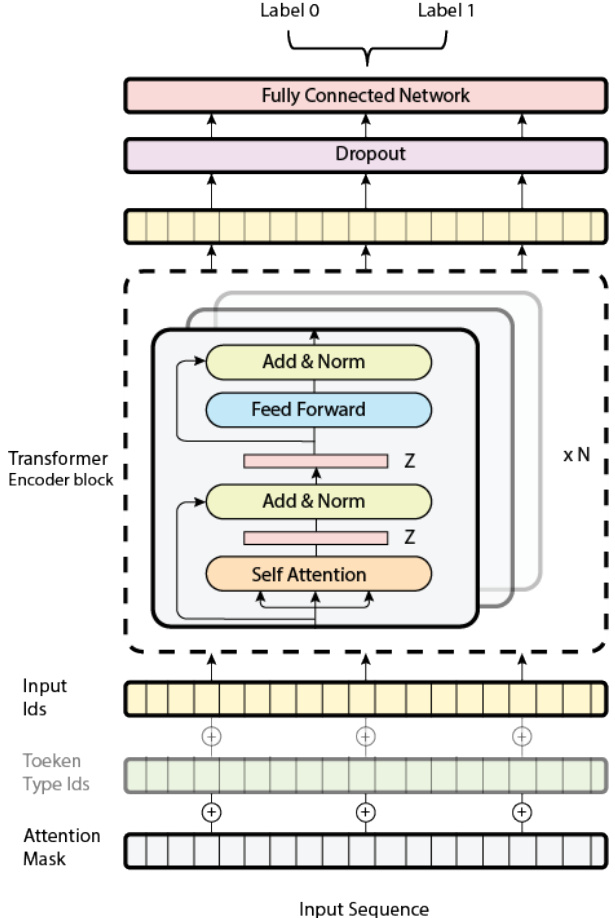

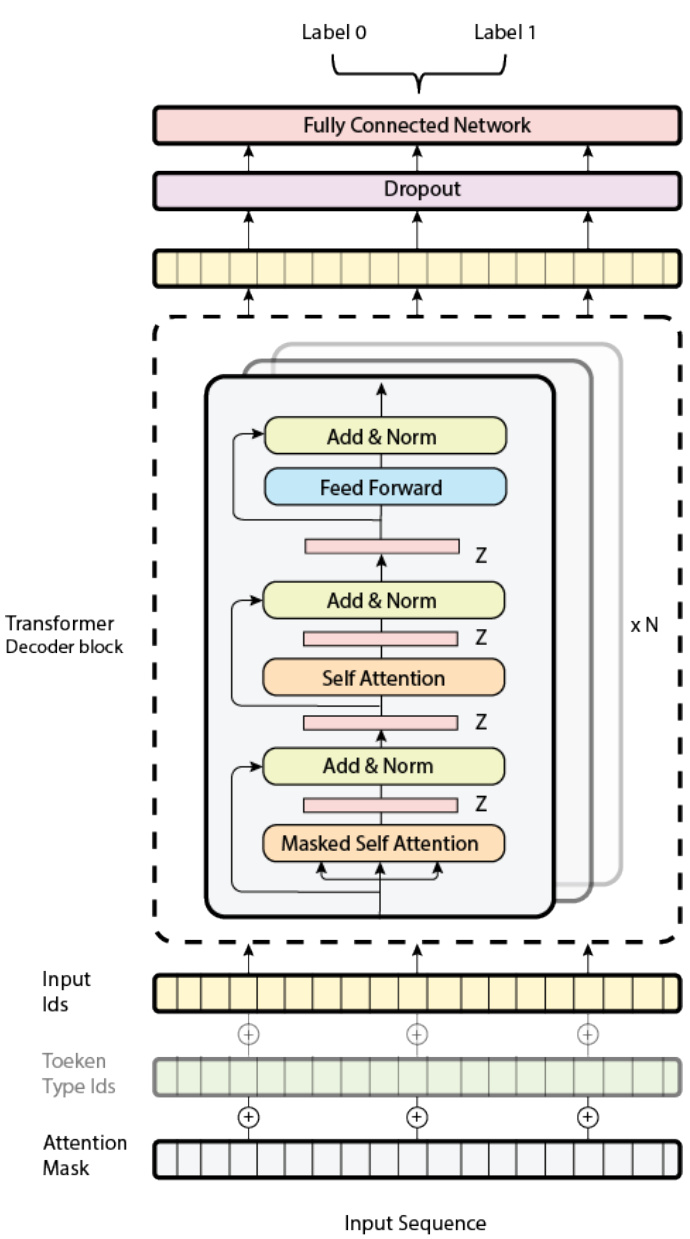

It should be noted that we have used almost the same architecture building blocks for all our implemented models, as shown in Figure 2 and Figure 3 for encoder-based and decoder-based models, respectively. In contrast, seq2seq models like BART are simply a bidirectional encoder followed by an autoregressive decoder. Each model is fed with the three required inputs, namely input ids, token type ids, and attention mask. However, for some models, the position embeddings are optional and can sometimes be completely ignored (e.g., RoBERTa); for this reason, we have blurred them a bit in the figures. Furthermore, it is important to note that we lowercased the dataset and tokenized it with tokenizers based on the WordPiece [30], SentencePiece [31], and byte-pair encoding [32] algorithms.
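To illustrate what the three inputs look like for a single review, the sketch below pads or truncates a list of token ids to a fixed length and derives the matching token type ids and attention mask. It is a simplified stand-in for what a tokenizer produces: the function name, the padding id of 0, and the example ids are assumptions made for illustration only.

```python
def encode(token_ids, max_length, pad_id=0):
    """Build fixed-length model inputs from a list of token ids.

    - input_ids:      token ids truncated or right-padded to max_length
    - token_type_ids: all zeros, since each review is a single segment
    - attention_mask: 1 for real tokens, 0 for padding
    """
    ids = token_ids[:max_length]
    n_pad = max_length - len(ids)
    return {
        "input_ids": ids + [pad_id] * n_pad,
        "token_type_ids": [0] * max_length,
        "attention_mask": [1] * len(ids) + [0] * n_pad,
    }


# Hypothetical ids for a short, already tokenized review.
example = encode([101, 2307, 3185, 102], max_length=6)
print(example)
```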

In our experiments, we used a highly optimized setup using only the base version of each pre-trained language model. For training and validation, we set a batch size of 8 and 4, respectively, and fine-tuned the models for 4 epochs over the data with a maximum sequence length of 384, chosen to match the length of the majority of reviews and our computational capabilities. The AdamW optimizer is used to optimize the models with a learning rate of 3e-5, and the epsilon (eps) used to improve numerical stability is set to 1e-6, which is the default value. Furthermore, the weight decay is set to 0.001 for all parameters except bias, LayerNorm.bias, and LayerNorm.weight, which are excluded from decay during fine-tuning by setting their weight decay to 0.000. We implemented all of our models using PyTorch and the transformers library from Hugging Face, and ran them on an NVIDIA Tesla P100-PCIE GPU (51G GPU RAM).
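The hyper-parameters above can be gathered into a small configuration, together with the usual way of splitting parameters into decayed and non-decayed groups. The sketch below is an assumption about how such a setup might be written, not the authors' code: the helper name, the dictionary keys, and the parameter names in the usage example are hypothetical, and the grouping is done on names only so that it runs without PyTorch.

```python
# Hyper-parameters as reported in the text.
CONFIG = {
    "learning_rate": 3e-5,
    "adam_eps": 1e-6,
    "weight_decay": 0.001,
    "epochs": 4,
    "max_seq_length": 384,
    "train_batch_size": 8,
    "eval_batch_size": 4,
}

# Parameters whose names contain one of these substrings are not decayed.
NO_DECAY = ("bias", "LayerNorm.bias", "LayerNorm.weight")


def build_param_groups(named_parameters, weight_decay=CONFIG["weight_decay"]):
    """Split (name, parameter) pairs into a decayed and a non-decayed group."""
    decay, no_decay = [], []
    for name, param in named_parameters:
        if any(nd in name for nd in NO_DECAY):
            no_decay.append(param)
        else:
            decay.append(param)
    return [
        {"params": decay, "weight_decay": weight_decay},
        {"params": no_decay, "weight_decay": 0.0},
    ]


# Usage with placeholder parameters (strings stand in for tensors).
toy_parameters = [
    ("encoder.layer.0.attention.self.query.weight", "W_q"),
    ("encoder.layer.0.attention.self.query.bias", "b_q"),
    ("encoder.layer.0.output.LayerNorm.weight", "ln_w"),
]
groups = build_param_groups(toy_parameters)
```

With PyTorch, the same groups built from `model.named_parameters()` could be passed to `torch.optim.AdamW(groups, lr=CONFIG["learning_rate"], eps=CONFIG["adam_eps"])`.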

Figure 2: The Architecture of the Transformer Encoder-Based Models.

3.3 Evaluation

Dataset. To fine-tune our models, we used the IMDb movie review dataset [33], a binary sentiment classification dataset of 50K highly polar movie reviews labelled in a balanced way between positive and negative. We chose it for our study because it is often used in research and is a very popular resource for researchers working on NLP and ML tasks, particularly those related to sentiment analysis and text classification, due to its accessibility, size, balance, and pre-processing. In other words, it is easily accessible and widely available, with 50K reviews that are well balanced between positive and negative, as shown in Figure 4. This helps prevent biases in the trained model. Additionally, it has already been pre-processed, with the text of each review cleaned and normalized.

Metrics. To assess the performance of the fine-tuned transformers on the IMDb movie reviews dataset, tracking the loss and accuracy learning curves for each model is an effective method. These curves can help detect incorrect predictions and potential overfitting, which are crucial factors to consider in the evaluation process. Moreover, widely used metrics, namely accuracy, recall, precision, and F1-score, are valuable to consider when dealing with classification problems. These metrics can be defined as:

Figure 3: The Architecture of the Transformer Decoder-B