Masked Vision and Language Pre-training with Unimodal and Multimodal Contrastive Losses for Medical Visual Question Answering

Abstract. Medical visual question answering (VQA) is a challenging task that requires answering clinical questions about a given medical image by considering both visual and language information. Because training data for medical VQA is small in scale, the pre-training and fine-tuning paradigm has become a commonly used solution for improving model generalization. In this paper, we present a novel self-supervised approach that learns unimodal and multimodal feature representations of input images and text from medical image caption datasets, using unimodal and multimodal contrastive losses together with masked language modeling and image text matching as pre-training objectives. The pre-trained model is then transferred to downstream medical VQA tasks. The proposed approach achieves state-of-the-art (SOTA) performance on three publicly available medical VQA datasets, with significant accuracy improvements of 2.2%, 14.7%, and 1.7%, respectively. In addition, we conduct a comprehensive analysis to validate the effectiveness of the different components of the approach and study different pre-training settings. Our code and models are available at https://github.com/pengfeiliHEU/MUMC.

Keywords: Medical Visual Question Answering, Masked Vision Language Pre-training, Unimodal and Multimodal Contrastive Losses

1 Introduction

Medical VQA is a specialized domain of VQA that aims to generate answers to natural language questions about medical images. Training deep-learning-based medical VQA models from scratch is very challenging because the medical VQA datasets available for research are relatively small. Many existing works leverage visual encoders pre-trained on external datasets to solve downstream medical VQA tasks, for example by utilizing denoising autoencoders [1] and meta-models [2]. These methods mainly transfer feature encoders that are pre-trained separately on unimodal (image or text) tasks.

Unlike unimodal pre-training approaches, learning through visual and language interactions can enhance both image and text feature representations, given the relatively richer resources of medical image caption datasets [3-5]. Liu et al. followed MoCo [19] and trained a teacher model for the visual encoder via a contrastive loss over different image views (generated by data augmentation) to improve the generalization of medical VQA [6]. Eslami et al. utilized CLIP [7] for visual model initialization and learned cross-modality representations from medical image-text pairs by maximizing the cosine similarity between the extracted features of medical images and their corresponding captions [8]. Cong et al. devised a framework featuring a semantic focusing module that emphasizes image regions pertinent to the caption and a progressive cross-modality comprehension module that iteratively enhances the comprehension of the correlation between the image and caption [9]. Chen et al. proposed a medical vision language pre-training approach that uses both masked image modeling and masked language modeling to jointly learn representations of medical images and their corresponding descriptions [10]. However, to the best of our knowledge, no existing method explores learning both unimodal and multimodal features at the pre-training stage for downstream medical VQA tasks.

In this paper, we propose a new self-supervised vision language pre-training (VLP) approach that applies Masked image and text modeling with Unimodal and Multimodal Contrastive losses (MUMC) in the pre-training phase to solve downstream medical VQA tasks. The model is pre-trained on image caption datasets to align visual and text information, and is then transferred to downstream VQA datasets. The unimodal and multimodal contrastive losses in our work are applied to (1) align image and text features; (2) learn the unimodal image encoder via momentum contrast between different views of the same image (where the views are generated by different image masks); and (3) learn the unimodal text encoder via momentum contrast. We also introduce a new masked image strategy that randomly masks the patches of the image with a probability of 25%, which serves as a data augmentation technique to further enhance the performance of the model. Our approach outperforms existing methods and sets new benchmarks on three medical VQA datasets [11-13], with significant improvements of 2.2%, 14.7%, and 1.7%, respectively. In addition, we conduct an analysis to verify the effectiveness of the different components and to find the optimal masking probability. We also conduct a qualitative analysis of the attention maps using Grad-CAM [14] to validate whether the corresponding part of the image is attended to when answering a question.

2 Methods

In this section, we provide a detailed description of the proposed approach, including the network architecture, the self-supervised pre-training objectives, and the fine-tuning procedure for downstream medical VQA tasks.

2.1 Model Architecture

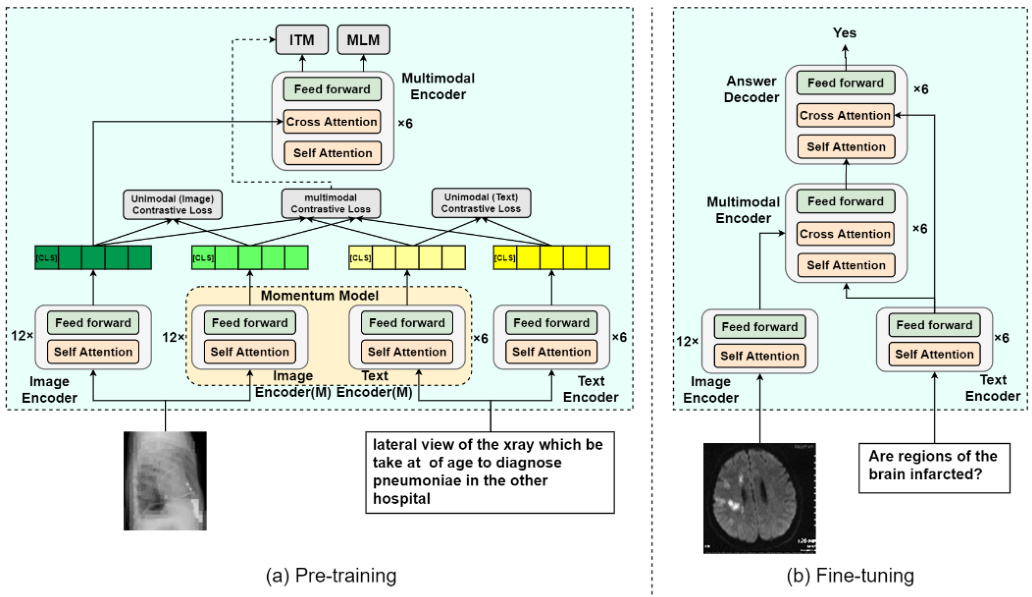

In the pre-training phase, the network architecture comprises an image encoder, a text encoder, and a multimodal encoder, all based on the transformer architecture [15]. As shown in Fig. 1(a), the image encoder uses a 12-layer Vision Transformer (ViT) [16] to extract visual features from the input images, while the text encoder is a 6-layer transformer initialized with the first 6 layers of pre-trained BERT [17]. The last 6 layers of BERT serve as the multimodal encoder, with cross-attention incorporated at each layer to fuse the visual and linguistic features and facilitate learning of multimodal interactions. The model is trained on medical image-caption pairs. An image is partitioned into patches of size $16\times16$, and 25% of the patches are randomly masked. The remaining unmasked patches are converted into a sequence of embeddings by the image encoder. The text, i.e., the image caption, is tokenized into a sequence of tokens using a WordPiece [18] tokenizer and fed into the BERT-based text encoder. In addition, a special [CLS] token is prepended to both the image and text sequences.

Fig. 1. Overview of the network architecture in both pre-training and fine-tuning phases.

To transfer the models trained on image caption datasets to the downstream medical VQA tasks, we use the weights from the pre-training stage to initialize the image encoder, text encoder, and multimodal encoder, as shown in Fig. 1(b). To generate answers, we add an answering decoder, a 6-layer transformer-based decoder that receives the multimodal embeddings and outputs text tokens. A [CLS] token serves as the initial input token for the decoder, and a [SEP] token is appended to signify the end of the generated sequence. The downstream VQA model is fine-tuned via the masked language model (MLM) loss [17], using ground-truth answers as targets.
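The role of the [CLS] and [SEP] tokens in answer generation can be illustrated with a small decoding loop. This is only a sketch under stated assumptions: the source does not say which decoding strategy is used, so greedy decoding is assumed here, and `greedy_decode` and `toy_next_token` are hypothetical names. The toy function stands in for the answering decoder, which in the real model would condition on the multimodal embeddings.

```python
def greedy_decode(next_token, max_len=10, start="[CLS]", end="[SEP]"):
    """Generate tokens one at a time, starting from [CLS] and stopping at [SEP]."""
    tokens = [start]
    while len(tokens) < max_len:
        tok = next_token(tokens)  # the decoder's best guess given the prefix
        if tok == end:
            break
        tokens.append(tok)
    return tokens[1:]  # drop the leading [CLS]


def toy_next_token(prefix):
    """Hypothetical stand-in for the answering decoder: replays a fixed answer."""
    script = ["chest", "x-ray", "[SEP]"]
    return script[len(prefix) - 1]


answer = greedy_decode(toy_next_token)
```

The `max_len` cap is a safeguard added for the sketch so that generation terminates even if [SEP] is never produced.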

2.2 Unimodal and Multimodal Contrastive Losses

The proposed self-supervised objective attempts to capture the semantic discrepancy between positive and negative samples across the unimodal and multimodal domains simultaneously. The unimodal contrastive loss (UCL) discriminates between examples of a single modality (images or text) in a latent space so that similar examples lie close together. The multimodal contrastive loss (MCL) learns the alignment between the two modalities by maximizing the similarity between images and their corresponding captions while pushing them away from negative examples. In the implementation, we maintain two momentum models, one for the image encoder and one for the text encoder, to generate different perspectives or representations of the same input sample, which serve as positive samples for contrastive learning.

In detail, we denote the image and caption embeddings from the unimodal image encoder and text encoder as $v_{cls}$ and $t_{cls}$. These are further processed by the transformations $g_{v}$ and $g_{t}$, which normalize the image and text embeddings and map them to lower-dimensional representations. The embeddings are inserted into a lookup table, and only the most recent 65,535 pairs of image-text embeddings are stored for contrastive learning. We utilize the momentum update technique originally proposed in MoCo [19]; the momentum models are updated every $k$ iterations, where $k$ is a hyperparameter. We denote the ground-truth one-hot similarity by $y_{i2i}(V)$, $y_{t2t}(T)$, $y_{i2t}(V)$, and $y_{t2i}(T)$, where the probability of negative pairs is 0 and the probability of the positive pair is 1. The unimodal and multimodal contrastive losses are defined through the cross-entropy $H$ as follows:

$$
\begin{aligned}
\mathcal{L}_{ucl} &= \frac{1}{2}\,\mathbb{E}_{(V,T)\sim D}\left[H\left(y_{i2i}(V),\frac{\exp\left(s(V,V_{i})/\tau\right)}{\sum_{n=1}^{N}\exp\left(s(V,V_{n})/\tau\right)}\right)+H\left(y_{t2t}(T),\frac{\exp\left(s(T,T_{i})/\tau\right)}{\sum_{n=1}^{N}\exp\left(s(T,T_{n})/\tau\right)}\right)\right] \\
\mathcal{L}_{mcl} &= \frac{1}{2}\,\mathbb{E}_{(V,T)\sim D}\left[H\left(y_{i2t}(V),\frac{\exp\left(s(V,T_{i})/\tau\right)}{\sum_{n=1}^{N}\exp\left(s(V,T_{n})/\tau\right)}\right)+H\left(y_{t2i}(T),\frac{\exp\left(s(T,V_{i})/\tau\right)}{\sum_{n=1}^{N}\exp\left(s(T,V_{n})/\tau\right)}\right)\right]
\end{aligned}
$$

where $s$ denotes the cosine similarity function, with $s(V,V_{i})=g_{v}(v_{cls})^{\top}g_{v}(v_{cls})_{i}$, $s(T,T_{i})=g_{t}(t_{cls})^{\top}g_{t}(t_{cls})_{i}$, $s(V,T_{i})=g_{v}(v_{cls})^{\top}g_{t}(t_{cls})_{i}$, and $s(T,V_{i})=g_{t}(t_{cls})^{\top}g_{v}(v_{cls})_{i}$, and $\tau$ is a learnable temperature parameter.
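To make the contrastive objectives concrete, the following standard-library Python sketch computes the per-sample UCL and MCL terms for one image-text pair, together with a momentum update and a bounded embedding store. It is an illustration under stated assumptions, not the authors' implementation: the function names, the fixed temperature `tau=0.07` (the source uses a learnable $\tau$), and the momentum coefficient `m` are all hypothetical, and the expectation over the dataset is omitted.

```python
import math
from collections import deque


def l2_normalize(x):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(a * a for a in x))
    return [a / norm for a in x]


def info_nce(query, keys, positive_index, tau=0.07):
    """Cross-entropy between a one-hot target and softmax(s(query, key_n) / tau)."""
    q = l2_normalize(query)
    logits = [sum(a * b for a, b in zip(q, l2_normalize(k))) / tau for k in keys]
    top = max(logits)  # subtract the max for numerical stability
    log_denominator = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_denominator - logits[positive_index]


def ucl_and_mcl(v, t, v_keys, t_keys, positive_index, tau=0.07):
    """Per-sample unimodal (i2i, t2t) and multimodal (i2t, t2i) losses."""
    ucl = 0.5 * (info_nce(v, v_keys, positive_index, tau)
                 + info_nce(t, t_keys, positive_index, tau))
    mcl = 0.5 * (info_nce(v, t_keys, positive_index, tau)
                 + info_nce(t, v_keys, positive_index, tau))
    return ucl, mcl


def momentum_update(online, momentum, m=0.995):
    """In-place exponential moving average of the online weights (m is assumed)."""
    for name, weight in online.items():
        momentum[name] = m * momentum[name] + (1.0 - m) * weight


# FIFO store: appending beyond maxlen evicts the oldest image-text pair.
queue = deque(maxlen=65535)

# Toy data: the positive keys sit at index 0; the others act as negatives.
v, t = [1.0, 0.0], [0.9, 0.1]
v_keys = [[1.0, 0.05], [0.0, 1.0], [-1.0, 0.0]]
t_keys = [[0.95, 0.1], [0.0, 1.0], [-1.0, 0.2]]
queue.extend(zip(v_keys, t_keys))
ucl, mcl = ucl_and_mcl(v, t, v_keys, t_keys, positive_index=0)

online, momentum = {"w": 1.0}, {"w": 0.0}
momentum_update(online, momentum, m=0.9)  # moves momentum["w"] 10% toward online["w"]
```

In this sketch the keys would come from the momentum encoders and the stored queue, while the query comes from the online encoder; a well-aligned positive pair yields a smaller loss than a mismatched one.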

2.3 Image Text Matching

We adopt an image text matching (ITM) strategy similar to prior works [20, 21] as one of the training objectives, creating a binary classification task in which negative text samples are randomly drawn from the same minibatch. The joint representation of the image and text is encoded by the multimodal encoder and used as input to the binary classification head. The ITM task is optimized using the cross-entropy loss:

$$
\mathcal{L}_{itm}=\mathbb{E}_{(V,T)\sim D}\,H\left(y_{itm},p_{itm}(V,T)\right)
$$

Here, $H(\cdot,\cdot)$ denotes the cross-entropy, $y_{itm}$ is the ground-truth label, and $p_{itm}(V,T)$ is the predicted class probability.
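As a rough illustration of how ITM training pairs could be assembled, the sketch below pairs each image with its own caption (label 1) and with one mismatched caption drawn from the same minibatch (label 0), then scores a prediction with binary cross-entropy. The helper names are hypothetical, and drawing exactly one negative per image uniformly at random is an assumption; the source states only that negatives are sampled randomly from the same minibatch.

```python
import math
import random


def build_itm_pairs(images, captions, rng):
    """Return (image, caption, label) triples; needs a batch of at least 2."""
    pairs = []
    n = len(images)
    for i in range(n):
        pairs.append((images[i], captions[i], 1))  # matched pair
        j = rng.choice([k for k in range(n) if k != i])
        pairs.append((images[i], captions[j], 0))  # in-batch negative
    return pairs


def binary_cross_entropy(label, p_match, eps=1e-12):
    """H(y_itm, p_itm) for a two-class problem, with clamping for stability."""
    p = min(max(p_match, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


rng = random.Random(0)
images = ["img0", "img1", "img2"]
captions = ["cap0", "cap1", "cap2"]
pairs = build_itm_pairs(images, captions, rng)
loss = binary_cross_entropy(1, 0.5)  # an uninformative prediction costs log(2)
```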

2.4 Masked Language Modeling

Masked Language Modeling (MLM) is another pre-training objective in our approach. It predicts masked tokens in the text based on both the visual information and the unmasked textual context. For each caption, 15% of the tokens are randomly masked and replaced with the special token [MASK]. Predictions of the masked tokens are conditioned on both the unmasked text and the image features. We minimize the cross-entropy loss for MLM:

$$
\mathcal{L}_{mlm}=\mathbb{E}_{(V,\hat{T})\sim D}\,H\left(y_{mlm},p_{mlm}(V,\hat{T})\right)
$$

where $H(\cdot,\cdot)$ is the cross-entropy, $\hat{T}$ denotes the masked text token, $y_{mlm}$ represents the ground truth of the masked text token, and $p_{mlm}(V,\hat{T})$ is the predicted probability of a masked token.
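The masking step can be sketched as follows. This minimal example masks each token independently with probability 0.15 and records the original token as the prediction target; `mask_tokens` is a hypothetical helper, per-token independent sampling is an assumption, and the sketch always substitutes [MASK] as the text above describes. Special tokens such as [CLS] would normally be excluded from masking, which is omitted here for brevity.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, rng=None):
    """Return (masked_tokens, targets); targets[i] is None where nothing was masked."""
    rng = rng or random.Random()
    masked, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # model input at this position
            targets.append(tok)   # ground truth to predict
        else:
            masked.append(tok)
            targets.append(None)  # position does not contribute to the loss
    return masked, targets


caption = "axial ct scan of the chest showing a nodule".split()
masked, targets = mask_tokens(caption, rng=random.Random(0))
```

Only positions with a non-`None` target would contribute to the MLM cross-entropy.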

2.5 Masked Image Strategy

Besides the training objectives, we introduce a masked image strategy as a data augmentation technique. In our experiments, input images are partitioned into patches that are randomly masked with a probability of 25%, and only the unmasked patches are passed through the network. Unlike previous methods [10, 22], we do not use a reconstruction loss [23]; masking serves purely as data augmentation. Because fewer patches are encoded per image, we can process more samples at each step, resulting in more efficient pre-training of vision-language models with a similar memory footprint.
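A small sketch of the patch selection shows where the savings come from. Two assumptions are made that the source does not state: a $224\times224$ input resolution, and dropping a fixed 25% of patches per image (rather than masking each patch independently) so that every image yields the same sequence length. `keep_patch_indices` is a hypothetical helper.

```python
import random


def keep_patch_indices(image_size=224, patch_size=16, mask_ratio=0.25, rng=None):
    """Return the sorted indices of patches that are passed to the image encoder."""
    rng = rng or random.Random()
    per_side = image_size // patch_size       # 224 // 16 = 14 patches per side
    num_patches = per_side * per_side         # 14 * 14 = 196 patches in total
    num_masked = round(mask_ratio * num_patches)
    kept = sorted(rng.sample(range(num_patches), num_patches - num_masked))
    return kept, num_patches


kept, num_patches = keep_patch_indices(rng=random.Random(0))
```

Under these assumptions, 147 of 196 patches reach the encoder, so each image contributes a sequence that is one quarter shorter than the unmasked one.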

3 Experiments

3.1 Datasets

Our model is pre-trained on three datasets: ROCO [3], MedICaT [4], and the ImageCLEF 2022 Image Caption Dataset [5]. ROCO comprises over 80,000 image-caption pairs. MedICaT includes over 217,000 medical ima