SleePyCo: Automatic Sleep Scoring with Feature Pyramid and Contrastive Learning

基于特征金字塔与对比学习的自动睡眠分期

Abstract—Automatic sleep scoring is essential for the diagnosis and treatment of sleep disorders and enables longitudinal sleep tracking in home environments. Conventionally, learning-based automatic sleep scoring on single-channel electroencephalogram (EEG) is actively studied because obtaining multi-channel signals during sleep is difficult. However, learning representation from raw EEG signals is challenging owing to the following issues: 1) sleep-related EEG patterns occur on different temporal and frequency scales and 2) sleep stages share similar EEG patterns. To address these issues, we propose a deep learning framework named SleePyCo that incorporates 1) a feature pyramid and 2) supervised contrastive learning for automatic sleep scoring. For the feature pyramid, we propose a backbone network named SleePyCo-backbone to consider multiple feature sequences on different temporal and frequency scales. Supervised contrastive learning allows the network to extract class disc rim i native features by minimizing the distance between intra-class features and simultaneously maximizing that between inter-class features. Comparative analyses on four public datasets demonstrate that SleePyCo consistently outperforms existing frameworks based on single-channel EEG. Extensive ablation experiments show that SleePyCo exhibits enhanced overall performance, with significant improvements in discrimination between the N1 and rapid eye movement (REM) stages.

摘要—自动睡眠评分对睡眠障碍的诊断治疗及家庭环境下的长期睡眠监测至关重要。传统上,基于单通道脑电图(EEG)的学习型自动睡眠评分研究十分活跃,因为睡眠期间获取多通道信号较为困难。然而,从原始EEG信号中学习表征存在两大挑战:1)睡眠相关EEG模式会出现在不同的时间和频率尺度上;2)不同睡眠阶段具有相似的EEG模式。为此,我们提出了名为SleePyCo的深度学习框架,其包含两大核心组件:1)特征金字塔结构;2)用于自动睡眠评分的监督对比学习。针对特征金字塔,我们设计了SleePyCo-backbone主干网络来处理不同时空尺度下的多特征序列。监督对比学习通过最小化类内特征距离同时最大化类间特征距离,使网络能提取具有类别判别力的特征。在四个公开数据集上的对比实验表明,SleePyCo在单通道EEG基准上持续优于现有框架。大量消融实验证实,该框架在N1阶段与快速眼动(REM)阶段的区分度上表现尤为突出,整体性能显著提升。

Index Terms—Automatic sleep scoring, multiscale representation, feature pyramid, metric learning, supervised contrastive learning, single-channel EEG.

索引术语—自动睡眠评分、多尺度表示、特征金字塔、度量学习、监督对比学习、单通道脑电图 (EEG)。

1 INTRODUCTION

1 引言

LEEP scoring, also referred to as ”sleep stage classiS fication” or ”sleep stage identification,” is critical for the accurate diagnosis and treatment of sleep disorders [1]. Individuals suffering from sleep disorders are at risk of fatal complications such as hypertension, heart failure, and arrhythmia [2]. In this context, poly so mno graph y (PSG) is considered the gold standard for sleep scoring and is used in the prognosis of typical sleep disorders (e.g., sleep apnea, narcolepsy, and sleepwalking) [3]. PSG consists of the biosignals associated with bodily activities such as brain activity (electroencephalogram, EEG), eye movement (electro o cul ogram, EOG), heart rhythm (electrocardiogram, ECG), and chin, facial, or limb muscle activity (electromyogram, EMG). Generally, experienced sleep experts examine these recordings based on sleep scoring rules and assign 20- or 30-s segments of the PSG data (called ”epoch”) to a sleep stage. R echt s chaff en and Kales (R&K) [4] and American Academy of Sleep Medicine (AASM) [5] standards serve as typical sleep scoring rules. The R&K standard classifies sleep stages into wakefulness (W), rapid eye movement (REM), and non-REM (NREM). NREM is further subdivided into S1, S2, S3, and S4 or N1, N2, N3, and N4. In the AASM rule, N3 and N4 are merged into N3, and it categorizes the PSG epochs into five sleep stages. Recently, the improved version of R&K—the AASM rule—has been widely utilized in manual sleep scoring. According to this rule, sleep experts should visually analyze and categorize the epochs of the entire night to form a hypnogram. Thus, manual sleep scoring is an arduous and time-consuming process [6]. By contrast, machine learning algorithms require less than a few minutes for sleep scoring [7], and their performance is comparable to that of sleep experts [8]. Therefore, automatic sleep scoring is highly desired for fast and accurate healthcare systems.

LEEP评分(也称为"睡眠阶段分类"或"睡眠阶段识别")对于睡眠障碍的准确诊断和治疗至关重要[1]。患有睡眠障碍的个体面临高血压、心力衰竭和心律失常等致命并发症的风险[2]。在此背景下,多导睡眠图(polysomnography,PSG)被认为是睡眠评分的金标准,并用于典型睡眠障碍(如睡眠呼吸暂停、发作性睡病和梦游症)的预后评估[3]。PSG包含与身体活动相关的生物信号,如脑活动(脑电图,EEG)、眼球运动(眼电图,EOG)、心律(心电图,ECG)以及下巴、面部或肢体肌肉活动(肌电图,EMG)。通常,经验丰富的睡眠专家根据睡眠评分规则检查这些记录,并将PSG数据的20或30秒片段(称为"epoch")分配给一个睡眠阶段。Rechtschaffen和Kales(R&K)[4]以及美国睡眠医学会(AASM)[5]标准是典型的睡眠评分规则。R&K标准将睡眠阶段分为清醒(W)、快速眼动(REM)和非快速眼动(NREM)。NREM进一步细分为S1、S2、S3和S4或N1、N2、N3和N4。在AASM规则中,N3和N4合并为N3,并将PSG片段分为五个睡眠阶段。最近,R&K的改进版本——AASM规则——在手动睡眠评分中得到广泛应用。根据这一规则,睡眠专家需要视觉分析并分类整晚的片段以形成睡眠图。因此,手动睡眠评分是一个艰巨且耗时的过程[6]。相比之下,机器学习算法只需不到几分钟即可完成睡眠评分[7],其性能与睡眠专家相当[8]。因此,自动睡眠评分对于快速准确的医疗系统具有高度需求。

Several methods have been developed for automatic sleep scoring based on deep neural networks. Basic one-toone schemes that utilize a single EEG epoch and produce its corresponding sleep stage, were proposed as early methods [9], [10]. In addition, one-to-many (i.e., multitask learning) [11] schemes have been presented. Because the utilization of multiple EEG epochs offers advantageous performance, several many-to-one methods [12], [13], [14], [15], the method of predicting the sleep stage of the target epoch with the given PSG signals, and many-to-many (i.e., sequence-to-sequence) [7], [16], [17] methods have been proposed for automatic sleep scoring. The existing methods are generally based on convolutional neural networks (CNNs) [9], [11], [12], [13], [14], [18], [19], recurrent neural networks (RNNs) [17], deep belief networks (DBNs) [20], convolutional recurrent neural networks (CRNNs) [7], [15], [16], [21], [22], [22], [23], [24], fully convolutional networks (FCNs) [25], [26], transformer [27], CNN $^+$ Transformer [10], [28], and other network architectures [29], [30].

基于深度神经网络的自动睡眠分期方法已发展出多种技术路线。早期研究提出了基础的一对一方案,即利用单个脑电图(EEG)时段预测对应睡眠阶段[9][10]。随后出现了一对多(即多任务学习)[11]架构。由于多时段脑电图能提升性能,研究者相继提出了多种多对一方法[12][13][14]15以及多对多(即序列到序列)[7][16][17]方案。现有方法主要基于以下架构:卷积神经网络(CNN)[9][11][12][13][14][18][19]、循环神经网络(RNN)[17]、深度信念网络(DBN)[20]、卷积循环神经网络(CRNN)[7][15][16][21][22][23][24]、全卷积网络(FCN)[25][26]、Transformer[27]、CNN+Transformer[10][28]及其他网络架构[29][30]。

To obtain an improved representation from EEG, the architectures are designed to extract multiscale features with varying temporal and frequency scales [9], [16], [22], [28], [30], [31], [32]. Phan et al. [9] and Qu et al. [28] used two distinct widths of max-pooling kernels on the spec tr ogram. Supratak et al. [16], Fiorillo et al. [31], and Huang et al. [30] designed two-stream networks with two distinct filter widths of the convolutional layer in representation learning. Further, Sun et al. [22] and Wang et al. [32] utilized convolutional layers with two or more distinguished filter widths in parallel. These studies utilized feature maps with different receptive field sizes to obtain richer information from given input signals. However, they could not obtain the advantages of multi-level features, which represent broad temporal scales and frequency characteristics.

为从脑电图(EEG)中获得更优表征,相关架构被设计用于提取具有不同时间尺度和频率尺度的多尺度特征[9][16][22][28][30][31][32]。Phan等人[9]和Qu等人[28]在频谱图上采用两种不同宽度的最大池化核。Supratak等人[16]、Fiorillo等人[31]以及Huang等人[30]设计了具有两种不同卷积层滤波器宽度的双流网络进行表征学习。此外,Sun等人[22]和Wang等人[32]并行使用了两种或更多不同滤波器宽度的卷积层。这些研究通过具有不同感受野大小的特征图从输入信号中获取更丰富信息,但未能充分利用表征宽时间尺度与频率特性的多层次特征优势。

Automatic scoring methods based on batch contrastive approaches have been proposed [33], [34], [35] to improve the representation of PSG signals without labeled data, as multiple self-supervised contrastive learning frameworks have been proposed for visual representation [36], [37], [38]. These batch-based approaches have been extensively studied because they outperform the traditional contrastive learning methods [39] such as the triplet [40] and N-pair [41] strategies. Mohsenvand et al. [33] proposed self-supervised contrastive learning for electroencephalogram classification. Jiang et al. [34] proposed self-supervised contrastive learning for EEG-based automatic sleep scoring. CoSleep [35] presented self-supervised learning for multiview EEG represent ation between the raw signal and spec tr ogram for automatic sleep scoring. These studies only solve the problem of lack of labeled PSG data and do not focus on accurate automatic scoring. Furthermore, they do not leverage the large amount of annotated PSG data.

基于批量对比方法的自动评分方法已被提出[33]、[34]、[35],旨在无需标注数据的情况下改进PSG信号的表示,正如针对视觉表示提出的多种自监督对比学习框架[36]、[37]、[38]。这些基于批量的方法因优于传统对比学习方法[39](如三元组[40]和N对[41]策略)而得到广泛研究。Mohsenvand等人[33]提出了用于脑电图分类的自监督对比学习。Jiang等人[34]提出了基于EEG的自动睡眠评分的自监督对比学习。CoSleep[35]提出了用于原始信号与频谱图间多视图EEG表示的自监督学习以实现自动睡眠评分。这些研究仅解决了缺乏标注PSG数据的问题,并未聚焦于精确的自动评分。此外,它们未充分利用大量已标注的PSG数据。

To address the aforementioned limitations, we propose SleePyCo, which jointly utilizes a feature pyramid [42] and supervised contrastive learning [43] to realize automatic sleep scoring. In SleePyCo, the feature pyramid considers broad temporal scales and frequency characteristics by utilizing multi-level features. The supervised contrastive learning framework allows the network to extract class discrim- inative features by minimizing the distance of intra-class features and maximizing that of inter-class features at the same time. To verify the effectiveness of the feature pyramid and supervised contrastive learning, we conducted an ablation study on the Sleep-EDF [44], [45] dataset that showed that SleePyCo exhibits improved overall performance in automatic sleep scoring by enhancing the discrimination between the N1 and REM stages. The results of extensive experiments and comparative analyses conducted on four public datasets further corroborate the performance of SleePyCo. SleePyCo achieves state-of-the-art performance by exploiting the representation power of the feature pyramid and supervised contrastive learning. The main contributions of this study are summarized as follows:

为解决上述局限性,我们提出了SleePyCo,该方法联合利用特征金字塔[42]和监督对比学习[43]来实现自动睡眠评分。在SleePyCo中,特征金字塔通过利用多层次特征来考虑广泛的时间尺度和频率特性。监督对比学习框架使网络能够通过同时最小化类内特征距离和最大化类间特征距离来提取具有类别区分性的特征。为验证特征金字塔和监督对比学习的有效性,我们在Sleep-EDF[44][45]数据集上进行了消融实验,结果表明SleePyCo通过增强N1期与REM期的区分度,提升了自动睡眠评分的整体性能。在四个公开数据集上开展的广泛实验和对比分析结果进一步证实了SleePyCo的优越性能。通过充分发挥特征金字塔和监督对比学习的表征能力,SleePyCo实现了当前最先进的性能。本研究的主要贡献可概括如下:

We demonstrate that SleePyCo outperforms the stateof-the-art frameworks on four public datasets via comparative analyses.

我们通过对比分析证明,SleePyCo在四个公共数据集上优于最先进的框架。

We verified through an ablation study that the feature pyramid and supervised contrastive learning show synergies. Further, we analyzed the results from the perspective of sleep scoring criteria.

我们通过消融实验验证了特征金字塔和监督对比学习具有协同效应。此外,我们还从睡眠评分标准的角度对结果进行了分析。

2 MODEL ARCHITECTURE

2 模型架构

2.1 Problem Statement

2.1 问题描述

The proposed model is designed to classify $L$ successive single-channel EEG epochs into sleep stages for the $L$ -th input EEG epoch (called the target EEG epoch). Formally, $L$ successive single-channel EEG epochs are defined as X(L) ∈ R3000L×1, and the corresponding ground truth is denoted as $\pmb{y}^{(L)}\in{0,1}^{N_{c}}$ , where ${\bf\dot{\boldsymbol{y}}}^{(L)}$ denotes the one-hot encoding label of the target EEG epoch and $\begin{array}{r}{\sum_{j=1}^{N_{c}}y_{j}^{(L)}=1}\end{array}$ $N_{c}$ indicates the number of classes and was set as 5, following the five-stage sleep classification in the AASM rule [5]. $\mathbf{X}^{\left(L\right)}$ can be described as $\mathbf{X}^{(L)}={\pmb{x}{1},\pmb{x}{2},...,\pmb{x}{L}},$ where $\pmb{x}_{i}\in\mathbb{R}^{3000\times1}$ is a 30-second single EEG epoch sampled at $100\mathrm{Hz}$ .

所提出的模型旨在将 $L$ 个连续的单通道脑电图(EEG)时段分类为第 $L$ 个输入EEG时段(称为目标EEG时段)的睡眠阶段。形式上,$L$ 个连续的单通道EEG时段定义为 X(L) ∈ R3000L×1,对应的真实标签表示为 $\pmb{y}^{(L)}\in{0,1}^{N_{c}}$ ,其中 ${\bf\dot{\boldsymbol{y}}}^{(L)}$ 表示目标EEG时段的独热编码标签,且 $\begin{array}{r}{\sum_{j=1}^{N_{c}}y_{j}^{(L)}=1}\end{array}$ 。$N_{c}$ 表示类别数量,根据AASM规则[5]中的五阶段睡眠分类设置为5。$\mathbf{X}^{\left(L\right)}$ 可描述为 $\mathbf{X}^{(L)}={\pmb{x}{1},\pmb{x}{2},...,\pmb{x}{L}}$ ,其中 $\pmb{x}_{i}\in\mathbb{R}^{3000\times1}$ 是以 $100\mathrm{Hz}$ 采样的30秒单EEG时段。

2.2 Model Components

2.2 模型组件

The network architecture of SleePyCo was inspired by IITNet [15]. As reported in [15], considering the temporal relations between EEG patterns in intra- and inter-epoch levels is important for automatic sleep scoring because sleep experts analyze the PSG data in the same manner. However, EEG patterns exhibit various frequencies and temporal characteristics. For instance, the sleep spindles in N2 occur in the frequency range of $12{-}14~\mathrm{Hz}$ between $0.5\substack{-2}\mathrm{s},$ whereas the slow wave activity in N3 occur in the frequency range of $0.5{-}2~\mathrm{Hz}$ throughout the N3 stage. To address this, we incorporated a feature pyramid into our model to enable multiscale representation because a feature pyramid can consider various temporal scales and frequency characteristics. This is based on the rationale that a feature pyramid considers various frequency components and spatial scales, such as shape and texture, in computer vision [46].

SleePyCo的网络架构灵感来源于IITNet [15]。如[15]所述,考虑脑电图(EEG)模式在时段内和时段间的时间关系对于自动睡眠评分至关重要,因为睡眠专家也是以相同方式分析多导睡眠图(PSG)数据。然而,EEG模式表现出不同的频率和时间特征。例如,N2阶段的睡眠纺锤波出现在$12{-}14~\mathrm{Hz}$频率范围内,持续时间$0.5\substack{-2}\mathrm{s}$,而N3阶段的慢波活动则在整个N3阶段以$0.5{-}2~\mathrm{Hz}$频率范围出现。为解决这一问题,我们在模型中引入了特征金字塔以实现多尺度表征,因为特征金字塔能够考虑不同的时间尺度和频率特征。这一设计基于计算机视觉[46]中特征金字塔可考虑不同频率分量和空间尺度(如形状和纹理)的原理。

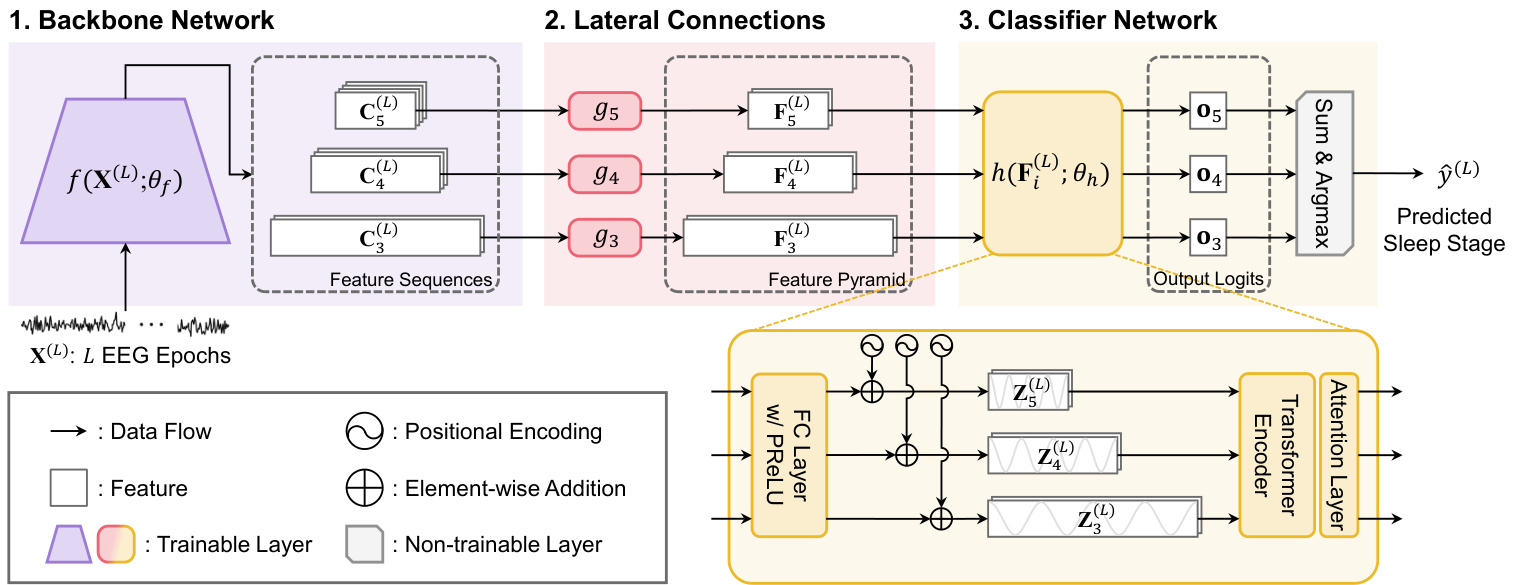

The proposed SleePyCo model comprises three major components: backbone network, lateral connections, and classifier network. The backbone network extracts feature sequences from raw EEG signals with multiple temporal scales and feature dimensions (number of channels). Thus, we designed a shallow network based on previous studies because they achieved state-of-the-art performance [7], [23], [25]. The lateral connections transform feature sequences with different feature dimensions into the same feature dimension via a single convolutional layer, resulting in a feature pyramid. For the classifier, a transformer encoder is employed to capture the sequential relations of EEG patterns on different temporal and frequency scales at subepoch levels.

提出的SleePyCo模型包含三个主要组件:骨干网络 (backbone network)、横向连接 (lateral connections) 和分类器网络 (classifier network)。骨干网络从原始脑电图 (EEG) 信号中提取具有多时间尺度和特征维度 (通道数) 的特征序列。因此,我们基于先前研究设计了一个浅层网络,因为这些研究取得了最先进的性能 [7], [23], [25]。横向连接通过单个卷积层将不同特征维度的特征序列转换为相同特征维度,从而形成特征金字塔。对于分类器,我们采用 Transformer 编码器来捕捉子时段级别上不同时间和频率尺度的脑电图模式的序列关系。

2.2.1 Backbone Network

2.2.1 骨干网络

To facilitate the feature pyramid, we propose a backbone network, named SleePyCo-backbone, containing five convolutional blocks, as proposed in [15], [25]. Each convolutional block is formed by the repetition of unit convolutional layers in the sequence of 1-D convolutional layer, 1-D batch normalization layer, and parametric rectified linear unit (PReLU) [47]. All convolutional layers have a filter width of 3, stride length of 1, and padding size of 1 to maintain the feature length within the same convolutional block. Max-pooling is performed between convolutional blocks to reduce the length of feature sequences. Additionally, a squeeze and excitation module [48] is included before the last activation function (PReLU) of each convolutional block. The details of the parameters, such as filter size, number of channels, and max-pooling size, are presented in Section 4.3.

为构建特征金字塔,我们提出了一种名为SleePyCo-backbone的主干网络,包含五个卷积块,如[15][25]所述。每个卷积块由单元卷积层按1维卷积层、1维批量归一化层和参数化修正线性单元(PReLU)[47]的顺序重复构成。所有卷积层均采用宽度为3的滤波器、步长为1、填充尺寸为1,以保持同一卷积块内的特征长度不变。卷积块之间通过最大池化操作缩减特征序列长度。此外,在每个卷积块的最终激活函数(PReLU)前加入了压缩激励模块[48]。具体参数细节(如滤波器尺寸、通道数和最大池化尺寸)详见第4.3节。

Figure 1: Model Architecture of SleePyCo. The purple, pink, and yellow regions indicate the backbone network, lateral connections, classifier network of $S l e e P y C o,$ and their corresponding outputs, respectively.

图 1: SleePyCo模型架构。紫色、粉色和黄色区域分别表示骨干网络、横向连接、$SleePyCo$的分类器网络及其对应输出。

Formally, SleePyCo-backbone takes $L$ successive EEG epochs as input, obtaining the following set of feature sequences:

形式上,SleePyCo-backbone 以 $L$ 个连续 EEG 时段作为输入,获得以下特征序列集合:

$$

{\mathbf{C}{3}^{(L)},\mathbf{C}{4}^{(L)},\mathbf{C}{5}^{(L)}}=f(\mathbf{X}^{(L)};\boldsymbol{\theta}_{f}),

$$

$$

{\mathbf{C}{3}^{(L)},\mathbf{C}{4}^{(L)},\mathbf{C}{5}^{(L)}}=f(\mathbf{X}^{(L)};\boldsymbol{\theta}_{f}),

$$

where $\mathbf{C}{i}^{(L)}$ denotes the output of the $i$ -th convolutional block of the backbone network, $f(\cdot)$ represents the backbone network, and $\theta_{f}$ indicates its trainable parameters. The size of the feature sequence can be denoted as Ci(L) $\mathbb{R}^{\lceil3000L/r_{i}\rceil\times c_{i}}$ , where $i\in{3,4,5}$ represents the stage index of the convolutional block, $c_{i}\in{192,256,256}$ denotes the feature dimension, $r_{i}\in{5^{2},5^{3},5^{4}}$ denotes the temporal reduction ratio, and $\lceil\cdot\rceil$ signifies the ceiling operation. Notably, the temporal reduction ratio $r_{i}$ is derived from the ratio of the length of the input $\mathbf{X}^{(L)}$ to that of the feature sequence $\mathbf{C}_{i}^{(L)}$ . The feature sequences from the first and second convolutional blocks were excluded from the feature pyramid owing to their large memory allocation. Thus, the stage indices of 1 and 2 were not considered in this study.

其中 $\mathbf{C}{i}^{(L)}$ 表示主干网络第 $i$ 个卷积块的输出,$f(\cdot)$ 代表主干网络,$\theta_{f}$ 表示其可训练参数。特征序列的尺寸可表示为 $\mathbb{R}^{\lceil3000L/r_{i}\rceil\times c_{i}}$,其中 $i\in{3,4,5}$ 表示卷积块的阶段索引,$c_{i}\in{192,256,256}$ 表示特征维度,$r_{i}\in{5^{2},5^{3},5^{4}}$ 表示时间缩减比率,$\lceil\cdot\rceil$ 表示向上取整运算。值得注意的是,时间缩减比率 $r_{i}$ 是由输入 $\mathbf{X}^{(L)}$ 长度与特征序列 $\mathbf{C}_{i}^{(L)}$ 长度的比值得出的。由于内存占用较大,第一和第二个卷积块的特征序列未被纳入特征金字塔,因此本研究未考虑阶段索引1和2。

2.2.2 Lateral Connections

2.2.2 横向连接

Lateral connections were attached at the end of the $3\mathrm{rd},$ $4\mathrm{th},$ and 5th convolutional blocks to form pyramidal feature sequences (i.e., feature pyramid) with identical feature dimensions. Importantly, the feature dimensions of the feature pyramid should be identical because all pyramidal feature sequences share a single classifier network. Because the feature vectors in the feature pyramid represent an assorted frequency meaning but the same semantic meaning (EEG patterns), the application of a shared classifier Ⅰis a pp ro pri ate in our methods. Formally, the feature pyramid ${\mathbf{F}{3}^{(L)},\mathbf{F}{4}^{(L)},\mathbf{F}_{5}^{(L)}}$ can be obtained using the following equation:

横向连接被附加在第3、第4和第5卷积块的末端,形成具有相同特征维度的金字塔特征序列(即特征金字塔)。重要的是,特征金字塔的特征维度应当保持一致,因为所有金字塔特征序列共享同一个分类器网络。由于特征金字塔中的特征向量代表不同的频率含义但相同的语义含义(EEG模式),在我们的方法中应用共享分类器是合适的。形式上,特征金字塔${\mathbf{F}{3}^{(L)},\mathbf{F}{4}^{(L)},\mathbf{F}_{5}^{(L)}}$可通过以下方程获得:

$$

\mathbf{F}{i}^{(L)}=g_{i}(\mathbf{C}{i}^{(L)};\theta_{g,i}),

$$

$$

\mathbf{F}{i}^{(L)}=g_{i}(\mathbf{C}{i}^{(L)};\theta_{g,i}),

$$

where $g_{i}(\cdot)$ denotes the lateral connection for the feature sequence Ci(L) w ith the trainable parameter $\theta_{g,i}$ . Each lateral connection consists of one convolutional layer with a filter width of 1 and results in a pyramidal feature sequence $\mathbf{F}{i}^{(L)}$ that describes the same temporal scale with $\mathbf{C}{i}^{({L})}$ . Thus, the size of the pyramidal feature sequences is formulated as Fi(L) ∈ R⌈3000L/ri⌉×df . In this study, the feature dimension $d_{f}$ was fixed at 128.

其中 $g_{i}(\cdot)$ 表示特征序列 $\mathbf{C}{i}^{(L)}$ 的横向连接,其可训练参数为 $\theta_{g,i}$。每个横向连接由一个滤波器宽度为1的卷积层组成,并生成金字塔特征序列 $\mathbf{F}{i}^{(L)}$,该序列与 $\mathbf{C}{i}^{(L)}$ 具有相同的时间尺度。因此,金字塔特征序列的尺寸可表示为 $\mathbf{F}_{i}^{(L)} \in \mathbb{R}^{\lceil 3000L/r_i \rceil \times d_f}$。本研究中,特征维度 $d_f$ 固定为128。

2.2.3 Classifier Network

2.2.3 分类器网络

The classifier network of $S l e e P y C o$ can analyze the temporal context in the feature pyramid and output the predicted sleep stage of the target epoch $\hat{y}^{(L)}$ . Formally, we denote the classifier network as $h(\bar{\mathbf{F}{i}^{(L)}};\boldsymbol{\theta_{h}}).$ , where $\theta_{h}$ is the trainable parameter. We utilized the encoder part of the Transformer [49] for sequential modelling of the feature pyramid extracted from the raw single-channel EEG. Overall, recurrent architectures such as LSTM [50] and GRU [51] were widely adopted in automatic sleep scoring. Interestingly, the transformer delivered a remarkable performance in various sequential modeling tasks, including automatic sleep scoring [27], [28], [52]. Owing to the large number of parameters of the original transformer, we reduced the model dimension $d_{m}$ (i.e., dimension of the queue, value, and query) and the feed-forward network dimension $d_{F F}$ in comparison to the original ones. We used the original configuration of the number of heads $N_{h}$ and the number of encoder layers $N_{e}$ . The parameters are detailed in Section 4.3.

$S l e e P y C o$ 的分类器网络能够分析特征金字塔中的时序上下文,并输出目标时段 $\hat{y}^{(L)}$ 的预测睡眠阶段。形式上,我们将分类器网络表示为 $h(\bar{\mathbf{F}{i}^{(L)}};\boldsymbol{\theta_{h}})$ ,其中 $\theta_{h}$ 为可训练参数。我们采用 Transformer [49] 的编码器部分对从原始单通道 EEG 提取的特征金字塔进行序列建模。总体而言,LSTM [50] 和 GRU [51] 等循环架构在自动睡眠评分中被广泛采用。有趣的是,Transformer 在包括自动睡眠评分 [27]、[28]、[52] 在内的多种序列建模任务中表现出色。由于原始 Transformer 参数量较大,我们降低了模型维度 $d_{m}$ (即队列、值和查询的维度)和前馈网络维度 $d_{F F}$ ,同时保留了头数 $N_{h}$ 和编码器层数 $N_{e}$ 的原始配置。具体参数详见第 4.3 节。

Prior to the transformer encoder, a shared fully connected layer with PReLU is employed to transform the feature dimension of the feature pyramid into the dimension of the transformer encoder. Specifically, we denote the transformed feature pyramid as F(L) $\mathbf{\tilde{F}}{i}^{(L)}\in\mathbf{\bar{\mathbb{R}}}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ . This layer maps EEG patterns from various convolutional stages into the same feature space, and thus, the shared classifier considers the temporal context, regardless of the feature level. Subsequently, because the transformer encoder is a recurrent-free architecture, the positional encoding $\mathbf{P}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ should be added to the input feature sequences to blend the temporal information:

在Transformer编码器之前,采用带有PReLU的共享全连接层将特征金字塔的特征维度转换为Transformer编码器的维度。具体而言,我们将转换后的特征金字塔表示为F(L) $\mathbf{\tilde{F}}{i}^{(L)}\in\mathbf{\bar{\mathbb{R}}}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ 。该层将来自不同卷积阶段的EEG模式映射到相同的特征空间,因此共享分类器会考虑时间上下文,而不受特征层级的影响。随后,由于Transformer编码器是一种无循环架构,需要将位置编码 $\mathbf{P}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ 添加到输入特征序列中以融合时序信息:

$$

\mathbf{Z}{i}^{(L)}=\tilde{\mathbf{F}}{i}^{(L)}+\mathbf{P}_{i}^{(L)},

$$

$$

\mathbf{Z}{i}^{(L)}=\tilde{\mathbf{F}}{i}^{(L)}+\mathbf{P}_{i}^{(L)},

$$

where $\mathbf{P}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ denotes the positional encoding matrix for the $i$ -th feature sequence. Herein, sinusoidal positional encoding was performed following a prior study [49]. However, because the same time indices are applicable at both ends of the pyramidal feature sequences, we modified the positional encoding to coincide with the absolute temporal position between them by hopping the temporal index of positional encoding. Thus, the element of $\mathbf{P}_{i}^{(L)}$ at the temporal index $t$ and dimension index $k$ can be defined as

其中 $\mathbf{P}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ 表示第 $i$ 个特征序列的位置编码矩阵。此处采用正弦位置编码方法 [49]。由于金字塔特征序列两端具有相同的时间索引,我们通过跳跃位置编码的时间索引,将其修改为与两者之间的绝对时间位置对齐。

where $\lfloor\cdot\rfloor$ denotes the floor operation and $\textit{R}=\left.r_{i}/r_{i-1}\right.$ indicates a temporal reduction factor (set as 5 in this study). $\mathbf{P}_{i}^{(L)}(t,k)$ degenerates into the original sinusoidal positional encoding for $i=3$ .

其中 $\lfloor\cdot\rfloor$ 表示向下取整运算,$\textit{R}=\left.r_{i}/r_{i-1}\right.$ 表示时间缩减因子(本研究设为5)。当 $i=3$ 时,$\mathbf{P}_{i}^{(L)}(t,k)$ 退化为原始正弦位置编码。

The output hidden states $\mathbf{H}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ for the $i$ -th pyramidal feature sequence can be formulated as

第 $i$ 个金字塔特征序列的隐藏状态输出 $\mathbf{H}{i}^{(L)}\in\mathbb{R}^{\lceil3000L/r_{i}\rceil\times d_{m}}$ 可表示为

$$

\mathbf{H}{i}^{(L)}=\mathrm{TransformerEncoder}(\mathbf{Z}_{i}^{(L)}),

$$

$$

\mathbf{H}{i}^{(L)}=\mathrm{TransformerEncoder}(\mathbf{Z}_{i}^{(L)}),

$$

where Transformer Encoder $\cdot(\cdot)$ denotes the encoder component of the transformer. To integrate the output hidden states at various time steps into a single feature vector, we utilized the attention layer [53] [54] as [17]. Foremostly, the output hidden states Hi(L) were projected into the attentional hidden states Ai(L) $\mathbf{A}{i}^{(L)}={\mathbf{a}{i,1},\mathbf{a}{i,2},...,\mathbf{a}{i,T_{i}}},$ , where $T_{i}$ is $\left\lceil3000L/r_{i}\right\rceil.$ , via a single fully-connected layer. Thereafter, the attention al feature vector $\bar{\mathbf{a}}{i}$ of the $i$ -th pyramidal feature sequence can be formulated via a weighted summation of $\mathbf{a}_{i,t}$ along the temporal dimension:

其中Transformer Encoder $\cdot(\cdot)$ 表示Transformer的编码器组件。为了将不同时间步的输出隐藏状态整合为单一特征向量,我们采用了注意力层[53][54]如[17]所述。首先,将输出隐藏状态Hi(L)投影为注意力隐藏状态Ai(L) $\mathbf{A}{i}^{(L)}={\mathbf{a}{i,1},\mathbf{a}{i,2},...,\mathbf{a}{i,T_{i}}},$ ,其中$T_{i}$为$\left\lceil3000L/r_{i}\right\rceil.$ ,通过单个全连接层实现。随后,第$i$个金字塔特征序列的注意力特征向量$\bar{\mathbf{a}}{i}$可通过沿时间维度对$\mathbf{a}_{i,t}$进行加权求和得到:

this function is defined as

该函数定义为

$$

s(\mathbf{a})=\mathbf{a}\mathbf{W}_{\mathrm{att}},

$$

$$

s(\mathbf{a})=\mathbf{a}\mathbf{W}_{\mathrm{att}},

$$

where $\mathbf{W}{\mathrm{att}}\in\mathbb{R}^{d_{m}\times1}$ represents the trainable parameter.

其中 $\mathbf{W}{\mathrm{att}}\in\mathbb{R}^{d_{m}\times1}$ 表示可训练参数。

Upon using the attention feature vector a obtained from Eq. 6, the output logits of the $i$ -th pyramidal feature sequence, $\mathbf{o}_{i}$ , can be formulated as follows:

在使用从公式6获得的注意力特征向量a后,第i个金字塔特征序列的输出logits $\mathbf{o}_{i}$ 可表示为:

$$

\mathbf{o}{i}=\bar{\mathbf{a}}{i}\mathbf{W}{\mathrm{a}}+\mathbf{b}_{\mathrm{a}},

$$

$$

\mathbf{o}{i}=\bar{\mathbf{a}}{i}\mathbf{W}{\mathrm{a}}+\mathbf{b}_{\mathrm{a}},

$$

where ${\mathbf W}{\mathrm{a}}\in\mathbb{R}^{d_{m}\times N_{c}}$ and $\mathbf{b}{\mathrm{a}}\in\mathbb{R}^{1\times N_{c}}$ denote the trainable weight and bias, respectively. Eventually, the sleep stage $\hat{y}^{(L)}$ of the target epoch was predicted based on the following relation.

其中 ${\mathbf W}{\mathrm{a}}\in\mathbb{R}^{d_{m}\times N_{c}}$ 和 $\mathbf{b}{\mathrm{a}}\in\mathbb{R}^{1\times N_{c}}$ 分别表示可训练的权重和偏置。最终,目标时段的睡眠阶段 $\hat{y}^{(L)}$ 通过以下关系式进行预测。

$$

\hat{y}^{(L)}=\operatorname{argmax}(\sum_{i\in{3,4,5}}\mathbf{o}_{i}),

$$

$$

\hat{y}^{(L)}=\operatorname{argmax}(\sum_{i\in{3,4,5}}\mathbf{o}_{i}),

$$

where argmax $(\cdot)$ is the operation that returns the index of the maximum value.

其中 argmax $(\cdot)$ 是返回最大值索引的操作。

3 SUPERVISED CONTRASTIVE LEARNING

3 监督对比学习

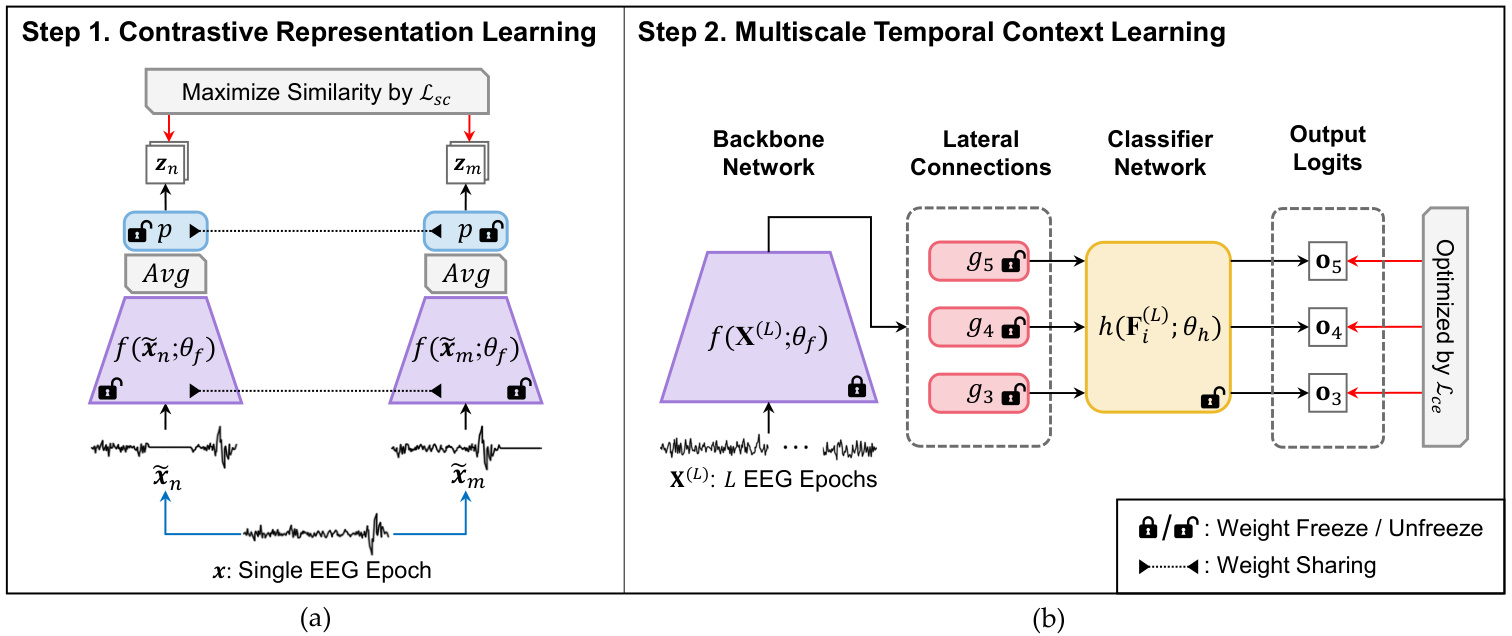

As illustrated in Fig. 2, our learning framework involves two training steps. The first step involves contrastive representation learning (CRL) to pretrain the backbone network $f(\cdot)$ via a contrastive learning strategy. In this step, the backbone network $f(\cdot)$ is trained to extract the class disc rim i native features based on the supervised contrastive loss [43]. The second step involves multiscale temporal context learning (MTCL) to learn sequential relations in feature pyramid. For this purpose, we acquire the learned weights of $f(\cdot)$ from CRL and freeze them to conserve the class disc rim i native features. The remaining parts of the network $(g(\cdot)$ and $h(\cdot))$ are trained to learn the multiscale temporal context by predicting the sleep stage of the target epoch.

如图 2 所示,我们的学习框架包含两个训练步骤。第一步通过对比表示学习 (contrastive representation learning, CRL) ,采用对比学习策略对主干网络 $f(\cdot)$ 进行预训练。在此步骤中,主干网络 $f(\cdot)$ 基于监督对比损失 [43] 学习提取类别判别特征。第二步通过多尺度时序上下文学习 (multiscale temporal context learning, MTCL) 在特征金字塔中学习序列关系。为此,我们从 CRL 中获取 $f(\cdot)$ 的已学习权重并冻结它们以保留类别判别特征。网络的其余部分 $(g(\cdot)$ 和 $h(\cdot))$ 则通过预测目标时相的睡眠阶段来学习多尺度时序上下文。

To prevent over fitting during the training procedures, we performed early stopping in both CRL and MTCL. Thus, validation was performed to track the validation cost (i.e., validation loss) at every certain training iteration (i.e., validation period, $\psi,$ ), and the training was terminated if the validation loss did not progress more than a certain number of times (i.e., early stopping patience, $\phi)$ . Early stopping in supervised contrastive learning (SCL) results in better pretrained parameters of the backbone network and prevents over fitting at the $M T C L$ step. The details of the hyper parameters used in $S C L$ are summarized in Section 4.4. Note that different validation periods, $\psi_{1}$ and $\psi_{2},$ were used in $C R L$ and $M T C L,$ , respectively. The specifics of the training framework are described in the following sections and Algorithm 1.

为防止训练过程中出现过拟合,我们在CRL和MTCL中均采用了早停机制。具体而言,通过验证集定期(即验证周期$\psi$)跟踪验证成本(即验证损失),若验证损失连续超过特定次数(即早停耐心值$\phi$)未改善,则终止训练。监督对比学习(SCL)中的早停机制可获得主干网络更优的预训练参数,并避免MTCL步骤中的过拟合。SCL所用超参数详情见第4.4节。需注意CRL与MTCL分别采用不同的验证周期$\psi_{1}$和$\psi_{2}$。训练框架的具体实现详见下文及算法1。

$$

\bar{\mathbf{a}}{i}=\sum_{t=1}^{T_{i}}\alpha_{i,t}\mathbf{a}_{i,t},

$$

where the attention weight $\alpha_{i,t}$ at time step $t$ is derived by

其中时间步 $t$ 的注意力权重 $\alpha_{i,t}$ 由

$$

\alpha_{i,t}=\frac{\exp(s(\mathbf{a}{i,t}))}{\sum_{t}\exp(s(\mathbf{a}_{i,t}))},

$$

$$

\alpha_{i,t}=\frac{\exp(s(\mathbf{a}{i,t}))}{\sum_{t}\exp(s(\mathbf{a}_{i,t}))},

$$

where $s(\cdot)$ denotes the attention scoring function that maps the attention al hidden state into a scalar value. Formally,

其中 $s(\cdot)$ 表示将注意力隐藏状态映射为标量值的注意力评分函数。形式上,

3.1 Contrastive Representation Learning

3.1 对比表征学习 (Contrastive Representation Learning)

In $C R L,$ , we implemented the training strategy of SCL [43] to extract the class disc rim i native features from a single EEG epoch. As illustrated in Fig. 2-(a), the CRL aimed to maximize the similarity between the two projected vectors based on two different views of a single EEG epoch. Simultaneously, the similarity between two projected vectors from different sleep stages was minimized as the optimization of supervised contrastive loss. Thus, we focused on reducing the ambiguous frequency characteristics by extracting dist ingui sh able representations of the sleep stage. Accordingly, a single EEG epoch was initially transformed by two distinct augmentation functions. Thereafter, the encoder network and projection network mapped them into the hyper sphere, which produced a latent vector $_{z}$ . The details are explained in the following sections: Augmentation Module, Encoder Network, Projection Network, and Loss Function, which constitute the major components of CRL.

在 $C R L,$ 中,我们采用了SCL [43] 的训练策略,从单个脑电图 (EEG) 时段中提取类别判别特征。如图 2-(a) 所示,CRL旨在基于单个EEG时段两种不同视角的投影向量最大化其相似性。同时,来自不同睡眠阶段的投影向量间的相似性被最小化,作为监督对比损失 (supervised contrastive loss) 的优化目标。因此,我们通过提取可区分的睡眠阶段表征来减少模糊的频率特征。具体而言,单个EEG时段首先通过两种不同的增强函数进行转换。随后,编码器网络和投影网络将其映射至超球面空间,生成潜在向量 $_{z}$ 。以下章节将详细说明构成CRL核心的模块:增强模块、编码器网络、投影网络和损失函数。

Figure 2: Illustration of the proposed supervised contrastive learning framework. (a) Contrastive representation learning (CRL); (b) multiscale temporal context learning (MTCL); red arrow is first backward path and blue arrow is $A u g(\cdot)$ .

图 2: 提出的监督对比学习框架示意图。(a) 对比表征学习(CRL); (b) 多尺度时序上下文学习(MTCL); 红色箭头为首次反向路径, 蓝色箭头为 $A u g(\cdot)$。

Data Augmentation Module: The data augmentation module, $A u g(\cdot).$ , transforms a single epoch of EEG signal $_{x}$ into a slightly different but semantically identical signal as follows:

数据增强模块:数据增强模块 $Aug(\cdot)$ 将单段脑电图信号 $_{x}$ 转换为语义相同但略有差异的信号,具体如下:

$$

\tilde{\pmb{x}}={\cal A}u g({\pmb x}),

$$

$$

\tilde{\pmb{x}}={\cal A}u g({\pmb x}),

$$

where $\tilde{x}$ denotes a different perspective on a single EEG epoch ${x}$ . With a given set of randomly sampled data ${\pmb{x}{p},\pmb{y}{p}}{p=1,\dots,N_{b}}$ (i.e., batch), two different augmentations result in ${\tilde{x}{q},\tilde{y}{q}}{q=1,\dots,2N_{b}.}$ , called a multi viewed batch [43] as illustrated in Fig. 2-(a), where $N_{b}$ is the batch size and $\tilde{y}{2p-1}=\tilde{y}{2p}=y_{p}$ . Because the augmentation set is crucial for contrastive learning, we adopted the verified transformation functions from previous studies [23], [33]. As listed in Table 1, we sequentially applied six transformations, namely, amplitude scale, time shift, amplitude shift, zero-masking, additive Gaussian noise, and band-stop filter with the probability of 0.5. In addition, we modified the hyperparameter of the transformation functions by considering the sampling rate and characteristics of the EEG signal.

其中 $\tilde{x}$ 表示对单个脑电图(EEG)时段 ${x}$ 的不同视角。给定一组随机采样的数据 ${\pmb{x}{p},\pmb{y}{p}}{p=1,\dots,N_{b}}$ (即批次),两种不同的增强操作会生成 ${\tilde{x}{q},\tilde{y}{q}}{q=1,\dots,2N_{b}.}$,称为多视角批次[43],如图2-(a)所示。这里 $N_{b}$ 是批次大小,且 $\tilde{y}{2p-1}=\tilde{y}{2p}=y_{p}$。由于增强集对对比学习至关重要,我们采用了先前研究[23][33]中已验证的变换函数。如表1所列,我们依次应用了六种变换:幅度缩放、时间偏移、幅度偏移、零掩码、加性高斯噪声和带阻滤波器,每种变换的应用概率为0.5。此外,我们还根据EEG信号的采样率和特性修改了变换函数的超参数。

Encoder Network: The encoder network transforms an augmented single-EEG epoch $\tilde{x}$ into a representation vector $\pmb{r}\in\mathbb{R}^{c_{5}}$ . The encoder network contains the sequence of the backbone network $f(\cdot)$ and the global average pooling operation $A v g(\cdot)$ . Thus, the backbone network initially transforms an augmented single-EEG epoch $\tilde{x}$ into the feature sequence $\mathbf{F}{5}^{(\mathrm{1})}$ . Then, the representation vector is obtained from the feature sequence via global average pooling along the temporal dimension. Formally, the representation vector is evaluated as $r=A v g(\mathbf{F}_{5}^{(1)})$ .

编码器网络:编码器网络将增强的单个脑电图时段 $\tilde{x}$ 转换为表示向量 $\pmb{r}\in\mathbb{R}^{c_{5}}$。该网络由骨干网络 $f(\cdot)$ 和全局平均池化操作 $A v g(\cdot)$ 依次组成。具体而言,骨干网络首先将增强的单个脑电图时段 $\tilde{x}$ 转换为特征序列 $\mathbf{F}{5}^{(\mathrm{1})}$,随后通过沿时间维度进行全局平均池化得到表示向量。其数学表达式为 $r=A v g(\mathbf{F}_{5}^{(1)})$。

Table 1: Data Augmentation Pipeline

表 1: 数据增强流程

| TransformationPipeline | Min | Max | Probability |

|---|---|---|---|

| amplitude scaling | 0.5 | 2 | 0.5each |

| time shift (samples) | -300 | 300 | 0.5each |

| amplitude shift (μV) | -10 | 10 | 0.5each |

| zero-masking (samples) | 0 | 300 | 0.5each |

| additiveGaussiannoise(o) | 0 | 0.2 | 0.5each |

| band-stop filter (2 Hz width) (lower bound frequency,Hz) | 0.5 | 30.0 | 0.5each |

Projection Network: The projection network is vital for mapping the representation vector $r$ into the normalized latent vector z = $\begin{array}{r}{z=\frac{z^{\prime}}{|z^{\prime}|{2}}\in\mathbb{R}^{d_{z}},}\end{array}$ , where $z^{\prime}=p(r;\theta_{p}),p(\cdot)$ denotes the projection network, $\theta_{p}$ represents its trainable parameter, and $d_{z}$ indicates the dimension of the latent vector. We use a multilayer perceptron [55] with a single hidden layer of size 128 to obtain a latent vector of size $d_{z}=128$ as the projection network. For sequential modeling, the $p(\cdot)$ is removed at the MTCL.

投影网络 (Projection Network):该网络对于将表示向量 $r$ 映射到归一化潜在向量 z = $\begin{array}{r}{z=\frac{z^{\prime}}{|z^{\prime}|{2}}\in\mathbb{R}^{d_{z}},}\end{array}$ 至关重要。其中 $z^{\prime}=p(r;\theta_{p})$,$p(\cdot)$ 表示投影网络,$\theta_{p}$ 代表其可训练参数,$d_{z}$ 表示潜在向量的维度。我们采用具有单个128维隐藏层的多层感知机 [55] 作为投影网络,最终获得维度为 $d_{z}=128$ 的潜在向量。在序列建模时,MTCL 层会移除 $p(\cdot)$ 功能。

Loss Function: For $C R L,$ we adopt the supervised contrastive loss proposed in [43]. The supervised contrastive loss simultaneously maximizes the similarity between positive pairs and promotes the deviations across negative pairs. In this study, samples annotated with the same sleep stage in a multi viewed batch are regarded as positives, and negatives otherwise. Formally, the supervised contrastive loss function can be formulated as

损失函数:对于$CRL$,我们采用[43]提出的监督对比损失。该损失函数同时最大化正样本对的相似性,并扩大负样本对之间的差异。本研究中,在多视角批次中标注为相同睡眠阶段的样本被视为正样本,其余为负样本。形式上,监督对比损失函数可表述为

$$

\mathcal{L}{s c}=-\sum_{n=1}^{2N_{b}}\frac{1}{N_{p}^{(n)}}\sum_{m=1}^{2N_{b}}\log\frac{\mathbb{1}{[n\neq m]}\mathbb{1}{[\tilde{y}{n}=\tilde{y}{m}]}\exp(z_{n}\cdot z_{m}/\tau)}{\sum_{a=1}^{2N_{b}}\mathbb{1}{[n\neq a]}\exp(z_{n}\cdot z_{a}/\tau)},

$$

$$

\mathcal{L}{s c}=-\sum_{n=1}^{2N_{b}}\frac{1}{N_{p}^{(n)}}\sum_{m=1}^{2N_{b}}\log\frac{\mathbb{1}{[n\neq m]}\mathbb{1}{[\tilde{y}{n}=\tilde{y}{m}]}\exp(z_{n}\cdot z_{m}/\tau)}{\sum_{a=1}^{2N_{b}}\mathbb{1}{[n\neq a]}\exp(z_{n}\cdot z_{a}/\tau)},

$$

where $N_{p}^{(n)}$ denotes the number of positives for the $n$ -th sample in a multi viewed batch excluding itself, $\mathbb{1}{[\cdot]}$ denotes the indicator function, $\tilde{{\pmb y}}{n}$ denotes the ground truth corresponding to $z_{n},$ · operation denotes the inner product between two vectors, and $\tau\in\mathbb{R}^{+}$ denotes a scalar temperature parameter $\mathrm{\Delta}\tau=0.07$ in all present experiments).

其中 $N_{p}^{(n)}$ 表示多视角批次中第 $n$ 个样本除自身外的正样本数量,$\mathbb{1}{[\cdot]}$ 表示指示函数,$\tilde{{\pmb y}}{n}$ 表示与 $z_{n}$ 对应的真实标签,· 运算表示两个向量的内积,$\tau\in\mathbb{R}^{+}$ 表示标量温度参数(当前所有实验中 $\mathrm{\Delta}\tau=0.07$)。

3.2 Multiscale Temporal Context Learning

3.2 多尺度时序上下文学习

As illustrated in Fig. 2-(b), the second step of SleePyCo executes $L$ successive EEG epochs $\mathbf{\boldsymbol{x}}^{(L)}$ to analyze both the intra- and inter-epoch temporal contexts $\mathit{\Delta}L=10$ in this study). The performance obtained considering intra- and inter-epoch temporal contexts [15] is better than that considering only the intra-epoch. However, it is difficult for the network to capture the EEG patterns of the previous epochs, because only the label of the target epoch is provided. To resolve this, we fixed the parameters of the backbone network $f(\cdot)$ to maintain and utilize the class disc rim i native features learned from CRL. By contrast, the remaining parts of the network, which are lateral connections $g(\cdot)$ and classifier network $h(\cdot)$ , are learned to score the sleep stage of the target epoch based on the following loss function:

如图 2-(b) 所示,SleePyCo 的第二步执行 $L$ 个连续的 EEG 时段 $\mathbf{\boldsymbol{x}}^{(L)}$ 来分析时段内和时段间的时间上下文 (本研究设定 $\mathit{\Delta}L=10$)。考虑时段内和时段间时间上下文的性能 [15] 优于仅考虑时段内上下文的情况。然而,由于仅提供了目标时段的标签,网络难以捕捉先前时段的 EEG 模式。为解决这一问题,我们固定了骨干网络 $f(\cdot)$ 的参数,以保持并利用从 CRL 中学到的类别判别特征。相比之下,网络的其余部分 (横向连接 $g(\cdot)$ 和分类器网络 $h(\cdot)$) 则通过学习以下损失函数来对目标时段的睡眠阶段进行评分:

$$

\mathcal{L}{c e}=-\sum_{i\in{3,4,5}}\sum_{j=1}^{N_{c}}y_{j}^{(L)}\log\left(\frac{\exp(o_{i,j})}{\sum_{k=1}^{N_{c}}\exp(o_{i,k})}\right),

$$

$$

\mathcal{L}{c e}=-\sum_{i\in{3,4,5}}\sum_{j=1}^{N_{c}}y_{j}^{(L)}\log\left(\frac{\exp(o_{i,j})}{\sum_{k=1}^{N_{c}}\exp(o_{i,k})}\right),

$$

where yj $y_{j}^{(L)}$ ) denotes the $j$ -th element of one-hot encoding label, and $o_{i,j}$ represents the $j$ -th element of output logits from the $i$ -th convolutional stage. This loss function follows the summation of cross entropy over the output logits from each convolutional block.

其中 ( y_{j}^{(L)} ) 表示独热编码标签的第 ( j ) 个元素,( o_{i,j} ) 表示第 ( i ) 个卷积阶段输出 logits 的第 ( j ) 个元素。该损失函数对每个卷积块的输出 logits 进行交叉熵求和。

Because all pyramidal feature sequences share a single classifier network, the classifier network considers feature sequences across a broad scale. Thus, Eq. 13 facilitates the classifier network to analyze the temporal relation between the EEG patterns at different temporal scales and frequency characteristics. Consequently, the classifier network $h(\cdot)$ models the temporal information based on the pyramidal feature sequences Fi(L) derived from analyzing the intraand inter-epoch temporal contexts.

由于所有金字塔特征序列共享同一个分类器网络,该网络能够考虑跨广泛尺度的特征序列。因此,式13促使分类器网络分析不同时间尺度和频率特性下脑电图(EEG)模式之间的时序关系。最终,分类器网络$h(\cdot)$基于从分析时段内和时段间时序上下文得到的金字塔特征序列Fi(L)来建模时序信息。

4 EXPERIMENTS

4 实验

4.1 Datasets

4.1 数据集

Four public datasets, including PSG recordings and their associated sleep stages, were utilized to assess the performance of SleePyCo: Sleep-EDF (2018 version), Montreal Archive of Sleep Studies (MASS), Ph y sion et 2018, and Sleep Heart Health Study (SHHS). The number of subjects, utilized EEG channels, evaluation scheme, number of held-out validation subjects, and sample distribution are presented in Table 2. In this study, all EEG signals except the Sleep-EDF dataset were down sampled to $100~\mathrm{Hz}$ . We did not employ preprocessing to EEG signals except for down sampling. For all datasets, we discarded the EEG epochs with annotations that were not related to the sleep stage, such as MOVEMENT class. In addition, we merged N3 and N4 into N3 to consider the five-class problem for datasets annotated with R&K [4].

为评估SleePyCo的性能,研究使用了四个包含PSG记录及对应睡眠分期的公开数据集:Sleep-EDF(2018版)、蒙特利尔睡眠研究档案库(MASS)、Physionet 2018以及睡眠心脏健康研究(SHHS)。表2展示了各数据集的受试者数量、使用的EEG通道、评估方案、预留验证集人数及样本分布情况。本研究中,除Sleep-EDF数据集外,所有EEG信号均降采样至$100~\mathrm{Hz}$,且除降采样外未进行其他预处理。对于所有数据集,我们剔除了标注与睡眠分期无关的EEG时段(如MOVEMENT类)。此外,为将R&K[4]标注数据集统一为五分类问题,我们将N3与N4阶段合并为N3。

Sleep-EDF: The Sleep-EDF Expanded dataset [44], [45] (2018 version) includes 197 PSG recordings containing EEG, EOG, chin EMG, and event markers. The Sleep-EDF dataset

Sleep-EDF:Sleep-EDF扩展数据集[44][45](2018版)包含197份PSG记录,涵盖脑电图(EEG)、眼电图(EOG)、下颌肌电图(EMG)及事件标记。

Algorithm us 1 Training Algorithm

算法1 训练算法

comprises two kinds of studies: SC for 79 healthy Caucasian individuals without sleep disorders and ST for 22 subjects of a study on the effects of Temazepam on sleep. In this study, the SC recordings (subjects aged 25–101 years) were used based on previous studies [7], [17], [21], [25], [27]. According to the R&K rule [4], sleep experts score each halfminute epoch with one of the eight classes {WAKE, REM, N1, N2, N3, N4, MOVEMENT, UNKNOWN}. Owing to the larger size of the class WAKE group compared to others, we included only 30 min of WAKE epochs before and after the sleep period.

包含两类研究:SC针对79名无睡眠障碍的健康白种人,ST针对22名受试者进行替马西泮对睡眠影响的研究。本研究中,基于先前研究[7]、[17]、[21]、[25]、[27],采用了SC记录(受试者年龄25-101岁)。根据R&K规则[4],睡眠专家将每半分钟时段划分为八种类别之一{清醒(WAKE)、快速眼动睡眠(REM)、N1、N2、N3、N4、体动(MOVEMENT)、未知(UNKNOWN)}。由于清醒(WAKE)类别的样本量显著大于其他类别,我们仅纳入睡眠周期前后各30分钟的清醒时段数据。

MASS: The Montreal Archive of Sleep Studies (MASS) dataset [56] contains PSG recordings from 200 individuals (97 males and 103 females). This dataset includes five subsets (SS1, SS2, SS3, SS4, and SS5) that are classified based on the research purpose and data acquisition protocols. The AASM standard [5] (SS1 and SS3 subsets) or the R&K standard [4] (SS2, SS4, and SS5 subsets) was used for the manual annotation. Specifically, the SS1, SS2, and SS4 subsets were annotated with 20-s EEG epochs instead of SS3 and SS5 subsets. Because 30-s EEG samples are used in SCL, 5-s segments of signals before and after the EEG epoch were considered for the case of SS1, SS2, and SS4. In MTCL, an equal length of EEG signals was used for all subsets $(300~\mathrm{s}$ in this study).

MASS: Montreal睡眠研究档案库 (MASS) 数据集 [56] 包含200名受试者 (97名男性和103名女性) 的PSG记录。该数据集包含五个子集 (SS1、SS2、SS3、SS4和SS5),根据研究目的和数据采集协议进行分类。手动标注采用AASM标准 [5] (SS1和SS3子集) 或R&K标准 [4] (SS2、SS4和SS5子集)。具体而言,SS1、SS2和SS4子集采用20秒EEG时段进行标注,而SS3和SS5子集则未采用。由于SCL使用30秒EEG样本,对于SS1、SS2和SS4的情况,考虑了EEG时段前后5秒的信号段。在MTCL中,所有子集使用相同长度的EEG信号 (本研究中为$300~\mathrm{s}$)。

Table 2: Experimental settings and dataset statistics

表 2: 实验设置与数据集统计

| 数据集 | 受试者数量 | EEG | 实验设置 | 保留验证集 | 清醒 | N1 | N2 | N3 | REM | 总计 |

|---|---|---|---|---|---|---|---|---|---|---|

| Sleep-EDF | 79 | Fpz-Cz | 10折交叉验证 | 7名受试者 | 69,824 (35.8%) | 21,522 (10.8%) | 69,132 (34.7%) | 13,039 (6.5%) | 25,835 (13.0%) | 199,352 |

| MASS | 200 | C4-A1 | 20折交叉验证 | 10名受试者 | 31,184 (13.6%) | 19,359 (8.5%) | 107,930 (47.1%) | 30,383 (13.3%) | 40,184 (17.5%) | 229,040 |

| Physio2018 | 994 | C3-A2 | 5折交叉验证 | 50名受试者 | 157,945 (17.7%) | 136,978 (15.4%) | 377,870 (42.3%) | 102,592 (11.5%) | 116,877 (13.1%) | 892,262 |

| SHHS | 5,793 | C4-A1 | 训练/测试:0.7:0.3 | 100名受试者 | 1,691,288 (28.8%) | 217,583 (3.7%) | 2,397,460 (40.9%) | 739,403 (12.6%) | 817,473 (13.9%) | 5,863,207 |

Physio2018: The Physio2018 dataset is contributed by the Computational Clinical Neuro physiology Laboratory at Massachusetts General Hospital and was applied to detect arousal during sleep in the 2018 Physionet challenge [44], [57]. Owing to the un availability of annotation for the evaluation set, we used the training set containing PSG recordings for 994 subjects aged 18–90. Thereafter, these recordings were annotated with the AASM rules [5], and we employed only C3–A2 EEG recordings for the singlechannel EEG classification.

Physio2018:Physio2018数据集由马萨诸塞州总医院计算临床神经生理学实验室贡献,用于2018年Physionet挑战赛中检测睡眠期间的觉醒状态[44][57]。由于评估集缺乏标注,我们使用了包含994名18-90岁受试者的PSG记录训练集。这些记录随后按照AASM规则[5]进行标注,在单通道EEG分类中仅采用C3-A2导联的EEG记录。

SHHS: The SHHS dataset [58], [59] is a multi center cohort research that is designed to examine the influence of sleep apnea on cardiovascular diseases. The collection is composed of two rounds of PSG records: Visit 1 (SHHS-1) and Visit 2 (SHHS-2), wherein each record contains twochannel EEGs, two-channel EOGs, a single-channel EMG, a single-channel ECG, and two-channel respiratory inductance ple thys mo graph y, as well as the data from location sensors, light sensors, pulse oximeters, and airflow sensors. Each epoch was assigned a value of W, REM, N1, N2, N3, N4, MOVEMENT, and UNKNOWN according to the R&K rule [4]. In this study, the single-channel EEG (C4–A1) was analyzed from 5,793 SHHS-1 recordings.

SHHS: SHHS数据集[58][59]是一项多中心队列研究,旨在探究睡眠呼吸暂停对心血管疾病的影响。该数据集包含两轮PSG记录:第一次访问(SHHS-1)和第二次访问(SHHS-2),每条记录均包含双通道脑电图(EEG)、双通道眼电图(EOG)、单通道肌电图(EMG)、单通道心电图(ECG)、双通道呼吸感应体积描记信号,以及定位传感器、光传感器、脉搏血氧仪和气流传感器的数据。根据R&K规则[4],每个时段被标记为W、REM、N1、N2、N3、N4、MOVEMENT或UNKNOWN值。本研究从5,793份SHHS-1记录中提取单通道脑电图(C4–A1)进行分析。

4.2 Backbone Networks for Ablation Study

4.2 消融实验的骨干网络

A direct comparison between the automatic sleep scoring methods would not be justified depending on the experimental settings such as the data processing method and training framework. For fair comparison with other stateof-the-art backbones, we implemented four backbones in the current training framework: Deep Sleep Net [16], IITNet [15], U-Time [25], and XSleepNet [7]. The backbones were trained and evaluated in the proposed framework on the same datasets (Sleep-EDF, MASS, Physio2018, and SHHS).

由于数据处理方法和训练框架等实验设置不同,直接比较自动睡眠评分方法并不合理。为了与其他先进骨干网络进行公平对比,我们在当前训练框架中实现了四种骨干网络:Deep Sleep Net [16]、IITNet [15]、U-Time [25] 和 XSleepNet [7]。这些骨干网络在相同数据集(Sleep-EDF、MASS、Physio2018 和 SHHS)上采用所提框架进行训练和评估。

Deep Sleep Net Backbone: The structure of Deep Sleep Net [16] consists of a dual path CNN for representation learning and two layers of LSTM for sequential learning. To compare the representation power of the backbone network, we considered only the dual-path CNN component of DeepSleepNet. The filter width of a single path is smaller for capturing certain EEG patterns, and that of the other path is larger to consider the frequency components from the EEG. To aggregate features from each CNN path, interpolation is performed after the CNN path with larger filters. Thereafter, we concatenated these two features and applied the two convolutional layers following [15]. The output size from the last layer was $16\times128$ with a single EEG epoch.

深度睡眠网络主干架构:Deep Sleep Net [16]的结构由用于表征学习的双路径CNN和用于序列学习的两层LSTM组成。为比较主干网络的表征能力,我们仅考虑DeepSleepNet的双路径CNN组件。其中一条路径的滤波器宽度较小以捕捉特定EEG模式,另一条路径的滤波器宽度较大以提取EEG频率成分。为聚合各CNN路径的特征,在较大滤波器的CNN路径后执行插值操作。随后我们将这两个特征拼接,并按照[15]的方法应用两个卷积层。单个EEG时段在最后一层的输出尺寸为$16\times128$。

IITNet Backbone: IITNet [15] uses the modified onedimensional ResNet-50 for extracting the representation of the raw EEG signal. Similar to the backbone network of SleePyCo, the backbone network of IITNet forms a five-block structure. However, the backbone network of IITNet has a deep architecture (49 convolutional layers), whereas that of $S l e e P y C o$ is shallow (13 convolutional layers). Given a single EEG epoch of size $3000\times1$ , the backbone network of IITNet outputs feature sequences of sizes $1500\times16$ , $750\times64,$ $375\times64,$ , $94\times128,$ and $47\times128$ from each convolutional block.

IITNet主干网络:IITNet [15] 使用改进的一维ResNet-50来提取原始EEG信号的表征。与SleePyCo的主干网络类似,IITNet的主干网络采用五块结构。但IITNet的主干网络具有深层架构(49个卷积层),而$SleePyCo$的网络较浅(13个卷积层)。给定尺寸为$3000\times1$的单个EEG时段,IITNet主干网络从每个卷积块输出尺寸分别为$1500\times16$、$750\times64$、$375\times64$、$94\times128$和$47\times128$的特征序列。

U-Time Backbone: U-Time [25] is a fully convolutional network for time-series segmentation applied for automatic sleep scoring. Similar to previous fully convolutional networks [60], [61], U-Time is the encoder–decoder structure, with the encoder for feature extraction and the decoder for time-series segmentation. Accordingly, we implemented only the encoder component to capture EEG patterns from raw EEG signals. The U-Time backbone comprises five convolutional blocks, similar to the proposed backbone network. However, the output from the last convolutional block could not be calculated from the single-epoch EEG because the encoder was designed to analyze 35 epochs of the EEG signals. To solve this problem, we lengthened the temporal dimension of the feature sequences by modifying the filter width of the max-pooling layer between the convolutional blocks from $\lbrace10,8,6,4\rbrace$ to ${8,6,4,2}.$ , respectively. Consequently, the sizes of the feature sequences were $3000\times16,$ , $375\times32,$ , $62\times64_{\cdot}$ , $15\times128_{,}$ and $7\times256$ from each convolutional block for a single EEG epoch.

U-Time主干网络:U-Time [25]是一种用于时间序列分割的全卷积网络,应用于自动睡眠评分。与之前的全卷积网络[60][61]类似,U-Time采用编码器-解码器结构,编码器负责特征提取,解码器负责时间序列分割。因此,我们仅实现编码器组件以从原始脑电信号中捕获脑电模式。该主干网络包含五个卷积块,与所提出的主干网络结构相似。但由于编码器设计用于分析35个脑电信号周期,最后一个卷积块的输出无法从单周期脑电中计算得出。为解决此问题,我们通过将卷积块间最大池化层的滤波器宽度从$\lbrace10,8,6,4\rbrace$修改为${8,6,4,2}$来延长特征序列的时间维度。最终,单个脑电周期在各卷积块输出的特征序列尺寸分别为$3000\times16$、$375\times32$、$62\times64_{\cdot}$、$15\times128_{,}$和$7\times256$。

XSleepNet Backbone: XSleepNet [7] is a multi viewed model that acquires raw signals and time–frequency images as inputs. Thus, XSleepNet includes two types of encoder network: one for the raw signals and the other for the time– frequency images. To compare the raw signal domain, we used the encoder component on raw signals in the ablation study. This encoder was composed of nine one-dimensional convolutional layers with a filter width of 31 and stride length of 2. The sizes of the output feature sequences were $1500\times16$ $750\times16,$ , $325\times32\$ , $163\times32$ , $82\times64_{.}$ , $41\times64_{.}$ , $21\times128,$ , $11\times128,$ and $6\times256~.$ , as obtained from 3000 samples of input EEG epochs.

XSleepNet主干网络:XSleepNet [7]是一种多视角模型,其输入为原始信号和时频图像。因此,XSleepNet包含两种编码器网络:一种用于原始信号,另一种用于时频图像。在消融研究中,我们使用原始信号编码器组件进行原始信号域的比较。该编码器由九个一维卷积层组成,滤波器宽度为31,步长为2。输出特征序列的尺寸分别为$1500\times16$、$750\times16$、$325\times32$、$163\times32$、$82\times64$、$41\times64$、$21\times128$、$11\times128$和$6\times256$,这些尺寸源自3000个输入EEG样本片段。

4.3 Model Specifications

4.3 模型规格

The details of the components of SleePyCo-backbone are presented in Table 3. In addition, we used the one-dimensional operations of the convolutional layer, batch normalization layer, and max-pooling layer. All convolutional layers in SleePyCo-backbone had a filter width of 3, stride size of 1, and padding size of 1 to maintain the temporal dimension in the convolutional block. The max-pooling layers were utilized with a filter width of 5 between each convolutional block to reduce the temporal dimension of the feature sequence. As explained in Section 2.2.2, the lateral connections that follow the backbone network had a filter width of 1. The outputs of lateral connections bear the same feature dimension $d_{f}$ of 128. For the transformer encoder of the classifier network, the number of heads $N_{h}$ was 8, number of encoder layers $N_{e}$ was 6, model dimension of transformer $d_{m}$ was 128, and the dimension of the feed-forward network $d_{F F}$ was 128.

SleePyCo主干网络的组件细节如表3所示。此外,我们使用了一维卷积层、批量归一化层和最大池化层操作。SleePyCo主干网络中的所有卷积层均采用宽度为3的滤波器、步长为1、填充尺寸为1的配置,以保持卷积块中的时间维度。在各卷积块之间采用宽度为5的最大池化层来缩减特征序列的时间维度。如2.2.2节所述,主干网络后的横向连接采用宽度为1的滤波器,其输出特征维度$d_{f}$统一为128。分类器网络的Transformer编码器参数为:注意力头数$N_{h}$设为8、编码器层数$N_{e}$设为6、Transformer模型维度$d_{m}$为128、前馈网络维度$d_{FF}$为128。

Table 3: Model Specification of SleePyCo-backbone. $T$ : temporal dimension, $C$ : channel dimension, BN: Batch Normalization and SE: Squeeze and Excitation [48].

表 3: SleePyCo-backbone 的模型规格。$T$: 时间维度,$C$: 通道维度,BN: 批归一化 (Batch Normalization),SE: 挤压激励 (Squeeze and Excitation) [48]。

| 层名称 | 组成 | 输出大小 [T × C] L=1 | L=10 |

|---|---|---|---|

| 输入 | 3000 × 1 | 30000 × 1 | |

| Conv1 | 3 Conv + BN + PReLU | 3000 × 64 | 30000 × 64 |

| 3 Conv + BN + SE + PReLU | 600 × 64 | 6000 × 64 | |

| Max-pool1 | 5 Max-pool 3 Conv + BN + PReLU | ||

| Conv2 | 3 Conv + BN + SE + PReLU | 600 × 128 | 6000 × 128 |

| Max-pool2 | 5 Max-pool 3 Conv + BN + PReLU | 120 × 128 | 1200 × 128 |

| Conv3 | 3 Conv + BN + PReLU 3 Conv + BN + SE + PReLU | 120 × 192 | 1200 × 192 |

| Max-pool3 | 5 Max-pool | 24 × 192 | 240 × 192 |

| Conv4 | 3 Conv + BN + PReLU | 24 × 256 | 240 × 256 |

| 3 Conv + BN + PReLU 3 Conv + BN + SE + PReLU | |||

| Max-pool4 | 5 Max-pool | 5 × 256 | 48 × 256 |

| Conv5 | 3 Conv + BN + PReLU | ||

| 3 Conv + BN + PReLU | 5 × 256 | 48 × 256 | |

| 3 Conv + BN + SE + PReLU |