Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks

面向知识密集型NLP任务的检索增强生成

Patrick Lewis†‡, Ethan Perez?,

Patrick Lewis†‡, Ethan Perez?,

Aleksandra Piktus†, Fabio Petroni†, Vladimir Karpukhin†, Naman Goyal†, Heinrich Küttler†,

Aleksandra Piktus†, Fabio Petroni†, Vladimir Karpukhin†, Naman Goyal†, Heinrich Küttler†,

Mike Lewis†, Wen-tau Yih†, Tim Rock t s chel†‡, Sebastian Riedel†‡, Douwe Kiela†

Mike Lewis†, Wen-tau Yih†, Tim Rocktäschel†‡, Sebastian Riedel†‡, Douwe Kiela†

†Facebook AI Research; ‡University College London; ?New York University; plewis@fb.com

†Facebook AI 研究院; ‡伦敦大学学院; ?纽约大学; plewis@fb.com

Abstract

摘要

Large pre-trained language models have been shown to store factual knowledge in their parameters, and achieve state-of-the-art results when fine-tuned on downstream NLP tasks. However, their ability to access and precisely manipulate knowledge is still limited, and hence on knowledge-intensive tasks, their performance lags behind task-specific architectures. Additionally, providing provenance for their decisions and updating their world knowledge remain open research problems. Pre-trained models with a differentiable access mechanism to explicit nonparametric memory can overcome this issue, but have so far been only investigated for extractive downstream tasks. We explore a general-purpose fine-tuning recipe for retrieval-augmented generation (RAG) — models which combine pre-trained parametric and non-parametric memory for language generation. We introduce RAG models where the parametric memory is a pre-trained seq2seq model and the non-parametric memory is a dense vector index of Wikipedia, accessed with a pre-trained neural retriever. We compare two RAG formulations, one which conditions on the same retrieved passages across the whole generated sequence, and another which can use different passages per token. We fine-tune and evaluate our models on a wide range of knowledge-intensive NLP tasks and set the state of the art on three open domain QA tasks, outperforming parametric seq2seq models and task-specific retrieve-and-extract architectures. For language generation tasks, we find that RAG models generate more specific, diverse and factual language than a state-of-the-art parametric-only seq2seq baseline.

大型预训练语言模型已被证明能在参数中存储事实性知识,并在下游自然语言处理(NLP)任务微调后取得最先进成果。然而,其访问和精确操纵知识的能力仍然有限,因此在知识密集型任务上,其性能落后于专用架构。此外,为模型决策提供溯源依据及更新其世界知识仍是待解的研究难题。具有可微分显式非参数记忆访问机制的预训练模型能解决这一问题,但迄今仅被研究用于抽取式下游任务。我们探索了一种适用于检索增强生成(RAG)的通用微调方案——该模型将预训练的参数化记忆与非参数化记忆相结合用于语言生成。我们提出的RAG模型中,参数化记忆采用预训练的seq2seq模型,非参数化记忆则是通过预训练神经检索器访问的维基百科稠密向量索引。我们比较了两种RAG架构:一种在整个生成序列中固定使用相同检索段落,另一种允许每个token使用不同段落。我们在多种知识密集型NLP任务上对模型进行微调和评估,在三个开放域问答任务中刷新了最高性能,超越了参数化seq2seq模型和专用检索-抽取架构。对于语言生成任务,我们发现RAG模型相比最先进的纯参数化seq2seq基线能生成更具体、多样且符合事实的文本。

1 Introduction

1 引言

Pre-trained neural language models have been shown to learn a substantial amount of in-depth knowledge from data [47]. They can do so without any access to an external memory, as a parameterized implicit knowledge base [51, 52]. While this development is exciting, such models do have downsides: They cannot easily expand or revise their memory, can’t straightforwardly provide insight into their predictions, and may produce “hallucinations” [38]. Hybrid models that combine parametric memory with non-parametric (i.e., retrieval-based) memories [20, 26, 48] can address some of these issues because knowledge can be directly revised and expanded, and accessed knowledge can be inspected and interpreted. REALM [20] and ORQA [31], two recently introduced models that combine masked language models [8] with a differentiable retriever, have shown promising results, but have only explored open-domain extractive question answering. Here, we bring hybrid parametric and non-parametric memory to the “workhorse of NLP,” i.e. sequence-to-sequence (seq2seq) models.

预训练的神经语言模型已被证明能够从数据中学习大量深入知识 [47]。它们无需访问外部记忆即可实现这一点,作为一种参数化的隐式知识库 [51, 52]。虽然这一进展令人振奋,但此类模型确实存在缺点:它们不易扩展或修改记忆,无法直接解释其预测依据,并可能产生"幻觉" [38]。结合参数化记忆与非参数化(即基于检索)记忆的混合模型 [20, 26, 48] 能部分解决这些问题,因为知识可以直接修改和扩展,且可对访问的知识进行检查和解释。REALM [20] 和 ORQA [31] 这两个近期提出的模型将掩码语言模型 [8] 与可微分检索器相结合,已展现出良好效果,但仅探索了开放域抽取式问答任务。本文将混合参数化与非参数化记忆引入"自然语言处理的主力军"——序列到序列(seq2seq)模型。

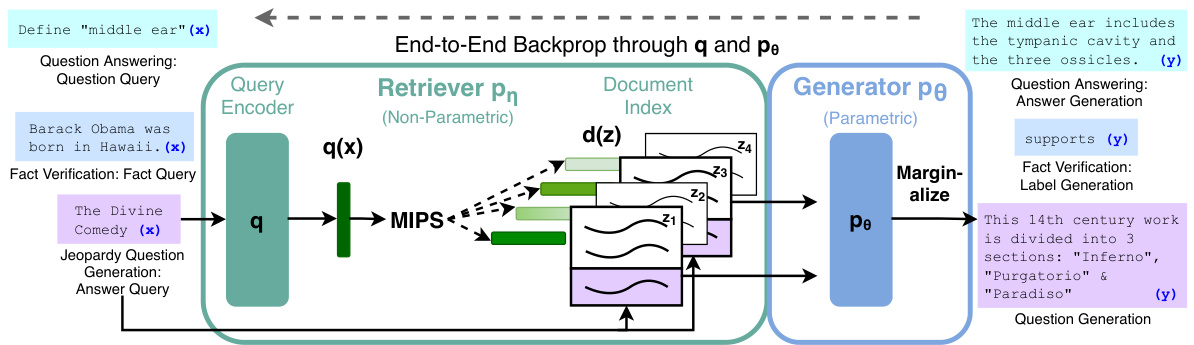

Figure 1: Overview of our approach. We combine a pre-trained retriever (Query Encoder $^+$ Document Index) with a pre-trained seq2seq model (Generator) and fine-tune end-to-end. For query $x$ , we use Maximum Inner Product Search (MIPS) to find the top-K documents $z_{i}$ . For final prediction $y$ , we treat $z$ as a latent variable and marginal ize over seq2seq predictions given different documents.

图 1: 方法概述。我们将预训练的检索器 (Query Encoder $^+$ Document Index) 与预训练的 seq2seq 模型 (Generator) 相结合,并进行端到端微调。对于查询 $x$,我们使用最大内积搜索 (MIPS) 来查找前 K 个文档 $z_{i}$。对于最终预测 $y$,我们将 $z$ 视为潜在变量,并对给定不同文档的 seq2seq 预测进行边缘化处理。

We endow pre-trained, parametric-memory generation models with a non-parametric memory through a general-purpose fine-tuning approach which we refer to as retrieval-augmented generation (RAG). We build RAG models where the parametric memory is a pre-trained seq2seq transformer, and the non-parametric memory is a dense vector index of Wikipedia, accessed with a pre-trained neural retriever. We combine these components in a probabilistic model trained end-to-end (Fig. 1). The retriever (Dense Passage Retriever [26], henceforth DPR) provides latent documents conditioned on the input, and the seq2seq model (BART [32]) then conditions on these latent documents together with the input to generate the output. We marginal ize the latent documents with a top-K approximation, either on a per-output basis (assuming the same document is responsible for all tokens) or a per-token basis (where different documents are responsible for different tokens). Like T5 [51] or BART, RAG can be fine-tuned on any seq2seq task, whereby both the generator and retriever are jointly learned.

我们通过一种通用的微调方法,为预训练的参量记忆生成模型赋予非参量记忆能力,这种方法被称为检索增强生成 (RAG)。我们构建的RAG模型中,参量记忆部分采用预训练的seq2seq Transformer,非参量记忆部分则是基于维基百科构建的稠密向量索引,通过预训练的神经检索器进行访问。这些组件被整合到一个端到端训练的概率模型中 (图1)。检索器 (稠密段落检索器 [26],以下简称DPR) 根据输入提供潜在文档,而seq2seq模型 (BART [32]) 则基于这些潜在文档和输入生成输出。我们通过top-K近似对潜在文档进行边缘化处理,可以基于整个输出 (假设同一文档负责所有token) 或基于单个token (不同文档负责不同token) 来实现。与T5 [51] 或 BART 类似,RAG可以在任何seq2seq任务上进行微调,此时生成器和检索器会进行联合学习。

There has been extensive previous work proposing architectures to enrich systems with non-parametric memory which are trained from scratch for specific tasks, e.g. memory networks [64, 55], stackaugmented networks [25] and memory layers [30]. In contrast, we explore a setting where both parametric and non-parametric memory components are pre-trained and pre-loaded with extensive knowledge. Crucially, by using pre-trained access mechanisms, the ability to access knowledge is present without additional training.

已有大量先前工作提出通过非参数化内存(non-parametric memory)增强系统架构的方案,这些架构需针对特定任务从头训练,例如记忆网络[64, 55]、堆栈增强网络[25]和记忆层[30]。与之不同,我们探索的是参数化与非参数化内存组件均经过预训练且预载海量知识的场景。关键在于,通过使用预训练的访问机制,无需额外训练即可具备知识访问能力。

Our results highlight the benefits of combining parametric and non-parametric memory with generation for knowledge-intensive tasks—tasks that humans could not reasonably be expected to perform without access to an external knowledge source. Our RAG models achieve state-of-the-art results on open Natural Questions [29], Web Questions [3] and Curate dT rec [2] and strongly outperform recent approaches that use specialised pre-training objectives on TriviaQA [24]. Despite these being extractive tasks, we find that un constrained generation outperforms previous extractive approaches. For knowledge-intensive generation, we experiment with MS-MARCO [1] and Jeopardy question generation, and we find that our models generate responses that are more factual, specific, and diverse than a BART baseline. For FEVER [56] fact verification, we achieve results within $4.3%$ of state-of-the-art pipeline models which use strong retrieval supervision. Finally, we demonstrate that the non-parametric memory can be replaced to update the models’ knowledge as the world changes.1

我们的研究结果突显了将参数化与非参数化记忆与生成相结合对知识密集型任务的优势——这类任务若没有外部知识源支持,人类难以合理完成。我们的RAG模型在开放Natural Questions [29]、Web Questions [3]和CuratedTrec [2]数据集上达到最先进水平,并在TriviaQA [24]上显著超越近期采用专用预训练目标的方法。尽管这些属于抽取式任务,我们发现无约束生成的表现优于以往抽取式方法。针对知识密集型生成任务,我们在MS-MARCO [1]和Jeopardy问答生成上进行实验,发现相比BART基线,我们的模型能生成更具事实性、特异性和多样性的回答。在FEVER [56]事实核查任务中,我们的结果与当前最优流水线模型仅相差$4.3%$(后者采用强检索监督机制)。最后,我们证明可通过替换非参数化记忆来更新模型知识以适应世界变化。

2 Methods

2 方法

We explore RAG models, which use the input sequence $x$ to retrieve text documents $z$ and use them as additional context when generating the target sequence $y$ . As shown in Figure 1, our models leverage two components: (i) a retriever $p_{\eta}(z|x)$ with parameters $\eta$ that returns (top-K truncated) distributions over text passages given a query $x$ and (ii) a generator $p_{\theta}(y_{i}|x,z,y_{1:i-1})$ para met rize d by $\theta$ that generates a current token based on a context of the previous $i-1$ tokens $y_{1:i-1}$ , the original input $x$ and a retrieved passage $z$ .

我们探索了RAG模型,该模型利用输入序列$x$检索文本文档$z$,并在生成目标序列$y$时将其作为额外上下文。如图1所示,我们的模型包含两个组件:(i) 检索器$p_{\eta}(z|x)$,其参数为$\eta$,根据查询$x$返回(截断前K个)文本段落的分布;(ii) 生成器$p_{\theta}(y_{i}|x,z,y_{1:i-1})$,其参数为$\theta$,基于前$i-1$个token $y_{1:i-1}$的上下文、原始输入$x$和检索到的段落$z$生成当前token。

To train the retriever and generator end-to-end, we treat the retrieved document as a latent variable. We propose two models that marginal ize over the latent documents in different ways to produce a distribution over generated text. In one approach, RAG-Sequence, the model uses the same document to predict each target token. The second approach, RAG-Token, can predict each target token based on a different document. In the following, we formally introduce both models and then describe the $p_{\eta}$ and $p_{\theta}$ components, as well as the training and decoding procedure.

为了端到端地训练检索器和生成器,我们将检索到的文档视为潜在变量。我们提出了两种模型,它们以不同方式对潜在文档进行边缘化处理,从而生成文本的概率分布。在第一种方法 RAG-Sequence 中,模型使用同一文档预测每个目标 token。第二种方法 RAG-Token 则可以根据不同文档预测每个目标 token。接下来,我们将正式介绍这两种模型,并描述 $p_{\eta}$ 和 $p_{\theta}$ 组件,以及训练和解码过程。

2.1 Models

2.1 模型

RAG-Sequence Model The RAG-Sequence model uses the same retrieved document to generate the complete sequence. Technically, it treats the retrieved document as a single latent variable that is marginalized to get the seq2seq probability $p(y|x)$ via a top-K approximation. Concretely, the top $\mathbf{K}$ documents are retrieved using the retriever, and the generator produces the output sequence probability for each document, which are then marginalized,

RAG-Sequence模型

RAG-Sequence模型使用相同的检索文档生成完整序列。技术上,它将检索到的文档视为单个潜在变量,通过top-K近似边缘化以获得seq2seq概率$p(y|x)$。具体而言,检索器获取top $\mathbf{K}$个文档,生成器为每个文档生成输出序列概率,随后进行边缘化处理。

$$

p_{\mathrm{RAG.Sequence}}(y|x)\approx\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z|x)p_{\theta}(y|x,z)=\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z|x)\prod_{i}^{N}p_{\theta}(y_{i}|x,z,y_{1:i-1})

$$

$$

p_{\mathrm{RAG.Sequence}}(y|x)\approx\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z|x)p_{\theta}(y|x,z)=\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z|x)\prod_{i}^{N}p_{\theta}(y_{i}|x,z,y_{1:i-1})

$$

RAG-Token Model In the RAG-Token model we can draw a different latent document for each target token and marginal ize accordingly. This allows the generator to choose content from several documents when producing an answer. Concretely, the top K documents are retrieved using the retriever, and then the generator produces a distribution for the next output token for each document, before marginal i zing, and repeating the process with the following output token, Formally, we define:

RAG-Token模型

在RAG-Token模型中,我们可以为每个目标token抽取不同的潜在文档并进行相应的边缘化处理。这使得生成器在生成答案时能够从多个文档中选择内容。具体而言,首先使用检索器获取前K个文档,然后生成器为每个文档生成下一个输出token的分布,接着进行边缘化处理,并对后续输出token重复这一过程。形式化定义如下:

$$

p_{\mathtt{R A G-T o k e n}}(y|x)\approx\prod_{i}^{N}\sum_{z\in\mathrm{top}-k(p(\cdot|x))}p_{\eta}(z|x)p_{\theta}(y_{i}|x,z_{i},y_{1:i-1})

$$

$$

p_{\mathtt{R A G-T o k e n}}(y|x)\approx\prod_{i}^{N}\sum_{z\in\mathrm{top}-k(p(\cdot|x))}p_{\eta}(z|x)p_{\theta}(y_{i}|x,z_{i},y_{1:i-1})

$$

Finally, we note that RAG can be used for sequence classification tasks by considering the target class as a target sequence of length one, in which case RAG-Sequence and RAG-Token are equivalent.

最后,我们注意到RAG (Retrieval-Augmented Generation) 可通过将目标类别视为长度为1的目标序列来应用于序列分类任务,此时RAG-Sequence和RAG-Token等价。

2.2 Retriever: DPR

2.2 检索器:DPR

The retrieval component $p_{\eta}(z|x)$ is based on DPR [26]. DPR follows a bi-encoder architecture:

检索组件 $p_{\eta}(z|x)$ 基于DPR [26]。DPR采用双编码器架构:

$$

p_{\eta}(z|x)\propto\exp\left(\mathbf{d}(z)^{\top}\mathbf{q}(x)\right)\qquad\mathbf{d}(z)=\mathbf{BERT}_ {d}(z),\mathbf{q}(x)=\mathbf{BERT}_{q}(x)

$$

$$

p_{\eta}(z|x)\propto\exp\left(\mathbf{d}(z)^{\top}\mathbf{q}(x)\right)\qquad\mathbf{d}(z)=\mathbf{BERT}_ {d}(z),\mathbf{q}(x)=\mathbf{BERT}_{q}(x)

$$

where $\mathbf{d}(z)$ is a dense representation of a document produced by a BERTBASE document encoder [8], and ${\bf q}(x)$ a query representation produced by a query encoder, also based on BERTBASE. Calculating top $\cdot\mathbf{k}(p_{\eta}(\cdot|x))$ , the list of $k$ documents $z$ with highest prior probability $p_{\eta}(z|x)$ , is a Maximum Inner Product Search (MIPS) problem, which can be approximately solved in sub-linear time [23]. We use a pre-trained bi-encoder from DPR to initialize our retriever and to build the document index. This retriever was trained to retrieve documents which contain answers to TriviaQA [24] questions and Natural Questions [29]. We refer to the document index as the non-parametric memory.

其中$\mathbf{d}(z)$是由BERTBASE文档编码器[8]生成的文档稠密表示,${\bf q}(x)$是基于BERTBASE的查询编码器生成的查询表示。计算top $\cdot\mathbf{k}(p_{\eta}(\cdot|x))$即找出具有最高先验概率$p_{\eta}(z|x)$的$k$个文档$z$列表,这是一个最大内积搜索(MIPS)问题,可在亚线性时间内近似求解[23]。我们使用DPR预训练的双编码器初始化检索器并构建文档索引。该检索器经训练可检索包含TriviaQA[24]问题和自然问题[29]答案的文档。我们将此文档索引称为非参数记忆。

2.3 Generator: BART

2.3 生成器: BART

The generator component $p_{\theta}(y_{i}|x,z,y_{1:i-1})$ could be modelled using any encoder-decoder. We use BART-large [32], a pre-trained seq2seq transformer [58] with 400M parameters. To combine the input $x$ with the retrieved content $z$ when generating from BART, we simply concatenate them. BART was pre-trained using a denoising objective and a variety of different noising functions. It has obtained state-of-the-art results on a diverse set of generation tasks and outperforms comparably-sized T5 models [32]. We refer to the BART generator parameters $\theta$ as the parametric memory henceforth.

生成器组件 $p_{\theta}(y_{i}|x,z,y_{1:i-1})$ 可采用任意编码器-解码器结构建模。我们选用BART-large [32],这是一个预训练的seq2seq Transformer [58],参数量达4亿。在使用BART生成时,为融合输入 $x$ 与检索内容 $z$,我们直接进行拼接操作。BART通过去噪目标函数和多种噪声函数进行预训练,在各类生成任务中取得最先进成果,性能超越同等规模的T5模型 [32]。后文将BART生成器参数 $\theta$ 称为参数化记忆。

2.4 Training

2.4 训练

We jointly train the retriever and generator components without any direct supervision on what document should be retrieved. Given a fine-tuning training corpus of input/output pairs $(x_{j},y_{j})$ , we minimize the negative marginal log-likelihood of each target, $\begin{array}{r}{\sum_{j}-\log p(y_{j}|x_{j})}\end{array}$ using stochastic gradient descent with Adam [28]. Updating the document encod er $\mathtt{B E R T}_ {d}$ during training is costly as it requires the document index to be periodically updated as REALM does during pre-training [20]. We do not find this step necessary for strong performance, and keep the document encoder (and index) fixed, only fine-tuning the query encoder $\mathrm{BERT}_{q}$ and the BART generator.

我们联合训练检索器和生成器组件,无需对应该检索哪些文档进行直接监督。给定一个由输入/输出对$(x_{j},y_{j})$组成的微调训练语料库,我们使用Adam[28]随机梯度下降法最小化每个目标的负边际对数似然$\begin{array}{r}{\sum_{j}-\log p(y_{j}|x_{j})}\end{array}$。在训练过程中更新文档编码器$\mathtt{B E R T}_ {d}$成本高昂,因为这需要像REALM在预训练时那样定期更新文档索引[20]。我们发现这一步骤对于实现强劲性能并非必要,因此保持文档编码器(及索引)固定,仅对查询编码器$\mathrm{BERT}_{q}$和BART生成器进行微调。

2.5 Decoding

2.5 解码

At test time, RAG-Sequence and RAG-Token require different ways to approximate arg $\mathrm{max}_{y}p(y|x)$ .

在测试时,RAG-Sequence 和 RAG-Token 需要不同的方法来近似 arg $\mathrm{max}_{y}p(y|x)$。

RAG-Token The RAG-Token model can be seen as a standard, auto regressive seq2seq generator with transition probability: $\begin{array}{r}{p_{\theta}^{\prime}(y_{i}|x,y_{1:i-1})=\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z_{i}|\overline{{x}})p_{\theta}(y_{i}|x,\hat{z}_ {i},\hat{y_{1:i-1}})}\end{array}$ To decode, we can plug $p_{\theta}^{\prime}(y_{i}|x,y_{1:i-1})$ into a standard beam decoder.

RAG-Token模型可视为标准的自回归序列到序列生成器,其转移概率为:$\begin{array}{r}{p_{\theta}^{\prime}(y_{i}|x,y_{1:i-1})=\sum_{z\in\mathrm{top}\cdot k(p(\cdot|x))}p_{\eta}(z_{i}|\overline{{x}})p_{\theta}(y_{i}|x,\hat{z}_ {i},\hat{y_{1:i-1}})}\end{array}$。解码时,可将$p_{\theta}^{\prime}(y_{i}|x,y_{1:i-1})$代入标准束搜索解码器。

RAG-Sequence For RAG-Sequence, the likelihood $p(\boldsymbol{y}|\boldsymbol{x})$ does not break into a conventional pertoken likelihood, hence we cannot solve it with a single beam search. Instead, we run beam search for each document $z$ , scoring each hypothesis using $p_{\theta}(y_{i}|x,z,y_{1:i-1})$ . This yields a set of hypotheses $Y$ , some of which may not have appeared in the beams of all documents. To estimate the probability of an hypothesis $y$ we run an additional forward pass for each document $z$ for which $y$ does not appear in the beam, multiply generator probability with $p_{\eta}(z|x)$ and then sum the probabilities across beams for the marginals. We refer to this decoding procedure as “Thorough Decoding.” For longer output sequences, $|Y|$ can become large, requiring many forward passes. For more efficient decoding, we can make a further approximation that $\dot{p}_ {\theta}(y|\dot{x},z_{i})\stackrel{\cdot}{\approx}0$ where $y$ was not generated during beam search from $x,z_{i}$ . This avoids the need to run additional forward passes once the candidate set $Y$ has been generated. We refer to this decoding procedure as “Fast Decoding.”

RAG序列模型 对于RAG序列模型,似然函数$p(\boldsymbol{y}|\boldsymbol{x})$无法分解为常规的按token似然,因此无法通过单次束搜索求解。我们需对每个文档$z$执行束搜索,使用$p_{\theta}(y_{i}|x,z,y_{1:i-1})$对每个假设进行评分,从而生成假设集合$Y$。部分假设可能未出现在所有文档的搜索束中。为估算假设$y$的概率,需对未包含该假设的文档$z$执行额外前向传播,将生成器概率与$p_{\eta}(z|x)$相乘后对边界概率进行跨束求和。此解码流程称为"彻底解码"。当输出序列较长时,$|Y|$可能变得很大,需要大量前向传播。为提高解码效率,可进一步近似处理:若$y$未在$x,z_{i}$的束搜索中生成,则设$\dot{p}_ {\theta}(y|\dot{x},z_{i})\stackrel{\cdot}{\approx}0$。该方案在生成候选集$Y$后无需额外前向传播,称为"快速解码"。

3 Experiments

3 实验

We experiment with RAG in a wide range of knowledge-intensive tasks. For all experiments, we use a single Wikipedia dump for our non-parametric knowledge source. Following Lee et al. [31] and Karpukhin et al. [26], we use the December 2018 dump. Each Wikipedia article is split into disjoint 100-word chunks, to make a total of 21M documents. We use the document encoder to compute an embedding for each document, and build a single MIPS index using FAISS [23] with a Hierarchical Navigable Small World approximation for fast retrieval [37]. During training, we retrieve the top $k$ documents for each query. We consider $k\in{5,10}$ for training and set $k$ for test time using dev data. We now discuss experimental details for each task.

我们在多种知识密集型任务中对RAG进行了实验。所有实验均采用单一维基百科数据转储作为非参数化知识源。遵循Lee等人[31]和Karpukhin等人[26]的方法,我们使用2018年12月的转储数据。每篇维基百科文章被分割为互不重叠的100词片段,共计生成2100万份文档。我们使用文档编码器计算每份文档的嵌入向量,并借助FAISS[23]构建单一最大内积搜索(MIPS)索引,采用分层可导航小世界近似算法[37]实现快速检索。训练过程中,我们为每个查询检索前$k$篇文档。训练阶段考虑$k\in{5,10}$的取值,测试阶段的$k$值则通过开发集数据确定。接下来我们将详细说明每项任务的实验设置。

3.1 Open-domain Question Answering

3.1 开放域问答

Open-domain question answering (QA) is an important real-world application and common testbed for knowledge-intensive tasks [20]. We treat questions and answers as input-output text pairs $(x,y)$ and train RAG by directly minimizing the negative log-likelihood of answers. We compare RAG to the popular extractive QA paradigm [5, 7, 31, 26], where answers are extracted spans from retrieved documents, relying primarily on non-parametric knowledge. We also compare to “Closed-Book QA” approaches [52], which, like RAG, generate answers, but which do not exploit retrieval, instead relying purely on parametric knowledge. We consider four popular open-domain QA datasets: Natural Questions (NQ) [29], TriviaQA (TQA) [24]. Web Questions (WQ) [3] and Curate dT rec (CT) [2]. As CT and WQ are small, we follow DPR [26] by initializing CT and WQ models with our NQ RAG model. We use the same train/dev/test splits as prior work [31, 26] and report Exact Match (EM) scores. For TQA, to compare with T5 [52], we also evaluate on the TQA Wiki test set.

开放域问答(QA)是知识密集型任务的重要实际应用和常见测试平台[20]。我们将问题和答案视为输入-输出文本对$(x,y)$,通过直接最小化答案的负对数似然来训练RAG。我们将RAG与流行的抽取式QA范式[5,7,31,26]进行比较,后者从检索文档中提取答案片段,主要依赖非参数化知识。同时我们也与"闭卷QA"方法[52]对比,这类方法与RAG一样生成答案,但不利用检索机制,完全依赖参数化知识。

我们选用四个主流开放域QA数据集:自然问题(NQ)[29]、TriviaQA(TQA)[24]、网页问题(WQ)[3]和CuratedTrec(CT)[2]。由于CT和WQ规模较小,我们遵循DPR[26]的做法,使用NQ训练的RAG模型初始化CT和WQ模型。采用与先前研究[31,26]相同的训练集/验证集/测试集划分,并报告精确匹配(EM)分数。针对TQA数据集,为与T5[52]对比,我们还额外评估了TQA维基测试集的表现。

3.2 Abstract ive Question Answering

3.2 摘要式问答

RAG models can go beyond simple extractive QA and answer questions with free-form, abstract ive text generation. To test RAG’s natural language generation (NLG) in a knowledge-intensive setting, we use the MSMARCO NLG task v2.1 [43]. The task consists of questions, ten gold passages retrieved from a search engine for each question, and a full sentence answer annotated from the retrieved passages. We do not use the supplied passages, only the questions and answers, to treat

RAG模型不仅能处理简单的抽取式问答,还能通过自由形式的抽象文本生成来回答问题。为了在知识密集型场景下测试RAG的自然语言生成(NLG)能力,我们采用MSMARCO NLG任务v2.1[43]。该任务包含问题集、每个问题对应的搜索引擎检索出的十条黄金段落,以及基于检索段落标注的完整句子答案。实验中我们仅使用问题和答案数据,未使用提供的检索段落。

MSMARCO as an open-domain abstract ive QA task. MSMARCO has some questions that cannot be answered in a way that matches the reference answer without access to the gold passages, such as “What is the weather in Volcano, CA?” so performance will be lower without using gold passages. We also note that some MSMARCO questions cannot be answered using Wikipedia alone. Here, RAG can rely on parametric knowledge to generate reasonable responses.

MSMARCO作为开放域抽象问答任务。MSMARCO存在部分问题无法在不访问黄金段落(gold passages)的情况下生成与参考答案匹配的答案,例如"加州火山镇(Volcano, CA)的天气如何?",因此不使用黄金段落时性能会较低。我们还注意到部分MSMARCO问题仅依靠维基百科无法解答,此时RAG可依赖参数化知识生成合理响应。

3.3 Jeopardy Question Generation

3.3 危险边缘问答生成

To evaluate RAG’s generation abilities in a non-QA setting, we study open-domain question generation. Rather than use questions from standard open-domain QA tasks, which typically consist of short, simple questions, we propose the more demanding task of generating Jeopardy questions. Jeopardy is an unusual format that consists of trying to guess an entity from a fact about that entity. For example, “The World Cup” is the answer to the question “In 1986 Mexico scored as the first country to host this international sports competition twice.” As Jeopardy questions are precise, factual statements, generating Jeopardy questions conditioned on their answer entities constitutes a challenging knowledge-intensive generation task.

为了评估RAG在非问答场景下的生成能力,我们研究了开放领域问题生成任务。不同于标准开放领域问答任务中常见的简短问题,我们提出了更具挑战性的《危险边缘》(Jeopardy) 问题生成任务。该节目采用独特的形式:根据关于某实体的描述来猜测目标实体。例如"1986年墨西哥成为首个两次主办这项国际体育赛事的国家"对应的答案是"世界杯"。由于《危险边缘》问题都是精确的事实性陈述,以答案实体为条件生成此类问题构成了一项极具挑战性的知识密集型生成任务。

We use the splits from SearchQA [10], with 100K train, 14K dev, and 27K test examples. As this is a new task, we train a BART model for comparison. Following [67], we evaluate using the SQuAD-tuned Q-BLEU-1 metric [42]. Q-BLEU is a variant of BLEU with a higher weight for matching entities and has higher correlation with human judgment for question generation than standard metrics. We also perform two human evaluations, one to assess generation factuality, and one for specificity. We define factuality as whether a statement can be corroborated by trusted external sources, and specificity as high mutual dependence between the input and output [33]. We follow best practice and use pairwise comparative evaluation [34]. Evaluators are shown an answer and two generated questions, one from BART and one from RAG. They are then asked to pick one of four options—quuestion A is better, question B is better, both are good, or neither is good.

我们采用SearchQA [10]的数据划分方式,包含10万条训练样本、1.4万条验证样本和2.7万条测试样本。由于这是新任务,我们训练了一个BART模型作为基线参照。遵循[67]的方法,我们使用经SQuAD调优的Q-BLEU-1指标 [42]进行评估。Q-BLEU是BLEU的变体,对实体匹配赋予更高权重,在问题生成任务中比标准指标更符合人类判断。我们还进行了两项人工评估:一项检验生成内容的事实性,另一项评估特异性。我们将事实性定义为陈述是否可通过可信外部来源验证,特异性则定义为输入输出间的高度互依性 [33]。按照最佳实践,我们采用成对比较评估法 [34]。评估人员会看到答案和两个生成问题(分别来自BART和RAG),然后从四个选项中选择:问题A更优、问题B更优、两者俱佳或两者皆不理想。

3.4 Fact Verification

3.4 事实核查

FEVER [56] requires classifying whether a natural language claim is supported or refuted by Wikipedia, or whether there is not enough information to decide. The task requires retrieving evidence from Wikipedia relating to the claim and then reasoning over this evidence to classify whether the claim is true, false, or un verifiable from Wikipedia alone. FEVER is a retrieval problem coupled with an challenging entailment reasoning task. It also provides an appropriate testbed for exploring the RAG models’ ability to handle classification rather than generation. We map FEVER class labels (supports, refutes, or not enough info) to single output tokens and directly train with claim-class pairs. Crucially, unlike most other approaches to FEVER, we do not use supervision on retrieved evidence. In many real-world applications, retrieval supervision signals aren’t available, and models that do not require such supervision will be applicable to a wider range of tasks. We explore two variants: the standard 3-way classification task (supports/refutes/not enough info) and the 2-way (supports/refutes) task studied in Thorne and Vlachos [57]. In both cases we report label accuracy.

FEVER [56] 要求对自然语言声明进行分类,判断其是否被维基百科支持或反驳,或者是否存在信息不足无法判定。该任务需要从维基百科检索与声明相关的证据,然后基于这些证据进行推理,以分类声明是真实、虚假还是仅凭维基百科无法验证。FEVER 是一个结合了具有挑战性的蕴含推理任务的检索问题,同时也为探索 RAG (Retrieval-Augmented Generation) 模型处理分类而非生成任务的能力提供了合适的测试平台。我们将 FEVER 的类别标签 (支持、反驳或信息不足) 映射到单个输出 token,并直接使用声明-类别对进行训练。关键的是,与大多数其他 FEVER 方法不同,我们不对检索到的证据使用监督信号。在许多实际应用中,检索监督信号并不可用,因此不需要此类监督的模型将适用于更广泛的任务。我们探索了两种变体:标准的三分类任务 (支持/反驳/信息不足) 以及 Thorne 和 Vlachos [57] 中研究的二分类任务 (支持/反驳)。在这两种情况下,我们都报告标签准确率。

4 Results

4 结果

4.1 Open-domain Question Answering

4.1 开放域问答

Table 1 shows results for RAG along with state-of-the-art models. On all four open-domain QA tasks, RAG sets a new state of the art (only on the T5-comparable split for TQA). RAG combines the generation flexibility of the “closed-book” (parametric only) approaches and the performance of "open-book" retrieval-based approaches. Unlike REALM and $\mathrm{T}5\substack{+}\mathrm{SSM}$ , RAG enjoys strong results without expensive, specialized “salient span masking” pre-training [20]. It is worth noting that RAG’s retriever is initialized using DPR’s retriever, which uses retrieval supervision on Natural Questions and TriviaQA. RAG compares favourably to the DPR QA system, which uses a BERT-based “crossencoder” to re-rank documents, along with an extractive reader. RAG demonstrates that neither a re-ranker nor extractive reader is necessary for state-of-the-art performance.

表1: 展示了RAG与前沿模型的对比结果。在所有四个开放域问答任务中,RAG都取得了最新最优性能(仅在TQA的T5可比划分集上例外)。RAG兼具"闭卷式"(仅参数化)方法的生成灵活性和"开卷式"检索方法的性能优势。与REALM和$\mathrm{T}5\substack{+}\mathrm{SSM}$不同,RAG无需昂贵且专门的"显著跨度掩码"[20]预训练就能获得强劲表现。值得注意的是,RAG的检索器初始化采用了DPR的检索器架构(该架构基于Natural Questions和TriviaQA的检索监督数据进行训练)。相较于使用BERT-based"交叉编码器"进行文档重排序并结合抽取式阅读器的DPR问答系统,RAG展现出更优性能。该结果表明,要实现最先进性能,既不需要重排序模块也不需要抽取式阅读器。

There are several advantages to generating answers even when it is possible to extract them. Documents with clues about the answer but do not contain the answer verbatim can still contribute towards a correct answer being generated, which is not possible with standard extractive approaches, leading to more effective margin aliz ation over documents. Furthermore, RAG can generate correct answers even when the correct answer is not in any retrieved document, achieving $11.8%$ accuracy in such cases for NQ, where an extractive model would score $0%$ .

即使能够提取答案,生成答案仍有几项优势。包含答案线索但未逐字包含答案的文档仍有助于生成正确答案,这是标准抽取式方法无法实现的,从而能更有效地利用文档边际信息。此外,RAG 即便在检索文档中不含正确答案时也能生成正确答案,在 NQ 数据集上此类情况准确率达 11.8%,而抽取式模型在此场景下准确率为 0%。

Table 1: Open-Domain QA Test Scores. For TQA, left column uses the standard test set for OpenDomain QA, right column uses the TQA-Wiki test set. See Appendix D for further details.

| Model | NQ | TQA | WQ | CT |

| Closed Book | T5-11B [52] T5-11B+SSM[52] | 34.5 36.6 | /50.1 37.4 /60.5 44.7 | |

| Open | REALM[20] | 40.4 | -/- 40.7 | 46.8 |

| Book | DPR [26] | 41.5 | 57.9/ | 41.1 50.6 |

| RAG-Token | 44.1 | 55.2/66.1 | 45.5 50.0 | |

| RAG-Seq. | 44.5 | 56.8/68.0 | 45.2 52.2 |

表 1: 开放域问答测试分数。对于TQA,左列使用开放域问答的标准测试集,右列使用TQA-Wiki测试集。更多细节见附录D。

| 模型 | NQ | TQA | WQ | CT |

|---|---|---|---|---|

| 闭卷 | T5-11B [52] T5-11B+SSM[52] | 34.5 36.6 | /50.1 37.4 /60.5 44.7 | |

| 开卷 | REALM[20] | 40.4 | -/- 40.7 | 46.8 |

| 开卷 | DPR [26] | 41.5 | 57.9/ | 41.1 50.6 |

| 开卷 | RAG-Token | 44.1 | 55.2/66.1 | 45.5 50.0 |

| 开卷 | RAG-Seq. | 44.5 | 56.8/68.0 | 45.2 52.2 |

Table 2: Generation and classification Test Scores. MS-MARCO SotA is [4], FEVER-3 is [68] and FEVER-2 is [57] *Uses gold context/evidence. Best model without gold access underlined.

| Model | Jeopardy | MSMARCO FVR3FVR2 | ||||

| B-1 | QB-1 | R-L | B-1 | Label Acc. 76.8 | 92.2* | |

| SotA | 19.7 | 49.8* 38.2 | 49.9* 41.6 | 64.0 | 81.1 | |

| BART RAG-Tok. 17.3 | 15.1 | 22.2 | 40.1 | 41.5 | 72.5 | 89.5 |

表 2: 生成与分类测试分数。MS-MARCO SotA为[4], FEVER-3为[68], FEVER-2为[57] *使用黄金上下文/证据。未使用黄金数据的最佳模型加下划线。

| 模型 | Jeopardy | MSMARCO FVR3FVR2 | ||||

|---|---|---|---|---|---|---|

| B-1 | QB-1 | R-L | B-1 | Label Acc. 76.8 | 92.2* | |

| SotA | 19.7 | 49.8* 38.2 | 49.9* 41.6 | 64.0 | 81.1 | |

| BART RAG-Tok. 17.3 | 15.1 | 22.2 | 40.1 | 41.5 | 72.5 | 89.5 |

4.2 Abstract ive Question Answering

4.2 抽象问答

As shown in Table 2, RAG-Sequence outperforms BART on Open MS-MARCO NLG by 2.6 Bleu points and 2.6 Rouge-L points. RAG approaches state-of-the-art model performance, which is impressive given that (i) those models access gold passages with specific information required to generate the reference answer, (ii) many questions are unanswerable without the gold passages, and (iii) not all questions are answerable from Wikipedia alone. Table 3 shows some generated answers from our models. Qualitatively, we find that RAG models hallucinate less and generate factually correct text more often than BART. Later, we also show that RAG generations are more diverse than BART generations (see $\S4.5,$ ).

如表 2 所示,RAG-Sequence 在 Open MS-MARCO NLG 上比 BART 高出 2.6 个 Bleu 分和 2.6 个 Rouge-L 分。RAG 接近最先进模型的性能,这令人印象深刻,因为 (i) 这些模型访问了生成参考答案所需的特定信息的黄金段落,(ii) 许多问题在没有黄金段落的情况下无法回答,(iii) 并非所有问题仅凭维基百科就能解答。表 3 展示了我们模型生成的一些答案。从质量上看,我们发现 RAG 模型比 BART 产生更少的幻觉,并且更频繁地生成事实正确的文本。稍后,我们还展示了 RAG 生成的内容比 BART 生成的内容更加多样化 (见 $\S4.5,$ )。

4.3 Jeopardy Question Generation

4.3 危险边缘问答生成

Table 2 shows that RAG-Token performs better than RAG-Sequence on Jeopardy question generation, with both models outperforming BART on Q-BLEU-1. Table 4 shows human evaluation results, over 452 pairs of generations from BART and RAG-Token. Evaluators indicated that BART was more factual than RAG in only $7.1%$ of cases, while RAG was more factual in $42.7%$ of cases, and both RAG and BART were factual in a further $17%$ of cases, clearly demonstrating the effectiveness of RAG on the task over a state-of-the-art generation model. Evaluators also find RAG generations to be more specific by a large margin. Table 3 shows typical generations from each model.

表 2 显示,在 Jeopardy 问答生成任务中,RAG-Token 的表现优于 RAG-Sequence,且两个模型在 Q-BLEU-1 指标上均超越 BART。表 4 展示了基于 452 组 BART 与 RAG-Token 生成结果的人工评估数据。评估者认为 BART 仅在 $7.1%$ 的情况下比 RAG 更符合事实,而 RAG 在 $42.7%$ 的情况下更具事实准确性,另有 $17%$ 的情况两者均符合事实,这显著证明了 RAG 在该任务上相对于前沿生成模型的有效性。评估者还发现 RAG 的生成结果具有显著更高的特异性。表 3 展示了各模型的典型生成示例。

Jeopardy questions often contain two separate pieces of information, and RAG-Token may perform best because it can generate responses that combine content from several documents. Figure 2 shows an example. When generating “Sun”, the posterior is high for document 2 which mentions “The Sun Also Rises”. Similarly, document 1 dominates the posterior when “A Farewell to Arms” is generated. Intriguingly, after the first token of each book is generated, the document posterior flattens. This observation suggests that the generator can complete the titles without depending on specific documents. In other words, the model’s parametric knowledge is sufficient to complete the titles. We find evidence for this hypothesis by feeding the BART-only baseline with the partial decoding "The Sun. BART completes the generation "The Sun Also Rises" is a novel by this author of "The Sun Also Rises" indicating the title "The Sun Also Rises" is stored in BART’s parameters. Similarly, BART will complete the partial decoding "The Sun Also Rises" is a novel by this author of "A with "The Sun Also Rises" is a novel by this author of "A Farewell to Arms". This example shows how parametric and non-parametric memories work together—the non-parametric component helps to guide the generation, drawing out specific knowledge stored in the parametric memory.

《危险边缘》问题通常包含两个独立信息片段,RAG-Token表现最佳的原因在于它能整合多份文档内容生成回答。图2展示了典型案例:生成"Sun"时,提及《太阳照常升起》的文档2后验概率较高;而生成《永别了,武器》时文档1占据主导。有趣的是,当