In-Context Retrieval-Augmented Language Models

Ori Ram∗ Yoav Levine∗ Itay Dalmedigos Dor Muhlgay Amnon Shashua Kevin Leyton-Brown Yoav Shoham AI21 Labs

{orir,yoavl,itayd,dorm,amnons,kevinlb,yoavs}@ai21.com

Abstract

Retrieval-Augmented Language Modeling (RALM) methods condition a language model (LM) on relevant documents from a grounding corpus during generation. They have been shown to significantly improve language modeling performance. They can also mitigate factually inaccurate text generation and provide a natural source attribution mechanism. Existing RALM approaches modify the LM architecture to incorporate external information, which significantly complicates deployment. This paper considers a simple alternative, which we dub In-Context RALM: leave the LM architecture unchanged and prepend grounding documents to the input, without any further training of the LM. We show that In-Context RALM built on off-the-shelf general-purpose retrievers provides surprisingly large LM gains across model sizes and diverse corpora. We also demonstrate that the document retrieval and ranking mechanism can be specialized to the RALM setting to further boost performance. We conclude that In-Context RALM has considerable potential to increase the prevalence of LM grounding. This is particularly true in settings where a pretrained LM must be used without modification or even only via API access.¹

1 Introduction

Recent advances in language modeling (LM) have dramatically increased the usefulness of machine-generated text across a wide range of use cases and domains (Brown et al., 2020). However, the mainstream paradigm of generating text with LMs has inherent limitations in accessing external knowledge. First, LMs are not coupled with any source attribution. They must also be trained in order to incorporate up-to-date information that was not seen during training. More importantly, they tend to produce factual inaccuracies and errors (Lin et al., 2022; Maynez et al., 2020; Huang et al., 2020). This problem is present in any LM generation scenario. It is exacerbated when generating text in uncommon domains or over private data.

A promising approach for addressing the above is Retrieval-Augmented Language Modeling (RALM). RALM grounds the LM during generation by conditioning on relevant documents retrieved from an external knowledge source. RALM systems include two high-level components:
(i) document selection, which selects the set of documents upon which to condition; and
(ii) document reading, which determines how to incorporate the selected documents into the LM generation process.

Figure 1: Our framework, dubbed In-Context RALM, provides large language modeling gains on the test set of WikiText-103, without modifying the LM. Adapting the use of a BM25 retriever (Robertson and Zaragoza, 2009) to the LM task (§5) yields significant gains, and choosing the grounding documents via our new class of Predictive Rerankers (§6) provides a further boost. See Table 1 for the full results on five diverse corpora.

Leading RALM systems introduced recently tend to focus on altering the language model architecture (Khandelwal et al., 2020; Borgeaud et al., 2022; Zhong et al., 2022; Levine et al., 2022c; Li et al., 2022). Notably, Borgeaud et al. (2022) introduced RETRO. RETRO performs document reading via nontrivial modifications to the LM architecture that require further training. For document selection it uses an off-the-shelf frozen BERT retriever. The paper's experimental findings showed impressive performance gains. However, the need for architectural changes and dedicated retraining has hindered the wide adoption of such models.

Figure 2: An example of In-Context RALM: we simply prepend the retrieved document before the input prefix.

In this paper, we show that a very simple document reading mechanism can have a large impact, and that substantial gains can also be made by adapting the document selection mechanism to the task of language modeling. Thus, we show that many of the benefits of RALM can be achieved while working with off-the-shelf LMs, even via API access. Specifically, we consider a simple but powerful RALM framework, dubbed In-Context RALM (presented in Section 3), which employs a zero-effort document reading mechanism: we simply prepend the selected documents to the LM’s input text (Figure 2).

Section 4 describes our experimental setup. To show the wide applicability of our framework, we performed LM experiments on a suite of five diverse corpora: WikiText-103 (Merity et al., 2016), RealNews (Zellers et al., 2019), and three datasets from The Pile (Gao et al., 2021): ArXiv, Stack Exchange and FreeLaw. We use open-source LMs ranging from 110M to 66B parameters (from the GPT-2, GPT-Neo, OPT and LLaMA model families).

In Section 5 we evaluate the application of off-the-shelf retrievers to our framework. In this minimal-effort setting, In-Context RALM led to LM performance gains equivalent to increasing the LM's number of parameters by $2{-}3\times$. This held across all of the text corpora we examined. In Section 6 we investigate methods for adapting document ranking to the LM task, a relatively underexplored RALM degree of freedom. Our adaptation methods range from using a small LM to perform zero-shot ranking of the retrieved documents, up to training a dedicated bidirectional reranker with self-supervision from the LM signal. These methods lead to further gains in the LM task, corresponding to an additional $2\times$ increase in LM size.

As a concrete example of the gains, a 345M parameter GPT-2 enhanced by In-Context RALM outperforms a 762M parameter GPT-2 when employing an off-the-shelf BM25 retriever (Robertson and Zaragoza, 2009). It outperforms a 1.5B parameter GPT-2 when employing our trained LM-oriented reranker (see Figure 1). For large model sizes, our method is even more effective. In-Context RALM with an off-the-shelf retriever improved the performance of a 6.7B parameter OPT model to match that of a 66B parameter OPT model (see Figure 4).

In Section 7 we demonstrate the applicability of In-Context RALM to downstream open-domain question answering (ODQA) tasks.

In concurrent work, Shi et al. (2023) also propose augmenting off-the-shelf LMs with retrieved texts by prepending them to the input. Their results are based on training a dedicated retriever for language modeling. In contrast, we focus on the gains achievable by using off-the-shelf retrievers for this task. We show strong gains in this simpler setting by investigating:
(1) which off-the-shelf retriever is best suited for language modeling,
(2) the frequency of retrieval operations, and
(3) the optimal query length.
In addition, we boost off-the-shelf retrieval performance by introducing two reranking methods that yield further gains in perplexity.

We believe that In-Context RALM can play two important roles in making RALM systems more powerful and more prevalent. First, given its simple reading mechanism, In-Context RALM can serve as a clean probe for developing document retrieval methods that are specialized for the LM task. These in turn can be used to improve both In-Context RALM and other more elaborate RALM methods that currently leverage general purpose retrievers. Second, due to its compatibility with off-the-shelf LMs, In-Context RALM can help drive wider deployment of RALM systems.

2 Related Work

RALM approaches can be roughly divided into two families of models: (i) nearest-neighbor language models (also called kNN-LM), and (ii) retrieve and read models. Our work belongs to the second family, but is distinct in that it involves no further training of the LM.

Nearest Neighbor Language Models

The $k$NN-LM approach was first introduced by Khandelwal et al. (2020). The authors suggest a simple inference-time model that interpolates between two next-token distributions. The first is induced by the LM itself. The second is induced by the $k$ neighbors from the retrieval corpus that are closest to the query token in the LM embedding space. Zhong et al. (2022) suggest a framework for training these models. $k$NN-LM showed significant gains. However, the approach requires storing a representation for every token in the corpus, which is expensive even for a small corpus like Wikipedia. Numerous approaches have been suggested for alleviating this issue (He et al., 2021; Alon et al., 2022). Scaling any of them to large corpora remains an open challenge.
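As a rough illustration of the interpolation described above, the sketch below mixes an LM next-token distribution with a distribution induced by retrieved neighbors. Each neighbor votes for the token that followed it in the corpus, weighted by $\exp(-\text{distance})$. This is a minimal sketch, not the authors' code. The interpolation weight `lam`, the toy vocabulary and the hypothetical neighbor list are assumptions chosen for illustration.

```python
import math
from collections import defaultdict


def knn_distribution(neighbors, vocab):
    """Turn retrieved (distance, next_token) pairs into a distribution over vocab.

    Each neighbor votes for the token that followed it in the corpus, weighted
    by exp(-distance); the weights are then normalized to sum to one.
    """
    weights = defaultdict(float)
    for distance, token in neighbors:
        weights[token] += math.exp(-distance)
    total = sum(weights.values())
    return {tok: weights[tok] / total for tok in vocab}


def interpolate(p_lm, p_knn, lam):
    """Mix the two next-token distributions: lam * p_kNN + (1 - lam) * p_LM."""
    return {tok: lam * p_knn[tok] + (1 - lam) * p_lm[tok] for tok in p_lm}


vocab = ["Paris", "London", "Rome"]
p_lm = {"Paris": 0.5, "London": 0.3, "Rome": 0.2}
# Hypothetical k=3 neighbors: (distance to the query in embedding space, next token).
neighbors = [(1.0, "Paris"), (1.5, "Paris"), (3.0, "Rome")]
p = interpolate(p_lm, knn_distribution(neighbors, vocab), lam=0.25)
print({tok: round(v, 3) for tok, v in p.items()})
```

Tokens that no neighbor votes for, such as "London" here, keep only the down-weighted LM probability.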

Retrieve and Read Models

This family of RALMs creates a clear division between document selection and document reading components. All prior work in this family involves training the LM. We first describe works that use this approach for downstream tasks, and then works oriented towards RALM.

Lewis et al. (2020) and Izacard and Grave (2021) fine-tuned encoder–decoder architectures for downstream knowledge-intensive tasks. Izacard et al. (2022b) explored different ways of pretraining such models. Levine et al. (2022c) pretrained an autoregressive LM on clusters of nearest neighbors in sentence embedding space. Levine et al. (2022a) showed competitive open-domain question answering performance by prompt-tuning a frozen LM as a reader. Guu et al. (2020) pretrained REALM, a retrieval-augmented bidirectional masked LM, which was later fine-tuned for open-domain question answering.

The work closest to this paper, with its focus on the language modeling task, is RETRO (Borgeaud et al., 2022). RETRO modifies an autoregressive LM to attend to relevant documents via chunked cross-attention, thus introducing new parameters to the model. Our In-Context RALM differs from prior work in this family of models in two key aspects:

• We use off-the-shelf LMs for document reading without any further training of the LM.

• We focus on how to choose documents for improved LM performance.

3 Our Framework

3.1 In-Context RALM

Language models define probability distributions over sequences of tokens. Given such a sequence $x_{1},...,x_{n}$, the standard way to model its probability is via next-token prediction: $p(x_{1},...,x_{n})=\prod_{i=1}^{n}p(x_{i}|x_{<i})$. Here $x_{<i}:=x_{1},...,x_{i-1}$ is the sequence of tokens preceding $x_{i}$, also referred to as its prefix. This autoregressive model is usually implemented via a learned transformer network (Vaswani et al., 2017) parameterized by the set of parameters $\theta$:

$$
p(x_{1},...,x_{n})=\prod_{i=1}^{n}p_{\theta}(x_{i}|x_{<i}), \tag{1}
$$

where the conditional probabilities are modeled by employing a causal self-attention mask (Radford et al., 2018). Notably, leading LMs such as GPT-2 (Radford et al., 2019), GPT-3 (Brown et al., 2020), OPT (Zhang et al., 2022) and Jurassic-1 (Lieber et al., 2021) follow this simple parameterization.
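To make the factorization above concrete, the sketch below scores a sequence by summing log conditional probabilities. It then converts the total into perplexity, the metric reported in Table 1. The bigram table stands in for $p_\theta$ and is purely hypothetical; a real LM conditions on the whole prefix $x_{<i}$, not just its last token.

```python
import math

# Hypothetical toy model: p(next | previous token). A real LM conditions on the
# whole prefix x_{<i}; a bigram table keeps the example small.
BIGRAM = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "cat": {"sat": 0.8, "ran": 0.2},
}


def cond_prob(token, prefix):
    """p(x_i | x_{<i}); this toy version only looks at the last prefix token."""
    return BIGRAM[prefix[-1]][token]


def sequence_log_prob(tokens):
    """log p(x_1..x_n) = sum_i log p(x_i | x_{<i}), starting from a BOS token."""
    prefix = ["<s>"]
    total = 0.0
    for tok in tokens:
        total += math.log(cond_prob(tok, prefix))
        prefix.append(tok)
    return total


def perplexity(total_log_prob, num_units):
    """exp of the average negative log-likelihood per unit (token or word)."""
    return math.exp(-total_log_prob / num_units)


tokens = ["the", "cat", "sat"]
lp = sequence_log_prob(tokens)
print(perplexity(lp, len(tokens)))
```

Token-level perplexity divides the total negative log-likelihood by the number of tokens. Word-level perplexity, used for WikiText-103 in Table 1, divides the same total by the number of words instead.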

Retrieval-augmented language models (RALMs) add an operation that retrieves one or more documents from an external corpus $\mathcal{C}$. They then condition the above LM predictions on these documents. Specifically, for predicting $x_{i}$, the retrieval operation from $\mathcal{C}$ depends on its prefix: $\mathcal{R}_{\mathcal{C}}(x_{<i})$. The most general RALM decomposition is therefore $p(x_{1},...,x_{n})=\prod_{i=1}^{n}p(x_{i}|x_{<i},\mathcal{R}_{\mathcal{C}}(x_{<i}))$. To condition the LM generation on the retrieved document, previous RALM approaches used specialized architectures or algorithms (see §2). In-Context RALM is inspired by the success of In-Context Learning (Brown et al., 2020; Dong et al., 2023). It refers to the following specific, simple method: concatenate the retrieved documents² within the Transformer's input, before the prefix (see Figure 2). This method does not alter the LM weights $\theta$:

$$
p(x_{1},...,x_{n})=\prod_{i=1}^{n}p_{\theta}\left(x_{i}\mid\left[\mathcal{R}_{\mathcal{C}}(x_{<i});x_{<i}\right]\right), \tag{2}
$$

where $[a;b]$ denotes the concatenation of strings $a$ and $b$ .
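A minimal sketch of Eq. (2) follows, assuming hypothetical `retrieve` and `lm_log_prob` callables; neither is an API from the paper or any library. Before predicting each token, the prefix serves as the retrieval query, and the retrieved document is placed in front of the prefix. The LM itself is untouched; only its input changes.

```python
import math
from typing import Callable, List

Tokens = List[str]


def in_context_ralm_log_prob(
    tokens: Tokens,
    retrieve: Callable[[Tokens], Tokens],
    lm_log_prob: Callable[[str, Tokens], float],
) -> float:
    """Sum of log p_theta(x_i | [R_C(x_{<i}); x_{<i}]) over all positions i."""
    total = 0.0
    for i in range(len(tokens)):
        prefix = tokens[:i]                # x_{<i}
        document = retrieve(prefix)        # R_C(x_{<i})
        model_input = document + prefix    # [R_C(x_{<i}); x_{<i}]
        total += lm_log_prob(tokens[i], model_input)
    return total


def toy_retrieve(prefix: Tokens) -> Tokens:
    # Hypothetical retriever: always returns the same passage.
    return ["Paris", "is", "the", "capital", "of", "France", "."]


def toy_lm_log_prob(token: str, model_input: Tokens) -> float:
    # Hypothetical LM: a token that appears anywhere in its input is twice as likely.
    return math.log(0.2 if token in model_input else 0.1)


seq = ["France", "'s", "capital", "is", "Paris"]
with_retrieval = in_context_ralm_log_prob(seq, toy_retrieve, toy_lm_log_prob)
without_retrieval = in_context_ralm_log_prob(seq, lambda p: [], toy_lm_log_prob)
print(with_retrieval > without_retrieval)
```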

Common Transformer-based LM implementations support input sequences of limited length. When the concatenation of the document and the input sequence exceeds this limit, we remove tokens from the beginning of $x$ until the overall input length equals that allowed by the model. Our retrieved documents are passages of limited length, so enough context from $x$ always remains (see §4.3).
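The truncation rule can be sketched as follows, assuming inputs are lists of token ids and a hypothetical `max_len` parameter; the experiments use 1,024 (§4.2). The document is kept whole, and tokens are dropped from the start of the prefix until the concatenation fits. The tokens closest to the prediction point therefore survive.

```python
from typing import List


def build_model_input(document: List[int], prefix: List[int], max_len: int) -> List[int]:
    """Concatenate [document; prefix], trimming the *start* of the prefix if needed.

    Assumes len(document) < max_len, which holds when retrieved passages are
    short (for example, truncated to 256 tokens) relative to the context window.
    """
    if len(document) >= max_len:
        raise ValueError("document alone fills the context window")
    room = max_len - len(document)
    # Keep only the most recent `room` prefix tokens.
    kept_prefix = prefix[-room:] if len(prefix) > room else prefix
    return document + kept_prefix


doc = list(range(100, 106))   # a hypothetical 6-token passage
prefix = list(range(10))      # a hypothetical 10-token prefix x
out = build_model_input(doc, prefix, max_len=10)
print(out)
```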

3.2 RALM Design Choices

We detail below two practical design choices often made in RALM systems. In $\S5$ , we investigate the effect of these in the setting of In-Context RALM.

Retrieval Stride

In the above formulation, a retrieval operation can occur at each generation step. In practice we might want to perform retrieval only once every $s>1$ tokens. Calling the retriever has a cost, and the documents in the LM prefix must be replaced during generation. We refer to $s$ as the retrieval stride. This gives rise to the following In-Context RALM formulation, which reduces back to Eq. (2) for $s=1$:

$$
p(x_{1},...,x_{n})=\prod_{j=0}^{n_{s}-1}\prod_{i=1}^{s}p_{\theta}\left(x_{s\cdot j+i}\mid\left[\mathcal{R}_{\mathcal{C}}(x_{\leq s\cdot j});x_{<(s\cdot j+i)}\right]\right), \tag{3}
$$

where $n_{s}=n/s$ is the number of retrieval strides.
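To see which tokens share a retrieval in the strided formulation above, the sketch below enumerates the strides for a toy sequence. Positions are 1-indexed as in the equation. Stride $j$ retrieves with the prefix $x_{\leq s\cdot j}$, which is empty for $j=0$. It then predicts tokens $s\cdot j+1$ through $s\cdot j+s$. Rounding $n_s$ up when $n$ is not a multiple of $s$ is our own assumption.

```python
import math
from typing import List, Tuple


def stride_schedule(n: int, s: int) -> List[Tuple[int, List[int]]]:
    """Return (prefix_length_used_for_retrieval, predicted_positions) per stride.

    Positions are 1-indexed. The last stride may be shorter when n is not a
    multiple of s, because n_s is rounded up here.
    """
    n_strides = math.ceil(n / s)
    schedule = []
    for j in range(n_strides):
        retrieval_prefix_len = s * j  # retrieve with x_{<= s*j}; empty when j = 0
        positions = [s * j + i for i in range(1, s + 1) if s * j + i <= n]
        schedule.append((retrieval_prefix_len, positions))
    return schedule


for prefix_len, positions in stride_schedule(n=10, s=4):
    print(prefix_len, positions)
```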

In this framework, the runtime cost of each retrieval operation has two parts:
(a) applying the retriever itself, and
(b) recomputing the embeddings of the prefix.
In §5.2 we show that smaller retrieval strides, i.e., retrieving as often as possible, outperform larger ones. Even with larger strides, In-Context RALM already provides large gains over a vanilla LM. Choosing the retrieval stride is thus ultimately a tradeoff between runtime and performance.
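A back-of-the-envelope sketch of this tradeoff follows, under a simplified cost model that is our own assumption and not the paper's. Each retrieval triggers one retriever call. It also triggers one full forward pass over a `context_len`-token input, because the prepended document changes and cached prefix states cannot be reused. The default of 1,024 matches the maximum sequence length in §4.2; everything else is illustrative.

```python
import math


def retrieval_cost(n_tokens: int, stride: int, context_len: int = 1024):
    """Rough counts for processing n_tokens with retrieval stride s.

    Assumed cost model (illustrative only): one retriever call and one full
    re-encoding of a context_len-token model input per retrieval.
    """
    n_retrievals = math.ceil(n_tokens / stride)
    reencoded_tokens = n_retrievals * context_len
    return n_retrievals, reencoded_tokens


for s in (1, 4, 16, 64):
    calls, reencoded = retrieval_cost(n_tokens=1024, stride=s)
    print(f"s={s:>2}: {calls:>4} retriever calls, {reencoded:>7} tokens re-encoded")
```

Under this model both costs shrink linearly in $s$. That is why the stride trades runtime against the quality gains of retrieving more often.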

Retrieval Query Length

In principle, the retrieval query above depends on all prefix tokens $x_{\leq s\cdot j}$. However, the information at the very end of the prefix is typically the most relevant to the generated tokens, and a long retrieval query can dilute it. To avoid this, we restrict the retrieval query at stride $j$ to the last $\ell$ tokens of the prefix, i.e., we use $q_{j}^{s,\ell}:=x_{s\cdot j-\ell+1},...,x_{s\cdot j}$. We refer to $\ell$ as the retrieval query length. Prior RALM work couples the retrieval stride $s$ and the retrieval query length $\ell$ (Borgeaud et al., 2022). In §5, we show that enforcing $s=\ell$ degrades LM performance. Integrating these hyper-parameters into the In-Context RALM formulation gives

$$
p(x_{1},...,x_{n})=\prod_{j=0}^{n_{s}-1}\prod_{i=1}^{s}p_{\theta}\left(x_{s\cdot j+i}\mid\left[\mathcal{R}_{\mathcal{C}}(q_{j}^{s,\ell});x_{<(s\cdot j+i)}\right]\right). \tag{4}
$$
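The query $q_{j}^{s,\ell}$ can be sketched as a slice of the prefix: stride $j$ looks only at the last $\ell$ tokens before position $s\cdot j+1$. Clipping at the start of the sequence (when $s\cdot j<\ell$) and the hypothetical token names are our own illustrative choices. Setting $\ell=s$ would recover the coupled choice of prior work; §5 instead uses $\ell=32$ with $s=4$.

```python
from typing import List


def retrieval_query(tokens: List[str], j: int, s: int, ell: int) -> List[str]:
    """q_j^{s,ell} = x_{s*j-ell+1}, ..., x_{s*j} (1-indexed).

    These are the last ell tokens of the prefix x_{<= s*j}, clipped at the
    start of the sequence when fewer than ell prefix tokens exist.
    """
    end = s * j                  # the prefix x_{<= s*j} is tokens[:end]
    start = max(0, end - ell)
    return tokens[start:end]


tokens = [f"t{k}" for k in range(1, 41)]          # hypothetical tokens t1..t40
print(retrieval_query(tokens, j=9, s=4, ell=32))  # t5 ... t36
print(retrieval_query(tokens, j=2, s=4, ell=32))  # t1 ... t8 (only 8 prefix tokens)
```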

4 Experimental Details

We now describe our experimental setup, including all models we use and their implementation details.

4.1 Datasets

We evaluated the effectiveness of In-Context RALM across five diverse language modeling datasets and two common open-domain question answering datasets.

Language Modeling

The first LM dataset is WikiText-103 (Merity et al., 2016), which has been extensively used to evaluate RALMs (Khandelwal et al., 2020; He et al., 2021; Borgeaud et al., 2022; Alon et al., 2022; Zhong et al., 2022). Second, we chose three datasets spanning diverse subjects from The Pile (Gao et al., 2021): ArXiv, Stack Exchange and FreeLaw. Finally, we also investigated RealNews (Zellers et al., 2019), since The Pile lacks a corpus focused only on news (which is by nature a knowledge-intensive domain).

Open-Domain Question Answering

To also evaluate In-Context RALM on downstream tasks, we use the Natural Questions (NQ; Kwiatkowski et al. 2019) and TriviaQA (Joshi et al., 2017) open-domain question answering datasets.

4.2 Models

Language Models

We performed our experiments using the following models:
• four models of GPT-2 (110M–1.5B; Radford et al. 2019);
• three models of GPT-Neo and GPT-J (1.3B–6B; Black et al. 2021; Wang and Komatsuzaki 2021);
• eight models of OPT (125M–66B; Zhang et al. 2022);
• three models of LLaMA (7B–33B; Touvron et al. 2023).

All models are open source and publicly available.³

We elected to study these particular models for the following reasons. The first four (GPT-2) models were trained on WebText (Radford et al., 2019), with Wikipedia documents excluded from their training datasets. We were thus able to evaluate our method’s “zero-shot” performance when retrieving from a novel corpus (for WikiText-103). The rest of the models brought two further benefits. First, they allowed us to investigate how our methods scale to models larger than GPT-2. Second, the fact that Wikipedia was part of their training data allowed us to investigate the usefulness of In-Context RALM for corpora seen during training. The helpfulness of such retrieval has been demonstrated for previous RALM methods (Khandelwal et al., 2020) and has also been justified theoretically by Levine et al. (2022c).

We ran all models with a maximum sequence length of 1,024, even though the GPT-Neo, OPT and LLaMA models support a sequence length of 2,048.⁴

Retrievers

We experimented with both sparse (word-based) and dense (neural) retrievers. We used BM25 (Robertson and Zaragoza, 2009) as our sparse model. For dense models, we experimented with:
(i) a frozen BERT-base (Devlin et al., 2019) followed by mean pooling, similar to Borgeaud et al. (2022); and
(ii) the Contriever (Izacard et al., 2022a) and Spider (Ram et al., 2022) models, which are dense retrievers trained in an unsupervised manner.
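For readers unfamiliar with BM25, the sketch below implements the standard Okapi BM25 score in plain Python. Each query term contributes an IDF-weighted, length-normalized term-frequency score. This is not the Pyserini implementation used in our experiments. The values `k1 = 0.9` and `b = 0.4`, the Lucene-style non-negative IDF, and whitespace tokenization are all assumptions made for illustration.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: List[str], docs: List[List[str]], k1: float = 0.9, b: float = 0.4) -> List[float]:
    """Okapi BM25 score of every document for a bag-of-words query."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    df = Counter(term for d in docs for term in set(d))  # document frequency
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Longer-than-average documents get their term frequency damped.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


corpus = [
    "the eiffel tower is in paris".split(),
    "the colosseum is in rome".split(),
    "paris is the capital of france".split(),
]
query = "tower in paris".split()
scores = bm25_scores(query, corpus)
best = max(range(len(corpus)), key=scores.__getitem__)
print(best, [round(s, 3) for s in scores])
```

Because scoring only needs term counts, a sparse retriever like this is far cheaper to run than encoding the corpus with a neural model.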

Reranking

When training rerankers (Section 6.2), we initialized from RoBERTa-base (Liu et al., 2019).

4.3 Implementation Details

We implemented our code base using the Transformers library (Wolf et al., 2020). We based our dense retrieval code on the DPR repository (Karpukhin et al., 2020).

Figure 3: The performance of four off-the-shelf retrievers used for In-Context RALM on the development set of WikiText-103. All RALMs are run with $s=4$ (i.e., retrieval is applied every four tokens). For each RALM, we report the result of the best query length $\ell$ (see Figures 6, 9, 10).

Retrieval Corpora

For WikiText-103 and the ODQA datasets, we used the Wikipedia corpus from Dec. 20, 2018. This corpus was standardized by Karpukhin et al. (2020) using the preprocessing from Chen et al. (2017). To avoid contamination, we found and removed from the corpus all 120 articles of the WikiText-103 development and test sets. For the remaining datasets, we used their training data as the retrieval corpus. Similar to Karpukhin et al. (2020), our retrieval corpora consist of non-overlapping passages of 100 words. For the vast majority of passages, this translates to fewer than 150 tokens. We truncate retrieved passages at 256 tokens when feeding them to the models, although they are usually much shorter.
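The corpus preparation can be sketched as follows. The sketch assumes whitespace word splitting and a hypothetical `tokenize` callable standing in for the LM's tokenizer. Documents are cut into non-overlapping 100-word passages, and a passage is clipped to 256 tokens when it is fed to the model.

```python
from typing import Callable, List


def split_into_passages(text: str, words_per_passage: int = 100) -> List[str]:
    """Cut a document into consecutive, non-overlapping passages of up to N words."""
    words = text.split()
    return [
        " ".join(words[start:start + words_per_passage])
        for start in range(0, len(words), words_per_passage)
    ]


def clip_for_model(passage: str, tokenize: Callable[[str], List[str]], max_tokens: int = 256) -> List[str]:
    """Tokenize a retrieved passage and keep at most max_tokens tokens."""
    return tokenize(passage)[:max_tokens]


doc = " ".join(f"w{k}" for k in range(250))   # a hypothetical 250-word document
passages = split_into_passages(doc)
print([len(p.split()) for p in passages])     # word count of each passage
```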

Retrieval

For sparse retrieval, we used the Pyserini library (Lin et al., 2021). For dense retrieval, we applied exact search using FAISS (Johnson et al., 2021).
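Exact dense search scores every passage embedding against the query embedding and returns the top $k$. The pure-Python sketch below uses made-up 3-dimensional token embeddings. It mean-pools token vectors into one vector per text, as in the frozen-BERT retriever described above, and then runs a brute-force inner-product search. It illustrates what an exact (flat) index computes rather than how FAISS is called. Using inner product as the similarity and ignoring padding in the pooling are assumptions.

```python
import heapq
from typing import List, Sequence

Vector = List[float]


def mean_pool(token_vectors: Sequence[Vector]) -> Vector:
    """Average token embeddings into one fixed-size vector (assumes no padding)."""
    dim = len(token_vectors[0])
    return [sum(v[d] for v in token_vectors) / len(token_vectors) for d in range(dim)]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def exact_search(query: Vector, passages: List[Vector], k: int) -> List[int]:
    """Indices of the k passages with the highest inner product, best first."""
    return heapq.nlargest(k, range(len(passages)), key=lambda i: dot(query, passages[i]))


# Made-up token embeddings for three passages and one query.
passage_tokens = [
    [[1.0, 0.0, 0.0], [0.8, 0.2, 0.0]],
    [[0.0, 1.0, 0.0], [0.0, 0.9, 0.1]],
    [[0.0, 0.0, 1.0], [0.1, 0.0, 0.9]],
]
passage_vecs = [mean_pool(toks) for toks in passage_tokens]
query_vec = mean_pool([[0.9, 0.1, 0.0], [0.7, 0.3, 0.0]])
print(exact_search(query_vec, passage_vecs, k=2))
```

Exhaustive scoring is linear in corpus size per query, which is the cost that approximate indexes trade accuracy to avoid.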

5 The Effectiveness of In-Context RALM with Off-the-Shelf Retrievers

We now empirically show that despite its simple document reading mechanism, In-Context RALM leads to substantial LM gains across our diverse evaluation suite. We begin in this section by investigating the effectiveness of off-the-shelf retrievers for In-Context RALM; we go on in $\S6$ to show that further LM gains can be made by tailoring document ranking functions to the LM task.

The experiments in this section provided us with a recommended configuration for applying In

本节实验为我们提供了应用In的推荐配置

Table 1: Perplexity on the test set of WikiText-103, RealNews and three datasets from the Pile. For each LM, we report: (a) its performance without retrieval, (b) its performance when fed the top-scored passage by BM25 (§5), and (c) its performance when applied on the top-scored passage of each of our two suggested rerankers (§6). All models share the same vocabulary, thus token-level perplexity (token ppl) numbers are comparable. For WikiText we follow prior work and report word-level perplexity $(w o r d p p l)$ .

| Model | Retrieval | Reranking | WikiText-103 | RealNews | ArXiv | Stack Exch. | FreeLaw |

| word ppl | token ppl | token ppl | token ppl | token ppl | |||

| GPT-2 S | 一 | 37.5 | 21.3 | 12.0 | 12.8 | 13.0 | |

| BM25 §5 | 一 | 29.6 | 16.1 | 10.9 | 11.3 | 9.6 | |

| BM25 | Zero-shot §6.1 | 28.6 | 15.5 | 10.1 | 10.6 | 8.8 | |

| BM25 | Predictive §6.2 | 26.8 | 一 | 一 | 一 | ||

| GPT-2 M | 一 | 26.3 | 15.7 | 9.3 | 8.8 | 9.6 | |

| BM25§5 | 一 | 21.5 | 12.4 | 8.6 | 8.1 | 7.4 | |

| BM25 | Zero-shot §6.1 | 20.8 | 12.0 | 8.0 | 7.7 | 6.9 | |

| BM25 | Predictive §6.2 | 19.7 | 一 | 一 | 一 | ||

| GPT-2 L | 22.0 | 13.6 | 8.4 | 8.5 | 8.7 | ||

| BM25 §5 | 18.1 | 10.9 | 7.8 | 7.8 | 6.8 | ||

| BM25 | Zero-shot §6.1 | 17.6 | 10.6 | 7.3 | 7.4 | 6.4 | |

| BM25 | Predictive §6.2 | 16.6 | 一 | 一 | |||

| GPT-2XL | 一 | 一 | 20.0 | 12.4 | 7.8 | 8.0 | 8.0 |

| BM25§5 | 16.6 | 10.1 | 7.2 | 7.4 | 6.4 | ||

| BM25 | Zero-shot §6.1 | 16.1 | 9.8 | 6.8 | 7.1 | 6.0 | |

| BM25 | Predictive §6.2 | 15.4 |

表 1: WikiText-103、RealNews 和 Pile 中三个数据集的测试集困惑度。对于每个大语言模型,我们报告: (a) 无检索时的性能, (b) 输入 BM25 最高分段落时的性能 (§5), 以及 (c) 应用我们建议的两个重排序器最高分段落时的性能 (§6)。所有模型共享相同词汇表,因此 token 级困惑度 (token ppl) 数值可比较。WikiText 遵循先前工作报告词级困惑度 $(word ppl)$。

| 模型 | 检索 | 重排序 | WikiText-103word ppl | RealNewstoken ppl | ArXivtoken ppl | Stack Exch.token ppl | FreeLawtoken ppl |

|---|---|---|---|---|---|---|---|

| GPT-2 S | 一 | 37.5 | 21.3 | 12.0 | 12.8 | 13.0 | |

| BM25 §5 | 一 | 29.6 | 16.1 | 10.9 | 11.3 | 9.6 | |

| BM25 | 零样本 §6.1 | 28.6 | 15.5 | 10.1 | 10.6 | 8.8 | |

| BM25 | Predictive §6.2 | 26.8 | 一 | 一 | 一 | ||

| GPT-2 M | 一 | 26.3 | 15.7 | 9.3 | 8.8 | 9.6 | |

| BM25 §5 | 一 | 21.5 | 12.4 | 8.6 | 8.1 | 7.4 | |

| BM25 | 零样本 §6.1 | 20.8 | 12.0 | 8.0 | 7.7 | 6.9 | |

| BM25 | Predictive §6.2 | 19.7 | 一 | 一 | 一 | ||

| GPT-2 L | 22.0 | 13.6 | 8.4 | 8.5 | 8.7 | ||

| BM25 §5 | 18.1 | 10.9 | 7.8 | 7.8 | 6.8 | ||

| BM25 | 零样本 §6.1 | 17.6 | 10.6 | 7.3 | 7.4 | 6.4 | |

| BM25 | Predictive §6.2 | 16.6 | 一 | 一 | |||

| GPT-2 XL | 一 | 一 | 20.0 | 12.4 | 7.8 | 8.0 | 8.0 |

| BM25 §5 | 16.6 | 10.1 | 7.2 | 7.4 | 6.4 | ||

| BM25 | 零样本 §6.1 | 16.1 | 9.8 | 6.8 | 7.1 | 6.0 | |

| BM25 | Predictive §6.2 | 15.4 |

Table 2: The performance of models from the LLaMA family, measured by word-level perplexity on the test set of WikiText-103.

| Model | Retrieval | WikiText-103 |

| word ppl | ||

| LLaMA-7B | 9.9 | |

| BM25,§5 | 8.8 | |

| LLaMA-13B | 8.5 | |

| BM25,§5 | 7.6 | |

| LLaMA-33B | 6.3 | |

| BM25,§5 | 6.1 |

表 2: LLaMA系列模型在WikiText-103测试集上的词级困惑度表现

| Model | Retrieval | WikiText-103 |

|---|---|---|

| LLaMA-7B | 9.9 | |

| BM25,§5 | 8.8 | |

| LLaMA-13B | 8.5 | |

| BM25,§5 | 7.6 | |

| LLaMA-33B | 6.3 | |

| BM25,§5 | 6.1 |

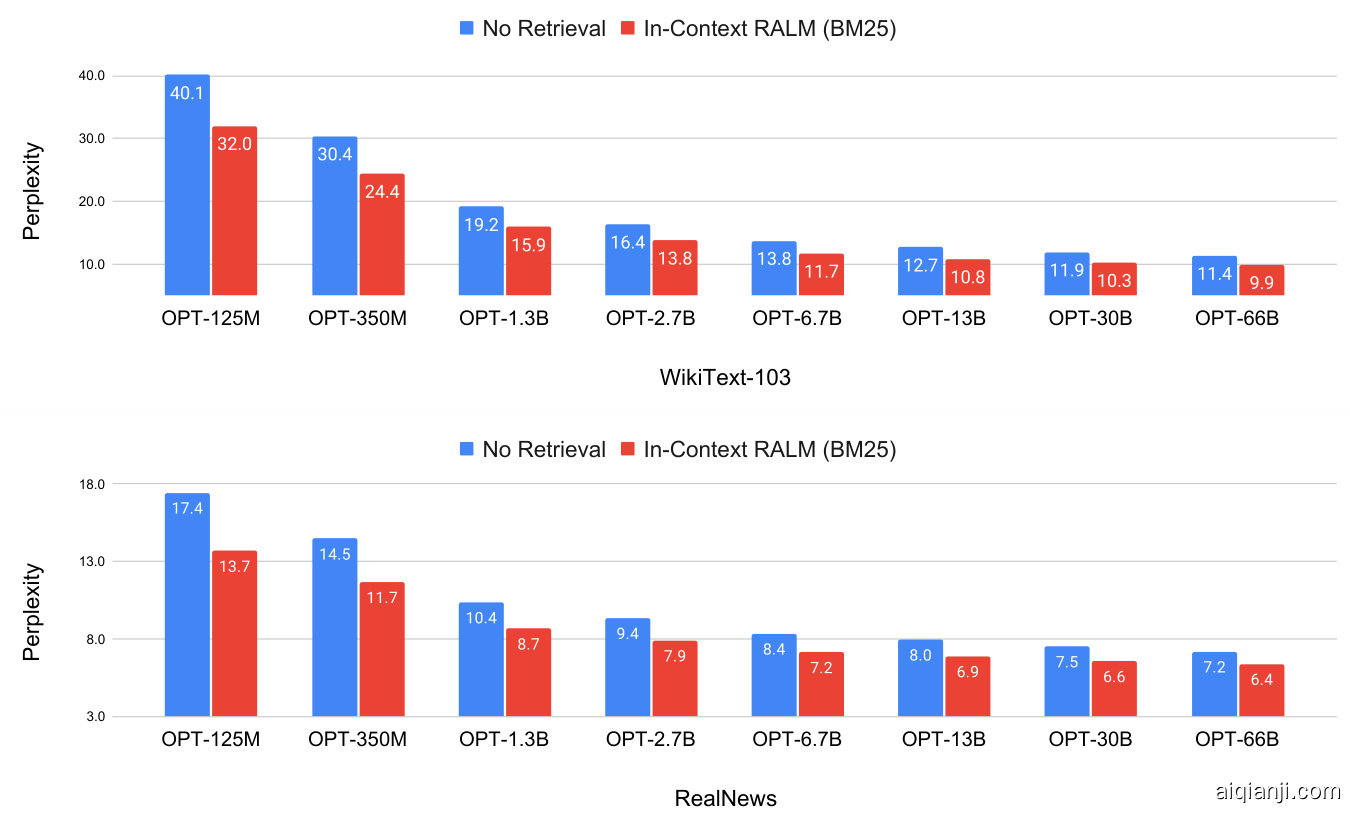

Context RALM: applying a sparse BM25 retriever that receives $\ell=32$ query tokens and is applied as frequently as possible. In practice, we retrieve every $s=4$ tokens ($\ell$ and $s$ are defined in $\S3$). Table 1 shows that, for the GPT-2 models and across all the examined corpora, In-Context RALM with an off-the-shelf retriever improved LM perplexity enough to match that of a $2{-}3\times$ larger model. Figure 4 and Tables 2 and 5 show that this trend holds across model sizes up to 66B parameters, for both WikiText-103 and RealNews.
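The retrieval schedule described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `retrieve` is a hypothetical stand-in for any retriever (such as BM25), tokens are plain strings, the LM call itself and context-window truncation are omitted, and no retrieval is issued for the first stride because the prefix is empty. The function only shows which query is issued at each retrieval point and which document-prefixed context an unmodified LM would condition on for the next $s$ tokens.

```python
from typing import Callable, List, Tuple

Token = str
Retriever = Callable[[List[Token]], List[Token]]  # hypothetical: query tokens -> document tokens


def in_context_ralm_contexts(
    tokens: List[Token], retrieve: Retriever, s: int = 4, ell: int = 32
) -> List[Tuple[int, List[Token], List[Token]]]:
    """Return (stride start, query, LM context) for every stride of length s.

    The tokens tokens[start:start + s] would be predicted by an unmodified LM
    conditioned on [retrieved document; prefix], where the prefix is
    tokens[:start] and the query is (at most) its last `ell` tokens.
    """
    schedule = []
    for start in range(0, len(tokens), s):  # one retrieval every s tokens
        prefix = tokens[:start]
        query = prefix[max(0, start - ell):]  # last ell prefix tokens
        doc = retrieve(query) if query else []  # assumption: nothing to retrieve for an empty prefix
        schedule.append((start, query, doc + prefix))  # the document is simply prepended
    return schedule


# Usage with a toy retriever (hypothetical) that tags its output with the query's last token.
def toy_retrieve(query: List[Token]) -> List[Token]:
    return ["<doc:" + query[-1] + ">"]


toks = [f"t{i}" for i in range(10)]
sched = in_context_ralm_contexts(toks, toy_retrieve, s=4, ell=3)
for start, query, context in sched:
    print(start, query, context)
```

Because only the input is changed, each stride's context replaces the previous document rather than accumulating documents, which keeps the LM architecture and weights untouched.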

5.1 BM25 Outperforms Off-the-Shelf Neural Retrievers in Language Modeling


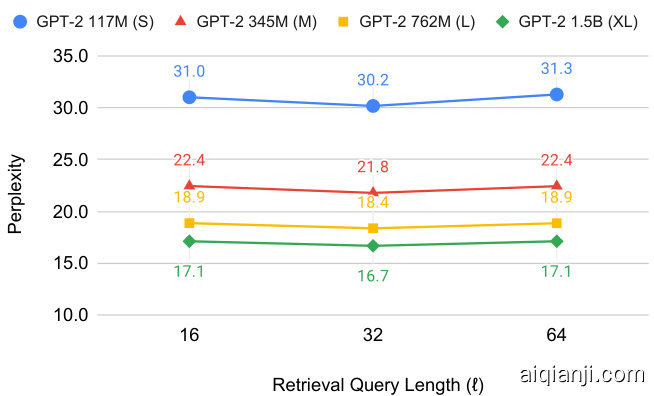

We experimented with different off-the-shelf general purpose retrievers, and found that the sparse (lexical) BM25 retriever (Robertson and Zaragoza, 2009) outperformed three popular dense (neural) retrievers: the self-supervised retrievers Contriever (Izacard et al., 2022a) and Spider (Ram et al., 2022), as well as a retriever based on the average pooling of BERT embeddings that was used in the RETRO system (Borgeaud et al., 2022). We conducted a minimal hyper-parameter search on the query length $\ell$ for each of the retrievers, and found that $\ell=32$ was optimal for BM25 (Figure 6), and $\ell=64$ worked best for dense retrievers (Figures 9, 10).


Figure 3 compares the performance gains of In-Context RALM with these four general-purpose retrievers. The BM25 retriever clearly outperformed all dense retrievers. This outcome is consistent with prior work showing that BM25 outperforms neural retrievers across a wide array of tasks when applied in zero-shot settings (Thakur et al., 2021). This result makes In-Context RALM even more appealing, since applying a BM25 retriever is significantly cheaper than the neural alternatives.


Figure 4: Results of OPT models (Zhang et al., 2022) on the test set of WikiText-103 (word-level perplexity) and the development set of RealNews (token-level perplexity). In-Context RALM models use a BM25 retriever with $s=4$ (i.e., the retriever is called every four tokens) and $\ell=32$ (i.e., the retriever query consists of the last 32 tokens of the prefix). In-Context RALM with an off-the-shelf retriever improved the performance of a 6.7B-parameter OPT model to match that of a 66B-parameter OPT model.


5.2 Frequent Retrieval Improves Language Modeling


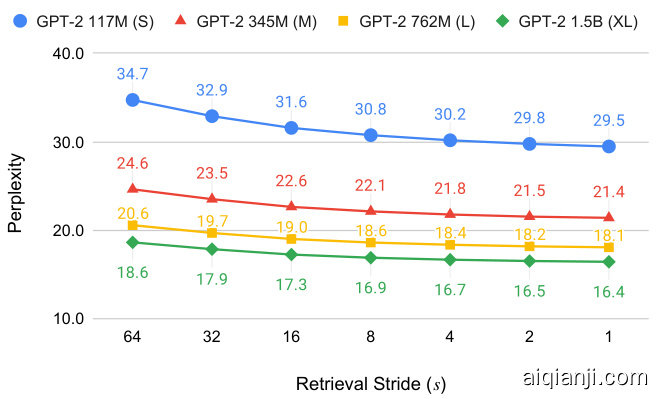

We investigated the effect of varying the retrieval stride $s$ (i.e., the number of tokens between consecutive retrieval operations). Figure 5 shows that LM performance improved as the retrieval operation became more frequent. This supports the intuition that retrieved documents become more relevant the closer the retrieval query is to the generated tokens. Of course, each retrieval operation imposes a runtime cost. To balance performance and runtime, we used $s=4$ in our experiments. For comparison, RETRO employed a retrieval stride of $s=64$ (Borgeaud et al., 2022), which leads to a large degradation in perplexity. Intuitively, retrieving with high frequency (a low retrieval stride) grounds the LM at a finer resolution.
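To make the runtime side of this tradeoff concrete, here is a back-of-the-envelope count. It assumes, purely for illustration, a sequence of $n=1024$ tokens and at most one retrieval call per stride:

$$
n_{\text{retrievals}} = \left\lceil \frac{n}{s} \right\rceil,\qquad
\left\lceil \frac{1024}{4} \right\rceil = 256,\qquad
\left\lceil \frac{1024}{64} \right\rceil = 16.
$$

Under these assumptions, moving from RETRO's $s=64$ to $s=4$ multiplies the number of retrieval calls by 16, which is the cost paid for the perplexity gains in Figure 5.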


5.3 A Contextualization vs. Recency Tradeoff in Query Length


We also investigated the effect of varying $\ell$, the length of the retrieval query for BM25. Figure 6 reveals an interesting tradeoff, with a sweet spot around a query length of 32 tokens. Similar experiments for dense retrievers are given in App. A. We conjecture that when the retriever query is too short, it does not include enough of the input context, decreasing the retrieved document's relevance. Conversely, excessively lengthening the retriever query de-emphasizes the tokens at the very end of the prefix, diluting the query's relevance to the LM task.


6 Improving In-Context RALM with LM-Oriented Reranking


Since In-Context RALM uses a fixed document reading component by definition, it is natural to ask whether performance can be improved by specializing its document retrieval mechanism to the LM task. Indeed, there is considerable scope for improvement: the previous section considered conditioning the model only on the first document retrieved by the BM25 retriever. This permits very limited semantic understanding of the query, since BM25 relies only on a bag-of-words signal. Moreover, it offers no way to assign different degrees of importance to different retrieval query tokens, for example, to recognize that later query tokens are more relevant to the generated text.
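Why BM25 is blind to token order can be seen from a minimal Okapi BM25 scorer. This is an illustrative sketch, not the retriever configuration used in the paper: the parameters $k_1=1.5$ and $b=0.75$, whitespace tokenization, and the Lucene-style non-negative IDF are assumptions. The score is a sum of per-term contributions, so reversing (or otherwise permuting) the query leaves every document's score unchanged, and surface variants such as "dog"/"dogs" earn no credit at all.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: List[str], docs: List[List[str]], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of each tokenized document for a tokenized query."""
    n_docs = len(docs)
    avgdl = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:  # a plain sum over query terms: position plays no role
            if tf[term] == 0:
                continue
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


docs = [
    "the cat sat on the mat".split(),
    "dogs chase the cat".split(),
    "stock prices fell sharply".split(),
]
query = "the dog chased a cat".split()
forward = bm25_scores(query, docs)
backward = bm25_scores(list(reversed(query)), docs)
print(forward)
print(backward)
```

Only exact lexical matches ("the", "cat") contribute, which is the gap an LM-based reranker is meant to fill.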


Figure 5: An analysis of perplexity as a function of $s$ , the retrieval stride, i.e., the number of tokens between consecutive retrieval operations, on the development set of WikiText-103. Throughout the paper, we use $s=4$ to balance perplexity and runtime.


Figure 6: An analysis of perplexity as a function of the number of tokens in the query $\ell$ for BM25 on the development set of WikiText-103. In the appendix, we show similar trade-offs for dense retrievers within WikiText-103. Throughout the paper, we use a query length of $\ell=32$ tokens.


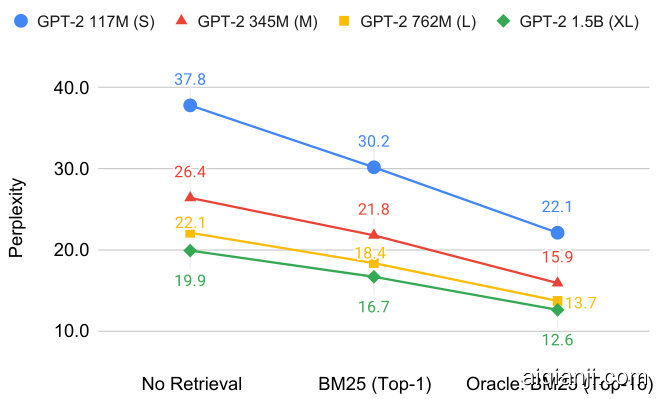

In this section, we focus on choosing which document to present to the model by reranking the top $k$ documents returned by the BM25 retriever.$^{5}$ We use Figure 7 as motivation: it shows the large potential for improvement among the top-16 documents returned by the BM25 retriever. We act on this motivation by using two rerankers. Specifically, in $\S6.1$ we show performance gains across our evaluation suite obtained by using an LM to perform zero-shot reranking of the top $k$ BM25-retrieved documents (results in the third row for each model in Table 1). Then, in $\S6.2$ we show that training a specialized bidirectional reranker of the top $k$ BM25-retrieved documents in a self-supervised manner via the LM signal can provide further LM gains (results in the fourth row for each model in Table 1).


Figure 7: Potential for gains from reranking: perplexity improvement (on the development set of WikiText-103) from an oracle that takes the best of the top-16 documents retrieved by BM25 rather than the first.
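The oracle behind Figure 7 can be made concrete with a small sketch. Assume that, for each stride, we know the log-probability the LM assigns to the upcoming text $y$ when conditioned on each of the top-$k$ documents. The default In-Context RALM uses the first (top BM25) document, whereas the oracle takes the best one; perplexity is the exponentiated negative mean log-probability per token. The log-probabilities below are made up for illustration and are not results from the paper.

```python
import math
from typing import Sequence, Tuple


def perplexity(total_logprob: float, n_tokens: int) -> float:
    """exp of the negative mean (natural-log) probability per token."""
    return math.exp(-total_logprob / n_tokens)


def first_vs_oracle(logprobs: Sequence[Sequence[float]], stride: int) -> Tuple[float, float]:
    """Perplexity when always using the first BM25 document vs. the best of the top-k.

    logprobs[j][i] is log p(y_j | [d_i; prefix]) summed over the `stride` tokens
    of y_j, with documents in BM25 rank order (index 0 = first result).
    """
    n_tokens = stride * len(logprobs)
    first = sum(row[0] for row in logprobs)  # default In-Context RALM choice
    oracle = sum(max(row) for row in logprobs)  # needs y itself, so unusable at test time
    return perplexity(first, n_tokens), perplexity(oracle, n_tokens)


# Made-up log-probabilities: 2 strides of s = 4 tokens, k = 3 documents each.
table = [
    [-8.0, -6.0, -9.0],
    [-7.0, -7.5, -5.0],
]
ppl_first, ppl_oracle = first_vs_oracle(table, stride=4)
print(round(ppl_first, 2), round(ppl_oracle, 2))
```

The oracle can never do worse than the first document, since it may always pick index 0; the size of the gap is what motivates a reranker that approximates this choice without seeing $y$.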


6.1 LMs as Zero-Shot Rerankers


First, we used off-the-shelf language models as document rerankers for the In-Context RALM setting. Formally, for a query $q$ consisting of the last $\ell$ tokens in the prefix of the LM input $x$, let $\{d_{1},\dots,d_{k}\}$ be the top $k$ documents returned by BM25. For retrieval iteration $j$, let the text for generation be $y:=x_{s\cdot j+1},\dots,x_{s\cdot j+s}$. Ideally, we would like to find the document $d_{i^{*}}$ that maximizes the probability of the text for generation, i.e.,

$$
i^{*}=\arg\max_{i\in[k]} p_{\theta}\left(y \mid [d_{i};x_{\leq s\cdot j}]\right).
$$

However, at test time we do not have access to the tokens of $y$. Instead, we used the last prefix tokens (which are available at test time), denoted by $y^{\prime}$, for reranking. Formally, let $s^{\prime}$ be a hyper-parameter that determines the number of prefix tokens by which to rerank. We define $y^{\prime}:=x_{s\cdot j-s^{\prime}+1},\dots,x_{s\cdot j}$ (i.e., the stride of length $s^{\prime}$ that precedes $y$) and choose the document $d_{\hat{i}}$ such that

$$
\hat{i}=\arg\max_{i\in[k]} p_{\phi}\left(y^{\prime} \mid [d_{i};x_{\leq s\cdot j-s^{\prime}}]\right).
$$

| Model | Reranking Model | WikiText-103 word ppl | RealNews token ppl |
|---|---|---|---|
| GPT-2 345M (M) | GPT-2 110M (S) | 20.8 | 12.1 |
| | GPT-2 345M (M) | 20.8 | 12.0 |
| GPT-2 762M (L) | GPT-2 110M (S) | 17.7 | 10.7 |
| | GPT-2 762M (L) | 17.6 | 10.6 |
| GPT-2 1.5B (XL) | GPT-2 110M (S) | 16.2 | 9.9 |
| | GPT-2 1.5B (XL) | 16.1 | 9.8 |

Table 3: Perplexity for zero-shot reranking ($\S6.1$) where the reranking model is either smaller than the LM or the LM itself. Reranking is performed on the top 16 documents retrieved by BM25. Using GPT-2 110M (S) instead of a larger language model as the reranker leads to only a minor degradation.

The main motivation is that since BM25 is a lexical retriever, we want to incorporate a semantic signal induced by the LM. Also, this reranking shares conceptual similarities with the reranking framework of Sachan et al. (2022) for open-domain question answering, where $y^{\prime}$ (i.e., the last prefix tokens) can be thought of as their “question”.


Note that our zero-shot reranking does not require the LM used for reranking to be the same model as the LM used for generation (i.e., the LM in Eq. (6), parameterized by $\phi$, need not be the LM in Eq. (2), parameterized by $\theta$). This observation opens the possibility of reranking with smaller (and thus faster) models, which is important for two main reasons: (i) reranking $k$ documents requires $k$ forward passes; and (ii) it allows our methods to be used in cases where the actual LM's log probabilities are not available (for example, when the LM is accessed through an API).$^{6}$
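A minimal sketch of the zero-shot reranking rule follows. It assumes a hypothetical scoring callable `logprob_fn(context, target)` that returns the total log-probability a reranking LM $p_\phi$ assigns to `target` given `context`; any LM that exposes log-probabilities, including a smaller one than the generator, could play that role. The sketch maps the 1-indexed definitions above onto 0-indexed Python slices: $y^{\prime}$ is the last $s^{\prime}$ tokens of the prefix, and the reranker's context is the candidate document followed by $x_{\leq s\cdot j-s^{\prime}}$. It makes one scoring call per candidate, i.e., $k$ forward passes.

```python
from typing import Callable, List, Sequence

Token = str
# Hypothetical reranking-LM interface: (context, target) -> log p_phi(target | context).
LogProbFn = Callable[[List[Token], List[Token]], float]


def zero_shot_rerank(
    prefix: Sequence[Token],
    docs: Sequence[Sequence[Token]],
    logprob_fn: LogProbFn,
    s_prime: int = 16,
) -> int:
    """Return i_hat = argmax_i p_phi(y' | [d_i; x_{<= s*j - s'}]).

    `prefix` holds x_1..x_{s*j}, i.e. everything available at retrieval step j;
    `docs` are the top-k BM25 documents in retriever order.
    """
    cut = max(0, len(prefix) - s_prime)
    context = list(prefix[:cut])  # x_{<= s*j - s'}
    y_prime = list(prefix[cut:])  # y' = x_{s*j - s' + 1}, ..., x_{s*j}
    # One scoring call (forward pass) per candidate document: k passes in total.
    scores = [logprob_fn(list(d) + context, y_prime) for d in docs]
    return max(range(len(docs)), key=lambda i: scores[i])


# Toy stand-in for p_phi (hypothetical): each target token costs log-prob 0 if it
# appears somewhere in the context and -1 otherwise.
def toy_logprob(context: List[Token], target: List[Token]) -> float:
    return sum(0.0 if t in context else -1.0 for t in target)


candidate_docs = [["apple"], ["banana", "cherry"], ["cherry"]]
best = zero_shot_rerank(["x", "banana", "cherry"], candidate_docs, toy_logprob, s_prime=2)
print(best)  # → 1
```

The default `s_prime=16` mirrors the value selected on the WikiText-103 development set; because `logprob_fn` is decoupled from the generator, swapping in a smaller reranking model requires no change to the selection logic.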


Results A minimal hyper-parameter search on the development set of WikiText-103 revealed that the optimal query length is $s^{\prime}=16$ , so we proceed with this value going forward. Table 1 shows the results of letting the LM perform zero-shot reranking on the top-16 documents retrieved by BM25 (third row for each of the models). It is evident that reranking yielded consistently better results than simply taking the first result returned by the retriever.


Table 3 shows that a small LM (GPT-2 117M) can be used to rerank the documents for all larger GPT-2 models, with roughly the same performance as having each LM perform reranking for itself, supporting the applicability of this method for LMs that are only accessible via an API.


6.2 Training LM-dedicated Rerankers


Next, we trained a reranker to choose one of the top $k$ documents retrieved by BM25. We refer to this approach as Predictive Reranking, since the reranker learns to choose which document will help in “predicting” the upcoming text. For this process, we assume the availability of training data from the target corpus. Our reranker is a classifier that takes a prefix $x_{\leq s\cdot j}$ and a document $d_{i}$ (for $i\in[k]$), and produces a scalar $f(x_{\le s\cdot j},d_{i})$ that should reflect the relevance of $d_{i}$ for the continuation of $x_{\le s\cdot j}$.


We then normalize these relevance scores:

$$
p_{\mathrm{rank}}(d_{i}\mid x_{\leq s\cdot j})=\frac{\exp\left(f(x_{\leq s\cdot j},d_{i})\right)}{\sum_{i^{\prime}=1}^{k}\exp\left(f(x_{\leq s\cdot j},d_{i^{\prime}})\right)},
$$

and choose the document $d_{\hat{i}}$ such that

$$
\hat{i}=\arg\max_{i\in[k]} p_{\mathrm{rank}}(d_{i}\mid x_{\leq s\cdot j}).
$$
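The normalization and selection steps above amount to a softmax over the $k$ classifier scores followed by an argmax. In the minimal sketch below, the input scores stand in for $f(x_{\leq s\cdot j}, d_i)$ from a trained reranker; the reranker itself is not shown and the score values are hypothetical. Subtracting the maximum score before exponentiating is a standard numerical-stability step that leaves the distribution unchanged.

```python
import math
from typing import List, Sequence, Tuple


def rank_distribution(scores: Sequence[float]) -> List[float]:
    """p_rank(d_i | prefix) = exp(f_i) / sum_i' exp(f_i'), computed stably."""
    m = max(scores)
    exps = [math.exp(f - m) for f in scores]  # shifting by the max avoids overflow
    z = sum(exps)
    return [e / z for e in exps]


def predictive_rerank(scores: Sequence[float]) -> Tuple[int, List[float]]:
    """Return (i_hat, p_rank), where i_hat = argmax_i p_rank(d_i | prefix)."""
    probs = rank_distribution(scores)
    i_hat = max(range(len(probs)), key=lambda i: probs[i])
    return i_hat, probs


# Hypothetical reranker scores f(x_{<= s*j}, d_i) for k = 4 BM25 candidates.
i_hat, probs = predictive_rerank([0.2, 1.7, -0.5, 1.1])
print(i_hat, [round(p, 3) for p in probs])
```

Since the softmax is monotonic, the selected index is simply the argmax of the raw scores. The normalized form matters for training, where $p_{\mathrm{rank}}$ is the distribution the reranker's outputs define.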

Collecting Training Examples To train our predictive reranker, we collected training examples as follows. Let $x_{\leq s\cdot j}$ be a prefix we sample from the training data, and $y:=x_{s\cdot j+1},...,x_{s\cdot j+s}$ be the text for generation upcoming in its next stride. We run BM25 on the query