LEARNING ON LARGE-SCALE TEXT-ATTRIBUTED GRAPHS VIA VARIATIONAL INFERENCE

ABSTRACT

This paper studies learning on text-attributed graphs (TAGs), where each node is associated with a text description. An ideal solution would integrate both the text and the graph structure information using large language models and graph neural networks (GNNs). However, the problem becomes very challenging on large graphs because of the high computational cost of training large language models and GNNs together. In this paper, we propose GLEM, an efficient and effective solution for learning on large text-attributed graphs that fuses graph structure and language learning within a variational Expectation-Maximization (EM) framework. Instead of training large language models and GNNs simultaneously on big graphs, GLEM alternately updates the two modules in the E-step and the M-step. This procedure allows the two modules to be trained separately while still letting them interact and mutually enhance each other. Extensive experiments on multiple datasets demonstrate the efficiency and effectiveness of the proposed approach¹.

1 INTRODUCTION

Graphs are ubiquitous in the real world. In many graphs, nodes are often associated with text attributes, resulting in text-attributed graphs (TAGs) (Yang et al., 2021). For example, in social graphs, each user might have a text description; in paper citation graphs, each paper is associated with its textual content. Learning on TAGs has become an important research topic in multiple areas, including graph learning, information retrieval, and natural language processing.

In this paper, we focus on a fundamental problem, learning effective node representations, which could be used for a variety of applications such as node classification and link prediction. Intuitively, a TAG is rich in textual and structural information, both of which could be beneficial for learning good node representations. The textual information presents rich semantics to characterize the property of each node, and one could use a pre-trained language model (LM) (e.g., BERT (Devlin et al., 2019)) as a text encoder. Meanwhile, the structural information preserves the proximity between nodes, and connected nodes are more likely to have similar representations. Such structural relationships could be effectively modeled by a graph neural network (GNN) via the message-passing mechanism. In summary, LMs leverage the local textual information of individual nodes, while GNNs use the global structural relationship among nodes.

An ideal approach for learning effective node representations is therefore to combine the textual information and the graph structure. One straightforward solution is to cascade an LM-based text encoder and a GNN-based message-passing module and train both modules jointly. However, this method suffers from severe scalability issues: because the texts of neighboring nodes must also be encoded, the memory complexity is proportional to the graph size. Therefore, on real-world TAGs where nodes are densely connected, the memory cost of this method becomes unaffordable.
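
To make the scaling argument concrete, the minimal Python sketch below counts how many node texts an LM would have to encode (and keep activations for) in order to classify a single node when the LM and a k-hop GNN are trained end to end. The fixed per-hop fanout is a hypothetical simplification, not a property of any specific method; real neighborhoods overlap, so the count is an upper bound, capped at the number of nodes.

```python
def texts_encoded_per_node(fanout: int, hops: int, num_nodes: int) -> int:
    """Upper bound on the number of sentences the LM must encode to classify
    one target node when an LM and a `hops`-layer GNN are trained jointly.

    Assumes (hypothetically) that every node contributes exactly `fanout`
    neighbors per hop; overlapping neighborhoods make the true count smaller,
    so the result is capped at the graph size `num_nodes`.
    """
    # The target itself (1) plus fanout + fanout^2 + ... + fanout^hops neighbors
    total = sum(fanout ** i for i in range(hops + 1))
    return min(total, num_nodes)


# Sampling only 3 first-hop neighbors keeps the cost small...
print(texts_encoded_per_node(fanout=3, hops=1, num_nodes=100_000))   # 4
# ...while a modest 2-hop neighborhood with fanout 10 already needs 111 texts.
print(texts_encoded_per_node(fanout=10, hops=2, num_nodes=100_000))  # 111
```

The count grows geometrically with depth, which illustrates why joint training either caps the neighborhood or becomes unaffordable on densely connected TAGs.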

To address this problem, multiple solutions have been proposed. These methods reduce either the capacity of the LM or the size of the graph structure processed by the GNN. More specifically, some studies fix the parameters of the LM without fine-tuning them (Liu et al., 2020b). Other studies reduce the graph structure via edge sampling and perform message passing only on the sampled edges (Zhu et al., 2021; Li et al., 2021a; Yang et al., 2021). Despite the improved scalability, reducing the LM capacity or the graph size sacrifices model effectiveness and degrades the quality of the learned node representations. This raises the question of whether there exists a scalable and effective approach to integrating large LMs and GNNs on large text-attributed graphs.

In this paper, we propose such an approach, named Graph and Language Learning by Expectation Maximization (GLEM). In GLEM, instead of training the LM and the GNN simultaneously, we leverage a variational EM framework (Neal & Hinton, 1998) to alternately update the two modules. Take node classification as an example. The LM uses the local textual information of each node to learn a good representation for label prediction, and thus models label distributions conditioned on text attributes. By contrast, the GNN leverages the labels and textual encodings of a node's surrounding nodes for label prediction, and thus essentially defines a global conditional label distribution. The two components are optimized to maximize a variational lower bound of the log-likelihood function, which is achieved by alternating between an E-step and an M-step, where each step fixes one component and updates the other. This separate training framework significantly improves the efficiency of GLEM, allowing it to scale to real-world TAGs. In each step, one component provides pseudo-labels of nodes for the other component to mimic. In this way, GLEM effectively distills the local textual information and the global structural information into both components, and thus achieves better effectiveness in node classification. We conduct extensive experiments on three benchmark datasets to demonstrate the superior performance of GLEM. Notably, by leveraging the merits of both graph learning and language learning, GLEM-LM achieves performance on par with or even better than existing GNN models, and GLEM-GNN achieves new state-of-the-art results on ogbn-arxiv, ogbn-products, and ogbn-papers100M.

2 RELATED WORK

Representation learning on text-attributed graphs (TAGs) (Yang et al., 2021) has been attracting growing attention in graph machine learning, and one of the most important problems is node classification. The problem can be directly formalized as a text representation learning task, where the goal is to use the text feature of each node for learning. Early works resort to convolutional neural networks (Kim, 2014; Shen et al., 2014) or recurrent neural networks (Tai et al., 2015). Recently, with the superior performance of transformers (Vaswani et al., 2017) and pre-trained language models (Devlin et al., 2019; Yang et al., 2019), LMs have become the go-to model for encoding contextual semantics in sentences for text representation learning. At the same time, the problem can also be viewed as a graph learning task, which has been extensively studied with graph neural networks (GNNs) (Kipf & Welling, 2017; Velickovic et al., 2018; Xu et al., 2019; Zhang & Chen, 2018; Teru et al., 2020). A GNN takes numerical features as input and learns node representations by transforming representations and aggregating them according to the graph structure. Because they consider both node attributes and graph structure, GNNs have shown strong performance in various applications, including node classification and link prediction. Nevertheless, LMs and GNNs each focus on only part of the observed information (i.e., textual or structural) for representation learning, leaving room for improvement.

There have been some recent efforts to combine GNNs and LMs so as to enjoy the merits of both models. One widely adopted approach is to encode the texts of nodes with a fixed LM and then treat the LM embeddings as features to train a GNN for message passing. Recently, a few methods have proposed to apply domain-adaptive pre-training (Gururangan et al., 2020) on TAGs and to predict the graph structure using LMs (Chien et al., 2022; Yasunaga et al., 2022), providing better LM embeddings for the GNN. Despite better results, these LM embeddings still remain fixed during the GNN training phase. Such a separated training paradigm ensures scalability, as the number of trainable parameters in GNNs is relatively small. However, its performance is hindered by a semantic modeling process that is irrelevant to both the task and the graph topology. To overcome these limitations, several works (Zhu et al., 2021; Li et al., 2021a; Yang et al., 2021; Bi et al., 2021; Pang et al., 2022) co-train GNNs and LMs under a joint learning framework. However, such co-training approaches suffer from severe scalability issues, as all the neighbors need to be encoded by language models from scratch, incurring huge extra computation costs. In practice, these models restrict message passing to very few sampled first-hop neighbors (e.g., 3 (Li et al., 2021a; Zhu et al., 2021)), resulting in severe information loss.

In summary, existing methods for fusing LMs and GNNs suffer from either unsatisfactory results or poor scalability. In contrast, GLEM uses a pseudo-likelihood variational framework to integrate an LM and a GNN, which allows the two components to be trained separately and therefore scales well. GLEM also encourages collaboration between the two components, so that it can use both textual and structural semantics for representation learning, and thus achieves better effectiveness.

Besides, there are also some efforts that use GNNs for text classification. Unlike our approach, which uses GNNs to model the observed structural relationships between nodes, these methods assume that graph structures are unobserved. They apply GNNs on synthetic graphs built from the words in a text (Huang et al., 2019; Hu et al., 2019; Zhang et al., 2020) or from co-occurrence patterns between texts and words (Huang et al., 2019; Liu et al., 2020a). Since the structure is observed in node classification on text-attributed graphs, these methods are not well suited to the problem studied here.

Lastly, our work is related to GMNN (Qu et al., 2019), which also uses a pseudo-likelihood variational framework for node representation learning. However, GMNN combines two GNNs for general graphs and does not consider modeling textual features. Unlike GMNN, GLEM focuses on TAGs, which are more challenging to deal with. Moreover, GLEM fuses a GNN and an LM, which better leverages both the structural and the textual features in a TAG, and thus achieves state-of-the-art results on several benchmarks.

3 BACKGROUND

In this paper, we focus on learning representations for nodes in TAGs, where we take node classification as an example for illustration. Before diving into the details of our proposed GLEM, we start with presenting a few basic concepts, including the definition of TAGs and how LMs and GNNs can be used for node classification in TAGs.

3.1 TEXT-ATTRIBUTED GRAPH

Formally, a TAG $\mathcal{G}_S = (V, A, \mathbf{s}_V)$ is composed of nodes $V$ and their adjacency matrix $A \in \mathbb{R}^{|V| \times |V|}$, where each node $n \in V$ is associated with a sequential text feature (sentence) $\mathbf{s}_n$. In this paper, we study the problem of node classification on TAGs. Given the labels $\mathbf{y}_L$ of a few labeled nodes $L \subset V$, the goal is to predict the labels $\mathbf{y}_U$ of the remaining unlabeled nodes $U = V \setminus L$.
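
As a minimal sketch of this definition, the Python container below stores the texts $\mathbf{s}_V$, the adjacency structure $A$ (as neighbor sets rather than a dense $|V| \times |V|$ matrix), and the observed labels $\mathbf{y}_L$, and derives $U = V \setminus L$. The class and field names are hypothetical and are not taken from any released GLEM code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class TextAttributedGraph:
    """Hypothetical container for a TAG G_S = (V, A, s_V) with partial labels y_L.

    Nodes are the integers 0..|V|-1. The adjacency matrix A is stored sparsely
    as neighbor sets, which is how large graphs are usually kept in memory.
    """
    texts: List[str]                                      # s_n for every node n in V
    neighbors: Dict[int, Set[int]]                        # nonzero entries of row n of A
    labels: Dict[int, int] = field(default_factory=dict)  # y_L: labeled node -> class

    @property
    def nodes(self) -> Set[int]:
        return set(range(len(self.texts)))

    @property
    def labeled(self) -> Set[int]:
        return set(self.labels)

    @property
    def unlabeled(self) -> Set[int]:
        # U = V \ L: the nodes whose labels y_U must be predicted
        return self.nodes - self.labeled

    def dense_adjacency(self) -> List[List[int]]:
        """Materialize A as a |V| x |V| 0/1 matrix (only sensible for tiny graphs)."""
        size = len(self.texts)
        matrix = [[0] * size for _ in range(size)]
        for u, nbrs in self.neighbors.items():
            for v in nbrs:
                matrix[u][v] = 1
        return matrix


graph = TextAttributedGraph(
    texts=["graph neural networks", "pre-trained language models", "variational EM"],
    neighbors={0: {1, 2}, 1: {0}, 2: {0}},
    labels={0: 1},
)
print(graph.unlabeled)             # {1, 2}
print(graph.dense_adjacency()[0])  # [0, 1, 1]
```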

Intuitively, node labels can be predicted by using either the textual information or the structural information, and representative methods are language models (LMs) and graph neural networks (GNNs) respectively. Next, we introduce the high-level ideas of both methods.

3.2 LANGUAGE MODELS FOR NODE CLASSIFICATION

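Language models aim to use the sentence $\mathbf{s}_n$ of each node $n$ for label prediction, which turns node classification into a text classification task (Socher et al., 2013; Williams et al., 2018). The workflow of LMs can be characterized as follows:

$$
p(\mathbf{y}_n \mid \mathbf{s}_n) = \mathrm{Cat}\big(\mathbf{y}_n \mid \mathrm{softmax}(\mathrm{MLP}_{\theta_2}(\mathbf{h}_n))\big), \qquad \mathbf{h}_n = \mathrm{SeqEnc}_{\theta_1}(\mathbf{s}_n),
$$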

where $\mathrm{SeqEnc}_{\theta_1}$ is a text encoder such as a transformer-based model (Vaswani et al., 2017; Yang et al., 2019), which projects the sentence $\mathbf{s}_n$ into a vector representation $\mathbf{h}_n$. Afterwards, the node label distribution $\mathbf{y}_n$ can be predicted simply by applying an $\mathrm{MLP}_{\theta_2}$ followed by a softmax function to $\mathbf{h}_n$.
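
The two-stage workflow (encode, then classify) can be sketched in plain Python as below. This is a toy under stated assumptions: the bag-of-words `seq_enc` merely stands in for a transformer $\mathrm{SeqEnc}_{\theta_1}$, and the single linear layer `mlp` with hand-picked `weights` stands in for $\mathrm{MLP}_{\theta_2}$; none of these names come from the paper.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def seq_enc(sentence: str, vocab: Sequence[str]) -> List[float]:
    """Toy stand-in for SeqEnc_theta1: bag-of-words counts over a fixed vocabulary.
    A real setup would use a pre-trained transformer encoder instead."""
    tokens = sentence.lower().split()
    return [float(tokens.count(word)) for word in vocab]


def mlp(h: Sequence[float], weights: Sequence[Sequence[float]],
        bias: Sequence[float]) -> List[float]:
    """Toy stand-in for MLP_theta2: a single linear layer mapping h_n to class logits."""
    return [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(weights, bias)]


def lm_label_distribution(sentence, vocab, weights, bias) -> List[float]:
    h_n = seq_enc(sentence, vocab)           # h_n = SeqEnc(s_n)
    return softmax(mlp(h_n, weights, bias))  # softmax(MLP(h_n)): distribution over labels


vocab = ["graph", "language"]
weights = [[2.0, 0.0], [0.0, 2.0]]  # hypothetical parameters theta2 for 2 classes
bias = [0.0, 0.0]
probs = lm_label_distribution("Graph neural networks on a graph", vocab, weights, bias)
print([round(p, 3) for p in probs])  # [0.982, 0.018]
```

Note that each node is classified from its own sentence only; nothing in this pipeline looks at neighbors, which is exactly the limitation discussed next.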

Leveraging deep architectures and pre-training on large-scale corpora, LMs achieve impressive results on many text classification tasks (Devlin et al., 2019; Liu et al., 2019). Nevertheless, the memory cost is often high due to large model sizes. Also, for each node, LMs solely use its own sentence for classification, and the interactions between nodes are ignored, leading to sub-optimal results especially on nodes with insufficient text features.

3.3 GRAPH NEURAL NETWORKS FOR NODE CLASSIFICATION

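Graph neural networks approach node classification by using the structural interactions between nodes. Specifically, GNNs leverage a message-passing mechanism, which for layers $l = 1, \dots, K$ can be described as:

$$
\mathbf{h}_n^{(l)} = \sigma\Big(\mathrm{AGG}_{\phi}\big(\mathbf{h}_n^{(l-1)}, \{\mathrm{MSG}_{\phi}(\mathbf{h}_m^{(l-1)}) : m \in \mathrm{NB}(n)\}\big)\Big), \qquad p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A) = \mathrm{Cat}\big(\mathbf{y}_n \mid \mathrm{softmax}(\mathbf{h}_n^{(K)})\big),
$$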

where $\phi$ denotes the parameters of the GNN, $\sigma$ is an activation function, $\mathrm{MSG}_{\phi}(\cdot)$ and $\mathrm{AGG}_{\phi}(\cdot)$ are the message and aggregation functions respectively, and $\mathrm{NB}(n)$ denotes the neighbors of node $n$. Given the initial text encodings $\mathbf{h}_n^{(0)}$, e.g., pre-trained LM embeddings, a GNN iteratively updates them by applying the message and aggregation functions, so that the learned node representations and predictions capture the structural interactions between nodes.
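
A minimal sketch of one message-passing layer follows, assuming one concrete choice among many: $\mathrm{MSG}_{\phi}$ is the identity, $\mathrm{AGG}_{\phi}$ is an element-wise mean over $\mathrm{NB}(n)$ combined with the node's own state (GraphSAGE-style), and $\sigma$ is ReLU. The scalar weights `w_self` and `w_nbr` are hypothetical stand-ins for $\phi$.

```python
from typing import Dict, List


def relu(x: List[float]) -> List[float]:
    return [max(0.0, v) for v in x]


def gnn_layer(h: Dict[int, List[float]], neighbors: Dict[int, List[int]],
              w_self: float, w_nbr: float) -> Dict[int, List[float]]:
    """One message-passing layer computing h^(l) from h^(l-1).

    MSG is taken to be the identity (each neighbor m sends h_m), AGG is the
    element-wise mean over NB(n), and sigma is ReLU applied to a weighted sum
    of the node's own state and the aggregate. The scalar weights w_self and
    w_nbr are hypothetical stand-ins for the parameters phi.
    """
    out = {}
    for n, h_n in h.items():
        nbrs = neighbors.get(n, [])
        if nbrs:
            agg = [sum(h[m][d] for m in nbrs) / len(nbrs) for d in range(len(h_n))]
        else:
            agg = [0.0] * len(h_n)  # isolated node: nothing to aggregate
        out[n] = relu([w_self * a + w_nbr * b for a, b in zip(h_n, agg)])
    return out


# h^(0): e.g. pre-trained LM embeddings of each node's text (toy 2-d values here)
h0 = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 1.0]}
neighbors = {0: [1, 2], 1: [0], 2: [0]}
h1 = gnn_layer(h0, neighbors, w_self=0.5, w_nbr=0.5)
print(h1)  # {0: [0.5, 0.5], 1: [0.5, 0.5], 2: [0.5, 0.5]}
```

Stacking $K$ such layers lets information travel $K$ hops; a softmax over the last layer's output would give the label distribution.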

With the message-passing mechanism, GNNs can effectively leverage the structural information for node classification. Despite their good performance on many graphs, GNNs cannot fully exploit the textual information, and thus often perform poorly on nodes with few neighbors.

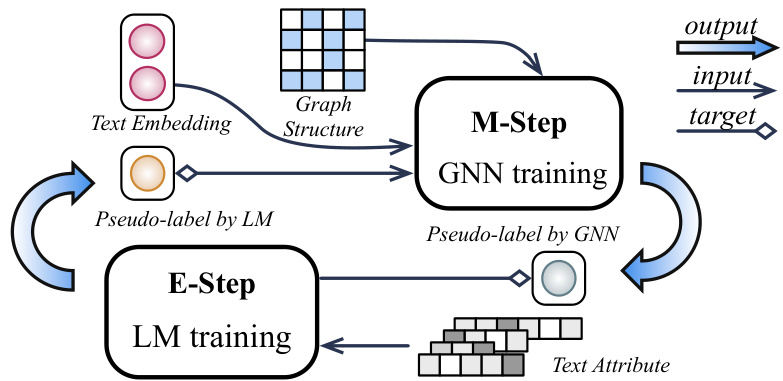

Figure 1: The proposed GLEM framework trains the GNN and the LM separately in a variational EM framework. In the E-step, an LM is trained to predict both the gold labels and the GNN-predicted pseudo-labels. In the M-step, a GNN takes the LM embeddings as input and is trained to predict both the gold labels and the LM-inferred pseudo-labels.

4 METHODOLOGY

In this section, we introduce our proposed approach, which combines a GNN and an LM for node representation learning in TAGs. Existing methods either suffer from scalability issues or perform poorly in downstream applications such as node classification. Therefore, we seek an approach that offers both good scalability and high model capacity.

Toward this goal, we take node classification as an example and propose GLEM. GLEM leverages a variational EM framework. The LM uses the text information of each individual node to predict its label, which essentially models the label distribution conditioned on the local text attribute. The GNN, in contrast, leverages the text and label information of surrounding nodes for label prediction, which characterizes the global conditional label distribution. The two modules are optimized by alternating between an E-step and an M-step. In the E-step, we fix the GNN and let the LM mimic the labels inferred by the GNN, allowing the global knowledge learned by the GNN to be distilled into the LM. In the M-step, we fix the LM and optimize the GNN using the node representations learned by the LM as features and the node labels inferred by the LM as targets. In this way, the GNN can effectively capture the global correlations of nodes for precise label prediction. With such a framework, the LM and the GNN can be trained separately, leading to better scalability. Meanwhile, the LM and the GNN are encouraged to benefit each other without sacrificing model performance.

4.1 THE PSEUDO-LIKELIHOOD VARIATIONAL FRAMEWORK

Our approach is based on a pseudo-likelihood variational framework, which offers a principled and flexible formalization for model design. More specifically, the framework aims to maximize the log-likelihood of the observed node labels, i.e., $\log p(\mathbf{y}_L \mid \mathbf{s}_V, A)$. Directly optimizing this function is often hard because of the unobserved node labels $\mathbf{y}_U$, so the framework instead optimizes the evidence lower bound (ELBO):

$$
\log p(\mathbf{y}_L \mid \mathbf{s}_V, A) \ge \mathbb{E}_{q(\mathbf{y}_U \mid \mathbf{s}_U)}\big[\log p(\mathbf{y}_L, \mathbf{y}_U \mid \mathbf{s}_V, A) - \log q(\mathbf{y}_U \mid \mathbf{s}_U)\big],
$$

where $q(\mathbf{y}_U \mid \mathbf{s}_U)$ is a variational distribution, and the above inequality holds for any $q$. The ELBO can be optimized by alternating between optimizing the distribution $q$ (i.e., the E-step) and the distribution $p$ (i.e., the M-step). In the E-step, we update $q$ to minimize the KL divergence between $q(\mathbf{y}_U \mid \mathbf{s}_U)$ and $p(\mathbf{y}_U \mid \mathbf{s}_V, A, \mathbf{y}_L)$, which tightens the lower bound. In the M-step, we then update $p$ to maximize the following pseudo-likelihood (Besag, 1975) function:

$$
\mathbb{E}_{q(\mathbf{y}_U \mid \mathbf{s}_U)}\big[\log p(\mathbf{y}_L, \mathbf{y}_U \mid \mathbf{s}_V, A)\big] \approx \mathbb{E}_{q(\mathbf{y}_U \mid \mathbf{s}_U)}\Big[\sum_{n \in V} \log p(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_{V \setminus n})\Big].
$$
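
A tiny worked example can make the bound tangible. The sketch below uses a hypothetical joint distribution over one labeled and one unlabeled binary node (the numbers are illustrative only). It checks that the ELBO is strictly below $\log p(\mathbf{y}_L)$ for a uniform $q$ and equals it when $q$ is the exact posterior $p(\mathbf{y}_U \mid \mathbf{y}_L)$; in general, the gap is exactly $\mathrm{KL}(q \,\|\, p(\mathbf{y}_U \mid \mathbf{y}_L))$, which is what the E-step shrinks.

```python
import math

# Hypothetical joint p(y_L, y_U | s_V, A) over one labeled and one unlabeled
# binary node, keyed by (y_L, y_U). The numbers are illustrative only.
joint = {(0, 0): 0.30, (0, 1): 0.10, (1, 0): 0.15, (1, 1): 0.45}
y_obs = 1  # the observed label y_L


def log_likelihood(y_l):
    # log p(y_L) = log sum_{y_U} p(y_L, y_U): marginalize out the unobserved label
    return math.log(sum(p for (yl, _), p in joint.items() if yl == y_l))


def elbo(y_l, q):
    # E_{q(y_U)}[log p(y_L, y_U) - log q(y_U)], with q given as {y_U: probability}
    return sum(q_u * (math.log(joint[(y_l, y_u)]) - math.log(q_u))
               for y_u, q_u in q.items() if q_u > 0)


marginal = math.exp(log_likelihood(y_obs))
posterior = {y_u: joint[(y_obs, y_u)] / marginal for y_u in (0, 1)}  # p(y_U | y_L)
uniform = {0: 0.5, 1: 0.5}

print(round(log_likelihood(y_obs), 4))   # -0.5108  (= log 0.6)
print(round(elbo(y_obs, uniform), 4))    # -0.6547: strictly smaller, the bound is loose
print(round(elbo(y_obs, posterior), 4))  # -0.5108: the bound is tight at q = posterior
```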

The pseudo-likelihood variational framework yields a formalization with two distributions for maximizing the data likelihood. The two distributions are trained via separate E- and M-steps, so end-to-end training is no longer needed, which leads to better scalability and naturally fits our scenario. Next, we describe how we apply the framework to node classification in TAGs by instantiating the $p$ and $q$ distributions with GNNs and LMs, respectively.
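
The pseudo-likelihood (Besag, 1975) replaces the intractable joint over all labels with a sum of per-node conditionals, each conditioned on every *other* label. The sketch below computes it for a hypothetical homophily model on a 3-node path graph; the `conditional` function is an illustrative stand-in for the GNN $p_{\phi}$, not the model used in the paper.

```python
import math
from typing import Dict, List


def conditional(n: int, labels: Dict[int, int], neighbors: Dict[int, List[int]],
                num_classes: int, coupling: float = 1.0) -> List[float]:
    """Hypothetical p(y_n | y_{V minus n}, A): a softmax over classes of
    coupling * (number of neighbors of n carrying that class).
    It stands in for the GNN p_phi and conditions on all *other* labels."""
    scores = [coupling * sum(1 for m in neighbors[n] if labels[m] == k)
              for k in range(num_classes)]
    shift = max(scores)
    z = sum(math.exp(s - shift) for s in scores)
    return [math.exp(s - shift) / z for s in scores]


def pseudo_log_likelihood(labels: Dict[int, int], neighbors: Dict[int, List[int]],
                          num_classes: int, coupling: float = 1.0) -> float:
    # Sum over n in V of log p(y_n | y_{V minus n}): no global normalizer is needed
    return sum(
        math.log(conditional(n, labels, neighbors, num_classes, coupling)[y_n])
        for n, y_n in labels.items()
    )


path = {0: [1], 1: [0, 2], 2: [1]}  # a 3-node path graph 0 - 1 - 2
smooth = pseudo_log_likelihood({0: 0, 1: 0, 2: 0}, path, num_classes=2)
mixed = pseudo_log_likelihood({0: 0, 1: 1, 2: 0}, path, num_classes=2)
print(smooth > mixed)  # True: labelings that agree with neighbors score higher
```

Each term only needs a local softmax, which is why a GNN that outputs per-node label distributions fits this objective naturally.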

4.2 PARAMETERIZATION

The distribution $q$ uses the text information $\mathbf{s}_U$ to define the node label distribution. In GLEM, we use a mean-field form, assuming that the labels of different nodes are independent and that the label of each node depends only on its own text, which yields the following factorization:

$$
q(\mathbf{y}_U \mid \mathbf{s}_U) = \prod_{n \in U} q_{\theta}(\mathbf{y}_n \mid \mathbf{s}_n).
$$

As introduced in Section 3.2, each term $q_{\theta}(\mathbf{y}_n \mid \mathbf{s}_n)$ can be modeled by a transformer-based LM $q_{\theta}$ parameterized by $\theta$, which effectively models fine-grained token interactions through the attention mechanism (Vaswani et al., 2017).
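
Under the mean-field form, the log-probability of a full labeling of $U$ is just a sum of per-node LM log-probabilities. The minimal sketch below assumes the LM outputs are already available as a dict of class probabilities (hypothetical values). It also checks that the factorized joint is properly normalized over all assignments.

```python
import math
from itertools import product
from typing import Dict, List


def mean_field_log_prob(assignment: Dict[int, int],
                        per_node_probs: Dict[int, List[float]]) -> float:
    """log q(y_U | s_U) under the mean-field factorization:
    sum over n in U of log q_theta(y_n | s_n). per_node_probs maps each
    unlabeled node to the LM's predicted class probabilities (hypothetical)."""
    return sum(math.log(per_node_probs[n][y]) for n, y in assignment.items())


per_node = {1: [0.8, 0.2], 2: [0.3, 0.7]}  # q_theta(y_n | s_n) for U = {1, 2}
nodes = sorted(per_node)

# Because every factor is normalized, the product over all joint assignments sums to 1.
total = sum(math.exp(mean_field_log_prob(dict(zip(nodes, ys)), per_node))
            for ys in product(range(2), repeat=len(nodes)))
print(round(total, 10))  # 1.0
print(round(math.exp(mean_field_log_prob({1: 0, 2: 1}, per_node)), 2))  # 0.56
```

The independence assumption is what lets each node's LM term be trained on its own text, with no neighbor texts in memory.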

On the other hand, the distribution $p$ defines a conditional distribution $p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_{V \setminus n})$, which leverages the node features $\mathbf{s}_V$, the graph structure $A$, and the other node labels $\mathbf{y}_{V \setminus n}$ to characterize the label distribution of each node $n$. Such a formalization can be naturally captured by a GNN through the message-passing mechanism. Thus, we model $p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_{V \setminus n})$ as a GNN $p_{\phi}$ parameterized by $\phi$ to effectively model the structural interactions between nodes. Note that the GNN $p_{\phi}$ takes the node texts $\mathbf{s}_V$ as input and outputs the node label distribution. However, node texts are discrete variables that cannot be used directly by the GNN. Thus, in practice, we first encode the node texts with the LM $q_{\theta}$ and then use the resulting embeddings as a surrogate for the node texts in the GNN $p_{\phi}$.

In the following sections, we further explain how we optimize the LM $q_{\theta}$ and the GNN $p_{\phi}$ to let them collaborate with each other.

4.3 E-STEP: LM OPTIMIZATION

In the E-step, we fix the GNN and aim to update the LM to maximize the evidence lower bound. By doing this, the global semantic correlations between different nodes can be distilled into the LM.

Formally, maximizing the evidence lower bound with respect to the LM is equivalent to minimizing the KL divergence between the variational distribution and the posterior distribution, i.e., $\mathrm{KL}\big(q_{\theta}(\mathbf{y}_U \mid \mathbf{s}_U) \,\|\, p_{\phi}(\mathbf{y}_U \mid \mathbf{s}_V, A, \mathbf{y}_L)\big)$. However, directly optimizing this KL divergence is nontrivial, as it depends on the entropy of $q_{\theta}(\mathbf{y}_U \mid \mathbf{s}_U)$, which is hard to handle. To overcome this challenge, we follow the wake-sleep algorithm (Hinton et al., 1995) and minimize the reverse KL divergence, yielding the following objective function to maximize with respect to the LM $q_{\theta}$:

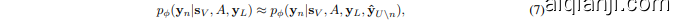

which is more tractable, as we no longer need to consider the entropy of $q_{\theta}(\mathbf{y}_U \mid \mathbf{s}_U)$. The remaining difficulty lies in computing the distribution $p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_L)$. Recall that the original GNN defines the distribution $p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_{V \setminus n})$, which predicts the label distribution of a node $n$ from the labels $\mathbf{y}_{V \setminus n}$ of all other nodes. In the distribution $p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_L)$, however, we condition only on the observed labels $\mathbf{y}_L$, and the labels of the other nodes are unspecified, so we cannot compute the distribution directly with the GNN. To solve this problem, we annotate all the unlabeled nodes in the graph with pseudo-labels predicted by the LM, which lets us approximate the distribution as follows:

$$
p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_L) \approx p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_L, \hat{\mathbf{y}}_{U \setminus n}),
$$

where $\hat{\mathbf{y}}_{U \setminus n} = \{\hat{\mathbf{y}}_{n'}\}_{n' \in U \setminus n}$, with each $\hat{\mathbf{y}}_{n'} \sim q_{\theta}(\mathbf{y}_{n'} \mid \mathbf{s}_{n'})$.
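
The pseudo-labeling step can be sketched as follows: keep the gold labels $\mathbf{y}_L$, draw $\hat{\mathbf{y}}_{n'} \sim q_{\theta}(\mathbf{y}_{n'} \mid \mathbf{s}_{n'})$ for every other node, and, when predicting node $n$, hand the GNN all labels except $n$'s own. The function names and the one-hot LM outputs below are hypothetical, chosen so that the sampled result is deterministic.

```python
import random
from typing import Dict, List


def sample_pseudo_labels(lm_probs: Dict[int, List[float]], gold_labels: Dict[int, int],
                         rng: random.Random) -> Dict[int, int]:
    """Complete the labeling y_L together with y_hat_U.

    Gold labels y_L are kept as-is; every other node n receives a pseudo-label
    y_hat_n drawn from the LM's predicted distribution q_theta(y_n | s_n).
    """
    labeling = dict(gold_labels)
    for n, probs in lm_probs.items():
        if n in gold_labels:
            continue  # observed labels are never overwritten
        labeling[n] = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    return labeling


def context_for(n: int, labeling: Dict[int, int]) -> Dict[int, int]:
    # Labels the GNN conditions on when predicting node n: y_L and y_hat_{U minus n}
    return {m: y for m, y in labeling.items() if m != n}


lm_probs = {0: [0.5, 0.5], 1: [0.0, 1.0], 2: [1.0, 0.0]}  # hypothetical LM outputs
labeling = sample_pseudo_labels(lm_probs, gold_labels={0: 0}, rng=random.Random(0))
print(labeling)                  # {0: 0, 1: 1, 2: 0}
print(context_for(1, labeling))  # {0: 0, 2: 0}
```

Sampling (rather than taking the arg-max) follows the $\hat{\mathbf{y}}_{n'} \sim q_{\theta}$ notation; a seeded `random.Random` keeps runs reproducible.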

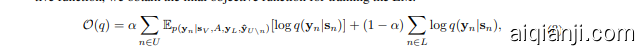

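In addition, the labeled nodes can also be used to train the LM. Combining them with the above objective function, we obtain the final objective function for training the LM:

$$
\mathcal{O}(q) = \alpha \sum_{n \in U} \mathbb{E}_{p_{\phi}(\mathbf{y}_n \mid \mathbf{s}_V, A, \mathbf{y}_L, \hat{\mathbf{y}}_{U \setminus n})}\big[\log q_{\theta}(\mathbf{y}_n \mid \mathbf{s}_n)\big] + (1 - \alpha) \sum_{n \in L} \log q_{\theta}(\mathbf{y}_n \mid \mathbf{s}_n),
$$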

where $\alpha$ is a hyperparameter. Intuitively, the second term $\sum_{n \in L} \log q_{\theta}(\mathbf{y}_n \mid \mathbf{s}_n)$ is a supervised objective that uses the given labeled nodes for training. Meanwhile, the first term can be viewed as a knowledge distillation process, which teaches the LM by forcing it to match the label distributions that the GNN predicts from neighborhood information.
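
A minimal sketch of this E-step objective follows, under two assumptions that are labeled in the code: the terms are weighted as $\alpha$ and $1 - \alpha$, and the GNN's predicted distribution is used as a soft target rather than a sampled hard label. Because $\mathbb{E}_{p}[\log q] = -H(p) - \mathrm{KL}(p \,\|\, q)$ and $H(p)$ does not depend on the LM parameters, maximizing the distillation term is the same as minimizing the reverse KL divergence.

```python
import math
from typing import Dict, List


def e_step_objective(lm_probs: Dict[int, List[float]], gnn_probs: Dict[int, List[float]],
                     gold_labels: Dict[int, int], alpha: float) -> float:
    """Sketch of the E-step objective for the LM q_theta (to be maximized).

    distill:    sum over unlabeled n of E_{p_phi(y_n | ...)}[log q_theta(y_n | s_n)],
                i.e. a soft cross-entropy against the GNN's predicted distribution
                (assumption: soft targets rather than sampled hard labels)
    supervised: sum over labeled n of log q_theta(y_n | s_n)
    Assumption: the two terms are weighted as alpha and (1 - alpha).
    """
    distill = sum(
        sum(p_k * math.log(q_k) for p_k, q_k in zip(gnn_probs[n], lm_probs[n]) if p_k > 0)
        for n in gnn_probs if n not in gold_labels
    )
    supervised = sum(math.log(lm_probs[n][y]) for n, y in gold_labels.items())
    return alpha * distill + (1 - alpha) * supervised


gold = {0: 0}
gnn = {1: [0.1, 0.9]}                         # hypothetical GNN prediction for node 1
lm_agree = {0: [0.9, 0.1], 1: [0.2, 0.8]}     # LM that follows the GNN on node 1
lm_disagree = {0: [0.9, 0.1], 1: [0.8, 0.2]}
print(e_step_objective(lm_agree, gnn, gold, alpha=0.5) >
      e_step_objective(lm_disagree, gnn, gold, alpha=0.5))  # True
```

In a real setup, the returned value (negated) would be the loss back-propagated through the LM.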

4.4 M-STEP: GNN OPTIMIZATION

In the M-step, we fix the LM $q_{\theta}$ and optimize the GNN $p_{\phi}$ to maximize the pseudo-likelihood introduced in equation 4.

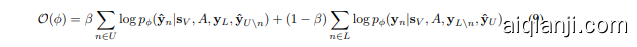

More specifically, we use the LM to generate node representations $\mathbf{h}_V$ for all nodes and feed them into the GNN as text features for message passing. In addition, note that equation 4 relies on an expectation with respect to $q_{\theta}$, which can be approximated by drawing a sample $\hat{\mathbf{y}}_U$ from $q_{\theta}(\mathbf{y}_U \mid \mathbf{s}_U)$. In other words, we use the LM $q_{\theta}$ to predict a pseudo-label $\hat{\mathbf{y}}_n$ for each unlabeled node $n \in U$ and combine these labels $\{\hat{\mathbf{y}}_n\}_{n \in U}$ into $\hat{\mathbf{y}}_U$. With both the node representations and the pseudo-labels from the LM $q_{\theta}$, the pseudo-likelihood can be rewritten as follows:

$$
\mathcal{O}(\phi) = \beta \sum_{n \in U} \log p_{\phi}(\hat{\mathbf{y}}_n \mid \mathbf{h}_V, A, \mathbf{y}_L, \hat{\mathbf{y}}_{U \setminus n}) + (1 - \beta) \sum_{n \in L} \log p_{\phi}(\mathbf{y}_n \mid \mathbf{h}_V, A, \mathbf{y}_{L \setminus n}, \hat{\mathbf{y}}_U),
$$

where $\beta$ is a hyperparameter that balances the weights of the two terms. Again, the first term can be viewed as a knowledge distillation process, which injects the knowledge captured by the LM into the GNN via all the pseudo-labels. The second term is simply a supervised loss that uses the observed node labels for model training.
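
The weighting can be sketched as below. This is a hypothetical illustration that assumes the terms are weighted as $\beta$ and $1 - \beta$, and that the GNN's per-node label distributions (computed from $\mathbf{h}_V$, $A$, and the labels of the other nodes) are already available. How those distributions are produced is outside the scope of the sketch.

```python
import math
from typing import Dict, List


def m_step_objective(gnn_probs: Dict[int, List[float]], pseudo_labels: Dict[int, int],
                     gold_labels: Dict[int, int], beta: float) -> float:
    """Sketch of the M-step objective for the GNN p_phi (to be maximized).

    gnn_probs[n] is the GNN's label distribution for node n, computed from the
    LM embeddings h_V, the graph A and the labels of the other nodes (gold
    labels plus LM pseudo-labels). Assumption: weights beta and (1 - beta).
    """
    # Distillation: fit the LM pseudo-labels y_hat_n on unlabeled nodes
    distill = sum(math.log(gnn_probs[n][y]) for n, y in pseudo_labels.items())
    # Supervision: fit the observed labels y_n on labeled nodes
    supervised = sum(math.log(gnn_probs[n][y]) for n, y in gold_labels.items())
    return beta * distill + (1 - beta) * supervised


gnn_probs = {0: [0.7, 0.3], 1: [0.4, 0.6]}  # hypothetical GNN outputs
objective = m_step_objective(gnn_probs, pseudo_labels={1: 1}, gold_labels={0: 0}, beta=0.5)
print(round(objective, 4))  # -0.4338  (= 0.5 * log 0.6 + 0.5 * log 0.7)
```

Setting `beta` close to 1 trusts the LM pseudo-labels more; close to 0 recovers plain supervised GNN training.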

Finally, the workflow of the EM algorithm is summarized in Fig. 1. The optimization process iteratively alternates between the E-step and the M-step. In the E-step, the pseudo-labels predicted by the GNN, together with the observed labels, are used for LM training. In the M-step, the LM provides both text embeddings and pseudo-labels for the GNN, which serve as the input and the target for label prediction, respectively. Once trained, both the LM from the E-step (denoted GLEM-LM) and the GNN from the M-step (denoted GLEM-GNN) can be used for node label prediction.
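
The alternating loop can be sketched as a small driver that takes the two training routines as callables. Everything here is hypothetical: the starting order (an E-step in which the LM sees only gold labels), the function names, and the toy stand-ins, which merely mix probability vectors instead of training real models, on a 3-node path graph.

```python
from typing import Callable, Dict, List, Tuple

Probs = Dict[int, List[float]]


def glem_em(train_lm: Callable[[Dict[int, int], Probs], Probs],
            train_gnn: Callable[[Probs, Dict[int, int]], Probs],
            gold_labels: Dict[int, int], num_rounds: int) -> Tuple[Probs, Probs]:
    """Alternate the E-step and the M-step for `num_rounds` rounds.

    train_lm(gold, gnn_preds) -> updated LM predictions (E-step: GNN fixed)
    train_gnn(lm_preds, gold) -> updated GNN predictions (M-step: LM fixed)
    """
    lm_preds: Probs = {}
    gnn_preds: Probs = {}  # empty before the first M-step: the LM sees gold labels only
    for _ in range(num_rounds):
        lm_preds = train_lm(gold_labels, gnn_preds)
        gnn_preds = train_gnn(lm_preds, gold_labels)
    return lm_preds, gnn_preds


# Toy stand-ins (hypothetical) on the path graph 0 - 1 - 2 with two classes.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
text_prior = {0: [0.9, 0.1], 1: [0.5, 0.5], 2: [0.8, 0.2]}  # text-only beliefs


def toy_train_lm(gold, gnn_preds):
    # "Fine-tune" by moving halfway from the text prior toward the GNN pseudo-labels
    return {n: [0.5 * a + 0.5 * b for a, b in zip(prior, gnn_preds.get(n, prior))]
            for n, prior in text_prior.items()}


def toy_train_gnn(lm_preds, gold):
    # Gold nodes keep one-hot labels; others average their neighbors' LM predictions
    return {n: ([1.0 if k == gold[n] else 0.0 for k in range(2)] if n in gold else
                [sum(lm_preds[m][k] for m in nbrs) / len(nbrs) for k in range(2)])
            for n, nbrs in neighbors.items()}


lm_preds, gnn_preds = glem_em(toy_train_lm, toy_train_gnn, gold_labels={0: 0}, num_rounds=2)
print(round(lm_preds[1][0], 3))  # 0.675: node 1 was 50/50 from its text alone
```

The toy shows the intended interaction: node 1's text is uninformative, but after one round the GNN's neighborhood evidence is distilled back into the "LM" predictions.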

5 EXPERIMENTS

In this section, we conduct experiments to evaluate the proposed GLEM framework under two settings. The first is transductive node classification: given a few labeled nodes in a TAG, we aim to classify the remaining nodes. In addition, we consider a structure-free inductive setting, where the goal is to transfer models trained on labeled nodes to unseen nodes, for which we observe only the text attributes without knowing their connected neighbors.
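One way to make the difference between the two settings concrete is to look at which edges a model may use. The sketch below is a hypothetical helper, not part of GLEM: in the transductive view every edge stays visible and only labels are hidden, while in the structure-free inductive view every edge touching an unseen node is dropped, so those nodes are reachable only through their text.

```python
def build_graph_view(edges, unseen_nodes, setting):
    """Return the edge list a model is allowed to use (hypothetical helper).

    'transductive': all nodes and edges are visible; only labels are hidden.
    'inductive': structure-free; edges incident to unseen nodes are removed,
    so unseen nodes are observed only through their text attributes.
    """
    if setting == "transductive":
        return list(edges)
    if setting == "inductive":
        unseen = set(unseen_nodes)
        return [(u, v) for u, v in edges if u not in unseen and v not in unseen]
    raise ValueError(f"unknown setting: {setting}")


edges = [(0, 1), (1, 2), (2, 3)]
transductive_edges = build_graph_view(edges, {3}, "transductive")
inductive_edges = build_graph_view(edges, {3}, "inductive")
```

In the inductive view, node 3 becomes isolated, so a model that predicts its label can only rely on its text.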

5.1 EXPERIMENTAL SETUP

Datasets. We use three TAG node classification benchmarks in our experiments: ogbn-arxiv, ogbn-products, and ogbn-papers100M (Hu et al., 2020). The statistics of these datasets are shown in Table 1.

Compared Methods. We compare GLEM-LM and GLEM-GNN against LMs, GNNs, and methods that combine both. For language models, we apply DeBERTa (He et al., 2021) to our setting by fine-tuning it on the labeled nodes, and denote it as LM-Ft. For GNNs, we select two well-known models, GCN (Kipf & Welling, 2017) and GraphSAGE (Hamilton et al., 2017), and three top-ranked baselines on the leaderboards: RevGAT (Li et al., 2021b), GAMLP (Zhang et al., 2022), and SAGN (Sun & Wu, 2021). For each GNN, we try different kinds of node features: (1) the raw OGB features, denoted as $\mathbf{X}_{\mathrm{OGB}}$; (2) the LM embeddings inferred by a pre-trained LM, namely the DeBERTa-base checkpoint², denoted as $\mathbf{X}_{\mathrm{PLM}}$; and (3) the GIANT (Chien et al., 2022) features, denoted as $\mathbf{X}_{\mathrm{GIANT}}$.
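As an illustration of how a feature matrix such as $\mathbf{X}_{\mathrm{PLM}}$ can be derived from a frozen LM, the sketch below pools per-token hidden states into one vector per node. This section does not state which pooling is used: mean pooling over non-padding tokens is an assumption here (taking the first-token vector would be an equally plausible choice), the token vectors are assumed to come from the pre-trained DeBERTa-base encoder, and the function names are hypothetical.

```python
def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors whose mask entry is 1 (padding is skipped)."""
    dim = len(token_embeddings[0])
    kept = [vec for vec, m in zip(token_embeddings, attention_mask) if m == 1]
    if not kept:
        raise ValueError("sequence has no unmasked tokens")
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


def build_plm_features(per_node_tokens):
    """Stack one pooled vector per node into X_PLM, preserving node order.

    per_node_tokens: list of (token_embeddings, attention_mask) pairs, one per
    node, assumed to be the frozen LM's last-layer outputs for that node's text.
    """
    return [mean_pool(tokens, mask) for tokens, mask in per_node_tokens]


# One toy node with three tokens, the last of which is padding.
X_PLM = build_plm_features([([[1.0, 2.0], [3.0, 4.0], [9.0, 9.0]], [1, 1, 0])])
```

Because the LM stays frozen here, these features are computed once and reused by every GNN, in contrast to GLEM, where the LM that produces the embeddings is itself updated in the E-step.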

Table 2: Node classification accuracy on the Arxiv and Products datasets (mean ± std %; the best results are in bold and the runner-ups are underlined). $\mathrm{G}\uparrow$ denotes the improvement of GLEM-GNN over the same GNN trained on $\mathbf{X}_{\mathrm{OGB}}$; $\mathrm{L}\uparrow$ denotes the improvement of GLEM-LM over LM-Ft. "+" denotes that additional tricks are implemented in the original GNN models.

| Dataset | #Nodes | #Edges | Avg. Node Degree | Train/Val/Test (%) |
|---|---|---|---|---|
| ogbn-arxiv (Arxiv) | 169,343 | 1,166,243 | 13.7 | 54/18/28 |
| ogbn-products (Products) | 2,449,029 | 61, |