Exploring Video Quality Assessment on User Generated Contents from Aesthetic and Technical Perspectives

Abstract

The rapid increase in user-generated content (UGC) videos calls for effective video quality assessment (VQA) algorithms. However, the objective of the UGC-VQA problem is still ambiguous and can be viewed from two perspectives: the technical perspective, which measures the perception of distortions, and the aesthetic perspective, which relates to preference and recommendation on contents. To understand how these two perspectives affect overall subjective opinions in UGC-VQA, we conduct a large-scale subjective study to collect human quality opinions on the overall quality of videos as well as perceptions from aesthetic and technical perspectives. The collected Disentangled Video Quality Database (DIVIDE-3k) confirms that human quality opinions on UGC videos are universally and inevitably affected by both aesthetic and technical perspectives. In light of this, we propose the Disentangled Objective Video Quality Evaluator (DOVER) to learn the quality of UGC videos based on the two perspectives. DOVER achieves state-of-the-art performance in UGC-VQA with very high efficiency. With the perspective opinions in DIVIDE-3k, we further propose DOVER++, the first approach to provide reliable, clear-cut quality evaluations from a single aesthetic or technical perspective. Code is available at https://github.com/VQAssessment/DOVER.

1. Introduction

Understanding and predicting human quality of experience (QoE) on diverse in-the-wild videos has been a long-standing and unsolved problem. Recent Video Quality Assessment (VQA) studies have gathered a large number of human quality opinions [1–5] on in-the-wild user-generated contents (UGC) and attempted to use machine algorithms [6–8] to learn and predict these opinions, known as the UGC-VQA problem [9]. However, due to the diversity of contents in UGC videos and the lack of reference videos during subjective studies, these human quality opinions are still ambiguous and may relate to different perspectives.

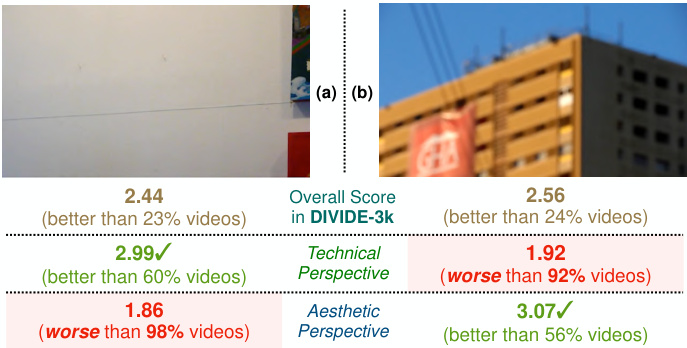

Figure 1. Which video has better quality: a clear video with meaningless contents (a) or a blurry video with meaningful contents (b)? Viewing from different perspectives (aesthetic/technical) may produce different judgments, motivating us to collect DIVIDE-3k, the first UGC-VQA dataset with opinions from multiple perspectives. More multi-perspective quality comparisons in our dataset are shown in supplementary Sec. A.

Conventionally, VQA studies [9–13] are concerned with the technical perspective, aiming at measuring distortions in videos (e.g., blurs, artifacts) and their impact on quality, so as to compare and guide technical systems such as cameras [14, 15], restoration algorithms [16–18] and compression standards [19]. Under this perspective, the video with clear textures in Fig. 1(a) should have notably better quality than the blurry video in Fig. 1(b). On the other hand, several recent studies [2, 6, 7, 20, 21] notice that preferences on non-technical semantic factors (e.g., contents, composition) also affect human quality assessment on UGC videos. Human experience on these factors is usually regarded as the aesthetic perspective [22–27] of quality evaluation. This perspective considers the video in Fig. 1(b) to be of better quality due to its more meaningful contents, and it is preferred for content recommendation systems on platforms such as YouTube or TikTok. However, how aesthetic preference affects final human quality opinions of UGC videos is still debatable [1, 2] and requires further validation.

To investigate the impact of aesthetic and technical perspectives on human quality perception of UGC videos, we conduct the first comprehensive subjective study to collect opinions from both perspectives, as well as overall opinions, on a large number of videos. We also conduct subjective reasoning studies to explicitly gather information on how much each individual's overall quality opinion is influenced by aesthetic and technical perspectives. With 450K opinions in total on 3,590 diverse UGC videos, we construct the first Disentangled Video Quality Database (DIVIDE-3k). After calibrating our study on DIVIDE-3k with existing UGC-VQA subjective studies, we observe that human quality perception on UGC videos is broadly and inevitably affected by both aesthetic and technical perspectives. As a consequence, the two videos in Fig. 1, which differ in quality under each of the two perspectives, could receive similar overall subjective quality scores.

Motivated by the observation from our subjective study, we aim to develop an objective UGC-VQA method that accounts for both aesthetic and technical perspectives. To achieve this, we design the View Decomposition strategy, which divides and conquers aesthetic-related and technical-related information in videos, and propose the Disentangled Objective Video Quality Evaluator (DOVER). DOVER consists of two branches, each dedicated to the effects of one perspective. Specifically, based on the different characteristics of quality issues related to each perspective, we carefully design inductive biases for each branch, including specific inputs, regularization strategies, and pre-training. The two branches are supervised by the overall scores (affected by both perspectives) to adapt to existing UGC-VQA datasets [1, 3, 4, 28–30]. In DIVIDE-3k only, they are additionally supervised by the aesthetic and technical opinions; this variant is denoted as DOVER++. Finally, we obtain the overall quality prediction via a subjectively-inspired fusion of the predictions from the two perspectives. With the subjectively-inspired design, the proposed DOVER and DOVER++ not only reach better accuracy on overall quality prediction but also provide more reliable quality predictions from aesthetic and technical perspectives, catering for practical scenarios.

Our contributions can be summarized as four-fold:

2. Related Works

Databases and Subjective Studies on UGC-VQA. Unlike traditional VQA databases [28, 29, 31, 32], UGC-VQA databases [1, 3–5] are collected directly from real-world videos: direct photography, the YFCC-100M [33] database, or YouTube [30] videos. With each video having unique content and being produced by either professional or non-professional users [7, 8], quality assessment of UGC videos can be more challenging and less clear-cut than traditional VQA tasks. Additionally, the subjective studies in UGC-VQA datasets are usually carried out by crowdsourced users [34] with no reference videos. These factors may make subjective quality opinions in UGC-VQA ambiguous and subject to different perspectives.

Objective Methods for UGC-VQA. Classical VQA methods [9–12, 35–42] employ handcrafted features to evaluate video quality. However, they do not take the effects of semantics into consideration, resulting in reduced accuracy on UGC videos. Noticing that UGC-VQA is deeply affected by semantics, deep VQA methods [2, 6, 13, 43–50] are becoming predominant in this problem. For instance, VSFA [6] conducts subjective studies to demonstrate that videos with attractive content receive higher subjective scores. It therefore uses semantic-pretrained ResNet-50 [51] features instead of handcrafted features, and is followed by many recent works [1, 2, 13, 21, 52, 53] that improve the performance for UGC-VQA. However, these methods, which are directly driven by ambiguous subjective opinions, can hardly explain what factors are considered in their quality predictions. This hinders them from providing reliable and explainable quality evaluations in real-world scenarios (e.g., distortion metrics and recommendations).

3. The DIVIDE-3k Database

In this section, we introduce the proposed Disentangled Video Quality Database (DIVIDE-3k, Fig. 2), along with the multi-perspective subjective study. The database includes 3,590 UGC videos, on which we collected 450,000 human opinions. Different from other UGC-VQA databases [1–3], the subjective study is conducted in-lab to reduce the ambiguity of perspective opinions.

3.1. Collection of Videos

Sources of Videos. The 3,590-video database is mainly collected from two sources: 1) the YFCC-100M [33] social media database; 2) the Kinetics-400 [54] video recognition database, collected from YouTube, which has 400,000 videos in total. Audio is removed from all videos.

Getting the subset for annotation. Similar to existing studies [1, 3], we would like the sampled video database to represent the overall quality of the original larger database. Therefore, we first build histograms over all 400,000 videos using spatial [11], temporal [12], and semantic indices [55]. Then, as in [1, 3], we randomly select a subset of 3,270 videos from the 400,000 videos that matches the histograms along the three dimensions [56]. Several examples from DIVIDE-3k are provided in the supplementary. We also select 320 videos from LSVQ [1], the most recent UGC-VQA database, to examine the calibration between DIVIDE-3k and existing UGC-VQA subjective studies (see Tab. 2).
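The selection step can be illustrated with a small stratified-sampling sketch. It assumes each video has already been reduced to a single bin label (in the paper, bins come from spatial, temporal and semantic indices). The function name `sample_matching_histogram` and the proportional-allocation rule are illustrative assumptions, not the authors' exact procedure.

```python
import random
from collections import defaultdict


def sample_matching_histogram(bin_of_video, subset_size, seed=0):
    """Pick about `subset_size` videos so that each bin keeps roughly the
    share it has in the full pool (hypothetical helper, proportional allocation)."""
    rng = random.Random(seed)
    by_bin = defaultdict(list)
    for video_id, bin_label in bin_of_video.items():
        by_bin[bin_label].append(video_id)

    total = len(bin_of_video)
    chosen = []
    for bin_label in sorted(by_bin):
        members = by_bin[bin_label]
        # Quota proportional to the bin's share of the full pool.
        quota = round(subset_size * len(members) / total)
        chosen.extend(rng.sample(members, min(quota, len(members))))
    return chosen


# Toy pool: 600 "low", 300 "mid" and 100 "high" videos (made-up labels).
pool = {f"v{i}": ("low" if i < 600 else "mid" if i < 900 else "high")
        for i in range(1000)}
subset = sample_matching_histogram(pool, subset_size=100)
```

Because quotas are rounded per bin, the returned subset can differ from `subset_size` by a few items when bin shares are not round numbers; a real pipeline would need a rule for distributing the remainder.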

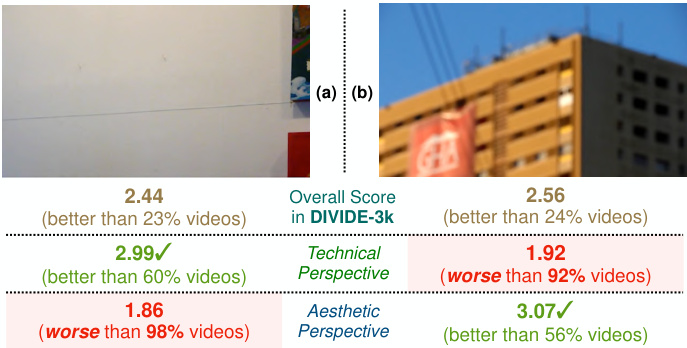

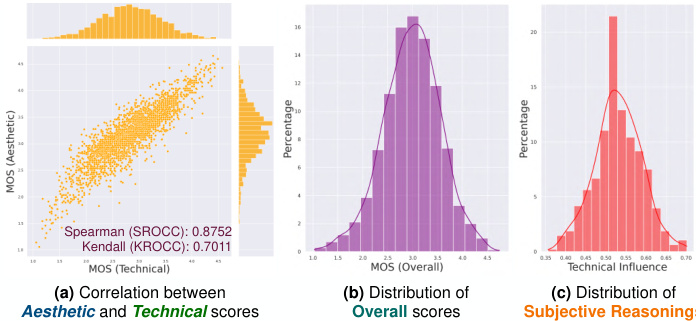

Figure 2. The in-lab subjective study on videos in DIVIDE-3k, including Training, Instruction, Annotation and Testing, discussed in Sec. 3.2.

3.2. In-lab Subjective Study on Videos

To ensure a clear understanding of the two perspectives, we conduct in-lab subjective experiments instead of crowdsourced ones, with 35 trained annotators (19 male and 16 female) participating in the full annotation process of Training, Testing and Annotation. All videos are downloaded to local computers before annotation to avoid transmission errors. The main process of the subjective study is illustrated in Fig. 2 and discussed step by step as follows. Extended details about the study are in supplementary Sec. A.

Training. Before annotation, we provide clear criteria with abundant examples of the three quality ratings to train the annotators. For the aesthetic rating, we select example images with good, fair and bad aesthetic quality from the aesthetic assessment database AVA [22], 20 images for each level, as calibration for aesthetic evaluation. For the technical rating, we instruct subjects to rate purely based on technical distortions and provide 5 examples for each of the following eight common distortions: 1) noises; 2) artifacts; 3) low sharpness; 4) out-of-focus; 5) motion blur; 6) stall; 7) jitter; 8) over/under-exposure. For the overall quality rating, we select 20 videos each with good, fair and bad quality as examples from the UGC-VQA dataset LSVQ [1].

During Experiment: Instruction and Annotation. We divide the subjective experiments into 40 videos per group, and 9 groups per stage. Before each stage, we instruct the subjects on how to label each specific perspective:

- Aesthetic Score: Please rate the video's quality based on aesthetic perspective (e.g., semantic preference).
- Technical Score: Please rate the video's quality with only consideration of technical distortions.
- Overall Score: Please rate the quality of the video.
- Subjective Reasoning: Please rate how your overall score is impacted by aesthetic or technical perspective.

Specifically, for the subjective reasoning, subjects rate the proportion of technical impact in the overall score for each video, choosing among [0, 0.25, 0.5, 0.75, 1]; the remaining proportion is considered the aesthetic impact.
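As a small worked example of this rating scheme, the sketch below averages the technical-impact ratings that several subjects gave to one video and derives the aesthetic share as the remainder. The helper name `mean_technical_impact` and the ratings are made up for illustration.

```python
ALLOWED_PROPORTIONS = (0.0, 0.25, 0.5, 0.75, 1.0)


def mean_technical_impact(ratings):
    """Average the per-subject technical-impact proportions for one video."""
    for r in ratings:
        if r not in ALLOWED_PROPORTIONS:
            raise ValueError(f"rating {r} is not one of {ALLOWED_PROPORTIONS}")
    return sum(ratings) / len(ratings)


# Four hypothetical subjects rating the same video.
ratings = [0.5, 0.75, 0.75, 0.5]
technical_share = mean_technical_impact(ratings)
aesthetic_share = 1.0 - technical_share  # the rest counts as aesthetic impact
```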

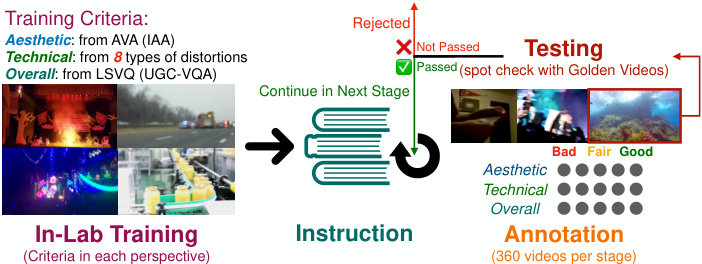

Figure 3. Statistics in DIVIDE-3k: (a) the correlations between aesthetic and technical perspectives; (b) the distribution of overall quality (MOS); (c) the distribution of the subject-rated proportion of technical impact on overall quality.

Table 1. Effects of Perspectives: The correlations between different perspectives and overall quality (MOS) for all 3,590 videos in DIVIDE-3k.

| Correlation to MOS | $\mathrm{MOS_A}$ | $\mathrm{MOS_T}$ | $\mathrm{MOS_A}+\mathrm{MOS_T}$ | $0.428\,\mathrm{MOS_A}+0.572\,\mathrm{MOS_T}$ |
|---|---|---|---|---|
| Spearman (SROCC↑) | 0.9350 | 0.9642 | 0.9827 | 0.9834 |
| Kendall (KROCC↑) | 0.7894 | 0.8455 | 0.8909 | 0.8933 |

Table 2. Calibration with Existing: The correlations between different ratings in DIVIDE-3k and existing scores in LSVQ [1] ($\mathrm{MOS_{existing}}$).

| Correlation to $\mathrm{MOS_{existing}}$ | $\mathrm{MOS_A}$ | $\mathrm{MOS_T}$ | $\mathrm{MOS_A}+\mathrm{MOS_T}$ | MOS |
|---|---|---|---|---|
| Spearman (SROCC↑) | 0.6956 | 0.7374 | 0.7632 | 0.7680 |
| Kendall (KROCC↑) | 0.5073 | 0.5469 | 0.5797 | 0.5822 |

Testing with Golden Videos. For testing, we randomly insert 10 golden videos in each stage as a spot check to ensure annotation quality. A subject whose annotations on the golden videos deviate severely from the standards is rejected and does not join the next stage.

3.3. Observations

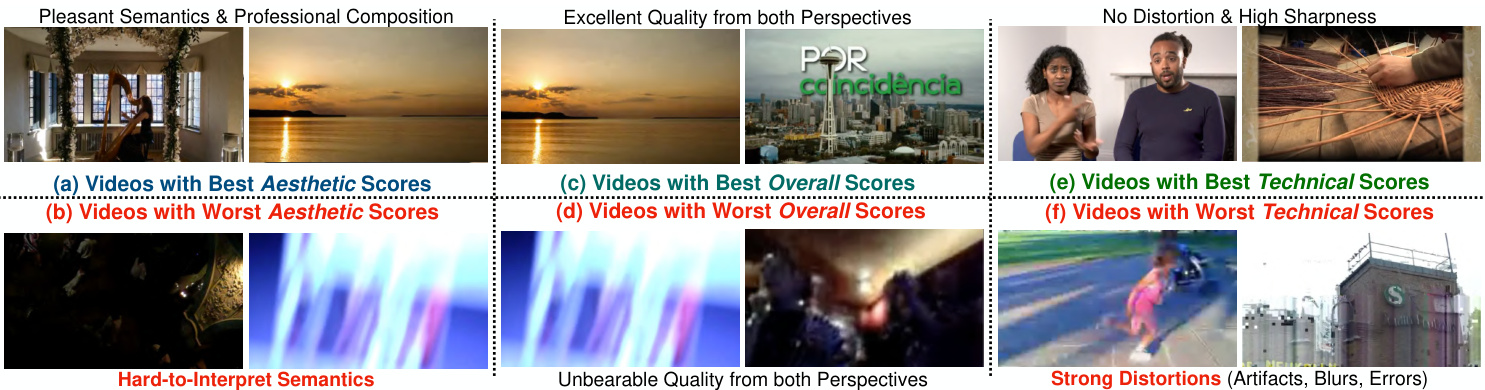

Effects of Two Perspectives. To validate the effects of the two perspectives, we first quantitatively assess the correlation between each perspective and overall quality. Denoting the mean aesthetic opinion as $\mathrm{MOS_A}$, the mean technical opinion as $\mathrm{MOS_T}$, and the mean overall opinion as MOS, we list the Spearman and Kendall correlations between the perspectives and MOS in Tab. 1. From Tab. 1, we notice that the weighted sum of both perspectives is a better approximation of overall quality than either single perspective. Consequently, methods [1, 6, 57] that naively regress from overall MOS might not provide pure technical quality predictions due to the inevitable effect of aesthetics. The best/worst videos (Fig. 4) in each dimension also support this observation.
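The comparison in Tab. 1 relies on rank correlation. The following dependency-free sketch shows how the Spearman coefficient (SROCC) of a single perspective and of the weighted sum can be computed against MOS. The five scores are made up for illustration and are not DIVIDE-3k data; the helper names are likewise illustrative.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson linear correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def srocc(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy scores for five videos (illustrative only).
mos_a = [2.0, 4.0, 3.0, 1.0, 5.0]
mos_t = [3.0, 1.0, 4.0, 2.0, 5.0]
mos = [2.6, 2.2, 3.7, 1.5, 5.0]
fused = [0.428 * a + 0.572 * t for a, t in zip(mos_a, mos_t)]

for name, scores in [("MOS_A", mos_a), ("MOS_T", mos_t), ("fused", fused)]:
    print(name, round(srocc(scores, mos), 4))
```

On this toy data the weighted sum ranks the videos more consistently with MOS than either perspective alone, which mirrors the pattern reported in Tab. 1.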

Calibration with Existing Study. To validate whether the observation extends to existing UGC-VQA subjective studies, we select 320 videos from LSVQ [1] and compare the quality opinions from multiple perspectives with the existing scores of these videos. As shown in Tab. 2, the overall quality score is more correlated with the existing score than the scores from either perspective. This further suggests that treating human quality opinion as a fusion of both perspectives might be a better approximation in the UGC-VQA problem.

Figure 4. Videos with the best and worst scores in aesthetic perspective, technical perspective and overall quality perception in DIVIDE-3k. The aesthetic perspective is more concerned with the semantics or composition of videos, while the technical perspective is more related to low-level textures and distortions.

Subjective Reasoning. In DIVIDE-3k, we conducted the first subjective reasoning study during human quality assessment. Fig. 3(c) illustrates the mean technical impact for each video, which ranges within [0.364, 0.698]. The reasoning results explicitly validate our aforementioned observation that human quality assessment is affected by opinions from both aesthetic and technical perspectives.

4. The Approaches: DOVER and DOVER++

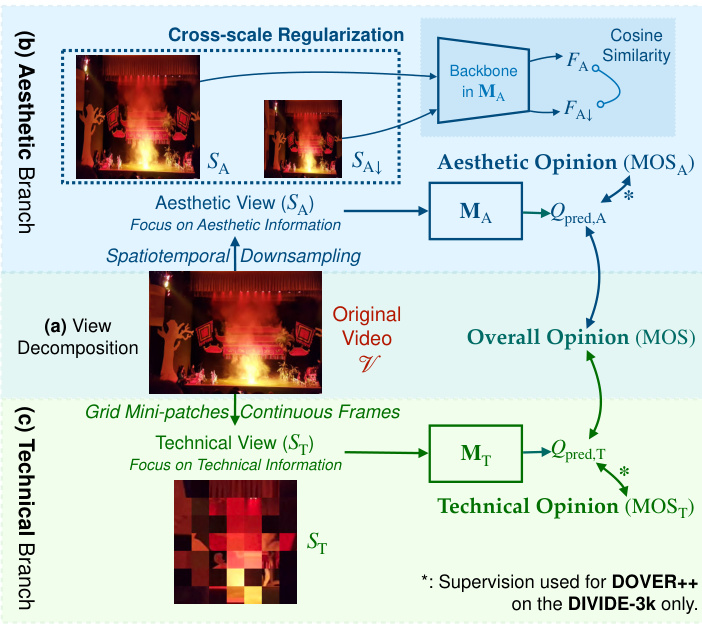

Observing from the subjective studies in DIVIDE-3k that overall quality opinions are affected by both aesthetic and technical perspectives, we propose to distinguish and investigate the aesthetic and technical effects in a UGC-VQA model based on the View Decomposition strategy (Sec. 4.1). The proposed Disentangled Objective Video Quality Evaluator (DOVER) is built from an aesthetic branch (Sec. 4.2) and a technical branch (Sec. 4.3). The two branches are separately supervised, either both by overall scores (denoted as DOVER) or by the respective aesthetic and technical opinions (denoted as DOVER++), as discussed in Sec. 4.4. Finally, we discuss the subjectively-inspired fusion (Sec. 4.5) used to predict the overall quality from DOVER.

4.1. Methodology: Separate the Perceptual Factors

From DIVIDE-3k, we notice that aesthetic and technical perspectives in UGC-VQA are usually associated with different perceptual factors. Specifically, as illustrated in Fig. 4, aesthetic opinions are mostly related to semantics and the composition of objects [24, 27, 58], which are typically high-level visual perceptions. In contrast, technical quality is largely affected by low-level visual distortions, e.g., blurs, noises, artifacts [1, 13, 21, 59, 60] (Fig. 4(e)&(f)).

This observation inspires the View Decomposition strategy, which separates the video into two views: the Aesthetic View $(S_{\mathrm{A}})$, which focuses on aesthetic perception, and the Technical View $(S_{\mathrm{T}})$, which focuses on technical perception. With the decomposed views as inputs, separate aesthetic $(\mathbf{M}_{\mathrm{A}})$ and technical $(\mathbf{M}_{\mathrm{T}})$ branches evaluate the two perspectives:

$$
Q_{\mathrm{pred,A}}=\mathbf{M}_{\mathrm{A}}(S_{\mathrm{A}});\quad Q_{\mathrm{pred,T}}=\mathbf{M}_{\mathrm{T}}(S_{\mathrm{T}})
$$

Although most perceptual factors related to the two perspectives can be separated, a small proportion are related to both, such as brightness, which relates to both exposure (technical) [29] and lighting (aesthetic) [26], or motion blur, which is occasionally considered good aesthetics but typically regarded as bad technical quality [61]. Thus, we do not separate these factors and keep them in both branches. Instead, we employ inductive biases (pre-training, regularization) and specific supervision in DIVIDE-3k to further drive each branch to focus on its corresponding perspective, as introduced below.

4.2. The Aesthetic Branch

To help the aesthetic branch focus on the aesthetic perspective, we first pre-train the branch on the Image Aesthetic Assessment database AVA [22]. We then describe the Aesthetic View $(S_{\mathrm{A}})$ and the additional regularization objectives.

The Aesthetic View. Semantics and composition are two key factors deciding the aesthetics of a video [24, 58, 62]. Thus, we obtain the Aesthetic View (see Fig. 5(b)) through spatial downsampling [63] and temporal sparse frame sampling [64], which preserve the semantics and composition of the original videos. These downsampling strategies are widely applied in existing state-of-the-art aesthetic assessment methods [24, 25, 27, 65, 66], which further indicates that they preserve aesthetic information in visual contents. Moreover, the two strategies significantly reduce the sensitivity [9–12] to technical distortions: blurs, noises and artifacts via spatial downsampling, and shaking and flicker via temporal sparse sampling. This lets the branch focus on aesthetics.
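A minimal sketch of the two sampling steps is given below, using plain nested lists so that it runs without dependencies. The exact equations are in the supplementary material and are not reproduced here; the evenly spaced frame choice, the nearest-neighbour downsampling, and all function names are simplifying assumptions for illustration.

```python
def sparse_frame_indices(num_frames, num_samples):
    """Pick `num_samples` frame indices spread evenly over the whole video."""
    if num_samples >= num_frames:
        return list(range(num_frames))
    step = num_frames / num_samples
    # Take the middle frame of each of the `num_samples` equal segments.
    return [int(step * k + step / 2) for k in range(num_samples)]


def downsample_frame(frame, out_h, out_w):
    """Nearest-neighbour spatial downsampling of a frame given as rows of pixels."""
    in_h, in_w = len(frame), len(frame[0])
    return [[frame[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def aesthetic_view(video, num_samples, out_h, out_w):
    """Sparse temporal sampling followed by spatial downsampling."""
    indices = sparse_frame_indices(len(video), num_samples)
    return [downsample_frame(video[t], out_h, out_w) for t in indices]


# Toy video: 8 frames of 4x4 pixels, each pixel tagged with (t, row, col).
video = [[[(t, r, c) for c in range(4)] for r in range(4)] for t in range(8)]
view = aesthetic_view(video, num_samples=2, out_h=2, out_w=2)
```

The whole frame layout survives at lower resolution, so composition and semantics remain visible, while fine texture detail and frame-to-frame jitter are largely discarded.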

Figure 5. The proposed Disentangled Objective Video Quality Evaluator (DOVER) and DOVER++ via (a) View Decomposition (Sec. 4.1), with the (b) Aesthetic Branch (Sec. 4.2) and the (c) Technical Branch (Sec. 4.3). The equations to obtain the two views are in Supplementary Sec. E.

where $F_{\mathrm{A}}$ and $F_{\mathrm{A\downarrow}}$ are output features for $S_{\mathrm{A}}$ and $S_{\mathrm{A\downarrow}}$ .

4.3. The Technical Branch

In the technical branch, we would like to keep the technical distortions but obfuscate the aesthetics of the videos. Thus, we design the Technical View $(S_{\mathrm{T}})$ as follows.

The Technical View. We introduce fragments [13] (as in Fig. 5(c)) as the Technical View $(S_{\mathrm{T}})$ for the technical branch. The fragments are composed of randomly cropped patches stitched together, which retains the technical distortions. Moreover, they discard most content and disrupt the compositional relations of what remains, therefore severely corrupting the aesthetics of videos. Temporally, we apply continuous frame sampling for $S_{\mathrm{T}}$ to retain temporal distortions.
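The fragment construction can be sketched as follows. This is a simplified illustration under stated assumptions, not the reference implementation of [13]: it assumes one random patch per cell of a uniform grid, reuses the same patch positions for every frame of the clip, and represents frames as nested lists. The names `fragment_offsets`, `stitch_fragments` and `technical_view` are hypothetical.

```python
import random


def fragment_offsets(height, width, grid, patch, rng):
    """Choose one random top-left corner per grid cell (a cell must fit a patch)."""
    cell_h, cell_w = height // grid, width // grid
    return [[(gr * cell_h + rng.randrange(cell_h - patch + 1),
              gc * cell_w + rng.randrange(cell_w - patch + 1))
             for gc in range(grid)] for gr in range(grid)]


def stitch_fragments(frame, offsets, patch):
    """Crop a native-resolution patch at every offset and stitch them together."""
    out = []
    for row_of_offsets in offsets:
        for dy in range(patch):
            out_row = []
            for (y, x) in row_of_offsets:
                out_row.extend(frame[y + dy][x:x + patch])
            out.append(out_row)
    return out


def technical_view(video, start, length, grid, patch, seed=0):
    """Continuous frames, each reduced to stitched patches (assumed shared offsets)."""
    rng = random.Random(seed)
    height, width = len(video[0]), len(video[0][0])
    offsets = fragment_offsets(height, width, grid, patch, rng)
    return [stitch_fragments(f, offsets, patch) for f in video[start:start + length]]


# Toy video: 6 frames of 8x8 pixels, each pixel tagged with (t, row, col).
video = [[[(t, r, c) for c in range(8)] for r in range(8)] for t in range(6)]
clip = technical_view(video, start=1, length=3, grid=2, patch=2)
```

Pixels inside a patch are never resized, so local blur, noise and compression artifacts are kept, while the global layout of the scene is broken up by the stitching.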

Weak Global Semantics as Background. Many studies [13, 59, 68] suggest that technical quality perception should consider global semantics to better assess distortion levels. Though most content is discarded in $S_{\mathrm{T}}$, the technical branch can still reach 68.6% accuracy for Kinetics-400 [54] video classification, indicating that it preserves weak global semantics as background information to distinguish textures (e.g., sands) from distortions (e.g., noises).

4.4. Learning Objectives

Weak Supervision with Overall Opinions. Following the observation in Sec. 3.3, the overall MOS can be approximated as a weighted sum of $\mathrm{MOS_A}$ and $\mathrm{MOS_T}$. Moreover, the subjectively-inspired inductive biases in each branch reduce its perception of the other perspective. Together, these two points suggest that if we use overall opinions to supervise the two branches separately, the prediction of each branch will be mostly determined by its corresponding perspective. Hence, we propose the Limited View Biased Supervisions $(\mathcal{L}_{\mathrm{LVBS}})$, which minimize the relative loss between the prediction of each branch and the overall opinion MOS, as the objective of DOVER, applicable to all databases.
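The equation for this objective does not appear in this section as extracted. Following the verbal description above, and by analogy with the form of $\mathcal{L}_{\mathrm{DS}}$ given in this section, it can presumably be written with the same relative loss $\mathcal{L}_{\mathrm{Rel}}$ applied to each branch against the overall MOS (a reconstruction under that assumption, including the equal weighting of the two terms):

$$
\mathcal{L}_{\mathrm{LVBS}}=\mathcal{L}_{\mathrm{Rel}}(Q_{\mathrm{pred,A}},\mathrm{MOS})+\mathcal{L}_{\mathrm{Rel}}(Q_{\mathrm{pred,T}},\mathrm{MOS})
$$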

Supervision with Opinions from Perspectives. With the DIVIDE-3k database, we further improve the accuracy of disentanglement with the Direct Supervisions $(\mathcal{L}_{\mathrm{DS}})$ on the corresponding perspective opinions for both branches:

$$
\mathcal{L}_{\mathrm{DS}}=\mathcal{L}_{\mathrm{Rel}}(Q_{\mathrm{pred,A}},\mathrm{MOS}_{\mathrm{A}})+\mathcal{L}_{\mathrm{Rel}}(Q_{\mathrm{pred,T}},\mathrm{MOS}_{\mathrm{T}})
$$

and the proposed DOVER++ is driven by a fusion of the two objectives to jointly learn more accurate overall quality as well as perspective quality predictions for each branch:

$$
\mathcal{L}_{\mathrm{DOVER++}}=\mathcal{L}_{\mathrm{DS}}+\lambda_{\mathrm{LVBS}}\mathcal{L}_{\mathrm{LVBS}}
$$
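The way the two objectives combine can be sketched in a few lines. The relative loss $\mathcal{L}_{\mathrm{Rel}}$ is not defined in this section, so the sketch takes it as a function argument; `one_minus_pearson` is only a stand-in chosen for the demo, `lambda_lvbs=0.5` is an arbitrary placeholder, and the form of `lvbs_loss` (one relative-loss term per branch against the overall MOS) is inferred from the verbal description in Sec. 4.4.

```python
def one_minus_pearson(pred, target):
    """Stand-in relative loss (assumption): 0 for perfectly linearly correlated inputs."""
    n = len(pred)
    mp, mt = sum(pred) / n, sum(target) / n
    cov = sum((p - mp) * (t - mt) for p, t in zip(pred, target))
    sp = sum((p - mp) ** 2 for p in pred) ** 0.5
    st = sum((t - mt) ** 2 for t in target) ** 0.5
    return 1.0 - cov / (sp * st)


def lvbs_loss(q_a, q_t, mos, rel_loss):
    """Both branches are compared with the overall opinion (assumed form)."""
    return rel_loss(q_a, mos) + rel_loss(q_t, mos)


def ds_loss(q_a, q_t, mos_a, mos_t, rel_loss):
    """Each branch is compared with the opinion from its own perspective."""
    return rel_loss(q_a, mos_a) + rel_loss(q_t, mos_t)


def dover_pp_loss(q_a, q_t, mos_a, mos_t, mos, rel_loss, lambda_lvbs):
    """Direct supervision plus weighted limited-view biased supervision."""
    return (ds_loss(q_a, q_t, mos_a, mos_t, rel_loss)
            + lambda_lvbs * lvbs_loss(q_a, q_t, mos, rel_loss))


# Toy batch of four videos (made-up numbers).
q_a, mos_a = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
q_t, mos_t = [1.0, 3.0, 2.0, 4.0], [1.0, 3.0, 2.0, 4.0]
mos = [1.0, 2.0, 3.0, 4.0]
total = dover_pp_loss(q_a, q_t, mos_a, mos_t, mos,
                      rel_loss=one_minus_pearson, lambda_lvbs=0.5)
```

In a real training setup these functions would operate on framework tensors so that gradients can flow; plain lists are used here only to keep the sketch self-contained.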

4.5. Subjectively-inspired Fusion Strategy

From the subjective studies, we observe that the MOS can be well approximated as $0.428\,\mathrm{MOS_{A}}+0.572\,\mathrm{MOS_{T}}$. Hence, we propose to obtain the final overall quality prediction $(Q_{\mathrm{pred}})$ from the two perspectives in the same way, via a simple weighted fusion: $Q_{\mathrm{pred}}=0.428\,Q_{\mathrm{pred,A}}+0.572\,Q_{\mathrm{pred,T}}$. With better accuracy on all datasets (Tab. 9), the strategy also indirectly validates the subjective observations in Sec. 3.3.
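The fusion itself is a one-line weighted sum. The sketch below assumes both branch predictions are already on a common scale (for example, normalized to [0, 1]); the function name and the example scores are illustrative.

```python
AESTHETIC_WEIGHT = 0.428
TECHNICAL_WEIGHT = 0.572


def fuse_quality(q_aesthetic, q_technical):
    """Overall prediction as the weighted sum of the two branch predictions."""
    return AESTHETIC_WEIGHT * q_aesthetic + TECHNICAL_WEIGHT * q_technical


# Made-up scores echoing Fig. 1: a sharp but meaningless video versus
# a blurry but meaningful one end up with similar overall predictions.
sharp_but_dull = fuse_quality(q_aesthetic=0.3, q_technical=0.8)
blurry_but_meaningful = fuse_quality(q_aesthetic=0.8, q_technical=0.4)
```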

5. Experimental Evaluation

In this section, we answer two important questions:

- Can the aesthetic and technical branches better learn the effects of their corresponding perspectives (Sec. 5.2)?
- Can the fused model more accurately predict overall quality in the UGC-VQA problem (Sec. 5.3)?

Moreover, we include ablation studies (Sec. 5.4) and an outlook for personalized quality evaluation (Sec. 5.5).

5.1. Experimental Setups

Table 3. Quantitative evaluation of the perspective predictions of DOVER (weakly supervised) and DOVER++ (fully supervised) on DIVIDE-3k, measured by the correlation between different predictions and subjective opinions. "w/o Decomposition" denotes that both branches take the original videos as inputs.

| Method | SROCC/PLCC | $\mathrm{MOS_A}$ | $\mathrm{MOS_T}$ |

|-----------------------------