Neural Extractive Summarization with Hierarchical Attentive Heterogeneous Graph Network

Abstract

Sentence-level extractive text summarization is essentially a node classification task in network mining, which requires the extracted summary to be both informative and concise. Extracted sentences often share many redundant phrases, but general supervised methods find it difficult to model this redundancy exactly. Previous sentence encoders, especially BERT, specialize in modeling the relationships between source sentences. However, they cannot account for overlaps within the selected target summary, even though the target labels of sentences are inherently dependent on one another. In this paper, we propose HAHSum (short for Hierarchical Attentive Heterogeneous Graph for Text Summarization), which models information at different levels, including words and sentences, and highlights the redundancy dependencies between sentences. Our approach iteratively refines sentence representations with a redundancy-aware graph and captures label dependencies through message passing. Experiments on large-scale benchmark corpora (CNN/DM, NYT, and NEWSROOM) demonstrate that HAHSum yields ground-breaking performance and outperforms previous extractive summarizers.

1 Introduction

Single-document extractive summarization aims to select a subset of sentences and assemble them into an informative and concise summary. Recent advances (Nallapati et al., 2017; Zhou et al., 2018; Liu and Lapata, 2019; Zhong et al., 2020) focus on balancing the salience and redundancy of sentences, i.e., selecting sentences with high semantic similarity to the gold summary while resolving the redundancy among the selected sentences. Take Table 1 as an example: a document contains five sentences, and each is assigned a salience score and a label indicating whether it should be included in the extracted summary. Although sent1, sent3, and sent4 all have high salience scores, only sent3 and sent4 are selected as summary sentences (label 1), because the unselected sent1 is highly redundant with the selected sent3. That is, whether a sentence should be selected depends both on its salience and on its redundancy with the other selected sentences. However, it remains difficult to model this dependency exactly.

Table 1: Simplified news from the Jackson County Prosecutor. The salience score is an approximate estimate derived from semantics, and the label is converted from the gold summary to ensure the concision and accuracy of the extracted summary.

| Sentence | Salience | Label | Content |
|---|---|---|---|
| sent1 | 0.7 | 0 | Deanna Holleran is charged with murder |
| sent2 | 0.1 | 0 | Jackson County Prosecutor Jean Peters Baker announced today |
| sent3 | 0.7 | 1 | Deanna Holleran faces charges in a traffic accident |
| sent4 | 0.7 | 1 | The fatal traffic accident constitutes murder |
| sent5 | 0.2 | 0 | The accident killed Marianna Hernandez near 9th Hardesty |
| Summary | | | A woman faces murder charges for a fatal traffic accident |

Most previous approaches use an autoregressive architecture (Narayan et al., 2018; Mendes et al., 2019; Liu and Lapata, 2019; Xu et al., 2020), which models only the unidirectional dependency between sentences, i.e., the state of the current sentence depends on the labels of the previous sentences. These models are trained to predict the current sentence label given the ground-truth labels of the previous sentences, but at inference time they are fed the predicted labels of the previous sentences instead. The autoregressive paradigm is well known to suffer from error propagation and exposure bias (Ranzato et al., 2015). In addition, reinforcement learning has been introduced to take the semantics of the extracted summary into account (Narayan et al., 2018; Bae et al., 2019), combining the maximum-likelihood cross-entropy loss with policy-gradient rewards to directly optimize the evaluation metric of the summarization task. More recently, a popular solution has been to build a summarization system with a two-stage decoder: these models first extract salient sentences and then rewrite (Chen and Bansal, 2018; Bae et al., 2019), compress (Lebanoff et al., 2019; Xu and Durrett, 2019; Mendes et al., 2019), or match (Zhong et al., 2020) them.

Previous models generally adopt a top-$k$ strategy: the same number of sentences is extracted from every document, which does not match real-world data. For example, almost all previous approaches extract three sentences from each source article (the top-3 strategy (Zhou et al., 2018; Liu and Lapata, 2019; Zhang et al., 2019b; Xu et al., 2020)), although 40% of the documents in CNN/DM have oracle summaries with more or fewer than three sentences. This is because it is difficult for these approaches to measure salience and redundancy simultaneously in the presence of error propagation. Notably, Mendes et al. (2019) introduce a length variable into the decoder, and Zhong et al. (2020) can choose any number of sentences by matching candidate summaries in a semantic space.

To address the above issues, we represent the source article as a hierarchical heterogeneous graph (HHG) and propose HAHSum, a model based on the Graph Attention Network (Velickovic et al., 2018), which extracts sentences by balancing salience and redundancy simultaneously. In the HHG, both words and sentences are nodes, and the relations between them are represented as different types of edges. This hierarchical graph can be viewed as a two-level graph: word-level and sentence-level. For the word-level graph (word-word), we design an Abstract Layer to learn the semantic representation of each word. We then transduce the word-level graph into the sentence-level graph by aggregating each word into its corresponding sentence node. For the sentence-level graph (sentence-sentence), we design a Redundancy Layer, which first pre-labels each sentence and then iteratively updates the label dependencies by propagating redundancy information. The redundancy layer restricts the receptive field for redundancy information, and the information passing is guided by the ground-truth labels of the sentences. After obtaining redundancy-aware sentence representations, we use a classifier with a threshold to label these sentence-level nodes. In this way, the whole framework extracts all summary sentences simultaneously rather than autoregressively, removing the need for a top-$k$ strategy.
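
As a minimal sketch of the difference between the two selection rules, the snippet below contrasts a fixed top-$k$ rule with threshold-based selection over per-sentence probabilities $P(y_i=1)$. The probabilities and the threshold of 0.5 are hypothetical; this section does not state the threshold HAHSum actually uses.

```python
from typing import List


def select_top_k(probs: List[float], k: int = 3) -> List[int]:
    """Fixed-size extraction: indices of the k highest-scoring sentences, in document order."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return sorted(ranked[:k])


def select_by_threshold(probs: List[float], threshold: float = 0.5) -> List[int]:
    """Variable-size extraction: every sentence whose probability reaches the threshold."""
    return [i for i, p in enumerate(probs) if p >= threshold]


# Hypothetical per-sentence probabilities P(y_i = 1) for a five-sentence document.
probs = [0.35, 0.05, 0.81, 0.77, 0.12]
print(select_top_k(probs))         # [0, 2, 3]
print(select_by_threshold(probs))  # [2, 3]
```

With the threshold rule, the number of extracted sentences follows the scores, so a document whose oracle summary has two sentences is not forced to yield three.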

The contributions of this paper are as follows: 1) We propose a hierarchical attentive heterogeneous graph based model (HAHSum) that guides the propagation of redundancy information between sentences and learns redundancy-aware sentence representations; 2) Our architecture can extract a flexible number of sentences using a threshold, instead of a top-$k$ strategy; 3) We evaluate HAHSum on three popular benchmarks (CNN/DM, NYT, NEWSROOM), and the experimental results show that HAHSum outperforms existing state-of-the-art approaches. Our source code will be available on GitHub.

2 Related Work

2.1 Extractive Summarization

Neural networks have achieved great success in text summarization. There are two main lines of research: abstractive and extractive. The abstractive paradigm (Rush et al., 2015; See et al., 2017; Celikyilmaz et al., 2018; Sharma et al., 2019) generates a summary word by word after encoding the full document, whereas the extractive approach (Cheng and Lapata, 2016; Zhou et al., 2018; Narayan et al., 2018) directly selects sentences from the document and assembles them into a summary.

Recent work on extractive summarization spans a wide range of approaches. These works usually instantiate the encoder-decoder architecture by choosing an RNN (Nallapati et al., 2017; Zhou et al., 2018), a Transformer (Wang et al., 2019; Zhong et al., 2019b; Liu and Lapata, 2019; Zhang et al., 2019b), or a hierarchical GNN (Wang et al., 2020) as the encoder, and an autoregressive (Jadhav and Rajan, 2018; Liu and Lapata, 2019) or non-autoregressive (Narayan et al., 2018; Arumae and Liu, 2018) decoder. Applying reinforcement learning (RL) provides summary-level scoring and brings further improvement (Narayan et al., 2018; Bae et al., 2019).

2.2 Graph Neural Network for NLP

Recently, there has been considerable interest in applying GNNs to NLP tasks, with great success. Fernandes et al. (2019) applied sequence GNNs to model sentences with named entity information. Yao et al. (2019) used a two-layer GCN with a well-designed adjacency matrix for text classification. GCNs have also played an important role in Chinese named entity recognition (Ding et al., 2019). Liu et al. (2019) proposed a contextualized neural network for sequence learning that leverages various types of non-local contextual information through information passing over a GNN. These studies are related to our work in that we explore extractive text summarization via message passing over a hierarchical heterogeneous architecture.

3 Methodology

3.1 Problem Definition

Let $S=\{s_{1},s_{2},\dots,s_{N}\}$ denote the source document, which contains $N$ sentences, where $s_{i}$ is the $i$-th sentence. Let $T$ denote the hand-crafted reference summary. Extractive summarization aims to produce a summary $S^{*}=\{s_{1}^{*},s_{2}^{*},\dots,s_{M}^{*}\}$ by selecting $M$ sentences from $S$, where $M\leq N$. Labels $Y=\{y_{1},y_{2},\dots,y_{N}\}$ are derived from $T$, where $y_{i}\in\{0,1\}$ denotes whether sentence $s_{i}$ should be included in the extracted summary. The oracle summary is the subset of $S$ that achieves the highest ROUGE score with respect to $T$.
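
Finding the exact ROUGE-maximizing subset requires searching over all $2^N$ subsets, so oracle labels are often approximated greedily. The sketch below is one such approximation under stated assumptions: the score is a simplified ROUGE (the mean of ROUGE-1 and ROUGE-2 F1 on whitespace tokens, with no stemming), and `greedy_oracle_labels` is a hypothetical helper, not necessarily how the labels $Y$ were built for HAHSum.

```python
from collections import Counter
from typing import List


def ngram_counts(tokens: List[str], n: int) -> Counter:
    """Multiset of n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_f1(candidate: List[str], reference: List[str], n: int) -> float:
    """Simplified ROUGE-N F1: clipped n-gram overlap, no stemming or stopword removal."""
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    overlap = sum((cand & ref).values())  # '&' keeps the minimum count per n-gram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def oracle_score(candidate: List[str], reference: List[str]) -> float:
    """Hypothetical selection criterion: mean of ROUGE-1 and ROUGE-2 F1."""
    return (rouge_n_f1(candidate, reference, 1) + rouge_n_f1(candidate, reference, 2)) / 2


def greedy_oracle_labels(sentences: List[List[str]], reference: List[str]) -> List[int]:
    """Greedily add the sentence that most improves the score; stop when none helps."""
    selected: List[int] = []
    best_score = 0.0
    while True:
        best_idx = None
        for i in range(len(sentences)):
            if i in selected:
                continue
            # Concatenate the candidate sentences in document order.
            candidate = [tok for j in sorted(selected + [i]) for tok in sentences[j]]
            score = oracle_score(candidate, reference)
            if score > best_score:
                best_idx, best_score = i, score
        if best_idx is None:
            break
        selected.append(best_idx)
    return [1 if i in selected else 0 for i in range(len(sentences))]
```

Because the loop stops as soon as no sentence raises the score, the number of oracle sentences varies from document to document.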

3.2 Graph Construction

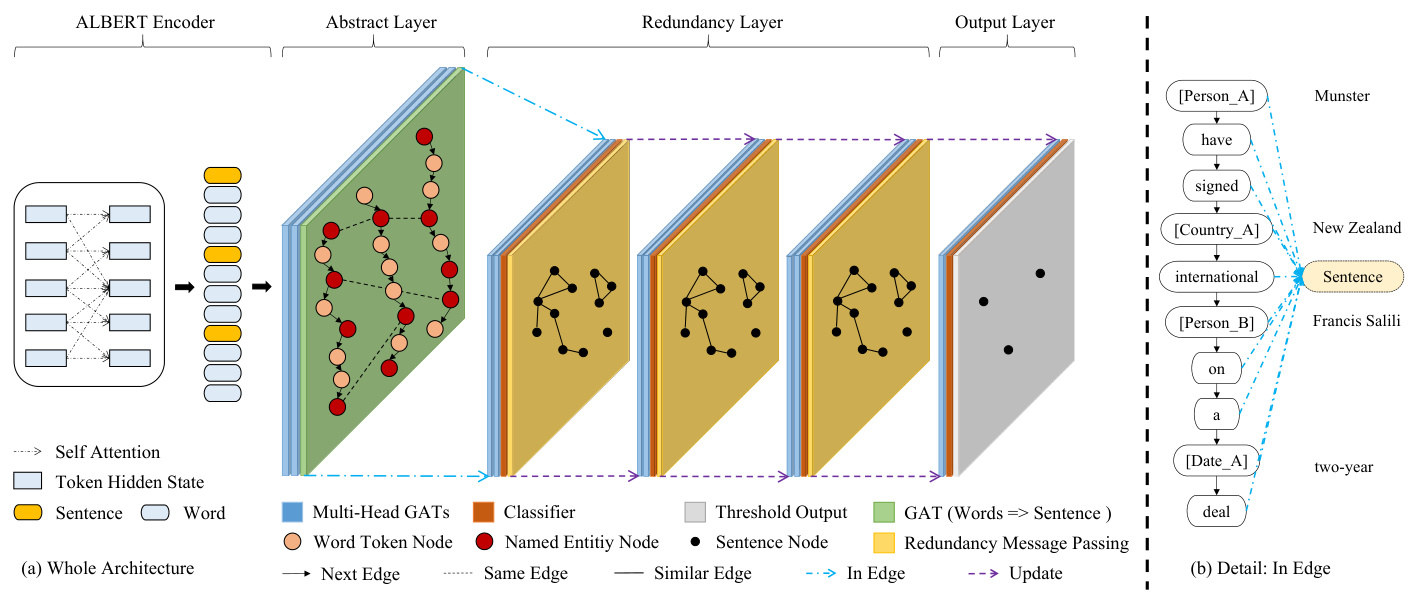

To model the redundancy relations between sentences, we represent a document with a heterogeneous graph that contains information at multiple granularity levels, as shown in Figure 1. The graph has three types of nodes: named entity, word, and sentence. To reduce semantic sparsity, we replace named entity text spans with anonymized tokens (e.g., [Person A], [Person B], [Date A]). A word node is an original textual item and represents word-level information. Unlike DivGraphPointer (Sun et al., 2019), which aggregates identical words into one node, we keep each word occurrence as a separate node to avoid confusing different contexts. Each sentence node corresponds to one sentence and represents its global information.

We also define four types of edges to represent various structural information in HAHSum:

- We connect sequential named entities and words in one sentence using directed Next edges.

The topological structure of the graph can be represented by an adjacency matrix $A$, whose Boolean elements indicate whether there is an edge between two nodes. Because the graph contains information at multiple granularity levels, it can be divided into three subgraphs: the word-level, word-sentence, and sentence-level subgraphs. Accordingly, we define three adjacency matrices: $A_{word}$ for the word-level graph, constructed from Entity nodes, Word nodes, Next edges, and Same edges; $A_{word-sent}$ for the word-sentence graph, constructed from all three types of nodes and In edges; and $A_{sent}$ for the sentence-level graph, constructed from Sentence nodes and Similar edges. By propagating information from the word-level graph to the sentence-level graph, we obtain sentence representations and model the redundancy between sentences.
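
The three matrices can be sketched for a tokenized, already-anonymized document as follows. Only the Next and In edges follow directly from the text above; the criteria used here for Same edges (identical bracketed entity tokens) and Similar edges (Jaccard word overlap of at least a hypothetical `sim_threshold`) are assumptions made for illustration, as is making In edges bidirectional.

```python
from typing import List, Tuple

Matrix = List[List[bool]]


def empty(n: int) -> Matrix:
    return [[False] * n for _ in range(n)]


def build_adjacency(sentences: List[List[str]],
                    sim_threshold: float = 0.3) -> Tuple[Matrix, Matrix, Matrix]:
    """Return (A_word, A_word_sent, A_sent); entity nodes are tokens like "[Person A]"."""
    # One node per token occurrence: identical words are NOT merged into one node.
    occurrences = [(s, tok) for s, sent in enumerate(sentences) for tok in sent]
    n_words, n_sents = len(occurrences), len(sentences)

    # Word-level graph: directed Next edges between consecutive tokens of a sentence.
    a_word = empty(n_words)
    start = 0
    for sent in sentences:
        for t in range(len(sent) - 1):
            a_word[start + t][start + t + 1] = True
        start += len(sent)
    # Same edges (ASSUMPTION): link every pair of occurrences of the same entity token.
    for i, (_, tok_i) in enumerate(occurrences):
        for j, (_, tok_j) in enumerate(occurrences):
            if i != j and tok_i == tok_j and tok_i.startswith("["):
                a_word[i][j] = True

    # Word-sentence graph: word/entity nodes first, then sentence nodes.
    # In edges (made bidirectional here, an ASSUMPTION) tie each token to its sentence.
    a_word_sent = empty(n_words + n_sents)
    for i, (s, _) in enumerate(occurrences):
        a_word_sent[i][n_words + s] = True
        a_word_sent[n_words + s][i] = True

    # Sentence-level graph: Similar edges (ASSUMPTION) from Jaccard word overlap.
    a_sent = empty(n_sents)
    for i in range(n_sents):
        for j in range(n_sents):
            union = set(sentences[i]) | set(sentences[j])
            if i != j and union:
                jaccard = len(set(sentences[i]) & set(sentences[j])) / len(union)
                a_sent[i][j] = jaccard >= sim_threshold
    return a_word, a_word_sent, a_sent
```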

Generally, message passing over graphs consists of two steps, aggregation and combination, and this process can be repeated multiple times (referred to as layers or hops in the GNN literature) (Tu et al., 2019). We therefore iteratively update the sentence node representations with redundancy message passing, as described in the following sections.

3.3 Graph Attention Network

To represent graph structure $A$ and node content $X$ in a unified framework, we develop a variant of Graph Attention Network (GAT) (Velickovic et al., 2018). GAT is used to learn hidden representations of each node by aggregating the information from its neighbors, with the attention coefficients:

$$
e_{ij}=\mathrm{LeakyReLU}\left(a^{\top}[W x_{i}\,\|\,W x_{j}]\right)
$$

where $W\in\mathbb{R}^{d\times d}$ is a shared linear transformation weight matrix for this layer, $\|$ is the concatenation operation, and $a\in\mathbb{R}^{2d}$ is a shared attentional weight vector.

Figure 1: Overview of Hierarchical Attentive Heterogeneous Graph

To make the attention coefficients easily comparable across different nodes, we normalize them as follows:

$$
\alpha_{ij}=\mathrm{softmax}_{j}(e_{ij})=\frac{\exp(e_{ij})}{\sum_{k\in\mathcal{N}_{i}}\exp(e_{ik})}
$$

where $\mathcal{N}_{i}$ denotes the neighbors of node $i$ according to adjacency matrix $A$ .

Then, the normalized attention coefficients are used to compute a linear combination of features.

$$
x_{i}^{\prime}=\sigma\Big(\sum_{j\in\mathcal{N}_{i}}\alpha_{ij}W x_{j}\Big)+W^{\prime}x_{i}
$$

where $\sigma$ is a nonlinear activation function and $W^{\prime}$ is a separate weight matrix that distinguishes the information of $x_{i}$ itself from that of its neighbors.
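
A single-head version of this layer can be sketched in plain Python, assuming $\sigma$ is ELU (the nonlinearity is not specified here) and a LeakyReLU negative slope of 0.2 (the value used in the original GAT paper, assumed here). The helper names are hypothetical, and `adj[i][j]` marks $j\in\mathcal{N}_i$.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]


def matvec(w: Mat, x: Vec) -> Vec:
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def leaky_relu(z: float, slope: float = 0.2) -> float:
    return z if z > 0 else slope * z


def elu(z: float) -> float:
    return z if z > 0 else math.exp(z) - 1.0


def gat_layer(x: List[Vec], adj: List[List[bool]], w: Mat, w_self: Mat, a: Vec) -> List[Vec]:
    """One single-head GAT layer: x_i' = sigma(sum_j alpha_ij W x_j) + W' x_i."""
    wx = [matvec(w, x_i) for x_i in x]  # W x_j for every node
    out = []
    for i in range(len(x)):
        neighbors = [j for j in range(len(x)) if adj[i][j]]
        agg = [0.0] * len(w)
        if neighbors:
            # e_ij = LeakyReLU(a^T [W x_i || W x_j]); list '+' is concatenation here.
            e = [leaky_relu(sum(a_k * v for a_k, v in zip(a, wx[i] + wx[j]))) for j in neighbors]
            m = max(e)
            exps = [math.exp(e_ij - m) for e_ij in e]  # numerically stable softmax over N_i
            total = sum(exps)
            for ex, j in zip(exps, neighbors):
                alpha = ex / total
                agg = [g + alpha * v for g, v in zip(agg, wx[j])]
        # sigma is taken to be ELU (assumption); isolated nodes keep only W' x_i.
        out.append([elu(g) + s for g, s in zip(agg, matvec(w_self, x[i]))])
    return out
```

With $a=0$ every neighbor gets the same weight, so the layer reduces to a mean over $\mathcal{N}_i$; a learned $a$ lets the layer weight neighbors differently.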

3.4 Message Passing

As shown in Figure 1, HAHSum consists of an ALBERT Encoder, an Abstract Layer, a Redundancy Layer, and an Output Layer. We next describe how information propagates through these layers.

3.4.1 ALBERT Encoder

To learn contextual representations of words, we use a pre-trained ALBERT (Lan et al., 2019) for summarization, with an architecture similar to BERTSUMEXT (Liu and Lapata, 2019). The output of the ALBERT encoder contains word hidden states $h^{word}$ and sentence hidden states $h^{sent}$. Because ALBERT takes subword units as input, one word may correspond to multiple hidden states. To represent each word accurately with these hidden states, we apply average pooling over the ALBERT outputs of each word's subwords.
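
The pooling step can be sketched as follows, assuming a token-to-word mapping in which special tokens map to `None` (the same convention as `word_ids()` on a Hugging Face fast-tokenizer encoding). The function name is hypothetical, and real encoder outputs would be tensors rather than Python lists.

```python
from typing import List, Optional


def average_subword_states(hidden: List[List[float]],
                           word_ids: List[Optional[int]]) -> List[List[float]]:
    """Average the hidden states of all subword tokens that belong to the same word.

    word_ids[t] is the index of the word token t came from, or None for special
    tokens such as [CLS] and [SEP], which are skipped.
    """
    n_words = max((w for w in word_ids if w is not None), default=-1) + 1
    dim = len(hidden[0]) if hidden else 0
    sums = [[0.0] * dim for _ in range(n_words)]
    counts = [0] * n_words
    for state, w in zip(hidden, word_ids):
        if w is None:
            continue
        sums[w] = [s + h for s, h in zip(sums[w], state)]
        counts[w] += 1
    # Divide each accumulated vector by the number of subwords of that word.
    return [[s / c for s in row] if c else row for row, c in zip(sums, counts)]
```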

3.4.2 Abstract Layer

The abstract layer contains three GAT sublayers, as described in Section 3.3: two for the word-level graph and one for word-sentence transduction. Inspired by Kipf and Welling (2017), the first two GAT sublayers learn the hidden state of each word from its second-order (two-hop) neighbors:

$$
\mathcal{W}=\mathrm{GAT}(\mathrm{GAT}(h^{word},A_{word}),A_{word})
$$

where $A_{w o r d}$ denotes the adjacency matrix of the word-level subgraph, and $\mathcal{W}$ denotes the hidden state of the word nodes.
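
Stacking two GAT sublayers on $A_{word}$ means a word's final state can draw on nodes up to two hops away. The sketch below computes that receptive field from a Boolean adjacency matrix; `k_hop_neighbors` and the undirected chain graph in the test are illustrative assumptions, not part of the model.

```python
from typing import List


def k_hop_neighbors(adj: List[List[bool]], k: int) -> List[List[int]]:
    """For each node i, the nodes reachable within k hops along adj (excluding i)."""
    n = len(adj)
    direct = [{j for j in range(n) if adj[i][j] and j != i} for i in range(n)]
    result = [set(d) for d in direct]
    frontier = [set(d) for d in direct]
    for _ in range(k - 1):
        next_frontier = []
        for i in range(n):
            # Expand one more hop from the nodes first reached at the previous hop.
            new = set().union(*(direct[j] for j in frontier[i])) - result[i] - {i}
            result[i] |= new
            next_frontier.append(new)
        frontier = next_frontier
    return [sorted(r) for r in result]
```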

The third GAT sublayer is to learn the initial representation of each sentence node, derived from the word hidden states:

$$
[\mathcal{W},S_{abs}]=\mathrm{GAT}([\mathcal{W},h^{sent}],A_{word-sent})
$$

where $A_{word-sent}$ denotes the adjacency matrix of the word-sentence subgraph, and $S_{abs}$ (abs stands for abstract) is the initial representation of the sentence nodes.

3.4.3 Redundancy Layer

The ALBERT encoder and the abstract layer specialize in modeling salience through the overall contextual representations of sentences, but they cannot capture redundancy, which involves dependencies among the target labels. The redundancy layer therefore models redundancy by iteratively updating the sentence representations with redundancy message passing, a process supervised by the ground-truth labels.

This layer operates only on sentence-level information $\mathcal{S}=\{h_{1},h_{2},\dots,h_{N}\}$ and iteratively updates it $L$ times using classification scores:

$$
\begin{aligned}
\widetilde{\mathcal{S}}_{re}^{l}&=\mathrm{GAT}(\mathrm{GAT}(\mathcal{S}_{re}^{l},A_{sent}),A_{sent})\\
P(y_{i}=1\mid\widetilde{\mathcal{S}}_{re}^{l})&=\sigma\big(\mathrm{FFN}(\mathrm{LN}(\widetilde{h}_{i}^{l}+\mathrm{MHAtt}(\widetilde{h}_{i}^{l})))\big)
\end{aligned}
$$

where $\mathcal{S}_{re}^{0}=S_{abs}$ (re stands for redundancy) and the final output is $\mathcal{S}_{re}^{L}$; $W_{c}$ and $W_{r}$ are weight parameters; and FFN, LN, and MHAtt denote a feed-forward network, layer normalization, and a multi-head attention layer, respectively.

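We update $\widetilde{h}_{i}^{l}$ by removing the redundancy information $g_{i}^{l}$, which is computed from the neighbors' states, each weighted by that neighbor's predicted probability of being selected and averaged over the neighborhood: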

$$
\begin{aligned}
g_{i}^{l}&=\frac{1}{|\mathcal{N}_{i}|}\sum_{j\in\mathcal{N}_{i}}P(y_{j}=1\mid\widetilde{\mathcal{S}}_{re}^{l})\,\widetilde{h}_{j}^{l}\\
h_{i}^{l+1}&=W_{c}^{l}*\widetilde{h}_{i}^{l}-\widetilde{h}_{i}^{l\top}W_{r}^{l}\tanh(g_{i}^{l})\\
\mathcal{S}_{re}^{l+1}&=(h_{1}^{l+1},h_{2}^{l+1},\dots,h_{|\mathcal{S}|}^{l+1})
\end{aligned}
$$

where $\mathcal{N}_{i}$ is the redundancy receptive field of node $i$, determined by $A_{sent}$.
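
The redundancy message and the un-gated update can be sketched for one iteration as below. Two readings are assumptions: `W_c * h` is treated as a matrix-vector product, and because $\widetilde{h}_i^{l\top}W_r^l\tanh(g_i^l)$ is a scalar, it is subtracted from every component of $W_c^l\widetilde{h}_i^l$. The probabilities would come from the classifier in the earlier equation; here they are passed in directly, and `redundancy_update` is a hypothetical name.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]


def matvec(w: Mat, x: Vec) -> Vec:
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def redundancy_update(h: List[Vec], probs: List[float], adj: List[List[bool]],
                      w_c: Mat, w_r: Mat) -> List[Vec]:
    """h: sentence states h~_i^l; probs: P(y_j = 1 | S~_re^l); adj: A_sent."""
    n, d = len(h), len(h[0])
    new_h = []
    for i in range(n):
        neighbors = [j for j in range(n) if adj[i][j]]
        # g_i^l: neighbour states weighted by selection probability, averaged over N_i.
        g = [0.0] * d
        for j in neighbors:
            g = [g_k + probs[j] * h_k for g_k, h_k in zip(g, h[j])]
        if neighbors:
            g = [g_k / len(neighbors) for g_k in g]
        # Scalar redundancy score h~_i^T W_r tanh(g_i).
        tanh_g = [math.tanh(g_k) for g_k in g]
        redundancy = sum(h_k * v for h_k, v in zip(h[i], matvec(w_r, tanh_g)))
        # W_c * h~_i read as a matrix-vector product; the scalar is subtracted component-wise.
        new_h.append([c - redundancy for c in matvec(w_c, h[i])])
    return new_h
```

A sentence whose neighbors are likely to be selected and resemble it receives a larger subtraction, which is the intended redundancy penalty; an isolated sentence is only transformed by $W_c^l$.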

Specifically, we employ a gating mechanism (Gilmer et al., 2017) for the information update, for two purposes: 1) to alleviate the over-smoothing problem of GNNs; and 2) to keep the original overall information from ALBERT accessible to the final classifier.

$$
\begin{aligned}
\widetilde{h}_{i}^{l\prime}&=W_{c}^{l}*\widetilde{h}_{i}^{l}-\widetilde{h}_{i}^{l\top}W_{r}^{l}\tanh(g_{i}^{l})\\
p_{g}^{l}&=\sigma\big(f_{g}^{l}([\widetilde{h}_{i}^{l};\widetilde{h}_{i}^{l\prime}])\big)\\
h_{i}^{l+1}&=\widetilde{h}_{i}^{l}\odot p_{g}^{l}+\widetilde{h}_{i}^{l\prime}\odot(1-p_{g}^{l})
\end{aligned}
$$

where $\odot$ denotes element-wise multiplication.
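
A sketch of the gate for a single node follows, assuming $f_g^l$ is an affine map from $\mathbb{R}^{2d}$ to $\mathbb{R}^{d}$ so that each dimension gets its own gate (the section does not specify $f_g$). A gate value near 1 keeps the pre-update state $\widetilde{h}_i^l$, which carries the ALBERT-derived information; a value near 0 adopts the redundancy-reduced state $\widetilde{h}_i^{l\prime}$. The names `gated_update`, `w_g`, and `b_g` are hypothetical.

```python
import math
from typing import List

Vec = List[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def gated_update(h_old: Vec, h_new: Vec, w_g: List[Vec], b_g: Vec) -> Vec:
    """h_old: h~_i^l; h_new: h~_i^l' (redundancy-reduced state).

    f_g is assumed to be an affine map R^{2d} -> R^d, giving one gate per dimension.
    """
    concat = h_old + h_new  # [h~_i^l ; h~_i^l'] via list concatenation
    p_g = [sigmoid(sum(w * v for w, v in zip(row, concat)) + b) for row, b in zip(w_g, b_g)]
    # h_i^{l+1} = h~_i^l * p_g + h~_i^l' * (1 - p_g), element-wise
    return [o * p + n * (1.0 - p) for o, n, p in zip(h_old, h_new, p_g)]
```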

3.5 Objective Function

Previous approaches to modeling salience and redundancy are autoregressive: observations from previous time steps are used to predict the value at the current time step:

$$
P(Y\mid S)=\prod_{t=1}^{|S|}P(y_{t}\mid S,y_{1},y_{2},\dots,y_{t-1})
$$

Autoregressive models have several disadvantages: 1) errors made during inference propagate to subsequent steps; 2) label $y_{t}$ depends only on the previous labels $y_{<t}$, so bidirectional dependencies are not considered; and 3) it is difficult to decide how many sentences to extract.

Table 2: Data Statistics: CNN/Daily Mail, NYT, Newsroom

| Dataset | avg. doc length (words, sentences) | avg. summary length |

|----------------