GraFITi: Graphs for Forecasting Irregularly Sampled Time Series

GraFITi: 用于预测不规则采样时间序列的图模型

Abstract

摘要

Forecasting irregularly sampled time series with missing values is a crucial task for numerous real-world applications such as healthcare, astronomy, and climate sciences. Stateof-the-art approaches to this problem rely on Ordinary Differential Equations (ODEs) which are known to be slow and often require additional features to handle missing values. To address this issue, we propose a novel model using Graphs for Forecasting Irregularly Sampled Time Series with missing values which we call GraFITi. GraFITi first converts the time series to a Sparsity Structure Graph which is a sparse bipartite graph, and then reformulates the forecasting problem as the edge weight prediction task in the graph. It uses the power of Graph Neural Networks to learn the graph and predict the target edge weights. GraFITi has been tested on 3 real-world and 1 synthetic irregularly sampled time series dataset with missing values and compared with various stateof-the-art models. The experimental results demonstrate that GraFITi improves the forecasting accuracy by up to $17%$ and reduces the run time up to 5 times compared to the state-ofthe-art forecasting models.

预测具有缺失值的不规则采样时间序列是医疗、天文和气候科学等众多现实应用中的关键任务。该领域最先进的方法依赖于常微分方程 (ODE) ,但这种方法速度较慢且通常需要额外特征来处理缺失值。为解决这一问题,我们提出了一种使用图结构预测具有缺失值的不规则采样时间序列的新模型 GraFITi。GraFITi 首先将时间序列转换为稀疏结构图 (Sparsity Structure Graph) ——一种稀疏二分图,然后将预测问题重新定义为图中的边权重预测任务。该模型利用图神经网络 (Graph Neural Networks) 的学习能力来预测目标边权重。GraFITi 已在3个真实世界和1个合成的不规则采样时间序列数据集(均含缺失值)上进行了测试,并与多种最先进模型进行了对比。实验结果表明,相较于现有最优预测模型,GraFITi 将预测精度最高提升17%,并将运行时间缩短至原来的1/5。

1 Introduction

1 引言

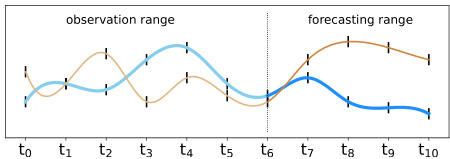

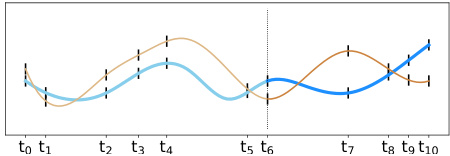

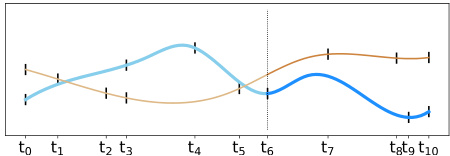

Time series forecasting predicts future values based on past observations. While extensively studied, most research focuses on regularly sampled and fully observed multivariate time series (MTS) (Lim and Zohren 2021; Zeng et al. 2022; De Gooijer and Hyndman 2006). Limited attention is given to irregularly sampled time series with missing values (IMTS) which is commonly seen in many real-world applications. IMTS has independently observed channels at irregular intervals, resulting in sparse data alignment. The focus of this work is on forecasting IMTS. Additionally, there is another type called irregular multivariate time series which is fully observed but with irregular time intervals (Figure 1 illustrates the differences) which is not the interest of this paper.

时间序列预测基于过去观测值预测未来数值。虽然该领域研究广泛,但大多数研究集中于规则采样且完全观测的多元时间序列(MTS) (Lim和Zohren 2021; Zeng等2022; De Gooijer和Hyndman 2006)。对于现实应用中常见的不规则采样且含缺失值的时间序列(IMTS)关注有限。IMTS的各通道以不规则间隔独立观测,导致数据对齐稀疏。本文工作聚焦于IMTS预测。此外还存在另一种完全观测但时间间隔不规则的多元时间序列(图1展示了差异),这类数据不在本文研究范围内。

Ordinary Differential Equations (ODE) model continuous time series predicting system evolution over time based on the rate of change of state variables as shown in Eq. 1.

常微分方程 (ODE) 模型通过状态变量的变化率预测系统随时间演化的连续时间序列,如式1所示。

$$

{\frac{\mathrm{d}}{\mathrm{d}t}}x(t)=f(t,x(t))

$$

$$

{\frac{\mathrm{d}}{\mathrm{d}t}}x(t)=f(t,x(t))

$$

(a) Forecasting regular multivariate time series (MTS)

(a) 预测常规多元时间序列 (MTS)

(b) Forecasting irregular multivariate time series (c) Forecasting irregularly sampled multivariate time series with missing values (IMTS)

(b) 预测不规则多元时间序列

(c) 预测带有缺失值的不规则采样多元时间序列 (IMTS)

Figure 1: (a) multivariate time series forecasting, (b) irregular multivariate time series forecasting, (c) forecasting irregularly sampled multivariate time series with missing values. In all cases the observation range is from time $t_{0}$ to $t_{6}$ and the forecasting range is from time $t_{7}$ to $t_{10}$ .

图 1: (a) 多元时间序列预测, (b) 不规则多元时间序列预测, (c) 含缺失值的不规则采样多元时间序列预测。所有情况下观测时间范围为 $t_{0}$ 至 $t_{6}$, 预测时间范围为 $t_{7}$ 至 $t_{10}$。

ODE-based models (Schirmer et al. 2022; De Brouwer et al. 2019; Bilos et al.2021; Scholz et al. 2023) are able to forecast at arbitrary time points. However, ODE models can be slow because of their auto-regressive nature and comput ation ally expensive numerical integration process. Also, some ODE models cannot directly handle missing values in the observation space, hence, they often rely on missing value indicators (De Brouwer et al. 2019; Bilos et al. 2021) which are given as additional channels in the data.

基于ODE的模型 (Schirmer et al. 2022; De Brouwer et al. 2019; Bilos et al. 2021; Scholz et al. 2023) 能够在任意时间点进行预测。然而,由于ODE模型的自回归特性和计算成本高昂的数值积分过程,其运行速度可能较慢。此外,部分ODE模型无法直接处理观测空间中的缺失值,因此它们通常依赖作为数据附加通道提供的缺失值指示器 (De Brouwer et al. 2019; Bilos et al. 2021)。

In this work, we propose a novel model called GraFITi: graphs for forecasting IMTS. GraFITi converts IMTS data into a Sparsity Structure Graph and formulates forecasting as edge weight prediction in the graph. This approach represents channels and timepoints as disjoint nodes connected by edges in a bipartite graph. GraFITi uses multiple graph neural network (GNN) layers with attention and feed-forward mechanisms to learn node and edge interactions. Our Sparsity Structure Graph, by design, provides a more dynamic and adaptive approach to process IMTS data, and improves the performance of the forecasting task.

在本工作中,我们提出了一种名为GraFITi的新模型:用于预测不完整多变量时间序列(IMTS)的图结构。GraFITi将IMTS数据转换为稀疏结构图(Sparsity Structure Graph),并将预测问题转化为图中边权重的预测任务。该方法通过二分图将通道和时间点表示为由边连接的不相交节点。GraFITi采用具有注意力机制和前馈机制的多层图神经网络(GNN)来学习节点和边的交互关系。我们设计的稀疏结构图提供了一种更动态、自适应的方法来处理IMTS数据,从而提升了预测任务的性能。

We evaluated GraFITi for forecasting IMTS using 3 realworld and 1 synthetic dataset. Comparing it with state-ofthe-art methods for IMTS and selected baselines for MTS, GraFITi provides superior forecasts.

我们使用3个真实世界数据集和1个合成数据集评估了GraFITi在IMTS预测中的表现。与IMTS领域的最先进方法以及选定的MTS基线模型相比,GraFITi提供了更优的预测结果。

Our contributions are summarized as follows:

我们的贡献总结如下:

• We introduce a novel representation of irregularly sampled multivariate time series with missing values (IMTS) as sparse bipartite graphs, the Sparsity Structure Graph, that efficiently can handle missing values in the observation space of the time series (Section 4). • We propose a novel model based on this representation, GraFITi, that can leverage any graph neural network to perform time series forecasting for IMTS (section 5). • We provide extensive experimental evaluation on 3 real world and 1 synthetic dataset that shows that GraFITi improves the forecasting accuracy of the best existing models by up to $17%$ and the run time improvement up to 5 times (section 6).

• 我们提出了一种新颖的不规则采样多变量时间序列 (IMTS) 表示方法——稀疏二分图 (Sparsity Structure Graph),它能高效处理时间序列观测空间中的缺失值 (第4节)。

• 基于该表示方法,我们提出了一个新模型 GraFITi,可利用任意图神经网络对 IMTS 进行时间序列预测 (第5节)。

• 我们在3个真实数据集和1个合成数据集上进行了大量实验评估,结果表明 GraFITi 将现有最佳模型的预测准确率最高提升 $17%$,运行时间最高加快5倍 (第6节)。

We provide the implementation at https://anonymous.4open.science/r/GraFITi-8F7B.

我们在 https://anonymous.4open.science/r/GraFITi-8F7B 提供了实现。

2 Related Work

2 相关工作

This work focus on the forecasting of irregularly sampled multivariate time series data with missing values using graphs. In this section, we discuss the research done in: forecasting models for IMTS, Graphs for forecasting MTS, and models for edge weight prediction in graphs.

本研究聚焦于利用图结构预测具有缺失值的不规则采样多元时间序列数据(IMTS)。本节将探讨以下领域的研究成果: IMTS预测模型、基于图的MTS预测方法, 以及图中边权重预测模型。

Forecasting of IMTS Research on IMTS has mainly focused on classification (Li and Marlin 2015; Lipton, Kale, and Wetzel 2016; Rubanova, Chen, and Duvenaud 2019; Shukla and Marlin 2021; Horn et al. 2020; Tashiro et al. 2021) and interpolation (Che et al. 2018; Rubanova, Chen, and Duvenaud 2019; Shukla and Marlin 2021; Tashiro et al. 2021; Shukla and Marlin 2022; Yalavarthi, Burchert, and Schmidt-Thieme 2022), with limited attention to forecasting tasks. Existing models for these tasks mostly rely on Neural ODEs (Che et al. 2018). In Latent-ODE (Rubanova, Chen, and Duvenaud 2019), an ODE was combined with a Recurrent Neural Network (RNN) for updating the state at the point of new observation. The GRU-ODE-Bayes model (De Brouwer et al. 2019) improved upon this approach by incorporating GRUs, ODEs, and Bayesian inference for parameter estimation. The Continuous Recurrent Unit (CRU) (Schirmer et al. 2022) based model uses a state-space model with stochastic differential equations and kalman filtering. The recent LinODENet model (Scholz et al. 2023) enhanced CRU by using linear ODEs and ensure self-consistency in the forecasts. Another branch of study involves Neural Flows (Bilos et al. 2021), which use neural networks to model ODE solution curves, rendering the ODE integrator unnecessary. Among various flow architectures, GRU flows have shown good performance.

关于IMTS的研究主要集中在分类 (Li and Marlin 2015; Lipton, Kale, and Wetzel 2016; Rubanova, Chen, and Duvenaud 2019; Shukla and Marlin 2021; Horn et al. 2020; Tashiro et al. 2021) 和插值 (Che et al. 2018; Rubanova, Chen, and Duvenaud 2019; Shukla and Marlin 2021; Tashiro et al. 2021; Shukla and Marlin 2022; Yalavarthi, Burchert, and Schmidt-Thieme 2022) 任务上,对预测任务的关注相对有限。现有模型大多基于神经常微分方程 (Neural ODEs) (Che et al. 2018) 。Latent-ODE (Rubanova, Chen, and Duvenaud 2019) 将ODE与循环神经网络 (RNN) 结合,用于更新新观测点的状态。GRU-ODE-Bayes模型 (De Brouwer et al. 2019) 通过引入门控循环单元 (GRU) 、ODE和贝叶斯推理进行参数估计,改进了这一方法。基于连续循环单元 (CRU) (Schirmer et al. 2022) 的模型采用随机微分方程和卡尔曼滤波的状态空间模型。近期提出的LinODENet模型 (Scholz et al. 2023) 通过线性ODE增强了CRU,并确保预测的自洽性。另一研究方向是神经流 (Neural Flows) (Bilos et al. 2021) ,该技术用神经网络建模ODE解曲线,从而无需ODE积分器。在多种流架构中,GRU流表现出优越性能。

Using graphs for MTS In addition to CNNs, RNNs, and Transformers, graph-based methods have been studied for IMTS forecasting. Early GNN-based approaches, such as (Wu et al. 2020), required a pre-defined adjacency matrix to establish relationships between the time series channels. More recent models like the Spectral Temporal Graph Neural Network (Cao et al. 2020) (STGNN) and the TimeAware Zigzag Network (Chen, Segovia, and Gel 2021) improved on this by using GNNs to capture dependencies between variables in the time series. On the other hand, Satorras, Rangapuram, and Janus chow ski (2022) proposed a bipartite setup with induced nodes to reduce graph complexity, built solely from the channels. Existing graph-based time series forecasting models focus on learning correlations or similarities between channels, without fully exploiting the graph structure. Recently, GNNs were used for imputation and reconstruction of MTS with missing values, treating MTS as sequences of graphs where nodes represent sensors and edges denote correlation (Cini, Marisca, and Alippi 2022; Marisca, Cini, and Alippi 2022). Similar to previous studies, they learn similarity or correlation among channels.

使用图结构处理多元时间序列

除CNN、RNN和Transformer外,基于图的方法也被用于多元时间序列预测。早期基于图神经网络(GNN)的方法(如Wu等人2020年研究)需要预定义邻接矩阵来建立时间序列通道间的关系。近期模型如谱时序图神经网络(Cao等人2020,STGNN)和时序感知锯齿网络(Chen、Segovia与Gel 2021)通过GNN捕捉时间序列变量间的依赖关系进行了改进。另一方面,Satorras、Rangapuram与Januschowski(2022)提出采用诱导节点的二分图结构来降低复杂度,该结构仅由通道构建而成。现有基于图的时间序列预测模型主要关注学习通道间的相关性或相似性,未能充分利用图结构。近期研究(Cini、Marisca与Alippi 2022;Marisca、Cini与Alippi 2022)将GNN用于含缺失值的多元时间序列插补与重建,将多元时间序列视为图序列(节点代表传感器,边表示相关性)。与先前研究类似,这些方法主要学习通道间的相似性或相关性。

Graph Neural Networks for edge weight prediction Graph Neural Networks (GNNs) are designed to process graph-based data. While most GNN literature such as Graph Convolutional Networks, Graph Attention Networks focuses on node classification (Kipf and Welling 2017; Velickovic et al. 2017), a few studies have addressed edge weight prediction. Existing methods (De Sa and Prudencio 2011; Fu et al. 2018) in this domain rely on latent features and graph heuristics, such as node similarity (Zhao et al. 2015), proximity measures (Murata and Moriyasu 2007), and local rankings (Yang and Wang 2020). Recently, deep learning-based approaches (Hou and Holder 2017; Zulaika et al. 2022; You et al. 2020) were proposed. Another branch of research deals with edge weight prediction in weighted signed graphs (Kumar et al. 2016) tailored to social networks. However, all proposed methods typically operate in a trans duct ive setup with a single graph split into training and testing data, which may not be suitable for cases involving multiple graphs like ours, where training and evaluation need to be done on separate graph partitions.

用于边权重预测的图神经网络

图神经网络 (Graph Neural Networks, GNNs) 专为处理基于图结构的数据而设计。虽然大多数GNN文献(如图卷积网络、图注意力网络)主要关注节点分类 (Kipf and Welling 2017; Velickovic et al. 2017),但已有少量研究涉及边权重预测。该领域的现有方法 (De Sa and Prudencio 2011; Fu et al. 2018) 依赖于潜在特征和图启发式方法,例如节点相似性 (Zhao et al. 2015)、邻近度测量 (Murata and Moriyasu 2007) 和局部排序 (Yang and Wang 2020)。近年来,基于深度学习的方法 (Hou and Holder 2017; Zulaika et al. 2022; You et al. 2020) 被提出。另一研究方向针对社交网络场景,研究带符号加权图中的边权重预测问题 (Kumar et al. 2016)。然而,现有方法通常采用直推式 (transductive) 设定,即将单个图划分为训练集和测试集,这不适用于涉及多个图的情况(如本文场景),此类场景需要在独立的图分区上进行训练和评估。

3 The Time Series Forecasting Problem

3 时间序列预测问题

An irregularly sampled multivariate times series with missing values, is a finite sequence of pairs $\boldsymbol{S}\quad=\quad$ $(t_{n},x_{n}){n=1:N}$ where $t_{n}\in\mathbb{R}$ is the $n$ -th observation time- point and $x_{n}\in(\mathbb{R}\cup{\mathtt{N a N}})^{C}$ is the $n$ -th observation event. Components with $x_{n,c}\neq\mathtt{N a N}$ represent observed values by channel $c$ at event time $t_{n}$ , and $x_{n,c}=\tt N a N$ represents a missing value. $C$ is the total number of channels.

一个具有缺失值的不规则采样多元时间序列,可以表示为一个有限的序列对 $\boldsymbol{S}\quad=\quad$ $(t_{n},x_{n}){n=1:N}$ ,其中 $t_{n}\in\mathbb{R}$ 是第 $n$ 个观测时间点, $x_{n}\in(\mathbb{R}\cup{\mathtt{N a N}})^{C}$ 是第 $n$ 个观测事件。当 $x_{n,c}\neq\mathtt{N a N}$ 时,表示通道 $c$ 在时间 $t_{n}$ 处观测到的值;若 $x_{n,c}=\tt N a N$ 则表示缺失值。 $C$ 为通道总数。

A time series query is a pair $(Q,S)$ of a time series $S$ and a sequence $Q=(q_{k},c_{k}){k=1:K}$ such that the value of channel $c_{k}\in{1,\ldots,C}$ is to be predicted at time $q_{k}\in\mathbb{R}$ . We call a query a forecasting query, if all its query timepoints are after the last timepoint of the time series $S$ , an imputation query if all of them are before the last timepoint of $S$ and a mixed query otherwise. In this paper, we are interested in forecasting only.

时间序列查询是一对 $(Q,S)$,其中 $S$ 是一个时间序列,$Q=(q_{k},c_{k}){k=1:K}$ 是一个序列,要求在时间 $q_{k}\in\mathbb{R}$ 预测通道 $c_{k}\in{1,\ldots,C}$ 的值。如果查询的所有时间点都在时间序列 $S$ 的最后一个时间点之后,则称之为预测查询;如果所有时间点都在 $S$ 的最后一个时间点之前,则称为填补查询;否则称为混合查询。本文仅关注预测查询。

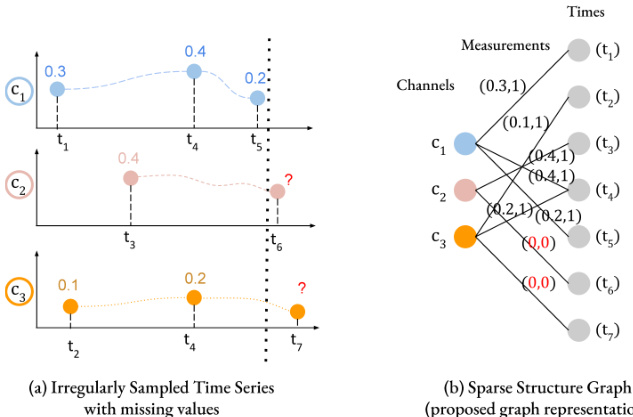

Figure 2: Representation of IMTS as Sparsity Structure Graph. (b) is the Sparsity Structure Graph representation of (a) where times and channels are the nodes and observation measurements are the edges with observations values. Target edges are provided with $(0,0)$ .

图 2: IMTS作为稀疏结构图的表示。(b)是(a)的稀疏结构图表示,其中时间和通道是节点,观测测量值是带有观测值的边。目标边被赋予$(0,0)$。

A vector $y\in\mathbb{R}^{K}$ we call an answer to the forecasting query: $y_{k}$ is understood as the predicated value of time series $S$ at time $q_{k}$ in channel $c_{k}$ . The difference between two answers $y,y^{\prime}$ to the same query can be measured by any loss function, for example by a simple squared error

向量 $y\in\mathbb{R}^{K}$ 被称为预测查询的答案:$y_{k}$ 表示时间序列 $S$ 在通道 $c_{k}$ 中时间点 $q_{k}$ 处的预测值。对于同一查询的两个答案 $y,y^{\prime}$ 之间的差异,可以通过任何损失函数来衡量,例如简单的平方误差。

$$

\ell(y,y^{\prime}):=\frac{1}{K}\sum_{k=1}^{K}(y_{k}-y_{k}^{\prime})^{2}

$$

$$

\ell(y,y^{\prime}):=\frac{1}{K}\sum_{k=1}^{K}(y_{k}-y_{k}^{\prime})^{2}

$$

The time series forecasting problem is as follows: given a dataset of pairs $D:=(\bar{Q_{i}},\bar{S_{i}},y_{i})_{i=1:M}$ of forecasting queries and ground truth answers from an unknown distribution $p^{\mathrm{data}}$ and a loss function $\ell$ on forecasting answers, find a forecasting model $\hat{y}$ that maps queries $(Q,S)$ to answers $\hat{y}(Q,S)$ such that the expected loss between ground truth answers and forecasted answers is minimal:

时间序列预测问题定义如下:给定来自未知分布 $p^{\mathrm{data}}$ 的预测查询与真实答案配对数据集 $D:=(\bar{Q_{i}},\bar{S_{i}},y_{i})_{i=1:M}$ ,以及预测答案的损失函数 $\ell$ ,需找到一个预测模型 $\hat{y}$ ,该模型将查询 $(Q,S)$ 映射为预测答案 $\hat{y}(Q,S)$ ,使得真实答案与预测答案之间的期望损失最小:

$$

\mathcal{L}(\boldsymbol{\hat{y}};\boldsymbol{p}^{\mathrm{data}}):=\mathbb{E}_{(Q,S,y)\sim p^{\mathrm{data}}}\big[\ell(\boldsymbol{y},\boldsymbol{\hat{y}}(Q,S))\big]

$$

$$

\mathcal{L}(\boldsymbol{\hat{y}};\boldsymbol{p}^{\mathrm{data}}):=\mathbb{E}_{(Q,S,y)\sim p^{\mathrm{data}}}\big[\ell(\boldsymbol{y},\boldsymbol{\hat{y}}(Q,S))\big]

$$

4 Sparsity Structure Graph Representation

4 稀疏结构图表示

We describe the proposed Sparsity Structure Graph represent ation and convert the forecasting problem as edge weight prediction problem. Using the proposed representation:

我们描述了所提出的稀疏结构图表示方法,并将预测问题转化为边权重预测问题。利用该表示方法:

• We explicitly obtain the relationship between the channels and times via observation values allowing the inductive bias of the data to pass into the model. • We elegantly handle the missing values in IMTS in the observation space by connecting edges only for the observed values.

• 我们通过观测值明确获取通道与时间的关系,使数据的归纳偏差能够传递到模型中。

• 我们通过在观测空间中仅对观测值建立连接边,优雅地处理了IMTS中的缺失值问题。

Missing values represented by NaN-values are unsuited for standard arithmetical operations. Therefore, they are often encoded by dedicated binary variables called missing value indicators or masks: $\overline{{x_{n}}}\in(\mathbb{R}\times{0,1})^{C}$ . Here, $(x_{n,c,1},1)$ encodes an observed value and $(0,0)$ encodes a missing value. Usually, both components are seen as different scalar variables: the real value $x_{n,c,1}$ and its binary missing value indicator / mask $x_{n,c,2}$ , the relation between both is dropped and observations simply modeled as $x_{n}\in\mathbb{R}^{2C}$ .

用NaN值表示的缺失值不适合进行标准算术运算。因此,它们通常通过专门的二元变量(称为缺失值指示器或掩码)进行编码:$\overline{{x_{n}}}\in(\mathbb{R}\times{0,1})^{C}$。其中,$(x_{n,c,1},1)$表示观测值,$(0,0)$表示缺失值。通常,这两个分量被视为不同的标量变量:实数值$x_{n,c,1}$及其二元缺失值指示器/掩码$x_{n,c,2}$,两者之间的关系被忽略,观测值简化为$x_{n}\in\mathbb{R}^{2C}$。

We propose a novel representation of a time series $S$ using a bipartite graph $G\doteq(V,E)$ . The graph has nodes for channels and timepoints, denoted as $V_{C}$ and $V_{T}$ respectively $(V=V_{C}\cup V_{T})$ . Edges $E\subseteq V_{C}\times V_{T}$ in the graph connect each channel node to its corresponding timepoint node with an observation. Edge features $F^{\mathrm{edge}}$ are the observation values and node features $F^{\mathrm{node}}$ are the channel IDs and timepoints. Nodes $V_{C}:={1,\dots,C}$ represent channels and nodes 。

我们提出了一种新颖的时间序列$S$表示方法,采用二分图$G\doteq(V,E)$。该图包含通道节点和时间点节点,分别记为$V_{C}$和$V_{T}$ $(V=V_{C}\cup V_{T})$。图中的边$E\subseteq V_{C}\times V_{T}$将每个通道节点与其对应观测值的时间点节点相连。边特征$F^{\mathrm{edge}}$为观测值,节点特征$F^{\mathrm{node}}$为通道ID和时间点。通道节点$V_{C}:={1,\dots,C}$表示通道。

For an IMTS, missing values make the bipartite graph sparse, meaning $|E|\ll C\cdot N$ . However, for a fully observed time series, where there are no missing values, i.e. $|E|=C\cdot N$ , the graph is a complete bipartite graph.

对于一个IMTS (Incomplete Multivariate Time Series) ,缺失值会导致二分图变得稀疏,这意味着 $|E|\ll C\cdot N$ 。然而,对于一个完全观测到的时间序列,即没有缺失值的情况 $|E|=C\cdot N$ ,该图就是一个完全二分图。

We extend this representation to time series queries $(S,Q)$ by adding additional edges between queried channels and timepoints, and distinguish observed and queried edges by an additional binary edge feature called target indicator. Note that the target indicator used to differentiate the observed edge and target edge is different from the missing value indicator which is used to represent the missing observations in the observation space. Given a query ${\cal Q}={}$ $(q_{k},c_{k}){k=1:K}$ , let $(t_{1}^{\prime},\dots,t_{K^{\prime}}^{\prime})$ be an enumeration of the unique queried timepoints $q_{k}$ . We introduce additional nodes $V_{Q}:={C+N+1,\dots,C+N+K^{\prime}}$ 。

我们将这种表示扩展到时间序列查询 $(S,Q)$,方法是在查询的通道和时间点之间添加额外的边,并通过一个称为目标指示器的二元边特征来区分已观测边和查询边。需要注意的是,用于区分已观测边和目标边的目标指示器,与用于表示观测空间中缺失观测值的缺失值指示器不同。给定查询 ${\cal Q}={}$ $(q_{k},c_{k}){k=1:K}$,令 $(t_{1}^{\prime},\dots,t_{K^{\prime}}^{\prime})$ 为唯一查询时间点 $q_{k}$ 的枚举。我们引入额外的节点 $V_{Q}:={C+N+1,\dots,C+N+K^{\prime}}$。

where $\begin{array}{r l r}{\left(t_{i-N-C}^{\prime},j\right)}&{{}\in}&{Q}\end{array}$ is supposed to mean that $(t_{i-N-C}^{\prime},j)$ appears in the sequence $Q$ . To denote this graph representation, we write briefly

其中 $\begin{array}{r l r}{\left(t_{i-N-C}^{\prime},j\right)}&{{}\in}&{Q}\end{array}$ 表示 $(t_{i-N-C}^{\prime},j)$ 出现在序列 $Q$ 中。为简洁表示该图结构,我们简记为

$$

\mathrm{ts}2\mathrm{graph}(X,Q):=(V,E,F^{\mathrm{node}},F^{\mathrm{edge}})

$$

$$

\mathrm{ts}2\mathrm{graph}(X,Q):=(V,E,F^{\mathrm{node}},F^{\mathrm{edge}})

$$

The conversion of an IMTS to a Sparsity Structure Graph is shown in Figure 2.

图 2: IMTS转换为稀疏结构图的示意图

To make the graph representation $(V,E,F^{\mathrm{node}},F^{\mathrm{edge}})$ of a time series query process able by a graph neural network, node and edge features have to be properly embedded, otherwise, both, the nominal channel ID and the timepoint are hard to compute on. We propose an Initial Embedding layer that encodes channel IDs via a onehot encoding and time points via a learned sinusoidal encoding (Shukla and Marlin 2021)

为了使时序查询过程的图表示 $(V,E,F^{\mathrm{node}},F^{\mathrm{node}})$ 能够被图神经网络处理,节点和边的特征必须被适当嵌入,否则名义通道ID和时间点都难以计算。我们提出了一个初始嵌入层,该层通过独热编码 (onehot encoding) 对通道ID进行编码,并通过学习到的正弦编码 (Shukla and Marlin 2021)

where onehot denotes the binary indicator vector and FF denotes a separate fully connected layer in each case.

其中onehot表示二进制指示向量,FF在每种情况下表示一个独立的全连接层。

The final graph neural network layer $(h^{\mathrm{node},L},h^{\mathrm{edge},L})$ has embedding dimension 1. The scalar values of the query edges are taken as the predicted answers to the encoded forecasting query:

最终的图神经网络层 $(h^{\mathrm{node},L},h^{\mathrm{edge},L})$ 的嵌入维度为1。查询边的标量值被视为对编码预测查询的预测答案:

$$

\begin{array}{r}{\hat{y}:=\mathrm{graph}2\mathrm{ts}(h^{\mathrm{node},L},h^{\mathrm{edge},L},V,E)=(h_{e_{k}}^{\mathrm{edge},L}){k=1:K}}\ {\mathrm{where}~e_{k}={C+N+k^{\prime},c_{k}}\quad\mathrm{with}\quad t_{k^{\prime}}^{\prime}=q_{k}}\end{array}

$$

$$

\begin{array}{r}{\hat{y}:=\mathrm{graph}2\mathrm{ts}(h^{\mathrm{node},L},h^{\mathrm{edge},L},V,E)=(h_{e_{k}}^{\mathrm{edge},L}){k=1:K}}\ {\mathrm{where}~e_{k}={C+N+k^{\prime},c_{k}}\quad\mathrm{with}\quad t_{k^{\prime}}^{\prime}=q_{k}}\end{array}

$$

5 Forecasting with GraFITi

5 使用 GraFITi 进行预测

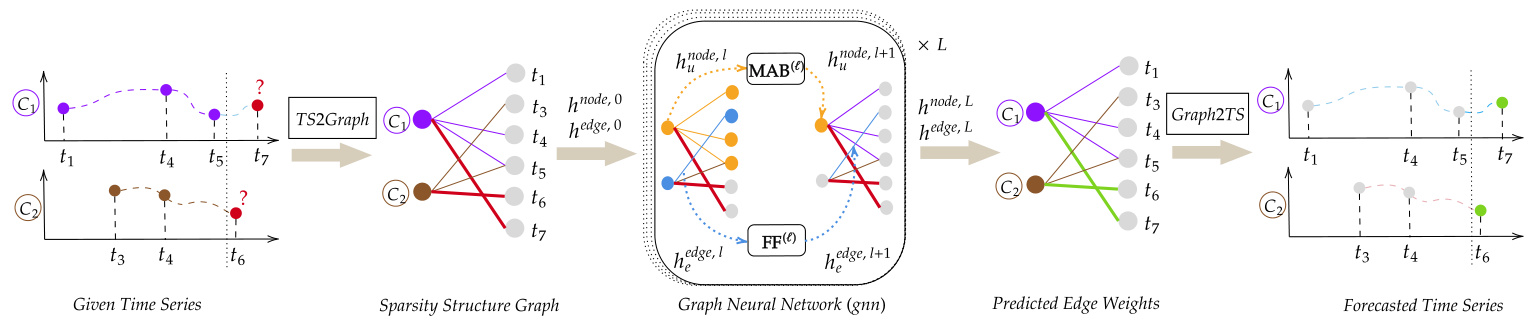

GraFITi first encodes the time series query to graph using Eq. 4 and compute initial embeddings for the nodes $(h^{\mathrm{node,0^{-}}})$ and edges $(h^{\mathrm{edge},0})$ using Eqs. 5 and 6 respectively. Now, we can leverage the power of graph neural networks for further processing the encoded graph. Node and edge features are updated layer wise, from layer $l$ to $l+1$ using a graph neural network:

GraFITi首先通过公式4将时间序列查询编码为图,并分别使用公式5和公式6计算节点$(h^{\mathrm{node,0^{-}}})$和边$(h^{\mathrm{edge},0})$的初始嵌入。现在,我们可以利用图神经网络(graph neural network)的力量进一步处理编码后的图。节点和边的特征通过图神经网络逐层更新,从层$l$到$l+1$:

$$

(h^{\mathrm{node},l+1},h^{\mathrm{edge},l+1}):=\mathrm{gnn}^{(l)}(h^{\mathrm{node},l},h^{\mathrm{edge},l},V,E)

$$

$$

(h^{\mathrm{node},l+1},h^{\mathrm{edge},l+1}):=\mathrm{gnn}^{(l)}(h^{\mathrm{node},l},h^{\mathrm{edge},l},V,E)

$$

There have been a variety of gnn architectures such as Graph Convolutional Networks (Kipf and Welling 2017), Graph Attention Networks (Velickovic et al. 2017), proposed in the literature. In this work, we propose a model adapting the Graph Attention Network (Velickovic et al. 2017) to our graph setting and incorporate essential components for handling sparsity structure graphs. While a Graph Attention Network computes attention weights by adding queries and keys, we found no advantage in using this approach (see supplementary material). Thus, we utilize standard attention mechanism, in our attention block, as it has been widely used in the literature (Zhou et al. 2021). Additionally, we also use edge embeddings in our setup to update node embeddings in a principled manner.

已有多种图神经网络架构被提出,如图卷积网络 (Kipf and Welling 2017) 、图注意力网络 (Velickovic et al. 2017) 。本工作基于图注意力网络 (Velickovic et al. 2017) 进行适应性改造,针对稀疏结构图的特点融入了关键组件。虽然原始图注意力网络通过叠加查询向量和键向量来计算注意力权重,但实验表明该方法并无优势 (详见补充材料) 。因此,我们采用文献 (Zhou et al. 2021) 中广泛使用的标准注意力机制作为注意力模块的核心。此外,我们还通过边嵌入 (edge embeddings) 以规范化方式更新节点嵌入。

Graph Neural Network (gnn)

图神经网络 (GNN)

First, we define Multi-head Attention block (MAB) and Neighborhood functions that are used in our gnn.

首先,我们定义用于图神经网络 (gnn) 的多头注意力块 (MAB) 和邻域函数。

A Multi-head attention block (MAB) (Vaswani et al. 2017) is represented as:

多头注意力块 (MAB) (Vaswani et al. 2017) 表示为:

$$

\begin{array}{r}{\mathbf{MAB}(\mathcal{Q},\mathcal{K},\mathcal{V}):=\alpha(\mathcal{H}+\mathbf{FF}(\mathcal{H}))\quad\quad}\ {\quad\mathrm{where}\quad\mathcal{H}:=\alpha(\mathcal{Q}+\mathbf{MHA}(\mathcal{Q},\mathcal{K},\mathcal{V}))}\end{array}

$$

$$

\begin{array}{r}{\mathbf{MAB}(\mathcal{Q},\mathcal{K},\mathcal{V}):=\alpha(\mathcal{H}+\mathbf{FF}(\mathcal{H}))\quad\quad}\ {\quad\mathrm{where}\quad\mathcal{H}:=\alpha(\mathcal{Q}+\mathbf{MHA}(\mathcal{Q},\mathcal{K},\mathcal{V}))}\end{array}

$$

where $\mathcal{Q},\mathcal{K}$ and, $\nu$ are called queries, keys, and values respectively, MHA is multi-head attention (Vaswani et al. 2017), $\alpha$ is a non-linear activation.

其中 $\mathcal{Q}$、$\mathcal{K}$ 和 $\nu$ 分别称为查询 (queries)、键 (keys) 和值 (values),MHA 是多头注意力机制 (multi-head attention) (Vaswani et al. 2017),$\alpha$ 是非线性激活函数。

Algorithm 1: Graph Neural Network $(\mathbf{g}\mathbf{n}\mathbf{n}^{(l)})$ )

算法 1: 图神经网络 (Graph Neural Network, $\mathbf{g}\mathbf{n}\mathbf{n}^{(l)}$)

| 输入: hnode,l | ,hedge,l, V, E |

|---|---|

| for u ∈ V do | Hu← ([hnode,l |

| lle={u,u} | |

| for e = {u,v} ∈ E do | |

| — | + FF(l) hnode,l hedge,l e |

| 输出 | hedge,l+1 |

The Neighborhood of a node $u$ is defined as the set of all the nodes connected to $u$ through edges in $E$ :

节点 $u$ 的邻域 (Neighborhood) 定义为通过边集 $E$ 与 $u$ 相连的所有节点集合:

$$

\mathcal{N}(u):={v\mid{u,v}\in E}

$$

$$

\mathcal{N}(u):={v\mid{u,v}\in E}

$$

GraFITi consists of $L$ gnnlayers. In each layer, node embeddings are updated using neighbor node embeddings and edge embeddings connecting them. For edge embeddings, we use the embeddings of the adjacent nodes and the current edge embedding. The overall architecture of GraFITi is shown in Figure 3.

GraFITi由$L$个GNN层组成。每一层中,节点嵌入通过相邻节点嵌入及连接它们的边嵌入进行更新。对于边嵌入,我们使用相邻节点的嵌入和当前边嵌入。GraFITi的整体架构如图3所示。

Update node embeddings To update embedding of a node $u\in V$ , first, we create a sequence of features $H_{u}$ concatenating its neighbor node embedding $h_{v}^{\mathrm{node},l}$ and edge embedding heedge,l, $h_{e}^{\mathrm{edge},l},e={u,v}$ where $v\in\mathcal{N}(u)$ . We then pass hnode,l as queries and $H_{u}$ as keys and values to MAB.

更新节点嵌入

为了更新节点 $u\in V$ 的嵌入,首先我们创建一个特征序列 $H_{u}$,将其邻居节点嵌入 $h_{v}^{\mathrm{node},l}$ 和边嵌入 $h_{e}^{\mathrm{edge},l},e={u,v}$ 拼接起来,其中 $v\in\mathcal{N}(u)$。接着,我们将 $h_{v}^{\mathrm{node},l}$ 作为查询,$H_{u}$ 作为键和值输入到 MAB 中。

$$

\begin{array}{r l}&{h_{u}^{\mathrm{node},l+1}:=\mathbf{M}\mathbf{A}\mathbf{B}^{(l)}\left(h_{u}^{\mathrm{node},l},H_{u},H_{u}\right)}\ &{\qquadH_{u}:=\left(\left[h_{v}^{\mathrm{node},l}\parallel h_{e}^{\mathrm{edge},l}\right]\right)_{v\in\mathcal{N}(u)},e={u,v}}\end{array}

$$

$$

\begin{array}{r l}&{h_{u}^{\mathrm{node},l+1}:=\mathbf{M}\mathbf{A}\mathbf{B}^{(l)}\left(h_{u}^{\mathrm{node},l},H_{u},H_{u}\right)}\ &{\qquadH_{u}:=\left(\left[h_{v}^{\mathrm{node},l}\parallel h_{e}^{\mathrm{edge},l}\right]\right)_{v\in\mathcal{N}(u)},e={u,v}}\end{array}

$$

Updating edge embeddings: To compute edge embedding $h_{e}^{\mathrm{edge},l+1}$ , $e={u,v}$ we concatenate $h_{u}^{\mathrm{node},l},h_{v}^{\mathrm{node},l}$ and $h_{e}^{\mathrm{edge},l}$ , and pass it through a dense layer (FF) followed by a residual connection and nonlinear activation.

更新边嵌入(edge embeddings):为了计算边嵌入 $h_{e}^{\mathrm{edge},l+1}$ (其中 $e={u,v}$),我们将 $h_{u}^{\mathrm{node},l},h_{v}^{\mathrm{node},l}$ 和 $h_{e}^{\mathrm{edge},l}$ 拼接起来,然后通过一个稠密层(FF),接着进行残差连接和非线性激活。

$$

h_{e}^{\mathrm{{edge}},l+1}:=\alpha\left(h_{e}^{\mathrm{{edge}},l}+\mathbf{F}\mathbf{F}^{(l)}\left(h_{u}^{\mathrm{{node}},l}\parallel h_{v}^{\mathrm{{node}},l}\parallel h_{e}^{\mathrm{{edge}},l}\right)\right)

$$

$$

h_{e}^{\mathrm{{edge}},l+1}:=\alpha\left(h_{e}^{\mathrm{{edge}},l}+\mathbf{F}\mathbf{F}^{(l)}\left(h_{u}^{\mathrm{{node}},l}\parallel h_{v}^{\mathrm{{node}},l}\parallel h_{e}^{\mathrm{{edge}},l}\right)\right)

$$

where $e={u,v}$ . Note that, although our edges are undirected, we compute the edge embedding by concatenating the embeddings in a specific order i.e., the channel embedding, time embedding and edge embedding. We show the process of updating nodes and edges in layer $l$ using a gnnin Algorithm 1.

其中 $e={u,v}$。需要注意的是,虽然我们的边是无向的,但我们通过按特定顺序拼接嵌入来计算边嵌入,即通道嵌入、时间嵌入和边嵌入。我们在算法1中使用gnn展示了第 $l$ 层中节点和边的更新过程。

Answering the queries: As mentioned in Section 4, our last $\mathrm{gnn}^{(L)}$ layer has embedding dimension 1. Hence, after processing the graph features through $L$ many gnn layers, we use Eq. 7 to decode the graph and provide the predicted answers to the time series query. A forward pass of GraFITi is presented in Algorithm 2.

回答问题:如第4节所述,我们的最后一层$\mathrm{gnn}^{(L)}$嵌入维度为1。因此,在通过$L$个gnn层处理图特征后,我们使用公式7对图进行解码,并为时间序列查询提供预测答案。GraFITi的前向传播过程如算法2所示。

Computational Complexity: The computational complexity of GraFITi primarily comes from using MAB in Eq. 11. For a single channel node $u\in{1,...,C}$ , the maximum complexity for computing its embedding is $\mathcal{N}(u)$ since only neighborhood connections are used for the update, and $\mathcal{N}(\boldsymbol{\bar{u}})\subseteq{\boldsymbol{C}+1,...,\boldsymbol{C}+\boldsymbol{N}+\boldsymbol{K}^{\prime}}$ . Thus, computing the embeddings of all channel nodes is $\mathcal O(|E|)$ . Similarly, the computational complexity of MAB for computing the embeddings of all nodes in $V_{T}\cup V_{Q}$ is also $\mathcal{\bar{O}}(|E|)$ . A feed forward layer $\mathbf{F}\mathbf{F}:\mathbb{R}^{Y}\rightarrow\mathbb{R}^{Z}$ will have a computational complexity of $\mathcal{O}(Y Z)$ .

计算复杂度:GraFITi的计算复杂度主要来自公式11中使用的多臂老虎机 (MAB) 。对于单个通道节点 $u\in{1,...,C}$ ,计算其嵌入的最大复杂度为 $\mathcal{N}(u)$ ,因为更新时仅使用邻域连接,且 $\mathcal{N}(\boldsymbol{\bar{u}})\subseteq{\boldsymbol{C}+1,...,\boldsymbol{C}+\boldsymbol{N}+\boldsymbol{K}^{\prime}}$ 。因此,计算所有通道节点的嵌入复杂度为 $\mathcal O(|E|)$ 。同理,MAB计算 $V_{T}\cup V_{Q}$ 中所有节点嵌入的计算复杂度也是 $\mathcal{\bar{O}}(|E|)$ 。前馈层 $\mathbf{F}\mathbf{F}:\mathbb{R}^{Y}\rightarrow\mathbb{R}^{Z}$ 的计算复杂度为 $\mathcal{O}(Y Z)$ 。

Figure 3: Overall architecture of GraFITi.

图 3: GraFITi的整体架构。

算法 2: GraFITi前向传播

确保: 观测时间序列预测查询 (S, Q)

(V,E, Fnode, Fedge) ← ts2graph(S, Q) //式4

//式5

//节点和边的初始嵌入

hnode,0 {An hedge,0 ↑人

{u,u} ∈E} //式6

//图神经网络

for l ∈ {1, ..., L} do ,V,E) //算法1

y ← graph2ts(hnode,L , hedge,L, ,V,E) //式7

Delineating from GRAPE (You et al. 2020) You et al. (2020) introduced GRAPE, a graph-based model for imputing and classifying vector datasets with missing values. This approach employs a bipartite graph, with nodes divided into separate sets for sample IDs and sample features. The edges of this graph represent the feature values associated with the samples. Notably, GRAPE learns in a trans duct ive manner, encompassing all the data samples, including those from the test set, within in the graph. In contrast, GraFITi uses inductive approach. Here, each instance is a Sparsity Structure Graph, tailored for time series data. In this structure, nodes are divided into distinct sets for channels and