Language Models with Image Descriptors are Strong Few-Shot Video-Language Learners

Zhenhailong Wang$^{1}$, Manling Li$^{1}$, Ruochen Xu$^{2}$, Luowei Zhou$^{2}$, Jie Lei$^{3}$, Xudong Lin$^{4}$, Shuohang Wang$^{2}$, Ziyi Yang$^{2}$, Chenguang Zhu$^{2}$, Derek Hoiem$^{1}$, Shih-Fu Chang$^{4}$, Mohit Bansal$^{3}$, Heng Ji$^{1}$

$^{1}$UIUC $^{2}$MSR $^{3}$UNC $^{4}$Columbia University

{wangz3,hengji}@illinois.edu

Abstract

The goal of this work is to build flexible video-language models that can generalize to various video-to-text tasks from few examples. Existing few-shot video-language learners focus exclusively on the encoder, so they lack a video-to-text decoder for generative tasks. Video captioners have been pretrained on large-scale video-language datasets, but they rely heavily on finetuning and cannot generate text for unseen tasks in a few-shot setting. We propose VidIL, a few-shot Video-language Learner via Image and Language models, which demonstrates strong performance on few-shot video-to-text tasks without pretraining or finetuning on any video dataset. We use image-language models to translate the video content into frame captions and object, attribute, and event phrases, and compose them into a temporal-aware template. We then instruct a language model, with a prompt containing a few in-context examples, to generate a target output from the composed content. The flexibility of prompting allows the model to incorporate any form of text input, such as automatic speech recognition (ASR) transcripts. Our experiments demonstrate the power of language models in understanding videos on a wide variety of video-language tasks, including video captioning, video question answering, video caption retrieval, and video future event prediction. In particular, on video future event prediction, our few-shot model significantly outperforms state-of-the-art supervised models trained on large-scale video datasets. Code and processed data are publicly available for research purposes at https://github.com/MikeWangWZHL/VidIL.

1 Introduction

One major gap between artificial intelligence and human intelligence lies in the ability to generalize and perform well on new tasks with limited annotations. Recent advances in large-scale pretrained generative language models [45, 6, 71, 24] have shown promising few-shot capabilities [72, 43, 63] in natural language understanding. However, few-shot video-language understanding is still in its infancy. A particular limitation of most recent video-language frameworks [28, 21, 61, 68, 67, 25, 64, 34] is that they are encoder-only, so they cannot generate text from videos for purposes such as captioning [62, 57], question answering [60], and future prediction [23]. Meanwhile, unified video-language models [36, 49] that are capable of language decoding still rely heavily on finetuning with a large number of manually annotated video-text pairs, and therefore cannot adapt quickly to unseen tasks. Few-shot video-to-text decoding is challenging because the natural language supervision for learning video-language representations is typically based on subtitles and automatic speech recognition (ASR) transcripts [39, 68], which differ significantly from downstream tasks in distribution and may be poorly aligned semantically with the visual content.

We propose to address this problem by harnessing the few-shot power of frozen large-scale language models, such as InstructGPT [40]. Our inspiration comes from the fact that humans are excellent visual storytellers [15], able to piece together a coherent story from a few isolated images. To mimic this, we propose VidIL, a few-shot Video-language Learner via Image and Language models, which uses image models to provide information about the visual content of the video (and optionally ASR to represent speech), and then instructs language models to generate a video-based summary, answer, or other target output for diverse video-language tasks.

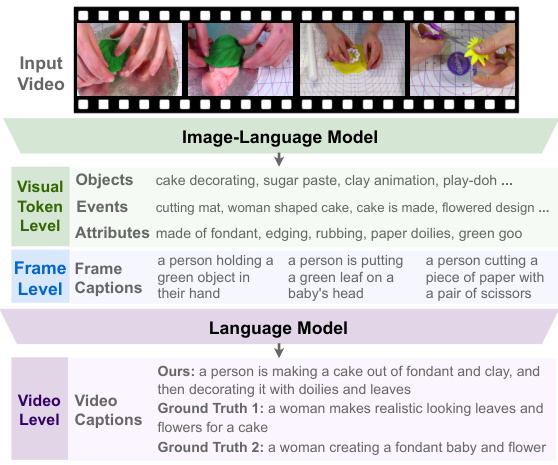

The main challenge of understanding videos is that videos contain rich semantics and temporal content at multiple granularities. Unlike static images, which depict objects, attributes, and events in a single snapshot, videos additionally convey, through their temporal dimension, the state changes of objects, actions, and events. For example, in Figure 1, the individual frame captions of the video clip only describe static visual features such as "a person holding a green object in hand". In contrast, a correct video-level description would be "a woman makes realistic looking leaves and flowers for a cake", which requires reasoning over a collection of objects and events that occur at different timestamps in the clip, such as "cake decorating" and "flowered design". Hence, to support video-level descriptions and queries, we need to represent all of this information and its temporal ordering.

Figure 1: Multiple levels of information in videos.

To address the unique challenges of videos, we propose to decompose a video into three levels: the video output, frame captions, and visual tokens (including objects, events, and attributes). One major benefit of this hierarchical video representation is that it separates the visual and temporal dimensions of a video. At the lower levels, we leverage frozen image-language foundation models to collect salient visual features from sparsely sampled frames. Specifically, we first use the pretrained image-language contrastive model CLIP [44] to perform visual tokenization, based on the similarity scores between frames and object, event, and attribute tokens. The tokenization is guided by semantic role labeling [14], which provides candidate events together with the objects involved and related attributes. Next, to capture the overall semantics at the frame level, we employ the pretrained image captioner of the image-language model BLIP [26] to obtain frame captions. Finally, we instruct a pretrained large language model via in-context learning [40, 13, 51, 48] to interpret the visual tokens and frame captions into the target textual output. In detail, we temporally order the visual tokens and frame captions using specially designed prompts such as “First...Then...Finally” to instruct the pretrained language model to track the changes of objects, events, attributes, and frame semantics along the temporal dimension.

Without pretraining or finetuning on any video dataset, our approach outperforms both video-language and image-language state-of-the-art baselines on few-shot video captioning and question answering. Moreover, on video-language event prediction, our approach significantly outperforms fully supervised models while using only 10 labeled examples. We further demonstrate that our generative model can benefit broader video-language understanding tasks, such as text-video retrieval, via pseudo-label generation. Additionally, our model is highly flexible in adding new modalities, such as ASR transcripts.

2 Related Work

2.1 Image-Language Models and Their Applications on Video-Language Tasks

Large-scale image-language pretraining models optimize image-text matching through contrastive learning [44, 17] and multimodal fusion [65, 27, 58, 66, 35, 52, 8, 29, 73, 70, 18, 16]. Recently, BLIP [26] proposed a bootstrapping image-language pretraining framework with a captioner and a filter, which has shown promising performance on various image-language tasks. However, video-language pretraining [25, 36, 28, 38, 3, 1, 42, 33] is still hindered by noisy and domain-specific video datasets [74, 22, 39]. Naturally, researchers have begun to explore transferring the rich knowledge of image models to videos. Unlike the traditional approach of representing videos with dense 3D features [12], recent work [21, 25] shows that sparse sampling is an effective way to represent videos, which facilitates applying pretrained image-language models to video-language tasks [37, 11]. In particular, the image-language model BLIP [26] sets a new state of the art on zero-shot retrieval-style video-language tasks, such as video retrieval and video question answering. However, on generation-style tasks such as domain-specific video captioning, the video-language model UniVL [36] still leads in performance but relies heavily on finetuning. In this work, we extend the idea of leveraging image-language models to a wide variety of video-to-text generation tasks. We further connect image-language models with language models, which gives our model strong generalization ability. We show that knowledge from both image-language pretraining and language-only pretraining can benefit video-language understanding in various ways.

2.2 Unifying MultiModal Tasks with Language Models

The community has recently paid much attention to connecting different modalities through a unified representation. Text-only generation models, such as T5 [46], have been extended to vision-language tasks by conditioning text generation on visual features [9, 53, 50, 75, 55]. To fully leverage the generalization power of pretrained language models, [63] represents images using text in a fully symbolic way. [32] includes more modalities such as video and audio, but requires annotated video-text data to jointly train the language model with the video and audio tokenizers. In this work, we propose a temporal-aware hierarchical representation for describing a video textually. To our knowledge, ours is the first work to prompt a frozen language model to tackle few-shot video-language tasks with a unified textual representation. Concurrent work Socratic [69] uses a zero-shot, language-based world-state history to represent long videos with given timestamps, whereas our model can quickly adapt to different video and text distributions with a few examples. Furthermore, we show that by injecting temporal markers into the prompt, we can make a pretrained language model understand fine-grained temporal dynamics in video events. Compared with the concurrent work Flamingo [2], which requires dedicated vision-language post-pretraining, our framework does not require pretraining or finetuning on any video data. Our framework is simple and highly modular, and all of its components are publicly available. Additionally, our framework is more flexible in adding new modalities, e.g., automatic speech recognition, without the need for complex redesign.

3 Method

We propose a hierarchical video representation framework that decomposes a video into three levels, i.e., the visual token level, frame level, and video level. The motivation is to separate the spatial and temporal dimensions of a video so that we can leverage image-language and language-only foundation models, such as CLIP [44] and GPT-3 [6]. All three levels use a unified textual representation, which lets us exploit the powerful few-shot ability of pretrained language models.

3.1 Frame Level: Image Captioning

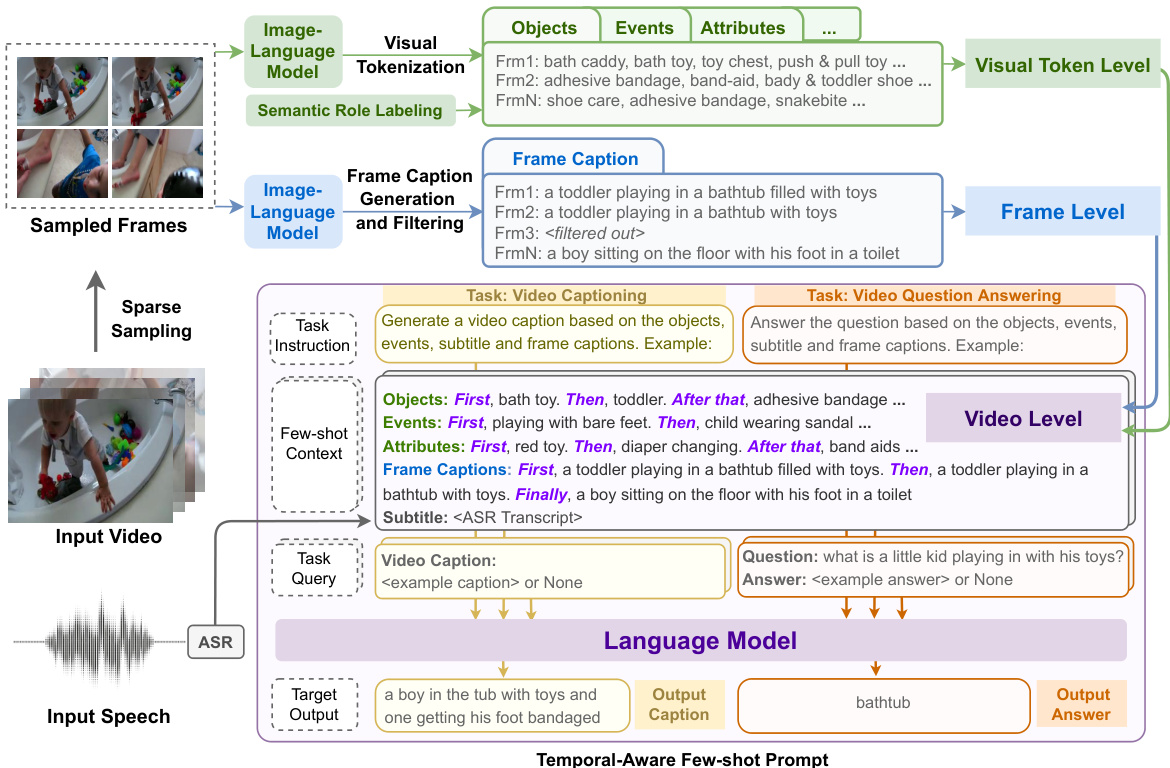

Following [21], we first perform sparse sampling to obtain several video frames. Unless otherwise specified, we sample 4 frames at the frame level and 8 frames at the visual token level. We then feed each frame into a pretrained image-language model to obtain frame-level captions; an example is shown in the blue part of Figure 2. In our experiments, we use BLIP [26], a recent image-language framework containing both an image-grounded encoder and decoder, to generate frame captions. Following [26], we perform both captioning and filtering on each frame. However, as mentioned in Section 1, videos contain rich semantics and temporal content at multiple granularities, so frame captions alone are not enough to generate video-level target text such as video captions. Thus, we further perform visual tokenization on each frame to capture features at a finer granularity.
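
The sparse sampling step can be illustrated with a minimal sketch. It assumes uniform segment-based sampling, where the video is split into equal-length segments and the middle frame of each segment is kept; the exact scheme of [21] may differ, and the function name `sparse_sample_indices` is hypothetical.

```python
from typing import List


def sparse_sample_indices(num_frames: int, num_samples: int) -> List[int]:
    """Return `num_samples` frame indices spread evenly over a video.

    Assumed scheme (hypothetical): split the video into equal-length
    segments and keep the middle frame of each segment.
    """
    if num_frames <= 0 or num_samples <= 0:
        return []
    num_samples = min(num_samples, num_frames)  # cannot sample more frames than exist
    segment = num_frames / num_samples
    return [int(segment * i + segment / 2) for i in range(num_samples)]


# 4 frames for frame-level captions, 8 frames for visual tokenization
caption_frames = sparse_sample_indices(300, 4)
token_frames = sparse_sample_indices(300, 8)
print(caption_frames)     # [37, 112, 187, 262]
print(len(token_frames))  # 8
```

Sampling only a handful of frames keeps the number of image-model calls per video small and fixed, regardless of video length.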

Figure 2: Overview of the VidIL framework. We represent a video in a unified textual representation containing three semantic levels: visual token level, frame level, and video level. At the visual token level, we extract salient objects, events, and attributes for each sampled frame. At the frame level, we perform image captioning and filtering. At the video level, we construct the video representation by aggregating the visual tokens, frame captions, and other text modalities such as ASR, using a few-shot temporal-aware prompt. We then feed the prompt, together with task-specific instructions, to a pretrained language model to generate target text for a variety of video-language tasks. Examples of the full prompt for different tasks can be found in Appendix ??.

3.2 Visual Token Level: Structure-Aware Visual Tokenization

At this level, we aim to extract textual representations of salient visual token types, such as objects, events, and attributes. We found that predefined classification categories, such as those in ImageNet [10], fall far short of covering the rich semantics of open-domain videos. Thus, instead of the classification-based visual tokenization used in previous work [32, 63], we adopt a retrieval-based visual tokenization approach that leverages pretrained contrastive image-language models. Given a visual token vocabulary containing all candidate object, event, and attribute phrases, we compute the image embedding of a frame and the text embeddings of the candidate visual tokens using a contrastive multimodal encoder, CLIP [44]. We then select the top 5 visual tokens per frame based on the cosine similarity between the image and text embeddings. An example of the extracted object tokens is shown in the green part of Figure 2.
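
The retrieval step above amounts to ranking every vocabulary phrase by cosine similarity to the frame and keeping the top k. The sketch below is a minimal illustration under stated assumptions: the toy 3-dimensional vectors stand in for CLIP image and text embeddings, and `cosine` and `retrieve_visual_tokens` are hypothetical helper names, not part of CLIP's API.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_visual_tokens(
    image_emb: Sequence[float],
    vocab_embs: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[str]:
    """Rank every candidate phrase by similarity to the frame and keep the top k."""
    ranked = sorted(vocab_embs, key=lambda p: cosine(image_emb, vocab_embs[p]), reverse=True)
    return ranked[:k]


# Toy vectors standing in for CLIP embeddings (hypothetical values).
frame = [1.0, 0.0, 0.2]
vocab = {
    "cake": [0.9, 0.1, 0.1],
    "flower": [0.7, 0.0, 0.7],
    "car": [0.0, 1.0, 0.0],
    "leaf": [0.5, 0.2, 0.9],
}
print(retrieve_visual_tokens(frame, vocab, k=2))  # ['cake', 'flower']
```

Because retrieval only needs a similarity score per phrase, the vocabulary can grow to tens of thousands of entries without retraining anything, which is what makes an open vocabulary practical here.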

Unlike images, where objects and attributes already cover most visual features, videos convey much of their information through events. To discover events in video frames, we construct our own event vocabulary by extracting event structures from Visual Genome [19] synsets using semantic role labeling. Specifically, we first select phrases that contain at least one verb and one argument as events. We then remove highly similar events based on their sentence similarity, computed with SentenceBERT [47] embeddings. For the object vocabulary, we adopt the full Open Images [20] class list $(\sim20\mathrm{k})$ instead of the visually groundable subset $(\mathord{\sim}600)$ used in concurrent work [69]. We found that, in our retrieval-based setting with CLIP, a large but noisy vocabulary is more effective than a small but clean one. For the attribute vocabulary, we adopt the Visual Genome attribute synsets. Section 4.6 provides an ablation study on the impact of different types of visual tokens. Statistics of the visual token vocabulary are given in Appendix Table ??.
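
The two-step event-vocabulary construction (keep verb-plus-argument structures, then drop near-duplicates) can be sketched as below. This is an illustration under assumptions: the SRL output is reduced to a hypothetical dict format (not AllenNLP's actual output schema), the vectors stand in for SentenceBERT embeddings, and both the greedy filtering order and the 0.9 threshold are hypothetical, since the exact procedure is not specified here.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_event_vocab(frames: List[dict], embs: Dict[str, Sequence[float]],
                      threshold: float = 0.9) -> List[str]:
    """Hypothetical helper: filter SRL frames, then greedily deduplicate."""
    # 1) keep structures with a verb and at least one argument
    events = [f["text"] for f in frames if f["verb"] and f["args"]]
    # 2) drop any event whose embedding is too close to an already-kept one
    kept: List[str] = []
    for e in events:
        if all(cosine(embs[e], embs[k]) < threshold for k in kept):
            kept.append(e)
    return kept


srl_frames = [  # toy, simplified SRL output
    {"text": "man riding horse", "verb": "riding", "args": ["man", "horse"]},
    {"text": "person riding a horse", "verb": "riding", "args": ["person", "horse"]},
    {"text": "sunset", "verb": None, "args": []},
    {"text": "woman cutting cake", "verb": "cutting", "args": ["woman", "cake"]},
]
sentence_embs = {  # toy stand-ins for SentenceBERT embeddings
    "man riding horse": [1.0, 0.0, 0.1],
    "person riding a horse": [0.95, 0.05, 0.1],
    "woman cutting cake": [0.0, 1.0, 0.2],
}
print(build_event_vocab(srl_frames, sentence_embs))
# ['man riding horse', 'woman cutting cake']
```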

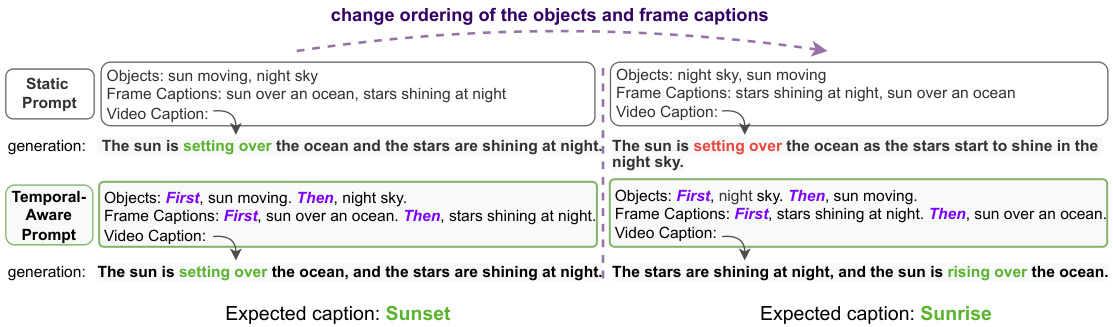

Figure 3: Temporal-aware prompt successfully distinguishes the Sunset and Sunrise scenarios based on the temporal ordering change of objects and frame captions, while the static prompt fails.

3.3 Video Level: Temporal-Aware Few-shot Prompting

Once we obtain the textual representations at the frame level and the visual token level, the final step is to put the pieces together to generate the video-level target text. The goal is to build a model that can be quickly adapted to any video-to-text generation task with only a few examples. To this end, we propose to leverage large-scale pretrained language models, such as GPT-3 [6], with a temporal-aware few-shot prompt. As shown in Figure 2, our framework can be readily applied to various video-to-text generation tasks, such as video captioning and video question answering, with a shared prompt template. The proposed prompting strategy enables a language model to attend to the lower-level visual information and to take the temporal ordering into account.

Here, we use the video captioning task depicted in Figure 2 to illustrate the details. The few-shot prompt consists of three parts: the instruction, the few-shot context, and the task query. The instruction is a concise description of the generation task, e.g., "Generate a video caption based on the objects, events, attributes and frame captions. Example:", a form that has been shown to be effective in zero-shot and few-shot settings [6, 59]. The few-shot context contains the selected in-context examples as well as the test video instance. Each video instance is represented by the aggregated visual tokens, e.g., "Objects: First, bath toy. Then, ...", the frame captions, e.g., "Frame Captions: First, a toddler playing in a bathtub filled with toys. Then, ...", and the ASR inputs if available, e.g., "Subtitle: <ASR transcript>". Finally, the task query is a task-specific suffix indicating the target text format, e.g., "Video Caption:". For the in-context examples (omitted here for brevity), the task query is followed by the ground-truth annotation; for the test instance, generation starts at the end of the task query.
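
A minimal sketch of how the three prompt parts could be assembled into a single string is shown below. The field labels and instruction text follow the examples above; the helper names `render_instance` and `build_prompt`, the blank-line separators, and the placeholder field values are assumptions for illustration, not the exact template used in the experiments.

```python
from typing import List, Optional, Sequence, Tuple

INSTRUCTION = ("Generate a video caption based on the objects, events, "
               "attributes and frame captions. Example:")


def render_instance(fields: Sequence[Tuple[str, str]], query: str,
                    answer: Optional[str] = None) -> str:
    """Render one video as labelled lines followed by the task query.

    In-context examples carry their ground truth after the query; the test
    instance stops at the query so the language model continues from there.
    """
    lines = [f"{label}: {text}" for label, text in fields]
    lines.append(query if answer is None else f"{query} {answer}")
    return "\n".join(lines)


def build_prompt(examples: List[dict], test: dict) -> str:
    blocks = [INSTRUCTION]
    blocks += [render_instance(**ex) for ex in examples]
    blocks.append(render_instance(**test))
    return "\n\n".join(blocks)


example = {
    "fields": [("Objects", "First, bath toy. Then, ..."),
               ("Frame Captions", "First, a toddler playing in a bathtub filled with toys. Then, ...")],
    "query": "Video Caption:",
    "answer": "<ground-truth caption>",
}
test = {
    "fields": [("Objects", "First, ..."),
               ("Frame Captions", "First, ..."),
               ("Subtitle", "<ASR transcript>")],  # ASR is optional
    "query": "Video Caption:",
}
prompt = build_prompt([example], test)
print(prompt)
```

Because the prompt ends exactly at the test instance's task query, whatever the language model appends is the video-level output; swapping the query (e.g., to a question/answer suffix) reuses the same template for other tasks.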

Formally, we denote the instruction line as $\mathbf{t}$, the few-shot context as $\mathbf{c}$, the task query as $\mathbf{q}$, and the target text as $\mathbf{y}=(y_{1},y_{2},\dots,y_{L})$. The generation of the next target token $y_{l}$ can be modeled as:

$$
y_{l}=\underset{y}{\arg\max}\; p\left(y \mid \mathbf{t},\mathbf{c},\mathbf{q},y_{<l}\right)
$$
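
The equation describes greedy (arg max) decoding: at each step, the most probable next token is chosen given the prompt $(\mathbf{t},\mathbf{c},\mathbf{q})$ and the tokens generated so far. The sketch below illustrates only that loop. The bigram table is a toy stand-in for InstructGPT, `greedy_decode` is a hypothetical name, and the decoding settings actually used with the API are not specified in this section.

```python
from typing import Callable, Dict, List

Scorer = Callable[[List[str]], Dict[str, float]]


def greedy_decode(score_next: Scorer, prompt_tokens: List[str],
                  max_len: int = 20, eos: str = "<eos>") -> List[str]:
    """Pick y_l = argmax_y p(y | t, c, q, y_<l) one token at a time."""
    generated: List[str] = []
    for _ in range(max_len):
        # prompt_tokens encode (t, c, q); `generated` holds y_<l
        probs = score_next(prompt_tokens + generated)
        y_l = max(probs, key=probs.get)  # arg max over candidate tokens
        if y_l == eos:
            break
        generated.append(y_l)
    return generated


# Toy bigram "language model": next-token distribution depends on the last token only.
BIGRAM = {
    "Caption:": {"a": 0.6, "the": 0.4},
    "a": {"woman": 0.7, "cake": 0.3},
    "woman": {"decorates": 0.8, "<eos>": 0.2},
    "decorates": {"cake": 0.9, "<eos>": 0.1},
}


def toy_scorer(context: List[str]) -> Dict[str, float]:
    return BIGRAM.get(context[-1], {"<eos>": 1.0})


print(greedy_decode(toy_scorer, ["Video", "Caption:"]))
# ['a', 'woman', 'decorates', 'cake']
```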

To capture the temporal dynamics between frames and visual tokens, we further propose to inject temporal markers into the prompt. As shown in the few-shot context in Figure 2, each visual token and frame caption is prefixed with a natural language phrase indicating its temporal position, e.g., "First,", "Then,", and "Finally,". We found that adding temporal markers makes the language model condition not only on the literal content but also on the temporal information of the context. Figure 3 shows an example comparing our temporal-aware prompt with a static prompt on video captioning using InstructGPT. As before, the in-context examples are omitted; they can be found in Appendix ??. In this example, the only difference between the two contexts is the ordering of the visual tokens and frame captions. For the context on the left, where "sun moving" appears before "night sky", we expect a caption about a sunset, while for the context on the right, we expect one about a sunrise. The static prompt generates captions about a sunset for both contexts, whereas the temporal-aware prompt captures the temporal ordering correctly and generates a sunrise for the context on the right.
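
Injecting temporal markers is a simple string transformation over the time-ordered items. The sketch below assumes that every item between the first and the last receives "Then,"; this is an illustrative guess at the convention, and `add_temporal_markers` is a hypothetical name.

```python
from typing import List


def add_temporal_markers(items: List[str]) -> str:
    """Prefix time-ordered items with "First,", "Then,", ..., "Finally,"."""
    if not items:
        return ""
    if len(items) == 1:
        return f"First, {items[0]}."
    middle = [f"Then, {item}." for item in items[1:-1]]
    return " ".join([f"First, {items[0]}."] + middle + [f"Finally, {items[-1]}."])


# Same tokens, opposite order: the markers make the ordering explicit text.
sunset = add_temporal_markers(["sun moving", "night sky"])
sunrise = add_temporal_markers(["night sky", "sun moving"])
print(sunset)   # First, sun moving. Finally, night sky.
print(sunrise)  # First, night sky. Finally, sun moving.
```

Because the markers are ordinary words, the language model can reason about order without any architectural change, which is why the same trick applies equally to visual tokens and frame captions.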

4 Experiments

4.1 Experimental Setup

To comprehensively evaluate our model, we report results on four video-language understanding tasks in few-shot settings: video captioning, video question answering (QA), video-language event prediction, and text-video retrieval. We compare our approach with state-of-the-art approaches on five benchmarks, i.e., MSR-VTT [62], MSVD [7], VaTeX [57], YouCook2 [74], and VLEP [23]. Dataset statistics are given in Table 1. For more details, please refer to Appendix ??.

Implementation Details. We use CLIP-L/14 as our default encoder for visual tokenization. We adopt the BLIP captioning checkpoint finetuned on COCO [31] for frame captioning. We use InstructGPT [40] as our default language model for generating text conditioned on the few-shot prompt. To construct the event vocabulary, we use the semantic role labeling model from AllenNLP. The experiments, including all few-shot finetuning of the baselines and semi-supervised training, are conducted on 2 NVIDIA V100 (16GB) GPUs.

Table 1: Statistics of datasets in our experiments

| Dataset | Task | #Train / #Eval |
|---|---|---|
| MSR-VTT [62] | Captioning; QA | 6,513 / 2,990 |
| MSR-VTT [62] | Retrieval | 7,010 / 1,000 |
| MSVD [7] | Question Answering | 30,933 / 13,157 |
| VaTeX v1.1 [57] | Captioning; Retrieval | 25,991 / 6,000 |
| YouCook2 [74] | Captioning | 10,337 / 3,492 |
| VLEP [23] | Event Prediction | 20,142 / 4,192 |

In-context Example Selection. In preliminary experiments, we found that generation performance is sensitive to the quality of the in-context examples. For example, for QA tasks such as MSVD-QA, where the annotations are automatically generated, the <question, answer> pairs in randomly selected in-context examples may be only weakly correlated with the video context. Thus, instead of using a fixed prompt for each query, we dynamically filter out irrelevant in-context examples. Specifically, given an $M$-shot support set randomly sampled from the training set, we select a subset of $N$ shots as in-context examples based on their SentenceBERT [47] similarity to the text query. Furthermore, we sort the selected examples in ascending order of similarity score to account for the recency bias [72] of large language models. For QA tasks, we choose the most relevant in-context examples by comparing against the questions; for captioning tasks, we compare against the frame captions. Unless otherwise specified, we use $M{=}10$ and $N{=}5$, which we refer to as 10-shot training.
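
The selection procedure (sample $M$ shots, keep the $N$ most query-similar, order them from least to most similar) can be sketched as follows. To stay self-contained, the sketch swaps in word-overlap Jaccard similarity for SentenceBERT cosine similarity; that substitution, the helper names, and the toy QA data are assumptions for illustration only.

```python
import random
from typing import Callable, List, Sequence


def token_jaccard(a: str, b: str) -> float:
    """Word-overlap similarity; a cheap stand-in for SentenceBERT similarity."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def sample_support(train: Sequence[dict], m: int, seed: int = 0) -> List[dict]:
    """Draw the random M-shot support set."""
    return random.Random(seed).sample(list(train), m)


def select_in_context(support: Sequence[dict], query: str, n: int,
                      key: Callable[[dict], str],
                      sim: Callable[[str, str], float] = token_jaccard) -> List[dict]:
    """Keep the N most query-similar examples, ordered least to most similar.

    The most similar example ends up last, right before the test query,
    to account for recency bias.
    """
    top = sorted(support, key=lambda ex: sim(key(ex), query), reverse=True)[:n]
    return top[::-1]


train = [  # toy QA examples
    {"question": "what is the man riding", "answer": "horse"},
    {"question": "who is cutting the cake", "answer": "woman"},
    {"question": "how fast is the car", "answer": "slow"},
    {"question": "what is the woman cutting", "answer": "cake"},
]
support = sample_support(train, m=4)  # M=4 here; the paper uses M=10
# For QA, compare against questions; for captioning, key would return frame captions.
chosen = select_in_context(support, "what is the woman slicing", n=2,
                           key=lambda ex: ex["question"])
print([ex["answer"] for ex in chosen])  # ['horse', 'cake']
```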

4.2 Few-shot Video Captioning

We report BLEU-4 [41], ROUGE-L [30], METEOR [5], and CIDEr [54] scores on three video captioning benchmarks covering both open-domain (MSR-VTT, VaTeX) and domain-specific (YouCook2) videos. We compare with both a state-of-the-art video captioner (UniVL [36]) and a state-of-the-art image captioner (BLIP [26]). To implement the BLIP baseline for few-shot video captioning, we extend the approach used for text-video retrieval evaluation in [26] to video-language training. Specifically, we concatenate the visual features of the sampled frames and feed them into the image-grounded text encoder to compute the language modeling loss. This is equivalent to stitching the sampled frames into one large image and feeding it to BLIP for image captioning. We found that this simple approach yields very strong baselines.
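
The feature concatenation used for the BLIP baseline can be pictured as joining each frame's sequence of visual tokens into one longer sequence. The sketch below shows only that bookkeeping with toy lists; it does not call BLIP, and the feature sizes and the helper name `concat_frame_features` are hypothetical.

```python
from typing import List

Vector = List[float]


def concat_frame_features(frame_feats: List[List[Vector]]) -> List[Vector]:
    """Join per-frame visual token sequences into one sequence.

    With F frames of P tokens each, the result holds F * P tokens in frame
    order, which the text model can then attend to as a single input.
    """
    return [token for frame in frame_feats for token in frame]


# 4 frames, 3 visual tokens per frame, 2-d features (toy sizes);
# token p of frame f is encoded as [f, p] to make the ordering visible.
frames = [[[float(f), float(p)] for p in range(3)] for f in range(4)]
video_tokens = concat_frame_features(frames)
print(len(video_tokens))  # 12
print(video_tokens[3])    # [1.0, 0.0], the first token of the second frame
```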

As shown in Table 2, existing methods are strongly biased toward certain datasets. For example, UniVL performs well on YouCook2 but fails on MSR-VTT and VaTeX, while BLIP shows the opposite pattern. This is because UniVL is pretrained on HowTo100M, which favors instructional videos such as YouCook2, while BLIP is pretrained on image-caption pairs, which favor description-style captions such as those in MSR-VTT and VaTeX. In contrast, our model performs competitively on both open-domain and instructional videos and significantly outperforms the baselines in average CIDEr across all three benchmarks (33.3 versus 23.9 for the strongest baseline, BLIP, without ASR). This indicates that by leveraging language models, we can maintain strong few-shot ability regardless of the video domain or the target caption distribution.
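
As a quick check of the Avg C column in Table 2, the sketch below recomputes the average CIDEr of the few-shot, no-ASR rows from the per-benchmark CIDEr scores in that table. The helper name `avg_cider` is hypothetical. The ASR rows average over only the two benchmarks that provide ASR, so they are left out here.

```python
# CIDEr scores of the few-shot, no-ASR rows of Table 2.
FEW_SHOT_CIDER = {
    "UniVL": {"MSR-VTT": 3.6, "YouCook2": 34.1, "VaTeX": 2.1},
    "BLIP": {"MSR-VTT": 39.5, "YouCook2": 11.5, "VaTeX": 20.7},
    "BLIP_cap": {"MSR-VTT": 30.2, "YouCook2": 9.4, "VaTeX": 28.9},
    "VidIL": {"MSR-VTT": 36.3, "YouCook2": 27.0, "VaTeX": 36.7},
}


def avg_cider(scores: dict) -> float:
    """Mean CIDEr over the benchmarks a method reports, rounded to one decimal."""
    return round(sum(scores.values()) / len(scores), 1)


for method, scores in FEW_SHOT_CIDER.items():
    print(method, avg_cider(scores))
# prints, one per line: UniVL 13.3, BLIP 23.9, BLIP_cap 22.8, VidIL 33.3
```

An unweighted mean treats each benchmark equally, so a method that collapses on one domain (e.g., BLIP on YouCook2) is penalized even if it is strong elsewhere.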

Table 2: 10-shot video captioning results. ♠ indicates concurrent work; the reported Flamingo [2] results use 16 shots. #VideoPT is the number of videos used for pretraining. B-4, R-L, M, and C denote BLEU-4, ROUGE-L, METEOR, and CIDEr. Avg C is the average CIDEr score across all available benchmarks. ASR indicates whether the model has access to the ASR subtitles. BLIP and BLIP$_{cap}$ use the pretrained checkpoint and the checkpoint finetuned on COCO captioning, respectively. All results are averaged over three random seeds.

| Method | #VideoPT | ASR | MSR-VTT B-4 | MSR-VTT R-L | MSR-VTT M | MSR-VTT C | YouCook2 B-4 | YouCook2 R-L | YouCook2 M | YouCook2 C | VaTeX B-4 | VaTeX R-L | VaTeX M | VaTeX C | Avg C |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| *Few-shot* | | | | | | | | | | | | | | | |
| UniVL | 1.2M | No | 2.1 | 22.5 | 9.5 | 3.6 | 3.3 | 25.3 | 11.6 | 34.1 | 1.7 | 15.7 | 8.0 | 2.1 | 13.3 |
| BLIP | 0 | No | 27.7 | 43.0 | 23.0 | 39.5 | 0.7 | 9.0 | 3.4 | 11.5 | 13.5 | 39.5 | 15.4 | 20.7 | 23.9 |
| BLIP$_{cap}$ | 0 | No | 21.6 | 48.0 | 22.7 | 30.2 | 3.7 | 8.6 | 3.8 | 9.4 | 20.7 | 41.5 | 17.4 | 28.9 | 22.8 |
| VidIL (ours) | 0 | No | 26.0 | 51.7 | 24.7 | 36.3 | 2.6 | 22.9 | 9.5 | 27.0 | 22.2 | 43.6 | 20.0 | 36.7 | 33.3 |
| UniVL | 1.2M | Yes | - | - | - | - | 4.3 | 26.4 | 12.2 | 48.6 | 2.7 | 17.7 | 10.2 | 3.4 | 26.0 |
| VidIL (ours) | 0 | Yes | - | - | - | - | 10.7 | 35.9 | 19.4 | 111.6 | 23.2 | 44.2 | 20.6 | 38.9 | 75.3 |
| Flamingo-3B♠ (16-shot) | 27M | No | - | - | - | - | - | - | - | 73.2 | - | - | - | 57.1 | - |
| Flamingo-80B♠ (16-shot) | 27M | No | - | - | - | - | - | - | - | 84.2 | - | - | - | 62.8 | - |
| *Fine-tuning* | | | | | | | | | | | | | | | |
| UniVL | 1.2M | No | 42.0 | 61.0 | 29.0 | 50.1 | 11.2 | 40.1 | 17.6 | 127.0 | 22.8 | 38.6 | 22.3 | 33.4 | 70.2 |
| UniVL | 1.2M | Yes | - | - | - | - | 16.6 | 45.7 | 21.6 | 176.8 | 23.7 | 39.3 | 22.7 | 35.6 | 106.2 |

As discussed in Section 1, video captions describe content at various semantic levels, so N-gram-based metrics may not fairly reflect how well a model captures video-caption alignment. We further verify this hypothesis in Section 4.5. Thus, in addition to automatic metrics, we include qualitative examples in Figure 4. More examples are in Appendix ??.

Additionally, for most existing methods and also concurrent work, e.g., Flamingo [2], adding