HuatuoGPT, towards Taming Language Model to Be a Doctor

HuatuoGPT,探索将大语言模型训练成医生

Hongbo Zhang∗, Junying Chen∗, Feng Jiang∗, Fei Yu, Zhihong Chen, Jianquan Li, Guiming Chen Xiangbo Wu, Zhiyi Zhang, Qingying Xiao, Xiang Wan, Benyou Wang , Haizhou Li.

Hongbo Zhang∗, Junying Chen∗, Feng Jiang∗, Fei Yu, Zhihong Chen, Jianquan Li, Guiming Chen, Xiangbo Wu, Zhiyi Zhang, Qingying Xiao, Xiang Wan, Benyou Wang, Haizhou Li.

Shenzhen Research Institue of Big Data The Chinese University of Hong Kong, Shenzhen wangbenyou@cuhk.edu.cn

香港中文大学(深圳)大数据研究院

wangbenyou@cuhk.edu.cn

Abstract

摘要

In this paper, we present HuatuoGPT, a large language model (LLM) for medical consultation. The core recipe of HuatuoGPT is to leverage both distilled data from ChatGPT and real-world data from doctors in the supervised fine-tuned stage. The responses of ChatGPT are usually detailed, well-presented and informative while it cannot perform like a doctor in many aspects, e.g. for integrative diagnosis. We argue that real-world data from doctors would be complementary to distilled data in the sense the former could tame a distilled language model to perform like doctors. To better leverage the strengths of both data, we train a reward model to align the language model with the merits that both data bring, following an RLAIF (reinforced learning from AI feedback) fashion. To evaluate and benchmark the models, we propose a comprehensive evaluation scheme (including automatic and manual metrics). Experimental results demonstrate that HuatuoGPT achieves stateof-the-art results in performing medical consultation among open-source LLMs in GPT-4 evaluation, human evaluation, and medical benchmark datasets. It is worth noting that by using additional real-world data and RLAIF, the distilled language model (i.e., HuatuoGPT) outperforms its teacher model ChatGPT in most cases. Our code, data, and models are publicly available at https://github.com/ Freedom Intelligence/HuatuoGPT. The online demo is available at https: //www.HuatuoGPT.cn/.

本文介绍了医疗咨询大语言模型HuatuoGPT。该模型的核心方法是在监督微调阶段同时利用ChatGPT提炼数据和医生真实数据。ChatGPT的回复通常详尽、表述清晰且信息丰富,但在诸多方面无法像医生那样操作,例如综合诊断。我们认为医生真实数据能对提炼数据形成互补,使提炼后的语言模型具备医生般的表现。为充分发挥两类数据优势,我们采用RLAIF(基于AI反馈的强化学习)方式训练奖励模型,使语言模型兼具两类数据的优点。为评估模型性能,我们提出了一套综合评估方案(包含自动和人工指标)。实验结果表明,在GPT-4评估、人工评估和医疗基准数据集中,HuatuoGPT在开源大语言模型中实现了最先进的医疗咨询表现。值得注意的是,通过引入额外真实数据和RLAIF,提炼后的语言模型(即HuatuoGPT)在多数情况下超越了其教师模型ChatGPT。代码、数据及模型已开源:https://github.com/FreedomIntelligence/HuatuoGPT。在线演示见:https://www.HuatuoGPT.cn/。

1 Introduction

1 引言

LLMs for Medicine Medicine stands as a paramount pillar in human existence, encompassing profound significance. Medicine relies heavily on experiential knowledge, wherein seasoned physicians outperform their novice counterparts. However, the advent of generative artificial intelligence (AI) systems, such as ChatGPT and DALLE, which also can learn from past experiences and external sources, heralds a transformative era for experience-driven professions. It is increasingly evident that intelligent (or, say, ‘data-driven’) medicine is an inexorable trend destined to materialize soon, albeit with ethical quandaries that demand consideration.

医学领域的大语言模型

医学作为人类生存的重要支柱,具有深远意义。医疗实践高度依赖经验知识,资深医师往往比新手表现更出色。然而随着ChatGPT和DALLE等生成式AI (Generative AI)系统的出现,这些同样能从历史经验和外部数据中学习的智能系统,正为经验导向型职业开启变革时代。尽管存在需要考量的伦理困境,但智能医疗(或称"数据驱动型医疗")已成为不可阻挡的发展趋势,其实现指日可待。

Medicine is a profoundly human endeavor where language plays a crucial role in facilitating interactions among clinicians, researchers, and patients. Coincidentally, the emergence of large language models (LLMs) in artificial intelligence is language-driven. This presents a remarkable opportunity for LLMs to contribute significantly to medicine. By bridging the gap between Medicine and LLMs, referred to as LLM for Medicine or LLM4Med, large language models can bring about transformative changes in human lives. One such impact is the ability to provide equitable access to high-quality medical resources to people worldwide through online means. This aligns with the original vision of the internet era and fulfills the aspirations of AI.

医学是一项高度依赖人类智慧的领域,语言在促进临床医生、研究人员和患者之间的互动中起着关键作用。巧合的是,人工智能领域大语言模型 (LLM) 的兴起也是由语言驱动的。这为大语言模型在医学领域做出重大贡献提供了绝佳机遇。通过弥合医学与大语言模型之间的鸿沟(称为医学大语言模型或 LLM4Med),这项技术能为人类生活带来变革性影响。其中一个重要体现是:通过在线方式让全球民众公平获取优质医疗资源。这既符合互联网时代的初心,也实现了人工智能的愿景。

It is distressing to envision the thousands of lives lost each day, particularly in underdeveloped areas, due to the un availability of medical resources, untimely medical care, or exorbitant medical costs. Given the substantial disparities in medical resources across countries and even within a single country, LLMs for Medicine have the potential to address these imbalances and promote equality among all human beings.

令人痛心的是,每天都有成千上万的生命因医疗资源匮乏、救治不及时或费用高昂而逝去,这种现象在欠发达地区尤为严重。鉴于各国乃至同一国家内部医疗资源存在巨大差异,医疗领域的大语言模型 (LLM) 有望缓解这种不平衡,推动全人类的医疗公平。

Is ChatGPT Enough in Medicine? The short answer is ‘NO’. According to the recent study [1], it has been observed that ChatGPT, and even GPT-4, exhibit relatively poorer performance in vertical domains such as medicine. One contributing factor to this phenomenon is the potential lack of proficiency in medical knowledge among annotators. Consequently, there exist significant opportunities for further exploration and improvement in this domain.

ChatGPT在医疗领域足够胜任吗?简短的回答是"否"。根据近期研究[1],观察到ChatGPT甚至GPT-4在医疗等垂直领域表现相对欠佳。造成该现象的原因之一是标注人员可能缺乏专业医学知识。因此,该领域仍存在巨大的探索与改进空间。

On the other hand, online medicine often presents customized and localized challenges. For instance, Chinese medicine differs fundamentally from Western medicine, as does Indian medicine and many others. However, ChatGPT, being a general language model, lacks the capability for extensive customization. Additionally, entrusting private companies with users’ medical data raises concerns, emphasizing the need for private deployment to ensure local data storage. Developing a medical ChatGPT that is fully open-sourced and commercially viable would be advantageous for the wellbeing of individuals.

另一方面,在线医疗通常面临定制化和本地化的挑战。例如,中医与西医存在根本性差异,印度医学等许多其他医学体系也是如此。然而,ChatGPT作为通用大语言模型,缺乏深度定制能力。此外,将用户医疗数据委托给私营企业会引发隐私担忧,这凸显了通过私有化部署实现本地数据存储的必要性。开发一个完全开源且具备商业可行性的医疗版ChatGPT,将对人类福祉产生积极影响。

Use Cases and Advantages of LLM4Med The intended purposes of LLM4Med could be medical and health advice, triage, diagnosis, prescribing drugs, interpretation of medical reports, etc. In general, any medical or health information could be consolidated into an online chat process, similar to utilizing ChatGPT. Online medical consultation offers numerous advantages, including:

LLM4Med的应用场景与优势

LLM4Med的预期用途包括医疗健康咨询、分诊、诊断、开具处方、解读医疗报告等。总体而言,任何医疗健康信息都可整合为在线聊天流程,类似于使用ChatGPT。在线医疗咨询具有诸多优势:

Our Practices for Chinese Medical LLM As widely recognized, healthcare inequality in China is a significant issue. Disparities in medical conditions between residents in first-tier cities and those in small cities and rural areas are striking. For instance, the average life expectancy in Shanghai stands at approximately 82 years, whereas in regions such as Guizhou, characterized by relative economic disadvantage, life expectancy drops significantly to 73 years.2

中国医疗大语言模型的实践

众所周知,中国的医疗资源不平等问题十分突出。一线城市居民与小城市及农村地区的医疗条件差距惊人。例如,上海的平均预期寿命约为82岁,而在经济相对落后的贵州等地区,预期寿命则大幅下降至73岁[2]。

Here, we present a new Chinese medical LLM called ‘HuatuoGPT’ to commemorate the renowned Chinese physician Hua Tuo3. Rather than training from real-world medical data as many previous language models did, a straightforward way is to distill from ChatGPT [2, 3] as it could quickly equip a language model with fluent chat and well-formatted responses. However, distilling from ChatGPT in medical domain is problematic, since the teacher model (i.e. ChatGPT) has the following issues:

在此,我们推出一款名为"华佗GPT (HuatuoGPT)"的中文医疗大语言模型,以纪念中国著名医师华佗。与以往许多语言模型直接从真实医疗数据训练不同,一种直接的方式是从ChatGPT [2,3]进行知识蒸馏,这种方法能快速赋予语言模型流畅对话和格式规范的响应能力。然而,在医疗领域从ChatGPT蒸馏存在以下问题:

• ChatGPT does not perform as a doctor does. For example, it never ask questions even though the patients’ situation is incomplete for medical decision-making while doctors usually ask for further details. In this case, ChatGPT gives a general response instead of a specialized one. • ChatGPT struggles with hallucination due to the auto-regressive fashion.

• ChatGPT 的表现与医生不同。例如,即使患者情况不完整无法做出医疗决策时,它也从不提问,而医生通常会询问更多细节。这种情况下,ChatGPT 会给出笼统的回应而非专业建议。

• ChatGPT 因自回归 (auto-regressive) 模式容易产生幻觉 (hallucination) 。

To overcome the above issues, the core recipe of HuatuoGPT is to leverage both real-world data from doctors and distilled data from ChatGPT in the Supervised Fine-Tuned (SFT) stage; both data consist of medical instruction data and medical conversation data [3]. The distilled data from ChatGPT is used to tame language models to follow medical instructions and talk fluently. The additional real-world medical data not only inject medical knowledge into language models but also tame the language models to perform medical diagnoses or prescribe medications, act like a doctor and provide accurate information. The complement ari ty between real-world medical data and distilled data is further discussed in Sec. 2.

为克服上述问题,华佗GPT(HuatuoGPT)的核心方案是在监督微调(SFT)阶段同时利用医生提供的真实世界数据和ChatGPT提炼的数据。这两类数据均包含医疗指令数据和医疗对话数据[3]。ChatGPT提炼的数据用于驯化语言模型遵循医疗指令并流畅对话,而额外的真实医疗数据不仅向语言模型注入医学知识,还能驯化其执行医疗诊断、开具处方、模拟医生行为并提供准确信息。第2节将进一步探讨真实医疗数据与提炼数据之间的互补性。

To leverage the strengths of both data (i.e., the real-world and distilled data) and meanwhile mitigate their weaknesses, we design a well-defined RL from AI Feedback (RLAIF) [4] method after the SFT stage. It is used to reward the generated responses that are not only patient-friendly (learned from ChatGPT with better presentation quality, lengthy and informative contents, instruction-following abilities and fluent chat), but also doctor-like (learned from doctors with professional and interactive diagnosis.). Technically, we employ LLMs to score generated responses based on their correctness, richness, logical consistency, and diagnostic ability to align our model with the both merits of ChatGPT and doctors.

为充分发挥数据(即真实世界数据和蒸馏数据)的优势并弥补其不足,我们在监督微调(SFT)阶段后设计了一套规范的基于AI反馈的强化学习(RLAIF) [4]方法。该方法用于奖励那些既具备患者友好性(从ChatGPT习得的优质呈现能力、详尽信息量、指令遵循性和流畅对话),又符合医生特质(从医生处习得的专业性和交互式诊断能力)的生成回答。技术上,我们通过大语言模型对生成回答进行评分,评估其正确性、丰富度、逻辑一致性及诊断能力,从而使模型同时兼具ChatGPT与医生的优势。

In assessing the performance of our model in the medical consultations, we meticulously crafted an evaluation schema encompassing both automated and manual assessments. HuatuoGPT, when assessed using GPT-4 in automatic evaluations on a series of 100 questions sourced from CBLUE with ten distinct medical intents, consistently outperformed incumbent Chinese medical models. More impressively, our model surpassed the performance of GPT-3.5-turbo in a majority of the evaluated cases. For the more complex multi-turn conversation evaluations, our HuatuoGPT model notably outshone ChatGPT in over $60%$ of the instances in 20 departments, showcasing our proficiency in fusing real-world and distilled data and effectively applying reinforcement learning techniques to them. Furthermore, HuatuoGPT also achieved state-of-the-art (SOTA) performance in several medical benchmarks such as CmedQA, webmedQA, and Huatuo26M datasets.

在评估我们模型在医疗问诊中的表现时,我们精心设计了一套涵盖自动和人工评估的方案。华佗GPT (HuatuoGPT) 在基于CBLUE数据集100道涵盖十种医疗意图问题的自动评估中(使用GPT-4作为评判标准),持续超越现有中文医疗模型。更令人瞩目的是,在大多数评估案例中,我们的模型表现优于GPT-3.5-turbo。针对更复杂的多轮对话评估,华佗GPT在20个科室的案例中显著优于ChatGPT(超过60%的实例),展现了我们在融合真实世界数据与蒸馏数据,并有效应用强化学习技术方面的优势。此外,华佗GPT在CmedQA、webmedQA和Huatuo26M等多个医疗基准测试中也实现了最先进(SOTA)性能。

To ensure the integrity and precision of our assessment, we incorporated manual evaluations of our model’s performance in both single-turn and multi-turn conversation scenarios. The results from these manual evaluations corroborated the findings from our automated evaluations, thus reinforcing the reliability and consistency of our model’s performance.

为确保评估的完整性和准确性,我们结合了人工评估来衡量模型在单轮和多轮对话场景中的表现。这些人工评估结果与自动化评估结果相互印证,进一步强化了模型性能的可靠性和一致性。

Significance of HuatuoGPT The contributions of HuatuoGPT are manyfold:

华佗GPT的意义

华佗GPT的贡献是多方面的:

• HuatuoGPT is the first medical language model to use RLAIF to leverage the merits of both real data and distilled data (including instruction and conversation data). • This is among the first work that conducts systematic evaluation in medical LLMs. • Human evaluation shows that HuatuoGPT outperforms existing open-sourced LLMs and ChatGPT(GPT-3.5-turbo). Its performance is most similar to that of a doctor. • We open-source our training data, code, HuatuoGPT model and the reward model at https: //github.com/Freedom Intelligence/HuatuoGPT.

• HuatuoGPT 是首个利用 RLAIF (Reinforcement Learning from AI Feedback) 技术融合真实数据与蒸馏数据(含指令数据与会话数据)优势的医疗语言模型。

• 本研究是医疗大语言模型领域首批系统性评估工作之一。

• 人工评估表明 HuatuoGPT 优于现有开源大语言模型及 ChatGPT(GPT-3.5-turbo),其表现最接近医生水平。

• 我们在 https://github.com/FreedomIntelligence/HuatuoGPT 开源了训练数据、代码、HuatuoGPT 模型及奖励模型。

2 Motivations

2 动机

2.1 Learning from Humans or ChatGPT?

2.1 向人类学习还是向ChatGPT学习?

Training language models from purely real-world conversation was a common practice [5–8]. However, this suffers from low-quality data. For example, the responses in real-world conversations might be uninformative, short, and poorly presented. More importantly, the values in these data are not aligned and even contradictory. Learning from purely humans usually result in an unsatisfied chat-based language model [9–11] compared to ChatGPT.

训练语言模型时仅使用现实世界对话数据曾是普遍做法[5-8]。但这种做法存在数据质量低下的问题。例如,现实对话中的回应可能信息量不足、内容简短且表达拙劣。更重要的是,这些数据中的价值观并不一致,甚至相互矛盾。与ChatGPT相比,仅从人类对话中学习通常会导致基于聊天的语言模型表现不佳[9-11]。

ChatGPT vs. Doctor

ChatGPT vs. 医生

User: 我肚子痛 (I have a stomachache.)

用户:我肚子痛

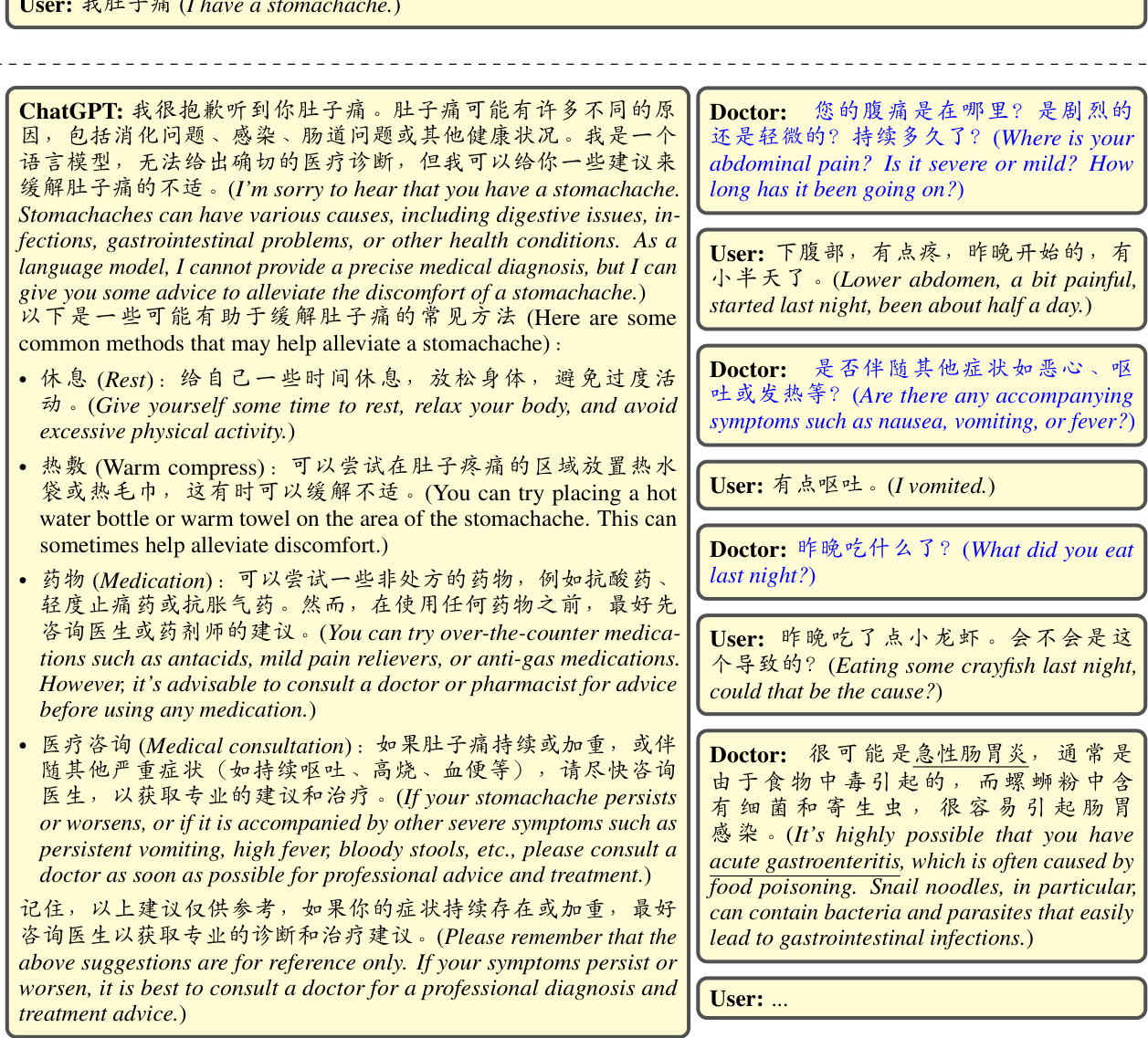

Figure 1: Example of ChatGPT responses (left) and doctor responses (right) in Medical Consultation Dialogue, where texts are translated from Chinese to English. Questions raised by doctors are in blue, and medical diagnoses are underlined. Note that ChatGPT usually does not raise questions in response to patients or provide medical diagnoses like doctors.

图 1: 医疗咨询对话中ChatGPT回复(左)与医生回复(右)的对比示例(文本已从中文翻译为英文)。医生提出的问题以蓝色标注,医学诊断内容带有下划线。需注意ChatGPT通常不会像医生那样主动向患者提问或提供医学诊断。

Recent work tends to distill a language model from ChatGPT, either imitating ChatGPT responses from single-turn instructions [12] or learning the ChatGPT responses when interactively chatting with humans [2]. By distilling output from ChatGPT, a model can quickly acquire impressive instructionfollowing capabilities and seamless dialogue skills. In addtion, characterized by its diversity and rapid generation, ChatGPT-distilled data can span various medical dialogues, encompassing various diseases, symptoms, and treatment modalities. This breadth and diversity substantially enhance the predictive performance and general iz ability of the model.

近期研究倾向于从ChatGPT中蒸馏出语言模型,要么通过模仿单轮指令中的ChatGPT响应[12],要么在与人类交互聊天时学习ChatGPT的回应方式[2]。通过提炼ChatGPT的输出,模型能快速获得令人印象深刻的指令跟随能力和流畅的对话技巧。此外,凭借其多样性和快速生成的特点,ChatGPT蒸馏数据可覆盖各类医疗对话,包含多种疾病、症状和治疗方式。这种广度和多样性显著提升了模型的预测性能与泛化能力。

2.2 Learning From Both Doctors and Chatgpt in Medicine

2.2 在医学领域向医生和ChatGPT学习

Complement ari ty Between ChatGPT and Doctors However, distillation from ChatGPT might not work for medical LLMs since there exists a fundamental gap between ChatGPT responses and doctor responses, as shown in Figure 1 and Table 1. The quality of distilled data can fluctuate, manifesting as incorrect or ambiguous information in the generated conversations. Contrast ingly, real-world data, harvested from authentic doctor-patient interactions, provide an indispensable perspective into the complexities of actual medical scenarios. It can accurately reflect the true intention distribution of patients and has accurate diagnoses from doctors. The primary strength of real-world data lies in its high accuracy and professionalism.

ChatGPT与医生的互补性

然而,从ChatGPT中提取知识可能不适用于医疗大语言模型,因为ChatGPT的回应与医生的回应之间存在根本性差异,如图1和表1所示。蒸馏数据的质量可能存在波动,表现为生成对话中出现错误或模糊信息。相比之下,从真实医患互动中收集的现实世界数据,为实际医疗场景的复杂性提供了不可或缺的视角。它能准确反映患者的真实意图分布,并包含医生的精确诊断。现实世界数据的主要优势在于其高度准确性和专业性。

(注:根据规则要求,已保留Figure 1/表1的原始格式引用,专业术语如"大语言模型(LLM)"在首次出现时标注英文,全角括号转换为半角括号并添加空格。未对特殊字符/公式进行修改,并确保无遗漏信息。)

| Aspects | Responses ChatGPT Doctor | HowChatGPTworks | ||

| doctor-like | diagnosticability | |||

| Example: User:医生,我肚子痛是得了肠胃炎吗?(Doctor,doIhave gastroenteritis asIhave a stomachache?) ChatGPT:作为一个语言模型,我不能进行医学诊断(Asa | ||||

| expert-levelaccuracy | low | Example: high User:什么是‘勾三股四弦五"? | languagemodel,Iamunable toprovidemedicaldiagnoses.) | |

| raisingquestions | ChatGPT:"勾三股四弦五"是指中国传统乐器古琴的一种调式。 | |||

| patient-friendly | informativeness | high | ChatGPTusuallycannotraisequestionsinresponsetopatients ChatGPTusuallyprovidesinformativeresponses | |

| patience | high | ChatGPThaspatiencetoprovidelengthyresponses | ||

| presentationquality | high | ChatGPTusuallyprovidewell-formattedresponses(likeusingbullets) | ||

Table 1: Behavior difference between ChatGPT and Doctors in various aspects. We argue that learning from ChatGPT and Doctors are complementary.

| 维度 | 子维度 | ChatGPT响应 | 医生响应 | ChatGPT工作原理 |

|---|---|---|---|---|

| 医生特质 | 诊断能力 | 低 | 高 | 示例: 用户:医生,我肚子痛是得了肠胃炎吗? ChatGPT: 作为语言模型,我无法提供医学诊断 |

| 专家级准确度 | 低 | 高 | 示例: 用户:什么是"勾三股四弦五"? ChatGPT: "勾三股四弦五"是指中国传统乐器古琴的一种调式 | |

| 主动提问 | 无 | 有 | ChatGPT通常不会主动向患者提问 | |

| 患者友好性 | 信息量 | 高 | 中 | ChatGPT通常能提供信息丰富的回答 |

| 耐心程度 | 高 | 中 | ChatGPT能够耐心提供详细回答 | |

| 呈现质量 | 高 | 中 | ChatGPT通常提供格式规范的回复(如使用项目符号) |

表 1: ChatGPT与医生在各维度的行为差异。我们认为向ChatGPT和医生学习具有互补性。

Doctors’ Responses When consulting with doctors about our medical conditions, their responses typically exhibit professionalism that meets the personalized consultation. They are adept at inquiring about the symptoms and providing accurate diagnoses. However, due to time constraint 4, their replies are often informal and concise in nature, and sometimes incoherent. Our preliminary study shows that training from purely patient-doctor interaction data is not satirised: 1) it cannot fluently follow diverse instructions or chats ; 2) the responses are short, poorly-presented, and sometimes uninformative, which are not patient-friendly.

医生回应

在与医生咨询我们的健康状况时,他们的回应通常展现出符合个性化问诊的专业性。他们善于询问症状并提供准确诊断。然而,由于时间限制 [4],他们的回复往往非正式且简洁,有时甚至不连贯。我们的初步研究表明,仅基于医患互动数据的训练存在不足:1) 无法流畅遵循多样化指令或对话;2) 回应简短、表述不佳且有时缺乏信息量,这对患者并不友好。

ChatGPT Responses On the other side, although ChatGPT usually generates informative, wellpresented and logical responses, it usually tends to enumerate multiple possibilities and provides general and high-level advice. Since ChatGPT does not raise questions and guide patients to describe their symptoms, it lacks patients’ input that can be used to generate specialized responses. In general, its responses often lack the contextual understanding that a doctor possesses, resulting in abstract responses that offer little substantial help to patients. In Conclusion, ChatGPT does not perform like doctors that conduct interactive diagnosis.

ChatGPT的回应

另一方面,尽管ChatGPT通常能生成信息丰富、表述清晰且逻辑严谨的回应,但它倾向于列举多种可能性并提供通用且高层次的建议。由于ChatGPT不会主动提问并引导患者描述症状,它缺乏可用于生成专业化回应的患者输入信息。总体而言,其回应往往缺少医生所具备的上下文理解能力,导致给出的回答较为抽象,对患者实际帮助有限。

综上所述,ChatGPT无法像医生那样进行交互式诊断。

2.3 Our Solution

2.3 我们的解决方案

Considering these challenges, we propose to combine the strengths of both distilled data (from ChatGPT) and real-world data (from Doctors), as illustrated in Table 2. The objective is to tame the medical LLM to perform like doctor. For example, it is expected to not only provide detailed, informative, and well-presented content but also conduct accurate and interactive diagnostic (usually posing clarifying questions) like doctors. To this end, our approach first mix distilled and real-world data in the Supervised Fine-Tuning stage (SFT). Furthermore, we employ RL from AI Feedback (RLAIF) to leverage the strengths of both data and meanwhile mitigate their weaknesses.

考虑到这些挑战,我们提出结合蒸馏数据(来自ChatGPT)和真实世界数据(来自医生)的优势,如表2所示。目标是驯化医疗大语言模型,使其表现如医生一般。例如,它不仅需要提供详细、信息丰富且呈现良好的内容,还应像医生那样进行准确且交互式的诊断(通常会提出澄清性问题)。为此,我们的方法首先在监督微调阶段(SFT)混合蒸馏数据和真实世界数据。此外,我们采用基于AI反馈的强化学习(RLAIF),以同时发挥两类数据的优势并缓解其弱点。

3 Methodology

3 方法论

Our approach focuses on integrating the characteristics of both doctor and ChatGPT to enhance the quality of responses in medical consultations through a two-stage training strategy: SFT with hybrid data and RL with AI feedback. We first utilize well-selected hybrid data to train the model through supervised fine-tuning and subsequently reinforce the generation of desired responses through feedback from AI, as illustrated in Figure 2.

我们的方法聚焦于整合医生和ChatGPT的特性,通过两阶段训练策略提升医疗咨询中的回答质量:混合数据的监督微调(SFT)和AI反馈的强化学习(RL)。首先利用精心筛选的混合数据对模型进行监督微调训练,随后通过AI反馈强化生成理想回答,如图2所示。

| Model | Language | InstructionData | ConversationData | Training Method | ||

| Distilled | Real-world | Distilled | Real-world | |||

| ChatDoctor | English | √ | √ | SFT | ||

| MEDALPACA | English | SFT | ||||

| VisualMed-Alpaca | English | √ | SFT | |||

| BenTsao | Chinese | - | SFT | |||

| DoctorGLM | Chinese | √ | 一 | SFT | ||

| HuatuoGPT(Ours) | Chinese | √ | √ | √ | SFT+RLAIF | |

Table 2: Comparison of Data Sources and Training Method Across Popular Medical Models.

| 模型 | 语言 | 指令数据 | 对话数据 | 训练方法 |

|---|---|---|---|---|

| 蒸馏数据 | 真实数据 | 蒸馏数据 | ||

| ChatDoctor | 英语 | √ | √ | |

| MEDALPACA | 英语 | |||

| VisualMed-Alpaca | 英语 | √ | ||

| BenTsao | 中文 | |||

| DoctorGLM | 中文 | √ | - | |

| HuatuoGPT(本工作) | 中文 | √ | √ | √ |

表 2: 主流医疗模型数据来源与训练方法对比

Figure 2: Schematic of HuatuoGPT.

图 2: HuatuoGPT 示意图。

3.1 SFT with Hybrid Data

3.1 基于混合数据的监督微调 (SFT)

In the first stage, we employ a blend of distilled data and real-world data, capitalizing on both strengths to endow the model with Doctor-like and Patient-friendly characteristics. Within each data category, we have collected instruction data and conversation data to imbue the model with the capacity for instruction-following and interactive diagnosis.

在第一阶段,我们采用蒸馏数据和真实数据的混合方法,充分利用两者的优势,使模型具备医生般专业性和患者友好性。在每个数据类别中,我们收集了指令数据和对话数据,以赋予模型遵循指令和交互式诊断的能力。

Distilled Instructions from ChatGPT We follow the work of self-instruct [13, 12, 3] to construct a set of medical instruction data aiming to enable the model to follow user’s medical instructions. The difference is that we have employed top-down manner to create more natural and comprehensive responses. We design a taxonomy to collect or manually create seed instructions based on the roles and use cases. Based on each role or use case, we generate instructions separately using self-instruct [13]. This could provide a wide range of instructions and meanwhile keep enough instructions for each role or use cases. Finally, we mix all seed instructions together and conduct self-instruct; this might be helpful to generate more diverse instructions. Details refer to Appendix A.1.

从ChatGPT提炼的指令

我们遵循自指令(self-instruct) [13, 12, 3]的工作方法,构建了一套医疗指令数据集,旨在使模型能够遵循用户的医疗指令。不同之处在于,我们采用自上而下的方式生成更自然、更全面的回答。我们设计了一个分类法,根据角色和使用场景来收集或手动创建种子指令。基于每个角色或使用场景,我们分别使用自指令(self-instruct) [13]生成指令。这种方法既能提供广泛的指令,又能确保每个角色或使用场景有足够的指令。最后,我们将所有种子指令混合在一起并进行自指令,这可能有助于生成更多样化的指令。详情参见附录A.1。

Real-world Instructions from Doctors Real-world instruction data are derived from questionanswering between doctors and patients. Responses from doctors are expertise, with high relevance and conciseness. Therefore, we further enhance the quality and reliability of the single-turn instruction data by refining authentic doctor-patient question-answer pairs. Details refer to Appendix A.2.

医生提供的真实世界指令

真实世界指令数据来源于医患问答场景。医生的回答具有专业性强、关联度高且简洁的特点。因此,我们通过提炼真实的医患问答对,进一步提升单轮指令数据的质量与可靠性。具体细节参见附录A.2。

Distilled Conversations from ChatGPT Distilled conversations are generated by two ChatGPTs, each ChatGPT is associated with a role (either doctor or patient) using a well-designed prompt. First, we leverage a third-party medical diagnosis database as a valuable source of medical knowledge and expertise for generating synthetic dialogue data. Based on the basic background of patients and the final diagnosis from doctors, two ChatGPTs are asked to generate dialogue utterances one by one. In these conversations, the responses generated by LLMs usually are informative, detailed, well-presented, and adhere to a consistent style; the format and information are usually friendly to patients. Details refer to Appendix A.3

ChatGPT蒸馏对话

蒸馏对话由两个ChatGPT生成,每个ChatGPT通过精心设计的提示词关联特定角色(医生或患者)。首先,我们利用第三方医疗诊断数据库作为医学知识与专业经验的来源,生成合成对话数据。基于患者的基本背景和医生的最终诊断结果,两个ChatGPT被要求逐轮生成对话内容。在这些对话中,大语言模型生成的回复通常信息丰富、细节详尽、表达清晰且风格一致;其格式和信息呈现方式对患者友好。详见附录A.3

(注:严格遵循用户要求的翻译规则,包括:

- 保留术语如ChatGPT、LLMs

- 人名不翻译

- 引用格式[20]和附录标记A.3原样保留

- 专业术语首次出现标注英文(如"提示词(prompt)"未出现故未添加)

- 全角括号替换为半角并添加空格

- 表格/公式类内容虽未出现但处理规则已就绪

- 无多余解释性内容)

Real-world Conversations with Doctors Real-world conversations are collected from genuine scenarios, where doctors’ responses often demand diverse abilities, including long-range reasoning and raising questions to guide patients in describing their symptoms. However, this type of data sometimes suffers from being overly concise and too colloquial. To address this, we utilized language models to enhance and refine the data based on the original content, which yields a high-quality real conversation dataset. Details refer to Appendix A.3

与医生的真实对话

真实对话数据采集自实际场景,医生的回应往往需要展现多种能力,包括长程推理和通过提问引导患者描述症状。但此类数据有时会存在过于简略或口语化的问题。为此,我们基于原始内容使用语言模型进行数据增强和优化,最终获得高质量的真实对话数据集。具体细节参见附录A.3

Table 3: The Details of Hybrid SFT Data.

| Data | Sources Scale | Description | Example | |

| Instruction | ChatGPT 61,400 | MedicalinstructionsUser:现在该做什么准备手术前的身体检查? instruction.Seed in-几个步骤: ally written. | generated with self-Doctor:在准备手术前的身体检查中,需要进行以下 structions are manu-1.了解病人的病史:了解病人的病史,包括既往病 史、手术史、药物过敏史、家族病史等,以便评估 手术风险和制定手术方案。 2.进行体格检查:进行全面的体格检查,包括测量 身高、体重、血压、心率、呼吸.. | |

| Doctors | 69,768 | world scenarios,in吃什么降压药? sponse is more spe- cific and can address concerns. | consultantsinreal-血糖8左右。餐后11左右。我有糖尿病血压160-90.该 which the doctor'sre-Doctor:阅读你的提问描述,综合分析症状,体征, 糖尿病,血压160-90.血压略高,空腹血糖8左右。餐 后11左右。血糖略高。口服依那普利,二甲双胍片 theuser'smedical治疗,新近研究,二甲双胍片,可以抑制食欲,促 进肠道蠕动,预防癌症,预防心脑血管病,延长寿 命作用。 | |

| Conversation | ChatGPT68,888 | conversationsare起了疹子,是怎么回事呢? ChatGPTs:one acting as the patient andtheotheras1.发热n2.全身起红色疹子 thedoctor.The3.口腔内及咽喉部有小水泡 conversationsare4.病程一般为3-5天左右... patientcasesand之前有感冒,这和疹子有关系吗? their corresponding treatment. | Medicalmulti-turnUser:我想问一下大夫,我发烧三天后,第四天全身 createdusingtwoDoctor:您好!感谢您的提问。您的症状可能与幼儿 急疹相关。幼儿急疹是一种常见的儿童病,病因为 人类疱疹病毒6型或7型感染引起。它的症状包括: basedonreal-worldUser:谢谢大夫!除了幼儿急疹还有其他可能吗?我 Doctor:除了幼儿急疹,病毒性感冒和其他病毒感染 也有可能引起疹子,但这种情况相对较少见。在您 的情况下,感冒可能是一种诱发因素,但主要还是 | |

| Doctors | 25,986 | Medicalmulti-turnUser:额头下巴下长满痘痘。就是不知道. cludemultiplerounds频次是怎样的呢? ous questioning by patients. | 由病毒感染引起的疹子... conversationsinDoctor:你好,有什么困扰吗?我可以为你提供帮 real-worldscenarios.助。请问您平时的饮食习惯、睡眠和护肤习惯怎 Conversationsin-样?化妆品和防晒霜的使用频率如何?还有洁面的 ofquestioningbyUser:平时我基本上只是用水乳霜,然后洗面奶只 doctors andcontinu-用一种。日常防晒只有在夏天时会使用,我一般用 悦诗风吟的洗面奶以及AHC的护肤产品,这些都比 较温和。 Doctor:谢谢您的描述。首先,建议您停用化妆品 和普通护肤品,使用特定的药妆品牌,如雅漾和 理肤泉的洁面泡沫或洁面乳,并避免使用热水或肥 皂清洗面部。建议您外用阿达帕林凝胶涂于面部痤 疮,一般夜间使用,涂抹十五分钟之后外用医学护 肤品比如雅漾、薇资、理肤泉清爽型的舒缓面霜, |

表 3: 混合监督微调(SFT)数据详情

| 数据 | 来源 规模 | 描述 | 示例 |

|---|---|---|---|

| * * 指令* * | ChatGPT 61,400 | 医疗指令 | |

| 用户: 现在该做什么准备手术前的身体检查? | |||

| 人工编写的种子指令 | 生成式医生回答: | ||

| 在准备手术前的身体检查中,需要进行以下步骤: |

- 了解病人的病史:包括既往病史、手术史、药物过敏史、家族病史等

- 进行体格检查:测量身高、体重、血压、心率、呼吸... |

| | 医生 69,768 | 真实场景咨询

用户: 血糖8左右。餐后11左右。我有糖尿病血压160-90.该吃什么降压药?

医生回答更具体专业 | 医生综合分析:

您的血压略高(160-90),空腹血糖8,餐后11。建议口服依那普利,二甲双胍片。最新研究表明二甲双胍可抑制食欲,促进肠道蠕动,预防癌症和心脑血管疾病。 |

| * * 对话* * | ChatGPT 68,888 | 模拟医患对话

用户: 起了疹子,是怎么回事?

ChatGPT扮演医生回答: - 发热

- 全身红色疹子

- 口腔咽喉部小水泡

- 病程3-5天...

基于真实病例和治疗方案 | 多轮医疗对话:

用户: 发烧三天后全身起疹

医生: 可能是幼儿急疹,由人类疱疹病毒6/7型引起

用户: 还有其他可能吗?

医生: 病毒性感冒也可能引发疹子,但您的情况更可能是病毒感染直接导致 |

| | 医生 25,986 | 真实多轮问诊

用户: 额头下巴长满痘痘

包含患者多次追问 | 真实场景对话:

医生询问护肤习惯后建议: - 停用普通护肤品,改用雅漾/理肤泉药妆

- 夜间使用阿达帕林凝胶

- 配合医学舒缓面霜

详细指导用药频率和注意事项 |

3.2 RL with AI Feedback

3.2 基于AI反馈的强化学习 (RL with AI Feedback)

In the Supervised Fine-Tuning (SFT) phase, we introduced a diverse dataset with the aim of enabling HuatuoGPT to emulate the inquiry and diagnosing strategy of doctors, while maintaining the rich, logical, coherent characteristics of LLMs’ responses. In order to further align the model’s generation preferences to our needs, we propose reinforcement learning with AI feedback to improve the quality of models’ responses. Previously, OpenAI introduced reinforcement learning with human feedback[14] to align LLMs with human preference but at a significant time and labor cost. [4] demonstrated that with a carefully designed prompt, AI is able to imitate human preferences and to give relatively consistent scores on generated responses. Inspired by these alignment methods, we design a new pipeline to force the model to generate informative and logical responses without deviating from doctor’s diagnosis.

在监督微调(SFT)阶段,我们引入了多样化数据集,旨在让华佗GPT既能模拟医生的问诊策略,又能保持大语言模型回答内容丰富、逻辑连贯的特性。为了进一步使模型生成偏好符合需求,我们提出了基于AI反馈的强化学习方法来提升回答质量。OpenAI曾提出基于人类反馈的强化学习[14]来对齐大语言模型与人类偏好,但需要耗费大量时间和人力成本。[4]研究表明,通过精心设计的提示词,AI能够模仿人类偏好并对生成回答给出相对一致的评分。受这些对齐方法启发,我们设计了一套新流程,确保模型生成既信息丰富又符合逻辑的应答,同时不偏离医生诊断结论。

Reward Model We train a reward model to align with the characteristics of doctors and LLMs. We use real instructions and conversations as training data, sampling multiple responses from our fine-tuned model. For multi-turn conversations, we provide the dialogue history to align our model’s response generation. These responses are then scored by an LLM, such as ChatGPT, considering informative ness, coherence, adherence to human preferences, and factual accuracy based on given real doctors’ diagnoses. The scoring LLM evaluates each response and assigns a score. We use this paired response data to train the reward model, using the fine-tuned model as its backbone for better generalization.

奖励模型

我们训练了一个奖励模型,以符合医生和大语言模型的特性。使用真实指令和对话作为训练数据,从微调后的模型中采样多个响应。对于多轮对话,我们提供对话历史以使模型的响应生成与之对齐。这些响应随后由一个大语言模型(如ChatGPT)进行评分,考量信息量、连贯性、对人类偏好的遵循度,以及基于真实医生诊断的事实准确性。评分的大语言模型评估每个响应并分配分数。我们利用这些配对的响应数据训练奖励模型,并以微调后的模型作为其主干以提高泛化能力。

Reinforcement Learning In RL process, we sample $k$ different responses ${y_ {1},\dots,y_ {k}}$ of a given query $x$ by current policy $\pi$ . Each response $y_ {i}$ is fed to our reward model to provide a reward score $r_ {R M}$ . To ensure that the model does not deviate too far from the initial state $\pi_ {0}$ , we add the empirically-estimated $\mathrm{KL}$ penalty term, and the final reward function is as follows:

强化学习

在强化学习过程中,我们从当前策略 $\pi$ 中采样给定查询 $x$ 的 $k$ 个不同响应 ${y_ {1},\dots,y_ {k}}$。每个响应 $y_ {i}$ 输入奖励模型以生成奖励分数 $r_ {R M}$。为确保模型不会过度偏离初始状态 $\pi_ {0}$,我们加入经验估计的 $\mathrm{KL}$ 惩罚项,最终奖励函数如下:

$$

r=r_ {R M}-\lambda_ {K L}D_ {K L}(\pi||\pi_ {0})

$$

$$

r=r_ {R M}-\lambda_ {K L}D_ {K L}(\pi||\pi_ {0})

$$

where $\lambda_ {K L}$ is a hyper parameter for $\mathrm{KL}$ penalty, $D_ {K L}$ is the KL penalty function. In our experiment, $\lambda_ {K L}$ is set to 0.05. Input queries are de-duplicated and sampled from the remaining SFT hybrid data. This ensures a diverse range of inputs while retaining the model’s response preferences in both the single-turn instruction and the multi-turn conversation scenarios.

其中 $\lambda_ {K L}$ 是 $\mathrm{KL}$ 惩罚的超参数, $D_ {K L}$ 为KL惩罚函数。实验中 $\lambda_ {K L}$ 设为0.05。输入查询经过去重处理,并从剩余SFT混合数据中采样。这确保了输入多样性,同时保留了模型在单轮指令和多轮对话场景中的响应偏好。

4 Experiments

4 实验

In this section, we first introduce the training implementation (Section 4.1) and then present the evaluation manners and results including automatic evaluation (Section 4.2) and manual evaluation (Section 4.3).

在本节中,我们首先介绍训练实现(第4.1节),然后展示评估方式及结果,包括自动评估(第4.2节)和人工评估(第4.3节)。

4.1 Training Details

4.1 训练细节

Our model is implemented in PyTorch using the Accelerate 5 and $\mathrm{tr}1\mathrm{x}^{6}$ packages with Bloomz-7b1- mt [15] as the base architecture.7 We leverage ZeRO-3 [17] to distribute the model across 8 A100 GPUs for training. In the supervised fine-tuning process, we set the learning rate, batch size, and maximum context length to $2e-5$ , 128, and 2048, respectively. All models are trained for 3 epochs and weights performed the best on the validation set are saved. During the reinforcement learning process, we only update the parameters of the last two layers. The total number of steps is 16, 000, with a learning rate of $8e-6$ . In addition, to enhance the model’s conversational and instructionfollowing capabilities in the general domain, we have incorporated Chinese instruction data (the Chinese Alpaca dataset [18] and conversation data (ShareGPT8). This enhances the model’s ability to effectively understand and generate responses in various conversational scenarios and accurately follow instructions across different domains.

我们的模型基于Bloomz-7b1-mt[15]架构,采用PyTorch框架并集成Accelerate 5和$\mathrm{tr}1\mathrm{x}^{6}$工具包实现。通过ZeRO-3[17]技术在8块A100 GPU上分布式训练,监督微调阶段设置学习率为$2e-5$、批量大小128、最大上下文长度2048,所有模型训练3个周期并保留验证集表现最佳的权重。强化学习阶段仅更新最后两层的参数,总步数16,000步,学习率设为$8e-6$。为增强通用领域的对话和指令跟随能力,我们融合了中文指令数据(Chinese Alpaca数据集[18])和对话数据(ShareGPT8),显著提升了模型在多轮对话场景下的理解响应能力及跨领域指令执行的准确性。

4.2 Automatic Evaluation

4.2 自动评估

4.2.1 Medical Benchmarks

4.2.1 医疗基准测试

We select three existing Chinese medical QA datasets as examples, namely cMedQA2 [19], webMedQA [20] and Huatuo-26M [21], and compare the results with the existing baselines. cMedQA2 is a publicly available dataset based on Chinese medical questions and answers consisting of 108,000 questions and 203,569 answers. webMedQA is a real-world Chinese medical QA dataset collected from online health consultancy websites consisting of 63,284 questions. Huatuo-26M [21] is the largest Chinese medical QA dataset which has 26M QA pairs from online medical consultation, knowledge bases and encyclopedias.

我们选取了三个现有的中文医疗问答数据集作为示例,即cMedQA2 [19]、webMedQA [20]和Huatuo-26M [21],并将结果与现有基线进行比较。cMedQA2是一个公开的中文医疗问答数据集,包含108,000个问题和203,569个答案。webMedQA是从在线健康咨询网站收集的真实世界中文医疗问答数据集,包含63,284个问题。Huatuo-26M [21]是最大的中文医疗问答数据集,包含来自在线医疗咨询、知识库和百科全书的2600万问答对。

| Dataset | Model | BLEU-1 BLEU-2 BLEU-3 BLEU-4 GLEU ROUGE-1 | |||||||||

| cMedQA2 | GPT-3.5-turbo | 19.21 | 7.43 | 3.14 | 1.24 | 5.06 | 20.13 | 3.10 | 12.57 | 0.69 | 0.99 |

| T5 (fine-tuned) | 20.88 | 11.87 | 7.69 | 5.09 | 7.62 | 27.16 | 9.30 | 20.11 | 0.41 | 0.52 | |

| HuatuoGPT | 25.37 | 13.16 | 7.39 | 4.25 | 8.30 | 27.75 | 7.31 | 17.36 | 0.74 | 0.93 | |

| webMedQA | GPT-3.5-turbo | 18.06 | 6.74 | 2.73 | 1.09 | 4.71 | 20.01 | 2.81 | 12.58 | 0.65 | 0.87 |

| T5 (fine-tuned) | 21.42 | 13.79 | 10.06 | 7.38 | 8.94 | 31.00 | 13.85 | 25.78 | 0.37 | 0.46 | |

| HuatuoGPT | 24.61 | 12.84 | 7.23 | 4.19 | 7.73 | 27.38 | 7.09 | 17.66 | 0.71 | 0.93 | |

| GPT-3.5-turbo | 18.44 | 6.95 | 2.87 | 1.13 | 4.87 | 19.60 | 2.82 | 12.46 | 0.69 | 0.89 | |

| T5 (fine-tuned) HuatuoGPT | 26.63 25.16 | 16.74 13.21 | 11.77 7.54 | 8.46 4.40 | 11.38 8.37 | 33.21 27.76 | 13.26 7.45 | 24.85 17.99 | 0.51 0.73 | 0.68 0.93 | |

Table 4: Benchmark on Chinese medical QA dataset [21]. GPT-3.5-turbo (ChatGPT) and HuatuoGPT are zero-shot while T5 is finetuned.

| 数据集 | 模型 | BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | GLEU | ROUGE-1 | ROUGE-2 | ROUGE-L | METEOR | CIDEr |

|---|---|---|---|---|---|---|---|---|---|---|---|

| cMedQA2 | GPT-3.5-turbo | 19.21 | 7.43 | 3.14 | 1.24 | 5.06 | 20.13 | 3.10 | 12.57 | 0.69 | 0.99 |

| T5 (fine-tuned) | 20.88 | 11.87 | 7.69 | 5.09 | 7.62 | 27.16 | 9.30 | 20.11 | 0.41 | 0.52 | |

| HuatuoGPT | 25.37 | 13.16 | 7.39 | 4.25 | 8.30 | 27.75 | 7.31 | 17.36 | 0.74 | 0.93 | |

| webMedQA | GPT-3.5-turbo | 18.06 | 6.74 | 2.73 | 1.09 | 4.71 | 20.01 | 2.81 | 12.58 | 0.65 | 0.87 |

| T5 (fine-tuned) | 21.42 | 13.79 | 10.06 | 7.38 | 8.94 | 31.00 | 13.85 | 25.78 | 0.37 | 0.46 | |

| HuatuoGPT | 24.61 | 12.84 | 7.23 | 4.19 | 7.73 | 27.38 | 7.09 | 17.66 | 0.71 | 0.93 | |

| GPT-3.5-turbo | 18.44 | 6.95 | 2.87 | 1.13 | 4.87 | 19.60 | 2.82 | 12.46 | 0.69 | 0.89 | |

| T5 (fine-tuned) | 26.63 | 16.74 | 11.77 | 8.46 | 11.38 | 33.21 | 13.26 | 24.85 | 0.51 | 0.68 | |

| HuatuoGPT | 25.16 | 13.21 | 7.54 | 4.40 | 8.37 | 27.76 | 7.45 | 17.99 | 0.73 | 0.93 |

表 4: 中文医疗问答数据集基准测试 [21]。GPT-3.5-turbo (ChatGPT) 和 HuatuoGPT 采用零样本方式,T5 为微调模型。

Evaluation Metrics Following the previous works [21], we utilize evaluation metrics such as BLEU, ROUGE, GLEU, and Distinct. BLEU computes the $\mathbf{k}$ -gram overlap between generated and reference sentences to measure similarity. ROUGE-N assesses the N-gram overlap, and ROUGE-L gauges the longest common sub sequence of word matches. GLEU auto-evaluates sentence-level fluency. Distinct-1/2 aids in assessing textual diversity of the generated response by determining distinct n-grams count. However, these reference-based metrics may not suit medical QA scenarios due to diverse potential reference answers; more sound metrics should be paid more attention.

评估指标

遵循先前工作[21],我们采用BLEU、ROUGE、GLEU和Distinct等评估指标。BLEU通过计算生成句与参考句之间的$\mathbf{k}$-gram重叠度来衡量相似性。ROUGE-N评估N-gram重叠率,ROUGE-L则衡量最长公共子序列匹配。GLEU自动评估句子流畅度。Distinct-1/2通过统计独特n-gram数量来评估生成响应的文本多样性。然而,这些基于参考的指标可能不适用于医疗QA场景,因其存在多样化的潜在参考答案;更合理的指标值得重点关注。

Baselines We compare our model to the best reported zero-shot model ChatGPT (GPT-3.5-turbo) and an in-domain fine-tuned model Chinese $\mathbf{T5}^{\mathrm{ \tiny { 2 } }}$ respectively, which is continuously trained for 1 epoch on the full training set using batch-size 8, with a learning rate of $10^{-4}$ using Adam, linear scheduling with a warm-up rate of 0.1.

基线模型

我们将自己的模型与目前表现最佳的零样本模型ChatGPT (GPT-3.5-turbo)以及领域内微调模型Chinese $\mathbf{T5}^{\mathrm{ \tiny { 2 } }}$进行对比。该微调模型采用Adam优化器($10^{-4}$学习率)、线性调度(0.1预热比例),以批次大小8在全量训练集上持续训练了1个epoch。

Results HuatuoGPT demonstrates impressive performance across various Chinese medical benchmarks, achieves consistently high scores across all metrics, and demonstrates a high level of accuracy, fluency, and diversity in its generated responses. In cMedQA2 and webMedQA, HuatuoGPT even outperforms fine-tuned T5, suggesting that it has a robust generalization capability and is able to effectively handle a wide range of medical question-answering tasks.

结果

HuatuoGPT 在多个中文医学基准测试中展现出令人印象深刻的表现,在所有指标上均取得了一致的高分,并在生成回答中表现出高度的准确性、流畅性和多样性。在 cMedQA2 和 webMedQA 上,HuatuoGPT 甚至优于经过微调的 T5,这表明它具有强大的泛化能力,能够有效处理广泛的医学问答任务。

4.2.2 Evaluation with GPT4

4.2.2 基于GPT4的评估

We conduct an automated evaluation on single-turn questions with different intents and multiturn conversations from different departments to observe the performance of the model in various scenarios.

我们对不同意图的单轮问题和不同部门的多轮对话进行了自动化评估,以观察模型在各种场景下的表现。

Evaluation dataset For the single-turn questions, we extract 100 questions representing 10 intents (condition diagnosis, e tio logical analysis, treatment plan, medical advice, indicators interpretation, disease description, consequences description, precautions, efficacy, medical expenses) from the validation set of the Knowledge-based Universal Automated Knowledge Extraction for Query Intent Classification (KUAKE-QIC) in Chinese Biomedical Language Understanding Evaluation (CBLUE [22])10. KUAKE-QIC is collected from search engine queries, which makes it suitable for single-turn questions. To filter the noisy data, these questions were initially scored by ChatGPT, and a manual filtering process was conducted to select higher quality candidate questions for the test set. For the multi-turn questions, we used the patient cases from [8]. We selected 20 departments and randomly sampled 5 patient cases from each department, resulting in a total of 100 real patient cases. These cases were provided to ChatGPT, which played the role of the patient, interacting with each doctor model to obtain the diagnosis results.

评估数据集

对于单轮问题,我们从中文生物医学语言理解评估(CBLUE [22])的基于知识的通用自动知识抽取查询意图分类(KUAKE-QIC)验证集中提取了代表10种意图(病情诊断、病因分析、治疗方案、就医建议、指标解读、疾病描述、后果描述、注意事项、疗效、医疗费用)的100个问题。KUAKE-QIC收集自搜索引擎查询,因此适合单轮问题。为过滤噪声数据,这些问题先由ChatGPT评分,再通过人工筛选流程选出更高质量的候选问题作为测试集。

对于多轮问题,我们采用[8]中的患者病例。选取20个科室,每个科室随机抽样5个患者病例,共获得100个真实患者病例。这些病例提供给ChatGPT,由其扮演患者角色与每个医生模型交互以获得诊断结果。

Evaluation aspects and metrics We use GPT-4 as the referees to review the quality of model outputs. We prompt it to consider doctor-like language, symptom inquiry capability, the effect and reliability of the treatment recommendations and prescriptions, and the helpfulness to the patient. Given the question and the corresponding two answers from two models, GPT-4 is asked to first compare the advantages of each output and analyze the helpfulness to the patient, then it is requested to provide a score to each response respectively. In this way, we can get the evaluation scores of the 100 questions for each model comparison pair. We take the average scores over all the questions and calculate the performance ratio for each compared model (i.e. the overall score of the compared model divided by that of HuatuoGPT in a comparison pair).

评估维度与指标

我们使用GPT-4作为评审员来评估模型输出的质量。提示其从医生般的语言表达、症状询问能力、治疗建议与处方的效果及可靠性、以及对患者的帮助性等维度进行评判。给定问题及两个模型的对应回答后,GPT-4需先分析每个输出的优势及其对患者的实际帮助,再分别对两个回答进行评分。通过这种方式,我们可获得每个模型对比组在100个问题上的评分结果。最终计算所有问题的平均分,并得出被对比模型的性能比率(即对比组中被对比模型总分与HuatuoGPT总分的比值)。

Baselines We mainly compare HuatuoGPT to the two most popular general models ChatGPT and GPT4 11, and the two most representative open-source Chinese medical large language models: BenTsao (tuned from LLaMA)12, DoctorGLM (tuned from ChatGLM)13. For single-turn questions evaluation, we compare to all the mentioned four models. For multi-turn conversations evaluation, we only compare our model to DoctorGLM and GPT-3.5-turbo due to the quote limit of GPT-4. We report the performance ratio of all models over all single-turn questions and multi-turn conversations respectively.

基线模型

我们主要将华佗GPT与两款最受欢迎的通用模型ChatGPT和GPT4[11]以及两款最具代表性的开源中文医疗大语言模型进行比较:本草(基于LLaMA微调)[12]、DoctorGLM(基于ChatGLM微调)[13]。在单轮问答评估中,我们与上述四款模型均进行对比。在多轮对话评估中,由于GPT-4的调用限制,我们仅将模型与DoctorGLM和GPT-3.5-turbo进行对比。我们分别报告了所有模型在单轮问答和多轮对话场景下的性能比率。

| Category | HuatuoGPTv.s.BenTsao | HuatuoGPTv.s.DoctorGLM | HuatuoGPTv.s.ChatGPT | HuatuoGPTv.s.GPT-4 | ||

| Efficacy | 8.60 | 2.40 | 8.30 4.15 | 7.50 | 7.45 | 7.00 7.05 |

| Medical Expenses | 8.50 | 3.30 | 4.10 | 8.05 | 6.65 | 7.45 |

| Consequences Description | 8.70 | 4.00 | 3.60 | 7.50 | 7.20 | 8.65 |

| MedicalAdvice | 8.60 | 3.90 | 3.50 | 7.25 | 8.05 6.65 | 9.00 |

| IndicatorsInterpretation | 8.60 | 3.40 | 4.50 | 8.55 | 6.50 | 8.30 |

| TreatmentPlan | 8.90 | 3.40 | 4.00 | 7.45 | 8.30 | 8.95 |

| Precautions | 9.00 | 4.20 | 4.80 | 7.70 | 8.25 | 8.45 |

| DiseaseDescription | 8.60 | 2.70 | 3.80 | 7.75 | 7.65 7.45 | 8.15 |

| Etiological Analysis | 8.70 | 3.40 | 3.40 | 7.65 | 8.20 7.00 | 8.50 |

| ConditionDiagnosis | 8.60 | 4.05 | 5.05 | 7.90 | 7.55 | 8.10 |

| Overall | 8.68 | 3.47 | 8.56 4.09 | 7.73 | 7.64 | 7.30 7.06 8.26 |

Table 5: Scores for each category and the overall scores of each model pair on 100 single-turn questions reviewed by GPT-4.

| 类别 | 华佗GPT vs. 本草 | 华佗GPT vs. DoctorGLM | 华佗GPT vs. ChatGPT | 华佗GPT vs. GPT-4 | ||

|---|---|---|---|---|---|---|

| 疗效 (Efficacy) | 8.60 | 2.40 | 8.30 4.15 | 7.50 | 7.45 | 7.00 7.05 |

| 医疗费用 (Medical Expenses) | 8.50 | 3.30 | 4.10 | 8.05 | 6.65 | 7.45 |

| 后果描述 (Consequences Description) | 8.70 | 4.00 | 3.60 | 7.50 | 7.20 | 8.65 |

| 医疗建议 (Medical Advice) | 8.60 | 3.90 | 3.50 | 7.25 | 8.05 6.65 | 9.00 |

| 指标解读 (Indicators Interpretation) | 8.60 | 3.40 | 4.50 | 8.55 | 6.50 | 8.30 |

| 治疗方案 (Treatment Plan) | 8.90 | 3.40 | 4.00 | 7.45 | 8.30 | 8.95 |

| 注意事项 (Precautions) | 9.00 | 4.20 | 4.80 | 7.70 | 8.25 | 8.45 |

| 疾病描述 (Disease Description) | 8.60 | 2.70 | 3.80 | 7.75 | 7.65 7.45 | 8.15 |

| 病因分析 (Etiological Analysis) | 8.70 | 3.40 | 3.40 | 7.65 | 8.20 7.00 | 8.50 |

| 病情诊断 (Condition Diagnosis) | 8.60 | 4.05 | 5.05 | 7.90 | 7.55 | 8.10 |

| 总体 (Overall) | 8.68 | 3.47 | 8.56 4.09 | 7.73 | 7.64 | 7.30 7.06 8.26 |

表 5: GPT-4 评估的 100 个单轮问题中各模型对各类别及总体的评分。

| Category | HuatuoGPT v.s. DoctorGLM | HuatuoGPTv.s.ChatGPT |

| Traditional Chinese Medicine | 8.80 5.70 | 8.60 8.30 |

| Obstetrics | 9.00 5.20 | 8.90 7.50 |

| Pediatrics | 8.90 6.50 | 8.50 7.80 |

| Internal Medicine | 8.80 5.60 | 8.70 7.70 |

| Stomatology | 8.60 6.60 | 8.30 8.40 |

| Surgery | 8.00 5.20 | 8.60 7.60 |

| Obstetrics and Gynecology | 8.70 5.80 | 8.60 7.60 |

| Gynecology | 8.50 6.40 | 8.10 8.00 |

| CardiovascularMedicine | 8.60 5.20 | 8.40 8.00 |

| General Surgery | 9.00 5.00 | 8.80 7.70 |

| Urology | 8.70 5.80 | 8.50 7.40 |

| Gastroenterology | 8.70 5.50 | 8.70 7.60 |

| Andrology | 8.90 5.20 | 8.20 7.50 |

| Dermatology andVenereology | 8.70 5.80 | 8.40 7.60 |

| Dermatology | 8.80 5.30 | 7.90 7.70 |

| Ophthalmology | 8.50 6.00 | 7.60 8.40 |

| Neurology | 8.80 5.90 | 9.00 8.10 |

| Neurosurgery | 8.80 4.20 | 8.10 8.40 |

| Psychiatry | 8.70 5.80 | 8.20 7.70 |

| Orthopedics | 8.80 5.90 | 8.30 8.00 |

| Overall | 8.72 5.63 | 8.42 7.85 |

Table 6: Scores for each category and the overall scores of each model pair on 100 multi-turn dialogues reviewed by GPT-4.

| 类别 | 华佗GPT vs DoctorGLM | 华佗GPT vs ChatGPT |

|---|---|---|

| 中医 | 8.80 5.70 | 8.60 8.30 |

| 产科 | 9.00 5.20 | 8.90 7.50 |

| 儿科 | 8.90 6.50 | 8.50 7.80 |

| 内科 | 8.80 5.60 | 8.70 7.70 |

| 口腔科 | 8.60 6.60 | 8.30 8.40 |

| 外科 | 8.00 5.20 | 8.60 7.60 |

| 妇产科 | 8.70 5.80 | 8.60 7.60 |

| 妇科 | 8.50 6.40 | 8.10 8.00 |

| 心血管科 | 8.60 5.20 | 8.40 8.00 |

| 普外科 | 9.00 5.00 | 8.80 7.70 |

| 泌尿科 | 8.70 5.80 | 8.50 7.40 |

| 消化科 | 8.70 5.50 | 8.70 7.60 |

| 男科 | 8.90 5.20 | 8.20 7.50 |

| 皮肤性病科 | 8.70 5.80 | 8.40 7.60 |

| 皮肤科 | 8.80 5.30 | 7.90 7.70 |

| 眼科 | 8.50 6.00 | 7.60 8.40 |

| 神经科 | 8.80 5.90 | 9.00 8.10 |

| 神经外科 | 8.80 4.20 | 8.10 8.40 |

| 精神科 | 8.70 5.80 | 8.20 7.70 |

| 骨科 | 8.80 5.90 | 8.30 8.00 |

| 总体 | 8.72 5.63 | 8.42 7.85 |

表 6: GPT-4 评估的 100 轮多轮对话中各模型对各类别及总体的评分。

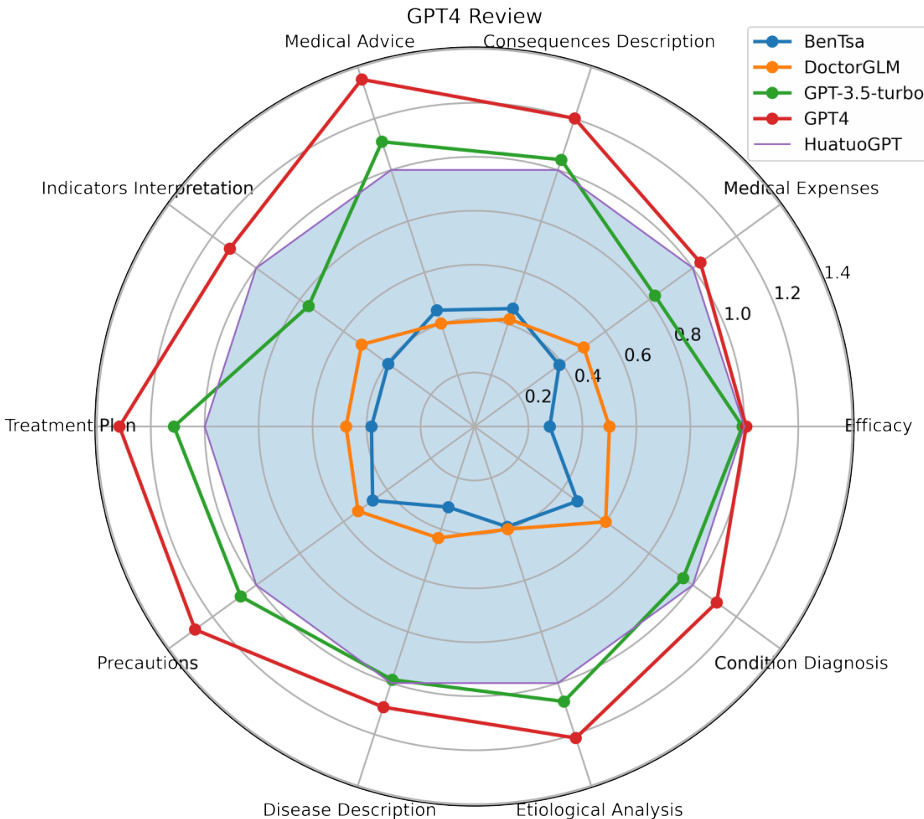

Results For the single-turn questions evaluation, all the model performance results are shown in Table 5. The comparison among models for each category is shown in Figure 3 and the comparison among their overall performance is shown in Figure 4, where the performance of HuatuoGPT is set to 1.0. According to GPT-4, HuatuoGPT is much better than BenTsao and DoctorGPT in all categories. Compared to GPT-3.5-turbo, HuatuoGPT outperforms it in three categories (Indicators Interpretation, Condition Diagnosis, and Medical Expenses) and performs similarly to it in two categories (Efficacy and Disease Description). However, HuatuoGPT is still worse than GPT4 in almost all categories, where it attains similar performance to GPT4 in two categories (Efficacy and Medical Expenses). Overall, HuatuoGPT achieves higher scores than DoctorGLM, BenTsao, and GPT-3.5-turbo. For the multi-turn conversations evaluation, similarly, the overall performance of HuatuoGPT surpasses GPT-3.5-turbo in over $60%$ of cases. The comparison for each category and for overall performance are shown in Table 6, Figure 5, and Figure 6 respectively.

结果

在单轮问题评估中,所有模型的性能结果如表 5 所示。各模型在不同类别中的对比见图 3,整体性能对比见图 4(以华佗GPT的性能为1.0基准)。根据GPT-4的评估,华佗GPT在所有类别中均显著优于BenTsao和DoctorGPT。与GPT-3.5-turbo相比,华佗GPT在三个类别(指标解读、病情诊断和医疗费用)上表现更优,在两个类别(疗效和疾病描述)上表现相近。但华佗GPT在几乎所有类别中仍落后于GPT4,仅在两类别(疗效和医疗费用)达到相近水平。总体而言,华佗GPT的得分高于DoctorGLM、BenTsao和GPT-3.5-turbo。

在多轮对话评估中,华佗GPT的整体表现同样在超过$60%$的案例中优于GPT-3.5-turbo。各类别及整体性能对比分别见表 6、图 5 和图 6。

Figure 3: The model performance ratio for each category on 100 single-turn questions, reviewed by GPT4. The performance of HuatuoGPT is set to 1.0.

图 3: GPT4 评估的 100 个单轮问题中各类别模型性能比率 (HuatuoGPT 性能基准设为 1.0)

4.3 Manual Evaluation

4.3 人工评估

4.3.1 Evaluation dataset and metrics

4.3.1 评估数据集与指标

We utilize the 100 KUAKE-QIC questions (the same as those in automated evaluation) as the test set for single-turn question evaluation and randomly sample 50 patient cases from 100 test cases used in automated evaluation for multi-turn conversations manually evaluation.

我们利用100个KUAKE-QIC问题(与自动评估中的相同)作为单轮问题评估的测试集,并从自动评估使用的100个测试案例中随机抽取50个患者案例进行多轮对话人工评估。

In the manual evaluation of the HuatuoGPT, we think that the following three aspects should be considered, particularly in medical consultation and medication prescription and take them as the guidelines for evaluation:

在评估HuatuoGPT时,我们认为应重点关注以下三个方面,特别是在医疗咨询和药物处方场景中,并将其作为评估准则:

Diagnosis accuracy. This aspect evaluates the model’s accuracy and comprehensiveness in diagnosing patient symptoms. Evaluators are provided a set of medical cases or symptom descriptions and assess the correctness, relevance, and reasonableness of the model’s diagnosis. Comparisons can be made with assessments made by medical professionals to ensure the model’s accuracy.

诊断准确性。该方面评估模型在诊断患者症状时的准确性和全面性。评估者会获得一组医疗案例或症状描述,并评判模型诊断的正确性、相关性和合理性。可与医疗专业人员的评估结果进行对比,以确保模型的准确性。

Treatment recommendation accuracy. This aspect assesses the accuracy and appropriateness of the model’s treatment recommendations for patients. Evaluators are provided a set of medical cases or symptom descriptions and evaluate whether the model’s treatment recommendations align with medical knowledge and real-world applications that are effective and reliable to the patient’s main condition and problem.

治疗推荐准确性。该方面评估模型对患者治疗建议的准确性和适当性。评估者会获得一组医疗案例或症状描述,并判断模型的治疗建议是否符合医学知识及实际应用,是否针对患者主要病情和问题提供有效可靠的治疗方案。

Figure 4: The overall model performance ratio on 100 single-turn questions, reviewed by GPT-4. The performance of HuatuoGPT is set to $100%$ . Table 7: Manual evaluation of model performance in single-turn medical consultations.

图 4: 在100个单轮问题上由GPT-4审核的整体模型性能比率。以HuatuoGPT的性能为基准设为$100%$。

表 7: 单轮医疗咨询中模型性能的人工评估。

Medication knowledge and prescription accuracy. This aspect evaluates the model’s understanding of medications and the accuracy of its prescription recommendations. Evaluators are provided a set of medical cases or symptom descriptions and assess the accuracy and reliability of the medication recommendations based on medical knowledge and guidelines.

药物知识与处方准确性。该方面评估模型对药物的理解及其处方推荐的准确性。评估者会获得一组医疗案例或症状描述,并根据医学知识和指南评估药物推荐的准确性与可靠性。

We provide physicians with above considerations, enabling them to align their evaluation guidelines. This allows for a meticulous comparison of the good and bad outputs of different models for the same scenario.

我们为医生提供上述考量因素,使其能够调整评估标准。这样可以对同一场景下不同模型输出的优劣进行细致对比。

During evaluation, medical experts are asked to provide assessments on different responses. Each physician is solely responsible for evaluating the output of a single pair of models, ensuring that each response data is scrambled and anonymized with the utmost strictness. Consistent with automatic evaluation, we take BenTsao, DoctorGLM, ChatGPT and GPT4 as the baselines in single-turn question evaluation and select DoctorGLM, ChatGPT as the baselines in multi-turn conversation evaluation.

在评估过程中,我们邀请医学专家对不同回答进行评分。每位医生仅负责评估一对模型的输出结果,确保所有应答数据均经过严格打乱和匿名化处理。与自动评估保持一致,我们在单轮问答评估中以BenTsao、DoctorGLM、ChatGPT和GPT4作为基线模型,在多轮对话评估中选择DoctorGLM和ChatGPT作为基线。

4.3.2 Results

4.3.2 结果

As shown in Table 7, HuatuoGPT performs exceptionally well against BenTsao, DoctorGLM, and it even slightly outperforms ChatGPT, highlighting its robust diagnosis accuracy, treatment recommendations, and medication knowledge. The results of multi-turn evaluation are shown in Table 8, which reveals that HuatuoGPT excels in extended dialogue contexts, evidenced by an $86%$ win rate against DoctorGLM and $58%$ against ChatGPT. It indicates that HuatuoGPT has a more prominent interactive diagnostic capability in patient consultation scenarios.

如表7所示,华佗GPT在诊断准确性、治疗建议和药物知识方面表现尤为出色,不仅显著优于BenTsao和DoctorGLM,甚至略微超越ChatGPT。多轮评估结果如表8所示,数据显示华佗GPT在长对话场景中优势明显:对DoctorGLM的胜率达86%,对ChatGPT的胜率为58%,这表明华佗GPT在患者咨询场景中具备更突出的交互式诊断能力。

| Comparison | Win | Lose |

| HuatuoGPT vsBenTsao | 100% | %0 |

| HuatuoGPT VS DoctorGLM | 98% | 2% |

| HuatuoGPTvs( ChatGPT | 52% | 48% |

| HuatuoGPTvs GPT-4 | 10.5% | 89.5% |

| 对比 | 胜率 | 败率 |

|---|---|---|

| 华佗GPT vs 本草模型 | 100% | 0% |

| 华佗GPT vs DoctorGLM | 98% | 2% |

| 华佗GPT vs ChatGPT | 52% | 48% |

| 华佗GPT vs GPT-4 | 10.5% | 89.5% |

Figure 5: The model performance ratio for each category on 100 multi-turn dialogues, reviewed by GPT4. The performance of HuatuoGPT is set to 1.0. Table 8: Manual evaluation of model performance in multi-turn medical consultations.

图 5: GPT4 评估的 100 轮多轮对话中各类型模型性能比率 (HuatuoGPT 性能基准设为 1.0)

表 8: 多轮医疗咨询场景下的模型性能人工评估结果

| Comparison | Win | Lose |

| HuatuoGPT VS DoctorGLM | 86% | 14% |

| HuatuoGPT VS ChatGPT | 58% | 42% |

| 对比 | 胜率 | 负率 |

|---|---|---|

| HuatuoGPT VS DoctorGLM | 86% | 14% |

| HuatuoGPT VS ChatGPT | 58% | 42% |

5 Discussion

5 讨论

5.1 Ablation Study

5.1 消融实验

In this section, we explore the impact of two types of data on the model. We trained two distinct models, namely HuatuoGPT (w/ real data) and HuatuoGPT (w/ distilled data), using exclusively real-world data or distilled data, respectively. We thoroughly compare the variations in responses between the two models for the same set of questions as shown in Table 9. HuatuoGPT (w/ real data) has a tendency to ask clarifying questions to patients, performing as expected, similar to a doctor. However, a minor flaw is that the response is brief and the content appears less well-organized for reading. On the other hand, HuatuoGPT (w/ distilled data) generates well-organized, detailed, and informative content. Nevertheless, its responses are more focused on providing suggestions rather than making a diagnostic decision. Thus, HuatuoGPT (w/ distilled data) resembles a "non-doctor friend" rather than a doctor.

在本节中,我们探讨了两种数据类型对模型的影响。我们分别使用纯真实数据和蒸馏数据训练了两个独立模型:华佗GPT(真实数据版)和华佗GPT(蒸馏数据版)。如表9所示,我们全面对比了两个模型对同一组问题的回答差异。华佗GPT(真实数据版)倾向于像医生一样询问患者以澄清问题,表现符合预期,但存在回应较简短、内容组织性稍弱的不足。相比之下,华佗GPT(蒸馏数据版)能生成结构清晰、细节丰富且信息量大的内容,但其回答更侧重于提供建议而非做出诊断决策,因此更像一位"非医生朋友"而非专业医师。

To assess the impact of RLAIF (Reinforced Learning with Auxiliary Information Feedback), we also compare two models: the default model called HuatuoGPT and a variant called HuatuoGPT (w/o RLAIF) which does not utilize RLAIF. It is worth noting that the latter model, HuatuoGPT (w/o RLAIF), did not ask additional questions to patients. This might be attributed to the fact that its training data could be biased towards the ChatGPT data, while real-world data may have been overlooked. In contrast, our default model, HuatuoGPT with RLAIF, can function like a doctor by asking follow-up questions to patients to get more accurate diagnoses.

为了评估RLAIF(带辅助信息反馈的强化学习)的影响,我们还比较了两个模型:默认模型HuatuoGPT和不使用RLAIF的变体HuatuoGPT (w/o RLAIF)。值得注意的是,后者HuatuoGPT (w/o RLAIF)没有向患者提出额外问题。这可能是因为其训练数据偏向ChatGPT数据,而忽略了真实世界数据。相比之下,我们的默认模型HuatuoGPT(带RLAIF)能像医生一样通过追问患者来获得更准确的诊断。

Figure 6: The overall model performance ratio on 100 multi-turn dialogues, reviewed by GPT-4. The performance of HuatuoGPT is set to $100%$ .

图 6: 基于 GPT-4 评估的 100 轮多轮对话整体模型性能比率 (HuatuoGPT 性能设为 $100%$)

5.2 Limitation

5.2 局限性

We emphasize the potential risks associated with generation-based medical consultation. The main concern lies in the challenge of verifying the accuracy and correctness of the generated content. In the medical domain, the dissemination of misleading information can have severe ethical implications. Although generative QA has shown promise, especially with the success of models like ChatGPT, they are not yet fully prepared for real-world deployment in the biomedical domain.

我们强调基于生成的医疗咨询存在的潜在风险。主要问题在于验证生成内容的准确性和正确性存在挑战。在医疗领域,传播误导性信息可能引发严重的伦理问题。尽管生成式问答(Generative QA)已展现出前景,特别是像ChatGPT这样的模型取得成功,但它们尚未完全准备好应用于生物医学领域的实际部署。

While generation methods currently hold great potential, it is important to exercise caution and prudence before deploying them in real-world applications. Further research and development are necessary to refine these models, enhance their accuracy, and establish robust mechanisms for accurateness-checking and error correction. Only through careful scrutiny and continual improvement can we minimize the risks and ethical concerns associated with generation-based medical QA.

虽然生成方法目前具有巨大潜力,但在实际应用中部署前仍需保持谨慎态度。需要通过进一步研发来完善这些模型、提升其准确性,并建立可靠的准确性核查与纠错机制。只有通过严格审查和持续改进,才能最大限度降低基于生成的医疗问答系统相关风险与伦理问题。

6 LLMs in Medicine

6 大语言模型在医学中的应用

The language model in the medical field has always been a concern for researchers. The early models were mainly based on the GPT-2 series models to continue pre-training in the domain. BioMedLM14 is a domain-specific large language model for bio medicine, trained from 2.7B GPT-2. It is trained on the PubMed Abstracts and PubMed Central portions of the Pile dataset, which contains around 50B tokens and spans a collection of 16 million abstracts and 5 million full-text articles from the biomedical literature. Similarly, BioGPT [23] is a medium GPT-2 model pre-training in medical data collected from the official PubMed website 15. For downstream tasks, it uses the soft prompt for fine-tuning training.

医学领域的语言模型一直是研究者关注的焦点。早期模型主要基于GPT-2系列模型进行领域内继续预训练。BioMedLM14是专注于生物医学的领域专用大语言模型,基于27亿参数的GPT-2训练而成。其训练数据来自Pile数据集中的PubMed摘要和PubMed Central部分,包含约500亿token,涵盖1600万篇生物医学文献摘要及500万篇全文。类似地,BioGPT[23]是基于中等规模GPT-2模型,在从PubMed官网收集的医学数据上进行预训练。针对下游任务,该模型采用软提示(soft prompt)进行微调训练。

Recently, many efforts have attempted to use instruction fine-tuning to enhance the ability for medical consultation on large-scale language models $(>6\mathrm{B})$ , as shown in Table 2. MEDALPACA[24]16 is a LLaMA model trained on the Medical Meadow, consisting of two main categories, a collection of established medical NLP tasks reformatted in instruction tuning formats, as well as a crawl of various internet resources. ChatDoctor $[25]^{17}$ is also a medical LLM trained on the HealthCare Magic-100k

近期,许多研究尝试通过指令微调来增强大规模语言模型(>6B)的医疗咨询能力,如表 2 所示。MEDALPACA[24]是基于LLaMA模型在Medical Meadow数据集上训练的成果,主要包含两大类数据:一组被重新格式化为指令调优形式的成熟医疗NLP任务集,以及从各类互联网资源爬取的内容。ChatDoctor[25]同样是在HealthCare Magic-100k数据集上训练的医疗大语言模型。

Table 9: Sample responses from four models.

| 我最近手上,手心长小泡,十个手指肚脱皮。想问这是什么病,需要去医院吗? hospital?) |

| HuatuoGPT(w/treal data): 您好,根据您的症状描述,您可能患有湿疹或接触性皮炎等皮肤疾病引起的过敏反应。为了更准确地诊断病情,我需要了解一些 额外的信息。请问这些小泡是否伴有癌痒感?是否有其他部位的皮肤出现类似的症状呢? (Hello, based on the description of your symptoms, you may be suffering from an allergic reaction caused by a skin condition such as eczema or contact dermatitis. In order to diagnose the condition more accurately, I need to know some additional information. Are these small blisters accompanied by itching?Are there any other areas of the skin that show similar symptoms?) HuatuoGPT (w/t distilled data): |

| 在等待就医期间,您可以采取以下措施缓解症状: 1.保持双手清洁干燥。 2.避免使用含有刺激性成分或化学物质的洗涤剂或洗手液清洗双手。 3.穿宽松透气的衣服,并尽量避免接触过敏原物质。 4.多饮水,多吃新鲜蔬菜水果以补充维生素和矿物质。 5.注意心理调节,减轻压力。 (Hello, according to the symptoms you describe, it may be an inflammatory skin reaction caused by eczema.However, in order to determine the cause and treatment plan more precisely,we recommend that you visit a hospital.Your doctor can make a definitive diagnosis by carefully While waiting to seek medical attention, you can take the following steps to relieve your symptoms: 1.Keep your hands clean and dry. 2. Avoid washing your hands with detergents or hand sanitizers that contain harsh ingredients or chemicals. |

| HuatuoGPT (w/oRLAIF): 您好,根据您的描述,您可能患有湿疹或者接触性皮肤炎等皮肤病。这种情况通常与皮肤对某些物质过敏有关,例如洗涤剂、化 妆品、金属制品等。 我建议您先避免使用任何可能会引起过敏反应的物品或环境刺激,如香水、洗洁精、化妆品等等。同时注意保持双手卫生清洁干 燥,穿戴柔软透气的手套,避免用手抓挠患处,以免感染加重。 如果症状持续存在并无法缓解,您可以到医院就诊,医生会进一步检查确定病因后给予相应的治疗方案。一般情况下,可以采用 外用药物进行治疗,比如氢化可的松乳膏(尤卓尔),但具体剂量和使用方法需遵医嘱。 (Hello, according to your description, you may be suffering from a skin condition such as eczema or contact dermatitis. This condition is usually I recommend that you first avoid any items or environmental stimuli that may cause allergic reactions, such as perfumes, detergents, cosmetics, etc. Also pay attention to keeping your hands hygienically clean and dry, wear soft and breathable gloves, and avoid scratching the affected area with your hands to avoid aggravating the infection. If the symptoms persist and cannot be relieved, you can go to the hospital,the doctor will further examine to determine the cause of the disease and give the appropriate treatment plan. In general, you can use topical medications for treatment, such as hydrocortisone cream (Euthyrox), but the specific dosage and application methods need to follow medical advice.) |

| HuatuoGPT: 您好,根据您的描述,您可能患有汗疱湿疹。这是一种由病毒感染引起的皮肤病,通常会在皮肤摩擦或受热后出现水疱和癌痒 感。 |

表 9: 四个模型的回答示例。

| 我最近手上,手心长小泡,十个手指肚脱皮。想问这是什么病,需要去医院吗? |

|---|