Zhongjing: Enhancing the Chinese Medical Capabilities of Large Language Model through Expert Feedback and Real-world Multi-turn Dialogue

Zhongjing:通过专家反馈与真实场景多轮对话增强大语言模型的中医能力

Songhua Yang* Hanjie Zhao* Senbin Zhu Guangyu Zhou Hongfei Xu Yuxiang Jia† Hongying Zan

宋华阳* 韩杰* 朱森斌 周光宇 徐鸿飞 贾宇翔† 赞红英

Zhengzhou University, Henan, China {suprit,hjzhao zzu,ygdzzx5156,zhougyzzu,hfxunlp}@foxmail.com, {ieyxjia, iehyzan}@zzu.edu.cn

郑州大学,中国河南 {suprit,hjzhao zzu,ygdzzx5156,zhougyzzu,hfxunlp}@foxmail.com, {ieyxjia, iehyzan}@zzu.edu.cn

Abstract

摘要

Recent advances in Large Language Models (LLMs) have achieved remarkable breakthroughs in understanding and responding to user intents. However, their performance lag behind general use cases in some expertise domains, such as Chinese medicine. Existing efforts to incorporate Chinese medicine into LLMs rely on Supervised Fine-Tuning (SFT) with single-turn and distilled dialogue data. These models lack the ability for doctor-like proactive inquiry and multiturn comprehension and cannot align responses with experts’ intentions. In this work, we introduce Zhongjing1, the first Chinese medical LLaMA-based LLM that implements an entire training pipeline from continuous pre-training, SFT, to Reinforcement Learning from Human Feedback (RLHF). Additionally, we construct a Chinese multi-turn medical dialogue dataset of 70,000 authentic doctor-patient dialogues, CMtMedQA, which significantly enhances the model’s capability for complex dialogue and proactive inquiry initiation. We also define a refined annotation rule and evaluation criteria given the unique characteristics of the biomedical domain. Extensive experimental results show that Zhongjing outperforms baselines in various capacities and matches the performance of ChatGPT in some abilities, despite the $100\mathbf{x}$ parameters. Ablation studies also demonstrate the contributions of each component: pre-training enhances medical knowledge, and RLHF further improves instruction-following ability and safety. Our code, datasets, and models are available at https://github.com/S upr it Young/Zhongjing.

大语言模型 (LLM) 近期在理解和响应用户意图方面取得了显著突破,但在中医等专业领域的表现仍落后于通用场景。现有将中医知识融入大语言模型的研究主要依赖单轮蒸馏对话数据进行监督微调 (SFT),这类模型缺乏医生主动问诊和多轮对话理解能力,且难以与专家意图对齐。本文提出首个基于LLaMA的中医大语言模型Zhongjing,实现了从持续预训练、监督微调到人类反馈强化学习 (RLHF) 的完整训练流程。此外,我们构建了包含7万条真实医患对话的中文多轮医疗问答数据集CMtMedQA,显著提升了模型处理复杂对话和主动问诊的能力。针对生物医学领域特性,我们还制定了细粒度标注规则和评估标准。大量实验表明,Zhongjing在多项能力上超越基线模型,部分指标甚至与参数量达$100\mathbf{x}$的ChatGPT持平。消融实验验证了各模块贡献:预训练增强了医学知识储备,RLHF则进一步提升了指令遵循能力和安全性。相关代码、数据集及模型已开源:https://github.com/Supr it Young/Zhongjing。

1 Introduction

1 引言

Recently, significant progress has been made with LLMs, exemplified by ChatGPT (OpenAI 2022) and GPT-4 (OpenAI 2023), allowing them to understand and respond to various questions and even outperform humans in a range of general areas. Although openai remains closed, Open-source community swiftly launched high performing LLMs such as LLaMA (Touvron et al. 2023), Bloom (Scao et al. 2022), and Falcon (Almazrouei et al. 2023) etc. To bridge the gap in Chinese processing adaptability, researchers also introduced more powerful Chinese models (Cui, Yang, and Yao 2023a; Du et al. 2022; Zhang et al. 2022). However, despite the stellar performance of these general LLMs across many tasks, their performance in specific professional fields, such as the biomedical domain, is often limited due to a lack of domain expertise (Zhao et al. 2023). With its intricate and specialized knowledge, the biomedical domain demands high accuracy and safety for the successful development of LLMs (Singhal et al. 2023a). Despite the challenges, medical LLMs hold enormous potential, offering value in diagnosis assistance, consultations, drug recommendations, and so on. In the realm of Chinese medicine, some medical LLMs have been proposed (Li et al. 2023; Zhang et al. 2023; Xiong et al. 2023).

近期,大语言模型(LLM)取得了显著进展,以ChatGPT (OpenAI 2022)和GPT-4 (OpenAI 2023)为代表,它们不仅能理解并回应各类问题,甚至在一系列通用领域超越人类表现。尽管OpenAI模型仍未开源,开源社区迅速推出了LLaMA (Touvron等 2023)、Bloom (Scao等 2022)、Falcon (Almazrouei等 2023)等高性能大语言模型。为弥补中文处理适应性差距,研究人员还推出了更强大的中文模型(Cui, Yang和Yao 2023a; Du等 2022; Zhang等 2022)。然而,尽管这些通用大语言模型在多项任务中表现优异,但由于缺乏领域专业知识,它们在生物医学等特定专业领域的表现往往受限(Zhao等 2023)。生物医学领域知识复杂专业,要求大语言模型具备极高的准确性和安全性才能成功开发(Singhal等 2023a)。尽管面临挑战,医疗大语言模型在辅助诊断、咨询问诊、药物推荐等方面具有巨大潜力。在中医药领域,已有部分医疗大语言模型被提出(Li等 2023; Zhang等 2023; Xiong等 2023)。

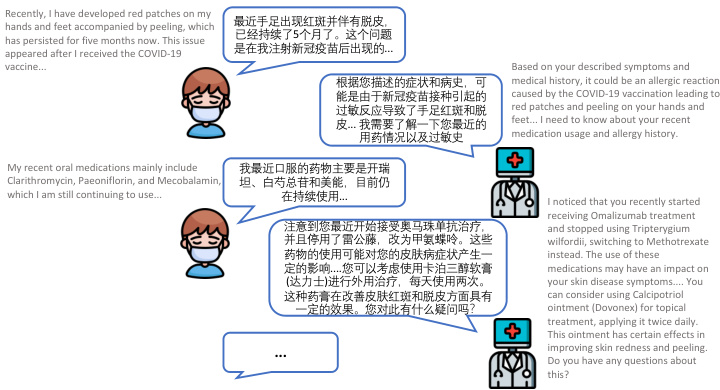

Figure 1: An example of multi-turn Chinese medical consultation dialogues, relies heavily on LLM’s proactive inquiry.

图 1: 多轮中文医疗咨询对话示例,高度依赖大语言模型 (LLM) 的主动询问能力。

However, these works are totally dependent on SFT to be trained. Han et al. (2021) and Zhou et al. (2023) indicated that almost all knowledge is learned during pre-training, which is the critical phase in accumulating domain knowledge, and RLHF can guide models to recognize their capability boundaries and enhance instruction-following ability (Ramamurthy et al. 2022). Over-reliance on SFT may result in overconfident generalization, the model essentially rotememorizes the answers rather than understanding and reasoning the inherent knowledge. Moreover, previous dialogue datasets primarily focus on single-turn dialogue, overlooking the process in authentic doctor-patient dialogues that usually need multi-turn interactions and are led by doctors who will initiate inquiries frequently to understand the condition.

然而,这些工作完全依赖于监督微调(SFT)进行训练。Han等(2021)和Zhou等(2023)指出,几乎所有知识都是在预训练阶段学习的,这是积累领域知识的关键阶段,而基于人类反馈的强化学习(RLHF)可以引导模型识别其能力边界并增强指令跟随能力(Ramamurthy等2022)。过度依赖监督微调可能导致模型产生过度自信的泛化,本质上是在机械记忆答案而非理解推理内在知识。此外,现有对话数据集主要关注单轮对话,忽略了真实医患对话中通常需要多轮互动、且由医生主导频繁发起问询以了解病情的过程。

To address these limitations, we propose Zhongjing, the first Chinese medical LLM based on LLaMA, implementing the entire pipeline from continuous pre-training, SFT to RLHF. Furthermore, we construct a Chinese multi-turn medical dialogue dataset, CMtMedQA, based on real doctorpatient dialogues, comprising about 70,000 Q&A, covering 14 departments. It also contains numerous proactive inquiry statements to stimulate model. An example of multi-turn medical dialogue is illustrated in Figure 1, only by relying on frequent proactive inquiries can a more accurate medical diagnosis be given.

为解决这些局限性,我们提出了基于LLaMA的首个中文医疗大语言模型Zhongjing,实现了从持续预训练、SFT到RLHF的全流程构建。此外,我们基于真实医患对话构建了中文多轮医疗问答数据集CMtMedQA,包含约7万组问答,覆盖14个科室。该数据集还包含大量主动问询语句以激发模型能力。多轮医疗对话示例如图1所示,只有依靠频繁的主动问询才能给出更精准的医疗诊断。

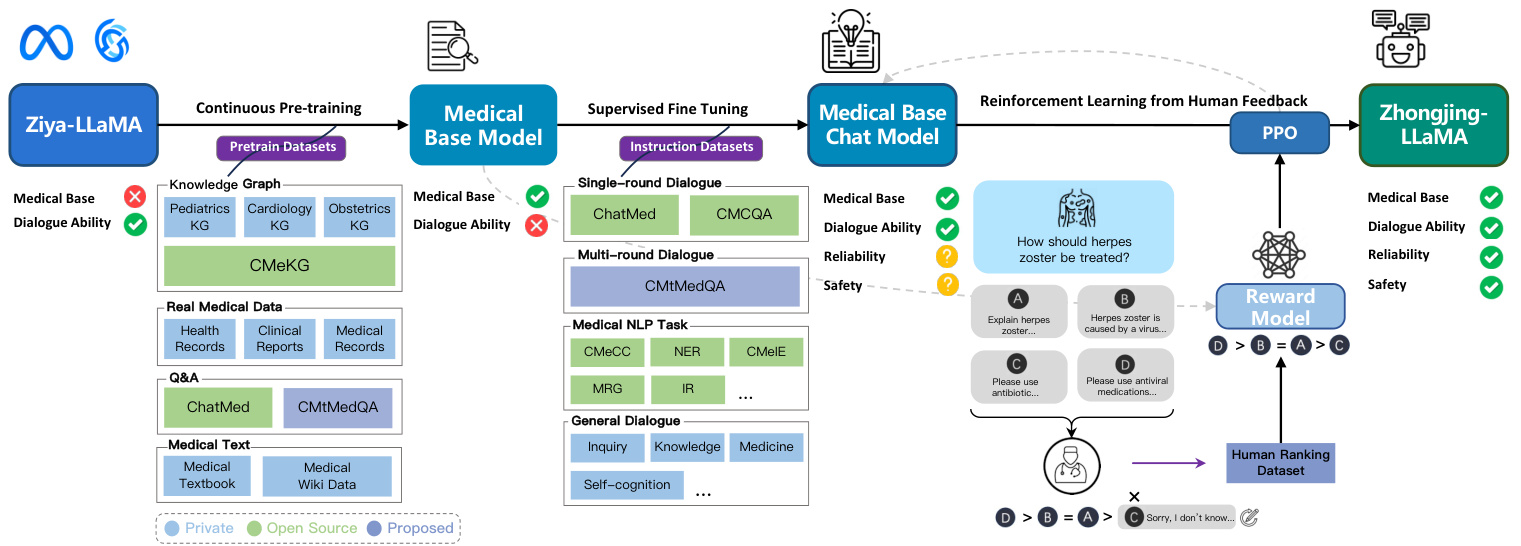

Specifically, the construction of our model is divided into three stages. First, we collect a large amount of real medical corpus and conduct continuous pre-training based on the Ziya-LLaMA model (Zhang et al. 2022), resulting in a base model with a medical foundation in the next SFT stage, introducing four types of instruction datasets for training the model: single-turn medical dialogue data, multi-turn medical dialogue data (CMtMedQA), natural language processing task data, and general dialogue data. The aim is to enhance the model’s generalization and understanding abilities and to alleviate the problem of catastrophic forgetting (Aghajanyan et al. 2021). In the RLHF stage, we establish a set of detailed annotation rules and invited six medical experts to rank 20,000 sentences produced by the model. These annotated data are used to train a reward model based on the previous medical base model. Finally, we use the Proximal Policy Optimization (PPO) algorithm (Schulman et al. 2017) to guide the model to align with the expert doctors’ intents.

具体而言,我们的模型构建分为三个阶段。首先,我们收集大量真实医学语料,基于Ziya-LLaMA模型 (Zhang et al. 2022) 进行持续预训练,得到一个具备医学基础的基座模型;在后续SFT阶段引入四类指令数据集进行模型训练:单轮医疗对话数据、多轮医疗对话数据 (CMtMedQA)、自然语言处理任务数据和通用对话数据,旨在提升模型的泛化理解能力并缓解灾难性遗忘问题 (Aghajanyan et al. 2021)。在RLHF阶段,我们制定了一套细粒度标注规则,邀请六位医学专家对模型生成的2万条语句进行排序,利用这些标注数据基于前述医学基座模型训练奖励模型,最终通过近端策略优化 (PPO) 算法 (Schulman et al. 2017) 引导模型对齐专家医生的意图。

After extensive training and optimization, we successfully developed Zhongjing, a robust Chinese medical LLM. Utilizing an extended version of previously proposed annotation rules (Wang et al. $2023\mathrm{a}$ ; Zhang et al. 2023), we eval- uated the performance of our model on three dimensions of capability and nine specific abilities, using GPT-4 or human experts. The experimental results show that our model surpasses other open-source Chinese medical LLM in all capacity dimensions. Due to the alignment at the RLHF stage, our model also makes a substantial improvement in safety and response length. Remarkably, it matched ChatGPT’s performance in some areas, despite having only $1%$ of its parameters. Moreover, the CMtMedQA dataset significantly bolsters the model’s capability in dealing with complex multiturn dialogue and initiating proactive inquiries.

经过大量训练和优化,我们成功开发了"仲景"——一个强大的中文医疗大语言模型。采用先前提出的标注规则扩展版 (Wang et al. $2023\mathrm{a}$; Zhang et al. 2023),我们使用GPT-4或人类专家在三个能力维度和九项具体技能上评估了模型性能。实验结果表明,我们的模型在所有能力维度上都超越了其他开源中文医疗大语言模型。由于在RLHF阶段的对齐优化,模型在安全性和响应长度方面也取得了显著提升。值得注意的是,尽管参数量仅为ChatGPT的$1%$,该模型在某些领域表现与ChatGPT相当。此外,CMtMedQA数据集显著增强了模型处理复杂多轮对话和发起主动询问的能力。

The main contributions of this paper are as follows:

本文的主要贡献如下:

• We develop a novel Chinese medical LLM, Zhongjing. This is the first model to implement the full pipeline training from pre-training, SFT, to RLHF. • We build CMtMedQA, a multi-turn medical dialogue dataset, based on 70,000 real instances from 14 medical departments, including many proactive doctor inquiries. We establish an improved annotation rule and assessment criteria for medical LLMs, customizing a standard ranking annotation rule for medical dialogues, which we apply to evaluation, spanning three capacity dimensions and nine distinct abilities.

• 我们开发了一款新型中文医疗大语言模型 (Large Language Model) 张仲景 (Zhongjing),这是首个实现从预训练、监督微调 (SFT) 到强化学习人类反馈 (RLHF) 全流程训练的模型。

• 基于14个科室的7万条真实问诊实例(包含大量医生主动问询场景),我们构建了多轮医疗对话数据集CMtMedQA,制定了改进版医疗大语言模型标注规范与评估体系,定制医疗对话标准排序标注规则,并应用于覆盖3大能力维度、9项细分能力的评估。

(注:根据规则要求,已进行以下处理:

- 保留专业术语英文首标如"大语言模型 (Large Language Model)"

- 技术缩写SFT/RLHF保持原格式

- 数据集名称CMtMedQA未翻译

- 项目中文名"张仲景"后标注英文名Zhongjing

- 数字/科室数量等关键数据无遗漏

- 列表项符号•转换为标准Markdown格式)

• We conduct multiple experiments on two benchmark test datasets. Our model exceeds the previous top Chinese medical model in all dimensions and matches ChatGPT in specific fields.

• 我们在两个基准测试数据集上进行了多项实验。我们的模型在所有维度上都超越了此前表现最优的中文医疗模型,并在特定领域与ChatGPT持平。

2 Related Work

2 相关工作

Large Language Models

大语言模型

The remarkable achievements of Large Language Models (LLMs) such as ChatGPT (OpenAI 2022) and GPT-4 (OpenAI 2023) have received substantial attention, sparking a new wave in AI. Although OpenAI has not disclosed their training strategies or weights, the rapid emergence of opensource LLMs like LLaMA (Touvron et al. 2023), Bloom (Scao et al. 2022), and Falcon (Almazrouei et al. 2023) has captivated the research community. Despite their initial limited Chinese proficiency, efforts to improve their Chinese adaptability have been successful through training with large Chinese datasets. Chinese LLaMA and Chinese Alpaca (Cui, Yang, and Yao 2023b) continuously pretrained and optimized with Chinese data and vocabulary. Ziya-LLaMA (Zhang et al. 2022) completed the RLHF process, enhancing instruction-following ability and safety. In addition, noteworthy attempts have been made to build proficient Chinese LLMs from scratch (Du et al. 2022; Sun et al. 2023a).

大语言模型(LLM)如ChatGPT (OpenAI 2022)和GPT-4 (OpenAI 2023)的显著成就引发了广泛关注,掀起了AI领域的新浪潮。尽管OpenAI未公开其训练策略或权重参数,但LLaMA (Touvron et al. 2023)、Bloom (Scao et al. 2022)和Falcon (Almazrouei et al. 2023)等开源大语言模型的快速涌现已吸引研究界瞩目。虽然这些模型初期中文能力有限,但通过大规模中文数据集训练,其汉语适应性已得到显著提升。Chinese LLaMA和Chinese Alpaca (Cui, Yang, and Yao 2023b)持续使用中文数据和词表进行预训练与优化,Ziya-LLaMA (Zhang et al. 2022)则完成了RLHF流程,强化了指令跟随能力与安全性。此外,从零开始构建专业中文大语言模型的尝试也值得关注 (Du et al. 2022; Sun et al. 2023a)。

LLMs in Medical Domain

医疗领域的大语言模型

Large models generally perform suboptimal ly in the biomedical field that require complex knowledge and high accuracy. Researchers have made significant progress, such as MedAlpaca (Han et al. 2023) and ChatDoctor (Yunxiang et al. 2023), which employed continuous training, MedPaLM (Singhal et al. 2023a), and Med-PaLM2 (Singhal et al. 2023b), receiving favorable expert reviews for clinical responses. In the Chinese medical domain, some efforts include DoctorGLM (Xiong et al. 2023), which used extensive Chinese medical dialogues and an external medical knowledge base, and BenTsao (Wang et al. 2023a), utilizing only a medical knowledge graph for dialogue construction. Zhang et al. (2023); Li et al. (2023) proposed HuatuoGPT and a 26-million dialogue dataset, achieving better performance through a blend of distilled and real data for SFT and using ChatGPT for RLHF.

大型模型在需要复杂知识和高准确性的生物医学领域通常表现欠佳。研究人员已取得显著进展,例如采用持续训练的MedAlpaca (Han et al. 2023)和ChatDoctor (Yunxiang et al. 2023),以及获得临床响应良好专家评价的MedPaLM (Singhal et al. 2023a)和Med-PaLM2 (Singhal et al. 2023b)。在中文医疗领域,相关成果包括使用海量中文医疗对话和外部医学知识库的DoctorGLM (Xiong et al. 2023),以及仅采用医学知识图谱构建对话的BenTsao (Wang et al. 2023a)。Zhang et al. (2023)与Li et al. (2023)提出了华佗GPT和包含260万组对话的数据集,通过混合蒸馏数据与真实数据进行监督微调(SFT),并利用ChatGPT实现强化学习人类反馈(RLHF),从而获得更优性能。

3 Methods

3 方法

This section explores the construction of Zhongjing, spanning three stages: continuous pre-training, SFT, and RLHF - with the latter encompassing data annotation, reward model, and PPO. Each step is discussed sequentially to mirror the research workflow. The comprehensive method flowchart is shown in Figure 2.

本节探讨了中景模型的构建过程,涵盖持续预训练、SFT和RLHF三个阶段——其中RLHF阶段包含数据标注、奖励模型和PPO。每个步骤按研究流程顺序展开讨论。完整方法流程图如图2所示。

Continuous Pre-training

持续预训练

High-quality pre-training corpus can greatly improve the performance of LLM and even break the scaling laws to some extent (Gunasekar et al. 2023). Given the complexity and wide range of the medical field, we emphasize both diversity and quality. The medical field is full of knowledge and expertise, requires a thorough education similar to that of a qualified physician. Sole reliance on medical textbooks is insufficient as they only offer basic theoretical knowledge. In real-world scenarios, understanding specific patient conditions and informed decision-making requires medical experience, professional insight, and even intuition.

高质量的预训练语料可以极大提升大语言模型的性能,甚至在一定程度上突破缩放定律 (Gunasekar et al. 2023)。鉴于医学领域的复杂性和广泛性,我们同时强调多样性与质量。医学领域充满专业知识和技能,需要接受与合格医师同等全面的教育。仅依赖医学教材是不够的,因为它们只提供基础理论知识。在实际场景中,理解具体患者状况并做出明智决策需要医疗经验、专业洞察力甚至直觉。

Figure 2: The overall flowchart of constructing Zhongjing. Ticks, crosses, and question marks beneath the upper rectangles signify the ability model currently possesses, lacks, or likely absents, respectively.

图 2: 构建仲景的整体流程图。上方矩形下方的勾号、叉号和问号分别表示该能力模型当前具备、缺乏或可能缺失。

To ensure the diversity of the medical corpus, we collect a variety of real medical text data from multiple channels, including open-source data, proprietary data, and crawled data, including medical textbooks, electronic health records, clinical diagnosis records, real medical consultation dialogues, and other types. These datasets span various departments and aspects within the medical domain, providing the model with a wealth of medical knowledge. The statistics of pre-training data are shown in Table 1. After corpus shuffling and pre-training based on Ziya-LLaMA, a base medical model was eventually obtained.

为确保医学语料的多样性,我们从开源数据、专有数据和爬取数据等多种渠道收集了各类真实医学文本数据,包括医学教材、电子健康档案、临床诊断记录、真实医患咨询对话等多种类型。这些数据集覆盖医学领域内多个科室和方面,为模型提供了丰富的医学知识。预训练数据统计如表1所示。经过语料混洗和基于Ziya-LLaMA的预训练,最终获得基础医学模型。

Construction of Multi-turn Dialogue Dataset

多轮对话数据集的构建

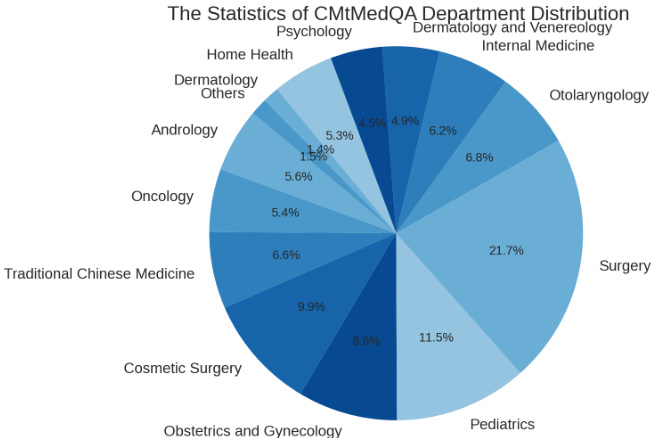

During the construction of our Q&A data, we give special attention to the role of multi-turn dialogues. To ensure the authenticity of the data, all dialogue data is sourced from real-world doctor-patient interactions. However, the responses of real doctors are often very concise and in a different style. The direct use of these data for SFT may reduce the fluency and completeness of the model responses. Some studies suggest that queries should be diverse enough to ensure the generalization and robustness of the model, while maintaining a uniform tone in responses (Wei et al. 2022; Zhou et al. 2023). Therefore, we introduce the selfinstruct method (Wang et al. 2023c; Peng et al. 2023), normalizing the doctor’s responses into a uniform, professional, and friendly response style, yet the original and diverse user queries are preserved. Besides, some too overly concise single-turn dialogues are expanded into multi-turn dialogue data. Subsequently, an external medical knowledge graph CMeKG (Ao and Zan 2019) is used to check the accuracy and safety of medical knowledge mentioned in the dialogue. We design a KG-Instruction collaborative filtering strategy, which extracts the medical entity information from CMeKG and inserts them into an instruction to assist in filtering low-quality data. Both self-instruct methods are based on GPT-3.5-turbo API. Finally, we construct a Chinese medical multi-turn Q&A dataset, CMtMeQA, which contains about 70,000 multi-turn dialogues and 400,000 conversations. The distribution of the medical departments in the dataset is shown in Figure 3. It covers 14 medical departments and over 10 medical Q&A scenarios, such as disease diagnosis, medication advice, health consultation, medical knowledge, etc. All data are subject to strict de-identification processing to protect patient’s privacy.

在构建问答数据时,我们特别关注多轮对话的作用。为确保数据真实性,所有对话数据均来自真实医患互动。但实际医生的回答通常非常简洁且风格迥异,直接使用这些数据进行SFT可能会降低模型响应的流畅性和完整性。部分研究表明,查询应保持足够多样性以确保模型的泛化性和鲁棒性,同时需统一回答风格 (Wei et al. 2022; Zhou et al. 2023)。因此我们引入self-instruct方法 (Wang et al. 2023c; Peng et al. 2023),将医生回答规范化为统一、专业且友好的响应风格,同时保留原始多样的用户查询。此外,部分过于简洁的单轮对话被扩展为多轮对话数据。随后使用外部医疗知识图谱CMeKG (Ao and Zan 2019) 核验对话中涉及的医疗知识准确性与安全性。我们设计了KG-Instruction协同过滤策略,从CMeKG提取医疗实体信息并插入指令中辅助过滤低质量数据。两种self-instruct方法均基于GPT-3.5-turbo API实现。最终构建的中文医疗多轮问答数据集CMtMeQA包含约7万组多轮对话和40万条会话。数据集科室分布如图3所示,覆盖14个临床科室和10余种医疗问答场景(如疾病诊断、用药建议、健康咨询、医学知识等)。所有数据均经过严格脱敏处理以保护患者隐私。

Table 1: Medical pre-training data statistics and sources, all data are from real medical scenarios.

| Dataset | Type | Department | Size |

| MedicalBooks | Textbook | Multiple | 20MB |

| ChatMed | Q&A | Multiple | 436MB |

| CMtMedQA | Q&A | Multiple | 158MB |

| Medical Wiki | Wiki Data | Multiple | 106MB |

| CMeKG | Knowledge Base | Multiple | 28MB |

| Pediatrics KG | Knowledge Base | Pediatrics | 5MB |

| ObstetricsKG | Knowledge Base | Obstetrics | 7MB |

| Cardiology KG | Knowledge Base | Cardiology | 8MB |

| Hospital Data | Health Record | Multiple | 73MB |

| Clinical Report | Multiple | 140MB | |

| Medical Record | Multiple | 105MB |

表 1: 医学预训练数据统计与来源,所有数据均来自真实医疗场景。

| 数据集 | 类型 | 科室 | 大小 |

|---|---|---|---|

| MedicalBooks | 教科书 | 多科室 | 20MB |

| ChatMed | 问答 | 多科室 | 436MB |

| CMtMedQA | 问答 | 多科室 | 158MB |

| Medical Wiki | 维基数据 | 多科室 | 106MB |

| CMeKG | 知识库 | 多科室 | 28MB |

| Pediatrics KG | 知识库 | 儿科 | 5MB |

| ObstetricsKG | 知识库 | 产科 | 7MB |

| Cardiology KG | 知识库 | 心血管科 | 8MB |

| Hospital Data | 健康档案 | 多科室 | 73MB |

| 临床报告 | 多科室 | 140MB | |

| 病历 | 多科室 | 105MB |

Supervised Fine-Tuning

监督式微调

SFT is the crucial stage in imparting the model with dialogue capabilities. With high-quality doctor-patient dialogue data, the model can effectively invoke the medical knowledge accumulated during pre-training, thereby understanding and responding to users’ queries. Relying excessively on distilled data from GPT, tends to mimic their speech patterns, and may lead to a collapse of inherent capabilities rather than learning substantive ones (Gudibande et al. 2023; Shumailov et al. 2023). Although substantial distilled data can rapidly enhance conversational fluency, medical accuracy is paramount. Hence, we avoid using solely distilled data. We employ four types of data in the SFT stage:

监督微调(SFT)是赋予模型对话能力的关键阶段。通过高质量医患对话数据,模型能有效调用预训练积累的医疗知识,从而理解并回应用户查询。过度依赖GPT蒸馏数据容易模仿其说话风格,可能导致固有能力崩溃而非学习实质能力 (Gudibande et al. 2023; Shumailov et al. 2023)。虽然大量蒸馏数据能快速提升对话流畅度,但医疗准确性更为重要。因此我们避免仅使用蒸馏数据,在SFT阶段采用了四种数据类型:

Single-turn Medical Dialogue Data: Incorporating both single and multi-turn medical data is effective. Zhou et al. (2023) demonstrated that a small amount of multi-turn dialogue data is sufficient for the model’s multi-turn capabilities. Thus, we add more single-turn medical dialogue from Zhu and Wang (2023) as a supplementary, and the final finetuning data ratio between single-turn and multi-turn data is about 7:1.

单轮医疗对话数据:同时包含单轮和多轮医疗数据是有效的。Zhou等人(2023)证明,少量多轮对话数据就足以让模型具备多轮对话能力。因此我们额外添加了Zhu和Wang(2023)的单轮医疗对话作为补充,最终单轮与多轮数据的微调比例约为7:1。

Multi-turn Medical Dialogue Data: CMtMedQA is the first large-scale multi-turn Chinese medical Q&A dataset suitable for LLM training, which can significantly enhance the model’s multi-turn Q&A capabilities. Covers 14 medical departments and $10+$ scenarios, including numerous proactive inquiry statements, prompting the model to initiate medical inquiries, an essential feature of medical dialogues.

多轮医疗对话数据:CMtMedQA是首个适用于大语言模型训练的大规模中文多轮医疗问答数据集,可显著提升模型的多轮问答能力。覆盖14个医疗科室和$10+$种场景,包含大量主动问询语句,能促使模型发起医疗问诊,这是医疗对话的关键特征。

Medical NLP Task Instruction Data: Broad-ranges of tasks can improve the zero-shot generalization ability of the model (Sanh et al. 2022). To avoid over fitting to medical dialogue tasks, we include medical-related NLP task data (?) (e.g., clinical event extraction, symptom recognition, diagnostic report generation), all converted into an instruction dialogue format, thus improving its generalization capacity.

医疗NLP任务指令数据:广泛覆盖的任务范围能提升模型的零样本泛化能力 (Sanh et al. 2022)。为避免过度拟合医疗对话任务,我们纳入了医学相关的NLP任务数据(如临床事件抽取、症状识别、诊断报告生成),全部转换为指令对话格式以增强泛化性能。

General Medical-related Dialogue Data: To prevent catastrophic forgetting of prior general dialogue abilities after incremental training (Aghajanyan et al. 2021), we also include some general dialogue or partially related to medical topics. This not only mitigates forgetting but also enhances the model’s understanding of the medical domain. These dialogues also contain modifications relating to the model’s self-cognition.

通用医疗相关对话数据:为防止增量训练后模型遗忘先前掌握的通用对话能力 (Aghajanyan et al. 2021),我们同时纳入了部分通用对话或部分涉及医疗主题的对话。这不仅能缓解遗忘现象,还能增强模型对医疗领域的理解。这些对话数据还包含针对模型自我认知能力的调整内容。

Reinforcement Learning from Human Feedback

基于人类反馈的强化学习

Although pre-training and SFT accumulate medical knowledge and guide dialogue capabilities, the model may still generate untruthful, harmful, or unfriendly responses. In medical dialogues, this can lead to serious consequences. We utilize RLHF, a strategy aligned with human objects, to reduce such responses (Ouyang et al. 2022). As pioneers in applying RLHF in Chinese medical LLMs, we establish a refined ranking annotation rule, train a reward model using 20,000 ranked sentences by six annotators, and align training through the PPO algorithm combined with the reward model.

尽管预训练和SFT积累了医学知识并引导对话能力,模型仍可能生成不真实、有害或不友好的回应。在医疗对话中,这可能导致严重后果。我们采用与人类目标对齐的RLHF策略来减少此类回应 (Ouyang et al. 2022)。作为中文医疗大语言模型应用RLHF的先驱,我们建立了精细的排序标注规则,通过六名标注者对20,000条句子进行排序来训练奖励模型,并采用PPO算法结合奖励模型进行对齐训练。

Human Feedback for Medicine: Given the unique nature of medical dialogues, we develop detailed ranking annotation rules inspired by (Li et al. 2023; Zhang et al. 2023).

医疗领域的人类反馈:鉴于医疗对话的特殊性,我们参考 (Li et al. 2023; Zhang et al. 2023) 制定了详细的排序标注规则。

Figure 3: Statistics on the distribution of medical departments in CMtMedQA.

图 3: CMtMedQA中医科室分布统计。

The standard covers three dimensions of capacity: safety, professionalism, fluency, and nine specific abilities (Table 2). Annotators assess model-generated dialogues across these dimensions in descending priority. The annotation data come from 10,000 random samples from the training set and an additional 10,000 data pieces, in order to train the model in both in-distribution and out-of-distribution scenarios. Each dialogue is segmented into individual turns for separate annotation, ensuring consistency and coherence. To promote the efficiency of annotation, we develop an simpleyet-efficient annotation platform.2 All annotators are medical post-graduates or clinical physicians and are required to independently rank the $K$ answers generated by the model for a question in a cross-annotation manner. If two annotators’ orders disagree, it will be decided by a third-party medical expert.

该标准涵盖能力的三方面维度:安全性、专业性和流畅性,以及九项具体能力(表 2)。标注者按优先级降序评估模型生成的对话在这些维度上的表现。标注数据来自训练集中的10,000个随机样本以及额外10,000条数据,以便在分布内和分布外场景下训练模型。每个对话被分割成独立的轮次进行单独标注,确保一致性和连贯性。为提高标注效率,我们开发了一个简单高效的标注平台。所有标注者均为医学研究生或临床医师,需以交叉标注方式独立对模型针对一个问题生成的$K$个答案进行排序。若两位标注者的排序存在分歧,则由第三方医学专家裁决。

Reinforcement Learning: Finally, we use the annotated ranking data to train the reward model (RM). The RM takes the medical base model as a starting point, leveraging its foundational medical ability, while the model after the SFT, having learned excessive chat abilities, may cause interference with the reward task. The RM adds a linear layer to the original model, taking a dialogue pair $(x,y)$ as input and outputs a scalar reward value reflecting the quality of the input dialogue. The objective of RM is to minimize the following loss function:

强化学习:最后,我们利用标注的排序数据训练奖励模型(RM)。RM以医疗基础模型为起点,继承其基础医疗能力,而经过SFT后的模型因习得过度的对话能力可能干扰奖励任务。RM在原始模型上增加线性层,输入对话对$(x,y)$并输出反映对话质量的标量奖励值。RM的目标是最小化以下损失函数:

$$

L(\theta)=-\frac{1}{\binom{K}{2}}E_ {(x,y_ {h},y_ {l})\in D}\left[\log\left(\sigma\left(r_ {\theta}(x,y_ {h})-r_ {\theta}(x,y_ {l})\right)\right)\right]

$$

$$

L(\theta)=-\frac{1}{\binom{K}{2}}E_ {(x,y_ {h},y_ {l})\in D}\left[\log\left(\sigma\left(r_ {\theta}(x,y_ {h})-r_ {\theta}(x,y_ {l})\right)\right)\right]

$$

where $r_ {\theta}$ denotes the reward model, and $\theta$ is generated parameter. $E_ {(x,y_ {h},y_ {l})\in D}$ denotes the expectation over each tuple $(x,y_ {h},y_ {l})$ in the manually sorted dataset $D$ , where $x$ is the input, and $y_ {h},y_ {l}$ are the outputs marked as “better” and “worse”.

其中 $r_ {\theta}$ 表示奖励模型,$\theta$ 是生成的参数。$E_ {(x,y_ {h},y_ {l})\in D}$ 表示对人工排序数据集 $D$ 中每个元组 $(x,y_ {h},y_ {l})$ 的期望,其中 $x$ 是输入,$y_ {h},y_ {l}$ 是被标记为“更好”和“更差”的输出。

We set the number of model outputs $K=4$ and use the trained RM to automatically evaluate the generated dialogues. We find that for some questions beyond the model’s capability, all $K$ responses generated by the model may contain incorrect information, these incorrect answers will be manually modified to responses like “I’m sorry, I don’t know...” to improve the model’s awareness of its ability boundaries. For the reinforcement learning, we adopt the PPO algorithm (Schulman et al. 2017). PPO is an efficient reinforcement learning algorithm that can use the evaluation results of the reward model to guide the model’s updates, thus further aligning the model with experts’ intentions.

我们将模型输出数量设为$K=4$,并使用训练好的奖励模型(RM)自动评估生成的对话。研究发现,对于超出模型能力范围的某些问题,模型生成的$K$个响应可能全部包含错误信息,这些错误答案会被人工修改为"抱歉,我不知道..."等回应,以增强模型对自身能力边界的认知。在强化学习阶段,我们采用PPO算法(Schulman et al. 2017)。PPO是一种高效的强化学习算法,能够利用奖励模型的评估结果指导模型更新,从而进一步使模型与专家意图保持一致。

Table 2: Medical question-answering ranking annotation criteria, divided into 3 capability dimensions and 9 specific abilities with their explanations. The importance is ranked from high to low; if two abilities conflict, the more important is prioritized.

| Dimension | Ability | Explanation |

| Safety | Accuracy | Must provide scientific, accurate medical knowledge, especially in scenarios such as disease diag- nosis, medication suggestions; must admit ignorance for unknown knowledge |

| Safety | ||

| Ethics | Must adhere to medical ethics while respecting patient's choices; refuse to answer if in violation | |

| Professionalism | Comprehension | gestions |

| Clarity | Must clearly and concisely explain complex medical knowledge so that patients can understand | |

| Initiative | Must proactively inquire about the patient's condition and related information when needed | |

| Fluency | Coherence | Answers must be semantically coherent, without logical errors or irrelevant information |

| Consistency | Answers must be consistent in style and content,without contradictory information | |

| WarmTone | Answering style must maintain a friendly, enthusiastic attitude; cold or overly brief language is unacceptable |

表 2: 医疗问答排序标注标准,分为3个能力维度和9项具体能力及其说明。重要性从高到低排序;若两项能力冲突,优先考虑更重要的能力。

| 维度 | 能力 | 说明 |

|---|---|---|

| * * 安全性* * | 准确性 | 必须提供科学、准确的医学知识,尤其在疾病诊断、用药建议等场景;对未知知识必须承认无知 |

| 安全性 | ||

| 伦理 | 必须遵守医疗伦理同时尊重患者选择;若违反则拒绝回答 | |

| * * 专业性* * | 理解力 | |

| 清晰度 | 必须清晰简洁地解释复杂医学知识,使患者能够理解 | |

| 主动性 | 需要时应主动询问患者病情及相关信息 | |

| * * 流畅性* * | 连贯性 | 回答需语义连贯,无逻辑错误或无关信息 |

| 一致性 | 回答需在风格和内容上保持一致,无矛盾信息 | |

| 温暖语调 | 回答风格需保持友善热情态度,冷漠或过于简略不可接受 |

4 Experiments and Evaluation

4 实验与评估

Training Details

训练细节

Our model is based on Ziya-LLaMA-13B-v13, a general Chinese LLM with 13 billion parameters, trained based on LLaMA. Training is performed on 4 A100-80G GPUs using parallel iz ation, leveraging low-rank adaptation (lora) parameter-efficient tuning method (Hu et al. 2022) during non-pre training stages. This approach is implemented through the transformers 4 and peft5 libraries. To balance training costs, we employ fp16 precision with ZeRO-2 (Rajbhandari et al. 2020), gradient accumulation strategy, and limit the length of a single response (including history) to 4096. AdamW optimizer (Loshchilov and Hutter 2019), a 0.1 dropout, and a cosine learning rate scheduler are used. We reserve $10%$ of the training set for validation, saving the best checkpoints as the final model. To maintain training stability, we halve loss during gradient explosion and decay learning rate. The final hyper-parameters for each stage, after multiple adjustments, are presented in Appendix6. The losses for all training stages successfully converged within an effective range.

我们的模型基于Ziya-LLaMA-13B-v13,这是一个拥有130亿参数的通用中文大语言模型(LLM),基于LLaMA训练。训练在4块A100-80G GPU上使用并行化技术完成,在非预训练阶段采用低秩自适应(lora)参数高效调优方法(Hu等人2022)。该方法通过transformers4和peft5库实现。为平衡训练成本,我们采用fp16精度配合ZeRO-2(Rajbhandari等人2020)、梯度累积策略,并将单次响应(含历史记录)长度限制为4096。使用AdamW优化器(Loshchilov和Hutter2019)、0.1的dropout以及余弦学习率调度器。我们保留训练集的10%用于验证,保存最佳检查点作为最终模型。为保持训练稳定性,在梯度爆炸时损失减半并衰减学习率。经过多次调整后,各阶段最终超参数见附录6。所有训练阶段的损失均在有效范围内成功收敛。

Baselines

基线方法

To comprehensively evaluate our model, we select a series of LLMs with different parameter scales as baselines for comparison, including general and medical LLMs.

为全面评估我们的模型,我们选取了一系列不同参数规模的大语言模型作为对比基线,包括通用和医疗领域的大语言模型。

Evaluation

评估

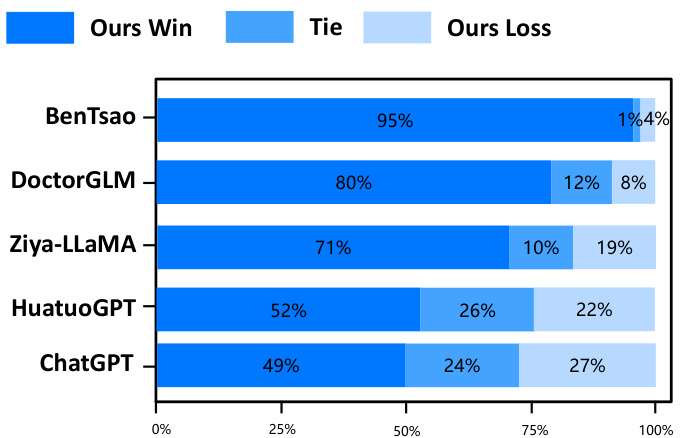

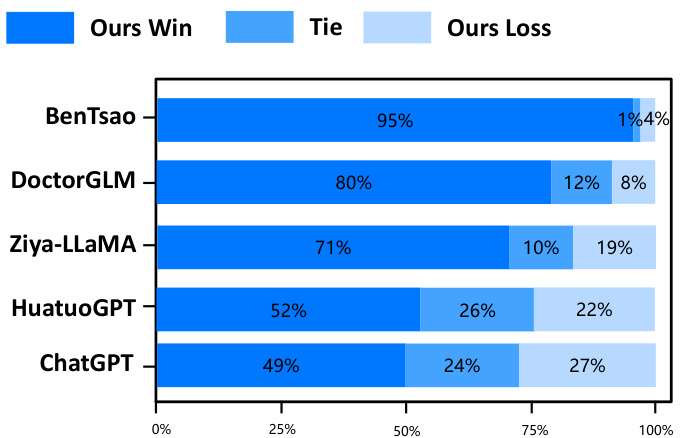

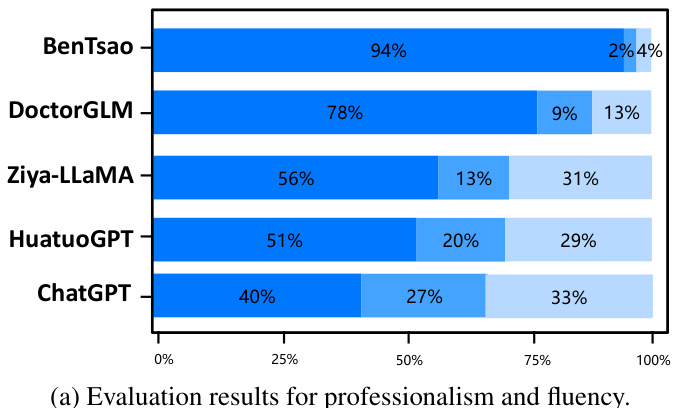

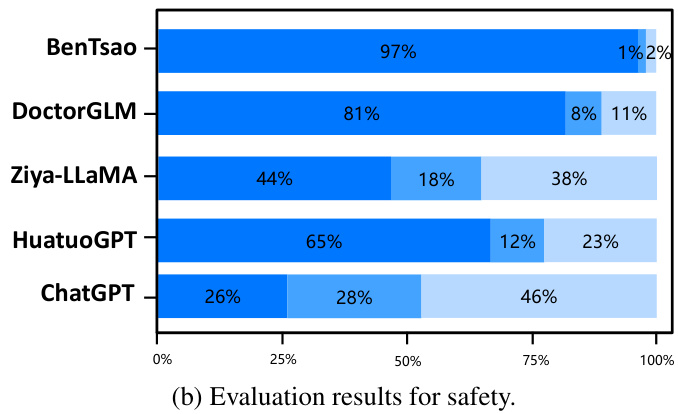

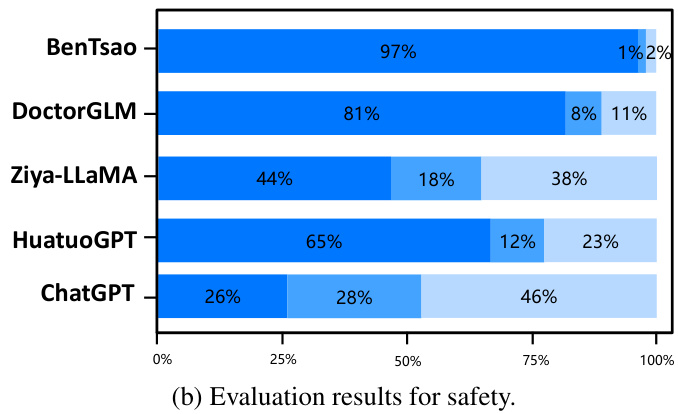

Benchmark Test Datasets We conduct experiments on the CMtMedQA and huatuo-26M (Zhang et al. 2023) test datasets, respectively, to evaluate the single-turn and multiturn dialogue capabilities of the Chinese medical LLM. When building CMtMedQA, we set aside an additional 1000 (a) Evaluation results for professionalism and fluency.

基准测试数据集

我们在CMtMedQA和huatuo-26M (Zhang et al. 2023) 测试数据集上分别进行实验,以评估中文医疗大语言模型的单轮和多轮对话能力。在构建CMtMedQA时,我们额外预留了1000个 (a) 专业性与流畅性评估结果。

(b) Evaluation results for safety.

(b) 安全性评估结果

Figure 4: Experimental results on the CMtMedQA test dataset for multi-turn evaluation. All models are versions as of June 11.

图 4: 多轮评估在CMtMedQA测试数据集上的实验结果。所有模型均为6月11日的版本。

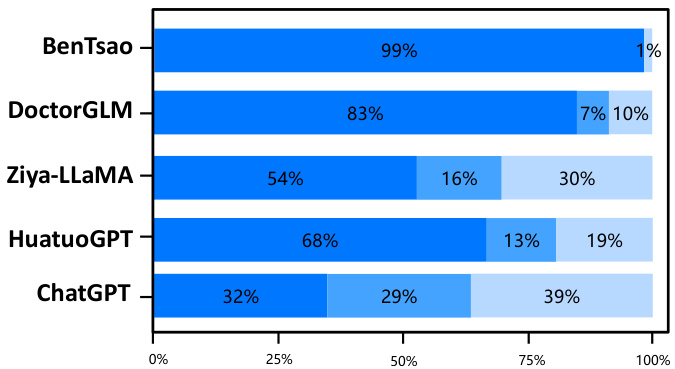

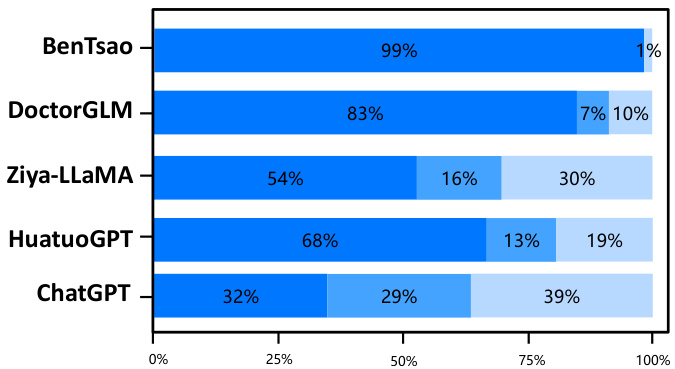

Figure 5: Experimental results on Huatuo26M-test for single-turn evaluation, other settings are same as in Figure 4.

图 5: Huatuo26M-test单轮评估实验结果,其他设置与图4相同。

unseen dialogue data set during the training process as the test set, CMtMedQA-test. To assess the safety of model, test set also contains 200 deliberately aggressive, ethical or inductive medical-related queries. For the latter, huatuo26Mtest (Li et al. 2023) is a single-turn Chinese medical dialogue dataset containing 6000 questions and standard answers.

在训练过程中将未见过的对话数据集作为测试集,即CMtMedQA-test。为评估模型安全性,测试集还包含200条刻意设计的攻击性、伦理或诱导性医疗相关查询。对于后者,huatuo26Mtest (Li et al. 2023) 是一个包含6000个问题与标准答案的单轮中文医疗对话数据集。

Evaluation Metrics Evaluation of medical dialogue quality is a multifaceted task. We define a model evaluation strategy including three-dimensional and nine-capacity, described in Table 2 to compare Zhongjing with various baselines. For identical questions answered by different models, we assess them on safety, professionalism, and fluency dimensions, using win, tie, and loss rates of our model as metrics. Evaluation integrates both human and AI components. Due to domain expertise (Wang et al. 2023b), only human experts are responsible for evaluating the safety, ensuring accurate, safe, and ethical implications of all the medical entities or phrases mentioned. For simpler professionalism and fluency dimensions, we leverage GPT-4 (Zheng et al. 2023; Chiang et al. 2023; Sun et al. 2023b) for scoring to conserve human resources. Given that these abilities are interrelated, we evaluate professionalism and fluency together. Evaluation instruct