ALPACARE: INSTRUCTION FINE-TUNED LARGE LANGUAGE MODELS FOR MEDICAL APPLICATIONS

ALPACARE: 面向医疗应用的指令微调大语言模型

ABSTRACT

摘要

Instruction-finetuning (IFT) has become crucial in aligning Large Language Models (LLMs) with diverse human needs and has shown great potential in medical applications. However, previous studies mainly fine-tune LLMs on biomedical datasets with limited diversity, which often rely on benchmarks or narrow task scopes, significantly limiting the effectiveness of their medical instruction-following ability and general iz ability. To bridge this gap, we propose creating a diverse, machinegenerated medical IFT dataset, Med Instruct $52k$ , using GPT-4 and ChatGPT with a high-quality expert-curated seed set. We then fine-tune LLaMA-series models on the dataset to develop AlpaCare. Despite using a smaller domain-specific dataset than previous medical LLMs, AlpaCare not only demonstrates superior performance on medical applications, with up to $38.1%$ absolute gain over best baselines in medical free-form instruction evaluations, but also achieves $6.7%$ absolute gains averaged over multiple general domain benchmarks. Human evaluation further shows that AlpaCare consistently outperforms best baselines in terms of both correctness and helpfulness. We offer data, model, and code publicly available 1.

指令微调(IFT)已成为将大语言模型(LLM)与多样化人类需求对齐的关键技术,并在医疗应用中展现出巨大潜力。然而,先前研究主要在生物医学数据集上进行有限多样性的微调,这些数据集通常依赖基准测试或狭窄的任务范围,极大限制了其医疗指令跟随能力和泛化能力的有效性。为弥补这一差距,我们提出利用GPT-4和ChatGPT配合专家精选的高质量种子集,构建了一个多样化、机器生成的医疗IFT数据集Med Instruct $52k$。随后我们在该数据集上对LLaMA系列模型进行微调,开发出AlpaCare。尽管使用的领域特定数据集比先前医疗LLM更小,AlpaCare不仅在医疗应用中表现出卓越性能(在医疗自由形式指令评估中比最佳基线绝对提升达$38.1%$),还在多个通用领域基准测试中平均获得$6.7%$的绝对提升。人工评估进一步表明,AlpaCare在正确性和实用性方面持续优于最佳基线。我们公开提供了数据、模型和代码1。

1 INTRODUCTION

1 引言

Recent advancements in the training of large language models (LLMs) have placed a significant emphasis on instruction-finetuning (IFT), a critical step in enabling pre-trained LLMs to effectively follow instructions (Ouyang et al., 2022; Longpre et al., 2023; Wei et al., 2022). However, relying solely on NLP benchmarks to create instructional datasets can lead to ‘game-the-metric’ issues, often failing to meet actual user needs (Ouyang et al., 2022). To better align with human intent, Wang et al. (2023b) introduces the concept of fine-tuning LLMs using diverse machine-generated instruction-response pairs. Subsequent works further highlight the importance of diversity in IFT datasets (Taori et al., 2023; Xu et al., 2023a; Chiang et al., 2023; Chen et al., 2023). However, how to improve dataset diversity in the medical domain for aligning with various user inquiries is still under explored.

大语言模型 (LLM) 训练的最新进展高度重视指令微调 (instruction-finetuning, IFT) ,这是使预训练大语言模型有效遵循指令的关键步骤 (Ouyang et al., 2022; Longpre et al., 2023; Wei et al., 2022) 。然而,仅依赖 NLP 基准测试创建指令数据集可能导致"指标博弈"问题,往往无法满足实际用户需求 (Ouyang et al., 2022) 。为更好对齐人类意图,Wang et al. (2023b) 提出了使用多样化机器生成指令-响应对来微调大语言模型的概念。后续研究进一步强调了指令微调数据集多样性的重要性 (Taori et al., 2023; Xu et al., 2023a; Chiang et al., 2023; Chen et al., 2023) 。但如何提升医疗领域数据集多样性以匹配多样化用户查询,仍是一个待探索的方向。

LLMs have demonstrated significant potential in the medical domain across various applications (Nori et al., 2023a;b; Singhal et al., 2022; 2023; Liévin et al., 2023; Zhang et al., 2023). To alleviate privacy concerns and manage costs, several medical open-source LLMs (Han et al., 2023; Li et al., 2023b; Wu et al., 2023; Xu et al., 2023b) have been developed by tuning LLaMA (Touvron et al., 2023a;b) on medical datasets. Even substantial volumes, these datasets are limited in task scopes and instructions, primarily focusing on medical benchmarks or specific topics, due to the high cost of collecting real-world instruction datasets (Wang et al., 2023b), particularly when extending further into the medical domain(Jin et al., 2021; 2019). This lack of diversity can negatively impact the models’ ability to follow instructions in medical applications and their effectiveness in the general domain. Therefore, there is an urgent need for a method to generate diverse medical IFT datasets that align with various domain-specific user inquiries while balancing cost.

大语言模型在医疗领域的多种应用中展现出显著潜力 (Nori et al., 2023a;b; Singhal et al., 2022; 2023; Liévin et al., 2023; Zhang et al., 2023)。为缓解隐私担忧并控制成本,研究者通过基于医疗数据微调LLaMA (Touvron et al., 2023a;b) 开发了多个医疗开源大语言模型 (Han et al., 2023; Li et al., 2023b; Wu et al., 2023; Xu et al., 2023b)。尽管数据量庞大,但由于收集真实世界指令数据集的高成本 (Wang et al., 2023b),尤其是深入医疗领域时 (Jin et al., 2021; 2019),这些数据集的任务范围和指令类型仍局限于医疗基准测试或特定主题。这种多样性缺失会削弱模型在医疗应用中的指令遵循能力,并影响其在通用领域的表现。因此,亟需一种方法能平衡成本地生成符合各垂直领域用户需求的多样化医疗指令微调数据集。

To bridge this gap, inspired by Wang et al. (2023b), we propose a semi-automated process that uses GPT-4 (OpenAI, 2023) and ChatGPT (OpenAI, 2022) to create a diverse medical IFT dataset for tuning a medical LLM, which can better align with various domain-specific user intents. Initially, to guide the overall task generations with meaningful medical instructions and considering different user needs, we create a high-quality seed set of 167 clinician-curated tasks spanning various medical topics, points of view, task types, and difficulty levels, as shown in Figure 1.

为弥补这一差距,受Wang等人 (2023b) 启发,我们提出一种半自动化流程,利用GPT-4 (OpenAI, 2023) 和ChatGPT (OpenAI, 2022) 创建多样化医疗指令微调 (IFT) 数据集,用于优化医疗大语言模型,使其更贴合不同领域用户的特定需求。首先,为生成具有医学意义的指令任务并兼顾多样化用户需求,我们构建了由临床医生标注的167个高质量种子任务,涵盖多类医疗主题、观察视角、任务类型及难度等级,如图1所示。

To toapiuct: oCa mr dai otl ioc gy ally generate a broader array of tasks for training, we prompt GPT-4 to create instructions for new medical tasks by leveraging thet o etxesits tyiounr gk no cw l lied ng iec aibaount- bclouord aftleowd i nt athsek hse arats. demonstrations. After generating tasks and conducting de duplications, we employ ChatGPT to provide responses to the valid tasks. Consequently, we compile a $52\mathrm{k\Omega}$ onm deurdiincg atlh iss elf-instruct dataset, Med Instruct $.52k$ ,i esw; hich supervises the tuning on the LLaMA series models (Touvron et Ea)l .I,n cr 2 e 0 as 2 ed 3 caa;pibl;l arAy If $@$ Meta, 2024), resulting in AlpaCare. Due to the limited number of test sets available for evaluating medical LLMs in terms of instruction-following ability and medical ca

为扩展训练任务的多样性,我们通过提示 GPT-4 利用现有医学知识库和任务示例生成新医疗指令。在生成任务并完成去重后,使用 ChatGPT 为有效任务提供响应,最终构建出包含 52k 条指令的自指导数据集 Med-Instruct。该数据集用于监督 LLaMA 系列模型 (Touvron et al. [2023]; Meta [2024]) 的微调,从而得到 AlpaCare。由于目前评估医疗大语言模型在指令遵循能力和医学专业性的测试集数量有限...

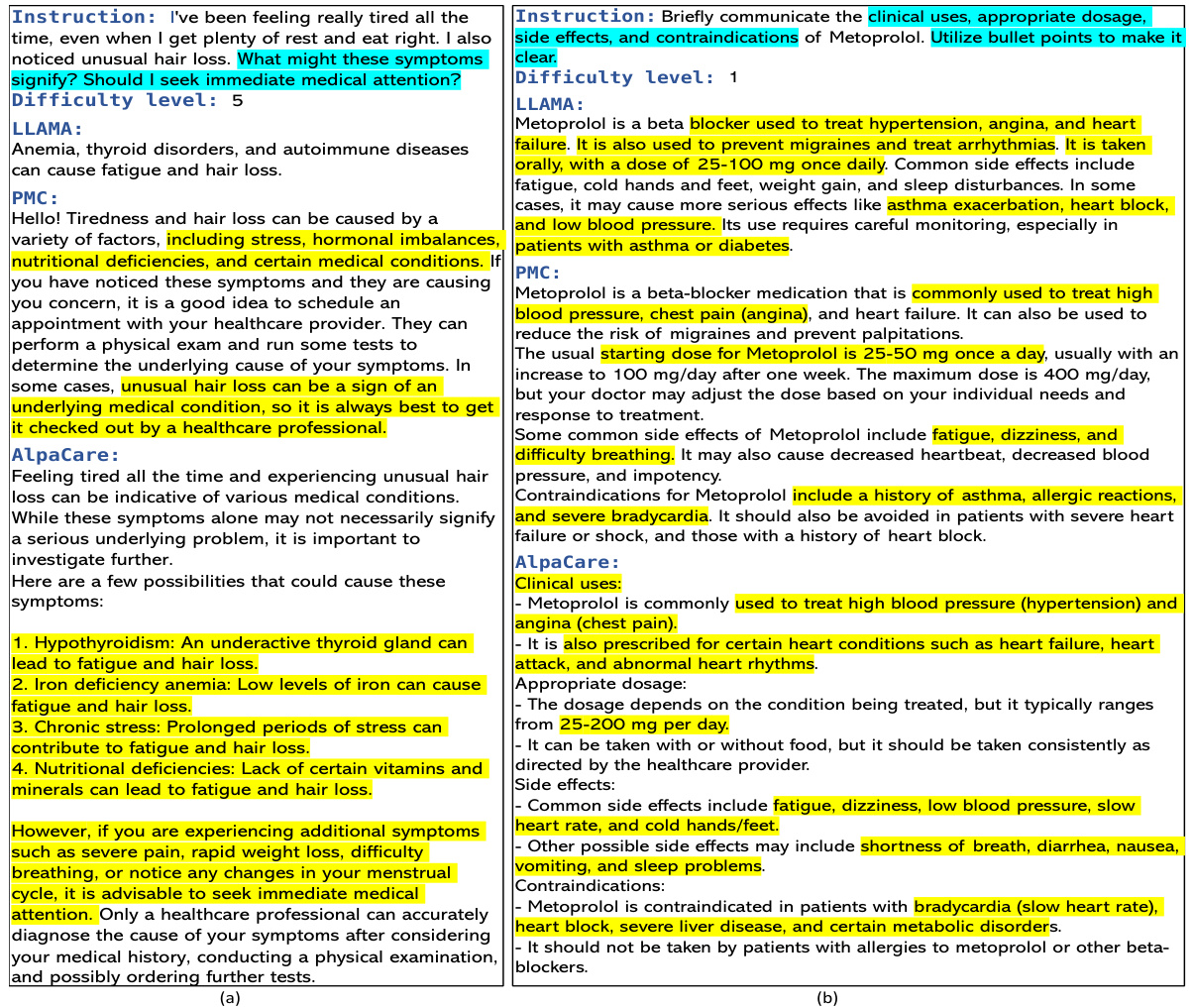

Figure 1: Selected example from the cliniciancrafted seed set. We focus on 4 perspectives: topic, viewpoint, task type, and difficulty level, to improve the seed set diversity. The set is further used to query GPT-4 to generate medical tasks.

图 1: 临床医生构建的种子集示例。我们聚焦于主题、观点、任务类型和难度级别4个维度来提升种子集多样性。该集合后续用于查询GPT-4以生成医疗任务。

pacity, we introduce a new clinician-crafted free-form instruction evaluation test set, Med Instruct-test, covering medical tasks across different difficulty levels.

为评估容量,我们引入了一个由临床医生设计的新型自由形式指令评估测试集Med Instruct-test,涵盖不同难度级别的医疗任务。

Our comprehensive experiments within medical and general domains reveal that AlpaCare, solely tuned on the 52k diverse medical IFT dataset, exhibits enhanced performance on medical applications and strong general iz ability. It achieves up to a $38.1%$ absolute gain over the best baselines in medical free-form instruction evaluations and a $6.7%$ absolute gain averaged over multiple general domain benchmarks. Moreover, our human study on free-form instruction evaluations shows that AlpaCare consistently produces better responses compared to existing medical LLMs by a large margin in terms of both correctness $(+12%)$ and helpfulness $(+49%)$ .

我们在医疗和通用领域的全面实验表明,仅基于52k多样化医疗IFT数据集微调的AlpaCare,在医疗应用中展现出更强的性能与优秀的泛化能力。其在医疗自由指令评估中相比最佳基线模型获得高达38.1%的绝对性能提升,在多个通用领域基准测试中平均取得6.7%的绝对增益。此外,我们在自由指令评估中的人工研究表明,AlpaCare生成的响应在正确性 (+12%) 和实用性 (+49%) 两方面均显著优于现有医疗大语言模型。

Our paper makes the following contributions:

我们的论文做出了以下贡献:

2 RELATED WORKS

2 相关工作

IFT. Closed-form IFT creates IFT datasets from existing NLP benchmarks using carefully designed instructions to improve model generalization on new tasks (Wei et al., 2022; Sanh et al., 2022; Chung et al., 2022; Longpre et al., 2023). However, these instructions are often simpler than real-world scenarios, leading to models that fail to align with diverse user intentions. In contrast, Ouyang et al. (2022) collects a diverse IFT dataset with real-world instructions and responses, rich in both instruction forms and task types. They train GPT-3 Brown et al. (2020) on this dataset to obtain Instruct GP T (Ouyang et al., 2022), demonstrating promising results in aligning with diverse actual user needs. Due to the closed-source propriety of strong LLMs (e.g. ChatGPT and GPT-4), various open-source instruction fine-tuned models (Taori et al., 2023; Xu et al., $2023\mathrm{a}$ ; Chiang et al., 2023; Peng et al., 2023) have been proposed to tune open-source LLMs using datasets obtained from these strong teacher models to enhance their instruction-following abilities. Alpaca (Taori et al., 2023) creates a 52k diverse machine-generated IFT dataset by distilling knowledge from the "teacher" Text-Davinci-003 (Hinton et al., 2015; Li et al., 2022). Peng et al. (2023) utilizes the same instructions with Alpaca but adopts GPT-4 as the "teacher" LLM to generate higher-quality and more diverse responses to improve the model’s alignment on 3H (Helpfulness, Honesty, and Harmlessness) (Askell et al., 2021). Vicuna (Chiang et al., 2023) is trained on the ShareGPT data (Sharegpt, 2023), which contains actual ChatGPT users’ diverse instructions, obtaining strong response quality and instruction-following ability. However, creating diverse IFT datasets for aligning models with various user intentions in the medical domain remains under explored.

IFT。封闭式IFT通过精心设计的指令从现有NLP基准创建IFT数据集,以提升模型在新任务上的泛化能力 (Wei et al., 2022; Sanh et al., 2022; Chung et al., 2022; Longpre et al., 2023) 。但这些指令通常比真实场景更简单,导致模型难以适配多样化的用户意图。相比之下,Ouyang et al. (2022) 收集了包含真实世界指令和响应的多样化IFT数据集,其指令形式和任务类型都极为丰富。他们基于该数据集训练GPT-3 Brown et al. (2020) 得到Instruct GPT (Ouyang et al., 2022) ,在适配多样化实际用户需求方面展现出良好效果。由于强大大语言模型(如ChatGPT和GPT-4)的闭源特性,研究者们提出了多种开源指令微调模型 (Taori et al., 2023; Xu et al., $2023\mathrm{a}$; Chiang et al., 2023; Peng et al., 2023) ,通过使用从这些"教师模型"获得的数据集来调优开源大语言模型,以增强其指令遵循能力。Alpaca (Taori et al., 2023) 通过从"教师模型"Text-Davinci-003 (Hinton et al., 2015; Li et al., 2022) 蒸馏知识,创建了包含52k条多样化机器生成指令的IFT数据集。Peng et al. (2023) 采用与Alpaca相同的指令集,但选用GPT-4作为"教师"大语言模型来生成更高质量、更多样化的响应,从而提升模型在3H(有用性、诚实性、无害性) (Askell et al., 2021) 方面的对齐效果。Vicuna (Chiang et al., 2023) 基于ShareGPT数据 (Sharegpt, 2023) 进行训练,该数据包含ChatGPT用户实际使用的多样化指令,最终获得了出色的响应质量和指令遵循能力。然而,在医疗领域创建多样化IFT数据集以使模型适配各类用户意图的研究仍显不足。

Figure 2: The pipeline of AlpaCare. The process starts with a small set of clinician-curated seed tasks. 1. Task instruction generation: GPT-4 iterative ly generates a series of new task instructions using 3 tasks from the seed set. 2. Filtering: Ensures textual diversity by removing similar instructions via Rouge-L. 3. Response generation: ChatGPT creates responses for each task, forming Med Instruct52K. 4. Instruction tuning: The dataset is used to fine-tune LLaMA models, developing AlpaCare.

图 2: AlpaCare 的流程。该流程从少量临床医生整理的种子任务开始。1. 任务指令生成: GPT-4 使用种子集中的 3 个任务迭代生成一系列新任务指令。2. 过滤: 通过 Rouge-L 去除相似指令确保文本多样性。3. 响应生成: ChatGPT 为每个任务创建响应,形成 Med Instruct52K。4. 指令调优: 使用该数据集对 LLaMA 模型进行微调,开发出 AlpaCare。

LLMs in Bio medicine. Closed-source LLMs have demonstrated significant proficiency in the medical domain (OpenAI, 2022; 2023; Singhal et al., 2023; 2022). ChatGPT demonstrates promise in the US Medical Exam (Kung et al., 2023) and serves as a knowledge base for medical decisionmaking (Zhang et al., 2023). The MedPaLM (Singhal et al., 2022; 2023) have shown performance in answering medical questions on par with that of medical professionals. GPT-4 (OpenAI, 2023) obtains strong medical capacities without specialized training strategies in the medical domain or engineering for solving clinical tasks Nori et al. (2023a;b). Due to privacy concerns and high costs, several open-source medical LLMs (Xu et al., 2023b; Han et al., 2023; Li et al., 2023b; Wu et al., 2023) have been built by tuning open-source base models on medical corpus. ChatDoctor (Li et al., 2023b) is fine-tuned using $100\mathrm{k}$ online doctor-patient dialogues, while Baize-Healthcare (Xu et al., 2023b) employs about $100\mathrm{k}$ Quora and MedQuAD dialogues. MedAlpaca (Han et al., 2023) utilizes a $230\mathrm{k\Omega}$ dataset of question-answer pairs and dialogues. PMC-LLAMA (Wu et al., 2023) continually trains LLaMA with millions of medical textbooks and papers, and then tunes it with a 202M-token dataset formed by benchmarks and dialogues during IFT. However, due to the high cost of collecting diverse real-world user instructions (Wang et al., 2023b), their datasets are limited in diversity, mainly focusing on medical benchmarks or within certain topics, such as doctor-patient conversations, hampering models’ medical instruction-following ability and general iz ability. We propose creating a cost-effective, diverse medical machine-generated IFT dataset using GPT-4 and ChatGPT to better align the model with various medical user intents. Other follow-up works after ours (Xie et al., 2024; Tran et al., 2023) consistently show the benefits of tuning medical LLMs with diverse machine-generated datasets.

生物医学中的大语言模型。闭源大语言模型在医疗领域展现出显著能力 (OpenAI, 2022; 2023; Singhal 等, 2023; 2022)。ChatGPT在美国医师资格考试中表现优异 (Kung 等, 2023),并可作为医疗决策的知识库 (Zhang 等, 2023)。MedPaLM (Singhal 等, 2022; 2023) 在回答医学问题方面达到专业医师水平。GPT-4 (OpenAI, 2023) 无需专门医学训练策略就展现出强大的临床任务处理能力 (Nori 等, 2023a;b)。

出于隐私和成本考虑,研究者通过调整开源基础模型在医学语料上的表现,开发了多个开源医疗大语言模型 (Xu 等, 2023b; Han 等, 2023; Li 等, 2023b; Wu 等, 2023)。ChatDoctor (Li 等, 2023b) 使用10万条在线医患对话进行微调,Baize-Healthcare (Xu 等, 2023b) 采用约10万条Quora和MedQuAD对话数据。MedAlpaca (Han 等, 2023) 使用23万条问答对和对话数据集。PMC-LLAMA (Wu 等, 2023) 持续训练LLaMA模型数百万医学教材和论文,并通过2.02亿token的基准测试和IFT阶段对话数据集进行微调。

然而,由于收集多样化真实用户指令成本高昂 (Wang 等, 2023b),现有数据集多样性有限,主要集中在医疗基准测试或特定主题(如医患对话),制约了模型的医疗指令跟随能力和泛化能力。我们提出使用GPT-4和ChatGPT创建高性价比、多样化的机器生成IFT数据集,以更好地使模型适应各类医疗用户需求。后续研究 (Xie 等, 2024; Tran 等, 2023) 一致证明了使用多样化机器生成数据集微调医疗大语言模型的优势。

3 METHOD

3 方法

Collecting a large-scale medical IFT dataset is challenging because it necessitates 1) a deep understanding of the specific domain knowledge and 2) creativity in designing novel and diverse tasks by considering different real-world medical needs. To mitigate human effort while maintaining high quality, we propose a pipeline by instructing GPT-4 and ChatGPT to create a machine-generated dataset containing diverse domain-specific tasks. The process starts with utilizing a small set of high-quality clinician-curated seed tasks with 167 instances to prompt GPT-4 in generating medical tasks. Similar instructions are removed from the generated medical tasks, preserving $52\mathrm{k\Omega}$ instances which are subsequently inputted into ChatGPT for response generation. The instruction-response pairs dataset, Med Instruct $52k$ , is used to tune the LLaMA, resulting in AlpaCare with superior medical instruction-following ability and general iz ability. The pipeline is shown in Figure 2.

收集大规模医疗指令微调(IFT)数据集具有挑战性,因为这需要:1) 对特定领域知识的深入理解;2) 通过考虑不同现实医疗需求来设计新颖多样任务的创造力。为在保持高质量的同时减少人力投入,我们提出一个流程:指导GPT-4和ChatGPT创建包含多样化领域特定任务的机器生成数据集。该流程首先利用167个临床专家精心策划的种子任务来提示GPT-4生成医疗任务。从生成的医疗任务中去除相似指令后,保留52k个实例并输入ChatGPT生成响应。最终获得的指令-响应对数据集Med-Instruct-52k用于微调LLaMA模型,由此产生的AlpaCare展现出卓越的医疗指令跟随能力和泛化能力。该流程如图2所示。

Table 1: Comparative analysis of free-form instruction evaluation. Performance comparison of AlpaCare and instruction-tuned baselines. GPT-3.5-turbo acts as a judge for pairwise auto-evaluation. Each instruction-tuned model is compared with 4 distinct reference models: Text-davinci-003, GPT3.5-turbo, GPT-4, and Claude-2. ‘AVG’ denotes the average performance score across all referenced models in each test set.

| iCliniq | Medlnstruct | |||||||||

| Text-davinci-003 | GPT-3.5-turboGPT-4 | Claude-2 | AVG | Text-davinci-O03 | GPT-3.5-turbo | GPT-4 | Claude-2 | AVG | ||

| Alpaca | 38.8 | 30.4 | 12.8 | 15.6 | 24.4 | 25.0 | 20.6 | 21.5 | 15.6 | 22.5 |

| ChatDoctor | 25.4 | 16.7 | 6.5 | 9.3 | 14.5 | 35.6 | 18.3 | 20.4 | 13.4 | 18.2 |

| Medalpaca | 35.6 | 24.3 | 10.1 | 13.2 | 20.8 | 45.1 | 33.5 | 34.0 | 29.2 | 28.1 |

| PMC | 8.3 | 7.2 | 6.5 | 0.2 | 5.5 | 5.1 | 4.5 | 4.6 | 0.2 | 4.6 |

| Baize-H | 41.8 | 36.3 | 19.2 | 20.6 | 29.5 | 35.1 | 22.2 | 22.2 | 15.6 | 26.6 |

| AlpaCare | 66.6 | 50.6 | 47.4 | 49.7 | 53.6 | 67.6 | 49.8 | 48.1 | 48.4 | 53.5 |

表 1: 自由形式指令评估的对比分析。AlpaCare与指令调优基线的性能比较。GPT-3.5-turbo作为成对自动评估的评判标准。每个指令调优模型分别与4个不同的参考模型进行比较:Text-davinci-003、GPT3.5-turbo、GPT-4和Claude-2。"AVG"表示每个测试集中所有参考模型的平均性能得分。

| iCliniq | MedInstruct | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Text-davinci-003 | GPT-3.5-turbo | GPT-4 | Claude-2 | AVG | Text-davinci-003 | GPT-3.5-turbo | GPT-4 | Claude-2 | AVG | |

| Alpaca | 38.8 | 30.4 | 12.8 | 15.6 | 24.4 | 25.0 | 20.6 | 21.5 | 15.6 | 22.5 |

| ChatDoctor | 25.4 | 16.7 | 6.5 | 9.3 | 14.5 | 35.6 | 18.3 | 20.4 | 13.4 | 18.2 |

| Medalpaca | 35.6 | 24.3 | 10.1 | 13.2 | 20.8 | 45.1 | 33.5 | 34.0 | 29.2 | 28.1 |

| PMC | 8.3 | 7.2 | 6.5 | 0.2 | 5.5 | 5.1 | 4.5 | 4.6 | 0.2 | 4.6 |

| Baize-H | 41.8 | 36.3 | 19.2 | 20.6 | 29.5 | 35.1 | 22.2 | 22.2 | 15.6 | 26.6 |

| AlpaCare | 66.6 | 50.6 | 47.4 | 49.7 | 53.6 | 67.6 | 49.8 | 48.1 | 48.4 | 53.5 |

CLINICIAN-CURATED SEED DATASET

临床专家精选种子数据集

A diverse and high-quality seed task is essential for prompting LLMs in task generation (Wang et al., 2023b). We focus on 4 key areas, taking into account various user intents in medical applications, to improve the diversity of seed instructions: topic, view, type, and difficulty level. Specifically, the topic covers various submedical domains, such as radiology, genetics, and psycho physiology. The view is derived from diverse medical personnel, including researchers, medical students, and patients, who have different inquiries, to ensure a comprehensive range of viewpoints based on various levels of domain knowledge. For the type, we include various task formats, such as sum mari z ation, rewriting, single-hop, and multi-hop reasoning, to align with different application needs. Lastly, each task is categorized by its medical difficulty level, ranging from 1 to 5 (low to high), to ensure that the seed tasks can prompt new tasks on a wide range of expertise levels. We defer the explanation of the difficulty score to Appendix B for further clarification. A clinician crafts each task considering these 4 dimensions, and each task contains instruction and may have a corresponding input, which could be a detailed medical example to further elucidate the instruction and enhance task diversity. Examples are shown in Figure 1.

规则:

- 确保翻译结果严格遵循Markdown格式要求

- 保留所有专业术语、引用和图表编号格式

- 维持原文段落结构

- 首次出现术语时标注英文原文

翻译结果:

多样且高质量的种子任务对于激发大语言模型(LLM)生成任务至关重要(Wang等人,2023b)。我们聚焦4个关键维度,结合医疗应用中的不同用户意图,提升种子指令的多样性:主题、视角、类型和难度等级。具体而言,主题涵盖放射学、遗传学、心理生理学等多个医学子领域。视角来源于研究者、医学生和患者等不同医疗从业者的多样化诉求,确保基于不同领域知识层次形成全面的观点覆盖。类型方面包含摘要生成、文本改写、单步推理和多步推理等多种任务形式,以适配不同的应用需求。最后,每个任务都按1-5级(低到高)的医学难度进行分类,确保种子任务能激发不同专业水平的新任务生成。难度评分的具体说明详见附录B。临床医师基于这4个维度精心设计每个任务,包含指令说明,并可能配有对应的输入内容(如详细医疗案例)以进一步阐明指令要求并增强任务多样性。示例如图1所示。

MEDICAL IFT DATASET GENERATION AND LLM TUNING

医学 IFT 数据集生成与大语言模型调优

We utilize GPT-4 for in-context learning by randomly selecting 3 tasks from the seed set and generating 12 tasks for each run. To ensure generated task diversity, we instruct GPT-4 to consider the 4 aspects outlined in 3. To further amplify textual diversity, instructions with a Rouge-L similarity above 0.7 to any other generated task are discarded (Wang et al., 2023b). Due to the lengthy propriety of medical text, we separately generate responses for each task using ChatGPT (GPT-3.5-turbo), which has demonstrated efficacy in the medical domain (Zhang et al., 2023). Finally, we result in $52\mathrm{k\Omega}$ machine-generated medical instruction-response pairs, Med Instruct $52k$ . Detailed prompts for instruction and output generation are provided in the Appendix C. To verify medInstcut $52k$ ’s data quality, we randomly select 50 instances for a clinician to evaluate, resulting in 49 out of 50 responses being graded as correct, which demonstrates the dataset’s high quality. The LLM APIs cost analysis and chosen reason are in Appendix D.

我们利用GPT-4进行上下文学习,每次从种子集中随机选择3个任务并生成12个任务。为确保生成任务的多样性,我们指导GPT-4参考第3节所述的4个方面。为进一步增强文本多样性,Rouge-L相似度超过0.7的指令会被剔除 (Wang et al., 2023b)。由于医学文本的专业性较强,我们使用ChatGPT (GPT-3.5-turbo) 为每个任务单独生成回复,该模型已在医学领域展现成效 (Zhang et al., 2023)。最终我们获得$52\mathrm{k\Omega}$条机器生成的医学指令-回复对,即Med Instruct $52k$。指令和输出生成的详细提示见附录C。为验证medInstcut $52k$的数据质量,我们随机选取50个样本由临床医生评估,其中49条回复被判定为正确,证明数据集质量优异。大语言模型API成本分析及选用理由详见附录D。

During IFT, we adopt the same training prompt and hyper-parameter setup as Taori et al. (2023) to fine-tune LLaMA models on Med Instruct $52k$ , Specifically, we employ instructions and inputs (when available) as inputs to tune the model to generate corresponding response outputs through a standard supervised fine-tuning with cross-entropy loss. We defer hyper-parameter setup into Appendix E.

在IFT过程中,我们采用与Taori等人 (2023) 相同的训练提示和超参数设置,对LLaMA模型在Med Instruct $52k$ 数据集上进行微调。具体而言,我们使用指令和输入(如有)作为输入,通过标准的监督式微调(采用交叉熵损失)来调整模型以生成相应的响应输出。超参数设置详见附录E。

Table 2: Results on medical benchmarks. ‘AVG’ represents the mean performance score across tasks.

| MEDQA HeadQA PubmedQA MEDMCQA MeQSum AVG | |||||

| Alpaca | 35.7 | 29.1 | 75.4 | 29.2 | 24.4 38.8 |

| ChatDoctor | 34.3 | 30.0 | 73.6 | 33.5 | 27.1 39.7 |

| Medalpaca | 38.4 | 30.3 | 72.8 31.3 | 11.0 | 36.8 |

| PMC | 34.2 | 28.1 | 68.2 | 26.1 9.2 | 33.2 |

| Baize-H | 34.5 | 29.3 | 73.8 | 32.5 | 35.6 |

| AlpaCare | 35.5 | 30.4 | 74.8 | 33.5 | 29.0 40.6 |

表 2: 医学基准测试结果。'AVG'表示各任务的平均性能得分。

| MEDQA | HeadQA | PubmedQA | MEDMCQA | MeQSum | AVG | |

|---|---|---|---|---|---|---|

| Alpaca | 35.7 | 29.1 | 75.4 | 29.2 | 24.4 | 38.8 |

| ChatDoctor | 34.3 | 30.0 | 73.6 | 33.5 | 27.1 | 39.7 |

| Medalpaca | 38.4 | 30.3 | 72.8 | 31.3 | 11.0 | 36.8 |

| PMC | 34.2 | 28.1 | 68.2 | 26.1 | 9.2 | 33.2 |

| Baize-H | 34.5 | 29.3 | 73.8 | 32.5 | 35.6 | |

| AlpaCare | 35.5 | 30.4 | 74.8 | 33.5 | 29.0 | 40.6 |

4 EXPERIMENTAL SETUP

4 实验设置

FREE-FORM INSTRUCTION EVALUATION

自由形式指令评估

Datasets.To evaluate the effectiveness of LLMs in a user-oriented manner, we conduct free-form instruction evaluations on two medical datasets. (1) iCliniq2, a dataset comprising transcripts of real patient-doctor conversations collected from an online website (Li et al., 2023b). In this task, the model processes patient inquiries as input and then simulates a doctor to provide corresponding answers. (2) Med Instruct-test, a dataset created by our clinicians, includes 216 medical instructions. These instructions mimic inquiries posed by different medical personnel, varying in difficulty on a scale from 1 to 5, with 1 being the simplest and 5 being the most challenging. We defer the difficulty levels description and test set statistics into the Appendix B and F, respectively.

数据集。为了以用户导向的方式评估大语言模型(LLM)的有效性,我们在两个医疗数据集上进行了自由形式的指令评估。(1) iCliniq2数据集包含从在线网站收集的真实医患对话记录(Li et al., 2023b)。在该任务中,模型将患者咨询作为输入,然后模拟医生提供相应回答。(2) Med Instruct-test数据集由我们的临床医生创建,包含216条医疗指令。这些指令模拟了不同医务人员提出的咨询,难度等级从1到5不等,其中1表示最简单,5表示最具挑战性。我们将难度等级描述和测试集统计数据分别放在附录B和F中。

Evaluation Metric. We conduct auto-evaluation by employing GPT-3.5-turbo to serve as a judge (Zheng et al., 2023). The judge pairwise compares responses from a model with reference responses produced by another LLM API for each instruction in the test sets. To conduct a holistic evaluation, we employ reference outputs generated by 4 different APIs: Text-davinci-003, GPT-3.5-turbo, GPT-4 and Claude-2, respectively. To ensure unbiased evaluation and avoid positional bias (Wang et al., 2023a), we evaluate each output comparison twice, alternating the order of the model output and the reference output. We follow Li et al. (2023a) to score our models and baselines by calculating the win rate. To ensure fair comparisons, we set the maximum token length to 1024 and utilize greedy decoding for the generation of all model outputs and reference responses.

评估指标。我们采用GPT-3.5-turbo作为评判员进行自动评估(Zheng et al., 2023)。评判员将测试集中每个指令对应的模型响应与由另一个大语言模型API生成的参考响应进行成对比较。为实现全面评估,我们采用了4种不同API生成的参考输出:分别是Text-davinci-003、GPT-3.5-turbo、GPT-4和Claude-2。为确保无偏评估并避免位置偏差(Wang et al., 2023a),我们对每个输出比较进行两次评估,交替调换模型输出和参考输出的顺序。我们遵循Li et al. (2023a)的方法,通过计算胜率来为模型和基线评分。为保证公平比较,我们将最大token长度设为1024,并对所有模型输出和参考响应的生成采用贪婪解码策略。

BENCHMARK EVALUATION

基准评估

Datasets. We further evaluate AlpaCare on 4 medical multiple-choice benchmarks, namely MedQA (Jin et al., 2021), HeadQA (Vilares & Gómez-Rodríguez, 2019), PubmedQA (Jin et al., 2019), and MedMCQA (Pal et al., 2022), as well as a sum mari z ation dataset, i.e., MeQSum (Ben Abacha & Demner-Fushman, 2019)3, to assess the model’s medical capacity.

数据集。我们进一步在4个医学多选题基准测试上评估AlpaCare,包括MedQA (Jin et al., 2021)、HeadQA (Vilares & Gómez-Rodríguez, 2019)、PubmedQA (Jin et al., 2019)和MedMCQA (Pal et al., 2022),以及一个摘要数据集MeQSum (Ben Abacha & Demner-Fushman, 2019)3,以评估模型的医学能力。

Evaluation Metric. Following Gao et al. (2021), we conduct the multiple-choice benchmark evaluation and report the accuracy. For the sum mari z ation task, we utilize greedy decoding with a maximum token length of 1024 to generate outputs and report the ROUGE-L score.

评估指标。参照Gao等人(2021)的研究,我们进行了多项选择基准评估并报告准确率。在摘要任务中,我们采用最大token长度为1024的贪心解码生成输出,并报告ROUGE-L分数。

BASELINES

基线方法

We evaluate the performance of AlpaCare by comparing it with both general and medical LLMs based on the LLaMA models. We consider a range of models including: (1) Alpaca, tuning on $52\mathrm{k\Omega}$ general domain machine-generated samples with responses from Text-davinci-003; (2) ChatDoctor, finetuning with $100\mathrm{k}$ real patient-doctor dialogues; (3) MedAlpaca, utilizing approximately $230\mathrm{k\Omega}$ medical instances such as Q&A pairs and doctor-patient conversations; (4) PMC-LLaMA (PMC), a two-step tuning model that was first trained on 4.8 million biomedical papers and $30\mathrm{k\Omega}$ medical textbooks, then instruction-tuned on a corpus of 202 million tokens; and (5) Baize-Healthcare (Baize-H), training with around $100\mathrm{k}$ multi-turn medical dialogues.

我们通过将AlpaCare与基于LLaMA模型的通用及医疗领域大语言模型进行对比来评估其性能。对比模型包括:(1) Alpaca,使用52kΩ通用领域机器生成样本(响应来自Text-davinci-003)进行微调;(2) ChatDoctor,基于100k真实医患对话进行微调;(3) MedAlpaca,利用约230kΩ医疗实例(如问答对和医患对话)训练;(4) PMC-LLaMA (PMC),采用两阶段微调:先在480万篇生物医学论文和30kΩ医学教科书上预训练,再在2.02亿token的指令语料上进行微调;(5) Baize-Healthcare (Baize-H),使用约100k轮多轮医疗对话训练。

Table 3: Performance on general domain tasks. AlpacaFarm is a free-form instruction evaluation, MMLU and BBH are knowledge benchmarks and TruthfulQA is a truthfulness task. ‘AVG’ denotes the average score across all tasks.

| AlpacaFarm | MMLU | BBH | TruthfulQA | AVG | |

| Alpaca | 22.7 | 40.8 | 32.4 | 25.6 | 30.4 |

| ChatDoctor | 21.2 | 34.3 | 31.9 | 27.8 | 28.8 |

| Medalpaca | 25.8 | 41.7 | 30.6 | 24.6 | 30.7 |

| PMC | 8.3 | 23.6 | 30.8 | 23.8 | 21.6 |

| Baize-H | 18.3 | 36.5 | 30.1 | 23.5 | 27.1 |

| AlpaCare | 40.7 | 45.6 | 34.0 | 27.5 | 37.0 |

表 3: 通用领域任务性能对比。AlpacaFarm是自由形式指令评估,MMLU和BBH是知识基准测试,TruthfulQA是真实性任务。"AVG"表示所有任务的平均得分。

| AlpacaFarm | MMLU | BBH | TruthfulQA | AVG | |

|---|---|---|---|---|---|

| Alpaca | 22.7 | 40.8 | 32.4 | 25.6 | 30.4 |

| ChatDoctor | 21.2 | 34.3 | 31.9 | 27.8 | 28.8 |

| Medalpaca | 25.8 | 41.7 | 30.6 | 24.6 | 30.7 |

| PMC | 8.3 | 23.6 | 30.8 | 23.8 | 21.6 |

| Baize-H | 18.3 | 36.5 | 30.1 | 23.5 | 27.1 |

| AlpaCare | 40.7 | 45.6 | 34.0 | 27.5 | 37.0 |

5 EXPERIMENT RESULTS

5 实验结果

MAIN RESULTS

主要结果

Free-form Instruction Evaluation Performance. The evaluation results for 4 reference models on both datasets are summarized in Table 1. AlpaCare outperforms its general domain counterpart, Alpaca, demonstrating that domain-specific training bolsters medical capabilities. Despite tuning with only $52\mathrm{k}$ medical instruction-response pairs, AlpaCare consistently and significantly surpasses other medical models, which are trained on considerably larger datasets, across various reference LLMs. Specifically, for average scores across reference models, AlpaCare demonstrates a relative gain of $130%$ on iCliniq and $90%$ on Med Instruct, respectively, compared to the best baselines. These results highlight the advantages of improving medical proficiency by training with a diverse, domain-specific IFT dataset. Surprisingly, medical LLMs don’t always outperform general ones in medical tasks, and some even fail to generate useful responses, possibly due to their limited training scope restricting conversational skills.

自由形式指令评估性能。表1总结了两个数据集上4个参考模型的评估结果。AlpaCare表现优于其通用领域对应模型Alpaca,表明特定领域训练能增强医疗能力。尽管仅用$52\mathrm{k}$个医疗指令-响应对进行微调,AlpaCare在不同参考大语言模型中始终显著超越其他训练数据量更大的医疗模型。具体而言,在参考模型平均分方面,与最佳基线相比,AlpaCare在iCliniq和Med Instruct上分别实现了$130%$和$90%$的相对提升。这些结果凸显了通过多样化、领域特定的指令微调(IFT)数据集提升医疗专业能力的优势。值得注意的是,医疗大语言模型在医疗任务中并不总是优于通用模型,部分甚至无法生成有效响应,可能是由于其训练范围有限制约了对话能力。

Benchmark Evaluation Performance. Table 2 presents an extensive evaluation of AlpaCare on 5 medical benchmarks. AlpaCare obtain the best performance on average, highlighting its robust capability in the medical domain. Benchmarks evaluate a model’s intrinsic knowledge(Gao et al., 2021), which is mainly gained in LLM pre training instead of instruction fine-tuning (Zhou et al., 2023). AlpaCare’s strong medical capability, combined with its superior ability to follow medical instructions, enables it to meet a wide range of medical application needs effectively.

基准评估性能。表 2 展示了 AlpaCare 在 5 个医学基准上的全面评估结果。AlpaCare 平均表现最佳,凸显了其在医学领域的强大能力。这些基准主要评估大语言模型通过预训练而非指令微调获得的内在知识 (Gao et al., 2021) (Zhou et al., 2023)。AlpaCare 卓越的医学能力,结合其出色的医疗指令遵循能力,使其能够有效满足广泛的医疗应用需求。

GENERAL IZ ABILITY EVALUATION

通用性评估

Training models with specific data may lead to catastrophic forgetting, limiting their general iz ability (Kirkpatrick et al., 2017). Our approach, instruction tuning a model with a diverse, domain-specific dataset, aims to improve its general iz ability simultaneously. We test this using AlpaCare in AlpaFarm (Dubois et al., 2023), MMLU (Hendrycks et al., 2021), BBH (Suzgun et al., 2022) and TruthfulQA (Lin et al., 2022). We compare AlpaCare with 4 reference LLMs in AlpaFarm and report the average score, and follow Chia et al. (2023) to holistic ally evaluate models’ general domain knowledge on MMLU (5-shot) and BBH (3-shot), receptively; and evaluate the model truthfulness on TruthfulQA (0-shot) with Gao et al. (2021). The results are shown in Table 3. The detailed score for each reference model on AlpaFarm and more general domain experiment are deferred to Table 13 and Table 14 in the Appendix G.

使用特定数据训练模型可能导致灾难性遗忘 (catastrophic forgetting),从而限制其泛化能力 (Kirkpatrick et al., 2017)。我们采用指令微调 (instruction tuning) 方法,通过多样化的领域专用数据集来提升模型的同时泛化能力。我们在AlpaFarm (Dubois et al., 2023)、MMLU (Hendrycks et al., 2021)、BBH (Suzgun et al., 2022) 和TruthfulQA (Lin et al., 2022) 上测试了AlpaCare的表现。在AlpaFarm中,我们将AlpaCare与4个参考大语言模型进行对比并报告平均分数;同时参照Chia等人 (2023) 的方法,分别在MMLU (5-shot) 和BBH (3-shot) 上综合评估模型的通用领域知识,并采用Gao等人 (2021) 的方案在TruthfulQA (0-shot) 上评估模型真实性。结果如 表3 所示。AlpaFarm中各参考模型的详细得分及其他通用领域实验数据详见附录G中的 表13 和 表14。

Medical LLMs often have worse or comparable results than the general LLM, Alpaca, in terms of general iz ability. However, AlpaCare significantly outperforms both medical and general domain baselines in multiple general tasks on average. Specifically, AlpaCare shows a significant relative improvement of $57.8%$ on AlpacaFarm compared to the best baseline, demonstrating strong general instruction-following ability. Moreover, AlpaCare scores higher in general knowledge tasks and maintains comparable truthfulness scores compared to other baselines, indicating strong generalization abilities due to high data diversity.

医疗大语言模型在通用能力方面往往表现不如或仅与通用大语言模型Alpaca相当。然而AlpaCare在多项通用任务中的平均表现显著优于医疗领域和通用领域的基线模型。具体而言,AlpaCare在AlpacaFarm基准测试中相对最佳基线模型实现了57.8%的显著提升,展现出强大的通用指令跟随能力。此外,AlpaCare在通用知识任务中得分更高,同时保持与其他基线模型相当的求真性分数,这表明其通过高数据多样性获得了强大的泛化能力。

ABLATION STUDY

消融实验

To further understand the effectiveness of AlpaCare, we conduct systematic ablation studies on two medical free-form instruction evaluations and report mean results of each task across 4 reference models, receptively. We defer detailed results of each reference model into Appendix G.

为进一步评估AlpaCare的有效性,我们在两项医疗自由指令评估上进行了系统消融实验,并分别报告了4个参考模型在各任务上的平均结果。各参考模型的详细结果见附录G。

Table 4: Result comparison on 13B instructiontuned models.

| Alpaca | Medalpaca | PMC | AlpaCare | |

| iCliniq | 31.3 | 3.9 | 25.4 | 54.4 |

| MedInstruct | 26.9 | 0.1 | 34.7 | 54.5 |

表 4: 13B 指令调优模型的结果对比

| Alpaca | Medalpaca | PMC | AlpaCare | |

|---|---|---|---|---|

| iCliniq | 31.3 | 3.9 | 25.4 | 54.4 |

| MedInstruct | 26.9 | 0.1 | 34.7 | 54.5 |

Table 5: Results on different LLM backbones.

| LLaMALLaMA-2LLaMA-3 | |||

| iCliniq | Alpaca | 24.4 | 30.3 26.8 |

| AlpaCare | 53.6 | 53.7 56.9 | |

| MedInstruct | Alpaca | 23.2 | 26.8 20.7 |

| AlpaCare | 53.5 | 54.2 56.6 | |

表 5: 不同大语言模型主干的结果。

| LLaMA | LLaMA-2 | LLaMA-3 | |

|---|---|---|---|

| iCliniq | Alpaca | 24.4 | 30.3 26.8 |

| AlpaCare | 53.6 | 53.7 56.9 | |

| MedInstruct | Alpaca | 23.2 | 26.8 20.7 |

| AlpaCare | 53.5 | 54.2 56.6 |

AlpaCare consistently delivers superior performance in 13B model comparisons. To explore the impact of scaling up LLM size, we fine-tune AlpaCare-13B on LLaMA-13B and compare its performance against other 13B LLMs. Results are shown in Table 4.

AlpaCare在13B模型对比中始终展现出卓越性能。为探究大语言模型规模扩展的影响,我们在LLaMA-13B上微调AlpaCare-13B,并将其与其他13B大语言模型进行性能对比。结果如表4所示。

AlpaCare-13B consistently outperforms other 13B models in both tasks. This reaffirms the conclusion drawn from the 7B model comparison: tuning models with a diverse medical IFT dataset can better align the model with user needs across different medical applications.

AlpaCare-13B 在这两项任务中持续优于其他 13B 模型。这再次验证了 7B 模型比较得出的结论:使用多样化的医疗 IFT (instruction fine-tuning) 数据集进行调优,能更好地使模型适配不同医疗应用场景下的用户需求。

AlpaCare achieves superior performance across various backbones. To explore the effect of different LLM backbones, we tune Alpaca-LLaMA2/3 and AlpaCare-LLaMA2/3 by training LLaMA2-7B (Touvron et al., 2023b) and LLaMA3-8B (AI $@$ Meta, 2024) on Alpaca data and Med Instruct $.52k$ , respectively. Table 5 compares the performance of Alpaca and AlpaCare based on different LLM backbone families.

AlpaCare 在不同骨干模型上均表现出卓越性能。为探究不同大语言模型 (LLM) 骨干的影响,我们通过分别在 Alpaca 数据和 Med Instruct $52k$ 上训练 LLaMA2-7B (Touvron et al., 2023b) 和 LLaMA3-8B (AI @ Meta, 2024) 来微调 Alpaca-LLaMA2/3 和 AlpaCare-LLaMA2/3。表 5 对比了基于不同大语言模型骨干系列的 Alpaca 和 AlpaCare 性能表现。

Consistent with the results of using LLaMA-1 as the backbone, AlpaCare-LLaMA2/3 consistently and significantly outperforms Alpaca-LLaMA2/3 in both datasets. This further underscores the backbone agnostic property of our method and emphasises tuning with a diverse medical IFT dataset can bolsters models’ medical capabilities.

与使用LLaMA-1作为主干网络的结果一致,AlpaCare-LLaMA2/3在两个数据集中持续显著优于Alpaca-LLaMA2/3。这进一步印证了我们方法的骨干网络无关特性,并表明通过多样化的医疗指令微调(IFT)数据集进行调优可增强模型的医疗能力。

AlpaCare shows robust performance across different judges. Recent studies have highlighted potential biases in the LLM evaluator (Wang et al., 2023a). ChatGPT may give a higher preference for outputs from ChatGPT and GPT-4, which are both trained by OpenAI. To robustly evaluate our method, we introduce an alternative judge, Claude-2 (Anthropic, 2023) from Anthropic, to mitigate the potential biases of relying on a single family of judges. The results are shown in Table 6.

AlpaCare在不同评估者间展现出稳健性能。近期研究指出大语言模型评估者可能存在偏差 (Wang et al., 2023a) 。ChatGPT可能更偏好同为OpenAI训练的ChatGPT和GPT-4输出。为稳健评估方法,我们引入Anthropic公司的Claude-2 (Anthropic, 2023) 作为替代评估者,以缓解依赖单一评估体系带来的潜在偏差。结果如表 6 所示。

Upon evaluation by Claude-2, it is observed that AlpaCare consistently outperforms its IFT baselines by a large margin. This aligns with findings from assessments using GPT-3.5-turbo as the judge. Such consistency underscores the superior medical proficiency of our approach.

经Claude-2评估发现,AlpaCare始终大幅领先其指令微调(IFT)基线模型。这一结果与使用GPT-3.5-turbo作为评判者的评估结论一致。这种一致性凸显了我们方法在医疗专业领域的卓越表现。

Table 6: Results evaluated by the different judge. Free-form instruction evaluation with Claude-2 as the judge.

| iCliniq | Medlnstruct | |

| Alpaca | 26.7 | 23.5 |

| ChatDoctor | 17.4 | 21.7 |

| Medalpaca | 26.7 | 23.1 |

| PMC | 1.3 | 1.8 |

| Baize-H | 25.5 | 19.8 |

| AlpaCare | 38.8 | 31.5 |

表 6: 不同评估者的结果。使用Claude-2作为评估者进行的自由形式指令评估。

| iCliniq | MedInstruct | |

|---|---|---|

| Alpaca | 26.7 | 23.5 |

| ChatDoctor | 17.4 | 21.7 |

| Medalpaca | 26.7 | 23.1 |

| PMC | 1.3 | 1.8 |

| Baize-H | 25.5 | 19.8 |

| AlpaCare | 38.8 | 31.5 |

6 HUMAN STUDY

6 人类研究

We further conduct human studies to label question-and-answer pairs in medical free-form instruction evaluation. Three annotators with MD degree in progress are involved in the study to perform pairwise comparisons for each question and answer pair. Specifically, we randomly select 50 prompts from each test set, totaling 100 prompts. These prompts, along with the responses generated by both AlpaCare-13B and PMC-13B, the best baseline in the 13B models, are presented to the annotators for evaluation. The evaluation is based on two

我们进一步开展人工研究,对医疗自由形式指令评估中的问答对进行标注。三名正在攻读医学博士学位的标注员参与研究,对每个问答对进行两两比较。具体而言,我们从每个测试集中随机选取50个提示词,共计100个提示词。这些提示词连同AlpaCare-13B和13B模型中最佳基线PMC-13B生成的响应,一并提交给标注员评估。评估基于两个

Figure 3: Human study results. Head-to-head clinician preference comparison between AlpaCare-13B and PMC-13B on (a) correctness and (b) helpfulness.

图 3: 人工研究结果。AlpaCare-13B与PMC-13B在(a)正确性和(b)有用性方面的临床医生偏好对比。

criteria: correctness and helpfulness. Correctness evaluates whether the response provides accurate medical knowledge to address question posed, while helpfulness measures the model’s ability to assist users concisely and efficiently, considering the user intent. In practical terms, an answer can be correct but not necessarily helpful if it is too verbose and lacks guidance. To determine the final result for each criterion of each evaluation instance, we employ a majority vote method. If at least two of the annotators share the same opinion, their preference is considered the final answer; otherwise, we consider the outputs of the two models to be tied. The results are shown in Figure 3.

标准:正确性和实用性。正确性评估回答是否提供准确的医学知识来解决提出的问题,而实用性衡量模型简洁高效地帮助用户的能力,同时考虑用户意图。实际上,一个答案可能正确但不一定实用,如果它过于冗长且缺乏指导性。为了确定每个评估实例的每个标准的最终结果,我们采用多数投票方法。如果至少两位标注者意见一致,则以他们的偏好为最终答案;否则,我们认为两个模型的输出结果相当。结果如图3所示。

Figure 4: Analysis of diversity in the Med Instruct-52k. In panels (a-c), the top 20 entries for topic, view, and type are displayed, respectively. Panel (d) shows the distribution of instruction medical difficulty levels. Panel (e) analyzes linguistic diversity to depict the top 20 root verbs in the inner circle and their 4 primary direct noun objects in the outer circle in the generated instructions.

图 4: Med Instruct-52k 数据集多样性分析。(a-c) 面板分别展示了主题、视角和类型的前 20 项条目。(d) 面板显示了医疗指令难度等级的分布情况。(e) 面板通过分析语言多样性,在内圈展示生成指令中出现频率最高的 20 个根动词,外圈则呈现这些动词对应的 4 个主要直接名词宾语。

Consistent with previous results, AlpaCare-13B outperforms PMC-13B in human evaluation, with $54%$ of answers preferred by expert annotators for correctness and $69%$ for helpfulness. This demonstrates AlpaCare’s superior medical capacity and practical usability. The greater improvement in helpfulness over correctness for AlpaCare is expected, as the goal of IFT is to enhance LLMs’ instruction-following ability to meet diverse user needs, rather than acquiring new knowledge.

与先前结果一致,AlpaCare-13B在人工评估中表现优于PMC-13B,专家标注者在答案正确性方面更偏好其54%的答案,在实用性方面则达到69%。这表明AlpaCare具备更卓越的医疗能力和实际应用价值。AlpaCare在实用性方面比正确性提升更显著是符合预期的,因为指令微调(IFT)的目标是增强大语言模型的指令跟随能力以满足多样化用户需求,而非获取新知识。

7 ANALYSIS & CASE STUDY

7 分析与案例研究

IFT DATASET DIVERSITY ANALYSIS

IFT 数据集多样性分析

Training a model with diverse instructions enhances its ability to follow instructions (Wang et al., 2023b). However, current medical LLMs often have training data lacking in instructional diversity, typically using repetitive instructions across different instances (Li et al., 2023b; Han et al., 2023; Wu et al., 2023). To examine the diversity in our dataset, we plot the distributions of 4 key areas for instruction generation from Med Instruct $.52k$ , shown in Figure 4 (a)- (d). Specifically, we present the top 20 topics, views and types and the difficulty levels from 1 to 5, offering insight into training data distribution. We further analyze instruction linguistic diversity by showing the root verbs and their corresponding direct-object nouns from each instruction. The top 20 root verbs and their 4 most common direct-object nouns are displayed in Figure 4 (e), representing $22%$ of the total dataset. Our findings show quite diverse medical intents and textual formats in our Med Instruct $52k$ .

使用多样化的指令训练模型能增强其遵循指令的能力 (Wang et al., 2023b)。然而当前医疗领域的大语言模型训练数据往往缺乏指令多样性,通常在不同实例中重复使用相同指令 (Li et al., 2023b; Han et al., 2023; Wu et al., 2023)。为评估数据集的多样性,我们绘制了Med Instruct $52k$ 中指令生成的4个关键维度分布,如图4(a)-(d)所示。具体展示了前20个主题、视角和类型,以及1至5级难度等级,揭示了训练数据分布特征。我们进一步通过分析指令中的根动词及其对应宾语名词来评估语言多样性。图4(e)展示了前20个根动词及其4个最常见宾语名词,覆盖了总数据集的$22%$。研究结果表明,Med Instruct $52k$ 在医疗意图和文本格式方面表现出显著多样性。

To quantitatively showcase our dataset’s diversity in comparison to IFT dataset of other medical LLMs, we calculate the linguistic entropy in the instructions of instructionfollowing datasets used for medical models. Higher entropy values signify greater diversity. Specifically, we

为了定量展示我们数据集与其他医疗大语言模型 (LLM) 的指令微调 (IFT) 数据集相比的多样性,我们计算了用于医疗模型的指令跟随数据集中指令的语言熵值。熵值越高表明多样性越强。具体来说,我们

Table 7: Quantitative comparison of linguistic diversity in medical instructional datasets. Comparing linguistic entropy of each IFT dataset for medical LLMs. The higher value represents better diversity.

| ChatDoctor | Medalpaca | PMC Baize-H | [AlpaCare | |

| Entropy | 0 | 2.85 3.45 | 5.57 |

表 7: 医疗指导数据集中语言多样性的量化比较。对比各医疗大语言模型IFT数据集的语言熵值,数值越高代表多样性越好。

| ChatDoctor | Medalpaca | PMC Baize-H | [AlpaCare | |

|---|---|---|---|---|

| Entropy | 0 | 2.85 3.45 | 5.57 |

analyze the top 20 root verbs and their 4 primary direct noun objects for each dataset and calculate verb-noun pair entropy, as shown in Table 7. AlpaCare’s dataset, Med Instruct $.52K$ , exhibits the highest entropy, underscoring its superior diversity, which in turn better elicits the model’s instruction-following capabilities during IFT.

分析各数据集中前20个根动词及其对应的4个主要直接名词宾语,并计算动词-名词对的熵值,如表7所示。AlpaCare的数据集Med Instruct $.52K$ 展现出最高的熵值,凸显其卓越的多样性,进而在指令微调(IFT)过程中更能激发模型的指令遵循能力。

Figure 5: Case Study of 13B models of AlpaCare and PMC on (a) correctness and (b) helpfulness. Instruction key points and primary responses are highlighted in blue and yellow, respectively. GENERATION CASE STUDY

图 5: AlpaCare与PMC的13B模型在(a)正确性和(b)实用性上的案例分析。指令要点和主要回答分别用蓝色和黄色高亮标注。生成案例研究

We randomly selected one win case from Med Instruct-test for correctness and another for helpfulness, as described in Section 6. Figure 5 displays the instructions and outputs of the base model, LLAMA13B, and 13B medical models, AlpaCare and PMC.

我们随机从Med Instruct-test中选取了一个正确性案例和一个实用性案例,如第6节所述。图5展示了基础模型、LLAMA13B以及两款13B医学模型(AlpaCare和PMC)的指令与输出结果。

Figure 5(a) illustrates a correctness case with a high medical difficulty level, where LLAMA-13B struggles to provide accurate responses, while Medical LLMs show improvement. However, PMC provides a general overview, mentioning common causes like stress and hormonal imbalances, and uses vague terms such as ’certain medical conditions,’ which lack specificity and do not provide actionable medical insights. In contrast, AlpaCare gives a detailed analysis, identifying specific conditions like hypo thyroid is m and iron deficiency anemia, and emphasizes the importance of medical attention for severe symptoms, enhancing the guidance’s precision and action ability.

图 5(a) 展示了一个医学难度较高的正确案例:LLAMA-13B 难以给出准确回答,而医疗大语言模型表现有所提升。不过 PMC 仅提供笼统概述,提及压力、荷尔蒙失调等常见诱因,并使用"特定医疗状况"等模糊表述,缺乏针对性且未给出可操作的医学建议。相比之下,AlpaCare 给出了详细分析,明确指出甲状腺功能减退和缺铁性贫血等具体病症,并强调严重症状需及时就医,显著提升了指导的精确性和可操作性。

Figure 5(b) shows a helpfulness case study. Both the base and medical models accurately describe Metoprolol, but LLAMA and PMC disregard the requested bullet-point format, reducing clarity. In contrast, AlpaCare effectively follows the instructions with well-organized formatting. This demonstrates that fine-tuning with a diverse medical IFT dataset enhances the model’s ability to follow instructions, thereby increasing helpfulness.

图 5(b) 展示了一个实用性案例研究。基础模型和医疗模型都能准确描述美托洛尔 (Metoprolol) ,但 LLAMA 和 PMC 忽略了要求的项目符号格式,降低了清晰度。相比之下,AlpaCare 有效地遵循了指令并采用了组织良好的格式。这表明通过多样化的医疗 IFT (Instruction Fine-Tuning) 数据集进行微调,能增强模型遵循指令的能力,从而提高实用性。

8 CONCLUSION

8 结论

In this paper, we propose a semi-automated pipeline using GPT-4 and ChatGPT to create the diverse Med Instruct $52k$ dataset for LLM tuning. Extensive experiments on multiple benchmarks show that when trained on this dataset, our model, AlpaCare, demonstrates stronger medical and general instruction-following capabilities compared to medical LLM baselines, underscoring the importance of data diversity in medical AI model development.

本文提出了一种利用GPT-4和ChatGPT构建多样化医疗指令数据集Med Instruct $52k$的半自动化流程,用于大语言模型调优。在多个基准测试上的实验表明,基于该数据集训练的AlpaCare模型相比医疗大语言模型基线展现出更强的医疗指令遵循和通用指令跟随能力,印证了数据多样性对医疗AI模型开发的关键作用。

REFERENCES

参考文献

AI@Meta. Llama 3 model card. 2024. URL https://github.com/meta-llama/llama3/ blob/main/MODEL_ CARD.md.

AI@Meta. Llama 3 模型卡. 2024. 网址 https://github.com/meta-llama/llama3/ blob/main/MODEL_ CARD.md.

Anthropic. Claude 2, 2023. URL https://www.anthropic.com/index/claude-2.

Anthropic. Claude 2, 2023. URL https://www.anthropic.com/index/claude-2.

Amanda Askell, Yuntao Bai, Anna Chen, Dawn Drain, Deep Ganguli, Tom Henighan, Andy Jones, Nicholas Joseph, Ben Mann, Nova DasSarma, Nelson Elhage, Zac Hatfield-Dodds, Danny Hernandez, Jackson Kernion, Kamal Ndousse, Catherine Olsson, Dario Amodei, Tom Brown, Jack Clark, Sam McCandlish, Chris Olah, and Jared Kaplan. A general language assistant as a laboratory for alignment, 2021.

Amanda Askell、Yuntao Bai、Anna Chen、Dawn Drain、Deep Ganguli、Tom Henighan、Andy Jones、Nicholas Joseph、Ben Mann、Nova DasSarma、Nelson Elhage、Zac Hatfield-Dodds、Danny Hernandez、Jackson Kernion、Kamal Ndousse、Catherine Olsson、Dario Amodei、Tom Brown、Jack Clark、Sam McCandlish、Chris Olah 和 Jared Kaplan。《通用语言助手作为对齐研究的实验平台》,2021年。

Asma Ben Abacha and Dina Demner-Fushman. On the sum mari z ation of consumer health questions. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pp. 2228–2234, Florence, Italy, July 2019. Association for Computational Linguistics. doi: 10.18653/v1/P19-1215. URL https://aclanthology.org/P19-1215.

Asma Ben Abacha 和 Dina Demner-Fushman。关于消费者健康问题的摘要生成。见《第57届计算语言学协会年会论文集》,第2228–2234页,意大利佛罗伦萨,2019年7月。计算语言学协会。doi: 10.18653/v1/P19-1215。URL https://aclanthology.org/P19-1215。

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neel a kant an, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. Language models are few-shot learners, 2020.

Tom B. Brown、Benjamin Mann、Nick Ryder、Melanie Subbiah、Jared Kaplan、Prafulla Dhariwal、Arvind Neelakantan、Pranav Shyam、Girish Sastry、Amanda Askell、Sandhini Agarwal、Ariel Herbert-Voss、Gretchen Krueger、Tom Henighan、Rewon Child、Aditya Ramesh、Daniel M. Ziegler、Jeffrey Wu、Clemens Winter、Christopher Hesse、Mark Chen、Eric Sigler、Mateusz Litwin、Scott Gray、Benjamin Chess、Jack Clark、Christopher Berner、Sam McCandlish、Alec Radford、Ilya Sutskever 和 Dario Amodei。语言模型是少样本学习者,2020年。

Lichang Chen, Shiyang Li, Jun Yan, Hai Wang, Kalpa Gunaratna, Vikas Yadav, Zheng Tang, Vijay Srinivasan, Tianyi Zhou, Heng Huang, and Hongxia Jin. Alpagasus: Training a better alpaca with fewer data, 2023.

Lichang Chen、Shiyang Li、Jun Yan、Hai Wang、Kalpa Gunaratna、Vikas Yadav、Zheng Tang、Vijay Srinivasan、Tianyi Zhou、Heng Huang 和 Hongxia Jin。Alpagasus: 用更少数据训练更好的Alpaca,2023。

Zhiyu Zoey Chen, Jing Ma, Xinlu Zhang, Nan Hao, An Yan, Armineh Nourbakhsh, Xianjun Yang, Julian McAuley, Linda Petzold, and William Yang Wang. A survey on large language models for critical societal domains: Finance, healthcare, and law, 2024. URL https://arxiv.org/ abs/2405.01769.

Zhiyu Zoey Chen、Jing Ma、Xinlu Zhang、Nan Hao、An Yan、Armineh Nourbakhsh、Xianjun Yang、Julian McAuley、Linda Petzold 和 William Yang Wang。大语言模型在关键社会领域的应用综述:金融、医疗和法律,2024。URL https://arxiv.org/abs/2405.01769。

Yew Ken Chia, Pengfei Hong, Lidong Bing, and Soujanya Poria. Instruct e val: Towards holistic evaluation of instruction-tuned large language models, 2023.

Yew Ken Chia、Pengfei Hong、Lidong Bing 和 Soujanya Poria。Instruct e val: 面向指令调优大语言模型的整体评估,2023。

Wei-Lin Chiang, Zhuohan Li, Zi Lin, Ying Sheng, Zhanghao Wu, Hao Zhang, Lianmin Zheng, Siyuan Zhuang, Yonghao Zhuang, Joseph E. Gonzalez, Ion Stoica, and Eric P. Xing. Vicuna: An open-source chatbot impressing gpt-4 with $90%^{\ast}$ chatgpt quality, March 2023. URL https: //lmsys.org/blog/2023-03-30-vicuna/.

Wei-Lin Ch