HuatuoGPT-II, One-stage Training for Medical Adaption of LLMs

HuatuoGPT-II,大语言模型医学适配的一站式训练方案

Abstract

摘要

Adapting a language model (LM) into a specific domain, a.k.a ‘domain adaption’, is a common practice when specialized knowledge, e.g. medicine, is not encapsulated in a general language model like Llama2. This typically involves a two-stage process including continued pre-training and supervised fine-tuning. Implementing a pipeline solution with these two stages not only introduces complexities (necessitating dual meticulous tuning) but also leads to two occurrences of data distribution shifts, exacerbating catastrophic forgetting. To mitigate these, we propose a one-stage domain adaption protocol where heterogeneous data from both the traditional pre-training and supervised stages are unified into a simple instructionoutput pair format to achieve efficient knowledge injection. Subsequently, a data priority sampling strategy is introduced to adaptively adjust data mixture during training. Following this protocol, we train HuatuoGPT-II, a specialized LLM for the medical domain in Chinese. HuatuoGPT-II achieve competitive performance with GPT4 across multiple benchmarks, which especially shows the state-of-the-art (SOTA) performance in multiple Chinese medical benchmarks and the newest pharmacist licensure examinations. Furthermore, we explore the phenomenon of onestage protocols, and the experiments reflect that the simplicity of the proposed protocol improves training stability and domain generalization. Our code, data, and models are available at https://github.com/Freedom Intelligence/HuatuoGPT-II.

将语言模型 (LM) 适配到特定领域(即"领域适应"),是当通用语言模型(如 Llama2)未涵盖专业知识(例如医学)时的常见做法。这通常涉及持续预训练和监督微调的两阶段流程。采用这种两阶段管道方案不仅会引入复杂性(需要双重精细调优),还会导致两次数据分布偏移,加剧灾难性遗忘。为缓解这些问题,我们提出一种单阶段领域适应协议:将传统预训练和监督阶段的异构数据统一为简单的指令-输出对格式,从而实现高效知识注入。随后引入数据优先级采样策略,在训练期间自适应调整数据混合比例。基于该协议,我们训练了中文医疗领域专用大语言模型 HuatuoGPT-II。该模型在多项基准测试中与 GPT4 表现相当,尤其在多个中文医疗基准和最新执业药师资格考试中展现出最先进 (SOTA) 性能。此外,我们探索了单阶段协议现象,实验表明该协议的简洁性提升了训练稳定性和领域泛化能力。代码、数据及模型详见 https://github.com/FreedomIntelligence/HuatuoGPT-II 。

1 Introduction

1 引言

Currently, general large language model (LLM), such as the Llama series (Touvron et al., 2023), are developing rapidly. Simultaneously, in some vertical domains1, some researchers (Cui et al., 2023; Yang et al., 2023c; Li et al., 2023a) attempt to develop specialized models. Specialized models have the potential to yield results comparable to those of larger models by utilizing a medium-sized model through the exclusion of certain general knowledge (Wang et al., 2024; Chen et al., 2023b; Yang et al., 2023c). For instance, financial knowledge may not be sufficiently usefully in the medical field and can be therefore omitted in moderately-sized medical LLMs, thereby freeing up more capacity for memorizing medical knowledge.

目前,通用大语言模型(如Llama系列 [Touvron等人,2023])正在快速发展。与此同时,在某些垂直领域,部分研究者 [Cui等人,2023; Yang等人,2023c; Li等人,2023a] 尝试开发专用模型。通过排除部分通用知识,专用模型有望利用中等规模模型获得与更大模型相当的结果 [Wang等人,2024; Chen等人,2023b; Yang等人,2023c]。例如,金融知识在医疗领域可能不够实用,因此可以在中等规模的医疗大语言模型中省略这部分内容,从而释放更多容量用于记忆医学知识。

The two-stage protocol Adaption of general large language models in vertical domains typically involves two stages: continued pre-training and supervised fine-tuning (SFT) (Wang et al., 2023a). For this adaption in medicine domain, continued pre-training aims to inject specialized knowledge, such as medical expertise, while supervised fine-tuning seeks to activate the ability to follow medical instruction, as stated in Zhou et al., 2023.

通用大语言模型在垂直领域的适配通常分为两个阶段:持续预训练和监督微调(SFT) (Wang et al., 2023a)。针对医学领域的适配,持续预训练旨在注入专业知识(如医学知识),而监督微调则致力于激活模型遵循医学指令的能力(Zhou et al., 2023)。

Issues of the two-stage protocol In the two-stage adaption, LLMs face two key challenge: 1) the difference in continual pre-training and supervised fine-tuning optimization objectives, and 2) the inherent difference in data distribution from general pre-training to continued pre-training. Therefore, the two-stage process suggests that the LLM experiences dual shifts in data distribution. As stated in Goodfellow et al., 2013, catastrophic forgetting occurs when neural networks learn multiple sequential tasks in a pipeline, resulting in the loss of knowledge from previously learned tasks. This problem worsens when the two-stage training involves significantly divergent data distributions and objectives (Bhat et al., 2022). Specifically, Cheng et al., 2023 argues continued pre-training on domain-specific corpora reduces the LLM’s prompting capabilities. Secondly, the two-stage training pipeline adds complexity due to the interdependence of its stages. This intricacy not only complicates the optimization process but also limits s cal ability and adaptability. Each stage possesses distinct hyper parameters such as batch size, learning rate, and warmup procedures. These parameters necessitate careful manual selection through rigorous experimentation.

两阶段协议的问题

在两阶段适应过程中,大语言模型面临两大关键挑战:1) 持续预训练与监督微调优化目标之间的差异,2) 从通用预训练到持续预训练的数据分布固有差异。因此,两阶段流程意味着大语言模型会经历数据分布的双重偏移。如Goodfellow等人在2013年所述,当神经网络以流水线方式学习多个连续任务时,会导致先前任务知识的丢失,即灾难性遗忘。当两阶段训练涉及显著不同的数据分布和目标时,这一问题会加剧 (Bhat等人, 2022)。具体而言,Cheng等人在2023年指出,针对特定领域语料库的持续预训练会削弱大语言模型的提示能力。其次,两阶段训练流程因其阶段的相互依赖性而增加了复杂性。这种复杂性不仅使优化过程更困难,还限制了扩展能力和适应性。每个阶段都具有独特的超参数,例如批量大小、学习率和预热过程,这些参数需要通过严格实验进行谨慎的手动选择。

The proposed one-stage protocol Following the philosophy of Parsimony, this work proposes a simpler protocol of domain adaption that unifies the two stages (continued pre-training and SFT) into a single stage. The core recipe is to transform domain-specific pre-training data into a unified format similar to fine-tuning data: a straightforward (instruction, output) pairs. This strategy diverges from the conventional dependence on unsupervised learning in continued pre-training, opting instead for a focused learning goal that emphasizes eliciting knowledge-driven responses to given instructions. The reformulated data is subsequently merged with fine-tuning data to facilitate one-stage domain adaption. In this process, we introduce a data priority sampling strategy aimed at initially learning domain knowledge from pre-training data and then progressively shifting focus to downstream fine-tuning data. This approach enhances the model’s capability to utilize domain knowledge effectively.

遵循简约原则,本文提出了一种更简单的领域适配协议,将两阶段(持续预训练和SFT)统一为单阶段。核心方法是将领域特定的预训练数据转换为类似微调数据的统一格式:简单的(指令,输出)对。该策略不同于持续预训练中对无监督学习的传统依赖,而是选择强调激发对给定指令的知识驱动响应的聚焦学习目标。重构后的数据随后与微调数据合并,以促进单阶段领域适配。在此过程中,我们引入了一种数据优先级采样策略,旨在首先从预训练数据中学习领域知识,然后逐步将重点转移到下游微调数据。这种方法增强了模型有效利用领域知识的能力。

Verification for the new protocol To verify the one-stage protocol, we experiment on Chinese healthcare 2 where ChatGPT and GPT-4 perform relatively poorly (Wang et al., 2023a). Leveraging the proposed protocol, we train a Chinese medical language model, HuatuoGPT-II. Inspired by back-translation (Li et al., 2023d), we employ the prowess of LLMs for data unification, where all diverse and multilingual pre-training data are converted to Chinese instructions with a consistent style. This stage bridges the gap between two-stage data, especially for training LLMs in unpopular languages, where English data is overwhelmingly more abundant and high-quality. Subsequently, a priority sampling strategy is used to integrate pre-training and SFT instructions for domain adaption. We believe this unified domain adaptation protocol can be similarly effective in other specialized areas such as finance and law, as well as in different languages.

验证新协议

为验证单阶段协议的有效性,我们在中文医疗领域展开实验(该领域ChatGPT和GPT-4表现较差 [Wang et al., 2023a])。基于该协议,我们训练了中文医疗大语言模型华佗GPT-II (HuatuoGPT-II)。受反向翻译技术 [Li et al., 2023d] 启发,我们利用大语言模型实现数据统一化——将所有多语言预训练数据转换为风格统一的中文指令。这一阶段弥合了双阶段数据间的鸿沟,尤其适用于训练小语种大语言模型(当前英语数据在数量和质量上占据绝对优势)。随后采用优先级采样策略整合预训练与监督微调(SFT)指令,实现领域适配。我们相信这种统一的领域适配协议同样适用于金融、法律等其他专业领域及不同语种场景。

Experimental Results Experimental results demonstrate that HuatuoGPT-II, a new Chinese medical language model, outperforms other open-source models and rivals proprietary ones like GPT-4 and ChatGPT in some Chinese medical Benchmarks. In expert manual evaluations, HuatuoGPT-II shows a remarkable win rate of $38%$ and tie in another $38%$ against GPT-4. Moreover, in a recent and untouched Chinese National Pharmacist Licensure Examination (2023), HuatuoGPTII’s superiority over other models, highlighting its specialized effectiveness in the medical field.

实验结果

实验结果表明,新型中文医疗大语言模型 HuatuoGPT-II 在部分中文医疗基准测试中表现优于其他开源模型,并与 GPT-4、ChatGPT 等专有模型不相上下。在专家人工评估中,HuatuoGPT-II 对 GPT-4 的胜率高达 $38%$,另有 $38%$ 结果为平局。此外,在最新的中国国家执业药师资格考试 (2023) 中,HuatuoGPT-II 的表现显著优于其他模型,突显了其在医疗领域的专业有效性。

Additionally, in a spectrum of evaluation methods, the one-stage protocol of HuatuoGPT-II prove more effective than the traditional two-stage training paradigm.

此外,在一系列评估方法中,HuatuoGPT-II的单阶段训练协议被证明比传统的两阶段训练范式更有效。

Contributions The key contributions are:

贡献 主要贡献包括:

2 Data Collection

2 数据收集

Domain data is typically divided into two parts: pre-training corpora and fine-tuning instructions.

领域数据通常分为两部分:预训练语料和微调指令。

Medical Pre-training Corpus Domain corpus is pivotal for augmenting domain-specific expertise. We collect 1.1TB of both Chinese and English corpus, sourced from encyclopedias, books, literature, and web corpus, blending general corpora like C4 and specialized corpora such as PubMed. All the corpora are publicly accessible, detailed in Appendix C. Then, a meticulous collection pipeline are established for curating high-quality domain data, detailed in the Appendix C. As a result, we obtained 5,252,894 premium medical documents for the pre-training corpus.

医学预训练语料库

领域语料库对于增强特定领域的专业知识至关重要。我们收集了1.1TB的中英文语料,来源包括百科全书、书籍、文献和网络语料,融合了C4等通用语料和PubMed等专业语料。所有语料均公开可获取,详见附录C。随后,我们建立了一套精细的收集流程以筛选高质量领域数据(附录C详述),最终获得5,252,894份优质医学文档用于预训练语料库。

Medical Fine-Tuning Instructions For the fine-tuning instruction, We acquire 142K real-world medical questions as instructions from Huatuo-26M (Li et al., 2023c), and had GPT-4 respond to them as outputs. The fine-tuning instruction is utilized to generalize the model’s capability to interact with users within the domain.

医学微调指令

对于微调指令,我们从Huatuo-26M (Li et al., 2023c) 中获取了142K个真实世界的医学问题作为指令,并由GPT-4生成对应的回答作为输出。该微调指令用于提升模型在医学领域与用户交互的泛化能力。

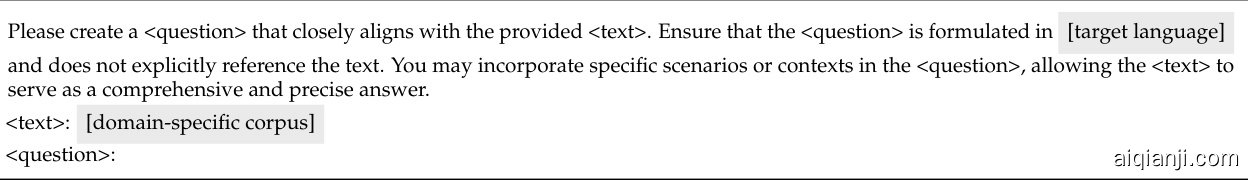

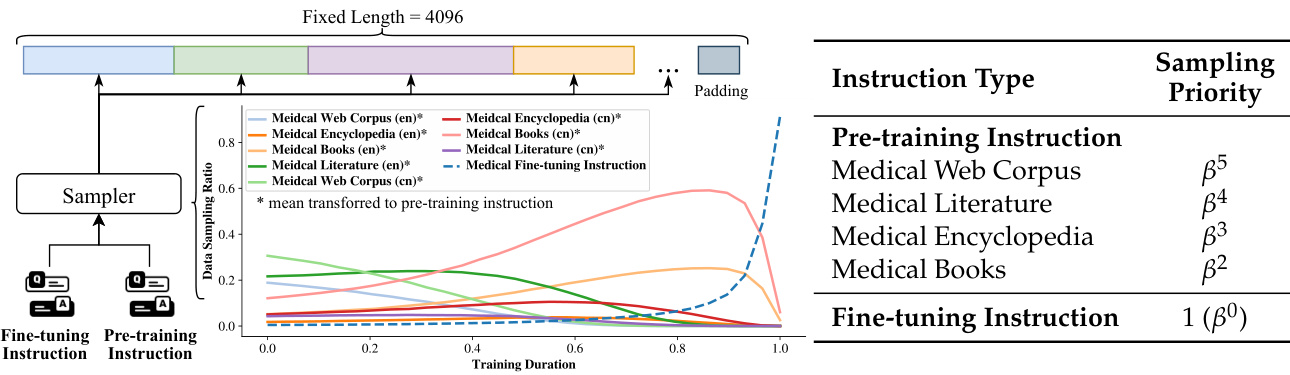

Figure 1: Schematic of One-stage Adaption of HuatuoGPT-II.

图 1: HuatuoGPT-II 单阶段适配示意图。

3 One-stage Adaption

3 单阶段适配

One-stage Adaption strategy aims to unify the conventional Two-stage Adaption process (continued pre-training and supervised fine-tuning) into a single stage, as shown in Figure 1. The adaption process can be executed in two succinct steps: 1) Data unification and 2) One-stage training.

一阶段适应策略旨在将传统的两阶段适应过程(持续预训练和监督微调)统一为单一阶段,如图 1 所示。适应过程可通过两个简洁步骤执行:1) 数据统一和 2) 一阶段训练。

Subsequently, we will detail our method for adapting to the Chinese healthcare sector and developing and the development of HuatuoGPT-II.

接下来,我们将详细介绍如何针对中国医疗行业进行适配,并开发HuatuoGPT-II。

3.1 Data Unification

3.1 数据统一

Domain pre-training corpus is pivotal for augmenting domain-specific expertise. However, it faces challenges such as diverse languages and genres, punctuation errors, and ethical concerns in its pre-training corpus. Particularly, there’s a noticeable discrepancy between its unsupervised training and the supervised instruction learning in Supervised Fine-Tuning (SFT). Data Unification aims to unify this data into a consistent format, aligning it with SFT data. Traditional methods like those in Cheng et al., 2023 fall short due to the variation in language and genre. Hence, we leverage Large Language Models to achieve effective data unification.

领域预训练语料对于增强特定领域的专业知识至关重要。然而,其预训练语料面临着语言和体裁多样、标点错误以及伦理问题等挑战。特别是在无监督训练与监督微调(SFT)中的监督指令学习之间存在明显差异。数据统一旨在将这些数据转换为统一格式,使其与SFT数据保持一致。传统方法如Cheng等人在2023年提出的方案因语言和体裁的多样性而效果有限。因此,我们利用大语言模型来实现有效的数据统一。

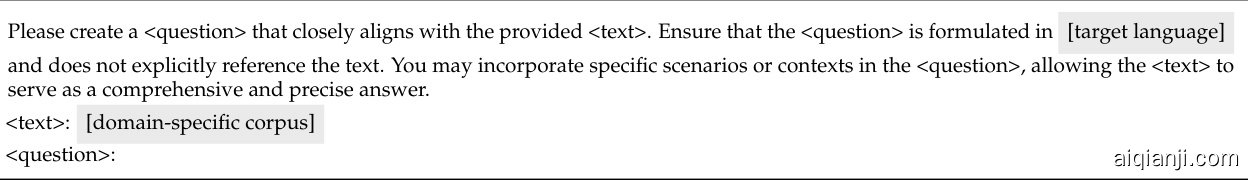

Our method of data unification is straightforward yet effective. We generate instructions based on the text of the corpus, and then we generate responses based on both the corpus and the instructions. The prompt for generating instructions is shown in Figure 2.

我们的数据统一方法简单而有效。我们基于语料库文本生成指令,然后根据语料库和指令生成响应。生成指令的提示如图 2 所示。

Figure 2: The prompt for question generation. [target language] is Chinese, and [domain-specific corpus] refers to a corpus in the domain-specific pre-training corpora.

图 2: 问题生成的提示语。[target language] 是中文,[domain-specific corpus] 指领域专用预训练语料库中的语料。

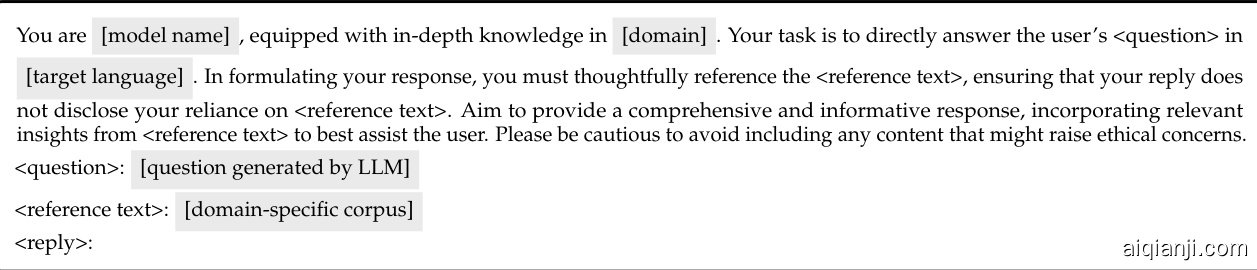

After obtaining questions from the corpus text, we use the prompt, shown in Figrue 3, to let a Large Language Model (LLM) generate responses based on the questions and the corpus. Therefore, all pre-training corpora are converted into a instruction-output format, identical to our single-turn SFT data. An example of our final SFT data is shown in Table 7.

从语料文本中获取问题后,我们使用图3所示的提示词(prompt)让大语言模型(LLM)基于问题和语料生成回答。因此,所有预训练语料都被转换为指令-输出格式,与我们的单轮监督微调(SFT)数据格式一致。最终监督微调数据的示例如表7所示。

Here, we use ChatGPT as the LLM for data unification, converting all the medical corpus into instructions of the same language and genre. As detailed in Appendix F, Data Unification can be achieved independently of external LLMs. In Appendix E, we verified that the selection of LLM for data unification is inconsequential. This strategy also mitigates potential ethical concerns inherent in the corpus. Additionally, we also use statistical and semantic recognition to keep the model-generated answers close to the data’s original content, as explained in Appendix H.

在此,我们采用ChatGPT作为大语言模型(LLM)进行数据统一,将所有医学语料转换为相同语言风格和类型的指令。如附录F所述,数据统一可不依赖外部大语言模型独立实现。附录E验证了数据统一环节选择不同大语言模型对结果无显著影响。该策略还能规避语料库中潜在的伦理问题。此外,如附录H所阐释,我们通过统计分析与语义识别技术确保模型生成答案贴近原始数据内容。

3.2 One-stage Training

3.2 单阶段训练

In the one-stage training process, we integrate data from the Medical Pre-training Instruction and Medical Fine-tuning Instruction datasets to form dataset $D$ . The traditional two-stage pipeline adopts a completely separate form, first learning knowledge and then learning instructions to

在一阶段训练过程中,我们将医学预训练指令(Medical Pre-training Instruction)和医学微调指令(Medical Fine-tuning Instruction)数据集整合形成数据集$D$。传统两阶段流程采用完全分离的形式,先学习知识再学习指令。

Figure 3: Prompt for answer generation. [model name] refers to HuatuoGPT-II, [domain] is medicine, and [question generated by LLM]] is the previously text-derived query.

图 3: 答案生成的提示语。[model name]指代华佗GPT-II,[domain]为医学领域,[question generated by LLM]]是此前基于文本生成的查询。

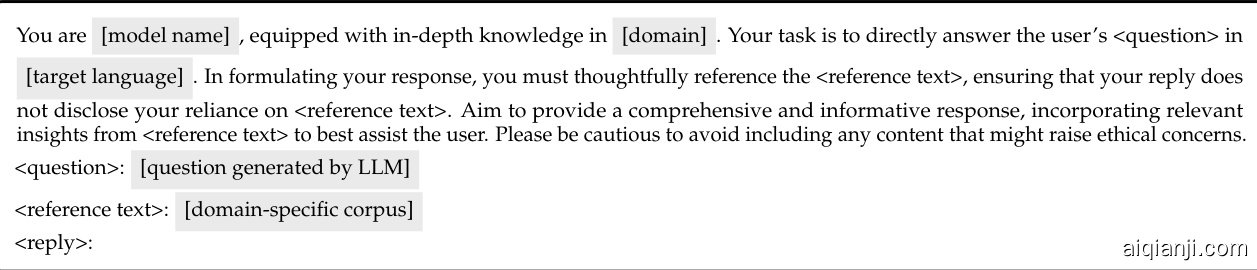

Figure 4: One-stage training process and sampling priority. The diagram (left) illustrates the onestage training methodology. The table (right) details the sampling priority for various instructional data types, with the $\beta$ (set as 2) dictating the relative priority. Higher $\beta$ values indicate a more sequential sampling approach, whereas lower values suggest a blended strategy.

图 4: 单阶段训练流程与采样优先级。示意图(左)展示了单阶段训练方法。表格(右)详细列出了不同指令数据类型的采样优先级,其中$\beta$(设为2)决定相对优先级。$\beta$值越高表示采用更顺序化的采样方式,而较低值则代表混合策略。

follow. It exacerbates the catastrophic forgetting of the knowledge acquired in the first stage (Bhat et al., 2022). Referring to Touvron et al., 2023, simply mixing all data could hinder the ability of Large Language Models (LLMs). To address this, we introduce a priority sampling strategy in this study. This strategy starts with domain knowledge, gradually transitioning to fine-tuning data while reducing the sampling ratio of previously learned data over time.

遵循这一做法会加剧第一阶段所学知识的灾难性遗忘 (Bhat et al., 2022) 。参考 Touvron 等人 2023 年的研究,简单地混合所有数据可能会阻碍大语言模型 (LLM) 的能力。为此,我们在本研究中引入了优先级采样策略。该策略从领域知识入手,逐步过渡到微调数据,同时随时间推移降低已学习数据的采样比例。

In the priority sampling strategy, the sampling probability of each data $x\in D$ changes over the course of training. The sampling probability of data $x$ at step $t$ during training is:

在优先级采样策略中,每个数据$x\in D$的采样概率会随着训练过程动态变化。训练第$t$步时数据$x$的采样概率为:

$$

P_ {t}(x)=\frac{\pi(x)}{\sum_ {y\in D-S_ {t}}\pi(y)}

$$

$$

P_ {t}(x)=\frac{\pi(x)}{\sum_ {y\in D-S_ {t}}\pi(y)}

$$

Here, $\pi(x)$ denotes the priority of element $x,$ , and $S_ {t}$ represents the sampled data before step t. The priority setting and data sampling distribution are delineated in Figure 4. Notably, the priority $\overset{\cdot}{\pi}(x)$ is static, whereas the sampling probabilities of each data source dynamically changes. More precisely, consider data sources $D_ {1}^{t},\bar{D}_ {2}^{t},\ldots,D_ {n}^{t}\subseteq D-S^{t}$ at time $t_ {.}$ , each with an assigned priority $\pi({\boldsymbol{x}}\in D_ {i}^{t})=\beta^{K_ {i}}$ . The probability of selecting an element from $D_ {i}^{t}$ is given by:

这里,$\pi(x)$ 表示元素 $x$ 的优先级,$S_ {t}$ 代表第 t 步前的采样数据。优先级设置与数据采样分布如图 4 所示。值得注意的是,优先级 $\overset{\cdot}{\pi}(x)$ 是静态的,而每个数据源的采样概率会动态变化。更准确地说,考虑时间 $t_ {.}$ 时的数据源 $D_ {1}^{t},\bar{D}_ {2}^{t},\ldots,D_ {n}^{t}\subseteq D-S^{t}$ ,每个数据源被赋予优先级 $\pi({\boldsymbol{x}}\in D_ {i}^{t})=\beta^{K_ {i}}$ 。从 $D_ {i}^{t}$ 中选择元素的概率由下式给出:

$$

P_ {t}(x\in D_ {i}^{t})=\frac{|D_ {i}^{t}|\beta^{K_ {i}}}{\sum_ {j=1}^{n}|D_ {j}^{t}|\beta^{K_ {j}}}

$$

After an element is selected from $D_ {i}^{t},$ the size of $D_ {i}^{t+1}$ becomes $|D_ {i}^{t+1}|=|D_ {i}^{t}|-1,$ , resulting in: $P_ {t+1}(x\in D_ {i.}^{t+1})<P_ {t}(x\in D_ {i}^{t})$ . Therefore, each selection from $D_ {i}$ slightly decreases its sampling probability, leading to a dynamic update. The parameter $\beta$ plays a crucial role in adjusting the sampling intensity among high-priority sources, with higher $\beta$ values favoring sequential sampling, while lower values encourage mixed sampling.

从 $D_ {i}^{t}$ 中选定一个元素后,$D_ {i}^{t+1}$ 的大小变为 $|D_ {i}^{t+1}|=|D_ {i}^{t}|-1$,从而得到:$P_ {t+1}(x\in D_ {i.}^{t+1})<P_ {t}(x\in D_ {i}^{t})$。因此,每次从 $D_ {i}$ 中进行选择都会略微降低其采样概率,实现动态更新。参数 $\beta$ 在调整高优先级数据源间的采样强度中起关键作用,$\beta$ 值越高越倾向于顺序采样,而较低值则促进混合采样。

To enable the model to first learn domain capabilities and then gradually shift to instruction interaction learning, we assign a higher priority to pre-training instruction data. Furthermore, to facilitate the model’s transition from low to high knowledge density learning, we assign different priorities to the four types of data sources. Appendix I shows details of how we determine sampling priority values for different types of data.

为了让模型先学习领域能力再逐步转向指令交互学习,我们为预训练指令数据分配了更高优先级。此外,为促进模型从低知识密度向高知识密度学习过渡,我们对四类数据源设定了不同优先级。附录I详细展示了如何确定不同类型数据的采样优先级值。

4 Experiments

4 实验

4.1 Experimental settings

4.1 实验设置

Base Model & Setup HuatuoGPT-II, tailored for Chinese healthcare, builds upon the foundations o f the Baichuan2-7B-Base and Baichuan2-13B-Base models. Since all data consists solely of singleround format instruction, we enrich the Medical Fine-tuning Instruction dataset by integrating ShareGPT data 3. This allows HuatuoGPT-II to support multi-round dialogues while maintaining its general domain capabilities. For training details, please see the Appendix J.

基础模型与配置

华佗GPT-II (HuatuoGPT-II) 专为中文医疗场景设计,基于百川2-7B基础版 (Baichuan2-7B-Base) 和百川2-13B基础版 (Baichuan2-13B-Base) 模型构建。由于原始数据仅包含单轮指令格式,我们通过整合ShareGPT数据[3]扩充了医疗微调指令数据集,使华佗GPT-II在保持通用领域能力的同时支持多轮对话。训练细节详见附录J。

Baselines We compare HuatuoGPT-II with several leading general large language models known for their excellent general chat capabilities in Chinese. These models are Baichuan2-7B/13BChat(Yang et al., 2023a), Qwen-7B/14B-Chat(Bai et al., 2023) and ChatGLM3-6B(Zeng et al., 2023). Additionally, for the Chinese medical context, we carefully select DISC-MedLLM(Bao et al., 2023) and HuatuoGPT(Zhang et al., 2023) based on an experimental experiment detailed in Appendix R. We also consider leading proprietary models, including ERNIE Bot ( 文心一言) (Sun et al., 2021), ChatGPT(OpenAI, 2022), and GPT $4^{4}$ (OpenAI, 2023), noted for their extensive parameters and superior performance. For the details of these models, please refer to Appendix M.

基线模型

我们将华佗GPT-II (HuatuoGPT-II) 与多款以优秀中文通用对话能力著称的主流大语言模型进行对比,包括百川2-7B/13B-Chat (Yang et al., 2023a)、通义千问-7B/14B-Chat (Bai et al., 2023) 和ChatGLM3-6B (Zeng et al., 2023)。针对中文医疗场景,基于附录R的实验筛选出DISC-MedLLM (Bao et al., 2023) 和华佗GPT (Zhang et al., 2023) 作为对照。同时纳入参数规模与性能领先的闭源模型:文心一言 (Sun et al., 2021)、ChatGPT (OpenAI, 2022) 以及GPT-4 (OpenAI, 2023)。各模型详情参见附录M。

4.2 Medical Benchmark

4.2 医疗基准测试

In this section, we evaluate the medical capabilities of HuatuoGPT-II on popular benchmarks. We focus on four medical benchmarks (MedQA, MedMCQA, CMB, CMExam) and two general benchmarks (C-Eval, CMMLU), focusing specifically on their medical components. See Appendix K for more details on these benchmarks. To ensure fairness, we use the most straightforward prompt for all the models, as shown in Appendix K.

在本节中,我们评估了HuatuoGPT-II在主流基准测试中的医疗能力。我们重点关注四个医疗基准(MedQA、MedMCQA、CMB、CMExam)和两个通用基准(C-Eval、CMMLU)的医疗相关部分。关于这些基准的详细信息请参阅附录K。为确保公平性,我们为所有模型使用最直接的提示方式,如附录K所示。

Medical Benchmark As shown in Table 1, the benchmark results highlight HuatuoGPT-II’s impressive proficiency in the medical domain. Its exceptional performance in Chinese medical benchmarks like CMB and CMExam reflects its deep understanding of medical concepts in a Chinese context. Moreover, HuatuoGPT-II maintains a high level on English benchmarks, demonstrating its medical capabilities. To further evaluate the medical expertise, we utilize a dataset with various Chinese national medical exams. The results, displayed in Table 4, reveal that HuatuoGPT-II surpass all open-source models and closely rivaled the proprietary model, ERNIE Bot. In Appendix B, we present the performance of HuatuoGPT-II on additional medical benchmarks.

医疗基准测试

如表 1 所示,基准测试结果突显了 HuatuoGPT-II 在医疗领域的卓越表现。其在 CMB 和 CMExam 等中文医疗基准测试中的优异表现,反映了其对中文语境下医疗概念的深刻理解。此外,HuatuoGPT-II 在英文基准测试中同样保持高水平,展现了其跨语言的医疗能力。

为进一步评估其专业医疗知识,我们采用了包含多种中国国家级医学考试的数据集。表 4 的结果显示,HuatuoGPT-II 超越了所有开源模型,并与专有模型 ERNIE Bot 表现接近。附录 B 中,我们展示了 HuatuoGPT-II 在其他医疗基准测试中的性能。

Table 1: Medical benchmark results. Med. signifies extraction of only medical-related questions. ’-’ indicate that the model cannot follow the question and make a choice. Due to the too large size of these benchmarks, we exclude the testing of GPT-4 and ERNIE Bot here.

| Model | English | Chinese | Average | ||||

| MedQA | MedMCQA | CMB | CMExam | CMMLUMed. | C_ EvalMed. | ||

| DISC-MedLLM | 28.67 | 32.47 | 36.62 | ||||

| HuatuoGPT | 25.77 | 31.20 | 28.81 | 31.07 | 33.23 | 36.53 | 31.92 |

| ChatGLM3-6B | 28.75 | 35.91 | 39.81 | 43.21 | 46.97 | 48.80 | 40.73 |

| Baichuan2-7B-Chat | 33.31 | 38.90 | 46.33 | 50.48 | 50.74 | 51.47 | 45.39 |

| Baichuan2-13B-Chat | 39.43 | 41.86 | 50.87 | 54.90 | 52.95 | 58.67 | 49.77 |

| Qwen-7B-Chat | 33.46 | 41.36 | 49.39 | 53.33 | 54.65 | 52.80 | 47.50 |

| Qwen-14B-Chat | 42.81 | 46.59 | 60.28 | 63.57 | 64.55 | 65.07 | 57.16 |

| ChatGPT (API) | 52.24 | 53.60 | 43.26 | 46.51 | 50.37 | 48.80 | 48.51 |

| HuatuoGPT-II (7B) | 41.13 | 41.87 | 60.39 | 65.81 | 59.08 | 62.40 | 55.13 |

| HuatuoGPT-II(13B) | 45.68 | 47.41 | 63.34 | 68.98 | 61.45 | 64.00 | 58.47 |

表 1: 医疗基准测试结果。Med. 表示仅提取与医疗相关的问题。"-" 表示模型无法理解问题并做出选择。由于这些基准测试规模过大,我们在此排除了 GPT-4 和 ERNIE Bot 的测试。

| 模型 | 英语 | 中文 | 平均 | ||||

|---|---|---|---|---|---|---|---|

| MedQA | MedMCQA | CMB | CMExam | CMMLUMed. | C_ EvalMed. | ||

| DISC-MedLLM | 28.67 | - | 32.47 | 36.62 | - | - | - |

| HuatuoGPT | 25.77 | 31.20 | 28.81 | 31.07 | 33.23 | 36.53 | 31.92 |

| ChatGLM3-6B | 28.75 | 35.91 | 39.81 | 43.21 | 46.97 | 48.80 | 40.73 |

| Baichuan2-7B-Chat | 33.31 | 38.90 | 46.33 | 50.48 | 50.74 | 51.47 | 45.39 |

| Baichuan2-13B-Chat | 39.43 | 41.86 | 50.87 | 54.90 | 52.95 | 58.67 | 49.77 |

| Qwen-7B-Chat | 33.46 | 41.36 | 49.39 | 53.33 | 54.65 | 52.80 | 47.50 |

| Qwen-14B-Chat | 42.81 | 46.59 | 60.28 | 63.57 | 64.55 | 65.07 | 57.16 |

| ChatGPT (API) | 52.24 | 53.60 | 43.26 | 46.51 | 50.37 | 48.80 | 48.51 |

| HuatuoGPT-II (7B) | 41.13 | 41.87 | 60.39 | 65.81 | 59.08 | 62.40 | 55.13 |

| HuatuoGPT-II (13B) | 45.68 | 47.41 | 63.34 | 68.98 | 61.45 | 64.00 | 58.47 |

Table 2: Results of the 2023 Chinese National Pharmacist Licensure Examination. It consists of two tracks of Pharmacy track and Traditional Chinese Medicine (TCM) Pharmacy track.

| Model | 2023PharmacistLicensureExamination (Pharmacy) | 2023PharmacistLicensureExamination (TCM) | Avg. | ||||||||

| Optimal Choice | Matched Selection | Integrated Analysis | Multiple Choice | Total Score | Optimal Choice | Matched Selection | Integrated Analysis | Multiple Choice | Total Score | ||

| DISC-MedLLM | 22.2 | 26.8 | 23.3 | 0.0 | 22.6 | 24.4 | 32.3 | 15.0 | 0.0 | 24.9 | 23.8 |

| HuatuoGPT | 25.6 | 25.5 | 23.3 | 2.6 | 23.4 | 24.1 | 26.8 | 31.6 | 7.5 | 24.9 | 24.2 |

| ChatGLM3-6B | 39.5 | 39.1 | 10.5 | 0.2 | 34.6 | 31.8 | 38.2 | 25.0 | 20.0 | 32.9 | 33.8 |

| Qwen-7B-chat | 43.8 | 46.8 | 33.3 | 18.4 | 41.9 | 40.0 | 43.2 | 33.3 | 17.5 | 38.8 | 40.4 |

| Qwen-14B-chat | 56.2 | 58.6 | 41.7 | 21.1 | 52.7 | 51.3 | 51.0 | 27.5 | 41.7 | 47.9 | 50.3 |

| Baichuan2-7B-Chat | 51.2 | 50.9 | 30.0 | 2.6 | 44.6 | 48.1 | 46.0 | 35.0 | 7.5 | 42.1 | 43.4 |

| Baichuan2-13B-Chat | 43.8 | 52.7 | 36.7 | 7.9 | 44.2 | 41.3 | 46.4 | 43.3 | 15.0 | 41.7 | 43.0 |

| ERNIE Bot (API) | 45.0 | 60.9 | 36.7 | 23.7 | 49.6 | 53.8 | 59.1 | 38.3 | 20.0 | 51.5 | 50.6 |

| ChatGPT (API) | 45.6 | 44.1 | 36.7 | 13.2 | 41.2 | 34.4 | 32.3 | 30.0 | 15.0 | 31.2 | 36.2 |

| GPT-4 (API) | 65.1 | 59.6 | 46.7 | 15.8 | 57.3 | 40.6 | 42.7 | 33.3 | 17.5 | 38.8 | 48.1 |

| HuatuoGPT-II(7B) | 41.9 | 61.0 | 35.0 | 15.7 | 47.7 | 52.5 | 51.4 | 41.7 | 15.0 | 47.5 | 47.6 |

| HuatuoGPT-II(13B) | 47.5 | 64.1 | 45.0 | 23.7 | 52.9 | 48.8 | 61.8 | 45.0 | 17.5 | 51.6 | 52.3 |

表 2: 2023年中国国家执业药师资格考试结果。包含药学和中药学两个方向。

| Model | 2023药师资格考试(药学) | 2023药师资格考试(中药学) | Avg. | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| 最佳选择 | 匹配选择 | 综合分析 | 多选题 | 总分 | 最佳选择 | 匹配选择 | 综合分析 | 多选题 | 总分 | ||

| DISC-MedLLM | 22.2 | 26.8 | 23.3 | 0.0 | 22.6 | 24.4 | 32.3 | 15.0 | 0.0 | 24.9 | 23.8 |

| HuatuoGPT | 25.6 | 25.5 | 23.3 | 2.6 | 23.4 | 24.1 | 26.8 | 31.6 | 7.5 | 24.9 | 24.2 |

| ChatGLM3-6B | 39.5 | 39.1 | 10.5 | 0.2 | 34.6 | 31.8 | 38.2 | 25.0 | 20.0 | 32.9 | 33.8 |

| Qwen-7B-chat | 43.8 | 46.8 | 33.3 | 18.4 | 41.9 | 40.0 | 43.2 | 33.3 | 17.5 | 38.8 | 40.4 |

| Qwen-14B-chat | 56.2 | 58.6 | 41.7 | 21.1 | 52.7 | 51.3 | 51.0 | 27.5 | 41.7 | 47.9 | 50.3 |

| Baichuan2-7B-Chat | 51.2 | 50.9 | 30.0 | 2.6 | 44.6 | 48.1 | 46.0 | 35.0 | 7.5 | 42.1 | 43.4 |

| Baichuan2-13B-Chat | 43.8 | 52.7 | 36.7 | 7.9 | 44.2 | 41.3 | 46.4 | 43.3 | 15.0 | 41.7 | 43.0 |

| ERNIE Bot (API) | 45.0 | 60.9 | 36.7 | 23.7 | 49.6 | 53.8 | 59.1 | 38.3 | 20.0 | 51.5 | 50.6 |

| ChatGPT (API) | 45.6 | 44.1 | 36.7 | 13.2 | 41.2 | 34.4 | 32.3 | 30.0 | 15.0 | 31.2 | 36.2 |

| GPT-4 (API) | 65.1 | 59.6 | 46.7 | 15.8 | 57.3 | 40.6 | 42.7 | 33.3 | 17.5 | 38.8 | 48.1 |

| HuatuoGPT-II(7B) | 41.9 | 61.0 | 35.0 | 15.7 | 47.7 | 52.5 | 51.4 | 41.7 | 15.0 | 47.5 | 47.6 |

| HuatuoGPT-II(13B) | 47.5 | 64.1 | 45.0 | 23.7 | 52.9 | 48.8 | 61.8 | 45.0 | 17.5 | 51.6 | 52.3 |

The Fresh Medical Exams Tackling data contamination in LLM training is challenging (Huang et al., 2023), especially with extensive and intricate training data. To counter this, we selected the 2023 Chinese National Pharmacist Licensure Examination, held on October 21, 2023, as our benchmarks. This date is crucially before both our data collection cut-off (October 7, 2023) and the release of our assessment models. The annual uniqueness of the exam questions ensures a reliable safeguard against contamination risks. The results in Table 2 show that HuatuoGPT-II ranked second after GPT-4 in the Pharmacy track. However, in the Traditional Chinese Medicine track, HuatuoGPT-II led with 51.6 points. HuatuoGPT-II demonstrated superior performance in average scores across both tracks, highlighting its proficiency in the medical field.

在大语言模型(LLM)训练中解决数据污染问题具有挑战性(Huang et al., 2023),尤其是在处理海量复杂训练数据时。为此,我们选取2023年10月21日举行的中国国家执业药师资格考试作为基准测试,该日期早于我们的数据收集截止日(2023年10月7日)和评估模型发布时间。考卷每年的唯一性为防范数据污染风险提供了可靠保障。表2结果显示,在药学赛道华佗GPT-II仅次于GPT-4排名第二,而在中医药赛道则以51.6分领先。华佗GPT-II在两个赛道的平均得分均展现出卓越性能,凸显了其在医疗领域的专业优势。

4.3 Medical Response Quality

4.3 医疗响应质量

To evaluate the model’s performance in real-world medical scenarios, we utilize real-wrold questions in both single-round and multi-round formats, sourced respectively from KUAKE-QIC (Zhang et al., 2021) and Med-dialog (Zeng et al., 2020), following the approach of HuatuoGPT (Zhang et al., 2023). The assessment details are provided in the Appendix L.

为评估模型在真实医疗场景中的表现,我们采用单轮和多轮形式的真实世界问题,分别来自KUAKE-QIC (Zhang et al., 2021) 和 Med-dialog (Zeng et al., 2020),并遵循HuatuoGPT (Zhang et al., 2023) 的方法。具体评估细节见附录L。

Table 3: Results of the Automated Evaluation Using GPT-4 and Expert Evaluation.

| HuatuoGPT-II(7B)vsOtherModel | Single-roundQA | Multi-roundDialogue | Average Win/TieRate | ||||

| Win | Tie | Fail | Win | Tie | Fail | ||

| AutomatedEvaluationUsingGPT-4 | |||||||

| HuatuoGPT-II(7B)vs GPT-4 | 58 | 21 | 21 | 62 | 15 | 23 | 78.0% |

| HuatuoGPT-II(7B)vsChatGPT | 62 | 18 | 20 | 69 | 14 | 17 | 81.5% |

| HuatuoGPT-II(7B)vsBaichuan2-13B-Chat | 64 | 14 | 22 | 75 | 11 | 14 | 82.0% |

| HuatuoGPT-II(7B)vsHuatuoGPT | 87 | 7 | 6 | 67 | 15 | 18 | 88.0% |

| HumanExpertEvaluation | |||||||

| HuatuoGPT-II(7B)vs GPT-4 | 38 | 38 | 24 | 53 | 17 | 30 | 73% |

| HuatuoGPT-II(7B)vsChatGPT | 52 | 33 | 15 | 56 | 11 | 33 | 76% |

| HuatuoGPT-II(7B)vsBaichuan2-13B-Chat | 63 | 19 | 18 | 63 | 19 | 18 | 82% |

| HuatuoGPT-II(7B)vsHuatuoGPT | 81 | 11 | 8 | 68 | 6 | 26 | 83% |

表 3: 使用GPT-4自动评估与专家评估结果

| HuatuoGPT-II(7B)对比模型 | 单轮问答 | 多轮对话 | 平均胜率/平率 | ||||

|---|---|---|---|---|---|---|---|

| 胜 | 平 | 败 | 胜 | 平 | 败 | ||

| * * GPT-4自动评估* * | |||||||

| HuatuoGPT-II(7B) vs GPT-4 | 58 | 21 | 21 | 62 | 15 | 23 | 78.0% |

| HuatuoGPT-II(7B) vs ChatGPT | 62 | 18 | 20 | 69 | 14 | 17 | 81.5% |

| HuatuoGPT-II(7B) vs Baichuan2-13B-Chat | 64 | 14 | 22 | 75 | 11 | 14 | 82.0% |

| HuatuoGPT-II(7B) vs HuatuoGPT | 87 | 7 | 6 | 67 | 15 | 18 | 88.0% |

| * * 专家人工评估* * | |||||||

| HuatuoGPT-II(7B) vs GPT-4 | 38 | 38 | 24 | 53 | 17 | 30 | 73% |

| HuatuoGPT-II(7B) vs ChatGPT | 52 | 33 | 15 | 56 | 11 | 33 | 76% |

| HuatuoGPT-II(7B) vs Baichuan2-13B-Chat | 63 | 19 | 18 | 63 | 19 | 18 | 82% |

| HuatuoGPT-II(7B) vs HuatuoGPT | 81 | 11 | 8 | 68 | 6 | 26 | 83% |

Automatic Evaluation We utilize GPT-4 to evaluate which of the two models generated better outputs. The results, as indicated in Table 3 (for more compared baselines, see Table 13), show that HuatuoGPT-II has a higher win rate compared to other models. Notably, HuatuoGPT-II achieve a higher win rate in comparison with GPT-4. Although its fine-tuning data originated from GPT-4, its extensive medical corpus provided it with more medical knowledge, as shown in Table 2.

自动评估

我们利用 GPT-4 评估两个模型中哪个生成的输出更优。如表 3 所示(更多基线对比见表 13),华佗GPT-II 相比其他模型具有更高的胜率。值得注意的是,华佗GPT-II 在与 GPT-4 的对比中也取得了更高的胜率。尽管其微调数据源自 GPT-4,但其庞大的医学语料库为其提供了更丰富的医学知识,如表 2 所示。

Expert Evaluation For further evaluating the quality of the model outputs, we invite four licensed physicians to score them, with the detailed criteria available in the Appendix L.2. Due to the high cost of expert evaluation, we select four models for comparison with HuatuoGPT-II. Results, as outlined in Tables 3, indicate that HuatuoGPT-II consistently outperforms its peers, aligning with automatic evaluation. This consensus between expert opinions and GPT $\bar{4}^{\prime}\mathrm{s}$ evaluations underscores HuatuoGPT-II’s efficacy in medical response generation. The case study on the model’s response can be found in Appendix S.

专家评估

为进一步评估模型输出的质量,我们邀请四位执业医师进行评分,详细标准见附录 L.2。由于专家评估成本较高,我们选取了四个模型与HuatuoGPT-II进行对比。结果如表 3 所示,HuatuoGPT-II始终优于同类模型,与自动评估结果一致。专家意见与GPT $\bar{4}^{\prime}\mathrm{s}$ 评估之间的共识印证了HuatuoGPT-II在医疗应答生成方面的有效性。关于模型应答的案例分析见附录 S。

4.4 One-stage Vs. Two-stage Adaption

4.4 单阶段与双阶段适配

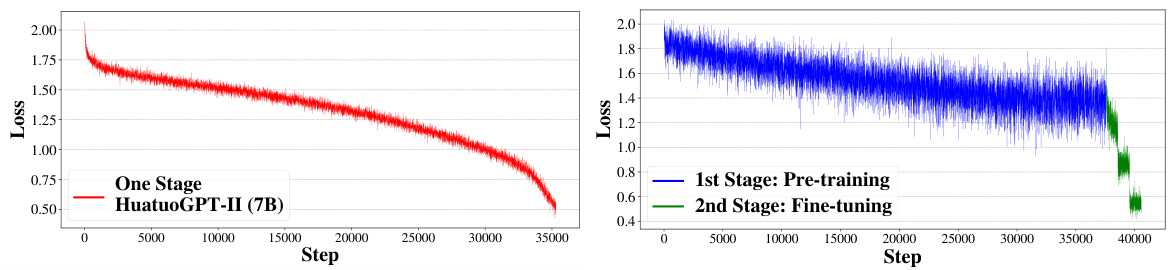

Figure 5: Comparison of the loss outcomes between proposed One-stage Adaption and conventional Two-stage Adaption using the same SFT data and medical corpus.

图 5: 使用相同监督微调(SFT)数据和医学语料库时,提出的单阶段适配方法与常规双阶段适配方法的损失结果对比。

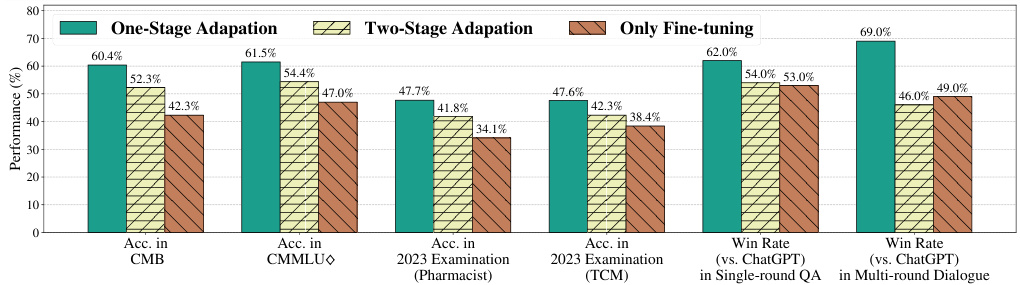

Figure 6: The comparison results of One-stage Adaption and Two-stage Adaption. "Only Finetuning" refers to the model that only fine-tunes the backbone directly. Win Rate is the result scored by automatic evaluation using GPT-4.

图 6: 单阶段适应与双阶段适应的对比结果。"Only Finetuning"指仅对主干网络直接微调的模型。胜率是通过GPT-4自动评估得出的结果。

To validate the superiority of One-stage Adaption, we conduct a traditional Two-stage Adaption to fine-tune the the Baichuan2-7B-Base on the same medical data.

为验证单阶段适配(One-stage Adaption)的优越性,我们在相同医疗数据上采用传统双阶段适配(Two-stage Adaption)对Baichuan2-7B-Base进行微调。

Figure 5 shows the training loss of these two training methods. The loss of Two-stage Adaption shows instability, marked by pronounced fluctuations and loss spikes. This instability arises from the varied content and styles within the medical pre-training corpus, which includes four distinct data types as detailed in Table 9. The variation is further amplified by the differences between Chinese and English datasets. Our one-stage Adaption unifies the diverse contents and styles of the pre-training corpus, improving training stability and reducing loss fluctuation. Moreover, due to data discrepancies between the two stages, there is a noticeable loss divergence between the pre-training and fine-tuning stages in Two-stage Adaption. In contrast, One-stage Adaption effectively resolves this issue by simplifying the pre-training corpus into a unified language and style, aligning with SFT data, making a more stable and smooth training process.

图 5 展示了两种训练方法的训练损失。两阶段适应 (Two-stage Adaption) 的损失表现出不稳定性,其特征是明显的波动和损失峰值。这种不稳定性源于医学预训练语料库中多样化的内容和风格,如表 9 所示,其中包含四种不同的数据类型。中英文数据集之间的差异进一步放大了这种变化。我们的一阶段适应 (One-stage Adaption) 统一了预训练语料库的多样化内容和风格,提高了训练稳定性并减少了损失波动。此外,由于两阶段之间的数据差异,两阶段适应在预训练和微调阶段之间存在明显的损失差异。相比之下,一阶段适应通过将预训练语料库简化为统一的语言和风格,与 SFT 数据保持一致,从而有效解决了这一问题,使训练过程更加稳定和平滑。

The results of the previously mentioned experiments also demonstrate that One-stage Adaption achieves better performance than other methods, as shown in Figure 6. It can be seen that on all six datasets, our One-stage Adaption performance is significantly better than the Two-stage Adaption performance (from $5.3%$ to $23%$ ), especially in single-round Q&A and multi-round conversation tasks. This superiority is likely due to two-stage unification and more effective knowledge generalization in One-stage Adaption.

前述实验结果也表明,One-stage Adaption方法性能优于其他方案,如图6所示。可以观察到在全部六个数据集上,我们的One-stage Adaption性能显著超越Two-stage Adaption方案(提升幅度从$5.3%$到$23%$),尤其在单轮问答和多轮对话任务中表现突出。这种优势可能源于One-stage Adaption实现了两阶段统一以及更高效的知识泛化能力。

4.5 The Relative Priority for Sampling

4.5 采样相对优先级

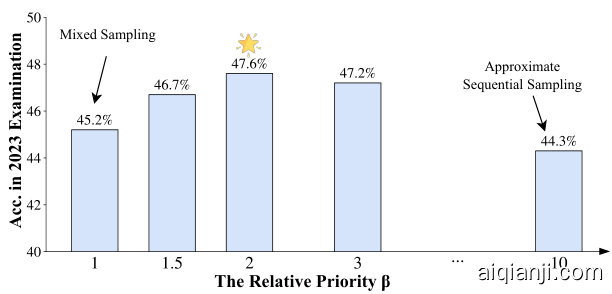

Figure 7: Comparison of model performance under different relative priority $\beta$ settings.

图 7: 不同相对优先级 $\beta$ 设置下的模型性能对比。

In evaluating the priority sampling strategy, we tested it across different $\beta$ settings using the same HuatuoGPT-II (7B) configuration. Our results show that model performance drastically drops when $\beta=0$ (mixed sampling) or when $\beta$ is excessively high (Sequential Sampling), highlighting the importance of priority sampling. The best performance is achieved when $\hat{\boldsymbol{\beta}}$ is around 2.

在评估优先级采样策略时,我们使用相同的华佗GPT-II (7B)配置测试了不同$\beta$设置下的效果。结果表明,当$\beta=0$(混合采样)或$\beta$过高(顺序采样)时,模型性能急剧下降,这凸显了优先级采样的重要性。最佳性能出现在$\hat{\boldsymbol{\beta}}$约为2时。

Moreover, we also conducted an ablation study on data unification in appendix G. The experiment indicates that data unification is crucial for the performance improvement of the one-stage training.

此外,我们还在附录G中对数据统一(Data Unification)进行了消融实验。结果表明,数据统一对单阶段训练的性能提升至关重要。

5 Conclusion

5 结论

In this work, we propose a one-stage domain adaption method, simplifying the conventional two-stage domain adaption process and mitigating its associated challenges. This approach is straightforward, involving the use of LLM capabilities to align domain corpus with SFT data and adopting a priority sampling strategy to enhance domain adaption. Based on this method, we develop a Chinese medical language model, HuatuoGPT-II. In the experiment, HuatuoGPT-II demonstrates state-of-the-art performance in the Chinese medicine domain across various benchmarks. It even surpasses proprietary models like ChatGPT and GPT-4 in some aspects, particularly in Traditional Chinese Medicine. The experimental results also confirm the reliability of the one-stage domain adaption method, which shows a significant improvement over the traditional two-stage performance. This One-stage Adaption promises to offer valuable insights and a methodological framework for future LLM domain adaption works.

在本工作中,我们提出了一种单阶段领域适配方法,简化了传统的两阶段领域适配流程并缓解了其相关挑战。该方法简单直接,包括利用大语言模型能力将领域语料与SFT数据对齐,并采用优先级采样策略以增强领域适配性。基于此方法,我们开发了中文医疗语言模型华佗GPT-II。实验中,华佗GPT-II在多个基准测试中展现出中医领域的最先进性能,在某些方面甚至超越了ChatGPT和GPT-4等专有模型,尤其在传统中医药领域表现突出。实验结果也证实了单阶段领域适配方法的可靠性,其性能较传统两阶段方法有显著提升。这种单阶段适配方法有望为未来大语言模型领域适配工作提供有价值的见解和方法论框架。

Ethic Statement

道德声明

The development of HuatuoGPT-II, a specialized language model for Chinese healthcare, raises several potential risks.

华佗GPT-II (HuatuoGPT-II)作为中文医疗领域专用大语言模型的开发存在若干潜在风险。

Accuracy of Medical Advice While HuatuoGPT-II has shown promising results in the domain of Chinese healthcare, it’s crucial to underscore that at this stage, it should not be used to provide any medical advice. This caution stems from the inherent limitations of large language models, including their capacity for generating plausible yet inaccurate or misleading information.

医疗建议的准确性

尽管华佗GPT-II (HuatuoGPT-II) 在中文医疗领域展现出积极成果,但必须强调现阶段不可将其用于提供任何医疗建议。这一警示源于大语言模型 (Large Language Model) 的固有局限性,包括其可能生成看似合理实则不准确或误导性信息的能力。

Data Privacy and Ethics The ethical handling of data is paramount, especially in the sensitive field of healthcare. The primary data sources for HuatuoGPT-II include medical texts, such as textbooks and literature, ensuring that patient-specific data is not utilized. This approach aligns with ethical guidelines and privacy regulations, ensuring that individual patient information is not compromised. Another significant aspect of our methodology is the ’data unification’ process, which aims to address potential ethical issues in the training data. By employing large language models to rewrite the medical corpora, we aim to eliminate any ethically questionable content, thereby ensuring that the training process and the model align with ethical standards.

数据隐私与伦理

在医疗等敏感领域,数据的伦理处理至关重要。HuatuoGPT-II的主要数据源包括医学教材和文献等文本,确保不使用患者特定数据。这一做法符合伦理准则和隐私法规,保障了患者个体信息的安全。我们方法的另一关键环节是"数据统一化"流程,旨在解决训练数据中潜在的伦理问题。通过使用大语言模型重写医学语料,我们力求消除任何存在伦理争议的内容,从而确保训练过程及模型本身符合伦理标准。

Acknowledgement

致谢

This work was supported by the Shenzhen Science and Technology Program (JCYJ20220818103001002), Shenzhen Doctoral Startup Funding (RCBS20221008093330065), Tianyuan Fund for Mathematics of National Natural Science Foundation of China (NSFC) (12326608), Shenzhen Key Laboratory of Cross-Modal Cognitive Computing (grant number

本研究由深圳市科技计划项目(JCYJ20220818103001002)、深圳市博士启动基金(RCBS20221008093330065)、国家自然科学基金数学天元基金(12326608)、深圳市跨模态认知计算重点实验室(资助编号

ZD S YS 20230626091302006), and Shenzhen Stability Science Program 2023, Shenzhen Key Lab of Multi-Modal Cognitive Computing.

ZD S YS 20230626091302006),以及深圳市稳定支持计划2023,深圳市多模态认知计算重点实验室。

References

参考文献

Jinze Bai, Shuai Bai, Yunfei Chu, Zeyu Cui, Kai Dang, Xiaodong Deng, Yang Fan, Wenbin Ge, Yu Han, Fei Huang, et al. Qwen technical report. arXiv preprint arXiv:2309.16609, 2023.

Jinze Bai, Shuai Bai, Yunfei Chu, Zeyu Cui, Kai Dang, Xiaodong Deng, Yang Fan, Wenbin Ge, Yu Han, Fei Huang, 等. Qwen技术报告. arXiv预印本 arXiv:2309.16609, 2023.

Zhijie Bao, Wei Chen, Shengze Xiao, Kuang Ren, Jiaao Wu, Cheng Zhong, Jiajie Peng, Xuanjing Huang, and Zhongyu Wei. Disc-medllm: Bridging general large language models and real-world medical consultation, 2023.

Zhijie Bao, Wei Chen, Shengze Xiao, Kuang Ren, Jiaao Wu, Cheng Zhong, Jiajie Peng, Xuanjing Huang, and Zhongyu Wei. Disc-MedLLM: 连接通用大语言模型与现实世界医疗咨询的桥梁, 2023.

Prashant Shivaram Bhat, Bahram Zonooz, and Elahe Arani. Consistency is the key to further mitigating catastrophic forgetting in continual learning. In Conference on Lifelong Learning Agents, pp. 1195–1212. PMLR, 2022.

Prashant Shivaram Bhat、Bahram Zonooz和Elahe Arani。一致性是持续学习中进一步缓解灾难性遗忘的关键。载于《终身学习智能体会议》,第1195-1212页。PMLR,2022。

Stella Biderman, Kieran Bicheno, and Leo Gao. Datasheet for the pile. arXiv preprint arXiv:2201.07311, 2022.

Stella Biderman、Kieran Bicheno 和 Leo Gao。《The Pile数据集说明书》。arXiv预印本 arXiv:2201.07311,2022年。

Yirong Chen, Zhenyu Wang, Xiaofen Xing, huimin zheng, Zhipei Xu, Kai Fang, Junhong Wang, Sihang Li, Jieling Wu, Qi Liu, and Xiangmin Xu. Bianque: Balancing the questioning and suggestion ability of health llms with multi-turn health conversations polished by chatgpt, 2023a.

Yirong Chen, Zhenyu Wang, Xiaofen Xing, huimin zheng, Zhipei Xu, Kai Fang, Junhong Wang, Sihang Li, Jieling Wu, Qi Liu, and Xiangmin Xu. Bianque: 通过ChatGPT优化的多轮健康对话平衡健康大语言模型的提问与建议能力, 2023a.

Zeming Chen, Alejandro Hernández Cano, Angelika Romanou, Antoine Bonnet, Kyle Matoba, Francesco Salvi, Matteo Pag liard in i, Simin Fan, Andreas Köpf, Amirkeivan Mohtashami, et al. Meditron-70b: Scaling medical pre training for large language models. arXiv preprint arXiv:2311.16079, 2023b.

Zeming Chen, Alejandro Hernández Cano, Angelika Romanou, Antoine Bonnet, Kyle Matoba, Francesco Salvi, Matteo Pagliardini, Simin Fan, Andreas Köpf, Amirkeivan Mohtashami等。Meditron-70b: 大语言模型的医疗预训练扩展。arXiv预印本arXiv:2311.16079, 2023b。

Zhihong Chen, Feng Jiang, Junying Chen, Tiannan Wang, Fei Yu, Guiming Chen, Hongbo Zhang, Juhao Liang, Chen Zhang, Zhiyi Zhang, et al. Phoenix: Democratizing chatgpt across languages. arXiv preprint arXiv:2304.10453, 2023c.

Zhihong Chen、Feng Jiang、Junying Chen、Tiannan Wang、Fei Yu、Guiming Chen、Hongbo Zhang、Juhao Liang、Chen Zhang、Zhiyi Zhang等。Phoenix: 跨语言普及ChatGPT。arXiv预印本arXiv:2304.10453,2023c。

Daixuan Cheng, Shaohan Huang, and Furu Wei. Adapting large language models via reading comprehension. arXiv preprint arXiv:2309.09530, 2023.

戴轩程、黄少晗、魏福如。基于阅读理解的大语言模型适配方法。arXiv预印本 arXiv:2309.09530,2023。

Jiaxi Cui, Zongjian Li, Yang Yan, Bohua Chen, and Li Yuan. Chatlaw: Open-source legal large language model with integrated external knowledge bases. arXiv preprint arXiv:2306.16092, 2023.

贾曦崔, 宗建李, 阳颜, 博华陈, 和莉袁. Chatlaw: 集成外部知识库的开源法律大语言模型. arXiv预印本 arXiv:2306.16092, 2023.

Leo Gao, Stella Biderman, Sid Black, Laurence Golding, Travis Hoppe, Charles Foster, Jason Phang, Horace He, Anish Thite, Noa Nabeshima, et al. The pile: An 800gb dataset of diverse text for language modeling. arXiv preprint arXiv:2101.00027, 2020.

Leo Gao、Stella Biderman、Sid Black、Laurence Golding、Travis Hoppe、Charles Foster、Jason Phang、Horace He、Anish Thite、Noa Nabeshima等。The Pile:一个用于语言建模的800GB多样化文本数据集。arXiv预印本arXiv:2101.00027,2020。

Ian J Goodfellow, Mehdi Mirza, Da Xiao, Aaron Courville, and Yoshua Bengio. An empirical investigation of catastrophic forgetting in gradient-based neural networks. arXiv preprint arXiv:1312.6211, 2013.

Ian J Goodfellow、Mehdi Mirza、Da Xiao、Aaron Courville 和 Yoshua Bengio。基于梯度的神经网络中灾难性遗忘的实证研究。arXiv预印本 arXiv:1312.6211,2013。

Suriya Gunasekar, Yi Zhang, Jyoti Aneja, Caio César Teodoro Mendes, Allie Del Giorno, Sivakanth Gopi, Mojan Javaheripi, Piero Kauffmann, Gustavo de Rosa, Olli Saarikivi, et al. Textbooks are all you need. arXiv preprint arXiv:2306.11644, 2023.

Suriya Gunasekar、Yi Zhang、Jyoti Aneja、Caio César Teodoro Mendes、Allie Del Giorno、Sivakanth Gopi、Mojan Javaheripi、Piero Kauffmann、Gustavo de Rosa、Olli Saarikivi等。《教科书即所需》。arXiv预印本arXiv:2306.11644,2023。

Yuzhen Huang, Yuzhuo Bai, Zhihao Zhu, Junlei Zhang, Jinghan Zhang, Tangjun Su, Junteng Liu, Chuancheng Lv, Yikai Zhang, Jiayi Lei, et al. C-eval: A multi-level multi-discipline chinese evaluation suite for foundation models. arXiv preprint arXiv:2305.08322, 2023.

黄雨真, 白雨卓, 朱志豪, 张俊磊, 张靖涵, 苏堂峻, 刘俊腾, 吕传成, 张艺凯, 雷佳艺等. C-Eval: 面向基础模型的多层次多学科中文评估套件. arXiv预印本 arXiv:2305.08322, 2023.

Di Jin, Eileen Pan, Nassim Oufattole, Wei-Hung Weng, Hanyi Fang, and Peter Szolovits. What disease does this patient have? a large-scale open domain question answering dataset from medical exams. Applied Sciences, 11(14):6421, 2021.

Di Jin、Eileen Pan、Nassim Oufattole、Wei-Hung Weng、Hanyi Fang 和 Peter Szolovits。《这位患者患有何种疾病?基于医学考试构建的大规模开放域问答数据集》。Applied Sciences,11(14):6421,2021年。

Chuyi Kong, Yaxin Fan, Xiang Wan, Feng Jiang, and Benyou Wang. Large language model as a user simulator. arXiv preprint arXiv:2308.11534, 2023.

孔楚一, 范雅欣, 万翔, 蒋峰, 王本友. 大语言模型作为用户模拟器. arXiv预印本 arXiv:2308.11534, 2023.

Cheng Li, Ziang Leng, Chenxi Yan, Junyi Shen, Hao Wang, Weishi Mi, Yaying Fei, Xiaoyang Feng, Song Yan, HaoSheng Wang, et al. Chatharuhi: Reviving anime character in reality via large language model. arXiv preprint arXiv:2308.09597, 2023a.

Cheng Li, Ziang Leng, Chenxi Yan, Junyi Shen, Hao Wang, Weishi Mi, Yaying Fei, Xiaoyang Feng, Song Yan, HaoSheng Wang, 等. Chatharuhi: 基于大语言模型的动漫角色现实复现. arXiv预印本 arXiv:2308.09597, 2023a.

Haonan Li, Yixuan Zhang, Fajri Koto, Yifei Yang, Hai Zhao, Yeyun Gong, Nan Duan, and Timothy Baldwin. Cmmlu: Measuring massive multitask language understanding in chinese. arXiv preprint arXiv:2306.09212, 2023b.

Haonan Li、Yixuan Zhang、Fajri Koto、Yifei Yang、Hai Zhao、Yeyun Gong、Nan Duan 和 Timothy Baldwin。CMMLU: 中文大规模多任务语言理解评估。arXiv预印本 arXiv:2306.09212,2023b。

Jianquan Li, Xidong Wang, Xiangbo Wu, Zhiyi Zhang, Xiaolong Xu, Jie Fu, Prayag Tiwari, Xiang Wan, and Benyou Wang. Huatuo-26m, a large-scale chinese medical qa dataset. arXiv preprint arXiv:2305.01526, 2023c.

Jianquan Li、Xidong Wang、Xiangbo Wu、Zhiyi Zhang、Xiaolong Xu、Jie Fu、Prayag Tiwari、Xiang Wan和Benyou Wang。Huatuo-26m:一个大规模中文医疗问答数据集。arXiv预印本arXiv:2305.01526,2023c。

Xian Li, Ping Yu, Chunting Zhou, Timo Schick, Luke Z ett le moyer, Omer Levy, Jason Weston, and Mike Lewis. Self-alignment with instruction back translation. arXiv preprint arXiv:2308.06259, 2023d.

Xian Li、Ping Yu、Chunting Zhou、Timo Schick、Luke Zettlemoyer、Omer Levy、Jason Weston 和 Mike Lewis。基于指令回译的自对齐方法。arXiv预印本 arXiv:2308.06259,2023年。

Yuanzhi Li, Sébastien Bubeck, Ronen Eldan, Allie Del Giorno, Suriya Gunasekar, and Yin Tat Lee. Textbooks are all you need ii: phi-1.5 technical report. arXiv preprint arXiv:2309.05463, 2023e.

Yuanzhi Li、Sébastien Bubeck、Ronen Eldan、Allie Del Giorno、Suriya Gunasekar和Yin Tat Lee。《教科书即所需 II:phi-1.5技术报告》。arXiv预印本arXiv:2309.05463,2023年。

Junling Liu, Peilin Zhou, Yining Hua, Dading Chong, Zhongyu Tian, Andrew Liu, Helin Wang, Chenyu You, Zhenhua Guo, Lei Zhu, et al. Benchmarking large language models on cmexam–a comprehensive chinese medical exam dataset. arXiv preprint arXiv:2306.03030, 2023.

Junling Liu、Peilin Zhou、Yining Hua、Dading Chong、Zhongyu Tian、Andrew Liu、Helin Wang、Chenyu You、Zhenhua Guo、Lei Zhu 等。大语言模型在CMExam上的基准测试——一个全面的中文医学考试数据集。arXiv预印本 arXiv:2306.03030,2023。

OpenAI. Introducing chatgpt. https://openai.com/blog/chatgpt, 2022.

OpenAI. 发布 ChatGPT. https://openai.com/blog/chatgpt, 2022.

OpenAI. Gpt-4 technical report, 2023.

OpenAI. GPT-4技术报告, 2023.

Ankit Pal, Logesh Kumar Umapathi, and Malai kann an Sankara sub bu. Medmcqa: A large-scale multi-subject multi-choice dataset for medical domain question answering. In Conference on Health, Inference, and Learning, pp. 248–260. PMLR, 2022.

Ankit Pal、Logesh Kumar Umapathi 和 Malai kann an Sankara sub bu。Medmcqa: 面向医疗领域问答的大规模多学科多选题数据集。In Conference on Health, Inference, and Learning, pp. 248–260. PMLR, 2022。

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. The Journal of Machine Learning Research, 21(1):5485–5551, 2020.

Colin Raffel、Noam Shazeer、Adam Roberts、Katherine Lee、Sharan Narang、Michael Matena、Yanqi Zhou、Wei Li 和 Peter J Liu。探索文本到文本Transformer (text-to-text transformer) 迁移学习的极限。《机器学习研究期刊》,21(1):5485–5551, 2020。

Yu Sun, Shuohuan Wang, Shikun Feng, Siyu Ding, Chao Pang, Junyuan Shang, Jiaxiang Liu, Xuyi Chen, Yanbin Zhao, Yuxiang Lu, et al. Ernie 3.0: Large-scale knowledge enhanced pre-training for language understanding and generation. arXiv preprint arXiv:2107.02137, 2021.

Yu Sun, Shuohuan Wang, Shikun Feng, Siyu Ding, Chao Pang, Junyuan Shang, Jiaxiang Liu, Xuyi Chen, Yanbin Zhao, Yuxiang Lu, 等. ERNIE 3.0: 面向语言理解与生成的大规模知识增强预训练. arXiv预印本 arXiv:2107.02137, 2021.

Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

Hugo Touvron、Louis Martin、Kevin Stone、Peter Albert、Amjad Almahairi、Yasmine Babaei、Nikolay Bashlykov、Soumya Batra、Prajjwal Bhargava、Shruti Bhosale等。Llama 2:开放基础与微调对话模型。arXiv预印本arXiv:2307.09288,2023。

Benyou Wang, Qianqian Xie, Jiahuan Pei, Zhihong Chen, Prayag Tiwari, Zhao Li, and Jie Fu. Pretrained language models in biomedical domain: A systematic survey. ACM Computing Surveys, 56(3):1–52, 2023a.

Benyou Wang、Qianqian Xie、Jiahuan Pei、Zhihong Chen、Prayag Tiwari、Zhao Li和Jie Fu。生物医学领域的预训练语言模型:系统性综述。ACM Computing Surveys,56(3):1–52,2023a。

A Related Work

相关工作

A.1 Domain adaption

A.1 领域自适应

Recent research has also indicated that such domain adaption using further training Cheng et al., 2023 leads to a drastic drop despite still benefiting fine-tuning evaluation and knowledge probing tests. This inspires us to design a different protocol for domain adaption. Also, Gunasekar et al. (2023); Li et al. (2023e); Chen et al. (2023c); Kong et al. (2023) show a possibility that the 10B well-selected dataset could achieve comparable performance to a much larger model.

近期研究还表明,尽管这种通过额外训练实现的领域适应(Cheng等人,2023)仍有助于微调评估和知识探测测试,但会导致性能急剧下降。这启发我们设计了一种不同的领域适应方案。此外,Gunasekar等人(2023)、Li等人(2023e)、Chen等人(2023c)和Kong等人(2023)的研究表明,经过精心筛选的100亿规模数据集有可能达到与更大模型相媲美的性能。

A.2 Medical LLMs

A.2 医疗大语言模型

The rapid advancement of large language models (LLMs) in Chinese medical field is driven by the release of open-source Chinese LLMs, notably trained via instruction fine-tuning. DoctorGLM (Xiong et al., 2023) and MedicalGPT (Xu, 2023) are fine-tuned on various Chinese and English medical dialogue datasets. Another Chinese medical LLM, BenTsao (Wang et al., 2023b) is finetuned on distilled data derived from knowledge graphs. Bianque-2 (Chen et al., 2023a) includes multiple rounds of medical expansions, encompassing drug instructions and encyclopedia knowledge instructions. ChatMed-Consult (Zhu & Wang, 2023) is fine-tuned on both Chinese online consultation data and ChatGPT responses. DISC-MedLLM (Bao et al., 2023) is fine-tuned on more than 470,000 medical data including doctor-patient dialogues and knowledge QA pairs.

开源中文大语言模型的发布,尤其是基于指令微调训练的模型,推动了中文医疗领域大语言模型的快速发展。DoctorGLM (Xiong et al., 2023) 和 MedicalGPT (Xu, 2023) 在多种中英文医疗对话数据集上进行了微调。另一款中文医疗大语言模型 BenTsao (Wang et al., 2023b) 则基于知识图谱蒸馏数据进行了优化。Bianque-2 (Chen et al., 2023a) 包含多轮医学扩展,涵盖药品说明书和百科知识指令。ChatMed-Consult (Zhu & Wang, 2023) 同时微调了中文在线问诊数据和 ChatGPT 生成内容。DISC-MedLLM (Bao et al., 2023) 在超过 47 万条医患对话和知识问答对组成的医疗数据上进行了训练。

By integrating the reinforcement learning, HuatuoGPT (Zhang et al., 2023) is fine-tuned on a 220,000 medical dataset consisting of ChatGPT-distilled instructions and dialogues alongside real doctorpatient single-turn Q&As and multi-turn dialogues. ZhongJing (Yang et al., 2023b) undergoes a comprehensive three-stage training process: continuous pre-training on various medical data, instruction fine-tuning on single-turn and multi-turn dialogue data as well as medical NLP tasks data, and reinforcement learning adjudicated by experts to ensure reliability and safety.

通过整合强化学习技术,华佗GPT (Zhang et al., 2023) 在22万条医疗数据集上进行了微调,该数据集包含ChatGPT蒸馏的指令对话、真实医患单轮问答及多轮对话。仲景 (Yang et al., 2023b) 采用三阶段训练流程:基于多样化医疗数据的持续预训练、针对单轮/多轮对话数据及医疗NLP任务数据的指令微调、以及由专家监督的强化学习以确保可靠性与安全性。

B Supplementary Experiment

B 补充实验

Table 4: The results of Chinese National Medical Licensing Examinations. The year represents the actual examination year. Note that the exam here may not be complete, and the blue fonts indicates the number of questions.

| Model | TraditionalChineseMedicine | Clinical | Avg. | |||||||||||||

| Assistant | Physician | Pharmacist | Assistant | Physician | ||||||||||||

| 2015 (300) | 2016 (220) | 2017 (116) | 2012 (600) | 2013 (600) | 2016 (430) | 2017 2018 (168) (167) | 2019 (223) | 2018 | 2019 (234) (244) | 2020 (244) | 2018 | 2019 (449) (476) | 2020 (436) | |||

| HuatuoGPT | 26.0 | 30.9 | 32.8 | 31.3 | 26.8 | 30.2 | 18.4 | 27.5 | 25.1 | 32.5 | 33.6 | 27.5 | 32.7 | 28.6 | 30.1 | 28.9 |

| DISC-MedLLM | 38.3 | 41.8 | 26.7 | 36.2 | 38.7 | 35.1 | 25.0 | 22.2 | 22.0 | 49.2 | 36.1 | 41.8 | 41.4 | 36.6 | 35.8 | 35.1 |

| ChatGLM3-6B | 45.7 | 45.0 | 50.0 | 46.3 | 45.8 | 46.5 | 42.3 | 28.1 | 35.4 | 50.9 | 48.8 | 43.9 | 41.7 | 43.9 | 43.6 | 43.9 |

| Baichuan2-7B-Chat | 57.3 | 57.3 | 58.6 | 55.7 | 58.5 | 57.9 | 41.7 | 41.9 | 45.7 | 61.1 | 55.7 | 55.3 | 51.0 | 53.6 | 50.0 | 53.4 |

| Baichuan2-13B-Chat | 64.7 | 58.2 | 62.9 | 61.7 | 61.5 | 63.3 | 54.2 | 38.9 | 48.4 | 66.2 | 64.8 | 63.1 | 65.9 | 58.8 | 61.5 | 59.6 |

| Qwen-7B-Chat | 54.7 | 55.9 | 56.0 | 52.7 | 53.5 | 54.4 | 44.0 | 33.5 | 43.0 | 68.8 | 63.9 | 57.8 | 60.6 | 57.6 | 54.1 | 54.0 |

| Qwen-14B-Chat | 65.3 | 63.2 | 67.2 | 64.8 | 63.3 | 67.9 | 54.8 | 49.1 | 52.5 | 74.8 | 75.0 | 69.3 | 73.7 | 69.7 | 68.8 | 65.3 |

| ERNIE Bot (API) | 73.3 | 66.3 | 73.3 | 70.0 | 71.8 | 66.7 | 55.9 | 50.3 | 60.0 | 78.2 | 77.0 | 77.5 | 66.6 | 70.8 | 74.1 | 68.8 |

| ChatGPT (API) | 46.0 | 36.4 | 41.4 | 36.7 | 38.5 | 40.5 | 32.1 | 28.1 | 30.0 | 63.3 | 57.8 | 53.7 | 53.7 | 52.5 | 51.8 | 44.2 |

| GPT-4 (API) | 47.3 | 48.2 | 53.5 | 50.3 | 53.7 | 54.2 | 41.1 | 43.7 | 48.0 | 79.9 | 72.5 | 70.9 | 74.8 | 73.1 | 68.4 | 58.6 |

| HuatuoGPT-II(7B) | 67.1 | 65.2 | 67.5 | 67.9 | 67.4 | 64.9 | 53.0 | 46.7 | 51.0 | 70.9 | 73.2 | 69.4 | 68.8 | 66.5 | 67.7 | 64.5 |

| HuatuoGPT-II(13B) | 70.3 | 70.0 | 71.6 | 71.0 | 69.2 | 70.2 | 56.5 | 52.1 | 54.7 | 73.1 | 76.6 | 70.1 | 72.8 | 68.9 | 72.2 | 68.0 |

表 4: 中国国家医学资格考试结果。年份代表实际考试年份。请注意,此处列出的考试可能不完整,蓝色字体表示题目数量。

| 模型 | 中医 | 临床 | 平均 | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 助理 | 医师 | 药师 | 助理 | 医师 | ||||||||||||

| 2015 (300) | 2016 (220) | 2017 (116) | 2012 (600) | 2013 (600) | 2016 (430) | 2017 2018 (168) (167) | 2019 (223) | 2018 | 2019 (234) (244) | 2020 (244) | 2018 | 2019 (449) (476) | 2020 (436) | |||

| HuatuoGPT | 26.0 | 30.9 | 32.8 | 31.3 | 26.8 | 30.2 | 18.4 | 27.5 | 25.1 | 32.5 | 33.6 | 27.5 | 32.7 | 28.6 | 30.1 | 28.9 |

| DISC-MedLLM | 38.3 | 41.8 | 26.7 | 36.2 | 38.7 | 35.1 | 25.0 | 22.2 | 22.0 | 49.2 | 36.1 | 41.8 | 41.4 | 36.6 | 35.8 | 35.1 |

| ChatGLM3-6B | 45.7 | 45.0 | 50.0 | 46.3 | 45.8 | 46.5 | 42.3 | 28.1 | 35.4 | 50.9 | 48.8 | 43.9 | 41.7 | 43.9 | 43.6 | 43.9 |

| Baichuan2-7B-Chat | 57.3 | 57.3 | 58.6 | 55.7 | 58.5 | 57.9 | 41.7 | 41.9 | 45.7 | 61.1 | 55.7 | 55.3 | 51.0 | 53.6 | 50.0 | 53.4 |

| Baichuan2-13B-Chat | 64.7 | 58.2 | 62.9 | 61.7 | 61.5 | 63.3 | 54.2 | 38.9 | 48.4 | 66.2 | 64.8 | 63.1 | 65.9 | 58.8 | 61.5 | 59.6 |

| Qwen-7B-Chat | 54.7 | 55.9 | 56.0 | 52.7 | 53.5 | 54.4 | 44.0 | 33.5 | 43.0 | 68.8 | 63.9 | 57.8 | 60.6 | 57.6 | 54.1 | 54.0 |

| Qwen-14B-Chat | 65.3 | 63.2 | 67.2 | 64.8 | 63.3 | 67.9 | 54.8 | 49.1 | 52.5 | 74.8 | 75.0 | 69.3 | 73.7 | 69.7 | 68.8 | 65.3 |

| ERNIE Bot (API) | 73.3 | 66.3 | 73.3 | 70.0 | 71.8 | 66.7 | 55.9 | 50.3 | 60.0 | 78.2 | 77.0 | 77.5 | 66.6 | 70.8 | 74.1 | 68.8 |

| ChatGPT (API) | 46.0 | 36.4 | 41.4 | 36.7 | 38.5 | 40.5 | 32.1 | 28.1 | 30.0 | 63.3 | 57.8 | 53.7 | 53.7 | 52.5 | 51.8 | 44.2 |

| GPT-4 (API) | 47.3 | 48.2 | 53.5 | 50.3 | 53.7 | 54.2 | 41.1 | 43.7 | 48.0 | 79.9 | 72.5 | 70.9 | 74.8 | 73.1 | 68.4 | 58.6 |

| HuatuoGPT-II(7B) | 67.1 | 65.2 | 67.5 | 67.9 | 67.4 | 64.9 | 53.0 | 46.7 | 51.0 | 70.9 | 73.2 | 69.4 | 68.8 | 66.5 | 67.7 | 64.5 |

| HuatuoGPT-II(13B) | 70.3 | 70.0 | 71.6 | 71.0 | 69.2 | 70.2 | 56.5 | 52.1 | 54.7 | 73.1 | 76.6 | 70.1 | 72.8 | 68.9 | 72.2 | 68.0 |

Chinese Medical Licensing Examination To evaluate HuatuoGPT-II’s medical competence, we utilize a dataset comprising medical examination questions from China and the United States. This approach aims to measure the model’s adaptability and proficiency across varied medical knowledge frameworks and terminologies. The results of the Chinese National Medical Licensing Examination are outlined in Table 4. In the results, HuatuoGPT-II (13B) not only surpassed all open-source models but also closely approached the performance of the leading proprietary model, ERNIE Bot. This notable outcome reflects the model’s advanced understanding of Chinese medical principles and its adeptness in applying this knowledge in complex examination contexts. The 13B variant’s elevated scores signify enhanced analytical capabilities and deeper comprehension of the nuances inherent in Chinese medical practice.

中国执业医师资格考试

为评估华佗GPT-II的医学能力,我们采用了包含中美两国医学考试题目的数据集。该方法旨在衡量模型在不同医学知识框架和术语体系中的适应性与熟练度。中国国家医学资格考试结果如 表 4 所示。结果显示,华佗GPT-II (13B)不仅超越了所有开源模型,还显著逼近了领先的专有模型ERNIE Bot的表现。这一突出成果反映了该模型对中文医学原理的深刻理解,以及在复杂考试场景中灵活应用知识的能力。13B版本更高的分数标志着其分析能力的提升,以及对中文医疗实践细节更深入的理解。

CMB-Clin CMB-Clin is a dataset designed to evaluate the Clinical Diagnostic capabilities of LLMs, based on 74 classical complex and real-world cases derived from textbooks. Distinct from the response quality evaluations, CMB-Clin provides standard answers for reference and scores each model individually. We follow the same evaluation strategy from the original paper which utilizes GPT-4 as the evaluator. Results, as delineated in Table 5, indicate that HuatuoGPT-II outperforms its counterparts, excluding GPT-4. Intriguingly, the 7B version of HuatuoGPT-II demonstrates superior efficacy over its 13B variant, a phenomenon potentially attributable to foundational capacity variances, as evidenced by Baichuan2-7B-Chat’s superior performance compared to Baichuan2-13BChat.

CMB-Clin

CMB-Clin是一个用于评估大语言模型(LLM)临床诊断能力的数据集,包含74个源自教科书的经典复杂真实病例。与回答质量评估不同,CMB-Clin提供标准参考答案并对每个模型单独评分。我们沿用原论文的评估策略,使用GPT-4作为评估器。如表5所示,除GPT-4外,华佗GPT-II表现优于其他模型。值得注意的是,华佗GPT-II的7B版本展现出优于13B版本的效能,这一现象可能与基础能力差异有关——百川2-7B-Chat的表现同样优于百川2-13B-Chat[20]佐证了该推测。

Table 5: Results of CMB-Clin on Automatic Evaluation using GPT-4.

| Model | Fluency | Relevance | Completeness | Proficiency | Avg.↑ |

| GPT-4 | 4.95 | 4.71 | 4.35 | 4.66 | 4.67 |

| HuatuoGPT-II (7B) | 4.94 | 4.56 | 4.24 | 4.46 | 4.55 |

| ERNIEBot | 4.92 | 4.53 | 4.16 | 4.55 | 4.54 |

| ChatGPT | 4.97 | 4.49 | 4.12 | 4.53 | 4.53 |

| HuatuoGPT-II(13B) | 4.92 | 4.38 | 4.00 | 4.40 | 4.43 |

| Baichuan2-7B-Chat | 4.93 | 4.41 | 4.03 | 4.36 | 4.43 |

| Qwen-14B-Chat | 4.90 | 4.35 | 3.93 | 4.48 | 4.41 |

| Qwen-7B-Chat | 4.94 | 4.17 | 3.67 | 4.33 | 4.28 |

| Baichuan2-13B-Chat | 4.88 | 4.18 | 3.78 | 4.27 | 4.28 |

| ChatGLM3-6B | 4.92 | 4.11 | 3.74 | 4.23 | 4.25 |

| HuatuoGPT | 4.89 | 3.76 | 3.38 | 3.86 | 3.97 |

| DISC-MedLLM | 4.82 | 3.24 | 2.75 | 3.51 | 3.58 |

表 5: CMB-Clin 使用 GPT-4 进行自动评估的结果

| 模型 | 流畅性 | 相关性 | 完整性 | 专业性 | 平均↑ |

|---|---|---|---|---|---|

| GPT-4 | 4.95 | 4.71 | 4.35 | 4.66 | 4.67 |

| HuatuoGPT-II (7B) | 4.94 | 4.56 | 4.24 | 4.46 | 4.55 |

| ERNIEBot | 4.92 | 4.53 | 4.16 | 4.55 | 4.54 |

| ChatGPT | 4.97 | 4.49 | 4.12 | 4.53 | 4.53 |

| HuatuoGPT-II(13B) | 4.92 | 4.38 | 4.00 | 4.40 | 4.43 |

| Baichuan2-7B-Chat | 4.93 | 4.41 | 4.03 | 4.36 | 4.43 |

| Qwen-14B-Chat | 4.90 | 4.35 | 3.93 | 4.48 | 4.41 |

| Qwen-7B-Chat | 4.94 | 4.17 | 3.67 | 4.33 | 4.28 |

| Baichuan2-13B-Chat | 4.88 | 4.18 | 3.78 | 4.27 | 4.28 |

| ChatGLM3-6B | 4.92 | 4.11 | 3.74 | 4.23 | 4.25 |

| HuatuoGPT | 4.89 | 3.76 | 3.38 | 3.86 | 3.97 |

| DISC-MedLLM | 4.82 | 3.24 | 2.75 | 3.51 | 3.58 |

USMLE The United States Medical Licensing Examination (USMLE) outcomes, as delineated in Table 6, reveal that HuatuoGPT-II (13B) outperforms comparative open-source models. Despite a discernible disparity with ChatGPT, it is important to note that the USMLE’s English-centric nature imposes constraints on HuatuoGPT-II, primarily designed for Chinese medical contexts. However, its commendable performance in the USMLE underscores its proficiency in employing medical knowledge across diverse scenarios, effectively addressing the range of challenges posed by the USMLE.

美国医师执照考试 (USMLE) 结果如表 6 所示,华佗GPT-II (13B) 的表现优于其他开源模型。尽管与 ChatGPT 存在明显差距,但需注意 USMLE 以英语为主的特性对主要面向中文医疗场景设计的华佗GPT-II 形成了限制。然而,其在 USMLE 中的出色表现印证了该模型跨场景运用医学知识的能力,能有效应对 USMLE 提出的各类挑战。

C Domain Corpus Collection

C 领域语料库收集

Domain corpus is pivotal for augmenting domain-specific expertise. Domain data necessitates a comprehensive collection of high-quality and domain-specific content, surpassing the scope of the

领域语料库对于增强领域专业知识至关重要。领域数据需要全面收集高质量且具有领域针对性的内容,其范围远超...

| Models | Stagel (6308) | Stage2&3 (5148) | Avg. (11456) |

| HuatuoGPT | 28.68 | 28.38 | 28.54 |

| ChatGLM3-6B | 33.39 | 32.49 | 32.98 |

| Baichuan2-7B-Chat | 38.11 | 37.32 | 37.76 |

| Baichuan2-13B-Chat | 44.99 | 45.57 | 45.25 |

| Qwen-7B-Chat | 40.90 | 36.09 | 38.73 |

| Qwen-14B-Chat | 48.73 | 42.45 | 45.90 |

| ChatGPT (API) | 57.04 | 56.27 | 56.69 |

| HuatuoGPT-II(7B) | 45.72 | 44.45 | 45.15 |

| HuatuoGPT-II(13B) | 47.34 | 49.37 | 48.25 |

| 模型 | 阶段1 (6308) | 阶段2&3 (5148) | 平均值 (11456) |

|---|---|---|---|

| HuatuoGPT | 28.68 | 28.38 | 28.54 |

| ChatGLM3-6B | 33.39 | 32.49 | 32.98 |

| Baichuan2-7B-Chat | 38.11 | 37.32 | 37.76 |

| Baichuan2-13B-Chat | 44.99 | 45.57 | 45.25 |

| Qwen-7B-Chat | 40.90 | 36.09 | 38.73 |

| Qwen-14B-Chat | 48.73 | 42.45 | 45.90 |

| ChatGPT (API) | 57.04 | 56.27 | 56.69 |

| HuatuoGPT-II(7B) | 45.72 | 44.45 | 45.15 |

| HuatuoGPT-II(13B) | 47.34 | 49.37 | 48.25 |

Figure 8: Domain Data collection. Figure 9: Summary of the Medical Pre-training Corpus.

图 8: 领域数据收集。图 9: 医学预训练语料库概述。

| Type of Data | #Doc | Source |

| Chinese | ||

| WebCorpus | 640,621 | Wudao |

| Books Encyclopedia | 1,802,517 411,183 | Textbook Online Encyclopedia |

| Literature | 177,261 | ChineseLiterature |

| English | ||

| Web Corpus | 394,490 | C4 |

| Books | 801,522 | Textbook, |

| Encyclopedia | 147,059 | the_ pile_ books3 Wikipida |

| Literature | 878,241 | PubMed |

| Total | 5,252,894 |

| 数据类型 | 文档数量 | 来源 |

|---|---|---|

| 中文 | ||

| 网络语料 | 640,621 | Wudao |

| 书籍百科 | 1,802,517 | Textbook Online Encyclopedia |

| 411,183 | ||

| 文学作品 | 177,261 | ChineseLiterature |

| 英文 | ||

| 网络语料 | 394,490 | C4 |

| 书籍 | 801,522 | Textbook |

| 百科全书 | 147,059 | the_ pile_ books3 Wikipida |

| 文学作品 | 878,241 | PubMed |

| 总计 | 5,252,894 |

foundational corpus. We identified four primary data types for extensive collection: encyclopedias, books, academic literature, and web content. Focusing on these categories, we amassed 1.1TB of Chinese and English data, as shown in Table 9.

基础语料库。我们确定了四种主要数据类型进行大规模收集:百科全书、书籍、学术文献和网络内容。聚焦这些类别,我们积累了1.1TB的中英文数据,如表9所示。

The domain data collection pipeline is an essential part of ensuring the quality of domain language corpora, designed to extract high-quality and diverse domain corpora from large-scale corpora. The methodology encompasses four primary steps:

领域数据收集流程是确保领域语言语料质量的关键环节,旨在从大规模语料中提取高质量、多样化的领域语料。该方法包含四个主要步骤:

- Extract Medical Corpus: This process aims to remove irrelevant domain corpora, serving as the first step in filtering massive corpora. We employ a dictionary-based approach, obtaining dictionaries containing medical vocabulary from THUOCL5 and The SPECIALIST Lexicon6 from the Unified Medical Language System. We strive to exclude non-medical terms to form a domain-specific dictionary. For each text segment, we evaluate whether it’s domain-specific by assessing the density of matched domain words from the dictionary. This dictionary method is an effective and efficient way to extract domain text.

- 提取医学语料库:该流程旨在去除无关领域的语料,作为海量语料筛选的第一步。我们采用基于词典的方法,从THUOCL5和统一医学语言系统中的SPECIALIST Lexicon6获取包含医学术语的词典。通过排除非医学术语,力求构建专业领域词典。针对每个文本片段,我们通过评估词典中匹配领域词汇的密度来判断其领域相关性。这种词典方法是提取领域文本高效且有效的方式。

Our data sources consist of four categories: (1) Web Corpus, which includes C4 (Raffel et al., 2020) and Wudao (Yuan et al., 2021); (2) Books, primarily comprising Textbook and the pile books 3(Gao et al., 2020); (3) Encyclopedia, encompassing Chinese Medical Encyclopedia and Wikipedia; (4) Medical Literature, which consists of PubMed and Chinese literature. Following the aforementioned steps, the corpus is converted into 5,252K of instruct