DISC-MedLLM: Bridging General Large Language Models and Real-World Medical Consultation

DISC-MedLLM:连接通用大语言模型与现实世界医疗咨询

Zhijie Bao1, 2, Wei Chen1, Shengze Xiao1, Kuang Ren3, Jiaao $\mathbf{W}\mathbf{u}^{1}$ , Cheng Zhong1, Jiajie Peng2 * , Xuanjing Huang4, Zhongyu Wei1, $^{5\ast}$

Zhijie Bao1, 2, Wei Chen1, Shengze Xiao1, Kuang Ren3, Jiaao $\mathbf{W}\mathbf{u}^{1}$, Cheng Zhong1, Jiajie Peng2* , Xuanjing Huang4, Zhongyu Wei1, $^{5\ast}$

1School of Data Science, Fudan University, China 2School of Computer Science, Northwestern Polytechnic al University, China 3 University of Toronto, Canada 4School of Computer Science, Fudan University, China 5Research Institute of Automatic and Complex Systems, Fudan University, China zhijiebao $@$ mail.nwpu.edu.cn, szxiao 23a .fudan.edu.cn, kuang.ren $@$ mail.utoronto.ca, zhong7414@gmail.com, jiajiepeng $@$ nwpu.edu.cn, {chenwei18, xjhuang, zywei}@fudan.edu.cn

1复旦大学数据科学学院,中国 2西北工业大学计算机学院,中国 3多伦多大学,加拿大 4复旦大学计算机学院,中国 5复旦大学自动化与复杂系统研究所,中国 zhijiebao $@$ mail.nwpu.edu.cn, szxiao 23a .fudan.edu.cn, kuang.ren $@$ mail.utoronto.ca, zhong7414@gmail.com, jiajiepeng $@$ nwpu.edu.cn, {chenwei18, xjhuang, zywei}@fudan.edu.cn

Abstract

摘要

We propose DISC-MedLLM, a comprehensive solution that leverages Large Language Models (LLMs) to provide accurate and truthful medical response in end-to-end convers at ional healthcare services. To construct high-quality Supervised Fine-Tuning (SFT) datasets, we employ three strategies: utilizing medical knowledge-graphs, reconstructing real-world dialogues, and incorporating human-guided preference rephrasing. These datasets are instrumental in training DISC-MedLLM, surpassing existing medical LLMs in both single-turn and multi-turn consultation scenarios. Extensive experimental results demonstrate the effectiveness of the proposed model in bridging the gap between general language models and real-world medical consultation. Additionally, we release the constructed dataset and model weights to further contribute to research and development. Further details and resources can be found at https://github. com/FudanDISC/DISC-MedLLM.

我们提出DISC-MedLLM,这是一种利用大语言模型(LLMs)在端到端对话医疗服务中提供准确真实医疗响应的综合解决方案。为构建高质量的监督微调(SFT)数据集,我们采用三种策略:运用医学知识图谱、重构真实世界对话、融合人工引导的偏好改写。这些数据集对训练DISC-MedLLM至关重要,使其在单轮和多轮诊疗场景中均超越现有医疗大语言模型。大量实验结果表明,该模型能有效弥合通用语言模型与实际医疗咨询之间的差距。我们同时开源了构建的数据集和模型权重以促进研究发展。更多细节与资源请访问https://github.com/FudanDISC/DISC-MedLLM。

Introduction

引言

The emergence of the tele medicine industry has reshaped the healthcare service, offering remote medical consultations, broadening access to professionals, and trimming medical costs (Haleem et al. 2021). Additionally, intelligent medical systems have enriched online medical services by incorporating features like medical information extraction (Lin et al. 2019; Zhang et al. 2020; Chen et al. 2023b), drug recommendation (He, Folkman, and Borgwardt 2018; Zheng et al. 2021), automated diagnosis (Wei et al. 2018; Zhong et al. 2022; Chen et al. 2023c), and health question answering (He et al. 2020; Pal, Umapathi, and Sankara sub bu 2022), etc.

远程医疗行业的兴起重塑了医疗服务模式,通过提供远程问诊服务、拓宽专业资源获取渠道并降低医疗成本 (Haleem et al. 2021)。智能医疗系统进一步丰富了线上医疗服务功能,整合了医疗信息抽取 (Lin et al. 2019; Zhang et al. 2020; Chen et al. 2023b)、药物推荐 (He, Folkman, and Borgwardt 2018; Zheng et al. 2021)、自动化诊断 (Wei et al. 2018; Zhong et al. 2022; Chen et al. 2023c) 和健康问答 (He et al. 2020; Pal, Umapathi, and Sankara sub bu 2022) 等模块。

While progress has been made in developing intelligent healthcare systems, previous studies primarily focuses on specific tasks or diseases with limited applicability, creating a gap between experimental advancements and practical applications (Yang et al. 2022). To bridge this gap, there is a need of comprehensive solutions for a broader range of medical scenarios, and providing high-quality healthcare services to users in an end-to-end conversational manner.

尽管在开发智能医疗系统方面已取得进展,但以往研究主要局限于特定任务或疾病,适用性有限,导致实验进展与实际应用之间存在差距 (Yang et al. 2022)。为弥合这一差距,需要针对更广泛医疗场景提供综合解决方案,并以端到端对话形式为用户提供高质量医疗服务。

Recently, Large Language Models (LLMs) (OpenAI 2022; Touvron et al. 2023; Wei et al. 2021; Ouyang et al. 2022) have showed impressive ability to follow human instructions and to engage in meaningful conversations. These developments have opened up new possibilities for building medical consultation systems. However, medical consultation scenarios are usually complicated and beyond the capability of LLMs from general domain.

近来,大语言模型 (OpenAI 2022; Touvron et al. 2023; Wei et al. 2021; Ouyang et al. 2022) 展现出遵循人类指令并参与有意义对话的卓越能力。这些发展为构建医疗咨询系统开辟了新可能。然而,医疗咨询场景通常复杂多变,超出了通用领域大语言模型的能力范围。

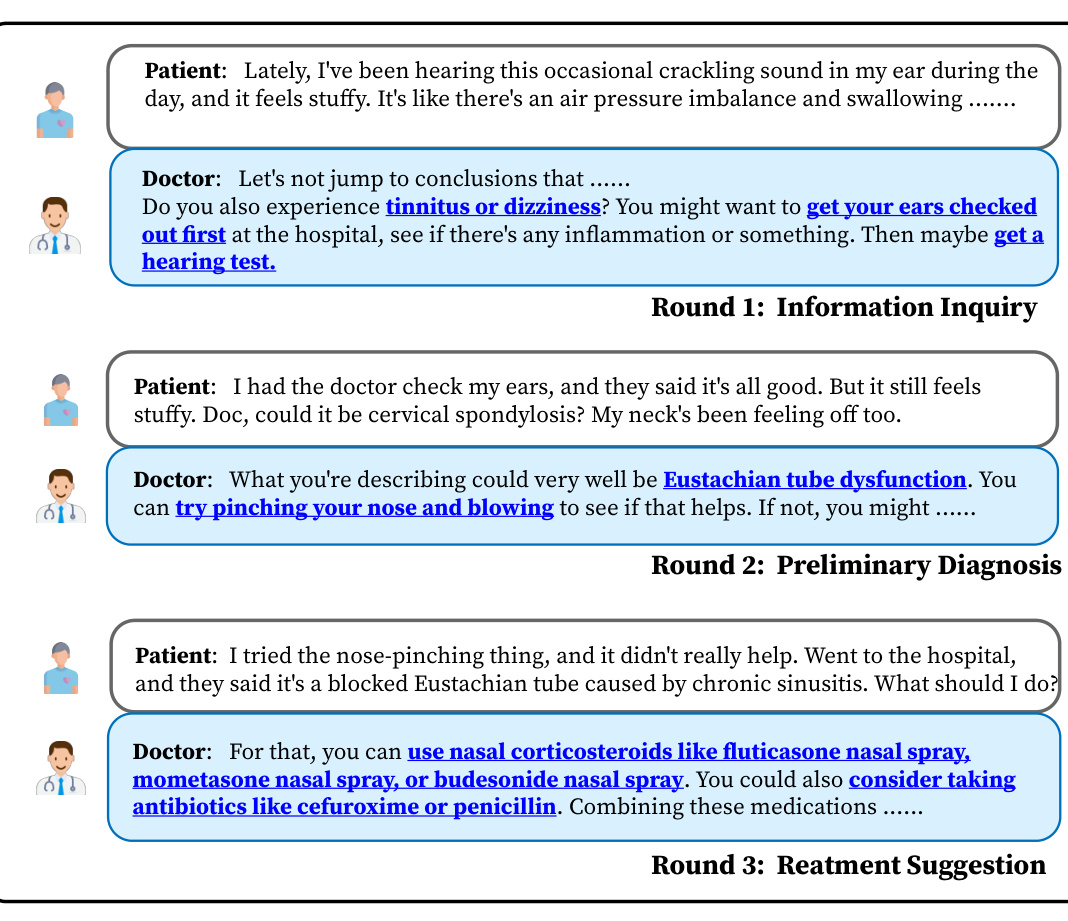

An example of real-world medical consultation is shown in Figure 1. It reveals two characteristics. Firstly, it requires intensive and reliable medical knowledge to understand the dialogue and make proper response in every steps. General domain LLMs reveal serious problems of hallucination by generating irrelevant content to the specific case. Secondly, it usually takes multiple turns to gather sufficient patient information before providing healthcare consultation and each round of conversation has specific intention. However, general domain LLMs tend to be single-turn agents with limited multi-turn inquiring capabilities regarding the details of a user’s health condition.

图 1: 展示了一个真实世界医疗咨询的示例。该案例揭示了两大特征:首先,理解对话并在每一步做出恰当回应需要密集且可靠的医学知识。通用领域大语言模型在生成与特定病例无关内容时表现出严重的幻觉问题;其次,通常需要多轮对话才能收集足够的患者信息以提供医疗建议,且每轮对话都有特定意图。然而,通用领域大语言模型往往是单轮智能体,在针对用户健康状况细节的多轮询问能力上存在局限。

Based on these two observations, we argue that medical LLMs should encode intensive and reliable medical knowledge while aligning with the real-world medical dialogue distribution. Motivated by the success of Instruction Tuning (Wang et al. 2022), we explore to construct high quality Supervised Fine Tuning (SFT) datasets for training medical LLMs, and inject medical knowledge and consultation behavior patterns into the large language model. In practice, we construct samples following three strategies.

基于这两点观察,我们认为医疗大语言模型应当编码密集且可靠的医学知识,同时与现实世界的医疗对话分布对齐。受指令微调 (Instruction Tuning) [20] 成功经验的启发,我们探索构建高质量的监督微调 (Supervised Fine Tuning, SFT) 数据集来训练医疗大语言模型,并将医学知识和问诊行为模式注入大语言模型。实践中,我们采用三种策略构建样本:

• Medical knowledge-graph driven sample construction. We use a department-oriented strategy to sample knowledge triples from a medical knowledge graph following a patient query distribution obtained from a real-world consultation dataset. For each triple, GPT-3.5 is used to construct QA pairs in a few-shot manner. This results in 50k samples. • Real-world dialogue re-construction. Consultation records collected from medical forums are appropriate sources for LLMs fine-tuning. However, these records contain informal language usage, inconsistent terminology presentation, and different expressive style from different healthcare professionals. Therefore, we utilize GPT-3.5 to re-generate the dialogue based on real cases. This results in $420\mathrm{k\Omega}$ samples.

• 医学知识图谱驱动的样本构建。我们采用科室导向策略,根据从真实问诊数据集中获取的患者查询分布,从医学知识图谱中采样知识三元组。针对每个三元组,使用GPT-3.5以少样本方式构建问答对,最终生成5万条样本。

• 真实对话重构。从医疗论坛收集的问诊记录是大语言模型微调的合适数据源,但这些记录存在非正式用语、术语表述不一致以及不同医疗专业人员表达风格差异等问题。因此,我们利用GPT-3.5基于真实案例重新生成对话,最终获得$420\mathrm{k\Omega}$条样本。

Figure 1: Dialogue Sample between a human doctor and a patient. The blue-highlighted text indicates medical entities involved in the doctor’s response. Doctor’s behavior reveals specific intention in each round: (1) in the round 1, further inquiries for information that aids in determining possible situations; (2) in the round 2, arrives at a preliminary diagnosis and provide valid recommendations; (3) in round 3, offers specific treatment options based on the medical condition.

图 1: 人类医生与患者的对话示例。蓝色高亮文本表示医生回应中涉及的医疗实体。医生的行为在每轮对话中展现出特定意图:(1) 在第一轮中,进一步询问有助于判断病情的信息;(2) 在第二轮中,得出初步诊断并提供有效建议;(3) 在第三轮中,根据病情提供具体治疗方案。

• Human preference following sample collection. For the alignment of human preference, we manually select a small set of entries from the real-world medical dialogue records covering different consultation situations and manually rewrite some samples. After the human guided re-construction, we further ensure the overall quality of each dialogue. This results in 2k samples.

• 人工偏好样本收集。为对齐人类偏好,我们从真实世界医疗对话记录中手动筛选出涵盖不同咨询场景的小规模条目,并对部分样本进行人工改写。经过人工引导重构后,我们进一步确保每段对话的整体质量,最终获得2000条样本。

The constructed SFT datasets are then utilized to train DISC-MedLLM following a two-stage training mechanism on top of a general domain Chinese LLM with 13B parameters 1. We assess the model’s performance from two perspectives to check its capability of providing accuracy answers in single-turn conversations and presenting systematical consultation in multi-turn conversations, respectively. For single-turn evaluation, we construct a benchmark consisting of multiple choices questions collected from three public medical datasets and evaluate the model’s accuracy.

构建的SFT数据集随后被用于在13B参数的中文通用大语言模型基础上,通过两阶段训练机制训练DISC-MedLLM。我们从两个角度评估模型性能:一是检验其在单轮对话中提供准确回答的能力,二是考察其在多轮对话中呈现系统化咨询的能力。针对单轮评估,我们构建了一个由三个公共医疗数据集收集的多选题组成的基准,并评估模型的准确率。

For multi-turn evaluation, we first construct a small set of high quality consulting cases, and then employ GPT-3.5 play the role of the patient based on the cases, and chat with the model. We use GPT-4 to evaluate the model’s pro activity, accuracy, helpfulness and linguistic quality.

在多轮评估中,我们首先构建了一小部分高质量的咨询案例,然后利用GPT-3.5根据这些案例扮演患者角色与模型对话。通过GPT-4评估模型的主动性、准确性、帮助性和语言质量。

The experimental results demonstrate that DISCMedLLM outperforms the medical large-scale model HuatuoGPT (Zhang et al. 2023) with same parameters (13B) by over $10%$ on average in medical multiple-choice questions, although still falls behind that of GPT-3.5. Moreover, in simulated medical consultation scenarios, DISC-MedLLM exhibits superior overall performance compared to baseline models such as GPT-3.5, HuatuoGPT, and BianQue (Chen et al. 2023d). Particularly in the scenarios involving medical departments and patient intents, DISC-MedLLM achieves the best performance among Chinese medical LLMs.

实验结果表明,在相同参数量(13B)下,DISCMedLLM在医学多选题上的平均表现比医疗大模型华佗GPT (Zhang et al. 2023) 高出超过$10%$,但仍落后于GPT-3.5。此外,在模拟医疗咨询场景中,DISC-MedLLM相较于GPT-3.5、华佗GPT和扁鹊(Chen et al. 2023d)等基线模型展现出更优的综合性能。尤其在涉及医疗科室和患者意图的场景中,DISC-MedLLM在所有中文医疗大语言模型中取得了最佳表现。

Advantages of DISC-MedLLM

DISC-MedLLM的优势

In this section, we discuss the advantages of the proposed DISC-MedLLM, particularly its deliberated constructed dataset for fine-tuning. The overall framework is illustrated in Figure 2. The dataset is primarily sourced from both medical knowledge graph and real-world doctor-patient consultations and the sample selection process is following a hospital department distribution extracted from real-world dataset.

在本节中,我们讨论了所提出的DISC-MedLLM的优势,特别是其精心构建的微调数据集。整体框架如图2所示。该数据集主要来源于医学知识图谱和真实医患咨询记录,样本筛选过程遵循从真实数据中提取的医院科室分布规律。

Figure 2: Overview of the construction of DISC-Med-SFT. The DICS-Med-SFT dataset is constructed using various data sources, including real-world conversations and knowledge graphs, and combines the mechanisms of LLM-in-the-loop and Human-in-the-loop to form its three components: Re-constructed AI Doctor-Patient Dialogue, Knowledge Graph QA pairs, Behavioral Preference Dataset.

图 2: DISC-Med-SFT构建流程概览。DISC-Med-SFT数据集通过整合真实医患对话、知识图谱等多源数据,结合大语言模型 (LLM-in-the-loop) 与人工校验 (Human-in-the-loop) 机制,形成三大组成部分:重构AI医患对话、知识图谱问答对、行为偏好数据集。

Two sample construction mechanisms are utilized, LLM in the loop plays a role in paraphrasing real-world conversations, while human in the loop ensures alignment with high-quality conversational behaviors. These efforts result in three key features of DISC-MedLLM: knowledge-intensive and reliable, ability of multi-turn inquiry, and alignment with human preferences.

采用两种样本构建机制:大语言模型 (LLM) 在循环中负责改写真实对话,而人工在循环中确保与高质量对话行为对齐。这些努力使DISC-MedLLM具备三大核心特性:知识密集且可靠、支持多轮问询能力、符合人类偏好。

Knowledge Intensive and Reliable

知识密集型与可靠

In general-domain instruction tuning, it is popular to use diverse instruction examples generated by model like ChatGPT, that is, the instruction examples are derived from the model’s own knowledge (Wang et al. 2022). However, relying solely on the model itself is dangerous in the healthcare field, as LLMs itself have serious hallucination problems. Therefore, in our setting, we do not rely on the LLM to generate any medical knowledge, instead, the knowledge is entirely derived from reliable data sources, including medical knowledge graphs (from human labeled) and real doctorpatient dialogues (from doctors). The role of ChatGPT is to rewrite rather than generate. The paraphrased samples retain the underlying medical domain knowledge entirely, while ChatGPT supplements and elaborates on non-essential information. For instance, appropriate responses can provide additional details about the causes and information regarding a particular medical condition.

在通用领域的指令微调中,流行使用如ChatGPT等模型生成的多样化指令样本,即这些指令样本源自模型自身的知识 (Wang et al. 2022)。然而,在医疗健康领域仅依赖模型本身是危险的,因为大语言模型本身存在严重的幻觉问题。因此,在我们的设定中,我们不依赖大语言模型生成任何医学知识,而是完全从可靠数据源获取知识,包括医学知识图谱(来自人工标注)和真实医患对话(来自医生)。ChatGPT的作用是重写而非生成。改写后的样本完全保留了底层医学领域知识,同时由ChatGPT补充和阐述非核心信息。例如,恰当的回应可以提供关于特定病症成因及相关信息的额外细节。

Ability of Multi-turn Inquiry

多轮询问能力

Models like ChatGPT tend to provide detailed template-like responses in one single turn when faced with healthcare inquiries, while overlooking effective inquiry and clarification of the patient’s condition. In online pediatric disease consultations, for example, the average interaction between doctors and patients spans around 40 turns, with half of turns dedicated to discussing the patient’s symptom details (Chen et al. 2023a). DISC-MedLLM leverages real-world multi-turn pa- tient doctor conversations, equipping the model with ability of inquiry that enable it to engage in meaningful medical inquiries. As most patients find it challenging to describe their complete physical condition in one go, possessing inquiry capabilities important and essential.

面对医疗健康咨询时,像ChatGPT这样的模型倾向于在单轮对话中提供模板化的详细回答,却忽略了有效询问和澄清患者病情的环节。例如,在在线儿科疾病咨询中,医患平均交互轮次达40次左右,其中半数对话用于讨论患者症状细节 (Chen et al. 2023a)。DISC-MedLLM利用真实世界多轮医患对话数据,使模型具备主动询问能力,从而开展有临床意义的医学问诊。由于多数患者难以一次性完整描述身体状况,这种询问能力显得尤为重要且必要。

Alignment with Human Preference

与人类偏好的对齐

During patient communication, human doctors exhibit concise and direct behavior. However, they often lack sufficient empathy, resulting in providing incomplete or insufficiently detailed assistance. In contrast, models like ChatGPT have behavior patterns that can be adjusted based on human preferences, leading to a tendency to provide users with as much information and help as possible. Therefore, DISC-MedLLM improves upon this by in two significant ways: 1) using human preference guided behavioral preference dataset to better align its response and behavior more closely with human preference; 2) distill the behavior patterns of ChatGPT, resulting in responses that demonstrate comprehensive explanations and a high level of empathy when engaging with patients.

在医患沟通中,人类医生表现出简洁直接的行为特征,但往往缺乏足够的同理心,导致提供的帮助不完整或不够细致。相比之下,ChatGPT等模型的行为模式可根据人类偏好调整,倾向于为用户提供尽可能多的信息和帮助。为此,DISC-MedLLM通过两大核心改进实现优化:1) 采用人类偏好引导的行为偏好数据集,使模型响应与行为更贴合人类期望;2) 蒸馏ChatGPT的行为模式,确保与患者互动时既能提供全面解释,又保持高度同理心。

Table 1: Dataset Details of DISC-Med-SFT-ext, it extends from DISC-Med-SFT by incorporating general datasets. The table provides an overview of our training datasets and the corresponding capabilities they grant to the model.

| Dataset | Original Source | Size | Abilities | |||

| Domain Knowledge | Behavioral Pattern | Dialogue Ability | Human Preference | |||

| Re-constructed AI Doctor-PatientDialogue | MedDialog2 | 400k | ||||

| cMedQA23 | 20k | |||||

| CMeKG4 | 50k | |||||

| QA pairs BehavioralPreferenceDataset | Manualselection | 2k | ||||

| Others | MedMCQA5 | 8k | ||||

| MOSS6 | 33k | |||||

| Alpaca-GPT47 | 1k | |||||

表 1: DISC-Med-SFT-ext 数据集详情,该数据集通过整合通用数据集从 DISC-Med-SFT 扩展而来。表格概述了我们的训练数据集及其赋予模型的相应能力。

| 数据集 | 原始来源 | 规模 | 领域知识 | 行为模式 | 对话能力 | 人类偏好 |

|---|---|---|---|---|---|---|

| * * 重构的AI医患对话* * | MedDialog2 | 400k | ||||

| cMedQA23 | 20k | |||||

| CMeKG4 | 50k | |||||

| * * QA行为偏好数据集* * | 人工筛选 | 2k | ||||

| * * 其他* * | MedMCQA5 | 8k | ||||

| MOSS6 | 33k | |||||

| Alpaca-GPT47 | 1k |

To a certain extent, DISC-MedLLM can be conceptualized as an amalgamation of two mentors: the first being the acquisition of medical knowledge and decision-making from doctor-patient conversations, and the second involving the assimilation of behavioral patterns and human preferences from ChatGPT. Through the integration of these two mentors and precise sampling with human intervention, DISC-MedLLM strives to align itself with the observed medical consultation distributions present in the real world.

在一定程度上,DISC-MedLLM可以被视为两位导师的结合体:第一位是从医患对话中获取医学知识和决策能力,第二位则涉及从ChatGPT中学习行为模式和人类偏好。通过整合这两位导师并辅以人工干预的精确采样,DISC-MedLLM力求与现实世界中观察到的医疗咨询分布保持一致。

DISC-Med-SFT

DISC-Med-SFT

To train DISC-MedLLM, we construct a high-quality dataset called DISC-Med-SFT consisting of over 470k examples derived from existing medical datasets. This comprehensive dataset encompasses various scenarios, including single-turn medical Q&A, multi-turn medical consultations and medical multiple-choice Q&A. Additionally, we incorporated over $34\mathrm{k\Omega}$ general domain conversation and instruction samples. Detailed information regarding the employed datasets is provided in Table 1.

为训练DISC-MedLLM,我们构建了一个名为DISC-Med-SFT的高质量数据集,包含从现有医疗数据集中提取的47万多个样本。该综合性数据集涵盖多种场景,包括单轮医疗问答、多轮医疗咨询和医疗多选题。此外,我们还整合了超过$34\mathrm{k\Omega}$个通用领域对话和指令样本。所用数据集的详细信息见表1。

It is worth noting that our approach differs from simply gathering a large volume of NLP datasets in the medical domain and manually creating diverse instructions as Wei et al.. Instead, we adopt a goal-oriented strategy by selectively reconstructing the dataset using a few deliberately chosen sources. These data sources serve the purpose of assisting LLMs in acquiring medical domain knowledge, aligning behavioral patterns with human preferences, and capturing real-world online medical dialogue distributions.

值得注意的是,我们的方法不同于Wei等人简单地收集大量医学领域的NLP数据集并手动创建多样化指令的做法。相反,我们采用目标导向策略,通过精选少量数据源对数据集进行重构。这些数据源旨在帮助大语言模型获取医学领域知识、使行为模式符合人类偏好,并捕捉真实世界在线医疗对话的分布。

Real-world Dialogue Records

真实世界对话记录

We choose two public datasets collected from online forums as the sources of real-world dialgoue records, namely, MedDialog (Chen et al. 2020b) and cMedQA2 (Zhang et al. 2018). MedDialog contains over 3 million multi-turn conversations between doctors and patients and the topic focuses on medical consultation scenarios. cMedQA2 contains $108\mathrm{k}$ single-turn conversations encompassing both consultation and advisory situations. After filtering records using keyword filters and named entity recognition, We randomly select $400\mathrm{k\Omega}$ and 20k samples from each dataset respectively as source samples for SFT dataset construction.

我们选择两个从在线论坛收集的公开数据集作为真实世界对话记录的来源,即MedDialog (Chen et al. 2020b) 和 cMedQA2 (Zhang et al. 2018)。MedDialog包含超过300万轮医患多轮对话,主题聚焦于医疗咨询场景。cMedQA2包含 $108\mathrm{k}$ 轮单轮对话,涵盖咨询和顾问两种情境。通过关键词过滤和命名实体识别筛选记录后,我们分别从每个数据集中随机选取 $400\mathrm{k\Omega}$ 和20k样本作为SFT数据集构建的源样本。

Real-world dialogues are noisy in linguistic patterns and expression styles of different doctors vary; moreover, doctor’s responses might not align with the identify of an AI doctor. In order to obtain high quality conversation samples, we employ the language ability of general LLMs to reconstruct the entire dialogue. We design some prompts (Figure B1) for GPT-3.5, following several rules stated below.

现实世界中的对话在语言模式上存在噪音,且不同医生的表达风格各异;此外,医生的回应可能与AI医生的身份不符。为获取高质量对话样本,我们利用通用大语言模型的语言能力重构整个对话。基于以下规则,我们为GPT-3.5设计了一些提示词 (图 B1)。

• Remove colloquial expressions, address inconsistencies in the doctor’s language use, and distill more uniform expressions from the LLM. • Adhere to the key information in the original doctor’s response, and based on that, provide an appropriate explanation and supplement to the original answer, rephrasing it in a more detailed and logical manner. • Rewrite or remove responses that shouldn’t be made by an AI doctor, such as viewing imaging materials or asking the patient to register for an appointment.

• 去除口语化表达,修正医生语言使用的不一致,并从大语言模型中提炼出更统一的表达方式。

• 遵循医生原始回答中的关键信息,并在此基础上对原答案进行适当的解释和补充,以更详细、更具逻辑性的方式重新表述。

• 重写或删除不应由AI医生作出的回应,例如查看影像资料或要求患者预约挂号。

Figure A2 displays a sample in resulted dataset. After reconstruction, the doctor’s response aligns with the identity of the AI medical assistant. The overall response adheres to the key information provided in the original answer by the doctor, and offers more comprehensive assistance to the patient with richer content.

图 A2: 展示了结果数据集中的一个样本。经过重构后,医生的回答与AI医疗助手的身份保持一致。整体回复遵循了医生原始答案中的关键信息,并以更丰富的内容为患者提供了更全面的帮助。

Figure 3: The two stage training process of DISC-MedLLM. Stage 1 equips the model with l with domain knowledge and medical dialogue capability using diverse datasets. In Stage 2, the model’s performance is enhanced through the Behavioral Preference Dataset, which aligned with human preferences..

图 3: DISC-MedLLM 的两阶段训练流程。第一阶段通过多样化数据集使模型具备领域知识和医疗对话能力。第二阶段利用符合人类偏好的行为偏好数据集提升模型性能。

Knowledge Graph QA pairs

知识图谱问答对

The medical knowledge graph contains a large amount of well organized medical expertise. Generating QA training samples based on it can enable us to obtain data with relatively low noise compared with real world samples. We construct QA pairs based on a Chinese medical knowledge graph which contains over 10k diseases, nearly 20k medications, and more than $10\mathrm{k\Omega}$ symptoms. Centered on diseases, we sample the knowledge graph based on the department information of the disease nodes, following the department distribution in the original MedDialog data. We obtain QA pairs through two steps.

医学知识图谱包含大量结构化的医学专业知识。基于此生成问答训练样本,相比真实世界样本能获得噪声相对较低的数据。我们基于一个包含超过1万种疾病、近2万种药物及逾 $10\mathrm{k\Omega}$ 种症状的中文医学知识图谱构建问答对。以疾病为核心,根据疾病节点的科室信息,遵循原始MedDialog数据中的科室分布对知识图谱进行采样。通过两个步骤获取问答对。

Over $50\mathrm{k\Omega}$ diverse medical scenario conversation samples have been generated in total. The details of the prompts used to generate conversations refer to Figure B2 and Figure B3.

总计生成了超过 $50\mathrm{k\Omega}$ 个多样化医疗场景对话样本。用于生成对话的提示词详情参见图 B2 和图 B3。

Human Preferences Guided Conversation Samples

人机对话偏好引导的对话样本

To further enhance the model’s performance and align its response and behavior more closely with human preferences, we need to utilize a higher-quality dataset that aligns more closely with human preferences for secondary supervised fine-tuning in the final training stage. We manually select approximately 2,000 high-quality, diverse samples suitable for adaptation from the MedDialog and cMedQA2 datasets that were not used in the previous data construction process.

为了进一步提升模型性能,使其响应和行为更贴合人类偏好,我们需要在最终训练阶段使用更符合人类偏好的高质量数据集进行二次监督微调。我们从MedDialog和cMedQA2数据集中手动筛选了约2000个高质量、多样化的适用样本,这些样本未在前期数据构建过程中使用。

Depending on the sample’s consultation scenario, disease severity, and other variables, we select several examples to be reconstructed by GPT-4 and then manually revise them to align more closely with our preferences for AI doctor behavior and response quality. Subsequently, using a few-shot approach, we provid these examples to GPT-3.5 to generate 2,000 high-quality behavior-tuning samples under human supervision.

根据样本的咨询场景、疾病严重程度和其他变量,我们选取若干示例由GPT-4重构后人工修订,使其更符合我们对AI医生行为与响应质量的偏好。随后采用少样本策略,在人工监督下向GPT-3.5提供这些示例以生成2,000条高质量的行为调校样本。

Others

其他

MedMCQA MedMCQA (Pal, Umapathi, and Sankarasubbu 2022) is a multiple-choice Q&A dataset in the medical field in English, and it provides expert-annotated explanations for each question. We utilize it to generate professional medical QA samples to enhance the model’s expertise in Q&A capabilities. We refine the questions and correct answers from the multiple-choice questions using GPT-3.5, combined with explanations to generate QA format samples, and then translate them into Chinese. Using this approach, we construct about $8\mathrm{k\Omega}$ samples, of which approximately 2k samples retain the multiple-choice format and are directly translated into Chinese.

MedMCQA

MedMCQA (Pal, Umapathi, and Sankarasubbu 2022) 是一个英文医学领域多项选择题问答数据集,它为每个问题提供了专家标注的解析。我们利用该数据集生成专业医学问答样本,以增强模型在问答能力上的专业性。通过 GPT-3.5 对多项选择题的问题和正确答案进行优化,结合解析生成问答格式样本,并将其翻译为中文。采用这种方法,我们构建了约 $8\mathrm{k\Omega}$ 个样本,其中约 2k 样本保留多项选择题形式并直接翻译为中文。

General We introduce some generic data to enrich the diversity of our training set, mitigating the risk of foundational capability degradation in the model during the SFT training phase, especially if data predominantly leans towards the medical sector (Wen et al. 2023). Specifically, we draw from two general domain supervised fine-tuning datasets: mosssft-003 (Sun et al. 2023) and alpaca gpt4 data zh (Peng et al. 2023). From moss-sft-003, we extract data from the Brainstorming, Role Playing, and Harmless categories, selecting $33\mathrm{k\Omega}$ samples at random. For alpaca gpt4 data zh, considering it is only used in the final stages of training, where sample volume is reduced, we randomly sample 1k instances.

通用数据

我们引入一些通用数据来丰富训练集的多样性,以降低模型在SFT训练阶段基础能力退化的风险,尤其是在数据主要偏向医疗领域的情况下 (Wen et al. 2023)。具体而言,我们选取了两个通用领域的监督微调数据集:moss-sft-003 (Sun et al. 2023) 和 alpaca gpt4 data zh (Peng et al. 2023)。从moss-sft-003中,我们提取了头脑风暴 (Brainstorming)、角色扮演 (Role Playing) 和无害 (Harmless) 类别的数据,并随机选取了 $33\mathrm{k\Omega}$ 个样本。对于alpaca gpt4 data zh,考虑到它仅用于训练的最后阶段(此时样本量已减少),我们随机抽取了1k个实例。

Training Details

训练细节

We develop our model on top of the Baichuan-13B-Base model, which is an open source LLM with over 13.2 billion parameters that was trained on 1.4 trillion tokens corpus, exhibiting ideal performance in both English and Chinese. As illustrated in the Figure 3, our training is divided into two SFT stages, both of which are completed on $4^{* }\mathrm{A}800$ GPUs.

我们在Baichuan-13B-Base模型基础上进行开发,这是一个拥有132亿参数的开源大语言模型(LLM),训练语料规模达1.4万亿token,在中英文场景均展现出优异性能。如图3所示,我们的训练分为两个SFT阶段,均在4* A800 GPU上完成。

• Stage 1: We initially use large-scale samples to imbue the model with domain knowledge and medical dialogue capabilities, including a $420\mathrm{k\Omega}$ adapted AI doctor-patient dialogue dataset, $50\mathrm{k\Omega}$ knowledge graph constructed QA pairs, MedMCQA, and moss-sft-003. The hyper para meters setting for this training process are as follows: global batch size of 24, learning rate of $1e\mathrm{ - }5$ with AdamW optimizer, 1 epochs, maximum sequence length of 2048 tokens, warm up steps of 1800 and with no weight decay. • Stage 2: In this stage, we train the model to align with human preferences in terms of behavioral patterns, usefulness, etc., enabling it to perform better in medical dialogue scenarios. We employ a $2\mathrm{k\Omega}$ meticulously crafted preference-aligned behavioral preference dataset and combine it with 1k alpaca gpt4 data zh data for training. The hyper parameters setting for this training process are as follows: global batch size of 8, learning rate of $5e\mathrm{ - }6$ with AdamW optimizer, 1 epochs, maximum sequence length of 2048 tokens, with no weight decay.

• 阶段1:我们首先使用大规模样本让模型掌握领域知识和医疗对话能力,包括一个42万条适配的AI医患对话数据集、5万条基于知识图谱构建的问答对、MedMCQA以及moss-sft-003。该训练过程的超参数设置如下:全局批次大小为24,采用AdamW优化器且学习率为1e-5,训练1个epoch,最大序列长度为2048个token,预热步数为1800且不进行权重衰减。

• 阶段2:本阶段训练模型使其行为模式、实用性等方面与人类偏好对齐,从而在医疗对话场景中表现更优。我们使用2千条精心构建的行为偏好对齐数据集,并结合1千条alpaca gpt4中文数据进行训练。该训练过程的超参数设置如下:全局批次大小为8,采用AdamW优化器且学习率为5e-6,训练1个epoch,最大序列长度为2048个token,且不进行权重衰减。

Evaluation Setup

评估设置

We evaluate the performance of medical LLMs in two settings, namely, single-turn question answering and multi-turn conversation.

我们评估医疗大语言模型在两种场景下的表现,即单轮问答和多轮对话。

Single-turn Question Answering Evaluation

单轮问答评估

To evaluate the single-round QA capability of LLMs in providing accurate answers to healthcare related questions, we utilize construct a benchmark dataset including multiplechoice questions based on several public datasets. Although open-ended QA setting has been widely employed to test the interactive capability of LLMs in terms of rule-based metrics (ROUGE, BLUE, etc.), it is suitable for evaluating medical consultation systems which care more about the accuracy instead of free-style generation. We use multiple-choice questions to evaluate different systems and use accuracy as the metric.

为评估大语言模型(LLM)在医疗健康相关问答中提供准确答案的单轮能力,我们基于多个公开数据集构建了包含多选题的基准测试集。虽然开放式问答设置已广泛用于通过基于规则的指标(ROUGE、BLUE等)测试大语言模型的交互能力,但多选题形式更适合注重准确性而非自由生成的医疗咨询系统评估。我们采用多选题测试不同系统,并以准确率作为衡量指标。

Multiple-choice Datasets We sample cases from two public datasets to construct our evaluation benchmark. (1) MLEC-QA (Li, Zhong, and Chen 2021) is collected from the National Medical Licensing Examination in China (NMLEC). It is divided into five categories: Clinic, Stomatology, Public Health, Traditional Chinese Medicine, and Integrated Traditional Chinese and Western Medicine. We randomly sample $10%$ from its test set, resulting in a total of 1,362 questions for evaluation. (2) NEEP is a collection of multiple-choice questions from the Western Medicine 306 of the National Entrance Examination for Postgraduate (NEEP) that we manually collated. For Western Medicine 306, we acquire questions from the years 2019 to 2021 and use a combined total of 270 questions from 2020 and 2021 for our tests. The overall statistics of the benchmark is shown in Table 2.

多选题数据集

我们从两个公开数据集中采样案例构建评估基准:(1) MLEC-QA (Li, Zhong, and Chen 2021) 收集自中国国家医学资格考试(NMLEC),分为临床、口腔、公共卫生、中医和中西医结合五个类别。我们从其测试集中随机抽取$10%$,共获得1,362道题目用于评估。(2) NEEP是我们手动整理的全国硕士研究生入学考试西医306多选题合集。对于西医306科目,我们获取了2019至2021年的试题,并选用2020和2021年共计270道题作为测试集。基准数据整体统计如 表 2 所示。

We experiment using both zero-shot and few-shot methodologies. For the few-shot samples, the MLEC-QA examples are chosen from its validation set, while those for NEEP are derived from the 2019 set of questions.

我们同时采用了零样本 (zero-shot) 和少样本 (few-shot) 方法进行实验。少样本示例中,MLEC-QA 的样本选自其验证集,而 NEEP 的样本则来自 2019 年的问题集。

Table 2: Benchmark Evaluation Dataset Details. $10%$ of cases are sampled from original datasets to form the benchmark dataset. MLEC-QA has 5 subsets, including Clinic, Traditional Chinese Medicine Combined with Western Medicine, Public Health, S tomato logy and Traditional Chinese Medicine. NEEP contains Western Medicine 306.

| Dataset | TestSet OriginalSize | SampleSize |

| MLEC-QAClinic | 3362 | 336 |

| MLEC-QACWM | 2674 | 268 |

| MLEC-QAPublicHealth | 1853 | 185 |

| MLEC-QAStomatology | 2644 | 264 |

| MLEC-QATCM | 3086 | 309 |

| NEEP306 | 270 | 270 |

表 2: 基准评估数据集详情。从原始数据集中抽取 10% 的案例构成基准数据集。MLEC-QA 包含 5 个子集:临床、中西医结合、公共卫生、口腔医学和中医。NEEP 包含西医 306。

| 数据集 | 原始测试集大小 | 采样大小 |

|---|---|---|

| MLEC-QA临床 | 3362 | 336 |

| MLEC-QA中西医结合 | 2674 | 268 |

| MLEC-QA公共卫生 | 1853 | 185 |

| MLEC-QA口腔医学 | 2644 | 264 |

| MLEC-QA中医 | 3086 | 309 |

| NEEP306 | 270 | 270 |

Multi-turn Conversation Evaluation

多轮对话评估

In the scenario of multi-turn dialogue, it is insufficient to evaluate the system performance using traditional evaluation metrics. While human evaluation may be a preferable solution, it is costly and difficult to reproduce the evaluation across different projects. Given these considerations, we select a small set of dialogue samples based on real-world cases, and propose four metrics focusing on medical conversation. We employ GPT-3.5 play the role of the patient and chat with the model for three rounds. In addition, we utilize external LLMs as the judge.

在多轮对话场景中,使用传统评估指标衡量系统性能存在不足。虽然人工评估可能是更优方案,但其成本高昂且难以在不同项目间复现评估结果。基于这些考量,我们根据真实案例选取少量对话样本,并针对医疗对话场景提出四项评估指标。我们让GPT-3.5扮演患者角色与模型进行三轮对话,同时采用外部大语言模型作为评判者。

Dialogue Evaluation Datasets We choose samples from three public datasets, and manually check the quality of samples. (1) CMB-Clin provides 74 real-world medical cases (Wang et al. 2023b), detailing patient history summaries, chief complaints, and various laboratory and imaging tests conducted. We using GPT3.5 to generate a initial question based on the patient’s condition for each case. One of the cases wasn’t suitable for our evaluation setup, leaving us with 73 cases.(2) CMD is a medical Q&A dataset with a total of 0.79M Q&A pairs across six departments, containing consultations with explicit demands and diagnostic queries. We randomly choose 20 questions from each department (internal medicine, surgery, pediatrics, andrology, gynecology, and oncology), and this results in 120 samples in total. (3) CMID is a dataset of user queries in the medical domain, where questions are categorized into symptoms, treatment methods, medications and others (Chen et al. 2020a). We randomly select 30 samples from each category and result in 120 cases. The final evaluation set contains 313 cases. CMB-Clin simulates real-world consultation process, while CMD and CMID focus on the evaluation from the perspectives of departmental specialities and user intentions.

对话评估数据集

我们从三个公开数据集中选取样本,并手动检查样本质量。(1) CMB-Clin 提供了74个真实世界医疗案例 (Wang et al. 2023b),详细记录了患者病史摘要、主诉及各项实验室与影像学检查。我们使用GPT3.5根据每个案例的患者状况生成初始问题,其中一个案例不符合评估设置要求,最终保留73个案例。(2) CMD 是包含六个科室共79万组问答对的医疗问答数据集,涵盖明确诉求的咨询和诊断查询。我们从内科、外科、儿科、男科、妇科和肿瘤科每个科室随机选取20个问题,共计120个样本。(3) CMID 是医疗领域用户查询数据集,问题分为症状、治疗方法、药物等类别 (Chen et al. 2020a)。我们从每类随机抽取30个样本,最终获得120个案例。最终评估集包含313个案例。CMB-Clin 模拟真实世界诊疗流程,CMD 和 CMID 则分别从科室专业性和用户意图角度进行评估。

Evaluation Metrics for Conversation In order to perform a systematic al evaluation on dialogue capability, we propose four metrics, namely, pro activity, accuracy, helpfulness, and linguistic quality.

对话评估指标

为了对对话能力进行系统性评估,我们提出四项指标:主动性 (pro activity)、准确性 (accuracy)、有用性 (helpfulness) 和语言质量 (linguistic quality)。

| Method | Model | MLEC-QA Clinic | MLEC-QA CWM | MLEC-QA PublicHealth | MLEC-QA Stomatology | MLEC-QA TCM | NEEP306 | Avarage |

| few-shot | GPT-3.5 | 58.63 | 45.9 | 53.51 | 51.52 | 43.47 | 44.81 | 49.64 |

| Baichuan-13b-Chat | 31.25 | 37.69 | 28.65 | 27.27 | 29.77 | 24.81 | 29.91 | |

| HuatuoGPT(13B) | 31.85 | 25 | 32.43 | 32.95 | 26.54 | 24.44 | 28.87 | |

| DISC-MedLLM | 44.64 | 41.42 | 41.62 | 38.26 | 39.48 | 33.33 | 39.79 | |

| zero-shot | GPT-3.5 | 47.32 | 33.96 | 48.11 | 39.77 | 38.83 | 33.33 | 40.22 |

| Baichuan-13b-Chat | 44.05 | 43.28 | 39.92 | 31.06 | 41.42 | 32.22 | 38.66 | |

| HuatuoGPT(13B) | 27.38 | 21.64 | 25.95 | 25.76 | 24.92 | 20.37 | 24.34 | |

| DISC-MedLLM | 44.64 | 37.31 | 35.68 | 34.85 | 41.75 | 31.11 | 37.56 |

Table 3: Results of multiple-choice benchmark. The highest score is highlighted in bold, while the second is underscored.

| 方法 | 模型 | MLEC-QA Clinic | MLEC-QA CWM | MLEC-QA PublicHealth | MLEC-QA Stomatology | MLEC-QA TCM | NEEP306 | 平均 |

|---|---|---|---|---|---|---|---|---|

| 少样本 | GPT-3.5 | 58.63 | 45.9 | 53.51 | 51.52 | 43.47 | 44.81 | 49.64 |

| Baichuan-13b-Chat | 31.25 | 37.69 | 28.65 | 27.27 | 29.77 | 24.81 | 29.91 | |

| HuatuoGPT(13B) | 31.85 | 25 | 32.43 | 32.95 | 26.54 | 24.44 | 28.87 | |

| DISC-MedLLM | 44.64 | 41.42 | 41.62 | 38.26 | 39.48 | 33.33 | 39.79 | |

| 零样本 | GPT-3.5 | 47.32 | 33.96 | 48.11 | 39.77 | 38.83 | 33.33 | 40.22 |

| Baichuan-13b-Chat | 44.05 | 43.28 | 39.92 | 31.06 | 41.42 | 32.22 | 38.66 | |

| HuatuoGPT(13B) | 27.38 | 21.64 | 25.95 | 25.76 | 24.92 | 20.37 | 24.34 | |

| DISC-MedLLM | 44.64 | 37.31 | 35.68 | 34.85 | 41.75 | 31.11 | 37.56 |

表 3: 选择题基准测试结果。最高分以粗体标出,次高分以下划线标注。

• Pro activity: The doctor can pro actively and clearly request the patient to provide more information when the information is insufficient.

• 主动性:当信息不足时,医生能够主动且明确地要求患者提供更多信息。

GPT4-as-a-Judge Strong LLM judges like GPT-4 can match both controlled and crowd sourced human preferences well (Zheng et al. 2023). In this evaluation, GPT-4 serves as a referee and perform evaluation in providing a rating score from 1 to 5 for each of the four criteria.

GPT4-as-a-Judge 强大的大语言模型评判者(如GPT-4)能很好地匹配受控和众包的人类偏好 (Zheng et al. 2023)。本次评估中,GPT-4作为裁判,从1到5分为四个标准分别提供评分。

Evaluation Results

评估结果

We compare DISC-MedLLM with some competitive systems and present results for both single-turn and multi-turn evaluations.

我们对比了DISC-MedLLM与一些竞争性系统,并展示了单轮和多轮评估的结果。

Comparative Models

对比模型

We are positioning our model in comparison with three general-purpose LLMs and two specialized conversational Chinese medical LLMs. These include: (1) GPT-3.5 (OpenAI 2022), one of the most powerful and most widely used LLM developed by OpenAI; (2)GPT-4 (OpenAI 2023) the subsequent iteration of GPT3.5, exhibits the most advanced overall performance among the existed LLM series. (3) Baichuan-13B-Chat (Baichuan-inc 2023), the chat version of the 13 billion parameter pre-trained Baichuan-13B-Base model; (4) BianQue-2 (Chen et al. 2023d), an open-sourced Chinese medical LLM with 6 billion parameters; and (5) HuatuoGPT-13B (Zhang et al. 2023), a Chinese large language model fine-tuned on both distilled and real-world data for medical use. GPT-4 and BianQue-2 have not been extensively tested in multiple-choice question answering, due to billing constraints and non-conformance to the expected output, respectively.

我们将模型定位与三款通用大语言模型和两款专业的中文医疗对话大语言模型进行比较。包括:(1) GPT-3.5 (OpenAI 2022),由OpenAI开发的最强大且应用最广泛的大语言模型之一;(2) GPT-4 (OpenAI 2023),作为GPT-3.5的后续版本,在现有大语言模型系列中展现出最先进的综合性能;(3) Baichuan-13B-Chat (Baichuan-inc 2023),基于130亿参数预训练模型Baichuan-13B-Base优化的对话版本;(4) 扁鹊-2 (Chen et al. 2023d),开源的60亿参数中文医疗大语言模型;(5) 华佗GPT-13B (Zhang et al. 2023),基于蒸馏数据和真实医疗数据微调的中文大语言模型。由于计费限制和输出格式不符,GPT-4和扁鹊-2尚未在选择题答题场景进行充分测试。

Results for Single-Turn QA

单轮问答结果

The overall results of multiple-choice assessment are shown in Table 3. GPT-3.5 demonstrates an undeniable lead. Our model achieves the second best results in the few-shot setup. In the zero-shot setting, DISC-MedLLM trails behind Baichuan-13B-Chat, ranking the third. It is worthy to know that we perform better than HuatuoGPT(13B) which is trained in reinforcement learning setup.

多项选择题评估的总体结果如表 3 所示。GPT-3.5 展现出无可争议的领先优势。我们的模型在少样本设置中取得了第二好的成绩。在零样本设置下,DISC-MedLLM 落后于 Baichuan-13B-Chat,排名第三。值得注意的是,我们的表现优于采用强化学习训练的 HuatuoGPT(13B)。

Results for Multi-turn Conversation

多轮对话结果

In the CMB-Clin evaluation, DISC-MedLLM garners the top aggregate score, with HuatuoGPT coming in a close second as is shown in Table 4. All three general-purpose models demonstrate commendable performances during this assessment. While GPT-4 excels in the accuracy and usefulness categories, its inclination to address issues within its current interaction leads to a diminished pro activity score compared to GPT-3.5. Significantly, our model registers the highest score in the pro activity criterion, underscoring the effectiveness of our tailored approach for medical model behavi