ChatRadio-Valuer: A Chat Large Language Model for General iz able Radiology Report Generation Based on Multi-institution and Multi-system Data

ChatRadio-Valuer: 基于多机构多系统数据的通用放射学报告生成对话大语言模型

Tianyang Zhong ∗1, Wei Zhao ∗ $^{\mathrm{2,3,4}}$ , Yutong Zhang $^{5}$ , Yi Pan †6, Peixin Dong $^{\dagger1}$ , Zuowei Jiang $^{\dagger1}$ , Xiaoyan Kui $^{\dagger8}$ , Youlan Shang †2, Li Yang1, Yaonai Wei $^1$ , Longtao Yang2, Hao Chen1, Huan Zhao7, Yuxiao Liu $^{9,10}$ , Ning Zhu $^{1}$ , Yiwei Li $^{18}$ , Yisong Wang $^2$ , Jiaqi Yao $_ {11}$ , Jiaqi Wang12, Ying Zeng $^{2,13}$ , Lei He $^{14}$ , Chao Zheng $^{15}$ , Zhixue Zhang $16$ , Ming Li $^{17}$ , Zhengliang Liu $^{18}$ , Haixing Dai $^{18}$ , Zihao Wu $^{18}$ , Lu Zhang $^{19}$ , Shu Zhang $_ {12}$ , Xiaoyan Cai $^{1}$ , Xintao Hu $^{1}$ , Shijie Zhao $^{1}$ , Xi Jiang $7$ , Xin Zhang $^5$ , Xiang Li $^{20}$ , Dajiang Zhu $^{19}$ , Lei Guo $^1$ , Dinggang Shen $^{9,21,22}$ , Junwei Han $^{1}$ , Tianming Liu $^{18}$ , Jun Liu $^{\ddagger2,3}$ , and Tuo Zhang §1

钟天阳* 1, 赵伟* 2,3,4, 张雨桐†5, 潘毅†6, 董沛昕†1, 姜作伟†1, 隗晓燕†8, 尚幼兰†2, 杨莉1, 魏耀耐1, 杨龙涛2, 陈浩1, 赵欢7, 刘宇霄9,10, 朱宁1, 李一维18, 王轶松2, 姚佳琪11, 王佳琪12, 曾颖2,13, 何磊14, 郑超15, 张智学16, 李明17, 刘正亮18, 戴海星18, 吴子豪18, 张璐19, 张舒12, 蔡晓燕1, 胡新涛1, 赵世杰1, 蒋曦7, 张鑫5, 李想20, 朱大江19, 郭磊1, 沈定刚9,21,22, 韩俊伟1, 刘天明18, 刘军‡2,3, 张拓§1

Abstract

摘要

Radiology report generation, as a key step in medical image analysis, is critical to the quantitative analysis of clinically informed decision-making levels. However, complex and diverse radiology reports with cross-source heterogeneity pose a huge general iz ability challenge to the current methods under massive data volume, mainly because the style and norm at iv it y of radiology reports are obviously distinctive among institutions, body regions inspected and radiologists. Recently, the advent of large language models (LLM) offers great potential for recognizing signs of health conditions. To resolve the above problem, we collaborate with the Second Xiangya Hospital in China and propose ChatRadio-Valuer based on the LLM, a tailored model for automatic radiology report generation that learns general iz able representations and provides a basis pattern for model adaptation in sophisticated analysts’ cases. Specifically, ChatRadio-Valuer is trained based on the radiology reports from a single institution by means of supervised fine-tuning, and then adapted to disease diagnosis tasks for human multi-system evaluation (i.e., chest, abdomen, muscle-skeleton, head, and max ill o facial & neck) from six different institutions in clinical-level events. The clinical dataset utilized in this study encompasses a remarkable total of 332,673 observations. From the comprehensive results on engineering indicators, clinical efficacy and deployment cost metrics, it can be shown that ChatRadio-Valuer consistently outperforms state-of-the-art models, especially ChatGPT (GPT-3.5-Turbo) and GPT-4 et al., in terms of the diseases diagnosis from radiology reports. ChatRadio-Valuer provides an effective avenue to boost model generalization performance and alleviate the annotation workload of experts to enable the promotion of clinical AI applications in radiology reports.

放射学报告生成作为医学影像分析的关键步骤,对临床决策层面的定量分析至关重要。然而,在大数据量下,复杂多样且存在跨源异质性的放射学报告对现有方法提出了巨大的泛化能力挑战,这主要源于不同机构、检查部位和放射科医师之间报告风格与规范性的显著差异。近期,大语言模型(LLM)的出现为识别健康状况体征提供了巨大潜力。为解决上述问题,我们与中国中南大学湘雅二医院合作,提出了基于LLM的ChatRadio-Valuer——一种通过学习可泛化表征、为复杂分析案例提供模型适配基础范式的定制化放射学报告自动生成模型。具体而言,ChatRadio-Valuer首先通过监督微调基于单一机构的放射学报告进行训练,随后适配至临床级事件中来自六家不同机构的人类多系统(胸部、腹部、肌肉骨骼、头面部及颈部)疾病诊断任务。本研究使用的临床数据集包含总计332,673例观测记录。从工程指标、临床效能和部署成本等综合评估结果来看,ChatRadio-Valuer在放射学报告的疾病诊断方面持续优于最先进模型,特别是ChatGPT(GPT-3.5-Turbo)和GPT-4等。该模型为提升模型泛化性能、减轻专家标注工作量提供了有效途径,从而推动放射学报告中临床AI应用的发展。

1 Introduction

1 引言

Radiology exists as an essential department in hospitals or medical institutions, which substantially facilitates the progress of the detection, diagnosis, prediction, evaluation and follow-up for most of diseases. Radiology reports provide a comprehensive evaluation for different diseases, assisting clinicians in making decisions [1–5]. The natural process of generating a radiology report is that the radiologists conclude an impression after comprehensively describing the positive and negative image findings of a specific examination [6–9] (i.e., chest CT and abdominal CT). Considering the tremendous amounts of radiology examinations, especially in China, it places a heavy burden on radiologists to write radiology reports in daily work [10–12]. Besides, the style and norm at iv it y of radiology reports are variable among individuals and institutions, due to the different writing habits and education. This may confuse the patients if they receive different descriptions from many institutions and also limit the communication among different radiologists. Therefore, an efficient and standard way to generate the impression of radiology report is pursued by radiologists.

放射科是医院或医疗机构的重要科室,极大地促进了大多数疾病的检测、诊断、预测、评估和随访进程。放射报告为不同疾病提供全面评估,辅助临床医生决策[1-5]。生成放射报告的自然流程是放射科医师在综合描述特定检查(如胸部CT和腹部CT)的阳性和阴性影像表现后得出印象[6-9]。考虑到庞大的放射检查量(尤其在中国),撰写放射报告给放射科医师的日常工作带来了沉重负担[10-12]。此外,由于书写习惯和教育背景差异,放射报告的风格和规范性在个人与机构间存在较大差异。当患者从多家机构获得不同描述时可能产生困惑,同时也限制了不同放射科医师间的交流。因此,放射科医师亟需一种高效且标准化的放射报告印象生成方式。

Driven by the extensive clinical demands, automatic generating radiology reports becomes a new hotspot and promising direction [13–23]. In this context, natural language processing (NLP) strategies, which are widely used in non-medical areas, are recently adopted to tackle this issue [24]. With the aid of NLP techniques, the reporting time can be obviously reduced, resulting in the improvement of work efficiency. Another potential advantage of NLP in generating radiology reports is that the impression of radiology reports could be more structured and complete, further reducing error rates and facilitating the mutual communication from different institutions. In general, there are two main research directions: model-driven methods and data-driven methods.

在广泛临床需求的推动下,自动生成放射学报告成为新的热点和前景方向[13-23]。在此背景下,自然语言处理(NLP)策略这一在非医疗领域广泛应用的技术,近期被用于解决该问题[24]。借助NLP技术,报告时间可显著缩短,从而提升工作效率。NLP在生成放射学报告中的另一潜在优势是,可使报告结论部分更加结构化和完整,进一步降低错误率并促进不同机构间的相互交流。总体而言,该领域存在两个主要研究方向:模型驱动方法和数据驱动方法。

Many previous studies on radiology report generation have followed model-driven methods [7, 15–18]. Jing et al. [15] proposed a co-attention network to produce full paragraphs for automatically generating reports and demonstrated the effectiveness of their proposed methods on publicly available datasets. Chen et al. [7] proposed to generate radiology reports via a memory-driven transformer, which addressed what conventional image captioning methods are inefficient (and inaccurate) for generating long and detailed radiology reports. Chen et al. [16] proposed that incorporating memory into both the encoding process and decoding process can further enhance the generation ability of the transformer. Wang et al., [17] proposed a cross-modal network to facilitate cross-modal pattern learning, where the cross-modal prototype matrix is initialized with prior information and an improved multi-label contrastive loss is proposed to promote the cross-modal prototype learning. Wang et al., [18] proposed a self-boosting framework, where the main task of report generation helps learn highly text-correlated visual features, and an auxiliary task of image-text matching facilitates the alignment of visual features and textual features.

许多先前关于放射学报告生成的研究都遵循了模型驱动的方法 [7, 15–18]。Jing 等人 [15] 提出了一种协同注意力网络来生成完整段落,用于自动生成报告,并在公开数据集上证明了所提方法的有效性。Chen 等人 [7] 提出通过记忆驱动的 Transformer 生成放射学报告,解决了传统图像描述方法在生成长且详细的放射学报告时效率低下(且不准确)的问题。Chen 等人 [16] 提出在编码和解码过程中都引入记忆可以进一步增强 Transformer 的生成能力。Wang 等人 [17] 提出了一种跨模态网络以促进跨模态模式学习,其中跨模态原型矩阵用先验信息初始化,并提出了一种改进的多标签对比损失来促进跨模态原型学习。Wang 等人 [18] 提出了一种自我增强框架,其中报告生成的主要任务有助于学习高度文本相关的视觉特征,而图像-文本匹配的辅助任务促进了视觉特征和文本特征的对齐。

Other researchers deem that data is significant for deep learning based automatic report generation, and their research interests focus on data-driven methods [19–23]. Liu et al. [19] introduced three modules to utilize prior knowledge and posterior knowledge from radiology data for alleviating visual and textual data bias, and showed that their model outperforms previous methods on both language generation metrics and clinical evaluation. Inspired by curriculum learning, No oral a hz a deh et al. [20] extracted global concepts from the radiology data and utilized them as transition text from chest radio graphs to radiology reports (i.e., image-to-text-to-text). To alleviate the data bias and make the best use of available data, Liu et al. [21] proposed a competence-based multimodal curriculum learning framework, where each training instance was estimated and current models were evaluated and then the most suitable batch of training instances were selected considering current model competence. To make full use of limited data, Yan et al. [22] developed a weakly supervised approach to identify “hard” negative samples from radiology data and assign them with higher weights in training loss to enhance sample differences. Yuan et al. [23] introduced a sentence-level attention mechanism to fuse multi-view visual features and extracted medical concepts from radiology reports to fine-tune the encoder for extracting the most frequent medical concepts from the x-ray images.

其他研究者认为数据对基于深度学习的自动报告生成至关重要,他们的研究兴趣集中在数据驱动方法上[19–23]。Liu等人[19]引入了三个模块,利用放射学数据中的先验知识和后验知识来缓解视觉与文本数据偏差,并证明其模型在语言生成指标和临床评估上均优于先前方法。受课程学习启发,No oral a hz a deh等人[20]从放射学数据中提取全局概念,将其作为从胸部X光片到放射学报告的过渡文本(即图像-文本-文本)。为缓解数据偏差并充分利用现有数据,Liu等人[21]提出基于能力的多模态课程学习框架,通过评估每个训练实例和当前模型状态,选择最适合当前模型能力的训练批次。针对有限数据的最大化利用,Yan等人[22]开发弱监督方法从放射学数据中识别"困难"负样本,并在训练损失中赋予更高权重以增强样本差异性。Yuan等人[23]采用句子级注意力机制融合多视角视觉特征,并从放射学报告中提取医学概念来微调编码器,从而从X光图像中提取最高频的医学概念。

The above methods have made great progress in radiology report generation. However, there are still some limitations. Firstly, the current expansion of radiology data is largely contributed to the practice of sharing across multiple medical institutions, which leads to a complex data interaction, thus requiring insightful and controlled analysis. Secondly, the development of modern radiology imaging leads to data complexity increasing. Thirdly, in radiology report generation, NLP researchers have paid attention to image caption [25], which has demonstrated its effectiveness. Actually, the transfer properties of these models to radiology report generation encounter the model generalization problem. Model generalization requires a trained model to transfer its generation capacity to the target domain and produce accurate output for previously unseen instances. In radiology report generation, this requires generating clinically accurate reports for various medical subjects. While image caption has exhibited remarkable effectiveness in general text generation tasks, the complexities of medical terms and language introduce hindrances to model generalization.

上述方法在放射学报告生成方面取得了重大进展。然而仍存在一些局限性。首先,当前放射学数据的扩展主要得益于多家医疗机构间的共享实践,这导致了复杂的数据交互,因此需要深入且可控的分析。其次,现代放射成像技术的发展使得数据复杂性不断增加。第三,在放射学报告生成中,NLP研究者关注了图像描述[25]的有效性。实际上,这些模型向放射学报告生成的迁移特性会遇到模型泛化问题。模型泛化要求训练后的模型将其生成能力迁移到目标领域,并为未见过的实例生成准确输出。在放射学报告生成中,这需要为各种医学主题生成临床准确的报告。虽然图像描述在通用文本生成任务中表现出显著有效性,但医学术语和语言的复杂性给模型泛化带来了阻碍。

As one of the most influential artificial intelligence (AI) products today [26], LLMs, known as generative models, provide a user-friendly human-machine interaction platform that brings the powerful capabilities of large language models to the public and has been rapidly integrated into various fields of application [26–42]. Liu et al. [41] proposed Radiology-GPT, which uses instruction tuning approach to fine-tune Alpaca [43] for mining radiology domain knowledge, and prove its superior performance on reports sum mari z ation task. Based on Llama2, Liu et al. [42] further presented Radiology-Llama2 using instruction tuning to fine-tune Llama2 on radiology reports. However, they both ignore the fact that the style and norm at iv it y of radiology reports vary among individuals and institutions with writing habits and education. Therefore, they are difficult to achieve satisfactory results when training medical LLMs.

作为当今最具影响力的人工智能(AI)产品之一[26],大语言模型(LLM)作为生成式模型,提供了一个用户友好的人机交互平台,将大语言模型的强大能力带给公众,并已迅速融入各个应用领域[26-42]。Liu等人[41]提出了Radiology-GPT,采用指令微调方法对Alpaca[43]进行微调以挖掘放射学领域知识,并证明了其在报告摘要任务上的卓越性能。基于Llama2,Liu等人[42]进一步提出Radiology-Llama2,使用指令微调在放射学报告上对Llama2进行微调。然而,他们都忽略了放射学报告的风格和规范性因个人和机构的写作习惯及教育背景而异这一事实。因此,在训练医疗大语言模型时,他们难以取得令人满意的结果。

Inspired by large language models, we propose ChatRadio-Valuer by fine-tuning Llama2 on large-scale, high-quality instruction-following data in the radiology domain. ChatRadio-Valuer inherits robust language understanding ability across various domains and genres, coupled with complex reasoning and diverse generation abilities. Additionally, it learns high-level domain-specific knowledge during training in the radiology domain. This addresses the heterogeneity gaps among institutions and enables accurate radiology report generation. Extensive experiments are conducted with real radiology reports from a clinical pipeline in the Second Xiangya Hospital of China and the experimental results highlight the superiority of our method. The main contributions are summarized as follows:

受大语言模型 (Large Language Model) 启发,我们通过在放射学领域的大规模高质量指令跟随数据上微调 Llama2,提出了 ChatRadio-Valuer。该方法继承了跨领域跨体裁的强健语言理解能力,兼具复杂推理与多样化生成能力,同时在放射学领域训练过程中习得了高层次领域知识,有效解决了机构间的异质性差异,实现了精准的放射学报告生成。我们在中南大学湘雅二医院临床流程的真实放射学报告上进行了大量实验,结果凸显了本方法的优越性。主要贡献总结如下:

• To the best of our knowledge, a complete full-stack solution for clinical-level radiology report generation based on multi-institution and multi-system data is developed to obtain desirable performance results for the first time. The solution significantly outperforms the state-of-the-art counterparts, benefiting performance analysts of multiple data sources in radiology. • An effective ChatRadio-Valuer framework is proposed that can automatically utilize radiology domain knowledge for cross-institution adaptive radiology report generation based on singleinstitution samples, which can provide a fundamental scheme to boost model generalization performance from radiology reports. • We implement our framework and conduct substantial use cases on clinical utilities among six different institutions. Through the cases, we obtain valuable insights into radiology experts, which are beneficial to alleviate the annotation workload of experts. This opens the door to bridge LLMs’ domain adaptation application and radiology performance evaluation.

• 据我们所知,这是首次基于多机构、多系统数据开发的临床级放射学报告生成全栈解决方案,其性能表现达到理想水平。该方案显著优于现有最优方法,使放射学多数据源的性能分析人员受益。

• 提出了一种高效的ChatRadio-Valuer框架,能够基于单机构样本自动利用放射学领域知识进行跨机构自适应放射学报告生成,为提升放射学报告的模型泛化性能提供了基础方案。

• 我们在六个不同机构的临床应用中实施了该框架并开展大量用例研究。通过这些案例,我们获得了对放射学专家具有重要价值的洞见,有助于减轻专家的标注工作量。这为弥合大语言模型领域适应应用与放射学性能评估之间的鸿沟打开了大门。

2 Clinical Background and Data

2 临床背景与数据

2.1 Collaboration with the Second Xiangya Hospital of China

2.1 与中南大学湘雅二医院的合作

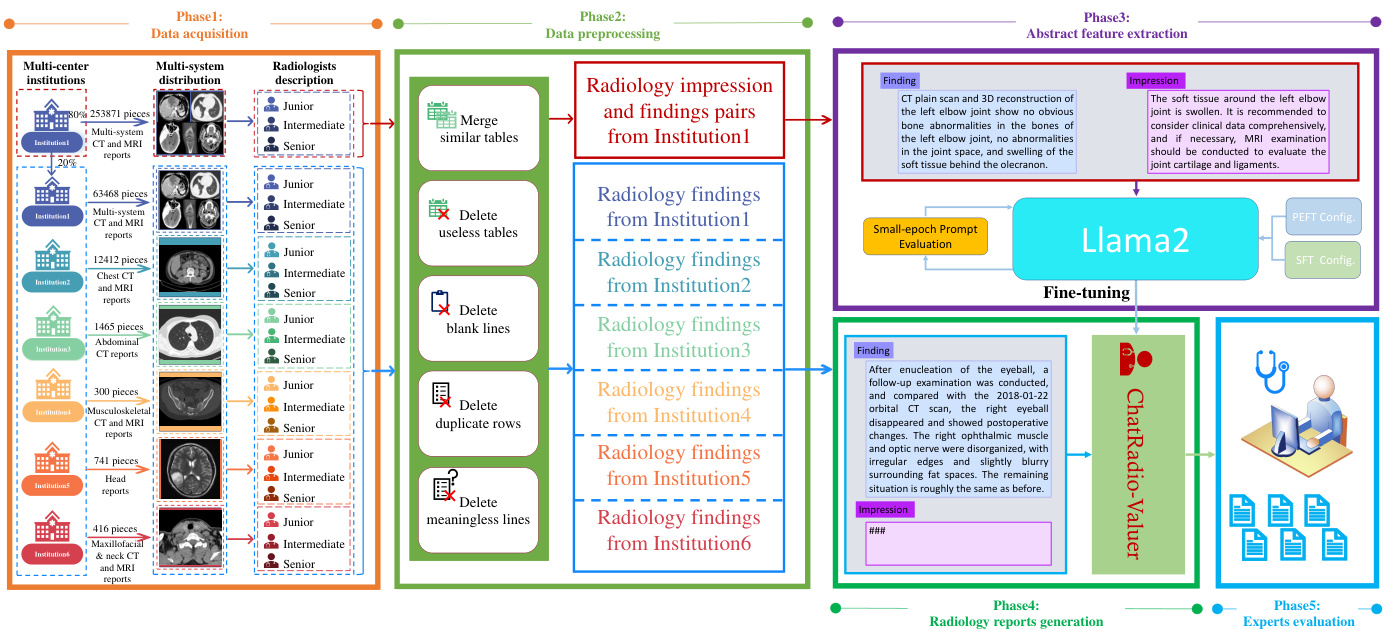

We have collaborated with the Second Xiangya Hospital of China. During the collaboration, we utilize the platform (the phase 1 in Figure 1) for data acquisition that enables physicians to gather and analyze medical data. The platform consists of three components: multi-center institutions inspection, multi-system analysis, and radiologists’ description. The ultimate goal is to propose a unified and general iz able framework, which can simulate radiology experts to execute disease diagnoses across multiple institutions and multiple systems, in order to improve clinical efficiency and reduce the workload of relevant staff in processing massive data.

我们与中南大学湘雅二医院开展了合作。在合作过程中,我们利用该平台(图1中的第一阶段)进行数据采集,使医生能够收集和分析医疗数据。该平台由三个部分组成:多中心机构检查、多系统分析和放射科医生描述。最终目标是提出一个统一且可泛化的框架,能够模拟放射学专家执行跨多个机构和多个系统的疾病诊断,以提高临床效率并减少相关人员处理海量数据的工作量。

Many factors have brought great challenges to this task. Specifically, in the first component of the platform, it describes that data acquisition comes from six different institutions, and the quality and quantity are different to varying degrees. The main reasons are the constraints of types of equipment and observable conditions among them. The specific materials will be introduced in Section 2.2. Next, the second component contains two message formats, such as CT and MRI modalities on the chest, abdomen, muscle-skeleton, head, and max ill o facial & neck systems. The prominent dissimilarity within this dataset arises primarily from significant variations in data source distributions between the two modalities, contingent upon the specific human body locations inspected. Specifically, Institution 1 contributes data for five complete systems, while institutions 2 through 6 provide data for individual human body systems, namely the chest, abdomen, mus cul o skeletal, head, and max ill o facial & neck systems. Then, during the third process, the information carrier from image to text conversion is performed by different levels of clinicians including junior, intermediate, and senior radiologists. Due to the different writing habits and education, the style and norm at iv it y of radiology reports are obviously distinctive among radiologists.

诸多因素给这项任务带来了巨大挑战。具体而言,平台的第一部分指出数据采集自六家不同机构,其质量和数量存在不同程度的差异,主要源于设备类型和观测条件的限制。具体材料将在2.2节详述。其次,第二部分包含两种信息载体格式(如胸部、腹部、肌肉骨骼、头颈颌面系统的CT和MRI模态),该数据集最显著的差异性源自两种模态间数据源分布的显著变化,这取决于具体检查的人体部位。具体表现为:机构1提供了五个完整系统的数据,而机构2至6仅分别提供胸部、腹部、肌肉骨骼、头颈颌面系统中单一系统的数据。最后在第三环节中,图像到文本转换的信息载体由不同层级的临床医生(包括初级、中级和高级放射科医师)完成。由于书写习惯和教育背景差异,放射科医师撰写的报告在风格和规范性方面存在明显区别。

Figure 1: Overall framework of the proposed method for radiology report generation. Multiinstitution and multi-system clinical radiology reports are acquired in phase 1. Systematic data preprocessing is implemented and then synthesizes the samples into high-quality prompts in phase 2. The general iz able advanced features are extracted and applied for clinical utilities in phase $3&$ phase 4. The comprehensive evaluations on ChatRadio-Valuer’s efficacy are executed in phase 5.

图 1: 放射学报告生成方法的整体框架。第一阶段获取多机构、多系统的临床放射学报告。第二阶段实施系统性数据预处理,并将样本合成为高质量提示。第三和第四阶段提取可泛化的高级特征并应用于临床用途。第五阶段对ChatRadio-Valuer的效能进行全面评估。

In brief, multiple aspects, from institutions, multiple systems of human body, and radiologists, bring great dilemmas to the assignment, which may confuse the patients if they receive different descriptions from many institutions and also limit the free communication among different radiologists. The proposed framework named ChatRadio-Valuer is described in Section 3.

简言之,从医疗机构、人体多系统到放射科医师的多重因素,为诊断报告撰写带来了巨大困境。这不仅可能导致患者收到不同机构矛盾描述时的困惑,也限制了放射科医师间的自由交流。第3节将阐述所提出的ChatRadio-Valuer框架。

2.2 Data Description

2.2 数据描述

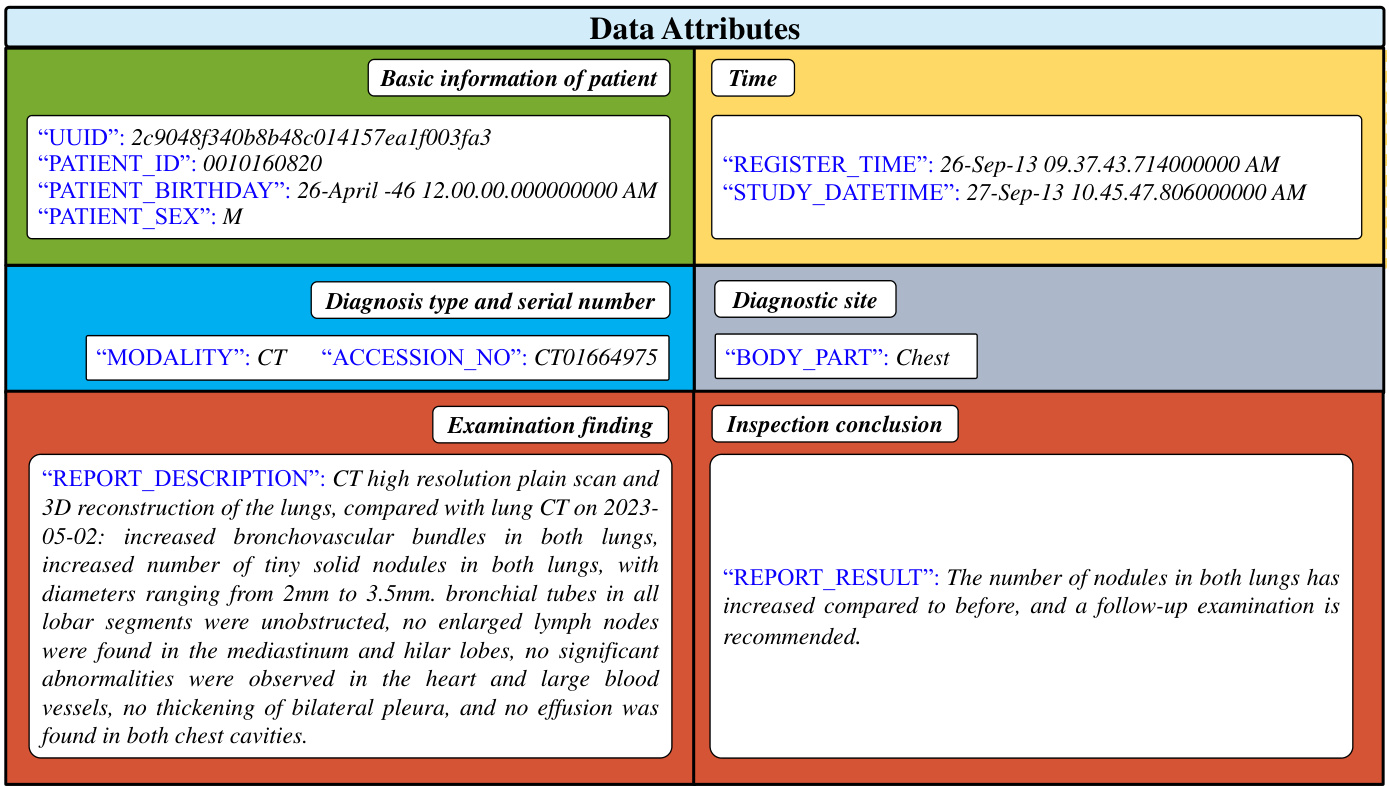

In this multi-institution modeling study, the radiology reports, including basic information, description and impression, were searched and downloaded in the report system from six institutions. In detail, the specific data attributes of a radiology report example are shown in Figure 2. And a comprehensive data distribution is illustrated in Table 1. In the Second Xiangya hospital dataset (Dataset SXY), 317339 radiology reports from five subgroups (94097 chest reports, 64550 abdominal reports, 46092 mus cul o skeletal reports, 69902 head reports, and 42698 max ill o facial & neck reports) were exported from 2012 year to 2023 year. These data were used for model development and internal testing. Other five datasets were collected for external testing, from the Huadong Hospital dataset (Dataset External 1, DE1, chest reports), Xiangtan Central Hospital (Dataset External 2, DE2, abdominal reports), The First Hospital of Hunan University of Chinese Medicine (Dataset External 3, DE3, mus cul o skeletal reports), The First People’s Hospital of Changde City (Dataset External 4, DE4, head reports), Yueyang Central Hospital City (Dataset External 5, DE5, max ill o facial & neck

在这项多机构建模研究中,我们从六家机构的报告系统中检索并下载了包含基本信息、描述和印象的放射学报告。具体而言,图2展示了一份放射学报告示例的具体数据属性,表1则呈现了全面的数据分布情况。

在湘雅二医院数据集(Dataset SXY)中,我们导出了2012年至2023年间五个亚组的317339份放射学报告(94097份胸部报告、64550份腹部报告、46092份肌肉骨骼报告、69902份头部报告以及42698份颌面颈部报告)。这些数据用于模型开发和内部测试。

外部测试数据来自另外五个数据集:华东医院数据集(Dataset External 1, DE1,胸部报告)、湘潭市中心医院(Dataset External 2, DE2,腹部报告)、湖南中医药大学第一附属医院(Dataset External 3, DE3,肌肉骨骼报告)、常德市第一人民医院(Dataset External 4, DE4,头部报告)以及岳阳市中心医院(Dataset External 5, DE5,颌面颈部报告)。

Figure 2: A radiology report example on its attributes. These attributes are manually diagnosed and described by radiologists at different levels, among which there are obvious variations in styles.

图 2: 放射学报告示例及其属性。这些属性由放射科医生在不同层级进行人工诊断和描述,其中存在明显的风格差异。

Table 1: The statistical analysis encompasses comprehensive information regarding the experimental dataset, including both the training and test sets, along with detailed statistical insights derived from data obtained from six distinct institutions.

表1: 统计分析涵盖了实验数据集的全面信息,包括训练集和测试集,以及从六个不同机构获得的数据中提取的详细统计结果。

| Variable | Training Set | Test Set | Total | |||||

| Institution 1 (80%) | Institution 1 (20%) | Institution 2 | Institution 3 | Institution 4 | Institution 5 | Institution 6 | All Set | |

| No.of pieces | 253871 | 63468 | 12412 | 1465 | 300 | 741 | 416 | 332673 |

| Age(y) | 0-103 | 0-101 | 0-96 | 9-95 | 1-92 | 0-100 | 0-91 | 0-103 |

| Sex | ||||||||

| Female | 119185 | 28993 | 7320 | 620 | 133 | 382 | 225 | 156858 |

| Male | 134686 | 34475 | 5092 | 845 | 167 | 359 | 191 | 175815 |

| Modality | ||||||||

| CT | 210487 | 49488 | 12397 | 1465 | 238 | 543 | 346 | 274964 |

| MRI | 43384 | 13980 | 15 | 0 | 62 | 198 | 70 | 57709 |

| System | ||||||||

| Chest | 75277 | 18820 | 12412 | 0 | 0 | 0 | 0 | 106509 |

| Abdomen | 51640 | 12910 | 0 | 1465 | 0 | 0 | 0 | 66015 |

| Muscle-skeletion | 36874 | 9218 | 0 | 0 | 300 | 0 | 0 | 46392 |

| Head | 55922 | 13980 | 0 | 0 | 0 | 741 | 0 | 70643 |

| Maxillofacial & neck | 34158 | 8540 | 0 | 0 | 0 | 0 | 416 | 43114 |

| 变量 | 训练集 | 测试集 | 总计 | |||||

|---|---|---|---|---|---|---|---|---|

| 机构1 (80%) | 机构1 (20%) | 机构2 | 机构3 | 机构4 | 机构5 | 机构6 | 全集 | |

| 数量(件) | 253871 | 63468 | 12412 | 1465 | 300 | 741 | 416 | 332673 |

| 年龄(岁) | 0-103 | 0-101 | 0-96 | 9-95 | 1-92 | 0-100 | 0-91 | 0-103 |

| 性别 | ||||||||

| 女性 | 119185 | 28993 | 7320 | 620 | 133 | 382 | 225 | 156858 |

| 男性 | 134686 | 34475 | 5092 | 845 | 167 | 359 | 191 | 175815 |

| 模态 | ||||||||

| CT | 210487 | 49488 | 12397 | 1465 | 238 | 543 | 346 | 274964 |

| MRI | 43384 | 13980 | 15 | 0 | 62 | 198 | 70 | 57709 |

| 系统 | ||||||||

| 胸部 | 75277 | 18820 | 12412 | 0 | 0 | 0 | 0 | 106509 |

| 腹部 | 51640 | 12910 | 0 | 1465 | 0 | 0 | 0 | 66015 |

| 肌肉骨骼 | 36874 | 9218 | 0 | 0 | 300 | 0 | 0 | 46392 |

| 头部 | 55922 | 13980 | 0 | 0 | 0 | 741 | 0 | 70643 |

| 颌面及颈部 | 34158 | 8540 | 0 | 0 | 0 | 0 | 416 | 43114 |

reports). They are denoted as from Institution 1 to Institution 6 respectively. This retrospective study was approved by The Second Xiangya Hospital, Institutional Review Board (Approval NO. LYF2023084), which waived the requirement for informed consent.

报告)。分别标记为机构1至机构6。这项回顾性研究经中南大学湘雅二医院伦理委员会批准(批准号LYF2023084),并豁免知情同意要求。

2.3 Data Preprocessing

2.3 数据预处理

In the overall framework of ChatRadio-Valuer, the quality of radiology reports plays a critical role in facilitating the method’s performance. Hence, the data preprocessing phase, encompassing the multi-system & multi-institution data refinement and prompt generation, assumes a pivotal role in ensuring the model’s efficacy and dependability when dealing with extensive domain-specific radiology reports originating from diverse institutions and systems. To achieve this objective, the operations proposed in this study are executed as indicated in Algorithm 1, aiming at a particular emphasis on mitigating improper interference caused by value and report title repetition, sheet inconsistencies and text irrelevance. The specific details are as follows:

在ChatRadio-Valuer的整体框架中,放射学报告的质量对方法性能起着关键作用。因此,数据预处理阶段(包括多系统多机构数据精炼与提示生成)对于确保模型在处理来自不同机构和系统的大规模领域特定放射学报告时的效能和可靠性具有决定性意义。为实现这一目标,本研究提出的操作如算法1所示,重点在于减轻由数值与报告标题重复、表格不一致及文本无关性造成的不当干扰。具体细节如下:

Algorithm 1 Training Preparation.

算法 1: 训练准备

- Data Synthesis: We aggregate data from multiple systems, group them by institution, and randomly process them according to their belonging centers, obtaining a comprehensive dataset $X_ {a l l}$ covering all the reports. Then we follow the sequence of these institutions, extracting the training set $X_ {t r a i n}$ and the test set $X_ {t e s t}$ according to the index of centers. In detail, the data from Institution 1 are randomly selected to fine-tune ChatRadio-Valuer, and the other institutions are utilized to test the performance of ChatRadio-Valuer. The entire training dataset are then split into an $80%$ training set and a $20%$ evaluation set. Data in $X_ {t e s t}$ are exclusively reserved for evaluation purposes for simplicity.

- 数据合成:我们从多个系统聚合数据,按机构分组,并根据所属中心随机处理,获得涵盖所有报告的综合数据集 $X_ {all}$。随后按照这些机构的顺序,根据中心索引提取训练集 $X_ {train}$ 和测试集 $X_ {test}$。具体而言,随机选择机构1的数据对ChatRadio-Valuer进行微调,其他机构则用于测试ChatRadio-Valuer的性能。整个训练数据集进一步划分为80%的训练集和20%的评估集。为简化流程,$X_ {test}$ 中的数据仅保留用于评估目的。

- Data Cleaning: For each dataset, a rigorous data cleaning process is conducted from three different perspectives: addressing repetition, rectifying inconsistencies, and filtering out text insignificance. To tackle repetition issues, manual searches are conducted to identify and eliminate extraneous elements related to values and report titles. This result in a curated dataset featuring unique values in a standardized format. In addressing inconsistencies arising from multiple sheets from different institutions, meticulous human scrutiny and manual consolidation are employed. Regarding text insignificance, a collaborative effort between experts from the Second Xiangya Hospital of China and our team led to the creation of a corpus containing non-essential terms, deemed too eclectic for LLMs to comprehend but crucial for clinical applications. Leveraging this corpus, a significant portion of irrelevant data is removed, yielding a refined dataset optimized for prompt generation. We combine this dataset and expert-curated templates $X_ {p t}$ to eventually construct high-quality prompts $X_ {p r o m p t}$ consisting of both training prompts Xtprrompt and evaluation prompts Xepvroalm pt, as described in the Section 3.

- 数据清洗:针对每个数据集,我们从三个维度进行严格的数据清洗:处理重复项、修正不一致性以及过滤无意义文本。为解决重复问题,通过人工检索剔除与数值和报告标题相关的冗余元素,最终生成具有标准化格式唯一值的精编数据集。对于不同机构多表格导致的不一致问题,采用人工核查与手动整合相结合的方式处理。在无意义文本过滤方面,由中南大学湘雅二医院专家团队与我们协作构建了非必要术语语料库,这些术语虽对大语言模型理解无关紧要但对临床应用至关重要。基于该语料库,我们移除了大量无关数据,生成适用于提示词生成的优化数据集。如第3节所述,我们将该数据集与专家编制的模板$X_ {pt}$结合,最终构建出包含训练提示词$X_ {tprrompt}$和评估提示词$X_ {epvroalmpt}$的高质量提示词集$X_ {prompt}$。

3 Framework

3 框架

In this section, we first define the problem of radiology report generation in this work and present an overview of ChatRadio-Valuer. Then, we introduce the implementation details of ChatRadio-Valuer.

在本节中,我们首先定义了本工作中放射学报告生成的问题,并概述了ChatRadio-Valuer。接着,我们介绍了ChatRadio-Valuer的实现细节。

3.1 Problem Formulation

3.1 问题表述

Due to factors of variations in physicians’ professional skills, differences in the operation of medical equipment across different hospitals, and variances in organ structures, radiology medical data often exhibit inconsistencies in both format and quality. These factors can be collectively referred to as heterogeneity in healthcare data.

由于医生专业技能差异、不同医院医疗设备操作方式不同以及器官结构差异等因素,放射医学数据在格式和质量上常存在不一致性。这些因素可统称为医疗数据异质性 (heterogeneity) 。

In the testing task, the input is defined as $\mathrm{Input}={f,X_ {\mathrm{test}}}$ , where $f$ represents the fine-tuned model, and $X_ {\mathrm{test}}$ belongs to the set ${X_ {1},X_ {2},X_ {3},X_ {4},X_ {5},X_ {6}}$ , which denotes the data from six different institutions. In a single sample, $x_ {m}$ represents "REPORT DESCRIPTION", while $y_ {m}$ denotes "REPORT RESULT".Each subset $X_ {i}$ can be further divided based on the system:

在测试任务中,输入定义为 $\mathrm{Input}={f,X_ {\mathrm{test}}}$ ,其中 $f$ 代表微调后的模型, $X_ {\mathrm{test}}$ 属于集合 ${X_ {1},X_ {2},X_ {3},X_ {4},X_ {5},X_ {6}}$ ,表示来自六个不同机构的数据。单个样本中, $x_ {m}$ 表示"报告描述", $y_ {m}$ 表示"报告结果"。每个子集 $X_ {i}$ 可按系统进一步划分:

Formally, the mathematical expression for the testing process can be represented as:

形式上,测试过程的数学表达式可表示为:

$$

\operatorname* {max}_ {f}\sum_ {X_ {i}^{j}\in X_ {\mathrm{test}}}\operatorname{Performance}(f,X_ {i}^{j})

$$

$$

\operatorname* {max}_ {f}\sum_ {X_ {i}^{j}\in X_ {\mathrm{test}}}\operatorname{Performance}(f,X_ {i}^{j})

$$

where $f$ represents the fine-tuned model, which needs to be evaluated in the testing task; $X_ {i}^{j}$ denotes a sample set from the $X_ {\mathrm{test}}$ collection, corresponding to different medical departments or system divisions; Performance $(f,X_ {i}^{j})$ is the metrics used to measure the performance of model $f$ on the sample set $X_ {i}^{j}$ . In Section 4, the similarity metrics R-1, R-2, and R-L are used as indicators.

其中 $f$ 代表经过微调的模型,需要在测试任务中进行评估;$X_ {i}^{j}$ 表示来自 $X_ {\mathrm{test}}$ 集合的样本子集,对应不同的医疗科室或系统部门;Performance $(f,X_ {i}^{j})$ 是用于衡量模型 $f$ 在样本集 $X_ {i}^{j}$ 上表现的指标。在第4节中,相似性指标R-1、R-2和R-L被用作评估指标。

Building upon this, we conduct experiments to assess the model’s performance from the following three aspects:

基于此,我们从以下三个方面开展实验以评估模型性能:

Our goal is to enable models to possess cross-institution and multi-system diagnostic capabilities. The fundamental challenge faced in mathematical analysis is how to leverage latent knowledge to constrain the model’s freedom within a vast space of heterogeneous data samples, thereby enabling the model to achieve an approximate global optimal solution in Eq.(1). In our experiments, the effective fine-tuning techniques are exploited to restrict the pre-trained model’s degrees of freedom, resulting in an improved generalization performance in the feature space.

我们的目标是让模型具备跨机构和多系统的诊断能力。数学分析面临的根本挑战在于如何利用潜在知识在异构数据样本的广阔空间中约束模型的自由度,从而使模型能够在方程(1)中实现近似全局最优解。在实验中,我们采用有效的微调技术来限制预训练模型的自由度,从而在特征空间中提升泛化性能。

3.2 Framework Overview

3.2 框架概述

This section provides a comprehensive introduction to the method presented in this article, with applicability to large-scale, multi-institution, and multi-system in the field of radiology, as illustrated in Figure 1. The method is based on a dataset comprising 332673 radiology records obtained from multi-institution and multi-system sources and designed to address the clinical challenge of radiology reports originating from multiple institutions. Here, we present an overall architecture of the proposed method by leveraging medical knowledge in the field of radiology, followed by a detailed description of each phase. The proposed method comprises five distinct phases: data acquisition, data preprocessing, abstract feature extraction, radiology report generation, and experts evaluation.

本节全面介绍了本文提出的方法,该方法适用于放射学领域的大规模、多机构、多系统场景,如图 1 所示。该方法基于一个包含 332673 份放射学记录的数据集,这些记录来自多机构和多系统,旨在解决来自多个机构的放射学报告的临床挑战。在此,我们通过利用放射学领域的医学知识,展示了所提方法的整体架构,随后详细描述了每个阶段。所提方法包括五个不同的阶段:数据采集、数据预处理、抽象特征提取、放射学报告生成和专家评估。

- Phase 1 (Data Acquisition): As an extremely important department in hospitals or medical institutions, the radiology department has different writing habits and styles of radiology reports, making it difficult to unify. Acknowledging this distinctive nature of report data within the radiology department, it is critical to address the inherent challenges in obtaining such data. This inherent challenge presents formidable obstacles when seeking to employ LLMs in the field of radiology. Therefore, in collaboration with Xiangya Second Hospital, we acquire radiology reports from six distinct institutions and five disparate systems. This meticulous data acquisition effort ensures the availability of high-quality data requisite for the training of our expected LLM, ChatRadio-Valuer. The description of the data acquisition can be found in Section 2.1 and 2.2.

- 第一阶段 (数据采集): 作为医院或医疗机构中极其重要的部门,放射科在撰写放射学报告时存在不同的书写习惯和风格,导致报告难以统一。认识到放射科报告数据的这一独特性,解决获取此类数据的内在挑战至关重要。这一固有挑战为在放射学领域应用大语言模型 (LLM) 带来了巨大障碍。因此,我们与湘雅二医院合作,从六家不同机构和五套独立系统中获取放射学报告。这一细致的数据采集工作确保了训练预期大语言模型 ChatRadio-Valuer 所需的高质量数据可用性。数据采集的具体描述详见第 2.1 和 2.2 节。

- Phase 2 (Data Preprocessing): LLMs necessitate the use of high-quality data for their pre-training, a critical property that distinguishes them from smaller-scale language models. The model capacity of LLMs is profoundly influenced by the pre-training corpus and its associated preprocessing methodologies, as expounded in [44]. The presence of a substantial number of bad samples within the corpus can significantly degrade the performance of the model. To alleviate the detrimental impact of such bad samples on model performance and enhance models’ adaptability and general iz ability, we collaborate closely with domain experts from hospitals to devise a method for the data preprocessing of these bad samples, which is discussed in Section 2.3.

- 阶段2 (数据预处理): 大语言模型(LLM)需要使用高质量数据进行预训练,这一关键特性使其区别于小规模语言模型。如[44]所述,大语言模型的容量深度受预训练语料库及其相关预处理方法的影响。语料库中存在大量劣质样本会显著降低模型性能。为减轻劣质样本对模型性能的负面影响并增强模型的适应性和泛化能力,我们与医院领域专家密切合作,设计了一套针对劣质样本的数据预处理方法,详见2.3节讨论。

- Phase 3 (Abstract Feature Extraction): LLMs invariably demand a heavy investment of human resources, computational resources, financial cost, and time consumption, presenting formidable challenges to researchers. Consequently, our method, following exhaustive investigations and rigorous experimental validation, leverages the Llama2 series of models. Additionally, we have employed proprietary datasets, acquired and pre processed in the previous two phases, for enhancing the performance of ChatRadio-Valuer. Comprehensive insights into this abstract feature extraction process are detailed in Section 3.3.

- 阶段3(抽象特征提取):大语言模型往往需要投入大量人力资源、计算资源、资金成本和时间消耗,这给研究人员带来了巨大挑战。因此,我们的方法在经过详尽调研和严格实验验证后,采用了Llama2系列模型。此外,我们还使用了在前两个阶段获取并预处理的专有数据集,以提升ChatRadio-Valuer的性能。关于这一抽象特征提取过程的全面阐述详见第3.3节。

- Phase 4 (Radiology Reports Generation): Leveraging the power of our proposed ChatRadioValuer, we can generate causal inferences with a specific set of configurations. The provided "Finding" is utilized as the input to the LLM, from which the "Impression", the output of the LLM, is derived. The explicit process to implement ChatRadio-Valuer for generating radiology reports refers to the Section 3.4.

- 阶段4 (放射学报告生成): 利用我们提出的ChatRadioValuer,可以通过特定配置生成因果推断。将提供的"发现(Findings)"作为大语言模型的输入,从中得到大语言模型的输出"印象(Impression)"。使用ChatRadioValuer生成放射学报告的具体实现流程详见第3.4节。

- Phase 5 (Experts Evaluation): Currently, there still exists controversy over the evaluation indicators for LLMs in the radiology field. Traditional similarity indicators fall short in accurately reflecting the specific performance of the model in this field, let alone measuring their practical applicability. In response to this challenge, our method, in collaboration with domain experts from medical institutions, introduces an evaluation framework tailored for medical LLMs. This framework not only excels in conventional similarity metrics as engineering ones but also offers a comprehensive assessment of the models’ practical clinical utility. Comparative analysis against the state-of-the-art (SOTA) methods reveals that the proposed ChatRadio-Valuer maintains a competitive advantage. A detailed exposition of this evaluation methodology can be found in Section 4.

- 阶段5(专家评估):目前针对放射学领域大语言模型(LLM)的评估指标仍存在争议。传统相似性指标难以准确反映模型在该领域的具体表现,更无法衡量其实际适用性。为解决这一挑战,我们联合医疗机构领域专家,提出了一套专为医疗大语言模型设计的评估框架。该框架不仅在工程性相似指标上表现优异,更能全面评估模型的临床实用价值。与前沿(SOTA)方法的对比分析表明,所提出的ChatRadio-Valuer保持竞争优势。该评估方法的具体阐述详见第4节。

3.3 How to Fine-tune ChatRadio-Valuer?

3.3 如何微调ChatRadio-Valuer?

3.3.1 Architecture of the Llama Model

3.3.1 Llama模型架构

In artificial intelligence, Llama has won the affection of a vast user base with its unique charm and unparalleled intelligence. However, this is not the end of the story. Llama2 not only inherits all the advantages of its predecessors but also brings significant innovations and improvements in many aspects. Taking into account the special problems of diagnosis of a variety of diseases based on radiology reports, the considerations for the foundation model are detailed as follows:

在人工智能领域,Llama以其独特的魅力和无与伦比的智能赢得了广大用户的喜爱。然而,这并非故事的终点。Llama2不仅继承了前代产品的全部优势,更在多方面带来了重大创新与提升。考虑到基于放射学报告诊断多种疾病的特殊问题,基础模型的考量细节如下:

matrix dimensions in the Feed forward Neural Network (FFN) module and introducing Grouped Query Attention (GQA) [46]. These improvements can enhance the model’s performance and efficiency, making it better suited to heterogeneous data. A schematic diagram of Llama2 is presented in Figure 3.

在前馈神经网络 (FFN) 模块中优化矩阵维度,并引入分组查询注意力 (Grouped Query Attention, GQA) [46]。这些改进能提升模型性能和效率,使其更适配异构数据。Llama2 的结构示意图见图 3:

Figure 3: The architecture diagram of Llama 2. The model structure of Llama 2 is basically consistent with the standard Transformer Decoder structure, mainly composed of 32 Transformer Blocks

图 3: Llama 2 架构图。Llama 2 的模型结构与标准 Transformer Decoder 结构基本一致,主要由 32 个 Transformer Blocks 组成

Some technical details are described as follows:

以下是一些技术细节:

• In the model’s Norm layer, RMSNorm is used without re-centering operations (the removal of the mean term), significantly improving model speed [47]. The RMSNorm formula is as follows:

• 在模型的 Norm 层中,使用 RMSNorm 时不进行重新居中操作(移除均值项),显著提升了模型速度 [47]。RMSNorm 公式如下:

$$

\mathrm{RMSNorm}(x)=\frac{x}{\sqrt{\mathrm{Mean}(x^{2})+\epsilon}}

$$

$$

\mathrm{RMSNorm}(x)=\frac{x}{\sqrt{\mathrm{Mean}(x^{2})+\epsilon}}

$$

where $x$ represents the input vector or tensor, typically the output of a neural network layer; $\epsilon$ is a small positive constant, usually used to prevent the denominator from being zero, ensuring numerical stability. It is typically a very small value, such as $1.0e^{-5}$ or $1.0e^{-6}$ .

其中 $x$ 代表输入向量或张量,通常是神经网络层的输出;$\epsilon$ 是一个小的正常数,通常用于防止分母为零,确保数值稳定性。它通常是一个非常小的值,例如 $1.0e^{-5}$ 或 $1.0e^{-6}$。

• SwiGLU activation function is employed in the Feed-Forward Network (FFN) to enhance performance. The implementation of the SwiGLU activation function is as follows:

• 在 Feed-Forward Network (FFN) 中采用 SwiGLU 激活函数以提升性能。SwiGLU 激活函数的实现方式如下:

$$

S w i G L U(x,W,V,b,c,\beta)=\mathrm{Swish}_ {\beta}(x W+b)\odot(x V+c)

$$

$$

S w i G L U(x,W,V,b,c,\beta)=\mathrm{Swish}_ {\beta}(x W+b)\odot(x V+c)

$$

where $S w i s h$ is defined as $S w i s h=x\cdot\sigma(\beta x)$ ; $\cal{G L U}$ is represented as $G L U(x)=\sigma(W x+b)\odot$ $(V x+c)$ ; $W$ and $V$ denote weight matrices; $b$ and $c$ represent biases; The symbol $\odot$ represents the element-wise Hadamard product, also known as element-wise multiplication.

其中 $Swish$ 定义为 $Swish=x\cdot\sigma(\beta x)$;$\cal{GLU}$ 表示为 $GLU(x)=\sigma(W x+b)\odot$ $(V x+c)$;$W$ 和 $V$ 表示权重矩阵;$b$ 和 $c$ 代表偏置;符号 $\odot$ 表示逐元素的哈达玛积 (Hadamard product),也称为逐元素乘法。

• RoPE (Rotary Position Embedding) is a technique used in neural networks to represent sequential position information, particularly in natural language processing tasks. The core idea behind RoPE is to introduce a rotational operation to represent position information, avoiding the fixed nature of traditional positional embeddings. Specifically, the formula for RoPE’s positional embedding is as follows:

• RoPE (Rotary Position Embedding) 是一种用于神经网络中表示序列位置信息的技术,尤其在自然语言处理任务中。RoPE 的核心思想是通过引入旋转操作来表示位置信息,避免传统位置嵌入的固定性。具体而言,RoPE 的位置嵌入公式如下:

$$

R o P E(\mathrm{position},d)=R_ {d}\cdot P_ {\mathrm{position}}

$$

$$

R o P E(\mathrm{position},d)=R_ {d}\cdot P_ {\mathrm{position}}

$$

where $R o P E(p o s i t i o n,d)$ represents the RoPE position embedding at a specific position; $d$ denotes the embedding dimension; $R_ {d}$ denotes a rotation matrix with dimensions of $d$ , utilized for rotating the position embedding; $P_ {p o s i t i o n}$ denotes an original position embedding vector, typically generated by sine and cosine functions. However, in RoPE, it is not static but can be rotated to adapt to different tasks.

其中 $R o P E(p o s i t i o n,d)$ 表示特定位置上的RoPE位置嵌入;$d$ 表示嵌入维度;$R_ {d}$ 表示维度为 $d$ 的旋转矩阵,用于旋转位置嵌入;$P_ {p o s i t i o n}$ 表示原始位置嵌入向量,通常由正弦和余弦函数生成。但在RoPE中,它不是静态的,而是可以旋转以适应不同任务。

• GQA is the abbreviation for grouped-query attention, which is a variant of the attention mechanism. In GQA, query heads are divided into multiple groups, each of which shares a single key and value matrix. This mechanism serves to reduce the size of the key-value cache during inference and significantly enhances inference throughput.

• GQA是分组查询注意力 (grouped-query attention) 的缩写,它是注意力机制的一种变体。在GQA中,查询头被分为多个组,每组共享一个键矩阵和值矩阵。该机制能减小推理过程中的键值缓存大小,并显著提升推理吞吐量。

Based on the selected Llama2 family (i.e., Llama2, Llama2-Chat, and Llama2-Chinese-Chat), the model fine-tuning process in this paper is described in Algorithm 2 and outlined as follows:

基于选定的Llama2系列(即Llama2、Llama2-Chat和Llama2-Chinese-Chat),本文中的模型微调过程如算法2所述并概述如下:

Algorithm 2 Train ChatRadio-Valuer.

算法 2: 训练 ChatRadio-Valuer

- Model Quantization: Leveraging the Transformers’ model quantization library, we employ the Bits And Bytes Config interface for model quantization. Essentially, this process involves converting model weights into int4 format through quantization layers and placing them on the GPU. Referring to Algorithm 2, we initialize the pre-train model $f_ {0}$ with the int4 quantization configuration $\pmb{\theta}_ {q u a n t i z a t i o n}$ to prepare for further model preparation. The core computation is carried out on the CUDA, reducing memory consumption and improving efficiency.

- 模型量化 (Model Quantization): 利用Transformers的模型量化库,我们采用Bits And Bytes Config接口进行模型量化。该过程本质上是通过量化层将模型权重转换为int4格式并置于GPU上。参考算法2,我们用int4量化配置$\pmb{\theta}_ {q u a n t i z a t i o n}$初始化预训练模型$f_ {0}$,为后续模型准备奠定基础。核心计算在CUDA上执行,从而降低内存消耗并提升效率。

- Model Fine-tuning: We incorporate LoRA (Low-Rank Adaptation) weights $\pmb{\theta}_ {p e f t-l o r a}$ and training parameters $\pmb{\theta}_ {t r}$ shown in Algorithm 2. In the first step, we fix the parameters of the model’s transformer component, focusing solely on training embeddings. This is done to adapt the newly added small-sample vectors without significantly interfering with the original model. Additionally, supervised Prompt data is utilized for fine-tuning, aiding in the selection of the most suitable prompts. In the second step, training continues on the remaining majority of samples while simultaneously updating LoRA parameters. The LoRA weights are then merged back into the initialized model $f_ {i n i t}$ , resulting in fine-tuned model $f$ with model parameters $\theta$

- 模型微调:我们引入LoRA (Low-Rank Adaptation)权重$\pmb{\theta}_ {p e f t-l o r a}$和训练参数$\pmb{\theta}_ {t r}$(如算法2所示)。第一步固定模型Transformer组件的参数,仅训练嵌入层,旨在适配新增的小样本向量而不显著干扰原始模型。同时利用监督式Prompt数据进行微调,辅助选择最合适的提示词。第二步继续训练剩余多数样本,并同步更新LoRA参数。最终将LoRA权重合并回初始化模型$f_ {i n i t}$,得到含参数$\theta$的微调模型$f$。

- Efficient Prompt Selection: During the stage of fine-tuning, to effectively activate the general iz ability and semantic capability of LLMs, prompt selection plays a significant role. However, fine-tuning any LLMs thoroughly is a time-consuming project, which requires sufficient computational resources and time capacity. Therefore, we employ small-epoch fine-tuning with our five expert-curated training prompts $X_ {prompt}^{tr}={sp_ {1}^{tr},sp_ {2}^{tr},...,sp_ {5}^{tr}}$ for time efficiency.

- 高效提示词选择:在微调阶段,为有效激活大语言模型(LLM)的泛化能力和语义理解能力,提示词选择至关重要。但由于完整微调任何大语言模型都是耗时工程,需要充足的计算资源和时间成本,因此我们采用小周期微调策略,使用五个专家精选的训练提示词$X_ {prompt}^{tr}={sp_ {1}^{tr},sp_ {2}^{tr},...,sp_ {5}^{tr}}$以提升时间效率。

We find the best prompt and its index $i d x$ through this small-epoch fine-tuning, and then inherit the model and parameters pair $(f_ {i d x},\theta_ {i d x})$ to continue the complete model fine-tuning mention above.

我们通过这个小周期的微调找到最佳提示及其索引$idx$,然后继承模型和参数对$(f_ {idx},\theta_ {idx})$,继续完成上述提到的完整模型微调。

3.3.2 Prompt Generation

3.3.2 提示生成

With the meticulously processed dataset proposed in Section 2.3, our objective is to generate complete prompts, subsequently employed by LLMs to yield effective causal inferences, contributing to either training or evaluation phases. Before introducing the proposed prompt, we first explicate the parts of the structure, which is accessible via a string and divided into three components: system description, instruction and input. The system description component is typically invoked at the outset to demarcate the task and constrain the instruction’s behavior, while the instruction component serves to provide direction for radiology report generation and the input component contains clinical radiology reports. The model output is generated by the response component, which serves as the basis for our dynamic prompt and iterative optimization framework.

利用第2.3节提出的精细处理数据集,我们的目标是生成完整提示词,随后由大语言模型(LLM)生成有效的因果推理结果,用于训练或评估阶段。在介绍所提出的提示结构前,首先解析其组成部分:该结构通过字符串实现,包含系统描述、指令和输入三个组件。系统描述组件通常在开始时调用,用于界定任务范围并约束指令行为;指令组件为放射学报告生成提供指导方向;输入组件则包含临床放射学报告。模型输出由响应组件生成,该组件是我们动态提示与迭代优化框架的基础。

Figure 4: Prompt generation overview. The overall framework contains three parts, system description, instruction, and input, which collaborative ly constitute a prompt. Within a prompt example (purple), expert instruction and input data on its right are inserted to the ${E x p e r t I n s t r u c t i o n}$ and ${I n p u t D a t a}$ , respectively. The derived impression is in the ${O u t p u t I m p r e s s i o n}$ .

图 4: 提示生成概览。整体框架包含系统描述、指令和输入三部分,协同构成一个提示。在提示示例(紫色)中,右侧的专家指令和输入数据分别插入到 ${E x p e r t I n s t r u c t i o n}$ 和 ${I n p u t D a t a}$ 中,生成的印象位于 ${O u t p u t I m p r e s s i o n}$。

Prior studies have used fixed-form prompts for straightforward tasks that could be easily generalized. However, these prompts lack the necessary prior knowledge for complex tasks and domain-specific datasets, resulting in low performance [29]. Thus, we propose a hypothesis that constructs dynamic prompts from relevant domain-specific corpora and can thus enhance the model’s comprehension and perception.

先前的研究使用固定形式的提示(prompt)来处理易于泛化的简单任务。然而,这些提示缺乏针对复杂任务和领域特定数据集所需的先验知识,导致性能低下[29]。因此,我们提出一个假设:从相关领域语料库构建动态提示,可以增强模型的理解和感知能力。

Specifically, in addition to the corpus containing pre processed multi-institution and multi-system data, domain experts contribute to the formulation of five prompt templates, instructions to LLMs, tailored to this study. Their professional advice helps provide refined instructions to LLMs to activate their ability to adapt to radiology domain and generate meaningful domain-specified results. Definitely referring to Algorithm 3, we combine the synthesized expert-curated instruction and system description set $X_ {p t}$ with the input data. As an illustrative example, one of these templates is shown in Figure 4, where Input Data is for data insertion and Expert Instruction is for instruction insertion. The complete prompt generation scheme is illustrated in Figure 4. Following this approach, five distinct prompt sets are generated, fully prepared for subsequent training and evaluation stages.

具体而言,除了包含预处理的多机构多系统数据的语料库外,领域专家还为本研究定制了五种提示模板和大语言模型指令。他们的专业建议有助于提供精细化指令,激活大语言模型适应放射学领域的能力,并生成有意义的领域特定结果。如算法3所示,我们将专家精心合成的指令和系统描述集$X_ {pt}$与输入数据相结合。其中一个模板示例如图4所示,其中输入数据用于数据插入,专家指令用于指令插入。完整的提示生成方案如图4所示。通过这种方法,我们生成了五个不同的提示集,为后续训练和评估阶段做好了充分准备。

Algorithm 3 Inference & Evaluate Reports.

算法 3: 推理与评估报告

3.4 How to Inference ChatRadio-Valuer?

3.4 如何推理ChatRadio-Valuer?

In the final phase, model reasoning testing and evaluation, clinging to the power of LLMs, including our proposed ChatRadio-Valuer especially, we exert them to generate casual inferences on the evaluation dataset $\pmb{I}$ for evaluating ChatRadio-Valuer’s performance over the other selected LLMs’.

在最终阶段,即模型推理测试与评估阶段,我们依托大语言模型(LLM)的强大能力(特别是我们提出的ChatRadio-Valuer),利用它们在评估数据集 $\pmb{I}$ 上生成因果推断,以评估ChatRadio-Valuer相较于其他选定大语言模型的性能表现。

In Algorithm 3, we have decided the best evaluation prompt template candidate $t_ {i d x}$ with its index $_ {i d x}$ . This template is also implemented in this module for saving computation resources and better exerting the capability of LLMs (i.e., no more prompt template selection is required due to its reliable credits in previous performance comparisons). Accordingly, this template indicates the expected evaluation prompts $s p_ {i d x}^{e v a l}$ . In terms of the test-institution prompt sets, following the same data preprocessing schematic in the second stage, we synthesize the cleaned test-institution data $X_ {t e s t}$ and the designated template candidate $t_ {i d x}$ into the final test-institution prompts $s p_ {c}$ .

在算法3中,我们确定了最佳评估提示模板候选$t_ {idx}$及其索引$_ {idx}$。该模板同样在本模块中实施,以节省计算资源并更好地发挥大语言模型的性能(即由于其在先前性能对比中的可靠表现,无需再进行提示模板选择)。因此,该模板指向预期的评估提示$sp_ {idx}^{eval}$。对于测试机构提示集,遵循第二阶段相同的数据预处理方案,我们将清洗后的测试机构数据$X_ {test}$与指定模板候选$t_ {idx}$合成为最终的测试机构提示$sp_ {c}$。

With the massive preparation work of multi-institution and multi-system prompts finished, we carefully construct our LLM pool for the final evaluation, containing total 15 LLMs, including our ChatRadio-Valuer. For each LLM, weights are accordingly initialized (i.e., ChatRadio-Valuer is initiated with SFT weights but others are with their official ones) and prepared for causal inference. With all inference configuration $\gamma$ , including top $k$ , top $p$ , temperature, etc., are specified, we leverage the power of these LLMs to inference casually, obtaining the reports $\pmb{I}_ {e v a l}$ and $I_ {c}$ . During the generation process, we pay great attention to the quality of the generated results. However, limited by the highly hardware-dependent properties of LLMs and randomness within their outputs, results sometimes encounter problems including the null output and repetitive words, leading to inaccurate representation of LLMs’ performances on generating casual inferences, specifically the quality of the reports without meaningless values. In order to solve these problems, we incorporate two modules to check whether the null output is generated and repetitive words are obtained during generation. These two modules maintain the quality of the final results in avoid of interference caused by meaningless values. In addition, more language-specifically, we leverage Chinese text segmentation to the results to improve the accuracy of ROUGE evaluation towards Chinese contexts. This segmentation provides a valuable vision for bilingual or multi-lingual tasks.

在多机构多系统提示词(prompt)的大规模准备工作完成后,我们精心构建了包含15个大语言模型(LLM)的评估池,其中包括我们的ChatRadio-Valuer。每个模型都进行了相应的权重初始化(ChatRadio-Valuer采用SFT权重,其余模型使用官方权重)并准备进行因果推理。当所有推理配置参数$\gamma$(包括top $k$、top $p$、温度值等)设定完毕后,我们驱动这些大语言模型进行自由推理,获得评估报告$\pmb{I}_ {eval}$和对比报告$I_ {c}$。

在生成过程中,我们高度重视输出结果的质量。但由于大语言模型高度依赖硬件设备的特性及其输出固有的随机性,结果有时会出现空输出和重复词汇等问题,导致无法准确反映模型在自由推理任务中的表现,特别是生成报告时出现无意义数值的情况。为解决这些问题,我们引入了两个检测模块:空输出检测和重复词汇检测。这两个模块通过过滤无意义数值的干扰,确保最终结果质量。

此外,针对中文语境特点,我们对结果进行中文分词处理以提高ROUGE评估的准确性。这种分词方法为双语或多语言任务提供了有价值的处理视角。

4 Performance Evaluation

4 性能评估

4.1 Performance Evaluation Indices

4.1 性能评估指标

To confirm the effectiveness, generalization, and transfer ability of our proposed ChatRadio-Valuer, we conduct a comprehensive analysis using engineering indexes and clinical evaluation from the aspects of feasibility, clinical performance, and application cost.

为验证所提出的ChatRadio-Valuer在有效性、泛化性和迁移能力上的表现,我们从可行性、临床性能和应用成本三个维度,结合工程指标与临床评估展开了全面分析。

For feasibility, we evaluate the performance of the models by the widely-used engineering index ROUGE [48] and report the $F_ {1}$ scores for ROUGE-N and ROUGE-L (denoted as R-1, R-2, and R-L), which measure the word-level N-gram-overlap and longest common sequence between the reference summaries and the candidate summaries respectively, as shown from Eq.(5)-Eq.(8). $P$ is denoted as the percentage of defects correctly assigned, while $R$ represents the ratio of accurately detected defects to total true defects. $F_ {1}$ measures the trade-off between Precision $\mathrm{\nabla}^{\cdot}P_ {\mathrm{\Phi}}^{\cdot}$ ) and $\operatorname{Recall}(R)$ .

为评估可行性,我们采用广泛使用的工程指标ROUGE[48]来衡量模型性能,并报告ROUGE-N和ROUGE-L的$F_ {1}$分数(记为R-1、R-2和R-L)。这些分数分别通过式(5)-式(8)计算参考摘要与候选摘要之间的词级N-gram重叠率和最长公共子序列。$P$表示正确分配的缺陷百分比,$R$代表准确检测到的缺陷占真实缺陷总数的比例。$F_ {1}$用于衡量精确率($P$)与召回率($R$)之间的平衡关系。

$$

R O U G E-N=\frac{\sum_ {S\in{R e f e r e n c e S u m m a r i e s}}\sum_ {g r a m_ {n}\in S}C o u n t_ {m a t c h}(g r a m_ {n})}{\sum_ {S\in{R e f e r e n c e S u m m a r i e s}}\sum_ {g r a m_ {n}\in S}C o u n t(g r a m_ {n})}

$$

$$

R O U G E-N=\frac{\sum_ {S\in{R e f e r e n c e S u m m a r i e s}}\sum_ {g r a m_ {n}\in S}C o u n t_ {m a t c h}(g r a m_ {n})}{\sum_ {S\in{R e f e r e n c e S u m m a r i e s}}\sum_ {g r a m_ {n}\in S}C o u n t(g r a m_ {n})}

$$

$$

R={\frac{L C S(R e f e r e n c e S u m m a r i e s,C a n d i d a t e S u m m a r i e s)}{m}}

$$

$$

R={\frac{L C S(R e f e r e n c e S u m m a r i e s,C a n d i d a t e S u m m a r i e s)}{m}}

$$

$$

P={\frac{L C S(R e f e r e n c e S u m m a r i e s,C a n d i d a t e S u m m a r i e s)}{n}}

$$

$$

P={\frac{L C S(R e f e r e n c e S u m m a r i e s,C a n d i d a t e S u m m a r i e s)}{n}}

$$

$$

F_ {1}={\frac{2R P}{R+P}}

$$

$$

F_ {1}={\frac{2R P}{R+P}}

$$

where $n$ stands for the length of the n-gram, $g r a n$ ; $C o u n t_ {m a t c h}(g r a m_ {n})$ is the maximum number of the n-grams co-occurring in a candidate summary and a set of reference summaries. $L C S$ is the length of the longest common sub sequence of Reference-Summaries and Candidate-Summaries. $m$ denotes the length of Reference-Summaries and $n$ is the length of Candidate-Summaries.

其中 $n$ 表示 n-gram 的长度 $gran$;$Count_ {match}(gram_ {n})$ 是候选摘要与参考摘要集中共现 n-gram 的最大数量。$LCS$ 是参考摘要与候选摘要的最长公共子序列长度。$m$ 表示参考摘要的长度,$n$ 表示候选摘要的长度。

For clinical performance, we assess ChatRadio-Valuer using an array of clinical indexes to test its performance, as illustrated in Figure 9. For each index, we utilize a 100-point scale and divide it into quintiles every 20 points. These clinical indexes include "Understand ability" which denotes the model’s capacity to be comprehended and interpreted by clinicians and relevant physicians.

在临床性能方面,我们通过一系列临床指标评估ChatRadio-Valuer的表现,如图9所示。每个指标采用百分制,并按每20分为一个五分位区间划分。这些临床指标包括"理解能力(Understand ability)",即模型被临床医生及相关医师理解和阐释的能力。

"Coherence" means the model’s ability to maintain logical consistency and unified structure in its outputs. Furthermore, "Relevance" means the model’s capacity to generate related information and insights for the current clinical context. "Conciseness" represents the importance of the model’s output being concise and reducing redundant information, ensuring that it effectively conveys essential clinical knowledge. "Clinical Utility" emphasizes the practical value of the model’s outputs, measuring its capacity to inform and enhance clinical decision-making processes. Moreover, the evaluation also examines the potential for "Missed Diagnosis" emphasizing the model’s ability to minimize instances where clinically significant conditions are overlooked or under emphasized. On the contrary, "Over diagnosis" assesses the condition where the model may lead to excessive diagnoses or over utilization of medical interventions. These clinical indexes inform the assessment of the model’s effectiveness, ensuring its effectiveness and appropriateness in the clinical domain. In addition to the basic interpretation of these metrics, the most essential factors for clinical utilities and significance are "Missed Diagnosis" and "Over diagnosis", explained more thoroughly as follows:

"连贯性"指模型在输出中保持逻辑一致性和结构统一的能力。"相关性"指模型为当前临床情境生成相关信息与见解的能力。"简洁性"强调模型输出需简明扼要,减少冗余信息,确保有效传递核心临床知识。"临床实用性"着重评估模型输出对临床决策的实际指导价值。此外,评估还关注"漏诊"风险,即模型能否最大限度避免遗漏临床重要病症;相反,"过度诊断"则评估模型可能导致过度医疗干预的情况。这些临床指标共同构成模型有效性的评估体系,确保其在医疗领域的适用性。除基础指标外,最具临床实用价值的关键因素是"漏诊"与"过度诊断",具体阐释如下:

Missed Diagnosis Missed diagnosis, or false negative in medical diagnostics, occurs when healthcare practitioners fail to detect a medical condition despite its actual presence. This diagnostic error stems from the inability of clinical assessments, tests, or screenings to accurately identify the condition, resulting in a lack of appropriate treatment. The consequences of missed diagnosis range from delayed therapy initiation to disease progression, with contributing factors including diagnostic modality limitations, inconspicuous symptoms, and cognitive biases. Timely and accurate diagnosis is fundamental to effective healthcare. Therefore, it is necessary to reduce the occurrence of missed diagnosis for improved patient outcomes and healthcare quality.

漏诊

漏诊,即医学诊断中的假阴性,指医疗从业者未能检测出实际存在的病症。这种诊断错误源于临床评估、检测或筛查无法准确识别病情,导致患者未得到适当治疗。漏诊的后果包括治疗延迟和疾病恶化,影响因素包括诊断方法局限性、症状不明显以及认知偏差。及时准确的诊断是有效医疗的基础,因此有必要减少漏诊发生率以改善患者预后和医疗质量。

Over diagnosis Over diagnosis is characterized by the erroneous or unnecessary identification of a medical condition upon closer examination. This diagnostic error arises when a condition is diagnosed but would not have caused harm or clinical symptoms during the patient’s lifetime. Over diagnosis often leads to unnecessary medical interventions, exposing patients to potential risks without significant benefits. It is particularly relevant in conditions with broad diagnostic criteria, where the boundaries between normal and pathological states are unclear. For example, early detection of certain malignancies can result in over diagnosis if the cancer has remained a symptomatic throughout the patient’s lifetime. Recognizing and addressing over diagnosis are critical aspects of modern healthcare, influencing resource allocation, patient well-being, and healthcare system sustainability.

过度诊断

过度诊断的特征是在进一步检查时错误或不必要地识别出某种疾病。这种诊断错误发生在确诊某种疾病,但该疾病在患者一生中不会造成伤害或临床症状的情况下。过度诊断常导致不必要的医疗干预,使患者暴露于潜在风险中却无明显获益。在诊断标准宽泛、正常与病理状态界限不明确的疾病中尤为突出。例如,某些恶性肿瘤若在患者一生中始终保持无症状状态,早期检测便可能导致过度诊断。识别并解决过度诊断是现代医疗的关键议题,影响着资源分配、患者福祉及医疗系统的可持续性。

As for application metrics, the assessment of practicality is critical for ChatRadio-Valuer. In this context, two critical indexes are "Time Cost" (i.e. "Fine-Tuning Time" and "Testing Time") and "Parameter Count". "Fine-tuning time" means the temporal duration during the iterative training process where the model acquires its knowledge and optimization. The duration of the training phase is of great importance, as it directly impacts the time-to-model readiness and the overall efficiency of the development workflow. "Testing Time" is an essential measure that gauges the computational expenses incurred during the model’s execution, including both the temporal and computational resources required for real-time deployment. This metric carries great significance, particularly in resource-limited settings where efficient use of computational resources is vital. "Parameter Count" is another vital evaluation metric, indicating the model’s demand for memory storage during execution. This aspect is of great importance, especially when dealing with data-intensive applications. An effective evaluation of memory size ensures that the model remains deployable on hardware configurations with adequate memory resources while avoiding performance degradation. In a word, these indexes serve as crucial benchmarks in the assessment of the model, allowing for a comprehensive evaluation of its computational efficiency and resource utilization in the specific operational setting.

就应用指标而言,ChatRadio-Valuer的实用性评估至关重要。在此背景下,两个关键指标是"时间成本"(即"微调时间"和"测试时间")与"参数量"。"微调时间"指模型在迭代训练过程中获取知识与优化的时间跨度。训练阶段的持续时间极为重要,因其直接影响模型就绪时间与整体开发流程效率。"测试时间"是衡量模型执行期间计算开销的关键指标,包括实时部署所需的时间与计算资源。该指标在资源受限环境中尤为重要,此时计算资源的高效利用至关重要。"参数量"是另一项重要评估指标,反映模型运行期间对内存存储的需求。这一特性在处理数据密集型应用时尤为关键。对内存占用的有效评估能确保模型在具有充足内存资源的硬件配置上保持可部署性,同时避免性能下降。简言之,这些指标作为模型评估的关键基准,可全面评估其在特定运行环境中的计算效率与资源利用率。

4.2 Experiment Setup

4.2 实验设置

We initially preprocess the multi-system data from multiple institutions (shown in Section 2.3) and established datasets for training and evaluation by combining these data with five prompt templates. After data preprocessing, we implement supervised fine-tuning (SFT) on ChatRadio-Valuer using these cleaned and standardized data. We also employ evaluation on the SFT ChatRadio-Valuer. To achieve reduced computational resource requirements, faster adaptation, and enhanced accessibility, we apply low-rank adaptation (LoRA), a technique for parameter-efficient fine-tuning (PEFT), and 4-bit quantization during the SFT stage. Technically, LoRA enhances the efficiency of adapting large, pre-trained language models to specific tasks by introducing trainable rank decomposition matrices. It significantly reduces the number of trainable parameters while maintaining high model quality when compared to traditional fine-tuning. In terms of quantization, it simplifies numerical representation by reducing data precision. In LLMs, it involves the conversion of high-precision floating-point values into lower-precision fixed-point representations, effectively diminishing memory and computational requirements. Quantized LLMs offer enhanced resource efficiency and compatibility with a wide array of hardware platforms. In the context of ChatRadio-Valuer, we implement 4-bit precision quantization.

我们首先对来自多个机构的多系统数据(如第2.3节所示)进行预处理,并通过将这些数据与五种提示模板结合,建立了用于训练和评估的数据集。数据预处理完成后,我们利用这些经过清洗和标准化的数据对ChatRadio-Valuer实施监督微调(SFT),并对SFT后的ChatRadio-Valuer进行评估。为降低计算资源需求、加快适应速度并提升可访问性,我们在SFT阶段采用了参数高效微调(PEFT)技术——低秩自适应(LoRA)以及4位量化技术。从技术角度看,LoRA通过引入可训练的秩分解矩阵,提升了将大型预训练语言模型适配到特定任务的效率。与传统微调相比,它在保持高质量模型表现的同时,显著减少了可训练参数数量。量化技术则通过降低数据精度来简化数值表示。在大语言模型中,该技术将高精度浮点值转换为低精度定点表示,从而有效降低内存和计算需求。量化后的大语言模型具有更高的资源效率,并能兼容更广泛的硬件平台。在ChatRadio-Valuer中,我们实现了4位精度的量化。

Before the training stage, we investigate the well-known LLMs from medical perspective and constructed LLM pool for model selection. We mainly focus on 6 aspects, indicated in Figure 5, domain adaptability, compatibility with medical standards, bilingual, open source, parameter efficiency, and cost and licensing. A brief introduction to these metrics is listed below:

在训练阶段之前,我们从医学角度调研了知名的大语言模型,并构建了用于模型选择的LLM池。主要关注6个方面(如图5所示): 领域适应性、医学标准兼容性、双语能力、开源性质、参数效率及成本与授权。这些指标的简要说明如下:

Eventually, we choose 12 SOTA LLMs and take 4 of them, Llama2-7B, Llama2-Chat-7B, Llama2- Chinese-Chat-7B and ChatGLM2-6B for fine-tuning. During the training stage, we conduct a total of three training epochs. The initial learning rate is set to $1.41e^{-5}$ , with a steady decrease as training steps progressed. The batch size is fixed at 64, and the maximum input/output sequence length are set to 512. To alleviate GPU memory load, we use gradient accumulation steps, which are set to 16. In terms of LoRA, we assign values of 64 and 16 to the parameters $r$ and $\alpha$ , respectively. During the evaluation stage, all inferences share the same configuration, with a maximum token generation length of 512. Temperature, top-k, and top-p values are set to 1.0, 50, and 1.0, respectively. The prevailing algorithms as the baseline to construct a LLM pool are shown in Figure 5 and explained as follows:

最终,我们选择了12个最先进的大语言模型(LLM),并对其中的4个模型(Llama2-7B、Llama2-Chat-7B、Llama2-Chinese-Chat-7B和ChatGLM2-6B)进行微调。训练阶段共进行三个训练周期,初始学习率设为$1.41e^{-5}$,随着训练步数增加逐步降低。批量大小固定为64,最大输入/输出序列长度设为512。为减轻GPU内存负载,我们采用梯度累积步数设置为16。在LoRA配置方面,参数$r$和$\alpha$分别设为64和16。评估阶段所有推理采用相同配置,最大token生成长度为512,温度值、top-k和top-p分别设置为1.0、50和1.0。图5展示了作为基线的主流算法构建的大语言模型池,具体说明如下:

Figure 5: LLM pool for model selection. Considering the scene of medical application, six aspects are considered: domain adaptability, compatibility with medical standards, bilingual, open source, parameter efficiency, and cost and licensing. 15 SOTA LLMs (12 baseline models, 3 fine-tuning pairs, and 1 fine-tuned ChatGLM2-6B) from 10 organizations jointly are established by the LLM pool.

图 5: 大语言模型选型池。针对医疗应用场景,我们从六个维度进行评估:领域适应性、医疗标准兼容性、双语支持、开源属性、参数效率及成本许可。该模型池汇集了来自10家机构的15个前沿大语言模型 (包含12个基线模型、3组微调对比模型及1个微调版ChatGLM2-6B)。

Baseline Methods:

基线方法:

- Llama2: Llama2 [49] is a collection that comprises a meticulously curated set of pre-trained generative text models that have been expertly fine-tuned. These models operate as auto-regressive language models and are founded upon an optimized transformer architecture. The fine-tuned variants incorporate SFT and reinforcement learning with input from human feedback (RLHF) [50] to align with human preferences regarding both utility and safety. Our model selection includes Llama2-7B and Llama2-Chat-7B.

- Llama2: Llama2 [49] 是一个精心筛选的预训练生成文本模型集合,这些模型经过专家级微调。它们作为自回归大语言模型运行,基于优化后的Transformer架构。微调版本结合了监督微调(SFT) 和来自人类反馈的强化学习(RLHF) [50],以在实用性和安全性方面符合人类偏好。我们选用的模型包括Llama2-7B和Llama2-Chat-7B。

- Llama2-Chinese: Llama2-Chinese [51] is a collection containing LLMs pre-trained on a large-scale Chinese corpus, leveraging high-quality Chinese language datasets totaling 200 billion characters for fine-tuning. While this approach is costly, requiring significant resources in terms of both high-quality Chinese data and computational resources, its great advantage lies in enhancing Chinese language capabilities at the model’s core, achieving fundamental improvement and imparting strong Chinese language proficiency to the large model. In our study, we carefully select Llama2-Chinese-Chat-7B as the baseline method.

- Llama2-Chinese: Llama2-Chinese [51] 是一个包含基于大规模中文语料预训练的大语言模型合集,利用总计2000亿字符的高质量中文数据集进行微调。虽然这种方法成本高昂,需要大量高质量中文数据和计算资源,但其巨大优势在于从模型核心层面提升中文能力,实现根本性改进,并赋予大模型强大的中文处理能力。在我们的研究中,我们精心选择了Llama2-Chinese-Chat-7B作为基线方法。

- GPT-3.5-Turbo/GPT-4: ChatGPT and GPT4, both developed by OpenAI, are influential large language models. ChatGPT, also known as GPT-3.5-turbo, is an advancement based on GPT-2 and GPT-3, with its training process heavily influenced by instruct GP T [52]. A key distinction from GPT-3 [53] is the incorporation of RLHF [50], which refines model output through human feedback. This approach enhances the model’s ability to rank results effectively. ChatGPT excels in language comprehension, accommodating diverse expressions and queries. Its extensive knowledge base answers frequently asked questions and provides valuable information. GPT-4, being the successor of GPT-3, may possess enhanced capabilities. In our experiments, both ChatGPT and GPT4 are utilized.

- GPT-3.5-Turbo/GPT-4: 由OpenAI开发的ChatGPT和GPT4都是具有影响力的大语言模型。ChatGPT(即GPT-3.5-turbo)是在GPT-2和GPT-3基础上的改进版本,其训练过程深受instruct GPT [52]影响。与GPT-3 [53]的关键区别在于引入了RLHF [50]技术,通过人类反馈来优化模型输出。这种方法提升了模型对结果排序的能力。ChatGPT在语言理解方面表现优异,能够处理多样化的表达方式和查询需求。其庞大的知识库可以回答常见问题并提供有价值的信息。作为GPT-3的继任者,GPT-4可能具备更强的能力。本实验中同时使用了ChatGPT和GPT4。

- ChatGLM2-6B: ChatGLM2-6B [54, 55] is the second-generation bilingual chat model based on the open-source ChatGLM-6B framework. It has undergone pre-training with 1.4 trillion bilingual tokens, accompanied by human preference alignment training. This model successfully achieves several key objectives, including maintaining a smooth conversation flow, imposing minimal deployment requirements, and extending the context length to 32K tokens through the incorporation of Flash Attention.

- ChatGLM2-6B:ChatGLM2-6B [54, 55] 是基于开源框架 ChatGLM-6B 的第二代双语对话模型。该模型通过 1.4 万亿双语 token 的预训练及人类偏好对齐训练,成功实现了多项关键目标:保持流畅的对话体验、极低的部署需求,并通过引入 Flash Attention 技术将上下文长度扩展至 32K token。

- BayLing: BayLing [56] is a set of advanced language models that excel in English and Chinese text generation, adeptly follow instructions, and engage in multi-turn conversations. It seamlessly operates on a standard 16GB GPU, facilitating users with translation, writing, and creative suggestions, among other tasks. In our evaluation task, BayLing-7B was selected for evaluation.

- BayLing: BayLing [56] 是一套擅长中英文文本生成、精准遵循指令并支持多轮对话的先进语言模型。它可在标准16GB GPU上流畅运行,辅助用户完成翻译、写作及创意建议等任务。本次评估选用BayLing-7B模型进行测试。

- Baichuan-7B: Baichuan-7B [57], an open-source language model by Baichuan Intelligent Technology, utilizes the Transformer architecture with 7 billion parameters, trained on about 1.2 trillion tokens. It excels in both Chinese and English, featuring a 4096-token context window. It outperforms similar-sized models on standard Chinese and English benchmarks like C-Eval and MMLU.

- Baichuan-7B: Baichuan-7B [57] 是百川智能科技推出的开源大语言模型, 采用 Transformer 架构, 拥有 70 亿参数, 基于约 1.2 万亿 token 训练而成。该模型在中英文任务上均表现优异, 支持 4096 token 的上下文窗口, 在 C-Eval 和 MMLU 等中英文基准测试中超越了同规模模型。

- Tigerbot-7B-chat: Tigerbot-7B-chat [58], derived from TigerBot-7B-base, underwent fine-tuning with 20M data across various tasks for directives (SFT) and alignment using rejection sampling (RS-HIL). Across 13 key assessments in English and Chinese, it outperforms Llama2-Chat-7B by 29%, showcasing superior performance compared to similar open-source models worldwide.

- Tigerbot-7B-chat: Tigerbot-7B-chat [58] 基于 TigerBot-7B-base 开发,通过 20M 多任务指令数据进行了监督微调 (SFT),并采用拒绝采样对齐方法 (RS-HIL)。在涵盖中英文的 13 项核心评测中,其性能超越 Llama2-Chat-7B 达 29%,展现出全球同类开源模型中的卓越表现。

- Chinese-LLaMA-Alpaca-2: Chinese-LLaMA-Alpaca-2 [59], an evolution of Meta’s Llama2, marks the second iteration of the Chinese LLaMA & Alpaca LLM initiative. We’ve made the Chinese LLaMA-2 (the base model) and Alpaca-2 (the instruction-following model) open source. These models have been enhanced and refined with a broader Chinese vocabulary compared to the original Llama2. It conducted extensive pre-training with abundant Chinese data, significantly bolstering our grasp of Chinese language semantics, resulting in a substantial performance boost compared to the first-gen models. The standard version supports a 4K context, while the long-context version accommodates up to 16K context. Moreover, all models can expand their context size using the NTK method, going beyond 24K+.

- Chinese-LLaMA-Alpaca-2: Chinese-LLaMA-Alpaca-2 [59] 作为 Meta Llama2 的演进版本,标志着中文 LLaMA & Alpaca 大语言模型项目的第二次迭代。我们开源了中文 LLaMA-2 (基础模型) 和 Alpaca-2 (指令跟随模型)。相比原始 Llama2,这些模型通过扩充中文词表进行了增强优化,并基于海量中文数据开展了充分预训练,显著提升了对中文语义的理解能力,性能较第一代模型有大幅提升。标准版支持 4K 上下文长度,长上下文版本最高支持 16K。此外,所有模型均可通过 NTK 方法扩展上下文窗口至 24K+。

- Chinese-Falcon-7B: Chinese-Falcon-7B [60], developed by the Linly team, expands the Chinese vocabulary of the Falcon model and transfers its language capabilities to Chinese through Chinese and Chinese-English parallel incremental pre-training. Pre-training was conducted using 50GB of data, with 20GB of general Chinese corpora providing Chinese language proficiency and knowledge to the model, 10GB of Chinese-English parallel corpora aligning the model’s Chinese and English representations to transfer English language proficiency to Chinese, and 20GB of English corpora used for data replay to mitigate model forgetting.

- Chinese-Falcon-7B: Chinese-Falcon-7B [60] 由 Linly 团队开发,通过扩展 Falcon 模型的中文词汇表,并采用中英平行增量预训练方式将其语言能力迁移至中文领域。预训练使用 50GB 数据完成,其中 20GB 通用中文语料为模型提供中文语言能力与知识,10GB 中英平行语料对齐模型的中英文表征以实现英语能力向中文的迁移,另有 20GB 英文语料用于数据回放以防止模型遗忘。

- iFLYTEK Spark (V2.0): iFLYTEK Spark (V2.0) [61] is a next-generation cognitive intelligent model that possesses interdisciplinary knowledge and language comprehension capabilities. It can understand and execute tasks based on natural conversation. It offers functionalities such as multimodal interaction, coding abilities, text generation, mathematical capabilities, language comprehension, knowledge answering, and logical reasoning. Its API enables applications to quickly access interdisciplinary knowledge and powerful natural language understanding capabilities, effectively addressing pressing issues in specific contexts.

- 科大讯飞星火(V2.0): 科大讯飞星火(V2.0) [61] 是新一代认知智能模型,具备跨学科知识和语言理解能力。它能基于自然对话理解并执行任务,提供多模态交互、编程能力、文本生成、数学能力、语言理解、知识问答及逻辑推理等功能。其API可使应用快速接入跨学科知识和强大的自然语言理解能力,有效解决特定场景下的迫切问题。

For SFT, Llama2-7B, Llama2-7B-Chat, Llama2-Chinese-7B-Chat, and ChatGLM2-6B employed identical training and LoRA configuration settings. For inference, the anterior models with their SFT versions and the listed models above conformed to the same inference configuration. Experiments have been performed to validate the effectiveness of ChatRadio-Valuer on the multi-institution and multi-system radiology report generation.

对于监督微调(SFT),Llama2-7B、Llama2-7B-Chat、Llama2-Chinese-7B-Chat和ChatGLM2-6B均采用相同的训练和LoRA配置参数。在推理阶段,上述基础模型及其监督微调版本均遵循统一的推理配置。实验验证了ChatRadio-Valuer在多机构、多系统的放射学报告生成任务中的有效性。

4.3 Generalization Performance Evaluation Across Institutions

4.3 跨机构泛化性能评估

Firstly, to assess the ChatRadio-Valuer’s general iz able abilities to transfer its acquired knowledge and skills to diverse institutions or sources, we conduct extensive cross-institution performance comparisons involving 15 models. As shown in Table 2 and Table 3 for details, ChatRadio-Valuer’s evaluation encompasses treating data from Institutions 2 through 6 as external sources, revealing a noteworthy degree of general iz ability and similarity with the data originating from Institution 1. Our results underscore significant advantages of ChatRadio-Valuer over other models, particularly evident in its absolute superiority in R-1, R-2, and R-L scores on datasets from Institutions 2 and 5. Furthermore, ChatRadio-Valuer secures the highest R-2 score on the dataset from Institution 6. Although it does not attain the top score across all metrics for Institutions 3, 4, and 6, ChatRadioValuer consistently maintains a prominent position within the first tier. Taking into account influential external factors like hardware and environmental disparities, we can assert that ChatRadio-Valuer demonstrates exceptional cross-institution performance.

首先,为评估ChatRadio-Valuer将其所学知识和技能迁移至不同机构或数据源的泛化能力,我们开展了涵盖15个模型的跨机构性能对比实验。如表2和表3所示,当将机构2至6的数据视为外部源时,ChatRadio-Valuer展现出与机构1数据源显著相似的泛化性能。实验结果凸显了ChatRadio-Valuer相对于其他模型的显著优势:在机构2和5数据集上R-1、R-2及R-L分数绝对领先,同时斩获机构6数据集的最高R-2分数。尽管在机构3、4、6的部分指标上未达榜首,ChatRadio-Valuer始终稳居第一梯队。综合考虑硬件环境差异等外部影响因素,可以确认ChatRadio-Valuer具有卓越的跨机构表现。

This remarkable capacity for generalization across institutions significantly diminishes the model’s reliance on specific institutional data, thereby presenting promising advantages for data sharing and collaboration among institutions. The ability to seamlessly transfer and apply the model across institutions without necessitating retraining positions ChatRadio-Valuer as a preferred tool for numerous medical establishments. The potential value lies in its capability to enhance the quality of patient care, expedite disease diagnosis, and streamline clinical decision-making processes.

这种跨机构泛化的卓越能力显著降低了模型对特定机构数据的依赖,从而为机构间的数据共享与合作带来了显著优势。无需重新训练即可在不同机构间无缝迁移和应用模型的特点,使ChatRadio-Valuer成为众多医疗机构的优选工具。其潜在价值体现在提升患者护理质量、加速疾病诊断以及优化临床决策流程等方面。

Explore further, in order to evaluate the ChatRadio-Valuer’s general iz able ability to extend its knowledge and skills to multiple body systems within Institution 1, we conduct performance tests involving 15 models on the designated testing dataset from Institution 1. This testing dataset encompasses five distinct systems (chest, abdomen, skeletal muscle, head, and max ill o facial & neck parts). As listed in Table 4 and 5 for details, we calculate the similarity for each system among the model-generated diagnosis results (impression) and the professional diagnosis results (REPORT RESULT) provided by doctors, yielding R-1, R-2, and R-L values. The outcomes clearly showcase ChatRadio-Valuer’s remarkable advantages. Across three systems—abdomen, head, and max ill o facial & neck—ChatRadio-Valuer achieves the highest scores for R-1, R-2, and R-L values. In the chest system’s testing results, both R-1 and R-L values attain the highest scores as well. The remaining test results exhibit remarkable proximity to the maximum values, presenting significantly superior performance compared to the other two fine-tuned models. They firmly belong to the top tier.

为进一步评估ChatRadio-Valuer在机构1中将知识技能泛化至多人体系统的能力,我们在机构1指定测试集上对15个模型进行了性能测试。该测试集涵盖五大系统(胸部、腹部、骨骼肌、头部及颌面颈部)。如表4和表5所示,我们计算了各系统模型生成诊断结果(印象)与医生提供的专业诊断结果(报告结果)之间的R-1、R-2和R-L相似度值。结果清晰展现了ChatRadio-Valuer的显著优势:在腹部、头部和颌面颈部三个系统中,其R-1、R-2和R-L值均获最高分;胸部系统测试中R-1与R-L值同样登顶;其余测试结果与最大值差距微小,较另外两个微调模型展现出显著优越性,稳居第一梯队。

Table 2: Cross-institution comparison (Part 1). Results of data from Institution 1 - 3 are shown. For results within each institution, each model corresponds to three similarity scores, R-1, R-2, and R-L.

| Model | Institution 1 | Institution 2 | Institution 3 | ||||||

| R-1 | R-2 | R-L | R-1 | R-2 | R-L | R-1 | R-2 | R-L | |

| Llama2-7B | 0.0909 | 0.0402 | 0.0879 | 0.0825 | 0.0344 | 0.0784 | 0.0172 | 0.0062 | 0.0169 |

| Llama2-7B-ft | 0.4324 | 0.2680 | 0.4181 | 0.4657 | 0.2675 | 0.4489 | 0.0875 | 0.0250 | 0.0847 |

| Llama2-Chat-7B | 0.1983 | 0.0810 | 0.1885 | 0.1871 | 0.0478 | 0.1780 | 0.0178 | 0.0040 | 0.0168 |

| ChatRadio-Valuer | 0.4619 | 0.2872 | 0.4464 | 0.4807 | 0.2684 | 0.4608 | 0.0673 | 0.0191 | 0.0644 |

| Llama2-Chinese-Chat-7B | 0.1701 | 0.0751 | 0.1633 | 0.1411 | 0.0386 | 0.1352 | 0.0376 | 0.0127 | 0.0358 |

| Llama2-Chinese-Chat-7B-ft | 0.3221 | 0.1911 | 0.3106 | 0.4045 | 0.2250 | 0.3869 | 0.0675 | 0.0166 | 0.0647 |

| ChatGLM2-ft | 0.2639 | 0.1052 | 0.2556 | 0.2733 | 0.0818 | 0.2578 | 0.0458 | 0.0114 | 0.0441 |

| GPT-3.5-Turbo | 0.1411 | 0.0615 | 0.1341 | 0.0721 | 0.0221 | 0.0679 | 0.0644 | 0.0346 | 0.0623 |

| GPT-4 | 0.1080 | 0.0451 | 0.1029 | 0.0689 | 0.0215 | 0.0646 | 0.0752 | 0.0393 | 0.0729 |

| BayLing-7B | 0.2732 | 0.1128 | 0.2604 | 0.2643 | 0.0729 | 0.2519 | 0.0412 | 0.0112 | 0.0380 |

| Baichuan-7B | 0.1264 | 0.0578 | 0.1217 | 0.0846 | 0.0247 | 0.0816 | 0.0532 | 0.0234 | 0.0508 |

| Tigerbot-7B-chat-v3 | 0.1338 | 0.0587 | 0.1283 | 0.0783 | 0.0285 | 0.0750 | 0.0817 | 0.0260 | 0.0789 |

| Chinese-Alpaca-2-7B | 0.1884 | 0.0776 | 0.1800 | 0.1837 | 0.0476 | 0.1758 | 0.0546 | 0.0214 | 0.0522 |

| Chinese-Falcon-7B | 0.0770 | 0.0356 | 0.0742 | 0.0360 | 0.0127 | 0.0350 | 0.0391 | 0.0166 | 0.0382 |

| iFLYTEK Spark (V2.0) | 0.1186 | 0.0513 | 0.1142 | 0.0962 | 0.0254 | 0.0918 | 0.0642 | 0.0305 | 0.0622 |

表 2: 跨机构对比 (第一部分)。展示机构1-3的数据结果。各机构内部结果中,每个模型对应三个相似度分数:R-1、R-2和R-L。

| 模型 | 机构1-R-1 | 机构1-R-2 | 机构1-R-L | 机构2-R-1 | 机构2-R-2 | 机构2-R-L | 机构3-R-1 | 机构3-R-2 | 机构3-R-L |

|---|---|---|---|---|---|---|---|---|---|

| Llama2-7B | 0.0909 | 0.0402 | 0.0879 | 0.0825 | 0.0344 | 0.0784 | 0.0172 | 0.0062 | 0.0169 |

| Llama2-7B-ft | 0.4324 | 0.2680 | 0.4181 | 0.4657 | 0.2675 | 0.4489 | 0.0875 | 0.0250 | 0.0847 |

| Llama2-Chat-7B | 0.1983 | 0.0810 | 0.1885 | 0.1871 | 0.0478 | 0.1780 | 0.0178 | 0.0040 | 0.0168 |

| ChatRadio-Valuer | 0.4619 | 0.2872 | 0.4464 | 0.4807 | 0.2684 | 0.4608 | 0.0673 | 0.0191 | 0.0644 |

| Llama2-Chinese-Chat-7B | 0.1701 | 0.0751 | 0.1633 | 0.1411 | 0.0386 | 0.1352 | 0.0376 | 0.0127 | 0.0358 |

| Llama2-Chinese-Chat-7B-ft | 0.3221 | 0.1911 | 0.3106 | 0.4045 | 0.2250 | 0.3869 | 0.0675 | 0.0166 | 0.0647 |

| ChatGLM2-ft | 0.2639 | 0.1052 | 0.2556 | 0.2733 | 0.0818 | 0.2578 | 0.0458 | 0.0114 | 0.0441 |

| GPT-3.5-Turbo | 0.1411 | 0.0615 | 0.1341 | 0.0721 | 0.0221 | 0.0679 | 0.0644 | 0.0346 | 0.0623 |