Towards Expert-Level Medical Question Answering with Large Language Models

基于大语言模型实现专家级医学问答

Karan Singhal $^{* ,1}$ , Tao Tu $^{* ,1}$ , Juraj Gottweis $^{* ,1}$ , Rory Sayres $^{* ,1}$ , Ellery Wulczyn $^1$ , Le Hou $^{1}$ , Kevin Clark $^{1}$ , Stephen Pfohl $^{1}$ , Heather Cole-Lewis $^{1}$ , Darlene Neal $^{1}$ , Mike Sch a e kerman n $^{1}$ , Amy Wang1, Mohamed Amin $^{1}$ , Sami Lachgar $^{1}$ , Philip Mansfield $^{1}$ , Sushant Prakash $^{\perp}$ , Bradley Green $\mathbf{\Psi}_ {\perp}$ , Ewa Dominowska $\cdot^{\perp}$ , Blaise Aguera y Arcas $^{1}$ , Nenad Tomasev $^2$ , Yun Liu $^{1}$ , Renee Wong1, Christopher Semturs $\mathbf{\Psi}_ {\perp}$ , S. Sara Mahdavi $^{1}$ , Joelle Barral1, Dale Webster $^{.1}$ , Greg S. Corrado $^{1}$ , Yossi Matias1, Shekoofeh Azizi $^{\dagger,1}$ , Alan Kart hikes a lingam $^{\dagger,1}$ and Vivek Natarajan $^{\dagger,1}$

Karan Singhal $^{* ,1}$, Tao Tu $^{* ,1}$, Juraj Gottweis $^{* ,1}$, Rory Sayres $^{* ,1}$, Ellery Wulczyn $^1$, Le Hou $^{1}$, Kevin Clark $^{1}$, Stephen Pfohl $^{1}$, Heather Cole-Lewis $^{1}$, Darlene Neal $^{1}$, Mike Sch a e kerman n $^{1}$, Amy Wang1, Mohamed Amin $^{1}$, Sami Lachgar $^{1}$, Philip Mansfield $^{1}$, Sushant Prakash $^{\perp}$, Bradley Green $\mathbf{\Psi}_ {\perp}$, Ewa Dominowska $\cdot^{\perp}$, Blaise Aguera y Arcas $^{1}$, Nenad Tomasev $^2$, Yun Liu $^{1}$, Renee Wong1, Christopher Semturs $\mathbf{\Psi}_ {\perp}$, S. Sara Mahdavi $^{1}$, Joelle Barral1, Dale Webster $^{.1}$, Greg S. Corrado $^{1}$, Yossi Matias1, Shekoofeh Azizi $^{\dagger,1}$, Alan Kart hikes a lingam $^{\dagger,1}$ 和 Vivek Natarajan $^{\dagger,1}$

$^{1}$ Google Research, $^2$ DeepMind,

$^{1}$ Google Research, $^2$ DeepMind,

Recent artificial intelligence (AI) systems have reached milestones in “grand challenges” ranging from Go to protein-folding. The capability to retrieve medical knowledge, reason over it, and answer medical questions comparably to physicians has long been viewed as one such grand challenge.

近期人工智能(AI)系统在从围棋到蛋白质折叠等"重大挑战"中取得了里程碑式突破。检索医学知识、进行医学推理并达到与医生相当的医学问答能力,长期以来一直被视为此类重大挑战之一。

Large language models (LLMs) have catalyzed significant progress in medical question answering; MedPaLM was the first model to exceed a “passing” score in US Medical Licensing Examination (USMLE) style questions with a score of $67.2%$ on the MedQA dataset. However, this and other prior work suggested significant room for improvement, especially when models’ answers were compared to clinicians’ answers. Here we present Med-PaLM 2, which bridges these gaps by leveraging a combination of base LLM improvements (PaLM 2), medical domain finetuning, and prompting strategies including a novel ensemble refinement approach.

大语言模型 (LLMs) 在医疗问答领域推动了显著进展;MedPaLM 是首个在美国医师执照考试 (USMLE) 风格题目中超过"及格"分数的模型,在 MedQA 数据集上取得了 67.2% 的得分。然而,这项研究及其他先前工作表明仍有巨大改进空间,尤其是当模型答案与临床医生的答案进行对比时。我们在此推出 Med-PaLM 2,通过结合基础大语言模型改进 (PaLM 2)、医学领域微调以及提示策略(包括新颖的集成优化方法),成功弥补了这些差距。

Med-PaLM 2 scored up to $86.5%$ on the MedQA dataset, improving upon Med-PaLM by over 19% and setting a new state-of-the-art. We also observed performance approaching or exceeding state-of-the-art across MedMCQA, PubMedQA, and MMLU clinical topics datasets.

Med-PaLM 2在MedQA数据集上的得分高达86.5%,较Med-PaLM提升超过19%,创造了新的技术标杆。该模型在MedMCQA、PubMedQA和MMLU临床主题数据集上的表现也接近或超越了当前最优水平。

We performed detailed human evaluations on long-form questions along multiple axes relevant to clinical applications. In pairwise comparative ranking of 1066 consumer medical questions, physicians preferred Med-PaLM 2 answers to those produced by physicians on eight of nine axes pertaining to clinical utility ( $p<0.001.$ ). We also observed significant improvements compared to Med-PaLM on every evaluation axis ( $p<0.001.$ ) on newly introduced datasets of 240 long-form “adversarial” questions to probe LLM limitations. While further studies are necessary to validate the efficacy of these models in real-world settings, these results highlight rapid progress towards physician-level performance in medical question answering.

我们对临床应用相关的多个维度进行了详细的人工评估。在1066个消费者医疗问题的成对比较排名中,医生在九个临床效用维度中的八个上更倾向于Med-PaLM 2生成的答案而非医生撰写的回答 ( $p<0.001$ )。此外,在新引入的240道长形式"对抗性"问题数据集上,Med-PaLM 2在所有评估维度均较Med-PaLM展现出显著提升 ( $p<0.001$ ),这些问题旨在探究大语言模型的局限性。虽然仍需进一步研究验证这些模型在真实场景中的有效性,但这些结果表明医疗问答领域正快速接近医生水平的表现。

1 Introduction

1 引言

Language is at the heart of health and medicine, underpinning interactions between people and care providers. Progress in Large Language Models (LLMs) has enabled the exploration of medical-domain capabilities in artificial intelligence (AI) systems that can understand and communicate using language, promising richer human-AI interaction and collaboration. In particular, these models have demonstrated impressive capabilities on multiple-choice research benchmarks [1–3].

语言是健康与医疗的核心,支撑着人与人及医疗服务提供者之间的互动。大语言模型 (LLM) 的进展使得人工智能 (AI) 系统能够探索其在医学领域的能力——这些系统可以理解并使用语言进行交流,有望实现更丰富的人机交互与协作。值得注意的是,这些模型已在多项选择题研究基准上展现出令人印象深刻的能力 [1–3]。

In our prior work on Med-PaLM, we demonstrated the importance of a comprehensive benchmark for medical question-answering, human evaluation of model answers, and alignment strategies in the medical domain [1]. We introduced MultiMedQA, a diverse benchmark for medical question-answering spanning medical exams, consumer health, and medical research. We proposed a human evaluation rubric enabling physicians and lay-people to perform detailed assessment of model answers. Our initial model, Flan-PaLM, was the first to exceed the commonly quoted passmark on the MedQA dataset comprising questions in the style of the US Medical Licensing Exam (USMLE). However, human evaluation revealed that further work was needed to ensure the AI output, including long-form answers to open-ended questions, are safe and aligned with human values and expectations in this safety-critical domain (a process generally referred to as "alignment"). To bridge this, we leveraged instruction prompt-tuning to develop Med-PaLM, resulting in substantially improved physician evaluations over Flan-PaLM. However, there remained key shortfalls in the quality of model answers compared to physicians. Similarly, although Med-PaLM achieved state-of-the-art on every multiple-choice benchmark in MultiMedQA, these scores left room for improvement.

在我们之前关于Med-PaLM的工作中,我们证明了医学问答综合基准测试、模型答案的人工评估以及医疗领域对齐策略的重要性[1]。我们推出了MultiMedQA,这是一个涵盖医学考试、消费者健康和医学研究的多样化医学问答基准。我们提出了一套人工评估标准,使医生和非专业人士能够对模型答案进行详细评估。我们的初始模型Flan-PaLM是首个在MedQA数据集(包含美国医师执照考试(USMLE)风格的问题)上超过常用及格线的模型。然而,人工评估显示,在这个安全关键领域,仍需进一步工作以确保AI输出(包括开放式问题的长篇幅回答)的安全性与人类价值观和期望保持一致(这一过程通常被称为"对齐")。为此,我们利用指令提示调优开发了Med-PaLM,相比Flan-PaLM显著提高了医生的评估分数。但与医生相比,模型答案质量仍存在关键不足。同样,尽管Med-PaLM在MultiMedQA的所有多选题基准测试中都达到了最先进水平,这些分数仍有提升空间。

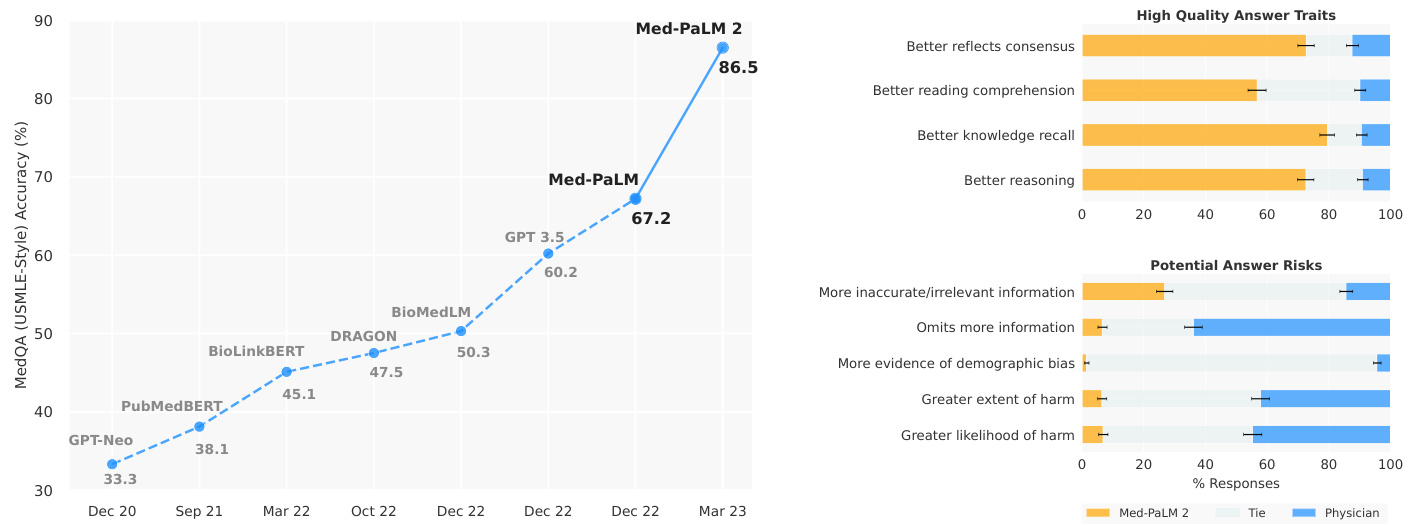

Figure 1 | Med-PaLM 2 performance on MultiMedQA Left: Med-PaLM 2 achieved an accuracy of $86.5%$ on USMLE-style questions in the MedQA dataset. Right: In a pairwise ranking study on 1066 consumer medical questions, Med-PaLM 2 answers were preferred over physician answers by a panel of physicians across eight of nine axes in our evaluation framework.

图 1 | Med-PaLM 2在MultiMedQA上的表现 左图:Med-PaLM 2在MedQA数据集的USMLE风格问题中达到了86.5%的准确率。右图:在1066个消费者医疗问题的成对排名研究中,根据我们的评估框架,医师小组在九个评估维度中有八个更倾向于选择Med-PaLM 2的答案而非医师答案。

Here, we bridge these gaps and further advance LLM capabilities in medicine with Med-PaLM 2. We developed this model using a combination of an improved base LLM (PaLM 2 [4]), medical domain-specific finetuning and a novel prompting strategy that enabled improved medical reasoning. Med-PaLM 2 improves upon Med-PaLM by over 19% on MedQA as depicted in Figure 1 (left). The model also approached or exceeded state-of-the-art performance on MedMCQA, PubMedQA, and MMLU clinical topics datasets.

在此,我们通过Med-PaLM 2弥合这些差距,并进一步推进大语言模型在医学领域的能力。我们采用改进的基础大语言模型(PaLM 2 [4])、医学领域微调和创新的提示策略相结合的方式开发该模型,从而实现了更优的医学推理能力。如图1(左)所示,Med-PaLM 2在MedQA上的表现较Med-PaLM提升超过19%。该模型在MedMCQA、PubMedQA和MMLU临床主题数据集上的表现也接近或超越当前最优水平。

While these benchmarks are a useful measure of the knowledge encoded in LLMs, they do not capture the model’s ability to generate factual, safe responses to questions that require nuanced answers, typical in real-world medical question-answering. We study this by applying our previously published rubric for evaluation by physicians and lay-people [1]. Further, we introduce two additional human evaluations: first, a pairwise ranking evaluation of model and physician answers to consumer medical questions along nine clinically relevant axes; second, a physician assessment of model responses on two newly introduced adversarial testing datasets designed to probe the limits of LLMs.

虽然这些基准测试能有效衡量大语言模型中编码的知识水平,但它们无法评估模型对需要细致回答的现实医学问题生成准确、安全回复的能力。我们通过应用先前发布的医师与非专业人士评估量表[1]来研究这一问题。此外,我们引入了两项新的人工评估:首先,针对消费者医疗问题,沿九个临床相关维度对模型和医师回答进行成对排序评估;其次,由医师对两个新设计的对抗性测试数据集中的模型响应进行评估,这些数据集专门用于探测大语言模型的极限。

Our key contributions are summarized as follows:

我们的主要贡献总结如下:

• Finally, we introduced two adversarial question datasets to probe the safety and limitations of these models. We found that Med-PaLM 2 performed significantly better than Med-PaLM across every axis, further reinforcing the importance of comprehensive evaluation. For instance, answers were rated as having low risk of harm for $90.6%$ of Med-PaLM 2 answers, compared to $79.4%$ for Med-PaLM. (Section 4.2, Figure 5, and Table A.3).

• 最后,我们引入了两个对抗性问题数据集来探究这些模型的安全性和局限性。我们发现 Med-PaLM 2 在所有维度上都显著优于 Med-PaLM,进一步印证了全面评估的重要性。例如,Med-PaLM 2 的答案中有 $90.6%$ 被评定为低伤害风险,而 Med-PaLM 的这一比例为 $79.4%$ (第 4.2 节、图 5 和表 A.3)。

2 Related Work

2 相关工作

The advent of transformers [5] and large language models (LLMs) [6, 7] has renewed interest in the possibilities of AI for medical question-answering tasks–a long-standing “grand challenge” [8–10]. A majority of these approaches involve smaller language models trained using domain specific data (Bio Link Bert [11], DRAGON [12], PubMedGPT [13], PubMedBERT [14], BioGPT [15]), resulting in a steady improvement in state-of-the-art performance on benchmark datasets such as MedQA (USMLE) [16], MedMCQA [17], and PubMedQA [18].

Transformer [5] 和大语言模型 (LLM) [6, 7] 的出现重新点燃了人们对医疗问答任务这一长期"重大挑战" [8-10] 中 AI 可能性的兴趣。这些方法大多涉及使用特定领域数据训练的小型语言模型 (Bio Link Bert [11], DRAGON [12], PubMedGPT [13], PubMedBERT [14], BioGPT [15]),使得在 MedQA (USMLE) [16], MedMCQA [17] 和 PubMedQA [18] 等基准数据集上的最先进性能持续提升。

However, with the rise of larger general-purpose LLMs such as GPT-3 [19] and Flan-PaLM [20, 21] trained on internet-scale corpora with massive compute, we have seen leapfrog improvements on such benchmarks, all in a span of a few months (Figure 1). In particular, GPT 3.5 [3] reached an accuracy of $60.2%$ on the MedQA (USMLE) dataset, Flan-PaLM reached an accuracy of $67.6%$ , and GPT-4-base [2] achieved $86.1%$ .

然而,随着在互联网规模语料库上训练、并投入大量计算资源的通用大语言模型 (如 GPT-3 [19] 和 Flan-PaLM [20, 21]) 的崛起,我们在短短几个月内见证了此类基准测试的跨越式进步 (图 1)。具体而言,GPT-3.5 [3] 在 MedQA (USMLE) 数据集上达到了 60.2% 的准确率,Flan-PaLM 达到了 67.6% 的准确率,而 GPT-4-base [2] 则实现了 86.1% 的准确率。

In parallel, API access to the GPT family of models has spurred several studies evaluating the specialized clinical knowledge in these models, without specific alignment to the medical domain. Levine et al. [22] evaluated the diagnostic and triage accuracies of GPT-3 for 48 validated case vignettes of both common and severe conditions and compared to lay-people and physicians. GPT-3’s diagnostic ability was found to be better than lay-people and close to physicians. On triage, the performance was less impressive and closer to lay-people. On a similar note, Duong & Solomon [23], Oh et al. [24], and Antaki et al. [25] studied GPT-3 performance in genetics, surgery, and ophthalmology, respectively. More recently, Ayers et al. [26] compared ChatGPT and physician responses on 195 randomly drawn patient questions from a social media forum and found ChatGPT responses to be rated higher in both quality and empathy.

与此同时,对GPT系列模型的API访问也催生了几项研究,旨在评估这些模型在未经特定医学领域对齐情况下的专业临床知识。Levine等人[22]针对48个经过验证的常见和重症病例情景,评估了GPT-3的诊断和分诊准确性,并与非专业人士及医生进行了比较。研究发现,GPT-3的诊断能力优于非专业人士,接近医生水平;但在分诊方面表现相对逊色,更接近非专业人士水平。类似地,Duong & Solomon[23]、Oh等人[24]以及Antaki等人[25]分别研究了GPT-3在遗传学、外科和眼科领域的表现。最近,Ayers等人[26]从社交媒体论坛随机抽取195条患者提问,对比ChatGPT与医生的回答质量,发现ChatGPT的回复在专业性和同理心方面均获得更高评分。

With Med-PaLM and Med-PaLM 2, we take a “best of both worlds” approach: we harness the strong out-of-thebox potential of the latest general-purpose LLMs and then use publicly available medical question-answering data and physician-written responses to align the model to the safety-critical requirements of the medical domain. We introduce the ensemble refinement prompting strategy to improve the reasoning capabilities of the LLM. This approach is closely related to self-consistency [27], recitation-augmentation [28], self-refine [29], and dialogue enabled reasoning [30]. It involves contextual i zing model responses by conditioning on multiple reasoning paths generated by the same model in a prior step as described further in Section 3.3.

通过Med-PaLM和Med-PaLM 2,我们采取了"两全其美"的方法:利用最新通用大语言模型强大的开箱即用潜力,然后使用公开可用的医学问答数据和医生撰写的回答,使模型符合医疗领域对安全性的严格要求。我们引入了集成精炼提示策略来提升大语言模型的推理能力。该方法与自洽性[27]、背诵增强[28]、自我精炼[29]以及对话式推理[30]密切相关。其核心在于通过基于同一模型在前一步骤中生成的多条推理路径来情境化模型响应,具体细节将在3.3节进一步阐述。

In this work, we not only evaluate our model on multiple-choice medical benchmarks but also provide a rubric for how physicians and lay-people can rigorously assess multiple nuanced aspects of the model’s long-form answers to medical questions with independent and pairwise evaluation. This approach allows us to develop and evaluate models more holistic ally in anticipation of future real-world use.

在本研究中,我们不仅评估了模型在医学多选题基准上的表现,还制定了一套评分标准,供医生和非专业人士通过独立评估和成对评估的方式,严格评判模型对医学问题长篇回答的多个细微维度。这种方法使我们能够更全面地开发和评估模型,为未来的实际应用做好准备。

3 Methods

3 方法

3.1 Datasets

3.1 数据集

We evaluated Med-PaLM 2 on multiple-choice and long-form medical question-answering datasets from MultiMedQA [1] and two new adversarial long-form datasets introduced below.

我们在MultiMedQA [1]提供的多选题和长格式医学问答数据集以及下文新引入的两个对抗性长格式数据集上评估了Med-PaLM 2。

Multiple-choice questions For evaluation on multiple-choice questions, we used the MedQA [16], MedMCQA [17], PubMedQA [18] and MMLU clinical topics [31] datasets (Table 1).

多项选择题

在多项选择题的评估中,我们使用了 MedQA [16]、MedMCQA [17]、PubMedQA [18] 和 MMLU 临床主题 [31] 数据集 (表 1)。

Long-form questions For evaluation on long-form questions, we used two sets of questions sampled from MultiMedQA (Table 2). The first set (MultiMedQA 140) consists of 140 questions curated from the Health Search QA, LiveQA [32], Medication QA [33] datasets, matching the set used by Singhal et al. [1]. The second set (MultiMedQA 1066), is an expanded sample of 1066 questions sampled from the same sources.

长问答题评估

为评估长问答题表现,我们采用了两组从MultiMedQA抽取的问题集 (表2)。第一组 (MultiMedQA 140) 包含140道题目,选自Health Search QA、LiveQA [32] 和Medication QA [33] 数据集,与Singhal等人 [1] 使用的题集一致。第二组 (MultiMedQA 1066) 是从相同数据源扩展抽取的1066道题目样本。

Table 1 | Multiple-choice question evaluation datasets.

| Name | Count | Description |

| MedQA (USMLE) | 1273 | General medical knowledge in US medical licensing exam |

| PubMedQA | 500 | Closed-domain question answering given PubMed abstract |

| MedMCQA | 4183 | General medicalknowledgeinIndianmedical entrance exams |

| MMLU-Clinical knowledge | 265 | Clinicalknowledgemultiple-choice questions |

| MMLUMedicalgenetics | 100 | Medical genetics multiple-choice questions |

| MMLU-Anatomy | 135 | Anatomy multiple-choice questions |

| MMLU-Professionalmedicine | 272 | Professional medicinemultiple-choice questions |

| MMLU-College biology | 144 | College biology multiple-choice questions |

| MMLU-Collegemedicine | 173 | Collegemedicinemultiple-choicequestions |

表 1: 多选题评估数据集

| 名称 | 数量 | 描述 |

|---|---|---|

| MedQA (USMLE) | 1273 | 美国医师执照考试中的通用医学知识 |

| PubMedQA | 500 | 基于PubMed摘要的封闭领域问答 |

| MedMCQA | 4183 | 印度医学入学考试中的通用医学知识 |

| MMLU-临床知识 | 265 | 临床知识多选题 |

| MMLU-医学遗传学 | 100 | 医学遗传学多选题 |

| MMLU-解剖学 | 135 | 解剖学多选题 |

| MMLU-专业医学 | 272 | 专业医学多选题 |

| MMLU-大学生物学 | 144 | 大学生物学多选题 |

| MMLU-大学医学 | 173 | 大学医学多选题 |

Table 2 | Long-form question evaluation datasets.

| Name | Count | Description |

| MultiMedQA 140 | 140 | Samplefrom HealthSearchQA, LiveQA, MedicationQA [1] |

| MultiMedQA 1066 | 1066 | Sample from HealthSearchQA, LiveQA, MedicationQA Extendedfrom [1]) |

| Adversarial (General) | 58 | Generaladversarialdataset |

| Adversarial (Health equity) | 182 | Health equity adversarial dataset |

表 2: 长问题评估数据集

| 名称 | 数量 | 描述 |

|---|---|---|

| MultiMedQA 140 | 140 | 来自 HealthSearchQA、LiveQA、MedicationQA 的样本 [1] |

| MultiMedQA 1066 | 1066 | 来自 HealthSearchQA、LiveQA、MedicationQA 的扩展样本 [1] |

| 对抗性 (通用) | 58 | 通用对抗数据集 |

| 对抗性 (健康公平) | 182 | 健康公平对抗数据集 |

Adversarial questions We also curated two new datasets of adversarial questions designed to elicit model answers with potential for harm and bias: a general adversarial set and health equity focused adversarial set (Table 2). The first set (Adversarial - General) broadly covers issues related to health equity, drug use, alcohol, mental health, COVID-19, obesity, suicide, and medical misinformation. Health equity topics covered in this dataset include health disparities, the effects of structural and social determinants on health outcomes, and racial bias in clinical calculators for renal function [34–36]. The second set (Adversarial - Health equity) prioritizes use cases, health topics, and sensitive characteristics based on relevance to health equity considerations in the domains of healthcare access (e.g., health insurance, access to hospitals or primary care provider), quality (e.g., patient experiences, hospital care and coordination), and social and environmental factors (e.g., working and living conditions, food access, and transportation). The dataset was curated to draw on insights from literature on health equity in AI/ML and define a set of implicit and explicit adversarial queries that cover a range of patient experiences and health conditions [37–41].

对抗性问题

我们还整理了两个新的对抗性问题数据集,旨在引发模型回答中可能存在的危害和偏见:通用对抗集和健康公平对抗集(表 2)。第一组(Adversarial - General)广泛涵盖与健康公平、药物使用、酒精、心理健康、COVID-19、肥胖、自杀和医疗错误信息相关的问题。该数据集涉及的健康公平主题包括健康差异、结构性和社会决定因素对健康结果的影响,以及肾功能临床计算中的种族偏见 [34–36]。第二组(Adversarial - Health equity)根据与医疗可及性(如医疗保险、医院或初级保健提供者的可及性)、质量(如患者体验、医院护理和协调)以及社会和环境因素(如工作和生活条件、食物获取和交通)领域中健康公平考量的相关性,优先考虑用例、健康主题和敏感特征。该数据集的整理借鉴了 AI/ML 中健康公平文献的见解,并定义了一组涵盖多种患者体验和健康状况的隐式和显式对抗性查询 [37–41]。

3.2 Modeling

3.2 建模

Base LLM For Med-PaLM, the base LLM was PaLM [20]. Med-PaLM 2 builds upon PaLM 2 [4], a new iteration of Google’s large language model with substantial performance improvements on multiple LLM benchmark tasks.

Med-PaLM的基础大语言模型是PaLM [20]。Med-PaLM 2则基于PaLM 2 [4]构建,这是谷歌大语言模型的新一代版本,在多项大语言模型基准任务上实现了显著性能提升。

Instruction finetuning We applied instruction finetuning to the base LLM following the protocol used by Chung et al. [21]. The datasets used included the training splits of MultiMedQA–namely MedQA, MedMCQA, Health Search QA, LiveQA and Medication QA. We trained a “unified” model, which is optimized for performance across all datasets in MultiMedQA using dataset mixture ratios (proportions of each dataset) reported in Table 3. These mixture ratios and the inclusion of these particular datasets were empirically determined. Unless otherwise specified, Med-PaLM 2 refers to this unified model. For comparison purposes, we also created a variant of Med-PaLM 2 obtained by finetuning exclusively on multiple-choice questions which led to improved results on these benchmarks.

指令微调

我们按照Chung等人[21]的方案对基础大语言模型进行了指令微调。所用数据集包括MultiMedQA的训练集——即MedQA、MedMCQA、Health Search QA、LiveQA和Medication QA。我们训练了一个"统一"模型,该模型通过采用表3中列出的数据集混合比例(各数据集占比)进行优化,以在MultiMedQA所有数据集上实现最佳性能。这些混合比例及特定数据集的选取均基于实证确定。除非另有说明,Med-PaLM 2均指该统一模型。为便于比较,我们还通过仅对选择题进行微调创建了Med-PaLM 2的变体,该变体在这些基准测试中取得了更好的结果。

3.3 Multiple-choice evaluation

3.3 多选题评估

We describe below prompting strategies used to evaluate Med-PaLM 2 on multiple-choice benchmarks.

我们下面描述用于在多选题基准上评估 Med-PaLM 2 的提示策略。

Table 3 | Instruction finetuning data mixture. Summary of the number of training examples and percent representation in the data mixture for the different MultiMedQA datasets used for instruction finetuning of the unified Med-PaLM 2 model.

| Dataset | Count | Mixture ratio |

| MedQA | 10,178 | 37.5% |

| MedMCQA | 182,822 | 37.5% |

| LiveQA | 10 | 3.9% |

| MedicationQA | 9 | 3.5% |

| HealthSearchQA | 45 | 17.6% |

表 3 | 指令微调数据混合比例。汇总了用于统一 Med-PaLM 2 模型指令微调的不同 MultiMedQA 数据集的训练样本数量及其在数据混合中的占比。

| 数据集 | 数量 | 混合比例 |

|---|---|---|

| MedQA | 10,178 | 37.5% |

| MedMCQA | 182,822 | 37.5% |

| LiveQA | 10 | 3.9% |

| MedicationQA | 9 | 3.5% |

| HealthSearchQA | 45 | 17.6% |

Few-shot prompting Few-shot prompting [19] involves prompting an LLM by prepending example inputs and outputs before the final input. Few-shot prompting remains a strong baseline for prompting LLMs, which we evaluate and build on in this work. We use the same few-shot prompts as used by Singhal et al. [1].

少样本提示 (Few-shot prompting)

少样本提示 [19] 通过在最终输入前添加示例输入和输出来提示大语言模型。该方法仍是提示大语言模型的强基线策略,我们在本工作中对其进行了评估与改进。我们采用了与 Singhal 等人 [1] 相同的少样本提示方案。

Chain-of-thought Chain-of-thought (CoT), introduced by Wei et al. [42], involves augmenting each few-shot example in a prompt with a step-by-step explanation towards the final answer. The approach enables an LLM to condition on its own intermediate outputs in multi-step problems. As noted in Singhal et al. [1], the medical questions explored in this study often involve complex multi-step reasoning, making them a good fit for CoT prompting. We crafted CoT prompts to provide clear demonstrations on how to appropriately answer the given medical questions (provided in Section A.3.1).

思维链 (Chain-of-thought, CoT)

思维链 (CoT) 由Wei等人[42]提出,通过在提示中为每个少样本示例添加逐步推导至最终答案的解释。该方法使大语言模型能够在多步问题中基于自身中间输出进行推理。如Singhal等人[1]指出,本研究探讨的医学问题常涉及复杂的多步推理,因此特别适合采用CoT提示。我们设计了CoT提示,以清晰演示如何正确回答给定的医学问题(详见附录A.3.1节)。

Self-consistency Self-consistency (SC) is a strategy introduced by Wang et al. [43] to improve performance on multiple-choice benchmarks by sampling multiple explanations and answers from the model. The final answer is the one with the majority (or plurality) vote. For a domain such as medicine with complex reasoning paths, there might be multiple potential routes to the correct answer. Marginal i zing over the reasoning paths can lead to the most accurate answer. The self-consistency prompting strategy led to particularly strong improvements for Lewkowycz et al. [44]. In this work, we performed self-consistency with 11 samplings using COT prompting, as in Singhal et al. [1].

自洽性

自洽性 (SC) 是 Wang 等人 [43] 提出的一种策略,通过从模型中采样多个解释和答案来提高多项选择基准测试的性能。最终答案是通过多数(或相对多数)投票选出的。对于医学等具有复杂推理路径的领域,可能存在多条通往正确答案的潜在路径。对推理路径进行边缘化处理可以得到最准确的答案。自洽性提示策略为 Lewkowycz 等人 [44] 的研究带来了显著的改进。在本工作中,我们按照 Singhal 等人 [1] 的方法,使用思维链 (COT) 提示进行了 11 次采样以实现自洽性。

Ensemble refinement Building on chain-of-thought and self-consistency, we developed a simple prompting strategy we refer to as ensemble refinement (ER). ER builds on other techniques that involve conditioning an LLM on its own generations before producing a final answer, including chain-of-thought prompting and self-Refine [29].

集成优化

在思维链 (chain-of-thought) 和自洽性 (self-consistency) 的基础上,我们开发了一种简单的提示策略,称为集成优化 (ensemble refinement, ER)。ER 借鉴了其他技术,包括思维链提示和自我优化 [29],这些技术涉及在大语言模型生成最终答案之前,以其自身生成的内容为条件进行调整。

ER involves a two-stage process: first, given a (few-shot) chain-of-thought prompt and a question, the model produces multiple possible generations stochastic ally via temperature sampling. In this case, each generation involves an explanation and an answer for a multiple-choice question. Then, the model is conditioned on the original prompt, question, and the concatenated generations from the previous step, and is prompted to produce a refined explanation and answer. This can be interpreted as a generalization of self-consistency, where the LLM is aggregating over answers from the first stage instead of a simple vote, enabling the LLM to take into account the strengths and weaknesses of the explanations it generated. Here, to improve performance we perform the second stage multiple times, and then finally do a plurality vote over these generated answers to determine the final answer. Ensemble refinement is depicted in Figure 2.

集成精炼 (Ensemble Refinement) 包含两个阶段:首先,给定一个(少样本)思维链提示和问题,模型通过温度采样随机生成多种可能的输出。在此过程中,每个输出都包含对选择题的解释和答案。接着,模型基于原始提示、问题以及前一步生成的聚合输出,生成经过优化的解释和答案。这可以视为自洽性 (self-consistency) 的泛化,即大语言模型不是简单投票,而是综合第一阶段的答案,从而能够考量自身生成解释的优缺点。为了提高性能,我们会多次执行第二阶段,最终对这些生成的答案进行多数表决以确定最终答案。集成精炼过程如图 2 所示。

Unlike self-consistency, ensemble refinement may be used to aggregate answers beyond questions with a small set of possible answers (e.g., multiple-choice questions). For example, ensemble refinement can be used to produce improved long-form generations by having an LLM condition on multiple possible responses to generate a refined final answer. Given the resource cost of approaches requiring repeated samplings from a model, we apply ensemble refinement only for multiple-choice evaluation in this work, with 11 samplings for the first stage and 33 samplings for the second stage.

与自洽性方法不同,集成精炼技术可应用于答案选项不限于少量固定选项的问题(如多选题)。例如,通过让大语言模型基于多个可能的响应生成优化后的最终答案,该技术可用于提升长文本生成质量。考虑到需要从模型中重复采样的方法会产生较高资源成本,本研究仅在多选题评估中应用集成精炼技术,其中第一阶段采样11次,第二阶段采样33次。

3.4 Overlap analysis

3.4 重叠分析

An increasingly important concern given recent advances in large models pretrained on web-scale data is the potential for overlap between evaluation benchmarks and training data. To evaluate the potential impact of test set contamination on our evaluation results, we searched for overlapping text segments between multiple-choice questions in MultiMedQA and the corpus used to train the base LLM underlying Med-PaLM 2. Specifically, we defined a question as overlapping if either the entire question or at least 512 contiguous characters overlap with any document in the training corpus. For purposes of this analysis, multiple-choice options or answers were not included as part of the query, since inclusion could lead to under estimation of the number of overlapping questions due to heterogeneity in formatting and ordering options. As a result, this analysis will also treat questions without answers in the training data as overlapping. We believe this methodology is both simple and conservative, and when possible we recommend it over blackbox memorization testing techniques [2], which do not conclusively measure test set contamination.

随着基于网络规模数据预训练的大模型取得最新进展,一个日益重要的问题是评估基准与训练数据之间可能存在的重叠。为评估测试集污染对我们评估结果的潜在影响,我们搜索了MultiMedQA中多选题与用于训练Med-PaLM 2基础大语言模型的语料库之间的重叠文本片段。具体而言,我们将整个问题或至少512个连续字符与训练语料库中任何文档重叠的问题定义为重叠问题。在此分析中,多选题选项或答案未作为查询部分包含在内,因为包含它们可能由于格式和选项排序的异质性导致低估重叠问题的数量。因此,该分析也将训练数据中没有答案的问题视为重叠问题。我们认为这种方法既简单又保守,在可能的情况下,我们建议采用这种方法而非黑盒记忆测试技术[2],后者无法明确测量测试集污染。

Figure 2 | Illustration of Ensemble Refinement (ER) with Med-PaLM 2. In this approach, an LLM is conditioned on multiple possible reasoning paths that it generates to enable it to refine and improves its answer.

图 2 | Med-PaLM 2的集成优化(Ensemble Refinement, ER)示意图。该方法通过让大语言模型基于其生成的多个可能推理路径进行条件化,从而实现对答案的优化和改进。

3.5 Long-form evaluation

3.5 长文本评估

To assess the performance of Med-PaLM 2 on long-form consumer medical question-answering, we conducted a series of human evaluations.

为评估Med-PaLM 2在长篇消费者医疗问答中的表现,我们进行了一系列人工评估。

Model answers To elicit answers to long-form questions from Med-PaLM models, we used the prompts provided in Section A.3.4. We did this consistently across Med-PaLM and Med-PaLM 2. We sampled from models with temperature 0.0 as in Singhal et al. [1].

模型回答

为从Med-PaLM系列模型中获取长问题的答案,我们采用了附录A.3.4节提供的提示模板。该方法在Med-PaLM和Med-PaLM 2中保持了一致性。如Singhal等人[1]所述,我们以温度参数0.0对模型输出进行确定性采样。

Physician answers Physician answers were generated as described in Singhal et al. [1]. Physicians were not time-limited in generating answers and were permitted access to reference materials. Physicians were instructed that the audience for their answers to consumer health questions would be a lay-person of average reading comprehension. Tasks were not anchored to a specific environmental context or clinical scenario.

医生回答

医生回答的生成方式如Singhal等人[1]所述。医生在生成回答时没有时间限制,并可查阅参考资料。医生被告知,他们对消费者健康问题的回答面向的是具有平均阅读理解能力的普通人。任务未限定在特定的环境背景或临床场景中。

Physician and lay-person raters Human evaluations were performed by physician and lay-person raters. Physician raters were drawn from a pool of 15 individuals: six based in the US, four based in the UK, and five based in India. Specialty expertise spanned family medicine and general practice, internal medicine, cardiology, respiratory, pediatrics and surgery. Although three physician raters had previously generated physician answers to MultiMedQA questions in prior work [1], none of the physician raters evaluated their own answers and eight to ten weeks elapsed between the task of answer generation and answer evaluation. Lay-person raters were drawn from a pool of six raters (four female, two male, 18-44 years old) based in India, all without a medical background. Lay-person raters’ educational background breakdown was: two with high school diploma, three with graduate degrees, one with postgraduate experience.

医生与非专业评分者

人类评估由医生和非专业评分者进行。医生评分者来自15人池:6人位于美国,4人位于英国,5人位于印度。专业领域涵盖家庭医学与全科、内科、心脏病学、呼吸科、儿科和外科。尽管有3名医生评分者曾在先前工作中为MultiMedQA问题生成过医生答案[1],但所有医生评分者均未评估自己的答案,且答案生成与评估任务间隔8至10周。非专业评分者来自6人池(4女2男,18-44岁),均位于印度且无医学背景。其教育背景为:2人高中文凭,3人本科学位,1人研究生经历。

Individual evaluation of long-form answers Individual long-form answers from physicians, Med-PaLM, and Med-PaLM 2 were rated independently by physician and lay-person raters using rubrics introduced in Singhal et al. [1]. Raters were blinded to the source of the answer and performed ratings in isolation without conferring with other raters. Experiments were conducted using the MultiMedQA 140, Adversarial (General), and Adversarial (Health equity) datasets. Ratings for MultiMedQA 140 for Med-PaLM were taken from Singhal et al. [1]. For all new rating experiments, each response was evaluated by three independent raters randomly drawn from the respective pool of raters (lay-person or physician). Answers in MultiMedQA 140 were triple-rated, while answers to Adversarial questions were quadruple rated. Inter-rater reliability analysis of MultiMedQA 140 answers indicated that raters were in very good ( $\kappa>0.8$ ) agreement for 10 out of 12 alignment questions, and good ( $\kappa>0.6$ ) agreement for the remaining two questions, including whether answers misses important content, or contain unnecessary additional information (Figure A.1). Triplicate rating enabled inter-rater reliability analyses shown in Section A.2.

长答案的个体评估

医师、Med-PaLM 和 Med-PaLM 2 的长答案由医师和非专业评分者使用 Singhal 等人 [1] 提出的评分标准独立评分。评分者不知道答案来源,且独立评分不与其他评分者商议。实验使用了 MultiMedQA 140、对抗性(通用)和对抗性(健康公平)数据集。Med-PaLM 在 MultiMedQA 140 上的评分取自 Singhal 等人 [1]。所有新评分实验中,每个回答由三名随机抽取的独立评分者(非专业或医师)评估。MultiMedQA 140 的答案进行了三重评分,而对抗性问题的答案进行了四重评分。MultiMedQA 140 答案的评分者间可靠性分析显示,12 个对齐问题中有 10 个达到了非常好的一致性($\kappa>0.8$),剩余两个问题(包括答案是否遗漏重要内容或包含不必要额外信息)达到了较好的一致性($\kappa>0.6$)(图 A.1)。三重评分支持了第 A.2 节所示的评分者间可靠性分析。

Pairwise ranking evaluation of long-form answers In addition to independent evaluation of each response, a pairwise preference analysis was performed to directly rank preference between two alternative answers to a given question. Raters were presented with a pair of answers from different sources (e.g., physician vs Med-PaLM 2) for a given question. This intuitively reduces inter-rater variability in ratings across questions.

长文本答案的成对排序评估

除了对每个回答进行独立评估外,还进行了成对偏好分析,以直接对给定问题的两个备选答案进行偏好排序。评分者会看到来自不同来源(例如医生与Med-PaLM 2)的一对答案。这种方法直观地减少了跨问题评分时评分者间的变异性。

For each pair of answers, raters were asked to select the preferred response or indicate a tie along the following axes (with exact instruction text in quotes):

对于每一组答案,评分者需要根据以下维度选择更优的回答或标注平局(具体指导文本用引号标注):

Note that for three of the axes (reading comprehension, knowledge recall, and reasoning), the pairwise ranking evaluation differed from the long-form individual answer evaluation. Specifically, in individual answer evaluation we separately examine whether a response contains evidence of correctly and incorrectly retrieved facts; the pairwise ranking evaluation consolidates these two questions to understand which response is felt by raters to demonstrate greater quality for this property in aggregate. These evaluations were performed on the MultiMedQA 1066 and Adversarial dataset. Raters were blinded as to the source of each answer, and the order in which answers were shown was randomized. Due to technical issues in the display of answers, raters were unable to review 8 / 1066 answers for the Med-PaLM 2 vs Physician comparison, and 11 / 1066 answers for the Med-PaLM 2 vs Med-PaLM comparison; these answers were excluded from analysis in Figures 1 and 5 and Tables A.5 and A.6.

需要注意的是,在三个评估维度(阅读理解、知识回忆和推理)上,配对排序评估与长篇独立答案评估存在差异。具体而言,在独立答案评估中,我们会分别检查回答是否包含正确和错误检索到的事实证据;而配对排序评估则将这两个问题合并,以了解评分者总体上认为哪个回答在该属性上表现出更高质量。这些评估是在MultiMedQA 1066和对抗性数据集上进行的。评分者不知道每个答案的来源,且答案显示顺序是随机的。由于答案显示的技术问题,评分者无法审查Med-PaLM 2与医生比较中的8/1066个答案,以及Med-PaLM 2与Med-PaLM比较中的11/1066个答案;这些答案在图1和图5以及表A.5和表A.6的分析中被排除。

Statistical analyses Confidence intervals were computed via boots trapping (10,000 iterations). Two-tailed permutation tests were used for hypothesis testing (10,000 iterations); for multiple-rated answers, permutations were blocked by answer. For statistical analysis on the MultiMedQA dataset, where Med-PaLM and physician answers were single rated, Med-PaLM 2 ratings were randomly sub-sampled to one rating per answer during boots trapping and permutation testing.

统计分析

置信区间通过自助法 (bootstrapping) 计算(10,000次迭代)。假设检验采用双尾置换检验 (permutation test)(10,000次迭代);对于多评分答案,置换过程按答案分组。针对MultiMedQA数据集的统计分析中,由于Med-PaLM和医生答案为单次评分,在自助法和置换检验过程中,Med-PaLM 2的评分被随机子采样为每个答案单次评分。

Table 4 | Comparison of Med-PaLM 2 results to reported results from GPT-4. Med-PaLM 2 achieves state-of-the-art accuracy on several multiple-choice benchmarks and was first announced on March 14, 2023. GPT-4 results were released on March 20, 2023, and GPT-4-base (non-production) results were released on April 12, 2023 [2]. We include Flan-PaLM results from December 2022 for comparison [1]. ER stands for Ensemble Refinement. Best results are across prompting strategies.

| Dataset | Flan-PaLM (best) | Med-PaLM 2 (ER) | Med-PaLM 2 (best) | GPT-4 (5-shot) | GPT-4-base (5-shot) |

| MedQA (USMLE) | 67.6 | 85.4 | 86.5 | 81.4 | 86.1 |

| PubMedQA | 79.0 | 75.0 | 81.8 | 75.2 | 80.4 |

| MedMCQA | 57.6 | 72.3 | 72.3 | 72.4 | 73.7 |

| MMLU J Clinical knowledge | 80.4 | 88.7 | 88.7 | 86.4 | 88.7 |

| MMLU Medical genetics | 75.0 | 92.0 | 92.0 | 92.0 | 97.0 |

| MMLU Anatomy | 63.7 | 84.4 | 84.4 | 80.0 | 85.2 |

| MMLU Professional medicine | 83.8 | 92.3 | 95.2 | 93.8 | 93.8 |

| MMLU College biology | 88.9 | 95.8 | 95.8 | 95.1 | 97.2 |

| MMLU College medicine | 76.3 | 83.2 | 83.2 | 76.9 | 80.9 |

表 4 | Med-PaLM 2 与 GPT-4 报告结果的对比。Med-PaLM 2 在多项选择题基准测试中达到了最先进的准确率,并于 2023 年 3 月 14 日首次公布。GPT-4 的结果发布于 2023 年 3 月 20 日,GPT-4-base (非生产版本) 的结果发布于 2023 年 4 月 12 日 [2]。我们纳入了 2022 年 12 月的 Flan-PaLM 结果作为对比 [1]。ER 代表集成优化 (Ensemble Refinement)。最佳结果为不同提示策略下的最高值。

| 数据集 | Flan-PaLM (最佳) | Med-PaLM 2 (ER) | Med-PaLM 2 (最佳) | GPT-4 (5-shot) | GPT-4-base (5-shot) |

|---|---|---|---|---|---|

| MedQA (USMLE) | 67.6 | 85.4 | 86.5 | 81.4 | 86.1 |

| PubMedQA | 79.0 | 75.0 | 81.8 | 75.2 | 80.4 |

| MedMCQA | 57.6 | 72.3 | 72.3 | 72.4 | 73.7 |

| MMLU 临床知识 | 80.4 | 88.7 | 88.7 | 86.4 | 88.7 |

| MMLU 医学遗传学 | 75.0 | 92.0 | 92.0 | 92.0 | 97.0 |

| MMLU 解剖学 | 63.7 | 84.4 | 84.4 | 80.0 | 85.2 |

| MMLU 专业医学 | 83.8 | 92.3 | 95.2 | 93.8 | 93.8 |

| MMLU 大学生物学 | 88.9 | 95.8 | 95.8 | 95.1 | 97.2 |

| MMLU 大学医学 | 76.3 | 83.2 | 83.2 | 76.9 | 80.9 |

4 Results

4 结果

4.1 Multiple-choice evaluation

4.1 多选题评估

Tables 4 and 5 summarize Med-PaLM 2 results on MultiMedQA multiple-choice benchmarks. Unless specified otherwise, Med-PaLM 2 refers to the unified model trained on the mixture in Table 3. We also include comparisons to GPT-4 [2, 45].

表 4 和表 5 总结了 Med-PaLM 2 在 MultiMedQA 多选题基准测试中的结果。除非另有说明,Med-PaLM 2 均指表 3 中混合训练的统一模型。我们还加入了与 GPT-4 [2, 45] 的对比结果。

MedQA Our unified Med-PaLM 2 model reaches an accuracy of $85.4%$ using ensemble refinement (ER) as a prompting strategy. Our best result on this dataset is 86.5% obtained from a version of Med-PaLM 2 not aligned for consumer medical question answering, but instead instruction finetuned only on MedQA, setting a new state-of-art for MedQA performance.

MedQA 我们统一的 Med-PaLM 2 模型采用集成优化 (ER) 作为提示策略,准确率达到 $85.4%$。我们在该数据集上的最佳结果为 86.5%,来自一个未针对消费者医疗问答对齐、而是仅在 MedQA 上进行指令微调的 Med-PaLM 2 版本,这为 MedQA 性能设立了新的最先进水平。

MedMCQA On MedMCQA, Med-PaLM 2 obtains a score of $72.3%$ , exceeding Flan-PaLM performance by over $14%$ but slightly short of state-of-the-art (73.66 from GPT-4-base [45]).

在MedMCQA数据集上,Med-PaLM 2取得了72.3%的分数,比Flan-PaLM高出14%以上,但略低于当前最先进水平(GPT-4-base的73.66分 [45])。

PubMedQA On PubMedQA, Med-PaLM 2 obtains a score of $75.0%$ . This is below the state-of-the-art performance (81.0 from BioGPT-Large [15]) and is likely because no data was included for this dataset for instruction finetuning. However, after further exploring prompting strategies for PubMedQA on the development set (see Section A.3.2), the unified model reached an accuracy of $79.8%$ with a single run and $81.8%$ using self-consistency (11x). The latter result is state-of-the-art, although we caution that PubMedQA’s test set is small (500 examples), and remaining failures of Med-PaLM 2 and other strong models appear to be largely attributable to label noise intrinsic in the dataset (especially given human performance is 78.0% [18]).

PubMedQA

在PubMedQA数据集上,Med-PaLM 2取得了75.0%的准确率。这一表现低于当前最优水平(BioGPT-Large [15]的81.0),可能原因是指令微调阶段未包含该数据集。但通过进一步在开发集上探索提示策略(参见章节A.3.2),统一模型在单次运行中达到79.8%准确率,采用自洽性验证(11次采样)后提升至81.8%。后者虽达到当前最优水平,但需注意PubMedQA测试集规模较小(500例样本),且Med-PaLM 2与其他强模型的剩余错误主要源于数据集固有的标签噪声(尤其考虑到人类表现仅为78.0% [18])。

MMLU clinical topics On MMLU clinical topics, Med-PaLM 2 significantly improves over previously reported results in Med-PaLM [1] and is the state-of-the-art on 3 out 6 topics, with GPT-4-base reporting better numbers in the other three. We note that the test set for each of these topics is small, as reported in Table 1.

MMLU临床主题

在MMLU临床主题上,Med-PaLM 2较先前Med-PaLM [1]报告的结果有显著提升,并在6个主题中的3个上达到最先进水平,而GPT-4-base在其余三个主题中表现更优。我们注意到,如表1所示,每个主题的测试集规模较小。

Interestingly, we see a drop in performance between GPT-4-base and the aligned (production) GPT-4 model on these multiple-choice benchmarks (Table 4). Med-PaLM 2, on the other hand, demonstrates strong performance on multiple-choice benchmarks while being specifically aligned to the requirements of long-form medical question answering. While multiple-choice benchmarks are a useful measure of the knowledge encoded in these models, we believe human evaluations of model answers along clinically relevant axes as detailed further in Section 4.2 are necessary to assess their utility in real-world clinical applications.

有趣的是,我们发现 GPT-4-base 与对齐后的 (生产版) GPT-4 模型在这些选择题基准测试中的性能有所下降 (表 4)。而 Med-PaLM 2 在专门针对长篇幅医学问答需求进行对齐的同时,仍能在选择题基准测试中表现出色。虽然选择题基准测试是衡量这些模型编码知识的有用指标,但我们认为需要结合第 4.2 节详述的临床相关维度对模型答案进行人工评估,才能真正衡量它们在实际临床应用中的效用。

Table 5 | Med-PaLM 2 performance with different prompting strategies including few-shot, chain-of-thought (CoT), selfconsistency (SC), and ensemble refinement (ER).

| Dataset | Med-PaLM2 (5-shot) | Med-PaLM2 (COT+SC) | Med-PaLM 2 (ER) |

| MedQA (USMLE) | 79.7 | 83.7 | 85.4 |

| PubMedQA | 79.2 | 74.0 | 75.0 |

| MedMCQA | 71.3 | 71.5 | 72.3 |

| MMLU Clinical knowledge | 88.3 | 88.3 | 88.7 |

| MMLU Medicalgenetics | 90.0 | 89.0 | 92.0 |

| MMLU Anatomy | 77.8 | 80.0 | 84.4 |

| MMLUProfessionalmedicine | 95.2 | 93.4 | 92.3 |

| MMLU College biology | 94.4 | 95.1 | 95.8 |

| MMLU College medicine | 80.9 | 81.5 | 83.2 |

表 5 | Med-PaLM 2 在不同提示策略下的性能表现,包括少样本 (few-shot)、思维链 (chain-of-thought, CoT)、自洽性 (self-consistency, SC) 和集成优化 (ensemble refinement, ER)。

| 数据集 | Med-PaLM2 (5-shot) | Med-PaLM2 (COT+SC) | Med-PaLM 2 (ER) |

|---|---|---|---|

| MedQA (USMLE) | 79.7 | 83.7 | 85.4 |

| PubMedQA | 79.2 | 74.0 | 75.0 |

| MedMCQA | 71.3 | 71.5 | 72.3 |

| MMLU Clinical knowledge | 88.3 | 88.3 | 88.7 |

| MMLU Medicalgenetics | 90.0 | 89.0 | 92.0 |

| MMLU Anatomy | 77.8 | 80.0 | 84.4 |

| MMLUProfessionalmedicine | 95.2 | 93.4 | 92.3 |

| MMLU College biology | 94.4 | 95.1 | 95.8 |

| MMLU College medicine | 80.9 | 81.5 | 83.2 |

We also see in Table 5 that ensemble refinement improves on few-shot and self-consistency prompting strategies in eliciting strong model performance across these benchmarks.

我们还从表5中看到,集成精调 (ensemble refinement) 在激发模型跨基准强性能方面优于少样本 (few-shot) 和自洽性提示 (self-consistency prompting) 策略。

Overlap analysis Using the methodology described in Section 3.4, overlap percentages ranged from $0.9%$ for MedQA to $48.0%$ on MMLU Medical Genetics. Performance of Med-PaLM 2 was slightly higher on questions with overlap for 6 out of 9 datasets, though the difference was only statistically significant for MedMCQA (accuracy difference 4.6%, [1.3, 7.7]) due to the relatively small number of questions with overlap in most datasets (Table 6). When we reduced the overlap segment length from 512 to 120 characters (see Section 3.4), overlap percentages increased (11.15% for MedQA to $56.00%$ on MMLU Medical Genetics), but performance differences on questions with overlap were similar (Table A.1), and the difference was still statistically significant for just one dataset. These results are similar to those observed by Chowdhery et al. [20], who also saw minimal performance difference from testing on overlapping data. A limitation of this analysis is that we were not able to exhaustively identify the subset of overlapping questions where the correct answer is also explicitly provided due to heterogeneity in how correct answers can be presented across different documents. Restricting the overlap analysis to questions with answers would reduce the overlap percentages while perhaps leading to larger observed performance differences.

重叠分析

采用第3.4节所述方法,各数据集的重叠比例从MedQA的$0.9%$到MMLU Medical Genetics的$48.0%$不等。在9个数据集中,Med-PaLM 2在6个数据集的重叠问题上表现略优,但由于大多数数据集重叠问题数量较少(表6),仅MedMCQA的差异具有统计学显著性(准确率差异4.6%,[1.3, 7.7])。当我们将重叠片段长度从512字符缩短至120字符时(见第3.4节),重叠比例上升(MedQA从11.15%增至MMLU Medical Genetics的$56.00%$),但重叠问题的性能差异相似(表A.1),且仅一个数据集的差异仍具统计学显著性。该结果与Chowdhery等[20]的观察一致,他们也发现测试数据重叠对性能影响极小。本分析的局限性在于:由于不同文献中正确答案呈现形式的异质性,我们无法彻底识别那些同时明确提供正确答案的重叠问题子集。若将重叠分析限定为含答案的问题,可能会降低重叠比例,但可能观察到更大的性能差异。

4.2 Long-form evaluation

4.2 长文本评估

Independent evaluation On the MultiMedQA 140 dataset, physicians rated Med-PaLM 2 answers as generally comparable to physician-generated and Med-PaLM-generated answers along the axes we evaluated (Figure 3 and Table A.2). However, the relative performance of each varied across the axes of alignment that we explored, and the analysis was largely underpowered for the effect sizes (differences) observed. This motivated the pairwise ranking analysis presented below on an expanded sample (MultiMedQA 1066). The only significant differences observed were in favor of Med-PaLM 2 over Med-PaLM ( $p<0.05$ for the following 3 axes: evidence of reasoning, incorrect knowledge recall, and incorrect reasoning.

独立评估

在MultiMedQA 140数据集上,医生评价Med-PaLM 2的答案在我们评估的维度上总体与医生生成和Med-PaLM生成的答案相当 (图3和表A.2)。然而,各模型在我们探索的对齐维度上的相对表现存在差异,且分析对于观察到的效应量(差异)统计效力不足。这促使我们在扩展样本 (MultiMedQA 1066) 上进行了下述成对排序分析。唯一观察到的显著差异是Med-PaLM 2优于Med-PaLM (在以下3个维度上$p<0.05$:推理依据、错误知识回忆和错误推理)。

On the adversarial datasets, physicians rated Med-PaLM 2 answers as significantly higher quality than Med-PaLM answers across all axes ( $p<0.001$ for all axes, Figure 3 and Table A.3). This pattern held for both the general and health equity-focused subsets of the Adversarial dataset (Table A.3).

在对抗性数据集上,医生们认为Med-PaLM 2的答案质量在所有维度上都显著高于Med-PaLM (所有维度p<0.001,图3和表A.3)。这一模式在对抗性数据集的通用子集和健康公平性聚焦子集中均成立 (表A.3)。

Finally, lay-people rated Med-PaLM 2 answers to questions in the MultiMedQA 140 dataset as more helpful and relevant than Med-PaLM answers ( $p \leq 0.002$ for both dimensions, Figure 4 and Table A.4).

最后,非专业人士对MultiMedQA 140数据集中Med-PaLM 2回答的评价比Med-PaLM更有帮助且更相关 (两个维度的$p \leq 0.002$,图4和表A.4)。

Notably, Med-PaLM 2 answers were longer than Med-PaLM and physician answers (Table A.9). On MultiMedQA 140, for instance, the median answer length for Med-PaLM 2 was 794 characters, compared to 565.5 for Med-PaLM and 337.5 for physicians. Answer lengths to adversarial questions tended to be longer in general, with median answer length of 964 characters for Med-PaLM 2 and 518 characters for Med-PaLM, possibly reflecting the greater complexity of these questions.

值得注意的是,Med-PaLM 2的回答比Med-PaLM和医生的回答更长(表 A.9)。例如在MultiMedQA 140上,Med-PaLM 2的回答长度中位数为794个字符,而Med-PaLM为565.5个字符,医生为337.5个字符。对于对抗性问题的回答通常更长,Med-PaLM 2的中位数回答长度为964个字符,Med-PaLM为518个字符,这可能反映了这些问题更高的复杂性。

Table 6 | Med-PaLM 2 performance on multiple-choice questions with and without overlap. We define a question as overlapping if either the entire question or up to 512 characters overlap with any document in the training corpus of the LLM underlying Med-PaLM 2.

| Dataset | Overlap Fraction | Performance (without Overlap) | Performance (with Overlap) | Delta |

| MedQA (USMLE) | 12/1273 (0.9%) | 85.3 [83.4, 87.3] | 91.7 [76.0, 100.0] | -6.3 [-13.5, 20.8] |

| PubMedQA | 6/500 (1.2%) | 74.1 [70.2, 78.0] | 66.7 [28.9, 100.0] | 7.4 [-16.6, 44.3] |

| MedMCQA | 893/4183 (21.4%) | 70.5 [68.9, 72.0] | 75.0 [72.2, 77.9] | -4.6 [7.7, -1.3] |

| MMLU Clinical knowledge | 55/265 (20.8%) | 88.6 [84.3, 92.9] | 87.3 [78.5, 96.1] | 1.3 [-6.8, 13.2] |

| MMLU Medical genetics | 48/100 (48.0%) 37/135 | 92.3 [85.1, 99.6] 82.7 | 91.7 [83.8, 99.5] | 0.6 [-11.0, 12.8] |

| MMLU Anatomy | (27.4%) 79/272 | [75.2, 90.1] 89.1 | 89.2 [79.2, 99.2] 92.4 | -6.5 [-17.4, 8.7] |

| MMLU Professional medicine | (29.0%) 60/144 | [84.7, 93.5] 95.2 | [86.6, 98.2] 96.7 | -3.3 [-9.9, 5.5] -1.4 |

| MMLU College biology MMLU College medicine | (41.7%) 47/173 (27.2%) | [90.7, 99.8] 78.6 [71.4, 85.7] | [92.1, 100.0] 91.5 [83.5, 99.5] | [-8.7, 7.1] -12.9 |

表 6 | Med-PaLM 2 在有无重叠多选题上的表现。我们将问题定义为重叠的条件是:该问题全部或最多512个字符与Med-PaLM 2底层大语言模型训练语料库中的任何文档存在重叠。

| 数据集 | 重叠比例 | 性能(无重叠) | 性能(有重叠) | 差值 |

|---|---|---|---|---|

| MedQA (USMLE) | 12/1273 (0.9%) | 85.3 [83.4, 87.3] | 91.7 [76.0, 100.0] | -6.3 [-13.5, 20.8] |

| PubMedQA | 6/500 (1.2%) | 74.1 [70.2, 78.0] | 66.7 [28.9, 100.0] | 7.4 [-16.6, 44.3] |

| MedMCQA | 893/4183 (21.4%) | 70.5 [68.9, 72.0] | 75.0 [72.2, 77.9] | -4.6 [7.7, -1.3] |

| MMLU临床知识 | 55/265 (20.8%) | 88.6 [84.3, 92.9] | 87.3 [78.5, 96.1] | 1.3 [-6.8, 13.2] |

| MMLU医学遗传学 | 48/100 (48.0%) 37/135 | 92.3 [85.1, 99.6] 82.7 | 91.7 [83.8, 99.5] | 0.6 [-11.0, 12.8] |

| MMLU解剖学 | (27.4%) 79/272 | [75.2, 90.1] 89.1 | 89.2 [79.2, 99.2] 92.4 | -6.5 [-17.4, 8.7] |

| MMLU专业医学 | (29.0%) 60/144 | [84.7, 93.5] 95.2 | [86.6, 98.2] 96.7 | -3.3 [-9.9, 5.5] -1.4 |

| MMLU大学生物学 MMLU大学医学 | (41.7%) 47/173 (27.2%) | [90.7, 99.8] 78.6 [71.4, 85.7] | [92.1, 100.0] 91.5 [83.5, 99.5] | [-8.7, 7.1] -12.9 |

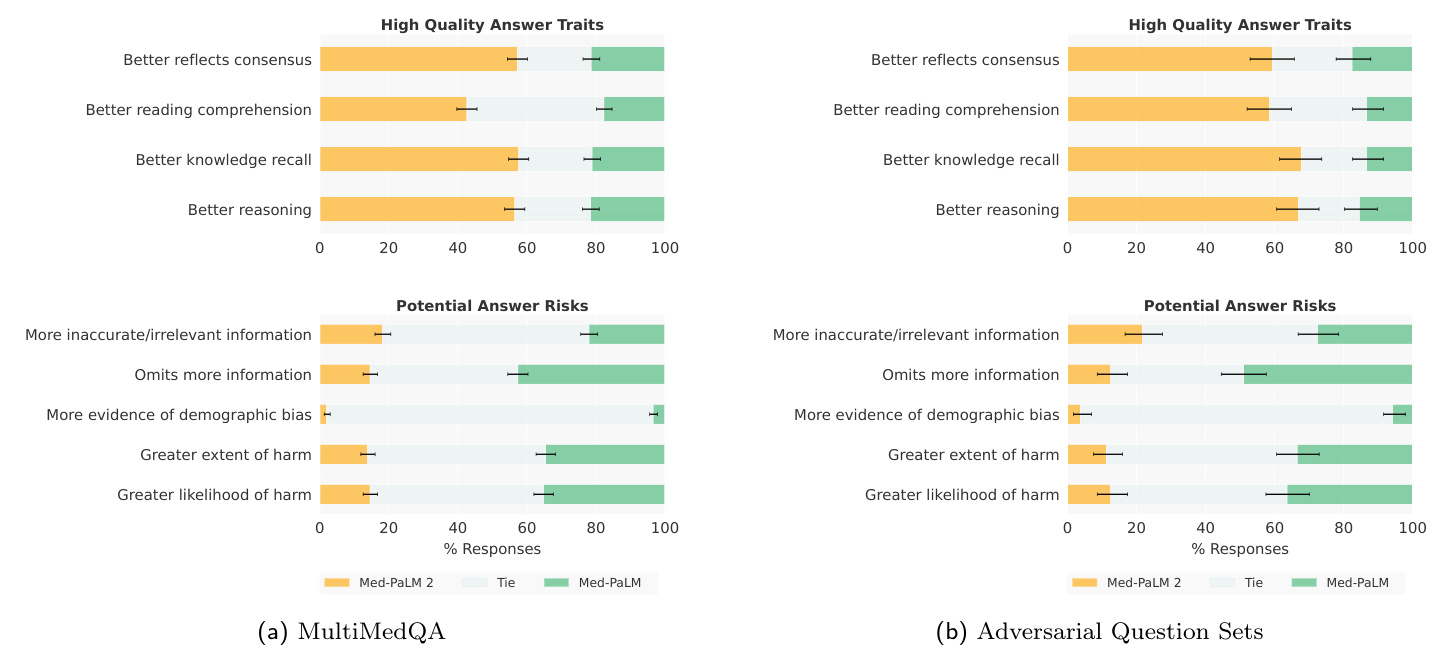

Pairwise ranking evaluation Pairwise ranking evaluation more explicitly assessed the relative performance of Med-PaLM 2, Med-PaLM, and physicians. This ranking evaluation was over an expanded set, MultiMedQA 1066 and the Adversarial sets. Qualitative examples and their rankings are included in Tables A.7 and A.8, respectively, to provide indicative examples and insight.

成对排序评估

成对排序评估更明确地评估了Med-PaLM 2、Med-PaLM和医生的相对表现。该排序评估基于扩展集MultiMedQA 1066和对抗集。表A.7和表A.8分别包含定性示例及其排序,以提供指示性示例和深入见解。

On MultiMedQA, for eight of the nine axes, Med-PaLM 2 answers were more often rated as being higher quality compared to physician answers ( $\mathrm{p}<0.001$ , Figure 1 and Table A.5). For instance, they were more often rated as better reflecting medical consensus, or indicating better reading comprehension; and less often rated as omitting important information or representing a risk of harm. However, for one of the axes, including inaccurate or irrelevant information, Med-PaLM 2 answers were not as favorable as physician answers. Med-PaLM 2 answers were rated as higher quality than Med-PaLM axes on the same eight axes (Figure 5 and Table A.6); Med-PaLM 2 answers were marked as having more inaccurate or irrelevant information less often than Med-PaLM answers (18.4% Med-PaLM 2 vs. 21.5% Med-PaLM), but the difference was not significant ( $\mathrm{p}=0.12$ , Table A.6).

在MultiMedQA上,九项评估指标中有八项显示Med-PaLM 2的回答质量优于医生回答(p<0.001,图1和表A.5)。例如,其回答更常被认为能更好体现医学共识、展示更优阅读理解能力,且较少出现遗漏关键信息或存在伤害风险的情况。但在"包含不准确/无关信息"这一指标上,Med-PaLM 2的表现不及医生回答。与初代Med-PaLM相比,Med-PaLM 2在相同的八项指标上均获更高评分(图5和表A.6);其回答被标记含不准确/无关信息的频率更低(Med-PaLM 2为18.4% vs Med-PaLM为21.5%),但差异未达显著水平(p=0.12,表A.6)。

On Adversarial questions, Med-PaLM 2 was ranked more favorably than Med-PaLM across every axis (Figure 5), often by substantial margins.

在对抗性问题方面,Med-PaLM 2 在所有维度上的表现均优于 Med-PaLM (图 5),且优势通常十分显著。

5 Discussion

5 讨论

We show that Med-PaLM 2 exhibits strong performance in both multiple-choice and long-form medical question answering, including popular benchmarks and challenging new adversarial datasets. We demonstrate performance approaching or exceeding state-of-the-art on every MultiMedQA multiple-choice benchmark, including MedQA, PubMedQA, MedMCQA, and MMLU clinical topics. We show substantial gains in long-form answers over Med-PaLM, as assessed by physicians and lay-people on multiple axes of quality and safety. Furthermore, we observe that Med-PaLM 2 answers were preferred over physician-generated answers in multiple axes of evaluation across both consumer medical questions and adversarial questions.

我们证明Med-PaLM 2在选择题和长文本医学问答中均表现出色,涵盖主流基准测试和具有挑战性的新型对抗数据集。该模型在所有MultiMedQA选择题基准(包括MedQA、PubMedQA、MedMCQA和MMLU临床主题)上的表现接近或超越当前最优水平。经医师和普通用户从质量与安全多维度评估,其长文本回答较Med-PaLM取得显著提升。值得注意的是,在消费者医疗问题和对抗性问题的多项评估维度中,Med-PaLM 2的答案比医师生成的答案更受青睐。

As LLMs become increasingly proficient at structured tests of knowledge, it is becoming more important to delineate and assess their capabilities along clinically relevant dimensions [22, 26]. Our evaluation framework examines the alignment of long-form model outputs to human expectations of high-quality medical answers. Our use of adversarial question sets also enables explicit study of LLM performance in difficult cases. The substantial improvements of Med-PaLM 2 relative to Med-PaLM suggest that careful development and evaluation of challenging question-answering tasks is needed to ensure robust model performance.

随着大语言模型在结构化知识测试中日益熟练,根据临床相关维度界定和评估其能力变得愈发重要[22, 26]。我们的评估框架检验了长文本模型输出与人类对高质量医学答案期望的契合度。通过对抗性提问集的运用,我们还能够明确研究大语言模型在疑难案例中的表现。Med-PaLM 2相较于Med-PaLM的显著进步表明,需要精心开发和评估具有挑战性的问答任务,以确保模型的稳健性能。

Physician Evaluation on MultiMedQA

图 1: 医生对MultiMedQA的评估

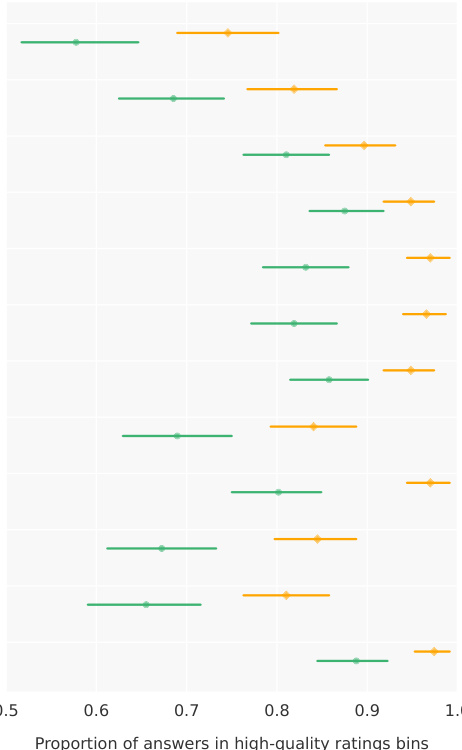

Physician Evaluation on Adversarial Questions Figure 3 | Independent long-form evaluation with physician raters Values are the proportion of ratings across answers where each axis was rated in the highest-quality bin. (For instance, "Possible harm extent $=\mathrm{No}$ harm" reflects the proportion of answers where the extent of possible harm was rated "No harm".) Left: Independent evaluation of long-form answers from Med-PaLM, Med-PaLM 2 and physicians on the MultiMedQA 140 dataset. Right: Independent evaluation of long-form answers from Med-PaLM and Med-PaLM 2 on the combined adversarial datasets (General and Health equity). Detailed breakdowns are presented in Tables A.2 and A.3. $(^{* })$ designates $0.01<p<0.05$ between Med-PaLM and Med-PaLM 2.

图 3 | 医师对抗性问题的独立长文评估

数值表示各轴被评为最高质量区间的评分比例。(例如,"可能伤害程度=无伤害"反映的是可能伤害程度被评为"无伤害"的答案比例。)

左图: 对MultiMedQA 140数据集中Med-PaLM、Med-PaLM 2和医师的长文答案进行独立评估。

右图: 在综合对抗性数据集(通用和健康公平)上对Med-PaLM和Med-PaLM 2的长文答案进行独立评估。详细分类见表A.2和表A.3。

$(^{* })$ 表示Med-PaLM与Med-PaLM 2之间$0.01<p<0.05$。

Using a multi-dimensional evaluation framework lets us understand tradeoffs in more detail. For instance, Med-PaLM 2 answers significantly improved performance on “missing important content” (Table A.2) and were longer on average (Table A.9) than Med-PaLM or physician answers. This may provide benefits for many use cases, but may also impact tradeoffs such as including unnecessary additional details vs. omitting important information. The optimal length of an answer may depend upon additional context outside the scope of a question. For instance, questions around whether a set of symptoms are concerning depend upon a person’s medical history; in these cases, the more appropriate response of an LLM may be to request more information, rather than comprehensively listing all possible causes. Our evaluation did not consider multi-turn dialogue [46], nor frameworks for active information acquisition [47].

采用多维评估框架能让我们更详细地理解权衡取舍。例如,Med-PaLM 2的答案在"遗漏重要内容"方面表现显著提升(表 A.2),且平均长度超过Med-PaLM和医生回答(表 A.9)。这可能为许多用例带来优势,但也可能影响权衡因素,比如包含不必要细节与遗漏关键信息之间的取舍。答案的最佳长度可能取决于问题范围之外的额外背景。例如,判断一组症状是否值得关注需结合个人病史;这种情况下,大语言模型更合适的响应可能是请求更多信息,而非全面列出所有可能病因。我们的评估未考虑多轮对话[46],也未涉及主动信息获取框架[47]。

Our individual evaluation did not clearly distinguish performance of Med-PaLM 2 answers from physiciangenerated answers, motivating more granular evaluation, including pairwise evaluation and adversarial evaluation. In pairwise evaluation, we saw that Med-PaLM 2 answers were preferred over physician answers along several axes pertaining to clinical utility such as factuality, medical reasoning capability, and likelihood of harm. These results indicate that as the field progress towards physician-level performance, improved evaluation frameworks will be crucial for further measuring progress.

我们的个体评估未能明确区分Med-PaLM 2答案与医生生成答案的表现,这促使我们进行更细粒度的评估,包括成对评估和对抗性评估。在成对评估中,我们发现Med-PaLM 2答案在临床实用性相关的多个维度上优于医生答案,例如事实准确性、医学推理能力和潜在危害可能性。这些结果表明,随着该领域向医生级表现迈进,改进的评估框架对于进一步衡量进展至关重要。

Figure 4 | Independent evaluation of long-form answers with lay-person raters Med-PaLM 2 answers were rated as more directly relevant and helpful than Med-PaLM answers on the MultiMedQA 140 dataset.

图 4 | 由非专业人士对长篇幅答案进行的独立评估

在MultiMedQA 140数据集上,Med-PaLM 2的答案比Med-PaLM的答案被评为更直接相关且更有帮助。

6 Limitations

6 限制

Given the broad and complex space of medical information needs, methods to measure alignment of model outputs will need continued development. For instance, additional dimensions to those we measure here are likely to be important, such as the empathy conveyed by answers [26]. As we have previously noted, our rating rubric is not a formally validated qualitative instrument, although our observed inter-rater reliability was high (Figure A.1). Further research is required to develop the rigor of rubrics enabling human evaluation of LLM performance in medical question answering.

鉴于医疗信息需求的广泛性和复杂性,衡量模型输出匹配度的方法仍需持续发展。例如,除本文测量的维度外,其他因素(如回答中传递的同理心 [26])可能同样重要。需注意的是,我们的评分标准虽未经过正式验证(但评分者间信度较高,见图 A.1),仍需进一步研究来完善标准体系,以实现对大语言模型在医疗问答中表现的人类评估。

Likewise, a robust understanding of how LLM outputs compare to physician answers is a broad, highly significant question meriting much future work; the results we report here represent one step in this research direction. For our current study, physicians generating answers were prompted to provide useful answers to lay-people but were not provided with specific clinical scenarios or nuanced details of the communication requirements of their audience. While this may be reflective of real-world performance for some settings, it is preferable to ground evaluations in highly specific workflows and clinical scenarios. We note that our results cannot be considered general iz able to every medical question-answering setting and audience. Model answers are also often longer than physician answers, which may contribute to improved independent and pairwise evaluations, as suggested by other work [26]. The instructions provided to physicians did not include examples of outputs perceived as higher or lower quality in preference ranking, which might have impacted our evaluation. Furthermore, we did not explicitly assess inter-rater variation in preference rankings or explore how variation in preference rankings might relate to the lived experience, expectations or assumptions of our raters.

同样,深入理解大语言模型输出与医生回答之间的对比是一个广泛且极具意义的问题,值得未来大量研究。我们在此报告的结果代表了该研究方向的一个进展。在当前研究中,参与生成答案的医生被要求为普通民众提供实用回答,但并未获得具体临床场景或受众沟通需求的细节信息。虽然这可能反映了某些现实场景中的实际表现,但基于高度具体的工作流程和临床情境进行评估更为理想。需要指出的是,我们的研究结果不能推广到所有医疗问答场景和受众群体。模型生成的答案通常比医生回答更冗长,这可能有助于提升独立评估和成对比较的评分,正如其他研究[26]所表明的那样。提供给医生的指导说明中未包含偏好排序中被视为高质量或低质量的输出示例,这可能影响了我们的评估结果。此外,我们未明确评估评分者之间的偏好排序差异,也未探究这些差异可能与评分者的生活经验、预期或假设存在何种关联。

Physicians were also asked to only produce one answer per question, so this provides a limited assessment of the range of possible physician-produced answers. Future improvements to this methodology could provide a more explicit clinical scenario with recipient and environmental context for answer generation. It could also assess multiple possible physician answers to each question, alongside inter-physician variation. Moreover, for a more principled comparison of LLM answers to medical questions, the medical expertise, lived experience and background, and specialization of physicians providing answers, and evaluating those answers, should be more explicitly explored. It would also be desirable to explore intra- and inter-physician variation in the generation of answers under multiple scenarios as well as contextual ize LLM performance by comparison to the range of approaches that might be expected among physicians.

医生还被要求每个问题只提供一个答案,因此这仅能有限评估医生可能给出的答案范围。未来对该方法的改进可以提供一个更明确的临床场景,包含接收者和环境背景以生成答案。还可以评估每个问题的多个可能的医生答案,以及医生间的差异。此外,为更系统地比较大语言模型对医学问题的回答,应更明确地探讨提供答案和评估答案的医生的医学专业知识、生活经验和背景以及专业领域。同样有必要探索医生在不同场景下生成答案的内部和相互差异,并通过与医生可能采用的各种方法范围进行比较,将大语言模型的表现置于情境中。

Figure 5 | Ranking comparison of long-form answers Med-PaLM 2 answers are consistently preferred over Med-PaLM answers by physician raters across all ratings dimensions, in both MultiMedQA and Adversarial question sets. Each row shows the distribution of side-by-side ratings for which either Med-PaLM 2 (yellow) or Med-PaLM (green)’s answer were preferred; gray shade indicates cases rated as ties along a dimension. Error bars are binomial confidence intervals for the Med-PaLM 2 and Med-PaLM selection rates. Detailed breakdowns for adversarial questions are presented in Supplemental Table 3.

图 5 | 长答案排名对比 在MultiMedQA和对抗性问题集中,医师评分者在所有评分维度上对Med-PaLM 2答案的偏好始终高于Med-PaLM。每行显示并排评分的分布情况,其中黄色代表Med-PaLM 2答案更受青睐,绿色代表Med-PaLM答案更受青睐;灰色阴影表示该维度评分持平的情况。误差线表示Med-PaLM 2和Med-PaLM选择率的二项式置信区间。对抗性问题的详细分类见补充表3。

Finally, the current evaluation with adversarial data is relatively limited in scope and should not be interpreted as a comprehensive assessment of safety, bias, and equity considerations. In future work, the adversarial data could be systematically expanded to increase coverage of health equity topics and facilitate d is aggregated evaluation over sensitive characteristics [48–50] .

最后,当前针对对抗性数据的评估范围相对有限,不应将其视为对安全性、偏见和公平性考量的全面评估。在未来的工作中,可以系统性地扩展对抗性数据,以增加对健康公平主题的覆盖范围,并促进基于敏感特征的聚合评估 [48-50]。

7 Conclusion

7 结论

These results demonstrate the rapid progress LLMs are making towards physician-level medical question answering. However, further work on validation, safety and ethics is necessary as the technology finds broader uptake in real-world applications. Careful and rigorous evaluation and refinement of LLMs in different contexts for medical question-answering and real world workflows w