Radiology-GPT: A Large Language Model for Radiology

Radiology-GPT: 面向放射学的大语言模型

Zhengliang Liu $^{1}$ , Aoxiao Zhong $^2$ , Yiwei Li $^1$ , Longtao Yang $^3$ , Chao Ju $^3$ , Zihao Wu $^1$ , Chong Ma $^4$ , Peng Shu $^{1}$ , Cheng Chen $^5$ , Sekeun Kim $^5$ , Haixing Dai $^{1}$ , Lin Zhao $^{1}$ , Lichao Sun $^6$ , Dajiang Zhu $7$ , Jun Liu $^3$ , Wei Liu $^8$ , Dinggang Shen $^{9,10,11}$ , Xiang Li $^5$ , Quanzheng Li $^5$ , and Tianming Liu1

郑亮 刘 $^{1}$ , 钟傲霄 $^2$ , 李一苇 $^1$ , 杨龙涛 $^3$ , 鞠超 $^3$ , 吴子豪 $^1$ , 马冲 $^4$ , 舒鹏 $^{1}$ , 陈诚 $^5$ , Kim Sekeun $^5$ , 戴海星 $^{1}$ , 赵琳 $^{1}$ , 孙立超 $^6$ , 朱大江 $7$ , 刘军 $^3$ , 刘伟 $^8$ , 沈定刚 $^{9,10,11}$ , 李翔 $^5$ , 李全正 $^5$ , 刘天明 $1$

1School of Computing, University of Georgia $^2$ Department of Electrical Engineering, Harvard University $^{3}$ Department of Radiology, Second Xiangya Hospital $^4$ School of Automation, Northwestern Polytechnic al University $^5$ Department of Radiology, Massachusetts General Hospital and Harvard Medical School $^6$ Department of Computer Science and Engineering, Lehigh University 7 Department of Computer Science and Engineering, University of Texas at Arlington $^{8}$ Department of Radiation Oncology, Mayo Clinic $^{9}$ School of Biomedical Engineering, Shanghai Tech University $^{10}$ Shanghai United Imaging Intelligence Co., Ltd. $_ {11}$ Shanghai Clinical Research and Trial Center

1 佐治亚大学计算学院

2 哈佛大学电气工程系

3 中南大学湘雅二医院放射科

4 西北工业大学自动化学院

5 麻省总医院及哈佛医学院放射科

6 利哈伊大学计算机科学与工程系

7 德克萨斯大学阿灵顿分校计算机科学与工程系

8 梅奥诊所放射肿瘤科

9 上海科技大学生物医学工程学院

10 上海联影智能医疗科技有限公司

11 上海市临床研究伦理中心

Abstract

摘要

We introduce Radiology-GPT, a large language model for radiology. Using an instruction tuning approach on an extensive dataset of radiology domain knowledge, Radiology-GPT demonstrates superior performance compared to general language models such as StableLM, Dolly and LLaMA. It exhibits significant versatility in radiological diagnosis, research, and communication. This work serves as a catalyst for future developments in clinical NLP. The successful implementation of Radiology-GPT is indicative of the potential of localizing generative large language models, specifically tailored for distinctive medical specialties, while ensuring adherence to privacy standards such as HIPAA. The prospect of developing individualized, large-scale language models that cater to specific needs of various hospitals presents a promising direction. The fusion of conversational competence and domain-specific knowledge in these models is set to foster future development in healthcare AI. A demo of Radiology-GPT is available at https://hugging face.co/spaces/allen-eric/radiology-gpt.

我们推出Radiology-GPT,这是一款专为放射学设计的大语言模型。通过在大量放射学领域知识数据集上采用指令微调方法,Radiology-GPT展现出相较于StableLM、Dolly和LLaMA等通用语言模型的卓越性能。该模型在放射学诊断、研究和交流方面表现出显著的多功能性。这项工作为临床自然语言处理的未来发展提供了催化剂。Radiology-GPT的成功实施表明,针对特定医学专科定制生成式大语言模型并确保符合HIPAA等隐私标准具有巨大潜力。开发满足不同医院特定需求的个性化大规模语言模型,展现出一个充满前景的方向。这些模型将对话能力与领域专业知识相融合,必将推动医疗AI的未来发展。Radiology-GPT演示版可在https://huggingface.co/spaces/allen-eric/radiology-gpt访问。

1 Introduction

1 引言

The recent rise of large language models (LLMs) such as ChatGPT [1] and GPT-4 [2] has brought about a significant transformation in the field of natural language processing (NLP) [3, 1]. These models demonstrate unprecedented abilities and versatility, a clear progression from preceding models such as BERT [4], and have engendered broad advancements in diverse domains [1, 5, 6].

近期,以ChatGPT [1]和GPT-4 [2]为代表的大语言模型(LLM)的崛起,为自然语言处理(NLP)领域带来了重大变革[3, 1]。这些模型展现出前所未有的能力与通用性,相较BERT [4]等前代模型实现了显著突破,并在多个领域推动了广泛进展[1, 5, 6]。

Among the fields that can significantly benefit from these advancements is radiology. Radiology, by its very nature, generates a vast amount of textual data, including radiology reports, clinical notes, annotations associated with medical imaging, and more [7]. Examples of such texts include radiographic findings, annotations for Computerized Tomography (CT) scans, and Magnetic Resonance

能够显著受益于这些进步的领域之一是放射学。放射学本质上会产生大量文本数据,包括放射学报告、临床记录、与医学影像相关的注释等[7]。这类文本的例子包括放射学检查结果、计算机断层扫描(CT)的注释和磁共振

Imaging (MRI) reports, all of which require sophisticated understanding and interpretation.

影像学 (MRI) 报告,均需要复杂的理解和解读。

Despite the transformative potential, the application of LLMs in the radiology domain has been limited [8, 7, 9]. Large commercial models such as GPT-4 and PaLM-2 [10], while powerful, are not readily applicable in clinical settings. HIPAA regulations, privacy concerns, and the necessity for IRB approvals pose substantial barriers [11], primarily because these models necessitate the uploading of patient data to externally hosted platforms.

尽管具有变革潜力,大语言模型(LLM)在放射学领域的应用仍然有限[8,7,9]。GPT-4和PaLM-2[10]等大型商业模型虽然强大,却难以直接应用于临床环境。HIPAA法规、隐私问题以及IRB审批要求构成了重大障碍[11],这主要是因为这些模型需要将患者数据上传至外部托管平台。

This situation underscores the pressing need for a localized foundational model, specifically designed for radiology, that can work effectively within the regulatory boundaries while capitalizing on the potential of LLMs. We address this gap through the development of Radiology-GPT, an LLM specifically designed for the radiology domain.

这一现状凸显出对专为放射学设计的本地化基础模型的迫切需求,该模型需在监管范围内有效运作,同时充分发挥大语言模型的潜力。我们通过开发专为放射学领域设计的大语言模型Radiology-GPT来解决这一空白。

A crucial advantage of generative large language models such as Radiology-GPT, particularly in comparison to domain-specific BERT-based models such as RadBERT [12] (for radiology) or Clinical Radio BERT [13] (for radiation oncology), lies in their flexibility and dynamism. Unlike their BERT-based counterparts, generative LLMs are not rigidly dependent on a specific input structure, allowing for a more diverse range of inputs. Additionally, they are capable of generating diverse outputs, making them suitable for tasks that were previously considered impractical, such as reasoning [14, 15]. This further extends their versatility.

Radiology-GPT等生成式大语言模型 (Generative Large Language Model) 的关键优势在于其灵活性和动态性,这尤其体现在与RadBERT [12] (针对放射学) 或Clinical Radio BERT [13] (针对放射肿瘤学) 等基于BERT的领域专用模型对比时。与基于BERT的模型不同,生成式大语言模型不严格依赖特定输入结构,可接受更广泛的输入类型。此外,它们能生成多样化输出,使其适用于以往被认为不切实际的任务 (如推理 [14, 15]),从而进一步扩展了多功能性。

This inherent conversational capability of generative LLMs [1] positions them as invaluable aids to medical professionals, including radiologists. They can provide contextual insights and responses in a conversational manner, mimicking a human-like interaction, and hence enhancing the usability of these models in a clinical setting.

生成式大语言模型 [1] 这种与生俱来的对话能力,使其成为包括放射科医生在内的医疗专业人员的宝贵助手。它们能以对话方式提供情境化见解和响应,模拟类人交互,从而提升这些模型在临床环境中的实用性。

Furthermore, these models eliminate the need for complex fine-tuning processes and the associated labor-intensive manual annotation procedures. This attribute reduces both the development time and cost, making the adoption of such models more feasible and appealing in a clinical context.

此外,这些模型省去了复杂的微调过程和相应的高强度人工标注流程。这一特性既缩短了开发时间又降低了成本,使得此类模型在临床环境中更具可行性和吸引力。

Radiology-GPT outperforms other instruction-tuned models not specially trained for radiology, such as Satbility AI’s Stable LM [16] and Databrick’s Dolly [17]. The model is trained on the MIMIC-CXR dataset [18], affording it a deep understanding of radiology-specific language and content.

放射学GPT的表现优于其他未针对放射学专门训练的指令调优模型,例如Stability AI的Stable LM [16] 和Databrick的Dolly [17]。该模型基于MIMIC-CXR数据集 [18] 进行训练,使其对放射学专用语言和内容具备深刻理解。

Key contributions of our work include:

我们工作的主要贡献包括:

With these advancements, we believe Radiology-GPT holds immense potential to revolutionize the interpretation of radiology reports and other radiology-associated texts, making it a promising tool for clinicians and researchers alike.

随着这些进步,我们相信 Radiology-GPT 在革新放射学报告及其他放射学相关文本解读方面具有巨大潜力,使其成为临床医生和研究人员的有力工具。

2 Related work

2 相关工作

2.1 Large language models

2.1 大语言模型

In recent years, the field of Natural Language Processing (NLP) has undergone a transformative shift with the advent of large language models (LLMs). These models, such as GPT-3 [19], GPT-4 [2], and PaLM [20], PaLM-2 [10], offer enhanced language understanding capabilities, transforming the way language is processed, analyzed, and generated [1, 3]. A notable shift in this evolution has been the transition from the pre-training and fine-tuning paradigm of BERT [4], GPT [21], GPT-2 [22] and their variants [23, 24, 5]. In contrast, LLMs have introduced impressive few-shot and zero-shot learning capabilities achieved through in-context learning, offering a leap in both the performance and application scope of language models.

近年来,随着大语言模型(LLM)的出现,自然语言处理(NLP)领域发生了革命性转变。以GPT-3[19]、GPT-4[2]、PaLM[20]和PaLM-2[10]为代表的模型显著提升了语言理解能力,彻底改变了语言处理、分析和生成的方式[1,3]。这一演进过程中的显著变化是从BERT[4]、GPT[21]、GPT-2[22]及其变体[23,24,5]的预训练-微调范式,转向通过上下文学习实现惊艳的少样本和零样本学习能力,使语言模型的性能和应用范围都实现了飞跃。

Alongside the growth of these powerful, commercially developed LLMs, the field has also seen the emergence of open-source alternatives. Models such as LLaMA [25] and Bloom [26] have extended the reach of LLMs, fostering democratization and in clu siv it y by offering the research community accessible and replicable models.

随着这些由商业开发的大语言模型 (LLM) 不断发展壮大,开源替代方案也在该领域崭露头角。LLaMA [25] 和 Bloom [26] 等模型通过为研究社区提供可获取且可复现的模型,扩大了大语言模型的影响力,促进了技术民主化与包容性。

Building on open-source LLMs, the research community has begun to explore and develop instructiontuned language models that can effectively interpret and execute complex instructions within the input text. Exemplifying this trend are models such as Alpaca [27], StableLM [16], and Dolly [17]. Alpaca, fine-tuned from Meta’s LLaMA 7B model, has shown that even smaller models can achieve behaviors comparable to larger, closed-source models like OpenAI’s text-davinci-003, while being more cost-effective and easily reproducible.

基于开源大语言模型,研究社区已开始探索开发能够有效解析和执行输入文本中复杂指令的指令调优语言模型。这一趋势的代表性模型包括Alpaca [27]、StableLM [16]和Dolly [17]。以Meta的LLaMA 7B模型微调而来的Alpaca证明,即使较小模型也能实现与OpenAI text-davinci-003等闭源大模型相当的表现,同时更具成本效益且易于复现。

2.2 Domain-specific language models

2.2 领域专用大语言模型

Domain-Specific Language Models (DSLM) are models that are specifically trained or fine-tuned on text data pertaining to a particular domain or sector [28, 29]. By focusing on domain-relevant tasks and language patterns, these models seek to offer superior performance for tasks within their specialized domain.

领域专用语言模型 (Domain-Specific Language Models, DSLM) 指针对特定领域或行业的文本数据进行专门训练或微调的模型 [28, 29]。通过聚焦领域相关任务和语言模式,这类模型旨在其专业领域内提供更优的任务性能。

For instance, AgriBERT [30], a domain-specific variant of BERT, is trained on an extensive corpus of text data related to agriculture and food sciences. It effectively captures specific jargon and language patterns typical of this sector, making it a valuable tool for tasks such as crop disease classification and food quality assessment.

例如,AgriBERT [30] 作为 BERT 的领域专用变体,经过大量农业与食品科学相关文本数据的训练。它能有效捕捉该领域特有的术语和语言模式,成为作物病害分类和食品质量评估等任务中的实用工具。

In the field of education, SciEdBERT [8] exemplifies the potential of DSLMs. SciEdBERT is pretrained on student response data to middle school chemistry and physics questions. It is designed to understand and evaluate the language and reasoning of students in these domains, enabling accurate evaluation of student responses and providing insights into student understanding and misconceptions.

在教育领域,SciEdBERT [8] 展现了领域专用大语言模型 (DSLM) 的潜力。该模型基于中学生化学和物理答题数据进行预训练,旨在理解并评估学生在这些学科中的语言表达与推理能力,从而实现对答题内容的精准评估,并揭示学生的知识掌握情况与认知误区。

Similarly, in healthcare, Clinical Radio BERT [13] has been developed for radiation oncology, training on clinical notes and radiotherapy literature to understand and generate radiation oncology-related text. These examples illustrate the diversity and potential of DSLMs in various sectors, enhancing performance on domain-specific tasks by leveraging tailored language patterns and specific knowledge.

同样,在医疗健康领域,临床放射BERT (Clinical Radio BERT) [13] 被开发用于放射肿瘤学,通过训练临床记录和放射治疗文献来理解和生成与放射肿瘤学相关的文本。这些例子展示了领域特定语言模型 (DSLM) 在各行业的多样性和潜力,通过利用定制化的语言模式和特定知识来提升领域特定任务的性能。

While LLMs and DSLMs each have their strengths, there is a growing need for models that leverage the strengths of both, which is particularly pronounced in areas with specialized jargon and extensive bodies of text data, such as radiology.

虽然大语言模型和领域专用语言模型各有优势,但在放射学等专业术语繁多且文本数据量庞大的领域,越来越需要能结合两者优势的模型。

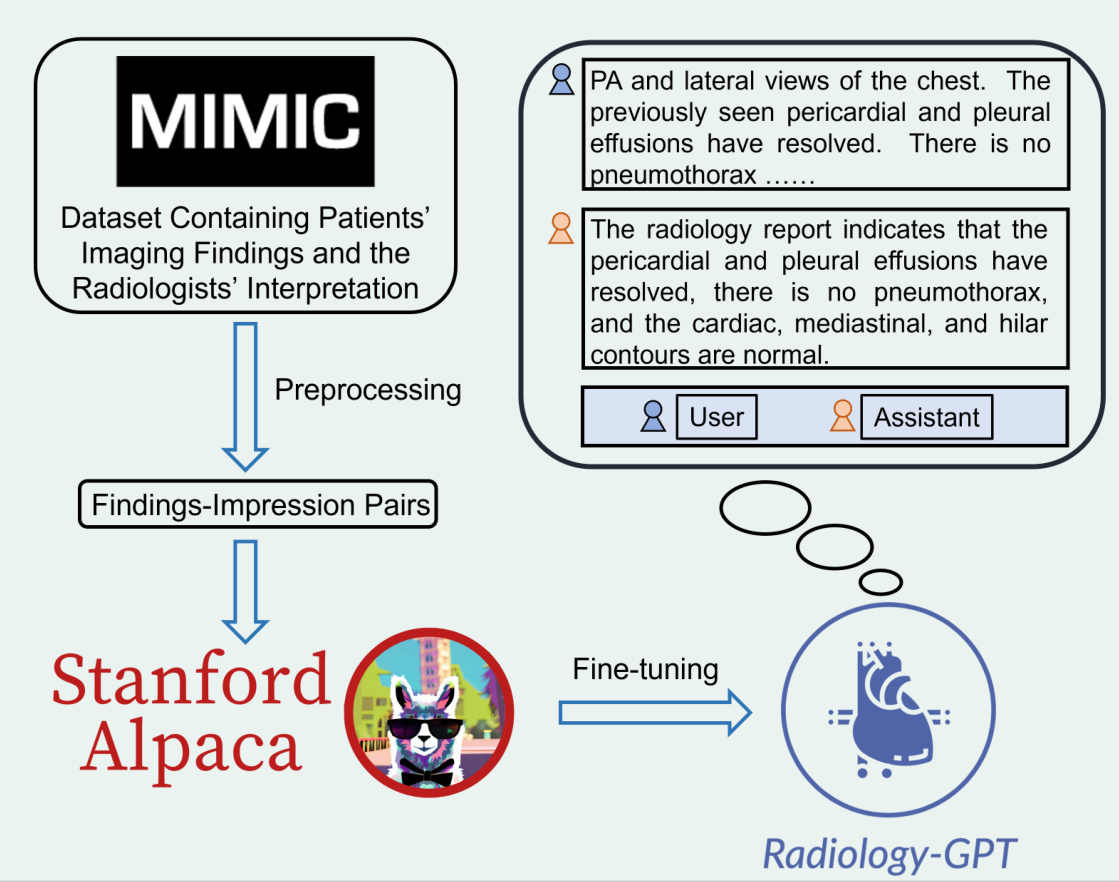

Figure 1: The overall framework of Radiology-GPT.

图 1: Radiology-GPT 的整体框架。

3 Methodology

3 方法论

Our approach to building Radiology-GPT involves a two-step process: preprocessing of the dataset and fine-tuning the model with instruction following. The pipeline of our method can be seen in Figure 1.

我们构建Radiology-GPT的方法分为两个步骤:数据集的预处理和遵循指令的模型微调。方法流程如图1所示。

3.1 Dataset and preprocessing

3.1 数据集与预处理

We base our work on the publicly available MIMIC-CXR dataset [18], a large, publicly available dataset of chest X-rays (CXRs). MIMIC-CXR contains de-identified medical data from over 60,000 patients who were admitted to the Beth Israel Deaconess Medical Center between 2001 and 2012.

我们的工作基于公开可用的MIMIC-CXR数据集[18],这是一个大型公开胸部X光(CXR)数据集。MIMIC-CXR包含2001年至2012年间贝斯以色列女执事医疗中心超过60,000名患者的去标识化医疗数据。

We focus on the radiology reports available in this dataset, as they provide rich textual information about patients’ imaging findings and the radiologists’ interpretations. The reports typically contain two sections that correspond to each other: "Findings" and "Impression". The "Findings" section includes detailed observations from the radiology images, whereas the "Impression" section includes the summarized interpretations drawn from those observations.

我们重点关注该数据集中可用的放射学报告,因为它们提供了关于患者影像学表现和放射科医生解读的丰富文本信息。报告通常包含两个相互对应的部分:"发现"和"印象"。"发现"部分包含从放射学图像中观察到的详细结果,而"印象"部分则包含根据这些观察结果总结出的解读。

To prepare the data for training, we pre processed these reports to extract the "Findings" and "Impression" sections from each report and organized them into pairs. The preprocessing involved removing irrelevant sections, standardizing terminologies, and handling missing or incomplete sections. Specifically, we excluded ineligible reports through the following operations: (1) remove reports without finding or impression sections, (2) remove reports whose finding section contained less than 10 words, and (3) remove reports whose impression section contained less than 2 words. We apply the official split published by [18] and finally obtain 122,014/957/1,606 reports for train/val/test set.

为准备训练数据,我们对这些报告进行了预处理,从每份报告中提取"发现"和"印象"部分并组织成配对。预处理包括移除无关章节、标准化术语处理以及处理缺失或不完整的章节。具体而言,我们通过以下操作排除了不符合要求的报告:(1) 移除没有发现或印象章节的报告,(2) 移除发现章节少于10个单词的报告,(3) 移除印象章节少于2个单词的报告。我们采用[18]发布的官方划分方式,最终获得122,014/957/1,606份报告分别用于训练集/验证集/测试集。

Figure 2: The instruction-tuning process of Radiology-GPT.

图 2: Radiology-GPT的指令微调流程。

In addition, we also pre processed the OpenI dataset [31] based on the above exclusion operations to function as an independent external test dataset. It is crucial to validate our model’s performance and general iz ability across different data sources. Since the official split is not provided, we follow [32] to randomly divide the dataset into train/val/test sets by 2400:292:576 (total: 3268 reports). The independent nature of the OpenI dataset allowed us to robustly assess our model’s capabilities and understand its practical applicability.

此外,我们还基于上述排除操作对OpenI数据集[31]进行了预处理,将其作为独立的外部测试数据集。验证模型在不同数据源上的性能和泛化能力至关重要。由于未提供官方划分标准,我们参照[32]将该数据集随机划分为训练集/验证集/测试集(2400:292:576,总计3268份报告)。OpenI数据集的独立性使我们能够稳健评估模型能力并理解其实际适用性。

3.2 Experimental setting

3.2 实验设置

In this study, we trained the current version of Radiology-GPT based on Alpaca-7B. The training was conducted on a server with 4 Nvidia A100 80GB GPUs. We utilized LoRA [33] to facilitate training. More importantly, we followed the LoRA approach because the small size and portable nature of LoRA weights eases model sharing and deployment.

在本研究中,我们基于Alpaca-7B训练了当前版本的Radiology-GPT。训练在一台配备4块Nvidia A100 80GB GPU的服务器上进行。我们采用LoRA [33]技术来辅助训练。更重要的是,选择LoRA方法是因为其权重文件体积小、便于移植,能简化模型共享与部署流程。

The batch size was set to 128 and the learning rate was fixed at 3e-4. For LoRA, we set lora_ r, the rank of the low-rank factorization, to 8, and lora_ alpha, the scaling factor for the rank, to 16. This was accompanied by a dropout rate of 0.05. The target modules for LoRA were set to "q_ proj" and "v_ proj". These modules refer to the query and value matrices in the self-attention mechanism of the transformer architecture [34, 33].

批量大小设置为128,学习率固定为3e-4。对于LoRA (Low-Rank Adaptation) ,我们将低秩分解的秩lora_ r设为8,秩的缩放因子lora_ alpha设为16,并配合0.05的丢弃率。LoRA的目标模块设置为"q_ proj"和"v_ proj",这些模块对应Transformer架构自注意力机制中的查询矩阵和值矩阵 [34, 33]。

A very similar training process will be applied to larger versions of Radiology-GPT based on larger base models.

将对基于更大基础模型的Radiology-GPT更大版本应用非常相似的训练过程。

3.3 Instruction tuning

3.3 指令微调 (Instruction tuning)

The next step involves instruction tuning our LLM on this radiology dataset. Our methodology is based on the principle of instruction-tuning [35, 27, 36]. The aim is to tune the model such that it can generate an "Impression" text given a "Findings" text as an instruction. The underlying language model learns the relationship between "Findings" and "Impression" from the dataset and hence, starts generating impressions in a similar manner when presented with new findings. Currently, we use Alpaca as the base model for this process. We will release versions of Radiology-GPT in various sizes in the near future. The model also learns domain-specific knowledge relevant to radiology during this training process.

下一步是在这个放射学数据集上对我们的LLM进行指令微调。我们的方法基于指令微调原则[35, 27, 36],目的是调整模型,使其能够在给定"发现"文本作为指令时生成"印象"文本。基础语言模型从数据集中学习"发现"和"印象"之间的关系,从而在面对新发现时以类似方式生成印象。目前,我们使用Alpaca作为此过程的基础模型。我们将在不久的将来发布不同规模的Radiology-GPT版本。在此训练过程中,模型还会学习与放射学相关的领域特定知识。

Figure 3: Comparisons of the LLMs based on Understand ability, Coherence, Relevance, Conciseness, and Clinical Utility.

图 3: 基于理解能力、连贯性、相关性、简洁性和临床实用性对大语言模型的比较。

To ensure our model learns effectively, we use a specific format for the instructions during training. We provide a short instruction to the "Findings" text: "Derive the impression from findings in the radiology report". The "Impression" text from the same report serves as the target output. This approach promotes learning by aligning the model with the task, thereby creating an instructionfollowing language model fine-tuned for radiology reports. Examples can be seen in Figure 2.

为确保模型有效学习,我们在训练过程中对指令采用特定格式。我们为"检查发现"文本提供简短指令:"从放射学报告中推导印象"。同一报告中的"印象"文本作为目标输出。这种方法通过将模型与任务对齐来促进学习,从而创建出针对放射学报告微调的指令遵循语言模型。示例如图2所示。

Through this methodology, Radiology-GPT is designed to capture the specific language patterns, terminologies, and logical reasoning required to interpret radiology reports effectively, thereby making it an efficient and reliable tool for aiding radiologists.

通过这种方法论,Radiology-GPT旨在捕捉解读放射学报告所需的特定语言模式、术语和逻辑推理能力,从而成为辅助放射科医师的高效可靠工具。

It might be valuable to use diverse instruction pairs beyond "Findings — $_ {\scriptscriptstyle{>}}$ Impression" in radiology. Currently, "Findings — $\scriptscriptstyle>$ Impression" is the most natural and clinically meaningful task to conduct instruction-tuning. We are actively engaging with radiologists to construct a variety of clinically meaningful instruction tuning pairs to further enhance Radiology-GPT.

在放射学领域,除了"检查所见 (Findings) -> 影像印象 (Impression)"指令对外,采用多样化的指令对可能具有重要价值。目前,"检查所见 -> 影像印象"仍是最自然且最具临床意义的指令微调任务。我们正积极与放射科医师合作,构建多种具有临床意义的指令微调对,以进一步提升Radiology-GPT的性能。

4 Evaluation

4 评估

One of the challenging aspects of using large language models (LLMs) in the medical field, particularly in radiology, is the determination of their effectiveness and reliability. Given the consequences of errors, it’s crucial to employ appropriate methods to evaluate and compare their outputs. To quantitatively evaluate the effectiveness of Radiology-GPT and other language models in radiology, we implemented a strategy to measure the understand ability, coherence, relevance, conciseness, clinical utility of generated responses.

在医疗领域,尤其是放射学中使用大语言模型(LLM)的一个挑战在于确定其有效性和可靠性。鉴于错误的后果,采用适当方法来评估和比较其输出至关重要。为定量评估Radiology-GPT及其他语言模型在放射学中的表现,我们实施了衡量生成回答的可理解性、连贯性、相关性、简洁性和临床实用性的策略。

It should be noted that even trained radiologists have different writing styles [37, 38]. There could be significant variations in how different radiologists interpret the same set of radiology findings [39, 40, 41]. Therefore, we believe it is not appropriate to use string-matching [42], BLEU [43], ROUGE [44], or other n-gram based methods to evaluate the generated radiology impressions. Instead, we develop a set of metrics that are directly relevant to clinical radiology practices.

需要注意的是,即使是训练有素的放射科医生也存在书写风格差异 [37, 38]。不同放射科医师对同一组影像学表现的解读可能存在显著差异 [39, 40, 41]。因此,我们认为使用字符串匹配 [42]、BLEU [43]、ROUGE [44] 或其他基于 n-gram 的方法来评估生成的放射学印象并不合适。相反,我们开发了一套与临床放射实践直接相关的评估指标。

We describe the five metrics below:

我们描述以下五个指标:

The experimental results yield insightful results regarding the performance of Radiology-GPT in comparison with other existing models. Figure 3 shows the results of the expert evaluation of LLMs. A panel of two expert radiologists assessed the capacity of these LLMs in generating appropriate impressions based on given findings, considering five key parameters: understand ability, coherence, relevance, conciseness, and clinical utility. Each metric was scored on a scale from 1 to 5. The radiologists independently assessed the quality of the LLMs’ responses using a set of 10 radiology reports randomly selected from the test set of the MIMIC-CXR radiology reports and the test set of the OpenI dataset pre processed by our in-house team. The assessments of the two radiologists were conducted independently, and the final scores for each metric were computed by averaging the scores from both radiologists, providing a balanced and comprehensive evaluation of each model’s performance.

实验结果表明,Radiology-GPT与其他现有模型相比具有显著性能优势。图3展示了大语言模型的专家评估结果。由两名放射科专家组成的评审小组基于五项关键参数(理解能力、连贯性、相关性、简洁性和临床实用性),评估了这些大语言模型根据给定检查结果生成恰当印象报告的能力,各项指标按1-5分制评分。专家们使用从MIMIC-CXR放射报告测试集和我们内部团队预处理的OpenI数据集测试集中随机选取的10份放射报告,独立评估了大语言模型回答的质量。两位放射科医生的评估独立进行,最终各项指标得分取两人评分的平均值,从而对每个模型的性能做出平衡全面的评价。

The performance of Radiology-GPT was found to be comparable to that of ChatGPT in terms of understand ability and slightly better in coherence. However, it lagged slightly behind in relevance, not due to a propensity to omit critical findings but rather, because it tended to produce shorter responses compared to ChatGPT. ChatGPT often generated lengthier responses that addressed nearly every point detailed in the findings (which sometimes can provide more context). Figure 4 shows the results of ChatGPT. This discrepancy could be attributed to the contrasting objectives of these models, with Radiology-GPT being designed to deliver succinct and focused outputs that

研究发现,Radiology-GPT在理解能力方面与ChatGPT表现相当,在连贯性上略胜一筹。但在相关性方面稍显不足,这并非由于遗漏关键发现,而是因其生成的回复通常比ChatGPT更为简短。ChatGPT往往会生成更详尽的回复,几乎涵盖报告中列出的所有细节(有时能提供更多背景信息)。图4展示了ChatGPT的结果。这种差异可能源于两款模型的设计目标不同:Radiology-GPT旨在提供简洁聚焦的输出,而...

ChatGPT

ChatGPT

Prompt: “Derive the impression from findings in the radiology report" Findings: PA and lateral views of the chest. The previously seen pericardia l and pleural effusions have resolved. There is no pneumothorax. There is no consolidation. The cardiac, media st in al, and hilar contours are normal.

提示:“根据放射学报告中的发现得出印象”

发现:胸部正位和侧位片。先前观察到的心包和胸腔积液已消退。无气胸。无实变。心脏、纵隔及肺门轮廓正常。

Based on the findings in the radiology report, the following impression can be derived:

根据放射学报告中的发现,可得出以下印象:

- Resolution of pericardia l and pleural eff us in s:Thereport statesthat the previously observed pericardia l and ple rale ff us in s haveresoed.Pericardia l effusion r fest the accumulation of fluid around the heart, and pleural effusion refers to the accumulation of fluid in the space between the lungs and the chest wall. The resolution of these effusions suggests that the fluid has been reabsorbed or drained.

- 心包积液和胸腔积液的消退:报告指出,先前观察到的心包积液和胸腔积液已消退。心包积液 (pericardial effusion) 是指心脏周围液体积聚,胸腔积液 (pleural effusion) 则指肺与胸壁之间空隙中的液体积聚。这些积液的消退表明液体已被重新吸收或排出。

- No pneumothorax: The report indicates that there is no evidence of pneumothorax, which refers to a collapsed lung caused by air accumulation in the chest cavity. The absence of pneumothorax suggests that the lungs are properly inflated without any air leakage into the chest cavity.

- 无气胸:报告显示无气胸迹象。气胸指胸腔内积气导致肺塌陷的情况。未出现气胸表明肺部充气正常,无气体漏入胸腔。

- Normal cardiac, media st in al, and hilar contours: he radiology report mentions that the cardiac, media st in al and hilar contours are normal. This indicates that there are no significant abnormalities or distortions in the structures within the chest, including the heart, central compartment (media st in um), and the hilar region where the blood vessels and bronchi enter the lungs.

- 正常的心脏、纵隔和肺门轮廓:放射学报告指出心脏、纵隔和肺门轮廓均正常。这表明胸部结构(包括心脏、中央区域(纵隔)以及血管和支气管进入肺部的肺门区)无明显异常或变形。

Based on these findings, the overall impression is that there is resolution of the pericardia l and pleural effusions, no e