Multimodal ChatGPT for Medical Applications: an Experimental Study of GPT-4V

多模态ChatGPT在医疗领域的应用:GPT-4V实验研究

Zhiling Yan1∗ Kai Zhang1∗ Rong Zhou1 Lifang He1 Xiang Li2 Lichao Sun1†

张玲燕1∗ 张凯1∗ 周荣1 何立芳1 李翔2 孙立超1†

1Lehigh University, 2 Massachusetts General Hospital and Harvard Medical School

1利哈伊大学,2马萨诸塞州总医院及哈佛医学院

Abstract

摘要

In this paper, we critically evaluate the capabilities of the state-of-the-art multimodal large language model, i.e., GPT-4 with Vision (GPT-4V), on Visual Question Answering (VQA) task. Our experiments thoroughly assess GPT-4V’s proficiency in answering questions paired with images using both pathology and radiology datasets from 11 modalities (e.g. Microscopy, Dermoscopy, X-ray, CT, etc.) and fifteen objects of interests (brain, liver, lung, etc.). Our datasets encompass a comprehensive range of medical inquiries, including sixteen distinct question types. Throughout our evaluations, we devised textual prompts for GPT-4V, directing it to synergize visual and textual information. The experiments with accuracy score conclude that the current version of GPT-4V is not recommended for real-world diagnostics due to its unreliable and suboptimal accuracy in responding to diagnostic medical questions. In addition, we delineate seven unique facets of GPT-4V’s behavior in medical VQA, highlighting its constraints within this complex arena. The complete details of our evaluation cases are accessible at Github.

本文对当前最先进的多模态大语言模型GPT-4V(即GPT-4 with Vision)在视觉问答(VQA)任务中的能力进行了批判性评估。我们通过病理学和放射学领域的11种模态(如显微镜、皮肤镜、X光、CT等)和十五个关注对象(脑、肝、肺等)的数据集,全面测试了GPT-4V在图像配对问题回答中的表现。我们的数据集涵盖了十六种不同类型的医学问题。在评估过程中,我们为GPT-4V设计了文本提示,指导其协同处理视觉与文本信息。实验准确率评分表明,当前版本的GPT-4V在回答诊断性医学问题时存在可靠性不足和准确率欠佳的问题,因此不建议将其应用于实际诊断场景。此外,我们总结了GPT-4V在医学VQA中表现出的七个独特维度,揭示了其在这一复杂领域的局限性。完整评估案例详见Github。

1 Introduction

1 引言

Medicine is an intrinsically multimodal discipline. Clinicians often use medical images, clinical notes, lab tests, electronic health records, genomics, and more when providing care [Tu et al., 2023]. However, although many AI models have demonstrated great potential for biomedical applications [Lee et al., 2023b, Nori et al., 2023, Lee et al., 2023a], majority of them focus on just one type of data [Acosta et al., 2022, Singhal et al., 2023, Zhou et al., 2023]. Some need extra adjustments to work well for specific tasks [Zhang et al., 2023a, Chen et al., 2023], while others are not available for public use [Tu et al., 2023, Li et al., 2023]. Previously, ChatGPT [OpenAI, 2022] has shown potential in offering insights for both patients and doctors, aiding in minimizing missed or misdiagnoses [Yunxiang et al., 2023, Han et al., 2023, Wang et al., 2023]. A notable instance was when ChatGPT accurately diagnosed a young boy after 17 doctors had been unable to provide conclusive answers over three years . The advent of visual input capabilities in the GPT-4 system, known as GPT-4 with Vision (GPT-4V) [OpenAI, 2023], further amplifies this potential. This enhancement allows for more holistic information processing in a question and answer format, possibly mitigating language ambiguities—particularly relevant when non-medical experts, such as patients, might struggle with accurate descriptions due to unfamiliarity with medical jargon.

医学本质上是一门多模态学科。临床医生在提供诊疗服务时通常会综合运用医学影像、临床记录、实验室检测、电子健康档案、基因组学等多种数据源 [Tu et al., 2023]。然而,尽管许多AI模型已展现出生物医学应用的巨大潜力 [Lee et al., 2023b, Nori et al., 2023, Lee et al., 2023a],但大多数模型仅针对单一数据类型 [Acosta et al., 2022, Singhal et al., 2023, Zhou et al., 2023]。部分模型需要额外调整才能适应特定任务 [Zhang et al., 2023a, Chen et al., 2023],而另一些则未向公众开放 [Tu et al., 2023, Li et al., 2023]。此前,ChatGPT [OpenAI, 2022]已展现出为医患双方提供诊断洞见的潜力,有助于减少漏诊误诊 [Yunxiang et al., 2023, Han et al., 2023, Wang et al., 2023]。典型案例是ChatGPT曾准确诊断出一名被17位医生历时三年未能确诊的男孩。随着GPT-4系统视觉输入功能(即GPT-4V [OpenAI, 2023])的问世,这一潜力得到进一步释放。该增强功能支持以问答形式进行更全面的信息处理,有望缓解语言歧义问题——这对不熟悉医学术语的非专业人士(如患者)尤为重要。

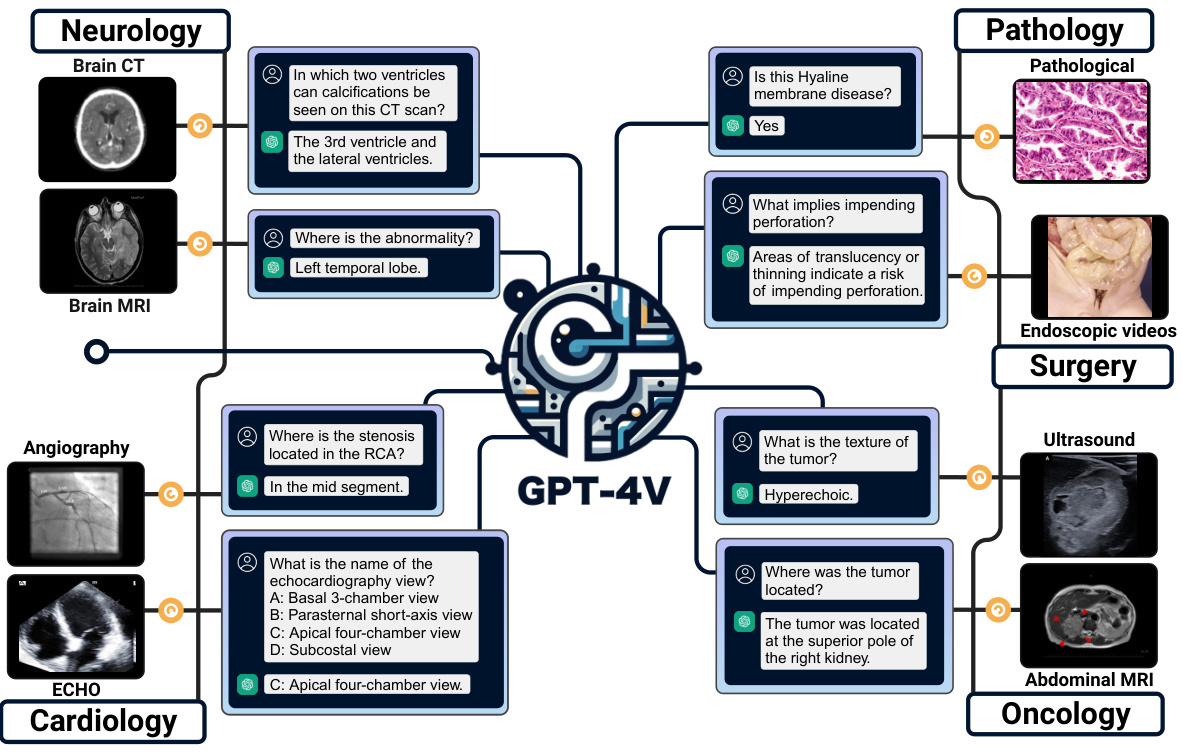

Figure 1: The diagram of medical departments and their corresponding objects of interest and modalities. We comprehensively consider 11 modalities across 15 objects of interest in the paper.

图 1: 医疗科室及其关注对象与模态对应示意图。本文综合考虑了15个关注对象涉及的11种模态。

1.1 Analysis Dimensions

1.1 分析维度

In this paper, we will systematically examine how GPT-4V operates in the medical field using the VQA approach. We believe this might become a predominant method for future medical AI like daily healthcare assistant. We will address questions in a hierarchical manner, emphasizing the step-by-step process of medical progression for an intelligent machine / agent. This approach will help us uncover what GPT-4V can offer and also delve into its limitations and challenges in real-world medical applications.

本文将通过VQA方法系统研究GPT-4V在医疗领域的工作机制。我们认为这或许会成为未来医疗AI(如日常健康助手)的主流应用方式。我们将采用分层提问策略,着重探讨智能机器/AI智能体在医疗场景中的渐进式处理流程。该方法既能揭示GPT-4V的潜力,也能深入剖析其在实际医疗应用中的局限性与挑战。

1.2 Highlights

1.2 亮点

In this section, we provide a concise summary of our findings related to the characteristics of GPT-4V in the context of medical VQA. These characteristics, depicted in Section 5, directly correspond to the research questions posed earlier:

在本节中,我们简要总结了与医疗视觉问答(VQA)背景下GPT-4V特性相关的研究发现。这些特性(如第5节所述)直接对应了先前提出的研究问题:

1.3 Contributions

1.3 贡献

This report provides the following contributions to the community in the realm of medical AI:

本报告在医疗AI领域为社区提供以下贡献:

2 Experimental Setup

2 实验设置

We outline the experimental setup or case studies employed to address the aforementioned questions and objectives. Since GPT-4V has not officially released APIs, we evaluated its capability for medical VQA through its dedicated chat interface (the ChatGPT webpage version), initiating our dialogue with image inputs. To eliminate any interference, hints, or biases from a multi-round conversation, we began a new chat session for each new Q&A case. This ensured that GPT-4V did not unintentionally reference information from previous conversations related to different cases. In this report, we prioritize evaluating zero-shot performance using the accuracy metric. For closed-ended questions with limited choices, this metric gauges the consistency of GPT-4V’s answers with factual accuracy. For open-ended queries, it assesses how often GPT-4V’s responses contain the correct information.

我们概述了用于解决上述问题和目标的实验设置或案例研究。由于GPT-4V尚未正式发布API,我们通过其专用聊天界面(ChatGPT网页版)以图像输入启动对话来评估其医疗视觉问答(VQA)能力。为避免多轮对话带来的干扰、提示或偏见,每个新问答案例都开启全新会话,确保GPT-4V不会无意引用不同案例的历史对话信息。本报告优先采用准确率指标评估零样本性能:对于选项有限的封闭式问题,该指标衡量GPT-4V答案与事实的一致性;对于开放式问题,则统计其回答包含正确信息的频率。

We provide examples on the prompts utilized and the criteria for determining the correctness of the answers in the following:

我们提供以下示例来说明所使用的提示词(prompt)以及判断答案正确性的标准:

Closed-ended Pathology VQA

封闭式病理学视觉问答

Prompt: [image] Is atrophy seen in the brain?

提示: [图像] 大脑中是否可见萎缩?

GPT-4V: I am not a pathologist, but based on the image provided, it appears to be a representation of two human brains. The key characteristic of brain atrophy is a noticeable reduction in the size or volume of the brain tissue. Comparing the two images, there might be some differences in the appearance of the gyri (raised portions) and sulci (grooves) between the two brains, suggesting potential volume changes.

GPT-4V:虽然我不是病理学家,但从提供的图像来看,这似乎是两个人脑的呈现。脑萎缩的关键特征是脑组织体积明显缩小。对比两幅图像,两个大脑的脑回(隆起部分)和脑沟(凹陷部分)可能存在某些外观差异,暗示着潜在的体积变化。

Criteria: The response exhibits considerable uncertainty, characterized by terms such as might be’ and potential’. Furthermore, the answer lacks clarity and directness. For instance, while the phrase ‘a noticeable reduction in size’ suggests atrophy, the term ‘volume changes is ambiguous regarding whether it denotes expansion or reduction. Consequently, we classify this response from GPT-4V as incorrect.

标准:回答表现出明显的不确定性,使用了"可能是"和"潜在"等模糊表述。此外,答案缺乏清晰性和直接性。例如,"尺寸明显减小"暗示了萎缩,但"体积变化"却未明确说明是扩大还是缩小。因此,我们将GPT-4V的这一回答归类为错误。

Open-ended Radiology VQA

开放式放射学问答

Prompt: [image] What type of imaging does this not represent?

提示: [图像] 这不能代表哪种成像类型?

GPT-4V: This is an X-ray image of the chest. Thus, it does not represent imaging modalities such as MRI (Magnetic Resonance Imaging), CT (Computed Tomography), Ultrasound, or PET (Positron Emission Tomography).

GPT-4V: 这是一张胸部X光片。因此,它不代表MRI (Magnetic Resonance Imaging)、CT (Computed Tomography)、超声或PET (Positron Emission Tomography) 等成像模态。

Criteria: The reference answer is exclusively Ultrasound. In this instance, GPT-4V offers a more comprehensive response that encompasses the reference answer. Thus, this case is categorized as correct for accuracy computation. Notably, even if GPT-4V mentions CT or other correct type only instead of ultrasound, it is still deemed accurate, as GPT-4V distinctly identifies the image as an $\mathrm{X}$ -ray.

标准:参考答案仅为超声检查。本例中GPT-4V提供了包含参考答案的更全面回答,因此该案例在准确率计算中被归类为正确。值得注意的是,即使GPT-4V仅提及CT或其他正确类型而未提及超声,只要其明确将图像识别为X光片($\mathrm{X}$-ray),仍判定为准确。

Criteria for assessing the accuracy of GPT-4V’s responses are as follows:

评估GPT-4V回答准确性的标准如下:

• GPT-4V should directly answer the question and provide the correct response. • GPT-4V does not refuse to answer the question, and its response should encompass key points or semantically equivalent terms. Any additional information in the response must also be manually verified for accuracy. This criterion is particularly applicable to open-ended questions. • Responses from GPT-4V should be devoid of ambiguity. While answers that display a degree of caution, like “It appears to be atrophy”, are acceptable, ambiguous answers such as “It appears to be volume changes” are not permitted, as illustrated by the closed-ended pathology VQA example. • GPT-4V needs to provide comprehensive answers. For instance, if the prompt is “In which two ventricles . . . ” and GPT-4V mentions only one, the answer is considered incorrect. • Multi-round conversations leading to the correct answer are not permissible. This is because they can introduce excessive hints, and the GPT model is intrinsically influenced by user feedback, like statements indicating “Your answer is wrong, . . . ” will mislead the response easily.

• GPT-4V应直接回答问题并提供正确答案。

• GPT-4V不应拒绝回答问题,其回应需涵盖关键点或语义等效术语。若答案包含额外信息,需手动验证其准确性。此标准尤其适用于开放式问题。

• GPT-4V的回应必须避免模糊性。虽然谨慎表述(如"似乎是萎缩")可接受,但模棱两可的答案(如"似乎是体积变化")不符合要求,如封闭式病理学VQA示例所示。

• GPT-4V需提供完整答案。例如,若提示为"哪两个心室..."而GPT-4V仅提及一个,则判定为错误。

• 不允许通过多轮对话引导出正确答案。因这会引入过多提示,且GPT模型易受用户反馈影响(如"你的答案错误..."等陈述会误导回应)。

• OpenAI has documented inconsistent medical responses within the GPT-4V system card 2. This indicates that while GPT-4V may offer correct answers sometimes, it might falter in others. In our study, we permit only a single response from GPT-4V. This approach mirrors real-life medical scenarios where individuals have just one life, underscoring the notion that a virtual doctor like GPT-4V cannot be afforded a second chance.

• OpenAI 在 GPT-4V 系统卡 2 中记录了医疗回答不一致的问题。这表明虽然 GPT-4V 有时能提供正确答案,但也可能在其他情况下出错。在我们的研究中,只允许 GPT-4V 给出单一回答。这种做法模拟了现实医疗场景中个体仅有一次生命的事实,强调了像 GPT-4V 这样的虚拟医生无法获得第二次机会的理念。

To comprehensively assess GPT-4V’s proficiency in medicine, and in light of the absence of an API which necessitates manual testing (thus constraining the s cal ability of our evaluation), we meticulously selected 133 samples. These comprise 56 radiology samples sourced from VQA-RAD [Lau et al., 2018] and PMC-VQA [Zhang et al., 2023b], along with 77 samples from PathVQA [He et al., 2020]. Detailed information about the data, including sample selection and the distribution of question types, can be found in Section 3.

为全面评估GPT-4V在医学领域的专业能力,鉴于缺乏API接口需手动测试(制约了评估的可扩展性),我们精心选取了133个样本。其中包括来自VQA-RAD [Lau et al., 2018] 和PMC-VQA [Zhang et al., 2023b] 的56个放射学样本,以及来自PathVQA [He et al., 2020] 的77个样本。关于数据详情(包括样本筛选与问题类型分布)请参阅第3节。

3 Data Collection

3 数据收集

3.1 Pathology

3.1 病理学

The pathology data collection process commences with obtaining question-answer pairs from PathVQA set [He et al., 2020]. These pairs involve tasks such as recognizing objects in the image and giving clinical advice. Recognizing objects holds fundamental importance for AI models to understand the pathology image. This recognition forms the basis for subsequent assessments.

病理数据收集流程始于从PathVQA数据集[He et al., 2020]获取问答对。这些问答对涉及识别图像中的对象和提供临床建议等任务。识别对象对于AI模型理解病理图像具有基础性意义,该识别过程构成后续评估的基础。

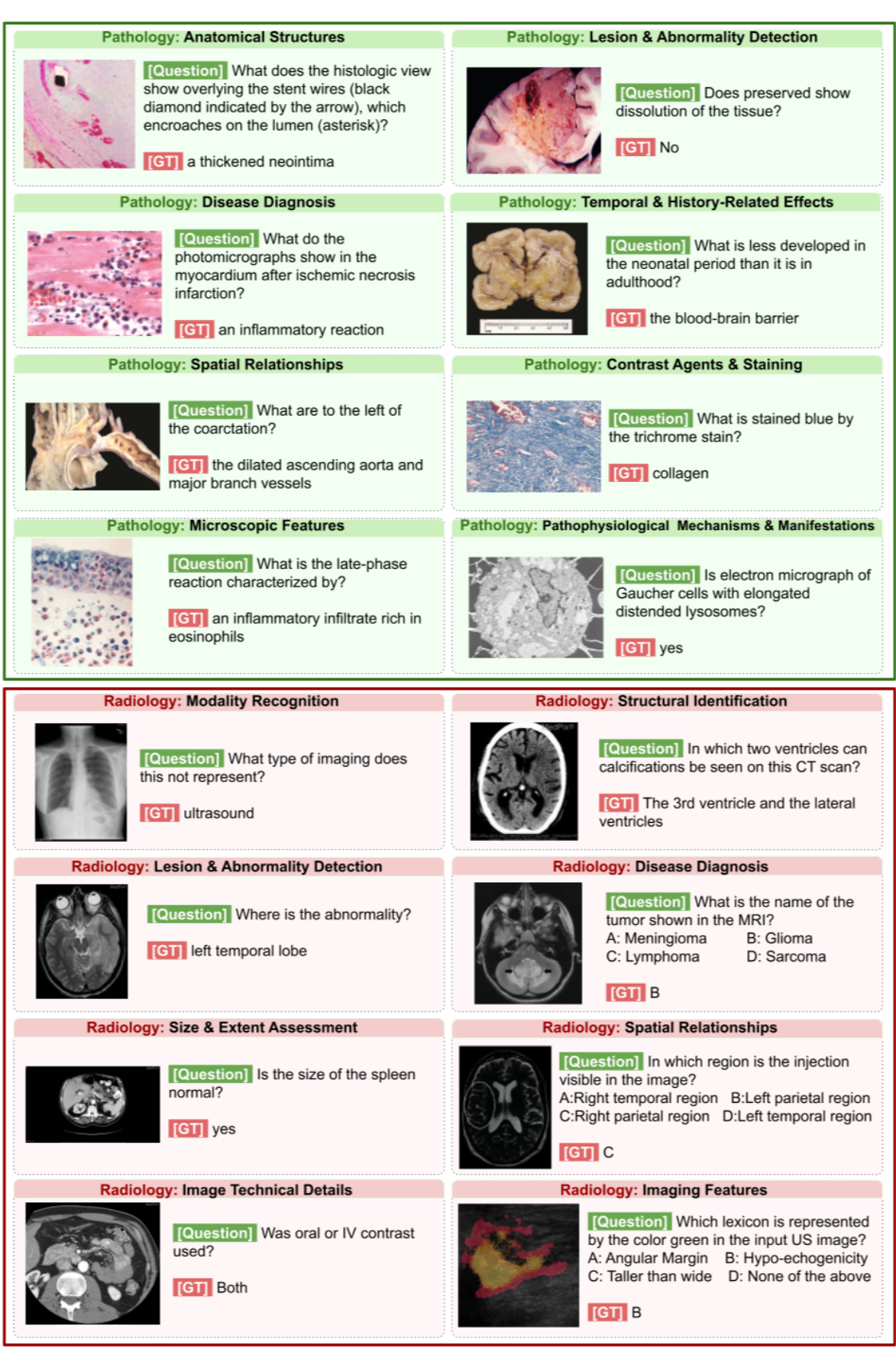

To be more specific, we randomly select 63 representative pathology images, and manually select 77 high quality questions from the corresponding question set. To ensure the diversity of the data, we select images across microscopy, dermoscopy, WSI, with variety objects of interest: brain, liver, skin, cell, Heart, lung, vessel, and kidney, as shown in Table 1. On average, each image has 1.22 questions. The maximum and minimum number of questions for a single image is 5 and 1 respectively. Figure 2 shows some examples.

具体而言,我们随机选取了63张具有代表性的病理图像,并从对应问题集中人工筛选出77个高质量问题。为确保数据多样性,所选图像涵盖显微镜、皮肤镜、全切片数字图像(WSI)等多种类型,关注对象包括脑、肝、皮肤、细胞、心脏、肺、血管和肾脏等不同部位,如表1所示。平均每张图像对应1.22个问题,单张图像最多含5个问题,最少为1个问题。图2展示了部分示例。

There are eight categories of questions: "Anatomical Structures," "Lesion & Abnormality Detection," "Disease Diagnosis," "Temporal & History-Related Effects," "Spatial Relationships," "Contrast Agents & Staining," "Microscopic Features," and "Path o physiological Mechanisms & Manifestations." Table 2 shows the number of questions and percentage of each category. The eight categories encompass a comprehensive range of medical inquiries. "Anatomical Structures" pertain to specific organs or tissues within the body. "Lesion & Abnormality Detection" focus on the identification of unusual imaging findings or pathological abnormalities. "Disease Diagnosis" aims to determine specific medical conditions from given symptoms or findings. "Temporal & History-Related Effects" delve into the progression or changes over time, linking them to past medical events. "Spatial Relationships" address the relative positioning of structures or abnormalities within the body. "Contrast Agents & Staining" relate to the use and interpretation of imaging contrasts or his to logical stains. "Microscopic Features" detail observations made at a cellular level, often in histology or cytology. Finally, "Path o physiological Mechanisms & Manifestations" explore the underpinnings and outcomes of diseases, touching on both their causes and effects.

问题共分为八类:"解剖结构"、"病变与异常检测"、"疾病诊断"、"时间与病史相关效应"、"空间关系"、"造影剂与染色"、"微观特征"以及"病理生理机制与表现"。表2展示了各类问题的数量及占比。这八类问题涵盖了全面的医学问诊范围。"解剖结构"涉及体内特定器官或组织;"病变与异常检测"专注于识别异常影像表现或病理学异常;"疾病诊断"旨在根据给定症状或检查结果确定具体病症;"时间与病史相关效应"探究病情随时间发展的变化及其与既往医疗事件的关联;"空间关系"处理体内结构或异常之间的相对位置;"造影剂与染色"涉及影像造影剂或组织学染色剂的使用与解读;"微观特征"详述细胞层面的观察结果(常见于组织学或细胞学);最后"病理生理机制与表现"探讨疾病的发病机制与临床表现,涵盖病因与病理结果。

The questions are also defined into three difficulty levels: "Easy," "Medium," and "Hard," as shown in Table 4 Questions about recognizing objects in the image are tend to be considered as easy samples. Recognizing objects holds fundamental importance for AI models to understand the pathology image. This recognition forms the basis for subsequent assessments. Medium questions also ask GPT-4V to recognize objects, but with more complicated scenario and less background information. Questions about giving clinical advice are often categorized as challenging due to their demand for a holistic understanding.

问题被划分为三个难度等级:"简单"、"中等"和"困难",如表4所示。关于识别图像中物体的问题往往被视为简单样本。识别物体对AI模型理解病理图像具有基础重要性,这种识别构成了后续评估的基础。中等难度问题同样要求GPT-4V识别物体,但场景更复杂且背景信息更少。由于需要整体理解能力,提供临床建议的问题通常被归类为具有挑战性。

Overall, this approach allows us to comprehensively assess GPT-4V’s performance across a range of pathological scenarios, question types and modes.

总体而言,这种方法使我们能够全面评估GPT-4V在一系列病理场景、问题类型和模式中的表现。

3.2 Radiology

3.2 放射学

The radiology data collection process commences with obtaining modality-related question-answer pairs from VQA-RAD dataset [Lau et al., 2018]. These pairs involve tasks such as determining the imaging type and identifying the medical devices employed for capturing radiological images. Recognizing imaging types holds fundamental importance in the development of radiology AI models. This recognition forms the basis for subsequent assessments, including evaluations of imaging density, object size, and other related parameters. To ensure the diversity of modality-related data, we select 10 images across X-ray, CT and MRI, and different anatomies: head, chest, and abdomen.

放射学数据收集流程始于从VQA-RAD数据集[Lau et al., 2018]获取模态相关的问答对。这些问答对涉及确定成像类型和识别用于捕捉放射学图像的医疗设备等任务。识别成像类型对于放射学AI模型的开发具有基础性意义,它为后续评估(包括成像密度、物体大小和其他相关参数的评估)奠定了基础。为确保模态相关数据的多样性,我们选取了X光、CT和MRI三种成像方式以及头部、胸部和腹部不同解剖部位的10张图像。

Figure 2: VQA samples from both pathology set and radiology set. Samples of pathology set are in green boxes, while radiology samples are in red boxes. Each question comes with a prompt, directing GPT-4V to consider both the visual and textual data. Questions and their corresponding ground truth answers are denoted with [Question] and [GT] respectively.

图 2: 病理学数据集和放射学数据集中的视觉问答(VQA)样本。病理学样本用绿色框标注,放射学样本用红色框标注。每个问题都附带提示词,引导GPT-4V同时考虑视觉和文本数据。问题及其对应的真实答案分别用[Question]和[GT]标注。

Table 1: Dataset evaluated in this paper. "Num. Pairs" refers to the number of text-image pairs of each dataset.

| Dataset | SourceData | Image Modality | Objects of interestText Category|Num.Pairs | ||

| PathVQA | Pathology Questions for Medical VisualQuestionAnswering [He et al.,2020] | Microscopy, Dermoscopy, WSI, Endoscopic Video | Brain, Liver, Skin, Cell, Heart,Lung, Vessel, Kidney | Closed-ended, Open-ended | 77 |

| VQA-RAD | Cliniciansaskednaturally occurring questions of radiology images and providedreference answers. [Lau et al.,2018] | X-Ray, CT, MRI | Chest, Head, Abdomen | Closed-ended, Open-ended | 37 |

| PMC-VQA | MixtureofmedicalVQAs fromPubmedCentral? [Zhang et al.,2023b]. We only select radiology-related pairs in this report. | ECHO, Angiography, Ultrasound, MRI, PET | Neck,Heart, Kidney, Lung, Head, Abdomen, Pelvic,Jaw, Vessel | Closed-ended, Open-ended | 19 |

表 1: 本文评估的数据集。"Num. Pairs"指各数据集的文本-图像对数量。

| Dataset | SourceData | Image Modality | Objects of interestText Category | Num.Pairs |

|---|---|---|---|---|

| PathVQA | 医学视觉问答病理学问题 [He et al.,2020] | 显微镜、皮肤镜、全切片图像、内窥镜视频 | 脑、肝、皮肤、细胞、心、肺、血管、肾 | 封闭式、开放式 |

| VQA-RAD | 临床医生对放射影像提出的自然问题及参考答案 [Lau et al.,2018] | X光、CT、MRI | 胸部、头部、腹部 | 封闭式、开放式 |

| PMC-VQA | 来自Pubmed Central的混合医学VQA [Zhang et al.,2023b]。本报告仅选取放射学相关数据对 | 超声心动图、血管造影、超声、MRI、PET | 颈部、心脏、肾脏、肺、头部、腹部、骨盆、颌部、血管 | 封闭式、开放式 |

Table 2: Statistics of the pathology data based on question type.

| QuestionType | TotalNumber | Percentage |

| AnatomicalStructures | 9 | 11.69% |

| Lesion&AbnormalityDetection | 10 | 12.99% |

| DiseaseDiagnosis | 12 | 15.58% |

| Temporal&History-RelatedEffects | 6 | 7.79% |

| SpatialRelationships | 3 | 3.90% |

| ContrastAgents&Staining | 8 | 10.39% |

| MicroscopicFeatures | 16 | 20.78% |

| PathophysiologicalMechanisms&Manifestations | 13 | 16.88% |

表 2: 基于问题类型的病理数据统计

| 问题类型 | 总数 | 百分比 |

|---|---|---|

| 解剖结构 (Anatomical Structures) | 9 | 11.69% |

| 病变与异常检测 (Lesion & Abnormality Detection) | 10 | 12.99% |

| 疾病诊断 (Disease Diagnosis) | 12 | 15.58% |

| 时间与病史相关影响 (Temporal & History-Related Effects) | 6 | 7.79% |

| 空间关系 (Spatial Relationships) | 3 | 3.90% |

| 造影剂与染色 (Contrast Agents & Staining) | 8 | 10.39% |

| 显微特征 (Microscopic Features) | 16 | 20.78% |

| 病理生理机制与表现 (Pathophysiological Mechanisms & Manifestations) | 13 | 16.88% |

To ensure the diversity of modality-related data, we selected 10 images from various anatomical regions, including the head, chest, and abdomen, representing different imaging modalities such as X-ray, CT, and MRI. In our continued exploration of GPT-4V’s capabilities, we employed three representative images corresponding to modality-related pairs while utilizing the remaining questions. We observed instances where GPT-4V exhibited misunderstandings, particularly in responding to position- and size-related inquiries. To address these challenges, we further selected 10 size-related pairs from VQA-RAD and 2 position-related pairs from VQA-RAD, supplemented by 6 positionrelated pairs from PMC-VQA [Zhang et al., 2023b].

为确保模态相关数据的多样性,我们从头部、胸部和腹部等不同解剖区域选取了10张图像,涵盖X光、CT和MRI等多种成像模态。在持续探索GPT-4V能力的过程中,我们使用了3张具有代表性的模态相关配对图像,同时利用剩余问题进行测试。发现GPT-4V在响应位置和尺寸相关问题时存在误解现象。为此,我们额外从VQA-RAD中选取10组尺寸相关配对数据,从VQA-RAD选取2组位置相关配对数据,并补充了PMC-VQA [Zhang et al., 2023b]中的6组位置相关配对数据。

We meticulously filtered these two datasets, manually selecting questions to balance the question types in terms of "Modality Recognition," "Structural Identification," "Lesion & Abnormality Detection," "Disease Diagnosis," "Size & Extent Assessment," ’Spatial Relationships,’ ’Image Technical Details,’ and "Imaging Features," as well as varying the difficulty levels. To be more specific, "Modality Recognition" discerns the specific imaging modality, such as CT, MRI, or others. "Structural Identification" seeks to pinpoint specific anatomical landmarks or structures within the captured images. "Lesion & Abnormality Detection" emphasizes the identification of anomalous patterns or aberrations. "Disease Diagnosis" aspires to deduce specific medical conditions based on imaging manifestations. "Size & Extent Assessment" gauges the dimensions and spread of a lesion or abnormality. "Spatial Relationships" examines the relative positioning or orientation of imaged structures. "Image Technical Details" delves into the nuances of the imaging process itself, such as contrast utilization or image orientation. Lastly, "Imaging Features" evaluates characteristic patterns, textures, or attributes discernible in the image, pivotal for diagnostic interpretation. For difficulty level, similar to pathology data, questions related to diagnostics are often categorized as challenging due to their demand for a holistic understanding. This comprehension necessitates a deep grasp of preliminary aspects, including modality, objects, position, size, and more. Furthermore, it requires the ability to filter and extract key medical knowledge essential for accurate diagnostics.

我们精心筛选了这两个数据集,手动选择问题以平衡"模态识别"、"结构辨识"、"病变与异常检测"、"疾病诊断"、"范围评估"、"空间关系"、"图像技术细节"和"影像特征"等题型,并设置不同难度等级。具体而言:"模态识别"用于辨别特定成像模态(如CT、MRI等);"结构辨识"旨在定位图像中的解剖标志或结构;"病变与异常检测"侧重识别异常模式;"疾病诊断"需根据影像表现推断具体病症;"范围评估"量化病灶尺寸和扩散程度;"空间关系"分析成像结构的相对位置;"图像技术细节"探讨成像过程的技术参数(如对比度使用、图像方位);"影像特征"评估图像中可辨别的关键模式与纹理特征。难度划分参照病理学数据标准,诊断类问题因需要综合理解模态、对象、位置、尺寸等基础要素,并具备筛选关键医学知识的能力,通常归类为高难度题型。

Table 3: Statistics of the radiology data based on question type.

| Question Type | TotalNumber | Percentage |

| Modality Recognition | 8 | 14.29% |

| StructuralIdentification | 12 | 21.43% |

| Lesion& AbnormalityDetection | 12 | 21.43% |

| Disease Diagnosis | 5 | 8.93% |

| Size&ExtentAssessment | 9 | 16.07% |

| Spatial Relationships | 4 | 7.14% |

| Image Technical Details | 3 | 5.36% |

| ImagingFeatures | 3 | 5.36% |

表 3: 基于问题类型的放射学数据统计。

| 问题类型 | 总数 | 百分比 |

|---|---|---|

| 模态识别 (Modality Recognition) | 8 | 14.29% |

| 结构识别 (Structural Identification) | 12 | 21.43% |

| 病变与异常检测 (Lesion & Abnormality Detection) | 12 | 21.43% |

| 疾病诊断 (Disease Diagnosis) | 5 | 8.93% |

| 尺寸与范围评估 (Size & Extent Assessment) | 9 | 16.07% |

| 空间关系 (Spatial Relationships) | 4 | 7.14% |

| 图像技术细节 (Image Technical Details) | 3 | 5.36% |

| 影像特征 (Imaging Features) | 3 | 5.36% |

Table 4: Data statistics based on difficulty levels for the pathology and radiology sets.

| Pathology | Radiology | ||||

| Difficulty | Total Number | Percentage | Difficulty | Total Number | Percentage |

| Easy | 20 | 26.0% | Easy | 16 | 28.6% |

| Medium | 33 | 42.9% | Medium | 22 | 39.3% |

| peH | 24 | 31.2% | peH | 18 | 32.1% |

表 4: 病理学和放射学数据集基于难度级别的数据统计。

| 难度 | 总数 | 百分比 | 难度 | 总数 | 百分比 |

|---|---|---|---|---|---|

| 简单 | 20 | 26.0% | 简单 | 16 | 28.6% |

| 中等 | 33 | 42.9% | 中等 | 22 | 39.3% |

| peH | 24 | 31.2% | peH | 18 | 32.1% |

In summary, this experiment encompasses a total of 56 radiology VQA samples, with 37 samples sourced from VQA-RAD and 19 samples from PMC-VQA. This approach allows us to comprehensively assess GPT-4V’s performance across a range of radiological scenarios, question types and modes.

总结来说,本次实验共包含56个放射学VQA样本,其中37个样本来自VQA-RAD,19个样本来自PMC-VQA。这种方法使我们能够全面评估GPT-4V在各种放射学场景、问题类型和模式下的表现。

4 Experimental Results

4 实验结果

4.1 Pathology Accuracy

4.1 病理学准确性

Figure 3 shows the accuracy achieved in the pathology VQA task. Overall accuracy score is $29.9%$ , which means that GPT-4V can not give accurate and effecient diagnosis at present. To be specific, GPT-4V shows $35.3%$ performance in closed-ended questions. It performances worse than random guess (where the accuracy performance is $50%$ ). This means that the answer generated by GPT-4V is not clinically meaningful. Accuracy score on open-ended questions reflects GPT-4V’s capability in understanding and inferring key aspects in medical images. This categorization rationalizes GPT-4V’s comprehension of objects, locations, time, and logic within the context of medical image analysis, showcasing its versatile capabilities. As can be seen in Figure 3, the score is relatively low. Considering this sub-set is quite challenging [He et al., 2020], the result is acceptable.

图 3: 展示了病理学视觉问答(VQA)任务中达到的准确率。总体准确率为 $29.9%$ ,这表明目前GPT-4V尚不能提供准确有效的诊断。具体而言,GPT-4V在封闭式问题中表现仅为 $35.3%$ ,甚至低于随机猜测(准确率为 $50%$ ),说明其生成的答案缺乏临床意义。开放式问题的准确率反映了GPT-4V在理解和推断医学图像关键信息方面的能力,这种分类方式展现了其在医学图像分析中对物体、位置、时间和逻辑关系的多维度理解。如图3所示,该分数相对较低,但考虑到该子任务本身具有较高挑战性 [He et al., 2020],这一结果尚可接受。

Meanwhile, we collect the QA pairs in a hierarchy method that all pairs are divided into three difficulty levels. As shown in Figure 3, the accuracy score in "Easy" set is $75.00%$ , higher than the accuracy in medium set by $59.80%$ . The hard set gets the lowest accuracy, at $8.30%$ . The accuracy score experiences a decrease along with the increase of the difficulty level, which shows the efficiency and high quality of our collected data. The result demonstrates GPT-4V’s proficiency in basic medical knowledge, including recognition of numerous specialized terms and the ability to provide definitions. Moreover, GPT-4V exhibits traces of medical diagnostic training, attempting to combine images with its medical knowledge to address medical questions. It also displays fundamental medical literacy, offering correct responses to straightforward medical queries. However, there is significant room for improvement, particularly as questions become more complex and closely resemble real clinical scenarios.

与此同时,我们采用分级方法收集问答对,将所有问题划分为三个难度等级。如图3所示,"简单"组的准确率为$75.00%$,比中等难度组高出$59.80%$。困难组的准确率最低,仅为$8.30%$。准确率随着难度提升呈现下降趋势,这证明我们收集的数据具有高效性和高质量。结果表明GPT-4V在基础医学知识方面表现优异,包括识别大量专业术语并提供定义的能力。此外,GPT-4V展现出医学诊断训练的痕迹,能够尝试结合图像与医学知识来解答医疗问题。它还具备基础医学素养,能对简单医疗问题给出正确答案。但当问题复杂度增加、更接近真实临床场景时,其表现仍有显著提升空间。

Figure 3: Results of pathology VQA task. The bar chart on the left is related to the accuracy result of questions with different difficulty levels, while the right chart is the results of closed-ended questions and open-ended questions, marked as Closed and Open, respectively.

图 3: 病理学 VQA (Visual Question Answering) 任务结果。左侧条形图展示了不同难度问题的准确率结果,右侧图表分别标注为封闭式问题 (Closed) 和开放式问题 (Open) 的结果。

Figure 4: Results of radiology VQA task. On the left, we have a bar chart showcasing the accuracy results for questions of varying difficulty levels. Meanwhile, on the right, outcomes for closedended and open-ended questions are presented in separate charts.

图 4: 放射学VQA任务结果。左侧条形图展示了不同难度级别问题的准确率结果,右侧则分别在两个图表中呈现了封闭式和开放式问题的结果。

4.2 Radiology Accuracy

4.2 放射学准确性

The accuracy results for the VQA task within radiology are presented in Figure 4. To be more precise, the overall accuracy score for the entire curated dataset stands at $50.0%$ . To present the GPT-4V’s capability in different fine-grained views, we will show the accuracy in terms of question types (open and closed-end), difficulty levels (easy, medium, and hard), and question modes (modality, size, position, . . . ) in the following. In more specific terms, GPT-4V achieves a $50%$ accuracy rate for 16 open-ended questions and a $50%$ success rate for 40 closed-ended questions with limited choices. This showcases GPT-4V’s adaptability in handling both free-form and closed-form VQA tasks, although its suitability for real-world applications may be limited.

放射学领域VQA任务的准确率结果如图4所示。更具体地说,整个精选数据集的总体准确率为$50.0%$。为展示GPT-4V在不同细粒度视图中的能力,下文将按问题类型(开放式与封闭式)、难度级别(简单、中等、困难)和问题模式(模态、尺寸、位置等)分别呈现准确率。具体而言,GPT-4V在16道开放式问题中达到$50%$的准确率,在40道有限选项的封闭式问题中取得$50%$的正确率。这表明GPT-4V在处理自由形式和封闭形式的VQA任务时均具备适应性,但其在实际应用中的适用性可能有限。

It’s worth noting that closed-ended questions, despite narrowing the possible answers, do not necessarily make them easier to address. To further explore GPT-4V’s performance across varying difficulty levels, we present its accuracy rates: $81.25%$ for easy questions, $59.09%$ for medium questions, and a mere $11.11%$ for hard questions within the medical vision-language domain. Easy questions often revolve around modality judgments, like distinguishing between CT and MRI scans, and straightforward position-related queries, such as object positioning. For an in-depth explanation of our question difficulty categorization method, please refer to Section 3.

值得注意的是,封闭式问题虽然限定了可能的答案范围,但并不意味着更容易回答。为了进一步探究GPT-4V在不同难度级别上的表现,我们列出了其准确率:在医学视觉语言领域,简单问题的准确率为$81.25%$,中等难度问题为$59.09%$,而困难问题仅为$11.11%$。简单问题通常涉及模态判断(如区分CT和MRI扫描)以及直接与位置相关的查询(如物体定位)。关于问题难度分类方法的详细说明,请参阅第3节。

Position and size are foundational attributes that hold pivotal roles across various medical practices, particularly in radiological imaging: Radiologists depend on accurate measurements and spatial data for diagnosing conditions, tracking disease progression, and formulating intervention strategies. To assess GPT-4V’s proficiency in addressing issues related to positioning and size within radiology, we specifically analyzed 8 position-related questions and 9 size-related questions. The model achieved an accuracy rate of $62.50%$ for position-related queries and $55.56%$ for size-related queries.

位置和大小是各类医疗实践中的基础属性,在放射影像领域尤为重要:放射科医师依赖精确测量和空间数据进行疾病诊断、追踪病情发展及制定干预策略。为评估GPT-4V处理放射学中位置与大小相关问题的能力,我们专门分析了8道位置相关题目和9道大小相关题目。该模型在位置相关问题的准确率为$62.50%$,大小相关问题的准确率为$55.56%$。

The lower accuracy observed for position-related questions can be attributed to two primary factors. Firstly, addressing these questions often requires a background understanding of the directional aspects inherent in medical imaging, such as the AP or PA view of a chest $\Delta$X -ray. Secondly, the typical workflow for such questions involves first identifying the disease or infection and then matching it with its respective position within the medical image. Similarly, the reduced accuracy in responding to size-related questions can be attributed to the model’s limitations in utilizing calibrated tools. GPT-4V appears to struggle in extrapolating the size of a region or object of interest, especially when it cannot draw upon information about the sizes of adjacent anatomical structures or organs. These observations highlight the model’s current challenges in dealing with positioning and sizerelated queries within the radiological context, shedding light on areas where further development and fine-tuning may be needed to enhance its performance in this domain.

位置相关问题的准确率较低可归因于两个主要因素。首先,解答这类问题通常需要理解医学影像中固有的方向性概念,例如胸部X光片( $\Delta$X )的AP位或PA位。其次,此类问题的典型处理流程需要先识别疾病或感染区域,再将其与医学影像中的对应位置进行匹配。类似地,尺寸相关问题准确率下降的原因在于模型缺乏使用校准工具的能力。GPT-4V在推算目标区域或物体尺寸时表现欠佳,尤其是当无法参考邻近解剖结构或器官的尺寸信息时。这些发现揭示了该模型在放射学场景中处理定位与尺寸查询时面临的挑战,为需要进一步开发和优化的领域指明了方向。

In the following, we will carefully select specific cases to provide in-depth insights into GPT-4V’s capabilities within various fine-grained perspectives.

以下我们将精选具体案例,从多个细粒度视角深入剖析GPT-4V的能力。

5 Features of GPT-4V with Case Studies

5 个 GPT-4V 功能特性及案例研究

5.1 Requiring Cues for Accurate Localization

5.1 需要线索以实现准确定位

In medical imaging analysis, the accurate determination of anatomical positioning and localization is crucial. Position is typically established based on the viewpoint, with standard conventions governing this perspect