XrayGPT: Chest Radiographs Summarization using Large Medical Vision-Language Models

Omkar Thawkar1 Abdelrahman Shaker1 Sahal Shaji Mullappilly1 Hisham Cholakkal1 Rao Muhammad Anwer1,2 Salman Khan1 Jorma Laaksonen2 Fahad Shahbaz Khan1

1Mohamed bin Zayed University of AI 2Aalto University

Abstract

The latest breakthroughs in large vision-language models, such as Bard and GPT-4, have showcased extraordinary abilities across a wide range of tasks. Such models are trained on massive datasets comprising billions of public image-text pairs covering diverse tasks. However, their performance in task-specific domains such as radiology is still under-investigated and potentially limited by an insufficiently sophisticated understanding of biomedical images. Conversational medical models, on the other hand, have achieved remarkable success but have mainly focused on text-based analysis. In this paper, we introduce XrayGPT, a novel conversational medical vision-language model that can analyze and answer open-ended questions about chest radiographs. Specifically, we align a medical visual encoder (MedClip) with a fine-tuned large language model (Vicuna) using a simple linear transformation. This alignment gives our model exceptional visual conversation abilities grounded in a deep understanding of radiographs and medical domain knowledge. To improve the performance of LLMs in the medical context, we generate 217k interactive, high-quality summaries from free-text radiology reports and use them to fine-tune the model. Our approach opens up new avenues for research on the automated analysis of chest radiographs. Our open-source demos, models, and instruction sets are available at: https://github.com/mbzuai-oryx/XrayGPT

1 Introduction

Large-scale vision-language models have emerged as a transformative area of research at the intersection of computer vision and natural language processing, enabling machines to understand and generate information across visual and textual modalities. These models bridge the gap between visual perception and language comprehension and have demonstrated remarkable capabilities across various tasks, including image captioning (Hossain et al., 2019), visual question answering (Lu et al., 2023), and visual commonsense reasoning (Zellers et al., 2019). Training these models requires vast amounts of image and text data, from which they learn rich representations that capture the intricacies of both modalities. In addition, fine-tuning on task-specific data can better align the models with specific end tasks and user preferences. Recently, Bard and GPT-4 have demonstrated impressive capabilities on various tasks, generating excitement in both the research community and industry. However, neither Bard nor GPT-4 is currently open-source, which limits access to their underlying architecture and implementation details.

Recently, Mini-GPT (Zhu et al., 2023) has demonstrated a range of impressive capabilities by aligning vision and language models. It excels at generating contextual descriptions of a given image. However, it is less effective in medical scenarios because medical image-text pairs differ significantly from general web content. Adopting vision-text pre-training in the medical domain is challenging for two reasons: (1) Lack of data: Mini-GPT trains its projection layer on a dataset of 5M image-text pairs, whereas the total number of publicly available medical images and reports is orders of magnitude smaller. (2) Different modalities and domains: whereas Mini-GPT may need to distinguish broad categories such as "Person" and "Car", distinctions within the medical domain are far more subtle and fine-grained. For instance, differentiating between "Pneumonia" and "Pleural Effusion" requires greater precision, which depends on capturing and aligning crucial medical domain knowledge.

Chest radiographs are vital for clinical decision-making, as they offer essential diagnostic and prognostic insights into patients' health. Text summarization can partially address this need by providing meaningful information and summaries based on the given radiology reports. Our approach goes beyond traditional summarization by providing concise summaries that highlight the key findings and the overall impression for a given X-ray. Additionally, our model supports interactive engagement, allowing users to ask follow-up questions about the answers it provides. With this study, we aim to stimulate research on the automated analysis of chest radiographs from X-ray images. We also argue that, for vision and large language models, the knowledge acquired during pre-training must be complemented by a domain-specific, high-quality instruction set derived from task-specific data to achieve promising results. The main contributions of our work are:

• The LLM (Vicuna) is fine-tuned on medical data (100k real conversations between patients and doctors) and 20k radiology conversations to acquire domain-specific, relevant features.
• We generate 217k interactive and clean summaries from the free-text radiology reports of two datasets: MIMIC-CXR (Johnson et al., 2019) and OpenI (Demner-Fushman et al., 2015). These summaries improve the performance of LLMs by fine-tuning the linear transformation layer on high-quality data.
• We align the frozen, specialized medical visual encoder (MedClip) with a fine-tuned LLM (Vicuna) using a simple linear transformation, enabling the model to understand medical meanings and acquire remarkable visual conversation abilities.
• To advance research in biomedical multimodal learning, we open-source our assets to the community: the codebase, the fine-tuned models, the high-quality instruction set, and the training recipe for data generation and model training are publicly released.

2 Related Work

Medical Chatbot. Medical chatbots have emerged as valuable tools in healthcare, providing personalized support, information, and assistance to patients and healthcare professionals. The recently introduced ChatDoctor (Li et al., 2023) is a next-generation AI doctor model based on LLaMA (Touvron et al., 2023). Its goal is to provide patients with an intelligent and reliable healthcare companion that can answer their medical queries and offer personalized medical advice. Following the success of ChatGPT (OpenAI, 2022), GPT-4 (OpenAI, 2023), and other open-source LLMs (Touvron et al., 2023; Chiang et al., 2023; Taori et al., 2023), many medical chatbots have been introduced, such as MedAlpaca (Han et al., 2023), PMC-LLaMA (Wu et al., 2023), and DoctorGLM (Xiong et al., 2023). These models build on open-source LLMs fine-tuned on medical instructions. Such studies highlight the potential of medical chatbots to improve patient engagement and health outcomes through interactive, personalized conversations. Overall, medical chatbots offer promising opportunities for AI in healthcare even though they use only the text modality, and ongoing research focuses on refining their capabilities with ethical considerations in mind.

Large Language Vision Models. A significant area of research in natural language processing (NLP) and computer vision is the exploration of Large Language-Vision Model (LLVM) learning techniques. LLVMs aim to bridge the gap between visual and textual information, enabling machines to understand and generate content that combines both modalities. Recent studies have demonstrated the potential of LLVMs in various tasks, such as image captioning (Zhu et al., 2023), visual question answering (Bazi et al., 2023; Liu et al., 2023; Muhammad Maaz and Khan, 2023), and image generation (Zhang and Agrawala, 2023).

3 Method

XrayGPT is a conversational medical vision-language model developed specifically for analyzing chest radiographs. The core idea is to align medical visual and textual representations so that, using our generated high-quality data, the model can hold meaningful conversations about these radiographs. Our approach draws inspiration from the design of general vision-language models but focuses specifically on the medical domain. Because medical image-summary pairs are scarce, we build on a pre-trained medical vision encoder (VLM) and a medical large language model (LLM) as our foundation. Fine-tuning then aligns the two modalities on high-quality image-summary pairs through a simple transformation layer. This alignment enables XrayGPT to generate insightful conversations about chest radiographs, providing valuable insights for medical professionals. By reusing pre-existing resources and fine-tuning only specific components, we optimize the model's performance while minimizing the need for extensive training on scarce data.

3.1 Model Architecture

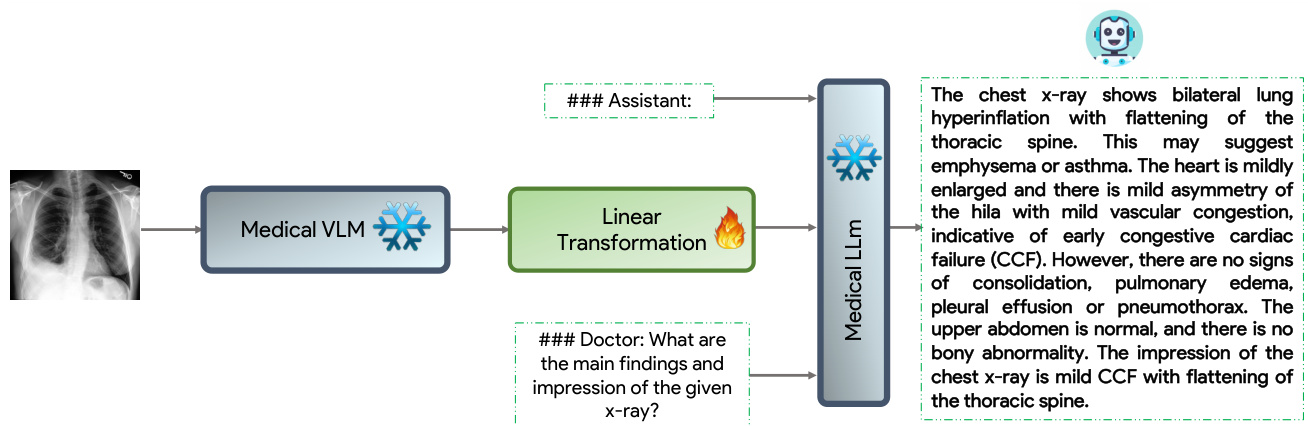

Fig. 1 shows an overview of XrayGPT. Given an X-ray, we align the visual features from a pre-trained medical vision encoder (VLM) with the textual information of a medical large language model (LLM). Specifically, we use MedClip (Wang et al., 2022) as the visual encoder, and our LLM is built upon the recent Vicuna (Chiang et al., 2023).

Given an X-ray $\mathbf{x}\in \mathbb{R}^{H\times W\times C}$, the vision encoder $E_{img}$ encodes the image into embeddings. The raw embeddings are then mapped to a 512-dimensional output by a linear projection head:

$$
\mathbf{V}_{p}=f_{v}(E_{img}(\mathbf{x}))
$$

where $E_{img}$ is the vision encoder and $f_{v}$ is the projection head.
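
The encoding step $\mathbf{V}_{p}=f_{v}(E_{img}(\mathbf{x}))$ can be illustrated with a minimal plain-Python sketch. It is not MedClip: `toy_encoder` is a hypothetical stand-in that average-pools each channel, and the `Linear` projection head is randomly initialized; only the 512-dimensional output size comes from the text.

```python
import random
from typing import List

Image = List[List[List[float]]]  # H x W x C nested lists


def toy_encoder(x: Image) -> List[float]:
    """Hypothetical stand-in for E_img: average-pool each channel over H and W."""
    h, w, c = len(x), len(x[0]), len(x[0][0])
    sums = [0.0] * c
    for row in x:
        for pixel in row:
            for k in range(c):
                sums[k] += pixel[k]
    return [s / (h * w) for s in sums]


class Linear:
    """Dense layer y = W v + b, playing the role of the projection head f_v."""

    def __init__(self, in_dim: int, out_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)]
                       for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, v: List[float]) -> List[float]:
        return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b
                for row, b in zip(self.weight, self.bias)]


def encode_xray(x: Image, f_v: Linear) -> List[float]:
    """V_p = f_v(E_img(x))."""
    return f_v(toy_encoder(x))


# Usage: a 4x4 single-channel "X-ray" projected to 512 dimensions.
xray = [[[0.5] for _ in range(4)] for _ in range(4)]
f_v = Linear(in_dim=1, out_dim=512)
v_p = encode_xray(xray, f_v)
print(len(v_p))  # 512
```

In the actual model, $E_{img}$ would be MedClip's pre-trained (and frozen) image backbone; the sketch only shows how the two functions compose.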

To bridge the gap between image-level features and the language decoder's embedding space, we employ a trainable linear transformation layer, denoted $t$. This layer projects the image-level features $\mathbf{V}_{p}$ into corresponding language embedding tokens $\mathbf{L}_{v}$:

$$
\mathbf{L}_{v}=t(\mathbf{V}_{p})
$$
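
The mapping $\mathbf{L}_{v}=t(\mathbf{V}_{p})$ can be sketched as a single dense layer. This sketch assumes, hypothetically, that $\mathbf{V}_{p}$ arrives as a list of 512-dimensional vectors (one per visual token) and that $t$ is shared across them; the number of visual tokens and the LLM width `d_model` are illustrative values, not figures from the text.

```python
import random
from typing import List

Vector = List[float]


class LinearTransform:
    """Trainable linear layer t: R^in_dim -> R^d_model, shared by all visual tokens."""

    def __init__(self, in_dim: int, d_model: int, seed: int = 0):
        rng = random.Random(seed)
        self.in_dim = in_dim
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)]
                       for _ in range(d_model)]
        self.bias = [0.0] * d_model

    def __call__(self, v: Vector) -> Vector:
        if len(v) != self.in_dim:
            raise ValueError(f"expected a {self.in_dim}-d input, got {len(v)}")
        return [sum(w * x for w, x in zip(row, v)) + b
                for row, b in zip(self.weight, self.bias)]


def to_language_tokens(v_p: List[Vector], t: LinearTransform) -> List[Vector]:
    """L_v = t(V_p), applied row-wise: one LLM input embedding per visual token."""
    return [t(v) for v in v_p]


# Usage with small, hypothetical sizes: 3 visual tokens, LLM width 8.
t = LinearTransform(in_dim=512, d_model=8)
v_p = [[0.1] * 512 for _ in range(3)]
l_v = to_language_tokens(v_p, t)
print(len(l_v), len(l_v[0]))  # 3 8
```

Because $t$ is a single linear map, it is cheap to train: with a 512-dimensional input and, purely as an illustration, a hidden size of 4096 (the width of 7B LLaMA-family models), it would hold 512 × 4096 + 4096 = 2,101,248 parameters.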

Our architecture uses two text queries. The first, denoted ###Assistant, determines the system role, which in our case is defined as "You are a helpful healthcare virtual assistant." The second, ###Doctor, carries the prompt itself. Both queries are tokenized and embedded, yielding the text embeddings $\mathbf{L}_{t}$. Finally, $\mathbf{L}_{v}$ is concatenated with $\mathbf{L}_{t}$ and fed into the medical LLM (the fine-tuned Vicuna), which generates the summary of the chest X-ray.
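
A toy version of this input assembly follows. `toy_tokenize` and `toy_embed` are hypothetical stand-ins for Vicuna's tokenizer and embedding table, and the layout (system prompt, `###Doctor:`, visual tokens, question, `###Assistant:`) is an assumption based on the conversation format in Sec. 3.2; the text states only that $\mathbf{L}_{v}$ is concatenated with $\mathbf{L}_{t}$.

```python
from typing import List, Sequence

Vector = List[float]

SYSTEM_PROMPT = "You are a helpful healthcare virtual assistant."


def toy_tokenize(text: str) -> List[str]:
    """Hypothetical whitespace tokenizer standing in for Vicuna's tokenizer."""
    return text.split()


def toy_embed(tokens: Sequence[str], d_model: int) -> List[Vector]:
    """Hypothetical embedding lookup: a constant vector equal to the token length."""
    return [[float(len(tok))] * d_model for tok in tokens]


def build_llm_input(l_v: List[Vector], question: str, d_model: int) -> List[Vector]:
    """Concatenate text embeddings (L_t) with visual tokens (L_v).

    Assumed layout: system prompt, '###Doctor:', L_v, question, '###Assistant:'.
    """
    prefix = toy_tokenize(SYSTEM_PROMPT + " ###Doctor:")
    suffix = toy_tokenize(question + " ###Assistant:")
    return toy_embed(prefix, d_model) + l_v + toy_embed(suffix, d_model)


# Usage: two zero-valued visual tokens between the prompt segments.
d_model = 4
l_v = [[0.0] * d_model for _ in range(2)]
question = "What are the main findings and impression of the given X-ray?"
seq = build_llm_input(l_v, question, d_model)
print(len(seq))  # 8 prefix + 2 visual + 12 suffix = 22
```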

XrayGPT follows a two-stage training approach. In the first stage, we train the model on interactive summaries of MIMIC-CXR (Johnson et al., 2019) reports. In the second stage, we use high-quality, curated interactive summaries of OpenI (Demner-Fushman et al., 2015) reports. The findings in MIMIC-CXR reports contain information about patient history, which adds noise to the data. To mitigate this effect, the second stage uses the small yet effective set of interactive OpenI report summaries to make the model robust to this noise.

3.2 Image-text alignment

To align the generated high-quality summaries with the given X-ray, we adopt a conversational format similar to that of the Vicuna (Chiang et al., 2023) language model:

###Doctor: $X_{R}\,X_{Q}$ ###Assistant: $X_{S}$

where $X_{R}$ is the visual representation produced by the linear transformation layer for image $X$, $X_{Q}$ is a sampled question (e.g., "What are the main findings and impression of the given X-ray?"), and $X_{S}$ is the summary associated with image $X$. In this way, we curate image-text pairs with detailed and informative interactive summaries.
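
Building one training example in this format can be sketched as below. `<ImageFeature>` marks where the visual tokens $X_{R}$ would be spliced in; apart from the example question quoted above, the question pool is hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List

IMAGE_PLACEHOLDER = "<ImageFeature>"  # where the visual tokens X_R are spliced in

# The first question is quoted in the text; the other two are hypothetical.
QUESTIONS: List[str] = [
    "What are the main findings and impression of the given X-ray?",
    "Could you summarize the findings of this chest X-ray?",
    "What does this chest radiograph show?",
]


@dataclass
class Sample:
    prompt: str  # "###Doctor: X_R X_Q ###Assistant:"
    target: str  # X_S, the summary the LLM should generate

    def full_text(self) -> str:
        return f"{self.prompt} {self.target}"


def make_sample(summary: str, rng: random.Random) -> Sample:
    """Pair one image summary with a randomly sampled question X_Q."""
    question = rng.choice(QUESTIONS)
    prompt = f"###Doctor: {IMAGE_PLACEHOLDER} {question} ###Assistant:"
    return Sample(prompt=prompt, target=summary)


sample = make_sample("No acute intrathoracic process.", random.Random(0))
print(sample.full_text())
```

Keeping the prompt and the target separate makes it easy to compute the training loss only on the $X_{S}$ tokens, a common choice for models of this kind; the text does not say whether XrayGPT masks the prompt this way.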

4 Curating high-quality data

Datasets: MIMIC-CXR is a collection of chest radiographs paired with free-text radiology reports. It consists of 377,110 images and 227,827 associated reports, which are used for both training and testing. The dataset is de-identified by removing health information to satisfy health insurance and privacy requirements. The OpenI dataset is a collection of chest X-ray images from the Indiana University hospital network, comprising 6,459 images and 3,955 reports.

High-Quality and Interactive Summaries: To generate concise and coherent medical summaries from the unstructured reports, we apply the following pre-processing steps to both datasets: (1) removal of incomplete reports lacking a findings or impression section; (2) removal of reports whose findings section contains fewer than 10 words; (3) removal of reports whose impression section contains fewer than 2 words.
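
The three report-level filters translate directly into a predicate. This sketch assumes that a "word" is a whitespace-separated token and that each report has already been split into findings and impression strings; the paper does not specify either detail.

```python
from typing import Optional

MIN_FINDINGS_WORDS = 10
MIN_IMPRESSION_WORDS = 2


def keep_report(findings: Optional[str], impression: Optional[str]) -> bool:
    """Apply the three report-level filters described above."""
    # (1) Incomplete report: missing findings or impression section.
    if not findings or not impression:
        return False
    # (2) Findings section with fewer than 10 words.
    if len(findings.split()) < MIN_FINDINGS_WORDS:
        return False
    # (3) Impression section with fewer than 2 words.
    if len(impression.split()) < MIN_IMPRESSION_WORDS:
        return False
    return True


reports = [
    ("PA and lateral views of the chest were provided demonstrating no focal consolidation.",
     "No acute intrathoracic process."),
    ("Heart size normal.", "No acute process."),  # findings too short
    ("Lungs are clear bilaterally without focal consolidation, effusion, or pneumothorax present.",
     None),  # missing impression
]
kept = [r for r in reports if keep_report(*r)]
print(len(kept))  # 1
```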

In addition, using the gpt-3.5-turbo model, we apply the following pre-processing steps to obtain a high-quality summary per image: (1) removal of sentences that compare the current study with the patient's prior medical history; (2) removal of the de-identification symbols "___" while preserving the original meaning; (3) because our training relies on image-text pairs, removal of the provided view from the summary; (4) combination of the cleaned findings and impression into an interactive, high-quality summary.
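
The four gpt-3.5-turbo steps can be packed into one instruction per report. The prompt wording below is hypothetical (the text lists the steps, not the prompt), the API call itself is omitted, and `has_residue` is an illustrative post-check rather than part of the described pipeline.

```python
from typing import List

# Hypothetical wording; the text lists the steps but not the exact prompt.
CLEANING_STEPS: List[str] = [
    "Remove sentences that compare the study with the patient's prior examinations.",
    'Remove the de-identification symbols "___" while preserving the original meaning.',
    "Do not mention the radiographic view (for example PA, AP, or lateral).",
    "Combine the cleaned findings and impression into one concise summary.",
]


def build_cleaning_prompt(findings: str, impression: str) -> str:
    """Pack the four cleaning steps and one report into a single instruction."""
    steps = "\n".join(f"({i}) {s}" for i, s in enumerate(CLEANING_STEPS, start=1))
    return (
        "Rewrite the following chest X-ray report.\n"
        f"{steps}\n\n"
        f"Findings: {findings}\n"
        f"Impression: {impression}\n"
        "Summary:"
    )


def has_residue(summary: str) -> bool:
    """Illustrative post-check: flag outputs that still contain the placeholder."""
    return "___" in summary


prompt = build_cleaning_prompt(
    "No free air below the right hemidiaphragm.",
    "No acute intrathoracic process.",
)
print(prompt)
```

Delegating these steps to an LLM rather than to fixed rules matters for step (1): in the example that follows, a comparison sentence also carries a new finding, which a keyword-based sentence filter would discard.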

Figure 1: Overview of our XrayGPT framework. The input X-ray passes sequentially through three components: (1) a frozen medical visual encoder that extracts features relevant to chest diagnosis; (2) a learnable linear transformation layer that aligns the medical visual features with the medical LLM, learning extensive medical visual-text alignment; (3) a frozen medical LLM that generates a detailed summary of the X-ray from the encoded features and the given prompt.

Following these steps, we obtained a filtered set of 114,690 training reports associated with 241k training images from the MIMIC-CXR dataset, and 3,403 high-quality training summaries from the OpenI dataset.

Here is an example before and after the proposed pre-processing.

Input findings: PA and lateral views of the chest were provided demonstrating no focal consolidation, effusion, or pneumothorax. Cardiomediastinal silhouette appears normal and stable. There is a compression deformity involving a mid thoracic vertebral body, which appears new from the prior chest radiograph of ___. No free air below the right hemidiaphragm. There are tiny surgical clips in the left base of neck, likely indicating prior thyroid surgery.

Input impression: No acute intrathoracic process. Interval development of a mid thoracic spine compression fracture.

High-quality and interactive summary: The chest x-ray findings reveal no evidence of focal consolidation, effusion, or pneumothorax. The cardiomediastinal silhouette appears stable and normal. There is a newly developed mid thoracic spine compression fracture but no free air below the right hemidiaphragm. The presence of surgical clips in the left base of the neck suggests prior thyroid surgery. The impression suggests that there is no acute intrathoracic condition detected in the x-ray aside from the new development of mid thoracic spine compression fracture.

5 Experiments

5.1 Implementation Details

Stage-1 Training: In stage-1 training, the model learns how X-ray image features relate to their corresponding reports by training on a large set of image-text pairs. The output of the injected projection layer acts as a soft prompt for our medically tuned LLM, guiding it to produce a report whose findings and impression match the given X-ray image. For training, we use the high-quality interactive report summaries described in Sec. 4, built from the MIMIC-CXR (Johnson et al., 2019) train set, with 213,514 image-text pairs. The model is trained for 320k total training steps with a total batch size of 128 on 4 AMD MI250X (128GB) GPUs.

Stage-2 Training: In stage-2 training, the stage-1 model is further trained on a set of highly curated image-text pairs from the OpenI dataset so that it learns radiology-specific summaries of X-ray image features. As a result, our medically tuned LLM produces more natural, higher-quality, radiology-specific responses for a given chest X-ray image. For training, we use the high-quality interactive report summaries described in Sec. 4, built from the OpenI (Demner-Fushman et al., 2015) set, with 3k image-text pairs. The model is trained for 5k total training steps with a total batch size of 32 on a single AMD MI250X (128GB) GPU.
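
The settings of both stages can be collected in a small config, which also makes the implied arithmetic explicit. The numbers come from the text; `per_gpu_batch` assumes no gradient accumulation, `approx_epochs` assumes each step consumes one full batch, and the stage-2 pair count uses the rounded 3k figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StageConfig:
    name: str
    dataset: str
    image_text_pairs: int
    total_steps: int
    total_batch_size: int
    num_gpus: int

    def samples_seen(self) -> int:
        """Total training samples if every step consumes one full batch."""
        return self.total_steps * self.total_batch_size

    def per_gpu_batch(self) -> int:
        """Per-device batch, assuming no gradient accumulation."""
        return self.total_batch_size // self.num_gpus

    def approx_epochs(self) -> float:
        """Approximate passes over the stage's image-text pairs."""
        return self.samples_seen() / self.image_text_pairs


STAGES: List[StageConfig] = [
    StageConfig("stage-1", "MIMIC-CXR", 213_514, 320_000, 128, 4),
    StageConfig("stage-2", "OpenI", 3_000, 5_000, 32, 1),  # "3k" pairs, rounded
]

for stage in STAGES:
    print(stage.name, stage.samples_seen(), round(stage.approx_epochs(), 1))
# stage-1 40960000 191.8
# stage-2 160000 53.3
```

Under these assumptions, stage 1 processes about 41M samples (roughly 192 passes over its 213,514 pairs), while stage 2 processes 160k samples; both stages work out to 32 samples per GPU per step.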

In both stage-1 and stage-2 training, we use predefined prompts in the following format:

###Doctor: <ImageFeature> <Instruction> ###Assistant:

Here,

5.2 Evaluation Metrics

We use the ROUGE score as an evaluation metric to compare the contribution of our components over the baseline (Zhu et al., 2023). The ROUGE score has been commonly used (Cheng and Lapata, 2016; Nallapati et al., 2017;