Qilin-Med-VL: Towards Chinese Large Vision-Language Model for General Healthcare

Qilin-Med-VL: 面向通用医疗的中文大视觉语言模型

Junling Liu†∗, Ziming Wang†, Qichen Ye1†, Dading Chong1†, Peilin Zhou2†, Yining Hua3†

william.liuj@gmail.com, wang.zm@pku.edu.cn, yeeeqichen@pku.edu.cn, 1601213984@pku.edu.cn, zhoupalin@gmail.com, yininghua@g.harvard.edu

Abstract

摘要

Large Language Models (LLMs) have introduced a new era of proficiency in comprehending complex healthcare and biomedical topics. However, there is a noticeable lack of models in languages other than English and of models that can interpret multi-modal input, both of which are crucial for global healthcare accessibility. In response, this study introduces Qilin-Med-VL, the first Chinese large vision-language model designed to integrate the analysis of textual and visual data. Qilin-Med-VL combines a pre-trained Vision Transformer (ViT) with a foundational LLM. It undergoes a two-stage curriculum training process that includes feature alignment and instruction tuning. This method enhances the model's ability to generate medical captions and answer complex medical queries. We also release ChiMed-VL, a dataset consisting of more than 1M image-text pairs. This dataset has been carefully curated to enable detailed and comprehensive interpretation of medical data using various types of images.

大语言模型(LLMs)开启了理解复杂医疗和生物医学主题的新纪元。然而目前明显缺乏非英语语种的模型,以及能够解析多模态输入的模型,这对提升全球医疗可及性至关重要。为此,本研究推出Qilin-Med-VL,这是首个专为整合文本与视觉数据分析而设计的中文大视觉语言模型。Qilin-Med-VL将预训练的Vision Transformer(ViT)与基础大语言模型相结合,通过包含特征对齐和指令微调的两阶段课程训练流程,显著提升了生成医学描述与解答复杂医学查询的能力。我们还发布了包含超100万图文对的ChiMed-VL数据集,该数据集经过精心构建,可支持利用多种图像类型实现精细全面的医学数据解读。

1 Introduction

1 引言

The introduction of Large Language Models (LLMs) into the field of healthcare and biomedicine has brought significant advancements. GPT-4's notable achievement on the United States Medical Licensing Examination (USMLE) demonstrates its proficiency in complex biomedical concepts and its potential as a tool for healthcare professionals (Nori et al., 2023). This milestone reflects the model's extensive training and knowledge, as well as the potential of medical LLMs.

大语言模型(LLM)在医疗健康和生物医学领域的引入带来了重大进展。GPT-4在美国医师执照考试(USMLE)上的突出表现,证明了其在复杂生物医学概念方面的熟练程度,以及作为医疗专业人员工具的潜力 (Nori et al., 2023)。这一里程碑反映了该模型的广泛训练和知识储备,也展现了医疗大语言模型的潜力。

However, practical decision support in healthcare and biomedicine requires multi-modal techniques because of the intricate nature of medical diagnosis and patient care (Yu et al., 2018). Decisive information often goes beyond written content to encompass visual indicators of how illnesses manifest. Disease patterns, clinical diagnoses, and many other aspects often rely on the analysis of visual cues. These include patterns of skin lesions for dermatological conditions (Wan et al., 2022; Debelee, 2023) and the interpretation of electrocardiograms and brain scans for cardiac and neurological issues (Yu et al., 2018; Abbasi et al., 2023). Chronic conditions like diabetes require analysis of visual data such as retinal scans (Gupta et al., 2022), while cancer treatment benefits from detailed imaging from CT scans or MRIs (Khoo et al., 1997). This highlights the limitations of relying solely on textual data and emphasizes the demand for integrated methods that combine visual data analysis with human-like conversation.

然而,由于医疗诊断和患者护理的复杂性(Yu et al., 2018),在医疗健康和生物医学领域实现决策支持的实际应用需要采用多模态技术。关键信息往往超越文本内容,涵盖疾病表现的视觉指标。疾病模式、临床诊断等诸多方面通常依赖于对视觉线索的分析,包括皮肤病损模式(Debelee, 2023; Wan et al., 2022),或心电图表和脑部扫描对心脏及神经系统问题的解读(Abbasi et al., 2023; Yu et al., 2018)。糖尿病等慢性病需要分析视网膜扫描等视觉数据(Gupta et al., 2022),而癌症治疗则受益于CT或MRI的详细成像(Khoo et al., 1997)。这凸显了仅依赖文本数据的局限性,并强调了将视觉数据分析与类人对话相结合的集成方法的需求。

In the English-speaking world, Li et al. (2023a) undertook a pioneering effort to develop LLaVA-Med, an LLM that combines advanced visual-textual data analysis in the field of biomedicine through multi-stage multi-modal instruction tuning. However, it is crucial to recognize that language barriers persist as a significant impediment to the advancement of global health (Gerchow et al., 2020). A shortsighted focus on English-centric models could exacerbate inequalities in healthcare accessibility.

在英语世界,(Li et al., 2023a) 率先开发了 LLaVAMed,这是一种通过多阶段多模态指令定制过程、结合生物医学领域先进视觉文本分析的大语言模型。然而必须认识到,语言障碍仍是全球卫生发展的重大阻碍 (Gerchow et al., 2020)。若短视地聚焦于英语中心模型,可能加剧医疗可及性的不平等。

Given the current absence of large vision-language models for Chinese medical fields, we aim to reduce this inequality by developing Chinese healthcare and biomedical models, recognizing the significant impact that linguistic inclusion has on improving global health standards. Building on this motivation, our research makes two main contributions:

鉴于目前中文医疗领域缺乏大型视觉语言模型,我们通过开发中文医疗和生物医学模型来减少这种不平等,认识到语言包容性对提升全球健康标准的重大影响。基于这一基础,我们的研究提出了以下关键贡献:

- Qilin-Med-VL, the first large Chinese medical vision-language model, proficient in multiple critical medical imaging disciplines.

- Qilin-Med-VL,首个中文医疗视觉-语言大模型,精通多项关键医学影像学科。

- The first large-scale Chinese vision-language dataset for general healthcare, Chinese Medicine - Vision Language (ChiMed-VL), designed to facilitate multi-stage training. This dataset has two subsets: vision-language feature alignment and instruction tuning.

- 首个面向通用医疗领域的大规模中文视觉-语言数据集 Chinese Medicine - Vision Language ChiMed-VL,旨在促进多阶段训练。该数据集包含两个子集:视觉-语言特征对齐和指令微调。

Models like Qilin-Med-VL are intended to help healthcare professionals make better decisions by providing them with more insights. Ultimately, our goal is to improve healthcare worldwide. We believe that our work represents a new frontier in research, where technology and medical knowledge come together to create a brighter and more equitable future for healthcare.

麒麟医疗视觉语言模型(Qilin-Med-VL)等模型旨在通过提供更多洞察,帮助医疗专业人员做出更优决策。我们的最终目标是改善全球医疗水平。我们相信这项工作代表了研究的新前沿——当技术与医学知识相结合,必将为医疗行业创造更光明、更公平的未来。

2 Related Work

2 相关工作

2.1 Multi-modal LLMs

2.1 多模态大语言模型

The advent of LLMs has transformed the field of multi-modal models, which now has a branch that focuses on adapting LLMs to incorporate various modalities. For example, AnyMAL (Moon et al., 2023) generates textual responses from input signals including text, image, video, audio, and IMU motion sensor data. NExT-GPT (Wu et al., 2023b) accomplishes universal multi-modal comprehension and diverse input/output modalities by integrating an LLM with multi-modal adaptors and diffusion decoders. A typical focus of this field is integrating vision as a 'foreign language' into the models, an approach that can be easily adapted to other modalities. These models are typically referred to as large vision-language models.

大语言模型 (LLM) 的出现革新了多模态大语言模型领域,该领域现已分化出一个专注于大语言模型适应多种模态的分支。例如,AnyMal (Moon et al., 2023) 能够根据文本、图像、视频、音频和IMU运动传感器等输入信号生成文本响应。NExT-GPT (Wu et al., 2023b) 通过将大语言模型与多模态适配器和扩散解码器相结合,实现了通用多模态理解及多样化的输入/输出模态。该领域的典型关注点在于视觉元素的整合,主要研究如何将视觉作为一种"外语"融入模型,从而轻松适配其他模态。这类模型通常被称为大视觉语言模型。

Pioneering research, such as Flamingo (Alayrac et al., 2022), highlights the effectiveness of these models in synthesizing visual and textual information, resulting in nuanced, unrestricted content. Noteworthy developments like the Q-Former by BLIP-2 (Li et al., 2023c) contribute to harmonizing pre-trained vision models with LLMs, driving forward the capabilities of these systems.

开创性研究,如Flamingo (Alayrac等人,2022) ,突显了这些模型在合成视觉与文本信息方面的有效性,能生成细腻且不受限的内容。BLIP-2提出的Q-Former (Li等人,2023c) 等显著进展,促进了预训练视觉模型与大语言模型的融合,推动了这些系统的能力发展。

Models like MiniGPT-4 (Zhu et al., 2023) and LLaVA (Li et al., 2023b) leveraged GPT-4 to create conversational visual instruction datasets. These datasets enhance the models' proficiency in correlating visual traits with linguistic elements. LLaVA-1.5 (Liu et al., 2023a) advanced further through strategic enhancements and achieved strong results in multi-modal LLM evaluations, outperforming many open-source models.

像 MiniGPT-4 (Zhu et al., 2023) 和 LLaVA (Li et al., 2023b) 这样的模型利用 GPT-4 创建了对话式视觉指令数据集。这些数据集提升了模型在关联视觉特征与语言元素方面的能力。LLaVA1.5 (Liu et al., 2023a) 通过策略性增强和在多模态大语言模型评估中的高性能标准取得了进展。它的表现优于许多开源模型,展示了显著的改进。

Meanwhile, VisCPM (Hu et al., 2023a), InternLM-XComposer (Zhang et al., 2023a), and Qwen-VL (Bai et al., 2023a) have excelled in interpreting and executing instructions in Chinese, reflecting the global applicability and adaptability of these advanced systems. These achievements not only showcase the models' versatility in processing language-specific tasks but also highlight their capability to handle intricate instructions across various domains and applications.

与此同时,VisCPM (Hu et al., 2023a)、InternLM-XComposer (Zhang et al., 2023a) 和 Qwen-VL (Bai et al., 2023a) 在中文指令解析与执行方面表现卓越,体现了这些先进系统的全球适用性和适应性。这些成果不仅展示了模型在处理语言特定任务时的多功能性,更凸显了其跨领域、跨应用中处理复杂指令的能力。

2.2 Large Medical Vision-Language Models

2.2 大型医疗视觉语言模型

Research in large medical vision-language models has been encouraging, with significant efforts put into establishing foundational models. Noteworthy initiatives include LLaVA-Med (Li et al., 2023a) and MedVInT (Zhang et al., 2023b), which utilize image captions from PubMed Central (Roberts, 2001) for fine-tuning medical visual instruction sets.

大型医疗视觉语言模型的研究进展令人鼓舞,众多研究致力于构建基础模型。值得关注的成果包括LLaVA-Med (Li et al., 2023a)和MedVInT (Zhang et al., 2023b),它们利用PubMed Central (Roberts, 2001)中的图像描述数据来微调医疗视觉指令集。

Medical visual question answering (VQA) has received extensive attention and research due to its substantial practical uses. Pushing the boundaries of medical VQA capabilities, Med-Flamingo (Moor et al., 2023) emerged with capabilities for few-shot generative medical VQA on interleaved medical image-text data. Additionally, MedBLIP (Chen et al., 2023) narrows its focus to a specialized segment of 3D imaging, primarily MRI.

医学视觉问答 (VQA) 因其巨大的实用价值受到广泛关注和研究。Med-Flamingo (Moor et al., 2023) 通过实现交错医学图文数据上的少样本生成式医学VQA能力,推动了该领域的技术边界。此外,MedBLIP (Chen et al., 2023) 将研究重点聚焦于3D成像 (特别是MRI) 的专业细分领域。

Beyond medical VQA, Med-PaLM M (Tu et al., 2023) proposed a generalist biomedical AI system that performs medical image classification, medical VQA, radiology report generation and summarization, and more with a single set of model weights. In radiological diagnostics, models like RadFM (Wu et al., 2023a) and Radiology-Llama2 (Liu et al., 2023d) demonstrated promising performance in enhancing diagnostic precision through visual aid.

在医疗视觉问答(VQA)领域之外,Med-PaLM M (Tu等人,2023)采用创新方法,提出了一种通用型生物医学AI系统,能够使用同一组模型权重执行医学图像分类、医疗VQA、放射学报告生成与摘要等任务。在放射诊断领域,RadFM (Wu等人,2023a)和Radiology-Llama2 (Liu等人,2023d)等模型通过视觉辅助显著提升了诊断精度,展现出卓越性能。

Despite these advances, a research gap persists concerning Chinese medical LLMs tailored for multi-modal inputs. Existing models, such as Huatuo (Wang et al., 2023), Qilin-Med (Ye et al., 2023), and CMExam (Liu et al., 2023c), only allow textual inputs. Bridging this gap is crucial, considering the potential impact on healthcare accessibility, where linguistic barriers can restrict critical information and services. This concern is especially pronounced for non-mainstream language speakers currently underserved by prevalent NLP technologies (Bird, 2020; Zeng et al., 2022). Prioritizing such research is imperative to mitigate systemic disparities and democratize access to crucial healthcare advancements.

尽管取得了这些进展,但针对多模态输入定制的中文医疗大语言模型仍存在研究空白。现有模型如华佗 (Wang et al., 2023)、麒麟医疗 (Ye et al., 2023) 和 CMExam (Liu et al., 2023c) 仅支持文本输入。考虑到语言障碍可能限制关键信息和服务获取,填补这一空白对提升医疗可及性至关重要 (Bird, 2020; Zeng et al., 2022)。对于当前主流自然语言处理技术覆盖不足的非主流语言使用者而言,这一问题尤为突出。优先开展此类研究对消除系统性差异、促进关键医疗进步的普惠化具有紧迫意义。

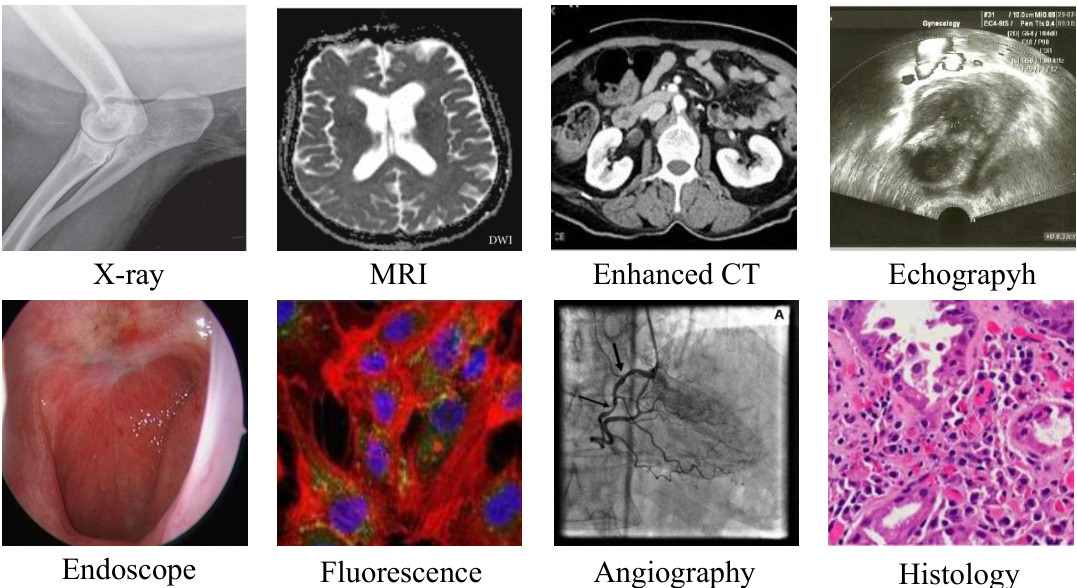

Figure 1: Samples of various types of medical images in ChiMed-VL dataset.

图 1: ChiMed-VL数据集中各类医学影像样本示例。

Table 1: Basic statistics of ChiMed-VL-Alignment. C: Contexts; I: Inlines.

| | PMC-CaseReport | PMC-OA | Total |
|---|---|---|---|
| Image-text pairs # | 316,838 | 263,176 | 580,014 |
| C tokens # | 167M | - | 167M |
| I tokens # | 21M | 42M | 63M |
| Max C tokens | 2,576 | - | 2,576 |
| Max I tokens | 1,551 | 1,417 | 1,551 |
| Median (Q1, Q3) C tokens | 435 (211, 757) | - | 435 (211, 757) |
| Median (Q1, Q3) I tokens | 59 (41, 83) | 125 (68, 210) | 75 (47, 132) |

表 1: ChiMed-VL-Alignment 基础统计量。C: 上下文 (Contexts);I: 内联文本 (Inlines)。

| | PMC-CaseReport | PMC-OA | 总计 |
|---|---|---|---|
| 图文对数量 | 316,838 | 263,176 | 580,014 |
| C Token数量 | 167M | - | 167M |
| I Token数量 | 21M | 42M | 63M |
| 最大C Token数 | 2,576 | - | 2,576 |
| 最大I Token数 | 1,551 | 1,417 | 1,551 |
| C Token中位数(Q1,Q3) | 435 (211,757) | - | 435 (211,757) |
| I Token中位数(Q1,Q3) | 59 (41,83) | 125 (68,210) | 75 (47,132) |

In this work, we harness these advancements to develop a specialized Chinese medical vision-language model that is both efficient and effective in operation.

在本工作中,我们利用这些进展开发了一个专业的中文医疗视觉语言模型,其特点是运行高效且有效。

3 Dataset Construction

3 数据集构建

To address the scarcity of Chinese medical multi-modal data for training instruction-following models, we introduce a pioneering dataset, ChiMed-VL. ChiMed-VL was built from several open-source English medical multi-modal datasets, which we translated into Chinese with GPT-3.5 and then subjected to expert quality control. The dataset contains two components, concept alignment and instruction-following, each of which is used in a distinct training phase.

针对中文医学多模态数据稀缺难以训练指令跟随模型的问题,我们推出了开创性数据集ChiMed-VL。该数据集通过整合多个开源英文医学多模态资源构建而成:首先使用GPT3.5进行中英翻译,再经过专家质量校验。数据集包含概念对齐和指令跟随两大模块,分别对应不同训练阶段的核心需求。

3.1 The Concept Alignment Subset

3.1 概念对齐子集

To enable model support for a multitude of medical image types, we leveraged two comprehensive open-source multi-modal medical datasets: PMC-OA (Lin et al., 2023) and PMC-CaseReport (Wu, 2023). These datasets collectively cover an extensive range of diagnostic modalities, such as X-ray, MRI, CT, Radioisotope, Mitotic, and several others, examples of which are depicted in Fig. 1. Recognizing the disparity induced by the scarcity of Chinese-centric data, we used GPT-3.5 to translate the dataset into Chinese. The statistics of the translated data are given in Tab. 1 and Fig. 2(a).

为实现对多种医学影像类型的模型支持,我们采用了两个综合性开源多模态医学数据集:PMC-OA (Lin et al., 2023) 和 PMC-CaseReport (Wu, 2023)。这些数据集共同涵盖了广泛的诊断模式,如X光、MRI、CT、放射性同位素、有丝分裂等,部分示例如图1所示。考虑到中文数据稀缺导致的差异,我们使用GPT-3.5将数据集翻译为中文。该翻译过程的详细说明见表1和图2(a)。

ChiMed-VL-Alignment consists of 580,014 image-text couplings, each pair falling into one of two categories: context information of an image or descriptions of an image. The context category contains 167M tokens, presenting a median text length of 435 (Q1: 211, Q3: 757). Conversely, descriptions, more concise and image-specific, contain inline descriptions and captions. They comprise 63M tokens, with median lengths settling at 59 (Q1: 45, Q3: 83).

ChiMed-VL-Alignment 包含 580,014 组图文配对,每对数据归属以下两类之一:图像的上下文信息或图像描述。上下文类别包含 1.67 亿 token (原文保留),文本长度中位数为 435 (Q1: 211, Q3: 757)。相比之下,描述类文本更为简洁且聚焦图像内容,包含内联描述和标题,共涵盖 6300 万 token,长度中位数稳定在 59 (Q1: 45, Q3: 83)。

3.2 The Instruction-Tuning Subset

3.2 指令微调子集

In the second phase, we constructed the ChiMed-VL-Instruction subset to refine the model's interpretation and instruction-following capabilities. We extracted data from two open-source compilations: PMC-CaseReport and PMC-VQA (Zhang et al., 2023c). These datasets contain a diverse collection of data, including X-rays, CT scans, Echography, and Ultrasonography, enriching the model's familiarity with varied medical scenarios. We again used GPT-3.5 to translate the English questions and their corresponding answers into Chinese. Tab. 2 and Fig. 2(b) detail the statistics of the resulting data.

在第二阶段,我们构建了ChiMedVL-Instruction子集以优化模型的解释和指令遵循能力。数据源自两个开源汇编:PMC-Report和PMC-VQA (Zhang et al., 2023c),这些数据集涵盖X光、CT扫描、超声心动图和超声检查等多样化医疗场景数据,增强了模型对复杂医学情境的适应力。我们再次使用GPT-3.5将英文问题及其对应答案翻译为中文。表2和图2(b)详细统计了该流程数据。

Figure 2: (a) Word cloud of ChiMed-Alignment. (b) Word cloud of ChiMed-Instruction.

图 2: (a) ChiMed-Alignment 词云。 (b) ChiMed-Instruction 词云。

Table 2: Basic statistics of ChiMed-VL-Instruction. Q: Questions; A: Answers.

| | PMC-CaseReport | PMC-VQA | Total |
|---|---|---|---|
| QA pairs # | 316,838 | 152,603 | 469,441 |
| Q tokens # | 7M | 3M | 10M |
| A tokens # | 10M | 3M | 13M |
| Max Q tokens | 4,040 | 335 | 4,040 |
| Max A tokens | 451 | 732 | 732 |
| Median (Q1, Q3) Q tokens | 21 (16, 26) | 18 (15, 22) | 20 (16, 25) |
| Median (Q1, Q3) A tokens | 27 (20, 38) | 10 (6, 16) | 22 (12, 34) |

表 2: ChiMed-VL-Instruction 基础统计。Q: 问题;A: 答案。

| | PMC-CaseReport | PMC-VQA | Total |
|---|---|---|---|
| QA pairs # | 316,838 | 152,603 | 469,441 |
| Q Tokens # | 7M | 3M | 10M |
| A Tokens # | 10M | 3M | 13M |
| Max Q tokens | 4,040 | 335 | 4,040 |
| Max A tokens | 451 | 732 | 732 |
| Median (Q1,Q3) Q tokens | 21 (16,26) | 18 (15,22) | 20 (16,25) |
| Median (Q1,Q3) A tokens | 27 (20,38) | 10 (6,16) | 22 (12,34) |

ChiMed-VL-Instruction comprises 469,441 question-answer pairs. Within this subset, the questions section contains 10M tokens with a median length of 20 (Q1: 16, Q3: 25), posing a concise inquiry reflective of medical queries. The answers consist of 13M tokens with a median length slightly longer at 22 (Q1: 12, Q3: 34), providing clear, direct, and informative responses.

ChiMed-VL-Instruction包含469,441个问答对。其中问题部分包含1000万token,中位长度为20(Q1:16,Q3:25),体现了医学查询的简洁性;答案部分包含1300万token,中位长度略长为22(Q1:12,Q3:34),提供清晰直接且信息丰富的回答。
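The subset sizes reported in Tab. 1 and Tab. 2 can be cross-checked against the abstract's claim of more than 1M image-text pairs. The short sketch below only re-adds the published counts; the variable and function names are illustrative and do not come from the paper's code.

```python
# Pair counts copied from Table 1 (alignment) and Table 2 (instruction).
ALIGNMENT_PAIRS = {"PMC-CaseReport": 316_838, "PMC-OA": 263_176}
INSTRUCTION_PAIRS = {"PMC-CaseReport": 316_838, "PMC-VQA": 152_603}


def subset_total(counts: dict) -> int:
    """Sum the per-source pair counts of one ChiMed-VL subset."""
    return sum(counts.values())


alignment_total = subset_total(ALIGNMENT_PAIRS)      # ChiMed-VL-Alignment
instruction_total = subset_total(INSTRUCTION_PAIRS)  # ChiMed-VL-Instruction
grand_total = alignment_total + instruction_total

print(f"alignment:   {alignment_total:,}")
print(f"instruction: {instruction_total:,}")
print(f"overall:     {grand_total:,}")
```

The two subset totals reproduce the 580,014 and 469,441 figures quoted in the text, and their sum is consistent with the "more than 1M" statement in the abstract.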

3.3 Data Pre-processing

3.3 数据预处理

A significant challenge in compiling ChiMed-VL was the handling of input images. In PMC-OA and PMC-CaseReport, a single text snippet often corresponds to multiple images. To enhance medical visual concept alignment and mitigate potential misalignments, images related to the same text were concatenated into a single composite image, forming unified image-text pairs. This required excluding samples with more than four images per text, to avoid low-resolution outputs after concatenation. We chose horizontal or vertical concatenation based on the dimensions of the original image set, preventing extreme aspect ratios in the combined image. Furthermore, we discarded samples whose textual descriptions were overly brief or impractical to translate.

在ChiMed-VL的汇编过程中,一个重大挑战涉及输入图像的管理。PMC-OA和PMCCaseReport数据集中的单个文本片段对应多张图像。为加强医学视觉概念对齐并减少潜在错位,我们将同一文本相关的图像拼接成单个合成图像,形成统一的图文对。该方法需要排除每段文本对应超过四张图像的样本,以避免拼接后输出分辨率过低。我们根据原始图像集的尺寸策略性地选择水平或垂直拼接方式,防止合成图像出现极端宽高比。此外,我们还剔除了文本描述过短或翻译不切实际的样本。
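The concatenation step described above can be sketched as a small planning function. The four-image limit comes from the text; the selection rule used here (pick the direction whose composite is closest to square) is an assumption, since the paper does not state its exact criterion, and all function names are hypothetical.

```python
from typing import List, Optional, Tuple

Size = Tuple[int, int]  # (width, height) in pixels

MAX_IMAGES_PER_TEXT = 4  # from the text: larger groups are excluded


def composite_size(sizes: List[Size], direction: str) -> Size:
    """Size of the canvas needed to place the images side by side or stacked."""
    widths = [w for w, _ in sizes]
    heights = [h for _, h in sizes]
    if direction == "horizontal":
        return sum(widths), max(heights)
    return max(widths), sum(heights)


def aspect_extremeness(size: Size) -> float:
    """1.0 for a square canvas; grows as the canvas gets more elongated."""
    w, h = size
    return max(w / h, h / w)


def plan_concatenation(sizes: List[Size]) -> Optional[Tuple[str, Size]]:
    """Return (direction, canvas size), or None if the sample is excluded."""
    if not sizes or len(sizes) > MAX_IMAGES_PER_TEXT:
        return None
    candidates = [(d, composite_size(sizes, d)) for d in ("horizontal", "vertical")]
    # Assumed rule: keep the layout with the least extreme aspect ratio.
    return min(candidates, key=lambda c: aspect_extremeness(c[1]))


print(plan_concatenation([(800, 200), (800, 200)]))  # two wide images
print(plan_concatenation([(200, 800), (200, 800)]))  # two tall images
print(plan_concatenation([(100, 100)] * 5))          # too many images
```

Under this rule, wide images are stacked vertically and tall images are placed side by side, which matches the stated goal of avoiding extreme aspect ratios.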

The final training data format emulates a conversation between an assistant and an individual providing visual instructions, structured via task-specific Chinese prompts. Approximately 20 unique prompt templates were designed for each task, ensuring a diverse training schema. For each sample, a template was randomly selected from the task-specific set, and the data was reformulated into a dialog format, making it a robust resource for training purposes.

最终的训练数据格式模拟了助手与提供视觉指令的个人之间的对话,通过特定任务的中文提示进行结构化。为每个任务设计了约20个独特的提示模板,以确保训练方案的多样性。对于每个样本,从特定任务集中随机选择一个模板,并将数据重新格式化为对话形式,使其成为训练目的的稳健资源。
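As a rough illustration of the template-based reformulation, the sketch below draws one prompt at random from a task-specific set and wraps the sample as a two-turn dialog. The templates, the `<image>` placeholder, and the role names are all assumptions made for this example; the paper's roughly 20 prompts per task are not reproduced here.

```python
import random

# Hypothetical templates; stand-ins for the paper's task-specific Chinese prompts.
TEMPLATES = {
    "caption": ["请描述这张医学图像。", "请为这张图像生成一段说明。"],
    "vqa": ["请根据图像回答问题:{question}", "结合图像,回答:{question}"],
}


def build_dialog(task, answer, question="", rng=random):
    """Pick a random template for the task and format the sample as a dialog."""
    template = rng.choice(TEMPLATES[task])
    prompt = template.format(question=question)
    return [
        # "<image>" is an assumed marker for where visual tokens are inserted.
        {"role": "user", "content": "<image>\n" + prompt},
        {"role": "assistant", "content": answer},
    ]


rng = random.Random(0)  # seeded only to make the example repeatable
for turn in build_dialog("vqa", answer="右肾", question="肿瘤位于何处?", rng=rng):
    print(turn["role"], "->", turn["content"])
```

Sampling the template per example, rather than fixing one per task, is what gives the training data its variety of phrasings.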

4 Methodology

4 方法论

4.1 Overall Architecture

4.1 整体架构

The Qilin-Med-VL architecture comprises three key components:

麒麟医疗视觉语言架构包含三个关键组件:

- Foundation LLM: Qilin-Med-VL employs the Chinese LLM Chinese-LLaMA2-13B-Chat to comprehend linguistic content and generate appropriate responses.

- 基础大语言模型:Qilin-Med-VL采用知名中文大语言模型ChineseLLaMA2-13B-Chat,用于理解语言内容并生成恰当响应。

- Pretrained Image Encoder: To process medical images, Qilin-Med-VL leverages the Vision Transformer (ViT) (Dosovitskiy et al., 2021) to obtain visual features from each image.

- 预训练图像编码器:为处理医学图像,Qilin-Med-VL采用Vision Transformer (ViT) (Dosovitskiy et al., 2021) 从每张图像中提取视觉特征。

Figure 3: The two-stage curriculum training scheme of Qilin-Med-VL.

图 3: Qilin-Med-VL 的两阶段课程训练方案。

- Vision-Language Feature Adapter: This component aims to align visual features with linguistic features, creating a shared feature space to effectively capture complementary information from different modalities. For efficiency, a simple linear projection layer is used as the feature adapter. In the future, we plan to investigate more effective and sophisticated adapters.

- 视觉-语言特征适配器 (Vision-Language Feature Adapter): 该组件旨在对齐视觉特征与语言特征,创建共享特征空间以有效捕捉不同模态的互补信息。出于效率考虑,我们采用简单的线性投影层作为特征适配器。未来计划研究更高效复杂的适配器方案。
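The role of the linear adapter can be shown with a dependency-free toy example: each visual feature vector is mapped by one affine transform into the LLM's embedding dimension, and the projected tokens are then combined with the text embeddings. The tiny dimensions, the weights, and the choice to place visual tokens before the text are assumptions for illustration only.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def linear_project(features: Matrix, weight: Matrix, bias: Vector) -> Matrix:
    """Apply y = x W + b to every visual feature.

    features: n_patches x d_vis, weight: d_vis x d_llm, bias: d_llm.
    """
    projected = []
    for feat in features:
        row = [
            sum(f * weight[i][j] for i, f in enumerate(feat)) + bias[j]
            for j in range(len(bias))
        ]
        projected.append(row)
    return projected


def build_llm_input(visual_tokens: Matrix, text_embeddings: Matrix) -> Matrix:
    """Assumed layout: projected visual tokens followed by text embeddings."""
    return visual_tokens + text_embeddings


# Toy sizes: 2 patches, d_vis = 3, d_llm = 2.
features = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.5, -0.5]

visual_tokens = linear_project(features, weight, bias)
sequence = build_llm_input(visual_tokens, [[0.0, 0.0]])
print(visual_tokens)
print(len(sequence), "tokens fed to the LLM")
```

In a real system the weight matrix would be learned and the dimensions would match the image encoder and the LLM; the point here is only that the adapter is a single linear map applied per visual token.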

4.2 Two-stage Curriculum Training Scheme

4.2 两阶段课程训练方案

As shown in Fig. 3, the training procedure of Qilin-Med-VL is divided into two stages: vision-language feature alignment and instruction-tuning. This two-stage training scheme is inspired by curriculum learning, which progressively enhances the medical proficiency of VL models.

如图 3 所示,Qilin-Med-VL 的训练流程分为两个阶段:视觉语言特征对齐和指令微调。这种两阶段训练方案受到课程学习的启发,可逐步提升视觉语言模型的医学专业能力。

4.2.1 Feature Alignment

4.2.1 特征对齐

In this first stage, Qilin-Med-VL is trained on an image description task, where the model is asked to predict a caption for each input medical image. For each image-text pair in the dataset, we instructed the model to generate a caption for the image (prompts are summarized in Appendix 5) and used the actual captions as the target outputs during training. Importantly, we keep the parameters of the pre-trained image encoder and the LLM fixed and train only the adapter, so that visual and language features representing the same medical concepts align well. This alignment helps the model better understand medical information across different forms (visual and text) and improves the consistency of its medical concept understanding.

在第一阶段,Qilin-Med-VL通过图像描述任务进行训练,要求模型为输入的医学图像生成描述文本。针对数据集中每对医学图像和文本,我们指导模型为图像生成标题(提示词汇总见附录5),并将实际描述文本作为训练时的正确答案。值得注意的是,我们固定了预训练图像编码器和大语言模型(LLM)的参数,转而训练特殊适配器来确保表征相同医学概念的视觉特征与语言特征良好对齐。这种对齐机制有助于模型更好地理解跨形态(视觉与文本)的医学信息,并提升其医学概念理解的一致性。

4.2.2 Instruction-Tuning

4.2.2 指令微调

In the second stage, we further improved Qilin-Med-VL's capability of following medical instructions. We used a dataset specifically designed for this purpose, as discussed in Sec. 3.2. In this stage, each training example consisted of a medical image and a related question. The model's task was to answer the question using the information in the image. We froze the pre-trained image encoder and fine-tuned the language model and the vision-language feature adapter. This way, Qilin-Med-VL becomes more proficient at understanding various medical instructions and can carry out medical tasks, like answering medical questions based on images, in a conversational manner.

在第二阶段,我们进一步提升了Qilin-Med-VL遵循医疗指令的能力。如第3.2节所述,我们使用了专门为此设计的数据集。此阶段每个训练样本包含一张医学图像和相关问题,模型的任务是利用图像信息回答问题。我们冻结了预训练的图像编码器,微调了语言模型和视觉语言特征适配器。通过这种方式,Qilin-Med-VL能更熟练地理解各类医疗指令,并以对话形式执行医疗任务(例如基于图像的医学问答)。
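The difference between the two stages comes down to which components receive gradient updates. The sketch below records that schedule as plain data; the component and stage names are labels chosen for this example, not identifiers from the authors' code.

```python
COMPONENTS = ("image_encoder", "adapter", "llm")

# Which components are updated in each stage, as described in Sec. 4.2.
TRAINABLE = {
    "feature_alignment": {"adapter"},          # encoder and LLM are frozen
    "instruction_tuning": {"adapter", "llm"},  # only the encoder is frozen
}


def requires_grad(stage: str) -> dict:
    """Map each component to True if it is trained in the given stage."""
    trainable = TRAINABLE[stage]
    return {name: name in trainable for name in COMPONENTS}


for stage in TRAINABLE:
    print(stage, requires_grad(stage))
```

In a deep learning framework, such a mapping would typically be used to toggle the gradient flag of each module's parameters before building the optimizer.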

5 Experiments

5 实验

5.1 Baselines

5.1 基线方法

To investigate Qilin-Med-VL's ability in medical vision-language understanding and instruction following, we conduct a comparative analysis with four baseline LMMs:

为了评估Qilin-Med-VL在医学视觉语言理解和指令跟随方面的能力,我们与四个基线大型多模态模型进行了对比分析:

• GPT-4V (OpenAI, 2023), a large multi-modal model that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks.

• GPT-4V (OpenAI, 2023),一个大型多模态模型,虽然在许多现实场景中能力不及人类,但在各种专业和学术基准测试中展现出人类水平的表现。

• Qwen-VL (Bai et al., 2023a), an open-source general large vision-language model based on Qwen-7B (Bai et al., 2023b) and ViT (Dosovitskiy et al., 2021) that can handle various vision-language tasks, including image description, question-answering, grounding, and text-reading.

• Qwen-VL (Bai et al., 2023a) 是基于 Qwen-7B (Bai et al., 2023b) 和 ViT (Dosovitskiy et al., 2021) 的开源通用视觉-语言大模型,能够处理多种视觉-语言任务,包括图像描述、问答、定位和文本阅读。

• VisCPM-Chat (Hu et al., 2023b), trained using CPM-Bee with 10B parameters, fusing the visual encoder Muffin (Yu et al., 2023) and the visual decoder Diffusion-UNet (Rombach et al., 2022) to support visual inputs and outputs.

• VisCPM-Chat (Hu et al., 2023b),基于10B参数的CPM-Bee训练,融合视觉编码器Muffin (Yu et al., 2023) 和视觉解码器Diffusion-UNet (Rombach et al., 2022) 以支持视觉输入输出。

• LLaVA-1.5 (Liu et al., 2023b), an open-source end-to-end trained LMM based on Vicuna-13B (Chiang et al., 2023) and ViT (Dosovitskiy et al., 2021).

• LLaVA-1.5 (Liu et al., 2023b),一个基于Vicuna-13B (Chiang et al., 2023) 和ViT (Dosovitskiy et al., 2021) 的开源端到端训练大语言模型。

5.2 Implementation Details

5.2 实现细节

We used Chinese-LLaMA2-13B-Chat as the foundation LLM and Clip-ViT-large-patch14-336 as the pre-trained image encoder for Qilin-Med-VL. Chinese-LLaMA2-13B-Chat is an open-source Transformer-based LLM with 13 billion parameters, further trained from Chinese-LLaMA2-13B and optimized for conversation scenarios. Clip-ViT-large-patch14-336 is a pre-trained CLIP vision encoder trained by OpenAI.

我们采用Chinese-LLaMA2-13B-Chat作为基础大语言模型(LLM),并选用Clip-ViT-large-patch14-336作为Qilin-Med-VL的预训练图像编码器。Chinese-LLaMA2-13B-Chat是一个基于Transformer架构的开源大语言模型,拥有130亿参数,并在Chinese-LLaMA2-13B基础上针对对话场景进行了优化训练。Clip-ViT-large-patch14-336是由OpenAI训练的一个预训练CLIP视觉编码器。

We performed two-stage curriculum training using $8\times$ A100 80G GPUs. Specifically, we used the following settings during feature alignment: batch size $=32$ per GPU, 1 epoch, learning rate $=1\mathrm{e}{-3}$, warmup ratio $=0.03$, and max length $=2048$.

我们使用 $8\times$ A100 80G GPU 进行了两阶段课程训练。具体而言,在特征对齐阶段采用以下设置:单 GPU 批处理量 $=32$,训练轮数 1 次,学习率 $=1\mathrm{e}{-3}$,预热比例 $=0.03$,最大长度 $=2048$。

As for the vision-language instruction-tuning stage, we used the following settings: batch size $=16$ per GPU, 1 epoch, learning rate $=2\mathrm{e}{-5}$ , warmup ratio $=0.03$ , and max length $=2048$ .

至于视觉语言指令微调阶段,我们采用了以下设置:每块GPU的批量大小 $=16$,训练1个周期,学习率 $=2\mathrm{e}{-5}$,预热比例 $=0.03$,最大长度 $=2048$。
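To make these settings concrete, the sketch below derives the global batch size and an approximate step count for each stage. It rests on several assumptions that the paper does not confirm: no gradient accumulation, the full subset sizes from Tab. 1 and Tab. 2 used as training-set sizes, and warmup steps obtained by rounding up. The class and field names are illustrative.

```python
import math
from dataclasses import dataclass

NUM_GPUS = 8  # 8 x A100 80G, as reported


@dataclass
class StageConfig:
    name: str
    num_samples: int       # assumed: full subset size from Tab. 1 / Tab. 2
    per_gpu_batch: int
    learning_rate: float
    warmup_ratio: float = 0.03
    epochs: int = 1
    max_length: int = 2048

    @property
    def global_batch(self) -> int:
        # Assumes no gradient accumulation.
        return self.per_gpu_batch * NUM_GPUS

    @property
    def total_steps(self) -> int:
        return math.ceil(self.num_samples / self.global_batch) * self.epochs

    @property
    def warmup_steps(self) -> int:
        # Rounding up is an assumption, not a documented choice.
        return math.ceil(self.total_steps * self.warmup_ratio)


alignment = StageConfig("feature_alignment", 580_014, 32, 1e-3)
instruction = StageConfig("instruction_tuning", 469_441, 16, 2e-5)

for cfg in (alignment, instruction):
    print(cfg.name, cfg.global_batch, cfg.total_steps, cfg.warmup_steps)
```

The much larger learning rate in the first stage is consistent with training only the small adapter there, while the second stage also updates the 13B-parameter LLM and therefore uses a smaller rate.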

5.3 Results and Discussion

5.3 结果与讨论

Fig.4 shows some results of Qilin-Med-VL and various baselines on the PMC-VQA test set. We display cases of different types of images, including ultrasound, X-ray, MRI, etc.

图 4: 展示了Qilin-Med-VL与多种基线方法在PMC-VQA测试集上的部分结果。我们展示了不同类型的图像案例,包括超声、X光、MRI等。

For the first case, even though the question explicitly states that the image shows the testis, LLaVA still judged it to be an embryo in the uterus. Qwen-VL predicted a varicocele inside the testicle. VisCPM made a fundamental mistake, predicting that there was a fetus inside the testicle and describing it in detail. GPT-4V's answer was relatively accurate, suggesting the possibility of a cystic or solid lesion. In contrast, Qilin-Med-VL accurately predicted that there was a mass in the region.

对于第一种情况,尽管图像明确提示与睾丸相关,LLaVA仍将其判定为子宫内的胚胎。Qwen-VL预测其为睾丸内部的精索静脉曲张。VisCPM犯了根本性错误,认为睾丸内部存在胎儿并描述了具体状况。GPT-4V的回答相对准确,提出了囊性或实性病变的可能性。相比之下,Qilin-MedVL精准预测该区域存在肿瘤。

For the second case, both LLaVA and VisCPM suggested abnormalities in the lungs, while Qwen-VL suggested there was a rib fracture. GPT-4V did not give a clear judgment. However, Qilin-Med-VL predicted the abnormality to be an enlarged heart.

在第二种情况下,LLaVa和VisCPM均提示肺部异常,而QwenVL则指出存在肋骨骨折。GPT-4V未给出明确判断。然而,Qilin-Med-VL预测该异常为心脏扩大。

For the third case, we provided the models with clinical information indicating the presence of a pathological condition and asked them to determine the tumor's anatomical location from the imaging data. LLaVA, Qwen-VL, and VisCPM misidentified the site of the lesion. GPT-4V gave a non-specific interpretation, suggesting that the tumor was within an organ in the abdominal region, but without precise localization. In contrast, Qilin-Med-VL accurately localized the tumor to the right renal region.

在第三项评估任务中,我们向模型提供了表明存在病理状况的临床信息,并挑战它们根据影像数据确定肿瘤的解剖位置。LLaVA、QwenVL和VisCPM错误识别了病变部位。GPT给出了非特异性解读,暗示肿瘤存在于腹部区域的某个器官内,但未能精确定位。相反,Qilin-Med-VL通过准确将肿瘤定位至右肾区域展现了其精确性。

We sought the expertise of a medical specialist who conducted a meticulous analysis based on the image data. The specialist astutely observed that the liver was located in the upper left quadrant of the image, while the kidneys were bilaterally aligned adjacent to the spinal column. This comprehensive evaluation, considering both the tumor’s position and morphology, led the specialist to the conclusion that the tumor was localized within the renal region.

我们咨询了一位医学专家的意见,他根据图像数据进行了细致分析。专家敏锐地观察到肝脏位于图像的左上象限,而肾脏则双侧对称地位于脊柱旁侧。通过综合考虑肿瘤的位置和形态特征,专家最终得出肿瘤局限于肾脏区域的结论。

Figure 4: Case Study of Qilin-Med-VL and baselines.

| Model | Response |
|---|---|
| Question 1 | CN: 灰度超声图像显示睾丸内的什么? EN: What does the gray scale US image show within the testis? |
| LLaVA-1.5 | CN: 灰度超声图像显示了一个胚胎在母亲的子宫内的情况。 EN: The grayscale ultrasound image depicts the condition of an embryo inside the mother's uterus. |
| Qwen-VL-7B-Chat | CN: 灰度超声图像显示睾丸内的精索静脉。 EN: The grayscale ultrasound image shows the varicocele inside the testicle. |
| VisCPM | EN: The grayscale ultrasound image shows the fetal heart inside the testicle, which has two atria and two ventricles, indicating that it is a developing baby. |
| GPT-4V | CN: 这是一个超声图像,看起来像是睾丸的影像。在图像中,标记为“*”的区域可能表示一个囊性或实质性的病变。同时,白色三角形标记的部分可能表示睾丸的正常组织或其它结构。为了确切地解读这张图像并确定具体的诊断,你应当咨询放射科医生或超声诊断专家。 EN: This is an ultrasound image that appears to be of the testicle. In the image, the area marked with "*" might indicate a cystic or solid lesion. Meanwhile, the part marked with a white triangle may represent normal testicular tissue or other structures. To accurately interpret this image and determine a specific diagnosis, you should consult a radiologist or ultrasound diagnostic specialist. |
| Qilin-Med-VL | CN: 肿块。 EN: Mass. |
| Ground Truth | CN: 大型、清晰的肿块。 EN: Large, defined mass. |
| Question 2 | CN: 患者的X光片显示了什么异常? EN: What abnormality is shown in the patient's X-ray? |
| LLaVA-1.5 | CN: 在患者的X光片中,医生发现了一个异常,即肺部的一个区域被减少了。这可能是由于肺部疾病或其他健康问题导致的。医生在X光片上标注了这个异常区域,以便进一步评估和治疗。 EN: In the patient's X-ray, the doctor identified an abnormality where a portion of the lung appears reduced. This could be due to a pulmonary disease or other health issues. The doctor marked this abnormal area on the X-ray for further evaluation and treatment. |
| Qwen-VL-7B-Chat | CN: 患者的X光片显示了他的右侧第4根肋骨骨折,这是一种常见的外伤导致的骨折类型。此外,X光片还显示了肺部的一些其他异常,但这些可能是由于骨折或其他健康问题引起的。 EN: The X-ray shows a fracture in the patient's right 4th rib, a common type of fracture caused by trauma. Additionally, the X-ray displayed some other abnormalities in the lungs, but these might be due to the fracture or other health issues. |
| VisCPM | CN: 患者的X光片显示有一个蓝色箭头指向肺部,这可能表示存在问题或疾病的迹象,例如肺炎、肺结核或者其他影响肺组织的医疗状况。 EN: The patient's X-ray shows a blue arrow pointing to the lung, which may indicate signs of a problem or disease, such as pneumonia, tuberculosis, or other medical conditions affecting the lung tissue. |
| GPT-4V | CN: 这这是一个胸部的X光图像。从图像上看,蓝色箭头所指的区域显示了一个较暗的斑块或阴影,可能代表某种病变或积液。然而,仅凭这张图像是难以确切判断这个病变的性质的。为了得到准确的诊断和解读,建议咨询放射科医生或相关医疗专家。 EN: This is a chest X-ray image. From the image, the area pointed to by the blue arrow shows a darker patch or shadow, which might represent some kind of lesion or fluid accumulation. However, it's hard to definitively determine the nature of this lesion based solely on this image. For an accurate diagnosis and interpretation, it is recommended to consult a radiologist or relevant medical specialist. |
| Qilin-Med-VL | CN: 扩大的心脏。 EN: Enlarged heart. |
| Ground Truth | CN: 肥大的心脏。 EN: Enlarged heart. |
| Question 3 | CN: 肿瘤位于何处? EN: Where was the tumor located? |
| LLaVA-1.5 | CN: 肿瘤位于肝脏 EN: The tumor is located in the liver |
| Qwen-VL-7B-Chat | CN: 肿瘤位于患者的左肾上腺 EN: The tumor is located in the patient's left adrenal gland |
| VisCPM | CN: 在胸壁和肺之间 EN: Between the chest wall and the lung |
| GPT-4V | CN: 从图像上看,带红色箭头的区域可能代表肿瘤或病变。在此图像中,这些箭头标记的部分位于腹部的某个器官附近。为了确切地确定肿瘤的位置和性质,建议咨询放射科医生或相关医疗专家。 EN: From the image, the areas marked with red arrows may represent a tumor or lesion. In this image, these [...] of the tumor, it is recommended to consult with a radiologist or relevant medical specialist. |
| Qilin-Med-VL | CN: 右肾 EN: The right kidney |
| Ground Truth | CN: 右肾上极 |

图 4: Qilin-Med-VL 与基线模型的案例分析

| | CN: 灰度超声图像显示睾丸内的什么? EN: What does the gray scale US image show within the testis? |

| LLaVA-1.5 | CN: 灰度超声图像显示了一个胚胎在母亲的子宫内的情况。 EN: The grayscale ultrasound image depicts the condition of an embryo inside the mother's uterus. |

| Qwen-VL-7B-Chat | CN: 灰度超声图像显示睾丸内的精索静脉。 EN: The grayscale ultrasound image shows the varicocele inside the testicle. |

| VisCPM | EN: The grayscale ultrasound image shows the fetal heart inside the testicle, which has two atria and two ventricles, indicating that it is a developing baby. |

| GPT-4V | CN: 这是一个超声图像,看起来像是睾丸的影像。在图像中,标记为“* ”的区域可能表示一个囊性或实质性的病变。同时,白色三角形标记的部分可能表示睾丸的正常组织或其它结构。为了确切地解读这张图像并确定具体的诊断,你应当咨询放射科医生或超声诊断专家。 EN: This is an ultrasound image that appears to be of the testicle. In the image, the area marked with "* " might indicate a cystic or solid lesion. Meanwhile, the part marked with a white triangle may represent normal testicular tissue or other structures. To accurately interpret this image and determine a specific diagnosis, you should consult a radiologist or ultrasound diagnostic specialist. |

| Qilin-Med-VL Ground Truth | CN: 肿块。 EN: Mass. CN: 大型、清晰的肿块。 EN: Large, defined mass. |

| | CN: 患者的X光片显示了什么异常? EN: What abnormality is shown in the patient's X-ray? |

| LLaVA-1.5 | CN: 在患者的X光片中,医生发现了一个异常,即肺部的一个区域被减少了。这可能是由于肺部疾病或其他健康问题导致的。医生在X光片上标注了这个异常区域,以便进一步评估和治疗。 EN: In the patient's X-ray, the doctor identified an abnormality where a portion of the lung appears reduced. This could be due to a pulmonary disease or other health issues. The doctor marked this abnormal area on the X-ray for further evaluation and treatment. |

| Qwen-VL-7B-Chat | CN: 患者的X光片显示了他的右侧第4根肋骨骨折,这是一种常见的外伤导致的骨折类型。此外,X光片还显示了肺部的一些其他异常,但这些可能是由于骨折或其他健康问题引起的。 EN: The X-ray shows a fracture in the patient's right 4th rib, a common type of fracture c