MED-FLAMINGO: A MULTIMODAL MEDICAL FEW-SHOT LEARNER

Michael Moor∗1 Qian Huang∗1 Shirley Wu1 Michihiro Yasunaga1 Cyril Zakka2 Yash Dalmia1 Eduardo Pontes Reis3 Pranav Rajpurkar4 Jure Leskovec1

1 Department of Computer Science, Stanford University, Stanford, USA 2 Department of Cardiothoracic Surgery, Stanford Medicine, Stanford, USA 3 Hospital Israelita Albert Einstein, Sao Paulo, Brazil 4 Department of Biomedical Informatics, Harvard Medical School, Boston, USA

ABSTRACT

Medicine, by its nature, is a multifaceted domain that requires the synthesis of information across various modalities. Medical generative vision-language models (VLMs) make a first step in this direction and promise many exciting clinical applications. However, existing models typically have to be fine-tuned on sizeable downstream datasets. This poses a significant limitation: in many medical applications data is scarce, so models must be capable of learning from few examples in real time. Here we propose Med-Flamingo, a multimodal few-shot learner adapted to the medical domain. Based on OpenFlamingo-9B, we continue pre-training on paired and interleaved medical image-text data from publications and textbooks. Med-Flamingo unlocks few-shot generative medical visual question answering (VQA) abilities. We evaluate these abilities on several datasets, including a novel, challenging open-ended VQA dataset of visual USMLE-style problems. Furthermore, we conduct the first human evaluation for generative medical VQA, in which physicians review the problems and blinded generations in an interactive app. Med-Flamingo improves performance in generative medical VQA by up to 20% in clinicians' ratings. It is also the first model to enable multimodal medical few-shot adaptations, such as rationale generation. We release our model, code, and evaluation app under https://github.com/snap-stanford/med-flamingo.

1 INTRODUCTION

Large pre-trained models (or foundation models) have demonstrated remarkable capabilities in solving an abundance of tasks when provided with only a few labeled examples as context Bommasani et al. (2021). This is known as in-context learning Brown et al. (2020): the model learns a task from a few examples supplied in the prompt, without any update to its parameters. In the medical domain, this has great potential to substantially expand the capabilities of existing medical AI models Moor et al. (2023). Most notably, it could enable medical AI models to do three things. First, to handle in a unified way the various rare cases that clinicians face every day. Second, to provide relevant rationales that justify their statements. Third, to easily customize model generations to specific use cases.

Implementing the in-context learning capability in a medical setting is challenging due to the inherent complexity and multimodality of medical data and the diversity of tasks to be solved.

Previous efforts to create multimodal medical foundation models, such as ChexZero Tiu et al. (2022) and BiomedCLIP Zhang et al. (2023a), have made significant strides in their respective domains. ChexZero specializes in chest X-ray interpretation, while BiomedCLIP has been trained on more diverse images paired with captions from the biomedical literature. Other models have been developed for electronic health record (EHR) data Steinberg et al. (2021) and surgical videos Kiyasseh et al. (2023). However, none of these models has embraced in-context learning for the multimodal medical domain. Existing medical VLMs, such as MedVINT Zhang et al. (2023b), are typically trained on paired image-text data with a single image in the context. They are not trained on more general streams of text interleaved with multiple images. Therefore, these models were neither designed nor tested to perform multimodal in-context learning with few-shot examples.¹

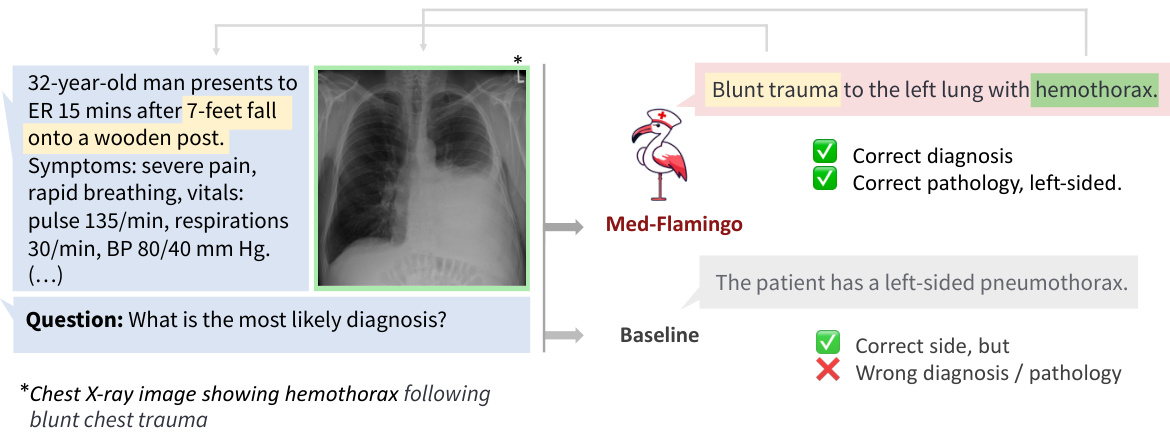

Figure 1: Example of how Med-Flamingo answers complex multimodal medical questions by generating open-ended responses conditioned on textual and visual information.

Here, we propose Med-Flamingo, the first medical foundation model that can perform multimodal in-context learning specialized for the medical domain. Med-Flamingo is a vision-language model based on Flamingo (Alayrac et al., 2022). It can naturally ingest data with interleaved modalities (images and text) and generate text conditioned on this multimodal input. Flamingo was among the first vision-language models to exhibit in-context and few-shot learning abilities. Building on its success, Med-Flamingo extends these capabilities to the medical domain by pre-training on multimodal knowledge sources across medical disciplines. To prepare for training Med-Flamingo, we first constructed a unique interleaved image-text dataset derived from a collection of over 4K medical textbooks (Section 3). Accuracy and precision are critical in medicine, and the quality, reliability, and source of the training data can considerably shape the results. Therefore, to ensure accuracy in medical facts, we curated our dataset from respected and authoritative sources of medical knowledge rather than relying on potentially unreliable web-sourced data.

In our experiments, we evaluate Med-Flamingo on generative medical visual question answering (VQA) tasks by directly generating open-ended answers. This differs from CLIP-based medical vision-language models, which score artificial answer options post hoc. We design a new, realistic evaluation protocol to measure the clinical usefulness of the model generations. For this, we conduct an in-depth human evaluation study with clinical experts, which yields a human evaluation score that serves as our main metric. In addition, existing medical VQA datasets are narrowly focused on image interpretation within radiology and pathology. We therefore create Visual USMLE, a challenging generative VQA dataset of complex USMLE-style problems across specialties. Its problems are augmented with images, case vignettes, and in some cases lab results.

Averaged across three generative medical VQA datasets, few-shot prompted Med-Flamingo achieves the best average rank in clinical evaluation score (rank of 1.67, best prior model has 2.33), indicating that the model generates answers that are most preferred by clinicians, with up to $20%$ improvement over prior models. Furthermore, Med-Flamingo is capable of performing medical reasoning, such as answering complex medical questions (such as visually grounded USMLE-style questions) and providing explanations (i.e., rationales), a capability not previously demonstrated by other multimodal medical foundation models. However, it is important to note that Med-Flamingo’s performance may be limited by the availability and diversity of training data, as well as the complexity of certain medical tasks. All investigated models and baselines would occasionally hallucinate or generate low-quality responses. Despite these limitations, our work represents a significant step forward in the development of multimodal medical foundation models and their ability to perform multimodal in-context learning in the medical domain. We release the Med-Flamingo-9B checkpoint for further research, and

在三个生成式医学VQA数据集上的平均结果显示,采用少样本提示的Med-Flamingo在临床评估得分中取得了最佳平均排名(排名1.67,此前最佳模型为2.33),表明该模型生成的答案最受临床医生青睐,相比先前模型提升高达20%。此外,Med-Flamingo能够执行医学推理任务,例如回答复杂医学问题(如基于视觉的USMLE风格试题)并提供解释(即原理阐述),这是其他多模态医学基础模型此前未展示的能力。但需注意,Med-Flamingo的性能可能受限于训练数据的可获得性、多样性以及某些医学任务的复杂性。所有被研究的模型和基线方法偶尔会出现幻觉或生成低质量回答。尽管存在这些局限,我们的工作标志着多模态医学基础模型发展及其在医学领域执行多模态上下文学习能力的重要进步。我们发布了Med-Flamingo-9B检查点以供进一步研究。

1. Multimodal pre-training on medical literature

1. 基于医学文献的多模态预训练

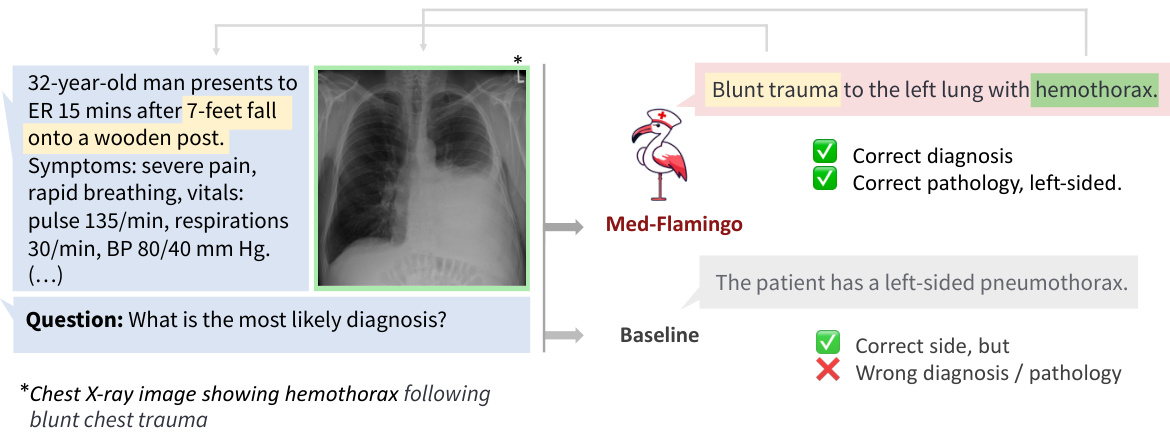

Figure 2: Overview of the Med-Flamingo model and the three steps of our study. First, we pre-train our Med-Flamingo model using paired and interleaved image-text data from the general medical domain (sourced from publications and textbooks). We initialize our model at the Open Flamingo checkpoint continue pre-training on medical image-text data. Second, we perform few-shot generative visual question answering (VQA). For this, we leverage two existing medical VQA datasets, and a new one, Visual USMLE. Third, we conduct a human rater study with clinicians to rate generations in the context of a given image, question and correct answer. The human evaluation was conducted with a dedicated app and results in a clinical evaluation score that serves as our main metric for evaluation.

图 2: Med-Flamingo 模型概览及研究三阶段。首先,我们使用通用医学领域(来自出版物和教科书)的配对及交错图文数据对 Med-Flamingo 模型进行预训练,该模型基于 Open Flamingo 检查点初始化并持续在医学图文数据上预训练。其次,我们执行少样本生成式视觉问答(VQA),利用两个现有医学 VQA 数据集及新构建的 Visual USMLE 数据集。最后,我们邀请临床医生通过专用应用程序对给定图像、问题和正确答案背景下的生成结果进行人工评分,产生的临床评估分数作为主要评估指标。

make our code available under https://github.com/snap-stanford/med-flamingo. In summary, our paper makes the following contributions:

2 RELATED WORKS

The success of large language models (LLMs) Brown et al.; Liang et al. (2022); Qin et al. (2023) has led to significant advancements in training specialized models for the medical domain. This has resulted in the emergence of various models, including BioBERT Lee et al. (2020), ClinicalBERT Huang et al. (2019), PubMedBERT Gu et al. (2021), BioLinkBERT Yasunaga et al. (b), DRAGON Yasunaga et al. (a), BioMedLM Bolton et al., BioGPT Luo et al. (2022), and Med-PaLM Singhal et al. These medical language models are typically smaller than general-purpose LLMs like GPT-3 Brown et al. Even so, they can match or even surpass those models on medical tasks such as medical question answering.

Recently, there has been growing interest in extending language models to handle vision-language multimodal data and tasks Su et al. (2019); Ramesh et al.; Alayrac et al. (2022); Aghajanyan et al.; Yasunaga et al. (2023). Furthermore, many medical applications involve multimodal information, such as radiology tasks that require the analysis of both X-ray images and radiology reports Tiu et al. (2022). Motivated by these factors, we present a medical vision-language model (VLM). Existing medical VLMs include BiomedCLIP Zhang et al. (2023a) and MedVINT Zhang et al. (2023b). BiomedCLIP is an encoder-only model. In contrast, we focus on developing a generative VLM, which outperforms MedVINT in our evaluation. Finally, LLaVA-Med Li et al. (2023) is another recent medical generative VLM; however, it was not yet available for benchmarking.

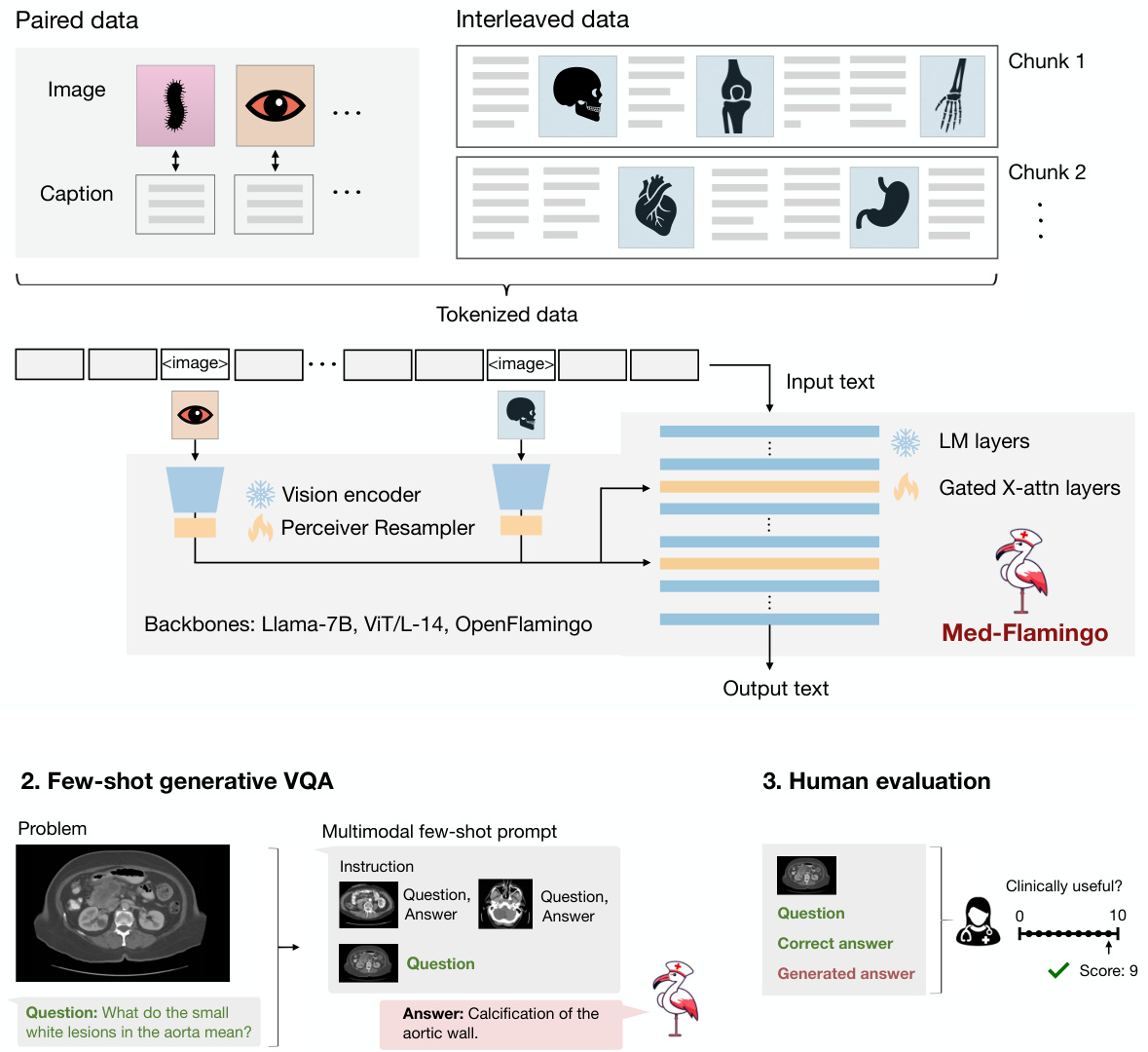

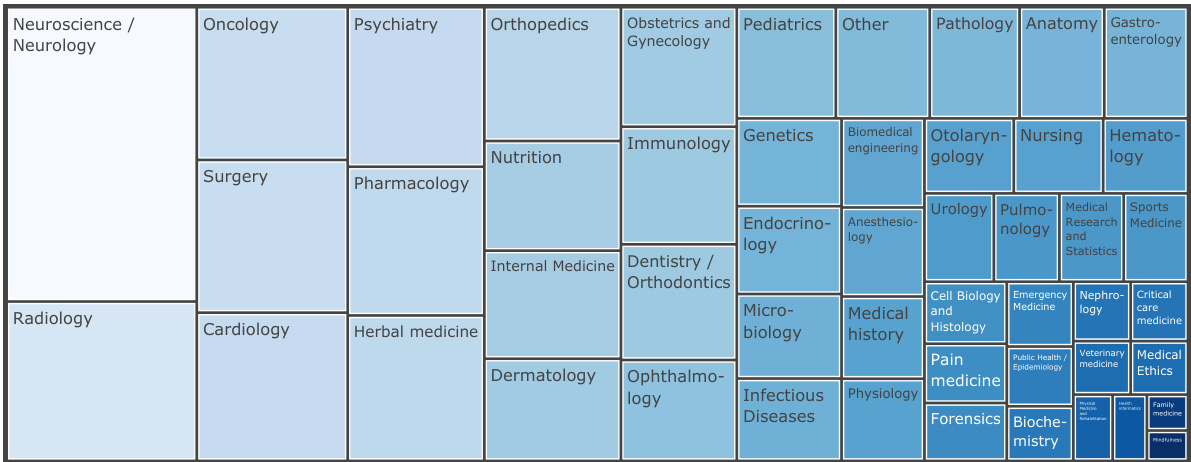

Figure 3: Overview of the distribution of medical textbook categories in the MTB dataset. Using the Claude-1 model, we classify each book title into one of 49 manually created categories or into “other”.

3 MED-FLAMINGO

To train a Flamingo model adapted to the medical domain, we leverage the pre-trained OpenFlamingo-9B model checkpoint Awadalla et al. (2023). This is a general-domain VLM built on top of the frozen language model LLaMA-7B Touvron et al. (2023) and the frozen vision encoder CLIP ViT-L/14 Radford et al. We perform continued pre-training in the medical domain, which results in the model we refer to as Med-Flamingo.

3.1 DATA

We pre-train Med-Flamingo by jointly training on interleaved image-text data and paired image-text data. For the interleaved data, we created a dataset from a set of medical textbooks, which we subsequently refer to as MTB. For the paired data, we used PMC-OA Lin et al. (2023).

MTB We construct a new multimodal dataset from a set of 4,721 textbooks from different medical specialties (see Figure 3). During preprocessing, each book is first converted from PDF to HTML. All tags are then removed except image tags, which are converted to tokens. We then clean the data via deduplication and content filtering. Finally, each cleaned book (text and images) is split into pre-training segments, each containing between 1 and 10 images and not exceeding a maximum length. In total, MTB consists of approximately 0.8M images and 584M tokens. We use 95% of the data for training and 5% for evaluation during pre-training.
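The segmentation step can be illustrated with a small greedy splitter. This is a minimal sketch, not the authors' preprocessing code. It makes several assumptions: each cleaned book is already a flat list of text tokens and image placeholders (the hypothetical `IMAGE` sentinel), the maximum length is counted in list items, and `max_len` has a hypothetical default value.

```python
from typing import List

IMAGE = "<image>"  # hypothetical placeholder that replaced an HTML image tag


def segment_book(items: List[str], max_images: int = 10, max_len: int = 256) -> List[List[str]]:
    """Greedily split a cleaned book into segments with 1..max_images images and at most max_len items.

    Segments that end up without any image are dropped, because every
    pre-training segment must contain at least one image.
    """
    segments: List[List[str]] = []
    current: List[str] = []
    n_images = 0
    for item in items:
        is_image = item == IMAGE
        # Close the current segment if adding this item would exceed a limit.
        if len(current) + 1 > max_len or (is_image and n_images == max_images):
            if n_images > 0:
                segments.append(current)
            current, n_images = [], 0
        current.append(item)
        n_images += int(is_image)
    if n_images > 0:  # keep the trailing segment only if it has an image
        segments.append(current)
    return segments
```

With `max_images=2` and `max_len=4`, the toy book `["a", IMAGE, "b", "c", IMAGE, IMAGE, "d"]` splits into `["a", "<image>", "b", "c"]` and `["<image>", "<image>", "d"]`. A purely greedy cut can separate an image from its surrounding text. A production pipeline might prefer cuts at paragraph boundaries.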

PMC-OA We adopt the PMC-OA dataset Lin et al. (2023), a biomedical dataset of 1.6M image-caption pairs collected from the PubMed Central Open Access subset. Following the public split, we use 1.3M image-caption pairs for training and 0.16M pairs for evaluation.

3.2 OBJECTIVES

We follow the original Flamingo model approach Alayrac et al., which considers the following language modelling problem:

$$
p\left(y\mid x\right)=\prod_{\ell=1}^{L}p\left(y_{\ell}\mid y_{<\ell},x_{<\ell}\right),
$$

where $y_{\ell}$ refers to the $\ell$-th language token, $y_{<\ell}$ to the set of preceding language tokens, and $x_{<\ell}$ to the set of preceding visual tokens. As we focus on modelling the medical literature, we consider only image-text data here (i.e., no videos).

Following Alayrac et al., we minimize a joint objective $\mathcal{L}$ over paired and interleaved data:

$$
\mathcal{L}=\mathbb{E}_{(x,y)\sim D_{p}}\left[-\sum_{\ell=1}^{L}\log p\left(y_{\ell}\mid y_{<\ell},x_{<\ell}\right)\right]+\lambda\cdot\mathbb{E}_{(x,y)\sim D_{i}}\left[-\sum_{\ell=1}^{L}\log p\left(y_{\ell}\mid y_{<\ell},x_{<\ell}\right)\right],
$$

where $D_{p}$ and $D_{i}$ denote the paired and interleaved datasets, respectively, and $\lambda$ weights the interleaved term. In our case, we use $\lambda=1$.
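To make the joint objective concrete, here is a minimal sketch that estimates it on one mini-batch from each dataset. It assumes the model has already produced, for every sequence, the probability it assigns to each ground-truth token $y_{\ell}$ given $y_{<\ell}$ and $x_{<\ell}$. A real training loop works with batched log-probabilities from the model and may normalize per token; neither detail is shown here.

```python
import math
from typing import List, Sequence


def sequence_nll(token_probs: Sequence[float]) -> float:
    """Negative log-likelihood of one sequence: -sum_l log p(y_l | y_<l, x_<l)."""
    return -sum(math.log(p) for p in token_probs)


def joint_loss(
    paired: List[Sequence[float]],
    interleaved: List[Sequence[float]],
    lam: float = 1.0,
) -> float:
    """Mini-batch estimate of L = E_{D_p}[NLL] + lam * E_{D_i}[NLL].

    Each expectation is approximated by the mean sequence NLL over the batch
    drawn from the corresponding dataset (paired D_p or interleaved D_i).
    """
    paired_term = sum(sequence_nll(s) for s in paired) / len(paired)
    interleaved_term = sum(sequence_nll(s) for s in interleaved) / len(interleaved)
    return paired_term + lam * interleaved_term
```

For example, one paired sequence with token probabilities `[0.5, 0.5]` and one interleaved sequence with `[0.25]` each contribute $2\log 2$. With $\lambda=1$ the loss is $4\log 2$.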

3.3 TRAINING

We performed multi-GPU training on a single node with eight 80GB NVIDIA A100 GPUs. We trained the model using DeepSpeed ZeRO Stage 2, in which optimizer states and gradients are sharded across devices. To further reduce memory load, we employed the 8-bit AdamW optimizer as well as the memory-efficient attention implementation of PyTorch 2.0. Med-Flamingo was initialized at the OpenFlamingo checkpoint and then pre-trained for 2700 steps (6.75 days of wall-clock time, including validation steps). We used 50 gradient accumulation steps and a per-device batch size of 1, resulting in a total batch size of 400 (8 GPUs × 1 × 50). The model has 1.3B trainable parameters (gated cross-attention layers and perceiver layers) and roughly 7B frozen parameters (decoder layers and vision encoder), for a total of 8.3B parameters. This is the same number of parameters as in the OpenFlamingo-9B model (version 1).
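The batch-size and parameter arithmetic above can be checked with a small sketch. The dictionary uses key names from DeepSpeed's JSON configuration format (`train_batch_size`, `train_micro_batch_size_per_gpu`, `gradient_accumulation_steps`, `zero_optimization.stage`). It is a hypothetical reconstruction from the reported numbers, not the authors' configuration file. The 8-bit AdamW optimizer and the attention implementation are set up in the training code and are omitted.

```python
# Hypothetical DeepSpeed-style settings mirroring the numbers reported above.
ds_config = {
    "train_micro_batch_size_per_gpu": 1,
    "gradient_accumulation_steps": 50,
    "train_batch_size": 400,
    "zero_optimization": {"stage": 2},  # shard optimizer states and gradients
}

NUM_GPUS = 8  # one node with 8x A100 80GB


def effective_batch_size(cfg: dict, world_size: int) -> int:
    """Global batch = micro-batch per GPU x accumulation steps x number of GPUs."""
    return (
        cfg["train_micro_batch_size_per_gpu"]
        * cfg["gradient_accumulation_steps"]
        * world_size
    )


# DeepSpeed expects train_batch_size to equal this product.
assert effective_batch_size(ds_config, NUM_GPUS) == ds_config["train_batch_size"]

# Parameter bookkeeping in billions: trainable + frozen = total.
TRAINABLE_B = 1.3  # gated cross-attention + perceiver layers
FROZEN_B = 7.0     # decoder layers + vision encoder (approximate)
total_b = TRAINABLE_B + FROZEN_B
```

The consistency check encodes the rule that the global batch is the product of the per-GPU micro-batch, the accumulation steps, and the number of GPUs.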

4 EVALUATION

4.1 AUTOMATIC EVALUATION

Baselines To compare generative VQA abilities against the literature, we consider different variants of the following baselines:

Evaluation datasets To evaluate our model and compare it against the baselines, we leverage two existing VQA datasets from the medical domain (VQA-RAD and PathVQA). Upon closer inspection of the VQA-RAD dataset, we identified severe data leakage in the official train/test splits. This is problematic because many recent VLMs fine-tune on the train split. To address this, we created a custom train/test split by separately splitting images and questions (each 90%/10%). This ensures that no image or question from the train split leaks into the test split. On these datasets, we used 6 shots for few-shot prompting.
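One possible reading of the leakage-free split is sketched below. This is an assumption, not the authors' exact procedure. The sketch splits unique images and unique question strings independently, places an example in the test split only if both its image and its question were drawn into test, and places it in train only if both were drawn into train. Mixed examples are discarded. The field names `image` and `question` are hypothetical.

```python
import random
from typing import Dict, List, Set, Tuple


def leakage_free_split(
    examples: List[Dict[str, str]], test_frac: float = 0.1, seed: int = 0
) -> Tuple[List[Dict[str, str]], List[Dict[str, str]]]:
    """Split VQA examples so that no image and no question text is shared across splits."""
    rng = random.Random(seed)

    def split_keys(keys: List[str]) -> Set[str]:
        # Shuffle the unique keys and assign a test_frac share of them to test.
        unique = sorted(set(keys))
        rng.shuffle(unique)
        n_test = max(1, round(test_frac * len(unique)))
        return set(unique[:n_test])

    test_images = split_keys([ex["image"] for ex in examples])
    test_questions = split_keys([ex["question"] for ex in examples])

    train, test = [], []
    for ex in examples:
        img_test = ex["image"] in test_images
        q_test = ex["question"] in test_questions
        if img_test and q_test:
            test.append(ex)
        elif not img_test and not q_test:
            train.append(ex)
        # Examples mixing a train image with a test question (or vice versa) are dropped.
    return train, test
```

Discarding mixed examples guarantees disjointness in both images and question strings. The cost is that some data is lost, and the test split can be much smaller than 10% of the examples.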

Furthermore, we create Visual USMLE, a challenging multimodal problem set of 618 USMLE-style questions. Each question is augmented not only with images but also with a case vignette and, potentially, tables of laboratory measurements. The Visual USMLE dataset was created by adapting problems from the Amboss platform (using licensed user access). To make the Visual USMLE problems more actionable and useful, we rephrased them to be open-ended instead of multiple-choice. This makes the benchmark harder and more realistic. The models have to come up with differential diagnoses and potential procedures entirely on their own rather than selecting the most reasonable answer from a few choices. Figure 8 gives an overview of the broad range of specialties covered in the dataset. This greatly extends existing medical VQA datasets, which are narrowly focused on radiology and pathology. Because this dataset is comparatively small, we did not create a training split for fine-tuning. Instead, we created a small train split of 10 problems that can be used for few-shot prompting. For this dataset (with considerably longer problems and answers), we used only 4 shots to fit within the context window.
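A few-shot prompt for an interleaved model places solved examples before the query. The sketch below uses the `<image>` and `<|endofchunk|>` special tokens of OpenFlamingo's interleaved text format. The "Question: ... Answer: ..." template and the function name are assumptions, not the authors' exact prompt. The images themselves would be passed to the vision encoder separately, in the same order as the `<image>` tokens.

```python
from typing import List, Tuple

# Special tokens of OpenFlamingo's interleaved text format.
IMAGE_TOKEN = "<image>"
END_OF_CHUNK = "<|endofchunk|>"


def build_few_shot_prompt(
    shots: List[Tuple[str, str]], query_question: str, n_shots: int
) -> str:
    """Interleave n_shots solved (question, answer) examples before the query.

    Each example is preceded by an image token and closed by an end-of-chunk
    token; the query ends with "Answer:" so the model generates the answer.
    """
    parts = [
        f"{IMAGE_TOKEN}Question: {question} Answer: {answer}{END_OF_CHUNK}"
        for question, answer in shots[:n_shots]
    ]
    parts.append(f"{IMAGE_TOKEN}Question: {query_question} Answer:")
    return "".join(parts)
```

Under these assumptions, VQA-RAD and PathVQA queries would use `n_shots=6`. Visual USMLE queries would use `n_shots=4`, drawn from its 10-problem train split.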

Evaluation metrics Previous works in medical vision-language modelling typically focused on scoring all available answers of a VQA dataset to arrive at a classification accuracy. However, we are interested in generative VQA rather than post hoc scoring of different potential answers. For the sake of clinical utility, we therefore employ the following evaluation metrics, which directly assess the quality of the generated answer:

4.2 HUMAN EVALUATION

We implemented a human evaluation app using Streamlit. It visually displays the generative VQA problems so that clinical experts can rate the quality of the generated answers with scores from 0 to 10. Figure 4 shows an example view of the app. For each VQA problem, the raters are provided with the image, the question, the correct answer, and a set of blinded generations (e.g., appearing as “prediction 1” in Figure 4) in randomized order.
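The blinding step can be sketched independently of the Streamlit front end. In this minimal sketch, one seeded shuffle per problem and the model names are assumptions. It hides model identities behind "prediction i" labels in random order and keeps a private key for unblinding the scores afterwards.

```python
import random
from typing import Dict, List, Tuple


def blind_generations(
    generations: Dict[str, str], seed: int
) -> Tuple[List[Tuple[str, str]], Dict[str, str]]:
    """Shuffle model outputs and relabel them as "prediction 1", "prediction 2", ...

    Returns the rater-facing list of (label, text) pairs and a private key
    mapping each label back to the model name for later unblinding.
    """
    rng = random.Random(seed)
    models = sorted(generations)  # fixed order before shuffling, for reproducibility
    rng.shuffle(models)
    shown, key = [], {}
    for i, model in enumerate(models, start=1):
        label = f"prediction {i}"
        shown.append((label, generations[model]))
        key[label] = model
    return shown, key
```

Seeding the shuffle (for example, with a per-problem ID) makes the displayed order reproducible. It still varies across problems, so raters cannot learn which position belongs to which model.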

Figure 4: Illustration of the human evaluation app that we created for clinical experts to evaluate generated answers.

4.3 DEDUPLICATION AND LEAKAGE

During the evaluation of the Med-Flamingo model, we were concerned about possible leakage between the pre-training datasets (PMC-OA and MTB) and the downstream VQA datasets used for evaluation. Such leakage could inflate judgments of model quality, as the model could memorize image-question-answer triples.

To alleviate this concern, we performed data deduplication based on pairwise similarity between images from our pre-training datasets and images from our evaluation benchmarks. We needed to detect similar images despite perturbations due to cropping, color shifts, resizing, etc. To do so, we embedded the images using Google's Vision Transformer, keeping the last hidden state as the resulting embedding Dosovitskiy et al. (2021). We then found the $k$-nearest neighbors of each evaluation image among the pre-training images (using the FAISS library) Johnson et al. (2019). We then sorted and visualized image-image pairs