Conversational Health Agents: A Personalized LLM-Powered Agent Framework

对话式健康智能体:一个个性化的大语言模型驱动智能体框架

Mahyar Abbasian, M.Sc.1* , Iman Azimi, Ph.D.1, Amir M. Rahmani, Ph.D.1, and Ramesh Jain, Ph.D.1

Mahyar Abbasian, M.Sc.1* , Iman Azimi, Ph.D.1, Amir M. Rahmani, Ph.D.1, 以及 Ramesh Jain, Ph.D.1

$^{1}$ University of California, Irvine Corresponding author, abbasiam@uci.edu September 26, 2024

$^{1}$ 加州大学欧文分校 通讯作者,abbasiam@uci.edu 2024年9月26日

ABSTRACT

摘要

Conversational Health Agents (CHAs) are interactive systems that provide healthcare services, such as assistance and diagnosis. Current CHAs, especially those utilizing Large Language Models (LLMs), primarily focus on conversation aspects. However, they offer limited agent capabilities, specifically lacking multi-step problem-solving, personalized conversations, and multimodal data analysis. Our aim is to overcome these limitations. We propose openCHA, an open-source LLM-powered framework, to empower conversational agents to generate a personalized response for users’ healthcare queries. This framework enables developers to integrate external sources including data sources, knowledge bases, and analysis models, into their LLM-based solutions. openCHA includes an orchestrator to plan and execute actions for gathering information from external sources, essential for formulating responses to user inquiries. It facilitates knowledge acquisition, problemsolving capabilities, multilingual and multimodal conversations, and fosters interaction with various AI platforms. We illustrate the framework’s proficiency in handling complex healthcare tasks via two demonstrations and four use cases. Moreover, we release openCHA as open source available to

会话健康助手 (Conversational Health Agents, CHAs) 是提供医疗保健服务的交互式系统,例如协助和诊断。当前的 CHAs,尤其是那些利用大语言模型 (LLMs) 的系统,主要关注对话方面。然而,它们提供的智能体能力有限,特别是缺乏多步骤问题解决、个性化对话和多模态数据分析。我们的目标是克服这些限制。我们提出了 openCHA,一个开源的大语言模型驱动框架,旨在使会话智能体能够为用户医疗保健查询生成个性化响应。该框架使开发者能够将外部资源(包括数据源、知识库和分析模型)集成到基于大语言模型的解决方案中。openCHA 包含一个编排器,用于规划和执行从外部资源收集信息的操作,这对制定用户查询的响应至关重要。它促进了知识获取、问题解决能力、多语言和多模态对话,并促进了与各种 AI 平台的交互。我们通过两个演示和四个用例展示了该框架在处理复杂医疗保健任务方面的能力。此外,我们将 openCHA 作为开源发布,可供...

the community via GitHub $\diamond,\diamond$ .

通过GitHub社区 $\diamond,\diamond$。

INTRODUCTION

引言

Artificial intelligence (AI), particularly large language model (LLM)-based conversational systems, has attracted immense global attention in recent years. These systems have revolutionized the field by enabling unprecedented access to and interaction with vast amounts of textual information. LLMs can aggregate and process comprehensive or focused segments of textual knowledge existing online, delivering con textually relevant, goal-oriented, and interactive access to this knowledge for anyone who needs it. The advent of LLMs has transformed early, simple conversation systems like Alexa and Siri, demonstrating significant effectiveness across diverse domains [1, 2, 3]. Convers at ional systems can now engage in open-ended conversations and provide relevant, contextual information in a more natural and engaging way.

人工智能 (AI),尤其是基于大语言模型 (LLM) 的对话系统,近年来引发了全球范围的广泛关注。这些系统通过实现对海量文本信息的空前访问与交互,彻底改变了该领域的发展格局。大语言模型能够聚合并处理网络中现有的综合性或专题性文本知识片段,为任何需求者提供情境相关、目标明确且可交互的知识获取方式。大语言模型的出现重塑了早期Alexa和Siri等简单对话系统,在多个领域展现出显著效能 [1, 2, 3]。现代对话系统已能进行开放式交流,并以更自然、更具吸引力的方式提供相关情境信息。

While the field of AI has long explored intelligent agents, their focus has primarily been on analyzing the environment and making decisions based on gathered information. Early AI research often concentrated on physical world problems, fueled by advancements in computer vision, audio processing, and other areas of multimodal perceptual understanding. However, in dynamic environments like health management, where personalized and constantly evolving human health states are crucial, intelligent agents need to accurately capture these states through various means, including conversational interactions and access to personal user data. This information needs to be collected and analyzed, leveraging the vast knowledge gathered through research and practitioners’ experience.

尽管AI领域长期探索智能体(AI Agent),但其重点主要集中于环境分析和基于收集信息做出决策。早期AI研究常聚焦于物理世界问题,这得益于计算机视觉、音频处理及其他多模态感知理解领域的进步。然而在健康管理等动态环境中,个性化且持续变化的人类健康状态至关重要,智能体需要通过对话交互和用户个人数据访问等多种方式精准捕捉这些状态。这些信息需借助研究与实践经验积累的庞大知识库进行收集与分析。

Conversational Health Agents (CHAs) hold significant potential to address the challenges of dynamic health management environments. Thanks to the emergence of LLMs, CHAs can now understand user interactions through multimodal conversations, encompassing text, speech, and potentially other modalities. By analyzing these interactions, CHAs need to identify the necessary data, information, computational processes, and knowledge sources required to comprehend the user’s evolving health state. This information is then translated into actionable insights that effectively guide healthcare management. In essence, CHAs should combine the power of LLM-based conversions with agents’ capabilities, leveraging external data and information sources to navigate the complexities of personalized health environments and provide customized support for users:

对话式健康助手 (Conversational Health Agents, CHAs) 在应对动态健康管理环境挑战方面具有巨大潜力。得益于大语言模型 (LLM) 的出现,CHA 现在能够通过文本、语音乃至更多模态的多模态对话理解用户交互。通过分析这些交互,CHA 需要识别理解用户动态健康状态所需的数据、信息、计算流程和知识来源,进而将其转化为可执行的洞察,有效指导健康管理。本质上,CHA 应结合基于大语言模型的对话能力与智能体 (agent) 功能,利用外部数据和信息源来驾驭个性化健康环境的复杂性,为用户提供定制化支持:

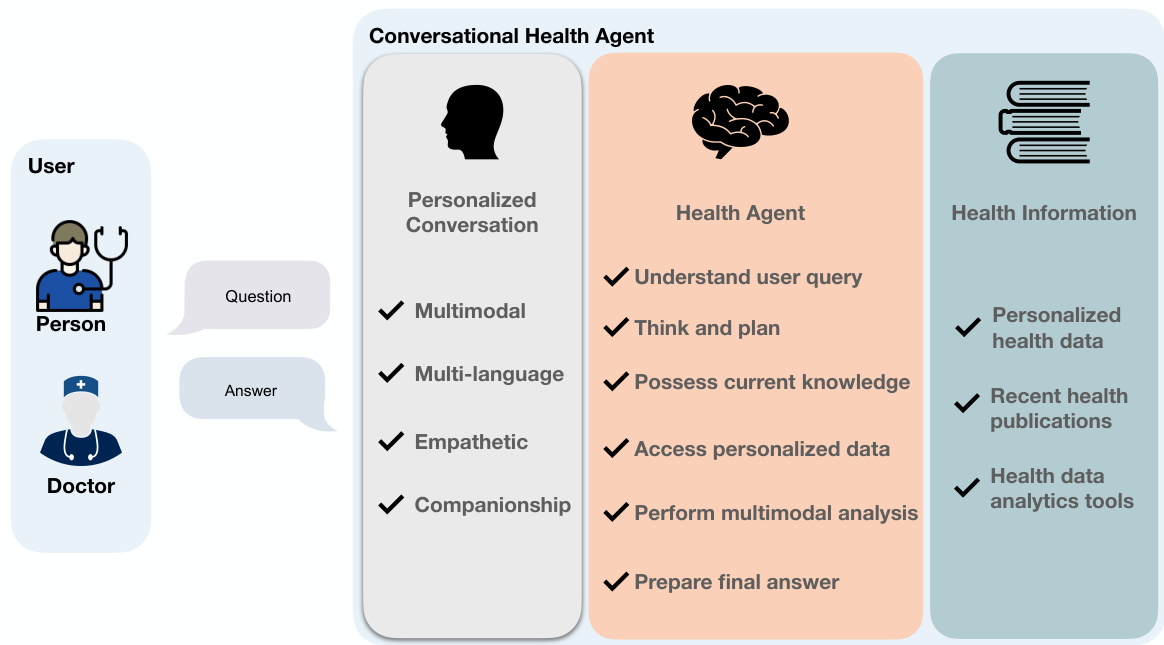

Our current exploration centers on the development of CHAs using the latest technological developments in AI, LLMs, and mHealth, where it has shown efficacy in the continuous collection of lifestyle and physiological data from users. Figure 1 shows an overview of the CHA main components. These indispensable components stand poised to facilitate the creation of exceptionally efficient CHAs.

我们当前的研究重点是利用AI、大语言模型(LLM)和移动健康(mHealth)领域的最新技术进展来开发CHA(Conversational Health Assistant)。该系统已展现出在持续收集用户生活方式和生理数据方面的有效性。图1展示了CHA的主要组件概览,这些核心组件将为创建高效CHA系统提供关键支持。

Existing LLMs, such as ChatGPT [1], BioGPT [12], ChatDoctor [13], and Med-PaLM [3], are currently active in the medical knowledge domain. These LLMs can be served as CHAs [14, 15, 9, 16, 17]. However, they merely focus on the conversational aspects, offering limited agent capabilities such as basic text-based chat interfaces and lacking multi-step problem-solving capabilities. They lack access to users’ personally collected longitudinal data and electronic health records (EHRs), which include crucial information like vital signs, biosignals (e.g., electrocardiogram), medical images, and demographic data. Consequently, their responses tend to be generic and may not address individual health circumstances adequately. Moreover, they struggle to incorporate the latest health insights, leading to potentially outdated responses [18]. Furthermore, these chatbots do not seamlessly integrate with established and existing AI models and tools [19] for multimodal predictive modeling, rendering previous healthcare efforts obsolete.

现有的大语言模型(LLM),如ChatGPT [1]、BioGPT [12]、ChatDoctor [13]和Med-PaLM [3],目前活跃于医学知识领域。这些大语言模型可作为对话式健康助手(CHA)[14, 15, 9, 16, 17]使用。然而,它们仅聚焦于对话功能,提供的智能体能力有限,例如基于文本的基础聊天界面,且缺乏多步骤问题解决能力。它们无法访问用户个人收集的纵向数据和电子健康记录(EHR),这些数据包含生命体征、生物信号(如心电图)、医学影像和人口统计资料等关键信息。因此,它们的回答往往流于泛泛,可能无法充分应对个体健康情况。此外,这些模型难以整合最新的健康洞察,导致回答可能过时[18]。更重要的是,这些聊天机器人无法与现有成熟的人工智能模型和工具[19]无缝集成以进行多模态预测建模,使得先前的医疗保健努力失去效用。

Figure 1: A conversational health agent including 1) a conversation component to enable user interaction and 2) a health agent for problem-solving and determining the optimal sequences of actions, leveraging health information.

图 1: 一个包含以下两部分的对话式健康智能体 (conversational health agent) : 1) 用于实现用户交互的对话组件, 2) 利用健康信息进行问题解决和确定最佳行动序列的健康智能体。

In light of the significant advancements in technology and its paramount importance for both humanity and the environment, it becomes imperative that we synergize all available tools and harness knowledge from diverse sources to craft CHAs that offer a trustworthy, understandable, and actionable environment for a global audience. Presently, we stand on the threshold of crafting frameworks capable of delivering information in the most userfriendly and culturally attuned manner possible. This paper aims to introduce an initial iteration of such agents and lay the foundation for developing more sophisticated tools as our journey unfolds.

鉴于技术的重大进步及其对人类和环境的至关重要性,我们必须协同利用所有可用工具,整合多元知识来源,构建能为全球受众提供可信、可理解且可操作环境的CHA (Computational Human Agent)。当前,我们正站在开发新型框架的门槛上——这些框架能以最用户友好且文化适配的方式传递信息。本文旨在介绍此类AI智能体的初始版本,并为后续开发更复杂工具奠定基础。

BACKGROUND AND SIGNIFICANCE

背景与意义

Related Work

相关工作

Efforts in developing LLM-based CHAs can be categorized into three main groups: LLM Chatbots, Specialized Health LLMs, and Multimodal Health LLMs. LLM Chatbots employ and evaluate current chatbots (e.g., ChatGPT) in executing distinct healthcare functions [20, 21, 22, 23, 24]. For instance, Chen et al. [23] examined ChatGPT’s efficacy in furnishing dependable insights on cancer treatment-related inquiries.

基于大语言模型的CHA开发工作可分为三大类:大语言模型聊天机器人、专业医疗大语言模型和多模态医疗大语言模型。大语言模型聊天机器人通过调用现有聊天机器人(如ChatGPT)来执行特定医疗功能并评估其表现[20, 21, 22, 23, 24]。例如Chen等[23]研究了ChatGPT在提供癌症治疗相关咨询时的可靠性。

Specialized Health LLMs delved deeper into the fundamental aspects of LLMs, aiming to enhance conversational models’ performance by creating entirely new LLMs pretrained specifically for healthcare or fine-tuning existing models. Notable examples include initiatives such as ChatDoctor [13], MedAlpaca [2], and BioGPT [12]. This category emerged in response to research indicating that general-domain LLMs often struggle with healthcarespecific tasks due to domain shift [25, 26], and relying solely on prompt engineering may not significantly improve their healthcare-specific performance [27, 28].

专业医疗大语言模型深入探索了大语言模型的基础层面,旨在通过创建专为医疗保健领域预训练的全新大语言模型或微调现有模型,提升对话模型的性能。代表性案例包括ChatDoctor [13]、MedAlpaca [2]和BioGPT [12]等项目。该领域的兴起源于研究表明,由于领域偏移问题 [25, 26],通用领域大语言模型在医疗专项任务中往往表现不佳,且仅依赖提示工程可能无法显著提升其医疗场景性能 [27, 28]。

Multimodal Health LLMs involve a novel trajectory by integrating multimodality into LLMs for diagnostic functions. For instance, Tu et al. [3] investigated the potential of foundational transformer concepts in LLMs to amalgamate diverse modalities—videos, images, signals, and text—culminating in a multimodal generative model. Xu et al. [29] introduced an LLM-aligned multimodal model, coupling chest X-ray images with radiology reports for Xray-related tasks. Similarly, Belyaeva et al. [30] incorporated tabular health data into LLMs, yielding multimodal healthcare capabilities.

多模态健康大语言模型通过将多模态整合到大语言模型中实现诊断功能,开创了一条新路径。例如,Tu等人[3]探索了基于Transformer架构的大语言模型融合视频、图像、信号和文本等多种模态的潜力,最终构建出多模态生成模型。Xu等人[29]提出了一种与大语言模型对齐的多模态模型,将胸部X光图像与放射学报告相结合用于X射线相关任务。类似地,Belyaeva等人[30]将表格化健康数据融入大语言模型,实现了多模态医疗能力。

Existing Research Gaps and Challenges

现有研究空白与挑战

Knowledge-grounded ness and personalization in CHAs require tailored interactions that transcend basic dialogues, ensuring in clu siv it y through versatile, multimodal multilingual interfaces. The goal is to create CHAs that not only excel in conversational skills but also exhibit agent capabilities, enabling them to engage in critical thinking and strategic planning as proficient problem solvers. Despite the great efforts in developing CHAs, the existing services and models suffer from the following limitations:

知识基础性和个性化在对话式人工智能助手 (CHA) 中需要超越基础对话的定制化交互,通过多功能、多模态、多语言的界面确保包容性。目标是打造不仅擅长对话技巧,还能展现智能体能力的 CHA,使其能够像熟练的问题解决者一样进行批判性思考和战略规划。尽管在开发 CHA 方面付出了巨大努力,现有服务和模型仍存在以下局限:

i) Insufficient support for comprehensive personalization, particularly in cases necessitating real-time access to individualized data. A substantial portion of users’ healthcare data, primarily images, time-series, tabular data, and all other users’ measured personal data streams is housed within healthcare platforms. Currently, CHAs have limited access to this data, primarily during the training and fine-tuning phases of LLM development, or they are completely severed from user data thereafter. The absence of accurate user healthcare information – including continuous data from wearable devices, mHealth applications, and similar sources – hampers the performance of these agents, confining their capabilities to furnish generic responses, offer general guidelines, or potentially provide inaccurate answers.

i) 对全面个性化支持不足,尤其在需要实时访问个体化数据的情况下。用户的大部分医疗数据(主要包括图像、时间序列、表格数据以及其他所有用户测量的个人数据流)存储在医疗平台中。目前,CHA(Conversational Health Assistants)对这些数据的访问权限有限,主要集中在大语言模型开发的训练和微调阶段,此后便与用户数据完全隔离。缺乏准确的用户医疗信息(包括来自可穿戴设备、移动健康应用等的连续数据)会削弱这些智能体的表现,使其只能提供通用回复、一般性指导,甚至可能给出错误答案。

ii) Limited capacity to access up-to-date knowledge and retrieve the most recent healthcare knowledge base. Conventional LLMs depend on limited data and Internet-derived knowledge during their training phase, leading to three primary challenges. They tend to exhibit biases favoring populations with the most abundant online content, underscoring the importance of accessing the latest, relevant data. Recently introduced LLM-based services (e.g., ChatGPT4 [1]) offer Internet search, but this is still insufficient for healthcare applications due to the large number of websites propagating false information. They lack updates on newly reliable Internet resources, a critical shortcoming in healthcare where novel, reliable, and evaluated treatments and modifications to previous recommendations are frequently ignored. Lastly, their reliance on outdated or less pertinent data makes identifying instances of hallucination [31] problematic. Lack of up-to-date information reduces the trustworthiness and credibility of generated responses [18].

ii) 获取最新知识和检索最新医疗知识库的能力有限。传统大语言模型在训练阶段依赖于有限的数据和互联网获取的知识,这导致三个主要挑战。它们往往倾向于偏向在线内容最丰富的人群,突显了获取最新相关数据的重要性。最近推出的基于大语言模型的服务(如ChatGPT4 [1])提供了互联网搜索功能,但由于存在大量传播虚假信息的网站,这对医疗应用仍显不足。它们缺乏对新可靠互联网资源的更新,这在医疗领域是一个关键缺陷,因为新颖、可靠且经过评估的治疗方法以及对先前建议的修改经常被忽视。最后,它们对过时或相关性较低数据的依赖使得识别幻觉实例 [31] 变得困难。缺乏最新信息会降低生成回答的可信度和可靠性 [18]。

iii) Lack of seamless integration with established, multimodal data analysis tools and predictive models that require external execution. Current agents often overestimate the computational capabilities of generative AI, leading to an under-utilization of well-established healthcare analysis tools, despite their proficiency in managing diverse data types [19].

iii) 缺乏与成熟的、需要外部执行的多模态数据分析工具和预测模型的无缝集成。当前的AI智能体往往高估了生成式AI (Generative AI) 的计算能力,导致对成熟的医疗分析工具利用不足,尽管这些工具擅长处理多种数据类型 [19]。

iv) Lack of multi-step problem-solving capabilities. Existing LLM-based CHAs are typically specialized for specific tasks or deficient in robust data analysis capabilities. For example, Xu et al. [29] model performs X-ray image reporting relying solely on X-ray images, ignoring other modalities, such as vital signs recorded in time-series format. Additionally, the existing CHAs cannot address intricate sequential tasks (i.e., act as problem solvers). Incorporating LLMs into CHAs requires integrating sequential reasoning, personalized health history analysis, and data fusion.

iv) 缺乏多步骤问题解决能力。现有基于大语言模型的CHA通常专精于特定任务,或缺乏强大的数据分析能力。例如Xu等人[29]的模型仅依赖X光图像生成报告,忽略了其他模态数据(如时间序列格式记录的体征数据)。此外,现有CHA无法处理复杂序列任务(即作为问题解决者)。将大语言模型整合到CHA中需要融合序列推理、个性化健康史分析和数据融合能力。

LLMs solely are insufficient to tackle the previously mentioned challenges. To make them practical for real-world applications, we need a comprehensive framework that harnesses LLMs while integrating various auxiliary components and external resources.

仅靠大语言模型 (LLM) 不足以应对上述挑战。要让它们在实际应用中发挥作用,我们需要一个综合框架,既能利用大语言模型,又能整合各种辅助组件和外部资源。

Key Contributions

主要贡献

In this article, we present a holistic LLM-powered framework for the development of CHA, aiming to rectify the limitations mentioned above. We delineate the components of our framework and proceed with a case study demonstrating our agent’s capacity. The framework is a problem solver that provides personalized responses by utilizing contemporary Internet resources and advanced multimodal healthcare analysis tools, including machine learning methods. Significant contributions are as follows.

本文提出了一种基于大语言模型(LLM)的整体性框架用于CHA开发,旨在解决上述局限性。我们详细阐述了该框架的组成部分,并通过案例研究展示了该AI智能体的能力。该框架是一个问题解决系统,能够利用现代互联网资源和先进的多模态医疗分析工具(包括机器学习方法)提供个性化响应。主要贡献如下:

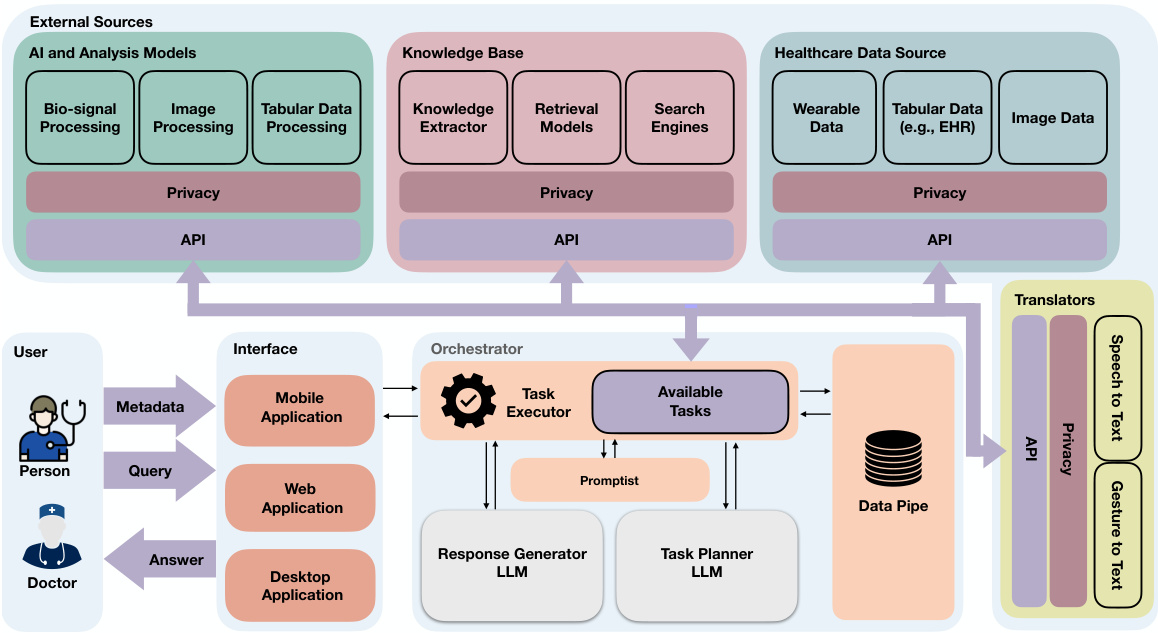

Figure 2: An overview of the proposed LLM-powered framework leveraging a service-based architecture

图 2: 采用基于服务架构的LLM驱动框架概览

MATERIAL AND METHODS

材料与方法

We design an LLM-powered framework with a central agent that perceives and analyzes user queries, provides appropriate responses, and manages access to external resources through Application Program Interfaces (APIs) or function calls. The user-framework interaction is bidirectional, ensuring a conversational tone for ongoing and follow-up conversations. Figure 2 shows an overview of the framework, including three major components: Interface, Orchestrator, and External Sources.

我们设计了一个基于大语言模型(LLM)的框架,其核心是一个能够感知和分析用户查询、提供适当响应并通过应用程序接口(API)或函数调用管理外部资源访问的中央AI智能体。用户与框架的交互是双向的,确保对话语气适用于持续和后续的对话。图2展示了该框架的概览,包括三个主要组件:接口(Interface)、协调器(Orchestrator)和外部资源(External Sources)。

Interface

接口

Interface acts as a bridge between the users and agents, including interactive tools accessible through mobile, desktop, or web applications. It integrates multimodal communication channels, such as text and audio. The Interface receives users’ queries and subsequently transmits them to the Orchestrator (see Figure 2).

接口作为用户与AI智能体之间的桥梁,包括可通过移动设备、桌面或网页应用程序访问的交互工具。它集成了文本和音频等多模态通信渠道。该接口接收用户查询后,将其传输至协调器 (参见图 2)。

Within this framework, users can provide metadata (alongside their queries), including images, audio, gestures, and more. For instance, a user could capture an image of their meal and inquire about its nutritional values or calorie content, with the image serving as metadata.

在此框架下,用户可提供元数据(metadata)(随查询一并提交),包括图像、音频、手势等。例如,用户可拍摄餐食照片并询问其营养价值或卡路里含量,该图像将作为元数据使用。

Orchestrator

编排器

The Orchestrator is the openCHA agent core, which is responsible for problemsolving, planning, executing actions, and providing an appropriate response based on the user query. It incorporates the concept of the Perceptual Cycle Model [32] in openCHA, allowing it to perceive, transform, and analyze the world (i.e., input query and metadata) to generate appropriate responses. To this end, the input data are aggregated, transformed into structured data, and then analyzed to plan and execute actions. Through this process, the Orchestrator interacts with external sources to acquire the required information, perform data integration and analysis, and extract insights, among other functions. In the following, we outline five major components of the Orchestrator.

Orchestrator是openCHA智能体的核心,负责问题解决、规划、执行动作,并根据用户查询提供适当响应。它融合了感知循环模型(Perceptual Cycle Model) [32] 的概念,能够感知、转换并分析世界(即输入查询和元数据)以生成恰当回应。为此,输入数据会被聚合、转换为结构化数据,随后进行分析以规划和执行动作。通过这一过程,Orchestrator与外部源交互以获取所需信息,执行数据集成与分析,并提取洞察等功能。下文将概述Orchestrator的五个主要组件。

The Task Planner is the LLM-enabled decision-making, planning, and reasoning core of the Orchestrator. Its primary responsibility is gathering all necessary information to answer users’ queries. To achieve this, it interprets the user’s query and metadata, identifying the necessary steps for task execution.

任务规划器(Task Planner)是大语言模型(LLM)驱动的协调器决策核心,主要负责规划、推理以及收集回答用户查询所需的所有信息。为此,它会解析用户查询及元数据,明确任务执行所需的步骤。

To transform a user query into a sequence of tasks, we incorporate the Tree of Thought [33] prompting methods into the Task Planner. Using this prompting method, the LLM is asked to 1) generate three unique strategies (i.e., sequences of tasks to be called with their inputs), 2) describe the pros and cons of each strategy, and 3) select one as the best strategy. An alternative prompting technique incorporated in openCHA is ReAct [34], which employs reasoning and action techniques to ascertain the essential tasks to be executed. openCHA offers users the flexibility to choose the prompting method that best meets the needs of their application. Other prompting techniques, such as Plan-and-Solve Prompting [35], could also be implemented and integrated as a Task Planner.

为了将用户查询转化为任务序列,我们在任务规划器中融入了思维树 (Tree of Thought) [33] 提示方法。通过这种方法,大语言模型需要:1) 生成三种独特策略(即待调用任务及其输入的序列),2) 描述每种策略的优缺点,3) 选择其中一种作为最佳策略。openCHA采用的另一种提示技术是ReAct [34],该方法通过推理与行动技术来确定需要执行的核心任务。openCHA允许用户根据应用需求灵活选择提示方法。其他提示技术(如计划求解提示 (Plan-and-Solve Prompting) [35])也可作为任务规划器进行集成实现。

We outline the process of creating and integrating a task into openCHA in Appendix 1. We also indicate how the task is converted into an appropriate prompt, enabling the Task Planner to recognize the available tasks and how to invoke them. Appendix 2 provides a detailed examination of the Task Planner’s implementation, utilizing the Tree of Thought prompting method.

我们在附录1中概述了在openCHA中创建和集成任务的过程。同时说明了如何将任务转换为合适的提示(prompt),使任务规划器(Task Planner)能够识别可用任务及其调用方式。附录2详细分析了任务规划器的实现,其中采用了思维树(Tree of Thought)提示方法。

In the proposed Orchestrator, the planning part is performed in English, leveraging the superior capabilities of LLMs in this language. The framework can employ one of two distinct approaches if the query is in a language other than English. The first approach retrains the source language and utilizes the language model capabilities in that language to generate responses. The second approach involves translating the query into English (e.g., using Google Translate), planning and executing the process in English, and translating the final answer back into the source language.

在所提出的Orchestrator中,规划部分采用英语执行,以发挥大语言模型在该语言上的优势。当查询语言为非英语时,该框架可采用两种不同方法之一:第一种方法重新训练源语言,并利用该语言的大语言模型能力生成响应;第二种方法将查询翻译为英语(例如使用Google Translate),用英语进行规划执行,最后将最终答案回译为源语言。

The Task Executor carries out actuation within the Orchestrator by following the planning and task execution steps determined by the Task Planner. The Task Executor has two primary responsibilities. First, it acts as a data converter, converting the input query and metadata and preparing it to be used by the Task Planner. For instance, if the question is in a language other than English, it will be translated into English using the Google Translate service [36]. Furthermore, if the metadata contains files or images, Task Executor sends the metadata details to Task Planner for planning. Second, the Task Executor executes tasks generated by the Task Planner through interactions with external sources. The results are then relayed to the Task Planner to continue planning if needed. In the end, the Task Planner signals the end of the planning. In Appendix 2, we detail how the Task Planner translates planned tasks into execution instructions, enabling the Task Executor to properly carry out the tasks.

任务执行器 (Task Executor) 在协调器 (Orchestrator) 内通过遵循任务规划器 (Task Planner) 确定的规划和任务执行步骤来实施操作。任务执行器有两个主要职责:首先,它作为数据转换器,对输入查询和元数据进行转换并准备供任务规划器使用。例如,若问题是非英语语言,则会通过谷歌翻译服务 [36] 将其转换为英文。此外,若元数据包含文件或图像,任务执行器会将元数据细节发送给任务规划器进行规划。其次,任务执行器通过与外部源的交互来执行任务规划器生成的任务,随后将结果反馈给任务规划器以继续规划(如有需要)。最终,任务规划器发出规划结束信号。附录2详细说明了任务规划器如何将规划任务转换为执行指令,从而使任务执行器能够正确执行任务。

It is crucial to emphasize that communication between the task planner and task executor is bidirectional. An iterative process continues between the Task Executor and Task Planner until the Task Planner accumulates sufficient information to respond appropriately to the user’s inquiry. This two-way exchange proves indispensable because, in specific scenarios, the Task Planner may necessitate intermediate information to determine subsequent actions.

必须强调的是,任务规划器与任务执行器之间的通信是双向的。任务执行器与任务规划器会持续进行迭代交互,直到任务规划器积累足够信息以恰当回应用户查询。这种双向交互不可或缺,因为在特定场景中,任务规划器可能需要中间信息才能决定后续行动。

The Data Pipe is a repository of metadata and data acquired from External Sources through the execution of conversational sessions. This component is essential because numerous multimodal analyses involve intermediate stages, and their associated data must be retained for future retrieval. The intermediate data might be large, surpassing token limits, or challenging to comprehend and utilize by the Task Planner’s or Response Generator’s LLM. The Data Pipe is automatically managed by the Task Executor. It monitors the stored metadata and intermediate data.

数据管道(Data Pipe)是一个存储库,包含通过对话会话从外部源获取的元数据和数据。该组件至关重要,因为许多多模态分析涉及中间阶段,必须保留相关数据以供后续检索。这些中间数据可能体量庞大超出token限制,或难以被任务规划器(Task Planner)和响应生成器(Response Generator)的大语言模型理解使用。数据管道由任务执行器(Task Executor)自动管理,持续监控存储的元数据和中间数据。

The Data Pipe in openCHA can range from a simple in-memory key/value storage for intermediate data to a more complex database system. The proposed framework allows developers to determine whether their tasks’ results are intermediate or should be directly returned to the LLM. Appendix 1 details how developers can configure this setting.

openCHA中的数据管道(Data Pipe)可以是从简单的内存键值存储(用于中间数据)到更复杂的数据库系统。该框架允许开发者决定其任务结果是作为中间数据还是直接返回给大语言模型。附录1详细说明了开发者如何配置此设置。

Additionally, we have implemented a mechanism whereby an intermediate result stored in the Data Pipe generates a unique key as the task’s outcome. This key is then provided in the Task Planner prompt, aiding the Task Planner in recognizing and utilizing this data as necessary. Appendix 4 illustrates sample prompts generated for tasks and demonstrates how the Task Planner employs the Data Pipe key.

此外,我们还实现了一种机制:存储在数据管道 (Data Pipe) 中的中间结果会生成一个唯一键作为任务输出。该键随后被提供给任务规划器 (Task Planner) 提示词,帮助任务规划器在需要时识别并利用这些数据。附录4展示了为任务生成的提示词示例,并演示了任务规划器如何使用数据管道键。

The Promptist is responsible for transforming query text or outcomes from External Sources into suitable prompts that can be supplied to either the Task Planner or the Response Generator. The Promptist provides the flexibility to modify and adapt each technique, allowing for seamless integration and customization. It can be implemented using existing prompting techniques, some of which are listed as follows.

Promptist负责将来自外部源的查询文本或结果转化为适合提供给任务规划器(Task Planner)或响应生成器(Response Generator)的提示词。该模块支持灵活修改和调整每种技术,实现无缝集成与定制化。其实现可基于现有提示技术,部分技术列举如下:

LLM-REC, proposed by Lyu et al. [37], employs four unique prompting strategies to enrich text descriptions, enhancing personalized text-based recom mend at ions. The approach leverages the LLM to understand item charact eris tics, significantly improving recommendation quality. Additionally, the Hao et al. [38] method can be leveraged, which optimizes text-to-image prompt generation through a framework called prompt adaptation. It auto mati call y refines user inputs into model-preferred prompts. This process starts with supervised fine-tuning of a pretrained language model using a curated set of prompts. It then employs reinforcement learning, guided by a reward function, to identify more effective prompts that produce aesthetically pleasing images aligned with user intentions. Furthermore, the instructions provided by OpenAI on creating more effective prompts can be used [39].

LLM-REC由Lyu等人[37]提出,采用四种独特的提示策略来丰富文本描述,从而提升基于文本的个性化推荐效果。该方法利用大语言模型理解物品特征,显著提高了推荐质量。此外,可借鉴Hao等人[38]提出的方法,该方案通过名为提示适配(prompt adaptation)的框架优化文本到图像的提示生成,自动将用户输入优化为模型偏好的提示。该流程首先使用精选提示集对预训练语言模型进行监督微调,随后通过奖励函数引导的强化学习来识别能生成符合用户意图且具有美学吸引力图像的高效提示。还可参考OpenAI提供的优化提示创建指南[39]。

The Response Generator is an LLM-based module responsible for preparing the response. It refines the gathered information by the Task Planner, converting it into an understandable format and inferring the appropriate response. We separate the Response Generator and Task Planner to allow flexibility in choosing diverse LLM models and prompting techniques for these components. This division ensures that the Task Planner focuses solely on planning without responding to users, while the Response Generator utilizes gathered information to deliver conclusive responses. This segregation facilitates the Response Generator in addressing aspects of empathy and companionship in conversations. In contrast, the Task Planner primarily handles personalization and the up-to-dateness of conversations. Appendix

响应生成器是一个基于大语言模型的模块,负责准备响应。它会对任务规划器收集的信息进行提炼,将其转换为易于理解的格式并推断出合适的回应。我们将响应生成器与任务规划器分离,以便灵活选择不同的大语言模型和提示技术来构建这些组件。这种分工确保任务规划器只需专注于规划而无需直接响应用户,而响应生成器则利用收集到的信息来提供最终答复。这种隔离设计有助于响应生成器在对话中体现共情与陪伴功能,而任务规划器主要处理对话的个性化与时效性。附录

3 outlines the implementation of the Response Generator and how it utilizes results collected by the Task Planner to respond to the user effectively.

3 概述了响应生成器 (Response Generator) 的实现方式,以及它如何利用任务规划器 (Task Planner) 收集的结果来有效响应用户。

External Sources

外部资源

External Sources play a pivotal role in obtaining essential information from the broader world. Typically, these External Sources furnish application program interfaces (APIs) that the Orchestrator can use to retrieve required data, process them using AI or analysis tools, and extract meaningful health information. In openCHA, we integrate with four primary external sources, which we found critical for CHAs (see Figure 2).

外部数据源在从更广阔的世界获取关键信息方面发挥着至关重要的作用。通常,这些外部数据源提供应用程序接口(API),编排器可通过这些接口获取所需数据,使用AI或分析工具进行处理,并提取有意义的健康信息。在openCHA中,我们整合了四个主要外部数据源,这些对CHA至关重要(见图2)。

Healthcare Data Source enables the collection, ingestion, and integration of data captured from a variety of sources, such as Electronic Health Record (EHR), smartphones, and smart watches, for healthcare purposes [40]. Examples of data sources are mHealth platforms and healthcare databases. mHealth platforms have garnered significant attention in the recent wave of healthcare digital iz ation, enabling ubiquitous health monitoring [41, 42]. The data encompass various modalities, including biosignals (e.g., PPG collected via a smartwatch), images (e.g., captured via user’s smartphone), videos, tabular data (e.g., demographic data gathered from EHR), and more. Notable examples of such healthcare platforms include ZotCare [43] and ilumivu [44], offering APIs for third-party integration. In our context, the Orchestrator functions as a third party, accessing user data with their consent.

医疗数据源 (Healthcare Data Source) 能够为医疗健康目的收集、摄取和整合来自电子健康档案 (EHR)、智能手机和智能手表等多种来源的数据 [40]。数据源的例子包括移动医疗 (mHealth) 平台和医疗数据库。在最近的医疗数字化浪潮中,移动医疗平台因其支持无处不在的健康监测而受到广泛关注 [41, 42]。这些数据涵盖多种模态,包括生物信号 (例如通过智能手表采集的光电容积图 (PPG))、图像 (例如通过用户智能手机拍摄的照片)、视频、表格数据 (例如从电子健康档案中收集的人口统计数据) 等。此类医疗平台的典型例子包括 ZotCare [43] 和 ilumivu [44],它们提供第三方集成 API。在我们的场景中,协调器 (Orchestrator) 作为第三方,在用户同意的情况下访问其数据。

Knowledge Base fetches the most current and pertinent data from healthcare sources, such as healthcare literature, reputable websites, or knowledge graphs using search engines or retrieval models [45, 46, 47, 48]. Accessing this retrieved information equips CHAs with up-to-date, personalized knowledge, enhancing its trustworthiness while reducing hallucination and bias. openCHA allows the integration of various knowledge bases to be defined and configured as tasks.

知识库 (Knowledge Base) 从医疗健康来源(如医学文献、权威网站或知识图谱)通过搜索引擎或检索模型 [45, 46, 47, 48] 获取最新且相关的数据。访问这些检索到的信息使CHA具备最新的个性化知识,从而增强其可信度,同时减少幻觉和偏见。openCHA支持集成多种可定义和配置为任务的知识库。

AI and Analysis Models provide data analytics tools to extract information, associations, and insights from data [49, 19], playing a crucial role in the evolving landscape of LLM-healthcare integration, enhancing trustworthiness and personalization. They can perform various tasks, including data denoising, abstraction, classification, and event detection, to mention a few [49, 19]. As generative models, LLMs cannot effectively perform extensive computations or act as machine learning inferences on data. The AI platforms empower our framework to leverage existing health data analytic

AI与分析模型提供数据分析工具,用于从数据中提取信息、关联和洞察[49,19],在大语言模型与医疗健康融合的发展中发挥着关键作用,增强了可信度与个性化。这些工具能执行多种任务,包括数据去噪、抽象化、分类及事件检测等[49,19]。作为生成式模型,大语言模型无法有效执行大规模计算或对数据进行机器学习推理。AI平台使我们的框架能够利用现有健康数据分析...

approaches.

方法。

Translators effectively convert various languages into widely spoken languages, such as English, thereby enhancing the accessibility and in clu siv it y of CHAs. Existing agents face limitations that hinder their usability for large communities globally. Universal text literacy for CHAs often narrows their reach and positions them as a privilege [50, 51, 52]. Many under served communities face obstacles while using CHAs due to their educational disparities, financial constraints, and biases that favor developed nations within existing technological paradigms. Our framework integrates with Translator platforms and is designed to accommodate and support communication with diverse communities. This integration enhances the overall usability of CHAs.

翻译器能有效将各种语言转换为英语等广泛使用的语言,从而提升对话式健康助手(CHA)的可及性与包容性。现有智能体存在局限性,阻碍了全球大规模群体的使用。对话式健康助手对文本识读能力的普遍要求往往缩小了其覆盖范围,使其成为一种特权[50, 51, 52]。由于教育差异、经济条件限制以及现有技术范式对发达国家的偏向性,许多弱势群体在使用对话式健康助手时面临障碍。我们的框架与翻译平台集成,旨在适应并支持与多元社群的沟通,从而提升对话式健康助手的整体可用性。

The selection of the external sources is based on the information and knowledge they provide and their interaction with the Orchestrator. The Orchestrator handles various data types in the proposed framework, including text, JSON formatted data, or unstructured data such as images and audio. This design ensures that any external source capable of returning results in these formats is supported.

外部源的选择基于它们提供的信息和知识,以及它们与协调器 (Orchestrator) 的交互。在提出的框架中,协调器处理各种数据类型,包括文本、JSON格式数据或非结构化数据(如图像和音频)。这一设计确保能够支持任何可以返回这些格式结果的外部源。

DEMONSTRATION

演示

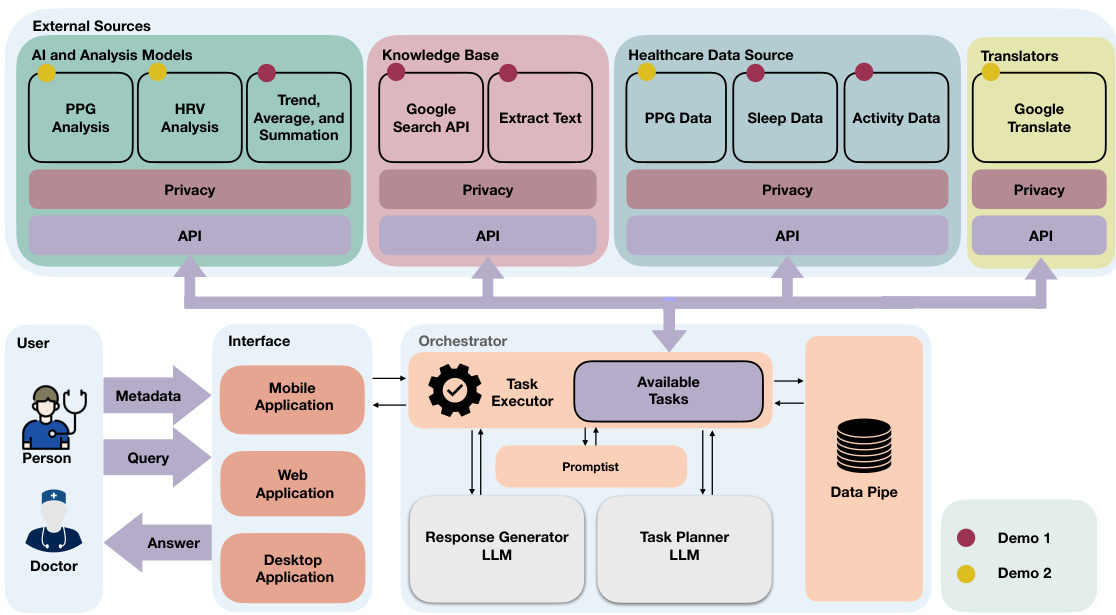

We demonstrate the capabilities of openCHA through three distinct demos. These demonstrations highlight how LLMs’ planning and reasoning abilities can effectively comprehend user queries and translate them into appropriate task executions. Each demonstration involves linking a set of implemented tasks to openCHA. Subsequently, we highlight openCHA’s planning proficiency in effectively sequencing tasks in the correct order with appropriate inputs and executing the tasks. After executing all necessary tasks, the results are conveyed to the Response Generator to provide the final response. The overview of the implemented tasks and their utilization in each demo is depicted in Figure 3.

我们通过三个不同的演示展示了openCHA的能力。这些演示突显了大语言模型(LLM)的规划和推理能力如何有效理解用户查询并将其转化为适当的任务执行。每个演示都涉及将一组已实现的任务链接到openCHA。随后,我们重点展示了openCHA在正确排序任务、提供适当输入并执行任务方面的规划能力。在执行完所有必要任务后,结果会传递给响应生成器以提供最终响应。已实现任务的概述及其在每个演示中的应用如图3所示。

Demo 1: Patient health record reporting

演示1:患者健康记录报告

The first demo indicates how openCHA interacts with patient data stored in a database, conducts data analysis, generates health reports upon request, and asks follow-up questions. Examples of the user’s questions could be: "Provide a sleep summary of Patient 5 during August 2020", "Is Patient

首个演示展示了openCHA如何与数据库中存储的患者数据交互、进行数据分析、根据请求生成健康报告并提出后续问题。用户可能提出的问题示例如:"提供2020年8月期间患者5的睡眠摘要"、"患者..."

Figure 3: Overview of the implemented tasks and components and how they are used in the two demos.

图 3: 已实现任务和组件的概览及其在两个演示中的应用。

5 REM sleep enough during August 2020?", "How much is the total step count of Patient 5 during August 2020?" or "Provide an activity summary of Patient 5 during 2020". For this demo, we implement two tasks for retrieving sleep and physical activity from a health monitoring dataset [53]. The data utilized in this demo is a part of an extensive longitudinal study focusing on the mental health of college students, as documented in [53]. Moreover, we develop analytical tasks capable of executing basic statistical analysis (e.g., computing trends and averaging). Finally, we also add Google Search and Extract Text tasks so that the current setup of openCHA can access internet information. The involved tasks in this demo are marked by red dots in Figure 3.

5号患者在2020年8月期间的REM睡眠是否充足?","5号患者在2020年8月的总步数是多少?"或"提供5号患者2020年的活动摘要"。在本演示中,我们实现了从健康监测数据集[53]中检索睡眠和身体活动的两项任务。本演示使用的数据是一项针对大学生心理健康的大规模纵向研究的一部分,如[53]所述。此外,我们开发了能够执行基本统计分析(如计算趋势和求平均值)的分析任务。最后,我们还添加了谷歌搜索和提取文本任务,使当前openCHA的设置可以访问互联网信息。本演示中涉及的任务在图3中用红点标出。

Figure 4 illustrates sample queries and the corresponding responses from openCHA. The Task Planner identifies the correct tasks, their execution order, and the appropriate inputs to be used. Table 1 showcases the strategy chosen by the Task Planner using the Tree of Thought prompting technique.

图 4: 展示了openCHA的示例查询及对应响应。任务规划器(Task Planner)能准确识别任务、执行顺序及适用输入参数。表 1: 呈现了任务规划器采用思维树(Tree of Thought)提示技术选择的策略。

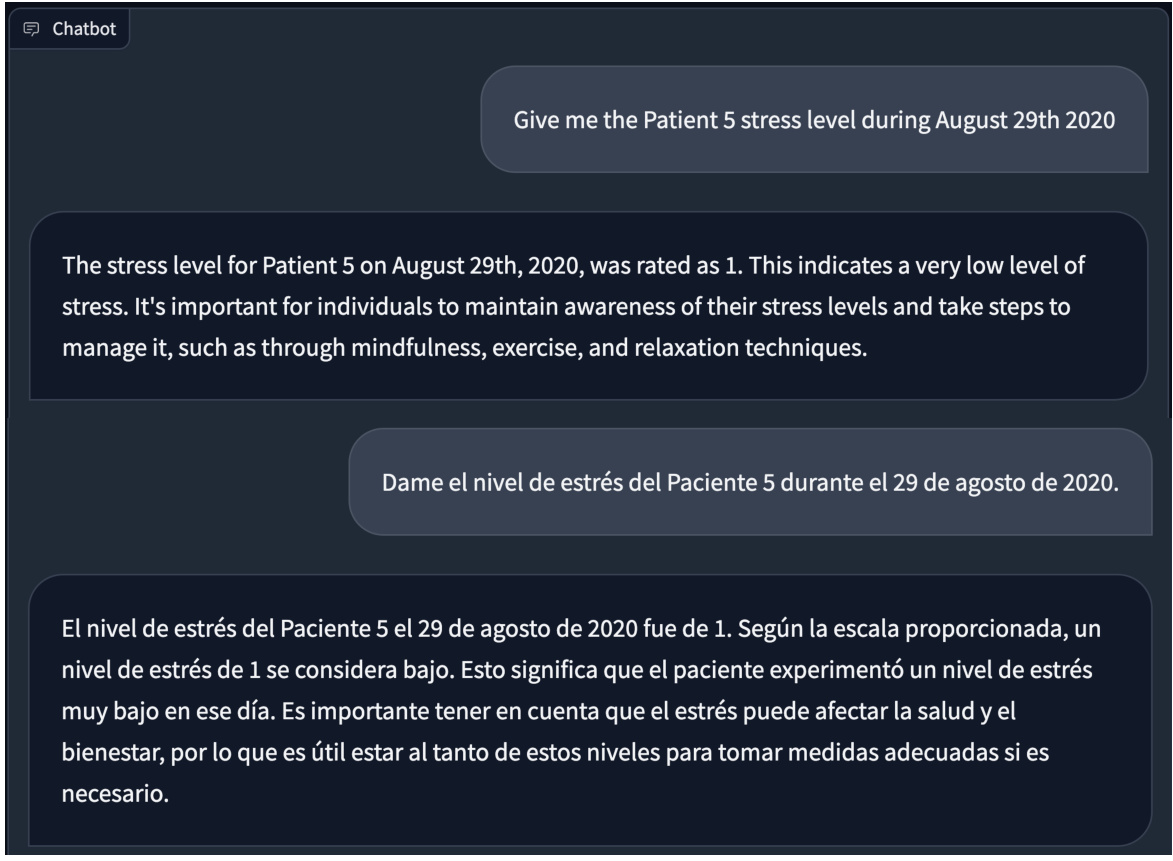

Demo 2: Objective stress level estimation with multi language interaction

Demo 2: 基于多语言交互的客观压力水平评估

Demo 2 indicates the openCHA’s capability to conduct signal processing and objective stress level estimation. We also show that openCHA can answer the query in multiple languages. This is achieved by interacting with a

Demo 2展示了openCHA进行信号处理和客观压力水平评估的能力。我们还证明openCHA能以多种语言回答查询。这是通过交互实现的

Is REM sleep of Patient 5 sufficient during August 2020?

2020年8月患者5的REM睡眠是否充足?

Based on the data collected from Patient 5 in August 2020, the REM sleep duration was 110.63 minutes. In order to determine if this is sufficient, we need to consider the individual's specific sleep needs and overall sleep patterns. It's important to note that the optimal amount of REM sleep can vary from person to person, but generally, adults need about 90-120 minutes of REM sleep each night.

根据2020年8月从患者5收集的数据,快速眼动睡眠(REM)时长为110.63分钟。要判断这一时长是否充足,需考虑个体的具体睡眠需求及整体睡眠模式。值得注意的是,最佳REM睡眠时长因人而异,但成年人通常每晚需要90-120分钟的REM睡眠。

In this case, the REM sleep duration of 110.63 minutes falls within the typical range for adults. However, it's important to assess the overall sleep quality and patterns as well. Factors such as sleep efficiency and total sleep time also play a role in determining if the REM sleep is sufficient for the individual's needs.

在这种情况下,110.63分钟的快速眼动睡眠(REM)时长处于成人典型范围内。但评估整体睡眠质量和模式同样重要。睡眠效率、总睡眠时长等因素也会影响快速眼动睡眠是否满足个体需求。

Give me reference on the REM sleep recommendation

快速眼动睡眠 (REM sleep) 建议参考

According to the WHooP,Rapid Eye Movement(REM)sleep is an essential stage of sleep thathas important physiological and cognitive functions. It is known as the "mentally restorative" stage of sleep when the brain converts short-term memories into long-term ones. The average adult should aim to spend around 20-25% of their total sleep time in the REM stage, with around 90 minutes of REM sleep being recommended for individuals who get 7-8 hours of sleep. This means that the REM sleep duration of 110.63minutes for Patient 5 in August 2020 falls within the typical rangefor adults

根据WHooP的数据,快速眼动睡眠(REM)是睡眠中一个至关重要的阶段,具有重要的生理和认知功能。这一阶段被称为睡眠的"精神修复期",此时大脑会将短期记忆转化为长期记忆。成年人平均应将总睡眠时间的20-25%用于快速眼动睡眠,建议每晚睡7-8小时的人保持约90分钟的REM睡眠。这意味着2020年8月患者5记录的110.63分钟REM睡眠时长处于成人正常范围内

However, REM sleep needs can vary from person to person, and other factors such as overall sleep patterns, sleep efficiency, and total sleep time also play a role in determining if the REM sleep is sufficient for an individual's needs. It's important to consider a more comprehensive view of the patient's sleep health by assessing other sleep metrics such as total sleep time, sleep efficiency, and sleep onset latency.

然而,快速眼动睡眠(REM)需求因人而异,其他因素如整体睡眠模式、睡眠效率和总睡眠时长也会影响个体REM睡眠是否充足。应通过评估总睡眠时长、睡眠效率和入睡潜伏期等其他指标,更全面地考量患者的睡眠健康状况。

If you would like to learn more, you can visit the following link for additional information on REM sleep:WHoOP -What is REM Sleep,Why it's Important,How MuchYou Need &How to Increase It.

如果你想了解更多,可以访问以下链接获取关于快速眼动睡眠 (REM sleep) 的更多信息:WHoOP - 什么是快速眼动睡眠、它的重要性、你需要多少以及如何增加它。

Table 1: The strategies suggested by Tree of Thought prompting technique for the first question.

| Decision | I will proceed to directly analyze the REM sleep data of Patient 5 for August 2020, providing a precise and specific conclusion. Execution: 1. Use the sleep _ get tool to obtain the REM sleep data for |

表 1: 思维树提示技术针对第一个问题提出的策略

| 决策 | 我将直接分析2020年8月患者5的快速眼动睡眠(REM)数据,给出精确具体的结论。执行步骤:1. 使用sleep_ get工具获取患者5的REM睡眠数据 |

translator, health data sources, and AI models. Examples of interactions include inquiries such as "Retrieve the stress level of Patient 5 on August 29th, $2020^{\mathfrak{N}}$ and "What is the average heart rate of Patient 5 during August 2020?" To fulfill our objective, we implemented three distinct tasks (yellow dots in Figure 3). The first task involved acquiring Photo ple thys m ogram (PPG) data from the patients. PPG data were gathered using Samsung Gear Sport smart watches [54], with a sampling frequency of 20 Hz, while participants were in free-living conditions. The data is part of the [53]. The second task performs PPG signal processing to extract heart rate variability (HRV) metrics. For this purpose, we utilize the Neurokit [55] Python library. In our case study, we extract a total of 32 HRV parameters, including metrics such as the root mean square of successive differences between normal heartbeats (RMSSD), low-frequency (LF), and high-frequency (HF) values [56, 57]. The third task estimates stress levels based on HRV using an AI model. Initially, we employed an auto encoder to reduce the dataset’s 32 HRV features to 12. Subsequently, a four-layer neural network categorizes the 12 features into five stress levels. The evaluation of the stress estimation model demonstrates an 86% accuracy rate on a test set.

翻译器、健康数据源和AI模型。交互示例包括查询如"检索2020年8月29日患者5的压力水平$2020^{\mathfrak{N}}$"和"2020年8月期间患者5的平均心率是多少?"。为实现目标,我们实施了三个独立任务(图3中的黄点)。第一项任务是从患者处获取光电容积描记(PPG)数据,使用三星Gear Sport智能手表[54]以20Hz采样频率在自由生活条件下采集,该数据属于[53]研究的一部分。第二项任务通过PPG信号处理提取心率变异性(HRV)指标,采用Neurokit[55] Python库进行处理,共提取32个HRV参数,包括正常心跳间连续差值的均方根(RMSSD)、低频(LF)和高频(HF)值等指标[56,57]。第三项任务基于HRV使用AI模型评估压力水平:先通过自编码器将32个HRV特征降维至12个,再由四层神经网络将这12个特征分类为五个压力等级。压力评估模型在测试集上显示出86%的准确率。

Figure 5 depicts example queries and the corresponding responses from openCHA. The Task Planner’s approach involves initially retrieving the PPG data of Patient 5 on August 29th, 2020. Subsequently, the obtained result is forwarded to the PPG analysis task to extract HRV metrics. Lastly, the planner initiates the execution of stress analysis tasks, providing the HRV metrics for this task. Table 2 displays the chosen strategy by the Task Planner utilizing the Tree of Thought prompting technique. The ultimate estimated stress, along with an explanation, is then returned to the user.

图5展示了openCHA的示例查询及对应响应。任务规划器(Task Planner)的方法包括:首先检索2020年8月29日患者5的PPG数据,随后将获取的结果传递给PPG分析任务以提取HRV指标,最后规划器启动压力分析任务的执行,为该任务提供HRV指标。表2显示了任务规划器采用思维树(Tree of Thought)提示技术选择的策略,最终将带有解释的预估压力值返回给用户。

Figure 5: Demo 2. Objective stress level estimation. The question is asked in English and Spanish.

图 5: 演示2. 客观压力水平评估。问题以英语和西班牙语呈现。

openCHA Use cases

openCHA 应用场景

To indicate the usability of openCHA across various applications, we outline several use cases that have utilized the framework in their research as follows.

为了展示openCHA在各种应用中的可用性,我们概述了以下研究中使用该框架的几个用例。

- ChatDiet [58] introduced a personalized, nutrition-oriented food recommendation agent, utilizing openCHA as its core implementation. By integrating personal and population models as external sources, ChatDiet offered tailored food suggestions. It enhanced traditional food recommendation services by delivering dynamic, personalized, and explainable recommendations. In a case study, ChatDiet achieved an effectiveness rate of 92%, outperforming solutions like ChatGPT.

- ChatDiet [58] 引入了一个以营养为导向的个性化食品推荐智能体 (AI Agent),其核心实现基于 openCHA。通过整合个人与群体模型作为外部数据源,ChatDiet 提供了定制化的食品建议。该系统通过动态化、个性化及可解释的推荐机制,优化了传统食品推荐服务。案例研究表明,ChatDiet 的有效性达到 92%,表现优于 ChatGPT 等解决方案。

Table 2: The strategies suggested by Tree of Thought prompting technique for the first question.

| Decision heart rate. obtained PPG data. | The best strategy provides both detailed PPG analysis and an estimation of the stress level, which offers a comprehensive view of the patient's health status. Execution: 1. Use ppg_ get tool to retrieve the PPG data for Patient 5 during August 29th, 2020. 2. Analyze the PPG data with ppg_analysis tool to obtain the |

表 2: 思维树提示技术针对第一个问题提出的策略。

| 决策心率。获取PPG数据。 | 最佳策略同时提供详细的PPG分析和压力水平评估,为患者健康状况提供全面视角。执行步骤:1. 使用ppg_ get工具获取2020年8月29日患者5的PPG数据。2. 通过ppg_analysis工具分析PPG数据以获取 |

- Knowledge-infused LLM-powered CHA for diabetic patients [59] is developed by integrating domain-specific knowledge and analytical tools as external sources using openCHA. This integration included incorporating American Diabetes Association dietary guidelines and deploying analytical tools for nutritional intake calculation, resulting in superior performance compared to GPT4 in managing diabetes through tailored dietary recommendations, as demonstrated by an evaluation of 100 diabetes-related questions.

- 知识增强型大语言模型驱动的糖尿病患者CHA [59]通过使用openCHA整合领域特定知识和分析工具作为外部资源而开发。该集成包括纳入美国糖尿病协会饮食指南,并部署用于营养摄入计算的分析工具,通过对100个糖尿病相关问题的评估证明,其在通过定制饮食建议管理糖尿病方面表现优于GPT4。

- openCHA was employed to develop an agent for evaluating the safety and reliability of mental health chatbots [60]. This agent’s evaluation capabilities were compared with expert assessments and several existing LLMs, including GPT4, Claude, Gemini, and Mistral. Guidelines and benchmarks introduced by experts and Internet search served as external sources linked to openCHA. The agent demonstrated superior accuracy, achieving the lowest mean absolute error (MAE) against experts’ scores — a reduction by a factor of 1 compared to LLMs’ scores, with the maximum MAE being 10 — and provided unbiased evaluation scores.

- 采用openCHA开发了一个用于评估心理健康聊天机器人安全性和可靠性的AI智能体 [60]。该智能体的评估能力与专家评估及多个现有大语言模型(包括GPT4、Claude、Gemini和Mistral)进行了对比。专家制定的指南基准和互联网搜索数据作为外部知识源接入openCHA系统。该智能体展现出卓越的准确性:与专家评分的平均绝对误差(MAE)最低(相比大语言模型的MAE降低了1倍,最大MAE为10),并能提供无偏见的评估分数。

- The Empathy-enhanced CHA [61] was developed to interpret and respond to users’ emotional states through multimodal dialogue, representing a significant step forward in providing con textually aware and em pathetically resonant support in the mental health field. This paper utilized speech-to-text, text-to-speech, and speech emotion detection models as external sources connected to openCHA.

- 共情增强型CHA [61]通过多模态对话来解读并响应用户情绪状态,标志着心理健康领域在提供情境感知与情感共鸣支持方面的重要进展。该研究将语音转文本、文本转语音及语音情感检测模型作为外部资源接入openCHA平台。

To see more demonstrations on how the openCHA works in real setup, we have uploaded multiple YouTube videos $\diamond$ , $\diamond$ , ⋄, ⋄

要查看openCHA在实际设置中的更多演示,我们已上传多个YouTube视频 $\diamond$ , $\diamond$ , ⋄, ⋄

DISCUSSION

讨论

openCHA Potentials and Limitations

openCHA 的潜力与局限

In this section, we briefly discuss our proposed framework’s capabilities, potentials, and limitations.

在本节中,我们将简要讨论所提出框架的能力、潜力与局限性。

Flexibility: openCHA provides a high level of flexibility to integrate LLMs with external data sources, knowledge bases, and analytical tools. The proposed components can be developed and replaced according to the requirements of the healthcare application in question. For instance, new external sources can be effortlessly integrated and introduced as new tasks into openCHA. The LLMs employed in openCHA can be readily swapped with fine-tuned or more healthcare-specific LLMs. Similarly, the Planner prompting technique and decision-making processes are modifiable. This flexibility facilitates collaboration among diverse research communities, enabling them to contribute to various aspects of CHAs. Appendix 1 shows how a new task can be defined and introduced into openCHA.

灵活性:openCHA提供了高度灵活性,能够将大语言模型(LLM)与外部数据源、知识库和分析工具集成。所提出的组件可根据具体医疗应用需求进行开发和替换。例如,新外部源可轻松集成并作为新任务引入openCHA。openCHA采用的大语言模型可随时替换为经过微调或更具医疗专业性的模型。同样,规划器(Planner)提示技术和决策流程也可调整。这种灵活性促进了不同研究团队之间的协作,使其能够为各类医疗对话助手(CHA)的各个方面做出贡献。附录1展示了如何定义新任务并将其引入openCHA系统。

Explain ability: openCHA enhances explain ability for CHAs, allowing users to inquire about the tools and actions used to generate a response. As detailed in Appendices 2 and 3, openCHA maintains a "previous actions" section that records past conversations and tasks. When queried about task usage, it lists the executed tasks and their applications, enhancing transparency and fostering trust between users and CHAs. For instance, in Demo 2, when a user asks, "Name the tasks used," openCHA responds by detailing that PPG and HRV data were utilized to determine stress levels. An example of this interaction is shown in Figure 6.

可解释性:openCHA增强了CHA的可解释性,允许用户查询生成响应所使用的工具和操作。如附录2和附录3所述,openCHA设有"历史操作"模块记录过往对话及任务。当用户询问任务使用情况时,系统会列出已执行任务及其应用场景,从而提升透明度并增强用户与CHA之间的信任。例如在Demo 2中,当用户提问"列出所使用的任务"时,openCHA会详细说明利用PPG和HRV数据判断压力水平的过程,该交互示例如图6所示。

Personalization: The openCHA framework enhances personalization by integrating individual information and analytics tools from healthcare systems or local databases as external sources. The quality of these external sources greatly influences the effectiveness of the personalization. For example, ChatDiet [58] utilizes personal dietary preferences and population data, along with an analysis of nutrients’ effects on health outcomes like sleep quality, to enhance its food recommendations significantly. This strategy not only heightens the accuracy of the recommendations but also ensures they are precisely tailored to meet individual dietary needs.

个性化:openCHA框架通过整合来自医疗系统或本地数据库的个人信息和分析工具作为外部来源,增强了个性化服务。这些外部来源的质量极大地影响了个性化的效果。例如,ChatDiet [58]利用个人饮食偏好和人口数据,以及分析营养素对睡眠质量等健康结果的影响,显著提升了其食物推荐的准确性。这一策略不仅提高了推荐的精准度,还确保推荐内容能精确满足个人的饮食需求。

Reliability: openCHA boosts the reliability of answers by leveraging validated information and computations as external sources. Our framework is tailored to effectively utilize existing LLMs for complex healthcare tasks, strategically offloading computational and sensitive information tasks to external sources while reserving LLMs prima