Editing Large Language Models: Problems, Methods, and Opportunities


Yunzhi Yao, Peng Wang, Bozhong Tian, Siyuan Cheng, Zhoubo Li, Shumin Deng, Huajun Chen, Ningyu Zhang

♣ Zhejiang University ♠ Zhejiang University - Ant Group Joint Laboratory of Knowledge Graph ♢ Donghai Laboratory ♡ National University of Singapore, NUS-NCS Joint Lab, Singapore

{yyztodd,peng2001,tbozhong,sycheng,zhoubo.li}@zju.edu.cn {huajunsir,zhangningyu}@zju.edu.cn, shumin@nus.edu.sg

Abstract


Despite the ability to train capable LLMs, the methodology for maintaining their relevancy and rectifying errors remains elusive. To this end, the past few years have witnessed a surge in techniques for editing LLMs, the objective of which is to efficiently alter the behavior of LLMs within a specific domain without negatively impacting performance across other inputs. This paper embarks on a deep exploration of the problems, methods, and opportunities related to model editing for LLMs. In particular, we provide an exhaustive overview of the task definition and challenges associated with model editing, along with an in-depth empirical analysis of the most progressive methods currently at our disposal. We also build a new benchmark dataset to facilitate a more robust evaluation and pinpoint enduring issues intrinsic to existing techniques. Our objective is to provide valuable insights into the effectiveness and feasibility of each editing technique, thereby assisting the community in making informed decisions on the selection of the most appropriate method for a specific task or context.

1 Introduction


Large language models (LLMs) have demonstrated a remarkable capacity for understanding and generating human-like text (Brown et al., 2020; OpenAI, 2023; Anil et al., 2023; Touvron et al., 2023; Qiao et al., 2022; Zhao et al., 2023). Despite the proficiency in training LLMs, the strategies for ensuring their relevance and fixing their bugs remain unclear. Ideally, as the world’s state evolves, we aim to update LLMs in a way that sidesteps the computational burden associated with training a wholly new model. As shown in Figure 1, to address this issue, the concept of model editing has been proposed (Sinitsin et al., 2020; De Cao et al., 2021), enabling data-efficient alterations to the behavior of models, specifically within a designated realm of interest, while ensuring no adverse impact on other inputs.

Figure 1: Model editing to fix and update LLMs.


Currently, numerous works on model editing for LLMs (De Cao et al., 2021; Meng et al., 2022, 2023; Sinitsin et al., 2020; Huang et al., 2023) have made strides in various editing tasks and settings. As illustrated in Figure 2, these works manipulate the model’s output for specific cases by either integrating an auxiliary network with the original unchanged model or altering the model parameters responsible for the undesirable output. Despite the wide range of model editing techniques present in the literature, a comprehensive comparative analysis, assessing these methods in uniform experimental conditions, is notably lacking. This absence of direct comparison impairs our ability to discern the relative merits and demerits of each approach, consequently hindering our comprehension of their adaptability across different problem domains.


To confront this issue, the present study endeavors to establish a standard problem definition accompanied by a meticulous appraisal of these methods (§2, §3). We conduct experiments under regulated conditions, fostering an impartial comparison of their respective strengths and weaknesses (§4). We initially use two popular model editing datasets, ZsRE (Levy et al., 2017) and COUNTERFACT (Meng et al., 2022), and two structurally different language models, T5 (Raffel et al., 2020a) (encoder-decoder) and GPT-J (Wang and Komatsuzaki, 2021a) (decoder-only), as our base models. We also evaluate the performance of larger models, OPT-13B (Zhang et al., 2022a) and GPT-NEOX-20B (Black et al., 2022). Beyond basic edit settings, we assess performance for batch and sequential editing. While we observe that current methods have demonstrated considerable capacity in factual model editing tasks, we reconsider the current evaluation and create a more encompassing evaluation dataset (§5) covering portability (robust generalization capabilities), locality (side effects), and efficiency (time and memory usage). We find that current model editing methods are somewhat limited on these levels, which constrains their practical application and calls for more research in the future. Through systematic evaluation, we aim to provide valuable insights on each model editing technique’s effectiveness, aiding researchers in choosing the appropriate method for specific tasks.

2 Problems Definition


Model editing, as elucidated by Mitchell et al. (2022b), aims to efficiently adjust the behavior of an initial base model $f_{\theta}$ (where $\theta$ denotes the model’s parameters) on a particular edit descriptor $(x_{e},y_{e})$ without influencing the model’s behavior on other samples. The ultimate goal is to create an edited model, denoted $f_{\theta_{e}}$. Specifically, the base model $f_{\theta}$ is represented by a function $f:\mathbb{X}\mapsto\mathbb{Y}$ that associates an input $x$ with its corresponding prediction $y$. Given an edit descriptor comprising the edit input $x_{e}$ and edit label $y_{e}$ such that $f_{\theta}(x_{e})\neq y_{e}$, the post-edit model $f_{\theta_{e}}$ is designed to produce the expected output, i.e., $f_{\theta_{e}}(x_{e})=y_{e}$.

The model editing process generally impacts the predictions for a broad set of inputs that are closely associated with the edit example. This collection of inputs is called the editing scope. A successful edit should adjust the model’s behavior for examples within the editing scope while leaving its performance for out-of-scope examples unaltered:


$$
f_{\theta_{e}}(x) =
\begin{cases}
y_{e} & \text{if } x \in I(x_{e}, y_{e}) \\
f_{\theta}(x) & \text{if } x \in O(x_{e}, y_{e})
\end{cases}
$$

The in-scope set $I(x_{e},y_{e})$ usually encompasses $x_{e}$ along with its equivalence neighborhood $N(x_{e},y_{e})$, which includes related input/output pairs. In contrast, the out-of-scope set $O(x_{e},y_{e})$ consists of inputs that are unrelated to the edit example. The post-edit model $f_{\theta_{e}}$ should satisfy the following three properties: reliability, generalization, and locality.

Reliability Previous works (Huang et al., 2023; De Cao et al., 2021; Meng et al., 2022) define an edit as reliable when the post-edit model $f_{\theta_{e}}$ gives the target answer for the case $(x_{e},y_{e})$ to be edited. Reliability is measured as the average accuracy on the edit case:

$$
\mathbb{E}_{(x_{e}^{\prime}, y_{e}^{\prime}) \sim \{(x_{e}, y_{e})\}}
\mathbb{1}\left\{\operatorname{argmax}_{y} f_{\theta_{e}}(y \mid x_{e}^{\prime}) = y_{e}^{\prime}\right\}
$$

Generalization The post-edit model $f_{\theta_{e}}$ should also edit the equivalence neighborhood $N(x_{e},y_{e})$ (e.g., rephrased sentences). It is evaluated by the average accuracy of the model $f_{\theta_{e}}$ on examples drawn uniformly from the equivalence neighborhood:

$$
\mathbb{E}_{(x_{e}^{\prime}, y_{e}^{\prime}) \sim N(x_{e}, y_{e})}
\mathbb{1}\left\{\operatorname{argmax}_{y} f_{\theta_{e}}(y \mid x_{e}^{\prime}) = y_{e}^{\prime}\right\}
$$

Locality Also referred to as Specificity in some works. Editing should be implemented locally, which means the post-edit model $f_{\theta_{e}}$ should not change the output of irrelevant examples in the out-of-scope set $O(x_{e},y_{e})$. Hence, locality is evaluated by the rate at which the predictions of the post-edit model $f_{\theta_{e}}$ remain the same as those of the pre-edit model $f_{\theta}$:

$$
\mathbb{E}_{(x_{e}^{\prime}, y_{e}^{\prime}) \sim O(x_{e}, y_{e})}
\mathbb{1}\left\{f_{\theta_{e}}(y \mid x_{e}^{\prime}) = f_{\theta}(y \mid x_{e}^{\prime})\right\}
$$
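The three metrics above can be sketched in a few lines of Python. This is only an illustrative sketch, not the paper's evaluation code: it assumes a model can be treated as a function from a prompt to its top-1 answer string, it compares top-1 outputs for locality, and the prompts, answers, and the names `base`, `edited`, and `Acme Corp` are hypothetical.

```python
from typing import Callable, Iterable, Tuple

Model = Callable[[str], str]   # assumption: prompt -> top-1 (argmax) answer
Pair = Tuple[str, str]         # (input x, target y)


def accuracy(model: Model, pairs: Iterable[Pair]) -> float:
    """Fraction of pairs for which the model returns the target answer."""
    pairs = list(pairs)
    return sum(model(x) == y for x, y in pairs) / len(pairs)


def reliability(edited: Model, edit_pairs: Iterable[Pair]) -> float:
    """Accuracy of the post-edit model on the edit cases themselves."""
    return accuracy(edited, edit_pairs)


def generalization(edited: Model, neighborhood: Iterable[Pair]) -> float:
    """Accuracy of the post-edit model on the equivalence neighborhood."""
    return accuracy(edited, neighborhood)


def locality(edited: Model, base: Model, out_of_scope: Iterable[str]) -> float:
    """Rate at which post-edit and pre-edit predictions agree out of scope."""
    xs = list(out_of_scope)
    return sum(edited(x) == base(x) for x in xs) / len(xs)


# Hypothetical toy models: the "edit" only memorizes the exact edit prompt.
base_answers = {
    "Who leads Acme Corp?": "Alice",
    "Acme Corp is led by whom?": "Alice",
    "What is the capital of France?": "Paris",
}


def base(x: str) -> str:
    return base_answers.get(x, "")


def edited(x: str) -> str:
    return "Bob" if x == "Who leads Acme Corp?" else base(x)


edit_pairs = [("Who leads Acme Corp?", "Bob")]
neighborhood = [("Acme Corp is led by whom?", "Bob")]
out_of_scope = ["What is the capital of France?"]

rel = reliability(edited, edit_pairs)
gen = generalization(edited, neighborhood)
loc = locality(edited, base, out_of_scope)
```

In this toy case the edit is reliable and local but does not generalize to the rephrased prompt, which is the failure pattern the generalization metric is meant to expose.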

3 Current Methods


Current model editing methods for LLMs can be categorized into two main paradigms, as shown in Figure 2: modifying the model’s parameters or preserving the model’s parameters. More comparisons can be seen in Table 6.

3.1 Methods for Preserving LLMs’ Parameters


Memory-based Model This kind of method stores all edit examples explicitly in memory and employs a retriever to extract the most relevant edit facts for each new input to guide the model to generate the edited fact. SERAC (Mitchell et al., 2022b) presents an approach that adopts a distinct counterfactual model while leaving the original model unchanged. Specifically, it employs a scope classifier to compute the likelihood of a new input falling within the scope of the stored edit examples. If the input matches any cached edit in memory, the counterfactual model’s prediction is based on the input and the most probable edit. Otherwise, if the input is out-of-scope for all edits, the original model’s prediction is given. Additionally, recent research demonstrates that LLMs possess robust capabilities for in-context learning. Instead of resorting to an extra model trained with new facts, the model itself can generate outputs corresponding to the provided knowledge given a refined knowledge context as a prompt. This kind of method edits the language model by prompting the model with the edited fact and retrieved edit demonstrations from the edit memory and includes the following work: MemPrompt (Madaan et al., 2022), IKE (Zheng et al., 2023), and MeLLo (Zhong et al., 2023).
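The routing logic described for memory-based editors can be sketched as follows. This is a minimal sketch under stated assumptions, not SERAC's implementation: the trained scope classifier is replaced by a crude token-overlap score, and `MemoryEditor`, `token_overlap`, the threshold of 0.5, and the toy models are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Edit = Tuple[str, str]  # (edit input x_e, edit label y_e)


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase tokens; a stand-in for a learned scope classifier."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


@dataclass
class MemoryEditor:
    base_model: Callable[[str], str]                  # frozen original model
    counterfactual_model: Callable[[str, Edit], str]  # conditions on the retrieved edit
    scope_score: Callable[[str, str], float] = token_overlap
    threshold: float = 0.5                            # hypothetical in-scope cutoff
    memory: List[Edit] = field(default_factory=list)

    def add_edit(self, x_e: str, y_e: str) -> None:
        """Store the edit explicitly; no model parameters are changed."""
        self.memory.append((x_e, y_e))

    def __call__(self, x: str) -> str:
        if self.memory:
            # Retrieve the most probable edit for this input.
            best = max(self.memory, key=lambda e: self.scope_score(x, e[0]))
            if self.scope_score(x, best[0]) >= self.threshold:
                return self.counterfactual_model(x, best)
        # Out of scope for all edits: fall back to the original model.
        return self.base_model(x)


# Toy stand-ins for the two models.
editor = MemoryEditor(
    base_model=lambda x: "unknown",
    counterfactual_model=lambda x, edit: edit[1],
)
editor.add_edit("Who is the president of Examplestan?", "Jane Doe")

in_scope_answer = editor("Who is the current president of Examplestan?")
out_of_scope_answer = editor("What is the capital of France?")
```

Because the base model is never modified, removing an entry from `memory` undoes the corresponding edit, which is one practical appeal of this family of methods.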

Figure 2: An overview of two paradigms of model editing for LLMs.


Additional Parameters This paradigm introduces extra trainable parameters within the language models. These parameters are trained on a modified knowledge dataset while the original model parameters remain static. T-Patcher (Huang et al., 2023) integrates one neuron (patch) for one mistake in the last layer of the Feed-Forward Network (FFN) of the model, which takes effect only when encountering its corresponding mistake. CaliNET (Dong et al., 2022) incorporates several neurons for multiple edit cases. In contrast, GRACE (Hartvigsen et al., 2022) maintains a discrete codebook as an adapter, adding and updating elements over time to edit a model’s predictions.

3.2 Methods for Modifying LLMs’ Parameters


This paradigm updates part of the parameters $\theta$ by applying an update matrix $\Delta$ to edit the model.

Locate-Then-Edit This paradigm initially identifies parameters corresponding to specific knowledge and modifies them through direct updates to the target parameters. The Knowledge Neuron (KN) method (Dai et al., 2022) introduces a knowledge attribution technique to pinpoint the “knowledge neuron” (a key-value pair in the FFN matrix) that embodies the knowledge and then updates these neurons. ROME (Meng et al., 2022) applies causal mediation analysis to locate the editing area. Instead of modifying the knowledge neurons in the FFN, ROME alters the entire matrix. ROME views model editing as a least-squares problem with a linear equality constraint and uses a Lagrange multiplier to solve it. However, KN and ROME can only edit one factual association at a time. To this end, MEMIT (Meng et al., 2023) expands on the setup of ROME, enabling simultaneous editing of multiple cases. Building on MEMIT, PMET (Li et al., 2023a) additionally takes the attention value into account to achieve better performance.
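To make the constrained least-squares view concrete, the following restates ROME's objective and closed-form update as given in Meng et al. (2022), to the best of our reading. The symbols are not defined elsewhere in this paper: $W$ is the FFN projection matrix being edited, $K$ and $V$ collect the keys and values it already stores, and $(k_{*}, v_{*})$ is the new key-value pair to insert.

$$
\min_{\hat{W}} \left\| \hat{W}K - V \right\|_{F}^{2}
\quad \text{s.t.} \quad \hat{W}k_{*} = v_{*}
$$

$$
\hat{W} = W + \Lambda \left(C^{-1}k_{*}\right)^{\top},
\qquad C = KK^{\top},
\qquad \Lambda = \frac{v_{*} - Wk_{*}}{\left(C^{-1}k_{*}\right)^{\top}k_{*}}
$$

The constraint can be checked directly: $\hat{W}k_{*} = Wk_{*} + \Lambda \left(C^{-1}k_{*}\right)^{\top}k_{*} = Wk_{*} + (v_{*} - Wk_{*}) = v_{*}$. The update is rank-one, and it requires $C$ to be invertible.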

Meta-learning Meta-learning methods employ a hypernetwork to learn the necessary $\Delta$ for editing the LLMs. Knowledge Editor (KE) (De Cao et al., 2021) leverages a hypernetwork (specifically, a bidirectional LSTM) to predict the weight update for each data point, thereby enabling the constrained optimization of editing target knowledge without disrupting others. However, this approach falls short when it comes to editing LLMs. To overcome this limitation, Model Editor Networks with Gradient Decomposition (MEND) (Mitchell et al., 2022a) learns to transform the gradient of fine-tuned language models by employing a low-rank decomposition of gradients, which can be applied to LLMs with better performance.

| Dataset | Model | Metric | FT-L | SERAC | IKE | CaliNet | T-Patcher | KE | MEND | KN | ROME | MEMIT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ZsRE | T5-XL | Reliability | 20.71 | 99.80 | 67.00 | 5.17 | 30.52 | 3.00 | 78.80 | 22.51 | - | - |
| ZsRE | T5-XL | Generalization | 19.68 | 99.66 | 67.11 | 4.81 | 30.53 | 5.40 | 89.80 | 22.70 | - | - |
| ZsRE | T5-XL | Locality | 89.01 | 98.13 | 63.60 | 72.47 | 77.10 | 96.43 | 98.45 | 16.43 | - | - |
| ZsRE | GPT-J | Reliability | 54.70 | 90.16 | 99.96 | 22.72 | 97.12 | 6.60 | 98.15 | 11.34 | 99.18 | 99.23 |
| ZsRE | GPT-J | Generalization | 49.20 | 89.96 | 99.87 | 0.12 | 94.95 | 7.80 | 97.66 | 9.40 | 94.90 | 87.16 |
| ZsRE | GPT-J | Locality | 37.24 | 99.90 | 59.21 | 12.03 | 96.24 | 94.18 | 97.39 | 90.03 | 99.19 | 99.62 |
| COUNTERFACT | T5-XL | Reliability | 33.57 | 99.89 | 97.77 | 7.76 | 80.26 | 1.00 | 81.40 | 47.86 | - | - |
| COUNTERFACT | T5-XL | Generalization | 23.54 | 98.71 | 82.99 | 7.57 | 21.73 | 1.40 | 93.40 | 46.78 | - | - |
| COUNTERFACT | T5-XL | Locality | 72.72 | 99.93 | 37.76 | 27.75 | 85.09 | 96.28 | 91.58 | 57.10 | - | - |
| COUNTERFACT | GPT-J | Reliability | 99.90 | 99.78 | 99.61 | 43.58 | 100.00 | 13.40 | 73.80 | 1.66 | 99.80 | 99.90 |
| COUNTERFACT | GPT-J | Generalization | 97.53 | 99.41 | 72.67 | 0.66 | 83.98 | 11.00 | 74.20 | 1.38 | 86.63 | 73.13 |
| COUNTERFACT | GPT-J | Locality | 1.02 | 98.89 | 35.57 | 2.69 | 8.37 | 94.38 | 93.75 | 58.28 | 93.61 | 97.17 |

Table 1: Results of existing methods on three metrics of the dataset. The settings for these models and datasets are the same as in Meng et al. (2022). ‘-’ indicates that the method empirically fails to edit the LLM.

4 Preliminary Experiments


Considering the abundance of studies and datasets centered on factual knowledge, we use it as our primary comparison foundation. Our initial controlled experiments, conducted using two prominent factual knowledge datasets (Table 1), facilitate a direct comparison of methods, highlighting their unique strengths and limitations (Wang et al., 2023b).


4.1 Experiment Setting


We use two prominent model editing datasets, ZsRE and COUNTERFACT, with details available in Appendix B. Previous studies typically used smaller language models (<1B) and demonstrated the effectiveness of current editing methods on smaller models like BERT (Devlin et al., 2019). However, whether these methods work for larger models is still unexplored. Hence, considering the editing task and future developments, we focus on generation-based models and choose larger ones: T5-XL (3B) and GPT-J (6B), representing encoder-decoder and decoder-only structures, respectively.

We have selected influential works from each method type. Alongside existing model editing techniques, we additionally examine the results of fine-tuning, an elementary approach for model updating. To avoid the computational cost of retraining all layers, we employ the methodology proposed by Meng et al. (2022), fine-tuning only the layers identified by ROME, and denote it as FT-L. This strategy ensures a fair comparison with other direct editing methods, bolstering the validity of our analysis. More details can be found in Appendix A.

4.2 Experiment Results


Basic Model Table 1 reveals SERAC and ROME’s superior performance on the ZsRE and COUNTERFACT datasets, with SERAC exceeding 90% on several metrics. While MEMIT generalizes less well, it excels in reliability and locality. KE, CaliNET, and KN perform poorly: they achieve acceptable performance on smaller models but are mediocre on larger ones. MEND performs well on the two datasets, achieving over 80% in the results on T5, although not as impressive as ROME and SERAC. The performance of T-Patcher fluctuates across different model architectures and sizes. For instance, it underperforms on T5-XL for the ZsRE dataset, while it performs nearly perfectly on GPT-J. In the case of the COUNTERFACT dataset, T-Patcher achieves satisfactory reliability and locality on T5 but lacks generalization. Conversely, on GPT-J, the model excels in reliability and generalization but underperforms in locality. This instability can be attributed to the model architecture: T-Patcher adds a neuron to the final decoder layer for T5, but the encoder may still retain the original knowledge. FT-L performs less impressively than ROME on PLMs, even when modifying the same position. It shows underwhelming performance on the ZsRE dataset but matches ROME in reliability and generalization on the COUNTERFACT dataset with GPT-J. Yet, its low locality score suggests potential impacts on unrelated knowledge areas. IKE demonstrates good reliability but struggles with locality, as prepended prompts might affect unrelated inputs. Its generalization capability could also improve. The in-context learning method may struggle with context mediation failure (Hernandez et al., 2023), as pre-trained language models may not consistently generate text aligned with the prompt.

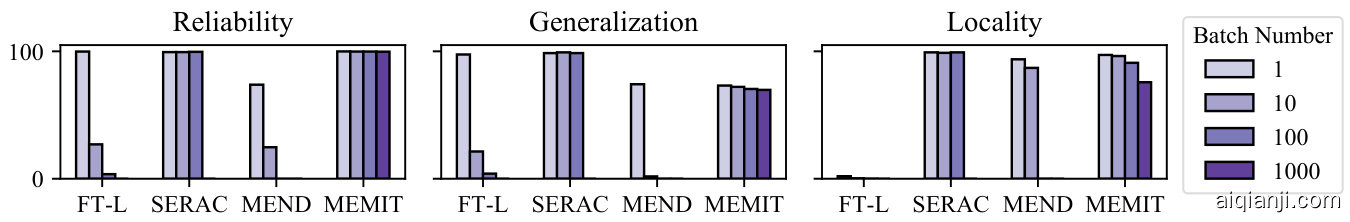

Figure 3: Batch Editing performance against batch number. We test batch numbers in [1,10,100,1000] for MEMIT. Due to the huge memory usage for FT, SERAC and MEND, we didn’t test batch 1000 for these methods.


Model Scaling We conduct experiments with larger models, testing only IKE, ROME, and MEMIT on OPT-13B and GPT-NEOX-20B due to computational constraints. The results (Table 2) surprisingly show ROME and MEMIT performing well on the GPT-NEOX-20B model but failing on OPT-13B. This is because both methods rely on a matrix inversion operation, and in the OPT-13B model the matrix is not invertible. We also find empirically that approximating the solution with least squares yields unsatisfactory results. We think this is a limitation of ROME and MEMIT: they are based on the strong assumption that the matrices are non-degenerate and therefore may not be applicable to different models. MEMIT performs worse due to its reliance on multi-layer matrix computations, and its reliability and generalization decline more than ROME’s for larger models. IKE’s performance is affected by the in-context learning ability of the model itself. The results on OPT are even worse than the results on GPT-J, which may be attributed to OPT’s own in-context learning ability. Additionally, as the model size increases, its performance in both generalization and locality diminishes.

Batch Editing We conduct a further batch editing analysis, since many studies limit updates to a few dozen facts or focus only on single-edit cases, whereas it is often necessary to modify the model with multiple pieces of knowledge simultaneously. We focus on methods that support batch editing (FT, SERAC, MEND, and MEMIT) and display their performance in Figure 3. Notably, MEMIT supports massive knowledge editing for LLMs, allowing hundreds or even thousands of simultaneous edits with minimal time and memory costs. Its reliability and generalization remain robust up to 1000 edits, but locality decreases at this level. While FT-L, SERAC, and MEND also support batch editing, they require significant memory for handling more cases, exceeding our current capabilities. Thus, we limit their tests to 100 edits. SERAC can conduct batch edits perfectly up to 100 edits. MEND and FT-L are not as strong in batch editing, with performance declining rapidly as the number of edits increases.

Table 2: Results of current methods on current datasets with OPT-13B and GPT-NEOX-20B.

| Model | Method | ZsRE Reliability | ZsRE Generalization | ZsRE Locality | COUNTERFACT Reliability | COUNTERFACT Generalization | COUNTERFACT Locality |
|---|---|---|---|---|---|---|---|
| OPT-13B | ROME | 22.23 | 6.08 | 99.74 | 36.85 | 2.86 | 95.46 |
| OPT-13B | MEMIT | 7.95 | 2.87 | 92.61 | 4.95 | 0.36 | 93.28 |
| OPT-13B | IKE | 69.97 | 69.93 | 64.83 | 49.71 | 34.98 | 53.08 |
| GPT-NEOX-20B | ROME | 99.34 | 95.49 | 99.79 | 99.80 | 85.45 | 94.54 |
| GPT-NEOX-20B | MEMIT | 77.30 | 71.44 | 99.67 | 87.22 | 70.26 | 96.48 |
| GPT-NEOX-20B | IKE | 100.00 | 99.95 | 59.69 | 98.64 | 67.67 | 43.03 |

Sequential Editing Note that the default evaluation procedure is to update a single piece of model knowledge, evaluate the new model, and then roll back the update before repeating the process for each test point. In practical scenarios, models should retain previous changes while conducting new edits. Thus, the ability to carry out successive edits is a vital feature for model editing (Huang et al., 2023). We evaluate approaches with strong single-edit performance for sequential editing and report the results in Figure 4. Methods that freeze the model’s parameters, like SERAC and T-Patcher, generally show stable performance in sequential editing. However, those altering the model’s parameters struggle. ROME performs well up to $n=10$, then degrades at $n=100$. MEMIT’s performance also decreases over 100 edits, but less drastically than ROME’s. Similarly, MEND performs well at $n=1$ but significantly declines at $n=10$. As the editing process continues, these models increasingly deviate from their original state, resulting in suboptimal performance.
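The difference between the default roll-back protocol and sequential editing can be sketched as below. This is a toy illustration, not the paper's evaluation harness: the "model" is just the tuple of edits it currently remembers, and `forgetful_editor` is a hypothetical editor with limited capacity, used only to show how an editor that looks perfect under single-edit evaluation can degrade when edits accumulate.

```python
from typing import Callable, Optional, Sequence, Tuple

Edit = Tuple[str, str]              # (edit input, edit label)
State = Tuple[Edit, ...]            # toy "model": the edits it currently retains
Editor = Callable[[State, Edit], State]


def predict(state: State, x: str) -> Optional[str]:
    """Return the most recent retained answer for x, if any."""
    for x_e, y_e in reversed(state):
        if x_e == x:
            return y_e
    return None


def forgetful_editor(capacity: int) -> Editor:
    """Hypothetical editor that retains only the latest `capacity` edits."""
    def edit(state: State, e: Edit) -> State:
        return (state + (e,))[-capacity:]
    return edit


def single_edit_eval(base: State, edits: Sequence[Edit], editor: Editor) -> float:
    """Default protocol: apply one edit to the base model, test it, roll back."""
    hits = sum(predict(editor(base, (x, y)), x) == y for x, y in edits)
    return hits / len(edits)


def sequential_eval(base: State, edits: Sequence[Edit], editor: Editor) -> float:
    """Sequential protocol: keep every previous edit, then test all of them."""
    state = base
    for e in edits:
        state = editor(state, e)
    return sum(predict(state, x) == y for x, y in edits) / len(edits)


edits = [(f"q{i}", f"a{i}") for i in range(4)]
editor = forgetful_editor(capacity=2)

single_score = single_edit_eval((), edits, editor)
sequential_score = sequential_eval((), edits, editor)
```

Here the single-edit score is 1.0 while the sequential score is 0.5, since only the last two of four edits survive.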

Figure 4: Sequential Editing performance against data stream size (log-scale).


5 Comprehensive Study


Considering the above points, we contend that previous evaluation metrics may not fully assess model editing capabilities. Therefore, we propose more comprehensive evaluations regarding portability, locality, and efficiency.


5.1 Portability - Robust Generalization


Several studies evaluate generalization using samples generated through back translation (De Cao et al., 2021). However, these paraphrased sentences often involve only minor wording changes and don’t reflect substantial factual modifications. As stated in Jacques Thibodeau (2022), it’s crucial to verify whether these methods can handle the implications of an edit for realistic applications. As a result, we introduce a new evaluation metric called Portability to gauge the effectiveness of model editing in transferring knowledge to related content, termed robust generalization. We consider three aspects: (1) Subject Replace: As most rephrased sentences keep subject descriptions but rephrase the relation more, we test generalization by replacing the subject in the question with an alias or synonym. This tests whether the model can generalize the edited attribute to other descriptions of the same subject. (2) Reversed Relation: When the target of a subject and relation is edited, the attribute of the target entity also changes. We test the model’s ability to handle this by filtering for suitable relations such as one-to-one and asking it the reverse question to check if the target entity is also updated. (3) One-hop: Modified knowledge should be usable by the edited language model for downstream tasks. For example, if we change the answer to the question “What university did Watts Humphrey attend?” from “Trinity College” to “University of Michigan”, the model should then answer “Ann Arbor in Michigan State” instead of “Dublin in Ireland” when asked, “Which city did Watts Humphrey live in during his university studies?” We thus construct a reasoning dataset to evaluate the post-edit models’ abilities to use the edited knowledge.

Table 3: Portability results on various model editing methods. The example for each assessment type can be found in Table 7 in Appendix B.

| Method | Subject-Replace | Reverse-Relation | One-hop |
|---|---|---|---|
| GPT-J-6B | | | |
| FT-L | 72.96 | 8.05 | 1.34 |
| SERAC | 17.79 | 1.30 | 5.53 |
| T-Patcher | 96.65 | 33.62 | 3.10 |
| MEND | 42.45 | 0.00 | 11.34 |
| ROME | 37.42 | 46.42 | 50.91 |
| MEMIT | 27.73 | 47.67 | 52.74 |
| IKE | 88.77 | 92.96 | 55.38 |
| GPT-NEOX-20B | | | |
| ROME | 44.57 | 48.99 | 51.03 |
| MEMIT | 30.98 | 49.19 | 49.58 |
| IKE | 85.54 | 96.46 | 58.97 |

表 3: 不同模型编辑方法的可移植性结果。各评估类型的示例见附录B中的表7。


We incorporate a new part, $P(x_{e},y_{e})$ , into the existing dataset ZsRE, and Portability is calculated as the average accuracy of the edited model $\left(f_{\theta_{e}}\right)$ when applied to reasoning examples in $P(x_{e},y_{e})$ :

我们在现有数据集ZsRE中新增了一个部分 $P(x_{e},y_{e})$ ,可移植性 (Portability) 的计算方式为编辑后模型 $\left(f_{\theta_{e}}\right)$ 在 $P(x_{e},y_{e})$ 推理样本上的平均准确率:

$$
\mathbb{E}_{(x_{\mathrm{e}}^{\prime}, y_{\mathrm{e}}^{\prime}) \sim P(x_{\mathrm{e}}, y_{\mathrm{e}})} \mathbb{1}\left\{ \operatorname*{argmax}_{y} f_{\theta_{e}}(y \mid x_{\mathrm{e}}^{\prime}) = y_{\mathrm{e}}^{\prime} \right\}
$$
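As an illustration of the Portability score defined above, the following is a minimal sketch that averages an exact-match indicator over probe pairs $(x_{e}^{\prime}, y_{e}^{\prime})$. The names `portability` and `toy_edited_model`, the alias "W. Humphrey", and the case-insensitive string comparison are assumptions made for this example; they are not taken from the paper's evaluation code, which may match answers differently.

```python
from typing import Callable, Iterable, Tuple


def portability(predict: Callable[[str], str],
                probes: Iterable[Tuple[str, str]]) -> float:
    """Average exact-match accuracy of an edited model on portability probes.

    `predict` stands in for argmax_y f_{theta_e}(y | x'); each probe is a
    pair (x', y') drawn from P(x_e, y_e).
    """
    probes = list(probes)
    if not probes:
        raise ValueError("at least one probe is required")
    hits = 0
    for question, expected in probes:
        # Assumption: answers are compared after trimming and lowercasing.
        if predict(question).strip().lower() == expected.strip().lower():
            hits += 1
    return hits / len(probes)


# Hypothetical stand-in for an edited model: it handles the one-hop probe
# but still returns the pre-edit answer for an alias of the subject.
def toy_edited_model(question: str) -> str:
    answers = {
        "Which city did Watts Humphrey live in during his university studies?":
            "Ann Arbor in Michigan State",
        "What university did W. Humphrey attend?": "Trinity College",
    }
    return answers.get(question, "")


PROBES = [
    ("Which city did Watts Humphrey live in during his university studies?",
     "Ann Arbor in Michigan State"),                                  # one-hop
    ("What university did W. Humphrey attend?", "University of Michigan"),  # subject replace
]

score = portability(toy_edited_model, PROBES)
```

Here the toy model answers one of the two probes correctly, so `score` is 0.5. In practice `predict` would wrap greedy decoding from the post-edit model.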


Dataset Construction As to the one-hop dataset, in the original edit, we alter the answer from $o$ to $o^{*}$ for a subject $s$. We then prompt the model to generate a linked triple $(o^{*}, r^{*}, o^{\prime *})$. Subsequently, GPT-4 creates a question and answer based on this triple and $s$. Notably, if the model can answer this new question, it would imply that it has preexisting knowledge of the triple $(o^{*}, r^{*}, o^{\prime *})$. We filter out unknown triples by asking the model to predict $o^{\prime *}$ from $o^{*}$ and $r^{*}$. If the prediction is correct, we infer that the model has prior knowledge of the triple. Finally, human evaluators verify the triple's accuracy and the question's fluency. Additional details, such as the demonstrations we used and other parts of dataset construction, can be found in Appendix B.

数据集构建

对于单跳数据集,在原始编辑中,我们将主体 $s$ 的答案从 $o$ 修改为 $o^{* }$。接着,我们提示模型生成一个关联三元组 $(o^{* },r^{* },o^{'* })$。随后,GPT-4基于该三元组和 $s$ 生成一个问题及其答案。值得注意的是,若模型能回答这个新问题,则表明它已预先掌握三元组 $(o^{* },r^{* },o^{'* })$ 的知识。我们通过要求模型根据 $o^{* }$ 和 $r^{* }$ 预测 $o^{'*}$ 来过滤未知三元组。若预测成功,则推断模型具备先验知识。最后,人工评估员验证三元组的准确性和问题的流畅性。更多细节(如使用的演示案例及数据集构建的其他部分)可参见附录B。
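The filtering step described above can be sketched as follows. This is a hedged illustration only: `Triple`, `filter_known_triples`, `toy_recall`, and the example relations are hypothetical names introduced here, and the exact-match check is an assumption about how a correct prediction might be detected.

```python
from typing import Callable, Iterable, List, NamedTuple


class Triple(NamedTuple):
    head: str      # o*, the new answer introduced by the edit
    relation: str  # r*
    tail: str      # o'*, the entity linked to o* through r*


def filter_known_triples(triples: Iterable[Triple],
                         recall: Callable[[str, str], str]) -> List[Triple]:
    """Keep only triples whose tail the model predicts from (head, relation)."""
    kept = []
    for triple in triples:
        predicted = recall(triple.head, triple.relation)
        # Assumption: a triple counts as known on a case-insensitive exact match.
        if predicted.strip().lower() == triple.tail.strip().lower():
            kept.append(triple)
    return kept


# Hypothetical stand-in for querying the unedited model.
def toy_recall(head: str, relation: str) -> str:
    known = {("University of Michigan", "located in city"): "Ann Arbor"}
    return known.get((head, relation), "")


candidates = [
    Triple("University of Michigan", "located in city", "Ann Arbor"),
    Triple("University of Michigan", "hypothetical relation", "Unknown Entity"),
]
known_triples = filter_known_triples(candidates, toy_recall)
```

Only the first candidate survives, because the toy model cannot recover the tail of the second one. Triples that pass this filter would then go on to human verification.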

Results We conduct experiments based on the newly proposed evaluation metric and datasets, presenting the results in Table 3. As the table shows, current model editing methods perform suboptimally on portability. SERAC, despite showing impeccable results on previous metrics, scores less than 20% accuracy on all three portability aspects. The bottleneck of SERAC lies in the accuracy of the classifier and the capabilities of the additional model. In the subject-replace scenario, methods including SERAC, MEND, ROME, and MEMIT adapt only to a specific expression of the subject entity and do not generalize to the concept of the subject entity. In contrast, FT-L, IKE, and T-Patcher perform well when facing the substituted subject. Regarding the reversed relation, our results indicate that current editing methods mainly edit one-direction relations, with IKE as the notable exception, achieving over 90% on both GPT-J and GPT-NEOX-20B. Other methods alter the subject entity's attributes while leaving the object entity unaffected. In the one-hop reasoning setting, most of the editing methods struggle to transfer the altered knowledge to related facts. Unexpectedly, ROME, MEMIT, and IKE exhibit relatively commendable performance here (exceeding 50% on GPT-J). They are capable of not only editing the original cases but also modifying facts correlated with them in some respect. To summarize, IKE exhibits relatively good performance across the three scenarios in our evaluations. However, current model editing techniques clearly continue to face challenges in managing the ramifications of an edit, that is, in ensuring that changes to knowledge are coherently and consistently reflected in related contexts. This area calls for further investigation and innovation in future research.

结果

我们基于新提出的评估指标和数据集进行了实验,结果如表3所示。如表所示,当前模型编辑方法在可移植性方面的表现欠佳。SERAC虽然在先前指标中表现完美,但在所有三个可移植性维度上的准确率均低于20%。其瓶颈在于分类器的准确性及附加模型的能力。在主语替换场景中,包括SERAC、MEND、ROME和MEMIT等方法仅能适应特定主语实体表达,而无法泛化至主语实体的概念。然而FT-L、IKE和T-Patcher在面对替换主语时表现出色。关于反向关系,我们的结果表明当前编辑方法主要处理单向关系,其中IKE是显著例外,在GPT-J和GPT-NEOX-20B上均达到90%以上。其他方法仅改变主语实体属性而保持宾语实体不变。在单跳推理场景中,多数编辑方法难以将修改后的知识迁移至相关事实。出乎意料的是,ROME、MEMIT和IKE在可移植性上表现相对较好(超过50%),不仅能编辑原始案例,还能修改与之关联的事实。总体而言,IKE在我们的评估中三种场景均表现较优。但显然,当前模型编辑技术在管理编辑的连锁效应(即确保知识修改在相关语境中连贯一致地体现)方面仍面临挑战。这一领域确实需要未来研究进一步探索与创新。

5.2 Locality - Side Effect of Model Editing

5.2 局部性 - 模型编辑的副作用

In the preceding section, COUNTERFACT and ZsRE evaluate model editing's locality from different perspectives. COUNTERFACT employs triples from the same distribution as the target knowledge, while ZsRE utilizes questions from the distinct Natural Questions dataset. Notably, some methods, such as T-Patcher, exhibit differing performances on these two datasets. This highlights that the impact of model editing on the language model is multifaceted, necessitating a thorough and comprehensive evaluation to fully appreciate its effects. To thoroughly examine the potential side effects of model editing, we propose evaluations at three different levels: (1) Other Relations: Although Meng et al. (2022) introduced the concept of essence, they did not explicitly evaluate it. We argue that other attributes of the edited subject should remain unchanged after editing. (2) Distract Neighbourhood: Hoelscher-Obermaier et al. (2023a) find that if we concatenate the edited cases before other unrelated input, the model tends to be swayed by the edited fact and continue to produce results aligned with the edited cases. (3) Other Tasks: Building upon Skill Neuron's assertion (Wang et al., 2022) that feed-forward networks in large language models (LLMs) possess task-specific knowledge capabilities, we introduce a new challenge to assess whether model editing might negatively impact performance on other tasks. Details of the dataset construction can be found in Appendix B.3.

在前一节中,COUNTERFACT和ZsRE从不同角度评估了模型编辑的局部性。COUNTERFACT采用与目标知识相同分布的三元组,而ZsRE则使用来自不同的Natural Questions数据集的问题。值得注意的是,某些方法(如T-Patcher)在这两个数据集上表现出差异化的性能。这表明模型编辑对大语言模型的影响是多方面的,需要全面系统的评估才能充分理解其效果。为深入探究模型编辑的潜在副作用,我们提出三个层次的评估框架:(1) 其他关联性:虽然Meng等人(2022)提出了本质属性的概念,但未对其进行显式评估。我们认为编辑后,被编辑主体的其他属性应保持不变。(2) 干扰邻域:Hoelscher-Obermaier等人(2023a)发现,若将编辑案例置于无关输入之前,模型易受编辑事实影响而持续产生与编辑案例一致的结果。(3) 其他任务:基于Skill Neuron(Wang等人,2022)关于大语言模型中前馈网络具有任务特定知识能力的论断,我们提出新挑战以评估模型编辑是否会对其他任务性能产生负面影响。数据集构建细节详见附录B.3。

| Method | Other-Attribution | Distract-Neighbor | Other-Task |
|---|---|---|---|
| FT-L | 12.88 | 9.48 | 49.56 |
| MEND | 73.50 | 32.96 | 48.86 |
| SERAC | 99.50 | 39.18 | 74.84 |
| T-Patcher | 91.51 | 17.56 | 75.03 |
| ROME | 78.94 | 50.35 | 52.12 |
| MEMIT | 86.78 | 60.47 | 74.62 |
| IKE | 84.13 | 66.04 | 75.33 |

Table 4: Locality results on various model editing methods for GPT-J. Examples of each type can be seen in Table 9 in Appendix B.

表 4: GPT-J 各类模型编辑方法的局部性结果。具体示例详见附录 B 的表 9。
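To make the Distract Neighbourhood setting concrete, here is a minimal sketch that prepends the edited statement to an unrelated prompt and checks whether the answer to the unrelated prompt survives. The function names, the toy models, and the neighbor prompt are hypothetical illustrations; the actual prompts and scoring used for Table 4 are described in Appendix B.3 and may differ.

```python
from typing import Callable, List, Tuple


def build_distract_prompt(edit_statement: str, neighbor_prompt: str) -> str:
    """Concatenate the edited fact before an unrelated input."""
    return f"{edit_statement.strip()} {neighbor_prompt.strip()}"


def distract_neighbor_accuracy(predict: Callable[[str], str],
                               edit_statement: str,
                               neighbors: List[Tuple[str, str]]) -> float:
    """Fraction of unrelated prompts still answered correctly
    when the edited fact is placed in front of them."""
    if not neighbors:
        raise ValueError("at least one neighbor prompt is required")
    hits = 0
    for prompt, expected in neighbors:
        answer = predict(build_distract_prompt(edit_statement, prompt))
        hits += int(answer.strip().lower() == expected.strip().lower())
    return hits / len(neighbors)


EDIT = "Watts Humphrey attended University of Michigan."
NEIGHBORS = [("The capital of France is", "Paris")]  # hypothetical unrelated prompt


def swayed_model(prompt: str) -> str:
    # Toy model that repeats the edited target whenever it appears in context.
    return "University of Michigan" if "University of Michigan" in prompt else "Paris"


def robust_model(prompt: str) -> str:
    # Toy model that answers the unrelated prompt regardless of the prefix.
    return "Paris" if prompt.endswith("The capital of France is") else ""


swayed_score = distract_neighbor_accuracy(swayed_model, EDIT, NEIGHBORS)
robust_score = distract_neighbor_accuracy(robust_model, EDIT, NEIGHBORS)
```

The swayed toy model scores 0.0 and the robust one scores 1.0, which is the contrast this evaluation is meant to expose.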

Results Table 4 presents our results. Notably, most current editing methods perform well in the other-attribution aspect, indicating that they modify only the target characteristic without affecting other attributes; FT-L is a clear exception at 12.88. However, the methods generally perform poorly in the Distract-Neighbor setting, as reflected in the performance drop compared to the results in Table 1. An exception is IKE, whose performance remains relatively stable because it inherently requires the edited fact to be concatenated before the input. As for the commonsense reasoning tasks, parameter-preserving methods largely maintain their performance on other tasks. Conversely, methods that alter parameters tend to negatively influence performance, with the exception of MEMIT. Despite changing parameters, MEMIT maintains strong performance on commonsense tasks, demonstrating its commendable locality.

结果

表 4 展示了我们的实验结果。值得注意的是,当前编辑方法在"其他属性"维度表现优异,表明其仅修改目标特征而不影响其他属性。然而,这些方法在"干扰邻近项"(Distract-Neighbor)设定中普遍表现欠佳,与表 1 结果相比存在性能下降。IKE 是个例外,由于其本质要求将编辑事实与输入拼接的特性,性能保持相对稳定。在常识推理任务方面,参数保留方法基本能维持其他任务的表现,而参数修改方法往往会产生负面影响(MEMIT 除外)。尽管会改变参数,MEMIT 在常识任务中仍保持强劲性能,展现出卓越的局部性特质。

Table 5: Wall clock time for each edit method conducting 10 edits on GPT-J using one $2\times\mathsf{V}100$ (32G). The calculation of this time involves measuring the duration from providing the edited case to obtaining the post-edited model.

| Editor | COUNTERFACT | ZsRE |
|---|---|---|
| FT-L | 35.94s | 58.86s |
| SERAC | 5.31s | 6.51s |
| CaliNet | 1.88s | 1.93s |
| T-Patcher | 1864.74s | 1825.15s |
| KE | 2.20s | 2.21s |
| MEND | 0.51s | 0.52s |
| KN | 225.43s | 173.57s |
| ROME | 147.2s | 183.0s |
| MEMIT | 143.2s | 145.6s |

表 5: 各编辑方法在单张 $2\times\mathsf{V}100$ (32G) 显卡上对 GPT-J 执行 10 次编辑的挂钟时间。该时间的计算包括从提供编辑案例到获得后编辑模型的持续时间测量。


5.3 Efficiency

5.3 效率

Model editing should minimize the time and memory required for conducting edits without compromising the model’s performance.

模型编辑应在不影响模型性能的前提下,尽量减少执行编辑所需的时间和内存。

Time Analysis Table 5 illustrates the time required for different model editing techniques from providing the edited case to obtaining the post-edited model. We observe that once the hypernetwork is trained, KE and MEND perform the editing process very quickly (about 2 seconds and 0.5 seconds for 10 edits, respectively). Likewise, SERAC can also quickly edit knowledge, completing the process in about 5 to 7 seconds, given a trained classifier and counterfactual model. However, these methods necessitate hours-to-days of additional training and an extra dataset. In our experiments, training MEND on the ZsRE dataset took over 7 hours, and training SERAC required over 36 hours on $3\times\mathrm{V}100$. ROME and MEMIT, in turn, require pre-computing covariance statistics on Wikitext, which is time-consuming and can take hours to days to complete. In comparison, other methods such as KN, CaliNet, and T-Patcher require no pre-computation or pre-training and so may be faster to set up. However, the performance of KN and CaliNet on larger models is unsatisfactory, and T-Patcher is the slowest at edit time because it needs to train individual neurons for each corresponding mistake. Considering the time aspect, there is a need for a more time-efficient model editing method.

时间分析

表 5 展示了从提供编辑案例到获得后编辑模型所需的时间。我们观察到,一旦超网络训练完成,KE 和 MEND 能以相当快的速度执行编辑过程。同样,在分类器和反事实模型训练完成后,SERAC 也能在约 5 秒内快速完成知识编辑。然而,这些方法需要数小时至数天的额外训练和额外数据集。在我们的实验中,在 ZsRE 数据集上训练 MEND 耗时超过 7 小时,而训练 SERAC 在 $3\times\mathrm{V}100$ 上需要超过 36 小时。另一方面,ROME 和 MEMIT 需要预先计算 Wikitext 的协方差统计量,但这一计算耗时且可能花费数小时至数天才能完成。相比之下,KN、CaliNET 和 T-Patcher 等方法可能更快,因为它们不需要任何预计算或预训练。然而,KN 和 CaliNET 在较大模型上的表现不尽如人意,而 T-Patcher 由于需要为每个对应错误单独训练神经元而成为最慢的方法。从时间角度考虑,我们需要一种更省时的模型编辑方法。
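The timing protocol described above (from providing the edit cases to obtaining the post-edited model) can be sketched as below. This is only an illustrative harness: `time_edits`, `toy_apply_edit`, and the dictionary "model" are hypothetical, and we assume edits are applied one after another. For a real GPU model one would also need to wait for pending kernels to finish (for example with `torch.cuda.synchronize()`) before reading the clock.

```python
import time
from typing import Any, Callable, Iterable, Tuple


def time_edits(apply_edit: Callable[[Any, Any], Any],
               model: Any,
               edits: Iterable[Any]) -> Tuple[Any, float]:
    """Apply edits sequentially and return (post-edit model, elapsed seconds).

    The clock starts when the first edit case is provided and stops once the
    final post-edited model is available.
    """
    start = time.perf_counter()
    for edit in edits:
        model = apply_edit(model, edit)
    elapsed = time.perf_counter() - start
    return model, elapsed


# Hypothetical stand-in for an editing method: the "model" is a dict of facts,
# and an edit is a (key, new_value) pair.
def toy_apply_edit(model: dict, edit: Tuple[str, str]) -> dict:
    key, value = edit
    updated = dict(model)  # copy so the pre-edit model stays intact
    updated[key] = value
    return updated


base_model = {"Watts Humphrey / university": "Trinity College"}
ten_edits = [("Watts Humphrey / university", "University of Michigan")] * 10
edited_model, seconds = time_edits(toy_apply_edit, base_model, ten_edits)
```

Measuring only this window deliberately excludes any hypernetwork training or covariance pre-computation, which is why those costs are discussed separately in the text.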

Figure 5: GPU VRAM consumption during training and editing for different model editing methods.

图 5: 不同模型编辑方法在训练和编辑过程中的 GPU 显存消耗。

Memory Analysis Figure 5 shows the GPU VRAM usage for each model editing method. From this figure, we observe that the majority of the methods consume a similar amount of memory, with the exception of MEND, which requires more than 60GB for training. Methods that introduce extra training, such as MEND and SERAC, incur additional computational overhead and hence a significant increase in memory consumption.

内存分析

图 5 展示了各模型编辑方法的内存 (VRAM) 使用情况。从图中可以看出,除 MEND 训练时需占用超过 60GB 内存外,其他方法的内存消耗量基本相近。引入额外训练的方法 (如 MEND 和 SERAC) 会导致计算开销增加,因此内存占用显著上升。

6 Relationship with Relevant Works

6 与相关工作的关系

6.1 Knowledge in LLMs

6.1 大语言模型中的知识

Several model editing approaches aim to determine precisely how knowledge is stored in PLMs and then directly alter the model's parameters. Existing work examines the principles that govern how PLMs store knowledge (Geva et al., 2021, 2022; Haviv et al., 2023; Hao et al., 2021; Hernandez et al., 2023; Yao et al., 2023; Cao et al., 2023; Lamparth and Reuel, 2023; Cheng et al., 2023; Li et al., 2023b; Chen et al., 2023; Ju and Zhang, 2023), which contributes to the model editing process. Moreover, some model editing techniques resemble knowledge augmentation approaches (Zhang et al., 2019; Lewis et al., 2020; Zhang et al., 2022b; Yasunaga et al., 2021; Yao et al., 2022; Pan et al., 2023), as updating the model's knowledge can also be considered as instilling knowledge into the model.

多种模型编辑方法致力于探究预训练语言模型(PLM)中存储的知识如何精确且直接地改变模型参数。现有研究揭示了PLM存储知识的核心机制(Geva et al., 2021, 2022; Haviv et al., 2023; Hao et al., 2021; Hernandez et al., 2023; Yao et al., 2023; Cao et al., 2023; Lamparth and Reuel, 2023; Cheng et al., 2023; Li et al., 2023b; Chen et al., 2023; Ju and Zhang, 2023),这些发现推动了模型编辑技术的发展。此外,部分模型编辑技术与知识增强方法(Zhang et al., 2019; Lewis et al., 2020; Zhang et al., 2022b; Yasunaga et al., 2021; Yao et al., 2022; Pan et al., 2023)具有相似性,因为更新模型知识本质上也可视为向模型注入新知识。

6.2 Lifelong Learning and Unlearning

6.2 持续学习与遗忘学习

Model editing, encompassing lifelong learning and unlearning, allows adaptive addition, modification, and removal of knowledge. Continual learning (Biesialska et al., 2020), which improves model adaptability across tasks and domains, has shown effectiveness in model editing in PLMs (Zhu et al., 2020). Moreover, it’s vital for models to forget sensitive knowledge, aligning with machine unlearning concepts (Hase et al., 2023; Wu et al., 2022; Tarun et al., 2021; Gandikota et al., 2023).

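模型编辑 (model editing) 涵盖持续学习 (lifelong learning) 与遗忘学习 (unlearning),支持知识的动态增删改。持续学习 (continual learning) (Biesialska et al., 2020) 能提升模型跨任务和跨领域的适应能力,在预训练语言模型 (PLM) 编辑中已展现成效 (Zhu et al., 2020)。此外,模型需具备遗忘敏感知识的能力,这与机器遗忘 (machine unlearning) 概念相契合 (Hase et al., 2023; Wu et al., 2022; Tarun et al., 2021; Gandikota et al., 2023)。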

6.3 Security and Privacy for LLMs

6.3 大语言模型的安全与隐私

Past studies (Carlini et al., 2020; Shen et al., 2023) show that LLMs can produce unreliable or personal samples from certain prompts. The task of erasing potentially harmful and private information stored in large language models (LLMs) is vital to enhance the privacy and security of LLM-based applications (Sun et al., 2023). Model editing, which can suppress harmful language generation (Geva et al., 2022; Hu et al., 2023), could help address these concerns.

过往研究 (Carlini et al., 2020; Shen et al., 2023) 表明,大语言模型可能根据特定提示生成不可靠或涉及个人隐私的样本。消除大语言模型中潜在有害和私密信息的任务,对于提升基于大语言模型应用的隐私与安全性至关重要 (Sun et al., 2023)。能够抑制有害语言生成的模型编辑技术 (Geva et al., 2022; Hu et al., 2023) 有助于解决这些问题。

7 Conclusion

7 结论

We systematically analyze methods for editing large language models (LLMs). We aim to help researchers better understand existing editing techniques by examining their features, strengths, and limitations. Our analysis shows much room for improvement, especially in terms of portability, locality, and efficiency. Improved LLM editing could help better align them with the changing needs and values of users. We hope that our work spurs progress on open issues and further research.

我们系统性地分析了大语言模型(LLM)的编辑方法。通过考察这些方法的特性、优势与局限,旨在帮助研究者更好地理解现有编辑技术。分析表明这些方法在可移植性、局部性和效率方面仍有很大改进空间。改进的大语言模型编辑技术能帮助模型更好地适应用户不断变化的需求和价值观。我们希望这项工作能推动开放问题的进展并促进后续研究。

Acknowledgment

致谢

We would like to express gratitude to the anonymous reviewers for their kind comments. This work was supported by the National Natural Science Foundation of China (No.62206246), Zhejiang Provincial Natural Science Foundation of China (No. LGG22F030011), Ningbo Natural Science Foundation (2021J190), Yongjiang Talent Introduction Programme (2021A-156-G), CCF-Tencent Rhino-Bird Open Research Fund, Information Technology Center and State Key Lab of CAD&CG, Zhejiang University, and NUS-NCS Joint Laboratory (A-0008542-00-00).

我们要感谢匿名评审专家的宝贵意见。本研究得到了国家自然科学基金(No.62206246)、浙江省自然科学基金(No.LGG22F030011)、宁波市自然科学基金(2021J190)、甬江人才工程(2021A-156-G)、CCF-腾讯犀牛鸟基金、浙江大学信息技术中心及CAD&CG国家重点实验室,以及NUS-NCS联合实验室(A-0008542-00-00)的支持。

Limitations

局限性

There remain several aspects of model editing that are not covered in this paper.

本文尚未涵盖模型编辑的若干方面。

Model Scale & Architecture Due to computational resource constraints, we have only calculated the results for models up to 20B in size here. Meanwhile, many model editing methods treat the FFN of the model as key-value pairs. Whether these methods are effective for models with different architectures, such as Llama, remains to be explored.

模型规模与架构

由于计算资源限制,我们仅计算了规模不超过200亿参数模型的结果。同时,许多模型编辑方法将模型的FFN (Feed-Forward Network) 视为键值对存储。这些方法是否适用于不同架构的模型(如Llama)仍有待探索。

Editing Scope Notably, the application of model editing goes beyond mere factual contexts, underscoring its vast potential. Elements such as personality, emotions, opinions, and beliefs also fall within the scope of model editing. While these aspects have been somewhat explored, they remain relatively uncharted territories and thus are not detailed in this paper. Furthermore, multilingual editing (Xu et al., 2022; Wang et al., 2023a; Wu et al., 2023) represents an essential research direction that warrants future attention and exploration. There are also some editing works that can deal with computer vision tasks such as ENN (Sinitsin et al., 2020) and Ilharco et al. (2023).

编辑范围

值得注意的是,模型编辑的应用远不止于事实性内容,其巨大潜力还体现在人格、情感、观点和信仰等元素的编辑上。虽然这些方面已有部分探索,但仍属于相对未知的领域,因此本文不作详细讨论。此外,多语言编辑 (Xu et al., 2022; Wang et al., 2023a; Wu et al., 2023) 是一个重要的研究方向,值得未来关注和探索。还有一些编辑工作能处理计算机视觉任务,例如 ENN (Sinitsin et al., 2020) 和 Ilharco et al. (2023)。

Editing Setting In our paper, the comprehensive study in Section 5 mainly evaluated each method's performance on a single edit. During the time of our work, Zhong et al. (2023) proposed a multi-hop reasoning setting that explored current editing methods' generalization performance for multiple edits simultaneously. We leave this multiple-edit evaluation for the future. Besides, this work focused on changing the model's result to reflect specific facts. Cohen et al. (2023) propose a benchmark for knowledge injection and knowledge update. However, erasing the knowledge or information stored in LLMs (Belrose et al., 2023; Geva et al., 2022; Ishibashi and Shimodaira, 2023) is also an important direction for investigation.

编辑设置

在我们的论文中,第5节的综合研究主要评估了各方法在单次编辑上的性能。在我们工作期间,Zhong等人(2023)提出了一种多跳推理设置,探索了当前编辑方法在同时进行多次编辑时的泛化性能。我们将这种多次编辑评估留待未来研究。此外,这项工作侧重于改变模型的结果以反映特定事实。Cohen等人(2023)提出了知识注入和知识更新的基准。然而,消除大语言模型中存储的知识或信息(Belrose等人,2023;Geva等人,2022;Ishibashi和Shimodaira,2023)也是一个重要的研究方向。

Editing Black-Box LLMs Meanwhile, models like ChatGPT and GPT-4 exhibit remarkable performance on a wide range of natural language tasks but are only accessible through APIs. This raises an important question: How can we edit these “black-box” models that also tend to produce undesirable outputs during downstream usage? Presently, there are some works that utilize in-context learning (Onoe et al., 2023) and prompt-based methods (Murty et al., 2022) to modify these models. They precede each example with a textual prompt that specifies the adaptation target, which shows promise as a technique for model editing.

编辑黑盒大语言模型

与此同时,像 ChatGPT 和 GPT-4 这样的模型在广泛的自然语言任务中表现出色,但只能通过 API 访问。这引发了一个重要问题:如何编辑这些在下游使用中往往会产生不良输出的"黑盒"模型?目前,已有一些研究利用上下文学习 (Onoe et al., 2023) 和基于提示的方法 (Murty et al., 2022) 来修改这些模型。他们在每个示例前添加文本提示以指定适配目标,这展现了作为模型编辑技术的潜力。

Ethic Consideration

伦理考量

Model editing pertains to the methods used to alter the behavior of pre-trained models. However, it's essential to bear in mind that ill-intentioned model editing could lead the model to generate harmful or inappropriate outputs. Therefore, ensuring safe and responsible practices in model editing is of paramount importance. The application of such techniques should be guided by ethical considerations, and there should be safeguards to prevent misuse and the production of harmful results. All our data has been carefully checked by humans, and any malicious editing or offensive content has been removed.

模型编辑涉及用于改变预训练模型行为的方法。然而,必须牢记的是,恶意模型编辑可能导致模型生成有害或不适当的输出。因此,确保模型编辑的安全和负责任实践至关重要。此类技术的应用应以伦理考量为指导,并应设置防护措施以防止滥用和有害结果的产生。我们的所有数据都经过人工仔细检查,任何恶意编辑或冒犯性内容均已被移除。

References

参考文献

Rohan Anil, Andrew M Dai, Orhan Firat, Melvin Johnson, Dmitry Lepikhin, Alexandre Passos, Siamak Shakeri, Emanuel Taropa, Paige Bailey, Zhifeng Chen, et al. 2023. Palm 2 technical report. arXiv preprint arXiv:2305.10403.

Rohan Anil、Andrew M Dai、Orhan Firat、Melvin Johnson、Dmitry Lepikhin、Alexandre Passos、Siamak Shakeri、Emanuel Taropa、Paige Bailey、Zhifeng Chen 等. 2023. PaLM 2技术报告. arXiv预印本 arXiv:2305.10403.

Nora Belrose, David Schneider-Joseph, Shauli Ravfogel, Ryan Cotterell, Edward Raff, and Stella Biderman. 2023. Leace: Perfect linear concept erasure in closed form.

Nora Belrose、David Schneider-Joseph、Shauli Ravfogel、Ryan Cotterell、Edward Raff 和 Stella Biderman。2023。Leace:闭式完美线性概念擦除。

Magdalena Biesialska, Katarzyna Biesialska, and Marta Ruiz Costa-jussà. 2020. Continual lifelong learning in natural language processing: A survey. ArXiv, abs/2012.09823.

Magdalena Biesialska、Katarzyna Biesialska 和 Marta Ruiz Costa-jussà。2020。自然语言处理中的持续终身学习:综述。ArXiv,abs/2012.09823。

Yonatan Bisk, Rowan Zellers, Ronan Le Bras, Jianfeng Gao, and Yejin Choi. 2020. PIQA: reasoning about physical commonsense in natural language. In The Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, February 7-12, 2020, pages 7432–7439. AAAI Press.

Yonatan Bisk、Rowan Zellers、Ronan Le Bras、Jianfeng Gao和Yejin Choi。2020。PIQA:基于自然语言的物理常识推理。载于《第三十四届AAAI人工智能会议(AAAI 2020)》《第三十二届人工智能创新应用会议(IAAI 2020)》《第十届AAAI人工智能教育进展研讨会(EAAI 2020)》,2020年2月7-12日,美国纽约州纽约市,第7432–7439页。AAAI Press。

Sid Black, Stella Biderman, Eric Hallahan, Quentin Anthony, Leo Gao, Laurence Golding, Horace He, Connor Leahy, Kyle McDonell, Jason Phang, Michael Pieler, USVSN Sai Prashanth, Shivanshu Purohit, Laria Reynolds, Jonathan Tow, Ben Wang, and Samuel Weinbach. 2022. Gpt-neox-20b: An open-source autoregressive language model.

Sid Black、Stella Biderman、Eric Hallahan、Quentin Anthony、Leo Gao、Laurence Golding、Horace He、Connor Leahy、Kyle McDonell、Jason Phang、Michael Pieler、USVSN Sai Prashanth、Shivanshu Purohit、Laria Reynolds、Jonathan Tow、Ben Wang 和 Samuel Weinbach。2022。GPT-NeoX-20B:一个开源自回归语言模型。

Tom B. Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, Sandhini Agarwal, Ariel Herbert-Voss, Gretchen Krueger, Tom Henighan, Rewon Child, Aditya Ramesh, Daniel M. Ziegler, Jeffrey Wu, Clemens Winter, Christopher Hesse, Mark Chen, Eric Sigler, Mateusz Litwin, Scott Gray, Benjamin Chess, Jack Clark, Christopher Berner, Sam McCandlish, Alec Radford, Ilya Sutskever, and Dario Amodei. 2020. Language models are few-shot learners. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual.

Tom B. Brown、Benjamin Mann、Nick Ryder、Melanie Subbiah、Jared Kaplan、Prafulla Dhariwal、Arvind Neelakantan、Pranav Shyam、Girish Sastry、Amanda Askell、Sandhini Agarwal、Ariel Herbert-Voss、Gretchen Krueger、Tom Henighan、Rewon Child、Aditya Ramesh、Daniel M. Ziegler、Jeffrey Wu、Clemens Winter、Christopher Hesse、Mark Chen、Eric Sigler、Mateusz Litwin、Scott Gray、Benjamin Chess、Jack Clark、Christopher Berner、Sam McCandlish、Alec Radford、Ilya Sutskever 和 Dario Amodei。2020。语言模型是少样本学习者。收录于《神经信息处理系统进展 33:2020 年神经信息处理系统年会》(NeurIPS 2020),2020 年 12 月 6-12 日,线上会议。

Boxi Cao, Qiaoyu Tang, Hongyu Lin, Xianpei Han, Jiawei Chen, Tianshu Wang, and Le Sun. 2023. Retentive or forgetful? diving into the knowledge memorizing mechanism of language models. arXiv preprint arXiv:2305.09144.

Boxi Cao、Qiaoyu Tang、Hongyu Lin、Xianpei Han、Jiawei Chen、Tianshu Wang 和 Le Sun。2023. 保留还是遗忘?探究语言模型的知识记忆机制。arXiv预印本 arXiv:2305.09144。

Nicholas Carlini, Florian Tramèr, Eric Wallace, Matthew Jagielski, Ariel Herbert-Voss, Katherine Lee, Adam Roberts, Tom B. Brown, Dawn Xiaodong Song, Úlfar Erlingsson, Alina Oprea, and Colin Raffel. 2020. Extracting training data from large language models. In USENIX Security Symposium.

Nicholas Carlini、Florian Tramèr、Eric Wallace、Matthew Jagielski、Ariel Herbert-Voss、Katherine Lee、Adam Roberts、Tom B. Brown、Dawn Xiaodong Song、Úlfar Erlingsson、Alina Oprea 和 Colin Raffel。2020。从大语言模型中提取训练数据。见:USENIX安全研讨会。

Yuheng Chen, Pengfei Cao, Yubo Chen, Kang Liu, and Jun Zhao. 2023. Journey to the center of the knowledge neurons: Discoveries of language-independent knowledge neurons and degenerate knowledge neurons. CoRR, abs/2308.13198.

Yuheng Chen、Pengfei Cao、Yubo Chen、Kang Liu 和 Jun Zhao。 2023. 知识神经元核心探索:语言无关知识神经元与退化知识神经元的发现。 CoRR, abs/2308.13198.

Siyuan Cheng, Ningyu Zhang, Bozhong Tian, Zelin Dai, Feiyu Xiong, Wei Guo, and Huajun Chen. 2023. Editing language model-based knowledge graph embeddings. CoRR, abs/2301.10405.

Siyuan Cheng、Ningyu Zhang、Bozhong Tian、Zelin Dai、Feiyu Xiong、Wei Guo 和 Huajun Chen。2023。基于语言模型的知识图谱嵌入编辑。CoRR, abs/2301.10405。

Roi Cohen, Eden Biran, Ori Yoran, Amir Globerson, and Mor Geva. 2023. Evaluating the ripple effects of knowledge editing in language models.

Roi Cohen、Eden Biran、Ori Yoran、Amir Globerson和Mor Geva。2023。评估大语言模型中知识编辑的连锁效应。

Damai Dai, Li Dong, Yaru Hao, Zhifang Sui, Baobao Chang, and Furu Wei. 2022. Knowledge neurons in pretrained transformers. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 8493–8502, Dublin, Ireland. Association for Computational Linguistics.

Damai Dai、Li Dong、Yaru Hao、Zhifang Sui、Baobao Chang和Furu Wei。2022。预训练Transformer中的知识神经元。载于《第60届计算语言学协会年会论文集(第一卷:长论文)》,第8493-8502页,爱尔兰都柏林。计算语言学协会。

Nicola De Cao, Wilker Aziz, and Ivan Titov. 2021. Editing factual knowledge in language models. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 6491–6506, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics.

Nicola De Cao、Wilker Aziz 和 Ivan Titov。2021. 编辑语言模型中的事实知识。载于《2021年自然语言处理实证方法会议论文集》,第6491–6506页,线上会议及多米尼加共和国蓬塔卡纳。计算语言学协会。

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics.

Jacob Devlin、Ming-Wei Chang、Kenton Lee 和 Kristina Toutanova。2019. BERT: 面向语言理解的深度双向Transformer预训练。载于《2019年北美计算语言学协会人类语言技术会议论文集》(长篇与短篇论文), 第1卷, 第4171–4186页, 明尼苏达州明尼阿波里斯市。计算语言学协会。

Qingxiu Dong, Damai Dai, Yifan Song, Jingjing Xu, Zhifang Sui, and Lei Li. 2022. Calibrating factual knowledge in pretrained language models. In Findings of the Association for Computational Linguistics: EMNLP 2022, pages 5937–5947, Abu Dhabi, United Arab Emirates. Association for Computational Linguistics.

Qingxiu Dong、Damai Dai、Yifan Song、Jingjing Xu、Zhifang Sui 和 Lei Li。2022。校准预训练语言模型中的事实知识。载于《计算语言学协会发现:EMNLP 2022》,第5937–5947页,阿拉伯联合酋长国阿布扎比。计算语言学协会。

Rohi