Zero-shot Audio Source Separation through Query-based Learning from Weakly-labeled Data

Abstract

Deep learning techniques for separating audio into different sound sources face several challenges. Standard architectures require training separate models for different types of audio sources. Although some universal separators employ a single model to target multiple sources, they have difficulty generalizing to unseen sources. In this paper, we propose a three-component pipeline to train a universal audio source separator from a large but weakly-labeled dataset: AudioSet. First, we propose a transformer-based sound event detection system for processing weakly-labeled training data. Second, we devise a query-based audio separation model that leverages this data for model training. Third, we design a latent embedding processor to encode queries that specify audio targets for separation, allowing for zero-shot generalization. Our approach uses a single model for source separation of multiple sound types, and relies solely on weakly-labeled data for training. In addition, the proposed audio separator can be used in a zero-shot setting, learning to separate types of audio sources that were never seen in training. To evaluate the separation performance, we test our model on MUSDB18, while training on the disjoint AudioSet. We further verify the zero-shot performance by conducting another experiment on audio source types that are held out from training. The model achieves Source-to-Distortion Ratio (SDR) performance comparable to current supervised models in both cases.

Introduction

Audio source separation is a core task in the field of audio processing using artificial intelligence. The goal is to separate one or more individual constituent sources from a single recording of a mixed audio piece. Audio source separation can be applied in various downstream tasks such as audio extraction, audio transcription, and music and speech enhancement. Although there are many successful backbone architectures (e.g. Wave-U-Net, TasNet, D3Net (Stoller, Ewert, and Dixon 2018; Luo and Mesgarani 2018; Takahashi and Mitsufuji 2020)), fundamental challenges and questions remain: How can the models be made to better generalize to multiple, or even unseen, types of audio sources when supervised training data is limited? Can large amounts of weakly-labeled data be used to increase generalization performance?

The first challenge is known as universal source separation, meaning that we only need a single model to separate as many sources as possible. Most models mentioned above require training a full set of model parameters for each target type of audio source. As a result, training these models is both time and memory intensive. There are several heuristic frameworks (Samuel, Ganeshan, and Naradowsky 2020) that leverage meta-learning to bypass this problem, but they have difficulty generalizing to diverse types of audio sources. In other words, these frameworks succeeded in combining several source separators into one model, but the number of sources is still limited.

One approach to overcome this challenge is to train a model with an audio separation dataset that contains a very large variety of sound sources. The more sound sources a model can see, the better it will generalize. However, the scarcity of supervised separation datasets makes this process challenging. Most separation datasets contain only a few source types. For example, MUSDB18 (Rafii et al. 2017) and DSD100 (Liutkus et al. 2017) contain music tracks of only four source types (vocal, drum, bass, and other) with a total duration of 5-10 hours. MedleyDB (Bittner et al. 2014) contains 82 instrument classes but with a total duration of only 3 hours. There exist some large-scale datasets such as AudioSet (Gemmeke et al. 2017) and FUSS (Wisdom et al. 2021), but they contain only weakly-labeled data. AudioSet, for example, contains 2.1 million 10-sec audio samples with 527 sound events. However, only 5% of recordings in AudioSet have a localized event label (Hershey et al. 2021). For the remaining 95% of recordings, the correct occurrence of each labeled sound event can be anywhere within the 10-sec sample. In order to leverage this large and diverse source of weakly-labeled data, we first need to localize the sound event in each audio sample, which is referred to as an audio tagging task (Fonseca et al. 2018).

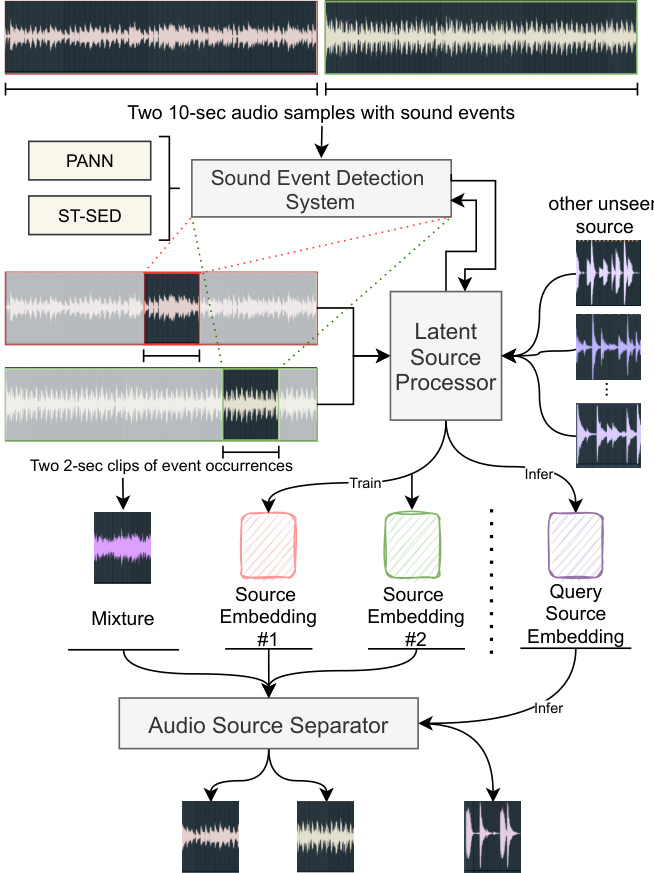

In this paper, as illustrated in Figure 1, we devise a pipeline that comprises three components: a transformer-based sound event detection system, ST-SED, for performing time-localization in weakly-labeled training data; a query-based U-Net source separator to be trained from this data; and a latent source embedding processor that allows generalization to unseen types of audio sources. The ST-SED can localize the correct occurrences of sound events from weakly-labeled audio samples and encode them as latent source embeddings. The separator learns to separate out a target source from an audio mixture given a corresponding target source embedding query, which is produced by the embedding processor. Further, the embedding processor enables zero-shot generalization by forming queries for new audio source types that were unseen at training time. In the experiments, we find that our model can effectively separate unseen types of audio sources, including musical instruments and held-out AudioSet sound classes, achieving SDR performance on par with existing state-of-the-art (SOTA) models. Our contributions are as follows:

Figure 1: The architecture of our proposed zero-shot separation system.

• We propose a complete pipeline to leverage weakly-labeled audio data in training audio source separation systems. The results show that our utilization of these data is effective.

• We design a transformer-based sound event detection system, ST-SED. It outperforms the SOTA for sound event detection in AudioSet, while achieving strong localization performance on the weakly-labeled data.

• We employ a single latent source separator for multiple types of audio sources, which saves training time and reduces the number of parameters. Moreover, we experimentally demonstrate that our approach can support zero-shot generalization to unseen types of sources.

Related Work

Sound Event Detection and Localization

The sound event detection task is to classify one or more target sound events in a given audio sample. The localization task, or audio tagging, further requires the model to output the specific time range of events on the audio timeline. Currently, the convolutional neural network (CNN) (LeCun et al. 1999) is widely used to detect sound events. The Pretrained Audio Neural Networks (PANN) (Kong et al. 2020a) and the PSLA (Gong, Chung, and Glass 2021b) achieve the current CNN-based SOTA for sound event detection, with their output featuremaps serving as an empirical probability map of events within the audio timeline. For the transformer-based structure, the latest audio spectrogram transformer (AST) (Gong, Chung, and Glass 2021a) re-purposes the visual transformer structures ViT (Dosovitskiy et al. 2021) and DeiT (Touvron et al. 2021) to use the transformer’s class-token to predict the sound event. It achieves the best performance on the sound event detection task in AudioSet. However, it cannot directly localize the events because it outputs only a class-token instead of a featuremap. In this paper, we propose a transformer-based model, ST-SED, to detect and localize the sound event. Moreover, we use the ST-SED to process the weakly-labeled data that is sent downstream into the separator.

Universal Source Separation

Universal source separation attempts to employ a single model to separate different types of sources. Currently, the query-based model AQMSP (Lee, Choi, and Lee 2019) and the meta-learning model MetaTasNet (Samuel, Ganeshan, and Naradowsky 2020) can separate up to four sources in the MUSDB18 dataset in the music source separation task. SuDoRM-RF (Tzinis, Wang, and Smaragdis 2020), UniConvTasNet (Kavalerov et al. 2019), the PANN-based separator (Kong et al. 2020b), and MSI-DIS (Lin et al. 2021) extend universal source separation to speech separation, environmental source separation, speech enhancement, and music separation and synthesis tasks. However, most existing models require a separation dataset with clean sources and mixtures to train, and only support a limited number of sources that are seen in the training set. An ideal universal source separator should separate as many sources as possible even if they are unseen or not clearly defined during training. In this paper, based on the architecture from (Kong et al. 2020b), we move further in this direction by proposing a pipeline that can use audio event samples for training a separator that generalizes to diverse and unseen sources.

Methodology and Pipeline

In this section, we introduce the three components of our source separation model. The sound event detection system refines the weakly-labeled data before it is used by the separation model for training. A query-based source separator is designed to separate audio into different sources. Finally, an embedding processor connects the above two components and allows our model to perform separation on unseen types of audio sources.

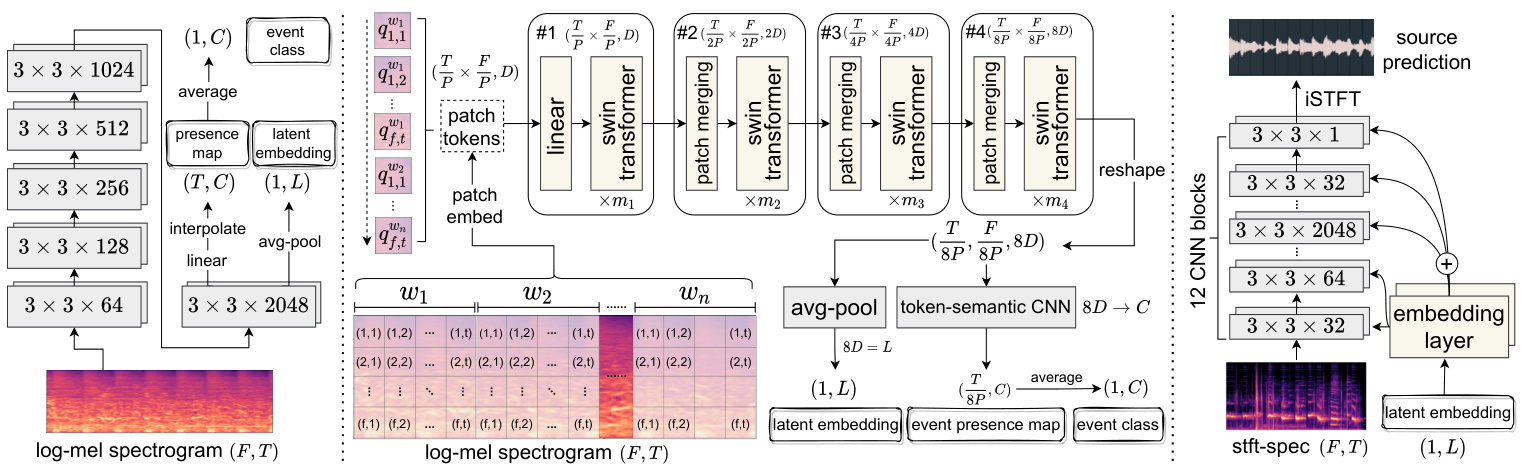

Figure 2: The network architecture of SED systems and the source separator. Left: PANN (Kong et al. 2020a); Middle: our proposed ST-SED; Right: the U-Net-based source separator. All CNNs are named as [2D-kernel size $\times$ channel size].

Sound Event Detection System

In AudioSet, each datum is a 10-sec audio sample with multiple sound events. The only accessible label is which sound events the sample contains (i.e., a multi-hot vector); accurate start and end times for each sound event are not available. This raises the problem of extracting a clip from a sample where one sound event most likely occurs (e.g., a 2-sec audio clip). As shown in the upper part of Figure 1, we use a sound event detection (SED) system to process the weakly-labeled data. This system is designed to localize a 2-sec audio clip within a 10-sec sample, which then serves as an accurate occurrence of the sound event.

In this section, we first briefly introduce an existing SOTA system, Pretrained Audio Neural Networks (PANN) (Figure 2, left), which serves as the main baseline in both the sound event detection and localization experiments. Then we introduce our proposed system, ST-SED (Figure 2, middle), which achieves better performance than PANN.

Pretrained Audio Neural Networks

As shown in the left of Figure 2, PANN contains VGG-like CNNs (Simonyan and Zisserman 2015) to convert an audio mel-spectrogram into a $(T,C)$ featuremap, where $T$ is the number of time frames and $C$ is the number of sound event classes. The model averages the featuremap over the time axis to obtain a final probability vector $(1,C)$ and computes the binary cross-entropy loss between it and the ground-truth label. Since CNNs can capture the information in each time window, the featuremap $(T,C)$ is empirically regarded as a presence probability map of each sound event at each time frame. When determining the latent source embedding for the following pipeline, the penultimate layer’s output $(T,L)$ can be averaged over time into a vector $(1,L)$, which serves as the latent source embedding.
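
The clip-level objective described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the PANN implementation: the framewise probability map is a nested list, and the function names `clip_probabilities` and `binary_cross_entropy` are hypothetical.

```python
import math

def clip_probabilities(featuremap):
    """Average a (T, C) framewise probability map over time into a length-C vector."""
    num_frames = len(featuremap)
    num_classes = len(featuremap[0])
    return [sum(frame[c] for frame in featuremap) / num_frames
            for c in range(num_classes)]

def binary_cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross-entropy between clip-level probabilities and a multi-hot label."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)

# Toy example: T = 4 frames, C = 3 classes; the weak label says classes 0 and 2 occur.
featuremap = [
    [0.9, 0.1, 0.2],
    [0.8, 0.1, 0.6],
    [0.1, 0.2, 0.9],
    [0.2, 0.0, 0.7],
]
weak_label = [1, 0, 1]
clip_probs = clip_probabilities(featuremap)
loss = binary_cross_entropy(clip_probs, weak_label)
```

Because the loss only sees the time-averaged vector, the framewise map is never supervised directly; its use as a presence map is the empirical observation noted in the text.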

Swin Token-Semantic Transformer for SED

The transformer structure (Vaswani et al. 2017) and the token-semantic module (Gao et al. 2021) have been widely used in image classification and segmentation tasks, where they achieve better performance. In this paper, we expect to bring similar improvements to the sound event detection and audio tagging tasks, which will in turn benefit the separation task. As mentioned in the related work, the audio spectrogram transformer (AST) cannot be applied to audio tagging. Therefore, we build on the swin-transformer (Liu et al. 2021) to propose a swin token-semantic transformer for sound event detection (ST-SED). As shown in the middle of Figure 2, a mel-spectrogram is cut into patch tokens with a patch-embed CNN, and the tokens are sent into the transformer in order. We make the time and frequency lengths of each patch equal, i.e., $P\times P$. Further, to better capture the relationship between frequency bins of the same time frame, we first split the mel-spectrogram into windows $w_{1},w_{2},...,w_{n}$ and then split each window into patches. The order of tokens $Q$ follows time $\rightarrow$ frequency $\rightarrow$ window as:

where $t=\frac{T}{P}$, $f=\frac{F}{P}$, $n$ is the number of time windows, and $q_{i,j}^{w_{k}}$ denotes the patch in the position shown in Figure 2. The patch tokens pass through several network groups, each of which contains several transformer-encoder blocks. Between every two groups, we apply a patch-merge layer that reduces the number of tokens in order to construct a hierarchical representation. Each transformer-encoder block is a swin-transformer block with the shifted window attention module (Liu et al. 2021), a modified self-attention module that improves training efficiency. As illustrated in Figure 2, after 4 network groups the patch tokens are reduced by a factor of 8 along each axis, from $(\frac{T}{P}\times\frac{F}{P},D)$ to $(\frac{T}{8P}\times\frac{F}{8P},8D)$.
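
The shape bookkeeping of the hierarchy can be checked with a short sketch. It assumes that each patch-merge layer halves both token axes and doubles the channel size, as in the swin-transformer, which is consistent with the overall factor of 8 after three merges. The values $T=1024$, $F=64$, and $P=4$ follow the preprocessing settings reported in the Experiment section; $D=96$ is an assumption chosen so that $8D=768$. The function name `hierarchical_shapes` is hypothetical.

```python
def hierarchical_shapes(T, F, P, D, num_groups=4):
    """Return (time_tokens, freq_tokens, channels) after each network group.

    Assumption: one patch-merge layer sits between consecutive groups and
    halves both token axes while doubling the channel size.
    """
    t, f, d = T // P, F // P, D
    shapes = [(t, f, d)]
    for _ in range(num_groups - 1):
        t, f, d = t // 2, f // 2, d * 2
        shapes.append((t, f, d))
    return shapes

shapes = hierarchical_shapes(T=1024, F=64, P=4, D=96)
for group_index, shape in enumerate(shapes, start=1):
    print(f"group {group_index}: {shape}")
```

Under these assumptions the last entry equals $(\frac{T}{8P},\frac{F}{8P},8D)$, matching the reduction stated in the text.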

We reshape the final block’s output to $(\frac{T}{8P},\frac{F}{8P},8D)$. Then, we apply a token-semantic 2D-CNN (Gao et al. 2021) with kernel size $(3,\frac{F}{8P})$ and padding size $(1,0)$ to integrate all frequency bins while mapping the channel size $8D$ to the number of sound event classes $C$. The output $(\frac{T}{8P},C)$ is regarded as a featuremap over time frames at a reduced resolution. Finally, we average the featuremap into the final vector $(1,C)$ and compute the binary cross-entropy loss with the ground-truth label. Unlike traditional visual transformers and AST, our proposed ST-SED does not use the class-token but the averaged final vector from the token-semantic layer to indicate the sound event. This makes the localization of sound events available in the output. In practice, we can use the featuremap $(\frac{T}{8P},C)$ to localize sound events. Moreover, if we set $8D=L$, the averaged vector $(1,L)$ of the featuremap $(\frac{T}{8P},L)$ can be used as the latent source embedding, in line with PANN.
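
To see why this kernel and padding keep the time axis and collapse the frequency axis, the standard convolution output-size formula can be applied to each axis. The sketch below assumes a stride of 1, which the text does not state; the function names are hypothetical, and the example sizes (32 time tokens, 2 frequency tokens, 527 classes) are illustrative.

```python
def conv_output_length(size, kernel, padding, stride=1):
    """Standard output length of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def token_semantic_output_shape(time_tokens, freq_tokens, num_classes):
    """Shape after a conv with kernel (3, freq_tokens) and padding (1, 0)."""
    t_out = conv_output_length(time_tokens, kernel=3, padding=1)
    f_out = conv_output_length(freq_tokens, kernel=freq_tokens, padding=0)
    return (t_out, f_out, num_classes)

out_shape = token_semantic_output_shape(time_tokens=32, freq_tokens=2, num_classes=527)
```

The time length is unchanged and the frequency length becomes 1, so squeezing that axis leaves a $(\frac{T}{8P},C)$ featuremap.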

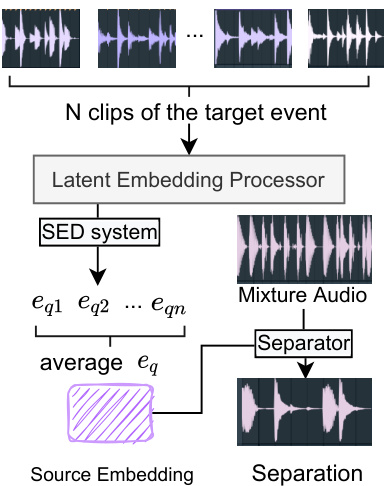

Figure 3: The mechanism to separate an audio into any given source. We collect $N$ clean clips of the target event. Then we take the average of latent source embeddings as the query embedding $e_{q}$ . The separator receives the embedding then performs the separation on the given audio.

Query-based Source Separator

With an SED system, we can localize the most likely occurrence of a given sound event in an audio sample. As shown in Figure 1, suppose that we want to localize the sound event $s_{1}$ in the sample $x_{1}$ and another event $s_{2}$ in $x_{2}$. We feed $x_{1},x_{2}$ into the SED system to obtain two featuremaps $m_{1},m_{2}$. From $m_{1},m_{2}$ we find the time frames $t_{1},t_{2}$ with the maximum probability for $s_{1},s_{2}$, respectively. Finally, we obtain two 2-sec clips $c_{1},c_{2}$ as the most likely occurrences of $s_{1},s_{2}$ by taking $t_{1},t_{2}$ as the center frames of the two clips, respectively.
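
The peak-centered clip selection can be sketched as follows. This is an illustration under stated assumptions: the featuremap has one row per 0.01-sec frame (a hop of 320 samples at 32 kHz, as in the preprocessing settings reported in the Experiment section), so a 2-sec clip spans 200 frames, and a window that would run past the sample boundary is shifted back inside. The text does not specify the boundary handling, and the names `localize_clip` and `frames_to_samples` are hypothetical.

```python
def localize_clip(featuremap, class_index, clip_frames=200):
    """Return (start, end) frame indices of a clip centered on the peak frame.

    featuremap is a (T, C) nested list of presence probabilities, with
    T >= clip_frames.
    """
    num_frames = len(featuremap)
    peak = max(range(num_frames), key=lambda t: featuremap[t][class_index])
    start = peak - clip_frames // 2
    # Assumption: keep the window inside the sample when the peak is near an edge.
    start = max(0, min(start, num_frames - clip_frames))
    return start, start + clip_frames

def frames_to_samples(start, end, hop_size=320):
    """Convert frame indices to waveform sample indices."""
    return start * hop_size, end * hop_size

# Toy featuremap: 1000 frames, 2 classes, one clear peak per class.
featuremap = [[0.0, 0.0] for _ in range(1000)]
featuremap[30][0] = 0.9   # event 0 peaks near the start of the sample
featuremap[500][1] = 0.8  # event 1 peaks in the middle

clip_a = localize_clip(featuremap, class_index=0)
clip_b = localize_clip(featuremap, class_index=1)
```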

Subsequently, we feed the two clips $c_{1},c_{2}$ back into the SED system to obtain two source embeddings $e_{1},e_{2}$. Each latent source embedding $(1,L)$ is incorporated into the source separation model to specify which source needs to be separated. The incorporation mechanism is described in detail in the following paragraphs.

After we collect $c_{1},c_{2},e_{1},e_{2}$, we mix the two clips as $c=c_{1}+c_{2}$ with energy normalization. Then we send the two training triplets $(c,c_{1},e_{1})$ and $(c,c_{2},e_{2})$ into the separator $f$, respectively. We let the separator learn the following regression:

$$f(c,e_{j})\mapsto c_{j},\quad j\in\{1,2\}$$
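
The construction of the two training triplets can be sketched as below. The text does not specify how the energy normalization is carried out; this sketch assumes the second clip is rescaled to the mean energy of the first before summing, and that the rescaled clip becomes the regression target. All function names are hypothetical.

```python
import math

def energy(clip):
    """Mean squared amplitude of a waveform given as a list of floats."""
    return sum(x * x for x in clip) / len(clip)

def mix_with_energy_normalization(c1, c2, eps=1e-10):
    """Scale c2 to the energy of c1, then sum. Returns (mixture, c1, scaled c2)."""
    ratio = math.sqrt(energy(c1) / max(energy(c2), eps))
    c2_scaled = [ratio * x for x in c2]
    mixture = [a + b for a, b in zip(c1, c2_scaled)]
    return mixture, c1, c2_scaled

def make_triplets(c1, c2, e1, e2):
    """Build the two (mixture, target, query embedding) training triplets."""
    mixture, target1, target2 = mix_with_energy_normalization(c1, c2)
    return [(mixture, target1, e1), (mixture, target2, e2)]

# Toy clips and embeddings (real clips would be 2-sec waveforms).
c1 = [0.5, -0.5, 0.5, -0.5]
c2 = [0.1, 0.1, -0.1, -0.1]
triplets = make_triplets(c1, c2, e1=[1.0, 0.0], e2=[0.0, 1.0])
```

Both triplets share the same mixture, so the only signal telling the separator which source to extract is the query embedding.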

As shown in the right of Figure 2, we build our source separator on U-Net (Ronneberger, Fischer, and Brox 2015), which contains a stack of downsampling and upsampling CNNs. The mixture clip $c$ is converted into a spectrogram by the Short-time Fourier Transform (STFT). In each CNN block, the latent source embedding $e_{j}$ is passed through two embedding layers that produce two featuremaps, which are added to the audio featuremaps before they pass through the next block. The network therefore learns the relationship between the source embedding and the mixture, and adjusts its weights to adapt to the separation of different sources. The output spectrogram of the final CNN block is converted into the separated waveform $c^{\prime}$ by the inverse STFT (iSTFT). Suppose that we have $n$ training triplets $\{(c^{1},c_{j}^{1},e_{j}^{1}),(c^{2},c_{j}^{2},e_{j}^{2}),\ldots,(c^{n},c_{j}^{n},e_{j}^{n})\}$. We apply the Mean Absolute Error (MAE) to compute the loss between the separated waveforms $C^{\prime}=\{c^{1\prime},c^{2\prime},\ldots,c^{n\prime}\}$ and the target source clips $C_{j}=\{c_{j}^{1},c_{j}^{2},\ldots,c_{j}^{n}\}$:

$$\mathrm{MAE}(C_{j},C^{\prime})=\frac{1}{n}\sum_{i=1}^{n}\left|c_{j}^{i}-c^{i\prime}\right|$$
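
A waveform-domain MAE over a batch can be written in a few lines. This sketch assumes all clips have the same length, so that averaging over every sample in the batch equals the per-clip mean absolute difference averaged over the $n$ triplets; the function name `mean_absolute_error` is hypothetical.

```python
def mean_absolute_error(separated, targets):
    """MAE between separated and target waveforms, averaged over all samples.

    Both arguments are lists of equal-length waveforms (lists of floats).
    """
    total = 0.0
    count = 0
    for estimate, reference in zip(separated, targets):
        for a, b in zip(estimate, reference):
            total += abs(a - b)
            count += 1
    return total / count

# Toy batch of n = 2 two-sample waveforms.
separated = [[0.0, 1.0], [2.0, 2.0]]
targets = [[0.0, 0.0], [1.0, 3.0]]
loss = mean_absolute_error(separated, targets)
```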

Combining these two components together, we could utilize more datasets (i.e. containing sufficient audio samples but without separation data) in the source separation task. Indeed, it also indicates that we no longer require clean sources and mixtures for the source separation task (Kong et al. 2020b, 2021) if we succeed in using these datasets to achieve a good performance.

Zero-shot Learning via Latent Source Embeddings

The third component, the embedding processor, serves as a communicator between the SED system and the source separator. As shown in Figure 1, during the training, the function of the latent source embedding processor is to obtain the latent source embedding $e$ of given clips $c$ from the SED system, and send the embedding into the separator. And in the inference stage, we enable the processor to utilize this model to separate more sources that are unseen or undefined in the training set.

Formally, suppose that we need to separate an audio $x_{q}$ according to a query source $s_{q}$. In order to get the latent source embedding $e_{q}$, we first need to collect $N$ clean clips of this source $\{c_{q1},c_{q2},\ldots,c_{qN}\}$. Then we feed them into the SED system to obtain the latent embeddings $\{e_{q1},e_{q2},\ldots,e_{qN}\}$. The query embedding $e_{q}$ is obtained by taking their average:

$$e_{q}=\frac{1}{N}\sum_{i=1}^{N}e_{qi}$$

Then, we use $e_{q}$ as the query for the source $s_{q}$ and separate $x_{q}$ into the target track $f(x_{q},e_{q})$ . A visualization of this process is depicted in Figure 3.
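
The query mechanism can be sketched as a small wrapper around two callables. Here `sed_embed` and `separator` are hypothetical stand-ins for the trained SED system and the trained separator $f$; the sketch only illustrates averaging the $N$ clip embeddings into $e_{q}$ and passing it to the separator along with the mixture.

```python
def query_embedding(embeddings):
    """Average N latent source embeddings (each a list of L floats) into e_q."""
    n = len(embeddings)
    dim = len(embeddings[0])
    return [sum(e[d] for e in embeddings) / n for d in range(dim)]

def separate_with_query(separator, sed_embed, mixture, clean_clips):
    """Embed the clean clips of the query source, average them, and separate."""
    e_q = query_embedding([sed_embed(clip) for clip in clean_clips])
    return separator(mixture, e_q)

# Stand-in models for illustration only (not the trained networks).
def toy_sed_embed(clip):
    return [sum(clip), float(len(clip))]

def toy_separator(mixture, e_q):
    return {"mixture": mixture, "query": e_q}

result = separate_with_query(
    toy_separator, toy_sed_embed,
    mixture=[0.1, 0.2, 0.3],
    clean_clips=[[1.0, 1.0], [2.0, 2.0]],
)
```

Since the separator only consumes an embedding, a query for a class absent from training can be formed in the same way, which is what enables the zero-shot use described in this section.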

The 527 classes of AudioSet range from ambient natural sounds to human activity sounds. Most of them are not clean sources, as they contain other background and event sounds. After training our model on AudioSet, we find that it achieves good performance on separating unseen sources. Following (Wang et al. 2019), we consider this a Class-Transductive Instance-Inductive (CTII) setting of zero-shot learning, as we train the separation model on certain types of sources and use unseen queries to let the model separate unseen sources.

Table 1: The mAP results on the AudioSet evaluation set.

| Model | mAP |
|---|---|
| AudioSet Baseline (2017) | 0.314 |
| DeepRes. (2019) | 0.392 |
| PANN (2020a) | 0.434 |
| PSLA (2021b) | 0.443 |
| AST (single) w/o pretrain (2021a) | 0.368 |
| AST (single) (2021a) | 0.459 |
| 768-d ST-SED | 0.467 |
| 768-d ST-SED w/o pretrain | 0.458 |
| 2048-d ST-SED w/o pretrain | 0.459 |

Experiment

There are two experimental stages for us to train a zero-shot audio source separator. First, we need to train a SED system as the first component. Then, we train an audio source separator as the second component based on the processed data from the SED system. In the following subsections, we will introduce the experiments in these two stages.

Sound Event Detection

Dataset and Training Details

We choose AudioSet to train our sound event detection system ST-SED. It is a large-scale collection of over 2 million 10-sec audio samples labeled with sound events from a set of 527 labels. Following the same training pipeline as (Gong, Chung, and Glass 2021a), we use AudioSet’s full-train set (2M samples) for training the ST-SED model and its evaluation set (22K samples) for evaluation. To further evaluate the localization performance, we use the DESED test set (Serizel et al. 2020), which contains 692 10-sec audio samples with strong labels (time boundaries) of 2765 events in total. All labels in DESED are a subset (10 classes) of AudioSet’s sound event classes, so we can directly map AudioSet’s classes to DESED’s classes. There is no overlap between AudioSet’s full-train set and the DESED test set, and there is no need to use the DESED training set because AudioSet’s full-train set contains more training data.

For the pre-processing of audio, all samples are converted to mono (1 channel) at a $32\mathrm{kHz}$ sampling rate. To compute STFTs and mel-spectrograms, we use a window size of 1024 and a hop size of 320. As a result, each frame is $\frac{320}{32000}=0.01$ sec. The number of mel-frequency bins is $F=64$. Each 10-sec sample yields 1000 time frames, and we pad them with 24 zero-frames ($T=1024$). The shape of the output featuremap is (1024, 527) ($C=527$). The patch size is $4\times4$ and the time window is 256 frames in length. We propose two settings for the ST-SED with a latent dimension size $L$ of 768 or 2048. We adopt the 768-d model to make use of the swin-transformer ImageNet-pretrain