BURT: BERT-inspired Universal Representation from Learning Meaningful Segment

author

Yian Li, Hai Zhao

Abstract

Although pre-trained contextualized language models such as BERT achieve strong performance on various downstream tasks, current language representations still focus on linguistic objectives at a single granularity, which may not be applicable when multiple levels of linguistic units are involved at the same time. This work therefore introduces and explores universal representation learning, i.e., embedding different levels of linguistic units in a uniform vector space. We present a universal representation model, BURT (BERT-inspired Universal Representation from learning meaningful segmenT), to encode different levels of linguistic units into the same vector space. Specifically, we extract and mask meaningful segments based on point-wise mutual information (PMI) to incorporate objectives of different granularities into the pre-training stage. We conduct experiments on datasets for English and Chinese including the GLUE and CLUE benchmarks, where our model surpasses its baselines and alternatives on a wide range of downstream tasks. We present our approach of constructing analogy datasets in terms of words, phrases and sentences and experiment with multiple representation models to examine geometric properties of the learned vector space through a task-independent evaluation. Finally, we verify the effectiveness of our unified pre-training strategy in two real-world text matching scenarios. As a result, our model significantly outperforms existing information retrieval (IR) methods and yields universal representations that can be directly applied to retrieval-based question-answering and natural language generation tasks.


Artificial Intelligence, Natural Language Processing, Transformer, Language Representation.

1 INTRODUCTION

Representations learned by deep neural models have attracted a lot of attention in Natural Language Processing (NLP). However, previous language representation learning methods such as word2vec [1], LASER [2] and USE [3] focus on either words or sentences. Later pre-trained contextualized language representations such as ELMo [4], GPT [5], BERT [6] and XLNet [7] can seemingly handle input sequences of different sizes, but all of them still produce sentence-level, context-specific representations for each word, which leads to unsatisfactory performance in real-world situations. Although the more recent BERT-wwm-ext [8], StructBERT [9] and SpanBERT [10] perform masked language modeling (MLM) at a higher linguistic level, the masked segments (whole words, trigrams, spans) either follow a pre-defined distribution or focus on a specific granularity. Such sampling strategies ignore important semantic and syntactic information in a sequence, resulting in a large number of meaningless segments.

A universal representation across different levels of linguistic units, however, offers great convenience when free text from any level of the language hierarchy has to be handled in a unified way. It is well known that embedding representations for a certain linguistic unit (e.g., words) enable linguistically meaningful arithmetic among vectors, also known as word analogy. For example, vector (“King”) - vector (“Man”) + vector (“Woman”) results in vector (“Queen”). A universal representation may therefore generalize such analogy features, or meaningful arithmetic operations, to free text with all language levels involved together. For example, Eat an onion : Vegetable :: Eat a pear : Fruit. In fact, manipulating embeddings in the vector space reveals syntactic and semantic relations between the original sequences, and this feature is useful in real applications. For example, “London is the capital of England.” can be formalized as v(capital)+v(England)∼v(London). Given two documents, one of which contains “England” and “capital” while the other contains “London”, we consider the two documents relevant. Such features can be generalized to higher language levels for phrase and sentence embeddings.

In this paper, we explore the regularities of representations including words, phrases and sentences in the same vector space. To this end, we introduce a universal analogy task derived from Google’s word analogy dataset. To solve such a task, we present BURT, a pre-trained model that aims at learning universal representations for sequences of various lengths. Our model follows the architecture of BERT but differs from its original masking and training scheme. Specifically, we propose to efficiently extract and prune meaningful segments (n-grams) from an unlabeled corpus with little human supervision, and then use them to modify the masking and training objective of BERT. The n-gram pruning algorithm is based on point-wise mutual information (PMI) and automatically captures different levels of language information, which is critical to improving the model's ability to handle multiple levels of linguistic objects in a unified way, i.e., to embed sequences of different lengths in the same vector space.

Overall, our pre-trained models improve the performance of our baselines in both English and Chinese. In English, BURT-base reaches a gain of one percent on average over Google BERT-base. In Chinese, BURT-wwm-ext obtains 74.48% on the WSC test set, a 3.45-point absolute improvement over BERT-wwm-ext, and exceeds the baselines by 0.2 to 0.6 accuracy points on five other CLUE tasks: TNEWS, IFLYTEK, CSL, ChID and CMRC 2018. Extensive experimental results on our universal analogy task demonstrate that BERT is able to map sequences of variable lengths into a shared vector space where similar sequences are close to each other. Meanwhile, addition and subtraction of embeddings reflect semantic and syntactic connections between sequences. Moreover, BURT can be easily applied to real-world applications such as Frequently Asked Questions (FAQ) and Natural Language Generation (NLG) tasks, where it encodes words, sentences and paragraphs into the same embedding space and directly retrieves sequences that are semantically similar to the given query based on cosine similarity. Together, these experimental results demonstrate that our well-trained model yields universal representations that can adapt to various tasks and applications.


2 BACKGROUND

2.1 WORD AND SENTENCE EMBEDDINGS

Representing words as real-valued dense vectors is a core technique of deep learning in NLP. Word embedding models [1, 11, 12] map words into a vector space where similar words have similar latent representations. ELMo [4] attempts to learn context-dependent word representations through a two-layer bi-directional LSTM network. In recent years, more and more researchers have focused on learning sentence representations. The Skip-Thought model [13] is designed to predict the surrounding sentences for a given sentence. [14] improve the model structure by replacing the RNN decoder with a classifier. InferSent [15] is trained on the Stanford Natural Language Inference (SNLI) dataset [16] in a supervised manner. [17, 3] employ multi-task training and report considerable improvements on downstream tasks. LASER [2] is a BiLSTM encoder designed to learn multilingual sentence embeddings. Nevertheless, most of the previous work focuses on a specific granularity. In this work we extend the training goal to a unified level and enable the model to leverage information at different granularities, including, but not limited to, words, phrases and sentences.


2.2 PRE-TRAINED LANGUAGE MODELS

Most recently, the pre-trained language model BERT [6] has shown powerful performance on various downstream tasks. BERT is trained on a large amount of unlabeled data with two training objectives: Masked Language Model (MLM) for modeling deep bidirectional representations, and Next Sentence Prediction (NSP) for understanding the relationship between two sentences. [18] introduce Sentence-Order Prediction (SOP) as a substitute for NSP. [9] develop a sentence structural objective by combining the random sampling strategy of NSP and continuous sampling as in SOP. However, [19] and [10] use single contiguous sequences of at most 512 tokens for pre-training and show that removing the NSP objective improves model performance. Besides, BERT-wwm [8], StructBERT [9] and SpanBERT [10] perform MLM on higher linguistic levels, augmenting the MLM objective by masking whole words, trigrams or spans, respectively. In contrast, we concentrate on enhancing the masking and training procedures from a broader and more general perspective.


2.3 ANALYSIS ON EMBEDDINGS


Previous exploration of vector regularities mainly studies word embeddings [1, 20, 21]. After the introduction of sentence encoders and Transformer models [22], more work has been done to investigate sentence-level embeddings. Usually, performance on downstream tasks is taken as the measure of a model's ability to represent sentences [15, 3, 23]. Some research proposes probing tasks to understand certain aspects of sentence embeddings [24, 25, 26]. Specifically, [27, 28, 29] look into BERT embeddings and reveal their internal working mechanisms. Besides, [30, 31] explore the regularities in sentence embeddings. Nevertheless, little work analyzes words, phrases and sentences in the same vector space. In this paper, we work on embeddings for sequences of various lengths obtained by different models in a task-independent manner.

2.4 FAQ APPLICATIONS


The goal of a Frequently Asked Question (FAQ) task is to retrieve the most relevant QA pairs from a pre-defined dataset given a query. Previous works focus on feature-based methods [32, 33, 34]. Recently, Transformer-based representation models have made great progress in measuring query-Question or query-Answer similarities. [35] analyze Transformer models and propose a neural architecture to solve the FAQ task. [36] present an FAQ retrieval system that combines the characteristics of BERT and rule-based methods. In this work, we evaluate the performance of well-trained universal representation models on the FAQ task.

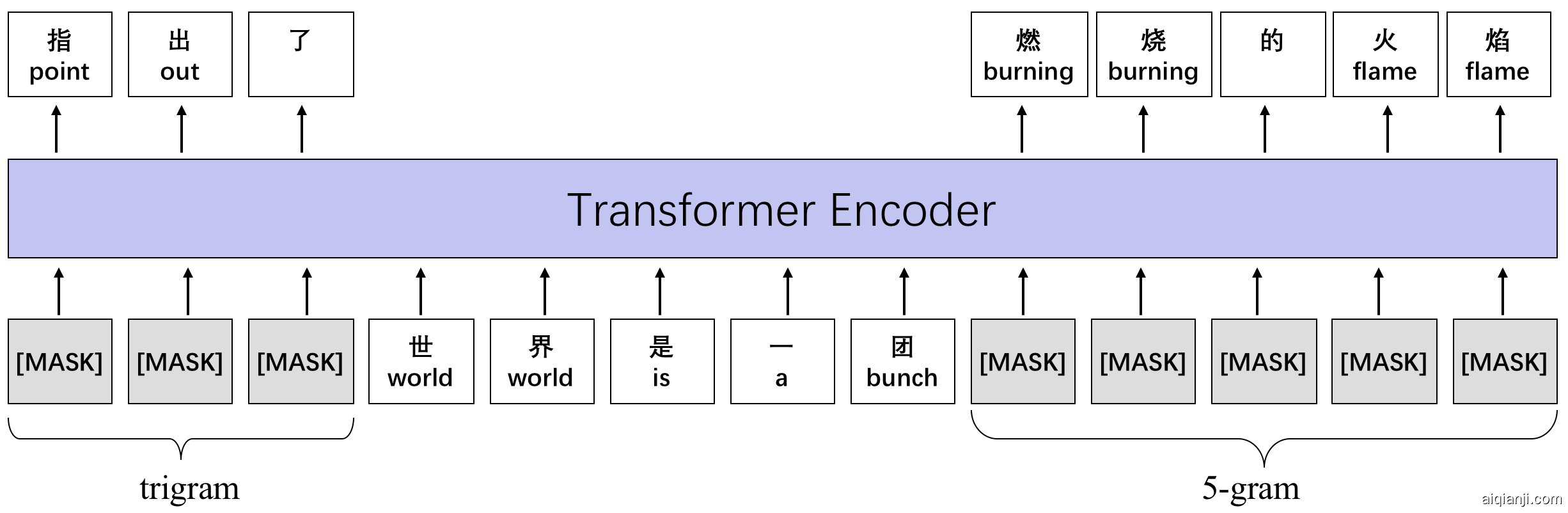

Fig. 1: An illustration of n-gram pre-training.


Fig. 2: An example from the Chinese Wikipedia corpus. n-grams of different lengths are marked with dashed boxes in different colors in the upper part of the figure. During training, we randomly mask n-grams and only the longest n-gram is masked if there are multiple matches, as shown in the lower part of the figure.


3 METHODOLOGY

BURT follows the Transformer encoder [22] architecture, where the input sequence is first split into subword tokens and a contextualized representation is learned for each token. We only perform MLM training on single sequences, as suggested in [10]. The basic idea is to mask some of the tokens from the input and force the model to recover them from the context. Here we propose a unified masking method and training objective that consider linguistic units of different granularities.

Specifically, we apply a pruning mechanism to collect meaningful n-grams from the corpus and then perform n-gram masking and prediction. Our model differs from the original BERT and other BERT-like models in several ways. First, instead of the token-level MLM of BERT, we incorporate different levels of linguistic units into the training objective in a comprehensive manner. Second, unlike SpanBERT and StructBERT, which sample random spans or trigrams, our n-gram sampling approach automatically discovers structures within any sequence and is not limited to any granularity.


3.1 N-GRAM PRUNING

In this subsection, we introduce our approach of extracting a large number of meaningful n-grams from the monolingual corpus, which is a critical step of data processing.

First, we scan the corpus and extract all n-grams with lengths up to N using the SRILM toolkit (http://www.speech.sri.com/projects/srilm/download.html) [37]. In order to filter out meaningless n-grams and prevent the vocabulary from becoming too large, we apply pruning by means of point-wise mutual information (PMI) [38]. To be specific, mutual information I(x,y) describes the association between tokens x and y by comparing the probability of observing x and y together with the probabilities of observing x and y independently. Higher mutual information indicates a stronger association between the two tokens.


$$ I(x, y) = {\rm log}\frac{P(x, y)}{P(x) P(y)} $$

In practice, $ P(x) $ and $ P(y) $ denote the probabilities of $ x $ and $ y $, respectively, and $ P(x, y) $ represents the joint probability of observing $ x $ followed by $ y $. This alleviates the bias towards high-frequency words and allows tokens that are rarely used individually but often appear together to have higher scores. In our application, an $ n $-gram denoted as $ w = (x_1, \ldots, x_{L_w}) $, where $ L_w $ is the number of tokens in $ w $, may contain more than two words. Therefore, we present an extended PMI formula, shown below:


$$ PMI(w) = \frac{1}{L_w}\left({\rm log}P(w) - \sum\limits_{k=1}^{L_w}{\rm log}P(x_k) \right) $$

where the probabilities are estimated by counting the number of observations of each token and $ n $-gram in the corpus, and normalizing by the size of the corpus. $ \frac{1}{L_w} $ is an additional normalization factor which avoids extremely low scores for longer $ n $-grams. Finally, $ n $-grams with PMI scores below the chosen threshold are filtered out, resulting in a vocabulary of meaningful $ n $-grams.
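The pruning step can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the paper extracts n-grams with SRILM, whereas this sketch counts them directly, and the function names (`ngram_counts`, `pmi`, `prune`), the toy corpus and the threshold value are all hypothetical choices made for illustration.

```python
import math
from collections import Counter


def ngram_counts(sentences, max_n):
    """Count every n-gram of length 1..max_n in a list of tokenized sentences."""
    counts = Counter()
    for tokens in sentences:
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                counts[tuple(tokens[i:i + n])] += 1
    return counts


def pmi(ngram, counts, total_tokens):
    """Length-normalized PMI: (log P(w) - sum_k log P(x_k)) / L_w.

    Probabilities are raw counts divided by the corpus size in tokens.
    """
    log_p_w = math.log(counts[ngram] / total_tokens)
    log_p_tokens = sum(math.log(counts[(tok,)] / total_tokens) for tok in ngram)
    return (log_p_w - log_p_tokens) / len(ngram)


def prune(counts, total_tokens, threshold):
    """Keep multi-token n-grams whose PMI score is not below the threshold."""
    vocab = {}
    for w in counts:
        if len(w) > 1:
            score = pmi(w, counts, total_tokens)
            if score >= threshold:
                vocab[w] = score
    return vocab


# Toy corpus (hypothetical); a real run would use a large monolingual corpus.
corpus = [
    "new york is a big city".split(),
    "i love new york".split(),
    "a big dog is a big friend".split(),
]
total = sum(len(s) for s in corpus)
counts = ngram_counts(corpus, max_n=3)
vocab = prune(counts, total, threshold=1.0)
```

With this toy corpus and threshold, "new york" is kept while "a big" is filtered out: both bigrams always co-occur, but "a" and "big" are individually more frequent, which lowers the score.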


3.2 N-GRAM MASKING

For a given input $ S = \{x_1, x_2, \dots, x_L\} $, where L is the number of tokens in S, special tokens [CLS] and [SEP] are added at the beginning and end of the sequence, respectively. Before feeding the training data into the Transformer blocks, we identify all the n-grams in the sequence using the aforementioned n-gram vocabulary. An example is shown in Figure 2, where n-grams overlap with each other, which indicates the multi-granular inner structure of the given sequence. In order to make better use of higher-level linguistic information, the longest n-gram is retained if multiple matches exist. Compared with other masking strategies, our method has two advantages. First, n-gram extraction and matching can be done efficiently in an unsupervised manner without introducing random noise. Second, by utilizing n-grams of different lengths, we generalize the masking and training objective of BERT to a unified level where linguistic units of different granularities are integrated.
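A minimal Python sketch of the matching step follows. The text only states that the longest n-gram is retained when several match; the greedy left-to-right scan used here, the function name `match_ngrams` and the end-exclusive span convention are assumptions made for illustration.

```python
def match_ngrams(tokens, vocab, max_n):
    """Split a token sequence into non-overlapping (start, end) spans.

    At each position the longest n-gram found in `vocab` wins; a token that
    starts no known n-gram becomes a span of length 1 (the n = 1 case).
    Spans are end-exclusive.
    """
    spans = []
    i = 0
    while i < len(tokens):
        matched = False
        # Try the longest possible n-gram first, down to length 2.
        for n in range(min(max_n, len(tokens) - i), 1, -1):
            if tuple(tokens[i:i + n]) in vocab:
                spans.append((i, i + n))
                i += n
                matched = True
                break
        if not matched:
            spans.append((i, i + 1))
            i += 1
    return spans


# Hypothetical n-gram vocabulary with overlapping entries.
vocab = {("new", "york"), ("york", "city"), ("new", "york", "city")}
spans = match_ngrams("i love new york city".split(), vocab, max_n=3)
```

Here the trigram covers both bigrams, so only the trigram span is kept and the two bigram matches are discarded.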

Following BERT, we mask 15% of all tokens in each sequence. The data processing algorithm uniformly samples one n-gram at a time until the maximum number of masked tokens is reached. 80% of the time, we replace the entire n-gram with [MASK] tokens; 10% of the time, it is replaced with random tokens; and 10% of the time, we keep it unchanged. The original token-level masking is retained and considered a special case of n-gram masking where n=1. We employ dynamic masking as mentioned in [19], which means that the masking patterns for the same sequence are likely to differ across epochs.
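The sampling and replacement rules can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the authors' data pipeline: the function name `mask_ngrams`, the end-exclusive spans, the handling of a span that would exceed the 15% budget (it is skipped) and the omission of [CLS]/[SEP] are all choices made here.

```python
import random


def mask_ngrams(tokens, spans, token_vocab, mask_rate=0.15, rng=None):
    """Mask whole n-gram spans until roughly mask_rate of the tokens is covered.

    Returns the corrupted sequence and the list of spans to be predicted.
    """
    rng = rng or random.Random()
    budget = max(1, round(len(tokens) * mask_rate))
    output = list(tokens)
    targets = []
    candidates = list(spans)
    rng.shuffle(candidates)  # uniform sampling of spans without replacement
    masked = 0
    for start, end in candidates:
        if masked >= budget:
            break
        length = end - start
        if masked + length > budget:
            continue  # assumption: skip spans that would exceed the budget
        roll = rng.random()
        if roll < 0.8:
            output[start:end] = ["[MASK]"] * length       # 80%: mask the n-gram
        elif roll < 0.9:
            output[start:end] = [rng.choice(token_vocab)  # 10%: random tokens
                                 for _ in range(length)]
        # remaining 10%: keep the original tokens unchanged
        targets.append((start, end))
        masked += length
    return output, targets


tokens = [f"t{i}" for i in range(20)]
spans = [(0, 2), (2, 3), (3, 6)] + [(i, i + 1) for i in range(6, 20)]
corrupted, targets = mask_ngrams(tokens, spans, token_vocab=["a", "b"],
                                 rng=random.Random(0))
```

Calling the function afresh in every epoch, with an unseeded or differently seeded generator, gives the dynamic masking behavior described above.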


3.3 TRAINING OBJECTIVE

As depicted in Figure 1, the Transformer encoder generates a fixed-length contextualized representation at each input position and the model only predicts the masked tokens. Ideally, a universal representation model is able to capture features for multiple levels of linguistic units. Therefore, we extend the MLM training objective to a more general situation, where the model is trained to predict n-grams rather than subwords.


$$ \max_{\theta}\sum_{w}{\rm log} P(w | \mathbf{\hat x}; \theta) = \max_{\theta}\sum_{(i, j)}{\rm log} P(x_i, \dots, x_j | \mathbf{\hat x}; \theta) $$

where $ w $ is a masked $ n $-gram and $ \mathbf{\hat x} $ is a corrupted version of the input sequence. $ (i, j) $ represents the absolute start and end positions of $ w $.
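A minimal Python sketch of how this objective might be evaluated for one sequence is given below. It assumes, as in standard masked language modeling, that the tokens of a masked n-gram are predicted independently given the corrupted input, so that the joint log-probability becomes a sum of per-token log-probabilities; the text does not state this factorization explicitly. The function names, the nested-list logits format and the end-exclusive spans are illustrative choices.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over one position's vocabulary logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_z for v in logits]


def ngram_log_likelihood(logits, token_ids, spans):
    """Sum of log P(x_k | corrupted input) over all positions of all masked spans.

    logits:    one list of vocabulary scores per input position
    token_ids: the original (uncorrupted) token id at each position
    spans:     end-exclusive (start, end) pairs; the paper's (i, j) are inclusive
    """
    total = 0.0
    for start, end in spans:
        for k in range(start, end):
            total += log_softmax(logits[k])[token_ids[k]]
    return total


# Toy example: 4 positions, vocabulary of size 4, uniform predictions.
logits = [[0.0, 0.0, 0.0, 0.0] for _ in range(4)]
token_ids = [2, 0, 3, 1]
ll = ngram_log_likelihood(logits, token_ids, spans=[(1, 3)])
```

Training would maximize this quantity (equivalently, minimize its negative) summed over all masked n-grams in a batch.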


| GLUE | Length | #Train | #Dev | #Test | #L |
|---|---|---|---|---|---|
| CoLA | Short | 8.5k | 1k | 1k | 2 |
| SST-2 | Short | 67k | 872 | 1.8k | 2 |
| MNLI | Short | 393k | 20k | 20k | 3 |
| QNLI | Short | 105k | 5.5k | 5.5k | 2 |
| RTE | Short | 2.5k | 277 | 3k | 2 |
| MRPC | Short | 3.7k | 408 | 1.7k | 2 |
| QQP | Short | 364k | 40k | 391k | 2 |
| STS-B | Short | 5.8k | 1.5k | 1.4k | - |

| CLUE | Length | #Train | #Dev | #Test | #L |
|---|---|---|---|---|---|
| TNEWS | Short | 53k | 10k | 10k | 15 |
| IFLYTEK | Long | 12k | 2.6k | 2.6k | 119 |
| WSC | Short | 1.2k | 304 | 290 | 2 |
| AFQMC | Short-Short | 34k | 4.3k | 3.9k | 2 |
| CSL | Long-Short | 20k | 3k | 3k | 2 |
| OCNLI | Short-Short | 50k | 3k | 3k | 3 |
| CMRC18 | Long | 10k | 1k | 3.2k | - |
| ChID | Long | 85k | 3.2k | 3.2k | - |
| C3 | Short | 12k | 3.8k | 3.9k | - |

TABLE I: Statistics of datasets from the GLUE and CLUE benchmarks. #Train, #Dev and #Test are the sizes of the training, development and test sets, respectively. #L is the number of labels. Sequences are simply divided into two categories according to their length: “Long” and “Short”.

4 TASK SETUP


To evaluate the model's ability to handle different linguistic units, we apply our model to downstream tasks from the GLUE and CLUE benchmarks. Moreover, we construct a universal analogy task based on Google's word analogy dataset to explore the regularity of universal representations. Finally, we present an insurance FAQ task and a retrieval-based language generation task, where the key is to embed sequences of different lengths in the same vector space and retrieve sequences whose meaning is similar to that of the given query.



4.1 GENERAL LANGUAGE UNDERSTANDING

We evaluate both English and Chinese models on a wide range of natural language understanding tasks. Statistics of the datasets are listed in Table I. Besides the diversity of task types, we also find that different datasets concentrate on sequences of different lengths, which satisfies our need to examine the model's ability to represent linguistic units of multiple granularities.

GLUE

The General Language Understanding Evaluation (GLUE) benchmark [39] is a collection of tasks that is widely used to evaluate the performance of English language models. We divide eight NLU tasks from the GLUE benchmark into three main categories.

Single-Sentence Classification. The Corpus of Linguistic Acceptability (CoLA) [40] task is to determine whether a sentence is grammatically acceptable or not. The Stanford Sentiment Treebank (SST-2) [41] is a sentiment classification task that requires the model to predict whether the sentiment of a sentence is positive or negative. In both datasets, each example is a sequence of words annotated with a label.

Natural Language Inference. Multi-Genre Natural Language Inference (MNLI) [42], QNLI [43], which is derived from the Stanford Question Answering Dataset, and Recognizing Textual Entailment (RTE) [rte] are natural language inference tasks, where a pair of sentences is given and the model is trained to identify the relationship between the two sentences from entailment, contradiction, and neutral.

Semantic Similarity. Semantic similarity tasks identify whether two sentences are equivalent or measure the degree of semantic similarity of two sentences according to their representations. The Microsoft Paraphrase corpus (MRPC) [44] and the Quora Question Pairs (QQP) dataset are paraphrase datasets, where each example consists of two sentences and a label of “1” indicating they are paraphrases or “0” otherwise. The goal of the Semantic Textual Similarity benchmark (STS-B) [45] is to predict a continuous score from 1 to 5 for each pair as the similarity of the two sentences.


CLUE

The Chinese General Language Understanding Evaluation (ChineseGLUE or CLUE) benchmark [46] is a Chinese version of the GLUE benchmark for language understanding. The nine CLUE tasks we use can likewise be classified into three groups.

Single Sentence Tasks. We utilize three single-sentence classification tasks including TouTiao Text Classification for News Titles (TNEWS), IFLYTEK [47] and the Chinese Winograd Schema Challenge (WSC) dataset. Examples from TNEWS and IFLYTEK are short and long sequences, respectively, and the goal is to predict the category that the given single sequence belongs to. WSC is a coreference resolution task where the model is required to decide whether two spans refer to the same entity in the original sequence.

Sentence Pair Tasks. The Ant Financial Question Matching Corpus (AFQMC), Chinese Scientific Literature (CSL) dataset and Original Chinese Natural Language Inference (OCNLI) [48] are three pairwise textual classification tasks. AFQMC contains sentence pairs and binary labels, and the model is asked to examine whether two sentences are semantically similar. Each example in CSL involves a text and several keywords. The model needs to determine whether these keywords are true labels of the text. OCNLI is a natural language inference task following the same collection procedures as MNLI.

Machine Reading Comprehension Tasks. CMRC 2018 [49], ChID [50], and C3 [51] are span-extraction based, cloze style and free-form multiple-choice machine reading comprehension datasets, respectively. Answers to the questions in CMRC 2018 are spans extracted from the given passages. ChID is a collection of passages with blanks and corresponding candidates for the model to decide the most suitable option. C3 is similar to RACE and DREAM, where the model has to choose the correct answer from several candidate options based on a text and a question.


| A : B :: C | Candidates |
|---|---|
| boy:girl::brother | daughter, **sister**, wife, father, son |
| bad:worse::big | **bigger**, larger, smaller, biggest, better |
| Beijing:China::Paris | **France**, Europe, Germany, Belgium, London |
| Chile:Chilean::China | Japanese, **Chinese**, Russian, Korean, Ukrainian |

TABLE II: Examples from our word analogy dataset. The correct answers are in bold.

4.2 UNIVERSAL ANALOGY

As a new task, universal representation has to be evaluated on a multi-granular analogy dataset. The purpose of proposing a task-independent dataset is to avoid judging the quality of the learned vectors and interpreting the model on the basis of a specific problem or situation. Since embeddings are essentially dense vectors, it is natural to apply mathematical operations to them. In this subsection, we introduce the procedure of constructing analogy datasets at different levels based on Google's word analogy dataset.


Word-level analogy

Recall that a word analogy task involves two pairs of words that share the same type of relationship, denoted as $ A $ : $ B $ :: $ C $ : $ D $. The goal is to solve questions like "$ A $ is to $ B $ as $ C $ is to ?", that is, to retrieve the last word from the vocabulary given the first three words. The objective can be formulated as maximizing the cosine similarity between the target word embedding and the linear combination of the given vectors:

$$ d^{*} = \arg\max_{d} \cos(d, c - a + b) $$

where $ a $, $ b $, $ c $, $ d $ represent embeddings of the corresponding words and are all normalized to unit length. To facilitate comparison between models with different vocabularies, we construct a closed-vocabulary analogy task based on Google's word analogy dataset through negative sampling. Concretely, for each question, we use GloVe to rank every word in the vocabulary, and the top 5 results are taken as candidate words. If GloVe fails to retrieve the correct answer, we manually add it to make sure it is included in the candidates. During evaluation, the model is expected to select the correct answer from the 5 candidate words. Examples are listed in Table II.
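The closed-vocabulary evaluation can be sketched in Python as follows. The sketch takes $ c - a + b $ as the linear combination of the given vectors, which is an assumption consistent with the King/Man/Woman example in the introduction. The function names and the four-dimensional toy embeddings are hand-written for illustration and do not come from GloVe, BERT or BURT.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(u, v):
    """Cosine similarity between two vectors."""
    u, v = normalize(u), normalize(v)
    return sum(a * b for a, b in zip(u, v))


def solve_analogy(a, b, c, candidates):
    """Return the candidate word d that maximizes cos(d, c - a + b)."""
    a, b, c = normalize(a), normalize(b), normalize(c)
    target = [ci - ai + bi for ai, bi, ci in zip(a, b, c)]
    return max(candidates, key=lambda word: cosine(candidates[word], target))


# Toy embeddings with dimensions (male, female, sibling, child); hypothetical.
boy, girl, brother = [1, 0, 0, 0], [0, 1, 0, 0], [1, 0, 1, 0]
candidates = {
    "daughter": [0, 1, 0, 1],
    "sister": [0, 1, 1, 0],
    "wife": [0, 1, 0, -0.5],
    "father": [1, 0, 0, -1],
    "son": [1, 0, 0, 1],
}
answer = solve_analogy(boy, girl, brother, candidates)
```

Accuracy on the analogy dataset is then the fraction of questions for which the returned candidate equals the gold answer.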


Phrase/Sentence-level analogy

To investigate the arithmetic properties of vectors for higher levels of linguistic units, we present phrase and sentence analogy tasks based on the proposed word analogy dataset. We only consider a subset of the original analogy task because we find that for some categories, such as "Australia" : "Australian", the same template phrase/sentence cannot be applied to both words. Statistics are shown in Table III.


| Dataset | #p | #q | #c | #l (p/s) |
|---|---|---|---|---|
| capital-common | 23 | 506 | 5 | 6.0/12.0 |
| capital-world | 116 | 4524 | 5 | 6.0/12.0 |
| city-state | 67 | 2467 | 5 | 6.0/12.0 |
| male-female | 23 | 506 | 5 | 4.1/10.1 |
| present-participle | 33 | 1056 | 2 | 4.8/8.8 |
| positive-comparative | 37 | 1322 | 2 | 3.4/6.1 |
| positive-negative | 29 | 812 | 2 | 4.4/9.2 |
| All | 328 | 11193 | - | 5.4/10.7 |

TABLE III: Statistics of our analogy datasets. #p and #q are the number of pairs and questions for each category. #c is the number of candidates for each dataset. #l (p/s) is the average sequence length in phrase/sentence-level analogy datasets.

Fig. 3: Examples of Question-Answer pairs from our insurance FAQ dataset. The correct match to the query is highlighted.

Semantic Semantic analogies can be divided into four subsets: “capital-common”, “capital-world”, “city-state” and “male-female”. The first two can be merged into a larger dataset, “capital-country”, which contains pairs of countries and their capital cities; the third involves states and their cities; the last contains pairs with gender relations. Considering GloVe's poor performance on word-level “country-currency” questions (< 32%), we discard this subset in phrase- and sentence-level analogies. We then put words into contexts so that the resulting phrases and sentences also have linear relationships. For example, based on the relationship Athens : Greece :: Baghdad : Iraq, we select phrases and sentences that contain the word “Athens” from the English Wikipedia Corpus (https://dumps.wikimedia.org/enwiki/latest), such as “He was hired by the university of Athens as a professor of physics.”, and create examples: “hired by ... Athens” : “hired by ... Greece” :: “hired by ... Baghdad” : “hired by ... Iraq”. However, we found that such a question is identical to a word-level analogy for BOW methods like averaging GloVe vectors, because they treat word embeddings independently, regardless of context and word order. To avoid lexical overlap between sequences, we replace certain words and phrases with their synonyms and paraphrases, e.g., “hired by ... Athens” : “employed by ... Greece” :: “employed by ... Baghdad” : “hired by ... Iraq”. Sentences selected from the corpus usually contain a lot of redundant information. To ensure consistency, we manually modify some words during the construction of templates; this procedure does not affect the relationship between sentences.
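The observation about BOW methods can be illustrated with a small sketch. Everything here is assumed for illustration: the templates `"hired by {word}"` and `"employed by {word}"` are simplified, hypothetical stand-ins for the corpus-derived templates, and the 3-dimensional vectors are toy values rather than GloVe embeddings. With a shared template, the averaged phrase vectors differ only by the word-level offset divided by the phrase length, so the phrase analogy carries no information beyond the word analogy; once the context words are paraphrased, that reduction no longer holds.

```python
import math

def fill_templates(quad, templates):
    """Insert each word of an analogy quadruple into its template."""
    return [t.format(word=w) for t, w in zip(templates, quad)]

def bow_embed(text, emb):
    """Bag-of-words embedding: the average of the token vectors."""
    vecs = [emb[tok] for tok in text.lower().split()]
    return [sum(col) / len(vecs) for col in zip(*vecs)]

def diff(u, v):
    """Element-wise difference u - v."""
    return [x - y for x, y in zip(u, v)]

def close(u, v, tol=1e-9):
    """Element-wise approximate equality."""
    return all(math.isclose(x, y, abs_tol=tol) for x, y in zip(u, v))

# Toy word vectors (hypothetical values).
emb = {
    "hired":    [0.2, 0.1, 0.0],
    "employed": [0.3, 0.0, 0.1],
    "by":       [0.0, 0.1, 0.1],
    "athens":   [1.0, 0.0, 1.0],
    "greece":   [0.0, 1.0, 1.0],
    "baghdad":  [1.0, 0.0, -1.0],
    "iraq":     [0.0, 1.0, -1.0],
}
quad = ("athens", "greece", "baghdad", "iraq")

# Word-level offset, scaled by the phrase length (3 tokens).
word_offset = diff(emb["greece"], emb["athens"])
scaled = [x / 3 for x in word_offset]

# Case 1: the same template for all four slots.
same = fill_templates(quad, ["hired by {word}"] * 4)
shared_offset = diff(bow_embed(same[1], emb), bow_embed(same[0], emb))
reduces_to_word_level = close(shared_offset, scaled)

# Case 2: paraphrased templates, so the context words no longer cancel.
para = fill_templates(
    quad,
    ["hired by {word}", "employed by {word}",
     "employed by {word}", "hired by {word}"],
)
para_offset = diff(bow_embed(para[1], emb), bow_embed(para[0], emb))
still_reduces = close(para_offset, scaled)

print(reduces_to_word_level, still_reduces)
```

In the second case the offset also contains the difference between the vectors of “employed” and “hired”, which is why a model has to capture the meaning of the whole sequence rather than rely on the slot word alone.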

| Category | Topics | Count |
|---|---|---|
| Daily Scenarios | Traveling, Recipe, Skin care, Beauty makeup, Pets | 22 |
| Sport & Health | Outdoor sports, Athletics, Weight loss, Medical treatment | 15 |
| Reviews | Movies, Music, Poetry, Books | 16 |
| Persons | Entrepreneurs, Historical/Public figures, Writers, Directors, Actors | 17 |
| General | Festivals, Hot topics, TV shows | 6 |
| Specialized | Management, Marketing, Commerce, Workplace skills | 17 |
| Others | Relationships, Technology, Education, Literature | 14 |
| All | - | 107 |

TABLE IV: Details of the templates.

Syntactic We consider three typical syntactic analogies: Tense, Comparative and Negation, corresponding to three subsets: “present-participle”, “positive-comparative” and “positive-negative”, where the model needs to distinguish the correct answer from “past tense”, “superlative” and “positive”, respectively. For example, given the phrases “Pigs are bright” : “Pigs are brighter than goats” :: “The train is slow”, the model needs to assign a higher similarity score to the sentence that contains “slower” than to the one that contains “slowest”. Similarly, we add synonyms and synonymous phrases for each question to evaluate the model's ability to learn context-aware embeddings rather than interpreting each word in the question independently. For instance, “pleasant” ≈ “not unplea