Xception: Deep Learning with Depthwise Separable Convolutions


Abstract

We present an interpretation of Inception modules in convolutional neural networks as an intermediate step between regular convolution and the depthwise separable convolution operation (a depthwise convolution followed by a pointwise convolution). In this light, a depthwise separable convolution can be understood as an Inception module with a maximally large number of towers. This observation leads us to propose a novel deep convolutional neural network architecture inspired by Inception, where Inception modules have been replaced with depthwise separable convolutions. We show that this architecture, dubbed Xception, slightly outperforms Inception V3 on the ImageNet dataset (which Inception V3 was designed for), and significantly outperforms Inception V3 on a larger image classification dataset comprising 350 million images and 17,000 classes. Since the Xception architecture has the same number of parameters as Inception V3, the performance gains are not due to increased capacity but rather to a more efficient use of model parameters.

Introduction

Convolutional neural networks have emerged as the master algorithm in computer vision in recent years, and developing recipes for designing them has been a subject of considerable attention. The history of convolutional neural network design started with LeNet-style models , which were simple stacks of convolutions for feature extraction and max-pooling operations for spatial sub-sampling. In 2012, these ideas were refined into the AlexNet architecture , where convolution operations were being repeated multiple times in-between max-pooling operations, allowing the network to learn richer features at every spatial scale. What followed was a trend to make this style of network increasingly deeper, mostly driven by the yearly ILSVRC competition; first with Zeiler and Fergus in 2013 and then with the VGG architecture in 2014 . At this point a new style of network emerged, the Inception architecture, introduced by Szegedy et al. in 2014 as GoogLeNet (Inception V1), later refined as Inception V2 , Inception V3 , and most recently Inception-ResNet . Inception itself was inspired by the earlier Network-In-Network architecture . Since its first introduction, Inception has been one of the best performing family of models on the ImageNet dataset , as well as internal datasets in use at Google, in particular JFT . The fundamental building block of Inception-style models is the Inception module, of which several different versions exist. In figure inception_module we show the canonical form of an Inception module, as found in the Inception V3 architecture. An Inception model can be understood as a stack of such modules. This is a departure from earlier VGG-style networks which were stacks of simple convolution layers. While Inception modules are conceptually similar to convolutions (they are convolutional feature extractors), they empirically appear to be capable of learning richer representations with less parameters. 
How do they work, and how do they differ from regular convolutions? What design strategies come after Inception?

简介

近年来,卷积神经网络已成为计算机视觉中的主要算法,开发用于设计它们的配方已成为相当受关注的主题。卷积神经网络设计的历史始于LeNet样式的模型[ 10 ],该模型是用于特征提取的卷积的简单堆栈和用于空间子采样的最大池化操作。在2012年,这些想法被提炼为AlexNet架构[ 9 ],在最大池操作之间多次重复进行卷积操作,从而使网络可以在每个空间尺度上学习更丰富的功能。随后出现的趋势是使这种类型的网络越来越深,这主要是由年度ILSVRC竞争推动的。首先在2013年加入Zeiler和Fergus [ 25 ] ,然后在2014年加入VGG架构[ 18 ]。

此时,出现了一种新的网络样式,即由Szegedy等人介绍的Inception体系结构。在2014年[ 20 ]改名为GoogLeNet(Inception V1),后来又更名为Inception V2 [ 7 ],Inception V3 [ 21 ],以及最近的Inception-ResNet [ 19 ]。Inception本身的灵感来自早期的Network-In-Network体系结构[ 11 ]。自从首次推出以来,Inception一直是ImageNet数据集[ 14 ]以及Google使用的内部数据集,特别是JFT [[5 ]。

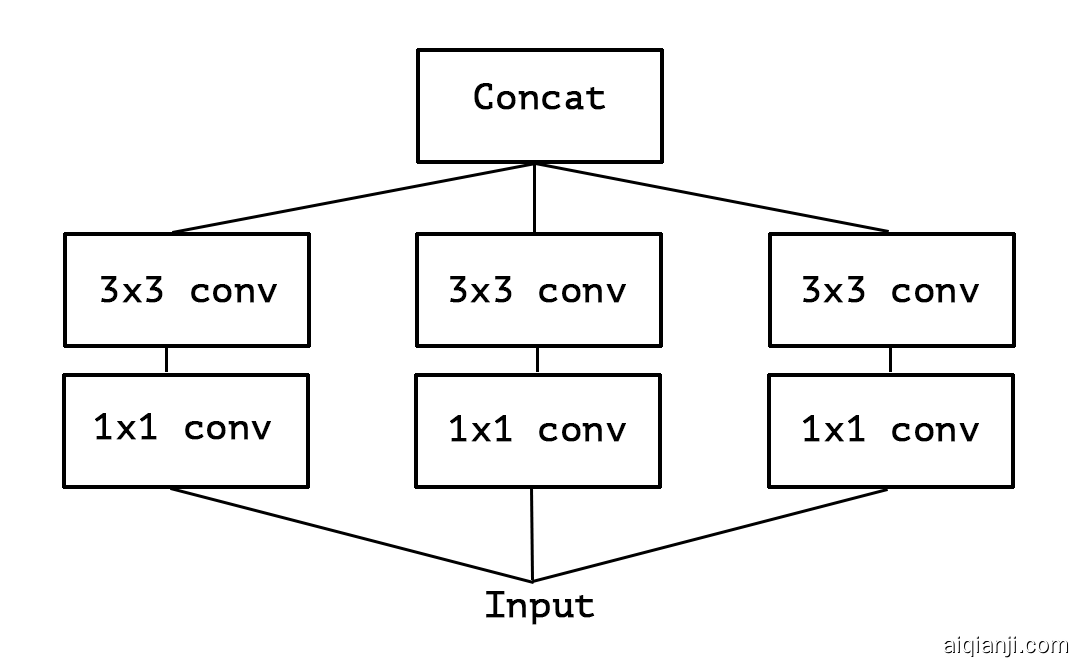

Inception样式模型的基本构建模块是Inception模块,其中存在几种不同的版本。在图1中,我们显示了Inception V3体系结构中的Inception模块的规范形式。初始模型可以理解为此类模块的堆栈。这与早期的VGG样式网络不同,后者是简单的卷积层的堆栈。

尽管Inception模块在概念上与卷积相似(它们是卷积特征提取器),但从经验上看,它们似乎能够以较少的参数学习更丰富的表示形式。它们是如何工作的,它们与常规卷积有何不同?Inception之后会有哪些设计策略?

The Inception hypothesis

A convolution layer attempts to learn filters in a 3D space, with 2 spatial dimensions (width and height) and a channel dimension; thus a single convolution kernel is tasked with simultaneously mapping cross-channel correlations and spatial correlations.

The idea behind the Inception module is to make this process easier and more efficient by explicitly factoring it into a series of operations that independently look at cross-channel correlations and at spatial correlations. More precisely, the typical Inception module first looks at cross-channel correlations via a set of 1x1 convolutions, mapping the input data into 3 or 4 separate spaces that are smaller than the original input space, and then maps all correlations in these smaller 3D spaces via regular 3x3 or 5x5 convolutions. This is illustrated in figure 1. In effect, the fundamental hypothesis behind Inception is that cross-channel correlations and spatial correlations are sufficiently decoupled that it is preferable not to map them jointly. (A variant of the process is to independently look at width-wise correlations and height-wise correlations. This is implemented by some of the modules found in Inception V3, which alternate 7x1 and 1x7 convolutions. The use of such spatially separable convolutions has a long history in image processing and has been used in some convolutional neural network implementations since at least 2012, possibly earlier.)

Consider a simplified version of an Inception module that only uses one size of convolution (e.g. 3x3) and does not include an average pooling tower (figure 2). This Inception module can be reformulated as a large 1x1 convolution followed by spatial convolutions that operate on non-overlapping segments of the output channels (figure 3). This observation naturally raises the question: what is the effect of the number of segments in the partition (and their size)? Would it be reasonable to make a much stronger hypothesis than the Inception hypothesis, and assume that cross-channel correlations and spatial correlations can be mapped completely separately?
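The reformulation of figure 3 rests on a simple identity: running several 1x1 convolutions in parallel towers and concatenating their outputs gives the same result as one large 1x1 convolution whose weight rows are the towers' rows stacked together. The following is a minimal sketch of that identity at a single spatial location, in plain Python. The function names and the toy weights are illustrative assumptions, not taken from the paper.

```python
def pointwise(pixel, weights):
    """Apply a 1x1 convolution at one spatial location.

    pixel is a list of C_in channel values; weights is [C_out][C_in].
    """
    return [sum(w * v for w, v in zip(row, pixel)) for row in weights]


def towers_then_concat(pixel, tower_weights):
    """Run one 1x1 convolution per tower, then concatenate the channel outputs."""
    out = []
    for weights in tower_weights:
        out.extend(pointwise(pixel, weights))
    return out


def one_large_pointwise(pixel, tower_weights):
    """Stack all tower weight rows and apply a single large 1x1 convolution."""
    stacked = [row for weights in tower_weights for row in weights]
    return pointwise(pixel, stacked)


# Toy example: 3 input channels, three towers with 2, 1 and 2 output channels.
pixel = [1.0, 2.0, 3.0]
tower_weights = [
    [[1, 0, 0], [0, 1, 0]],
    [[1, 1, 1]],
    [[0, 0, 2], [1, -1, 0]],
]

separate = towers_then_concat(pixel, tower_weights)
merged = one_large_pointwise(pixel, tower_weights)
print(separate == merged)
```

Each tower's spatial convolution then only needs to read its own contiguous slice of the merged output, which is what "non-overlapping segments of the output channels" refers to.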

Figure 1: A canonical Inception module (Inception V3).

Figure 2: A simplified Inception module.

Figure 3: A strictly equivalent reformulation of the simplified Inception module.

Figure 4: An “extreme” version of our Inception module, with one spatial convolution per output channel of the 1x1 convolution.

The continuum between convolutions and separable convolutions

An “extreme” version of an Inception module, based on this stronger hypothesis, would first use a 1x1 convolution to map cross-channel correlations, and would then separately map the spatial correlations of every output channel. This is shown in figure 4. We remark that this extreme form of an Inception module is almost identical to a depthwise separable convolution, an operation that has been used in neural network design as early as 2014 [15] and has become more popular since its inclusion in the TensorFlow framework [1] in 2016.

A depthwise separable convolution, commonly called “separable convolution” in deep learning frameworks such as TensorFlow and Keras, consists in a depthwise convolution, i.e. a spatial convolution performed independently over each channel of an input, followed by a pointwise convolution, i.e. a 1x1 convolution, projecting the channels output by the depthwise convolution onto a new channel space. This is not to be confused with a spatially separable convolution, which is also commonly called “separable convolution” in the image processing community.
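To make the definition concrete, here is a minimal sketch of a depthwise separable convolution written with nested Python lists. It assumes "valid" padding, stride 1, a depth multiplier of 1 and no biases; the function names are illustrative and this is not how TensorFlow or Keras implement the operation.

```python
def depthwise_conv2d(x, kernels):
    """Spatial convolution applied independently to each channel.

    x is [C][H][W]; kernels is [C][kh][kw] (one kernel per input channel).
    Uses cross-correlation with 'valid' padding and stride 1.
    """
    out = []
    for channel, kernel in zip(x, kernels):
        kh, kw = len(kernel), len(kernel[0])
        h_out = len(channel) - kh + 1
        w_out = len(channel[0]) - kw + 1
        out.append([
            [
                sum(channel[i + di][j + dj] * kernel[di][dj]
                    for di in range(kh) for dj in range(kw))
                for j in range(w_out)
            ]
            for i in range(h_out)
        ])
    return out


def pointwise_conv2d(x, weights):
    """1x1 convolution: mixes channels at every location. weights is [C_out][C_in]."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(wk[c] * x[c][i][j] for c in range(len(x))) for j in range(w)]
         for i in range(h)]
        for wk in weights
    ]


def separable_conv2d(x, depthwise_kernels, pointwise_weights):
    """Depthwise convolution followed by a pointwise convolution."""
    return pointwise_conv2d(depthwise_conv2d(x, depthwise_kernels), pointwise_weights)


# Two 3x3 input channels, 2x2 depthwise kernels, two output channels.
x = [[[1, 1, 1]] * 3, [[2, 2, 2]] * 3]
depthwise_kernels = [[[1, 1], [1, 1]], [[1, 1], [1, 1]]]
pointwise_weights = [[1, 1], [1, -1]]
y = separable_conv2d(x, depthwise_kernels, pointwise_weights)
print(y)
```

Note that the spatial step never mixes channels and the 1x1 step never looks at neighbouring pixels, which is exactly the decoupling the text describes.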

Two minor differences between an “extreme” version of an Inception module and a depthwise separable convolution would be:

- The order of the operations: depthwise separable convolutions as usually implemented (e.g. in TensorFlow) perform first channel-wise spatial convolution and then perform 1x1 convolution, whereas Inception performs the 1x1 convolution first.

- The presence or absence of a non-linearity after the first operation. In Inception, both operations are followed by a ReLU non-linearity, whereas depthwise separable convolutions are usually implemented without non-linearities.

We argue that the first difference is unimportant, in particular because these operations are meant to be used in a stacked setting. The second difference might matter, and we investigate it in the experimental section (in particular see figure 10).

We also note that other intermediate formulations of Inception modules, lying between regular Inception modules and depthwise separable convolutions, are possible: in effect, there is a discrete spectrum between regular convolutions and depthwise separable convolutions, parametrized by the number of independent channel-space segments used for performing spatial convolutions. A regular convolution (preceded by a 1x1 convolution), at one extreme of this spectrum, corresponds to the single-segment case; a depthwise separable convolution corresponds to the other extreme where there is one segment per channel; Inception modules lie in between, dividing a few hundred channels into 3 or 4 segments. The properties of such intermediate modules appear not to have been explored yet.
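One way to get a feel for this spectrum is to count weights. The sketch below assumes a module made of a 1x1 convolution followed by a 3x3 spatial convolution split into a given number of equal, independent channel segments, and it ignores biases. The function name and the 256-channel example are illustrative assumptions, not figures from the paper.

```python
def segmented_module_params(channels_in, channels_mid, channels_out, segments, kernel=3):
    """Weight count of a 1x1 convolution followed by a segmented spatial convolution.

    The spatial convolution is split into `segments` independent groups, each
    mapping channels_mid / segments inputs to channels_out / segments outputs.
    Biases are ignored.
    """
    if channels_mid % segments or channels_out % segments:
        raise ValueError("segments must divide both channel counts")
    pointwise = channels_in * channels_mid
    spatial = (segments * kernel * kernel
               * (channels_mid // segments) * (channels_out // segments))
    return pointwise + spatial


# segments=1: regular convolution preceded by a 1x1 convolution
# segments=4: an Inception-like split into a few towers
# segments=256: one segment per channel, i.e. the depthwise separable extreme
for segments in (1, 4, 256):
    print(segments, segmented_module_params(256, 256, 256, segments))
```

With these toy sizes the counts are 655360, 212992 and 67840 weights respectively: the cost of the spatial step shrinks in proportion to the number of segments, while the 1x1 step stays fixed.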

Having made these observations, we suggest that it may be possible to improve upon the Inception family of architectures by replacing Inception modules with depthwise separable convolutions, i.e. by building models that would be stacks of depthwise separable convolutions. This is made practical by the efficient depthwise convolution implementation available in TensorFlow. In what follows, we present a convolutional neural network architecture based on this idea, with a number of parameters similar to that of Inception V3, and we evaluate its performance against Inception V3 on two large-scale image classification tasks.


Prior work

The present work relies heavily on prior efforts in the following areas:

- Convolutional neural networks [10, 9, 25], in particular the VGG-16 architecture [18], which is schematically similar to our proposed architecture in a few respects.

- The Inception architecture family of convolutional neural networks [20, 7, 21, 19], which first demonstrated the advantages of factoring convolutions into multiple branches operating successively on channels and then on space.

- Depthwise separable convolutions, which our proposed architecture is entirely based upon. While the use of spatially separable convolutions in neural networks has a long history, going back to at least 2012 [12] (but likely even earlier), the depthwise version is more recent. Laurent Sifre developed depthwise separable convolutions during an internship at Google Brain in 2013, and used them in AlexNet to obtain small gains in accuracy and large gains in convergence speed, as well as a significant reduction in model size. An overview of his work was first made public in a presentation at ICLR 2014 [23]. Detailed experimental results are reported in Sifre’s thesis, section 6.2 [15]. This initial work on depthwise separable convolutions was inspired by prior research from Sifre and Mallat on transformation-invariant scattering [16, 15]. Later, a depthwise separable convolution was used as the first layer of Inception V1 and Inception V2 [20, 7]. Within Google, Andrew Howard [6] has introduced efficient mobile models called MobileNets using depthwise separable convolutions. Jin et al. in 2014 [8] and Wang et al. in 2016 [24] also did related work aiming at reducing the size and computational cost of convolutional neural networks using separable convolutions. Additionally, our work is only possible due to the inclusion of an efficient implementation of depthwise separable convolutions in the TensorFlow framework [1].

- Residual connections, introduced by He et al. in [4], which our proposed architecture uses extensively.


The Xception architecture

We propose a convolutional neural network architecture based entirely on depthwise separable convolution layers. In effect, we make the following hypothesis: that the mapping of cross-channel correlations and spatial correlations in the feature maps of convolutional neural networks can be entirely decoupled. Because this hypothesis is a stronger version of the hypothesis underlying the Inception architecture, we name our proposed architecture Xception, which stands for “Extreme Inception”.

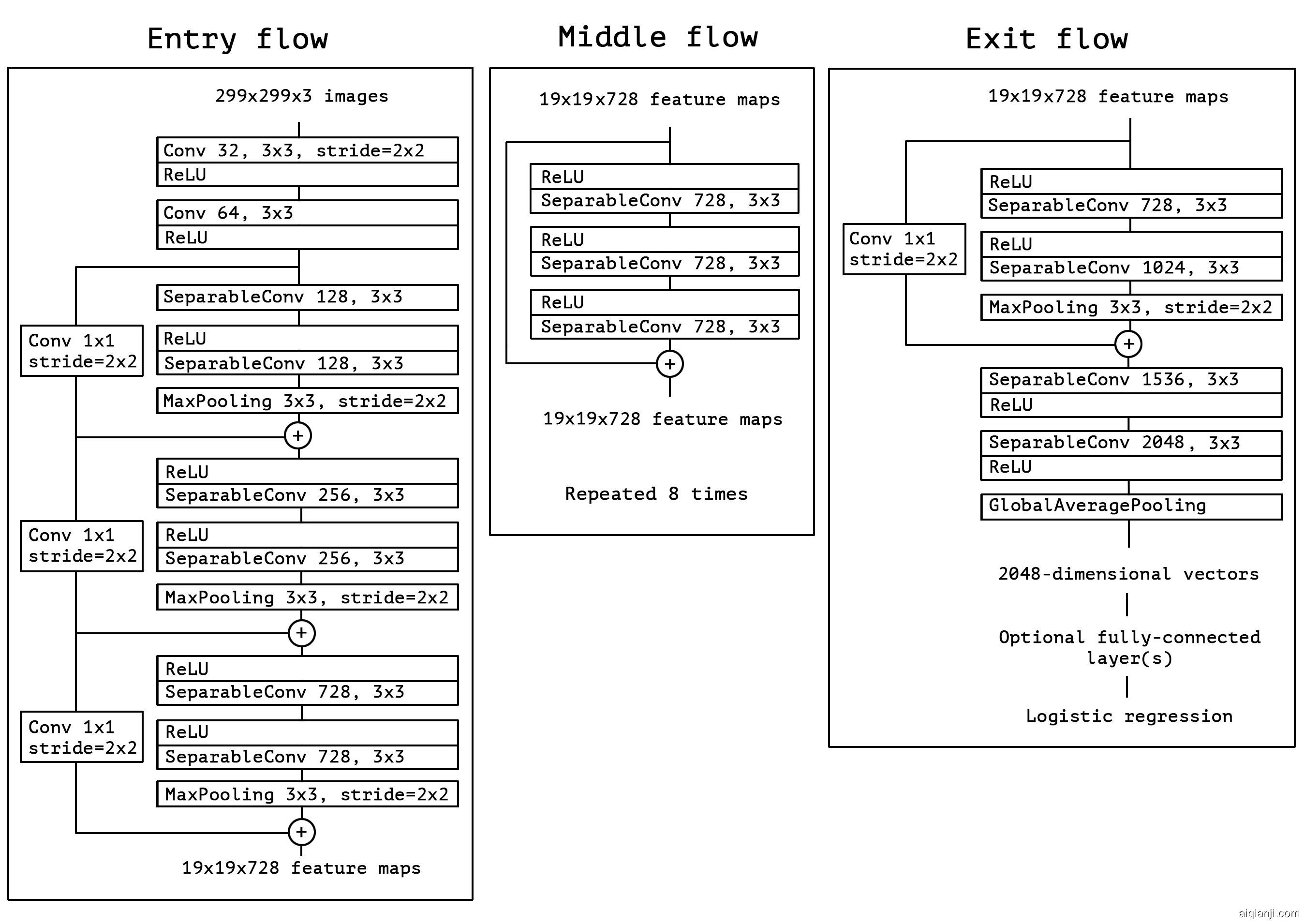

A complete description of the specifications of the network is given in figure 5. The Xception architecture has 36 convolutional layers forming the feature extraction base of the network. In our experimental evaluation we will exclusively investigate image classification and therefore our convolutional base will be followed by a logistic regression layer. Optionally one may insert fully-connected layers before the logistic regression layer, which is explored in the experimental evaluation section (in particular, see figures 7 and 8). The 36 convolutional layers are structured into 14 modules, all of which have linear residual connections around them, except for the first and last modules.

In short, the Xception architecture is a linear stack of depthwise separable convolution layers with residual connections. This makes the architecture very easy to define and modify; it takes only 30 to 40 lines of code using a high-level library such as Keras [2] or TensorFlow-Slim [17], not unlike an architecture such as VGG-16 [18], but rather unlike architectures such as Inception V2 or V3, which are far more complex to define. An open-source implementation of Xception using Keras and TensorFlow is provided as part of the Keras Applications module (https://keras.io/applications/#xception), under the MIT license.
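The layer and module counts quoted above can be checked with a few lines of bookkeeping. The per-module layer counts below are this sketch's reading of figure 5 (entry, middle and exit flows) and should be treated as an assumption; the 1x1 convolutions on the residual shortcuts are not counted.

```python
# Convolution layers per module, grouped by flow (assumed reading of figure 5).
ENTRY_FLOW = [2, 2, 2, 2]   # two regular convolutions, then three modules of two separable convolutions
MIDDLE_FLOW = [3] * 8       # eight repeated modules of three separable convolutions each
EXIT_FLOW = [2, 2]          # two modules of two separable convolutions each

modules = ENTRY_FLOW + MIDDLE_FLOW + EXIT_FLOW

total_modules = len(modules)
total_conv_layers = sum(modules)
# Every module except the first and the last has a residual connection around it.
residual_modules = total_modules - 2

print(total_modules, total_conv_layers, residual_modules)
```

Under that reading the script prints `14 36 12`, matching the 36 convolutional layers and 14 modules stated in the text.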


Figure 5: The Xception architecture: the data first goes through the entry flow, then through the middle flow which is repeated eight times, and finally through the exit flow. Note that all Convolution and SeparableConvolution layers are followed by batch normalization [7] (not included in the diagram). All SeparableConvolution layers use a depth multiplier of 1 (no depth expansion).


Experimental evaluation

We choose to compare Xception to the Inception V3 architecture, due to their similarity of scale: Xception and Inception V3 have nearly the same number of parameters (see the size and speed comparison table), and thus any performance gap could not be attributed to a difference in network capacity. We conduct our comparison on two image classification tasks: one is the well-known 1000-class single-label classification task on the ImageNet dataset [14], and the other is a 17,000-class multi-label classification task on the large-scale JFT dataset.

The JFT dataset

JFT is an internal Google dataset for large-scale image classification, first introduced by Hinton et al. in [5], which comprises over 350 million high-resolution images annotated with labels from a set of 17,000 classes. To evaluate the performance of a model trained on JFT, we use an auxiliary dataset, FastEval14k.

FastEval14k is a dataset of 14,000 images with dense annotations from about 6,000 classes (36.5 labels per image on average). On this dataset we evaluate performance using Mean Average Precision for top 100 predictions (MAP@100), and we weight the contribution of each class to MAP@100 with a score estimating how common (and therefore important) the class is among social media images. This evaluation procedure is meant to capture performance on frequently occurring labels from social media, which is crucial for production models at Google.
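The text does not spell out the exact formula for the class-weighted MAP@100, so the following is only one plausible reading, given as a sketch: each correct prediction within the top k contributes the precision at its rank, scaled by a per-class weight, and the sum is normalized by the total weight of the image's true labels. The function names, the weighting scheme and the toy labels are all assumptions made for illustration.

```python
def weighted_average_precision_at_k(ranked_labels, relevant_labels, class_weights=None, k=100):
    """Average precision over the top-k predictions for one image.

    ranked_labels: predicted labels, best first, without duplicates.
    relevant_labels: set of ground-truth labels for the image.
    class_weights: optional {label: weight}; missing labels default to 1.0.
    """
    class_weights = class_weights or {}
    hits = 0
    weighted_sum = 0.0
    for rank, label in enumerate(ranked_labels[:k], start=1):
        if label in relevant_labels:
            hits += 1
            # Precision at this rank, scaled by the (assumed) class weight.
            weighted_sum += class_weights.get(label, 1.0) * hits / rank
    normalizer = sum(class_weights.get(label, 1.0) for label in relevant_labels)
    return weighted_sum / normalizer if normalizer else 0.0


def mean_average_precision_at_k(predictions, ground_truth, class_weights=None, k=100):
    """Mean of the per-image weighted average precision."""
    scores = [
        weighted_average_precision_at_k(ranked, relevant, class_weights, k)
        for ranked, relevant in zip(predictions, ground_truth)
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Toy example with hypothetical labels and weights.
predictions = [["cat", "car", "dog"]]
ground_truth = [{"cat", "dog"}]
print(mean_average_precision_at_k(predictions, ground_truth, k=3))
print(mean_average_precision_at_k(predictions, ground_truth, {"cat": 2.0, "dog": 1.0}, k=3))
```

In the toy example, giving "cat" a higher weight raises the score because the correctly ranked, more common class counts for more.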

Optimization configuration

A different optimization configuration was used for ImageNet and JFT:

- On ImageNet:
    - Optimizer: SGD
    - Momentum: 0.9
    - Initial learning rate: 0.045
    - Learning rate decay: decay of rate 0.94 every 2 epochs

- On JFT:
    - Optimizer: RMSprop [22]
    - Momentum: 0.9
    - Initial learning rate: 0.001
    - Learning rate decay: decay of rate 0.9 every 3,000,000 samples
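The two decay rules above can be written as small functions. The sketch assumes a staircase schedule (the rate drops in discrete steps rather than continuously), which the text does not state explicitly; the function names are illustrative.

```python
def imagenet_learning_rate(epoch, initial=0.045, decay=0.94, epochs_per_step=2):
    """Learning rate for the ImageNet setup: multiply by 0.94 every 2 epochs."""
    return initial * decay ** (epoch // epochs_per_step)


def jft_learning_rate(samples_seen, initial=0.001, decay=0.9, samples_per_step=3_000_000):
    """Learning rate for the JFT setup: multiply by 0.9 every 3,000,000 samples."""
    return initial * decay ** (samples_seen // samples_per_step)


for epoch in (0, 1, 2, 10):
    print("ImageNet epoch", epoch, imagenet_learning_rate(epoch))

for samples in (0, 3_000_000, 30_000_000):
    print("JFT samples", samples, jft_learning_rate(samples))
```

Expressing the JFT schedule in samples rather than epochs makes it independent of batch size and of the number of passes over the very large dataset.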

For both datasets, the exact same optimization configuration was used for both Xception and Inception V3. Note that this configuration was tuned for best performance with Inception V3; we did not attempt to tune optimization hyperparameters for Xception. Since the networks have different training profiles (figure [6](https://www.aiqianji.com/papers/1610.02357/#S4.F6 "Figure 6 ‣ 4.5.1 Classification performance ‣ 4.5 Comparison with Inception V3 ‣ 4 Experimental evaluation ‣ Xception: Deep Learning with Depthwi