UI-TARS: Pioneering Automated GUI Interaction with Native Agents

Abstract

This paper introduces UI-TARS, a native GUI agent model that perceives only screenshots as input and performs human-like interactions (e.g., keyboard and mouse operations). Prevailing agent frameworks depend on heavily wrapped commercial models (e.g., GPT-4o) with expert-crafted prompts and workflows. UI-TARS, by contrast, is an end-to-end model that outperforms these sophisticated frameworks. Experiments demonstrate its superior performance: UI-TARS achieves state-of-the-art (SOTA) performance on 10+ GUI agent benchmarks evaluating perception, grounding, and GUI task execution (see below). Notably, on the OSWorld benchmark, UI-TARS achieves scores of 24.6 with 50 steps and 22.7 with 15 steps, outperforming Claude's 22.0 and 14.9, respectively. On AndroidWorld, UI-TARS achieves 46.6, surpassing GPT-4o's 34.5.

UI-TARS incorporates several key innovations:
(1) Enhanced Perception, which leverages a large-scale dataset of GUI screenshots for context-aware understanding of UI elements and precise captioning;
(2) Unified Action Modeling, which standardizes actions into a unified space across platforms and achieves precise grounding and interaction through large-scale action traces;
(3) System-2 Reasoning, which incorporates deliberate reasoning into multi-step decision making, involving multiple reasoning patterns such as task decomposition, reflective thinking, and milestone recognition;
(4) Iterative Training with Reflective Online Traces, which addresses the data bottleneck by automatically collecting, filtering, and reflectively refining new interaction traces on hundreds of virtual machines.

Through iterative training and reflection tuning, UI-TARS continuously learns from its mistakes and adapts to unforeseen situations with minimal human intervention. We also analyze the evolution path of GUI agents to guide the further development of this domain. UI-TARS is open-sourced at https://github.com/bytedance/UI-TARS.

Contents

1 Introduction

2 Evolution Path of GUI Agents

3 Core Capabilities of Native Agent Model

4 UI-TARS

5 Experiment

6 Conclusion

A Case Study

B Data Example

1 Introduction

Autonomous agents (Wang et al., 2024b; Xi et al., 2023; Qin et al., 2024) are envisioned to operate with minimal human oversight: they perceive their environment, make decisions, and execute actions to achieve specific goals. Among the many challenges in this domain, enabling agents to interact seamlessly with Graphical User Interfaces (GUIs) has emerged as a critical frontier (Hu et al., 2024; Zhang et al., 2024a; Nguyen et al., 2024; Wang et al., 2024e; Gao et al., 2024). GUI agents are designed to perform tasks in digital environments that rely heavily on graphical elements such as buttons, text boxes, and images. By leveraging advanced perception and reasoning capabilities, these agents hold the potential to revolutionize task automation, enhance accessibility, and streamline workflows across a wide range of applications.

The development of GUI agents has historically relied on hybrid approaches that incorporate textual representations (e.g., HTML structures and accessibility trees) (Liu et al., 2018; Deng et al., 2023; Zhou et al., 2023). These methods have driven significant progress, but they suffer from limitations such as platform-specific inconsistencies, verbosity, and limited scalability (Xu et al., 2024). Text-based methods often require system-level permissions to access underlying system information, such as HTML code. This further limits their applicability and generalizability across diverse environments.

Another critical issue is that many existing GUI systems follow an agent framework paradigm (Zhang et al., 2023; Wang et al., 2024a; Wu et al., 2024a; Zhang et al., 2024b; Wang & Liu, 2024; Xie et al., 2024), in which key functions are modularized across multiple components. These components often rely on specialized vision-language models (VLMs), e.g., GPT-4o (Hurst et al., 2024), for understanding and reasoning (Zhang et al., 2024b). Grounding (Lu et al., 2024b) or memory (Zhang et al., 2023) modules are implemented through additional tools or scripts. This modular architecture facilitates rapid development for tasks in specific domains. However, it relies on handcrafted approaches that depend on expert knowledge, modular components, and task-specific optimizations, which are less scalable and adaptive than end-to-end models. As a result, such frameworks are prone to failure when faced with unfamiliar tasks or dynamically changing environments (Xia et al., 2024).

These challenges have prompted two key shifts towards native GUI agent models.

(1) The transition from text-dependent to pure-vision-based GUI agents (Bavishi et al., 2023; Hong et al., 2024). "Pure-vision" means the model relies exclusively on screenshots of the interface as input, rather than textual descriptions (e.g., HTML). This bypasses the complexities and platform-specific limitations of textual representations and aligns more closely with human cognitive processes.

(2) The evolution from modular agent frameworks to end-to-end agent models (Wu et al., 2024b; Xu et al., 2024; Lin et al., 2024b; Yang et al., 2024a; Anthropic, 2024b). The end-to-end design unifies traditionally modularized components into a single architecture, enabling a smooth flow of information among them.

Philosophically, agent frameworks are design-driven: they require extensive manual engineering and predefined workflows to maintain stability and prevent unexpected situations. Agent models, in contrast, are inherently data-driven, which lets them learn and adapt through large-scale data and iterative feedback (Putta et al., 2024).

Despite their conceptual advantages, today's native GUI agent models often fall short in practical applications, and their real-world impact lags behind the hype. These limitations stem from two primary sources.

(1) The GUI domain itself presents unique challenges that compound the difficulty of developing robust agents.
(1.a) On the perception side, agents must not only recognize but also effectively interpret the high information density of evolving user interfaces.
(1.b) Reasoning and planning mechanisms are equally important for navigating, manipulating, and responding to these interfaces effectively.
(1.c) These mechanisms must also leverage memory, drawing on past interactions and experiences to make informed decisions.
(1.d) Beyond high-level decision-making, agents must also execute precise, low-level actions, such as outputting exact screen coordinates for clicks or drags and entering text into the appropriate fields.

(2) The transition from agent frameworks to agent models introduces a fundamental data bottleneck. Modular frameworks traditionally rely on separate datasets tailored to individual components. These datasets are relatively easy to curate because each addresses an isolated functionality. Training an end-to-end agent model, however, demands data that integrate all components in a unified workflow, capturing the seamless interplay among perception, reasoning, memory, and action. Such data, which capture rich workflow knowledge from human experts, have historically been scarcely recorded. This lack of comprehensive, high-quality data limits the ability of native agents to generalize across diverse real-world scenarios, hindering their scalability and robustness.

To address these challenges, this paper focuses on advancing native GUI agent models. We begin by reviewing the evolution path of GUI agents (§ 2). We segment the development of GUI agents into key stages based on the degree of human intervention and generalization capability, and use these stages to structure a comprehensive literature review. Starting with traditional rule-based agents, we trace the evolution from rigid, framework-based systems to adaptive native models that seamlessly integrate perception, reasoning, memory, and action. We also look ahead to the future potential of GUI agents capable of active and lifelong learning, which minimize human intervention while maximizing generalization.

To deepen understanding, we provide a detailed analysis of the core capabilities of the native agent model:
(1) perception, enabling real-time environmental understanding for improved situational awareness;
(2) action, requiring the native agent model to accurately predict and ground actions within a predefined space;
(3) reasoning, which emulates human thought processes and encompasses both System 1 and System 2 thinking;
(4) memory, which stores task-specific information, prior experiences, and background knowledge.

We also summarize the main evaluation metrics and benchmarks for GUI agents.

Figure 1: A demo case of UI-TARS helping a user find flights, given the instruction "Find round trip flights from SEA to NYC on 5th next month and filtered by price in ascending order."

Based on these analyses, we propose UI-TARS, a native GUI agent model; Figure 1 illustrates a demo case. UI-TARS makes the following core contributions:

· Enhanced Perception for GUI Screenshots (§ 4.2): GUI environments, with their high information density, intricate layouts, and diverse styles, demand robust perception capabilities. We curate a large-scale dataset by collecting screenshots and using specialized parsing tools to extract metadata such as element types, bounding boxes, and text content from websites, applications, and operating systems. The dataset targets five tasks:
(1) element description, which provides fine-grained, structured descriptions of GUI components;
(2) dense captioning, which aims at holistic interface understanding by describing the entire GUI layout, including spatial relationships, hierarchical structures, and interactions among elements;
(3) state transition captioning, which captures subtle visual changes on the screen;
(4) question answering, designed to enhance the agent's capacity for visual reasoning;
(5) set-of-mark prompting, which uses visual markers to associate GUI elements with specific spatial and functional contexts.
Together, these carefully designed tasks enable UI-TARS to recognize and understand GUI elements with exceptional precision, providing a robust foundation for further reasoning and action.

· Unified Action Modeling for Multi-step Execution (§ 4.3): we design a unified action space to standardize semantically equivalent actions across platforms. To improve multi-step execution, we create a large-scale dataset of action traces that combines our annotated trajectories with standardized open-source data. We improve grounding ability, that is, accurately locating and interacting with specific GUI elements, by curating a vast dataset that pairs element descriptions with their spatial coordinates. This data enables UI-TARS to achieve precise and reliable interactions.

· System-2 Reasoning for Deliberate Decision-making (§ 4.4): robust performance in dynamic environments demands advanced reasoning capabilities. To enrich reasoning ability, we crawl 6M GUI tutorials, meticulously filtered and refined to provide GUI knowledge for logical decision-making. Building on this foundation, we augment all collected action traces with reasoning by injecting diverse reasoning patterns into the model, such as task decomposition, long-term consistency, milestone recognition, trial and error, and reflection. UI-TARS integrates these capabilities by generating explicit "thoughts" before each action, bridging perception and action with deliberate decision-making.

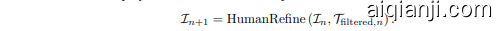

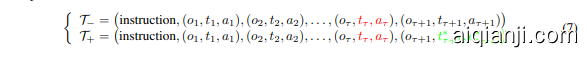

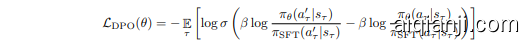

· Iterative Refinement by Learning from Prior Experience (§ 4.5): a significant challenge in GUI agent development is the scarcity of large-scale, high-quality action traces for training. To overcome this data bottleneck, UI-TARS employs an iterative improvement framework that dynamically collects and refines new interaction traces. Using hundreds of virtual machines, UI-TARS explores diverse real-world tasks based on constructed instructions and generates numerous traces. Rigorous multi-stage filtering (rule-based heuristics, VLM scoring, and human review) ensures trace quality. These refined traces are then fed back into the model, so the agent's performance improves continuously over successive training cycles.

Another central component of this online bootstrapping process is reflection tuning: the agent learns to identify and recover from errors by analyzing its own suboptimal actions. We annotate two types of data for this process:
(1) error correction, where annotators pinpoint mistakes in agent-generated traces and label the corrective actions;
(2) post-reflection, where annotators simulate recovery steps, demonstrating how the agent should realign task progress after an error.
These two types of data form paired samples, which are used to train the model with Direct Preference Optimization (DPO) (Rafailov et al., 2023). This strategy ensures that the agent not only learns to avoid errors but also adapts dynamically when they occur. Together, these strategies enable UI-TARS to achieve robust, scalable learning with minimal human oversight.
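
The unified action space idea can be illustrated with a minimal Python sketch. The action vocabulary (`click`, `type`, `scroll`, `press_back`, `hotkey`) and the platform event names are hypothetical illustrations, not the actual UI-TARS action set. Semantically equivalent events from different platforms, such as a desktop left-click and a mobile tap, normalize to the same unified action. Platform-specific actions keep their own entries.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple


@dataclass
class Action:
    """One action in a hypothetical cross-platform action space."""
    name: str                                           # unified name, e.g. "click"
    args: Dict[str, Any] = field(default_factory=dict)  # e.g. {"x": 120, "y": 48}


# Hypothetical (platform, native event) -> unified action name.
# Semantically equivalent events share one name; platform-only actions keep their own.
UNIFIED_NAMES: Dict[Tuple[str, str], str] = {
    ("desktop", "left_click"): "click",
    ("mobile", "tap"): "click",
    ("web", "click"): "click",
    ("desktop", "keyboard_type"): "type",
    ("mobile", "input_text"): "type",
    ("web", "fill"): "type",
    ("desktop", "mouse_wheel"): "scroll",
    ("mobile", "swipe"): "scroll",
    ("mobile", "press_back"): "press_back",  # mobile-only
    ("desktop", "hotkey"): "hotkey",         # desktop-only
}


def normalize(platform: str, event: str, **args: Any) -> Action:
    """Map a platform-specific event onto the unified action space."""
    try:
        name = UNIFIED_NAMES[(platform, event)]
    except KeyError:
        raise ValueError(f"no unified action for {event!r} on {platform!r}") from None
    return Action(name, dict(args))


# A desktop left-click and a mobile tap at the same point become the same action.
assert normalize("desktop", "left_click", x=120, y=48) == normalize("mobile", "tap", x=120, y=48)
```

Because equivalent events collapse to one representation, traces collected on different platforms can be pooled into a single training set under a shared vocabulary. That pooling is the motivation this sketch assumes.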

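
To make the reflection-tuning objective concrete, here is a dependency-free sketch of the standard DPO loss (Rafailov et al., 2023) for a single pair. The chosen response is the annotated corrective action; the rejected response is the agent's erroneous one. The function name, argument layout, and default `beta=0.1` are illustrative assumptions, not the training configuration used for UI-TARS. In practice the log-probabilities would come from the policy being trained and a frozen reference model, computed on batched tensors.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is the summed log-probability of a response given the same
    context: "chosen" is the corrected action, "rejected" the erroneous one,
    under the policy being trained and a frozen reference model.

    loss = -log(sigmoid(beta * ((pc - rc) - (pr - rr))))
    """
    # Implicit reward margin: how much more the policy prefers the chosen
    # response over the rejected one, relative to the reference model.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == log(1 + exp(-m)). For very negative m, exp(-m) can
    # overflow, and log(1 + exp(-m)) is then approximately -m.
    if margin < -30.0:
        return -margin
    return math.log1p(math.exp(-margin))
```

When the policy and reference assign identical log-probabilities, the margin is zero and the loss equals log 2. Raising the corrective action's likelihood relative to the reference, or lowering the erroneous one's, drives the loss toward zero.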

We continually train Qwen2-VL 7B and 72B (Wang et al., 2024c) on approximately 50 billion tokens to develop UI-TARS-7B and UI-TARS-72B. Through extensive experiments, we draw the following conclusions:

2 Evolution Path of GUI Agents

GUI agents are particularly significant in the context of automating workflows, where they help streamline repetitive tasks, reduce human effort, and enhance productivity. At their core, GUI agents are designed to facilitate interaction between humans and machines, simplifying the execution of tasks. Their evolution reflects a progression from rigid, human-defined heuristics to increasingly autonomous systems that can adapt, learn, and even independently identify tasks. In this context, the role of GUI agents has shifted from simple automation to full-fledged, self-improving agents that increasingly integrate with the human workflow, acting not just as tools but as collaborators in the task execution process.

Over the years, agents have progressed from basic rule-based automation to advanced, highly automated, and flexible systems that increasingly mirror human-like behavior and require minimal human intervention to perform their tasks. As illustrated in Figure 2, the development of GUI agents can be broken down into several key stages, each representing a leap in autonomy, flexibility, and generalization ability. Each stage is characterized by how much human intervention is required in the workflow design and learning process.

2.1 Rule-based Agents

Stage 1: Rule-based Agents. In the initial stage, agents such as Robotic Process Automation (RPA) systems (Dobrica, 2022; Hofmann et al., 2020) were designed to replicate human actions in highly structured environments, often interacting with GUIs and enterprise software systems. These agents typically processed user instructions by matching them to predefined rules and invoking APIs accordingly. They were effective for well-defined and repetitive tasks. However, their reliance on human-defined heuristics and explicit instructions hindered their ability to handle novel and complex scenarios. At this stage, the agent cannot learn from its environment or previous experiences, and any change to the workflow requires human intervention. Moreover, these agents require direct access to APIs or underlying system permissions, as demonstrated by systems like DART (Memon et al., 2003), WoB (Shi et al., 2017), Roscript (Qian et al., 2020), and FLIN (Mazumder & Riva, 2021). This makes them unsuitable for cases where such access is restricted or unavailable. This inherent rigidity limited their ability to scale across diverse environments.
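
A toy Python sketch shows why this design is brittle. The rule table and the `send_email`/`open_report` handlers are hypothetical stand-ins for the predefined rules and API calls described above. Instructions that match a hand-written pattern are dispatched; anything else fails until a human writes a new rule.

```python
import re
from typing import Callable, List, Optional, Tuple


def send_email(to: str) -> str:
    # Stand-in for a direct API call, the kind of system access RPA relies on.
    return f"api.send_email(to={to!r})"


def open_report(name: str) -> str:
    # Another hypothetical API call.
    return f"api.open_report(name={name!r})"


# Hand-written rules: (pattern, handler). Supporting a new task means a human adds a rule.
RULES: List[Tuple[re.Pattern, Callable[[str], str]]] = [
    (re.compile(r"^email (\S+)$"), send_email),
    (re.compile(r"^open report (\w+)$"), open_report),
]


def rule_based_agent(instruction: str) -> Optional[str]:
    """Return the API call for the first matching rule, or None if no rule applies."""
    text = instruction.strip().lower()
    for pattern, handler in RULES:
        match = pattern.match(text)
        if match:
            return handler(match.group(1))
    return None  # novel phrasing or task: no learning, no generalization
```

Merely rephrasing a supported request ("please email bob@example.com") already falls outside the rule table. That generalization gap is what motivates GUI-based and, later, learned agents.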

The limitations of rule-based agents underscore the importance of transitioning to GUI-based agents, which rely on visual information and explicit operations on GUIs instead of low-level system access. Through visual interaction with interfaces, GUI agents unlock greater flexibility and adaptability. This significantly expands the range of tasks they can accomplish without being limited by predefined rules or the need for explicit system access. This paradigm shift opens pathways for agents to interact autonomously with unfamiliar or newly developed interfaces.

Figure 2: The evolution path for GUI agents.

2.2 From Modular Agent Framework to Native Agent Model

Agent frameworks that leverage large (multimodal) language models, (M)LLMs, have recently surged in popularity. This surge is driven by the foundation models' ability to deeply comprehend diverse data types and generate relevant outputs via multi-step reasoning. Rule-based agents require handcrafted rules for each specific task. Foundation models, by contrast, can generalize across different environments and handle tasks effectively by interacting with the environment multiple times. This eliminates the need for humans to painstakingly define rules for every new scenario, significantly simplifying agent development and deployment.

Stage 2: Agent Framework. Specifically, these agent systems mainly leverage the understanding and reasoning capabilities of advanced foundation models (e.g., GPT-4 (OpenAI, 2023b) and GPT-4o (Hurst et al., 2024)) to make task execution more flexible, giving rise to framework-based agents. Early efforts primarily focused on tasks such as calling specific APIs or executing code snippets within text-based interfaces (Wang et al., 2023; Li et al., 2023a,b; Wen et al., 2023; Nakano et al., 2021). These agents marked a significant advance over purely rule-based systems by enabling more automatic and flexible interactions. Autonomous frameworks like AutoGPT (Yang et al., 2023a) and LangChain allow agents to integrate multiple external tools, APIs, and services, enabling more dynamic and adaptable workflows.

Enhancing the performance of foundation-model-based agent frameworks often involves designing task-specific workflows and optimizing prompts for each component.

One strategy augments these frameworks with specialized modules, such as short- or long-term memory, that provide task-specific knowledge or store operational experience for self-improvement. Cradle (Tan et al., 2024) enhances foundational agents' multitasking capabilities by storing and leveraging task execution experiences. Similarly, Song et al. (2024) propose a framework for API-driven web agents that uses task-specific background knowledge to execute complex web operations. The Agent Workflow Memory (AWM) module (Wang et al., 2024g) further optimizes memory management by selectively providing relevant workflows to guide the agent's subsequent actions.

Another common strategy to improve task success is incorporating reflection-based, multi-step reasoning to refine action planning and execution. The widely recognized ReAct framework (Yao et al., 2023) integrates reasoning with the outcomes of actions, enabling more dynamic and adaptable planning. For multimodal tasks, MM Navigator (Yan et al., 2023) leverages summarized contextual actions and mark tags to generate accurate, executable actions. SeeAct (Zheng et al., 2024b) takes a different approach: it explicitly instructs GPT-4V to mimic human browsing behavior, taking into account the task, webpage content, and previous actions.

Furthermore, multi-agent collaboration has emerged as a powerful technique for boosting task completion rates. Mobile Experts (Zhang et al., 2024c), for example, addresses the unique challenges of mobile environments by incorporating tool formulation and fostering collaboration among multiple agents.

In summary, current advances in agent frameworks rely heavily on optimizing plan and action generation through prompt engineering built around the capabilities of the underlying foundation models, which ultimately improves task completion.
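
The reasoning-plus-action pattern that ReAct popularized, and that framework agents assemble through prompting, can be sketched as a short loop. This is a minimal illustration, not any cited system's implementation. `policy` is a hypothetical callable standing in for a prompted foundation model, and `Env` is a hypothetical environment interface. The running `history` plays the role of short-term memory, and the `Thought:`/`Action:` text format is an assumed convention. The contribution list above describes UI-TARS producing such thoughts itself rather than through a prompted wrapper.

```python
from typing import Callable, List, Protocol, Tuple

Step = Tuple[str, str, str]  # (thought, action, observation)


class Env(Protocol):
    def step(self, action: str) -> Tuple[str, bool]:
        """Execute an action and return (observation, done)."""
        ...


def parse_step(text: str) -> Tuple[str, str]:
    """Split model output of the form 'Thought: ...\\nAction: ...'."""
    thought, _, action = text.partition("\nAction:")
    if thought.startswith("Thought:"):
        thought = thought[len("Thought:"):]
    return thought.strip(), action.strip()


def react_loop(policy: Callable[[str], str], env: Env, task: str,
               max_steps: int = 10) -> List[Step]:
    """Alternate reasoning and acting until the environment reports done."""
    history: List[Step] = []  # short-term memory for this episode
    for _ in range(max_steps):
        # The prompt carries the task plus every earlier step.
        prompt = task + "".join(
            f"\nThought: {t}\nAction: {a}\nObservation: {o}" for t, a, o in history
        )
        thought, action = parse_step(policy(prompt))
        observation, done = env.step(action)
        history.append((thought, action, observation))
        if done:
            break
    return history


# Toy usage with stand-ins for the model and the GUI environment.
class CounterEnv:
    """The task is done after 'submit' has been clicked twice."""

    def __init__(self) -> None:
        self.clicks = 0

    def step(self, action: str) -> Tuple[str, bool]:
        if action == "click(submit)":
            self.clicks += 1
        return f"clicks={self.clicks}", self.clicks >= 2


def stub_policy(prompt: str) -> str:
    return "Thought: the form is not submitted yet\nAction: click(submit)"


trace = react_loop(stub_policy, CounterEnv(), "Submit the form twice")
```

In a framework agent, each piece of this loop (prompt layout, parsing, memory format) is hand-designed. That is the engineering burden the native-agent stage aims to absorb into the model.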

Key Limitations of Agent Frameworks. Despite greater adaptability than rule-based systems, agent frameworks still rely on human-defined workflows to structure their actions. The "agentic workflow knowledge" (Wang et al., 2024g) is manually encoded through custom prompts, external scripts, or tool-usage heuristics. This externalization of knowledge yields several drawbacks:

Thus, while agent frameworks offer quick demonstrations and are flexible within a narrow scope, they ultimately remain brittle in real-world deployments, where tasks and interfaces continuously evolve. This reliance on pre-programmed workflows, driven by human expertise, makes frameworks inherently non-scalable. They depend on developers' foresight to anticipate all future variations, which limits their capacity to handle unforeseen changes or learn autonomously. Frameworks are design-driven, meaning they lack the ability to learn and generalize across tasks without continuous human involvement.

Stage 3: Native Agent Model. In contrast, the future of autonomous agent development lies in native agent models, where workflow knowledge is embedded directly within the agent's model through orientational learning. In this paradigm, tasks are learned and executed end-to-end, unifying perception, reasoning, memory, and action within a single, continuously evolving model. This approach is fundamentally data-driven. It lets agents adapt seamlessly to new tasks, interfaces, or user needs without relying on manually crafted prompts or predefined rules. Native agents offer several distinct advantages that contribute to their scalability and adaptability:

· Holistic Learning and Adaptation: because the agent's policy is learned end-to-end, it can unify knowledge from perception, reasoning, memory, and action in its internal parameters. As new data or user demonstrations become available, the entire system (rather than just a single module or prompt) updates its knowledge. This empowers the model to adapt more seamlessly to changing tasks, interfaces, or user demands.

· Reduced Human Engineering: instead of carefully scripting how the LLM/VLM should be invoked at each node, native models learn task-relevant workflows from large-scale demonstrations or online experiences. Data-driven learning replaces the burden of "hardwiring a workflow." This significantly reduces the need for domain experts to handcraft heuristics whenever the environment evolves.

· Strong Generalization via Unified Parameters: although manual prompt engineering can make the model adaptable to new user-defined tools, the model itself cannot evolve. Under one parameterized policy and a unified data construction and training pipeline, knowledge about environments (such as app features, navigation strategies, or UI patterns) can be transferred across tasks, equipping the model with strong generalization.

· Continuous Self-Improvement: native agent models lend themselves naturally to online or lifelong learning paradigms. By deploying the agent in real-world GUI environments and collecting new interaction data, the model can be fine-tuned or further trained to handle novel challenges.

This data-driven, learning-oriented approach stands in contrast to the design-driven, static nature of agent frameworks. GUI agent development has now gradually reached this stage. Representative works include Claude Computer-Use (Anthropic, 2024b), Aguvis (Xu et al., 2024), ShowUI (Lin et al., 2024b), OS-Atlas (Wu et al., 2024b), and Octopus v2-4 (Chen & Li, 2024). These models mainly use existing real-world data to tailor large VLMs specifically to the domain of GUI interaction.

Figure 3: An overview of core capabilities and evaluation for GUI agents

2.3 Active and Lifelong Agent (Prospect)

Stage 4: Active and Lifelong Agent. Despite improvements in adaptability, native agents still rely heavily on human experts for data labeling and training guidance. This dependence inherently restricts their capabilities, making them contingent on the quality and breadth of human-provided data and knowledge.

The transition towards active and lifelong learning (Sur et al., 2022; Ramamoorthy et al., 2024) represents a crucial next step in the evolution of GUI agents. In this paradigm, agents actively engage with their environment to propose tasks, execute them, and evaluate the outcomes. These agents can autonomously assign self-rewards based on the success of their actions, reinforcing positive behaviors and progressively refining their capabilities through continuous feedback loops. This self-directed exploration and learning allows the agent to discover new knowledge, improve task execution, and enhance problem-solving strategies without heavy reliance on manual annotations or explicit external guidance.
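
The propose, execute, and self-evaluate cycle can be sketched as a loop. Everything here is a hypothetical stand-in. `propose_task`, `attempt`, and `self_reward` represent the agent's own components, and "training" is reduced to recording which tasks succeeded and keeping their traces. The sketch therefore shows only the control flow in which the agent, rather than a human annotator, decides what to practice and what counts as success. The prerequisite curriculum is likewise an illustrative assumption.

```python
from typing import Callable, Dict, List, Optional, Tuple

Trace = List[str]


def lifelong_loop(
    propose_task: Callable[[Dict[str, int]], str],
    attempt: Callable[[str, Dict[str, int]], Trace],
    self_reward: Callable[[str, Trace], float],
    rounds: int,
    threshold: float = 0.5,
) -> Tuple[Dict[str, int], List[Tuple[str, Trace]]]:
    """Propose -> execute -> self-evaluate, keeping only self-approved traces."""
    skills: Dict[str, int] = {}            # task -> count of self-verified successes
    kept: List[Tuple[str, Trace]] = []     # traces to train on in the next round
    for _ in range(rounds):
        task = propose_task(skills)        # the agent chooses what to practice
        trace = attempt(task, skills)      # the agent executes it
        if self_reward(task, trace) >= threshold:  # the agent judges success
            skills[task] = skills.get(task, 0) + 1
            kept.append((task, trace))
    return skills, kept


# Toy curriculum: each task is only achievable once its prerequisite is learned.
PREREQ: Dict[str, Optional[str]] = {"open_app": None, "search": "open_app", "checkout": "search"}


def propose_first_gap(skills: Dict[str, int]) -> str:
    # Pick the first task not yet mastered: a gap in the agent's knowledge.
    return next((t for t in PREREQ if t not in skills), "checkout")


def toy_attempt(task: str, skills: Dict[str, int]) -> Trace:
    ready = PREREQ[task] is None or PREREQ[task] in skills
    return [f"try {task}", "done" if ready else "stuck"]


def toy_reward(task: str, trace: Trace) -> float:
    return 1.0 if trace[-1] == "done" else 0.0
```

With the gap-first proposer, each round targets the first unmastered task, so prerequisites are learned before the tasks that depend on them. A proposer that always chose "checkout" from scratch would fail every round and keep nothing.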

These agents develop and refine their skills iteratively, much like continual learning in robotics (Ayub et al., 2024; Soltoggio et al., 2024), learning from both successes and failures and progressively improving their generalization across an increasingly broad range of tasks and scenarios. The key distinction between native agent models and active lifelong learners lies in the autonomy of the learning process: native agents still depend on humans, whereas active agents drive their own learning by identifying gaps in their knowledge and filling them through self-initiated exploration.

In this work, we focus on building a scalable, data-driven native agent model that paves the way toward this active and lifelong agent stage. We first explore the core capabilities such a framework requires (§3) and then introduce UI-TARS, our instantiation of this approach (§4).

3 Core Capabilities of Native Agent Model

The native agent model internalizes the modularized components of earlier agent frameworks into several core capabilities, thereby moving toward an end-to-end structure. To give a deeper understanding of the native agent model, this section analyzes these core capabilities in depth and reviews current evaluation metrics and benchmarks.

3.1 Core Capabilities

As illustrated in Figure 3, our analysis is structured around four main aspects: perception, action, reasoning (system 1 and system 2 thinking), and memory.

Perception. A fundamental aspect of effective GUI agents is their capacity to precisely perceive and interpret graphical user interfaces in real time. This involves not only understanding static screenshots but also adapting dynamically as the interface changes. We review existing works by the input features they use:

· Structured Text: early GUI agents powered by LLMs (Li et al., 2023a; Wang et al., 2023; Wu et al., 2024a) are constrained by the LLMs' ability to process only textual input. Consequently, these agents convert GUI pages into structured textual representations, such as HTML, accessibility trees, or the Document Object Model (DOM). For web pages, some agents take HTML as input or use the DOM to analyze page layout; the DOM organizes elements hierarchically in a tree-like structure. To reduce input noise, Agent-E (Abuelsaad et al., 2024) applies a DOM distillation technique to obtain more effective page representations. Tao et al. (2023) introduce WebWISE, which iteratively generates small programs based on observations of filtered DOM elements and performs tasks sequentially.

· Visual Screenshot: with advances in computer vision and VLMs, agents can now leverage visual data from screens to interpret their on-screen environments. A significant portion of research relies on Set-of-Mark (SoM) prompting (Yang et al., 2023b) to improve visual grounding. To enhance visual understanding, these methods frequently combine Optical Character Recognition (OCR) with GUI element detection models such as ICONNet (Sunkara et al., 2022) and DINO (Liu et al., 2025). These models identify and delineate interactive elements with bounding boxes, which are then mapped to specific image regions, enriching the agents' contextual comprehension. Some studies also improve semantic grounding and element understanding by adding descriptions of the interactive elements to the screenshots. For example, SeeAct (Zheng et al., 2024a) enhances fine-grained understanding of screenshot content by associating visual elements with the content they represent in the HTML of the web page.

· Comprehensive Interface Modeling: recently, some works have combined structured text, visual screenshots, and semantic outlines of elements to achieve a holistic perception of the interface. For instance, Gou et al. (2024a) synthesize large-scale GUI element data and train UGround, a visual grounding model that maps referring expressions to elements in GUI pages across various platforms. Similarly, OSCAR (Wang & Liu, 2024) uses an A11y (accessibility) tree generated by the Windows API to represent GUI components, adding descriptive labels to facilitate semantic grounding. Meanwhile, DUALVCR (Kil et al., 2024) captures both the visual features of the screenshot and the descriptions of associated HTML elements to obtain a robust representation of the visual screenshot.

Another important aspect is the ability to perceive and interact in real time. GUIs are inherently dynamic, with elements frequently changing in response to user actions or system processes. GUI agents must continuously monitor these changes to keep an up-to-date understanding of the interface's state. This real-time perception is critical for ensuring that agents respond promptly and accurately to evolving conditions. For instance, if a loading spinner appears, the agent should recognize it as a sign of a pending process and adjust its actions accordingly. Similarly, agents must detect and handle cases where the interface becomes unresponsive or behaves unexpectedly.

By effectively combining the above aspects, a robust perception system lets the GUI agent maintain situational awareness and respond appropriately to the evolving state of the user interface, aligning its actions with the user's goals and the application's requirements. However, privacy concerns and the additional perceptual noise introduced by the DOM make it difficult to extend pure text descriptions and hybrid text-visual perception to arbitrary GUI environments. Hence, much as humans interact with their surroundings, a native agent model should comprehend the external environment directly through visual perception and accurately ground its actions in the original screenshot. In this way, the native agent model can generalize across tasks and improve the accuracy of its actions at each step.

Action. Effective action mechanisms must be versatile, precise, and adaptable to various GUI contexts. Key aspects include:

· Unified and Diverse Action Space: GUI agents (Gur et al., 2023; Bonatti et al., 2024) operate across multiple platforms, including mobile devices, desktop applications, and web interfaces, each with distinct interaction paradigms. A unified action space abstracts platform-specific actions into a common set of operations such as click, type, scroll, and drag. Additionally, integrating actions from language agents, such as API calls (Chen et al., 2024b; Li et al., 2023a,b), code interpretation (Wu et al., 2024a), and Command-Line Interface (CLI) operations (Mei et al., 2024), enhances agent versatility. Actions can be categorized into atomic actions, which execute a single operation, and compositional actions, which chain multiple atomic actions to streamline task execution. Balancing atomic and compositional actions optimizes efficiency and reduces cognitive load, enabling agents to handle both simple interactions and the coordinated execution of multiple steps.

· Challenges in Grounding Coordinates: accurately determining coordinates for actions like clicks, drags, and swipes is challenging due to variability in GUI layouts (He et al., 2024; Burger et al., 2020), differing aspect ratios across devices, and dynamic content changes. Different devices’ aspect ratios can alter the spatial arrangement of interface elements, complicating precise localization. Grounding coordinates requires advanced techniques to interpret visual cues from screenshots or live interface streams accurately.
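
Decoupling coordinates from any particular screen makes the grounding problem easier to reason about. The sketch below assumes, purely for illustration, that a model emits points on a resolution-independent [0, 1000] grid. `to_pixels` and `to_normalized` are hypothetical helper names. This is not a claim about the coordinate convention used by UI-TARS or any other specific agent.

```python
from typing import Tuple


def to_pixels(x_norm: float, y_norm: float, width: int, height: int,
              scale: int = 1000) -> Tuple[int, int]:
    """Map a point on a hypothetical [0, scale] grid to absolute pixel coordinates."""
    if not (0 <= x_norm <= scale and 0 <= y_norm <= scale):
        raise ValueError("normalized coordinates out of range")
    # Each axis is scaled independently, so the same normalized point lands at the
    # same relative position on screens with different resolutions and aspect ratios.
    x = round(x_norm / scale * (width - 1))
    y = round(y_norm / scale * (height - 1))
    return x, y


def to_normalized(x: int, y: int, width: int, height: int,
                  scale: int = 1000) -> Tuple[float, float]:
    """Inverse mapping: absolute pixel coordinates back to the [0, scale] grid."""
    return x / (width - 1) * scale, y / (height - 1) * scale
```

On a 1920×1080 desktop, `to_pixels(500, 500, 1920, 1080)` returns `(960, 540)`. On a 1080×2400 phone, the same normalized point maps to `(540, 1200)`. Scaling each axis separately is the simplest way to absorb aspect-ratio differences. However, a 1000-step grid is coarser than a 1920-pixel-wide screen, so pixel-exact targets would need a finer grid.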

Due to the similarity of actions across different operational spaces, agent models can standardize actions from various GUI contexts into a unified action space. Decomposing actions into atomic operations reduces learning complexity, facilitating faster adaptation and transfer of atomic actions across different platforms.
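
A unified action space can be sketched as a small set of platform-agnostic action types plus a per-platform translation layer. In the minimal sketch below, the action classes, the `render` function, and the command strings it produces (`click(...)`, `tap(...)`, `swipe(...)`) are all hypothetical. A compositional action is modeled simply as a list of atomic actions.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass(frozen=True)
class Click:
    x: int
    y: int


@dataclass(frozen=True)
class TypeText:
    text: str


@dataclass(frozen=True)
class Scroll:
    x: int
    y: int
    direction: str  # "up" | "down" | "left" | "right"


@dataclass(frozen=True)
class Drag:
    x1: int
    y1: int
    x2: int
    y2: int


# The unified (atomic) action space shared by all platforms.
Action = Union[Click, TypeText, Scroll, Drag]


def render(action: Action, platform: str) -> str:
    """Translate one unified atomic action into a hypothetical platform command string."""
    if isinstance(action, Click):
        verb = "tap" if platform == "mobile" else "click"
        return f"{verb}({action.x},{action.y})"
    if isinstance(action, TypeText):
        return f"type({action.text!r})"
    if isinstance(action, Scroll):
        # On touch screens, scrolling is typically realized as a swipe gesture.
        verb = "swipe" if platform == "mobile" else "scroll"
        return f"{verb}({action.x},{action.y},{action.direction})"
    if isinstance(action, Drag):
        return f"drag({action.x1},{action.y1},{action.x2},{action.y2})"
    raise TypeError(f"unknown action: {action!r}")


def fill_field(x: int, y: int, text: str) -> List[Action]:
    """A compositional action: a fixed sequence of atomic actions (focus, then type)."""
    return [Click(x, y), TypeText(text)]
```

Because the model only ever predicts the unified types, supporting another platform means adding a branch to `render` rather than relabeling training data.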

Reasoning with System 1 & 2 Thinking. Reasoning is a complex capability that integrates a variety of cognitive functions. Human interaction with GUIs relies on two distinct types of cognitive processes (Groves & Thompson, 1970): system 1 and system 2 thinking.

· System 1 refers to fast, automatic, and intuitive thinking, typically employed for simple, routine tasks such as clicking a familiar button or dragging a file to a folder without conscious deliberation.
· System 2 encompasses slow, deliberate, and analytical thinking, which is crucial for complex tasks such as planning an overall workflow or reflecting to troubleshoot errors.

Similarly, autonomous GUI agents must develop the ability to emulate both system 1 and system 2 thinking to perform effectively across a diverse range of tasks. By learning to identify when to apply rapid, heuristic-based responses and when to engage in detailed, step-by-step reasoning, these agents can achieve greater efficiency, adaptability, and reliability in dynamic environments.

System 1 Reasoning represents the agent's ability to execute fast, intuitive responses by identifying patterns in the interface and applying pre-learned knowledge to observed situations. This form of reasoning mirrors human interaction with familiar GUI elements, such as recognizing that pressing “Enter” in a text field submits a form or that clicking a certain button advances a workflow to the next step. These heuristic-based actions let agents respond swiftly and remain efficient in routine scenarios. However, reliance on pre-defined mappings limits their decision-making to immediate, reactive behaviors. For instance, large action models (Wu et al., 2024b; Wang et al., 2024a) excel at generating quick responses from environmental observations but often lack the capacity for more sophisticated reasoning. This limitation is particularly evident in tasks that require planning and executing multi-step operations, which go beyond the reactive, one-step reasoning of system 1. Thus, while system 1 provides a foundation for fast and efficient operation, it also highlights the need for agents to evolve toward the more deliberate and reflective capabilities of system 2 reasoning.

System 2 Reasoning represents deliberate, structured, and analytical thinking, enabling agents to handle complex, multi-step tasks that go beyond the reactive behaviors of system 1. Unlike heuristic-based reasoning, system 2 involves explicitly generating intermediate thinking processes, often using techniques such as Chain-of-Thought (CoT) (Wei et al., 2022) or ReAct (Yao et al., 2023), which bridge the gap between simple actions and intricate workflows. This paradigm of reasoning is composed of several essential components.

· First, task decomposition focuses on formulating plans that achieve overarching objectives by decomposing tasks into smaller, manageable sub-tasks (Dagan et al., 2023; Song et al., 2023; Huang et al., 2024). For example, completing a multi-field form involves a sequence of steps, such as entering a name, an address, and other details, all guided by a well-structured plan.

· Second, long-term consistency is critical during the entire task completion process. By consistently referring back to the initial objective, agent models can effectively avoid any potential deviations that may occur during complex, multi-stage tasks, thus ensuring coherence and continuity from start to finish.

The development of UI-TARS places strong emphasis on equipping the model with robust system 2 reasoning capabilities, allowing it to address complex tasks with greater precision and adaptability. By integrating high-level planning mechanisms, UI-TARS excels at decomposing overarching goals into smaller, manageable sub-tasks. This structured approach enables the model to systematically handle intricate workflows that require coordination across multiple steps. Additionally, UI-TARS incorporates a long-form CoT reasoning process, which supports detailed intermediate thinking before specific actions are executed. Furthermore, UI-TARS adopts a reflection-driven training process: by incorporating reflective thinking, the model continuously evaluates its past actions, identifies potential mistakes, and adjusts its behavior to improve performance over time. This iterative learning method enhances the model's reliability and equips it to navigate dynamic environments and unexpected obstacles.

Memory. Memory mainly stores the explicit knowledge and historical experience that the agent refers to when making decisions. Agent frameworks often introduce an additional memory module to store previous interactions and task-level knowledge, which the agent retrieves and updates during decision-making. Memory modules can be divided into two categories:

· Short-term Memory: this serves as a temporary repository for task-specific information, capturing the agent's immediate context. This includes the agent's action history, current state details, and the ongoing execution trajectory of the task, enabling real-time situational awareness and adaptability. By semantically processing contextual screenshots, CoAT (Zhang et al., 2024d) extracts key interface details, thereby enhancing comprehension of the task environment. CoCo-Agent (Ma et al., 2024) records layouts and dynamic states through Comprehensive Environment Perception (CEP).

· Long-term Memory: this serves as a long-term data reserve, capturing and preserving records of previous interactions, tasks, and background knowledge. It retains details such as execution paths from prior tasks, offering a comprehensive knowledge base that supports reasoning and decision-making in future tasks. By integrating accumulated knowledge of user preferences and task operation experience, OS-Copilot (Wu et al., 2024a) refines its task execution over time to better align with user needs and improve overall efficiency. Cradle (Tan et al., 2024) enhances the multitasking abilities of foundation agents by enabling them to store and reuse task execution experience. Song et al. (2024) introduce a framework for API-driven web agents that leverages task-specific background knowledge to perform complex web operations.
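
The two memory types can be illustrated with a minimal sketch that uses text stand-ins for screenshots and actions. Short-term memory is a bounded buffer of recent steps. Long-term memory is a store of past task trajectories, retrieved by word overlap (Jaccard similarity). The class names and the retrieval rule are hypothetical simplifications; the systems cited above use their own storage and retrieval mechanisms.

```python
from collections import deque
from typing import Deque, List, Tuple


class ShortTermMemory:
    """Task-scoped buffer holding the most recent (observation, action) steps."""

    def __init__(self, capacity: int = 5) -> None:
        # A deque with maxlen silently drops the oldest step once full.
        self.steps: Deque[Tuple[str, str]] = deque(maxlen=capacity)

    def add(self, observation: str, action: str) -> None:
        self.steps.append((observation, action))


class LongTermMemory:
    """Cross-task store of past trajectories, retrieved by word overlap with a new task."""

    def __init__(self) -> None:
        self.records: List[Tuple[str, List[str]]] = []

    def store(self, task: str, trajectory: List[str]) -> None:
        self.records.append((task, list(trajectory)))

    def retrieve(self, task: str, k: int = 1) -> List[Tuple[str, List[str]]]:
        query = set(task.lower().split())

        def jaccard(record: Tuple[str, List[str]]) -> float:
            words = set(record[0].lower().split())
            union = query | words
            return len(query & words) / len(union) if union else 0.0

        # Highest-overlap records first; return the top k.
        return sorted(self.records, key=jaccard, reverse=True)[:k]
```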

Memory reflects the capability to leverage background knowledge and input context. The synergy between short-term and long-term memory storage significantly enhances the efficiency of an agent's decision-making process. Native agent models, unlike agent frameworks, encode long-term operational experience of tasks within their internal parameters, converting the observable interaction process into implicit, parameterized storage. Techniques such as In-Context Learning (ICL) or CoT reasoning can be employed to activate this internal memory.

3.2 Capability Evaluation

To evaluate the effectiveness of GUI agents, numerous benchmarks have been designed to cover different capabilities: perception, grounding, and agent capabilities. Specifically, Perception Evaluation measures the degree of understanding of GUI knowledge, and Grounding Evaluation verifies whether agents can accurately locate coordinates in diverse GUI layouts. Agent capability evaluation falls into two main categories. Offline Agent Capability Evaluation is conducted in a predefined, static environment and mainly assesses the individual steps performed by GUI agents. Online Agent Capability Evaluation is performed in an interactive, dynamic environment and assesses the agent's overall ability to complete the task successfully.

Perception Evaluation. Perception evaluation assesses agents' understanding of user interface (UI) knowledge and their awareness of the environment. For instance, VisualWebBench (Liu et al., 2024c) focuses on agents' web understanding, while WebSRC (Chen et al., 2021) and ScreenQA (Hsiao et al., 2022) evaluate web structure comprehension and mobile screen content understanding, respectively, through question-answering (QA) tasks. Additionally, GUI-World (Chen et al., 2024a) offers a wide range of queries in multiple-choice, free-form, and conversational formats to assess GUI understanding. Different question formats call for different metrics: accuracy is the key metric for multiple-choice question (MCQ) tasks, while ROUGE-L is used for captioning and Optical Character Recognition (OCR) tasks.
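
The two metrics named above can be sketched as follows. This ROUGE-L variant computes the longest common subsequence (LCS) over lowercased whitespace tokens and reports the F1 combination of LCS precision and recall. It is a simplification, and official benchmark scorers may tokenize, normalize, and weight differently.

```python
from typing import List, Sequence


def lcs_length(a: Sequence[str], b: Sequence[str]) -> int:
    """Length of the longest common subsequence, via a row-by-row dynamic program."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            curr[j] = prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1])
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L as the F1 of LCS-based precision and recall (simplified tokenization)."""
    cand, ref = candidate.lower().split(), reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


def mcq_accuracy(predictions: List[str], answers: List[str]) -> float:
    """Fraction of multiple-choice predictions that exactly match the answer key."""
    if len(predictions) != len(answers) or not answers:
        raise ValueError("need equally many predictions and answers")
    return sum(p == a for p, a in zip(predictions, answers)) / len(answers)
```

For example, the caption "the blue submit button" against the reference "blue submit button at the bottom" shares an LCS of three tokens. That gives precision 3/4, recall 3/6, and a ROUGE-L F1 of 0.6.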

Grounding Evaluation. Given an instruction, grounding evaluation focuses on the ability to precisely locate GUI elements. ScreenSpot (Cheng et al., 2024) evaluates single-step GUI grounding across multiple platforms. ScreenSpot v2 (Wu et al., 2024b), a re-annotated version, corrects annotation errors in the original ScreenSpot. ScreenSpot Pro (Li et al., 2025) extends grounding evaluation with real-world tasks gathered from diverse high-resolution professional desktop environments. Grounding metrics are usually based on whether the model's predicted location falls within the bounding box of the target element.
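
The point-in-box criterion fits in a few lines. This sketch assumes boxes are given as (left, top, right, bottom) pixel coordinates and counts points on the border as hits. The function names are hypothetical, and individual benchmarks may treat the boundary differently.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom) in pixels
Point = Tuple[float, float]              # (x, y) in pixels


def hit(point: Point, box: Box) -> bool:
    """True if the predicted point lies inside (or on the edge of) the target box."""
    x, y = point
    left, top, right, bottom = box
    return left <= x <= right and top <= y <= bottom


def grounding_accuracy(preds: List[Point], boxes: List[Box]) -> float:
    """Fraction of instructions whose predicted point lands in the target element."""
    if len(preds) != len(boxes) or not boxes:
        raise ValueError("need equally many predictions and target boxes")
    return sum(hit(p, b) for p, b in zip(preds, boxes)) / len(boxes)
```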

Offline Agent Capability Evaluation. Offline evaluation measures the performance of GUI agents in static, predefined environments. Each environment typically includes an input instruction and the current state of the environment (e.g., a screenshot or a history of previous actions), and the agent must produce the correct outputs or actions. These environments remain fixed throughout evaluation. Numerous offline benchmarks, including AITW (Rawles et al., 2023), Mind2Web (Deng et al., 2023), MT-Mind2Web (Deng et al., 2024), AITZ (Zhang et al., 2024e), AndroidControl (Li et al., 2024c), and GUI-Odyssey (Lu et al., 2024a), give agents a task description, the current screenshot, and the history of previous actions, and ask them to predict the next action accurately. These benchmarks commonly employ step-level metrics, which provide fine-grained supervision of specific behaviors. For instance, the Action-Matching Score (Rawles et al., 2023; Zhang et al., 2024e; Li et al., 2024c; Lu et al., 2024a) considers an action correct only when both its type and its specific details (e.g., arguments such as typed content or scroll direction) match the ground truth. Other benchmarks (Li et al., 2020a; Burns et al., 2022) require agents to produce a series of automatically executable actions from the provided instructions and screenshots. These mainly use task-level metrics, which judge task success by whether the outputs exactly match the predefined labels, such as complete and partial action-sequence matching accuracy (Li et al., 2020a; Burns et al., 2022; Rawles et al., 2023).
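
A simplified step-level Action-Matching Score and a task-level complete-sequence match might look like the sketch below. The `Step` fields and the matching rules are assumptions for illustration:

- clicks match when the predicted point lies inside the gold element's box;
- typed text is compared after trimming and lowercasing;
- scrolls compare direction.

Real benchmarks define their own rules and may use other click criteria, such as distance thresholds.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Step:
    kind: str                                              # e.g. "click", "type", "scroll"
    point: Optional[Tuple[float, float]] = None            # predicted click location
    box: Optional[Tuple[float, float, float, float]] = None  # gold element box for clicks
    text: Optional[str] = None                             # typed content
    direction: Optional[str] = None                        # scroll direction


def action_matches(pred: Step, gold: Step) -> bool:
    """Correct only if the action type and its arguments both agree with the gold step."""
    if pred.kind != gold.kind:
        return False
    if gold.kind == "click":
        if pred.point is None or gold.box is None:
            return False
        x, y = pred.point
        left, top, right, bottom = gold.box
        return left <= x <= right and top <= y <= bottom
    if gold.kind == "type":
        return (pred.text or "").strip().lower() == (gold.text or "").strip().lower()
    if gold.kind == "scroll":
        return pred.direction == gold.direction
    return True  # argument-free actions, e.g. a hypothetical "back" or "home"


def step_accuracy(preds: List[Step], golds: List[Step]) -> float:
    """Step-level metric: fraction of individual steps predicted correctly."""
    if len(preds) != len(golds) or not golds:
        raise ValueError("need equally many predicted and gold steps")
    return sum(action_matches(p, g) for p, g in zip(preds, golds)) / len(golds)


def sequence_match(preds: List[Step], golds: List[Step]) -> bool:
    """Task-level metric: the whole predicted sequence must match, step by step."""
    return len(preds) == len(golds) and all(action_matches(p, g) for p, g in zip(preds, golds))
```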

Online Agent Capability Evaluation. Online evaluation uses dynamic environments, each designed as an interactive simulation that replicates real-world scenarios. In these environments, GUI agents can change the environment's state by executing actions in real time. These dynamic environments span various platforms: (1) Web: WebArena (Zhou et al., 2023) and MMInA (Zhang et al., 2024g) provide realistic web environments. (2) Desktop: OSWorld (Xie et al., 2024), OfficeBench (Wang et al., 2024f), ASSISTGUI (Gao et al., 2023), and Windows Agent Arena (Bonatti et al., 2024) operate within real computer desktop environments. (3) Mobile: AndroidWorld (Rawles et al., 2024a), LlamaTouch (Zhang et al., 2024f), and B-MOCA (Lee et al., 2024) are built on mobile operating systems such as Android. Online evaluation relies on task-level metrics, which give a comprehensive measure of the agents' effectiveness by determining task success primarily from whether the agent reaches a goal state. This verification checks whether the intended outcome was achieved or whether the resulting outputs exactly match the labels (Zhou et al., 2023; Xie et al., 2024; Wang et al., 2024f; Gao et al., 2023).
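
The key difference from offline evaluation is that success is judged on the environment state the agent produces, not on whether its actions match a reference. The toy sketch below makes several assumptions:

- the environment state is a dictionary;
- a hypothetical `"finish"` action ends the episode;
- each task has its own checker function.

The step budget of 15 is only an illustrative default.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = Dict[str, object]
Policy = Callable[[str, State], str]   # (instruction, state) -> action
StepFn = Callable[[State, str], State]  # (state, action) -> next state


@dataclass
class OnlineTask:
    instruction: str
    initial_state: State
    is_goal: Callable[[State], bool]  # task-specific checker applied to the final state


def run_episode(task: OnlineTask, policy: Policy, step_fn: StepFn, max_steps: int = 15) -> bool:
    state = dict(task.initial_state)
    for _ in range(max_steps):
        action = policy(task.instruction, state)
        if action == "finish":  # the agent declares the task complete
            break
        state = step_fn(state, action)  # the environment reacts to the action
    # Success depends on the resulting state, not on the exact action sequence.
    return task.is_goal(state)


def success_rate(tasks: List[OnlineTask], policy: Policy, step_fn: StepFn,
                 max_steps: int = 15) -> float:
    if not tasks:
        raise ValueError("no tasks given")
    return sum(run_episode(t, policy, step_fn, max_steps) for t in tasks) / len(tasks)
```

Suppose a policy returns `"toggle_wifi"` until Wi-Fi is on and then returns `"finish"`. A task whose checker tests `state["wifi"]` is then counted as a success. A policy that finishes immediately is not.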

4 UI-TARS

In this section, we introduce UI-TARS, a native GUI agent model designed to operate without cumbersome manual rules or the cascaded modules typical of conventional agent frameworks. UI-TARS directly perceives the screenshot, applies reasoning, and autonomously generates valid actions. Moreover, UI-TARS can learn from prior experience, iteratively refining its performance by leveraging environment feedback.

Figure 4: Overview of UI-TARS. We illustrate the architecture of the model and its core capabilities.

In the following, we first describe the overall architecture of UI-TARS (§4.1), then how we enhance its perception (§4.2) and action (§4.3) capabilities. We then focus on how to infuse system-2 reasoning capabilities into UI-TARS (§4.4) and on iterative improvement through experience learning (§4.5).

4.1 Architecture Overview

As illustrated in Figure 4, given an initial task instruction, UI-TARS iteratively receives observations from the device and performs corresponding actions to accomplish the task. This sequential process can be formally expressed as:

$$\text{instruction},\ (o_1, a_1),\ (o_2, a_2),\ \cdots,\ (o_n, a_n)$$

where $o_i$ denotes the observation (device screenshot) at time step $i$, and $a_i$ represents the action executed by the agent. At each time step, UI-TARS takes as input the task instruction, the history of prior interactions $(o_1, a_1, \cdots, o_{i-1}, a_{i-1})$, and the current observation $o_i$. Based on this input, the model outputs an action $a_i$ from the predefined action space. After the action is executed, the device returns the next observation, and the process repeats.
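
The observation-action loop described above can be sketched as follows. The sketch assumes a hypothetical `model` callable, device hooks `reset` and `step`, strings standing in for screenshots, and a hypothetical terminal action `"finished"`. It illustrates the control flow only, not UI-TARS's actual interface.

```python
from typing import Callable, List, Tuple

Screenshot = str  # stand-in for raw image data
Action = str
History = List[Tuple[Screenshot, Action]]


def run_agent(instruction: str,
              model: Callable[[str, History, Screenshot], Action],
              reset: Callable[[], Screenshot],
              step: Callable[[Action], Screenshot],
              max_steps: int = 15) -> History:
    history: History = []
    obs = reset()  # o_1
    for _ in range(max_steps):
        # a_i is predicted from the instruction, (o_1, a_1, ..., o_{i-1}, a_{i-1}) and o_i.
        action = model(instruction, list(history), obs)
        history.append((obs, action))
        if action == "finished":  # hypothetical terminal action
            break
        obs = step(action)  # the device executes a_i and returns o_{i+1}
    return history
```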

To further enhance the agent's reasoning capabilities and foster more deliberate decision-making, we integrate a reasoning component in the form of “thoughts” $t_i$, generated before each action $a_i$. These thoughts reflect the reflective nature of “System 2” thinking. They act as a crucial intermediate step, prompting the agent to reconsider previous actions and observations before moving forward, so that each decision is made with intentionality and careful consideration.

This approach is inspired by the ReAct framework (Yao et al., 2023), which introduces a similar reflective mechanism in a more straightforward manner. In contrast, our integration of “thoughts” involves more structured, goal-oriented deliberation. These thoughts form a more explicit reasoning process that guides the agent toward better decisions, especially in complex or ambiguous situations. The process can now be formalized as:

$$\text{instruction},\ (o_1, t_1, a_1),\ (o_2, t_2, a_2),\ \cdots,\ (o_n, t_n, a_n)$$

Figure 5: Data example of perception and grounding data

These intermediate thoughts guide the model's decision-making and enable more nuanced, reflective interactions with the environment.

To optimize memory usage and remain efficient within the typically constrained token budget (e.g., a 32k sequence length), we limit the input to the last $N$ observations. This constraint ensures that the model can handle the necessary context without exceeding its memory capacity. The full history of previous actions and thoughts is retained as short-term memory. UI-TARS iteratively predicts the thought $t_n$ and action $a_n$, conditioned on both the task instruction and the previous interactions:

$$P\big(t_n, a_n \mid \text{instruction},\ t_1, a_1, \cdots, (o_{n-i}, t_{n-i}, a_{n-i})_{i=1}^{N},\ o_n\big)$$
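
The context construction described in this paragraph can be sketched as below, using text stand-ins for screenshots. Only the `n_recent` most recent previous steps keep their screenshots, plus the current screenshot, while every step's thought and action text is retained. Whether the current screenshot counts toward the limit is an assumption here. `StepRecord`, `build_context`, and the `(kind, payload)` part format are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Part = Tuple[str, str]  # ("text" | "image", payload)


@dataclass
class StepRecord:
    screenshot: str  # stand-in for image data
    thought: str
    action: str


def build_context(instruction: str, steps: List[StepRecord],
                  current_screenshot: str, n_recent: int) -> List[Part]:
    """Interleave history so that only recent steps carry images; all thoughts/actions stay."""
    parts: List[Part] = [("text", f"Instruction: {instruction}")]
    cutoff = max(len(steps) - n_recent, 0)  # steps before this index lose their screenshot
    for i, step in enumerate(steps):
        if i >= cutoff:
            parts.append(("image", step.screenshot))
        parts.append(("text", f"Thought: {step.thought}\nAction: {step.action}"))
    parts.append(("image", current_screenshot))  # the current observation is always included
    return parts
```

Dropping old screenshots but keeping their thought and action text trades visual detail for token budget. Images typically cost far more tokens than the short text that summarizes what the agent did.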

4.2 Enhancing GUI Perception

Improving GUI perception presents several unique challenges: (1) Screenshot Scarcity: while large-scale general scene images are widely available, GUI-specific screenshots are relatively scarce. (2) Information Density and Precision Requirement: GUI images are inherently more information-dense and structured than general scene images, often containing hundreds of elements arranged in complex layouts. Models must not only recognize individual elements but also understand their spatial relationships and functional interactions. Moreover, many elements in GUI images are small (e.g., $10\times10$ pixel icons in a $1920\times1080$ image), which makes them difficult to perceive and localize accurately. Unlike traditional frameworks that rely on separate, modular perception models, native agents address these challenges by directly processing raw GUI screenshots. This lets them scale better by leveraging large-scale, unified datasets and tackle the unique challenges of GUI perception more efficiently.
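
The following ratio shows how small the example icon is relative to the full screenshot:

$$\frac{10 \times 10}{1920 \times 1080} = \frac{100}{2{,}073{,}600} \approx 4.8 \times 10^{-5}$$

Such an icon covers roughly 0.005% of the image area and spans only about 0.5% of its width ($10/1920 \approx 0.0052$).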


Screenshot Collection To address data scarcity and ensure diverse coverage, we built a large-scale dataset comprising screenshots and metadata from websites, apps, and operating systems. Using specialized parsing tools, we automatically extracted rich metadata for each element, such as element type, depth, bounding box, and text content, while rendering the screenshots. Our approach combined automated crawling with human-assisted exploration to capture a wide range of content. We included primary interfaces as well as deeper, nested pages reached through repeated interactions. All data was logged in a structured format, (screenshot, element box, element metadata), to provide comprehensive coverage of diverse interface designs.
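Below is a minimal sketch of one logged (screenshot, element box, element metadata) record, serialized as a JSON line. The field names, the `(left, top, right, bottom)` pixel-box convention, and the JSON encoding are assumptions for illustration. The text does not specify a storage schema.

```python
import json
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass
class ElementMetadata:
    element_type: str   # e.g. "button", "text_field"
    depth: int          # nesting depth in the parsed UI tree
    text: str = ""      # visible text content, if any


@dataclass
class ElementRecord:
    screenshot: str                 # path or ID of the rendered screenshot
    box: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels
    metadata: ElementMetadata


record = ElementRecord("shot_0001.png", (1700, 20, 1860, 60),
                       ElementMetadata("button", 5, "Submit"))
line = json.dumps(asdict(record))   # one JSON object per line (JSONL)
print(line)
```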


We adopt a bottom-up data construction approach, starting from individual elements and progressing to holistic interface understanding. By focusing on small, localized parts of the GUI before integrating them into the broader context, this approach minimizes errors and balances precise component recognition with the ability to interpret complex layouts. Based on the collected screenshot data, we curated five core types of task data (Figure 5):


Element Description To improve the recognition and understanding of specific elements within a GUI, particularly tiny elements, we create detailed, structured descriptions for each element. These descriptions are based on metadata extracted with parsing tools and further synthesized by a VLM. They cover four aspects:

(1) Element Type (e.g., Windows control types): we classify elements (e.g., buttons, text fields, scrollbars) based on visual cues and system information.

(2) Visual Description: the element's appearance, including its shape, color, text content, and style, derived directly from the image.

(3) Position Information: the spatial position of each element relative to others.

(4) Element Function: the element's intended functionality and possible ways of interacting with it.

We train UI-TARS to enumerate all visible elements within a screenshot and generate their element descriptions, conditioned on the screenshot.
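Aspect (3), position relative to other elements, can be bootstrapped from bounding boxes before a VLM rewrites it into fluent text. The rule below compares box centers and reports the dominant axis. It is a hypothetical heuristic, not the procedure used for UI-TARS, and the helper names are illustrative.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)


def center(b: Box) -> Tuple[float, float]:
    return (b[0] + b[2]) / 2, (b[1] + b[3]) / 2


def relative_position(target: Box, ref: Box) -> str:
    """Coarse relation of `target` to `ref` along the axis with the larger offset."""
    tx, ty = center(target)
    rx, ry = center(ref)
    dx, dy = tx - rx, ty - ry
    if abs(dx) >= abs(dy):
        return "to the right of" if dx > 0 else "to the left of"
    return "below" if dy > 0 else "above"  # screen y grows downward


def position_phrases(name: str, elements: Dict[str, Box]) -> List[str]:
    """Describe one element's position relative to every other element."""
    target = elements[name]
    return [f"{name} is {relative_position(target, box)} {other}"
            for other, box in elements.items() if other != name]
```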


Dense Captioning We train UI-TARS to understand the entire interface while maintaining accuracy and minimizing hallucinations. The goal of dense captioning is to provide a comprehensive, detailed description of the GUI screenshot. This description captures not only the elements themselves but also their spatial relationships and the overall layout of the interface. For each recorded element in the screenshot, we first obtain its element description. For embedded images, which often lack detailed metadata, we also generate descriptive captions. We then use a VLM to integrate all image and element descriptions into a cohesive, highly detailed caption that preserves the structure of the GUI layout. During training, UI-TARS is given only the image and is tasked with outputting the corresponding dense caption.
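The text leaves the merging step to a VLM. One plausible preprocessing step, sketched here as an assumption, arranges the per-element descriptions in reading order so that the VLM prompt already reflects the layout. The `row_tol` grouping rule and the `reading_order` helper are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def reading_order(items: List[Tuple[Box, str]], row_tol: int = 10) -> List[str]:
    """Arrange element descriptions top-to-bottom, then left-to-right.

    Elements whose top edge lies within `row_tol` pixels of the first
    element in the current row are treated as the same visual row.
    """
    ordered = sorted(items, key=lambda it: (it[0][1], it[0][0]))
    rows: List[List[Tuple[Box, str]]] = []
    for item in ordered:
        if rows and abs(item[0][1] - rows[-1][0][0][1]) <= row_tol:
            rows[-1].append(item)
        else:
            rows.append([item])
    lines = []
    for row in rows:
        row.sort(key=lambda it: it[0][0])  # left-to-right within a row
        lines.append(" | ".join(desc for _, desc in row))
    return lines
```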


State Transition Captioning While dense captioning provides a comprehensive description of a GUI interface, it does not capture state transitions. In particular, it misses the subtle effects of actions on the interface (e.g., a tiny button being pressed). To address this limitation, we train the model to identify and describe the differences between two consecutive screenshots and to determine whether an action, such as a mouse click or keyboard input, has occurred. We also include screenshot pairs that correspond to non-interactive UI changes (e.g., animations, screen refreshes, or background updates). During training, UI-TARS is presented with a pair of images and tasked with predicting the specific visual changes between them, along with their possible causes. In this way, UI-TARS learns to recognize subtle UI changes, covering both user-initiated actions and non-interactive transitions. This capability is crucial for tasks requiring fine-grained interaction understanding and dynamic state perception.
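Pairs for state transition captioning must distinguish tiny, localized changes from no change at all. A simple pixel-difference check returns the bounding box of the changed pixels. The sketch below assumes the screenshots are available as equally sized grayscale arrays (plain nested lists here). This heuristic is illustrative only and is not described as part of the UI-TARS pipeline.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # grayscale pixels, row-major; stand-in for real screenshots


def changed_region(before: Image, after: Image,
                   threshold: int = 0) -> Optional[Tuple[int, int, int, int]]:
    """Return (left, top, right, bottom) enclosing every pixel whose value
    differs by more than `threshold`, or None if the images match."""
    xs: List[int] = []
    ys: List[int] = []
    for y, (row_a, row_b) in enumerate(zip(before, after)):
        for x, (pa, pb) in enumerate(zip(row_a, row_b)):
            if abs(pa - pb) > threshold:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs) + 1, max(ys) + 1  # right/bottom exclusive
```

A `None` result flags a pair with no visible change. A small box indicates a subtle change, such as a pressed button, that the caption should still mention.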


Question Answering (QA) While dense captioning and element descriptions primarily focus on understanding the layout and elements of a GUI, QA offers a more dynamic and flexible way to combine these tasks with reasoning. We synthesize a diverse set of QA data spanning a broad range of tasks, including interface comprehension, image interpretation, element identification, and relational reasoning. This enhances UI-TARS's capacity to handle queries that involve a higher degree of abstraction or reasoning.


Set-of-Mark (SoM) We also enhance the Set-of-Mark (SoM) prompting ability (Yang et al., 2023b) of UI-TARS. We draw visually distinct markers for parsed elements on the GUI screenshot based on their spatial coordinates. These markers vary in attributes such as form, color, and size, providing clear, intuitive visual cues for the model to locate and identify specific elements. In this way, UI-TARS better associates visual markers with their corresponding elements. We integrate SoM annotations with tasks like dense captioning and QA. For example, the model might be trained to describe an element highlighted by a marker.
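Below is a sketch of how per-element marker attributes might be assigned before drawing. The palette, the sizing rule, and anchoring each mark at the box's top-left corner are assumptions. Rendering the marks onto the screenshot (e.g., with an imaging library) is omitted.

```python
from itertools import product
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels

# Hypothetical style palette; product() yields 4 x 2 = 8 distinct styles.
COLORS = ["red", "blue", "green", "orange"]
SHAPES = ["circle", "square"]


def assign_marks(boxes: List[Box]) -> List[Dict]:
    """Give each parsed element a numbered mark with a (color, shape, size) style."""
    styles = list(product(COLORS, SHAPES))
    marks = []
    for i, (left, top, right, bottom) in enumerate(boxes):
        color, shape = styles[i % len(styles)]  # styles repeat after 8 marks
        # Scale the marker with the element, but never below 12 px.
        size = max(12, min(right - left, bottom - top) // 2)
        marks.append({"id": i + 1, "anchor": (left, top),
                      "color": color, "shape": shape, "size": size})
    return marks
```

Numeric ids keep the marks unambiguous even after the style palette cycles. A captioning or QA target can therefore refer to "mark 2" directly.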


4.3 Unified Action Modeling and Grounding


The de facto approach to improving action capabilities is to train the model to mimic human behavior during task execution, i.e., behavior cloning (Bain & Sammut, 1995). Individual actions are discrete and isolated, but real-world agent tasks inherently involve executing a sequence of actions, so the model must be trained on multi-step trajectories. This approach allows the model to learn not only how to perform individual actions but also how to sequence them effectively (system-1 thinking).
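In its standard maximum-likelihood form, behavior cloning over a demonstrated trajectory of $T$ steps minimizes the negative log-likelihood of the demonstrated actions. The expression below is the generic objective. Here $c_{n}$ stands for the model's context at step $n$: the instruction, the retained history, and the current observation $o_{n}$. This section does not state the exact loss used for UI-TARS.

$$\mathcal{L}_{\mathrm{BC}}(\theta) = -\sum_{n=1}^{T} \log P_{\theta}\left(a_{n} \mid c_{n}\right)$$

Because $c_{n}$ includes the earlier steps of the same trajectory, the model learns each action in the context of the actions that preceded it.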


Unified Action Space Similar to previous works, we design a common action space that standardizes semantically equivalent actions across devices (Table 1), such as "click" on Windows versus "tap" on mobile. This enables knowledge transfer across platforms. Because of device-specific differences, we also introduce optional actions tailored to each platform. This ensures the model can handle the unique requirements of each device while maintaining consistency across scenarios. We also define two terminal actions: Finished(), indicating task completion, and CallUser(), invoked in cases requiring user intervention, such as login or authentication.
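Below is a minimal sketch of how raw action verbs from different sources might be mapped onto the unified names and checked against each platform's allowed actions. The action sets follow the grouping in Table 1. The `SYNONYMS` table and the `normalize` helper are hypothetical.

```python
from typing import Dict, Set

# Action names grouped as in Table 1.
SHARED: Set[str] = {"Click", "Drag", "Scroll", "Type", "Wait", "Finished"}
PLATFORM_ONLY: Dict[str, Set[str]] = {
    "desktop": {"CallUser", "Hotkey", "LeftDouble", "RightSingle"},
    "mobile": {"LongPress", "PressBack", "PressHome", "PressEnter"},
}

# Hypothetical raw verbs from heterogeneous sources -> unified names.
SYNONYMS: Dict[str, str] = {
    "click": "Click",
    "tap": "Click",          # mobile "tap" and desktop "click" share one action
    "input": "Type",
    "type": "Type",
    "long_press": "LongPress",
    "back": "PressBack",
    "home": "PressHome",
}


def normalize(verb: str, platform: str) -> str:
    """Map a raw verb to its unified name and reject actions the platform lacks."""
    name = SYNONYMS.get(verb.lower(), verb)
    allowed = SHARED | PLATFORM_ONLY[platform]
    if name not in allowed:
        raise ValueError(f"{name!r} is not available on {platform}")
    return name
```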


Table 1: Unified action space for different platforms


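| Environment | Action | Definition |
|---|---|---|
| Shared | Click(x, y) | Clicks at coordinates (x, y). |
| | Drag(x1, y1, x2, y2) | Drags from (x1, y1) to (x2, y2). |
| | Scroll(x, y, direction) | Scrolls at (x, y) in the given direction. |
| | Type(content) | Types the specified content. |
| | Wait() | Pauses for a brief moment. |
| | Finished() | Marks the task as completed. |
| Desktop | CallUser() | Requests user intervention. |
| | Hotkey(key) | Presses the specified hotkey. |
| | LeftDouble(x, y) | Double-clicks at (x, y). |
| | RightSingle(x, y) | Right-clicks at (x, y). |
| Mobile | LongPress(x, y) | Long-presses at (x, y). |
| | PressBack() | Presses the "back" button. |
| | PressHome() | Presses the "home" button. |
| | PressEnter() | Presses the "enter" key. |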
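Every entry in Table 1 has the form `Name(arg, ...)`, so a model output written in that notation can be split into a name and its arguments with a small parser. This sketch assumes the model emits actions exactly as written in the table, which this section does not specify. The naive comma split is a simplification, so `Type` keeps its whole content as a single argument.

```python
import re
from typing import List, Tuple

# Name followed by a parenthesized argument list, e.g. "Scroll(0.5, 0.5, down)".
ACTION_RE = re.compile(r"^\s*([A-Za-z]+)\((.*)\)\s*$")


def parse_action(text: str) -> Tuple[str, List[str]]:
    """Split an action string into its name and raw (string) arguments."""
    m = ACTION_RE.match(text)
    if m is None:
        raise ValueError(f"not an action: {text!r}")
    name, arg_str = m.group(1), m.group(2).strip()
    if not arg_str:
        return name, []                 # e.g. Wait(), Finished()
    if name == "Type":
        return name, [arg_str]          # free text may itself contain commas
    return name, [a.strip() for a in arg_str.split(",")]
```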

Table 2: Basic statistics of the grounding and multi-step action trace data.

| Data Type | Platform | Grounding (Ele.) | Grounding (Ele./Image) | MultiStep |
|---|---|---|---|---|
| Open source | Web | 14.8M | | |
| | Mobile | 2.5M | | |
| | Desktop | 1.1M | | |
| Ours | | * | | |

Action Trace Collection A significant challenge in training models for task execution lies in the limited availability of multi-step trajectory data, which has historically been under-recorded and sparse. To address this issue, we rely on two primary data sources: (1) our annotated dataset: we develop a specialized annotation tool to capture user actions across various software and websites within PC environments. The annotation process begins with the creation of initial task instructions, which are reviewed and refined by annotators to ensure clarity and alignment with the intended goals. Annotators then execute the tasks, ensuring that their actions fulfill the specified requirements. Each task undergoes rigorous quality filtering; and (2) open-source data: we also integrate multiple existing datasets (MM-Mind2Web (Zheng et al., 2024b), GUIAct (Chen et al., 2024c), AITW (Rawles et al., 2023), AITZ (Zhang et al., 2024d), Android Control (Li et al., 2024c), GUI-Odyssey (Lu et al., 2024a), AMEX (Chai et al., 2024)) and standardize them into a unified action space format. This involves reconciling varying action representations into a consistent template, allowing for seamless integration with the annotated data. In Table 2, we list the basic statistics of our action trace data.


Improving Grounding Ability Grounding, the ability to accurately locate and interact with specific GUI elements, is critical for actions like clicking or dragging. Unlike multi-step action data, grounding data is easier to scale because it primarily relies on the visual and position properties of elements, which can be efficiently synthesized or extracted (Hong et al., 2024; Gou et al., 2024a; Wu et al., 2024b). We train UI-TARS to directly predict the coordinates of the elements it needs to interact with. This involves associating each element in a GUI with its spatial coordinates and metadata.


As described in $\S\,4.2$, we collected screenshots and used specialized parsing tools to extract metadata, including element type, depth, bounding boxes, and text content. For elements recorded with bounding boxes, we averaged the corner coordinates to derive a single point representing the center of the bounding box. To construct training samples, each screenshot is paired with individual element descriptions derived from metadata. The model is tasked with outputting relative coordinates normalized to the dimensions of the screen, ensuring consistency across devices with varying resolutions. For example, given the description "red button in the top-right corner labeled Submit", the model predicts the normalized coordinates of that button. This direct mapping between descriptions and coordinates enhances the model's ability to understand and ground visual elements accurately.
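The two preprocessing steps described above, averaging the box corners to get a center point and dividing by the screen size, can be written as follows. The [0, 1] output range and the helper names are assumptions, and the example box is hypothetical.

```python
from typing import Tuple


def box_center(left: float, top: float, right: float, bottom: float) -> Tuple[float, float]:
    # Averaging the corners (left, top) and (right, bottom) gives the box center.
    return (left + right) / 2, (top + bottom) / 2


def normalize_point(x: float, y: float, width: int, height: int) -> Tuple[float, float]:
    # Dividing by the screen size maps the same target to the same value
    # regardless of resolution.
    return x / width, y / height


cx, cy = box_center(1700, 20, 1860, 60)   # a button near the top-right corner
print(normalize_point(cx, cy, 1920, 1080))
```

Suppose the same button is rendered on a 960x540 screen at (850, 10, 930, 30). It maps to the same normalized point, which is the resolution invariance the text relies on.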


To further augment our dataset, we integrated open-source data (Seeclick (Cheng et al., 2024), GUIAct (Chen et al., 2024c), MultiUI (Liu et al., 2024b), Rico-SCA (Li et al., 2020a), Widget Caption (Li et al., 2020b), MUG (Li et al., 2024b), Rico Icon (Sunkara et al., 2022), CLAY (Li et al., 2022), UIBERT (Bai et al., 2021), OmniACT (Kapoor et al., 2024), AutoGUI (Anonymous, 2024), OS-ATLAS (Wu et al., 2024b)) and standardized it into our unified action space format. We provide basic statistics of the grounding data used for training in Table 2. This combined dataset enables UI-TARS to achieve high-precision grounding, significantly improving its effectiveness in actions such as clicking and dragging.


4.4 Infusing System-2 Reasoning


Relying solely on system-1 intuitive decision-making is insufficient for complex scenarios and ever-changing environments. We therefore aim for UI-TARS to incorporate system-2 reasoning, flexibly planning action steps by understanding the global structure of tasks.


Reasoning Enrichment with GUI Tutorials The first step focuses on reasoning enrichment, where we leverage publicly available tutorials that interweave text and images to demonstrate detailed user interactions across diverse software and web environments. These tutorials provide an ideal source for establishing foundational GUI knowledge while introducing logical reasoning patterns inherent to task execution.
