AWQ: ACTIVATION-AWARE WEIGHT QUANTIZATION FOR ON-DEVICE LLM COMPRESSION AND ACCELERATION

Ji Lin * 1 Jiaming Tang * 1 2 Haotian Tang † 1 Shang Yang † 1 Wei-Ming Chen 3 Wei-Chen Wang 1 Guangxuan Xiao 1 Xingyu Dang 1 4 Chuang Gan 5 6 Song Han 1 3 https://github.com/mit-han-lab/llm-awq

ABSTRACT

Large language models (LLMs) have transformed numerous AI applications. On-device LLM is becoming increasingly important: running LLMs locally on edge devices can reduce the cloud computing cost and protect users’ privacy. However, the astronomical model size and the limited hardware resources pose significant deployment challenges. We propose Activation-aware Weight Quantization (AWQ), a hardware-friendly approach for LLM low-bit weight-only quantization. AWQ finds that not all weights in an LLM are equally important. Protecting only 1% of the weights, the salient ones, can greatly reduce quantization error. To identify salient weight channels, we should refer to the activation distribution, not the weights. To avoid hardware-inefficient mixed-precision quantization, we mathematically derive that scaling up the salient channels can reduce the quantization error. AWQ employs an equivalent transformation to scale the salient weight channels to protect them. The scale is determined by collecting the activation statistics offline. AWQ does not rely on any backpropagation or reconstruction, so it generalizes to different domains and modalities without overfitting the calibration set. AWQ outperforms existing work on various language modeling and domain-specific benchmarks (coding and math). Thanks to better generalization, it achieves excellent quantization performance for instruction-tuned LMs and, for the first time, multi-modal LMs. Alongside AWQ, we implement TinyChat, an efficient and flexible inference framework tailored for 4-bit on-device LLM/VLMs. With kernel fusion and platform-aware weight packing, TinyChat offers more than $3\times$ speedup over the Hugging Face FP16 implementation on both desktop and mobile GPUs. It also democratizes the deployment of the 70B Llama-2 model on mobile GPUs.

1 INTRODUCTION

Deploying large language models (LLMs) directly on edge devices is crucial. On-device usage eliminates delays caused by sending data to a cloud server and enables LLMs to operate offline, which is beneficial for real-time applications like virtual assistants, chatbots, and autonomous vehicles. The operational costs associated with maintaining and scaling centralized cloud infrastructure can also be reduced. On-device LLMs also enhance data security by keeping sensitive information local, reducing the chance of data breaches. LLMs, grounded in transformer-based architectures (Vaswani et al., 2017), have gained significant attention for their impressive performance across diverse benchmarks (Brown et al., 2020; Zhang et al., 2022; Touvron et al., 2023a; Scao et al., 2022). However, the large model size leads to high serving costs. For example, GPT-3 has 175B parameters, which is 350GB in FP16, while the latest B200 GPU only has 192GB of memory, let alone edge devices.

Figure 1. We introduce AWQ, a versatile weight quantization method for LLMs. To implement AWQ, we developed TinyChat to deploy 4-bit quantized LLMs onto various edge platforms, achieving a $\mathbf{3{-}4\times}$ performance boost compared to FP16. Notably, we have also built a TinyChat computer, powered by TinyChat, which contains an NVIDIA Jetson Orin Nano with only 8GB of memory and 15W power consumption. Demo: https://youtu.be/z91a8DrfgEw.

Low-bit weight quantization for LLMs can significantly reduce the memory footprint of on-device LLM inference but is hard. Quantization-aware training (QAT) is not efficient due to the high training cost, while post-training quantization (PTQ) suffers from large accuracy degradation under a low-bit setting. The closest work is GPTQ (Frantar et al., 2022), which uses second-order information to perform error compensation. However, it may overfit the calibration set during reconstruction, distorting the learned features on out-of-distribution domains (Figure 8), which is problematic since LLMs are generalist models.

In this paper, we propose Activation-aware Weight Quantization (AWQ), a hardware-friendly low-bit weight-only quantization method for LLMs. Our method is based on the observation that weights are not equally important for LLMs’ performance. There is a small fraction (0.1%-1%) of salient weights; skipping the quantization of these salient weights significantly reduces the quantization loss (Table 1). To find the salient weight channels, the insight is that we should refer to the activation distribution instead of the weight distribution, even though we are doing weight-only quantization: weight channels corresponding to larger activation magnitudes are more salient since they process more important features. To avoid the hardware-inefficient mixed-precision implementation, we analyze the error from weight quantization and derive that scaling up the salient channels can reduce their relative quantization error (Equation 2). Following this intuition, we design a per-channel scaling method to automatically search for the optimal scaling that minimizes the quantization error under full-weight quantization. AWQ does not rely on any backpropagation or reconstruction, so it can well preserve LLMs’ generalization ability on various domains and modalities without overfitting to the calibration set.

To implement AWQ, we designed TinyChat, an efficient inference framework that converts the theoretical memory savings of 4-bit LLMs into measured speedup. Our framework significantly speeds up linear layers through on-the-fly dequantization. We also take advantage of efficient 4-bit weight packing and kernel fusion to minimize the inference overhead (e.g., intermediate DRAM access and kernel launch overhead), so that we can better realize the speedup from quantizing the weights to 4 bits, even though the computer is byte-aligned.

Experiments show that AWQ outperforms existing work on various tasks for different model families (e.g., LLaMA (Touvron et al., 2023a), OPT (Zhang et al., 2022)) and model sizes. Thanks to better generalization, it also achieves good quantization performance for instruction-tuned LMs (e.g., Vicuna) and, for the first time, multi-modal LMs (OpenFlamingo (Awadalla et al., 2023)). TinyChat further translates the $\sim 4\times$ lower memory footprint to measured speedup. On desktop, laptop and mobile GPUs, we consistently observe a $3.2{-}3.3\times$ average speedup compared to the FP16 implementation by Hugging Face across a diverse spectrum of LLMs. Furthermore, it facilitates effortless deployment of the Llama-2-70B model on a single NVIDIA Jetson Orin with 64GB of memory. It also democratizes 13-billion-parameter LLMs at an interactive pace of 30 tokens/second on a laptop RTX 4070 GPU with only 8GB of memory. AWQ has been widely adopted by industry and the open-source community: Hugging Face Transformers, NVIDIA TensorRT-LLM, Microsoft DirectML, Google Vertex AI, Intel Neural Compressor, Amazon SageMaker, AMD, FastChat, vLLM, and LMDeploy, and it makes Falcon-180B deployable on a single H200 GPU.

2 RELATED WORK

Model quantization methods. Quantization reduces the bit-precision of deep learning models (Han et al., 2016; Jacob et al., 2018; Nagel et al., 2019; Wang et al., 2019; Nagel et al., 2020; Lin et al., 2020), which helps to reduce the model size and accelerate inference. Quantization techniques generally fall into two categories: quantization-aware training (QAT, which relies on backpropagation to update the quantized weights) (Bengio et al., 2013; Gholami et al., 2021; Nagel et al., 2021; Choi et al., 2018) and post-training quantization (Jacob et al., 2018; Nagel et al., 2019; 2020) (PTQ, usually training-free). QAT methods cannot easily scale up to large models like LLMs. Therefore, PTQ methods are usually used to quantize LLMs.

Quantization of LLMs. Two settings are commonly studied for LLM quantization: (1) W8A8 quantization, where both activations and weights are quantized to INT8 (Dettmers et al., 2022; Xiao et al., 2022; Yao et al., 2022; Wei et al., 2022a; 2023); (2) Low-bit weight-only quantization (e.g., W4A16), where only weights are quantized into low-bit integers (Frantar et al., 2022; Dettmers & Zettlemoyer, 2022; Sheng et al., 2023; Park et al., 2022). We focus on the second setting in this work since it not only reduces the hardware barrier (requiring a smaller memory size) but also speeds up token generation (remedying the memory-bound workload). Apart from the vanilla round-to-nearest baseline (RTN), GPTQ (Frantar et al., 2022) is the closest to our work. However, the reconstruction process of GPTQ leads to overfitting to the calibration set and may not preserve the generalist abilities of LLMs for other modalities and domains. It also requires a reordering trick to work for some models (e.g., LLaMA-7B (Touvron et al., 2023a) and OPT-66B (Zhang et al., 2022)). Apart from quantization methods designed for general-purpose hardware, SpAtten (Wang et al., 2020) designs a progressive approach to gradually increase the number of bits used in softmax calculation.

System support for low-bit quantized LLMs. Low-bit quantized LLMs have been a popular setting to reduce inference costs. Several systems provide support for achieving a practical speedup. GPTQ (Frantar et al., 2022) provides INT3 kernels for OPT models, and GPTQ-for-LLaMA extends kernel support for INT4 reordered quantization with the help of Triton (Tillet et al., 2019). FlexGen (Sheng et al., 2023), llama.cpp* and exllama† perform group-wise INT4 quantization to reduce I/O costs and offloading. FasterTransformer implements $\mathrm{FP16}\times\mathrm{INT4}$ GEMM for weight-only per-tensor quantization but does not support group quantization. LUT-GEMM (Park et al., 2022) performs bitwise computation on GPU CUDA cores with the help of lookup tables. Our concurrent work, MLC-LLM (MLCTeam, 2023), offers strong results on multiple edge CPU and GPU platforms thanks to the powerful TVM (Chen et al., 2018; Feng et al., 2023) backend.

Figure 2. We observe that we can find 1% of the salient weights in LLMs based on the activation distribution (middle). Keeping the salient weights in FP16 can significantly improve the quantized performance (PPL from 43.2 (left) to 13.0 (middle)), but the mixed-precision format is not hardware-efficient. We follow the activation-awareness principle and propose AWQ (right). AWQ performs per-channel scaling to protect the salient weights and reduce quantization error. We measure the perplexity of OPT-6.7B under INT3-g128 quantization.

3 AWQ: ACTIVATION-AWARE WEIGHT QUANTIZATION

Quantization maps a floating-point number into lower-bit integers. It is an effective method to reduce the model size and inference costs of LLMs (Dettmers et al., 2022; Frantar et al., 2022; Yao et al., 2022; Xiao et al., 2022). In this section, we first propose a weight-only quantization method that improves accuracy without training/regression by protecting more “important” weights. We then develop a data-driven method to search for the optimal scaling that reduces quantization errors (Figure 2).

3.1 Improving LLM Quantization by Preserving 1% Salient Weights

We observe that the weights of LLMs are not equally important: there is a small fraction of salient weights that are much more important for LLMs’ performance compared to others. Skipping the quantization of these salient weights can help bridge the performance degradation due to the quantization loss without any training or regression (Figure 2(b)). To verify the idea, we benchmark the performance of quantized LLMs when skipping part of the weight channels in Table 1. We measure the performance of INT3 quantized models while keeping some ratio of weight channels in FP16. A widely used method to determine the importance of weights is to look at their magnitude or $L_{2}$-norm (Han et al., 2015; Frankle & Carbin, 2018). But we find that skipping the weight channels with large norm (i.e., FP16% (based on W)) does not significantly improve the quantized performance, leading to a similar marginal improvement as random selection. Interestingly, selecting weights based on activation magnitude can significantly improve the performance despite keeping only 0.1%-1% of channels in FP16. We hypothesize that the input features with larger magnitudes are generally more important. Keeping the corresponding weights in FP16 can preserve those features, which contributes to better model performance.

Limitations: Although keeping 0.1% of weights in FP16 can improve the quantized performance without a noticeable increase in model size (measured in total bits), such a mixed-precision data type will make the system implementation difficult. We need to come up with a method to protect the important weights without actually keeping them as FP16.

3.2 Protecting Salient Weights by Activation-aware Scaling

We propose an alternative method to reduce the quantization error of the salient weight by per-channel scaling, which does not suffer from the hardware inefficiency issue.

Analyzing the quantization error.

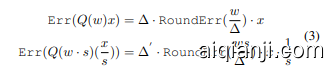

We start by analyzing the error from weight-only quantization. Consider a group/block of weights $\mathbf{w}$; the linear operation can be written as $y = \mathbf{w}\mathbf{x}$, and the quantized counterpart is $y = Q(\mathbf{w})\mathbf{x}$. Specifically, the quantization

| PPL ↓ | FP16 | RTN (w3-g128) | 0.1% FP16 (based on act.) | 1% FP16 (based on act.) | 3% FP16 (based on act.) | FP16% (based on W) | FP16% (random) |
|---|---|---|---|---|---|---|---|
| OPT-1.3B | 14.62 | 119.00 | 25.03 | 16.91 | 16.68 | | |
| OPT-6.7B | 10.86 | 23.54 | 11.58 | 11.39 | 11.36 | | |
| OPT-13B | 10.13 | 46.04 | 10.51 | 10.43 | 10.42 | | |

Table 1. Keeping a small fraction of weights (0.1%-1%) in FP16 significantly improves the performance of the quantized models over round-to-nearest (RTN). It is only effective when we select the important weights in FP16 by looking at the activation distribution instead of the weight distribution. We highlight results with a decent perplexity in green. We used INT3 quantization with a group size of 128 and measured the WikiText perplexity (↓).

| OPT-6.7B | s=1 | s=1.25 | s=1.5 | s=2 | s=4 |
|---|---|---|---|---|---|
| Proportion of $\Delta' \neq \Delta$ | 0% | 2.8% | 4.4% | 8.2% | 21.2% |
| Average $\Delta'/\Delta$ | 1 | 1.005 | 1.013 | 1.038 | |
| Average $\frac{\Delta'}{\Delta}\cdot\frac{1}{s}$ | 1 | 0.804 | 0.676 | 0.519 | 0.303 |
| Wiki-2 PPL | 23.54 | 12.87 | 12.48 | 11.92 | 12.36 |

Table 2. Statistics when multiplying the 1% salient channels by $s > 1$. Scaling up the salient channels significantly improves the perplexity (23.54 to 11.92). As $s$ grows, the percentage of changed $\Delta$ increases, and the error reduction rate for salient channels also increases. However, the best perplexity is achieved at $s=2$, since further increasing $s$ will increase the quantization error for non-salient channels.

Table 3. AWQ protects salient weights and reduces quantization error by using a scaling-based method. It consistently outperforms round-to-nearest quantization (RTN) and achieves performance comparable to mixed-precision (1% FP16) while being more hardware-friendly. We use 3-bit quantization with group size 128.

| OPT (PPL ↓) | 1.3B | 2.7B | 6.7B | 13B | 30B |
|---|---|---|---|---|---|
| FP16 | 14.62 | 12.47 | 10.86 | 10.13 | 9.56 |
| RTN | 119.47 | 298.00 | 23.54 | 46.04 | 18.80 |
| 1% FP16 | 16.91 | 13.69 | 11.39 | 10.43 | 9.85 |
| s=2 | 18.63 | 14.94 | 11.92 | 10.80 | 10.32 |
| AWQ | 16.32 | 13.58 | 11.39 | 10.56 | 9.77 |

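function is defined as:

$$Q(\mathbf{w}) = \Delta \cdot \mathrm{Round}\!\left(\frac{\mathbf{w}}{\Delta}\right), \qquad \Delta = \frac{\max(|\mathbf{w}|)}{2^{N-1}}, \tag{1}$$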

where $N$ is the number of quantization bits, and $\Delta$ is the quantization scaler determined by the absolute maximum value. Now consider a weight element $w \in \mathbf{w}$: if we multiply $w$ by $s > 1$ and inversely scale $x$, we have $Q(w \cdot s)(x/s)$, which is:

$$Q(w \cdot s) \cdot \frac{x}{s} = \Delta' \cdot \mathrm{Round}\!\left(\frac{w s}{\Delta'}\right) \cdot x \cdot \frac{1}{s}, \tag{2}$$

where $\Delta'$ is the new quantization scaler after applying $s$. We empirically find that: (1) The expected error from $\mathrm{Round}(\cdot)$ (denoted as $\mathrm{RoundErr}(\cdot)$) does not change: since the round function maps a floating-point number to an integer, the error is roughly uniformly distributed over $[0, 0.5]$, resulting in an average error of 0.25; i.e., $\mathrm{RoundErr}(\cdot) \sim 0.25$. (2) Scaling up a single element $w$ usually does not change the maximum value of the group $\mathbf{w}$. Therefore we have $\Delta' \approx \Delta$. (3) As $\Delta$ and $x$ are represented in FP16, they have no quantization error. Consequently, the quantization error from Equations 1 and 2 can be expressed as

$$\mathrm{Err}\big(Q(w)\,x\big) = \Delta \cdot \mathrm{RoundErr}\!\left(\frac{w}{\Delta}\right) \cdot x, \qquad \mathrm{Err}\!\left(Q(w \cdot s)\,\frac{x}{s}\right) = \Delta' \cdot \mathrm{RoundErr}\!\left(\frac{w s}{\Delta'}\right) \cdot x \cdot \frac{1}{s}. \tag{3}$$

The ratio of the new error to the original error is $\frac{\Delta'}{\Delta} \cdot \frac{1}{s}$. Given $\Delta' \approx \Delta$ and $s > 1$, the relative error is smaller for the salient weight $w$.
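
The error-ratio argument can be checked numerically with a small sketch. It assumes a round-to-nearest quantizer of the form $\Delta \cdot \mathrm{Round}(w/\Delta)$ with $\Delta = \max(|\mathbf{w}|)/2^{N-1}$, omits clamping to the integer range, and sets $x = 1$ because $x$ is a common factor in both errors. The helper name `mean_abs_error` and the chosen numbers are illustrative only.

```python
def mean_abs_error(weights, delta, s=1.0):
    """Average |Q(w*s)/s - w| for a fixed group scale delta (x is set to 1)."""
    errors = [abs(delta * round(w * s / delta) / s - w) for w in weights]
    return sum(errors) / len(errors)


n_bits = 3
group_max = 0.8                          # largest |w| in the group, which sets delta
delta = group_max / 2 ** (n_bits - 1)    # 0.2 for INT3

# Sweep of candidate weight values. With s = 2 the scaled values stay within
# group_max, so the group maximum, and therefore delta, is unchanged.
weights = [i / 1000 for i in range(1, 401)]

err_plain = mean_abs_error(weights, delta, s=1.0)
err_scaled = mean_abs_error(weights, delta, s=2.0)
ratio = err_scaled / err_plain
print(f"s=1: {err_plain:.4f}  s=2: {err_scaled:.4f}  ratio: {ratio:.2f}")
```

Under these assumptions the ratio should come out close to $1/s$. Python's built-in `round` rounds ties to the nearest even integer, which does not affect the magnitude of the error at a tie.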

To verify the idea, we multiply the 1% salient channels by $s > 1$ for the OPT-6.7B model and measure the change in $\Delta$ for each group in Table 2. We find that scaling up the salient channels is quite effective: the perplexity improves from 23.54 for $s = 1$ (simply RTN) to 11.92 for $s = 2$. As $s$ grows, the percentage of changed $\Delta$ generally gets larger, but the percentage is still quite small for $s < 2$ (less than 5%); the relative error for the salient channels continues to shrink as $s$ increases. Nonetheless, the best PPL actually appears at $s = 2$. This is because a very large $s$ increases the relative error for the non-salient channels when $\Delta$ increases (the error of non-salient channels is amplified by $\frac{\Delta'}{\Delta}$, and the ratio is larger than 1 for 21.2% of the channels under $s = 4$), which can damage the model’s overall accuracy. Therefore, we also need to consider the error from non-salient channels when protecting salient ones.

Searching to scale. To consider both salient and non-salient weights, we choose to automatically search for an optimal (per input channel) scaling factor that minimizes the output difference after quantization for a certain layer.

(a) Generation stage is slower (b) Generation stage is bounded by memory bandwidth (c) Weight loading is more expensive

Figure 3. Bottleneck analysis for Llama-2-7B on NVIDIA RTX 4090. Left: In on-device LLM applications, generation stage is much slower than the context stage. Middle: The generation stage is memory bound and has low arithmetic intensity. W4A16 quantization can effectively improve the arithmetic intensity by $4\times$ . Right: The amount of weight access is orders of magnitude larger than the amount of activation access. Thus, weight-only quantization is more effective for on-device LLMs.

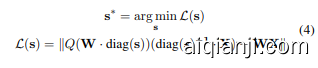

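Formally, we want to optimize the following objective:

$$\mathbf{s}^{*} = \arg\min_{\mathbf{s}} \mathcal{L}(\mathbf{s}), \qquad \mathcal{L}(\mathbf{s}) = \left\lVert Q\big(\mathbf{W} \cdot \mathrm{diag}(\mathbf{s})\big)\big(\mathrm{diag}(\mathbf{s})^{-1} \cdot \mathbf{X}\big) - \mathbf{W}\mathbf{X} \right\rVert. \tag{4}$$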

Here $Q$ is the weight quantization function (e.g., INT3/INT4 quantization with group size 128), $\mathbf{W}$ is the original weights in FP16, and $\mathbf{X}$ is the input features cached from a small calibration set (we take a small calibration set from the pre-training dataset in order not to overfit to a specific task). $\mathbf{s}$ is a per-(input) channel scaling factor; $\mathbf{s}^{-1}\cdot\mathbf{X}$ can usually be fused into the previous operator (Wei et al., 2022b; Xiao et al., 2022). Since the quantization function is not differentiable, we are not able to directly optimize the problem with vanilla backpropagation. There are some techniques relying on approximated gradients (Bengio et al., 2013; Esser et al., 2019), which we found still suffer from unstable convergence.

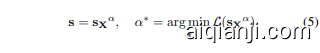

To make the process more stable, we define a search space for the optimal scale by analyzing the factors that will affect the choice of scaling factor. As shown in the last section, the saliency of weight channels is actually determined by the activation scale (thus “activation-awareness”). Therefore, we use a very simple search space:

$$\mathbf{s} = \mathbf{s}_{\mathbf{X}}^{\alpha}, \qquad \alpha^{*} = \arg\min_{\alpha} \mathcal{L}\big(\mathbf{s}_{\mathbf{X}}^{\alpha}\big), \tag{5}$$

where $\mathcal{L}$ denotes the objective above.

$\mathbf{s}_{\mathbf{X}}$ is the average magnitude of activation (per-channel), and we use a single hyper-parameter $\alpha$ to balance between the protection of salient and non-salient channels. We can find the best $\alpha$ by a fast grid search over the interval $[0,1]$ (0 means we do not scale; 1 corresponds to the most aggressive scaling in our search space). We further apply weight clipping to minimize the mean squared error (MSE) of quantization. We provide an ablation study on OPT models under INT3-g128 quantization in Table 5; AWQ consistently outperforms round-to-nearest quantization (RTN) and achieves performance comparable to mixed-precision (1% FP16) while being more hardware-friendly.
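
The grid search over $\alpha$ can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the released AWQ implementation: it uses an unclamped round-to-nearest quantizer per group of input channels, a mean squared output difference as the loss, no weight clipping, and random toy data. All function names (`quantize_row`, `linear`, `awq_loss`, `search_alpha`) are hypothetical.

```python
import random


def quantize_row(row, n_bits, group_size):
    """Round-to-nearest per group: delta * round(w / delta), delta = max|w| / 2^(N-1)."""
    out = []
    for g in range(0, len(row), group_size):
        group = row[g:g + group_size]
        delta = max(abs(v) for v in group) / 2 ** (n_bits - 1)
        if delta == 0.0:
            delta = 1.0  # all-zero group: any scale reproduces it exactly
        out.extend(delta * round(v / delta) for v in group)
    return out


def linear(W, x):
    """y = W x for one input vector x; W is a list of output rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def awq_loss(W, X, s, n_bits, group_size):
    """Mean squared difference between Q(W diag(s)) (diag(s)^-1 x) and W x."""
    Wq = [quantize_row([w * si for w, si in zip(row, s)], n_bits, group_size)
          for row in W]
    total = 0.0
    for x in X:
        ref = linear(W, x)
        out = linear(Wq, [v / si for v, si in zip(x, s)])
        total += sum((a - b) ** 2 for a, b in zip(out, ref))
    return total / len(X)


def search_alpha(W, X, n_bits=3, group_size=8, n_grid=20):
    """Grid search over alpha in [0, 1] with scales s = s_X ** alpha."""
    n_in = len(W[0])
    # s_X: mean activation magnitude per input channel.
    s_x = [sum(abs(x[c]) for x in X) / len(X) for c in range(n_in)]
    best = None
    for i in range(n_grid + 1):
        alpha = i / n_grid
        s = [max(m, 1e-8) ** alpha for m in s_x]
        loss = awq_loss(W, X, s, n_bits, group_size)
        if best is None or loss < best[1]:
            best = (alpha, loss, s)
    return best


random.seed(0)
n_out, n_in = 8, 16
W = [[random.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
X = [[random.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(32)]
for x in X:
    x[3] *= 20.0  # make one input channel salient

rtn_loss = awq_loss(W, X, [1.0] * n_in, n_bits=3, group_size=8)
alpha, loss, scales = search_alpha(W, X, n_bits=3, group_size=8)
print(f"RTN loss {rtn_loss:.4f}, best alpha {alpha:.2f}, searched loss {loss:.4f}")
```

Because $\alpha = 0$ gives $\mathbf{s} = \mathbf{1}$, plain RTN is always one of the candidates, so the searched loss can never exceed the RTN loss on the calibration data. Dividing the input by `s` inside `awq_loss` stands in for fusing $\mathbf{s}^{-1}$ into the previous operator.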

Advantages. Our method does not rely on any regression (Frantar et al., 2022) or backpropagation, which is required by many quantization-aware training methods. It has minimal reliance on the calibration set since we only measure the average magnitude per channel, thus preventing overfitting (Figure 8). Therefore, our method requires less data for the quantization process and can preserve LLMs’ knowledge outside of the calibration set’s distribution. See Section 5.3 for more details.

4 TINYCHAT: MAPPING AWQ ONTO EDGE PLATFORMS

AWQ can substantially reduce the size of LLMs. However, converting the theoretical memory savings from W4A16 (4-bit weight, 16-bit activation) quantization into measured speedup is non-trivial. Alternative W8A8 quantization methods, such as SmoothQuant (Xiao et al., 2022), maintain the same data precision for both storage and computation. This allows the dequantization procedure to be seamlessly integrated into the computation kernel’s epilogue. On the other hand, W4A16 quantization employs different data types for memory access and computation. As a result, its dequantization must be incorporated into the primary computation loop for optimal performance, posing implementation challenges. To tackle this, we introduce TinyChat: a nimble system for AWQ model inference. It has a PyTorch frontend and a backend that harnesses device-specific instruction sets (e.g., CUDA/PTX, Neon, AVX).

4.1 Why AWQ Helps Accelerate On-Device LLMs

To understand the acceleration opportunities in quantized LLMs on the edge, we start by profiling the latency breakdown of the LLaMA-7B (Touvron et al., 2023a) model on an RTX 4090 GPU. We adopt an inference batch size of 1, catering for edge use cases, and implement the model in FP16 with NVIDIA FasterTransformer.

Context vs generation latency. As in Figure 3(a), it takes $310\,\mathrm{ms}$ to generate 20 tokens, while summarizing a prompt with 200 tokens only takes $10\,\mathrm{ms}$. Consequently, the generation phase is substantially slower than the context stage, particularly for on-device interactive applications.

Figure 4. SIMD-aware weight packing for ARM NEON with 128-bit SIMD units. Original weights are reordered and packed to align with the bit width so that the weights can be unpacked into bytes at runtime using AND and shift bitwise operations with a 128-bit mask.

Generation stage is memory-bound. To accelerate the generation phase, we conduct a roofline analysis in Figure 3(b). The 4090 GPU has a peak computation throughput of 165 TFLOPS and a memory bandwidth of 1TB/s. Therefore, any workload with arithmetic intensity (the ratio of FLOPs to memory access) less than 165 is memory-bound on 4090 GPUs. Notably, when executed in FP16, the generation stage for on-device LLMs has arithmetic intensity $\approx 1$. This underscores the memory-bound nature of the workload. Since the FLOPs of a given model are fixed, the only way to improve the peak performance is to reduce the total amount of memory traffic. AWQ reduces the weight memory by four times.

Weight access dominates memory traffic. We therefore further break down the memory access for weight and activation in Figure 3(c). Clearly, weight access dominates the memory traffic for on-device LLMs. Quantizing the model weights to 4-bit integers will increase the arithmetic intensity to approximately 4 FLOPs/Byte, leading to a 4 TFLOPS peak performance in Figure 3(b). Since weight-only quantization leads to a lower bit width for weights (and thus a higher theoretical performance upper bound), it is natural for AWQ to follow this setting for on-device LLM applications.
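The roofline argument above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it uses the peak throughput (165 TFLOPS) and bandwidth (1 TB/s) quoted in the text, and the function name `attainable_tflops` is a hypothetical helper, not part of any AWQ or TinyChat code.

```python
def attainable_tflops(intensity, peak_tflops=165.0, bandwidth_tb_s=1.0):
    """Roofline model: performance is capped by compute or by memory traffic.

    intensity is in FLOPs/Byte; (FLOPs/Byte) * (TB/s) gives TFLOPS.
    """
    return min(peak_tflops, intensity * bandwidth_tb_s)


# Intensity at which the workload stops being memory-bound.
ridge_point = 165.0 / 1.0

fp16_generation = attainable_tflops(1.0)  # FP16 weights, intensity ~1
int4_generation = attainable_tflops(4.0)  # 4-bit weights, intensity ~4

print(ridge_point, fp16_generation, int4_generation)
```

Both operating points sit far below the ridge point of 165 FLOPs/Byte, so under this model the attainable performance scales linearly with the reduction in weight traffic.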

4.2 Deploy AWQ with TinyChat

So far, we have demonstrated that 4-bit weight quantization could lead to a $4\times$ theoretical peak performance. We further design TinyChat to realize this speedup. On GPUs, we only focus on implementing essential components, including attention, layer normalization, and linear projection kernels. The flexible frontend allows easy customization and fast support for new models. TinyChat with 4-bit AWQ achieves more than $3\times$ speedup compared with the Hugging Face FP16 implementation across different families of LLMs on GPUs. On CPUs, we lower the entire computation graph to C++ to minimize overhead.

On-the-fly weight dequantization. For quantized layers, as the hardware does not provide multiplication instructions between INT4 and FP16, we need to dequantize the integers to FP16 before performing matrix computation. We avoid writing dequantized weights into DRAM by fusing dequantization kernels with the matrix multiplication kernel. Note that such fusion is adopted for both matrix-matrix (MM) and matrix-vector (MV) product kernels.
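The idea of fusing dequantization into the matrix-vector product can be emulated in NumPy. This is a minimal sketch, not TinyChat's kernel: the function names, the asymmetric min/max quantizer, and the use of float32 instead of FP16 are all assumptions made for illustration. The point it shows is that each weight tile is dequantized right before it is consumed, so the full dequantized matrix is never materialized.

```python
import numpy as np

GROUP = 128  # group size used throughout the paper's experiments


def quantize_int4(w):
    """Asymmetric round-to-nearest INT4 quantization per group of input channels."""
    out_f, in_f = w.shape
    g = w.reshape(out_f, in_f // GROUP, GROUP)
    w_min = g.min(axis=-1, keepdims=True)
    w_max = g.max(axis=-1, keepdims=True)
    scale = np.maximum(w_max - w_min, 1e-8) / 15.0
    q = np.clip(np.round((g - w_min) / scale), 0, 15).astype(np.uint8)
    return q, scale.astype(np.float32), w_min.astype(np.float32)


def fused_dequant_matvec(q, scale, w_min, x):
    """Compute y = W_hat @ x one group at a time.

    Only one (out_features x GROUP) tile is dequantized at any moment,
    mimicking a kernel that keeps dequantized weights in registers.
    """
    out_f, n_groups, _ = q.shape
    y = np.zeros(out_f, dtype=np.float32)
    for gi in range(n_groups):
        x_g = x[gi * GROUP:(gi + 1) * GROUP]
        w_tile = q[:, gi, :].astype(np.float32) * scale[:, gi] + w_min[:, gi]
        y += w_tile @ x_g
    return y
```

A real kernel would perform the same per-tile dequantization inside the GEMM/GEMV inner loop; the Python loop over groups only stands in for that structure.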

SIMD-aware weight packing. On-the-fly weight dequantization reduces intermediate DRAM access, but remains expensive. For instance, dequantizing a single 4-bit weight involves 1 shift, 1 bitwise AND, and 1 FMA scaling operation, while the dequantized weight undergoes only 1 FMA computation. This process is particularly costly on CPUs with SIMD architectures that favor vectorized instructions. To mitigate this, we suggest platform-specific weight packing tailored to the bit width of a device's SIMD units. Figure 4 demonstrates our strategy for ARM CPUs with 128-bit SIMD registers, offering up to $1.2\times$ speedup. Here, each register holds 32 4-bit weights, sequenced as $w_{0},w_{16},w_{1},w_{17},...,w_{15},w_{31}$. This approach requires just three SIMD instructions to unpack all 32 weights, as opposed to 3 scalar instructions per weight in a conventional packing $(w_{0},w_{1},...,w_{31})$. Generally, for $2^{n}$-bit SIMD registers, adjacent weights will have indices off by $1/8\times2^{n}$, since each register can hold $1/8\times2^{n}$ 8-bit integers. On GPUs, we found it more efficient to pack each 8 weights into $\mathcal{W}_{\{0,2,4,6,1,3,5,7\}}$ following (Kim et al., 2022).
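The interleaved layout for a 128-bit register can be emulated with 16 `uint8` lanes in NumPy. The sketch below is an illustration under assumptions: placing $w_i$ in the low nibble and $w_{i+16}$ in the high nibble of byte $i$ is one plausible reading of the ordering $w_0, w_{16}, w_1, w_{17}, \ldots$, and the helper names are hypothetical. The exact NEON instruction sequence used in TinyChat may differ.

```python
import numpy as np


def pack_interleaved(w):
    """Pack 32 4-bit weights into 16 bytes: byte i holds w[i] (low) and w[i+16] (high)."""
    w = np.asarray(w, dtype=np.uint8)
    assert w.shape == (32,) and w.max() <= 15
    return (w[:16] | (w[16:] << 4)).astype(np.uint8)


def unpack_interleaved(packed):
    """Recover all 32 weights with whole-register operations only."""
    low = packed & 0x0F   # one vector AND over 16 lanes   -> w0..w15
    high = packed >> 4    # one vector shift over 16 lanes -> w16..w31
    return np.concatenate([low, high])


weights = np.arange(32, dtype=np.uint8) % 16
packed = pack_interleaved(weights)
restored = unpack_interleaved(packed)
```

Because weights that share a byte are 16 positions apart, each vector operation yields 16 consecutive weights already in order, with no per-element shuffling afterwards.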

Kernel fusion. We also extensively apply kernel fusion to optimize on-device LLM inference. For layer normalization, we fuse all operators (e.g., multiplication, division, and square root) into a single kernel. For attention layers, we fuse QKV projections into a single kernel, and also perform on-the-fly positional embedding calculation. We also preallocate KV caches and perform cache updates within the attention kernel. Kernel fusion is particularly useful for models with inefficient forward pass implementations, such as Falcon (Penedo et al., 2023) and StarCoder (Li et al., 2023c). Notably, the computation time for each FP16 kernel is on the order of 0.01 ms on the 4090 GPU, comparable to the GPU kernel launch overhead. Hence, reducing the number of kernel calls through kernel fusion leads to direct speedups.
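The QKV fusion mentioned above rests on a simple algebraic fact: three projections of the same input equal one projection with the stacked weight matrix. The NumPy sketch below illustrates only that equivalence; the function names and shapes are assumptions, and NumPy cannot show the launch-overhead savings that a fused GPU kernel would provide.

```python
import numpy as np


def qkv_separate(x, wq, wk, wv):
    """Three independent projections: three kernel launches on a GPU."""
    return x @ wq.T, x @ wk.T, x @ wv.T


def qkv_fused(x, w_qkv, d_model):
    """One projection with the stacked weight, then cheap slicing."""
    qkv = x @ w_qkv.T
    return qkv[..., :d_model], qkv[..., d_model:2 * d_model], qkv[..., 2 * d_model:]


rng = np.random.default_rng(0)
d_model = 64
x = rng.standard_normal((1, d_model))  # batch size 1, as in the edge setting
wq, wk, wv = (rng.standard_normal((d_model, d_model)) for _ in range(3))
w_qkv = np.concatenate([wq, wk, wv], axis=0)  # stacked once, offline

fused = qkv_fused(x, w_qkv, d_model)
separate = qkv_separate(x, wq, wk, wv)
```

The stacking is done once when the model is loaded, so at inference time a single matrix product replaces three.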

| PPL ↓ | Method | Llama-2 7B |
|---|---|---|
| FP16 | - | 5.47 |
| INT3 g128 | RTN | 6.66 |
| | GPTQ | 6.43 |
| | GPTQ-R | 6.42 |
| | AWQ | 6.24 |
| INT4 g128 | RTN | 5.73 |
| | GPTQ | 5.69 |
| | GPTQ-R | 5.63 |
| | AWQ | 5.60 |

Table 4. AWQ improves over round-to-nearest quantization (RTN) for different model sizes and different bit-precisions. It consistently achieves better perplexity than GPTQ (w/ and w/o reordering) on LLaMA & Llama-2 models.

| Wikitext2 PPL ↓ | Mixtral-8x7B | Mistral-7B |
|---|---|---|
| FP16 | 5.94 | 4.14 |
| INT4-g128 | 6.05 | 4.30 |
| INT3-g128 | 6.52 | 4.83 |

5 EXPERIMENTS

5.1 Settings

Quantization. We focus on weight-only grouped quantization in this work. As shown in previous work (Dettmers & Zettlemoyer, 2022; Frantar et al., 2022), grouped quantization is always helpful for improving the performance/model size trade-off. We used a group size of 128 throughout the work, unless otherwise specified. We focus on INT4/INT3 quantization since they are able to mostly preserve the LLMs' performance (Dettmers & Zettlemoyer, 2022). For AWQ, we used a small calibration set from the Pile (Gao et al., 2020) dataset in order not to overfit to a specific downstream domain. We used a grid size of 20 to search for the optimal $\alpha$ in Equation 5.
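The grid search over $\alpha$ can be sketched as follows. This is a simplified illustration, not the released AWQ code: it assumes Equation 5 has the form $s = s_X^{\alpha}$ with $s_X$ the per-channel average activation magnitude, uses a plain asymmetric round-to-nearest quantizer with group size 128, and omits any scale normalization or clipping search. The names `rtn_quantize` and `search_alpha` are hypothetical.

```python
import numpy as np


def rtn_quantize(w, n_bits=4, group_size=128):
    """Grouped asymmetric round-to-nearest; returns the dequantized weights."""
    out_f, in_f = w.shape
    g = w.reshape(out_f, in_f // group_size, group_size)
    w_min = g.min(axis=-1, keepdims=True)
    w_max = g.max(axis=-1, keepdims=True)
    levels = 2 ** n_bits - 1
    scale = np.maximum(w_max - w_min, 1e-8) / levels
    q = np.clip(np.round((g - w_min) / scale), 0, levels)
    return (q * scale + w_min).reshape(out_f, in_f)


def search_alpha(w, x, n_grid=20, n_bits=4, group_size=128):
    """Pick alpha in {0, 1/n_grid, ...} minimizing the layer output error.

    w: (out_features, in_features) weights; x: (tokens, in_features) calibration
    activations. Scaling is applied as Q(W * s) @ (x / s).
    """
    s_x = np.abs(x).mean(axis=0)  # per-input-channel activation magnitude
    y_ref = x @ w.T
    best_alpha, best_err = 0.0, np.inf
    for i in range(n_grid):
        alpha = i / n_grid
        s = np.maximum(s_x ** alpha, 1e-4)
        w_q = rtn_quantize(w * s, n_bits, group_size) / s
        err = np.mean((x @ w_q.T - y_ref) ** 2)
        if err < best_err:
            best_alpha, best_err = alpha, err
    return best_alpha, best_err
```

Since $\alpha = 0$ gives $s = 1$, plain RTN is always one of the candidates, so the selected scale can never do worse than RTN on the calibration data under this objective.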

Models. We benchmarked our method on the LLaMA (Touvron et al., 2023a) and OPT (Zhang et al., 2022) families. There are other open LLMs like BLOOM (Scao et al., 2022), but they are generally worse in quality, so we do not include them in our study. We further benchmark an instruction-tuned model, Vicuna (Chiang et al., 2023), and the visual language models OpenFlamingo-9B (Awadalla et al., 2023) and LLaVA-13B (Liu et al., 2023a) to demonstrate the generalizability of our method.

Table 5. AWQ quantization results on Mistral-7B-Instruct-v0.2 (Jiang et al., 2023) and Mixtral-8x7B-Instruct-v0.1 (Jiang et al., 2024). The PPL results on WikiText-2 show that AWQ achieves superior quantization performance on different model architectures, including LLMs with GQA and Mixture-of-Experts (MoE) models.

Figure 5. Comparing INT3-g128 quantized Vicuna models with their FP16 counterparts under the GPT-4 evaluation protocol (Chiang et al., 2023). More winning cases (in blue) indicate better performance. AWQ consistently improves the quantized performance compared to RTN and GPTQ (Frantar et al., 2022), showing generalization to instruction-tuned models.

Evaluations. Following previous literature (Dettmers et al., 2022; Xiao et al., 2022; Frantar et al., 2022; Dettmers & Zettlemoyer, 2022; Yao et al., 2022), we mainly profiled the quantized models on language modeling tasks (perplexity evaluation on WikiText-2 (Merity et al., 2016)) since perplexity can stably reflect the LLM's performance (Dettmers & Zettlemoyer, 2022).
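For reference, perplexity is the exponential of the mean negative log-likelihood per token. The helper below is a minimal sketch of that standard definition; the tokenization and context-window chunking used for the WikiText-2 numbers are not specified in this section, so the function takes per-token natural-log probabilities as given.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood), using natural logs."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)


# A model that assigns probability 1/4 to every token has perplexity 4.
uniform_quarter = perplexity([math.log(0.25)] * 8)
```

Lower is better: a quantized model whose perplexity stays close to the FP16 value assigns nearly the same probabilities to the held-out text.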

Baselines. Our primary baseline is vanilla round-to-nearest quantization (RTN). It is actually quite strong when using a small group size like 128 (Frantar et al., 2022; Dettmers & Zettlemoyer, 2022). We also compare with GPTQ (Frantar et al., 2022), a state-of-the-art method for LLM weight quantization. For GPTQ, we also compare with an updated version that uses a "reorder" trick (denoted as GPTQ-Reorder or GPTQ-R). Other techniques like ZeroQuant (Yao et al., 2022), AdaRound (Nagel et al., 2020), and BRECQ (Li et al., 2021) rely on backpropagation to update the quantized weights, which may not easily scale up to large model sizes; they also do not outperform GPTQ (Frantar et al., 2022), so we do not include them in our study.

5.2 Evaluation

Results on LLaMA models. We focus on LLaMA models (LLaMA (Touvron et al., 2023a) and Llama-2 (Touvron et al., 2023b)) due to their superior performance compared to other open-source LLMs (Zhang et al., 2022; Scao et al., 2022); they are also the foundation of many popular open-source models (Taori et al., 2023; Chiang et al., 2023). We evaluate the perplexity before and after quantization in Table 4. AWQ consistently outperforms round-to-nearest (RTN) and GPTQ (Frantar et al., 2022) (w/ and w/o reordering) across different model scales (7B-70B) and generations.

| COCO (CIDEr ↑) | Method | 0-shot | 4-shot | 8-shot | 16-shot | 32-shot |
|---|---|---|---|---|---|---|
| FP16 | - | 63.73 | 72.18 | 76.95 | 79.74 | 81.70 |
| INT4 g128 | RTN | 60.24 | 68.07 | 72.46 | 74.09 | 77.13 |
| | GPTQ | 59.72 | 67.68 | 72.53 | 74.98 | 74.98 |
| | AWQ | 62.57 | 71.02 | 74.75 | 78.23 | 80.53 |
| INT3 g128 | RTN | 46.07 | 55.13 | 60.46 | 63.21 | 64.79 |
| | GPTQ | 29.84 | 50.77 | 56.55 | 60.54 | 64.77 |
| | AWQ | 56.33 | 64.73 | 68.79 | 72.86 | 74.47 |

Table 6. Quantization results of the visual language model OpenFlamingo-9B (Awadalla et al., 2023) on the COCO Captioning dataset. AWQ outperforms existing methods under zero-shot and various few-shot settings, demonstrating its generalizability to different modalities and in-context learning workloads. AWQ reduces the quantization degradation (32-shot) from 4.57 to 1.17 under INT4-g128, providing a $4\times$ model size reduction with negligible performance loss.
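The degradation figures quoted in the caption follow directly from the 32-shot column of Table 6. The short check below only reproduces that subtraction from the tabulated CIDEr scores; the variable names are illustrative.

```python
# 32-shot CIDEr scores copied from Table 6.
fp16_32shot = 81.70
int4_32shot = {"RTN": 77.13, "GPTQ": 74.98, "AWQ": 80.53}

# Degradation relative to FP16, rounded to the table's two decimals.
degradation = {
    method: round(fp16_32shot - score, 2)
    for method, score in int4_32shot.items()
}
print(degradation)
```

Under INT4-g128, the drop for RTN is 4.57 and for AWQ is 1.17, matching the caption.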

Table 7. INT4-g128 results of VILA-7B and VILA-13B (Lin et al., 2024) on 11 visual-language benchmarks. AWQ consistently shows lossless performance on all benchmarks. Benchmark names are abbreviated due to space limits. VQA-v2 (Goyal et al., 2017); GQA (Hudson & Manning, 2019); VisWiz (Gurari et al., 2018); SQAI: ScienceQA-IMG (Lu et al., 2022); VQAT: TextVQA (Singh et al., 2019); POPE (Li et al., 2023d); MME (Fu et al., 2023); MMB: MMBench (Liu et al., 2023b); MMBCN: MMBench-Chinese (Liu et al., 2023b); SEED: SEED-Bench (Li et al., 2023a); LLaVAW: LLaVA-Bench (In-the-Wild) (Liu et al., 2023a); MM-Vet (Yu et al., 2023).

Results on Mistral / Mixtral models. We also evaluated AWQ on the Mistral and Mixtral models, which are among the most popular open-source LLMs and Mixture-of-Experts (MoE) models, respectively (Jiang et al., 2023; 2024). The results indicate that AWQ achieves superior performance on both the Mistral and Mixtral models. This demonstrates that AWQ is effective across various model architectures.

Quantization of instruction-tuned models. Instruction tuning can significantly improve the models' performance and usability (Wei et al., 2021; Sanh et al., 2021; Ouyang et al., 2022; Chung et al., 2022). It has become an essential procedure before model deployment. We further benchmark our method's performance on a popular instruction-tuned model, Vicuna (Chiang et al., 2023), in Figure 5. We used the GPT-4 score to evaluate the quantized models' performance against the FP16 counterpart on 80 sample questions (Chiang et al., 2023). We compare the responses in both orders (quantized-FP16, FP16-quantized) to remove the ordering effect (we found GPT-4 tends to increase the rating of the first input), leading to 160 trials. AWQ consistently improves the INT3-g128 quantized Vicuna models over RTN and GPTQ under both scales (7B and 13B), demonstrating its generalizability to instruction-tuned models.
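The order-swapped comparison can be sketched as a small tallying loop. This is a hypothetical illustration of the protocol's bookkeeping only: the `judge` callable stands in for the GPT-4 scoring step, and its interface (returning `"first"`, `"second"`, or `"tie"`) is an assumption, not the actual evaluation script.

```python
def run_pairwise_trials(questions, answers_quant, answers_fp16, judge):
    """Judge every question twice, once per presentation order.

    judge(question, first, second) -> "first" | "second" | "tie" (assumed interface).
    Returns win/tie/loss counts from the quantized model's point of view.
    """
    tally = {"quantized_win": 0, "tie": 0, "quantized_lost": 0}
    for q, a_quant, a_fp16 in zip(questions, answers_quant, answers_fp16):
        for quant_first in (True, False):
            first, second = (a_quant, a_fp16) if quant_first else (a_fp16, a_quant)
            verdict = judge(q, first, second)
            if verdict == "tie":
                tally["tie"] += 1
            elif (verdict == "first") == quant_first:
                tally["quantized_win"] += 1
            else:
                tally["quantized_lost"] += 1
    return tally


# A judge that always prefers the first answer models pure position bias.
biased_judge = lambda q, first, second: "first"
questions = [f"q{i}" for i in range(80)]
tally = run_pairwise_trials(questions, ["a"] * 80, ["b"] * 80, biased_judge)
```

With 80 questions this yields 160 trials, and a judge driven purely by position bias produces an even 80/80 split, which is why evaluating both orders cancels the ordering effect.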

Table 8. INT4-g128 quantization results of CodeLlama-7b-Instruct-hf on the MBPP dataset and Llama-2 (7B/13B/70B) on the GSM8K dataset. AWQ outperforms existing methods on programming and math datasets, demonstrating its generalizability to different scenarios and evaluation settings. Notably, AWQ under the INT4-g128 configuration demonstrates comparable performance to the original FP16 model across both datasets.

| MBPP (7B) | pass@1 | pass@10 | GSM8K | 7B | 13B | 70B |
|---|---|---|---|---|---|---|
| FP16 | 38.53 | 49.77 | FP16 | 13.87 | 26.16 | 56.41 |
| RTN | 37.51 | 48.49 | RTN | 11.07 | 21.23 | |
| GPTQ | 31.97 | 44.75 | GPTQ | 12.13 | 24.26 | |
| AWQ | 40.64 | 49.25 | AWQ | 13.57 | 25.25 | |

Quantization of multi-modal language models. Large multi-modal models (LMMs) or visual language models (VLMs) are LLMs augmented with vision inputs (Alayrac et al., 2022; Li et al., 2023b; Koh et al., 2023; Driess et al., 2023; Zhang et al., 2023; Liu et al., 2023a). Such models are able to perform text generation conditioned on image/video inputs. Since our method does not overfit to the calibration set, it can be directly applied to VLMs

Question: Can you explain this meme in detail?

INT4-g128 RTN: The meme in the image features a plate of food, likely fried chicken, with a unique twist. There are small pictures of the Earth and other planets placed on top of the food, creating an interesting and amusing visual. Alongside the plate, a caption reads, "Sometimes I look at pictures of the Earth from space and I marvel at how beautiful it all is." This combination of food and planet images adds a touch of humor and curiosity, making the viewer wonder about the context and intent behind the meme.

INT4-g128 AWQ: The meme in the image is a lighthearted and humorous take on the concept of looking at pictures of the Earth from space. A plate of fried food, specifically chicken nuggets, is presented with the caption, "Sometimes I look at pictures of the Earth from space and I marvel at how beautiful it all is." The playful combination of a food item and an Earth-related context creates a fun and entertaining visual.