StyleCLIP: Text-Driven Manipulation of StyleGAN Imagery 文本驱动的styleggan图像合成

Abstract 摘要

Inspired by the ability of StyleGAN to generate highly realistic images in a variety of domains, much recent work has focused on understanding how to use the latent spaces of StyleGAN to manipulate generated and real images. However, discovering semantically meaningful latent manipulations typically involves painstaking human examination of the many degrees of freedom, or an annotated collection of images for each desired manipulation. In this work, we explore leveraging the power of recently introduced Contrastive Language-Image Pre-training (CLIP) models in order to develop a text-based interface for StyleGAN image manipulation that does not require such manual effort. We first introduce an optimization scheme that utilizes a CLIP-based loss to modify an input latent vector in response to a user-provided text prompt. Next, we describe a latent mapper that infers a text-guided latent manipulation step for a given input image, allowing faster and more stable text-based manipulation. Finally, we present a method for mapping a text prompts to input-agnostic directions in StyleGAN's style space, enabling interactive text-driven image manipulation. Extensive results and comparisons demonstrate the effectiveness of our approaches. \vfill

受StyleGAN在各种领域生成高度逼真的图像的能力的启发,许多最新工作集中在理解如何使用StyleGAN的潜在空间来操纵生成的和真实的图像。然而,发现在语义上有意义的潜在操纵通常涉及艰苦的人类对许多自由度的检查,或涉及每个所需操纵的带注释的图像集合。在这项工作中,我们探索利用最新引入的对比语言-图像预训练(CLIP)模型的功能,以便为StyleGAN图像处理开发基于文本的界面,而无需进行此类人工操作。我们首先介绍一种优化方案,该方案利用基于CLIP的损失来响应用户提供的文本提示来修改输入潜在矢量。下一个,我们描述了一个潜在映射器,它针对给定的输入图像推断出文本引导的潜在操作步骤,从而允许更快,更稳定的基于文本的操作。最后,我们提出了一种在StyleGAN样式空间中将文本提示映射到与输入无关的方向的方法,从而实现交互式文本驱动的图像操作。广泛的结果和比较证明了我们方法的有效性。

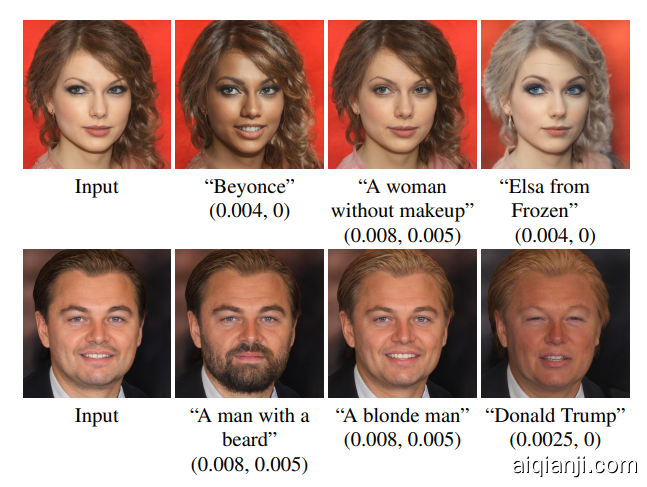

Examples of text-driven manipulations using StyleCLIP. Top row: input images; Bottom row: our manipulated results. The text prompt used to drive each manipulation appears under each column.

使用StyleCLIP进行文本驱动的操作的示例。第一行:输入图像;最下面一行:我们的操纵结果。用于驱动每种操作的文本提示出现在每一列下

Introduction 简介

Generative Adversarial Networks (GANs) have revolutionized image synthesis, with recent style-based generative models boasting some of the most realistic synthetic imagery to date. Furthermore, the learnt intermediate latent spaces of StyleGAN have been shown to possess disentanglement properties, which enable utilizing pretrained models to perform a wide variety of image manipulations on synthetic, as well as real, images.

Harnessing StyleGAN's expressive power requires developing simple and intuitive interfaces for users to easily carry out their intent. Existing methods for semantic control discovery either involve manual examination (e.g.,), a large amount of annotated data, or pretrained classifiers . Furthermore, subsequent manipulations are typically carried out by moving along a direction in one of the latent spaces, using a parametric model, such as a 3DMM in StyleRig , or a trained normalized flow in StyleFlow . Specific edits, such as virtual try-on and aging have also been explored. Thus, existing controls enable image manipulations only along preset semantic directions, severely limiting the user's creativity and imagination. Whenever an additional, unmapped, direction is desired, further manual effort and/or large quantities of annotated data are necessary.

In this work, we explore leveraging the power of recently introduced Contrastive Language-Image Pre-training (CLIP) models in order to enable intuitive text-based semantic image manipulation that is neither limited to preset manipulation directions, nor requires additional manual effort to discover new controls. The CLIP model is pretrained on 400 million image-text pairs harvested from the Web, and since natural language is able to express a much wider set of visual concepts, combining CLIP with the generative power of StyleGAN opens fascinating avenues for image manipulation. Figuresteaser shows several examples of unique manipulations produced using our approach. Specifically, in this paper we investigate three techniques that combine CLIP with StyleGAN: The results in this paper and the supplementary material demonstrate a wide range of semantic manipulations on images of human faces, animals, cars, and churches. These manipulations range from abstract to specific, and from extensive to fine-grained. Many of them have not been demonstrated by any of the previous StyleGAN manipulation works, and all of them were easily obtained using a combination of pretrained StyleGAN and CLIP models.

生成对抗网络(GANs) [ goodfellow2014generative ]彻底改变了图像合成,最近有了基于样式的生成模型 [ karras2019style,karras2020analyzing,karras2020ada ] ,其中包含一些迄今为止最逼真的合成图像。此外,已经证明所学习的StyleGAN中间潜在空间具有纠缠性质 [ collins2020editing,shen2020interpreting,harkonen2020ganspace,tewari2020stylerig,wu2020stylespace ],可以利用预训练的模型对合成图像和真实图像执行各种图像处理。

充分利用StyleGAN的表达能力,需要开发简单直观的界面,以便用户轻松实现其意图。现有的语义控制发现方法要么涉及手动检查(例如 [ harkonen2020ganspace,shen2020interpreting,wu2020stylespace ]),大量带注释的数据,要么是经过预训练的分类器[ shen2020interfacegan,abdal2020styleflow ]。此外,通常使用参数模型(例如StyleRig [ tewari2020stylerig ]中的3DMM),通过沿一个潜在空间中的方向移动来执行后续操作。或StyleFlow [ abdal2020styleflow ]中经过训练的标准化流。还研究了特定的编辑方法,例如虚拟试穿 [ lewis2021vogue ]和老化 [ alaluf2021matter ]。

因此,现有控件只能沿预设的语义方向进行图像操作,从而严重限制了用户的创造力和想象力。每当需要附加的,未映射的方向时,就需要进一步的人工操作和/或大量的注释数据。

在这项工作中,我们探索利用最新引入的对比语言-图像预训练(CLIP)模型的功能,以实现基于文本的直观语义图像操作,该操作不仅限于预设的操作方向,也不需要额外的人工来发现新控件。CLIP模型在从Web上收获的4亿个图像文本对上进行了预训练,并且由于自然语言能够表达更广泛的视觉概念,因此将CLIP与StyleGAN的生成能力相结合将为图像处理提供有趣的途径。Figures StyleCLIP:StyleGAN Imagery的文本驱动操作显示了使用我们的方法产生的独特操作的几个示例。具体而言,在本文中,我们研究了将CLIP与StyleGAN相结合的三种技术:

- 文本指导的潜在优化,其中CLIP模型用作损失网络[ johnson2016perceptual ]。这是最通用的方法,但是需要几分钟的优化才能对图像进行操作。

- 潜在残差映射器,针对特定的文本提示进行了培训。给定潜在空间中的起点(要处理的输入图像),映射器会在潜在空间中产生局部步长。

- 一种将文本提示映射到StyleGAN样式空间中与输入无关的(全局)方向的方法,可控制操纵强度和解开程度。

本文和补充材料中的结果展示了对人脸,动物,汽车和教堂图像的广泛语义操纵。这些操作的范围从抽象到特定,从广泛到细化。他们中的许多人都没有通过以前的StyleGAN操作作品得到证明,并且所有这些人都可以通过结合使用预训练的StyleGAN和CLIP模型轻松获得。

Related Work 相关工作

Vision and Language 视觉与语言方向

Multiple works learn cross-modal Vision and language (VL) representations for a variety of tasks, such as language-based image retrieval, image captioning, and visual question answering. Following the success of BERT in various language tasks, recent VL methods typically use Transformers to learn the joint representations. A recent model, based on Contrastive Language-Image Pre-training (CLIP), learns a multi-modal embedding space, which may be used to estimate the semantic similarity between a given text and an image. CLIP was trained on 400 million text-image pairs, collected from a variety of publicly available sources on the Internet. The representations learned by CLIP have been shown to be extremely powerful, enabling state-of-the-art zero-shot image classification on a variety of datasets. We refer the reader to OpenAI's Distill article for an extensive exposition and discussion of the visual concepts learned by CLIP. The pioneering work of Reed approached text-guided image generation by training a conditional GAN, conditioned by text embeddings obtained from a pretrained encoder. Zhang improved image quality by using multi-scale GANs. AttnGAN incorporated an attention mechanism between the text and image features. Additional supervision was used in other works to further improve the image quality. A few studies focus on text-guided image manipulation. Some methods use a GAN-based encoder-decoder architecture, to disentangle the semantics of both input images and text descriptions.

ManiGAN introduces a novel text-image combination module, which produces high-quality images. Differently from the aforementioned works, we propose a single framework that combines the high-quality images generated by StyleGAN, with the rich multi-domain semantics learned by CLIP. Recently, DALL·E, a 12-billion parameter version of GPT-3, which at 16-bit precision requires over 24GB of GPU memory, has shown a diverse set of capabilities in generating and applying transformations to images guided by text. In contrast, our approach is deployable even on a single commodity GPU. A concurrent work to ours, TediGAN, also uses StyleGAN for text-guided image generation and manipulation. By training an encoder to map text into the StyleGAN latent space, one can generate an image corresponding to a given text. To perform text-guided image manipulation, TediGAN encodes both the image and the text into the latent space, and then performs style-mixing to generate a corresponding image. In Sectionexperiments we demonstrate that the manipulations achieved using our approach reflect better the semantics of the driving text. In a recent online post, Perez describes a text-to-image approach that combines StyleGAN and CLIP in a manner similar to our latent optimizer in Sectionopt. Rather than synthesizing an image from scratch, our optimization scheme, as well as the other two approaches described in this work, focus on image manipulation. While text-to-image generation is an intriguing and challenging problem, we believe that the image manipulation abilities we provide constitute a more useful tool for the typical workflow of creative artists.

联合表达

多个作品学习跨模态视觉和语言(VL)表示形式 [ Desai2020VirTexLV,sariyildiz2020learning,Tan2019LXMERTLC,Lu2019ViLBERTPT,Li2019VisualBERTAS,Su2020VL-BERT,Li2020UnicoderVLAU,Chen2020UNITERUI,Li2020OscarOA ]用于多种任务,例如基于语言的图像检索字幕和视觉问题解答。继BERT [ Devlin2019BERTPO ]在各种语言任务中取得成功之后 ,最近的VL方法通常使用变形金刚 [ NIPS2017_3f5ee243 ]学习联合表示。一种基于对比语言-图像预训练(CLIP)[ radford2021learning ]的最新模型, 学习了一种多模式嵌入空间,该空间可用于估计给定文本和图像之间的语义相似性。CLIP接受了从互联网上各种公开可用来源收集的4亿个文本图像对的培训。CLIP所学的表示方法非常强大,可以对各种数据集进行最新的零镜头图像分类。我们向读者介绍OpenAI的Distill文章[ distill2021multimodal ],以广泛介绍和讨论CLIP所学的视觉概念。

文本引导的图像生成和处理

里德的开创性工作\等人 [ Reed2016GenerativeAT ]通过训练条件GAN走近文本引导图像生成 [ mirza2014conditional ],通过从预训练的编码器得到的嵌入文本空调。Zhang \ etal [ zhang2017stackgan,Zhang2019StackGANRI ]通过使用多尺度GAN改善了图像质量。AttnGAN [ Xu2018AttnGANFT ]在文本和图像特征之间引入了一种注意机制。其他作品中也使用了额外的监督 [ Reed2016GenerativeAT,Li2019ObjectDrivenTS,Koh2020TexttoImageGG ]进一步改善图像质量。

一些研究集中在文本引导的图像处理上。某些方法 [ Dong2017SemanticIS,Nam2018TextAdaptiveGA,Liu2020DescribeWT ]使用基于GAN的编码器-解码器体系结构来解开输入图像和文本描述的语义。ManiGAN [ li2020manigan ]引入了一种新颖的文本-图像组合模块,可以产生高质量的图像。与上述工作不同,我们提出了一个单一框架,该框架将StyleGAN生成的高质量图像与CLIP所学的丰富的多域语义相结合。

最近,DALL·E [ unpublished2021dalle,ramesh2021zeroshot ],这是120亿个参数版本的GPT-3 [ Brown2020LanguageMA ],其精度为16位,需要超过24GB的GPU内存,它在生成和应用转换方面表现出多种功能到由文字引导的图像。相反,我们的方法甚至可以部署在单个商用GPU上。

与我们同时进行的工作TediGAN [ xia2020tedigan ]也使用StyleGAN进行文本引导的图像生成和操作。通过训练编码器将文本映射到StyleGAN潜在空间中,可以生成与给定文本相对应的图像。为了执行文本引导的图像处理,TediGAN将图像和文本都编码到潜在空间中,然后执行样式混合以生成相应的图像。在第7节中, 我们证明了使用我们的方法实现的操作更好地反映了驾驶文本的语义。

在最近的在线帖子中,Perez [ perez2021imagesfromprompts ]描述了一种文本到图像的方法,该方法将StyleGAN和CLIP组合在一起,其方式类似于第4节中的潜在优化器 。我们的优化方案以及本工作中介绍的其他两种方法,不是从头开始合成图像,而是着眼于图像处理。尽管文本到图像的生成是一个有趣且具有挑战性的问题,但我们认为,我们提供的图像处理功能对于创意艺术家的典型工作流程而言,是一种更为有用的工具。

Latent Space Image Manipulation 潜在空间图像处理

Many works explore how to utilize the latent space of a pretrained generator for image manipulation. Specifically, the intermediate latent spaces in StyleGAN have been shown to enable many disentangled and meaningful image manipulations. Some methods learn to perform image manipulation in an end-to-end fashion, by training a network that encodes a given image into a latent representation of the manipulated image. Other methods aim to find latent paths, such that traversing along them result in the desired manipulation. Such methods can be categorized into: (i) methods that use image annotations to find meaningful latent paths, and (ii) methods that find meaningful directions without supervision, and require manual annotation for each direction. While most works perform image manipulations in the $ \mathcal{W} $ or $ \mathcal{W+} $ spaces, Wu proposed to use the StyleSpace $ \mathcal{S} $ , and showed that it is better disentangled than $ \mathcal{W} $ and $ \mathcal{W+} $ . Our latent optimizer and mapper work in the $ \mathcal{W+} $ space, while the input-agnostic directions that we detect are in $ \mathcal{S} $ . In all three, the manipulations are derived directly from text input, and our only source of supervision is a pretrained CLIP model. As CLIP was trained on hundreds of millions of text-image pairs, our approach is generic and can be used in a multitude of domains without the need for domain- or manipulation-specific data annotation.

许多作品探索了如何利用预训练生成器的潜在空间进行图像处理 [ collins2020editing,tewari2020stylerig,wu2020stylespace ]。具体而言,StyleGAN中的中间潜在空间已显示出能够进行许多纠缠且有意义的图像处理。一些方法通过训练将给定图像编码为操纵图像的潜在表示的网络来学会以端到端的方式执行图像操纵 [ nitzan2020dis,richardson2020encoding,alaluf2021matter ]。其他方法旨在找到潜在路径,以便沿它们遍历会导致所需的操作。这些方法可以分类为:(i)使用图像注释查找有意义的潜在路径的方法 [ shen2020interpreting,abdal2020styleflow ],以及(ii)在没有监督的情况下查找有意义的方向并且需要针对每个方向进行手动注释的方法 [ harkonen2020ganspace,shen2020closedform,voynov2020unsupervised,wang2021a ]。

许多工作探索如何利用预磨损发生器的潜空间进行图像操纵。具体地,已经证明了样式中的中间潜在空间能够实现许多解开和有意义的图像操纵。一些方法通过训练将给定图像进行编码为被操纵图像的潜在表示,以端到端的方式执行图像操纵。其他方法目的旨在找到潜在的路径,使得沿着它们遍历导致所需的操纵。此类方法可以分类为:(i)使用图像注释找到有意义的潜在路径的方法,以及(ii)在没有监督的情况下找到有意义的方向的方法,并要求每方向进行手动注释。

虽然大多数作品在$\mathcal{W} $或$\mathcal{W+} $空间中执行图像操作,但是WU[ wu2020stylespace ]建议使用* StyleSpace * $\mathcal{S} $,并显示它比$\mathcal{W} $和$\mathcal{W+} $更好地解散。我们的潜在优化器和映射器在$\mathcal{W+} $空间中工作,而我们检测到的输入 - 不可知方向在$\mathcal{S} $中。在这三种方法中,操作都是直接从文本输入中派生的,而我们唯一的监督来源是预先训练的CLIP模型。由于CLIP已针对数亿个文本图像对进行了培训,因此我们的方法是通用的,可以在多个域中使用,而无需特定于域或特定于操作的数据注释。

StyleCLIP Text-Driven Manipulation STYLECLIP文本驱动的操作

In this work we explore three ways for text-driven image manipulation, all of which combine the generative power of StyleGAN with the rich joint vision-language representation learned by CLIP. We begin in Section opt with a simple latent optimization scheme, where a given latent code of an image in StyleGAN's $ \mathcal{W}+ $ space is optimized by minimizing a loss computed in CLIP space. The optimization is performed for each (source image, text prompt) pair. Thus, despite it's versatility, several minutes are required to perform a single manipulation, and the method can be difficult to control. A more stable approach is described in Section mapper, where a mapping network is trained to infer a manipulation step in latent space, in a single forward pass. The training takes a few hours, but it must only be done once per text prompt. The direction of the manipulation step may vary depending on the starting position in $ \mathcal{W}+ $ , which corresponds to the input image, and thus we refer to this mapper as local. Our experiments with the local mapper reveal that, for a wide variety of manipulations, the directions of the manipulation step are often similar to each other, despite different starting points. Also, since the manipulation step is performed in $ \mathcal{W}+ $ , it is difficult to achieve fine-grained visual effects in a disentangled manner. Thus, in Sectionglobal we explore a third text-driven manipulation scheme, which transforms a given text prompt into an input agnostic (i.e., global in latent space) mapping direction. The global direction is computed in StyleGAN's style space $ \mathcal{S} $ , which is better suited for fine-grained and disentangled visual manipulation, compared to $ \mathcal{W}+ $ .

在这项工作中,我们探索了文本驱动的图像处理的三种方式,所有这些方式将StyleGAN的生成能力与CLIP所学的丰富的联合视觉语言表示形式相结合。

我们从第4节开始,使用简单的潜在优化方案,其中通过最小化剪辑空间中计算的损失来优化样式语言$\mathcal{W}+ $空间中的给定潜在代码。针对每个(源图像,文本提示)对执行优化。因此,尽管它具有多功能性,但执行一次操作仍需要几分钟,并且该方法可能难以控制。在第5节中介绍了一种更稳定的方法,其中训练了一个映射网络,以单次向前通过来推断潜在空间中的操作步骤。训练需要几个小时,但每个文本提示仅需进行一次训练。操纵步骤的方向可以根据$\mathcal{W}+ $中的起始位置而变化,这对应于输入图像,因此我们将此映射器称为本地*。我们与本地映射器的实验表明,对于各种操作,尽管不同的起点,但是操作步骤的方向通常彼此相似。而且,由于操纵步骤在$\mathcal{W}+ $中进行,因此难以以脱屑的方式实现细粒度的视觉效果。因此,在SIFEGLOBAL中,我们探讨了第三个文本驱动的操作方案,它将给定的文本提示转换为输入不可知(即,全局在潜在空间)映射方向。与$\mathcal{W}+ $相比,全局方向在STYLEGAN的风格空间$\mathcal{S} $中计算出更适合细粒度和解散的视觉操作。

| pre-proc | train -time | infer.- time | input image - dependent | latent- space | |

|---|---|---|---|---|---|

| optimizer | – | – | 98 sec | yes | W+ |

| mapper | – | 10 – 12h | 75 ms | yes | W+ |

| global dir. | 4h | – | 72 ms | no | S |

Table 1: Our three methods for combining StyleGAN and CLIP. The latent step inferred by the optimizer and the mapper depends on the input image, but the training is only done once per text prompt. The global direction method requires a one-time pre-processing, after which it may be applied to different (image, text prompt) pairs. Times are for a single NVIDIA GTX 1080Ti GPU.

表1: 我们结合StyleGAN和CLIP的三种方法。优化程序和映射器推断出的潜在步骤取决于输入图像,但是每个文本提示仅训练一次。全局方向方法需要进行一次预处理,然后可以将其应用于不同的(图像,文本提示)对。单个NVIDIA GTX 1080Ti GPU的使用时间。

Table methods summarizes the differences between the three methods outlined above, while visual results and comparisons are presented in the following sections.

表1方法概括以上概述的三种方法之间的差异,而视觉效果和比较在都以下部分。

Latent Optimization 潜在优化

A simple approach for leveraging CLIP to guide image manipulation is through direct latent code optimization. Specifically, given a source latent code $ w_s \in\mathcal{W}+ $ , and a directive in natural language, or a text prompt $ t $ , we solve the following optimization problem:

利用剪辑以引导图像操纵的简单方法是通过直接潜在的代码优化。具体地,给定源潜代码$ w_s \in\mathcal{W}+ $,以及自然语言的指令,或者文本提示 $ t $,我们解决了以下优化问题:

$$ \argmin_{w \in W+}{D_{CLIP}(G(w), t) + \lambda_{L2} \norm{w - w_s}2 + \lambda_{ID} L_ID(w)}, $$

where $ G $ is a pretrained StyleGAN We use StyleGAN2 in all our experiments. generator and $ D_{CLIP} $ is the cosine distance between the CLIP embeddings of its two arguments. Similarity to the input image is controlled by the $ L_2 $ distance in latent space, and by the identity loss:

$ G $是我们所有实验中Stylegan的预训练模型的生成器,$ D_{CLIP} $是其两个参数的剪辑嵌入之间的余弦距离。与输入图像的相似性通过潜伏空间中的$ L_2 $距离和 identity loss 来判断:

$$ L_{ID}(w) = 1- <R(G(w_s)),R(G(w))> , $$

where $ R $ is a pretrained ArcFace network for face recognition, and $ \langle\cdot, \cdot\rangle $ computes the cosine similarity between it's arguments. We solve this optimization problem through gradient descent, by back-propagating the gradient of the objective in through the pretrained and fixed StyleGAN generator $ G $ and the CLIP image encoder. In Figure opt_results we provide several edits that were obtained using this optimization approach after 200-300 iterations. The input images were inverted by e4e . Note that visual characteristics may be controlled explicitly (beard, blonde) or implicitly, by indicating a real or a fictional person (Beyonce, Trump, Elsa). The values of $ \lambda_{L2} $ and $ \lambda_{ID} $ depend on the nature of the desired edit. For changes that shift towards another identity, $ \lambda_{ID} $ is set to a lower value.

,其中$ R $是用于面部识别的预训练arcface网络,而⟨⋅,⋅⟩计算它的参数之间的余弦相似性。通过梯度下降,通过梯度下降来通过返回传播通过预先磨则和固定式样式的样式生成器$ G $和剪辑图像编码器的目标的梯度来解决该优化问题。在图中,OPT_RESULTS我们提供了多种编辑,在200-300次迭代之后使用此优化方法获得。输入图像由E4E反转。注意,可以通过表示真实或虚构的人(Beyonce,Trump,Elsa)明确地(胡子,金发)或隐含地控制视觉特征。 $\lambda_{L2} $和$\lambda_{ID} $的值取决于所需编辑的性质。对于向其他身份转移的变化,$\lambda_{ID} $设置为较低的值。

Figure 2: The architecture of our text-guided mapper (using the text prompt “surprised”, in this example). The source image (left) is inverted into a latent code w. Three separate mapping functions are trained to generate residuals (in blue) that are added to w to yield the target code, from which a pretrained StyleGAN (in green) generates an image (right), assessed by the CLIP and identity losses.

图2:我们的文本向导映射器的体系结构(在此示例中,使用文本提示“ surprised”)。源图像(左)被转换为潜在代码w。训练了三个独立的映射函数以生成残差(蓝色),这些残差被添加到w 生成目标代码,经过CLIP和身份损失评估的目标代码将由经过预训练的StyleGAN(绿色)生成图像(右)。

Figure 3: Edits of real celebrity portraits obtained by latent optimization. The driving text prompt and the (λL2,λID) parameters for each edit are indicated under the corresponding result.

图3:通过潜在优化获得的真实名人肖像的编辑。驾驶文字提示和(λL2,λID) 每次编辑的参数会在相应的结果下方显示。

Latent Mapper 潜在映射器

| Mohawk | Afro | Bob-cut | Curly | Beyonce | Taylor Swift | Surprised | Purple hair | |

|---|---|---|---|---|---|---|---|---|

| Mean | 0.82 | 0.84 | 0.82 | 0.84 | 0.83 | 0.77 | 0.79 | 0.73 |

| Std | 0.096 | 0.085 | 0.095 | 0.088 | 0.081 | 0.107 | 0.893 | 0.145 |

Table 2: Average cosine similarity between manipulation directions obtained from mappers trained using differnt text prompts.

表2:从使用不同文本提示训练的映射器获得的操作方向之间的平均余弦相似度。

The latent optimization described above is versatile, as it performs a dedicated optimization for each (source image, text prompt) pair. On the downside, several minutes of optimization are required to edit a single image, and the method is somewhat sensitive to the values of its parameters. Below, we describe a more efficient process, where a mapping network is trained, for a specific text prompt $ t $ , to infer a manipulation step $ M_t(w) $ in the $ \mathcal{W+} $ space, for any given latent image embedding $ w \in\mathcal{W}+ $ .

上面描述的潜在优化是多功能的,因为它对每个(源图像,文本提示)对执行专用优化。在缺点中,需要几分钟的优化来编辑单个图像,并且该方法对其参数的值稍微敏感。下面,我们描述了一种更有效的过程,其中培训映射网络,用于特定文本提示$ t $,以推断在$\mathcal{W+} $空间中的操作步骤$ M_t(w) $,用于任何给定的潜像嵌入$ w \in\mathcal{W}+ $。

Architecture

The architecture of our text-guided mapper is depicted in Figuremapper_arch. It has been shown that different StyleGAN layers are responsible for different levels of detail in the generated image. Consequently, it is common to split the layers into three groups (coarse, medium, and fine), and feed each group with a different part of the (extended) latent vector. We design our mapper accordingly, with three fully-connected networks, one for each group/part. The architecture of each of these networks is the same as that of the StyleGAN mapping network, but with fewer layers (4 rather than 8, in our implementation). Denoting the latent code of the input image as $ w = (w_{c}, w_{m}, w_{f}) $ , the mapper is defined by我们的文本引导映射器的体系结构在Figuremapper_arch中描绘。已经表明,不同的样式中止层对所生成的图像中的不同细节级别负责。因此,常常将层分成三组(粗,培养基和细),并用不同部分的(延伸的)潜航载体喂食每组。我们根据具有三个完全连接的网络设计我们的地图Per,每个组/部分都是一个。这些网络中的每一个的架构与样式映射网络的架构相同,但层数较少(在我们的实施中4而不是8个)。表示输入图像的潜在代码为$ w = (w_{c}, w_{m}, w_{f}) $,映射器由

$$ M_t(w) = (M^c_t(w_c), M^m_t(w_m), M^f_t(w_f)). $$

Note that one can choose to train only a subset of the three mappers. There are cases where it is useful to preserve some attribute level and keep the style codes in the corresponding entries fixed.

请注意,可以选择仅培训三个映射器的子集。有些情况下保留一些属性级别,并保持固定的相应条目中的样式代码

Losses

Our mapper is trained to manipulate the desired attributes of the image as indicated by the text prompt $ t $ , while preserving the other visual attributes of the input image. The CLIP loss, $ L_CLIP(w) $ guides the mapper to minimize the cosine distance in the CLIP latent space:。我们的映射器接受培训以操纵图像的所需属性,如文本提示$ t $所示,同时保留输入图像的其他可视化属性。剪辑丢失,$L_CLIP(w) $指导映射器以最小化剪辑潜像中的余弦距离:

$$ L_CLIP(w) = D_{CLIP}(G(w + M_t(w)), t), $$

where $ G $ denotes again the pretrained StyleGAN generator. To preserve the visual attributes of the original input image, we minimize the $ L_2 $ norm of the manipulation step in the latent space. Finally, for edits that require identity preservation, we use the identity loss defined in eq.(id-loss).

,其中$ G $再次表示佩带的样式生成器。为了保留原始输入图像的视觉属性,我们最小化了潜在空间中操作步骤的$ L_2 $标准。最后,对于需要身份保存的编辑,我们使用EQ中定义的身份损失。(ID-loss)。

$$ \ mathcal {l} clip(w)= d {clip}(g(w + m_t(w)),t ),$$

Our total loss function is a weighted combination of these losses:

我们的总损失函数是这些损失的加权组合:

$$ L(w) =L_CLIP(w) + \lambda_{L2}\norm{M_t(w)}_2 + \lambda_ID L_ID(w). $$

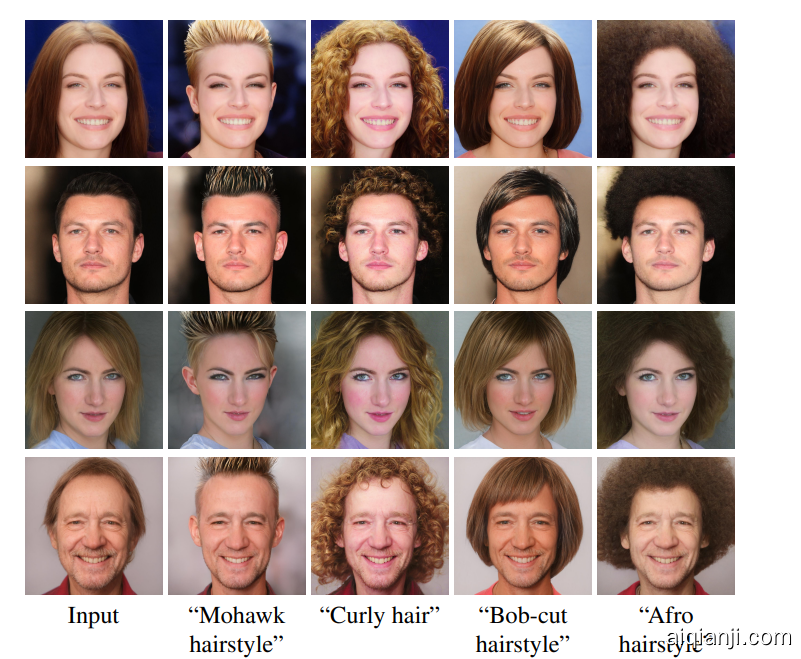

As before, when the edit is expected to change the identity, we do not use the identity loss. The parameter values we use for the examples in this paper are $ \lambda_L2 = 0.8, \lambda_ID = 0.1 $ , except for the Trump manipulation in Figureglobal-vs-mapper, where the parameter values we use are $ \lambda_L2 = 2, \lambda_ID = 0 $ . In Figuremapper-hair we provide several examples for hair style edits, where a different mapper used in each column. In all of these examples, the mapper succeeds in preserving the identity and most of the other visual attributes that are not related to hair. Note, that the resulting hair appearance is adapted to the individual; this is particularly apparent in the Curly hair and Bob-cut hairstyle edits. It should be noted that the text prompts are not limited to a single attribute at a time. Figuremapper-multi shows four different combinations of hair attributes, straight/curly and short/long, each yielding the expected outcome. This degree of control has not been demonstrated by any previous method we're aware of. Since the latent mapper infers a custom-tailored manipulation step for each input image, it is interesting to examine the extent to which the direction of the step in latent space varies over different inputs. To test this, we first invert the test set of CelebA-HQ using e4e . Next, we feed the inverted latent codes into several trained mappers and compute the cosine similarity between all pairs of the resulting manipulation directions. The mean and the standard deviation of the cosine similarity for each mapper is reported in Tablemapper-directions. The table shows that even though the mapper infers manipulation steps that are adapted to the input image, in practice, the cosine similarity of these steps for a given text prompt is high, implying that their directions are not as different as one might expect.

如前所述,当预期编辑会更改身份时,我们不使用identity loss。我们在本文中的示例中使用的参数值是$\lambda_L2 = 0.8, \lambda_ID = 0.1 $,除了QuotGLOBAL-VS-MAPPER中的特朗普操纵,其中我们使用的参数值是$\lambda_L2 = 2, \lambda_ID = 0 $。在Figuremapper-头发中,我们为发型编辑提供了几个示例,其中每列中使用的不同映射器。在所有这些示例中,映射器成功地保留了与头发无关的身份和其他视觉属性。注意,所得头发外观适应各个;这在卷发和鲍勃切割发型编辑中特别明显。应注意,文本提示不限于一次单个属性。 Figuremapper-Multi显示了四种不同的头发属性组合,直/卷曲和短/长,每个都会产生预期的结果。任何先前的方法都没有证明这种控制程度。由于潜像映射器为每个输入图像推送定制定制的操作步骤,因此有趣的是检查潜伏空间中步骤方向在不同输入中变化的程度。为了测试这一点,我们首先使用E4E倒置Celeba-HQ的测试集。接下来,我们将反转的潜码馈送到几个训练的映射器中,并计算所产生的操纵方向的所有成对之间的余弦相似性。在 Tablemapper-directions上报道了每个映射器的余弦相似性的平均值和标准偏差。表格展示即使映射器infers操作步骤,它们在实践中,对于给定文本提示的这些步骤的余弦相似性很高,这意味着它们的方向与可能期望的方向不同。

Figure 4: Hair style edits using our mapper. The driving text prompts are indicated below each column. All input images are inversions of real images.

图4:使用我们的映射器进行发型编辑。驱动文本提示显示在每列下方。所有输入图像都是真实图像的反演。

Global Directions 全局方向

While the latent mapper allows fast inference time, we find that it sometimes falls short when a fine-grained disentangled manipulation is desired. Furthermore, as we have seen, the directions of different manipulation steps for a given text prompt tend to be similar. Motivated by these observations, in this section we propose a method for mapping a text prompt into a single, global direction in StyleGAN's style space $ \Delta $ , which has been shown to be more disentangled than other latent spaces. Let $ s \in S $ denote a style code, and $ G(s) $ the corresponding generated image. Given a text prompt indicating a desired attribute, we seek a manipulation direction $ \Delta s $ , such that $ G(s + \alpha\Delta s) $ yields an image where that attribute is introduced or amplified, without significantly affecting other attributes. The manipulation strength is controlled by $ \alpha $ . Our high-level idea is to first use the CLIP text encoder to obtain a vector $ \Delta t $ in CLIP's joint language-image embedding and then map this vector into a manipulation direction $ \Delta s $ in $ S $ . A stable $ \Delta t $ is obtained from natural language, using prompt engineering, as described below. The corresponding direction $ \Delta s $ is then determined by assessing the relevance of each style channel to the target attribute.

虽然潜在映射器允许快速的推理时间,但我们发现,当需要进行细粒度的解纠缠处理时,它有时会不足。此外,正如我们所看到的,给定文本提示的不同操作步骤的方向趋于相似。基于这些观察,在本节中,我们提出了一种在StyleGAN的样式空间S中将文本提示映射到单个全局方向的方法,已被证明比其他潜在空间[ wu2020stylespace ]更 容易纠缠。

让$ s \in S $表示样式代码,$ G(s) $相应的生成图像。给定指示所需属性的文本提示,我们寻求操纵方向$\Delta s $,使得$ G(s + \alpha\Delta s) $产生介绍或放大该属性的图像,而不会显着影响其他属性。操纵强度由$\alpha $控制。我们的高级想法是首先使用CLIP文本编码器获取向量$\Delta t $,然后将此向量映射到$ S $中的操作方向$\Delta s $。如下所述,使用迅速的工程获得稳定的$\Delta t $。然后通过评估每个样式通道与目标属性的相关性来确定相应的方向$\Delta s$。

More formally, denote by $ \clipI $ the manifold of image embeddings in CLIP's joint embedding space, and by $ \clipT $ the manifold of its text embeddings. We distinguish between these two manifolds, because there is no one-to-one mapping between them: an image may contain a large number of visual attributes, which can hardly be comprehensively described by a single text sentence; conversely, a given sentence may describe many different images. During CLIP training, all embeddings are normalized to a unit norm, and therefore only the direction of embedding contains semantic information, while the norm may be ignored. Thus, in well trained areas of the CLIP space, we expect directions on the $ \clipT $ and $ \clipI $ manifolds that correspond to the same semantic changes to be roughly collinear (i.e., have large cosine similarity), and nearly identical after normalization.

更正式,通过$\clipI $表示剪辑的联合嵌入空间中的 image embeddings,以及$\clipT $其文本嵌入的text embedding。我们将这两个流形区分开来,因为它们之间没有一对一的映射:图像可能包含大量的视觉属性,很难用一个文本语句来全面描述;相反,给定的句子可能描述许多不同的图像。在CLIP训练期间,所有嵌入都被标准化为一个单位规范,因此,只有嵌入的方向包含语义信息,而该规范可能会被忽略。因此,在CLIP空间的训练有素的地区,我们期望在对应于相同的语义变化的$\clipT $和$\clipI$歧管的方向,该方法大致相连(即,具有大余弦相似性),并且在归一化之后几乎相同。

Given a pair of images, $ G(s) $ and $ G(s+\alpha\Delta s) $ , we denote their $ \clipI $ embeddings by $ i $ and $ i + \Delta i $ , respectively. Thus, the difference between the two images in CLIP space is given by $ \Delta i $ . Given a natural language instruction encoded as $ \Delta t $ , and assuming collinearity between $ \Delta t $ and $ \Delta i $ , we can determine a manipulation direction $ \Delta s $ by assessing the relevance of each channel in $ S $ to the direction $ \Delta i $ .

给定一对图像,$ G(s) $和$ G(s+\alpha\Delta s) $,我们分别表示$\clipI$嵌入$ i $和$ i + \Delta i $。因此,夹子空间中的两个图像之间的差异由$\Delta i $给出。给定编码为$\Delta t $的自然语言指令,并且假设$\Delta t$和$\Delta i$之间的相连性,我们可以通过评估$ S $中的每个通道的相关性$\Delta i $来确定操纵方向$\Delta s$。

From natural language to Δt

In order to reduce text embedding noise, Radford utilize a technique called prompt engineering that feeds several sentences with the same meaning to the text encoder, and averages their embeddings. For example, for ImageNet zero-shot classification, a bank of 80 different sentence templates is used, such as a bad photo of a {}, a cropped photo of the {}, a black and white photo of a {}, and a painting of a {}. At inference time, the target class is automatically substituted into these templates to build a bank of sentences with similar semantics, whose embeddings are then averaged. This process improves zero-shot classification accuracy by an additional $ 3.5% $ over using a single text prompt. Similarly, we also employ prompt engineering (using the same ImageNet prompt bank) in order to compute stable directions in $ \clipT $ . Specifically, our method should be provided with text description of a target attribute and a corresponding neutral class. For example, when manipulating images of cars, the target attribute might be specified as a sports car, in which case the corresponding neutral class might be a car. Prompt engineering is then applied to produce the average embeddings for the target and the neutral class, and the normalized difference between the two embeddings is used as the target direction $ \Delta t $ .

为了减少文本嵌入噪声,Radford利用了一种称为提示工程的技术,该技术将多个句子提供与文本编码器相同的含义,并平均其嵌入式。例如,对于Imagenet零拍分类,使用了80个不同句子模板的银行,例如\ {}的坏照片,\ {}的裁剪照片,黑白照片{},以及\ {}的绘画。在推理时间以后,目标类被自动替换为这些模板,以构建具有类似语义的句子库,然后平均嵌入。使用单个文本提示,此过程通过额外的$ 3.5% $提高零拍分类精度。同样,我们还采用了提示工程(使用相同的想象件提示银行),以计算$\clipT $中的稳定方向。具体地,我们的方法应该提供目标属性的文本描述和相应的中性类。例如,当操纵汽车的图像时,目标属性可能被指定为跑车,在这种情况下,相应的中立类可能是汽车。然后应用提示工程以产生目标和中性类的平均嵌入,并且两个嵌入的归一化差异用作目标方向$\Delta t $。

Channelwise relevance 通道相关性

Next, our goal is to construct a style space manipulation direction $ \Delta s $ that would yield a change $ \Delta i $ , collinear with the target direction $ \Delta t $ . For this purpose, we need to assess the relevance of each channel $ c $ of $ S $ to a given direction $ \Delta i $ in CLIP's joint embedding space. We generate a collection of style codes $ s \in S $ , and perturb only the $ c $ channel of each style code by adding a negative and a positive value.

接下来,我们的目标是构造一个风格的空间操纵方向$\Delta s $,它将产生更改$\Delta i $,与目标方向$\Delta t $共线。为此目的,我们需要评估$ S $的每个通道$ c $与给定方向$\Delta i $中的每个通道$ c $在剪辑的关节嵌入空间中。我们通过添加负值和正值,生成一系列样式代码$ s \in S $,并且仅扰乱了每个样式代码的$ c $通道。

Denoting by $ \Delta i_c $ the CLIP space direction between the resulting pair of images, the relevance of channel $ c $ to the target manipulation is estimated as the mean projection of $ \Delta i_c $ onto $ \Delta i $ :

表示由$\Delta i_c $在得到的一对图像之间的剪辑空间方向,估计通道$ c $到目标操纵的相关性作为$\Delta i_c $到$\Delta i$:

$$ R_c(\Delta i) = \mathbb{E}_{s \in S }{\Delta i_c \cdot\Delta i} $$

In practice, we use 100 image pairs to estimate the mean. The pairs of images that we generate are given by $ G(s \pm\alpha\Delta s_c) $ , where $ \Delta s_c $ is a zero vector, except its $ c $ coordinate, which is set to the standard deviation of the channel. The magnitude of the perturbation is set to $ \alpha=5 $ .

在实践中,我们使用100个图像对来估计均值。我们生成的图像对由$ G(s \pm\alpha\Delta s_c) $提供,其中$\Delta s_c $是零向量,除了其$ c $坐标,该坐标被设置为通道的标准偏差。扰动的幅度被设置为$\alpha=5 $。

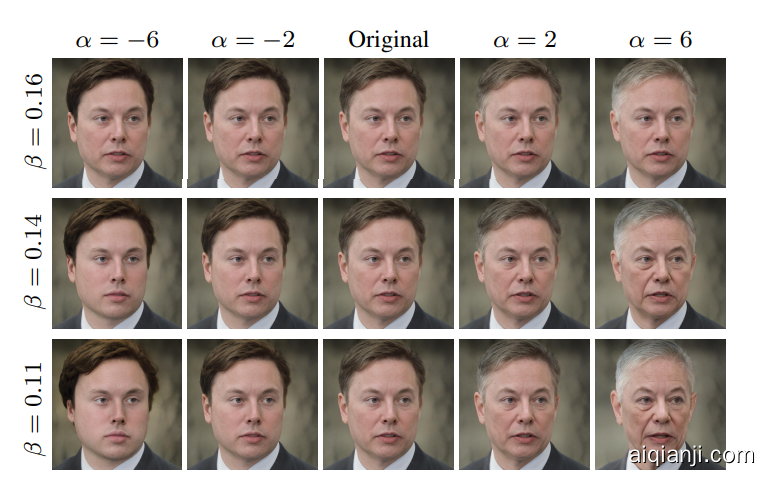

Figure 6 : Image manipulation driven by the prompt “grey hair” for different manipulation strengths and disentanglement thresholds. Moving along the Δs direction, causes the hair color to become more grey, while steps in the −Δs direction yields darker hair. The effect becomes stronger as the strength α increases. When the disentanglement threshold β is high, only the hair color is affected, and as β is lowered, additional correlated attributes, such as wrinkles and the shape of the face are affected as well.

图6:由提示“灰发”驱动的图像处理,具有不同的处理强度和解开阈值。沿着Δs 方向,使头发的颜色变灰,而进入 -Δs方向产生深色头发。强度越强,效果越强α增加。当解开阈值β 高,只影响头发的颜色,并且 β 降低时,其他相关属性(例如皱纹和脸部形状)也会受到影响。

Having estimated the relevance $ R_c $ of each channel, we ignore channels whose $ R_c $ falls below a threshold $ \beta $ . This parameter may be used to control the degree of disentanglement in the manipulation: using higher threshold values results in more disentangled manipulations, but at the same time the visual effect of the manipulation is reduced. Since various high-level attributes, such as age, involve a combination of several low